Abstract

Background

Virtual reality, augmented reality, and mixed reality make use of a variety of different software and hardware, but they share three main characteristics: immersion, presence, and interaction. The umbrella term for technologies with these characteristics is extended reality. The ability of extended reality to create environments that are otherwise impossible in the real world has practical implications in the medical discipline. In ophthalmology, virtual reality simulators have become increasingly popular as tools for surgical education. Recent developments have also explored diagnostic and therapeutic uses in ophthalmology.

Objective

This systematic review aims to identify and investigate the utility of extended reality in ophthalmic education, diagnostics, and therapeutics.

Methods

A literature search was conducted using PubMed, Embase, and Cochrane Register of Controlled Trials. Publications from January 1, 1956 to April 15, 2020 were included. Inclusion criteria were studies evaluating the use of extended reality in ophthalmic education, diagnostics, and therapeutics. Eligible studies were evaluated using the Oxford Centre for Evidence-Based Medicine levels of evidence. Relevant studies were also evaluated using a validity framework. Findings and relevant data from the studies were extracted, evaluated, and compared to determine the utility of extended reality in ophthalmology.

Results

We identified 12,490 unique records in our literature search; 87 met final eligibility criteria, comprising studies that evaluated the use of extended reality in education (n=54), diagnostics (n=5), and therapeutics (n=28). Of these, 79 studies (91%) achieved evidence levels in the range 2b to 4, indicating poor quality. Only 2 (9%) out of 22 relevant studies addressed all 5 sources of validity evidence. In education, we found that ophthalmic surgical simulators demonstrated efficacy and validity in improving surgical performance and reducing complication rates. Ophthalmoscopy simulators demonstrated efficacy and validity evidence in improving ophthalmoscopy skills in the clinical setting. In diagnostics, studies demonstrated proof of concept for presenting ocular imaging data on extended reality platforms and validity in assessing the function of patients with ophthalmic diseases. In therapeutics, heads-up surgical systems had complication rates, procedural success rates, and outcomes similar to those of conventional ophthalmic surgery.

Conclusions

Extended reality has promising areas of application in ophthalmology, but additional high-quality comparative studies are needed to establish its role relative to incumbent methods of ophthalmic education, diagnostics, and therapeutics.

Keywords: extended reality, virtual reality, augmented reality, mixed reality, ophthalmology, ophthalmic

Introduction

The rapid development of extended reality technologies has prompted recent efforts to define and distinguish new concepts and subgroups of extended reality applications [1]. Virtual reality has been defined as an experience in which our natural surroundings are completely replaced by a 3D computer-generated environment, delivered through wearable screens in the form of head-mounted displays [2]. Augmented reality is a superimposition of computer-generated content with limited interactivity onto our visible surroundings. Mixed reality is similar to augmented reality, except that the user can interact extensively with the computer-generated content [1]. Mixed reality can be considered an amalgamation of the features of both virtual reality and augmented reality, as highly interactive computer-generated objects and the real physical world are integrated to dynamically coexist within a single display [3,4]. Although virtual reality, augmented reality, and mixed reality make use of a variety of different software and hardware, these extended reality technologies share 3 main characteristics: immersion, presence, and interaction [2,5]. Immersion refers to the perception of physical existence within the extended reality environment, presence describes the perception of connection to the environment, and interaction is the ability to act and receive feedback within the environment [2].

Extended reality is already having a broad, if still nascent, influence on medicine. Virtual reality platforms have been designed to teach foundational subjects, such as human anatomy [6,7], and to train surgeons in complex surgical procedures [8-11]. Augmented and mixed reality offer methods of visualizing intraoperative procedures and diagnostic images with devices such as Google Glass (Google Inc) or Microsoft HoloLens (Microsoft Inc) that have the potential to improve procedural safety and success [12-14]. The ability of virtual reality to distract patients from the physical environment also offers therapeutic approaches for rehabilitation and for treating pain or psychiatric disorders [15-17]. Likewise, ophthalmology has seen a growing influence of extended reality. In the United States, the use of virtual eye surgery simulators in ophthalmic graduate medical education increased from 23% in 2010 to 73% in 2018 [18,19]. Extended reality technologies have also been explored as a method of therapy in ophthalmic diseases such as amblyopia and visual field defects [20,21]. Although the versatility of extended reality platforms could influence the practice of ophthalmology, health care providers should be well informed of the benefits and limitations of these technologies so that they can make evidence-based decisions when adopting new methods of ophthalmic education, diagnosis, and treatment. The focus of this review was to systematically evaluate current evidence of the efficacy, validity, and utility of extended reality applications in ophthalmic education, diagnostics, and therapeutics.

Methods

Eligibility Criteria

We included studies evaluating the use of extended reality for ophthalmic applications in education, diagnostics, and therapeutics for eye care professionals and ophthalmic patients. All study designs were included with the exception of systematic reviews, case reports, and case series with ≤3 patients. Non-English publications and publications on the technical engineering of extended reality were excluded.

Search Methods

Three databases served as the source of our search—PubMed MEDLINE, Embase, and Cochrane Register of Controlled Trials. Search terms included “Virtual Reality,” “Augmented Reality,” “Mixed Reality,” “Simulation,” “Simulated,” “3D,” “Ophthalmology,” “Ophthalmic,” and “Eye.” The search was performed on April 15, 2020. Publications from January 1, 1956 to April 2020 were searched without language or publication-type restrictions. References in studies meeting the eligibility criteria were searched to identify additional eligible studies. EndNote X9 (2020; Clarivate Analytics) was used to manage all identified publications and remove duplicates (Multimedia Appendix 1). Search results were recorded according to PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-analyses) guidelines [22].

Study Selection

Two authors (CWO and MCJT) read all titles returned by the search. The same authors then read the abstracts of all relevant titles and the full texts of all relevant abstracts to evaluate eligibility. Any uncertainties were resolved by discussion among all authors.

Data Collection and Analysis

For each study that met eligibility criteria, the quality of study was evaluated using Oxford Centre for Evidence-Based Medicine (OCEBM) levels of evidence [23].

Information from each study was extracted, including aim, design, population, sample size, extended reality technology type, application, outcomes, and findings.

A number of eligible studies investigated extended reality simulators as educational training and assessment tools. Interpretations of performance assessments made with these simulators should be supported by evidence of validity [24,25]. Validation is needed before the results of an educational tool can be trusted, and educators need validity evidence to select the assessment tool that best meets specific educational needs with finite resources [26]. We adopted a contemporary model of validity [24] comprising 5 sources of validity evidence (Content, Response process, Internal structure, Relationship to other variables, and Consequences) [25,27] (Multimedia Appendix 2) to evaluate the extent to which the validity of these simulator-based assessments had been established. Because of the high degree of heterogeneity between studies, no quantitative statistical analysis was conducted.

Results

General

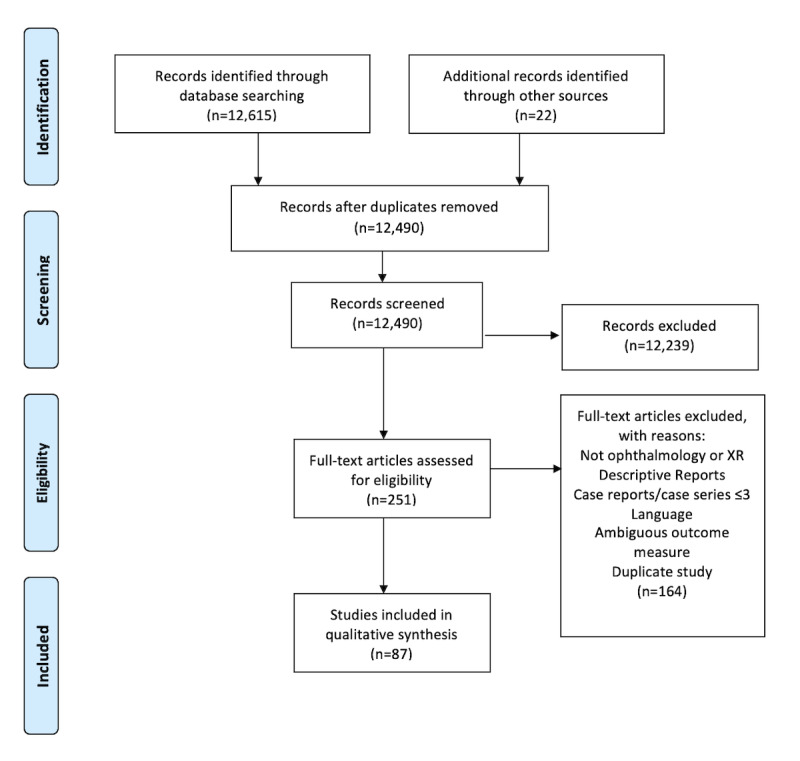

A total of 12,490 unique records were identified. After screening by title and abstract, 251 full-text publications were retrieved for assessment for final eligibility. Of these, 164 were excluded, and 87 studies met the final eligibility criteria (Figure 1). Of these, 54 were relevant to the use of extended reality in education, 5 were relevant to the use of extended reality in diagnostics, and 28 were relevant to the use of extended reality in therapeutics.

Figure 1.

Flow Diagram showing inclusion process for identified records. XR: extended reality.
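As an arithmetic check on the study flow, the counts reported above, in the Abstract, and in the Education overview can be reconciled in a few lines. The following Python sketch is illustrative only: it uses the published counts, and the variable names are our own rather than part of any analysis pipeline used in this review.

```python
# Counts reported in this review (Results, Abstract, and Education overview).
full_text_retrieved = 251
full_text_excluded = 164
by_application = {"education": 54, "diagnostics": 5, "therapeutics": 28}
education_by_type = {"surgical": 46, "ophthalmoscopy": 6, "optometry": 2}

# Included studies = full texts retrieved minus full texts excluded.
included = full_text_retrieved - full_text_excluded

# The three application categories partition the included studies,
# and the three simulator types partition the education studies.
assert included == sum(by_application.values())
assert by_application["education"] == sum(education_by_type.values())

# Share of included studies rated at OCEBM levels 2b to 4 (79 studies per the Abstract).
low_level = 79
share_low = round(100 * low_level / included)

print(f"Included studies: {included}")
print(f"Studies at levels 2b-4: {low_level}/{included} ({share_low}%)")
```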

Education

Overview

Applications of extended reality in education included surgical simulators (46/54), ophthalmoscopy simulators (6/54), and optometry training simulators (2/54), with medical students, optometry students, trainee ophthalmologists, or trained ophthalmologists as participants.

Surgical Simulators

Of 46 studies evaluating surgical simulators, the EyeSi surgical simulator (VR Magic) was most commonly used (n=38). Others included MicroVisTouch (ImmersiveTouch) (n=1), PixEye Ophthalmic Simulator (SimEdge SA) (n=1), and 6 self-designed simulators. The most common surgical procedure simulated in these studies was cataract surgery (n=36), followed by vitreoretinal procedures (n=9), laser trabeculoplasty (n=1), and corneal laceration repair (n=1).

Of the 46 studies, 24 evaluated the efficacy of surgical simulators using surgical performance on real patients, objective assessments by subspecialty experts, or simulator-based metrics as outcome measures. Fourteen of these [28-37,48-51] used surgical performance on real patients as the outcome measure, of which 4 were randomized trials [48-51] (Table 1) comparing virtual reality ophthalmic surgical simulators with conventional methods of surgical training. In these trials, participants' surgical performance on real patients was assessed objectively by subspecialty experts. All trials except one [49] showed that simulator training resulted in surgical performance significantly superior to that achieved with conventional training in terms of quality, time efficiency, efficacy, or complication rates [48,50,51]. In particular, Deuchler et al [50] found that warm-up simulation training improved performance for surgeons who had not operated for a significant period of time. Daly et al [49] showed that residents who underwent EyeSi training were significantly slower at performing their first continuous curvilinear capsulorhexis than those who underwent wet-lab training, although both groups achieved similar surgical performance scores.

Table 1.

Randomized trials evaluating efficacy of surgical simulators in improving surgical performance on real patients.

| Study | Design (OCEBMa level) | Intervention group training | Control group training | Simulated task | Population | Findings |
| --- | --- | --- | --- | --- | --- | --- |
| Peugnet (1998) [48] | Randomized trial (2b) | Laser photocoagulation simulator (n=5) | Real patients (n=4) | Retinal photocoagulation | Eye residents | Simulator training was significantly more time efficient (time efficiency index of 0.59 vs 0.28, P<.05) and resulted in a trend toward greater photocoagulation efficiency (duration/impact index of 0.040 vs 0.028) |
| Daly (2013) [49] | Randomized trial (2b) | EyeSi (n=11) | Wet lab (n=10) | Continuous curvilinear capsulorhexis | Eye residents | Wet-lab-trained residents were significantly faster than EyeSi-trained residents (P=.038) |
| Deuchler (2016) [50] | Randomized trial (1b) | EyeSi warm-up (n=9) | No warm-up (n=12) | Pars plana vitrectomy | Surgeries by vitreoretinal surgeons | EyeSi warm-up significantly improved surgical performance (GRASISb score of 1.0 vs 0.5, P=.0302) |
| Alwadani (2012) [51] | Randomized trial (2b) | PixEyes simulator (n=24) | Didactic, wet lab (n=23) | Argon laser trabeculoplasty | Medical students | Virtual reality-trained students had lower rates of inadvertent corneal/iris burns (4.5% vs 34.0%, P=.01), delivery misses (8% vs 55%, P=.001), and overtreatment or undertreatment (7% vs 46%, P=.015) |

aOCEBM: Oxford Centre for Evidence-Based Medicine.

bGRASIS: Global Rating Assessment of Skills in Intraocular Surgery.

There were 10 nonrandomized studies [28-37] that evaluated the EyeSi simulator for cataract surgery training (Table 2). Thomsen et al [28] and la Cour et al [29] demonstrated statistically significant improvements in Objective Structured Assessment of Cataract Surgical Skill (OSACSS) scores in novice surgeons after training with the EyeSi cataract module. Roohipoor et al [30] found significant correlations between residents' EyeSi simulator-based scores and both their eventual surgery count and their Global Rating Assessment of Skills in Intraocular Surgery scores. The other 7 studies [31-37] investigated the relationship between EyeSi simulator use and complication rates in cataract surgery on real patients; 6 of these [31-36] found that EyeSi use was associated with fewer complications, although the reductions reported by Belyea et al [31] and Pokroy et al [32] were not statistically significant. McCannel et al [37] found that EyeSi capsulorhexis training was not associated with lower overall vitreous loss rates but was associated with a higher rate of vitreous loss in cases with a nonerrant continuous curvilinear capsulorhexis.

Table 2.

Nonrandomized trials evaluating efficacy of surgical simulators in improving surgical performance on real patients.

| Study | Design (OCEBMa level) | Comparison or control group | Outcome measure | Participants or cataract surgeries (n) | Findings |
| --- | --- | --- | --- | --- | --- |
| Thomsen (2017a) [28] | Cohort (2b) | Before EyeSi use | OSACSSb | Cataract surgeons (19) | Novices and less-experienced surgeons showed significant improvements in the operating room (32% and 38% improvement, P=.008 and P=.018, respectively) after EyeSi training |
| La Cour (2019) [29] | Cohort (2b) | Before EyeSi use | OSACSS | Cataract surgeons (19) | EyeSi training resulted in significantly improved surgical performance in less-experienced surgeons; skill transfer between modules was not demonstrable |
| Roohipoor (2017) [30] | Cohort (2b) | N/Ac | GRASISd | Ophthalmology residents (30) | Significant correlations between residents' EyeSi simulator-based scores and their eventual surgery count and GRASIS scores |
| Belyea (2011) [31] | Cohort (3b) | No EyeSi use | Phaco time, percentage power, complications | Surgeries by ophthalmology residents (592) | EyeSi training resulted in significantly shorter procedure duration (P=.002), lower percentage power (P=.001), and nonsignificantly fewer intraoperative complications |
| Pokroy (2013) [32] | Cohort (2c) | No EyeSi use | Incidence of posterior capsule tears, operation duration | Surgeries by ophthalmology residents (1000) | EyeSi training resulted in nonsignificantly fewer posterior capsule tears and shorter learning curves |
| Ferris (2020) [33] | Cohort (2b) | No EyeSi access | Posterior capsule rupture rates | Surgeries by ophthalmology residents (17,831) | Residents with EyeSi access had significantly lower posterior capsule rupture rates than those without (2.6% vs 4.2%; difference in proportions 1.5%, 95% CI 0.5%-2.6%; P=.003). Posterior capsule rupture rates were significantly lower after access to EyeSi (3.5% to 2.6%; difference in proportions 0.9%, 95% CI 0.4%-1.5%; P=.001) |
| Lucas (2019) [34] | Cohort (2b) | No EyeSi use | Complication rates | Surgeries by ophthalmology residents (140) | EyeSi training resulted in significantly fewer complications (12.86% vs 27.14%, P=.031) |
| Staropoli (2018) [35] | Cohort (2b) | No EyeSi use | Complication rates | Surgeries by ophthalmology residents (955) | EyeSi training resulted in significantly fewer complications (2.4% vs 5.1%, P=.037) |
| McCannel (2013) [36] | Case series (4) | Reduced EyeSi use | Errant continuous curvilinear capsulorhexis rates | Surgeries by ophthalmology residents (1037) | EyeSi training resulted in significantly lower errant continuous curvilinear capsulorhexis rates (5.0% vs 15.7%, P<.001) |
| McCannel (2017) [37] | Case series (4) | Reduced EyeSi use | Vitreous loss rates, retained lens material | Surgeries by ophthalmology residents (1037) | EyeSi training was not associated with lower vitreous loss rates or less retained lens material but was associated with higher vitreous loss in cases with nonerrant CCCe |

aOCEBM: Oxford Centre for Evidence-Based Medicine.

bOSACSS: Objective Structured Assessment of Cataract Surgical Skill.

cN/A: not applicable.

dGRASIS: Global Rating Assessment of Skills in Intraocular Surgery.

eCCC: continuous curvilinear capsulorhexis.

Seven studies [38-44] investigated the efficacy of the EyeSi surgical simulator (n=6) or a self-made augmented reality microsurgery simulator (n=1) by evaluating participants' subsequent surgical performance on the same simulators, mostly using simulator-based metrics (Table 3 and Table 4). The exception was Selvander et al [40], who used the OSACSS and the Objective Structured Assessment of Technical Skills (OSATS).

Table 3.

Randomized trials evaluating efficacy of surgical simulators in improving surgical performance as measured by the same simulator.

| Study | Design (OCEBMa level) | Intervention group | Control group | Participants | Simulated task |
| --- | --- | --- | --- | --- | --- |
| Bergqvist (2014) [38] | Randomized trial (1b) | EyeSi training (n=10) | No EyeSi training (n=10) | Medical students | Cataract surgery |
| Thomsen (2017b) [39] | Randomized trial (2b) | EyeSi training (n=6) | No EyeSi use (n=6) | Eye residents | Cataract surgery, vitreoretinal surgery |
| Selvander (2012) [40] | Randomized trial (2b) | EyeSi cataract navigation training first (n=17) | EyeSi capsulorhexis training first (n=18) | Medical students | Capsulorhexis, cataract navigation |

aOCEBM: Oxford Centre for Evidence-Based Medicine.

Table 4.

Nonrandomized trials evaluating efficacy of surgical simulators in improving surgical performance as measured by the same simulator.

| Study | Design (OCEBMa level) | Surgical simulator | Participants | Simulated task | Findings |
| --- | --- | --- | --- | --- | --- |
| Saleh (2013a) [41] | Case series (4) | EyeSi | Eye residents (n=17) | Cataract surgery | EyeSi training resulted in significantly improved scores (P<.001) among residents, especially in the capsulorhexis and antitremor modules |
| Gonzalez-Gonzalez (2016) [42] | Case series (4) | EyeSi | Medical students, eye residents (n=14) | Capsulorhexis | EyeSi training resulted in significantly improved course scores for both the dominant (33.4 vs 46.5, P<.05) and nondominant hands (28.9 vs 47.7, P<.001) and faster performance times (P<.001) |
| Bozkurt (2018) [43] | Cohort (2b) | EyeSi | Ophthalmologists (n=16) | Capsulorhexis module | EyeSi training resulted in significantly improved capsulorhexis scores (P=.001) |
| Ropelato (2020) [44] | Case series (4) | Microsoft HoloLens | Unspecified (n=47) | Internal limiting membrane peeling | Micromanipulation performance scores improved significantly after simulator training |

aOCEBM: Oxford Centre for Evidence-Based Medicine.

Three studies were randomized trials [38-40] (Table 3). Thomsen et al [39] investigated whether skills could transfer between procedures and found that, on the vitreoretinal surgery module, residents with simulated cataract surgery training did not perform significantly better than those without such training (simulator score with training: mean 381, SD 129 vs without training: mean 455, SD 82; P=.262). Selvander et al [40] had a similar aim and found that training on either the capsulorhexis or the cataract navigation module of the EyeSi did not significantly improve performance on the other (OSACSS score, training: 8 vs no training: 8, P=.64; OSATS score, training: 7 vs no training: 10, P=.52); however, repeated practice with each module significantly improved the scores for that module. Bergqvist et al [38] found that medical students who trained with simulated cataract surgery had higher overall simulator scores and fewer complications than those who did not train on the simulator. These trials suggest that the benefit of simulator training may be limited to the specific procedure being trained. The other 4 studies [41-44] were prospective cohort studies or case series (Table 4) that demonstrated significant improvements in subsequent simulator-based performance scores after extended reality surgical training.

Three randomized trials investigated the effect of extended reality surgical simulator training on surgical performance in the wet lab (Table 5). Surgical performance was assessed using objective outcomes. Feldman et al [46] and Feudner et al [47] showed that training on the EyeSi simulator significantly improved wet-lab performance for corneal laceration repair and capsulorhexis, respectively, while Jonas et al [45] showed that training on a self-made virtual reality simulator improved wet-lab performance for pars plana vitrectomies.

Table 5.

Studies evaluating efficacy of surgical simulators in improving surgical performance in the wet lab.

| Study | Design (OCEBMa level) | Intervention group | Control group | Participants | Outcome measure |
| --- | --- | --- | --- | --- | --- |
| Jonas (2003) [45] | Randomized trial (2b) | Simulator training (n=7) | No simulator training (n=7) | Medical students, eye residents | Amount of vitreous removed, retinal lacerations, residual retinal detachment, duration |
| Feldman (2007) [46] | Randomized trial (2b) | EyeSi training (n=8) | No EyeSi training (n=8) | Medical students | Corneal Laceration Repair Assessment |
| Feudner (2009) [47] | Randomized trial (1b) | EyeSi training (n=31) | No EyeSi training (n=31) | Eye residents | Scoring based on capsulorhexis video |

aOCEBM: Oxford Centre for Evidence-Based Medicine.

Of the 46 studies, 20 evaluated the validity of surgical simulator-based assessments (Multimedia Appendix 3). Most of these achieved an OCEBM level of evidence of 2b, corresponding to exploratory cohort studies with good reference standards. The most common source of validity evidence was Relationship to other variables, addressed in 19 of 20 studies (95%); studies achieved this by statistically evaluating the relationship between surgical performance on the simulator and participants' levels of expertise. Content was addressed in 18 studies (90%), Response process in 9 (45%), Internal structure in 5 (25%), and Consequences in 2 (10%). Only 2 of 20 studies (10%) addressed all 5 sources.
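The percentages above are simple proportions of the 20 surgical simulator validity studies. A minimal Python sketch of the tally, using only the counts reported in this paragraph (the names `sources`, `percent`, and `coverage` are illustrative), is:

```python
# Number of the 20 surgical simulator validity studies that addressed each
# source of validity evidence, as reported in this review.
N_STUDIES = 20
sources = {
    "Content": 18,
    "Response process": 9,
    "Internal structure": 5,
    "Relationship to other variables": 19,
    "Consequences": 2,
}
all_five = 2  # studies addressing all 5 sources


def percent(count: int, total: int = N_STUDIES) -> int:
    """Return count/total as a whole-number percentage."""
    return round(100 * count / total)


coverage = {name: percent(n) for name, n in sources.items()}

# Print sources from most to least frequently addressed.
for name, pct in sorted(coverage.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {sources[name]}/{N_STUDIES} ({pct}%)")
print(f"All 5 sources: {all_five}/{N_STUDIES} ({percent(all_five)}%)")
```

Ordering by frequency makes the imbalance visible at a glance: evidence from relationships with expertise level is near universal, whereas Consequences is rarely examined.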

Of these 20 studies, 12 assessed surgical performance using simulator-based scoring only [39,43,52-61], 6 studies compared simulator-based scores with video-based scoring (OSACSS, OSATS, or motion-tracking software) [62-67], and 2 studies used video-based scoring only [40,68].

For the EyeSi surgical simulator, most studies found that the surgical performance of experienced participants was significantly better than that of less-experienced participants. Sikder et al [52] found that intervening surgical experience significantly improved capsulorhexis performance on the MicroVisTouch cataract surgery simulator. Lam et al [62] showed that in a self-made phacoemulsification simulator, more experienced participants attained significantly higher scores in all main procedures and completed tasks significantly faster.

Five studies [69-73] assessed the perception of ophthalmologists and medical students toward surgical simulators using user-reported outcome measures. These studies achieved OCEBM evidence levels of 4 (n=4) and 2b (n=1). Users found the EyeSi and a novel virtual reality continuous curvilinear capsulorhexis simulator to be useful in improving surgical skill, confidence, and understanding, while providing a safe and realistic alternative for training.

Ophthalmoscopy Simulators

Six studies [74-79] evaluated the use of extended reality as a tool for education in ophthalmoscopy. Simulators used were the EyeSi Augmented Reality Direct (n=1) and Binocular Indirect (n=3) ophthalmoscopy simulators, and 2 novel self-made direct ophthalmoscopy simulators comprising the HTC Vive Virtual Reality-Head-Mounted Display (n=1) and the RITECH II Virtual Reality-Head-Mounted Display (n=1).

Two randomized trials [74,75], with OCEBM evidence levels of 2b, assessed the efficacy of the EyeSi Binocular Indirect Ophthalmoscopy simulator. Both studies showed that participants who trained with the EyeSi Binocular Indirect Ophthalmoscopy simulator performed significantly better than participants who underwent conventional training.

Three studies [75-77], with OCEBM evidence levels of 2b, assessed the validity of the EyeSi Binocular Indirect Ophthalmoscopy simulator (n=2) and the EyeSi Direct Ophthalmoscopy simulator (n=1) for training and assessment. All 3 studies provided evidence from Relationship to other variables, finding that participants with more experience had significantly higher ophthalmoscopy evaluation scores, and all addressed Content. Only 1 study [77] addressed Internal structure, by evaluating internal consistency between simulator modules, and Consequences, by calculating a pass/fail score.

Two user perception studies [78,79] found that medical students felt that self-assembled virtual reality direct ophthalmoscopy simulators were usable and useful in improving ophthalmoscopy skills.

Optometry Training Simulators

Two studies [80,81] evaluated the preliminary user experience of an augmented reality optometry simulator comprising a head-mounted display, a slit-lamp instrument, and a simulated eye, which allowed the simulation of optometry training tasks. User studies involving undergraduate optometry students showed that the simulator was feasible in simulating foreign body removal as a training task with a high level of user satisfaction.

Diagnostics

Overview

Five studies evaluated the use of extended reality for the production of immersive and interactive content for diagnostic applications. Two studies evaluated the use of extended reality to display ocular imaging data [82,83], and 3 studies [84-86] evaluated the validity of extended reality as a simulation tool for the functional assessment of patients with ophthalmic diseases.

Ocular Imaging

Two case series, which achieved OCEBM evidence levels of 4, evaluated the presentation of ocular imaging modalities in virtual reality and augmented reality environments.

Maloca et al [82] tested the feasibility of displaying optical coherence tomography images in a virtual reality environment with a virtual reality head-mounted display. A user perception survey involving 57 participants found it to be well tolerated with minimal side effects. Berger et al [83] demonstrated the feasibility of directly overlaying photographic and angiographic fundus images onto a real-time slit lamp fundus view in 5 participants.

Simulators for Functional Assessment

Three studies evaluated the use of extended reality simulators for the functional assessment of patients with ophthalmic diseases. All 3 achieved an OCEBM level of evidence of 4.

Goh et al [84] trialed the Virtual Reality Glaucoma Visual Function Test, run on a smartphone paired with the Google Cardboard head-mounted display, to assess the visual function of patients with glaucoma and found that stationary test person scores demonstrated criterion and convergent validity, corresponding to Relationship to other variables.

Ungewiss et al [85] compared driving performance in a driving simulator with that in a real vehicle in patients with glaucoma (n=10) or hemianopia (n=10) and normal controls (n=20), and found that patients with hemianopia or glaucoma performed worse than healthy controls on the driving simulator, providing Relationship to other variables as a source of validity evidence.

Jones et al [86] evaluated the use of a head-mounted display to simulate glaucomatous visual impairment using virtual reality and augmented reality, and found that it could replicate and objectively quantify the associated functional impairments. Impairments were significantly greater when the simulated visual field loss was inferior than when it was superior, consistent with previous findings in real patients with glaucoma [87-89].

Therapeutics

Overview

A total of 28 studies evaluated the use of extended reality in therapeutics. These studies evaluated heads-up surgery (n=21), binocular treatment of amblyopia (n=2), functional improvement for the visually impaired (n=4), and an aid for achromatopsia (n=1).

Heads-up Surgery

Heads-up surgery involves the use of a 3D camera to capture images from a stereomicroscope for presentation on a 3D display. Of 21 studies [90-110], the most common heads-up surgical system evaluated was NGENUITY 3D (Alcon Laboratories) (n=12), followed by TrueVision 3D HD System (TrueVision Systems Inc) (n=2), TRENION 3D HD (Carl Zeiss Meditec) (n=1), MKC-700HD and CFA-3DL1 (Ikegami) (n=1), Digital Microsurgical Workstation (3D Vision Systems) (n=1), and TIPCAM 1S 3D ORL endoscope (Karl Storz) (n=1). Surgical procedures included vitreoretinal procedures (n=17), cataract surgery (n=5), scleral buckle (n=1), and endoscopic lacrimal surgery (n=1).

Six studies had OCEBM evidence levels of 2b, corresponding to randomized trials (n=4) and cohort studies (n=2). There were 15 studies with OCEBM evidence levels of 4, corresponding to case series, case-control studies, or poor-quality cohort studies.

The 4 randomized trials [90-93] demonstrated noninferiority of heads-up surgery compared with conventional microscope surgery in postoperative outcomes and complications. Qian et al [90] performed phacoemulsification and intraocular lens implantation and reported no significant difference in mean surgery time or postoperative mean endothelial cell density between conventional surgery (n=10) and heads-up surgery (n=10). Talcott et al [91] performed pars plana vitrectomies and showed that, compared with conventional surgery (n=16), heads-up surgery (n=23) significantly increased macular peel time (14.76 minutes vs 11.87 minutes, P=.004) but not overall operative time. There was no significant difference in visual acuity (logarithm of the minimum angle of resolution) or its change from baseline, and there were no clinically significant intraoperative complications. Romano et al [92] randomized 50 eyes to 25-gauge pars plana vitrectomy with an unspecified heads-up surgical system (n=25) or conventional surgery (n=25); there was no significant difference in mean operation duration, and surgeons and observers were significantly more satisfied with the heads-up system (P<.001). Kumar et al [93] randomized 50 patients to macular hole surgery with an unspecified heads-up system (n=25) or conventional macular hole surgery (n=25); there were no significant differences in postoperative visual acuity, macular hole indices, surgical time, total internal limiting membrane peel time, number of flap initiations, or macular hole closure rates. Microscope illumination intensity (heads-up: 45%; conventional: 100%) and endoillumination (heads-up: 13%; conventional: 40%) were significantly lower in heads-up surgery.

The other 17 studies [94-110] were case series or cohort studies (Multimedia Appendix 4). They reported experiences with different heads-up surgical systems with various surgical procedures and assessed a few common outcomes with the following findings.

Of the studies comparing heads-up surgical systems with conventional surgery, most reported either procedural success or success rates that were not significantly different from those of conventional surgery. There were no significant differences in mean procedural duration, postoperative visual acuity, or improvement in visual acuity. The minimum required endoillumination was lower in heads-up surgery than in conventional surgery. Zhang et al [94] found a significantly lower mean minimum required endoillumination for heads-up surgery than for conventional surgery (10% vs 35%, 598.7 vs 1913 lx, P<.001). Matsumoto et al [95] operated safely and successfully on 74 eyes with an endoillumination intensity of 3% using a heads-up system. No study reported major perioperative complications or significant differences in complication rates between heads-up and conventional surgery. Users preferred heads-up surgery to conventional surgery.

Binocular Treatment of Amblyopia

Two studies [21,111] evaluated the efficacy of extended reality for interactive and immersive binocular treatment of amblyopia. Lee et al [111] randomized 22 children with amblyopia (mean age 8.7 years, SD 1.3) to virtual reality video gaming on an unspecified virtual reality head-mounted display (n=7), virtual reality video gaming plus Bangerter foil (n=5), or Bangerter foil only (n=10), achieving an OCEBM evidence level of 2b. Two of 7 (29%) patients in the virtual reality video gaming group and 2 of 5 (40%) patients in the virtual reality video gaming with Bangerter foil group improved in visual acuity by more than 0.2 logarithm of the minimum angle of resolution. Ziak et al [21] trialed the Oculus Rift virtual reality head-mounted display for dichoptic virtual reality video gaming in 17 adults with amblyopia, achieving an OCEBM evidence level of 4. Mean amblyopic eye visual acuity improved significantly (logarithm of the minimum angle of resolution: from mean 0.58, SD 0.35 to mean 0.43, SD 0.38; P<.01). Mean stereoacuity also improved significantly, from 263.3 to 176.7 seconds of arc (P<.01).
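Acuity in these studies is reported in logarithm of the minimum angle of resolution (logMAR) units, where 0.0 corresponds to 20/20 and each 0.1 step is one chart line. As an illustration only, and not part of either study's analysis, the Python sketch below converts logMAR values to decimal and Snellen-equivalent acuity and expresses a change as ETDRS letters, assuming the standard 0.02 logMAR per letter. Applied to the means reported by Ziak et al, it places the change at roughly 20/76 to 20/54, about 7.5 letters. Note that converting a mean logMAR gives the Snellen equivalent of the mean, not a mean Snellen value.

```python
def logmar_to_decimal(logmar: float) -> float:
    """Decimal acuity: 10 ** (-logMAR), so logMAR 0.0 gives 1.0 (20/20)."""
    return 10 ** (-logmar)


def snellen_denominator(logmar: float, numerator: float = 20.0) -> float:
    """Snellen-equivalent denominator for the given test-distance numerator."""
    return numerator * 10 ** logmar


def etdrs_letters(logmar_improvement: float) -> float:
    """Letters gained, assuming 5 letters per 0.1 logMAR line (0.02 per letter)."""
    return logmar_improvement / 0.02


if __name__ == "__main__":
    before, after = 0.58, 0.43  # mean amblyopic-eye logMAR reported by Ziak et al [21]
    print(f"before: 20/{snellen_denominator(before):.0f}")
    print(f"after:  20/{snellen_denominator(after):.0f}")
    print(f"letters gained: {etdrs_letters(before - after):.1f}")
    # Lee et al [111] used a >0.2 logMAR threshold, i.e. more than 2 chart lines
    print(f"0.2 logMAR = {etdrs_letters(0.2):.0f} letters")
```

Because logMAR is logarithmic, equal logMAR steps correspond to equal ratios of letter size, which is why changes are compared in logMAR rather than in Snellen fractions.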

Functional Improvement for Visual Disorders

Five case series studies [20,112-115] evaluated the use of extended reality technologies to improve function in patients with visual impairments and other visual disorders. All reached an OCEBM evidence level of 4.

Two studies [20,112] evaluated the use of digital spectacles (DSpecs) to improve mobility in a total of 43 patients with peripheral visual field deficits. DSpecs use augmented reality technology to capture, relocate, and resize video signals in real time so that they fit within the patient’s remaining visual field. With DSpecs, patients with visual field defects showed improved object identification, hand-eye coordination, and walking mobility.
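The DSpecs processing is described above only as capturing, relocating, and resizing the video signal. As a hypothetical illustration of that idea, and not the published DSpecs algorithm, the sketch below shrinks a grayscale camera frame with nearest-neighbour sampling and places it inside a rectangle assumed to lie within the patient's intact visual field. A real device would map to an irregular, measured field and operate on live video; the function names and the rectangular field are assumptions.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of pixel intensities (0-255)


def resize_nearest(frame: Frame, out_h: int, out_w: int) -> Frame:
    """Nearest-neighbour resize of a 2D grayscale frame."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def fit_into_field(frame: Frame, top: int, left: int, field_h: int, field_w: int) -> Frame:
    """Shrink the whole frame and relocate it into the rectangle starting at
    (top, left) with size field_h x field_w, a hypothetical stand-in for the
    intact visual field. The output keeps the input size; pixels outside the
    rectangle are left black (0)."""
    canvas_h, canvas_w = len(frame), len(frame[0])
    if top < 0 or left < 0 or top + field_h > canvas_h or left + field_w > canvas_w:
        raise ValueError("field rectangle must lie inside the frame")
    canvas = [[0] * canvas_w for _ in range(canvas_h)]
    small = resize_nearest(frame, field_h, field_w)  # resize step
    for r in range(field_h):                         # relocation step
        for c in range(field_w):
            canvas[top + r][left + c] = small[r][c]
    return canvas
```

For example, `fit_into_field(frame, 0, 2, 2, 2)` on a 4x4 frame places a 2x2 downsampled copy of the whole scene in the top-right corner, so nothing in the scene is lost, only compressed into the usable region.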

Sanchez et al [113] evaluated Augmented Reality Tags for Assisting the Blind, an augmented reality system that helps the user determine the position of indoor objects by generating an audio-based representation of space. Blind participants perceived Augmented Reality Tags for Assisting the Blind as a useful tool for orientation and mobility tasks.
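How Augmented Reality Tags for Assisting the Blind renders its audio is not specified here. One common way to convey an object's direction and distance through sound, shown purely as an assumed illustration, is constant-power stereo panning with distance attenuation: the object's bearing sets the left/right balance so that total power stays constant across positions, and loudness falls with distance. The function name, the ±90 degree range, and the 1/distance law are all assumptions of this sketch.

```python
import math
from typing import Tuple


def pan_gains(azimuth_deg: float, distance_m: float) -> Tuple[float, float]:
    """Return hypothetical (left_gain, right_gain) for a tagged object.

    azimuth_deg: bearing relative to the user's facing direction,
                 -90 (far left) to +90 (far right); values beyond are clamped.
    distance_m:  distance to the object; loudness falls off as 1/distance,
                 clamped at 1 m so nearby objects are not amplified.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    theta = (az + 90.0) / 180.0 * (math.pi / 2)  # maps -90..90 deg to 0..pi/2
    loudness = 1.0 / max(distance_m, 1.0)
    # cos^2 + sin^2 = 1, so perceived power is constant as the object moves
    return loudness * math.cos(theta), loudness * math.sin(theta)
```

An object straight ahead at 1 m gets equal gains of about 0.707 in each ear; an object 90 degrees to the right is heard only in the right channel.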

Tobler-Ammann et al [114] evaluated the use of virtual reality exercise games to encourage exploration of neglected space in patients with visuospatial neglect after stroke. Cognitive and spatial exploration skills trended toward improvement after the use of virtual reality exercise games and continued improving at follow-up in 5 of 7 participants. Adherence rates were high, and there were no adverse events.

Melillo et al [115] evaluated the efficacy of an augmented reality wearable improved vision system for patients with color vision deficiency. The system captures colors from the environment, remaps them, and displays the result to the user via a head-mounted display. It significantly improved Ishihara Vision Test scores in participants with color vision deficiency (mean score 5.8 vs 14.8, P=.03).
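The remapping used by Melillo et al is not described in this review. As a purely hypothetical illustration of color remapping for red-green deficiency, the sketch below adds a scaled red-minus-green difference to the blue channel, so that contrast along the red-green axis, which a person with red-green deficiency may miss, also appears as a change in blue. This simple channel-shifting heuristic is an assumption chosen for clarity; it is not the published system and is simpler than full daltonization, which first simulates the dichromat's view. The gain of 0.7 is arbitrary.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _clamp(value: float) -> int:
    """Round and clamp a channel value to the 0-255 range."""
    return int(round(max(0.0, min(255.0, value))))


def shift_red_green_to_blue(pixel: RGB, gain: float = 0.7) -> RGB:
    """Hypothetical remap: add gain * (red - green) to the blue channel.

    Reddish pixels become bluer and greenish pixels lose blue, so a
    red/green difference is also encoded on the blue axis. Neutral
    pixels (red == green) are left unchanged.
    """
    r, g, b = pixel
    return r, g, _clamp(b + gain * (r - g))
```

Applied per pixel to each captured frame before display, this would turn a red and a green object of similar brightness into a bluish and a yellowish one, which remain distinguishable for most people with red-green deficiency.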

Discussion

Although a wide range of clinically evaluated ophthalmic applications of extended reality were identified, we focused predominantly on 3 domains: education, diagnostics, and therapeutics. In education, simulators demonstrated efficacy and validity in improving surgical and ophthalmoscopy skills. In diagnostics, extended reality devices demonstrated proof-of-concept in displaying ocular imaging data and validity in assessing the function of patients with glaucoma. In therapeutics, heads-up surgical systems were found to be efficacious and safe alternatives to conventional microscope surgery. The overall evidence for the utility of these applications, however, is limited. Only 8 of 87 (9%) studies had an OCEBM level of evidence of 1b (individual randomized trials with narrow confidence intervals), while 79 of 87 (91%) had levels ranging from 2b to 4 (cohort studies, case-control studies, and case series). For extended reality applications evaluated by only 1 or 2 studies, this limited evidence makes it difficult to extrapolate their utility to a wider context.

The acquisition of motor skills, as described by the Fitts–Posner 3-stage theory [116] and the Dreyfus and Dreyfus model [117], progresses from an initial cognitive phase, in which learners attempt to understand the task, to an associative phase, in which they modify movement strategies based on feedback, to a final autonomous phase, in which motor performance is fluid. Advances in surgical simulation technology have provided options to augment or replace traditional methods without compromising training efficiency or patient safety. Although most randomized trials [38,39,45-47] showed that simulator-trained groups performed better than groups with no training at all, only 3 studies [48,49,51] compared simulator-trained participants with participants trained by conventional methods, such as wet-lab materials and real patients. Simulator-trained inexperienced surgeons outperformed conventionally trained inexperienced surgeons only in laser-based procedures [48,51]. In intraocular surgery, the performance of simulator-trained inexperienced surgeons was comparable, but not superior, to that of wet-lab trained inexperienced surgeons, and they took longer to complete the procedures [49]. This could be because the instruments used in wet-lab training were identical to those used in the operating room, whereas surgical simulators may not have been able to emulate all tactile and ergonomic aspects of ophthalmic surgery. This suggests a continued role for wet-lab training, at least until simulators can closely replicate the full surgical experience.

Practice of complex motor skills has been shown to be most effective in multiple short training sessions spaced over time with variable tasks [118]. Simulation-based training allows for this, and most efficacy studies [37,39,40] demonstrated that extended reality surgical simulators improved surgical performance in the specific procedure for which participants were trained. However, the same studies found little evidence of a crossover effect (that is, that these improvements carried over to other surgical procedures), suggesting that simulator training is highly specific. Intense focus on isolated surgical steps may come at the expense of skill development in other steps [37], so the transfer of surgical skills cannot be assumed when planning a surgical training curriculum. Nonetheless, simulation training appears to reduce complications for both surgically naive and less experienced surgeons and to improve performance for experienced surgeons returning to a procedure after a hiatus.

Studies evaluating ophthalmoscopy simulators found that simulator training can improve both direct and indirect ophthalmoscopy skills. Compared with surgical simulators, however, ophthalmoscopy simulators are not as widely adopted in ophthalmic training curricula. One possible reason lies in the nature of the simulated task: surgery performed by inexperienced novices risks harming the patient, whereas ophthalmoscopy performed by a novice costs the patient only minor discomfort and time. Surgical simulators with demonstrated efficacy and validity may therefore be more of a necessity than ophthalmoscopy simulators.

Validity is the cornerstone of educational assessment: it justifies applying an assessment for a given purpose, and without it, assessment scores carry little intrinsic meaning [25]. Most validity studies on educational simulators addressed only 1 or 2 sources of validity evidence. More studies addressing all 5 sources of validity evidence would allow more robust interpretation of assessment scores in ophthalmic training.
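In Messick's unified framework [24,25], the 5 sources of validity evidence are content, response process, internal structure, relations to other variables, and consequences. The minimal Python sketch below shows how a reviewer might record which sources a study addresses and what fraction of the framework that covers. The function names are illustrative, and the example study is hypothetical rather than any study included in this review.

```python
from enum import Enum
from typing import Iterable, Set


class ValiditySource(Enum):
    """The five sources of validity evidence in Messick's unified framework."""
    CONTENT = "content"
    RESPONSE_PROCESS = "response process"
    INTERNAL_STRUCTURE = "internal structure"
    RELATIONS_TO_OTHER_VARIABLES = "relations to other variables"
    CONSEQUENCES = "consequences"


def missing_sources(addressed: Iterable[ValiditySource]) -> Set[ValiditySource]:
    """Sources for which a study provides no evidence."""
    return set(ValiditySource) - set(addressed)


def coverage(addressed: Iterable[ValiditySource]) -> float:
    """Fraction of the five sources a study addresses (duplicates ignored)."""
    return len(set(addressed)) / len(ValiditySource)


# Hypothetical study that only compares novice and expert simulator scores,
# which supplies evidence for relations to other variables alone.
example = [ValiditySource.RELATIONS_TO_OTHER_VARIABLES]
print(f"coverage: {coverage(example):.0%}")
print("missing:", sorted(s.value for s in missing_sources(example)))
```

A known-groups comparison of this kind is typical of older "construct validity" studies and, on its own, covers only one of the five sources.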

In diagnostics, visualizing ocular imaging data such as optical coherence tomography, fundus photography, and angiography in virtual reality and augmented reality can reveal important intraocular spatial relationships [119,120] and allow interactive exploration of imaging data to aid education, understanding of disease, clinical assessment, and therapy. These studies [119,120] demonstrated proof-of-concept, but more studies are needed to evaluate their efficacy and accuracy for clinical use. Extended reality applications also demonstrated validity evidence and feasibility in objectively assessing functional limitation and driving performance in patients with glaucoma and hemianopia. The scope of these applications, however, is currently limited by the small number of studies and the few sources of validity evidence they address. Moreover, although extended reality could simulate the visual environment, it could not account for nonvisual cues such as sound and touch, which patients with ophthalmic disease might rely on in daily function.

In therapeutics, heads-up surgery offered better visualization, better ergonomics, and lower endoillumination intensities than traditional microscope surgery without compromising outcomes. Widespread adoption of heads-up surgery, however, is limited by several factors. First, the comfort of assistant surgeons and anesthetists has been shown to be reduced by the positioning of the heads-up display [94,96]. Second, the learning curve of heads-up surgery has yet to be studied comprehensively. Talcott et al [91] reported that surgeons rated the traditional microscope as easier to use than the NGENUITY 3D visualization system, showing that the preference for heads-up surgery was not unanimous. Additional experience with heads-up displays could help guide ophthalmic surgeons in transitioning from traditional microscopes to these novel systems.

Nonstereoscopic, nonimmersive binocular treatment has been shown to be a promising approach for children with amblyopia, with positive outcomes in amblyopic eye visual acuity and stereoacuity [121,122]. Likewise, our review found that stereoscopic, immersive dichoptic stimulation conferred the same benefits on patients with amblyopia. The 2 studies included in our review reported high adherence rates [21,111], whereas some studies of nonimmersive dichoptic stimulation have reported lower adherence rates [123,124]. Although no study has compared immersive with nonimmersive dichoptic stimulation, we postulate that immersive dichoptic stimulation can engender better patient adherence to binocular treatment. Before immersive binocular treatment can be recommended over standard binocular treatment or even over conventional occlusion therapy, additional comparative studies are needed to determine whether it is an appropriate replacement for, or adjunct to, conventional therapy. A cost-benefit analysis would also be important, given that conventional therapy is affordable and efficacious.

Extended reality applications are not without adverse effects. Studies have shown that viewing 3D displays can induce objective changes in accommodative function, convergence, refractive error, and the tear film [125-130], as well as subjective symptoms such as asthenopia, motion sickness, fatigue, and head or neck discomfort [131]. Techniques such as discrete viewpoint control may ameliorate these adverse effects, but they are not yet widely adopted [132]. Most studies in our review did not evaluate the incidence of adverse effects induced by their extended reality setups. Amid growing anticipation for the adoption of extended reality, more research is needed to determine whether these adverse effects significantly affect the efficacy of ophthalmic applications and to inform user safety guidelines.
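The specific technique in [132] is not detailed here. Assuming that discrete viewpoint control means rotating the virtual viewpoint in fixed steps rather than smoothly (often called snap turning), the rationale usually given is that removing continuous visual motion reduces the visual-vestibular conflict associated with motion sickness. The controller below is a hypothetical Python sketch; the class name, the 30 degree step, and the 0.7 trigger threshold are arbitrary illustrative choices.

```python
class SnapTurner:
    """Hypothetical snap-rotation controller: turns the viewpoint in fixed
    steps instead of continuously, so no smooth rotational motion is shown."""

    def __init__(self, step_deg: float = 30.0, threshold: float = 0.7) -> None:
        self.step_deg = step_deg    # assumed size of each discrete turn
        self.threshold = threshold  # stick deflection needed to trigger a turn
        self.yaw_deg = 0.0
        self._armed = True          # re-armed once the stick returns to centre

    def update(self, stick_x: float) -> float:
        """Process one frame of horizontal stick input in [-1, 1] and
        return the current yaw in degrees, in [0, 360)."""
        if abs(stick_x) < self.threshold:
            self._armed = True      # stick released: allow the next snap
        elif self._armed:
            direction = 1.0 if stick_x > 0 else -1.0
            self.yaw_deg = (self.yaw_deg + direction * self.step_deg) % 360.0
            self._armed = False     # one snap per push, however long it is held
        return self.yaw_deg
```

Holding the stick to one side produces a single 30 degree jump rather than a continuous spin; the user must release and push again to turn further.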

The cost of extended reality technologies will also be a major concern for potential users. One study [133] in 2013 estimated that the EyeSi surgical simulator would save the average US ophthalmic residency program $4980 yearly in nonsupply costs based on time saved in the operating room, so that 34 years would be needed to recoup the simulator’s cost price. Another study [134] in 2013 found that nonsupply cost savings from EyeSi use were higher in larger residency programs but still insufficient to recoup costs within 10 years. These cost analyses, however, do not make comparisons with conventional methods of ophthalmic surgical training. Extended reality surgical simulators can simulate surgical scenarios that are otherwise impossible to replicate in a wet lab, such as posterior polar cataracts, zonulysis over specific clock hours, or a shallow anterior chamber; this ability may represent intangible savings in ophthalmic teaching in terms of the time of supervising surgeons and operating room staff, resources, and scheduling. Such comparisons might help to better define the role of extended reality simulators in surgical training from a cost perspective.
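The 2013 estimate [133] can be read as a simple, undiscounted payback calculation: years to recoup = up-front cost / annual savings. The Python sketch below shows that relationship. The implied cost of about $169,000 (4980 × 34) is a back-calculation for illustration only, not a price reported in the source, and it ignores maintenance, discounting, and any other costs the original analysis may have included.

```python
def payback_years(upfront_cost: float, annual_savings: float) -> float:
    """Undiscounted payback period: years of savings needed to recover the cost."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive to ever recoup the cost")
    return upfront_cost / annual_savings


def implied_cost(annual_savings: float, payback: float) -> float:
    """Back-calculate the up-front cost implied by a reported payback period."""
    return annual_savings * payback


annual_savings = 4980.0   # nonsupply savings per program per year, as reported [133]
reported_payback = 34.0   # years, as reported [133]
cost = implied_cost(annual_savings, reported_payback)
print(f"implied up-front cost: ${cost:,.0f}")
print(f"check: {payback_years(cost, annual_savings):.1f} years")
```

Because payback scales inversely with annual savings, any added benefit counted in a comparison with wet-lab training (for example, supervision time saved) would shorten the payback period proportionally.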

Extended reality promises utility in many areas of application by overcoming the limits of the unalterable physical environment. In ophthalmic surgical education, extended reality surgical simulators demonstrate efficacy and validity in improving surgical performance. Before surgical simulators can be considered a competitive alternative to traditional ophthalmic surgical training, 2 main barriers need to be addressed: cost and the need for additional high-quality comparative studies. Until these issues are addressed, surgical simulators can play only a supporting role in surgical training programs, despite their versatility and ability to provide quantitative feedback. In therapy, extended reality heads-up surgical systems are already widely used in ophthalmic surgery, and the literature shows that this type of system provides an efficacious and safe platform for surgical visualization. Other diagnostic and therapeutic applications mainly demonstrate proof-of-concept and lack robust comparative evidence. Additional comparative studies with designs that yield a high level of evidence should be encouraged to explore the efficacy of extended reality in these varied ophthalmic applications. As extended reality is a nascent technology, we anticipate that it will continue to demonstrate value and offer novel alternatives in ophthalmic education, diagnostics, and therapy.

Abbreviations

- DSpecs: digital spectacles
- GRASIS: Global Rating Assessment of Skills in Intraocular Surgery
- OCEBM: Oxford Centre for Evidence-Based Medicine
- OSACSS: Objective Structured Assessment of Cataract Surgical Skill Score
- OSATS: Objective Structured Assessment of Technical Surgical Skills
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-analyses

Multimedia Appendix 1: Search strategy.

Multimedia Appendix 2: Messick’s five sources of validity evidence.

Multimedia Appendix 3: Studies evaluating validity of assessment based on surgical simulators.

Multimedia Appendix 4: Case-series and cohort studies evaluating the use of heads-up surgical systems.

Footnotes

Conflicts of Interest: None declared.

References

- 1. Brigham TJ. Reality check: basics of augmented, virtual, and mixed reality. Med Ref Serv Q. 2017;36(2):171–178. doi: 10.1080/02763869.2017.1293987.

- 2. Yeung AWK, Tosevska A, Klager E, Eibensteiner F, Laxar D, Stoyanov J, Glisic M, Zeiner S, Kulnik ST, Crutzen R, Kimberger O, Kletecka-Pulker M, Atanasov AG, Willschke H. Virtual and augmented reality applications in medicine: analysis of the scientific literature. J Med Internet Res. 2021 Feb 10;23(2):e25499. doi: 10.2196/25499. https://www.jmir.org/2021/2/e25499/

- 3. Milgram P, Kishino F. A taxonomy of mixed reality visual displays. IEICE Transactions on Information Systems. 1994;E77D(12):1321–1329. https://www.semanticscholar.org/paper/A-Taxonomy-of-Mixed-Reality-Visual-Displays-Milgram-Kishino/f78a31be8874eda176a5244c645289be9f1d4317

- 4. Martin G, Koizia L, Kooner A, Cafferkey J, Ross C, Purkayastha S, Sivananthan A, Tanna A, Pratt P, Kinross J, PanSurg Collaborative. Use of the HoloLens2 mixed reality headset for protecting health care workers during the covid-19 pandemic: prospective, observational evaluation. J Med Internet Res. 2020 Aug 14;22(8):e21486. doi: 10.2196/21486. https://www.jmir.org/2020/8/e21486/

- 5. Cipresso P, Giglioli IAC, Raya MA, Riva G. The past, present, and future of virtual and augmented reality research: a network and cluster analysis of the literature. Front Psychol. 2018 Nov 6;9:2086. doi: 10.3389/fpsyg.2018.02086.

- 6. Andrews C, Southworth MK, Silva JNA, Silva JR. Extended reality in medical practice. Curr Treat Options Cardiovasc Med. 2019 Mar 30;21(4):18. doi: 10.1007/s11936-019-0722-7. http://europepmc.org/abstract/MED/30929093

- 7. Zhao J, Xu X, Jiang H, Ding Y. The effectiveness of virtual reality-based technology on anatomy teaching: a meta-analysis of randomized controlled studies. BMC Med Educ. 2020 Apr 25;20(1):127. doi: 10.1186/s12909-020-1994-z. https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-020-1994-z

- 8. Mazur T, Mansour TR, Mugge L, Medhkour A. Virtual reality-based simulators for cranial tumor surgery: a systematic review. World Neurosurg. 2018 Feb;110:414–422. doi: 10.1016/j.wneu.2017.11.132.

- 9. Bric JD, Lumbard DC, Frelich MJ, Gould JC. Current state of virtual reality simulation in robotic surgery training: a review. Surg Endosc. 2016 Jun;30(6):2169–78. doi: 10.1007/s00464-015-4517-y.

- 10. Alaker M, Wynn GR, Arulampalam T. Int J Surg. 2016 May;29:85–94. doi: 10.1016/j.ijsu.2016.03.034. https://linkinghub.elsevier.com/retrieve/pii/S1743-9191(16)00251-X

- 11. Kyaw BM, Saxena N, Posadzki P, Vseteckova J, Nikolaou CK, George PP, Divakar U, Masiello I, Kononowicz AA, Zary N, Tudor Car L. Virtual reality for health professions education: systematic review and meta-analysis by the digital health education collaboration. J Med Internet Res. 2019 Jan 22;21(1):e12959. doi: 10.2196/12959. https://www.jmir.org/2019/1/e12959/

- 12. Jiang T, Yu D, Wang Y, Zan T, Wang S, Li Q. HoloLens-based vascular localization system: precision evaluation study with a three-dimensional printed model. J Med Internet Res. 2020 Apr 17;22(4):e16852. doi: 10.2196/16852. https://www.jmir.org/2020/4/e16852/

- 13. Wei NJ, Dougherty B, Myers A, Badawy SM. Using Google Glass in surgical settings: systematic review. JMIR Mhealth Uhealth. 2018 Mar 06;6(3):e54. doi: 10.2196/mhealth.9409. https://mhealth.jmir.org/2018/3/e54/

- 14. Eckert M, Volmerg JS, Friedrich CM. Augmented reality in medicine: systematic and bibliographic review. JMIR Mhealth Uhealth. 2019 Apr 26;7(4):e10967. doi: 10.2196/10967. https://mhealth.jmir.org/2019/4/e10967/

- 15. Garrett B, Taverner T, Gromala D, Tao G, Cordingley E, Sun C. Virtual reality clinical research: promises and challenges. JMIR Serious Games. 2018 Oct 17;6(4):e10839. doi: 10.2196/10839. http://games.jmir.org/2018/4/e10839/

- 16. Parsons TD, Riva G, Parsons S, Mantovani F, Newbutt N, Lin L, Venturini E, Hall T. Virtual reality in pediatric psychology. Pediatrics. 2017 Nov;140(Suppl 2):S86–S91. doi: 10.1542/peds.2016-1758I. http://pediatrics.aappublications.org/cgi/pmidlookup?view=long&pmid=29093039

- 17. de Rooij IJM, van de Port IGL, Meijer JG. Effect of virtual reality training on balance and gait ability in patients with stroke: systematic review and meta-analysis. Phys Ther. 2016 Dec;96(12):1905–1918. doi: 10.2522/ptj.20160054.

- 18. Ahmed Y, Scott IU, Greenberg PB. A survey of the role of virtual surgery simulators in ophthalmic graduate medical education. Graefes Arch Clin Exp Ophthalmol. 2011 Aug 8;249(8):1263–5. doi: 10.1007/s00417-010-1537-0.

- 19. Paul SK, Clark MA, Scott IU, Greenberg PB. Virtual eye surgery training in ophthalmic graduate medical education. Can J Ophthalmol. 2018 Dec;53(6):e218–e220. doi: 10.1016/j.jcjo.2018.03.018.

- 20. Sayed AM, Kashem R, Abdel-Mottaleb M, Roongpoovapatr V, Eleiwa TK, Abdel-Mottaleb M, Parrish RK, Abou Shousha M. Toward improving the mobility of patients with peripheral visual field defects with novel digital spectacles. Am J Ophthalmol. 2020 Feb;210:136–145. doi: 10.1016/j.ajo.2019.10.005.

- 21. Žiak P, Holm A, Halička J, Mojžiš P, Piñero DP. Amblyopia treatment of adults with dichoptic training using the virtual reality oculus rift head mounted display: preliminary results. BMC Ophthalmol. 2017 Jun 28;17(1):105. doi: 10.1186/s12886-017-0501-8. https://bmcophthalmol.biomedcentral.com/articles/10.1186/s12886-017-0501-8

- 22. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009 Aug 18;151(4):264–9, W64. doi: 10.7326/0003-4819-151-4-200908180-00135. https://www.acpjournals.org/doi/abs/10.7326/0003-4819-151-4-200908180-00135?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 23. Jeremy HI, Paul G, Trish G, Carl H, Alessandro L, Ivan M, Bob P, Hazel T, Olive GH. The Oxford levels of evidence 2. Oxford Centre for Evidence-Based Medicine. [2020-05-01]. https://www.cebm.net/index.aspx?o=56532016

- 24. Messick S. Foundations of validity: meaning and consequences in psychological assessment. ETS Research Report Series. 2014 Aug 08;1993(2):i–18. doi: 10.1002/j.2333-8504.1993.tb01562.x.

- 25. Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003 Sep;37(9):830–7. doi: 10.1046/j.1365-2923.2003.01594.x.

- 26. Cook DA, Hatala R. Validation of educational assessments: a primer for simulation and beyond. Adv Simul (Lond). 2016;1:31. doi: 10.1186/s41077-016-0033-y. https://advancesinsimulation.biomedcentral.com/articles/10.1186/s41077-016-0033-y

- 27. Ghaderi I, Manji F, Park YS, Juul D, Ott M, Harris I, Farrell TM. Technical skills assessment toolbox: a review using the unitary framework of validity. Ann Surg. 2015 Feb;261(2):251–62. doi: 10.1097/SLA.0000000000000520.

- 28. Thomsen ASS, Bach-Holm D, Kjærbo H, Højgaard-Olsen K, Subhi Y, Saleh GM, Park YS, la Cour M, Konge L. Operating room performance improves after proficiency-based virtual reality cataract surgery training. Ophthalmology. 2017 Apr;124(4):524–531. doi: 10.1016/j.ophtha.2016.11.015.

- 29. la Cour M, Thomsen ASS, Alberti M, Konge L. Simulators in the training of surgeons: is it worth the investment in money and time? 2018 Jules Gonin lecture of the Retina Research Foundation. Graefes Arch Clin Exp Ophthalmol. 2019 May;257(5):877–881. doi: 10.1007/s00417-019-04244-y.

- 30. Roohipoor R, Yaseri M, Teymourpour A, Kloek C, Miller JB, Loewenstein JI. Early performance on an eye surgery simulator predicts subsequent resident surgical performance. J Surg Educ. 2017;74(6):1105–1115. doi: 10.1016/j.jsurg.2017.04.002.

- 31. Belyea DA, Brown SE, Rajjoub LZ. Influence of surgery simulator training on ophthalmology resident phacoemulsification performance. J Cataract Refract Surg. 2011 Oct;37(10):1756–61. doi: 10.1016/j.jcrs.2011.04.032.

- 32. Pokroy R, Du E, Alzaga A, Khodadadeh S, Steen D, Bachynski B, Edwards P. Impact of simulator training on resident cataract surgery. Graefes Arch Clin Exp Ophthalmol. 2013 Mar;251(3):777–81. doi: 10.1007/s00417-012-2160-z.

- 33. Ferris JD, Donachie PH, Johnston RL, Barnes B, Olaitan M, Sparrow JM. Royal College of Ophthalmologists' National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020 Mar;104(3):324–329. doi: 10.1136/bjophthalmol-2018-313817.

- 34. Lucas L, Schellini SA, Lottelli AC. Complications in the first 10 phacoemulsification cataract surgeries with and without prior simulator training. Arq Bras Oftalmol. 2019;82(4):289–294. doi: 10.5935/0004-2749.20190057. https://www.scielo.br/scielo.php?script=sci_arttext&pid=S0004-27492019005006104&lng=en&nrm=iso&tlng=en

- 35. Staropoli PC, Gregori NZ, Junk AK, Galor A, Goldhardt R, Goldhagen BE, Shi W, Feuer W. Surgical simulation training reduces intraoperative cataract surgery complications among residents. Simul Healthc. 2018 Feb;13(1):11–15. doi: 10.1097/SIH.0000000000000255. http://europepmc.org/abstract/MED/29023268

- 36. McCannel CA, Reed DC, Goldman DR. Ophthalmic surgery simulator training improves resident performance of capsulorhexis in the operating room. Ophthalmology. 2013 Dec;120(12):2456–2461. doi: 10.1016/j.ophtha.2013.05.003.

- 37. McCannel CA. Continuous curvilinear capsulorhexis training and non-rhexis related vitreous loss: the specificity of virtual reality simulator surgical training (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2017 Aug;115:T2. http://europepmc.org/abstract/MED/29021716

- 38. Bergqvist J, Person A, Vestergaard A, Grauslund J. Establishment of a validated training programme on the Eyesi cataract simulator. A prospective randomized study. Acta Ophthalmol. 2014 Nov;92(7):629–34. doi: 10.1111/aos.12383.

- 39. Thomsen ASS, Kiilgaard JF, la Cour M, Brydges R, Konge L. Is there inter-procedural transfer of skills in intraocular surgery? A randomized controlled trial. Acta Ophthalmol. 2017 Dec;95(8):845–851. doi: 10.1111/aos.13434.

- 40. Selvander M, Åsman P. Virtual reality cataract surgery training: learning curves and concurrent validity. Acta Ophthalmol. 2012 Aug;90(5):412–417. doi: 10.1111/j.1755-3768.2010.02028.x.

- 41. Saleh GM, Lamparter J, Sullivan PM, O'Sullivan F, Hussain B, Athanasiadis I, Litwin AS, Gillan SN. The international forum of ophthalmic simulation: developing a virtual reality training curriculum for ophthalmology. Br J Ophthalmol. 2013 Jun 26;97(6):789–92. doi: 10.1136/bjophthalmol-2012-302764.

- 42. Gonzalez-Gonzalez LA, Payal AR, Gonzalez-Monroy JE, Daly MK. Ophthalmic surgical simulation in training dexterity in dominant and nondominant hands: results from a pilot study. J Surg Educ. 2016;73(4):699–708. doi: 10.1016/j.jsurg.2016.01.014.

- 43. Bozkurt Oflaz A, Ekinci Köktekir B, Okudan S. Does cataract surgery simulation correlate with real-life experience? Turk J Ophthalmol. 2018 Jun;48(3):122–126. doi: 10.4274/tjo.10586.

- 44. Ropelato S, Menozzi M, Michel D, Siegrist M. Augmented reality microsurgery: a tool for training micromanipulations in ophthalmic surgery using augmented reality. Simul Healthc. 2020 Apr;15(2):122–127. doi: 10.1097/SIH.0000000000000413.

- 45. Jonas JB, Rabethge S, Bender H. Computer-assisted training system for pars plana vitrectomy. Acta Ophthalmol Scand. 2003 Dec;81(6):600–4. doi: 10.1046/j.1395-3907.2003.0078.x. https://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=1395-3907&date=2003&volume=81&issue=6&spage=600

- 46. Feldman BH, Ake JM, Geist CE. Virtual reality simulation. Ophthalmology. 2007 Apr;114(4):828.e1–4. doi: 10.1016/j.ophtha.2006.10.016.

- 47. Feudner EM, Engel C, Neuhann IM, Petermeier K, Bartz-Schmidt K, Szurman P. Virtual reality training improves wet-lab performance of capsulorhexis: results of a randomized, controlled study. Graefes Arch Clin Exp Ophthalmol. 2009 Jul;247(7):955–63. doi: 10.1007/s00417-008-1029-7.

- 48. Peugnet F, Dubois P, Rouland JF. Virtual reality versus conventional training in retinal photocoagulation: a first clinical assessment. Comput Aided Surg. 1998;3(1):20–6. doi: 10.1002/(SICI)1097-0150(1998)3:1<20::AID-IGS3>3.0.CO;2-N.

- 49. Daly MK, Gonzalez E, Siracuse-Lee D, Legutko PA. Efficacy of surgical simulator training versus traditional wet-lab training on operating room performance of ophthalmology residents during the capsulorhexis in cataract surgery. J Cataract Refract Surg. 2013 Nov;39(11):1734–41. doi: 10.1016/j.jcrs.2013.05.044.

- 50. Deuchler S, Wagner C, Singh P, Müller M, Al-Dwairi R, Benjilali R, Schill M, Ackermann H, Bon D, Kohnen T, Schoene B, Koss M, Koch F. Clinical efficacy of simulated vitreoretinal surgery to prepare surgeons for the upcoming intervention in the operating room. PLoS One. 2016;11(3):e0150690. doi: 10.1371/journal.pone.0150690. https://dx.plos.org/10.1371/journal.pone.0150690

- 51.Alwadani F, Morsi MS. PixEye virtual reality training has the potential of enhancing proficiency of laser trabeculoplasty performed by medical students: a pilot study. Middle East Afr J Ophthalmol. 2012 Jan;19(1):120–2. doi: 10.4103/0974-9233.92127. http://www.meajo.org/article.asp?issn=0974-9233;year=2012;volume=19;issue=1;spage=120;epage=122;aulast=Alwadani .MEAJO-19-120 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52. Sikder S, Luo J, Banerjee PP, Luciano C, Kania P, Song JC, Kahtani ES, Edward DP, Towerki AA. The use of a virtual reality surgical simulator for cataract surgical skill assessment with 6 months of intervening operating room experience. Clin Ophthalmol. 2015;9:141–9. doi: 10.2147/OPTH.S69970.

- 53. Saleh GM, Theodoraki K, Gillan S, Sullivan P, O'Sullivan F, Hussain B, Bunce C, Athanasiadis I. The development of a virtual reality training programme for ophthalmology: repeatability and reproducibility (part of the International Forum for Ophthalmic Simulation Studies). Eye (Lond). 2013 Nov;27(11):1269–74. doi: 10.1038/eye.2013.166. http://europepmc.org/abstract/MED/23970027

- 54. Rossi JV, Verma D, Fujii GY, Lakhanpal RR, Wu SL, Humayun MS, De Juan E. Virtual vitreoretinal surgical simulator as a training tool. Retina. 2004 Apr;24(2):231–6. doi: 10.1097/00006982-200404000-00007.

- 55. Mahr MA, Hodge DO. Construct validity of anterior segment anti-tremor and forceps surgical simulator training modules: attending versus resident surgeon performance. J Cataract Refract Surg. 2008 Jun;34(6):980–5. doi: 10.1016/j.jcrs.2008.02.015.

- 56. Solverson DJ, Mazzoli RA, Raymond WR, Nelson ML, Hansen EA, Torres MF, Bhandari A, Hartranft CD. Virtual reality simulation in acquiring and differentiating basic ophthalmic microsurgical skills. Simul Healthc. 2009;4(2):98–103. doi: 10.1097/SIH.0b013e318195419e.

- 57. Privett B, Greenlee E, Rogers G, Oetting TA. Construct validity of a surgical simulator as a valid model for capsulorhexis training. J Cataract Refract Surg. 2010 Nov;36(11):1835–8. doi: 10.1016/j.jcrs.2010.05.020.

- 58. Nathoo N, Ng M, Ramstead CL, Johnson MC. Comparing performance of junior and senior ophthalmology residents on an intraocular surgical simulator. Can J Ophthalmol. 2011 Feb;46(1):87–8. doi: 10.3129/i10-065.

- 59. Le TDB, Adatia FA, Lam WC. Virtual reality ophthalmic surgical simulation as a feasible training and assessment tool: results of a multicentre study. Can J Ophthalmol. 2011 Feb;46(1):56–60. doi: 10.3129/i10-051.

- 60. Cissé C, Angioi K, Luc A, Berrod JP, Conart JB. EYESI surgical simulator: validity evidence of the vitreoretinal modules. Acta Ophthalmol. 2019 Mar;97(2):e277–e282. doi: 10.1111/aos.13910.

- 61. Spiteri AV, Aggarwal R, Kersey TL, Sira M, Benjamin L, Darzi AW, Bloom PA. Development of a virtual reality training curriculum for phacoemulsification surgery. Eye (Lond). 2014 Jan;28(1):78–84. doi: 10.1038/eye.2013.211. http://europepmc.org/abstract/MED/24071776

- 62. Lam CK, Sundaraj K, Sulaiman MN, Qamarruddin FA. Virtual phacoemulsification surgical simulation using visual guidance and performance parameters as a feasible proficiency assessment tool. BMC Ophthalmol. 2016 Jun 14;16:88. doi: 10.1186/s12886-016-0269-2. https://bmcophthalmol.biomedcentral.com/articles/10.1186/s12886-016-0269-2

- 63. Selvander M, Asman P. Ready for OR or not? Human reader supplements Eyesi scoring in cataract surgical skills assessment. Clin Ophthalmol. 2013;7:1973–7. doi: 10.2147/OPTH.S48374.

- 64. Thomsen AS, Kiilgaard JF, Kjaerbo H, la Cour M, Konge L. Simulation-based certification for cataract surgery. Acta Ophthalmol. 2015 Aug;93(5):416–21. doi: 10.1111/aos.12691.

- 65. Thomsen AS, Smith P, Subhi Y, la Cour M, Tang L, Saleh GM, Konge L. High correlation between performance on a virtual-reality simulator and real-life cataract surgery. Acta Ophthalmol. 2017 May;95(3):307–311. doi: 10.1111/aos.13275.

- 66. Jacobsen MF, Konge L, Bach-Holm D, la Cour M, Holm L, Højgaard-Olsen K, Kjærbo H, Saleh GM, Thomsen AS. Correlation of virtual reality performance with real-life cataract surgery performance. J Cataract Refract Surg. 2019 Sep;45(9):1246–1251. doi: 10.1016/j.jcrs.2019.04.007.

- 67. Vergmann AS, Vestergaard AH, Grauslund J. Virtual vitreoretinal surgery: validation of a training programme. Acta Ophthalmol. 2017 Feb;95(1):60–65. doi: 10.1111/aos.13209.

- 68. Selvander M, Asman P. Cataract surgeons outperform medical students in Eyesi virtual reality cataract surgery: evidence for construct validity. Acta Ophthalmol. 2013 Aug;91(5):469–74. doi: 10.1111/j.1755-3768.2012.02440.x.

- 69. Wu DJ, Greenberg PB. A self-directed preclinical course in ophthalmic surgery. J Surg Educ. 2016;73(3):370–4. doi: 10.1016/j.jsurg.2015.11.005.

- 70. Yong JJ, Migliori ME, Greenberg PB. A novel preclinical course in ophthalmology and ophthalmic virtual surgery. Med Health R I. 2012 Nov;95(11):345–8.

- 71. Liang S, Banerjee PP, Edward DP. A high performance graphic and haptic curvilinear capsulorrhexis simulation system. Conf Proc IEEE Eng Med Biol Soc. 2009;2009:5092–5. doi: 10.1109/IEMBS.2009.5332727.

- 72. Laurell C, Söderberg P, Nordh L, Skarman E, Nordqvist P. Computer-simulated phacoemulsification. Ophthalmology. 2004 Apr;111(4):693–8. doi: 10.1016/j.ophtha.2003.06.023.

- 73. Ng DS, Sun Z, Young AL, Ko ST, Lok JK, Lai TY, Sikder S, Tham CC. Impact of virtual reality simulation on learning barriers of phacoemulsification perceived by residents. Clin Ophthalmol. 2018;12:885–893. doi: 10.2147/OPTH.S140411.

- 74. Leitritz MA, Ziemssen F, Suesskind D, Partsch M, Voykov B, Bartz-Schmidt KU, Szurman GB. Critical evaluation of the usability of augmented reality ophthalmoscopy for the training of inexperienced examiners. Retina. 2014 Apr;34(4):785–91. doi: 10.1097/IAE.0b013e3182a2e75d.

- 75. Rai AS, Rai AS, Mavrikakis E, Lam WC. Teaching binocular indirect ophthalmoscopy to novice residents using an augmented reality simulator. Can J Ophthalmol. 2017 Oct;52(5):430–434. doi: 10.1016/j.jcjo.2017.02.015.

- 76. Chou J, Kosowsky T, Payal AR, Gonzalez Gonzalez LA, Daly MK. Construct and face validity of the Eyesi indirect ophthalmoscope simulator. Retina. 2017 Oct;37(10):1967–1976. doi: 10.1097/IAE.0000000000001438.

- 77. Borgersen NJ, Skou Thomsen AS, Konge L, Sørensen TL, Subhi Y. Virtual reality-based proficiency test in direct ophthalmoscopy. Acta Ophthalmol. 2018 Mar;96(2):e259–e261. doi: 10.1111/aos.13546.

- 78. Nguyen M, Quevedo-Uribe A, Kapralos B, Jenkin M, Kanev K, Jaimes N. An experimental training support framework for eye fundus examination skill development. Comput Methods Biomech Biomed Eng Imaging Vis. 2017 Oct 12;7(1):26–36. doi: 10.1080/21681163.2017.1376708.

- 79. Wilson AS, O'Connor J, Taylor L, Carruthers D. A 3D virtual reality ophthalmoscopy trainer. Clin Teach. 2017 Dec;14(6):427–431. doi: 10.1111/tct.12646.

- 80. Wei L, Najdovski Z, Nahavandi S, Weisinger H. Towards a haptically enabled optometry training simulator. Netw Model Anal Health Inform Bioinforma. 2014 May 1;3(1):1–8. doi: 10.1007/s13721-014-0060-3.

- 81. Wei L, Najdovski Z, Wael A, Nahavandi S, Weisinger H. Augmented optometry training simulator with multi-point haptics. Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics; October 14-17, 2012; Seoul, South Korea. pp. 2991–2997.

- 82. Maloca PM, de Carvalho JER, Heeren T, Hasler PW, Mushtaq F, Mon-Williams M, Scholl HPN, Balaskas K, Egan C, Tufail A, Witthauer L, Cattin PC. High-performance virtual reality volume rendering of original optical coherence tomography point-cloud data enhanced with real-time ray casting. Transl Vis Sci Technol. 2018 Jul;7(4):2. doi: 10.1167/tvst.7.4.2. https://tvst.arvojournals.org/article.aspx?doi=10.1167/tvst.7.4.2

- 83. Berger JW, Madjarov B. Augmented reality fundus biomicroscopy: a working clinical prototype. Arch Ophthalmol. 2001 Dec;119(12):1815–8. doi: 10.1001/archopht.119.12.1815.

- 84. Goh RLZ, Kong YXG, McAlinden C, Liu J, Crowston JG, Skalicky SE. Objective assessment of activity limitation in glaucoma with smartphone virtual reality goggles: a pilot study. Transl Vis Sci Technol. 2018 Jan;7(1):10. doi: 10.1167/tvst.7.1.10. http://europepmc.org/abstract/MED/29372112

- 85. Ungewiss J, Kübler T, Sippel K, Aehling K, Heister M, Rosenstiel W, Kasneci E, Papageorgiou E; Simulator/On-road Study Group. Agreement of driving simulator and on-road driving performance in patients with binocular visual field loss. Graefes Arch Clin Exp Ophthalmol. 2018 Dec;256(12):2429–2435. doi: 10.1007/s00417-018-4148-9.

- 86. Jones PR, Somoskeöy T, Chow-Wing-Bom H, Crabb DP. Seeing other perspectives: evaluating the use of virtual and augmented reality to simulate visual impairments (OpenVisSim). NPJ Digit Med. 2020;3:32. doi: 10.1038/s41746-020-0242-6. http://europepmc.org/abstract/MED/32195367

- 87. Asfaw DS, Jones PR, Mönter VM, Smith ND, Crabb DP. Does glaucoma alter eye movements when viewing images of natural scenes? A between-eye study. Invest Ophthalmol Vis Sci. 2018 Jul 02;59(8):3189–3198. doi: 10.1167/iovs.18-23779.

- 88. Dive S, Rouland JF, Lenoble Q, Szaffarczyk S, McKendrick AM, Boucart M. Impact of peripheral field loss on the execution of natural actions: a study with glaucomatous patients and normally sighted people. J Glaucoma. 2016 Oct;25(10):e889–e896. doi: 10.1097/IJG.0000000000000402.

- 89. Lee SS, Black AA, Wood JM. Effect of glaucoma on eye movement patterns and laboratory-based hazard detection ability. PLoS One. 2017;12(6):e0178876. doi: 10.1371/journal.pone.0178876. https://dx.plos.org/10.1371/journal.pone.0178876

- 90. Qian Z, Wang H, Fan H, Lin D, Li W. Three-dimensional digital visualization of phacoemulsification and intraocular lens implantation. Indian J Ophthalmol. 2019 Mar;67(3):341–343. doi: 10.4103/ijo.IJO_1012_18.

- 91. Talcott KE, Adam MK, Sioufi K, Aderman CM, Ali FS, Mellen PL, Garg SJ, Hsu J, Ho AC. Comparison of a three-dimensional heads-up display surgical platform with a standard operating microscope for macular surgery. Ophthalmol Retina. 2019 Mar;3(3):244–251. doi: 10.1016/j.oret.2018.10.016.

- 92. Romano MR, Cennamo G, Comune C, Cennamo M, Ferrara M, Rombetto L, Cennamo G. Evaluation of 3D heads-up vitrectomy: outcomes of psychometric skills testing and surgeon satisfaction. Eye (Lond). 2018 Jun;32(6):1093–1098. doi: 10.1038/s41433-018-0027-1. http://europepmc.org/abstract/MED/29445116

- 93. Kumar A, Hasan N, Kakkar P, Mutha V, Karthikeya R, Sundar D, Ravani R. Comparison of clinical outcomes between "heads-up" 3D viewing system and conventional microscope in macular hole surgeries: a pilot study. Indian J Ophthalmol. 2018 Dec;66(12):1816–1819. doi: 10.4103/ijo.IJO_59_18. http://www.ijo.in/article.asp?issn=0301-4738;year=2018;volume=66;issue=12;spage=1816;epage=1819;aulast=Kumar

- 94. Zhang Z, Wang L, Wei Y, Fang D, Fan S, Zhang S. The preliminary experiences with three-dimensional heads-up display viewing system for vitreoretinal surgery under various status. Curr Eye Res. 2019 Jan;44(1):102–109. doi: 10.1080/02713683.2018.1526305.

- 95. Matsumoto CS, Shibuya M, Makita J, Shoji T, Ohno H, Shinoda K, Matsumoto H. Heads-up 3D surgery under low light intensity conditions: new high-sensitivity HD camera for ophthalmological microscopes. J Ophthalmol. 2019;2019:5013463. doi: 10.1155/2019/5013463.

- 96. Rizzo S, Abbruzzese G, Savastano A, Giansanti F, Caporossi T, Barca F, Faraldi F, Virgili G. 3D surgical viewing system in ophthalmology: perceptions of the surgical team. Retina. 2018 Apr;38(4):857–861. doi: 10.1097/IAE.0000000000002018.