Abstract

A prevalent symptom of Parkinson’s disease (PD) is hypomimia, or reduced facial expression. In this paper, we present a method for diagnosing PD that utilizes the study of micro-expressions. We analyzed the facial action units (AUs) from 1812 videos of 604 individuals (61 with PD and 543 without PD; mean age 63.9 years, SD 7.8) collected online through a web-based tool (www.parktest.net). In these videos, participants were asked to make three facial expressions (a smiling, disgusted, and surprised face), each followed by a neutral face. Using techniques from computer vision and machine learning, we objectively measured the variance of the facial muscle movements and used it to distinguish between individuals with and without PD. The prediction accuracy using the facial micro-expressions was comparable to that of methodologies that utilize motor symptoms. Logistic regression analysis revealed that participants with PD had less variance in AU6 (cheek raiser), AU12 (lip corner puller), and AU4 (brow lowerer) than non-PD individuals. An automated classifier using a Support Vector Machine was trained on the variances and achieved 95.6% accuracy. Using facial expressions as a future digital biomarker for PD could be potentially transformative for patients in need of remote diagnoses due to physical separation (e.g., due to COVID) or immobility.

Subject terms: Parkinson's disease, Computer science

Parkinson’s disease can cause limb tremors, muscular rigidity, non-rhythmic head movements, soft speech, and much more1. One of the major symptoms of PD is stiffness in facial muscle movements and reduced facial expression, also known as hypomimia2. Hypomimia is considered an extremely sensitive biomarker for PD3, making it a promising tool for early diagnosis of PD4–6. It is very important to detect PD early since the reduced facial expressions are linked to reduced social wellbeing and depression7.

The existing digital biomarkers that capitalize on motor symptoms often use wearable sensors (e.g., MC10 BioStampRC sensor8, tri-axial gyroscope9), which are reliable but require active participation and are expensive, and thus not scalable. In contrast, facial expression analysis can be used as a non-invasive tool for detecting PD, requiring only a webcam or a phone with a camera. An additional benefit of analyzing videos is that potential PD patients do not need to be near a neurologist for an in-person diagnosis. This is potentially transformative for patients who need physical separation from others (e.g., due to COVID-19) or who are immobile. In addition, many underdeveloped areas and underrepresented populations can benefit from such a method, which uses facial expressions without requiring direct access to a neurologist.

In this work, we analyzed 1812 videos collected using an online tool (available at www.parktest.net). The videos contain 604 individuals (61 with PD and 543 without PD) performing three facial mimicry tasks. Each task consists of making a facial expression three times, with a neutral face after every expression. The three facial expressions were a smiling face, a disgusted face, and a surprised face. We analyzed the facial muscle movements using the facial action coding system10 while the participants were showing a facial expression. We computed the variance of the muscle movements in terms of action units (AUs) and showed that this measure of micro-expressions has the potential to be an important digital biomarker for PD. Our analysis shows that individuals with PD have fewer facial muscle movements than non-PD individuals (i.e., low variance in AU4 Brow Lowerer, AU6 Cheek Raiser, and AU12 Lip Corner Puller). We also find that the PD prediction accuracy using the facial muscles (95%) from videos is comparable to the existing state-of-the-art video analysis that utilizes limb movements (~93%)11.

Table 1 shows the demographic information. Table 2 shows the difference in the variance of AUs between the PD and non-PD populations. The significant differences were found in the smiling and surprised faces. Specifically, AU01 (inner brow raiser) and AU06 (cheek raiser) had a significant difference in the smiling facial expression. This difference indicates that the smiling facial expression has the greatest potential in differentiating individuals with and without PD.

Table 1.

Demographic Information.

| | Individuals with PD | Individuals without PD |
|---|---|---|
| n | 61 | 543 |
| Age (mean/sd) | 68.03/7.1 | 63.50/5.4 |
| Gender (female/male) | 22/39 | 339/204 |
| Race (White/other) | 59/2 | 484/59 |
| Country (US/other) | 55/6 | 514/29 |
| Years of diagnosis (mean/sd) | 8.36/5.74 | – |

Table 2.

The difference in the variance of facial action units (AUs) between individuals with and without Parkinson’s disease.

| Expression | Facial action unit | Individuals with Parkinson’s disease, mean (SD) | Individuals without Parkinson’s disease, mean (SD) | MWU p-value | ANCOVA p-value |
|---|---|---|---|---|---|
| Smiling face | AU01 (Inner Brow Raiser) | 0.15 (0.18) | 0.07 (0.12) | 0.001 | 0.002 |
| | AU06 (Cheek Raiser) | 0.17 (0.15) | 0.25 (0.25) | 0.047 | 0.134 |
| | AU12 (Lip Corner Puller) | 0.21 (0.18) | 0.27 (0.24) | 0.065 | 0.226 |
| Disgusted face | AU04 (Brow Lowerer) | 0.19 (0.20) | 0.26 (0.31) | 0.063 | 0.213 |
| | AU07 (Eye Lid Tightener) | 0.19 (0.20) | 0.24 (0.27) | 0.103 | 0.298 |
| | AU09 (Nose Wrinkler) | 0.04 (0.06) | 0.04 (0.07) | 0.267 | 0.992 |
| Surprised face | AU01 (Inner Brow Raiser) | 0.28 (0.28) | 0.27 (0.32) | 0.172 | 0.833 |
| | AU02 (Outer Brow Raiser) | 0.15 (0.29) | 0.12 (0.18) | 0.144 | 0.455 |
| | AU04 (Brow Lowerer) | 0.31 (0.37) | 0.40 (0.43) | 0.061 | 0.159 |

The variance of AUs is an indicator of facial muscle movement.

We applied an SVM on the variance of nine AUs to classify individuals with PD. The result of the classifier is below.

Accuracy: 95.6%, F1: 0.95, AUC: 0.94, Precision: 95.8%, Recall: 94.3%

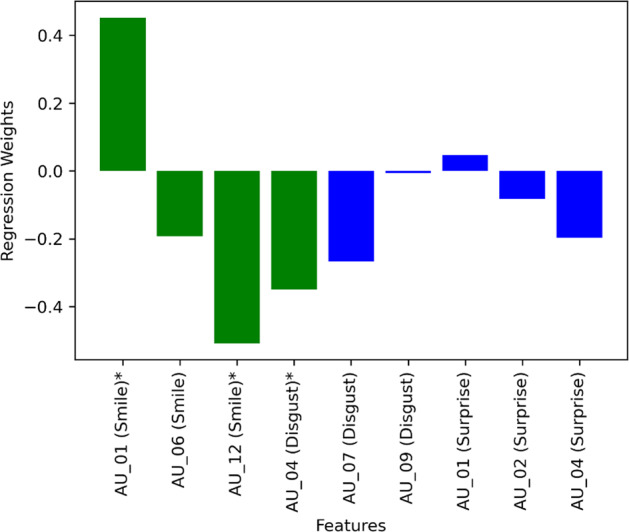

Figure 1 shows the weights of the features from logistic regression. The dependent variable was the binary diagnosis (PD being 1 and non-PD being 0), and the independent variables were the variances in AUs. Each participant had nine associated AUs (see "Feature extraction and computational tools" in Methods), which yielded nine independent variables. For training and testing the logistic regression model, we used leave-one-out cross-validation. The green bars indicate those features where p < 0.05. Seven out of nine features had negative weights. This means that a lower variance in AUs indicates a higher probability of having PD, which suggests that the facial muscle stiffness in PD participants drove the weights to be negative.

Fig. 1. Logistic regression weights of the features while predicting PD.

The green color represents the features with p < 0.05.

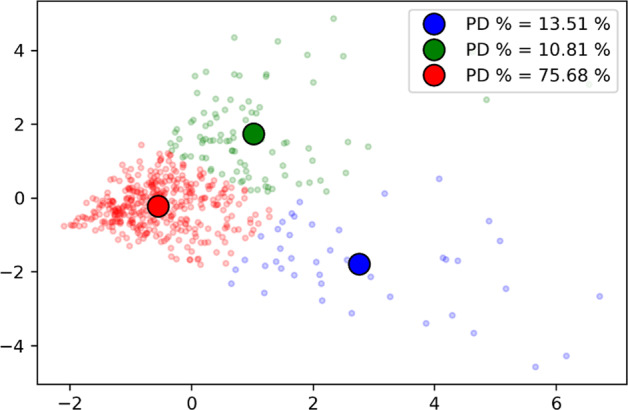

Figure 2 shows another visualization of the features. The nine features were converted to two-dimensional data using PCA and then the K-Means clustering was applied. Three clusters were identified. The cluster in red had the highest percentage of PD participants (76%). Also, it should be noted that the red cluster center is close to the (0, 0) coordinate. This indicates a similar effect of variance in AUs among the PD participants.

Fig. 2. A two-dimensional visualization of the nine action units (AUs) after applying the principal component analysis (PCA).

Each dot represents a participant. The participants are clustered into three groups using k-means, represented by three colors. The bold round dots represent the centers of the three clusters. The proportion of individuals with Parkinson’s disease differed across groups, with 75.7% of individuals in the red cluster having Parkinson’s disease.

The distributions show that the variances of two AUs were significantly different between the PD and non-PD groups. Notably, both AUs were from the smiling facial expression. This indicates that smiling may be the facial expression most affected by PD among the fundamental facial expressions, corroborating evidence from past studies12. The weights of the logistic regression (Fig. 1) also show that all three of the AUs from the smiling facial expression had significant weights, along with one AU from the disgusted expression. Three out of these four significant weights were negative, indicating an inverse relationship with PD. In other words, lower levels of movement in the associated muscles (i.e., Frontalis, pars medialis; Depressor Glabellae; Depressor Supercilii; Corrugator; Orbicularis oculi, pars orbitalis; and Zygomatic Major) were associated with PD.

In both Table 2 and Fig. 1, AU01 has a higher level of variance in the PD group than in the non-PD group. AU01 is the inner brow raiser. Eyebrow tremor has previously been reported as an initial manifestation of PD13. Thus, unlike for the other facial muscles, we think that having PD leads to a higher level of eyebrow movement.

A simple SVM classifier was able to achieve 95% classification accuracy. PD symptoms are often characterized by multiple different modalities, including limb tremors, head movements, voice, memory, sleep, and walking. The current state-of-the-art video analytic tools that rely on hand tremors and head movements were able to achieve 92% accuracy11. Our findings provide evidence that facial expressions, with further study, may become a reliable biomarker for PD detection.

A computer algorithm analyzing micro-expressions can further augment a neurologist’s ability to objectively rate the symptoms of PD patients. A neurologist can only count the number of smiles or track the time involved in modulating a patient’s expression. We show that an algorithm’s ability to analyze the subtle characteristics of facial expressions, often invisible to the naked eye, adds significant new information for a neurologist.

It should be noted that not all individuals with PD show every symptom. This means a person with PD can have hand tremors but no stiffness in facial muscles or vice versa. Therefore, relying on only one modality cannot achieve perfect accuracy unless, in a very unlikely event, all PD individuals in the dataset show all symptoms. Because of this variability in symptom manifestation, it is important to find reliable biomarkers that utilize multiple different modalities to develop a robust AI model for identifying PD. Our findings only show that facial expressions, especially smiling, can be used as one of the reliable modalities.

One of the most common use cases of phones is taking photos of each other. Imagine, with the user’s permission, an app that can automatically analyze the characteristics of the user’s smile (from a short video) and provide a referral in case they are at risk. Additionally, this can enable low-cost screening where access to a neurologist is limited. For example, the population/neurologist ratio in African nations far exceeds that of the US and other developed countries (one study notes that in 23 African countries, the average population per neurologist exceeds half a million14). In contrast, ~75% of people in Africa have access to a mobile phone. In more developed regions such as South Africa, more than 90% of the population has access to a mobile phone15. A similar situation exists in Asia and South America16.

Methods

Data set

The dataset consists of 1812 videos from 604 individuals (61 with PD, 543 without PD). The videos were collected using an online PD video recording tool, PARK (Parkinson’s Analysis with Remote Kinetic tasks)17. Using this online tool, users can record videos of themselves performing tasks from the MDS-UPDRS (Movement Disorder Society – Unified Parkinson’s Disease Rating Scale). Before performing each task, users watch a short video of a person performing it. In this paper, we present the analysis of the facial mimicry tasks collected using the PARK framework. The facial mimicry tasks include three separate facial expression tasks, and each video contains one facial expression. The participants were asked to make a facial expression, hold it for a couple of seconds, and then make a neutral face, repeating this sequence three times. The facial expressions were a smiling face, a disgusted face, and a surprised face. Figure 3 shows examples of these facial expressions. The authors affirm that human research participants provided informed consent for the publication of the images in Fig. 3. The videos are 10–12 s in duration.

Fig. 3. Sample frames from the data set.

All participants made three facial expressions followed by a neutral face.

The participants without PD were recruited through Facebook advertisements and Amazon Mechanical Turk. These participants self-identified as not having PD. The participants with PD were recruited by emailing PD patients who were receiving care at the University of Rochester Medical Center or had agreed to participate in its research studies. All PD participants had some degree of PD symptoms and were diagnosed by experts from the medical center.

Feature extraction and computational tools

The videos were analyzed using the OpenFace software18, which automatically provides facial action unit (AU) values for each frame. OpenFace has been tested extensively and shown to detect AUs accurately19–21. Facial action units are the fundamental actions of facial muscles, systematized by Paul Ekman in FACS (Facial Action Coding System)22. Activation of each facial action unit indicates the movement of a set of facial muscles22. For example, activation of AU 6 (also known as Cheek Raiser) indicates movement of the orbicularis oculi, pars orbitalis. For each frame of a video that contains a human face, OpenFace gives a binary activation (0 or 1) and a raw magnitude (ranging from 0 to 5) for each AU. We analyzed the variance of the raw action unit magnitude over the frames in which the corresponding action unit is active (i.e., the activation value is 1). This variance is an indicator of how much facial muscle movement occurs while showing a facial expression. A facial expression can be associated with multiple action units23. Since each facial expression was followed by a neutral face, we looked at the raw AU values to find which AUs show three distinct peaks. Through this process, we identified the AUs that are associated with the three facial expressions. Each facial expression was found to be associated with three AUs. For example, AU01 (Inner Brow Raiser), AU06 (Cheek Raiser), and AU12 (Lip Corner Puller) were found to have three distinct peaks in smiling facial expression videos, so they are associated with smiling. The disgusted face is associated with AU04 (Brow Lowerer), AU07 (Eye Lid Tightener), and AU09 (Nose Wrinkler); the surprised face is associated with AU01 (Inner Brow Raiser), AU02 (Outer Brow Raiser), and AU04 (Brow Lowerer). Table 2 shows the AUs associated with each facial expression.

We were interested in the muscle movements in those moments when the participants showed a particular facial expression. In the past, researchers have looked into the frequency and the magnitude of facial expressions12. In our case, the participants were asked to show a facial expression three times which makes the frequency of expressions the same for the PD group and the non-PD group. Additionally, the expressions were acted and not spontaneous, which is why analyzing the difference in AUs’ magnitude between PD and non-PD did not yield any significant outcome. Since we were interested in the muscle movements, we computed the variance of raw AUs when the binary value indicated the AU is activated (i.e., binary AU equals 1). This variance becomes the feature set of our analysis.
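The feature described above can be sketched in a few lines of pandas. This is a minimal illustration, not the authors' script: it assumes per-frame output with OpenFace-style column names (`AU01_r` for raw intensity, `AU01_c` for binary activation), and the function name, the use of population variance (`ddof=0`), and the fallback of 0.0 when an AU is active in fewer than two frames are all assumptions made for the example.

```python
import pandas as pd

# AUs associated with each expression, as listed in Table 2.
EXPRESSION_AUS = {
    "smile": ["AU01", "AU06", "AU12"],
    "disgust": ["AU04", "AU07", "AU09"],
    "surprise": ["AU01", "AU02", "AU04"],
}


def au_variance_features(frames: pd.DataFrame, expression: str) -> dict:
    """Variance of the raw AU intensity over frames where the AU is active."""
    # Some OpenFace CSV headers carry leading spaces; normalise them first.
    frames = frames.rename(columns=str.strip)
    features = {}
    for au in EXPRESSION_AUS[expression]:
        # Keep raw intensities only where the binary activation equals 1.
        active = frames.loc[frames[f"{au}_c"] == 1, f"{au}_r"]
        # Assumption: with fewer than two active frames, report 0.0.
        variance = float(active.var(ddof=0)) if len(active) > 1 else 0.0
        features[f"{expression}_{au}"] = variance
    return features
```

Calling the function once per video (smile, disgust, surprise) and merging the three dictionaries would give the nine features per participant used in the rest of the analysis.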

Analysis

The first set of analyses examined the difference in the distribution of features between PD and non-PD participants. Since the data were not normally distributed, we used a non-parametric significance test, the Mann–Whitney U test, to identify the differences. Additionally, we used ANCOVA to control for demographic covariates such as age and gender. Because the significance test was repeated, we applied a Bonferroni correction to all the p-values reported here24. Each uncorrected p-value was multiplied by nine (the number of tests), and α = 0.05 was used to test significance.
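The testing procedure in this paragraph can be illustrated as follows. The sketch uses `scipy.stats.mannwhitneyu` for the two-sided test and multiplies each p-value by the number of features; the function name, the cap at 1.0, and the synthetic data are assumptions for illustration only, and the ANCOVA step is not shown.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def bonferroni_mwu(pd_features, control_features, alpha=0.05):
    """Two-sided Mann-Whitney U test per feature, Bonferroni-corrected."""
    pd_features = np.asarray(pd_features, dtype=float)
    control_features = np.asarray(control_features, dtype=float)
    n_tests = pd_features.shape[1]
    raw_p = np.array([
        mannwhitneyu(pd_features[:, j], control_features[:, j],
                     alternative="two-sided").pvalue
        for j in range(n_tests)
    ])
    # Bonferroni: multiply by the number of tests (capped at 1 by assumption).
    corrected_p = np.minimum(raw_p * n_tests, 1.0)
    return raw_p, corrected_p, corrected_p < alpha


# Synthetic stand-in for the nine AU-variance features (not the study data).
rng = np.random.default_rng(0)
pd_group = rng.normal(0.0, 1.0, size=(30, 9))
control_group = rng.normal(0.0, 1.0, size=(200, 9))
control_group[:, 0] += 3.0  # make the first feature differ strongly
raw_p, corrected_p, significant = bonferroni_mwu(pd_group, control_group)
```

Multiplying each p-value by nine and comparing against α = 0.05 is equivalent to comparing the uncorrected p-values against α/9.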

Next, we trained a machine learning model, SVM (Support Vector Machine) on the features collected from the videos to classify PD and non-PD. Our goal was to find out whether the data has any predictive power which could be utilized in the future for classification with more sophisticated machine learning models. The dataset was imbalanced so we used the SMOTE25 technique to resample the data, making the dataset balanced. We then used a leave-one-out cross-validation method to report the classification metrics. In this classification task, we computed the F1-score, precision, recall, and area under the curve metrics.
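One way to combine oversampling with leave-one-out cross-validation is sketched below using scikit-learn's `SVC` and `LeaveOneOut`. Several parts are assumptions rather than the authors' procedure: `smote_like` is a simplified SMOTE-style interpolation written for this example (the imbalanced-learn package provides a full SMOTE implementation), the RBF kernel is scikit-learn's default rather than a documented choice, and the data are synthetic. The sketch resamples only the training fold, so that the held-out sample never influences the synthetic points; the text does not state at which stage the original analysis resampled.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import LeaveOneOut
from sklearn.svm import SVC


def smote_like(X_min, n_new, k=5, rng=None):
    """Simplified SMOTE-style oversampling (needs >= 2 minority samples):
    interpolate between a minority sample and one of its k nearest neighbours."""
    rng = np.random.default_rng(rng)
    k = min(k, len(X_min) - 1)
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)
    picked = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[picked] - X_min[base])


def loocv_predictions(X, y, seed=0):
    """Leave-one-out predictions; label 1 is assumed to be the minority class."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    preds = np.empty_like(y)
    for train_idx, test_idx in LeaveOneOut().split(X):
        X_tr, y_tr = X[train_idx], y[train_idx]
        n_new = int((y_tr == 0).sum() - (y_tr == 1).sum())
        if n_new > 0:  # rebalance the training fold only
            synthetic = smote_like(X_tr[y_tr == 1], n_new, rng=seed)
            X_tr = np.vstack([X_tr, synthetic])
            y_tr = np.concatenate([y_tr, np.ones(n_new, dtype=y.dtype)])
        preds[test_idx] = SVC(kernel="rbf").fit(X_tr, y_tr).predict(X[test_idx])
    return preds


# Synthetic, well-separated stand-in for the nine features (not the study data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (60, 9)), rng.normal(2.0, 1.0, (12, 9))])
y = np.array([0] * 60 + [1] * 12)
preds = loocv_predictions(X, y)
accuracy, f1 = accuracy_score(y, preds), f1_score(y, preds)
```

Precision, recall, and AUC can be computed from the same held-out predictions with the corresponding functions in `sklearn.metrics`.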

To find which features were important in predicting PD and non-PD, we applied a logistic regression model. We performed the Wald test to find the significance of the features as well as the weights of the fitted model.
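The Wald test for logistic regression coefficients can be illustrated with a small NumPy implementation. This is a sketch under stated assumptions: the model is fit by Newton-Raphson on all samples with an added intercept, the two-sided p-value comes from the normal approximation z = β / SE(β), and the function name and synthetic data are hypothetical. Statistical packages report the same quantities directly.

```python
import numpy as np
from math import erfc, sqrt


def logistic_wald(X, y, n_iter=50, tol=1e-10):
    """Fit logistic regression by Newton-Raphson; return Wald statistics."""
    X = np.column_stack([np.ones(len(X)), np.asarray(X, dtype=float)])
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])  # beta[0] is the intercept
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = p * (1.0 - p)
        hessian = X.T @ (X * w[:, None])
        step = np.linalg.solve(hessian, X.T @ (y - p))
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    # Standard errors from the inverse Fisher information at the final fit.
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    w = p * (1.0 - p)
    cov = np.linalg.inv(X.T @ (X * w[:, None]))
    se = np.sqrt(np.diag(cov))
    z = beta / se
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
    p_values = np.array([erfc(abs(v) / sqrt(2.0)) for v in z])
    return beta, se, z, p_values


# Synthetic example: the first feature lowers the odds of the positive class.
rng = np.random.default_rng(1)
features = rng.normal(size=(400, 2))
prob = 1.0 / (1.0 + np.exp(-(-0.5 - 1.5 * features[:, 0])))
labels = (rng.random(400) < prob).astype(int)
beta, se, z, p_values = logistic_wald(features, labels)
```

A negative weight with a small p-value, as for the first synthetic feature here, corresponds to the reading in the Results: lower values of that feature are associated with a higher predicted probability of the positive class. The fit does not converge when the classes are perfectly separable.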

We then focused on visualizing the data. Since the data had nine dimensions (from nine relevant AUs) we first reduced the dimension to two. We applied the principal component analysis (PCA) to reduce the dimensionality of the data. We then applied k-means clustering on the reduced dimensional data to see if there exist regions with a high percentage of PD populations.
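The visualization steps above can be sketched with scikit-learn's `PCA` and `KMeans`. The function name, the random seed, `n_init=10`, and the synthetic inputs are assumptions for illustration; the choice of three clusters follows the Results.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA


def cluster_pd_share(features, is_pd, n_clusters=3, seed=0):
    """Project features to 2-D with PCA, cluster with k-means, and report
    the fraction of PD participants in each cluster."""
    features = np.asarray(features, dtype=float)
    is_pd = np.asarray(is_pd, dtype=bool)
    coords = PCA(n_components=2).fit_transform(features)
    kmeans = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    labels = kmeans.fit_predict(coords)
    share = {int(c): float(is_pd[labels == c].mean()) for c in np.unique(labels)}
    return coords, labels, share


# Synthetic stand-in for the nine AU-variance features (not the study data).
rng = np.random.default_rng(0)
demo_features = rng.normal(size=(90, 9))
demo_is_pd = rng.random(90) < 0.2
coords, labels, share = cluster_pd_share(demo_features, demo_is_pd)
```

Because PCA centers the data before projecting, the origin of the two-dimensional plot corresponds to the mean of all participants in the original feature space.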

Ethics

This analysis is a part of a study titled “Parkinson’s remote data” which is approved by the institutional review board of the University of Rochester. The data for analysis and photos presented in the figures were collected with participants’ informed consent electronically.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

This research is funded by the National Institute of Neurological Disorders and Stroke of the National Institutes of Health under Award Number P50NS108676 and a Google Faculty Award. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author contributions

M.R.A. performed the analysis on the dataset. T.M. and E.W. recruited participants and collected the dataset. E.W. also worked on the UX of the online tool. H.R. worked on the online tool which was used to collect the dataset. E.H. and R.D. are the PIs of this research. They interpreted the findings. All authors contributed to writing the manuscript.

Data availability

Due to the IRB requirements, the videos, which contain identifiable information, cannot be shared. The extracted features which are used in this analysis can be found here: https://github.com/mali7/PARK_facial_mimic

Code availability

All Python scripts can be found at https://github.com/mali7/PARK_facial_mimic. The analyses were done using Python 3.7, NumPy 1.21.0, and pandas 1.3.0.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1038/s41746-023-00761-7

Change history

2/3/2023

This article has been retracted. Please see the Retraction Notice for more detail: 10.1038/s41746-023-00761-7

Supplementary information

The online version contains supplementary material available at 10.1038/s41746-021-00502-8.

References

- 1. Stoker, T. B. & Greenland, J. C. Parkinson’s Disease: Pathogenesis and Clinical Aspects (Exon Publications, 2018).

- 2. Rinn, W. E. The neuropsychology of facial expression: a review of the neurological and psychological mechanisms for producing facial expressions. Psychol. Bull. 10.1037/0033-2909.95.1.52 (1984).

- 3. Novellino, F. & Salsone, M. A Tool for Evaluating Facial Expression for Early Diagnosis of Parkinson’s Disease. (The Michael J. Fox Foundation, 2018).

- 4. Vinokurov, N., Arkadir, D., Linetsky, E., Bergman, H. & Weinshall, D. Quantifying hypomimia in parkinson patients using a depth camera. In International Symposium on Pervasive Computing Paradigms for Mental Health, 63–71 (Springer, 2015).

- 5. Simons, G., Pasqualini, M. C. S., Reddy, V. & Wood, J. Emotional and nonemotional facial expressions in people with Parkinson’s disease. J. Int. Neuropsychol. Soc. 10, 521 (2004). doi: 10.1017/S135561770410413X.

- 6. Maycas-Cepeda, T. et al. Hypomimia in Parkinson’s disease: what is it telling us? Front. Neurol. 11, 1775 (2021). doi: 10.3389/fneur.2020.603582.

- 7. Gunnery, S. D., Habermann, B., Saint-Hilaire, M., Thomas, C. A. & Tickle-Degnen, L. The relationship between the experience of hypomimia and social wellbeing in people with Parkinson’s disease and their care partners. J. Parkinsons Dis. 10.3233/JPD-160782 (2016).

- 8. Lonini, L. et al. Wearable sensors for Parkinson’s disease: which data are worth collecting for training symptom detection models. npj Digit. Med. 10.1038/s41746-018-0071-z (2018).

- 9. Schlachetzki, J. C. M. et al. Wearable sensors objectively measure gait parameters in Parkinson’s disease. PLoS ONE 10.1371/journal.pone.0183989 (2017).

- 10. Ekman, P. & Friesen, W. V. Facial Action Coding System: A Technique for the Measurement of Facial Movement (Consulting Psychologists Press, 1978).

- 11. Rafayet Ali, M., Hernandez, J., Ray Dorsey, E., Hoque, E. & McDuff, D. Spatio-temporal attention and magnification for classification of Parkinson’s disease from videos collected via the internet. In 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020) (2020).

- 12. Smith, M. C., Smith, M. K. & Ellgring, H. Spontaneous and posed facial expression in Parkinson’s Disease. J. Int. Neuropsychol. Soc. 2, 383–391 (1996).

- 13. Lopez Valdes, E. et al. Isolated eyebrow tremor as an initial presentation form of Parkinson’s disease. Mov. Disord. 10.1002/mds.26688 (2016).

- 14. Bower, J. H. & Zenebe, G. Neurologic services in the nations of Africa. Neurology 10.1212/01.WNL.0000150894.53961.E2 (2005).

- 15. Silver, L. & Johnson, C. Majorities In Sub-Saharan Africa Own Mobile Phones, But Smartphone Adoption Is Modest (Pew Research Center, 2019).

- 16. Tan, C.-T. Neurology in Asia. Neurology 84, 623–625 (2015). doi: 10.1212/WNL.0000000000001224.

- 17. Langevin, R. et al. The PARK Framework for Automated Analysis of Parkinson’s Disease Characteristics. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Vol. 3. 10.1145/3328925 (2019).

- 18. Baltrusaitis, T., Zadeh, A., Lim, Y. C. & Morency, L.-P. Openface 2.0: facial behavior analysis toolkit. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018) 59–66 (2018).

- 19. Amos, B., Ludwiczuk, B. & Satyanarayanan, M. Openface: a general-purpose face recognition library with mobile applications. CMU School of Computer Science, Technical Report CMU-CS-16-118 (2016).

- 20. Santoso, K. & Kusuma, G. P. Face recognition using modified OpenFace. Proc. Comput. Sci. 135, 510–517 (2018). doi: 10.1016/j.procs.2018.08.203.

- 21. Li, L., Jun, Z., Fei, J. & Li, S. An incremental face recognition system based on deep learning. In 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), 238–241 (IEEE, 2017).

- 22. Ekman, P., Friesen, W. V. & Hager, J. C. Facial action coding system (FACS). A Technique for the Measurement of Facial Action. Consulting, Palo Alto. Vol. 22 (Consulting Psychologists Press, 1978).

- 23. Du, S., Tao, Y. & Martinez, A. M. Compound facial expressions of emotion. Proc. Natl. Acad. Sci. USA 111, E1454–E1462 (2014).

- 24. Armstrong, R. A. When to use the Bonferroni correction. Ophthalmic Physiol. Opt. 10.1111/opo.12131 (2014).

- 25. Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 10.1613/jair.953 (2002).
