Abstract

Dimensionality reduction is an important first step in the analysis of single-cell RNA-sequencing (scRNA-seq) data. In addition to enabling the visualization of the profiled cells, such representations are used by many downstream analysis methods, ranging from pseudo-time reconstruction to clustering to alignment of scRNA-seq data from different experiments, platforms, and laboratories. Both supervised and unsupervised methods have been proposed to reduce the dimensionality of scRNA-seq data. However, all methods to date are sensitive to batch effects. When batches correlate with cell types, as is often the case, their impact can lead to representations that are batch specific rather than cell-type specific. To overcome this, we developed a domain adversarial neural network model for learning a reduced dimension representation of scRNA-seq data. The adversarial model tries to simultaneously optimize two objectives. The first is the accuracy of cell-type assignment and the second is the inability to distinguish the batch (domain). We tested the method by using the resulting representation to align several different data sets. As we show, by overcoming batch effects our method was able to correctly separate cell types, improving on several prior methods suggested for this task. Analysis of the top features used by the network indicates that by taking the batch impact into account, the reduced representation is much better able to focus on key genes for each cell type.

Keywords: batch effect removal, data integration, dimensionality reduction, domain adversarial training, single-cell RNA-seq

1. Introduction

Single-cell RNA sequencing (scRNA-seq) has revolutionized the study of gene expression programs (Hwang et al., 2018; Papalexi and Satija, 2018). The ability to profile genes at the single-cell level has revealed novel specific interactions and pathways within cells (Yu et al., 2016), differences in the proportions of cell types between samples (Jaitin et al., 2014; Zeisel et al., 2015), and the identity and characterization of new cell types (Villani et al., 2017). Several biological tissues, systems, and processes have recently been studied using this technology (Jaitin et al., 2014; Zeisel et al., 2015; Yu et al., 2016).

Although studies using scRNA-seq provide many insights, they also raise new computational challenges. One of the major challenges involves the ability to integrate and compare results from multiple scRNA-seq studies. There are several different commercial platforms for performing such experiments, each with its own biases. Furthermore, similar to other high-throughput genomic assays, scRNA-seq suffers from batch effects that can make cells profiled in one laboratory look very different from the same cells profiled at another laboratory (Tung et al., 2017; Stuart and Satija, 2019). This is a key issue for consortium-scale analysis, such as the Human Cell Atlas (Rozenblatt-Rosen et al., 2017; Regev et al., 2017) and HuBMAP (HuBMAP Consortium, 2019), where researchers across the globe are profiling single cells in their own laboratories and seeking to perform large-scale analysis that integrates data across the entire consortium. Even for cells profiled in the same laboratory, we often cannot avoid batch effects, for example, in studies where samples are collected at different times or across a large set of individuals (Nowotschin et al., 2019; Pijuan-Sala et al., 2019). Moreover, other types of high-throughput transcriptomics profiling, including microscopy-based techniques, are also generating single-cell expression data sets (Wang et al., 2018; Eng et al., 2019). The goal of fully utilizing these spatial data sets motivates the development of methods that can combine them with scRNA-seq when studying specific biological tissues and processes.

A number of recent methods attempt to address this challenge by aligning scRNA-seq data from multiple studies of the same biological system. Many of these methods rely on identifying nearest neighbors between the different data sets and using them as anchors. Methods that use this approach include mutual nearest neighbors (MNNs) (Haghverdi et al., 2018) and Seurat (Stuart et al., 2019). Others, including scVI and scAlign, first embed all data sets into a common lower dimensional space. scVI encodes the scRNA-seq data with a deep generative model conditioned on the batch identifiers (Lopez et al., 2018), whereas scAlign regularizes the representation between two data sets by minimizing the random walk probability differences between the original and embedding spaces. Although these methods were successful for some data sets, here we show that they are not always able to correctly match all cell types. A key problem with these methods is that they are unsupervised and rely on the assumption that the cell types profiled by the different studies overlap. Although this works for some data sets, it may fail for studies in which cell types do not fully overlap or for those containing rare cell types. Unsupervised methods tend to group rare types with the larger types, making it hard to identify them in a joint space.

Recent machine learning work has focused on a related problem termed “domain adaptation/generalization.” Methods developed for these problems attempt to learn representations of diverse data that are invariant to technical confounders (Csurka, 2017; Motiian et al., 2017; Wang et al., 2019b). These methods have been used for multiple applications such as machine translation for domain-specific corpora (Chu and Wang, 2018) and face detection (Patel et al., 2015). Several methods proposed for domain adaptation rely on adversarial training (Ganin et al., 2016; Csurka, 2017; Li et al., 2018; Wang et al., 2019a), which has proved effective for aligning latent distributions. In addition to the original task such as classification, these methods apply a domain classifier to the learned representations. The encoder network is trained to improve classification accuracy while at the same time reducing the impact of the domain (by “fooling” a domain classifier). This is achieved by learning encoder weights that simultaneously perform gradient descent on the label classification task and gradient ascent on the domain classification task.

Here we extend these approaches, coupling them with Siamese network learning (Koch et al., 2015) for overcoming batch effects in scRNA-seq analysis. We define a “domain” in this article as a standalone data set profiled at a single laboratory using a single platform. We define “label” as the cell type for each cell in the data set. Considering the specificity of the cell types in the scRNA-seq data sets, we propose a conditional pair sampling strategy that constrains input pair selection when training the adversarial network. We discuss how to formulate a domain adaptation network for scRNA-seq data, how to learn the parameters for the network, and how to train it using available data.

We tested our method on several data sets ranging in size from 10 to 39 cell types and from 4 to 155 batches. As we show, for all of the data sets, our domain adversarial method improves on previous methods, in some cases significantly. Visualization of the learned representation from several different methods helps highlight the advantages of the domain adversarial framework. As we show, the framework is able to accurately mitigate the batch effects while maintaining the grouping of cells from the same type across different batches. Biological analysis of the resulting model identifies key genes that can correctly distinguish between cell types across different experiments. Such batch invariant genes are promising candidates for a cell-type specific signature that can be used across different studies to annotate cells.

2. Methods

2.1. Problem formulation

To formulate the problem, we start with a few notation definitions. We assume that the single-cell RNA-seq data are drawn from the input space $\mathcal{X} \subseteq \mathbb{R}^p$, where each sample (a cell) $x$ has $p$ features corresponding to the gene expression values. Cells are also associated with the label $y \in \mathcal{Y}$, which represents their cell types. We associate each sample with a specific domain/batch $d \in \mathcal{D}$ that represents any standalone data set profiled at a single laboratory using a single platform. Note that we will use domain and batch interchangeably in this article for convenience. The data are divided into a training set and a test set that are drawn from multiple studies. The domains used to collect training data are not used for the test set and so batch effects can vary between the training and test data. In practice, each of the domains only contains a small subset of the cell types. This means that the distribution of cell types is correlated with the distribution of domains. Thus, the methods that naively learn cell types based on expression profile (Alavi et al., 2018; Kiselev et al., 2018; Lieberman et al., 2018) may instead fit domain information and not generalize well to the unobserved studies.
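The held-out-domain split described above can be sketched in a few lines. This is a minimal illustration rather than the authors' pipeline: the tuple layout `(expression, cell_type, domain)`, the function name `split_by_domain`, and the toy study names are all assumptions made for the example.

```python
def split_by_domain(samples, test_domains):
    """Split samples so that no domain appears in both training and test sets.

    samples: list of (expression, cell_type, domain) tuples (hypothetical layout).
    test_domains: iterable of domain identifiers to hold out for testing.
    """
    held_out = set(test_domains)
    train = [s for s in samples if s[2] not in held_out]
    test = [s for s in samples if s[2] in held_out]
    return train, test


# Toy data: three studies, each profiling only some of the cell types.
samples = [
    ([1.0, 0.0], "alpha", "study_A"),
    ([0.9, 0.1], "beta", "study_A"),
    ([0.2, 0.8], "alpha", "study_B"),
    ([0.1, 0.7], "gamma", "study_C"),
]
train, test = split_by_domain(samples, test_domains=["study_C"])
train_domains = {s[2] for s in train}
held_out_domains = {s[2] for s in test}
```

In this toy example the held-out study contains a cell type that no training study profiles, which mirrors the correlation between cell types and domains that makes naive classifiers fit domain information.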

2.2. Domain adversarial training with Siamese network

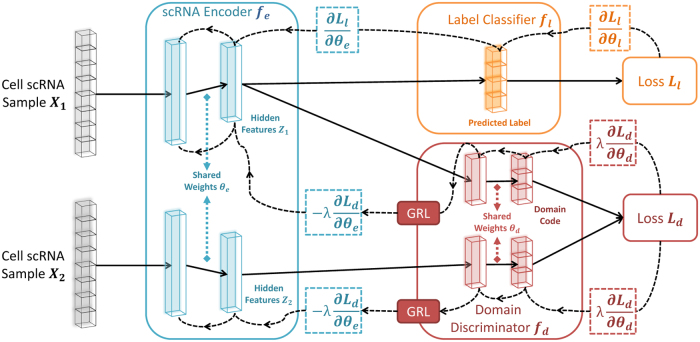

To overcome this problem and remove the domain impact when learning a cell-type representation, we propose a neural network (NN) framework that includes three modules as shown in Figure 1: scRNA encoder, label classifier, and domain discriminator. The encoder module is used to reduce the dimensions of the data and contains fully connected layers that produce the hidden features $f_e(x; \theta_e)$, where $\theta_e$ represents the parameters in these layers. The label classifier $f_l(\cdot; \theta_l)$ attempts to predict the label $y$ of input $x$, whereas the goal of the domain discriminator $f_d(\cdot; \theta_d)$ is to determine whether a pair of inputs $x_1$ and $x_2$ is from the same domain or not. Past work for classifying scRNA-seq data only attempted to minimize the loss function $L_l$ for the label classifier (Lin et al., 2017; Alavi et al., 2018). Here, we extend these methods by adding a regularization term based on the adversarial loss of the domain discriminator $L_d$, which we will elaborate on later. The overall loss $E$ on a pair of samples $x_1$ and $x_2$ is denoted by:

FIG. 1.

Architecture of scDGN. The network includes three modules: scRNA encoder $f_e$, label classifier $f_l$, and domain discriminator $f_d$. Note that the red and orange networks use the same encoding as input. Solid lines represent the forward direction of the NN, whereas the dashed lines represent the backpropagation direction with the corresponding gradient it passes. GRLs have no effect in forward propagation, but flip the sign of the gradients that flow through them during backpropagation. This allows the combined network to simultaneously optimize label classification and attempt to “fool” the domain discriminator. Thus, the encoder leads to representations that are invariant to the different domains while still distinguishing cell types. GRLs, gradient reversal layers; NN, neural network; scDGN, single-cell domain generalization network; scRNA, single-cell RNA.

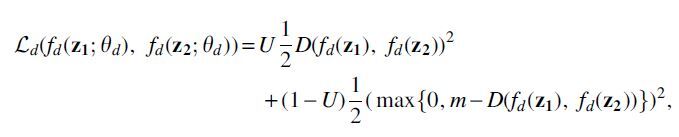

where $\lambda$ controls the trade-off between the goals of domain invariance and higher classification accuracy. For convenience, we use $h_1$ and $h_2$ to denote the hidden representations of $x_1$ and $x_2$ calculated from $f_e(\cdot; \theta_e)$. Inspired by Siamese networks (Koch et al., 2015), we implement our domain discriminator by adopting a contrastive loss (Hadsell et al., 2006):

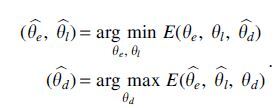

where $U = 1$ indicates that two samples are from the same domain $d$ and $U = 0$ indicates that they are not, $D(\cdot, \cdot)$ is the Euclidean distance, and $m$ is the margin that indicates the prediction boundary. The domain discriminator parameters, $\theta_d$, are updated using backpropagation to maximize the total loss $E$, whereas the encoder and classifier parameters, $\theta_e$ and $\theta_l$, are updated to minimize $E$. To allow all three modules to be updated together end-to-end, we use a gradient reversal layer (GRL, Fig. 1) (Ganin et al., 2016; Pei et al., 2018). Specifically, GRLs have no effect in forward propagation, but flip the sign of the gradients that flow through them during backpropagation. The following provides the overall optimization problems solved for the network parameters:

$$(\hat{\theta}_e, \hat{\theta}_l) = \arg\min_{\theta_e, \theta_l} E(\theta_e, \theta_l, \hat{\theta}_d), \qquad \hat{\theta}_d = \arg\max_{\theta_d} E(\hat{\theta}_e, \hat{\theta}_l, \theta_d)$$

In other words, the goal of the domain discriminator is to tell whether two samples are drawn from the same or different batches. By optimizing the scRNA encoder adversarially against the domain discriminator, we attempt to make sure that the network representation cannot be used to classify based on domain knowledge. During the training, the maximization and minimization tasks compete with each other, which is achieved by adjusting the representations to improve the accuracy of the label classifier and simultaneously fool the domain discriminator.
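The pairwise objective described in this section can be sketched numerically. The code below is an illustration under stated assumptions, not the authors' implementation: it takes the label loss to be cross-entropy, uses the 1/2 factors of the standard contrastive loss of Hadsell et al. (2006), and combines the terms as label loss minus the weighted domain loss, which is the sign implied by the discriminator maximizing $E$ while the encoder and classifier minimize it. All function and argument names are hypothetical.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(d1, d2, same_domain, margin=1.0):
    """Contrastive loss on two discriminator outputs.

    same_domain plays the role of U: 1 for a same-domain pair, 0 otherwise.
    Same-domain pairs are penalized for being far apart; different-domain
    pairs are penalized only when closer than the margin.
    """
    dist = euclidean(d1, d2)
    if same_domain:
        return 0.5 * dist ** 2
    return 0.5 * max(0.0, margin - dist) ** 2


def cross_entropy(probs, label):
    """Negative log-probability of the true label (assumed label loss)."""
    return -math.log(probs[label])


def pair_objective(probs1, y1, probs2, y2, d1, d2, same_domain,
                   lam=1.0, margin=1.0):
    """Sketch of E for one pair: label losses minus lam times the domain loss."""
    label_loss = cross_entropy(probs1, y1) + cross_entropy(probs2, y2)
    domain_loss = contrastive_loss(d1, d2, same_domain, margin)
    return label_loss - lam * domain_loss


# Toy pair: uniform class probabilities, discriminator outputs 5 units apart.
same = contrastive_loss([0.0, 0.0], [3.0, 4.0], same_domain=1)
diff = contrastive_loss([0.0, 0.0], [3.0, 4.0], same_domain=0, margin=6.0)
e_value = pair_objective([0.5, 0.5], 0, [0.5, 0.5], 0,
                         [0.0, 0.0], [3.0, 4.0], same_domain=1, lam=0.1)
```

In a framework with automatic differentiation, a gradient reversal layer achieves the same min-max behavior in a single backward pass by negating the domain-loss gradient before it reaches the encoder.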

2.3. Conditional domain generalization strategy

Most prior domain adaptation or generalization methods focused on the cases wherein the distribution of labels is independent of the domains (Csurka, 2017; Motiian et al., 2017). In contrast, as we show in the Results section, for scRNA-seq experiments different studies tend to focus on certain cell types (Jaitin et al., 2014; Zeisel et al., 2015; Yu et al., 2016). Consequently, it is not reasonable to completely merge the scRNA-seq data from different batches. To be specific, aligning the scRNA-seq data from two batches with different sets of cell types would sacrifice biological signal and prevent the cell classifier from predicting effectively. To overcome this issue, instead of arbitrarily choosing positive pairs (samples from the same domain) and negative pairs (samples from different domains), we constrain the selection as follows: (1) for positive pairs, only the samples with different labels from the same domain are selected, (2) for negative pairs, only the samples with the same label from different domains are selected. Figure 2 provides a visual interpretation of this strategy. Formally, letting $y_i$ and $z_i$ represent the $i$-th sample's cell-type label and domain label, respectively, we have the following equations to define the value of $U$ for sample pairs:

$$U = \begin{cases} 1, & z_i = z_j \text{ and } y_i \neq y_j \\ 0, & z_i \neq z_j \text{ and } y_i = y_j \end{cases}$$

FIG. 2.

Conditional domain generalization strategy: shapes represent different labels, and colors (or patterns) represent different domains. For negative pairs from different domains, we only select those samples with the same label. For positive pairs from the same domain, we only select the samples with different labels.

This strategy prevents the domain adversarial training from aligning samples with different labels or separating samples with the same label. For example, to fool the discriminator with a positive pair, the encoder must implicitly increase the distance between two samples with different cell types. Therefore, combining this strategy with domain adversarial training allows the network to learn cell-type-specific representations. We term our model single-cell domain generalization network (scDGN).
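The conditional pair selection can be sketched as a filter over candidate pairs. This is a minimal illustration under assumptions: it enumerates all pairs of a small toy set, whereas a training loop would more plausibly sample admissible pairs at random, and the function name `conditional_pairs` and the toy labels are hypothetical.

```python
from itertools import combinations


def conditional_pairs(labels, domains):
    """Return (i, j, U) for every pair admitted by the conditional strategy.

    U = 1: same domain, different cell types (positive pair).
    U = 0: different domains, same cell type (negative pair).
    Pairs matching neither rule are skipped.
    """
    pairs = []
    for i, j in combinations(range(len(labels)), 2):
        same_label = labels[i] == labels[j]
        same_domain = domains[i] == domains[j]
        if same_domain and not same_label:
            pairs.append((i, j, 1))
        elif same_label and not same_domain:
            pairs.append((i, j, 0))
    return pairs


# Toy example: two cell types profiled in two batches.
labels = ["alpha", "beta", "alpha", "beta"]
domains = ["A", "A", "B", "B"]
pairs = conditional_pairs(labels, domains)
```

Here the cross-batch pairs with different cell types, (0, 3) and (1, 2), are excluded, so the adversarial term never pushes an alpha cell from one batch toward a beta cell from another.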

3. Results

3.1. Experiment setups

3.1.1. Data sets

To test our method and to compare it with previous methods for aligning and classifying scRNA-seq data, we used several recent data sets. These data sets contain between 6000 and 45,000 cells, and all include cells profiled in multiple experiments by different laboratories and on different platforms.

The evaluation data sets include a subset of the data from scQuery (Alavi et al., 2018), which contains 44,490 samples from 155 different experiments, including a broad range of cell types. In addition, we use a peripheral blood mononuclear cell (PBMC) data set with nine batches (sequencing technologies) and 28,969 cells (Ding et al., 2019). Finally, we also test on a data set of human pancreatic islet cells from Seurat (Stuart et al., 2019), which we artificially split into 6 smaller data sets to simulate cases wherein cell types and domains are highly correlated. See Table 1 and Section A.1 in Supplementary Material for details on batches, cell-type distributions, train and test split information, and normalization for these three data sets.

Table 1.

Basic Statistics for scQuery, Seurat Pancreas, and Peripheral Blood Mononuclear Cell Data Sets

| Split | scQuery: Data | scQuery: Cell type | scQuery: Domain | Seurat pancreas: Data | Seurat pancreas: Cell type | Seurat pancreas: Domain | Seurat PBMC: Data | Seurat PBMC: Cell type | Seurat PBMC: Domain |
|---|---|---|---|---|---|---|---|---|---|
| Training | 37,697 | 39 | 99 | 6321 | 13 | 3 | 25,977 | 10 | 8 |
| Validation | 3023 | 19 | 26 | - | - | - | - | - | - |
| Test | 3770 | 23 | 30 | 638 | 13 | 1 | 2992 | 10 | 1 |

PBMC, peripheral blood mononuclear cell.

3.1.2. Model configurations

We used the network of Lin et al. (2017) as the components for the encoder and the label classifier in our model. The encoder contains two hidden layers with 1136 and 100 units. The label classifier is directly connected to the 100 unit layer and makes predictions based on these values. The domain discriminator contains an additional hidden layer with 64 units and is also connected to the 100 unit layer of the encoder (Fig. 1). For each layer, is used as the nonlinear activation function. We tested several other possible configurations but did not observe improvement in performance. As is commonly done, we use a validation set to tune the hyperparameters for learning, including learning rates, decay, momentum, and the adversarial weight and margin parameters $\lambda$ and $m$. In general, our analysis indicates that for larger data sets, a lower weight $\lambda$ and larger margin $m$ for the adversarial training are preferred and vice versa. More details about the hyperparameters and training are provided in Section A.3 in Supplementary Material.
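To give a sense of scale for this configuration, the sketch below counts trainable parameters for the layer sizes stated above. Several things here are assumptions: every layer is taken to be fully connected with a bias term, the 64-unit discriminator layer is treated as the discriminator's only layer, and the input size of 20,000 genes and 39 cell types are placeholder values chosen for the example, not figures from the paper's preprocessing.

```python
def dense_params(n_in, n_out):
    """Parameters of one fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


def scdgn_param_counts(n_genes, n_cell_types, encoder_units=(1136, 100),
                       discriminator_units=64):
    """Parameter counts per module for the layer sizes described in the text."""
    first, second = encoder_units
    encoder = dense_params(n_genes, first) + dense_params(first, second)
    classifier = dense_params(second, n_cell_types)
    discriminator = dense_params(second, discriminator_units)
    return {
        "encoder": encoder,
        "classifier": classifier,
        "discriminator": discriminator,
        "total": encoder + classifier + discriminator,
    }


# Hypothetical input size (20,000 genes) and number of cell types (39).
counts = scdgn_param_counts(n_genes=20000, n_cell_types=39)
```

Under these assumptions almost all parameters sit in the first encoder layer, so the model size is driven mainly by the number of input genes.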

3.1.3. Baselines

We compared scDGN with several prior methods for classifying and aligning scRNA-seq data. These included the NN model of Lin et al. (2017), which was developed for classifying scRNA-seq data; CaSTLe (Lieberman et al., 2018), which performs cell-type classification based on transfer learning; and several state-of-the-art alignment methods. For alignment, we compared with MNN (Haghverdi et al., 2018), which uses mutual nearest neighbors to align data from different batches; scVI (Lopez et al., 2018), which trains a deep generative model on the scRNA-seq data and uses an explicit batch identifier to retain the conditional independence property of the representation; and Seurat (Stuart et al., 2019), which first identifies anchors among different batches and then projects different data sets using a correction vector based on the order defined by hierarchical clustering with pairwise distances.

Our comparisons include both visual projection of the learned alignment (Figs. 5 and 6) and quantitative analysis of the accuracy of the predicted test cell types (Table 2). For the latter, to enable comparisons of the supervised and unsupervised methods, we used the resulting aligned data from the unsupervised methods to train an NN that has the same configuration as Lin et al. (2017). For scVI, which results in a much lower dimensional representation, we used a smaller input vector and a smaller hidden layer. Note that these alignment methods actually use the scRNA-seq test data to determine the final dimensionality reduction function, whereas our method does not utilize the test data for any model decision or parameter learning. To effectively apply Seurat to scQuery, we remove the batches that have samples. Also, for data sets such as scQuery, in which overlap of cell types across batches is not guaranteed, we find that the performance of MNNs depends strongly on the order of alignment. Therefore, for MNNs on the scQuery data set, we use 10 random permutations of batch orders and report the average accuracy.

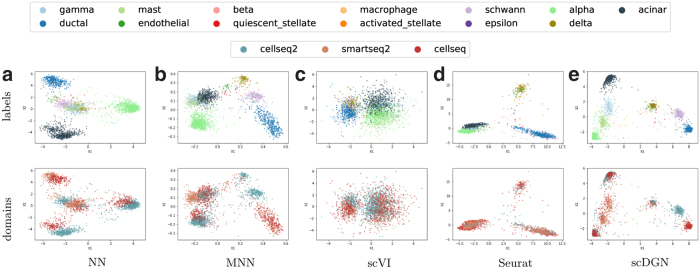

FIG. 5.

PCA visualizations of the representations learned by different models on the full Pancreas2 data set. Colors for different cell types and domains are shown in the legend at the top.

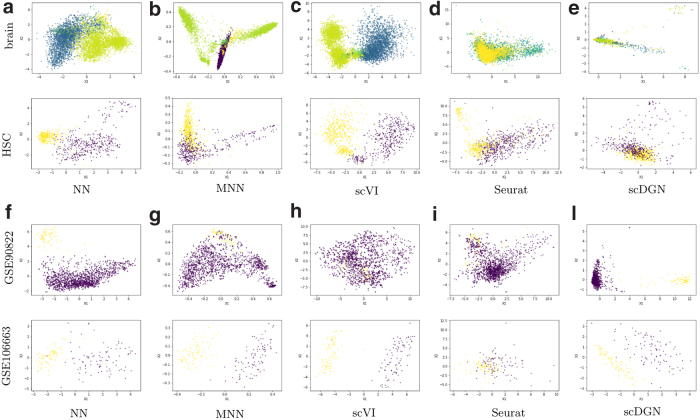

FIG. 6.

PCA visualizations of the representations of certain cell types and batches by different models for the scQuery data set. Top two rows: Cell types. Colors represent different batches. Bottom two rows: Batches. Colors represent different cell types. HSC, hematopoietic stem cell.

Table 2.

Overall Performances of Different Methods

| Experiments | MI | NN | CaSTLe | MNNs | scVI | Seurat | scDGN |
|---|---|---|---|---|---|---|---|
| scQuery | 3.025 | 0.255 | 0.156 | 0.200 | 0.257 | 0.144 | 0.286 |
| PBMC | 0.112 | 0.861 | 0.865 | 0.859 | 0.808 | 0.830 | 0.868 |
| Pancreas 1 | 0.902 | 0.720 | 0.705 | 0.591 | 0.855 | 0.812 | 0.856 |
| Pancreas 2 | 0.733 | 0.891 | 0.764 | 0.764 | 0.852 | 0.825 | 0.918 |
| Pancreas 3 | 0.931 | 0.545 | 0.722 | 0.722 | 0.651 | 0.751 | 0.663 |
| Pancreas 4 | 0.458 | 0.927 | 0.914 | 0.914 | 0.925 | 0.881 | 0.925 |
| Pancreas 5 | 0.849 | 0.928 | 0.882 | | 0.895 | 0.865 | 0.923 |
| Pancreas 6 | 0.670 | 0.944 | 0.917 | 0.946 | 0.893 | 0.907 | 0.950 |
| Average | - | 0.826 | 0.817 | 0.842 | 0.845 | 0.840 | 0.872 |

MI represents the mutual information between batch and cell type in the corresponding data set. The highest test accuracy for each data set is bolded.

MI, mutual information; MNNs, mutual nearest neighbors; NN, neural network; scDGN, single-cell domain generalization network.

3.2. Overall performance

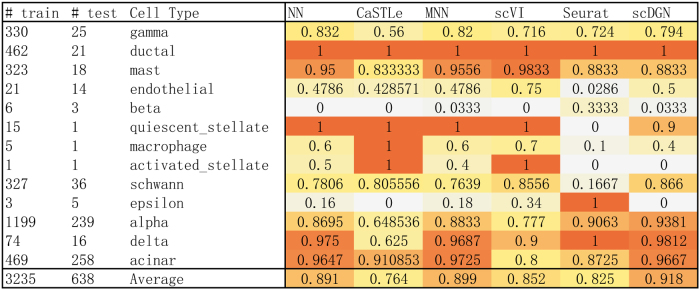

As already mentioned, we use the validation set to select the best model when using the scQuery data set. For the smaller data sets, we use the model obtained after 250 epochs (all models converged after this number of epochs). Test accuracy for the different methods is presented in Table 2. We show both mean and standard deviation of the accuracy for 10 randomly initialized experiments. An example for the performance for all methods we tested on the two data sets is shown in Figure 3. As can be seen, on average scDGN outperforms the other methods in terms of test accuracy, although for a particular cell type, we sometimes see other methods perform better. Performance comparisons for all cell types are presented in Section C (Tables C1–C8) in Supplementary Material.

FIG. 3.

Test accuracy of each model on different cell types from Pancreas2 data set. The darker shades represent better performance.

In addition, Table 2 presents the mutual information (MI) between labels and domains, which reflects the difficulty of the data set. A larger MI indicates that models that do not account for the domain are likely to fit the domain information rather than the cell type. For the scQuery data set, we find the accuracy is low for all methods, indicating that this data set is relatively difficult. This is corroborated by the large MI value. For such data, we see a clear advantage for scDGN: it improves by >10% over all other methods ($p = 5.069 \times 10^{-5}$ based on Student's t-test when compared with the NN baseline that is tied for second best). The improvements over other single-cell alignment methods are even more significant. scDGN also achieves the best performance on the second largest data set, the PBMC data set. However, given the very low MI for this data set, the performance of the other methods, including the baseline NN, is almost as good as the performance of scDGN. The third data set we test on is the Seurat pancreas data set. This is the smallest data set and so it has the fewest training samples. Still, of the six settings we tested (which differed in the subset of cells that were excluded from training), we find that scDGN is the top performer in four of them, is comparable with the top performer in another one, and in only one setting (Pancreas 3, with the highest MI) is significantly outperformed by Seurat. Note that even for the Pancreas 3 data, the domain adversarial training helps: with it, scDGN improves by >20% over the baseline NN used for the label classifier.
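The MI statistic used above can be computed directly from per-cell label and domain annotations. The sketch below is a plug-in estimate from empirical frequencies. The use of the natural logarithm (nats) is an assumption, since the text does not state the units of the values in Table 2, and the function name and toy inputs are hypothetical.

```python
import math
from collections import Counter


def mutual_information(labels, domains):
    """Plug-in estimate of MI (in nats) between cell-type and domain labels."""
    n = len(labels)
    joint = Counter(zip(labels, domains))
    label_counts = Counter(labels)
    domain_counts = Counter(domains)
    mi = 0.0
    for (y, d), count in joint.items():
        # p(y, d) / (p(y) * p(d)) expressed with raw counts.
        ratio = count * n / (label_counts[y] * domain_counts[d])
        mi += (count / n) * math.log(ratio)
    return mi


# Every batch profiles every cell type equally: labels and domains independent.
independent = mutual_information(["a", "a", "b", "b"], ["x", "y", "x", "y"])
# Each batch profiles a single cell type: the domain fully determines the label.
coupled = mutual_information(["a", "a", "b", "b"], ["x", "x", "y", "y"])
```

The second case is the regime in which a classifier can reach high training accuracy by recognizing the batch alone, which is why a high MI marks a data set as harder for methods that ignore the domain.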

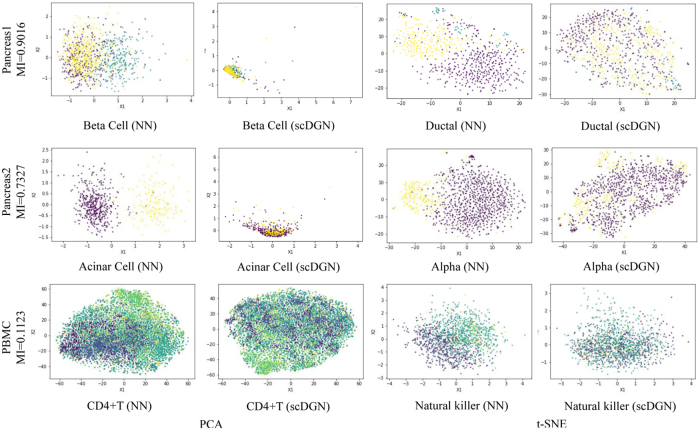

3.3. Visualization of the representation learned by alignment and classification methods

To further explore the effectiveness of the batch removal provided by our proposed domain adversarial training with conditional domain generalization strategy, we visualize the 100-dimensional hidden representations learned by NN and scDGN: Figure 4 presents both principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE) plots for several different cell types across the three data sets. Note that we only used the top two components for visualization, although the actual classification for all methods was performed using all raw feature values without any dimensionality reduction. Points are colored using their batch IDs to evaluate batch effects. As can be seen, using scDGN we obtain results that are much better at mixing cells from the different batches when compared with the baseline NN model. The impact is larger for the pancreas data sets that have larger MI compared with the PBMC data set, which helps explain the large increase in performance for these two data sets.

FIG. 4.

Visualization of learned representations for NN and scDGN using PCA and t-SNE. Rows: the three data sets we tested the method on. Columns: methods and cell types. For each row, data from different batches are distinguished using different colors.

We next extended this comparison and visualized the learned (aligned) representations for all methods using data from both the Pancreas2 and scQuery data sets (Figs. 5 and 6). For the Pancreas2 data set, we visualize the entire data set. For scQuery, given the large number of cell types and domains, we present PCA visualization of a subset of cell types and domains. As can be seen, in addition to scDGN, Seurat is also able to successfully mix the data from different batches. However, as the results in Table 2 indicate, this may come at the expense of not correctly separating cell types. MNNs and scVI are not always effective at removing batch effects for the cell types. In contrast, scDGN is able to do both domain mixing and cell-type assignment, leading to its better performance overall. For example, for the acinar and alpha cell types in the pancreas data set (Fig. 5), only scDGN, MNNs, and Seurat are able to align the data from different domains. However, MNNs and Seurat overcorrect the representation by aligning different cell types from different domains, mixing acinar and gamma cells. Additional visualizations for other cell types and domains can be found in Section D in Supplementary Material, where the same advantages of scDGN over other methods can be consistently observed.

3.4. Analysis of key genes

Although NNs are often treated as black boxes, recent methods provide useful directions for making them more interpretable (Ribeiro et al., 2016). Here we use activation maximization, which relies on the gradient of the correct category logit with respect to the input vector to select the key inputs for each of the models (Erhan et al., 2009; Simonyan et al., 2013; Springenberg et al., 2014). Formally, given a particular cell type $i$ and a trained NN $f$, activation maximization looks for important input genes $x'$ by solving the following optimization problem:

$$x' = \arg\max_{x} \; e_i^{\top} f(x)$$

where $e_i$ is the natural basis vector associated with the $i$-th category. This can be solved through backpropagation, where the gradient of $e_i^{\top} f(x)$ with respect to $x$, which can be viewed as the weight of the first-order Taylor expansion of the NN, is calculated to iteratively update the input. We follow a previous method (Simonyan et al., 2013) and initialize the optimization with a zero vector. Given this setting, we ran the optimization for 100 iterations with the learning rate set to 1. The important genes are selected as those inputs leading to the largest changes compared with the initialization values. To compare scDGN and NN for certain cell types, we select the top k genes with the largest changes and perform gene ontology (GO) analysis on these selected genes.
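The procedure can be illustrated on a toy network. The sketch below runs gradient ascent on one output logit from a zero input for 100 iterations with learning rate 1, as described above, and then ranks inputs by how far they moved. It rests on several assumptions: the network is a hand-written one-hidden-layer tanh model rather than the trained scDGN, "largest change" is read as largest absolute change, and all names and weights are hypothetical.

```python
import math


def logit_gradient(x, W, b, V, category):
    """Gradient of one output logit w.r.t. the input.

    Toy model: logit_c(x) = sum_j V[c][j] * tanh(W[j] . x + b[j]).
    """
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj)
              for row, bj in zip(W, b)]
    grad = [0.0] * len(x)
    for j, hj in enumerate(hidden):
        scale = V[category][j] * (1.0 - hj * hj)  # derivative of tanh
        for k in range(len(x)):
            grad[k] += scale * W[j][k]
    return grad


def activation_maximization(W, b, V, category, n_iter=100, lr=1.0):
    """Gradient ascent on the chosen logit, starting from a zero input."""
    x = [0.0] * len(W[0])
    for _ in range(n_iter):
        grad = logit_gradient(x, W, b, V, category)
        x = [xi + lr * gi for xi, gi in zip(x, grad)]
    return x


def top_k_genes(x_opt, k):
    """Indices of the k inputs that moved furthest from the zero start."""
    order = sorted(range(len(x_opt)), key=lambda i: abs(x_opt[i]), reverse=True)
    return order[:k]


# Toy weights: category 0 depends only on gene 0, category 1 only on gene 1.
W = [[1.0, 0.0, 0.0], [0.0, 0.5, 0.0]]
b = [0.0, 0.0]
V = [[1.0, 0.0], [0.0, 1.0]]
x_cat0 = activation_maximization(W, b, V, category=0)
x_cat1 = activation_maximization(W, b, V, category=1)
```

In this toy setting the ranking recovers the single gene each category depends on. For a real model, the selected indices would be mapped back to gene names before GO analysis.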

As an example, consider the genes identified for the liver cell type using the scQuery data set. We select the top 100 genes for this cell type from NN and scDGN and present the enriched GO categories on biological process with adjusted p-value $< 1.0 \times 10^{-4}$ in Tables 3 and 4. We also list these genes by order in Section A.3 in Supplementary Material. As can be seen, although a number of significant GO categories are identified for the top 100 NN genes, these are generic and not liver specific. They include general terms related to interactions between organs and immune response categories that are active in multiple organs and cell types. In sharp contrast, the categories identified for scDGN are much more specific and highlight key pathways that are mainly utilized in the liver.

Table 3.

GO Analysis Results for Top 100 scQuery Liver Genes in the Neural Network Method

| Term_name | Term_id | padj | −log10padj |
|---|---|---|---|
| Symbiotic process | GO:0044403 | 1.16E-08 | 7.935246875 |
| Interspecies interaction between organisms | GO:0044419 | 3.14E-08 | 7.503093471 |
| Viral process | GO:0016032 | 3.69E-08 | 7.433145019 |
| Immune response | GO:0006955 | 2.5491E-06 | 5.593613105 |
| Multiorganism process | GO:0051704 | 1.40837E-05 | 4.851282542 |
| Immune effector process | GO:0002252 | 4.53533E-05 | 4.34339136 |
| Response to stress | GO:0006950 | 5.56335E-05 | 4.254663785 |
| Defense response | GO:0006952 | 6.18759E-05 | 4.208478308 |

Table 4.

GO Analysis Results for Top 100 scQuery Liver Genes in the Single-Cell Domain Generalization Network Method

| Term_name | Term_id | padj | −log10padj |
|---|---|---|---|
| Chylomicron remodeling | GO:0034371 | 3.04042E-05 | 4.517066786 |
| Positive reg. of cholesterol esterification | GO:0010873 | 3.04042E-05 | 4.517066786 |
| Negative reg. of cellular component organization | GO:0051129 | 3.94437E-05 | 4.404022507 |
| Protein–lipid complex remodeling | GO:0034368 | 7.34551E-05 | 4.133978335 |
| Plasma lipoprotein particle remodeling | GO:0034369 | 7.34551E-05 | 4.133978335 |
| Protein-containing complex remodeling | GO:0034367 | 8.8522E-05 | 4.052948555 |

reg., regulation.

For example, the top category for the scDGN genes, “chylomicron remodeling,” refers to the main physiological purpose of chylomicron remnants: to facilitate the return of bile lipoproteins and cholesterol to the liver (Redgrave, 2004). Specifically, in this pathway, chylomicrons (lipoproteins) are broken down (remodeled through hydrolysis) and converted to a form called “chylomicron remnant” that is taken up by specific receptors that exist primarily on the surface of liver cells (Hara et al., 1997). The second term, “positive regulation of cholesterol esterification,” refers to cholesterol esterification, a critical step in reverse cholesterol transport, the process in which excess cholesterol is sent to the liver to be removed from the body (Murakami et al., 1995; Komoda, 2010). Furthermore, cholesteryl ester transfer protein (CETP) is a key enzyme involved in this process and is highly expressed in liver cells, and variants of CETP are associated with increased risk of atherosclerosis (Komoda, 2010; Seidman et al., 2014). The fifth most significant term, “plasma lipoprotein particle remodeling,” is part of the two aforementioned processes. The top 100 genes identified by scDGN include apoa1 (main protein component of high-density lipoprotein cholesterol), apoa2, and apoc1, all of which encode lipoproteins that are primarily expressed in the liver (Ko et al., 2014; Domingo-Espín et al., 2018). These genes were not included in the top 100 genes by the NN. We present the results of GO analysis comparison for several additional cell types in Section E.2 in Supplementary Material.

4. Discussion

Single-cell computational methods that do not account for batch effects are likely to fit the noise introduced by the batches. Several recent methods have been proposed for aligning scRNA-seq from multiple studies of the same tissues or processes. Most of these methods are unsupervised and assume that the cell types among different batches overlap. However, we show that these methods would fail on the studies in which cell types do not fully overlap, which is often the case when dealing with multiple data sets. To overcome this problem, we extend a supervised scRNA-seq cell-type assignment method based on NN and regularize its prediction to be invariant to batch effects.

Our method is based on the ideas of domain adversarial training. In such training, two competing tasks are used to optimize the representation of scRNA-seq data. The first focuses on the traditional goal of cell-type identification, whereas the second attempts to construct representations that are not affected by specific batch or experimental artifacts. This is accomplished by jointly minimizing a loss function that takes both goals into account, with the relative weight of the two goals controlled through a gradient reversal layer (GRL). We also proposed a conditional strategy to avoid overcorrection. We presented efficient learning methods for this setting and tested them on three large-scale scRNA-seq data sets containing experiments from several different platforms for partially overlapping cell types.
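The role of the GRL can be illustrated with a deliberately tiny example. The sketch below is not the scDGN implementation: it assumes a one-feature linear model with binary cell-type and batch labels, and the names `adversarial_gradients`, `sgd_step`, `w`, `u`, `v`, and `lam` are hypothetical. It only shows how the batch gradient is sign-flipped and scaled before it reaches the shared representation.

```python
import math


def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def adversarial_gradients(w, u, v, x, y, d, lam):
    """Gradients for one cell in a one-feature toy model (hypothetical names).

    h = w * x is the shared representation, u * h the cell-type logit,
    and v * h the batch (domain) logit; y and d are binary labels.
    """
    h = w * x
    g_label = sigmoid(u * h) - y    # d(cross-entropy)/d(cell-type logit)
    g_domain = sigmoid(v * h) - d   # d(cross-entropy)/d(batch logit)

    # Each head descends its own loss as usual.
    grad_u = g_label * h
    grad_v = g_domain * h

    # Gradient reversal: the batch gradient is multiplied by -lam before
    # it reaches the shared extractor, so descending grad_w improves
    # cell-type prediction while making the batch harder to predict.
    grad_w = (g_label * u - lam * g_domain * v) * x
    return grad_w, grad_u, grad_v


def sgd_step(params, grads, lr=0.1):
    """Plain gradient descent on all three parameters."""
    return tuple(p - lr * g for p, g in zip(params, grads))
```

With `lam = 0` the extractor is trained for cell-type prediction only, which corresponds to a plain supervised NN; larger values of `lam` put more weight on batch invariance. In an automatic-differentiation framework the same effect is usually obtained with a layer that is the identity in the forward pass and negates the gradient in the backward pass.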

As we show, our scDGN method is able to correctly identify cell types in the test data sets. For the largest data set we tested, which contained close to 40 different cell types, scDGN significantly outperformed all prior methods. It also ranked first for the second largest data set, and for all but one of the six tests on the third data set. Importantly, it always outperformed the supervised learning-based method, indicating that batch effects should be addressed when designing such methods for cell-type assignment. In addition to accurately assigning cell types, further analysis of significant genes indicates that by overcoming batch effects, scDGN is better able to focus on relevant sets of genes when compared with prior supervised methods, explaining its improvement in accuracy.

Although scDGN performed best on the data we analyzed, there are a number of possible issues with this approach. First, it learns a large number of parameters, which requires large input data sets. However, as we showed, scDGN is able to perform well even for data sets with a few thousand cells, which matches the current sizes of scRNA-seq data sets. Second, scDGN is based on NNs, which are often seen as a black box, making it hard to interpret the resulting model and its biological relevance. Recent work provides a number of directions that can be used to overcome this issue. As we showed, using activation maximization, we were able to identify several relevant cell-type specific genes in the learned network. Future work would include using additional NN interpretation methods, including Local Interpretable Model-agnostic Explanations (LIME) (Ribeiro et al., 2016) or Remove and Retrain (ROAR) and Keep and Retrain (KAR) (Hooker et al., 2018), to further identify the set of genes that play the largest role in the decisions the network makes. Third, as shown in Section D.3 in Supplementary Material, scDGN sometimes does not mix the representations from different batches for all cell types. Taken together, the visualization results for NN in Section D.8 in Supplementary Material and its competitive performance in Table 2 may indicate that it is not always necessary to remove batch effects for the model to achieve high test accuracy. Therefore, it is worthwhile to further study when the alignment is imperative.
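As a rough illustration of activation maximization, the sketch below runs gradient ascent on the input of a toy network so as to maximize one output unit, and then ranks input genes by their value in the optimized input. The two-layer tanh architecture, the ranking rule, and the names `maximize_activation` and `top_genes` are assumptions made for this example; they are not taken from the scDGN code.

```python
import math


def maximize_activation(W1, W2, target, steps=100, lr=0.1):
    """Gradient ascent on the input x to maximize one output logit.

    The toy network is logits = W2 @ tanh(W1 @ x), with W1 of shape
    (hidden, genes) and W2 of shape (cell types, hidden), both given
    as nested lists. Returns the optimized input vector.
    """
    n_genes = len(W1[0])
    n_hidden = len(W1)
    x = [0.0] * n_genes
    for _ in range(steps):
        # Forward pass through the hidden layer.
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]
        # d logit[target] / d x[j] by the chain rule through tanh.
        grad = [
            sum(W2[target][k] * (1.0 - h[k] ** 2) * W1[k][j]
                for k in range(n_hidden))
            for j in range(n_genes)
        ]
        # Ascent step: move the input toward higher target activation.
        x = [xi + lr * g for xi, g in zip(x, grad)]
    return x


def top_genes(x, names, k):
    """Names of the k genes with the largest values in the optimized input."""
    order = sorted(range(len(x)), key=lambda j: x[j], reverse=True)
    return [names[j] for j in order[:k]]
```

Calling `top_genes(maximize_activation(W1, W2, target=c), gene_names, 100)` would give a top-100 list for cell type `c` under these assumptions; a real analysis would apply the same idea to the trained network, typically with some regularization on the input.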

Finally, unlike prior scRNA-seq alignment methods, scDGN is supervised. Although this is an advantage when it comes to accuracy, as we have shown, it may be a problem for new data for which labeled cell types are not yet available. We believe that as more scRNA-seq and other high-throughput single-cell data accumulate, labeled data will become available for most cell types, which would enable training an scDGN for even more cell types. As we have shown with the scQuery data set, for which scDGN significantly outperformed all other methods, when such data exist, scDGN is able to correctly align experiments and platforms not seen in the training set. More generally, this article presents a method that connects the batch effect removal problem to domain adaptation tasks in machine learning. Recent developments in this direction in the machine learning community may lead to even better results for batch removal problems. For instance, it has recently been shown that self-supervision with domain knowledge, such as rotation prediction (Sun et al., 2020), can greatly improve the learned features and generalization to unseen data. It would be interesting to consider whether similar biological information, for example, knowledge about gene interactions, can be used to further improve the solution for alignment problems.

scDGN is implemented in Python with the PyTorch API (Steiner et al., 2019); the code and sampled data are available at https://github.com/SongweiGe/scDGN.

Supplementary Material

Author Disclosure Statement

The authors declare they have no conflicting financial interests.

Funding Information

This study was partially supported by National Institutes of Health grants 1R01GM122096 and OT2OD026682 to Z.B.J. and by a Scholars Award in Studying Complex Systems from the James S. McDonnell Foundation to Z.B.J. H.W. was supported by National Institutes of Health grants R01-GM093156 and P30-DA035778.

References

- Alavi, A., Ruffalo, M., Parvangada, A., et al. 2018. A web server for comparative analysis of single-cell RNA-seq data. Nat. Commun. 9, 4768.

- Chu, C., and Wang, R. 2018. A survey of domain adaptation for neural machine translation. arXiv 1806.00258.

- Csurka, G. 2017. Domain adaptation for visual applications: A comprehensive survey. arXiv 1702.05374.

- Ding, J., Adiconis, X., Simmons, S.K., et al. 2019. Systematic comparative analysis of single cell RNA-seq methods. BioRxiv 632216.

- Domingo-Espín, J., Nilsson, O., Bernfur, K., et al. 2018. Site-specific glycations of apolipoprotein A-I lead to differentiated functional effects on lipid-binding and on glucose metabolism. Biochim. Biophys. Acta Mol. Basis Dis. 1864(9, Part B), 2822–2834.

- Eng, C.-H.L., Lawson, M., Zhu, Q., et al. 2019. Transcriptome-scale super-resolved imaging in tissues by RNA seqFISH+. Nature 568, 235–239.

- Erhan, D., Bengio, Y., Courville, A., et al. 2009. Visualizing higher-layer features of a deep network. Techreport 1341. University of Montreal.

- Ganin, Y., Ustinova, E., Ajakan, H., et al. 2016. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17, 2096–2030.

- Hadsell, R., Chopra, S., and LeCun, Y. 2006. Dimensionality reduction by learning an invariant mapping, 2, 1735–1742. Presented at the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06). IEEE, New York, NY, USA.

- Haghverdi, L., Lun, A.T., Morgan, M.D., et al. 2018. Batch effects in single-cell RNA-sequencing data are corrected by matching mutual nearest neighbors. Nat. Biotechnol. 36, 421–427.

- Hara, T., Tan, Y., and Huang, L. 1997. In vivo gene delivery to the liver using reconstituted chylomicron remnants as a novel nonviral vector. Proc. Natl Acad. Sci. U. S. A. 94, 14547–14552.

- Hooker, S., Erhan, D., Kindermans, P.-J., et al. 2018. Evaluating feature importance estimates. arXiv 1806.10758.

- HuBMAP Consortium. 2019. The human body at cellular resolution: The NIH human biomolecular atlas program. Nature 574, 187–192.

- Hwang, B., Lee, J.H., and Bang, D. 2018. Single-cell RNA sequencing technologies and bioinformatics pipelines. Exp. Mol. Med. 50, 1–14.

- Jaitin, D.A., Kenigsberg, E., Keren-Shaul, H., et al. 2014. Massively parallel single-cell RNA-seq for marker-free decomposition of tissues into cell types. Science 343, 776–779.

- Kiselev, V.Y., Yiu, A., and Hemberg, M. 2018. scmap: Projection of single-cell RNA-seq data across data sets. Nat. Methods 15, 359–362.

- Ko, H.-L., Wang, Y.-S., Fong, W.-L., et al. 2014. Apolipoprotein C1 (APOC1) as a novel diagnostic and prognostic biomarker for lung cancer: A marker phase I trial. Thorac. Cancer 5, 500–508.

- Koch, G., Zemel, R., and Salakhutdinov, R. 2015. Siamese neural networks for one-shot image recognition. In ICML Deep Learning Workshop 2, Lille, France.

- Komoda, T., ed. 2010. Chapter 3, 35–59. In The HDL Handbook: Biological Functions and Clinical Implications. Academic Press, Boston.

- Li, H., Pan, S.J., Wang, S., et al. 2018. Domain generalization with adversarial feature learning. In CVPR, Salt Lake City, Utah, USA.

- Lieberman, Y., Rokach, L., and Shay, T. 2018. Castle–classification of single cells by transfer learning: Harnessing the power of publicly available single cell RNA sequencing experiments to annotate new experiments. PLoS One 13, e0205499.

- Lin, C., Jain, S., Kim, H., et al. 2017. Using neural networks for reducing the dimensions of single-cell RNA-seq data. Nucleic Acids Res. 45, e156.

- Lopez, R., Regier, J., Cole, M.B., et al. 2018. Deep generative modeling for single-cell transcriptomics. Nat. Methods 15, 1053–1058.

- Motiian, S., Piccirilli, M., Adjeroh, D.A., et al. 2017. Unified deep supervised domain adaptation and generalization, vol. 2, 3. In ICCV, Venice, Italy.

- Murakami, T., Michelagnoli, S., Longhi, R., et al. 1995. Triglycerides are major determinants of cholesterol esterification/transfer and HDL remodeling in human plasma. Arterioscler. Thromb. Vasc. Biol. 15, 1819–1828.

- Nowotschin, S., Setty, M., Kuo, Y.Y., et al. 2019. The emergent landscape of the mouse gut endoderm at single-cell resolution. Nature 569, 361–367.

- Papalexi, E., and Satija, R. 2018. Single-cell RNA sequencing to explore immune cell heterogeneity. Nat. Rev. Immunol. 18, 35–45.

- Patel, V.M., Gopalan, R., Li, R., et al. 2015. Visual domain adaptation: A survey of recent advances. IEEE Signal Process. Mag. 32, 53–69.

- Pei, Z., Cao, Z., Long, M., et al. 2018. Multi-adversarial domain adaptation. Presented at AAAI Conference on Artificial Intelligence, New Orleans, Louisiana, USA.

- Pijuan-Sala, B., Griffiths, J.A., Guibentif, C., et al. 2019. A single-cell molecular map of mouse gastrulation and early organogenesis. Nature 566, 490–495.

- Redgrave, T. 2004. Chylomicron metabolism. Biochem. Soc. Trans. 32, 79–82.

- Regev, A., Teichmann, S.A., Lander, E.S., et al. 2017. Science forum: the human cell atlas. Elife 6, e27041.

- Ribeiro, M.T., Singh, S., and Guestrin, C. 2016. Why should I trust you?: Explaining the predictions of any classifier, 1135–1144. In SIGKDD. ACM, San Francisco, California, USA.

- Rozenblatt-Rosen, O., Stubbington, M.J., Regev, A., et al. 2017. The human cell atlas: From vision to reality. Nature 550, 451–453.

- Seidman, M.A., Mitchell, R.N., and Stone, J.R. 2014. Chapter 12: Pathophysiology of atherosclerosis, 221–237. In Willis M.S., Homeister J.W., and Stone J.R., eds. Cellular and Molecular Pathobiology of Cardiovascular Disease. Academic Press, San Diego.

- Simonyan, K., Vedaldi, A., and Zisserman, A. 2013. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv 1312.6034.

- Springenberg, J.T., Dosovitskiy, A., Brox, T., et al. 2014. Striving for simplicity: The all convolutional net. arXiv 1412.6806.

- Steiner, B., DeVito, Z., Chintala, S., et al. 2019. Pytorch: An imperative style, high-performance deep learning library. NeurIPS 32, 1–4.

- Stuart, T., Butler, A., Hoffman, P., et al. 2019. Comprehensive integration of single-cell data. Cell 177, 1888–1902.e21.

- Stuart, T., and Satija, R. 2019. Integrative single-cell analysis. Nat. Rev. Genet. 20, 257–272.

- Sun, Y., Wang, X., Liu, Z., et al. 2020. Test-time training with self-supervision for generalization under distribution shifts. Presented at International Conference on Machine Learning (ICML), online.

- Tung, P.-Y., Blischak, J.D., Hsiao, C.J., et al. 2017. Batch effects and the effective design of single-cell gene expression studies. Sci. Rep. 7, 39921.

- Villani, A.-C., Satija, R., Reynolds, G., et al. 2017. Single-cell RNA-seq reveals new types of human blood dendritic cells, monocytes, and progenitors. Science 356, eaah4573.

- Wang, G., Moffitt, J.R., and Zhuang, X. 2018. Multiplexed imaging of high-density libraries of RNAs with MERFISH and expansion microscopy. Sci. Rep. 8, 4847.

- Wang, H., Ge, S., Xing, E.P., et al. 2019a. Learning robust global representations by penalizing local predictive power. arXiv 1905.13549.

- Wang, H., He, Z., Lipton, Z.C., et al. 2019b. Learning robust representations by projecting superficial statistics out. arXiv 1903.06256.

- Yu, Y., Tsang, J.C., Wang, C., et al. 2016. Single-cell RNA-seq identifies a PD-1 hi ILC progenitor and defines its development pathway. Nature 539, 102–106.

- Zeisel, A., Munoz-Manchado, A.B., Codeluppi, S., et al. 2015. Cell types in the mouse cortex and hippocampus revealed by single-cell RNA-seq. Science 347, 1138–1142.
