Abstract

Couples often communicate their emotions, e.g., love or sadness, through physical expressions of touch. Prior efforts have used visual observation to distinguish emotional touch communications by certain gestures tied to one’s hand contact, velocity and position. The work herein describes an automated approach to extracting the essential features of these gestures. First, a tracking system records the timing and location of contact interactions in 3-D between a toucher’s hand and a receiver’s forearm. Second, data post-processing algorithms extract dependent measures, derived from prior visual observation, tied to the intensity and velocity of the toucher’s hand, as well as areas, durations and parts of the hand in contact. Third, behavioral data were obtained from five couples who sought to convey a variety of emotional word cues. We found that certain combinations of six dependent measures distinguish the touch communications well. For example, a typical sadness expression invokes more contact, evolves more slowly, and impresses less deeply into the forearm than a typical attention expression. Furthermore, cluster analysis indicates that 2–5 distinct expression strategies are common per word being communicated. Specifying the essential features of touch communications can guide haptic devices in reproducing naturalistic interactions.

I. Introduction

Touch is an effective medium for conveying emotion, such as expressing gratitude to a friend or comforting a grieving relative. Indeed, naturalistic expressions of emotion are a part of daily life and fundamental to human development, communication, and survival [1]. Unraveling how our nervous system encodes emotion is an emerging topic. Recent works indicate that emotion is encoded, at least in part, by unmyelinated C tactile (CT) afferents that project to the insular cortex [2]. This pathway is distinct from, yet somewhat redundant with, that of discriminative touch, whereby low-threshold mechanosensitive afferents convey information to the somatosensory cortex. Ongoing work seeks to relate stimulus inputs to sensory percepts at the levels of single-unit microneurography, cortical fMRI, and behavioral psychophysics [3]–[5].

We currently know little about the rich, naturalistic details that underlie human-to-human touch communication, in which individuals convey emotions through unrestricted, intuitive touch. It is thought that certain contact interactions underlie how one seeks to convey an emotional message such as love or gratitude [6], [7]. In this setting, the stimulus is often the physical contact of the toucher’s hand with the receiver’s forearm. Indeed, qualitative observation has highlighted the importance of hand intensity, velocity and position. In particular, Hertenstein and Keltner examined “Ekman’s emotions” of anger, fear and happiness, the “self-focused emotions” of embarrassment, envy, and pride, and the “prosocial emotions” of love, gratitude, and sympathy. Each emotion was broken down into its component tactile behaviors (e.g., stroking, squeezing, and shaking), along with the duration and intensity of each gesture. They found that recognition accuracy ranged from 48% to 83%, demonstrating that the emotions were well conveyed through touch, and they identified key differences in how the gestures were performed.

While the study of emotional gestures has mostly been limited to qualitative observation, other works have begun to quantify and classify gestures using, for example, pressure data derived from touch-sensitive surfaces [8]. From an engineering perspective, such efforts can help identify and quantify the essential features underlying these interactions, amidst very rich yet variable physical contact, so as to replicate these emotions with haptic actuators [9], [10]. Quantitative descriptions may also help clarify how such interactions are encoded by the nervous system. For example, CT afferents respond maximally to velocities from about 1 to 10 cm/s [11], and A-beta afferents respond to both indentation and velocity [12]. Thus, a system to characterize the details underlying these gestures (e.g., contact area, velocity, and position) is necessary to better understand the communication of affective touch. However, pressure mats in particular can be problematic: they can change the psychological and physical nature of how one person delivers gestures to another, and they can attenuate the receiver’s response. Mechanosensitive afferents respond to forces as low as 0.08 mN (i.e., less than the weight of such a mat) and to light shear forces at the skin’s surface, and CT afferents are tuned to stimuli at human skin temperature [13], [14].

Towards the goal of quantifying emotional gestures, this work describes the customization, combination and validation of infrared video and electromagnetic tracking systems to measure contact between a toucher’s hand and a receiver’s forearm. In human-subjects experiments, we examine how these metrics differ when the toucher is asked to convey distinct sets of emotionally-charged words. The overall goal is to identify “primitive” attributes that underlie these contact metrics and tie those with the most salient perceptual responses.

II. Methods

By analyzing hand and forearm movements with a motion tracking system, we 1) quantify how romantic couples touch one another when attempting to convey a variety of emotions, and 2) determine which characteristics of the contact interaction might encode each emotion. First, we built a tracking system to measure the hand of the “toucher” (who performed the gesture) and the forearm of the “receiver” (who responded to the gesture). We then conducted behavioral experiments with five romantic couples, in which one participant attempted to convey one of six words to the other using only touch: “attention,” “calming,” “gratitude,” “happiness,” “love,” and “sadness.” Four of these words were chosen as a representative subset from prior work [7], with “happiness” and “sadness” as two of “Ekman’s emotions,” and “love” and “gratitude” as two prosocial emotions. We also chose two other words, “attention” and “calming.” The participants were naïve to the task so that they would perform intuitive, naturalistic gestures. In post-processing the data, we sought to identify the contact characteristics underlying the gestures used to convey each emotion. We further broke down each gesture into subgroups of expression strategies.

A. Behavioral experiments

Behavioral experiments with human subjects were performed as approved by the Institutional Review Board. In particular, five male-female romantic couples were enrolled, for a total of ten participants (mean age = 23.8 years, SD = 1.7, all right-handed) from the east coast of the United States of America. Each couple had been together at least three months (mean duration = 1.7 years). All enrollees granted consent to participate and continued to completion.

The couples were asked to perform gestures conveying one of six emotions to one another: “attention,” “happiness,” “calming,” “love,” “gratitude,” or “sadness.” For approximately five minutes before each experiment, the toucher and receiver were individually given the list of six words and asked to think about how they might perform gestures for each emotion. At the start of each experiment, one member of the couple was randomly chosen to act first as either the “toucher” or the “receiver.” Afterwards, the couple switched roles, so that each participant acted as the “toucher” and as the “receiver” once. The toucher and receiver were both seated and separated by a curtain, such that the toucher could see the receiver’s forearm, resting on the armrest of a chair, with no other visual contact.

The receiver was given a laptop computer, which was used to record their response and cue the next word for the toucher, while the toucher was given a set of headphones over which the next word was stated. For each trial, the toucher was instructed through the headphones to convey one of the six words to the receiver. The toucher was asked to touch only the receiver’s forearm and to continue performing the touch gesture for a full ten seconds, until instructed through the headphones to stop. Afterwards, the receiver was given seven seconds to choose which on-screen word they thought the toucher was trying to convey. Each of the six words was repeated five times during each experiment, for a total of thirty gestures per participant. Throughout this period, the tracking system continuously measured the relative motions of the toucher’s hand and the receiver’s forearm.

B. Tracking system

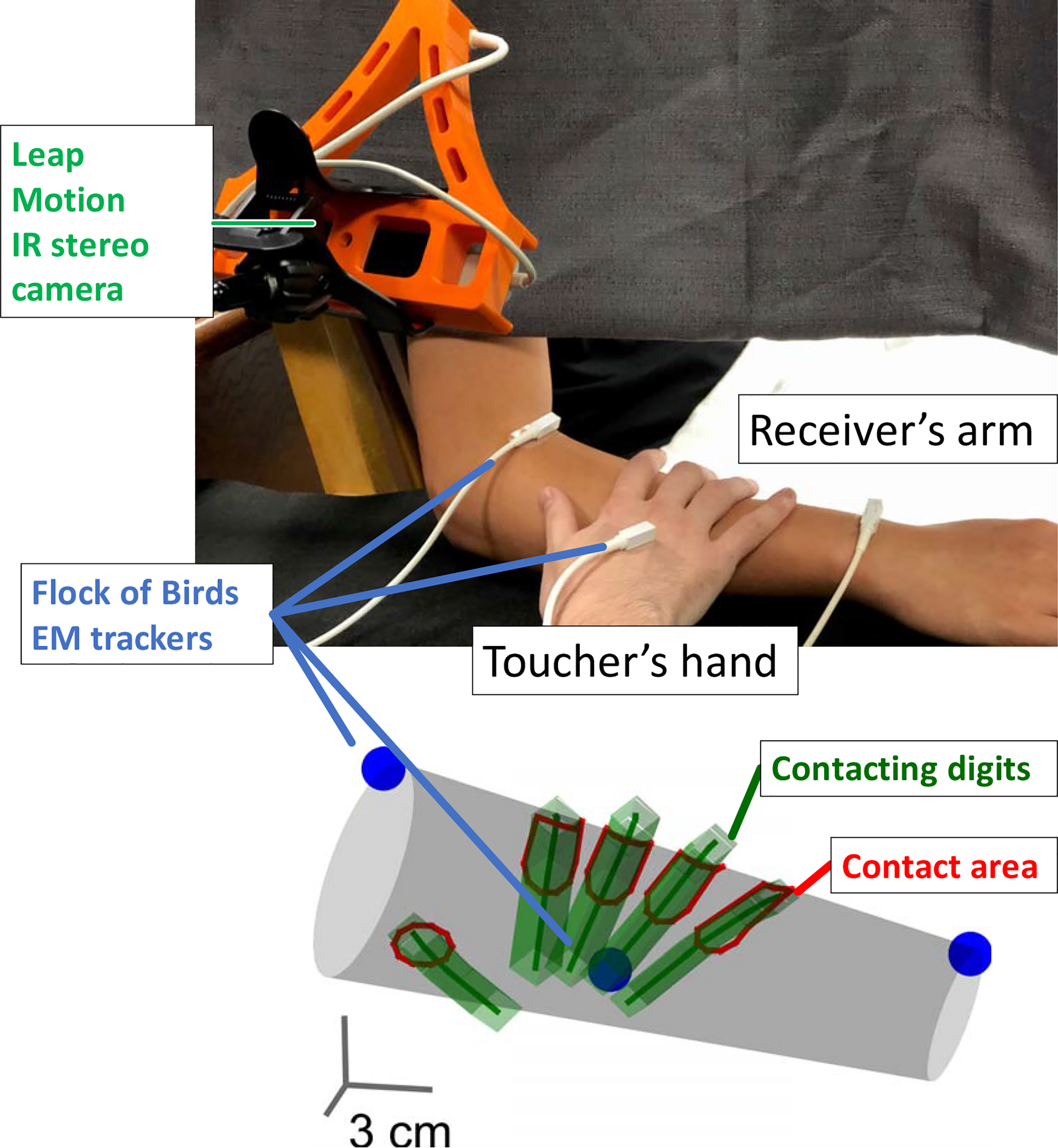

We customized off-the-shelf equipment to track a toucher’s hands in contact with a receiver’s forearm (Figure 1), consisting of electromagnetic tracking devices (Trakstar and Model 800 sensors, Ascension, Shelburne, VT) and infrared cameras and LEDs (Leap Motion, San Francisco, CA). Together, these enabled high accuracy while minimizing the physical attachment of tracking equipment to the participants. Data were collected at approximately 30 Hz. To measure and track the receiver’s forearm, two Flock of Birds sensors were attached to the dorsal side of the forearm with double-sided tape, at the wrist and elbow. Each sensor tracked six degrees of freedom (3-D translation and rotation) to an accuracy of 10 microns. The forearm circumference at both locations was measured with a tape measure, and a 3-D conic section (a truncated cone) was fit to these measurements to represent the virtual forearm.
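A minimal sketch of such a virtual forearm is given below. It is not the authors’ software: it assumes a circular cross-section (so each radius is the measured circumference divided by 2π), a radius that varies linearly between wrist and elbow, and axis end points that are already known. Because the sensors sit on the dorsal surface, in practice their positions would need to be offset toward the axis. The class and method names are hypothetical.

```python
import math

import numpy as np


def circumference_to_radius(circumference_cm):
    """Radius of a circle with the given circumference (assumes a circular cross-section)."""
    return circumference_cm / (2.0 * math.pi)


class ForearmFrustum:
    """Hypothetical truncated-cone forearm model spanning wrist to elbow.

    wrist_axis_xyz / elbow_axis_xyz are assumed to lie on the forearm's central
    axis (e.g., sensor positions shifted inward by one radius).
    """

    def __init__(self, wrist_axis_xyz, elbow_axis_xyz, wrist_circ_cm, elbow_circ_cm):
        self.p0 = np.asarray(wrist_axis_xyz, dtype=float)
        axis = np.asarray(elbow_axis_xyz, dtype=float) - self.p0
        self.length = float(np.linalg.norm(axis))
        self.axis = axis / self.length                   # unit vector, wrist -> elbow
        self.r0 = circumference_to_radius(wrist_circ_cm)
        self.r1 = circumference_to_radius(elbow_circ_cm)

    def radius_at(self, s):
        """Radius at axial distance s from the wrist, linearly interpolated and clipped to the ends."""
        t = min(max(s / self.length, 0.0), 1.0)
        return self.r0 + t * (self.r1 - self.r0)

    def signed_distance(self, point):
        """Radial distance to the surface: negative inside, positive outside (no end caps)."""
        d = np.asarray(point, dtype=float) - self.p0
        s = float(d @ self.axis)                         # axial coordinate
        radial = d - s * self.axis                       # component perpendicular to the axis
        return float(np.linalg.norm(radial)) - self.radius_at(s)
```

For instance, hypothetical wrist and elbow circumferences of 16 cm and 25 cm correspond to radii of roughly 2.5 cm and 4.0 cm. The signed distance is convenient for contact tests because its sign alone indicates whether a point lies inside the modelled forearm.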

Fig 1. Hardware setup.

(Top) Contact between the toucher’s hand and receiver’s forearm was measured using motion tracking equipment, consisting of a stereo infrared camera device to measure the toucher’s hand and an electromagnetic tracking system to measure the receiver’s arm and to orient the toucher’s hand. (Bottom) A virtual representation of the hand and forearm illustrates the captured data along with the measured contact between them.

To measure and track the toucher’s hand, the Leap Motion controller was used in conjunction with one Flock of Birds sensor. The controller was mounted in a 3-D printed housing, with the Flock of Birds sensor 10 cm away from it to avoid electrical noise from the Leap Motion controller. Because the sensor and the Leap Motion controller were fixed relative to each other, hand coordinates could be transformed, using the sensor pose, into the same reference frame as the forearm coordinates. An additional Flock of Birds sensor was securely taped to the back of the toucher’s dominant hand for increased precision; if the recorded hand position did not properly match this sensor’s position, the hand was relocated based on the sensor.
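Because the controller and sensor share a rigid housing, mapping Leap Motion coordinates into the tracker frame amounts to composing a fixed mount transform with the sensor’s live pose. The sketch below uses 4×4 homogeneous matrices; the function names, the calibration matrix `T_sensor_from_leap`, and the translation-only relocation rule with its threshold are hypothetical. Both devices are assumed to report in the same length unit (the Leap Motion API reports millimetres, so a conversion may be needed in practice).

```python
import numpy as np


def pose_matrix(rotation, translation):
    """4x4 homogeneous transform from a 3x3 rotation matrix and a translation vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T


def leap_to_tracker(points_leap, T_tracker_from_sensor, T_sensor_from_leap):
    """Express (N, 3) Leap Motion points in the electromagnetic tracker frame.

    T_tracker_from_sensor: live pose of the housing sensor, reported by the tracker.
    T_sensor_from_leap: hypothetical fixed calibration of the rigid housing.
    """
    pts = np.atleast_2d(np.asarray(points_leap, dtype=float))
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    T = T_tracker_from_sensor @ T_sensor_from_leap       # chain: leap -> sensor -> tracker
    return (homogeneous @ T.T)[:, :3]


def relocate_hand(hand_points, leap_estimate_of_marker, measured_marker, tol=1.0):
    """Shift hand points so the Leap estimate of the back-of-hand sensor matches its measurement.

    Illustrative translation-only correction, applied only when the mismatch exceeds tol.
    """
    hand = np.asarray(hand_points, dtype=float)
    offset = np.asarray(measured_marker, dtype=float) - np.asarray(leap_estimate_of_marker, dtype=float)
    if np.linalg.norm(offset) <= tol:
        return hand
    return hand + offset
```

Keeping the two transforms separate mirrors the hardware: the mount calibration is estimated once, while the sensor pose changes every frame.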

C. Extracting contact characteristics

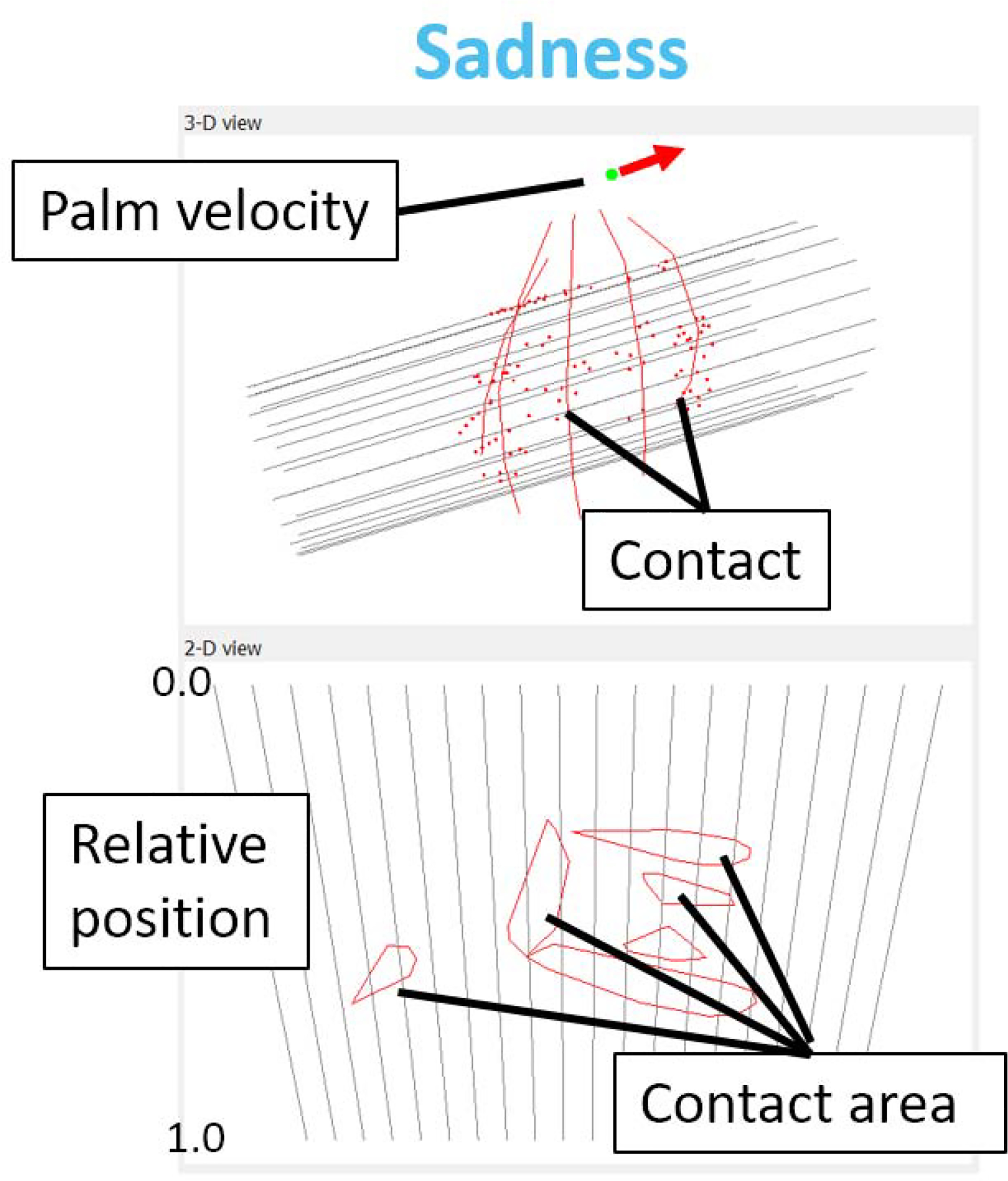

Data from the Leap Motion controller provided the pose, width and length of each bone of the hand. In custom-built software, these were translated into cylinders made up of 3-D line segments. Each intersection between a line segment and the forearm cone was treated as a contact point (Figure 2).
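The contact test can be illustrated with a simple root-finding sketch. The text does not specify how intersections were computed, so the approach below (sampling each segment, then bisecting wherever consecutive samples change from inside to outside the truncated cone) is an assumption, as are the function names and default tolerances. A segment lying entirely inside the modelled forearm produces no crossing with this approach.

```python
import numpy as np


def frustum_signed_distance(point, p0, p1, r0, r1):
    """Signed radial distance to a truncated cone with axis p0 -> p1 (negative = inside).

    The axial coordinate is clipped to [0, length]; end caps are ignored.
    """
    p0 = np.asarray(p0, dtype=float)
    axis = np.asarray(p1, dtype=float) - p0
    length = np.linalg.norm(axis)
    u = axis / length
    d = np.asarray(point, dtype=float) - p0
    s = d @ u
    radius = r0 + np.clip(s / length, 0.0, 1.0) * (r1 - r0)
    return float(np.linalg.norm(d - s * u) - radius)


def segment_contacts(a, b, sdf, n_samples=32, tol=1e-6):
    """Points where segment a-b crosses the surface sdf(x) = 0.

    The segment is sampled at n_samples points; each inside/outside change
    between neighbouring samples is refined by bisection on the parameter t.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)

    def point_at(t):
        return a + t * (b - a)

    ts = np.linspace(0.0, 1.0, n_samples)
    inside = [sdf(point_at(t)) < 0.0 for t in ts]
    hits = []
    for i in range(n_samples - 1):
        if inside[i] == inside[i + 1]:
            continue                                     # no crossing in this interval
        lo, hi, lo_inside = ts[i], ts[i + 1], inside[i]
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if (sdf(point_at(mid)) < 0.0) == lo_inside:
                lo = mid
            else:
                hi = mid
        hits.append(point_at(0.5 * (lo + hi)))
    return hits
```

A bone represented as several parallel segments would call `segment_contacts` once per segment, e.g. with `sdf=lambda x: frustum_signed_distance(x, wrist, elbow, r_wrist, r_elbow)`, where the arguments are the modelled forearm’s axis end points and radii.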

Fig 2. Measuring contact characteristics.

Screenshots of the custom-built tracking software demonstrating some of the contact metrics at a single time point as the toucher conveyed “sadness.” (Top) A series of lines forms a cylinder representing the receiver’s forearm, and other lines crossing those represent the digits of the toucher’s hand. Red dots mark intersections between the two, denoting contact. (Bottom) The same forearm is represented, but this time the series of lines is unwrapped onto a flat 2-D surface. Red areas denote contact.

Contact characteristics.

We examined six contact characteristics for each gesture, which were captured at each recording frame of the tracking system. Values were only considered when contact was made between toucher and receiver. For example, if the hand was lifted from the arm and moved rapidly to another point on the arm, this velocity would not be included in the calculations. The six contact characteristics were as follows. 1) Normal velocity was measured by the forward divided difference to the next measured position of the palm or fingertips in contact with the arm, projected onto the surface normal at the closest point on the arm. This quantity relates to the indentation rate of each gesture: we found that gestures with high normal velocity also tended to have high force, but normal velocity was the more robust measurement from a noise perspective. 2) Tangential velocity was measured by the forward divided difference to the next measured position of the palm, projected onto the plane tangent to the closest point on the arm (orthogonal to the surface normal). 3) Contact area was determined as the area of the convex hull enveloping the contact points for each bone of the hand (as illustrated in Figure 2). 4) Mean duration of contact was measured as the mean continuous duration of contact with the arm during a gesture. 5) The number of fingers in contact was determined by averaging, over the gesture, the sum of binary “in contact” values across fingers. 6) The time with palm in contact was measured as the proportion of total contact time during which the palm was in contact with the arm.
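Characteristics 1) to 3) can be sketched as follows. This is an illustration under stated assumptions rather than the authors’ implementation: the surface normal is approximated by the radial direction from the forearm axis (a cylinder approximation that ignores the cone’s taper), normal velocity is reported as signed and positive into the arm, and the convex hulls are computed after unwrapping contact points onto a flat 2-D surface of fixed radius, analogous to the unwrapped view in Figure 2. All function names are hypothetical; `scipy.spatial.ConvexHull` computes the hulls.

```python
import numpy as np
from scipy.spatial import ConvexHull


def radial_normal(point, p0, axis_unit):
    """Outward unit normal, approximated by the radial direction from the forearm axis."""
    d = np.asarray(point, dtype=float) - p0
    radial = d - (d @ axis_unit) * axis_unit
    return radial / np.linalg.norm(radial)


def contact_velocities(positions, dt, p0, axis_unit):
    """Split the forward-difference velocity of one tracked point into normal and tangential parts.

    positions: (T, 3) array for one palm or fingertip point; returns two (T-1,) arrays.
    Normal velocity is signed, positive when moving into the arm.
    """
    positions = np.asarray(positions, dtype=float)
    velocities = np.diff(positions, axis=0) / dt          # v_t = (p_{t+1} - p_t) / dt
    normal_v, tangential_v = [], []
    for p, v in zip(positions[:-1], velocities):
        n = radial_normal(p, p0, axis_unit)
        v_n = float(v @ n)
        normal_v.append(-v_n)
        tangential_v.append(float(np.linalg.norm(v - v_n * n)))
    return np.array(normal_v), np.array(tangential_v)


def unwrap(points, p0, axis_unit, ref_unit, radius):
    """Map 3-D surface points to 2-D (arc length, axial distance) on a cylinder.

    ref_unit must be a unit vector perpendicular to axis_unit; it sets the angle-zero
    direction, and the +/-pi seam should lie away from the contact region.
    """
    d = np.asarray(points, dtype=float) - p0
    other = np.cross(axis_unit, ref_unit)
    theta = np.arctan2(d @ other, d @ ref_unit)
    return np.column_stack([radius * theta, d @ axis_unit])


def hull_area(points_2d):
    """Area of the 2-D convex hull; 0 for fewer than three non-collinear points."""
    pts = np.asarray(points_2d, dtype=float)
    if len(pts) < 3 or np.linalg.matrix_rank(pts - pts.mean(axis=0)) < 2:
        return 0.0
    return float(ConvexHull(pts).volume)                  # in 2-D, .volume is the enclosed area


def contact_area(contacts_per_bone, p0, axis_unit, ref_unit, radius):
    """Sum of per-bone convex-hull areas of the contact points, in unwrapped coordinates."""
    return sum(hull_area(unwrap(c, p0, axis_unit, ref_unit, radius))
               for c in contacts_per_bone if len(c) > 0)
```

Summing per-bone hulls, rather than taking one hull over all contacts, avoids counting the uncontacted skin between separated fingers as contact area.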

System validation.

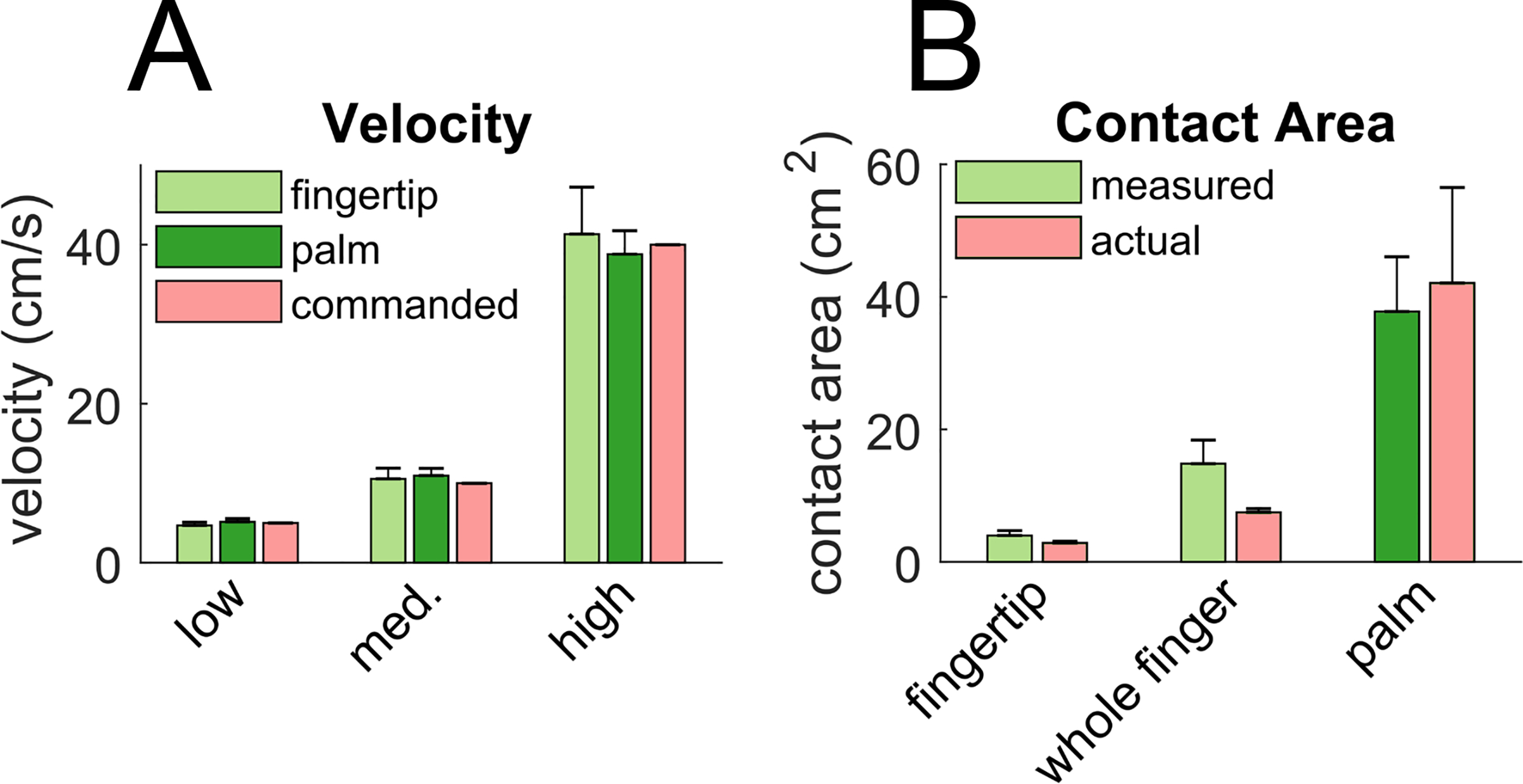

An experiment was performed with a single participant to examine the accuracy of the tracking system in measuring velocity and contact area (Figure 3). To command velocity, two marks were made on the receiver’s arm 4 cm apart, and the toucher moved their palm (or fingertip) between the marks according to a metronome commanding the desired velocity. To measure contact area, the toucher’s hand was covered in washable ink and pressed against the receiver’s forearm [15]. Ink prints were later compared to output from the tracking system.
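The commanded velocity follows directly from the 4 cm mark spacing and the metronome tempo. The text reports neither the tempos nor how beats mapped to movements, so the sketch below assumes one mark-to-mark traverse per beat; under that assumption, 15 and 150 beats per minute would bracket the 1–10 cm/s range to which CT afferents respond maximally. The helper names are hypothetical.

```python
MARK_SPACING_CM = 4.0  # distance between the two marks on the receiver's forearm (from the text)


def commanded_velocity_cm_s(beats_per_minute, traverses_per_beat=1.0):
    """Velocity implied by the metronome, assuming a fixed number of mark-to-mark traverses per beat."""
    return MARK_SPACING_CM * traverses_per_beat * beats_per_minute / 60.0


def percent_error(estimate, reference):
    """Signed percent error of a tracker estimate against a reference (e.g., an ink print area)."""
    return 100.0 * (estimate - reference) / reference
```

The same percent-error helper applies to both validation tests: tracker velocity against the commanded velocity, and tracker contact area against the ink-print area.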

Fig 3. System validation.

Tests of the hardware setup were conducted to evaluate the estimates of A) the velocity of the index fingertip and palm and B) contact area. Contact area was compared to actual measurements obtained with an ink-based technique.

D. Data analysis and statistics

The final cleaned dataset consisted of 227 of the 300 performed gestures, as some gestures were not properly recorded due to inconsistencies in the Leap Motion’s output [16]. A research assistant viewed each motion tracking recording and removed any cases in which the hand position was erratic or not detected at all. In post-processing, only the toucher’s right hand was considered, to simplify comparisons across gestures (or the left hand if the right hand was not used). For each gesture, each of the six contact characteristics was averaged over all frames of the gesture in which contact was made.
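The per-gesture averaging, together with characteristics 4) to 6), can be sketched from per-frame arrays. The array layout (one velocity value per frame, five boolean finger flags, a boolean palm flag) and the function names are assumptions for illustration; frames without contact are masked out, and a gesture with no contact frames returns `None`.

```python
import numpy as np


def contact_bout_lengths(contact):
    """Lengths (in frames) of each run of consecutive contact frames."""
    padded = np.concatenate([[0], np.asarray(contact, dtype=int), [0]])
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return ends - starts


def summarize_gesture(contact, normal_v, tangential_v, area, finger_contact, palm_contact, dt):
    """Collapse per-frame measurements of one gesture into the six characteristics.

    contact: (T,) bool, any hand-forearm contact in the frame.
    finger_contact: (T, 5) bool, per-finger contact; palm_contact: (T,) bool.
    dt: frame period in seconds (about 1/30 s for a ~30 Hz recording).
    """
    contact = np.asarray(contact, dtype=bool)
    if not contact.any():
        return None                                       # nothing to average
    fingers = np.asarray(finger_contact, dtype=bool)[contact]
    return {
        "normal_velocity": float(np.mean(np.asarray(normal_v, dtype=float)[contact])),
        "tangential_velocity": float(np.mean(np.asarray(tangential_v, dtype=float)[contact])),
        "contact_area": float(np.mean(np.asarray(area, dtype=float)[contact])),
        "mean_contact_duration_s": float(np.mean(contact_bout_lengths(contact)) * dt),
        "fingers_in_contact": float(np.mean(fingers.sum(axis=1))),
        "palm_time_fraction": float(np.mean(np.asarray(palm_contact, dtype=bool)[contact])),
    }
```

Forward-difference velocities have one fewer value than there are frames, so in this sketch they would need to be padded (or the last frame dropped) before being passed in.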

Next, statistics were computed on the raw dataset (scikit-posthocs and SciPy statistics packages, Python 2.7) using a conservative, non-parametric approach. A Kruskal-Wallis test was performed for each of the six contact characteristics across the gestures to determine significant differences. Afterwards, a post-hoc Conover’s test was performed to identify differences between pairs of gestures, using the Holm-Bonferroni method for p-value correction.
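The statistical pipeline can be reproduced with SciPy alone. The authors used the scikit-posthocs package (whose `posthoc_conover` function accepts `p_adjust='holm'`); the sketch below instead spells out the Conover-Iman pairwise statistic and the Holm step-down adjustment explicitly so that each step is visible. It is an illustrative re-derivation, not the authors’ code, and the function names are hypothetical.

```python
import itertools

import numpy as np
from scipy import stats


def holm_adjust(pvals):
    """Holm-Bonferroni step-down adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = len(p)
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, idx in enumerate(np.argsort(p)):
        running_max = max(running_max, min(1.0, (m - rank) * p[idx]))  # keep adjusted p monotone
        adjusted[idx] = running_max
    return adjusted


def conover_pairwise(groups):
    """Raw two-sided p-values of Conover-Iman tests for every pair of groups."""
    data = np.concatenate(groups)
    n, k = len(data), len(groups)
    ranks = stats.rankdata(data)                          # pooled ranks, ties averaged
    h = stats.kruskal(*groups).statistic                  # tie-corrected H statistic
    s2 = (np.sum(ranks ** 2) - n * (n + 1) ** 2 / 4.0) / (n - 1)
    sizes = [len(g) for g in groups]
    bounds = np.cumsum([0] + sizes)
    mean_ranks = [ranks[bounds[i]:bounds[i + 1]].mean() for i in range(k)]
    scale = s2 * (n - 1 - h) / (n - k)
    pvals = {}
    for i, j in itertools.combinations(range(k), 2):
        se = np.sqrt(scale * (1.0 / sizes[i] + 1.0 / sizes[j]))
        t = abs(mean_ranks[i] - mean_ranks[j]) / se
        pvals[(i, j)] = float(2.0 * stats.t.sf(t, df=n - k))
    return pvals


def analyze_characteristic(groups, labels):
    """Kruskal-Wallis omnibus p-value plus Holm-adjusted Conover p-values keyed by label pairs."""
    omnibus_p = float(stats.kruskal(*groups).pvalue)
    raw = conover_pairwise(groups)
    pairs = list(raw)
    adjusted = holm_adjust([raw[pair] for pair in pairs])
    return omnibus_p, {(labels[i], labels[j]): float(a) for (i, j), a in zip(pairs, adjusted)}
```

For the present data, `groups` would hold one array per word (the per-gesture values of a single contact characteristic), and the function would be called once for each of the six characteristics.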

Finally, clustering analysis was performed to determine the common expression strategies for each gesture. Values were scaled from 0 to 1 based on the full range of values of each of the six contact characteristics. K-means clustering was performed (scikit-learn library, Python 2.7). The sum of squared errors was computed for values of k from 1 to 10, and a suitable value of k was chosen via the elbow method. Representative gestures were chosen from the dataset as those closest to the centroid of each identified cluster, and a snapshot was drawn to illustrate each representative gesture.
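A self-contained sketch of this clustering step follows. It uses a plain NumPy implementation of Lloyd’s k-means with k-means++ seeding in place of scikit-learn’s `KMeans`, so that it runs without extra dependencies. The text does not say how the elbow was located, so the automatic rule in `elbow_k` (choosing the k farthest from the straight line joining the first and last points of the normalized SSE curve) is an assumption, as are all function names. Scaling would be applied over the whole dataset, with clustering then run on the rows of one word at a time.

```python
import numpy as np


def minmax_scale(X):
    """Scale each column to [0, 1] using its full range (constant columns map to 0)."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    return (X - lo) / np.where(hi > lo, hi - lo, 1.0)


def _assign(X, centroids):
    """Index of the nearest centroid for every row of X."""
    return ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)


def _kmeans_pp_init(X, k, rng):
    """k-means++ seeding: each new centre is drawn with probability proportional to D^2."""
    centres = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = ((X[:, None, :] - np.array(centres)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        probs = d2 / d2.sum() if d2.sum() > 0 else np.full(len(X), 1.0 / len(X))
        centres.append(X[rng.choice(len(X), p=probs)])
    return np.array(centres)


def kmeans(X, k, n_init=10, n_iter=100, seed=0):
    """Lloyd's k-means with k-means++ restarts; returns (centroids, labels, SSE) of the best run."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_init):
        centroids = _kmeans_pp_init(X, k, rng)
        for _ in range(n_iter):
            labels = _assign(X, centroids)
            new = np.array([X[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
                            for c in range(k)])
            converged = np.allclose(new, centroids)
            centroids = new
            if converged:
                break
        labels = _assign(X, centroids)                    # labels consistent with final centroids
        sse = float(((X - centroids[labels]) ** 2).sum())
        if best is None or sse < best[2]:
            best = (centroids, labels, sse)
    return best


def elbow_k(ks, sse):
    """k whose normalized (k, SSE) point lies farthest from the chord joining the curve's end points."""
    x = (np.asarray(ks, dtype=float) - ks[0]) / (ks[-1] - ks[0])
    sse = np.asarray(sse, dtype=float)
    y = (sse - sse.min()) / (sse.max() - sse.min())
    # Unnormalized point-to-line distance; the constant denominator does not change the argmax.
    dist = np.abs((y[-1] - y[0]) * x - (x[-1] - x[0]) * y + x[-1] * y[0] - y[-1] * x[0])
    return int(ks[int(np.argmax(dist))])


def representatives(X, centroids, labels):
    """Index of the member closest to each cluster's centroid."""
    reps = []
    for c, centre in enumerate(centroids):
        members = np.flatnonzero(labels == c)
        if members.size:
            reps.append(int(members[((X[members] - centre) ** 2).sum(axis=1).argmin()]))
    return reps
```

The representative indices returned by `representatives` correspond to the gestures that would be drawn as snapshots for each strategy.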

III. Results

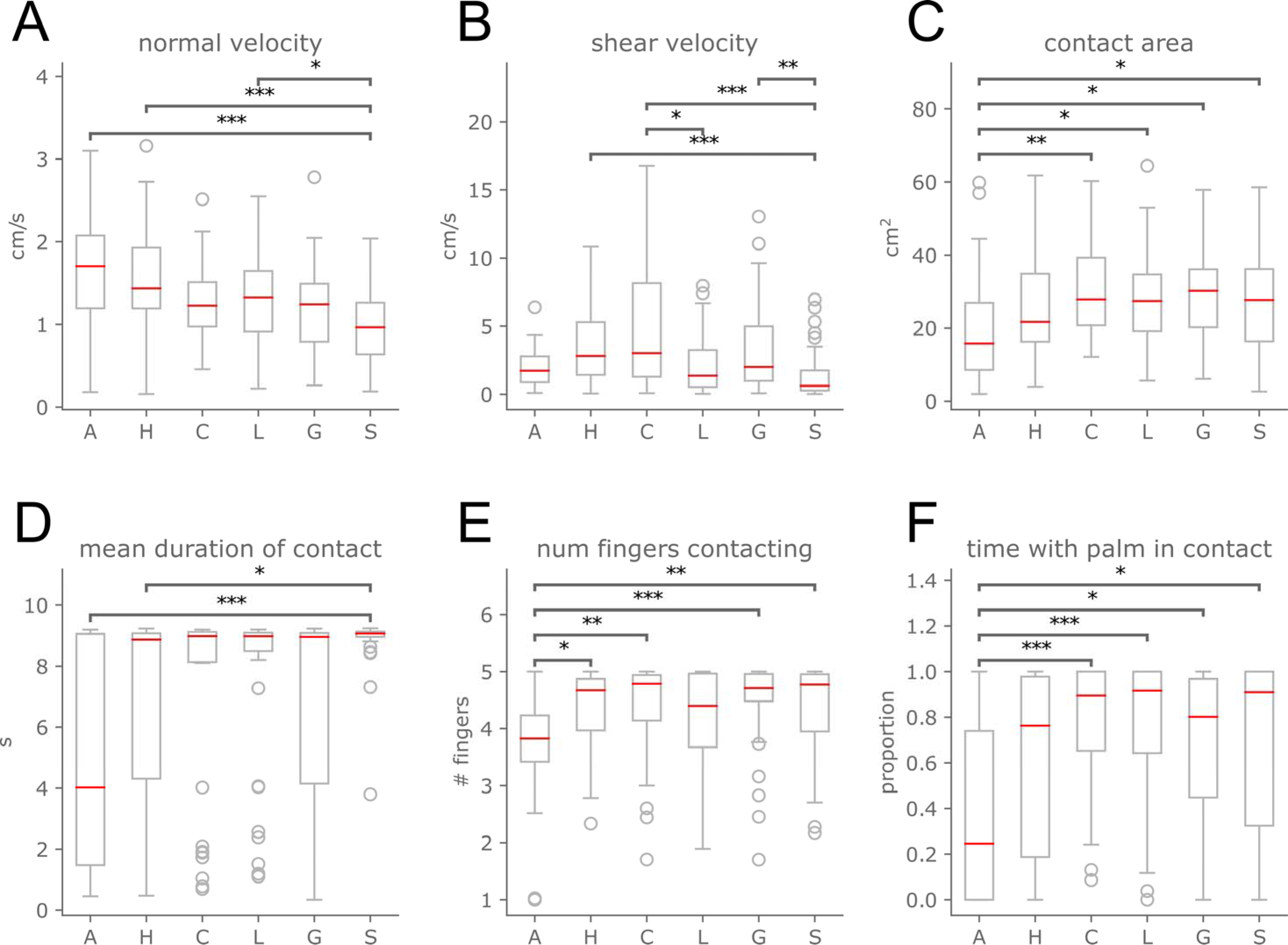

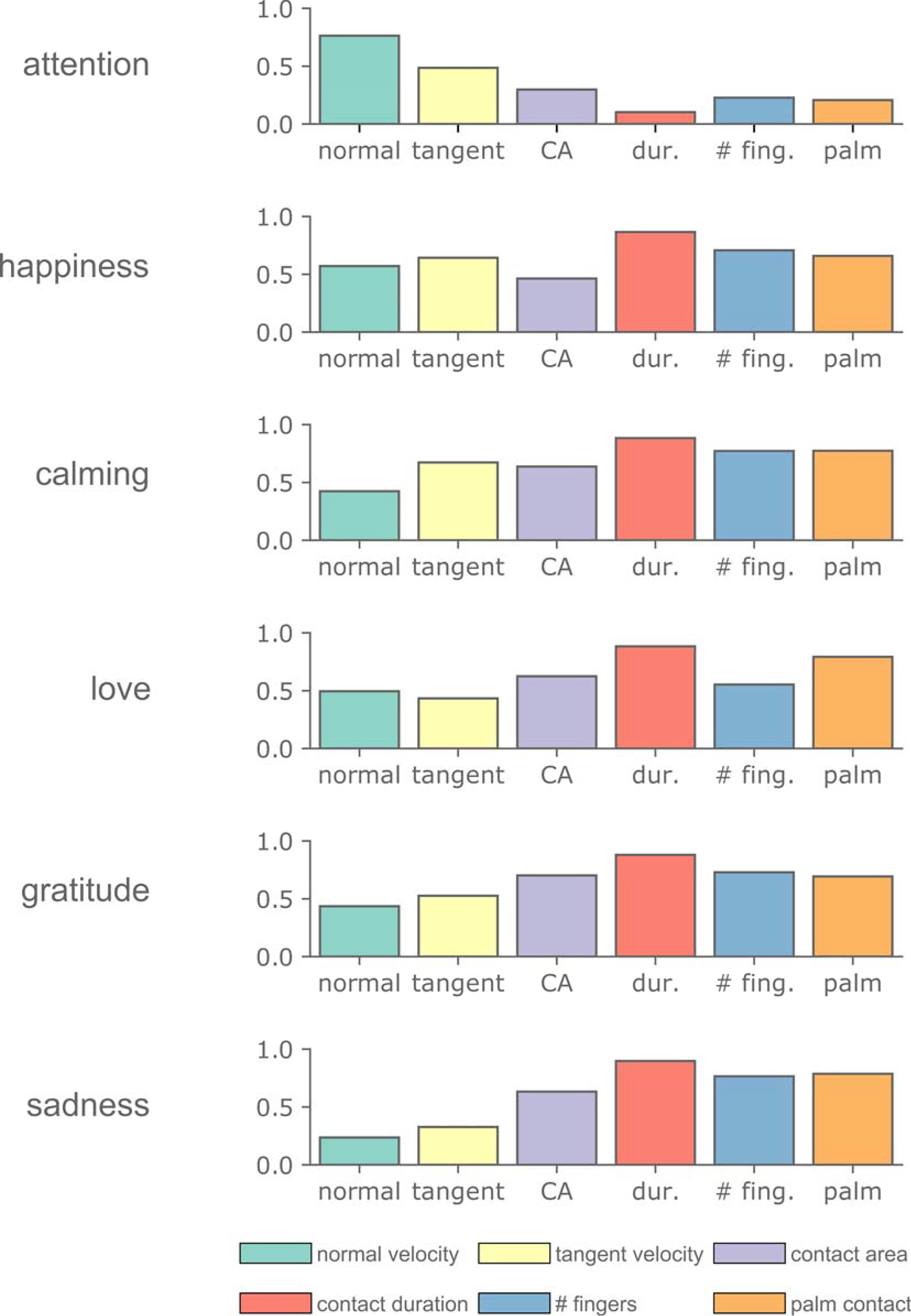

Raw data for the gestures are detailed in Figure 4. At first glance, most of the metrics exhibit a high level of variability across the ten participants, but there were several noteworthy trends. Summarizing these trends, Figure 5 shows the median gesture characteristics from the dataset. Attention and happiness, for example, had especially high normal velocity, suggesting that these were comparatively high-force gestures. Happiness and calming were particularly high in tangential velocity, while love and sadness were especially low, as these gestures were often motionless. Contact for calming, love, and sadness was usually maintained for the full duration of the trial, while gratitude, happiness and attention had noticeably shorter durations. Attention in particular stands out in that palm contact was rarely made, few fingers were in contact, and the total contact area was low.

Fig 4. Distributions of contact characteristics for the raw dataset.

All measurements were taken from the toucher’s right hand. A) shows the mean velocity of contacting fingertips and palm in the direction towards the surface normal of the arm, relating to indentation-rate or intensity of the gesture. B) shows the tangential velocity of the contact, or the velocity vector projected onto the tangent plane of the closest point on the arm. C) plots the total contact area for each gesture, summed for the palm and fingers. D) plots the mean duration of contact made between toucher and receiver within each trial. E) depicts the mean number of fingers contacting the arm throughout each gesture. F) displays the proportion of total contact time in which the palm was touching the receiver. * p <= .05, ** p <= .01, *** p <= .001. A = attention, H = happiness, C = calming, L = love, G = gratitude, S = sadness.

Fig 5. Comparing median contact characteristics per gesture.

Each contact characteristic is normalized by its interquartile range (IQR), averaged across gestures. Normal and tangential velocities in particular separate the six gestures well.

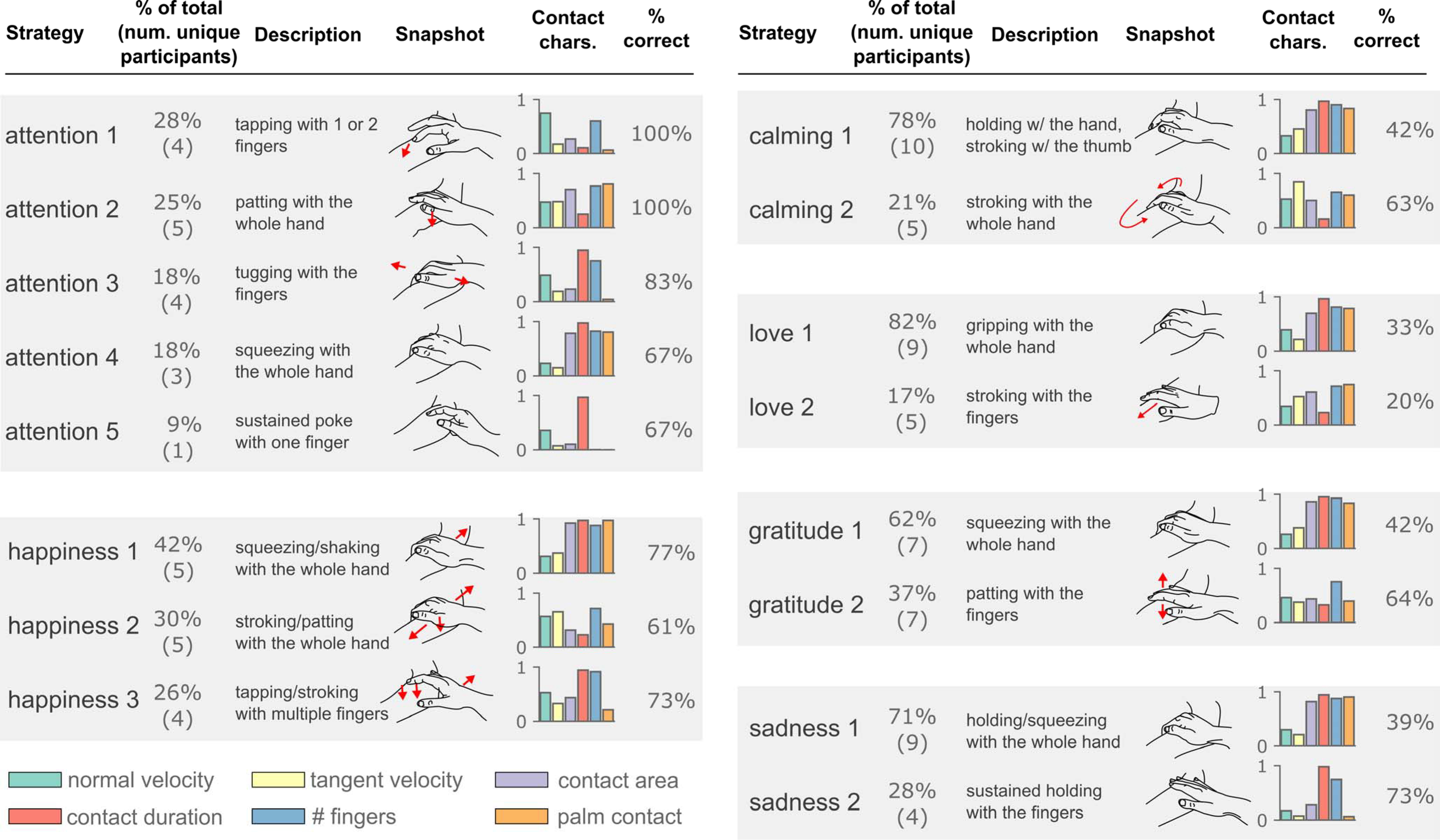

Further work was done to determine common expression strategies of each gesture via clustering analysis (Figure 6). Attention was the most variable gesture, with five strategies identified. Most common were short, high-intensity pokes with a small number of fingers. However, faster pulling on the arm with the whole hand was also seen with somewhat high frequency. A few participants used low intensity, high duration gestures to signal attention. The next most variable gesture was happiness, with three primary strategies identified. Most common were whole-hand gestures, although tapping with the fingers was also fairly common. All of the happiness strategies were relatively high with respect to tangential velocity. The most consistent gestures were calming, sadness, and love, with approximately 75% of these gestures being performed in a similar manner. For calming, either a low intensity, slow hold with the hand was used or a faster stroking gesture with the fingers. For gratitude, a slower grabbing motion or a faster shaking motion was used. For sadness, either a whole hand gesture or holding with a few fingers was used. Notably, both sadness strategies were always held for the whole trial.

Fig 6. Common expression strategies for each gesture.

Via k-means clustering analysis, multiple “expression strategies” were determined. For each gesture, the strategies are listed along with 1) the percentage of gestures clustered into each strategy, 2) the number of unique participants who used each strategy (out of 10), 3) a description along with a representative “snapshot” of each strategy, 4) the relative quantities of our six contact characteristics, and 5) the percentage of the time that the receiver correctly identified the intended word when that strategy was used. Values of % total are rounded down to the nearest whole percent.

Behavioral experiments analysis.

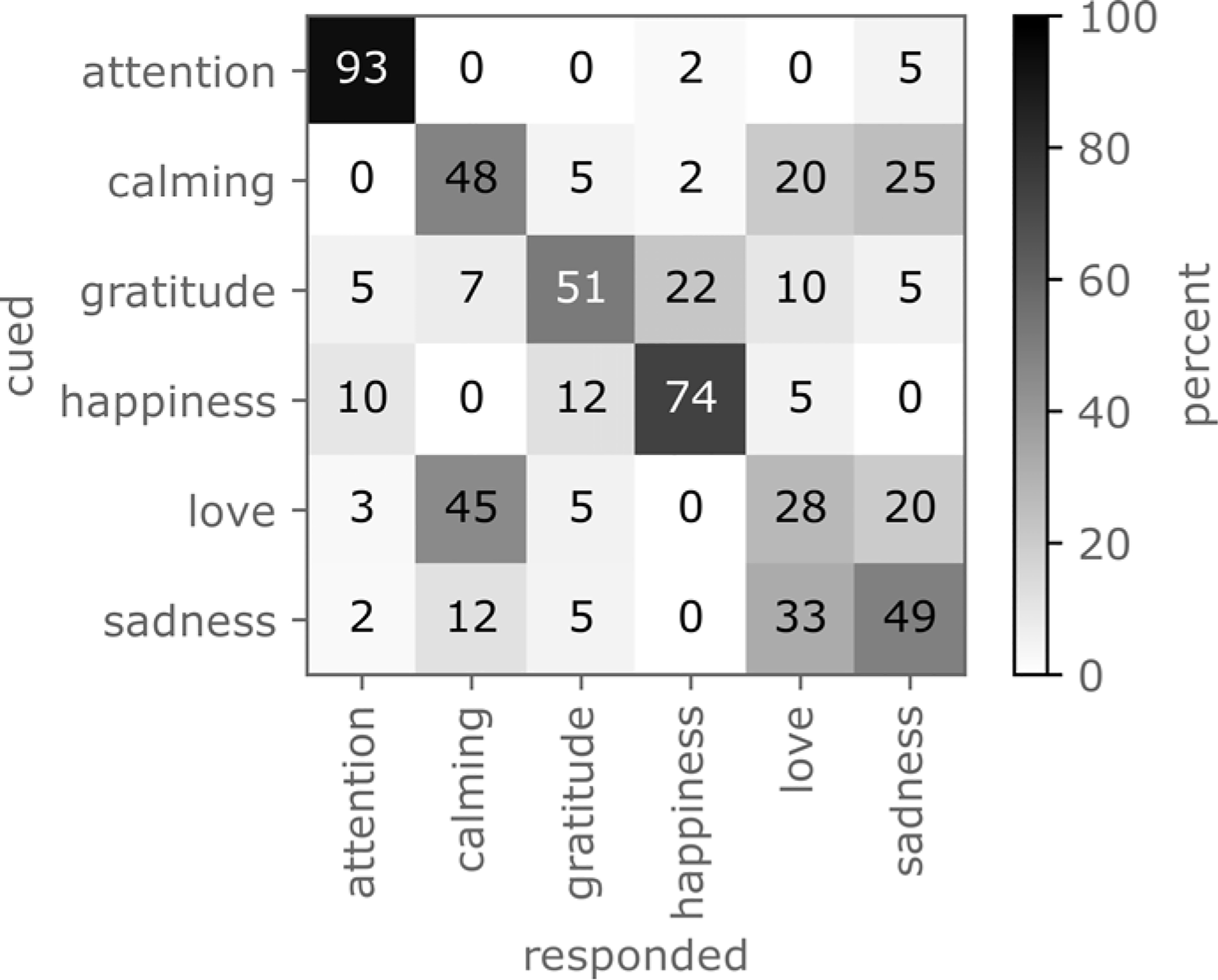

We also examined how receivers perceived the gestures (Figure 7). Participants were especially accurate in recognizing “attention” and “happiness” (93% and 74% correct, respectively). The worst performance was in recognizing “love” (28% correct), which was most often misrecognized as “calming” (45% of responses). Recognition accuracy across all six gestures was 57%.
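The recognition rates and the confusion matrix reduce to counts over (cued word, response) pairs, with rows indexed by the cued word so that each row’s diagonal entry divided by its total gives that word’s recognition rate. A minimal sketch follows; the function names are hypothetical, and the labels used to exercise it would be toy values, not study data.

```python
import numpy as np

WORDS = ["attention", "happiness", "calming", "love", "gratitude", "sadness"]


def confusion_matrix(cued, responded, labels=WORDS):
    """Counts with rows = cued word and columns = receiver's response."""
    index = {word: i for i, word in enumerate(labels)}
    m = np.zeros((len(labels), len(labels)), dtype=int)
    for c, r in zip(cued, responded):
        m[index[c], index[r]] += 1
    return m


def recognition_rates(m):
    """Per-word fraction correct (NaN for words never cued) and overall accuracy."""
    totals = m.sum(axis=1)
    per_word = np.divide(m.diagonal(), totals, out=np.full(len(totals), np.nan), where=totals > 0)
    overall = m.trace() / m.sum()
    return per_word, float(overall)
```

Off-diagonal entries of a row, divided by the row total, give confusion rates such as the proportion of “love” trials answered as “calming.”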

Fig 7. Human gesture recognition accuracy.

The confusion matrix from the human-subjects behavioral experiments. Overall classification accuracy was 57%.

IV. Discussion

We frequently communicate emotion to others through touch. Rich spatio-temporal details underlie how a toucher’s fingers and palm make contact with a receiver’s forearm. Prior efforts have mostly used qualitative, human visual observation to distinguish an emotion by certain gestures tied to one’s hand contact, velocity and position. The work herein describes an automated approach to quantitatively extracting the essential features of the gestures that convey an emotion’s meaning. We developed six quantitative contact characteristics capable of describing and differentiating the gestures used to communicate word cues that varied in emotional content. Furthermore, clustering analysis revealed that there are typically 2–5 expression strategies per gesture, and that some lead to more successful communication of an emotion. Abstracting emotive touch gestures into quantitative contact characteristics, or primitives, may help in specifying design requirements to be implemented with haptic actuators.

Among our sample of ten participants, we found that certain expression strategies, i.e., combinations of the six contact characteristics, seemed to better convey certain emotions. One interesting finding concerned expressions of calming, love, and sadness. These gestures tended to be performed using strategies that are very similar to each other with respect to our six contact characteristics (see Figure 6, calming 1, love 1, and sadness 1). When touchers used those strategies, the receiver’s recognition dropped markedly, as one might expect. However, when touchers used alternate expression strategies that differed more from one another, recognition accuracy was much greater (see Figure 6, calming 2, love 2, and sadness 2). This suggests that our quantitative analysis captures features similar to those the receivers use in their judgments, or at least that we have avoided capturing discriminative features to which the receivers are insensitive.

Tied to this are questions of whether the gestures used are innate or learned on the fly to solve the particular task of this study, and whether one’s ethnic or cultural background and norms influence one’s gestures. Such questions could be the topic of further study. A larger study is also needed to confirm the trends found herein, such as the uniqueness of the six contact characteristics, as our focus was to introduce a new approach to automating the tracking of features. Indeed, while the six characteristics are promising and tied to prior research, their number may need to be reduced or expanded. For one, the time allotted to perform a gesture was held constant but might be an important variable.

Another next step is to determine the precise ranges and timescales for each of the six contact characteristics. For instance, perhaps contact area must be 1.0 cm² and not 1.5 cm², velocity must be 3 cm/s, and the delay between subsequent touches must be 100 ms rather than 300 ms. With the setup described herein, it is possible to investigate such questions. Some of these ranges may relate to receptive field size and density organization, integration of mechanosensitive afferent responses, and other perceptual mechanisms.

At a deeper level, deciphering the specific input-output relationships that tie contact on the forearm to populations of neural afferents in the skin (and further on to perception) is critical to our understanding of how these emotions are encoded [17]. The engagement of the CT afferents is particularly interesting, as these are associated with pleasant or hedonic touch [11] – in fact, we do note similarities in the range of tangential velocity of our gestures to the maximal activation range of these afferents (1–10 cm/s).

More broadly, a better understanding of these input-output relationships can greatly assist in generating design requirements for tactile communication devices and sensory prosthetics. Ideal devices should evoke the same behavioral responses as real human touch, although a device that falls short of this ideal may still be successful if it engages physiological systems sufficiently well. Some groups have developed devices capable of creating stroking sensations on the arm [9], [10], which could be used for this purpose. Measurements from our system should serve as a basis for the design specifications of these devices, providing critical information such as the contact areas and indentation velocities that need to be replicated.

Finally, in addition to machine-to-human touch, our results also have implications in facilitating human-to-robot interactions. Prior efforts have worked on training robots (typically humanoid or zoomorphic) with sensor arrays to recognize different kinds of social touch gestures [8], [18], [19]. Our study complements these efforts by first uncovering quantitative data for naturalistic human-to-human touch, among people with established relationships. Via our tracking system we can obtain metrics that are not readily available from pressure sensor arrays, such as the number of fingers making contact and 3-D positioning of the hand and contact.

Acknowledgments

*Research supported by Facebook, Inc.

Contributor Information

Steven C. Hauser, Systems Engineering, Mechanical Engineering and Biomedical Engineering at the University of Virginia, Charlottesville, VA, USA.

Sarah McIntyre, Center for Social and Affective Neuroscience (CSAN), Linköping University, Sweden.

Ali Israr, Facebook Reality Labs, Redmond, WA, USA.

Håkan Olausson, Center for Social and Affective Neuroscience (CSAN), Linköping University, Sweden.

Gregory J. Gerling, Systems Engineering, Mechanical Engineering and Biomedical Engineering at the University of Virginia, Charlottesville, VA, USA.

References

- [1] Dunbar RI, “The social role of touch in humans and primates: behavioural function and neurobiological mechanisms,” Neuroscience & Biobehavioral Reviews, vol. 34, no. 2, pp. 260–268, 2010.

- [2] McGlone F, Wessberg J, and Olausson H, “Discriminative and affective touch: sensing and feeling,” Neuron, vol. 82, no. 4, pp. 737–755, 2014.

- [3] Olausson H et al., “Unmyelinated tactile afferents signal touch and project to insular cortex,” Nature Neuroscience, vol. 5, no. 9, p. 900, 2002.

- [4] Morrison I et al., “Reduced C-afferent fibre density affects perceived pleasantness and empathy for touch,” Brain, vol. 134, no. 4, pp. 1116–1126, 2011.

- [5] Gazzola V, Spezio ML, Etzel JA, Castelli F, Adolphs R, and Keysers C, “Primary somatosensory cortex discriminates affective significance in social touch,” Proceedings of the National Academy of Sciences, vol. 109, no. 25, pp. E1657–E1666, 2012.

- [6] Hertenstein MJ, Keltner D, App B, Bulleit BA, and Jaskolka AR, “Touch communicates distinct emotions,” Emotion, vol. 6, no. 3, p. 528, 2006.

- [7] Hertenstein MJ, Holmes R, McCullough M, and Keltner D, “The communication of emotion via touch,” Emotion, vol. 9, no. 4, p. 566, 2009.

- [8] Jung MM, Cang XL, Poel M, and MacLean KE, “Touch challenge’15: Recognizing social touch gestures,” presented at the Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, 2015, pp. 387–390.

- [9] Israr A and Abnousi F, “Towards Pleasant Touch: Vibrotactile Grids for Social Touch Interactions,” presented at the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, 2018, p. LBW131.

- [10] Culbertson H, Nunez CM, Israr A, Lau F, Abnousi F, and Okamura AM, “A social haptic device to create continuous lateral motion using sequential normal indentation,” presented at the 2018 IEEE Haptics Symposium (HAPTICS), 2018, pp. 32–39.

- [11] Löken LS, Wessberg J, McGlone F, and Olausson H, “Coding of pleasant touch by unmyelinated afferents in humans,” Nature Neuroscience, vol. 12, no. 5, p. 547, 2009.

- [12] Edin BB, Essick GK, Trulsson M, and Olsson KA, “Receptor encoding of moving tactile stimuli in humans. I. Temporal pattern of discharge of individual low-threshold mechanoreceptors,” Journal of Neuroscience, vol. 15, no. 1, pp. 830–847, 1995.

- [13] Ackerley R, Wasling HB, Liljencrantz J, Olausson H, Johnson RD, and Wessberg J, “Human C-tactile afferents are tuned to the temperature of a skin-stroking caress,” Journal of Neuroscience, vol. 34, no. 8, pp. 2879–2883, 2014.

- [14] Johnson KO, Yoshioka T, and Vega-Bermudez F, “Tactile functions of mechanoreceptive afferents innervating the hand,” Journal of Clinical Neurophysiology, vol. 17, no. 6, pp. 539–558, 2000.

- [15] Hauser SC and Gerling GJ, “Force-rate cues reduce object deformation necessary to discriminate compliances harder than the skin,” IEEE Transactions on Haptics, vol. 11, no. 2, pp. 232–240, 2018.

- [16] Guna J, Jakus G, Pogačnik M, Tomažič S, and Sodnik J, “An analysis of the precision and reliability of the leap motion sensor and its suitability for static and dynamic tracking,” Sensors, vol. 14, no. 2, pp. 3702–3720, 2014.

- [17] Hauser SC, Nagi SS, McIntyre S, Israr A, Olausson H, and Gerling GJ, “From Human-to-Human Touch to Peripheral Nerve Responses,” presented at the World Haptics Conference 2019, 2019, in press.

- [18] Cooney MD, Nishio S, and Ishiguro H, “Recognizing affection for a touch-based interaction with a humanoid robot,” presented at the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012, pp. 1420–1427.

- [19] Altun K and MacLean KE, “Recognizing affect in human touch of a robot,” Pattern Recognition Letters, vol. 66, pp. 31–40, 2015.