Abstract

The study outlines a model for how the COVID‐19 pandemic has uniquely exacerbated the propagation of conspiracy beliefs and subsequent harmful behaviors. The pandemic has led to widespread disruption of cognitive and social structures. As people face these disruptions, they turn online seeking alternative cognitive and social structures. Once they are there, social media radicalizes their beliefs, increasing the contagion (rapid spread) and stickiness (resistance to change) of conspiracy theories. As conspiracy theories are reinforced in online communities, social norms develop that translate conspiracy beliefs into real‐world action. These real‐world exchanges are then posted back on social media, where they are further reinforced and amplified, and the cycle continues. In the broader population, this process draws attention to conspiracy theories and those who confidently espouse them. This attention can lead people to perceive conspiracy beliefs as less fringe and more popular than they are, potentially normalizing such beliefs for the mainstream. We conclude by considering interventions and future research to address this seemingly intractable problem.

1. INTRODUCTION

Conspiracy theories about COVID‐19 pose a public health risk. For example, they trigger suspicion about well‐established scientific recommendations (Prichard & Christman, 2020; Romer & Jamieson, 2020), hamper response efforts to the pandemic (Buranyi, 2020), and even lead to the burning of 5G cellphone towers (Heilweil, 2020). Because of their powerful influence in shaping both our narratives about and responses to the pandemic, these conspiracy beliefs receive a great deal of public attention. A frequent contention in these discussions is that the internet—and social media in particular—contributes to the apparent prevalence of these problematic beliefs (Bomey, 2020; Easton, 2020; Lee, 2020). For example, US President Joe Biden recently asserted that, by allowing misinformation and conspiracy theories to proliferate, social media platforms were reducing vaccination rates and “killing people” (Cathey, 2021). Similarly, in a recent study linking social media use to the rate of COVID‐19’s spread, the authors speculated that one driver of the effect could be social media's tendency to prop up conspiracy theories about the disease (Kong et al., 2021). In this study, we assess claims such as these by examining the rapidly emerging literature around COVID‐19 conspiracy theories.

Prior to the COVID‐19 pandemic, researchers examined the key antecedents that lead to conspiracy theories’ spread (i.e., the rate at which conspiracy theories are communicated from person to person; Franks et al., 2013) and stickiness (i.e., the extent to which belief in conspiracy theories “takes root” and becomes difficult to change; Jolley & Douglas, 2017). Whereas some work argues that the internet does not affect the propagation of conspiracy theories (Uscinski & Parent, 2014) or can even impede it (Clarke, 2007; Uscinski et al., 2018), other work suggests that aspects of the internet critically enabled conspiracy theories to spread and stick (Stano, 2020). We join the latter group and conclude that, particularly in the context of the COVID‐19 pandemic, social media (i.e., internet spaces where people share information, ideas, and personal messages and form communities; Merriam‐Webster, 2021) has played a central role in the spread and stickiness of conspiracy beliefs.

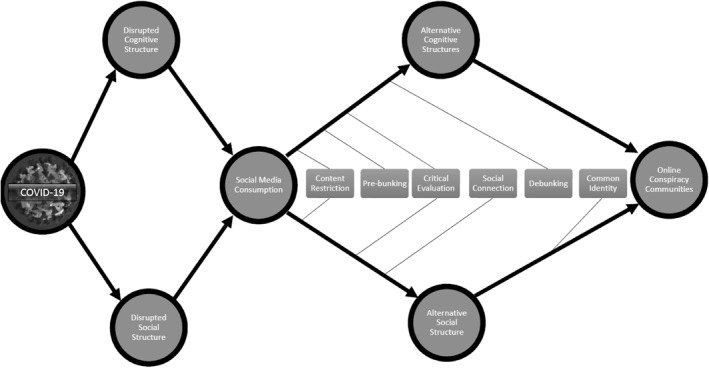

We offer a theoretical framework (see Figure 1) that outlines how the pandemic affects social media consumption, and how social media contributes to COVID‐19 conspiracy theories and their negative effects. We first suggest that the effects of the pandemic (and of the attempts to contain it) on our daily lives fray our cognitive structures (i.e., our understanding of the world and of how to act agentically upon it) and our social structures (i.e., our means of maintaining social bonds and connections with others), and that this fraying is part of the reason the internet has become so central in our lives during the pandemic. Next, we review evidence that features of the alternative cognitive structures that social media provides contributed to the spread of COVID‐19 conspiracy theories. We then discuss how characteristics of the alternative social structures offered by social media help spread conspiracy theories and make them particularly sticky and resistant to disconfirming information. We go on to explore how these alternative social and cognitive structures translate into online communities with real‐world implications. Finally, we propose interventions and future research that might help address these pernicious online dynamics.

FIGURE 1.

Theoretical framework for explaining how social media contributes to COVID‐19 conspiracy theories and their negative effects

2. CONSPIRACY THEORIES AND THE INTERNET

Conspiracy theories are explanations for events that posit that powerful actors are working together in secret to achieve self‐serving or malicious goals (Bale, 2007; Kramer & Gavrieli, 2005; Zonis & Joseph, 1994). While powerful actors certainly perpetrate actual conspiracies, our interest lies in the over‐perception of conspiracy‐based explanations. Such theories are by no means new; however, the way the internet influences their spread is a rapidly evolving question. This question is all the more relevant during the COVID‐19 pandemic, as social distancing measures force individuals out of public spaces and the internet becomes more central to how they acquire information about and respond to the pandemic (Koeze & Popper, 2020; Naeem, 2020; Samet, 2020).

There is a great deal of debate about the role of the internet in the spread of conspiracy theories. On one hand, scholars argue that the critical atmosphere of the internet impedes the development of conspiracy theories because disagreement and disconfirming evidence are easily available (Clarke, 2007). Others point to the lack of evidence that the internet increases their spread beyond people already interested in them (Uscinski et al., 2018). Taken together, these scholars suggest there is little evidence that the internet has drastically altered the landscape for conspiracy theories.

Conversely, other scholars argue the internet provides fertile ground for conspiracy theories to spread. They assert that social media's pervasiveness lowers barriers to access, exposing potential believers directly to conspiracy theories via sites like Facebook, Twitter, Reddit, and YouTube (Douglas, Ang, et al., 2017; Stano, 2020). We engage in this debate, proposing a framework to describe how the COVID‐19 pandemic and attempts to contain it create nearly ideal conditions for social media to uniquely contribute to the spread and stickiness of conspiracy theories.

3. THE COVID‐19 PANDEMIC AND INTERNET CONSUMPTION

In general, the pandemic increased online activity; general internet activity rose 25% in the days after lockdown (Rizzo & Click, 2020). More specifically, global social media use grew by 13% in 2020, adding 330 million new users, for a total of 4.7 billion users as of April 2021 (Kemp, 2021a; Kemp, 2021b). We argue that people consumed more social media in part because of pandemic‐related disruptions to their cognitive and social structures. Although these disruptions were likely not the only reason for the increase, we believe they were a significant contributing factor.

3.1. Disrupted cognitive structures

People have a fundamental desire to feel that they understand the world and have agency in it (Douglas, Sutton, et al., 2017; Lewin, 1938; Mitchell, 1974). However, as measures intended to impede the spread of COVID‐19 were imposed, individuals experienced less control over their activities (e.g., via lockdowns, business closures, and mask mandates) and their health outcomes (e.g., limited ability to prevent or treat illness). This loss of control had implications for many aspects central to one’s life, including health (e.g., “Will I get the disease?”), career (e.g., “Will I have a job?”), loved ones (e.g., “What about my family's health, and when will I see them next?”), and government response (e.g., “Will it solve the problem or make it worse?”), to name just a few concerns (Douglas, 2021; Wang et al., 2020). Information was also limited, driving uncertainty about how the disease spread, what its effects were, how political entities would apply and enforce social measures intended to limit its spread, and what the ultimate impact of the disease would be on individuals' economic and social worlds. Many were in an untenable holding pattern, perhaps hoping for the “old” normal to return while not knowing what would come next in this “new” normal (Brooks et al., 2020). In other words, the pandemic disrupted people's sense that the world was predictable and navigable by making their understanding of their environment and of how to act in it (i.e., their cognitive structures) appear inaccurate and outdated.

The loss of control and uncertainty caused by the pandemic led many people to increase their consumption of social media. As the COVID‐19 pandemic eroded people's sense of control, they turned to social media as a place where they could still exert some control over their environment and how they interacted with it (Brailovskaia & Margraf, 2021). In addition, when individuals experience greater uncertainty, they tend to seek diagnostic information (Weary & Jacobson, 1997), and during the pandemic, many people reported turning to social media to seek information (48%) or to share experiences and opinions about COVID‐19 (41%; Ritter, 2020). Indeed, between February 20 and May 6, 2020, approximately 66% of Instagram users used COVID‐19 hashtags to discuss information (Rovetta & Bhagavathula, 2020).

3.2. Disrupted social structures

The COVID‐19 pandemic also disrupted people's social structures, by which we mean the patterns of interactions with others through which we form and maintain social bonds. For example, many employees were exiled from their normal office environments. Gatherings were restricted, precluding even the basic milestones that organize social life (e.g., weddings, funerals, and holidays). Limited largely to communicating with their immediate core groups, people sorely missed the weak ties that had provided diversity and broader informational content (Sandstrom & Whillans, 2020). In other words, the pandemic and associated social distancing measures disrupted the normal social interactions we rely on to fulfill our basic needs for affiliation and belonging (Young et al., 2021). To fulfill these needs, many turned to online tools that help maintain relationships with others and ameliorate pandemic‐induced feelings of loneliness (Cauberghe et al., 2021). Given that feeling isolated has been linked with increased social media use (Primack et al., 2017a, 2017b), it should be no surprise that social media consumption has risen since the onset of social distancing measures (Fischer, 2020; Samet, 2020). In addition, many people reported using social media to help maintain social connections (74%; Ritter, 2020) and to feel less lonely (57%; Trifonova, 2020) during the pandemic.

In sum, with their cognitive and social structures disrupted, people looked online for alternative structures. However, as we will discuss next, the price of entry into these alternative structures may be immersion in a world of conspiracy theories.

4. SEEKING STRUCTURE AND FINDING CONSPIRACY THEORIES ON SOCIAL MEDIA

As people search online for alternative structures, features of the social media environment can increase the likelihood that the structures people find will promote conspiracy beliefs. Here we argue that certain aspects of social media tend to increase the spread and stickiness of conspiracy theories among those seeking structure.

4.1. Alternative cognitive structure

Lacking the dependable solidity of their usual cognitive structures, people will seek out alternative structures (Landau et al., 2015; Whitson & Galinsky, 2008). Although these alternative structures can take many different forms, conspiracy theories are a common instantiation (Whitson et al., 2019). Conspiracy theories are attractive because they offer non‐specific epistemic structure. In other words, they provide simple, clear, and consistent ways to interpret the key phenomena or events in one's environment (Landau et al., 2015). However, adopting conspiracy theories sometimes means sacrificing a more accurate understanding of the world (Douglas, Sutton, et al., 2017; Douglas et al., 2019). Though conspiracy theories may be briefly epistemically satisfying, like junk food they do not resolve the underlying hunger, because they may not accurately reflect the real world. Indeed, in a cross‐lagged study, Kofta and colleagues (2020) found that endorsement of a conspiracy theory at Time 1 ironically predicted an increased feeling of lack of control at Time 2. Nevertheless, people are attracted to conspiracy theories as a potential replacement for the cognitive structure they have lost.

In the context of the COVID‐19 pandemic, the sudden lack of control and increased uncertainty may have made people particularly vulnerable to conspiracy theories as a form of alternative structure (Douglas, 2021). While there is some evidence that uncertainty may lead to increased belief in conspiracies (van Prooijen & Jostmann, 2013; Whitson et al., 2015), there is a much broader body of empirical support for the idea that lacking control drives investment in conspiracy beliefs (Kofta et al., 2020; Landau et al., 2015; Whitson & Galinsky, 2008). In other words, the very same lack of control, and to a lesser extent uncertainty, that drove people onto social media also made them more open to conspiracy theories. We believe that this vulnerability to conspiracy beliefs, combined with key characteristics of social media, helped to promote the rapid spread of COVID‐19 conspiracy theories. In particular, we argue that the prevalence of unreliable posts, bots, and algorithms increased exposure to conspiratorial misinformation among a vulnerable population of users. By readily exposing those in need of cognitive structure to conspiracy theories, social media helped spread these theories to more people.

4.1.1. Unreliable content

In recent years, social media has played a growing role in the spread of news content, both reliable (i.e., vetted and accurate information) and unreliable (i.e., questionable or inaccurate information; Cinelli, Quattrociocchi, et al., 2020). For example, on sites such as Facebook and Reddit, unreliable posts (such as the conspiracy theory that pharmaceutical companies created COVID‐19 as a new source of profit; Romer & Jamieson, 2020) spread as quickly as reliably sourced COVID‐related posts (Cinelli, Quattrociocchi, et al., 2020; Papakyriakopoulos et al., 2020). This suggests that on social media, unlike in more traditional outlets, the spread of information is less tied to its accuracy. On Twitter, research has shown that false rumors not only reach people about six times faster than the truth but also penetrate further into the social network: while the top 1% of false posts diffuse to as many as 100,000 users, the truth rarely reaches more than 1,000 (Vosoughi et al., 2018).

4.1.2. Bots

Bots are automated accounts that post only certain types of information, often in coordination with other bots, to boost the visibility of certain hashtags, news sources, or storylines (Ferrara, 2020). This form of interactive technology is another source of false information on social media. While the identity of those who control bot networks is rarely public, several networks have been traced back to nation‐state actors (Prier, 2017). These bot networks are a distinctive feature of the social media landscape: they can create the illusion of popularity and mass endorsement, or exaggerate the importance of topics, in a way that other information‐sharing contexts do not allow. A sample of over 43 million English‐language tweets about COVID‐19 revealed that bots were more present in some areas of discourse than in others; namely, bots tended to tweet about political conspiracies, whereas humans tended to tweet about public health concerns (Ferrara, 2020). These bots not only help spread conspiracy theories but also create a false impression that the theories are more widely endorsed than they are.

4.1.3. Algorithms

There is also emerging evidence that design decisions meant to increase user engagement on social media may have the unintended effect of promoting the spread of misinformation, including conspiracy theories. Prior work has shown that the algorithms social media companies use to recommend content can lead people toward misinformation (Alfano et al., 2020; Elkin et al., 2020; Faddoul et al., 2020; Tang et al., 2021). While some people may actively seek out conspiracy theories (Uscinski et al., 2018), algorithms expose these theories to a broader audience. Because mere exposure to conspiracy theories tends to increase belief in them, algorithms can make people who had no initial inclination toward those theories vulnerable to them (Jolley & Douglas, 2014; Jolley et al., 2020; Kim & Cao, 2016; van der Linden, 2015).

In sum, social media has increased the spread of conspiracy theories through a variety of means. The spread of these conspiratorial alternative cognitive structures is exacerbated on social media by the freedom with which unreliable information travels, by bots that inflate impressions of the theories' popularity, and by algorithms that put them in front of an unwitting and vulnerable public.

4.2. Alternative social structure

As the COVID‐19 pandemic deprives people of their normal office environments, traditional life milestones, and much of the rich tapestry of social contact they are used to, they turn to social media to try to fulfill their needs for affiliation and belonging. Social media fulfills these needs via alternative social structures that have some distinguishing features that were not present in the social structures they replace. We propose that certain features of the alternative social structures that social media provides—namely the presence of social media influencers, information echo chambers, and constant social reinforcement—tend to make conspiracy theories spread further and become stickier.

4.2.1. Social media influencers

So‐called “influencers” on social media (i.e., high‐status social media users with many followers; Enke & Borchers, 2019) may contribute to the spread of false beliefs. For example, a handful of conservative politicians and far‐right political activists used Twitter to spread the conspiracy theory that the pandemic itself was a hoax (#FilmYourHospital; Gruzd & Mai, 2020). People follow these influencers for a variety of reasons unrelated to conspiracy theories and often feel as if they are trusted friends (Kirkpatrick, 2016; Woods, 2016). This is troubling because people are less likely to critically evaluate information from people they trust and are more prone to believe misinformation when they do not evaluate it deeply (Kirkpatrick, 2016; Pennycook & Rand, 2019).

As a result, influencers who engage with conspiracy theories are another means by which these theories spread to a larger audience who would not have otherwise sought them out. Furthermore, unlike traditional journalistic outlets, which have more formalized accountability to federal laws and ethics standards, social media gives high‐status individuals direct, unmediated access to the general public and the ability to promote ideas largely unchallenged (Finkel et al., 2020).

Indeed, Brennen and colleagues (2020) found that high‐status individuals are key contributors to the spread of misinformation; while they produced only 20% of the misinformation in the study sample, this misinformation accounted for 69% of the observed social media engagement from followers. Another study found that after Donald Trump was removed from Twitter, election‐related misinformation on the site dropped by 73% (Dwoskin & Timberg, 2021). Finally, a study of 812,000 social media posts containing vaccine misinformation published between February 1 and March 16, 2021, found that 65% of those posts could be traced back to just 12 influencers (Center for Countering Digital Hate, 2021).

4.2.2. Information echo chambers

Some aspects of social media make it harder for self‐correcting information to enter the system. Just as with the influencers who reside on them, social media platforms provide direct access to an unprecedented amount of unfiltered information, including a great deal of misinformation (Cinelli, Quattrociocchi, et al., 2020; Zarocostas, 2020).

The echo chamber. Left to sort through the overwhelming amount of content, social media users, guided by their cognitive biases and pre‐existing beliefs (Bessi et al., 2015; Cinelli, Brugnoli, et al., 2020; Cinelli et al., 2021), tend to seek out information that is consistent with their existing views and ignore or reject contrary information (Brugnoli et al., 2019). In other words, people become trapped in information echo chambers, where individuals with similar interests find each other and form increasingly homogeneous groups, driving amplification of particular beliefs (Choi et al., 2020; Cinelli et al., 2021). Embedded in these groups, they find people with more extreme views dominating the conversation (Barberá & Rivero, 2014; Boutyline & Willer, 2017). Two studies of Facebook users’ information consumption found that those who circulate conspiracy content also tend to ignore science content, and vice versa (Del Vicario et al., 2016). Moreover, users in conspiracy communities tend to be more active within their community and interact less with information outside of it (Bessi et al., 2015). Thus, information echo chambers form online around conspiracy beliefs, making it more difficult for information that counters these beliefs to reach people once they have adopted conspiracy theories.

Entrance into the echo chamber. Evidence suggests that those joining COVID‐19 conspiracy echo chambers varied in the extent to which they had endorsed conspiracy beliefs before entry.

On one hand, analysis of Reddit communities showed that the founders of COVID‐19 conspiracy theory subreddits were previously involved in other conspiracy theory communities (Zhang et al., 2021). Similarly, an analysis of Twitter data found that many of those sharing COVID‐19 misinformation had previously expressed anti‐vaccine sentiments (Memon & Carley, 2020). This suggests that people who were already involved with other conspiracy theories were attracted to COVID‐19 conspiracies.

On the other hand, previous endorsement of conspiracy theories is not a prerequisite. The eventual popularity of COVID‐19 conspiracy theories indicates that belief in them spilled over into the mainstream, attracting people who were not previously a part of other conspiracy theory communities. Studies have suggested that as much as a third of the population has endorsed at least one COVID‐19‐related conspiracy theory, and that one in 10 people watched at least part of the “Plandemic” conspiracy theory video (Allington et al., 2020; Kuhn et al., 2021; Mitchell et al., 2020; Romer & Jamieson, 2020). These percentages may be even higher among Republicans, people who watched conservative media, and people who relied on Donald Trump for COVID‐19 information, suggesting that COVID‐19 conspiracy theories gained traction in groups other than those dedicated to conspiracy theories, in part due to these groups' pre‐existing biases and beliefs about the world (Annenberg Public Policy Center of the University of Pennsylvania, 2021; Mitchell et al., 2020; Romer & Jamieson, 2020). This is consistent with an earlier analysis of Reddit posts, which found that individuals who go on to post in conspiracy theory communities tend to be more active in certain communities unrelated to conspiracy theories, such as those discussing politics, before joining the conspiracy theory communities (Klein et al., 2019). In other words, conspiracy theories can spread in communities (and their associated echo chambers) formed around non‐conspiracy interests and in this way reach individuals who have no preexisting interest in conspiracy theories.

5. SOCIAL REINFORCEMENT

On social media, people are rewarded for producing messages that align with their communities’ views. This is gamified in a reward system that allocates shared posts, “likes,” and followers to users who produce or promote such content, thus increasing the self‐esteem and happiness of those users (Marengo et al., 2021; Stillman & Baumeister, 2009). This occurs even if the content being produced contains misinformation. One recent study found that this positive social reinforcement may be withdrawn from Twitter users who do not share the same fake news stories as others in their community (Lawson et al., 2021). These effects can create a feedback loop in which constant social reinforcement increases engagement with like‐minded people, ultimately reinforcing the social identities associated with shared beliefs. In other words, social media encourages the formation of “identity bubbles,” online communities that not only act as echo chambers but also reinforce shared identities and encourage homophily (Kaakinen et al., 2020). In addition, these online bubbles do not simply reinforce existing beliefs; rather, they tend to encourage the adoption of even more extreme beliefs (Ohme, 2021). As might be expected, analysis of large‐scale social media data has shown that such bubbles often form around conspiracy beliefs (Bessi et al., 2016). This is problematic because once inside a conspiratorial identity bubble, people experience constant reinforcement of their conspiracy beliefs, are encouraged to adopt more extreme beliefs, and are likely to associate core aspects of their self‐concept with those beliefs. The constant social reinforcement and entanglement of conspiracy beliefs with social identities are likely to make those beliefs extremely difficult to dislodge.

In sum, social media provides alternative social structures which play on individual needs for belonging and affiliation to draw attention to social influencers and to ensnare believers in echo chambers that positively reinforce endorsement of their beliefs and entangle those beliefs with their social identities, thereby increasing the stickiness of conspiracy theories.

6. ONLINE CONSPIRACY COMMUNITIES

Correlational evidence suggests that conspiracy beliefs are associated with a weaker social network (Freeman & Bentall, 2017) and that publicly endorsing conspiracy theories may lead to social exclusion (Lantian et al., 2018). Anecdotal evidence also suggests that believers who become invested in conspiracy theories can find their relationships with friends and family strained or even severed (Lord & Naik, 2020; Roose, 2020a). Conversely, there is also evidence that conspiracy believers tend to experience and even intentionally build a sense of community with other believers (Franks et al., 2017; Grant et al., 2015). Thus, adopting conspiracy beliefs can reshape one's social networks, fraying existing in‐person social ties while strengthening virtual networks through message boards and online communities. Once embedded within these virtual networks of fellow believers and increasingly cut off from non‐believers, individuals will tend to find their conspiracy beliefs are reinforced and, as discussed above, may even become a central feature of their social identities. Below we discuss some of the characteristics of the online communities that formed around COVID‐19 conspiracies.

A key ingredient of online communities focused on conspiracy theories is the nature of conspiracy theories themselves. By positing a cadre of shadowy actors working in concert with ill intent, conspiracy theories imply an explicit threat that invokes an intense intergroup process (Shahsavari et al., 2020). These narratives identify a core group (often those who believe the conspiracy theory), a threat to that group, and strategies intended to meet the threat (Drinkwater et al., 2021; Shahsavari et al., 2020). Conspiracy theories often reflect a “sense of anomie (normlessness) and societal estrangement” that enhances the sense of an uncaring world in which only those in the group care (Drinkwater et al., 2021, p. 2). In an attempt to unite against external threats, people strengthen their in‐group norms and exhibit outgroup derogation (Harambam, 2020; Knight, 2001). We provide a few brief examples of these processes as they pertain to online communication and behavior during the pandemic.

6.1. Public displays of identity

Social media profiles reveal how conspiracy beliefs become a part of an individual's social identity. For instance, QAnon conspiracy believers take oaths to become “digital soldiers” and frequently repeat mantras such as “where we go one, we go all” in their profiles (Cohen, 2020). During the COVID‐19 pandemic, wearing (or refusing to wear) a mask became a similarly important identity signal (Boykin et al., 2021; Powdthavee et al., 2021). Like dress or costumes, these public displays signal membership in the community both to insiders and to the outside world.

6.2. Insider language

Conspiracy theories inspire linguistic innovations in the group. For instance, the word “plandemic” gained popularity as a hashtag and shorthand for a variety of COVID‐19 conspiracies before the release of the viral video of the same name (Kearney et al., 2020). Such language highlights the special knowledge that, in believers' eyes, separates them from the uninformed. The easily searchable nature of social media means that these groups are easy to find for newcomers and for those seeking a receptive audience for related conspiracy theories.

6.3. Outgroup derogation

Some of this language also involved stigmatizing outgroups. Terms like “China flu,” “Kung flu,” and “Wuhan virus” became markers of group identity (Macleod, 2020; Restuccia, 2020) that simultaneously stigmatized a foreign outgroup (China) and signaled membership to others in the same ingroup. After popular media outlets such as CNN and the New York Times disavowed terms like “Wuhan virus,” which they had previously used (Kaur, 2020), usage of these terms began to demarcate a stance against the dominant culture and served to distinguish COVID‐19 conspiracy communities from the general public.

7. FROM THE VIRTUAL WORLD TO REAL WORLD (AND BACK AGAIN)

A critical moment comes when a conspiracy theory that has been festering primarily online is exposed to reality. Namely, the specific norms concerning appropriate belief and behavior that are established in the online community may spill into the “outside world.” These might include easily visible behaviors, such as refusing to wear a face mask or flouting mainstream norms and government rules about social distancing.

7.1. Effects of the real world on the virtual community

COVID‐19 conspiracy beliefs translate directly into reduced engagement in various health‐protective behaviors (Allington et al., 2020; Sternisko et al., 2020). Conspiracy beliefs reduce healthy behaviors and promote harmful practices (Tasnim et al., 2020), interfere with the dissemination of clear information from reliable sources (Wong et al., 2020), and compromise efforts to contain the virus and recover from it (Shaw et al., 2020), leaving conspiracy believers with worsened health outcomes (both mental and physical; Tasnim et al., 2020). For example, those who believe that pharmaceutical companies created COVID‐19 as a new source of profit or that the CDC has exaggerated the danger of the disease for political reasons are less likely to wear masks and to seek out a vaccine (Romer & Jamieson, 2020). Importantly, the content of the COVID‐19 conspiracy theory matters. When people believe more general pandemic conspiracy theories (e.g., that vague, shadowy groups stand to benefit from COVID‐19), they are more likely to endorse institutional control and surveillance of ethnic minorities associated with the pandemic; however, when they believe government‐focused conspiracy theories (e.g., that the government is using the COVID‐19 pandemic to remove freedoms from its citizens), they are less likely to wear masks or socially distance (Oleksy et al., 2021).

When people behave this way, they are not dispassionately testing hypotheses about reality; rather, they are motivated to seek confirmation of their theories (Kunda, 1990). Consequently, even feedback that on its face seems negative, such as being confronted in a store for not wearing a mask, can ultimately be positively reinforcing, as it provides both attention and confirmation that the confronted individual is having an impact on the social world around them. The people who have these experiences then catalog them online, bringing them back as grist for the mill of their online culture. The process repeats as the community interprets and reinforces these experiences and continues to push the line, updating its beliefs to fit the facts at hand and perhaps escalating to more extreme norms.

Even when individuals encounter truly negative feedback (e.g., actually contracting or dying of COVID‐19), the overarching online community often remains intact, because only some individuals experience the negative feedback. Even worse, since many conspiracy theories rest on a broader foundation of science denial and distrust (Lewandowsky, Gignac, et al., 2013; Lewandowsky, Oberauer, et al., 2013), disconfirming evidence about a single belief does not topple the entire edifice. This implies that factual disconfirmation of these beliefs is likely insufficient and that we must consider other levers in the battle against the pernicious effects of conspiracy beliefs.

7.2. Effects of the virtual community on the real world

When people are embedded in online conspiracy communities and grow accustomed to their customs, they may begin to lose touch with the norms of the wider society.

However, when people with these conspiracy beliefs turn outward from their online communities, whether by appearing in other online contexts or by taking their beliefs into the real world, they can affect others by raising questions about whether these conspiracy theories are fringe or in fact widely shared. Often individuals with conspiracy beliefs intentionally hide the true nature of their beliefs in order to appeal to a wider audience (Roose, 2020b). In addition, these individuals can sometimes gain media attention or acknowledgment from public figures for their unusual beliefs, making those beliefs appear more widespread than they are (Phillips, 2018; Woods & Hahner, 2018). In other words, as these extreme, confident, attention‐grabbing voices and behaviors gain attention and publicity, they risk inflating observers’ perceptions of how prevalent the beliefs are. What results is a form of pluralistic ignorance, or the idea that “no one believes, but everyone thinks that everyone believes” (Prentice & Miller, 1996). The more prevalent a conspiracy theory is seen to be, the more normalized it becomes (van den Broucke, 2020). Rather than reject these conspiracy theories outright, observers outside the community may become more open to evaluating them, at which point these theories can burst free from smaller reservoirs and spread more widely throughout the broader population. Equally concerning is that these same processes can make conspiracy theories seem more mainstream to people within online conspiracy communities, and may even lead them to believe that the public would support them taking action to stop the supposed conspirators. Such beliefs may have contributed to real‐world events such as the January 6th incursion into the United States Capitol (Barry et al., 2021).
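The visibility mechanism behind this pluralistic ignorance can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that an observer gauges a belief's prevalence from the share of visible posts or public appearances that express it, and that each believer is some hypothetical factor more visible than each non‐believer. The function name `visible_share` and all parameter values are our own inventions, not quantities drawn from the studies cited.

```python
# Hypothetical illustration of visibility-driven overestimation.
# Assumption (ours): observers infer prevalence from the share of what they
# see, and believers are `amplification` times more visible than non-believers.
# The numbers used below are invented for the example, not empirical estimates.


def visible_share(prevalence: float, amplification: float) -> float:
    """Share of observed posts/appearances that come from believers.

    prevalence    -- true fraction of the population holding the belief (0..1)
    amplification -- how many times more visible a believer is than a
                     non-believer (e.g., via reposts and media coverage)
    """
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    if amplification <= 0:
        raise ValueError("amplification must be positive")
    believers = prevalence * amplification  # weighted visibility of believers
    non_believers = 1.0 - prevalence        # non-believers have weight 1
    return believers / (believers + non_believers)


if __name__ == "__main__":
    true_rate = 0.05
    for k in (1, 5, 10, 20):
        seen = visible_share(true_rate, k)
        print(f"amplification {k:>2}: observers see {seen:.0%} believers "
              f"(true rate {true_rate:.0%})")
```

Under these invented numbers, a belief held by 5% of people would make up roughly a third of what observers see if believers were ten times as visible. The point is qualitative: in this toy model, the impression of prevalence tracks visibility rather than actual prevalence.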

8. COMBATTING THE CONSPIRACY‐PROMOTING EFFECTS OF SOCIAL MEDIA

Social media played a central role in the spread and stickiness of COVID‐19 conspiracy theories. The question, of course, is how to interrupt this cycle of ever more elaborate and entrenched false beliefs. There are several options, with varying degrees of empirical support, for reducing the negative influence of online conspiracy theories: content restriction (preventing exposure and breaking up echo chambers); pre‐bunking (attempting to inoculate people before they are exposed); critical consumption (encouraging cognitive engagement during exposure); and, if all else fails, debunking (trying to alter conspiracy beliefs already held and to address the problematic downstream attitudes and behavior directly). Two further approaches target the social roots of the problem: encouraging a common identity (breaking down intergroup demarcations) and fostering social connection (seeking to re‐establish the social connections lost or weakened during the pandemic).

8.1. Content restriction

Content restriction, a somewhat controversial approach, has the potential to address the more problematic aspects of the alternative cognitive and social structures that social media provides. It is predominantly used by large social media companies that can enforce sweeping control over their platforms. Social media companies such as Twitter, YouTube, and Facebook have all implemented policies to remove conspiracy theory content, accounts, or groups that have been linked to conspiracy theories and violence (Ortutay, 2020). For example, in an attempt to address “militarized social movements”, Facebook banned all QAnon conspiracy accounts from its platforms (Collins & Zadrozny, 2020), as well as all posts that distort or deny the Holocaust (O'Brien, 2020). Removing conspiracy content along with its most prominent purveyors can reduce people’s exposure to conspiracy theories and help slow their spread (Ahmed et al., 2020). In addition, banning conspiracy groups and hashtags may help break up echo chambers and dampen the constant social reinforcement they provide, preventing conspiracy beliefs from taking root and making believers more open to changing their views.

However, the social media landscape is constantly evolving and growing, providing conspiracy believers with new opportunities to find evidence to support their theories and ways around content restrictions. Thus, limiting postings and banning accounts and groups might slow the spread of the theories themselves, but it is unlikely to entirely eliminate exposure to them (Papakyriakopoulos et al., 2020). In addition, content restriction is controversial, with some asserting that targeting groups with unpopular opinions is unacceptable censorship, while others argue that social media companies have a moral responsibility to prevent damaging information from spreading on their platforms (Shields & Brody, 2020). Social media companies may be hesitant to wade into this controversy any more than necessary for fear of inviting political backlash and unwanted regulations.

8.2. Pre‐bunking

Another option is to inoculate people before they encounter a conspiracy theory, either via information or via mindset. One study found that participants who read about the effectiveness of vaccination before encountering an anti‐vaccination conspiracy theory showed greater vaccination intentions than those who read the same information afterward (Jolley & Douglas, 2017). Greater science literacy may also reduce the sharing of misleading or unverified COVID‐19 information on social media (Pennycook et al., 2020), suggesting that improvements in science education may equip people with better cognitive defenses when they encounter conspiracy theories online. There is also evidence that encouraging people to think about their level of resistance to persuasion is effective in reducing conspiracy beliefs (Bonetto et al., 2018). Similarly, when people are given a chance to play a game that highlights the manipulative persuasion tactics sometimes used to spread conspiracy theories, they later show reduced vulnerability to those same tactics (Roozenbeek & van der Linden, 2019). Taken together, these findings suggest that addressing conspiracy theories before they become beliefs may be an effective strategy for preventing the adoption of conspiratorial cognitive structures.

8.3. Critical consumption

Other interventions can occur while individuals are being exposed to a conspiracy theory. When people exposed to a conspiracy theory are encouraged to think critically about how convincing it is, they subsequently report weaker belief in it than those who are simply exposed to it (Einstein & Glick, 2013; Ståhl & van Prooijen, 2018). Research has found that the cognitive engagement of actually thinking about how convinced one was by a conspiracy theory unlocked greater critical thinking, whereas mere exposure allowed conspiracy beliefs to “slip in under the radar” (Einstein & Glick, 2013; see also Einstein & Glick, 2015; Salovich & Rapp, 2021). In addition, in a social media context, participants who were first asked to judge the accuracy of a neutral headline (i.e., one unrelated to misinformation) were subsequently less likely to share misleading or unverified information, regardless of whether it aligned with their political ideology (Pennycook et al., 2020). Thus, invoking cognitive reflection may both decrease the likelihood that exposure will lead to the adoption of conspiracy beliefs and reduce the sharing of conspiracy theories within online communities. However, simply labeling something a “conspiracy theory” did not make people find it any less believable (Wood, 2016), suggesting that labels or disclaimers may not be enough to invoke the necessary critical thought.

8.4. Debunking

Pre‐bunking and critical consumption target individuals either before or as they encounter a conspiracy theory. Findings concerning whether beliefs can be reduced after someone already endorses a conspiracy theory are more mixed. On one hand, exposing conspiracy believers to disconfirming facts has produced some hopeful findings. Rational counter‐arguments reduce conspiracy beliefs in some studies (Lyons et al., 2019; Orosz et al., 2016; Swami et al., 2013).

On the other hand, there is evidence that the challenge of dismantling conspiracy beliefs cannot be met with disconfirming facts alone (Bricker, 2013; Clarke, 2002), and that the group identity of conspiracy believers stands as a substantial roadblock to these interventions. A “backfire effect” (in which beliefs strengthen rather than weaken in response to correction) can occur when disconfirming evidence challenges beliefs entangled with one's political ideology, an effect that seems to intensify as commitment to the ideology increases (Nyhan & Reifler, 2010; Nyhan et al., 2014). Indeed, disconfirming evidence failed to reduce partisan conspiracy beliefs among both Democrats and Republicans (although, notably, only Republicans exhibited the backfire effect; Enders & Smallpage, 2019). This evidence suggests that group identity may be an important social barrier to addressing conspiracy theories.

In addition, for debunking information to reach individuals online and be effective, it must break into echo chambers and overcome the social reinforcement that occurs within these communities. Unfortunately, little is known about which interventions can do this successfully. Attempts by debunkers to use pro‐conspiracy hashtags to reach believers do not appear to be effective (Mahl et al., 2021). Some social media companies have begun to attach fact‐checking information to posts containing misinformation; however, these warnings may be ineffective against conspiracy beliefs that have already taken root and are linked to social identities (Drutman, 2020). The paucity of research on the social aspects of effective debunking interventions is concerning, because purely cognitive approaches are likely to be less effective if they do not address the social barriers to debunking.

8.5. Encouraging a common identity

One theme that emerges from our review is that conspiracy theories, and the communities that endorse them, can sharpen the boundaries between groups and intensify the in‐group/out‐group dynamics that naturally follow. One potential way to reduce these divisions is to encourage people to focus on shared superordinate social identities (Finkel et al., 2020; Gaertner et al., 2000). This is particularly relevant to the role social media influencers can play in combating conspiracy theories. Research has shown that “people become less divided after observing politicians treating opposing partisans warmly, and nonpartisan statements from leaders can reduce violence” (Finkel et al., 2020). Thus, an effective intervention may be to encourage key social influencers to emphasize common identities, the importance of nonpartisanship, and fundamental tolerance of other groups in order to improve the climate and culture around the collective identities that feed online conspiracy communities.

8.6. Social connection

Loneliness has intensified during the pandemic (Shah et al., 2019; Jeste et al., 2020; Luiggi‐Hernández & Rivera‐Amador, 2020). In July 2020, during the peak of many lockdowns, 83% of respondents to a global survey said they were using social media to help “cope with COVID‐19‐related lockdowns” (Kemp, 2020). Unfortunately, this can drive people to invest in alternative social structures with dangerous ramifications, particularly in terms of the spread and stickiness of conspiracy beliefs. However, the turn to online connections also provides a glimmer of hope that the same digital technology tools which may introduce people to conspiracy theories could also provide other forms of support.

For example, digital interconnectivity can improve access to mental health services, and the pandemic has increased interest in telehealth for both mental health therapy and medical appointments. Specifically, teletherapy may be one way to combat loneliness and other pandemic‐driven challenges (Luiggi‐Hernández & Rivera‐Amador, 2020). Access to skilled professionals may reduce the unmet need for social connection that makes parasocial relationships with influencers, entry into echo chambers, and the catnip of social approval once inside so appealing.

Innovations in online connectivity may also help people maintain pre‐pandemic relationships with friends and family. Digital tools such as Zoom, Skype, and other social connectivity apps which allow people to maintain existing social ties may prevent individuals from being swept into the enclosed spaces of online echo chambers.

9. FUTURE DIRECTIONS

While this study has emphasized the theoretical mechanisms by which social media has affected COVID‐19 conspiracy theories, a core question is the empirical agenda going forward. We consider three areas for further empirical study: (a) testing specific elements of social media engagement to determine the primary drivers of conspiracy spread and stickiness, (b) the consequences of engagement with online conspiracy beliefs for real‐world social relationships in a post‐pandemic world, and (c) the motives driving online engagement with conspiracy theories.

9.1. Internet usage patterns as an antecedent to conspiracy endorsement and spread

As we noted at the beginning of the study, researchers have debated the extent to which the internet affects the spread and stickiness of conspiracy theories. The discrepant findings may arise from changes over time in the way we use the internet. In 2005, 68% of US adults used the internet, but just 5% used social media (Pew Research Center, 2021a, 2021b). As of 2021, 93% of US adults use the internet and 72% use social media, and, as noted above, social media usage increased significantly during COVID‐19. Our focus in this study has been on the role of social media, rather than online engagement in general, in promoting the spread and stickiness of conspiracy theories. If, as we suggest, it is social media in particular that drives these effects, then the apparent impact of internet usage in general will have changed as the share of internet users who engage with social media has grown. However, further research is needed to determine the extent to which social media engagement, rather than internet usage in general, is the key driver of conspiracy theories.
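The dilution argument in this paragraph can be illustrated with the Pew figures it cites. The sketch below rests on two simplifying assumptions that are ours, not the authors': every social media user is also an internet user, and only social media use (not other internet use) shifts conspiracy belief. Under those assumptions, the effect observed across all internet users is the per‐user social media effect scaled by the share of internet users who are on social media. The function names and the unit effect size are hypothetical.

```python
# Hypothetical sketch. Assumptions (ours, not the authors'):
#   1. every social media user is also an internet user;
#   2. only social media use, not other internet use, shifts conspiracy belief.
# The percentages are the Pew figures for US adults cited in the text.
USAGE = {
    2005: {"internet": 68, "social_media": 5},
    2021: {"internet": 93, "social_media": 72},
}


def social_media_share(year: int) -> float:
    """Fraction of internet users who also use social media (assumption 1)."""
    row = USAGE[year]
    return row["social_media"] / row["internet"]


def apparent_internet_effect(year: int, social_media_effect: float) -> float:
    """Average effect seen across all internet users under assumption 2.

    social_media_effect is a hypothetical per-user effect size, not an estimate.
    """
    return social_media_share(year) * social_media_effect


if __name__ == "__main__":
    for year in sorted(USAGE):
        share = social_media_share(year)
        effect = apparent_internet_effect(year, social_media_effect=1.0)
        print(f"{year}: {share:.1%} of internet users on social media, "
              f"apparent internet effect {effect:.2f}")
```

With these figures, about 7% of internet users were on social media in 2005 versus about 77% in 2021, so under the stated assumptions an unchanged social media effect would look roughly ten times larger when attributed to internet use in general.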

In addition, as social media platforms continue to change over time, new features may alter their influence on conspiracy beliefs. For example, in 2016 Facebook shifted its focus to promoting communities via Facebook groups (Rodriguez, 2020a). These groups have since been implicated in the spread of misinformation on the platform (McCammon, 2020; Rodriguez, 2020b). More research is needed to understand how past changes such as these have influenced the spread and stickiness of conspiracy theories. This knowledge is crucial for predicting the likely impact of future changes to these platforms.

To more precisely gauge how specific aspects of social media affect the proliferation and staying power of conspiracy theories, experimental manipulations are useful (e.g., randomly assigning people to different types of online engagement, and measuring how rapidly the theory is elaborated into extreme permutations, how much people believe it, and whether they are willing to share it). However, such manipulations have limits in terms of external validity. More naturalistic empirical opportunities lie in studying large‐scale, cross‐cultural internet data. Historical analysis may help reveal how changes to social media platforms over time have altered their role in spreading misinformation. In addition, researchers might compensate for the comparatively short time span covered by social media data by comparing social conditions in different parts of the world. Events that were more localized than COVID‐19 may have impacted how people use social media in particular regions, providing insight into how different types of crises may impact social media use and its connections to conspiracy theories.

9.2. Conspiracy engagement and consequences for people’s social relationships

In addition, our review of the COVID‐19 conspiracy theory literature reveals far more work is needed to understand how disrupted social structures were rebuilt online during the pandemic and how long‐lasting those changes are. For example, as the pandemic subsides, will echo chambers begin to become more permeable? As people return to face‐to‐face interactions, how will their social networks change? To what extent will new group identities, which have been formed online, continue to be an important part of people's self‐concepts? Researchers must move quickly to examine these questions as “normal” life resumes. Of particular interest is how newly formed online group identities carry over to face‐to‐face interaction, blurring the distinctions between virtual and real‐world networks. We reviewed evidence that this occurred during the pandemic, but it remains to be seen if such patterns persist in the real world post‐pandemic and to what extent the online conspiracy communities that were formed will persist as the crisis passes.

9.3. Motives driving online engagement with conspiracy theories

Borrowing from a large body of work on conspiracy theories that existed prior to the pandemic, we argued that both control and uncertainty can play a role in the disruption of cognitive structures and the subsequent search for alternative structures online, which leads to increased spread and stickiness of conspiracy theories. However, relatively little work has compared these two mechanisms. While the pandemic undoubtedly created both uncertainty and a sense of lacking control, it is unclear which was the primary driver of its effects on conspiracy beliefs. Kofta and colleagues (2020) are among the few who have examined both uncertainty and feelings of control in the same study. They find that control, rather than uncertainty, predicts conspiracy beliefs. However, given the importance of the internet as a tool for information search, it is possible that uncertainty plays a larger role in an online environment. In addition, during events such as the COVID‐19 pandemic, when both are affected on a massive scale, the two may operate differently than they do during non‐crisis times. For example, we may find that they interact and mutually reinforce one another. More studies that simultaneously examine both uncertainty and feelings of control are needed to establish how they operate to influence conspiracy beliefs online and during periods of crisis.

10. CONCLUSION

Kaptan has noted that the verb conspire “literally means to breathe together” and suggests that we “reflect on the power of the collective breath… to shape the way we interact with the world and people around us” (2020, p. 291). This study considers exactly what it means to be together during a global pandemic, and shows social media's ability to focus collective breath in a uniquely potent manner. Despite being in different parts of the world, separated by computer screens, people are able to mutually create and uphold a powerful, albeit illusory, edifice. The critical question is whether (and how) social media could focus our collective breath in more productive ways.

CONFLICT OF INTEREST

The authors declare that there are no conflicts of interest.

ACKNOWLEDGMENTS

We are grateful to Grace DeAngelis and Nina Petrouski for their research assistance. We would also like to thank the Editor and anonymous reviewers for their helpful comments and insightful feedback. There is no funding to report.

Biographies

Benjamin Dow is a Postdoctoral Research Scholar in Organizational Behavior at Washington University in St. Louis, Olin School of Business. His primary areas of research involve leadership and non‐traditional forms of sensemaking, such as conspiratorial thinking.

Amber Johnson is a doctoral student at the University of Maryland, working on several interdisciplinary projects, broadly evaluating how social cognition affects individuals' behaviors and organizational outcomes.

Cynthia Wang is the Executive Director of the Dispute Resolution and Research Center and a Clinical Professor of Management and Organizations at Northwestern University's Kellogg School of Management. Her research interests fall in the areas of diversity, decision‐making, and intergroup processes.

Jennifer Whitson is an Associate Professor at the UCLA Anderson School of Management. Her research explores two phenomena crucial to sensemaking and social regulation: control and power.

Tanya Menon is a Professor of Management and Human Resources at Fisher College of Business, Ohio State University. She studies how people think about relationships, and how this affects the way they make decisions, collaborate, and lead at work.

Dow, B. J., Johnson, A. L., Wang, C. S., Whitson, J., & Menon, T. (2021). The COVID‐19 pandemic and the search for structure: Social media and conspiracy theories. Social and Personality Psychology Compass, e12636. 10.1111/spc3.12636

REFERENCES

- Ahmed, W., Seguí, F. L., Vidal‐Alaball, J., & Katz, M. S. (2020). Covid‐19 and the “film your hospital” conspiracy theory: Social network analysis of twitter data. Journal of Medical Internet Research, 22(10), e22374. 10.2196/22374

- Alfano, M., Fard, A. E., Carter, J. A., Clutton, P., & Klein, C. (2020). Technologically scaffolded atypical cognition: The case of YouTube's recommender system. Synthese, 1–24. 10.1007/s11229-020-02724-x

- Allington, D., Duffy, B., Wessely, S., Dhavan, N., & Rubin, J. (2020). Health‐protective behaviour, social media usage and conspiracy belief during the COVID‐19 public health emergency. Psychological Medicine, 1–7. 10.1017/S003329172000224X

- Annenberg Public Policy Center of the University of Pennsylvania. (2021, May 3). COVID‐19 conspiracy beliefs increased among users of conservative and social media. Phys.org. https://phys.org/news/2021-05-covid-conspiracy-beliefs-users-social.html

- Bale, J. M. (2007). Political paranoia v. political realism: On distinguishing between bogus conspiracy theories and genuine conspiratorial politics. Patterns of Prejudice, 41(1), 45–60. 10.1080/00313220601118751

- Barberá, P., & Rivero, G. (2014). Understanding the political representativeness of Twitter users. Social Science Computer Review, 33(6), 712–729. 10.1177/0894439314558836

- Barry, D., McIntyre, M., & Rosenberg, M. (2021, January 9). ‘Our president wants us here’: The mob that stormed the Capitol. The New York Times. https://www.nytimes.com/2021/01/09/us/capitol-rioters.html

- Bessi, A., Coletto, M., Davidescu, G. A., Scala, A., Caldarelli, G., & Quattrociocchi, W. (2015). Science vs conspiracy: Collective narratives in the age of misinformation. PloS One, 10(2), e0118093. 10.1371/journal.pone.0118093

- Bessi, A., Petroni, F., Del Vicario, M., Zollo, F., Anagnostopoulos, A., Scala, A., Caldarelli, G., & Quattrociocchi, W. (2016). Homophily and polarization in the age of misinformation. The European Physical Journal ‐ Special Topics, 225(10), 2047–2059. 10.1140/epjst/e2015-50319-0

- Bomey, N. (2020, October 2). Social media teems with conspiracy theories from QAnon and Trump critics after president's positive COVID‐19 test. USA Today. https://www.usatoday.com/story/tech/2020/10/02/trump-covid-conspiracy-theories-emerge-social-media-after-positive-test/5892808002/

- Bonetto, E., Troïan, J., Varet, F., Lo Monaco, G., & Girandola, F. (2018). Priming resistance to persuasion decreases adherence to conspiracy theories. Social Influence, 13(3), 125–136. 10.1080/15534510.2018.1471415

- Boutyline, A., & Willer, R. (2017). The social structure of political echo chambers: Variation in ideological homophily in online networks. Political Psychology, 38(3), 551–569. 10.1111/pops.12337

- Boykin, K., Brown, M., Macchione, A. L., Drea, K. M., & Sacco, D. F. (2021). Noncompliance with masking as a coalitional signal to US conservatives in a pandemic. Evolutionary Psychological Science, 1–7. 10.1007/s40806-021-00277-x

- Brailovskaia, J., & Margraf, J. (2021). The relationship between burden caused by coronavirus (COVID‐19), addictive social media use, sense of control and anxiety. Computers in Human Behavior, 119, 106720. 10.1016/j.chb.2021.106720

- Brennen, J. S., Simon, F., Howard, P. N., & Nielsen, R. K. (2020). Types, sources, and claims of COVID‐19 misinformation. Reuters Institute, 7, 3–1.

- Bricker, B. J. (2013). Climategate: A case study in the intersection of facticity and conspiracy theory. Communication Studies, 64(2), 218–239. 10.1080/10510974.2012.749294

- Brooks, S. K., Webster, R. K., Smith, L. E., Woodland, L., Wessely, S., Greenberg, N., & Rubin, G. J. (2020). The psychological impact of quarantine and how to reduce it: Rapid review of the evidence. The Lancet, 395(10227), 912–920. 10.1016/S0140-6736(20)30460-8

- Brugnoli, E., Cinelli, M., Quattrociocchi, W., & Scala, A. (2019). Recursive patterns in online echochambers. Scientific Reports, 9(1), 1–18. 10.1038/s41598-019-56191-7

- Buranyi, S. (2020, September 4). How coronavirus has brought together conspiracy theorists and the far right. The Guardian. https://www.theguardian.com/commentisfree/2020/sep/04/coronavirus-conspiracy-theorists-far-right-protests

- Cathey, L. (2021, July 16). President Biden says Facebook, other social media ‘killing people’ when it comes to COVID‐19 misinformation. ABC News. https://abcnews.go.com/Politics/president-biden-facebook-social-media-killing-people-covid/story?id=78890692

- Cauberghe, V., Van Wesenbeeck, I., De Jans, S., Hudders, L., & Ponnet, K. (2021). How adolescents use social media to cope with feelings of loneliness and anxiety during COVID‐19 lockdown. Cyberpsychology, Behavior, and Social Networking, 24(4), 250–257. 10.1089/cyber.2020.0478

- Center for Countering Digital Hate. (2021, March 24). The disinformation dozen: Why platforms must act on twelve leading online anti‐vaxxers. Counterhate.com. https://www.counterhate.com/disinformationdozen

- Choi, D., Chun, S., Oh, H., Han, J., & Kwon, T. T. (2020). Rumor propagation is amplified by echo chambers in social media. Scientific Reports, 10(1), 310. 10.1038/s41598-019-57272-3

- Cinelli, M., Brugnoli, E., Schmidt, A. L., Zollo, F., Quattrociocchi, W., & Scala, A. (2020). Selective exposure shapes the Facebook news diet. PloS One, 15(3), e0229129. 10.1371/journal.pone.0229129

- Cinelli, M., De Francisci Morales, G., Galeazzi, A., Quattrociocchi, W., & Starnini, M. (2021). The echo chamber effect on social media. Proceedings of the National Academy of Sciences, 118(9), e2023301118. 10.1073/pnas.2023301118

- Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., Zola, P., Zollo, F., & Scala, A. (2020). The COVID‐19 social media infodemic. Scientific Reports, 10(1), 1–10. 10.1038/s41598-020-73510-5

- Clarke, S. (2002). Conspiracy theories and conspiracy theorizing. Philosophy of the Social Sciences, 32(2), 131–150. 10.1177/004931032002001

- Clarke, S. (2007). Conspiracy theories and the internet: Controlled demolition and arrested development. Episteme, 4(2), 167–180. 10.3366/epi.2007.4.2.167

- Cohen, M. (2020, July 8). Michael Flynn posts video featuring QAnon slogans. CNN Politics. https://www.cnn.com/2020/07/07/politics/michael-flynn-qanon-video/index.html

- Collins, B., & Zadrozny, B. (2020, August 19). QAnon groups hit by Facebook crackdown. NBC News. https://www.nbcnews.com/tech/tech-news/qanon-groups-hit-facebook-crack-down-n1237330

- Del Vicario, M., Bessi, A., Zollo, F., Petroni, F., Scala, A., Caldarelli, G., Stanley, H. E., & Quattrociocchi, W. (2016). The spreading of misinformation online. Proceedings of the National Academy of Sciences, 113(3), 554–559. 10.1073/pnas.1517441113

- Douglas, K. M. (2021). COVID‐19 conspiracy theories. Group Processes & Intergroup Relations, 24(2), 270–275. 10.1177/1368430220982068

- Douglas, K. M., Ang, C. S., & Deravi, F. (2017). Reclaiming the truth. The Psychologist, 30, 36–42.

- Douglas, K. M., Sutton, R. M., & Cichocka, A. (2017). The psychology of conspiracy theories. Current Directions in Psychological Science, 26(6), 538–542. 10.1177/0963721417718261

- Douglas, K. M., Uscinski, J. E., Sutton, R. M., Cichocka, A., Nefes, T., Ang, C. S., & Deravi, F. (2019). Understanding conspiracy theories. Political Psychology, 40(S1), 3–35. 10.1111/pops.12568

- Drinkwater, K. G., Dagnall, N., Denovan, A., & Walsh, R. S. (2021). To what extent have conspiracy theories undermined COVID‐19: Strategic narratives? Frontiers in Communication, 6, 47. 10.3389/fcomm.2021.576198

- Drutman, L. (2020, June 3). Fact‐checking misinformation can work. But it might not be enough. FiveThirtyEight. https://fivethirtyeight.com/features/why-twitters-fact-check-of-trump-might-not-be-enough-to-combat-misinformation/

- Dwoskin, E. , & Timberg, C. (2021, January 16). Misinformation dropped dramatically the week after Twitter banned Trump and some allies. Washington Post. https://www.washingtonpost.com/technology/2021/01/16/misinformation‐trump‐twitter/ [Google Scholar]

- Easton, M. (2020, June 18). Coronavirus: Social media ‘spreading virus conspiracy theories’. BBC News. https://www.bbc.com/news/uk‐53085640 [Google Scholar]

- Einstein, K. L., & Glick, D. M. (2013, August). Scandals, conspiracies and the vicious cycle of cynicism. In Annual Meeting of the American Political Science Association.

- Einstein, K. L., & Glick, D. M. (2015). Do I think BLS data are BS? The consequences of conspiracy theories. Political Behavior, 37(3), 679–701. 10.1007/s11109-014-9287-z

- Elkin, L. E., Pullon, S. R. H., & Stubbe, M. H. (2020). ‘Should I vaccinate my child?’ Comparing the displayed stances of vaccine information retrieved from Google, Facebook and YouTube. Vaccine, 38(13), 2771–2778. 10.1016/j.vaccine.2020.02.041

- Enders, A. M., & Smallpage, S. M. (2019). Informational cues, partisan‐motivated reasoning, and the manipulation of conspiracy beliefs. Political Communication, 36(1), 83–102. 10.1080/10584609.2018.1493006

- Enke, N., & Borchers, N. S. (2019). Social media influencers in strategic communication: A conceptual framework for strategic social media influencer communication. International Journal of Strategic Communication, 13(4), 261–277. 10.1080/1553118X.2019.1620234

- Faddoul, M., Chaslot, G., & Farid, H. (2020). A longitudinal analysis of YouTube's promotion of conspiracy videos. arXiv. https://arxiv.org/abs/2003.03318

- Ferrara, E. (2020). What types of Covid‐19 conspiracies are populated by Twitter bots? First Monday. 10.5210/fm.v25i6.10633

- Finkel, E. J., Bail, C. A., Cikara, M., Ditto, P. H., Iyengar, S., Klar, S., Mason, L., McGrath, M. C., Nyhan, B., Rand, D. G., Skitka, L. J., Tucker, J. A., Van Bavel, J. J., Wang, C. S., & Druckman, J. N. (2020). Political sectarianism in America. Science, 370(6516), 533–536. 10.1126/science.abe1715

- Fischer, S. (2020, April 24). Social media use spikes during pandemic. Axios. https://www.axios.com/social-media-overuse-spikes-in-coronavirus-pandemic-764b384d-a0ee-4787-bd19-7e7297f6d6ec.html

- Franks, B., Bangerter, A., & Bauer, M. (2013). Conspiracy theories as quasi‐religious mentality: An integrated account from cognitive science, social representations theory, and frame theory. Frontiers in Psychology, 4, 424. 10.3389/fpsyg.2013.00424

- Franks, B., Bangerter, A., Bauer, M. W., Hall, M., & Noort, M. C. (2017). Beyond “monologicality”? Exploring conspiracist worldviews. Frontiers in Psychology, 8, 861. 10.3389/fpsyg.2017.00861

- Freeman, D., & Bentall, R. P. (2017). The concomitants of conspiracy concerns. Social Psychiatry and Psychiatric Epidemiology, 52(5), 595–604. 10.1007/s00127-017-1354-4

- Gaertner, S. L., Dovidio, J. F., Nier, J. A., Banker, B. S., Ward, C. M., Houlette, M., & Loux, S. (2000). Reducing intergroup bias: The common ingroup identity model. Psychology Press. 10.4324/9781315804576

- Grant, L., Hausman, B. L., Cashion, M., Lucchesi, N., Patel, K., & Roberts, J. (2015). Vaccination persuasion online: A qualitative study of two provaccine and two vaccine‐skeptical websites. Journal of Medical Internet Research, 17(5), e133. 10.2196/jmir.4153

- Gruzd, A., & Mai, P. (2020). Going viral: How a single tweet spawned a COVID‐19 conspiracy theory on Twitter. Big Data & Society, 7, 205395172093840. 10.1177/2053951720938405

- Harambam, J. (2020). Contemporary conspiracy culture: Truth and knowledge in an era of epistemic instability. Taylor & Francis.

- Heilweil, R. (2020, April 24). How the 5G coronavirus conspiracy theory went from fringe to mainstream. Vox. https://www.vox.com/recode/2020/4/24/21231085/coronavirus-5g-conspiracy-theory-covid-facebook-youtube

- Jeste, D. V., Lee, E. E., & Cacioppo, S. (2020). Battling the modern behavioral epidemic of loneliness: Suggestions for research and interventions. JAMA Psychiatry, 77(6), 553–554. 10.1001/jamapsychiatry.2020.0027

- Jolley, D., & Douglas, K. M. (2014). The social consequences of conspiracism: Exposure to conspiracy theories decreases intentions to engage in politics and to reduce one's carbon footprint. British Journal of Psychology, 105(1), 35–56. 10.1111/bjop.12018

- Jolley, D., & Douglas, K. M. (2017). Prevention is better than cure: Addressing anti‐vaccine conspiracy theories. Journal of Applied Social Psychology, 47(8), 459–469. 10.1111/jasp.12453

- Jolley, D., Meleady, R., & Douglas, K. M. (2020). Exposure to intergroup conspiracy theories promotes prejudice which spreads across groups. British Journal of Psychology, 111(1), 17–35. 10.1111/bjop.12385

- Kaakinen, M., Sirola, A., Savolainen, I., & Oksanen, A. (2020). Shared identity and shared information in social media: Development and validation of the identity bubble reinforcement scale. Media Psychology, 23(1), 25–51. 10.1080/15213269.2018.1544910

- Kaptan, S. (2020). Corona conspiracies: A call for urgent anthropological attention. Social Anthropology: The Journal of the European Association of Social Anthropologists, 28, 290–291. 10.1111/1469-8676.12841

- Kaur, H. (2020, March 28). Yes, we long have referred to disease outbreaks by geographic places. Here's why we shouldn't anymore. CNN. https://www.cnn.com/2020/03/28/us/disease-outbreaks-coronavirus-naming-trnd/index.html

- Kearney, M. D., Chiang, S. C., & Massey, P. M. (2020). The Twitter origins and evolution of the COVID‐19 “plandemic” conspiracy theory. Harvard Kennedy School Misinformation Review, 1(3). 10.37016/mr-2020-42

- Kemp, S. (2020, July 21). Digital 2020: July global statshot report. Datareportal. https://datareportal.com/reports/digital-2020-july-global-statshot

- Kemp, S. (2021a, January 27). Digital 2021: Global overview report. Datareportal. https://datareportal.com/reports/digital-2021-global-overview-report

- Kemp, S. (2021b, April 21). Digital 2021: April global statshot report. Datareportal. https://datareportal.com/reports/digital-2021-april-global-statshot

- Kim, M., & Cao, X. (2016). The impact of exposure to media messages promoting government conspiracy theories on distrust in the government: Evidence from a two‐stage randomized experiment. International Journal of Communication, 10, 20.

- Kirkpatrick, D. (2016, May 12). Twitter says influencers are almost as trusted as friends. Marketing Dive. https://www.marketingdive.com/news/twitter-says-influencers-are-almost-as-trusted-as-friends/419076/

- Klein, C., Clutton, P., & Dunn, A. G. (2019). Pathways to conspiracy: The social and linguistic precursors of involvement in Reddit's conspiracy theory forum. PloS One, 14(11), e0225098. 10.1371/journal.pone.0225098

- Knight, P. (2001). Conspiracy culture: American paranoia from Kennedy to the X‐files. Routledge. 10.4324/9780203354773

- Koeze, E., & Popper, N. (2020, April 9). The virus changed the way we internet. New York Times. https://www.nytimes.com/interactive/2020/04/07/technology/coronavirus-internet-use.html

- Kofta, M., Soral, W., & Bilewicz, M. (2020). What breeds conspiracy antisemitism? The role of political uncontrollability and uncertainty in the belief in Jewish conspiracy. Journal of Personality and Social Psychology, 118(5), 900–918. 10.1037/pspa0000183

- Kong, J. D., Tekwa, E. W., & Gignoux‐Wolfsohn, S. A. (2021). Social, economic, and environmental factors influencing the basic reproduction number of COVID‐19 across countries. PloS One, 16(6), e0252373. 10.1371/journal.pone.0252373

- Kramer, R. M., & Gavrieli, D. (2005). The perception of conspiracy: Leader paranoia as adaptive cognition. In D. M. Messick & R. M. Kramer (Eds.), The psychology of leadership: New perspectives and research (pp. 241–274). Psychology Press.

- Kuhn, S., Lieb, R., Freeman, D., Andreou, C., & Zander‐Schellenberg, T. (2021). Coronavirus conspiracy beliefs in the German‐speaking general population: Endorsement rates and links to reasoning biases and paranoia. Psychological Medicine, 1–15. 10.1017/S0033291721001124

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. 10.1037/0033-2909.108.3.480

- Landau, M. J., Kay, A. C., & Whitson, J. A. (2015). Compensatory control and the appeal of a structured world. Psychological Bulletin, 141(3), 694–722. 10.1037/a0038703

- Lantian, A., Muller, D., Nurra, C., Klein, O., Berjot, S., & Pantazi, M. (2018). Stigmatized beliefs: Conspiracy theories, anticipated negative evaluation of the self, and fear of social exclusion. European Journal of Social Psychology, 48(7), 939–954. 10.1002/ejsp.2498

- Lawson, M. A., Anand, S., & Kakkar, H. (2021, July 12). Tribalism and tribulations: The social cost of not sharing fake news. Paper presented at the 34th Annual Conference of the International Association for Conflict Management, Virtual Conference.

- Lee, J. (2020, December 8). How social media influencer tactics help conspiracy theories gain traction online. ABC Science. https://www.abc.net.au/news/science/2020-12-09/social-media-conspiracy-theorists-5g-covid-19-influencers/12937950

- Lewandowsky, S., Gignac, G. E., & Oberauer, K. (2013). The role of conspiracist ideation and worldviews in predicting rejection of science. PloS One, 8(10), e75637. 10.1371/journal.pone.0075637

- Lewandowsky, S., Oberauer, K., & Gignac, G. E. (2013). NASA faked the moon landing—therefore, (climate) science is a hoax: An anatomy of the motivated rejection of science. Psychological Science, 24(5), 622–633. 10.1177/0956797612457686

- Lewin, K. (1938). The conceptual representation and the measurement of psychological forces. Duke University Press. 10.1037/13613-000

- Lord, B., & Naik, R. (2020, October 18). He went down the QAnon rabbit hole for almost two years. Here's how he got out. CNN Business. https://www.cnn.com/2020/10/16/tech/qanon-believer-how-he-got-out/index.html

- Luiggi‐Hernández, J. G., & Rivera‐Amador, A. I. (2020). Reconceptualizing social distancing: Teletherapy and social inequality during the COVID‐19 and loneliness pandemics. Journal of Humanistic Psychology, 60(5), 626–638. 10.1177/0022167820937503

- Lyons, B., Merola, V., & Reifler, J. (2019). Not just asking questions: Effects of implicit and explicit conspiracy information about vaccines and genetic modification. Health Communication, 34(14), 1741–1750. 10.1080/10410236.2018.1530526

- Macleod, A. (2020, March 24). In pursuit of Chinese scapegoats, media reject life‐saving lessons. Fairness & Accuracy in Reporting. https://fair.org/home/in-pursuit-of-chinese-scapegoats-media-reject-life-saving-lessons/

- Mahl, D., Zeng, J., & Schäfer, M. S. (2021). From “Nasa Lies” to “Reptilian Eyes”: Mapping communication about 10 conspiracy theories, their communities, and main propagators on Twitter. Social Media + Society, 7(2), 205630512110174. 10.1177/20563051211017482

- Marengo, D., Montag, C., Sindermann, C., Elhai, J. D., & Settanni, M. (2021). Examining the links between active Facebook use, received likes, self‐esteem and happiness: A study using objective social media data. Telematics and Informatics, 58, 101523. 10.1016/j.tele.2020.101523

- McCammon, S. (Host). (2020, June 22). [Audio podcast]. All Things Considered. NPR. https://www.npr.org/2020/06/22/881826881/facebook-groups-are-destroying-america-researcher-on-misinformation-spread-onlin

- Memon, S. A., & Carley, K. M. (2020). Characterizing COVID‐19 misinformation communities using a novel Twitter dataset. arXiv. https://arxiv.org/abs/2008.00791

- Merriam‐Webster. (n.d.). Social media. In Merriam‐Webster.com dictionary. https://www.merriam-webster.com/dictionary/social%20media

- Mitchell, A., Jurkowitz, M., Oliphant, J. B., & Shearer, E. (2020, June 29). Most Americans have heard of the conspiracy theory that the COVID‐19 outbreak was planned, and about one‐third of those aware of it say it might be true. Pew Research Center. https://www.journalism.org/2020/06/29/most-americans-have-heard-of-the-conspiracy-theory-that-the-covid-19-outbreak-was-planned-and-about-one-third-of-those-aware-of-it-say-it-might-be-true/

- Mitchell, T. R. (1974). Expectancy models of job‐satisfaction, occupational preference and effort: Theoretical, methodological, and empirical appraisal. Psychological Bulletin, 81, 1053–1077. 10.1037/h0037495

- Naeem, M. (2020). The role of social media to generate social proof as engaged society for stockpiling behaviour of customers during Covid‐19 pandemic. Qualitative Market Research. 10.1108/QMR-04-2020-0050

- Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330. 10.1007/s11109-010-9112-2

- Nyhan, B., Reifler, J., Richey, S., & Freed, G. L. (2014). Effective messages in vaccine promotion: A randomized trial. Pediatrics, 133(4), e835–e842. 10.1542/peds.2013-2365