Abstract

Coronavirus disease 2019 (COVID‐19) is a severe health problem that affects the respiratory system and spreads rapidly from person to person across all countries. The limited set of diagnostic techniques currently used to identify COVID‐19 patients is not sufficiently effective. Under these circumstances, suspected COVID‐19 patients need to be detected promptly from routine examinations such as chest X‐rays or CT scans, using computerized diagnosis systems that perform mass detection, segmentation, and classification. In this paper, regional deep learning approaches are used to detect the lung areas infected by the coronavirus. For mass segmentation of the infected region, a deep convolutional neural network (CNN) is used together with a full‐resolution convolutional network (FrCN) to identify the specific infected area and classify it as COVID‐19 or non‐COVID‐19. The proposed model is evaluated on detection, segmentation, and classification using a COVID‐19 patient dataset split into training and test sets. Results are reported from a fourfold cross‐validation test using several metrics, including sensitivity, specificity, Jaccard (Jac.), Dice (F1‐score), Matthews correlation coefficient (MCC), and overall accuracy. Classification accuracy is compared on the validated test dataset with and without mass segmentation.

Keywords: COVID‐19, quantitative evaluation, respiratory diagnosis, X‐Rays or CT images

1. INTRODUCTION

Recently, the coronavirus has emerged as a new disease that has spread rapidly across the globe. Generally, coronaviruses are transmitted from animals or from humans. Among animals, bats have been found to spread the virus. Scientists have also identified several common coronaviruses that infect humans, such as 229E (alpha), NL63 (alpha), OC43 (beta), and HKU1 (beta) (Liu, Wu, et al., 2020; Liu, Xu, et al., 2020). Other human beta coronaviruses that cause similar diseases also exist, namely (1) MERS‐CoV (Middle East Respiratory Syndrome, MERS), (2) SARS‐CoV (Severe Acute Respiratory Syndrome, SARS), and (3) SARS‐CoV‐2 (the novel coronavirus that causes coronavirus disease 2019, COVID‐19) (Coronavirus, 2020). Most scientists and researchers have emphasized dedicated COVID‐19 diagnostic tools (Lalit et al., 2020), therapies, or vaccines to prevent further spread. Reverse Transcription Polymerase Chain Reaction (RT‐PCR) (Lalit et al., 2020; Wang, Kang, et al., 2020; Wang, Yang, et al., 2020) is currently the gold standard for establishing the diagnosis because of its higher sensitivity and specificity. Other techniques such as radiographic imaging (X‐rays and CT) are also used in advanced disease stages to reveal features of pneumonia. Suspected and asymptomatic COVID‐19 cases also present with lung infection (Zhang et al., 2020). Chest X‐ray provides a lower sensitivity, around 59%–69%, compared with RT‐PCR; however, in a few studies CT has been found to perform better than RT‐PCR (Ai et al., 2020). In their current form, radiological and clinical examinations offer limited value for a definitive diagnosis but could be useful for disease quantification and prediction (Huang et al., 2020). Further, these imaging methods will be very effective if the process can be carried out quickly with widely available, economical technology.

Radiological findings evolve in four phases during COVID‐19, which could be helpful for quantification: (1) Early phase: few or moderate clinical abnormalities with single or multiple lesion areas; (2) Progressive phase: lesions progress in the lung parenchyma with extending density and extracellular exudates; (3) Severe phase: parenchymal lesions reach their peak, with bilateral infiltrations and a large amount of exudate; (4) Dissipative phase: the inflammation recovery phase after 14 days, characterized by gradual absorption of lesions.

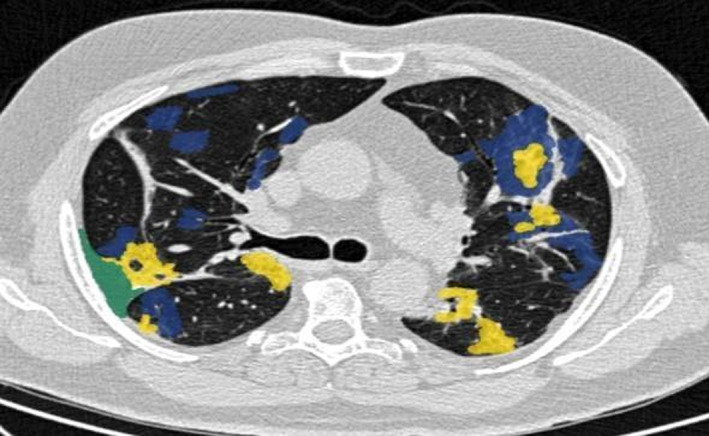

Beyond these phases, visual quantification of CT images is more effective (Li et al., 2020). CNNs, the most powerful deep learning technique, have demonstrated their capability when trained on large‐scale datasets. In a few cases, however, they cannot meet the demand (Cohen et al., 2020; Jenssen, 2020; Sajid, 2020) and only indicate whether the patient is infected, owing to a lack of fine‐grained pixel‐level annotations. CNN models therefore need to be trained on datasets that carry enough information to explain the final predictions. As a simple example, consider identifying the different lung locations infected by the coronavirus (COVID‐19) in a patient. Figure 1 shows an image in which several infected areas are marked. Colours identify the pathophysiological changes: ground‐glass opacities (GGO) in blue, consolidation in yellow, and pleural effusion in green. The image slices are 512 × 512 pixels, and the same size is retained for the masks. The mask distinguishes three segmented anomalies, GGO (mask value = 1), consolidation (= 2), and pleural effusion (= 3), plus background (= 0). The segmented sample image in Figure 1 is a lung CT scan collected from (COVID‐19, 2020). Statistics of CT ground‐glass opacities and consolidation are used to estimate the prognosis of COVID‐19 patients (Lalit et al., 2020).

FIGURE 1.

One segmented COVID‐19 CT axial slice (COVID‐19, 2020)
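
The mask encoding described above (0 = background, 1 = GGO, 2 = consolidation, 3 = pleural effusion) lends itself to a simple per‐class pixel count. The following is a minimal sketch rather than the authors' code: the function name `class_fractions`, the label names, and the 4 × 4 toy mask are illustrative assumptions, and only the label values come from the text.

```python
from collections import Counter

# Label values are taken from the text; the display names are illustrative.
LABELS = {
    0: "background",
    1: "ground-glass opacity",
    2: "consolidation",
    3: "pleural effusion",
}


def class_fractions(mask):
    """Return the fraction of pixels per label in a 2-D mask (list of rows)."""
    counts = Counter(value for row in mask for value in row)
    total = sum(counts.values())
    return {name: counts.get(value, 0) / total for value, name in LABELS.items()}


# Hypothetical 4 x 4 toy mask standing in for a 512 x 512 slice.
toy_mask = [
    [0, 0, 1, 1],
    [0, 2, 2, 1],
    [0, 0, 3, 0],
    [0, 0, 0, 0],
]
fractions = class_fractions(toy_mask)
```

Applied to a real 512 × 512 mask, the same function would give the share of each anomaly in the slice, which is one plausible input to the quantification step.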

Since sufficient data on COVID‐19 are not available, the infected areas of COVID‐19 patients cannot be described in detail. This motivates an analysis of different COVID‐19 presentations. This work proposes a novel model based on (1) patient‐level and pixel‐level annotations and (2) four techniques: detection, segmentation, classification, and quantification. The proposed diagnosis system first identifies suspected COVID‐19 patients with a detection model. It also provides diagnosis explanations using activation mapping techniques. With fine‐grained image segmentation and classification, the system can discover the locations and areas of COVID‐19 infection in lung radiographs.

Since COVID‐19 is an acute disease (i.e., lasting 14–30 days), pathophysiological changes affecting the normal lung area can be recognized easily by the above approaches. These techniques already exist in deep learning, so it is attractive for researchers to apply them to COVID‐19 and to distinguishing it across different patient images. In summary, the contributions of this work concern the following techniques, dataset, and analysis:

First, different suspected and infected COVID‐19 patient cases were collected for the training database. This yields a new large‐scale COVID‐19 dataset that contains fine‐grained, pixel‐level labelled serial images from both uninfected and infected patients.

A novel COVID‐19 diagnosis system is developed to perform detection, segmentation, and classification, showing clear superiority.

On the collected COVID‐19 dataset, the proposed system achieves good sensitivity, specificity, and other cross‐validation metrics for COVID‐19 classification using the deep learning CNN model.

Disease quantification is produced through fourfold cross‐validation with data evaluation.

The remainder of this paper is organized as follows. Section 2 briefly describes the background. Section 3 formulates the problem statement and the motivation behind this research. Section 4 presents the COVID‐DSC dataset with its labelling procedures in detail and introduces the developed diagnosis system for recognizing and analysing COVID‐19 cases. Comprehensive experiments are conducted in Section 5 to demonstrate the performance of the proposed system on disease recognition, with in‐depth analysis. Section 6 summarizes the work.

2. RELATED WORK

COVID‐19 has infected people in almost all countries since the pandemic started in December 2019. Initially, because of lockdown measures worldwide, it was challenging to collect COVID‐19 datasets from different laboratories. Additionally, some datasets had restricted access, which made it even harder to obtain accurate data for diagnostic systems. Subsequently, to facilitate the development of diagnostic systems, many researchers released diverse COVID‐19‐related datasets to the public. Among them is the X‐ray dataset from Cohen et al. (2020), which contains frontal X‐ray images of COVID‐19‐infected patients, respiratory syndrome cases, and other pneumonia‐like cases. All the images in these datasets were collected from public websites and COVID‐19‐related papers on medRxiv, bioRxiv, and journals. Other COVID‐19 resources are PLXR (Sajid, 2020) and CTSeg (Jenssen, 2020), which contain CT scan image cases. However, these datasets are small in scale and lack diversity, since they often contain only hundreds of images from tens of cases. To fully exploit the power of deep CNNs, it is essential to construct a large‐scale training dataset, enabling accurate and robust COVID‐19 diagnostic systems. Most medical imaging systems are developed for common diseases that have persisted for many years, such as tuberculosis (Liu, Wu, et al., 2020; Liu, Xu, et al., 2020). These systems can also be modified directly and repurposed for the COVID‐19 outbreak. Clinicians have observed that the chest radiographs of COVID‐19 patients exhibit certain radiological features suggestive of inflammation. Based on ResNet‐50 (He et al., 2016), COVID‐ResNet (Farooq & Hafeez, 2020) is a system that differentiates three types of COVID‐19 infections from usual pneumonia, achieving good sensitivity and specificity. Yang et al. (2020) also developed a system to diagnose the images of volunteers with acceptable sensitivity and specificity. Li et al. (2020) developed a system that identifies COVID‐19 patients, reporting sensitivity and specificity, using the lung CT severity index (CTSI). Zhou et al. (2020) examined 62 COVID‐19 patients using the non‐contrast CTSI evaluated on CT scans, for better accuracy at an early disease stage. Despite their success on small sets of samples, these COVID‐19 diagnosis systems have not been tested on large‐scale samples and cannot provide helpful diagnostic evidence during inference. Roberts et al. (2020) use machine learning techniques for COVID‐19 detection and prognostication based on chest radiographs and CT scans, whereas Shaoping et al. (2020) use weakly supervised deep learning for COVID‐19 infection detection and classification from CT images. Detection systems have also been developed using cloud and fog computing, the Internet of Things, and federated learning by a few authors, such as Wang, Kang, et al. (2020), Wang, Yang, et al. (2020), Wang et al. (2019), and Khattab and Youssry (2020).

Rajinikanth et al. (2020) is the only work that extracts infected regions via pixel‐level segmentation. However, the segmentation is performed with watershed transform techniques (Roerdink & Meijster, 2000), giving coarse results and limited accuracy. The current work proposes a novel COVID‐19 diagnosis system that integrates deep learning‐based classification and segmentation networks to provide explainable diagnostic evidence for clinicians and to improve the user‐interactive aspects of the diagnosis process. Ali, Zhu, Zhou, and Liu (2019) describe classification based on feature selection, and Ali, Zhu, Zhou, and Liu (2019) also developed their own diagnosis system for automated detection of Parkinson's disease. Bhuyan and Kamila (2014, 2015) and Bhuyan et al. (2016) developed novel classification techniques using several biological datasets. Iwendi, Moqurrab, et al. (2020) developed a semantic privacy‐preserving framework for unstructured medical datasets, and Iwendi, Bashir, et al. (2020) used a boosted random forest algorithm for COVID‐19 patient health prediction.

Deep learning approaches have been used to analyse X‐ray and CT scan images of several diseases. Still, no existing COVID‐19 diagnostic system combines detection, segmentation, classification, and quantification, which leaves a gap. This gap motivates the development of the individual parts of the diagnosis system using deep learning approaches. The gap is analysed through the problem statements below, and the framework and implementation are presented in the subsequent sections.

3. PROBLEM STATEMENTS

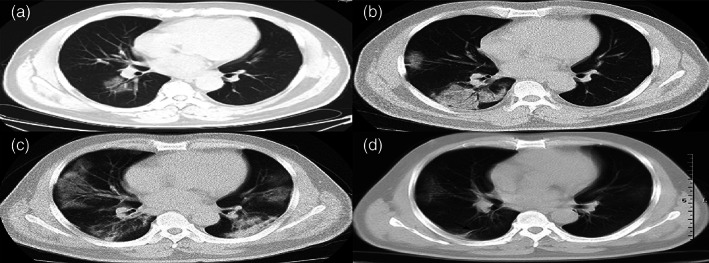

Usually, individuals with the viral infection develop symptoms ranging from mild illness to acute respiratory distress, accompanied by radiological findings such as (1) interstitial grained opacity (ground‐glass), (2) opacity due to tissue consolidation, (3) alveolar wall thickening, (4) membrane fibrosis, (5) cavitation, (6) discrete nodules, (7) pleural effusion, (8) lymphadenopathy, and (9) bilaterally distributed or (10) peripherally distributed grained abnormalities, typical of acute lung injury or viral pneumonia. The infection does not persist in the long term if treatment is continued, resulting in radiological improvements. Reference images of the chest region of a COVID‐19‐infected patient are shown in Figure 2, following Fu et al. (2020).

FIGURE 2.

An adult male patient with COVID‐19 pneumonia showing disease progression and pathophysiological steps, imaged using different tests on several days

In Figure 2, a patient affected by the novel coronavirus shows the distinct phases described above on chest radiographs: day 1 in (1), day 4 in (2), day 9 in (3), and day 24 in (4). Detection, risk classification, and monitoring of disease progress are essential during treatment. Clinicians need to examine X‐rays or CT scans of the lungs to detect and quantify the affected tissue in the patient's respiratory system. Under these circumstances, this paper addresses the diagnosis in four ways: (1) detection, (2) segmentation, (3) classification, and (4) quantification and outcome prediction, as follows.

Detection: During detection, tissue variations relative to the surrounding area are usually observed, based on the shape, size, and anatomical location of the abnormality in the lungs. Detecting coronavirus disease is nevertheless a challenging task. Conventional machine learning techniques can identify suspicious regions. Deep learning approaches can make the task more practical, particularly for mass detection in affected areas.

Segmentation: Segmentation extracts the specific regions infected by the coronavirus. The accuracy of these regions improves when false positives and false negatives are avoided after image segmentation. This is demanding for COVID‐19 because of the variation in shape, size, and location of the abnormal anatomical area in the lungs. Deep learning models are adopted as an alternative to conventional segmentation methods.

Classification: The infected area of the respiratory system can be identified and confirmed using conventional machine learning (ML) classifiers. Building the classification model requires features that characterize the infected image region. These features should discriminate well between virus‐affected and unaffected tissue. Although different methods can be used to classify the infected area, deep learning techniques extract detailed feature characteristics directly from the infected‐region data. Classification performance is assessed in the experiments (Chinmay & Arij, 2021; Mugahed et al., 2018; Muhammad et al., 2020).

Image quantification: A quantitative image model based on a deep network is considered for inflammatory lung regions in the image. This quantitative image analysis can be applied at various pathophysiological stages of COVID‐19. The model considers the dimensions, regional opacity, and shape of the inflamed lung tissue. Baseline results are collected from cases involving acute respiratory distress syndrome (ARDS). Several variable‐based logistic evaluations can then be used to understand the temporal association between the quantification output and the persistence of ARDS, in order to predict disease prognosis.

Thus, it is challenging to detect infected patients accurately with existing infrastructure and to assess severity in order to predict the outcome. Alternative detection approaches, such as collecting a nasal swab, drawing a blood sample, or handling fluids to establish the diagnosis, are very challenging because of viral infectivity and the transmission risk to healthcare workers. Further, ongoing evidence suggests a possible airborne transmission risk associated with patient care. There is no particular treatment or preventive vaccine to cure or protect individuals against this disease. It therefore becomes more important to diagnose the patient and subsequently evaluate the outcome risk. The current research takes steps towards closing knowledge gaps in identifying and quantifying the affected area of the patient's body from X‐rays or CT scans. Details of the framework, observations, and inferences are given in the following sections.

4. FRAMEWORK FOR DIAGNOSIS SYSTEM OF COVID‐19 USING DEEP LEARNING TECHNIQUES

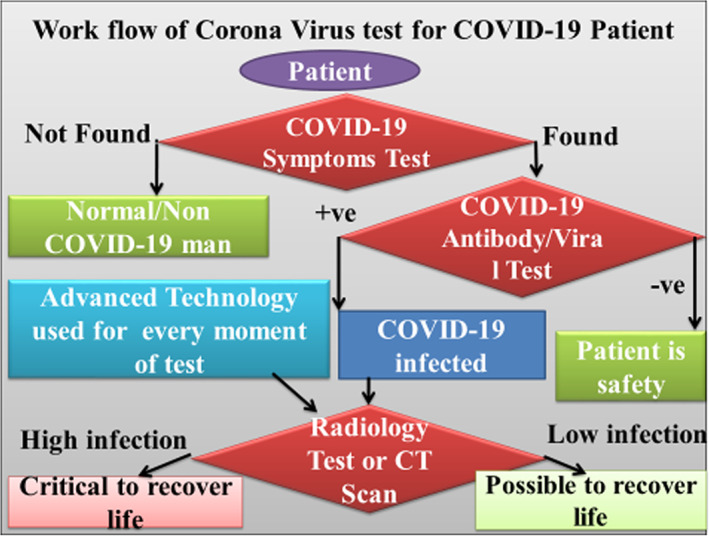

Several COVID‐19 symptoms have been identified so far, such as fever, cough, shortness of breath (dyspnea), chills, muscle pain, new loss of taste or smell, congestion or runny nose, vomiting, diarrhoea, sore throat, and excessive fatigue (WHO, 2019). The proposed workflow for a COVID‐19 patient is shown in Figure 3. In this workflow, a patient is identified as a normal or a serious COVID‐19 patient, and the model also captures whether the patient recovers or dies. Initially, a COVID‐19 test is recommended for the patient. If the patient is not infected with the coronavirus, no further COVID‐19 treatment is required; otherwise, the patient is considered a COVID‐19 patient and treated accordingly. In the proposed model, treatment of a COVID‐19 patient starts with a radiological examination (CT scan or X‐ray) of the lungs and respiratory system to measure the infected lung area. If only a small area is infected or the infection has just started, the patient may recover; otherwise, regular CT scans or X‐rays are required for the severe patient. For this regular monitoring of severe patients, several deep learning approaches are described in this section. As outlined in the problem statements, the COVID‐19 diagnosis system is developed with deep learning techniques to leverage computing capabilities for computer‐aided diagnosis (CADx). Although deep learning offers many techniques, three are considered here: You‐Only‐Look‐Once (YOLO), the full‐resolution convolutional network (FrCN), and the convolutional neural network (CNN), used respectively for infected‐area detection, segmentation, and classification on lung X‐rays or CT scans of COVID‐19 patients, in a single framework.

FIGURE 3.

Workflow of coronavirus test for COVID‐19 patient

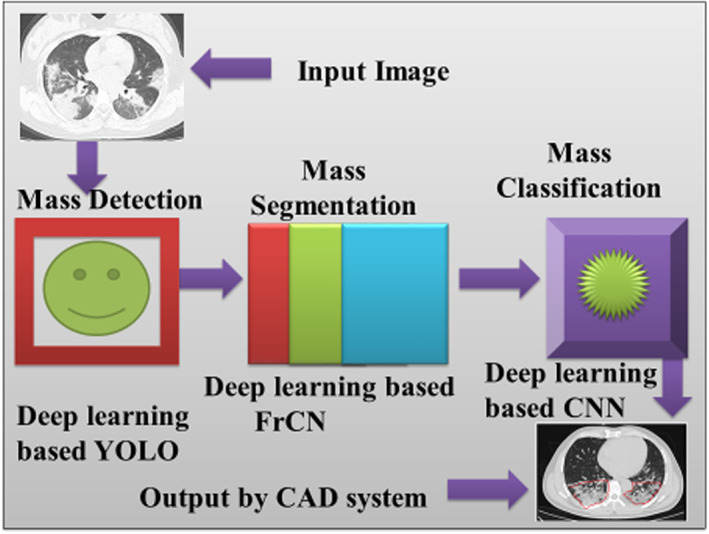

These techniques were previously used in breast cancer decision support systems (Mugahed et al., 2018) and are leveraged in this work to detect coronavirus‐affected radiographic images. In this part, we use deep learning (DL) approaches to detect, segment, and classify COVID‐19 images, and explain each step in turn. The three steps are significant for diagnosing the coronavirus‐affected lung tissue of the patient. In the first step, an X‐ray or CT image of the patient's lungs is passed to the proposed model, and the YOLO technique performs mass detection, separating the coronavirus‐affected lung regions from normal tissue. In the second step, segmentation is performed with the FrCN. In the third step, a CNN classifies the images. The overall method is shown in Figure 4; for further details, see Mugahed et al. (2018).

FIGURE 4.

Flow diagram of the deep learning techniques used to detect, segment, and classify the coronavirus‐affected area in an X‐ray image

4.1. Mass detection

Detecting the infected area is a critical image‐processing task. It consists of locating the coronavirus‐affected lung region, where pathophysiological changes have set in, and separating it from normal tissue. The research challenge is to identify the dimensional changes in the infected tissue. To this end, the deep learning model adopts the YOLO technique to detect infected areas as regions of interest (ROIs) across the whole lung. The primary role of YOLO is to make the detection task practical. YOLO is an ROI‐based CNN technique that identifies suspicious infected regions directly from the whole lung image, and it can accurately identify and demarcate bounding boxes around the lungs. YOLO is a suitable alternative for COVID‐19 detection for the following reasons: (1) it detects the infected area directly from the entire lungs; (2) it detects bounding boxes for the infected area as well as the whole lungs, which reduces false positives; and (3) it detects dense regions of the infected areas.

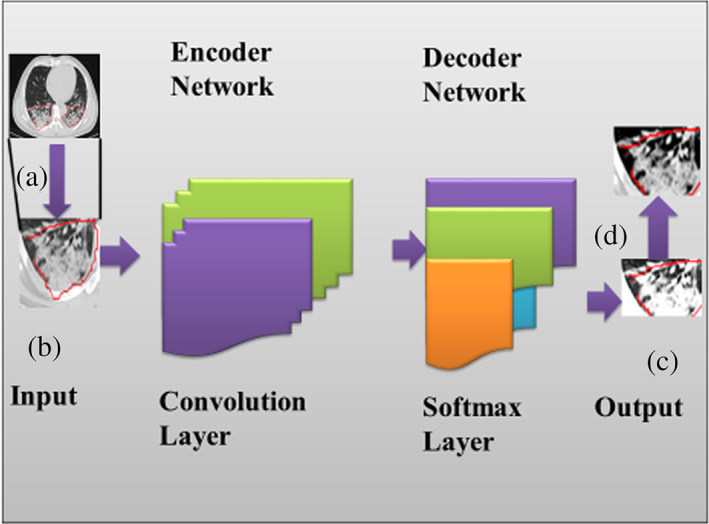

4.2. FrCN for mass segmentation

Once the YOLO technique has identified the infected area, the detected area is passed to the proposed segmentation stage. Pixel‐wise segmentation is used, as it gives a good outcome compared with other methods. The FrCN model is therefore proposed for segmenting the infected pixel area. FrCN maintains cryptic network techniques. Next, the decoder network of FrCN is considered to replace all full convolutional layers to avoid full resolution of the image. The implemented design of the FrCN model used in the current work for pixel‐wise segmentation is shown in Figure 5. The Softmax classifier computes, for every pixel, the probability of the classes “infected” and “not‐infected.” The SegNet deep learning technique is also used for more clarity.

FIGURE 5.

The design of FrCN model for pixel‐wise segmentation
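
The per‐pixel Softmax step described above can be illustrated in isolation. The sketch below is not the FrCN itself: it assumes that a network has already produced two raw scores per pixel, ordered as (not‐infected, infected), and the names `softmax`, `label_pixels`, and `toy_scores` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a short sequence of raw scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_pixels(score_map):
    """Turn per-pixel (not_infected, infected) scores into a 0/1 mask.

    Assumption: index 0 is "not-infected" and index 1 is "infected".
    Ties resolve to "not-infected" because index() returns the first maximum.
    """
    mask = []
    for row in score_map:
        labels = []
        for pixel_scores in row:
            probs = softmax(pixel_scores)
            labels.append(probs.index(max(probs)))
        mask.append(labels)
    return mask


# Hypothetical 2 x 2 score map standing in for the network output.
toy_scores = [
    [(2.0, 0.5), (0.1, 1.7)],
    [(0.0, 0.0), (-1.0, 3.0)],
]
mask = label_pixels(toy_scores)
```

In a full system the score map would come from the last layer of the segmentation network, and the resulting mask would be compared with the ground‐truth annotation.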

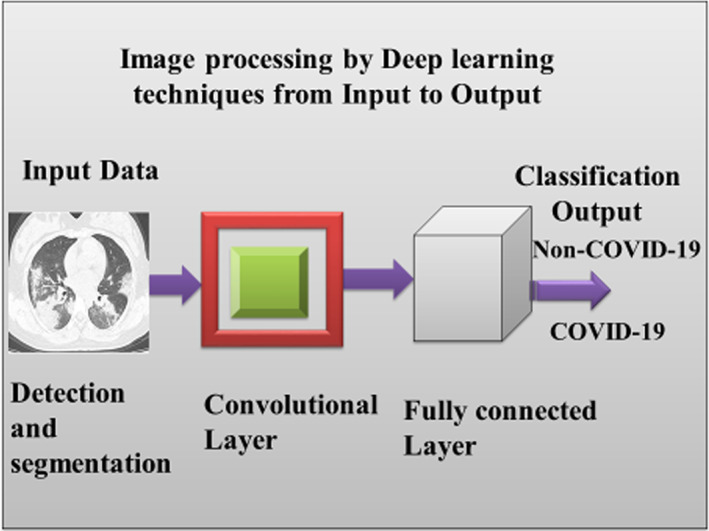

4.3. CNN for mass classification

Because the disease has no precedent, no specific COVID‐19 class is available in the literature so far. Thus, anyone who is infected and displays the specific outlined pattern is identified as a COVID‐19 patient; otherwise, the case is considered normal. To differentiate between COVID‐19 and normal, classification is performed after detection and segmentation of the infected area by a deep CNN (i.e., ConvNet; Krizhevsky et al., 2012a, 2012b), which produces the desired output of the proposed work. Generally, a CNN is a good deep learning model because it derives deep hierarchical features directly from raw input images. The CNN model contains several convolutional layers and fully connected (FC) layers, as shown in Figure 6. For our deep learning model, the CNN generates deep hierarchical features from the output of the previous stage (i.e., the segmentation stage), as in (Carneiro et al., 2017; Qiu et al., 2017; Krizhevsky et al., 2012a, 2012b). Our CNN model has five convolutional layers and two FC layers, corresponding to the convolutional and FC blocks of Figure 6 (Mugahed et al., 2018). The convolutional layers are designed as follows: (1) the first and second convolutional layers use 5 × 5 filters, with 20 and 64 filters respectively; (2) the third, fourth, and fifth convolutional layers each use 256 filters of size 3 × 3.

FIGURE 6.

CNN model for infected classification output
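
As a worked example of the layer specification above, the number of trainable weights in the five convolutional layers can be computed directly from the filter sizes and counts. This is a back‐of‐the‐envelope sketch under two assumptions that the text does not state: the input is a single‐channel (grayscale) image, and every filter has one bias term. The name `conv_param_counts` is hypothetical.

```python
# (kernel_size, number_of_filters) for the five convolutional layers in the text.
CONV_LAYERS = [(5, 20), (5, 64), (3, 256), (3, 256), (3, 256)]


def conv_param_counts(layers, in_channels=1):
    """Return the trainable parameter count of each convolutional layer.

    Each layer has kernel * kernel * input_channels * filters weights
    plus one bias per filter. in_channels=1 is an assumption (grayscale input).
    """
    counts = []
    channels = in_channels
    for kernel, filters in layers:
        counts.append(kernel * kernel * channels * filters + filters)
        channels = filters  # the next layer sees one channel per filter
    return counts


counts = conv_param_counts(CONV_LAYERS)
total = sum(counts)
```

Under these assumptions the three 3 × 3 layers dominate the count, because each of them combines a large number of input channels with 256 filters.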

Further, for better performance, local response normalization layers are used after each convolutional layer of the proposed CNN model (Badrinarayanan et al., 2016; Krizhevsky et al., 2012a, 2012b). ReLU functions are also applied after each stage of the CNN, leading to a potentially improved and faster outcome.

A separate method, used to further evaluate CT images for quantification, is discussed in the next section.

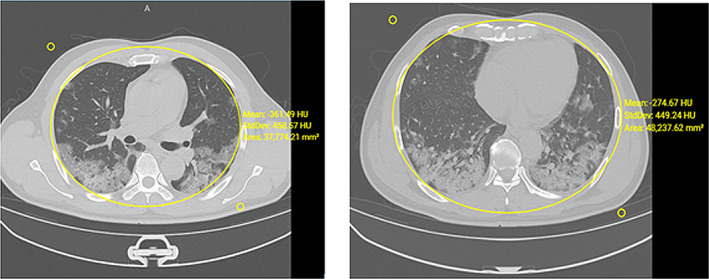

4.4. CT visual quantitative evaluation

Recent related work by Chung et al. (2020) discusses a method to determine the severity of inflammation on CT images. The approach is intended to quantify the inflammatory lung response and its manifestations due to the immune response. A similar method is also used to quantify pulmonary inflammation in terms of alveolar and pleural wall thickening, parenchymal infiltration, and membrane abnormalities, and to correlate these with the clinical classifications. Sometimes the scores of two groups overlap, namely the common (standard) type and the severe‐critical type. The characteristics of these groups are listed in Table 1. The radiological findings for one of the cases are shown in Figure 7. Such patients call for a wide‐ranging clinical evaluation.

TABLE 1.

Comparison of two major types of patients

| Common type (8 cases) | Severe‐critical type (5 cases) |
|---|---|
| Higher score | Lower score |
| 7 cases had fibrotic lesions that needed to be repaired | Fewer fibrotic lesions |
| Patients aged <70 years (range 36–65 years; average 52.5 years) | 1st patient: female with h/o nicotine use, diabetes mellitus (DM), emphysema, and mild effusion observed on radiographs; 2nd patient: geriatric female with h/o emphysema and mild effusion observed on radiographs; 3rd patient: geriatric female with h/o hypertension; 4th patient: middle‐aged male with radiological findings of progressing pathophysiological changes but no fibrosis; 5th patient: female with idiopathic causation and severe lung involvement |

FIGURE 7.

A middle‐aged male with no comorbidities and h/o onset of high temperature and cough. Hemogram shows normal leukocytic ranges with reduced lymphocytes. Radiograph shows the appearance of patchy widespread infiltration in lung parenchyma without exudates and fluid. Follow‐up CT slices after 18 days show inflammatory response and tissue consolidation

4.5. Metrics for deep learning approaches

The three deep learning models are evaluated separately as follows. Overall accuracy is used for mass detection. The dataset is used to evaluate the F1‐score and MCC, where the F1‐score corresponds to the Dice similarity coefficient. It is the harmonic mean of precision and sensitivity, with a score of 1 for perfect and 0 for worst. MCC measures the quality of classifications across classes of different sizes by taking true and false positives and negatives into account. Sensitivity, specificity, overall accuracy, Dice similarity coefficient (F1‐score), Jaccard index, and MCC are used to evaluate the proposed FrCN segmentation method against others (i.e., the fully convolutional network [FCN]). All criteria are defined as follows:

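$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{1}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{2}$$

$$\text{Overall accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

$$\text{Dice (F1‐score)} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$

$$\text{Jaccard} = \frac{TP}{TP + FP + FN} \tag{5}$$

$$\text{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{6}$$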

where TP, TN, FP, and FN denote the numbers of true positive, true negative, false positive, and false negative detections, respectively, counted per pixel. Good segmentation of the masses and the surrounding COVID‐19‐infected tissue is indicated by high sensitivity and specificity. The similarity between predicted and ground‐truth regions is measured with the Dice (F1‐score) and Jaccard metrics in terms of TP, FP, and FN pixels. Further, MCC evaluates the correlation between the segmented mass pixels and their ground truth. These metrics are applied in the experiment section.
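
The six criteria can be computed from the four pixel counts in a few lines. The sketch below uses the standard textbook definitions of these metrics; the function name `segmentation_metrics` and the toy counts are illustrative assumptions, not values from the experiments.

```python
import math


def segmentation_metrics(tp, tn, fp, fn):
    """Compute the six evaluation criteria from pixel-level counts.

    Assumes tp + fn, tn + fp, and tp + fp + fn are all non-zero.
    """
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        # MCC is conventionally set to 0 when its denominator vanishes.
        "mcc": (tp * tn - fp * fn) / mcc_denominator if mcc_denominator else 0.0,
    }


# Hypothetical counts for a 1000-pixel region (not taken from the paper).
metrics = segmentation_metrics(tp=80, tn=900, fp=10, fn=10)
```

Dice and Jaccard are monotonically related (Dice = 2·Jac / (1 + Jac)), so they rank segmentations identically; reporting both mainly eases comparison with other studies.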

5. EXPERIMENTAL RESULTS

5.1. Dataset

For the experiments, COVID‐19 data from 150 training cases and 50 test cases, taken from (Cohen et al., 2020; Jenssen, 2020; Li et al., 2020; Sajid, 2020), are evaluated with the proposed model. Both X‐ray and CT images are used. Different cases of infected lung areas at different stages of testing are considered. Because the infected areas sometimes change from day to day, images were collected over time accordingly and used in the experiments.

5.2. Experimental settings

The dataset is used for the detection, segmentation, and classification tasks; the training set contains 2794 images from 150 COVID‐19 patients, and the test set contains 1061 images from the other 50 COVID‐19 cases.

5.2.1. Experimental setup

The proposed system was run on Windows 10 with 8 GB RAM, an i5 processor, a 1 TB hard disk, the Radiology Artificial Intelligence One‐Stop Solution (RAIOSS) software, and the Python language.

5.2.2. Evaluation metrics

For the classification task, the proposed system evaluates test images from surviving and deceased COVID‐19 patients in terms of true and false outcomes, using two measures: specificity and sensitivity.

The items in Table 2 are computed according to Equations (1)–(6). Specificity and sensitivity are also used as suggested by (Liu, Wu, et al., 2020; Liu, Xu, et al., 2020). Several evaluations of COVID‐19 patients are also provided by (COVID‐19, 2020). For the segmentation task, two standard metrics are used: the Dice score (Shan et al., 2020; Chinmay, 2019) and Intersection over Union (IoU).

TABLE 2.

Mass detection over 4‐fold cross‐validation via YOLO

| Fold test | Non‐COVID‐19 (True) | Non‐COVID‐19 (False) | COVID‐19 (True) | COVID‐19 (False) | Total (True) | Total (False) |
|---|---|---|---|---|---|---|
| 1st fold | 29 (96.66%) | 1 (3.34%) | 169 (99.41%) | 1 (0.59%) | 198 (99.00%) | 2 (1.00%) |
| 2nd fold | 28 (93.33%) | 2 (6.67%) | 168 (98.82%) | 2 (1.18%) | 196 (98.00%) | 4 (2.00%) |
| 3rd fold | 29 (96.66%) | 1 (3.34%) | 169 (99.41%) | 1 (0.59%) | 198 (99.00%) | 2 (1.00%) |
| 4th fold | 29 (96.66%) | 1 (3.34%) | 169 (99.41%) | 1 (0.59%) | 198 (99.00%) | 2 (1.00%) |
| Average (%) | 95.82% | 4.17% | 99.26% | 0.73% | 98.75% | 1.25% |
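
The overall detection accuracy in the Total columns of Table 2 follows directly from the per‐fold counts. The short check below reproduces it; the variable names are illustrative, and the counts are copied from the table.

```python
# (correct detections, incorrect detections) per fold, from the Total columns.
fold_totals = [(198, 2), (196, 4), (198, 2), (198, 2)]

# Overall accuracy per fold, in percent.
per_fold_accuracy = [100 * true / (true + false) for true, false in fold_totals]

# Mean accuracy over the four folds, in percent.
mean_accuracy = sum(per_fold_accuracy) / len(per_fold_accuracy)
```

The per‐fold values are 99%, 98%, 99%, and 99%, and their mean is 98.75%, which agrees with the Average row.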

A fourfold cross‐validation test with training, validation, and test datasets is used. All models (detection, segmentation, and classification) are tested four times to obtain the overall performance of the proposed framework. The whole dataset is divided into two types, COVID‐19 and non‐COVID‐19 patient data, and is further split into three groups: training (60%), validation (10%), and testing (30%). The training dataset does not give steady accuracy for the detection, segmentation, and classification models. To avoid errors, training proceeds in three steps. In the first step, the model is trained on small batches of images, with each image used once. In the second step, the entropy loss function is used to evaluate the parameters of the deep learning model on the data (Badrinarayanan et al., 2016). In the third step, cross‐validation is used to obtain optimal parameters based on the training and validation datasets. The performance of the models is reported on the test set (Szymańska et al., 2012).
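
The 60/10/30 partition repeated over four runs can be sketched as follows. The text does not say how cases are assigned in each run, so the seeded shuffle is an assumption, as are the function name `split_cases` and the use of 200 case identifiers (chosen to match the per‐fold totals in Table 2).

```python
import random


def split_cases(case_ids, seed, train_frac=0.6, val_frac=0.1):
    """Shuffle case IDs and split them into training, validation, and test sets.

    Whatever remains after the training and validation shares becomes the
    test set (30% with the default fractions).
    """
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)  # seeded so each run is reproducible
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


# Four runs with different seeds (hypothetical way to repeat the test four times).
runs = [split_cases(range(200), seed=run) for run in range(4)]
```

Splitting by case rather than by image keeps all images of one patient in the same subset, which avoids leaking near‐duplicate slices from training into testing.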

5.2.3. Comparison methods

For the classification task, the proposed classification model is compared with and without an image mixing technique. For the segmentation task, to provide an in‐depth evaluation, the DSC model is compared with several cutting‐edge models: U‐Net for medical imaging, and DSS (Hou et al., 2019) and PoolNet (Liu et al., 2019) for saliency detection.

5.2.4. Challenges of several approaches

Because the coronavirus‐infected area in COVID‐19 images grows over time across successive tests, it is challenging to experiment with the proposed system.

5.3. Data augmentation and transfer learning

A large amount of annotated data is needed to train deep learning models, but only a small sample of COVID‐19 affected images is available here. Two techniques are used to address this challenge: data augmentation and transfer learning. Data augmentation is the process of transforming an image as needed to produce new training samples. In this part, the original COVID‐19 affected images are augmented many times over into different images using RAIOSS (Coronavirus, 2020). Ten coronavirus‐affected cases are used for the training dataset, and three images are originally collected from each patient.

Augmentation generates several different images from each original image. For example, for patient 1, the first, second, and third original images yield 301, 45, and 58 augmented images, respectively. The image counts for the other patients are outlined in Table 3. Only the data of a few patients are shown here.

TABLE 3.

Ten COVID‐19 patients with different image quantities

| Patient no. | Image of patient | SAGITTAL image | CORONAL image | Pixel size image | Total |
|---|---|---|---|---|---|
| 1 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 58 | 45 | 301 | 404 |
| 2 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 53 | 57 | 200 | 310 |
| 3 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 66 | 76 | 200 | 342 |
| 4 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 50 | 51 | 301 | 402 |
| 5 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 71 | 50 | 301 | 422 |
| 6 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 62 | 64 | 213 | 339 |
| 7 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 62 | 58 | 249 | 369 |
| 8 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 63 | 61 | 301 | 425 |
| 9 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 59 | 43 | 256 | 358 |
| 10 | Original image | 1 | 1 | 1 | 3 |
| | Augmented image | 48 | 58 | 301 | 407 |
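To make the idea of generating many augmented images from one original concrete, the following is a minimal Python sketch. The transformations used by RAIOSS are not described in this paper, so the rotations, flips, and intensity scaling below, as well as the function name `augment`, are purely illustrative assumptions.

```python
import numpy as np

def augment(image, n_out, seed=0):
    """Generate n_out randomly transformed copies of a 2-D image."""
    rng = np.random.default_rng(seed)
    augmented = []
    for _ in range(n_out):
        # Rotate by 0, 90, 180, or 270 degrees (assumed transformation).
        out = np.rot90(image, k=int(rng.integers(0, 4)))
        if rng.random() < 0.5:
            out = np.fliplr(out)  # horizontal flip (assumed transformation)
        # Mild intensity scaling so that repeated geometric variants differ.
        out = out * rng.uniform(0.9, 1.1)
        augmented.append(out)
    return augmented

# Stand-in for one square CT slice; real data would be loaded from file.
original = np.random.default_rng(1).normal(size=(64, 64))
images = augment(original, n_out=45)  # 45 matches one count in Table 3
print(len(images), images[0].shape)  # 45 (64, 64)
```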

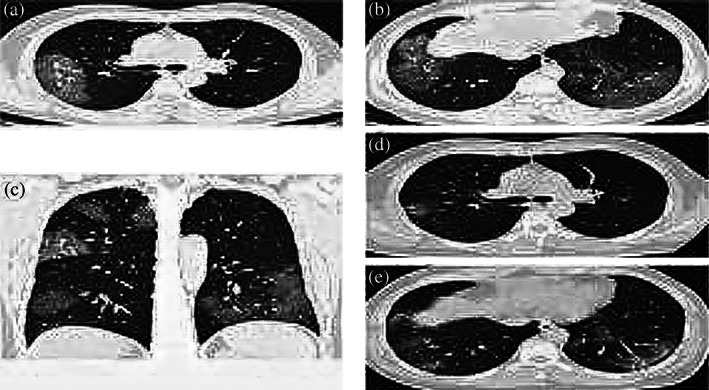

Figure 8 is obtained from the process outlined in Figure 5, which is replicated for all images by the proposed deep learning models for detection, segmentation, and classification. In this way, the clinical prognosis of COVID‐19 affected patients can be evaluated. Two parameter settings are considered for the deep learning models: random initialization and transfer learning. With random initialization, the parameters of the deep models are initialized randomly using the mean, standard deviation, and affected area of the lungs. Meanwhile, the identification area of the images is developed by the network biases of the convolutional and fully connected (FC) layers. After that, transfer learning is used to process an extensive dataset for the deep learning models, and the augmented data is then used for the proposed model. Transfer learning is thus applied to analyse the images of COVID‐19 infected patients with the proposed system.

FIGURE 8.

Augmented images of COVID‐19 patients with a mean and standard deviation of rounded area

5.4. Performance of deep learning

The current system considers deep learning techniques in detection, segmentation, classification, and quantification, which are explained below.

5.4.1. Performance of detection via YOLO

Here, all images are resized from their different original sizes to 400 × 400. A mass is considered correctly detected based on the extracted bounding region and the ground‐truth bounding region of the mass. False‐positive detections are removed before the segmentation and classification models are applied, because no ground truth is available for falsely identified masses; this makes it possible to compute the evaluation metrics. Thus, the segmentation and classification models are evaluated without the falsely detected regions. The fourfold cross‐validation performance for mass detection is reported in Table 2. Ground‐truth detection is considered for each test fold, and the YOLO detector is run on each test fold.

Initially, images of different origins are collected and resized as required without losing the coronavirus‐infected area. Augmenting the images also helps the infected area to be detected accurately. Moreover, false‐positive ROIs are discarded before the segmentation and classification stages of the proposed model. The performance of the detection method over the test datasets is shown in Figures 10 and 11. In these figures, the detection results show the potential ROIs in three stages: original, ROI, and invert. In all tests, the detected areas are correct whenever an infected area is identified. The detected area is also evaluated through statistical measurements such as the mean and standard deviation of the infected area, as shown in Table 4. This table outlines reference values used to identify COVID‐19 patients and reports the statistical experimental information of only three patients out of the extensive information available for all patients. False detections are also identified automatically by this detection technique: a non‐infected area is flagged as a false detection, which reflects the consistent performance of the YOLO detector. Thus, the YOLO detector performs well in terms of accuracy.
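One common way to decide whether a detected bounding region matches its ground truth is the intersection over union (IoU) of the two boxes. The sketch below illustrates this criterion; the box format, the function names, and the 0.5 threshold are assumptions, since the matching rule used in this paper is not stated explicitly.

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Overlap is zero when the boxes do not intersect.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_correct_detection(pred_box, truth_box, iou_threshold=0.5):
    """Assumed rule: a detection is correct if IoU reaches the threshold."""
    return box_iou(pred_box, truth_box) >= iou_threshold

# Hypothetical boxes in a 400 x 400 image.
truth = (100, 100, 200, 200)
good = (110, 105, 205, 195)   # overlaps most of the ground truth
bad = (150, 150, 250, 250)    # overlaps only one corner
print(is_correct_detection(good, truth), is_correct_detection(bad, truth))  # True False
```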

TABLE 4.

Details of the infected area of three COVID‐19 patients

| Patient no. | Age | Gender | Type of image | No. of images | Pixel | Thick | Wide & Length | Total: mean, Std Dev, area of image | Left: mean, Std Dev, area of infected image | Right: mean, Std Dev, area of infected image |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 61 | Female | 1.0 × 1.0 | 301 | 512 × 512 | 1.00 mm spacing | 1400 × 40 | −385.87, 433.41, 41,547.99 | −707.07, 233.21, 4,758.51 | −657.17, 313.50, 5,675.96 |
| | | | CORONAL | 45 | 512 × 371 | 0.81 mm spacing | 400 × 37 | −373.83, 430.84, 52,253.88 | −517.13, 385.72, 12,167.18 | −615.24, 325.10, 12,409.70 |
| | | | SAGITTAL | 58 | 840 × 430 | 1.00 mm spacing | 400 × 40 | −757.63, 187.55, 13,914.95 | −735.47, 220.38, 9,338.44 | |
| 2 | 47 | Male | 1.5 × 1.5 | 200 | 512 × 512 | 1.00 mm spacing | 1400–400 | −404.62, 449.05, 47,840.65 | −591.38, 364.92, 12,734.38 | −489.00, 411.63, 13,538.52 |
| | | | CORONAL | 57 | 512 × 438 | 0.68 | 1400–400 | −443.71, 462.89, 48,299.46 | −622.01, 341.13, 16,471.73 | −710.33, 223.92, 4,263.27 |
| | | | SAGITTAL | 53 | 512 × 438 | 0.68 | 1400–400 | −542.95, 392.93, 30,679.70 | | |
| 3 | 50 | Male | 1.5 × 1.5 | 200 | 512 × 512 | 1.5 | 1400–400 | −359.04, 433.74, 38,818.34 | −451.85, 352.53, 8,286.58 | −327.93, 374.60, 5,029.44 |
| | | | CORONAL | 76 | 512 × 406 | 0.74 | −1400–400 | −234.70, 439.07, 42,520.89 | −402.99, 332.79, 11,708.09 | −322.56, 318.96, 8,686.86 |
| | | | SAGITTAL | 66 | 512 × 406 | 0.74 | −1400–400 | −471.41, 421.17, 27,064.67 | −340.21, 321.91, 6,847.29 | |
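Statistics of the kind listed in Table 4 (mean, standard deviation, and area of a region) can be computed from an image and a binary region mask as in the following sketch. The function name `roi_statistics`, the use of pixel spacing to convert pixel counts into an area, and the sample values are assumptions; the exact computation performed by RAIOSS is not described here.

```python
import numpy as np

def roi_statistics(image, mask, pixel_spacing=(1.0, 1.0)):
    """Return (mean, standard deviation, area) of the pixels selected by mask."""
    image = np.asarray(image, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        raise ValueError("mask selects no pixels")
    values = image[mask]
    # Area = number of pixels times the area of one pixel (assumed in mm^2).
    area = mask.sum() * pixel_spacing[0] * pixel_spacing[1]
    return float(values.mean()), float(values.std()), float(area)

# Hypothetical 2 x 2 patch of intensities with a three-pixel region of interest.
image = np.array([[-700.0, -650.0], [-800.0, 40.0]])
mask = np.array([[True, True], [True, False]])
mean, std, area = roi_statistics(image, mask)
print(round(mean, 2), round(std, 2), area)  # -716.67 62.36 3.0
```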

5.4.2. Segmentation performance

In this part, only correctly detected masses are used, and fourfold cross‐validation is performed on all segmentation models. Cross‐entropy is used as the loss function, computed over the pixels during training. The segmentation performance of the proposed FrCN is better than that of FCN. The quantitative evaluation is carried out per pixel of the segmented map.
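As an illustration of a per‐pixel cross‐entropy loss for a two‐class segmentation map, a minimal sketch follows. It shows the standard binary cross‐entropy averaged over all pixels; the function name and the clipping constant `eps` are assumptions, and the actual loss configuration of the FrCN is not given in this section.

```python
import numpy as np

def pixelwise_cross_entropy(prob, truth, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and a 0/1 mask."""
    # Clip probabilities to avoid log(0).
    prob = np.clip(np.asarray(prob, dtype=float), eps, 1.0 - eps)
    truth = np.asarray(truth, dtype=float)
    loss = -(truth * np.log(prob) + (1.0 - truth) * np.log(1.0 - prob))
    return float(loss.mean())

# Hypothetical 2 x 2 prediction and ground-truth mask (1 = infected pixel).
prob = np.array([[0.9, 0.2], [0.8, 0.1]])
truth = np.array([[1, 0], [1, 0]])
loss = pixelwise_cross_entropy(prob, truth)
print(round(loss, 4))  # 0.1643
```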

With the multi‐scale data augmentation strategy, the boosted DSC obtains further improvements on the Dice score and IoU. PoolNet (Liu et al., 2019) also obtained comparable results on the three metrics. U‐Net performs better than DSS in terms of IoU, though they are comparable on the Dice score. The other competitors produce inaccurate or even wrong predictions of the lesion areas in the CT images of mild, medium, and severe COVID‐19 infections, whereas the proposed segmentation model correctly discovers the whole lesion areas at all levels of COVID‐19 infection. In this part, the FrCN model is used for pixel‐wise segmentation of the infected area in CT images. To assess stability, a statistical analysis of the segmentation model is performed on our segmentation test set.

Further, the correlation between the Dice score of our model and the infected areas of the CT images is considered, as is the relationship between the lesion count of each slice and the Dice score from different sections. The probability distribution of the Dice score is little affected by the lesion count in a CT image. The consistently promising performance on segmenting lesion areas and the low probability of failure confirm the stability of our segmentation model.
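The Dice score and IoU used above can be computed from a predicted and a ground‐truth binary mask as in the following sketch. The function name and the convention that two empty masks count as a perfect match are assumptions made for illustration.

```python
import numpy as np

def dice_and_iou(pred, truth):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    total = pred.sum() + truth.sum()
    # Assumed convention: two empty masks count as a perfect match.
    dice = 2.0 * intersection / total if total > 0 else 1.0
    iou = intersection / union if union > 0 else 1.0
    return float(dice), float(iou)

# Hypothetical 2 x 3 masks (1 = infected pixel).
pred = np.array([[1, 1, 0], [0, 1, 0]])
truth = np.array([[1, 0, 0], [0, 1, 1]])
dice, iou = dice_and_iou(pred, truth)
print(round(dice, 4), iou)  # 0.6667 0.5
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank models identically on a single image, but their averages over a test set can differ.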

5.4.3. Performance of classification

This part uses the CNN model to obtain sound output from deep hierarchical features. The CT images are analysed up to a certain level to locate the exact area infected by the virus. The classification models trained with and without image mixing are shown in Figure 7. Activation mapping (AM) is applied to the classification models trained with random horizontal flip and random crop. The resulting activation map not only covers the lesion areas but also highlights unrelated areas, which indicates that the classification model is biased towards non‐lesion areas.

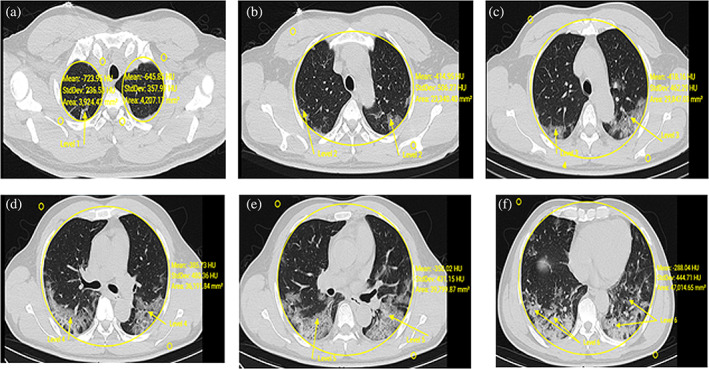

The proposed classification model provides more precise locations of the lesion areas. A threshold value is set for the affected area in the COVID‐19 patient image: when the affected area in the CT images of a suspected patient is larger than the threshold, the patient is diagnosed as COVID‐19 positive. Changing the threshold allows the proposed model to trade off sensitivity against specificity. After detection, segmentation, and classification, the statistical evaluation is made as per the infected area, as shown in Figure 9a–f.
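The effect of the area threshold on sensitivity and specificity can be illustrated with a short sketch. The areas, labels, and threshold values below are hypothetical and serve only to show the trade‐off; they are not taken from the experiments.

```python
def sensitivity_specificity(areas, labels, threshold):
    """Diagnose positive when area > threshold; return (sensitivity, specificity)."""
    tp = fn = tn = fp = 0
    for area, label in zip(areas, labels):
        predicted_positive = area > threshold
        if label == 1:
            tp += predicted_positive
            fn += not predicted_positive
        else:
            fp += predicted_positive
            tn += not predicted_positive
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity

# Hypothetical affected areas and true labels (1 = COVID-19 positive).
areas = [0, 120, 900, 4500, 12000, 300]
labels = [0, 0, 1, 1, 1, 0]

for threshold in (100, 500, 1000):
    sen, spe = sensitivity_specificity(areas, labels, threshold)
    print(threshold, round(sen, 2), round(spe, 2))
```

In this toy example, a low threshold (100) keeps sensitivity at 1.0 but lowers specificity, while a high threshold (1000) does the opposite; the intermediate value separates the two groups.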

FIGURE 9.

Detection, segmentation, classification, and statistical evaluation of COVID‐19 patient

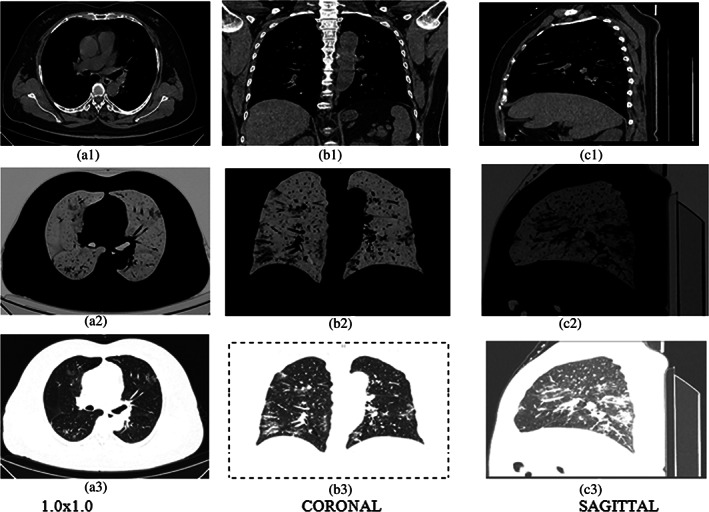

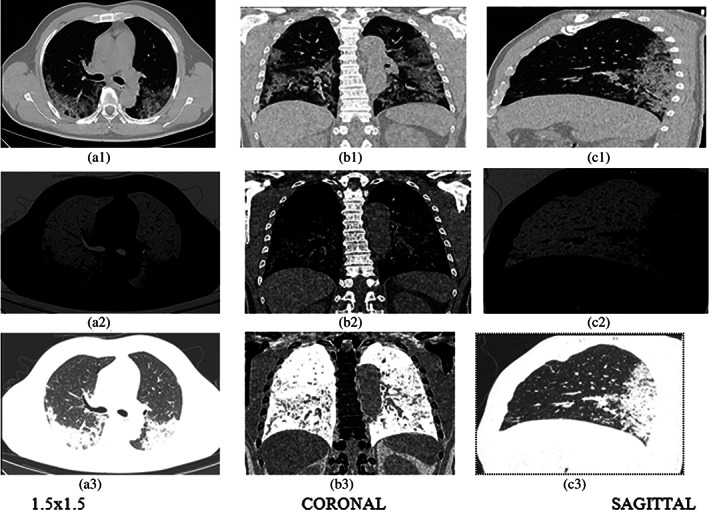

The infected area is characterized by the mean and standard deviation of the affected image, as shown in Figure 9 and in Table 4. Further, three kinds of images of a single patient are considered, namely pixel‐level, CORONAL, and SAGITTAL images, as shown in Figures 10 and 11. Each image is also carefully tested through RAIOSS to identify and classify its infected area.

FIGURE 10.

Three types of images: (a) 1.0 × 1.0, (b) CORONAL, (c) SAGITTAL. First row: original; second row: ROI; third row: invert, for the 61‐year‐old patient

FIGURE 11.

Three types of images: (a) 1.5 × 1.5, (b) CORONAL, (c) SAGITTAL. First row: original; second row: ROI; third row: invert, for the 50‐year‐old patient

The statistical measurement of the infected area is carried out with the artificial intelligence tool RAIOSS. It is used to determine whether the patient is suffering from a mild, moderate, or severe form of the disease and to predict the clinical outcome.

In this part, all segmented masses are resized to 50 × 50 following Dhungel et al. (2017). All segmented masses are used for the classification process with the same test folds. Classification is performed with the CNN shown in Figure 6. Classification performance is computed in terms of Sen., Spe., Acc., MCC, and F1‐score, with and without mass segmentation, as shown in Table 5. According to Table 5, every metric is better with mass segmentation than without it. Classification performance improves when the false positives and false negatives are minimized, as in Table 5.

TABLE 5.

Comparison of classification performance (%) over 4‐fold cross‐validation of test datasets

| Test fold | Sen. (without mass segmentation) | Spe. (without) | Acc. (without) | MCC (without) | F1‐score (without) | Sen. (with mass segmentation) | Spe. (with) | Acc. (with) | MCC (with) | F1‐score (with) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1st fold | 93.33 | 98.82 | 98.0 | 92.15 | 93.33 | 96.66 | 99.41 | 99.0 | 96.07 | 96.66 |
| 2nd fold | 90.0 | 98.23 | 97.0 | 88.23 | 90.0 | 93.33 | 98.82 | 99.0 | 92.15 | 93.33 |
| 3rd fold | 93.33 | 98.82 | 98.0 | 92.15 | 93.33 | 96.66 | 99.41 | 99.0 | 96.07 | 96.66 |
| 4th fold | 93.33 | 98.82 | 98.0 | 92.15 | 93.33 | 96.66 | 99.41 | 99.0 | 96.07 | 96.66 |
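The metrics in Table 5 can be derived from the four confusion counts using their standard definitions, as in the sketch below. The assignment of the first‐fold counts from Table 2 to TP, FN, TN, and FP (taking the 30‐image class as the positive class) is an assumption, chosen because it gives the first‐fold "with mass segmentation" values up to rounding.

```python
import math

def classification_metrics(tp, fn, tn, fp):
    """Standard sensitivity, specificity, accuracy, MCC, and F1-score."""
    sen = tp / (tp + fn)
    spe = tn / (tn + fp)
    acc = (tp + tn) / (tp + fn + tn + fp)
    f1 = 2 * tp / (2 * tp + fp + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else 0.0
    return {"Sen.": sen, "Spe.": spe, "Acc.": acc, "MCC": mcc, "F1-score": f1}

# Assumed mapping of the first-fold counts in Table 2 to confusion counts.
metrics = classification_metrics(tp=29, fn=1, tn=169, fp=1)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

With these counts the sketch prints 96.67%, 99.41%, 99.00%, 96.08%, and 96.67%, which agree with the first‐fold row of Table 5 when the tabulated values are read as truncated rather than rounded.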

If the statistical measurement of the affected area in the image is small, it is inferred that the disease has affected only a small lung volume and can be cured with symptomatic treatment as guided by health authorities. In advanced stages of the disease, the affected areas in the images show extensive involvement, and the statistical measurements show higher deviations. Such patients are inferred to be at risk of secondary complications and hence require advanced care support and close monitoring. The proposed segmentation model is further employed to discover the lesion areas in the CT images of COVID‐19 patients. Thus, the above model is evaluated through detection, segmentation, and classification, and is only partially successful because of the limited information collected from several resources.

5.4.4. CT visual quantitative evaluation

Similar to Chung et al. (2020), radiologists verified all collected images and independently re‐examined them based on the clinical information to generate the percentage of involvement of each lobe, together with a whole‐lung “total severity score (TSS)”.

The lobar involvement and classified name for each of the five lung lobes are given in Table 6. An experienced thoracic radiologist conducted a manual observation and consolidated the final score. Each lobe score is generated from the acute lung inflammatory lesions involving that lobe, and the TSS is calculated by summing the five lobe scores (range: 0–20) (COVID‐19, 2020).

TABLE 6.

CT visual quantitative evaluation is designed through summing of five lobe scores

| Score | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| Percentage of the lobar involvement | 0% | (1–25)% | (26–50)% | (51–75)% | (76–100)% |
| Classified name | None | Minimal | Mild | Moderate | Severe |
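The scoring rule of Table 6 and the summation into the TSS can be written as a short sketch. The function names and the example percentages are hypothetical, and treating the upper bound of each range as inclusive (so that fractional values such as 25.5% fall into the next band) is an assumption, since Table 6 lists whole percentages only.

```python
def lobe_score(involvement_percent):
    """Map the percentage of lobar involvement to a score of 0-4 (Table 6)."""
    if not 0 <= involvement_percent <= 100:
        raise ValueError("involvement must be between 0 and 100 percent")
    if involvement_percent == 0:
        return 0  # None
    if involvement_percent <= 25:
        return 1  # Minimal
    if involvement_percent <= 50:
        return 2  # Mild
    if involvement_percent <= 75:
        return 3  # Moderate
    return 4      # Severe

def total_severity_score(lobe_percentages):
    """Sum the scores of the five lung lobes (range 0-20)."""
    if len(lobe_percentages) != 5:
        raise ValueError("expected one percentage for each of the five lobes")
    return sum(lobe_score(p) for p in lobe_percentages)

# Hypothetical involvement of the five lobes, in percent.
tss = total_severity_score([0, 10, 30, 60, 80])
print(tss)  # 10
```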

Accuracy is the most intuitive performance measure: it is the ratio of correctly predicted observations to total observations. A model with high accuracy performs well, and accuracy depends on the numbers of true positives, true negatives, false positives, and false negatives. Similarly, sensitivity measures the proportion of actual positives that are correctly identified by the diagnostic test. Both are vital measurements of a diagnostic test for disease‐affected patients.

Not all practical information for the patients mentioned in Section 5.2 is reported, and not all experimental images of all patients are shown, in order to save space. Readers can follow the strategies and techniques of our model and implement them as they choose.

The proposed model is built for COVID‐19 patients and detects the infected area of the respiratory system, including the lungs. Because it is very difficult to determine whether a patient is infected by the coronavirus, developing the proposed model was a challenging task. Testing the model on CT scans or X‐rays of patient lungs was a further challenge because the infected area of the lungs changes over time, particularly for seriously ill patients. For many such patients, no conclusion about recovery could be drawn, because the infected area of the lungs and of other parts of the body kept increasing and decreasing, which is life‐threatening for the patient. Nevertheless, the proposed model attempts to identify the infected area of the patient. A comparison of complexity is not considered because no algorithm is presented in this paper. This paper considers only the proposed model and its experiments; it does not cover further mathematical models and their descriptions. The model is confined to testing COVID‐19 patients, and its robustness beyond this setting is not evaluated.

6. CONCLUSIONS

This paper presents a COVID‐19 diagnosis system based on deep learning techniques for detection, segmentation, and classification in a single framework. The proposed diagnosis system detects the COVID‐19 infected area in the respiratory system; in particular, it detects the coronavirus‐infected area and its location in X‐ray or CT scan images of the lungs. YOLO‐based deep learning detects several locations of the infected area. Thus, the proposed deep learning technique helps identify COVID‐19 patients and provides image‐based quantification of the pathological area. Three kinds of images are tested: pixel‐level, CORONAL, and SAGITTAL images. Statistical measurement is used to find the infected area in each image. High consistency and high diagnostic ability are considered for the visual, quantitative analysis of CT images that replicates the clinical severity classification of COVID‐19. This paper helps to determine whether a patient is suffering from COVID‐19 and, if so, the extent of pathological involvement in the lung tissue. Key limitations of the current approach include mutation‐induced variations in clinical symptoms and the image patterns associated with them. Hence, it is imperative to accommodate such evolving clinical symptoms and image patterns and to detect such variations accurately, which will be addressed in future work.

CONFLICT OF INTEREST

The authors declare that they have no conflict of interest.

Biographies

Hemanta Kumar Bhuyan received the PhD degree in Computer Science and Engineering from Sikshya ‘O’ Anusandhan (SOA) University, Odisha, India, and the MTech degree from Utkal University, Odisha, India, in 2016 and 2005, respectively. He is currently working as an associate professor in the Department of Information Technology at Vignan's Foundation for Science, Technology & Research (Deemed to be University), Andhra Pradesh, India. He has published many research papers in SCIE‐indexed journals of repute from Elsevier, Springer, and IEEE Transactions. He has also published in many Elsevier, Springer, and IEEE conference proceedings and edited book chapters. His research interests include data mining, big data analytics, machine learning, fuzzy systems, and image processing.

Dr. Chinmay Chakraborty is an assistant professor in the Electronics and Communication Engineering, Birla Institute of Technology, Mesra, India. His main research interests include the internet of medical things, wireless body sensor networks, wireless networks, telemedicine, m‐Health/e‐health, and medical imaging. Dr. Chakraborty has published more than 120 papers at reputed international journals, conferences, book chapters, more than 20 books and more than 16 special issues. He is an editorial board member in the different journals and conferences. He is serving as a guest editor of MDPI‐Future Internet Journal, Wiley‐Internet Technology Letters, Springer‐Annals of Telecommunications, Springer‐International Journal of System Assurance Engineering and Management, Springer‐Environment, Development, and Sustainability, Wiley‐Business and Society Review, and lead guest editors of IEEE‐JBHI, Hindawi‐Journal of Health Engineering, Mary Ann Liebert‐Big Data Journal, IGI‐International Journal of E‐Health and Medical Communications, Springer‐Multimedia Tools and Applications, TechScience CMC, Springer‐Interdisciplinary Sciences: Computational Life Sciences, Inderscience‐International Journal of Nanotechnology, BenthamScience‐Current Medical Imaging, Journal of Medical Imaging and Health Informatics, lead series editor of CRC‐Advances in Smart Healthcare Technologies, and also associate editor of International Journal of End‐User Computing and Development, Journal of Science & Engineering, International Journal of Strategic Engineering, and has conducted a session of SoCTA‐19, ICICC‐2019, Springer CIS 2020, SoCTA‐20, SoCPaR 2020, and also a reviewer for international journals including IEEE Access, IEEE Sensors, IEEE Internet of Things, Elsevier, Springer, Taylor & Francis, IGI, IET, TELKOMNIKA Telecommunication Computing Electronics and Control, and Wiley. 
Dr Chakraborty is co‐editing several books on Smart IoMT, Healthcare Technology, and Sensor Data Analytics with Elsevier, CRC Press, IET, Pan Stanford, and Springer. He has served as a publicity chair member at renowned international conferences including IEEE Healthcom, IEEE SP‐DLT. Dr Chakraborty is a member of Internet Society, Machine Intelligence Research Labs, and Institute for Engineering Research and Publication. He received a Best Session Runner‐up Award, Young Research Excellence Award, Global Peer Review Award, Young Faculty Award, and Outstanding Researcher Award.

Dr. Yogesh Shelke has more than a decade of experience spanning roles from IP researcher to innovation consulting and med‐tech advisory for leading healthcare companies, helping to drive their future R&D strategies. Starting his career as a physician in tertiary care delivery centers, he has been acknowledged for his expertise in emerging healthcare trends such as the Internet of Medical Things, artificial intelligence and machine learning for healthcare applications, data analytics in care delivery, and product development in the digital healthcare domain. He has been associated with industry‐academic collaborations, journal editorials, conferences, and strategic research initiatives from healthcare organizations.

Subhendu Kumar Pani received the PhD degree from Utkal University, Odisha, India, in 2013. He is currently working as Principal at Krupajal Computer Academy (KCA), Bhubaneswar. He has published 51 international journal articles (25 Scopus‐indexed). His professional activities include roles as book series editor (CRC Press, Apple Academic Press, and Wiley‐Scrivener), associate editor, editorial board member, and/or reviewer of various international journals. He is associated with a number of conference societies. He has more than 150 international publications, five authored books, 15 edited and upcoming books, and 20 book chapters to his credit. He has more than 17 years of teaching and research experience. In addition to research, he has guided 31 MTech students and two PhD students. His research interests include data mining, big data analysis, web data analytics, fuzzy decision making, and computational intelligence. He is a fellow of SSARS, Canada, and a life member of IE, ISTE, ISCA, OBA, OMS, SMIACSIT, SMUACEE, and CSI. He was a recipient of five researcher awards.

Bhuyan, H. K., Chakraborty, C., Shelke, Y., & Pani, S. K. (2022). COVID‐19 diagnosis system by deep learning approaches. Expert Systems, 39(3), e12776. 10.1111/exsy.12776

Correction added on 22 September 2021 after first online publication: The fourth author's name has been corrected in this version.

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

REFERENCES

- Ai, T. , Yang, Z. , Hou, H. , Zhan, C. , Chen, C. , Lv, W. , Tao, Q. , Sun, Z. , & Xia, L. (2020). Correlation of chest CT and RT‐PCR testing in coronavirus disease 2019 (COVID‐19) in China: A report of 1014 cases. Radiology, 296(2), E32–E40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ali, L. , Zhu, C. , Zhang, Z. , & Liu, Y. (2019). Automated detection of Parkinson's disease based on multiple types of sustained phonations using linear discriminant analysis and genetically optimized neural network. IEEE Journal of Translational Engineering in Health and Medicine, 7, 1–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ali, L. , Zhu, C. , Zhou, M. , & Liu, Y. (2019). Early diagnosis of Parkinson's disease from multiple voice recordings by simultaneous sample and feature selection. Expert Systems with Applications, 137, 22–28. [Google Scholar]

- Badrinarayanan, V. , Kendall, A. , & Cipoll, R. (2016). SegNet: A deep convolutional encoder‐decoder architecture for image segmentation, arXiv:1511.00561. [DOI] [PubMed]

- Bhuyan, H. K. , & Kamila, N. K. (2014). Privacy preserving sub‐feature selection based on fuzzy probabilities. Cluster Computing, 17(4), 1383–1399. [Google Scholar]

- Bhuyan, H. K. , & Kamila, N. K. (2015). Privacy preserving sub‐feature selection in distributed data mining. Applied Soft Computing, 36, 552–569. [Google Scholar]

- Bhuyan, H. K. , Kamila, N. K. , & Jena, L. D. (2016). Pareto‐based multi‐objective optimization for classification in data mining. Cluster Computing, 19(4), 1723–1745. [Google Scholar]

- Carneiro, G. , Nascimento, J. , & Bradley, A. P. (2017). Automated analysis of unregistered multiview mammograms with deep learning. IEEE Transactions on Medical Imaging, 36(11), 2355–2365. [DOI] [PubMed] [Google Scholar]

- Chinmay, C. (2019). Performance analysis of compression techniques for chronic wound image transmission under smartphone‐enabled tele‐wound network. International Journal of E‐Health and Medical Communications, 10(2), 1–15. 10.4018/IJEHMC.2019040101 [DOI] [Google Scholar]

- Chinmay, C. , & Arij, N. A. (2021). Intelligent internet of things and advanced machine learning techniques for COVID‐19. EAI Endorsed Transactions on Pervasive Health and Technology, 21, 1–14. [Google Scholar]

- Chung, M. , Bernheim, A. , Mei, X. , Zhang, N. , Huang, M. , Zeng, X. , Cui, J. , Xu, W. , Yang, Y. , Fayad, Z. A. , Jacobi, A. , Li, K. , Li, S. , & Shan, H. (2020). CT imaging features of 2019 novel coronavirus (2019‐nCoV). Radiology, 295, 202–207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen, J. P. , Morrison, P. , & Dao, L. (2020). COVID‐19 image data collection. arXiv:2003.11597. https://github.com/ieee8023/covid-chestxray-dataset

- Coronacases . (2020). Coronavirus is helping radiologists in more than 100 countries. https://coronacases.org/forum/coronacases-org-helping-radiologists-to-help-people-in-more-than-100-countries-1

- COVID‐19 . (2020, April 05). COVID‐19 CT segmentation dataset . http://medicalsegmentation.com/COVID-19/

- Dhungel, N. , Carneiro, G. , & Bradley, A. P. (2017). A deep learning approach for the analysis of masses in mammograms with minimal user intervention. Medical Image Analysis, 37(1), 114–128. [DOI] [PubMed] [Google Scholar]

- Farooq, M. , & Hafeez A. (2020). Covid‐resnet: A deep learning framework for screening of COVID‐19 from radiographs, arXiv:2003.14395.

- Fu, F. , Lou, J. , Xi, D. , Bai, Y. , Ma, G. , Zhao, B. , Liu, D. , Bao, G. , Lei, Z. , & Wang, M. (2020). Chest computed tomography findings of coronavirus disease 2019(COVID‐19) pneumonia. European Radiology, 30(10), 1–10. 10.1007/s00330-020-06920-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- He, K. , Zhang, X. , Ren, S. , & Sun, J . (2016). Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, 770–778.

- Hou, Q. , Cheng, M.‐M. , Hu, X. , Borji, A. , Tu, Z. , & Torr, P. (2019). Deeply supervised salient object detection with short connections. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(4), 815–828. [DOI] [PubMed] [Google Scholar]

- Huang, Z. , Zhao, S. , Li, Z. , Chen, W. , Zhao, L. , Deng, L. , & Song, B. (2020). The battle against coronavirus disease 2019 (COVID‐19): Emergency management and infection control in a radiology department. Journal of the American College of Radiology, 17(6), 710–716. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iwendi, C. , Bashir, A. K. , Peshkar, A. , Sujatha, R. , Chatterjee, J. M. , Pasupuleti, S. , Mishra, R. , Pillai, S. , & Jo, O. (2020). COVID‐19 patient health prediction using boosted random Forest algorithm. Frontiers in Public Health, 8, 1–9. 10.3389/fpubh.2020.00357 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iwendi, C. , Moqurrab, S. A. , Anjum, A. , Khan, S. , Mohan, S. , & Srivastava, G. (2020). N‐sanitization: A semantic privacy‐preserving framework for unstructured medical datasets. Journal: Computer Communications, 161, 160–171. [Google Scholar]

- Jenssen, H. B. (2020, April 15). COVID‐19 CT segmentation dataset . http://medicalsegmentation.com/COVID-19/

- Khattab, A. , & Youssry, N. (2020). Machine learning for IoT systems. In Internet of Things (IoT) (pp. 105–127). Springer. [Google Scholar]

- Krizhevsky, A. , Sutskever, I. , & Hinton, G. E. (2012a). ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25 (pp. 1097–1105). Curran Associates, Inc. [Google Scholar]

- Krizhevsky, A. , Sutskever, I. , & Hinton, G. E . (2012b). ImageNet classification with deep convolutional neural networks. 25th International Conference on Neural Information Processing Systems, USA. 1097–1105.

- Lalit, G. , Emeka, C. , Nasser, N. , Chinmay, C. , & Garg, G. (2020). Anonymity preserving IoT‐based COVID‐19 and other infectious disease contact tracing model. IEEE Access, 8, 159402–159414. 10.1109/ACCESS.2020.3020513 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li, K. , Fang, Y. , Li, W. , Pan, C. , Qin, P. , Zhong, Y. , Liu, X. , Huang, M. , Liao, Y. , & Li, S. (2020). Ct image visual quantitative evaluation and clinical classification of coronavirus disease (COVID‐19). European Radiology, 30, 4407–4416. 10.1007/s00330-020-06817-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu, K.‐C. , Xu, P. , Lv, W.‐F. , Qiu, X.‐H. , Yao, J.‐L. , Gu, J.‐F. , & Wei, W. (2020). CT manifestations of coronavirus disease‐2019: A retrospective analysis of 73 cases by disease severity. European Journal of Radiology, 126, 108941. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu, Q. , Hou, Q. , Cheng, M.‐M. , Feng, J. , & Jiang, J. (2019). A simple poolingbased design for real‐time salient object detection. IEEE Conference of Computer Vision and Pattern Recognition. 3917–3926.

- Liu, Y. , Wu, Y.‐H. , Ban, Y. , Wang, H. , & Cheng, M.‐M . (2020). Rethinking computer‐aided tuberculosis diagnosis. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2643–2652.

- Mugahed, A. A.‐a. , Mohammed, A. A.‐m. , Mun‐Taek, C. , Han, S. , & Kim, T. (2018). A fully integrated computer‐aided diagnosis system for digital X‐ray mammograms via deep learning detection, segmentation, and classification. International Journal of Medical Informatics, 117, 44–54. 10.1148/radiol.2020200230 [DOI] [PubMed] [Google Scholar]

- Muhammad, L. J. , Ebrahem, A. A. , Sani, S. U. , Abdulkadir, A. , Chinmay, C. , & Mohammed, I. A. (2020). Supervised machine learning models for prediction of COVID‐19 infection using epidemiology dataset. SN Computer Science, 2(11), 1–13. 10.1007/s42979-020-00394-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Qiu, Y. , Yan, S. , Gundreddy, R. R. , Wang, Y. , Cheng, S. , Liu, H. , & Zheng, B. (2017). A new approach to develop computer‐aided diagnosis scheme of breast mass classification using deep learning technology. Journal of X‐Ray Science and Technology, 25(5), 751–763. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rajinikanth, V. , Dey, N. , Raj, A. N. J. , Hassanien, A. E. , Santosh, K. C. , & Raja, N. S. M. (2020). Harmony‐search and otsu based system for coronavirus disease (COVID‐19) detection using lung CT scan images. arXiv:2004.03431.

- Roberts, M. , Driggs, D. , Thorpe, M. , Gilbey, J. , Yeung, M. , Ursprung, S. , Aviles‐Rivero, A. I. , Etmann, C. , McCague, C. , Beer, L. , Weir‐McCall, J. R. , Teng, Z. , Rudd, J. H. F. , Sala, E. , & Schönlieb, C.‐B. (2020). Machine learning for COVID‐19 detection and prognostication using chest radiographs and CT scans: A systematic methodological review. arXiv:2008.06388.

- Roerdink, J. B., & Meijster, A. (2000). The watershed transform: Definitions, algorithms and parallelization strategies. Fundamenta Informaticae, 41, 187–228.

- Sajid, N. (2020, April 10). COVID‐19 patients lungs X‐ray images 10000. https://www.kaggle.com/nabeelsajid917/COVID-19-x-ray-10000-images.

- Shan, F., Gao, Y., Wang, J., Shi, W., Shi, N., Han, M., Xue, Z., Shen, D., & Shi, Y. (2020). Lung infection quantification of COVID‐19 in CT images with deep learning. arXiv:2003.04655.

- Shaoping, H., Yuan, G., Zhangming, N., Yinghui, J., Lao, L., Xianglu Xiao, M., Wang, E. F. F., Wade, M.‐S., Jun, X., Hui, Y., & Guang, Y. (2020). Weakly supervised deep learning for COVID‐19 infection detection and classification from CT images. IEEE Access, 8, 118869–118883.

- Szymańska, E., Saccenti, E., Smilde, A. K., & Westerhuis, J. A. (2012). Double‐check: Validation of diagnostic statistics for PLS‐DA models in metabolomics studies. Metabolomics, 8(1), 3–16.

- Wang, C., Dong, S., Zhao, X., Papanastasiou, G., Zhang, H., & Yang, G. (2019). SaliencyGAN: Deep learning semisupervised salient object detection in the fog of IoT. IEEE Transactions on Industrial Informatics, 16(4), 2667–2676.

- Wang, C., Yang, G., Papanastasiou, G., Zhang, H., Rodrigues, J., & Albuquerque, V. (2020). Industrial cyber‐physical systems‐based cloud IoT edge for federated heterogeneous distillation. IEEE Transactions on Industrial Informatics, 17, 5511–5521.

- Wang, S., Kang, B., Ma, J., Zeng, X., Xiao, M., Guo, J., Cai, M., Yang, J., Li, Y., Meng, X., & Xu, B. (2020). A deep learning algorithm using CT images to screen for coronavirus disease (COVID‐19). European Radiology, 1–9. 10.1007/s00330-021-07715-1

- WHO. (2019). Coronavirus disease (COVID‐19) outbreak situation. https://www.who.int/emergencies/diseases/novel-coronavirus-2019.

- Yang, R., Li, X., Liu, H., Zhen, H., Zhang, X., Xiong, Q., Luo, Y., Gao, C., & Zeng, W. (2020). Chest CT severity score: An imaging tool for assessing severe COVID‐19. Radiology: Cardiothoracic Imaging, 2(2), e200047.

- Zhang, J., Xie, Y., Li, Y., Shen, C., & Xia, Y. (2020). COVID‐19 screening on chest X‐ray images using deep learning based anomaly detection. arXiv:2003.12338.

- Zhou, Z., Guo, D., Li, C., Fang, Z., Chen, L., Yang, R., Li, X., & Zeng, W. (2020). Coronavirus disease 2019: Initial chest CT findings. European Radiology, 30(8), 4398–4406.

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study.