Abstract

Health services made many changes quickly in response to the SARS-CoV-2 pandemic. Many more are being made. Some changes were already evaluated, and there are rigorous research methods and frameworks for evaluating their local implementation and effectiveness. But how useful are these methods for evaluating changes where evidence of effectiveness is uncertain, or which need adaptation in a rapidly changing situation? Has implementation science provided implementers with tools for effective implementation of changes that need to be made quickly in response to the demands of the pandemic? This perspectives article describes how parts of the research and practitioner communities can use and develop a combination of implementation and improvement to enable faster and more effective change in the future, especially where evidence of local effectiveness is limited. We draw on previous reviews about the advantages and disadvantages of combining these two domains of knowledge and practice. We describe a generic digitally assisted rapid cycle testing (DA-RCT) approach that combines elements of each in order to better describe a change, monitor outcomes, and make adjustments to the change when implemented in a dynamic environment.

INTRODUCTION

In response to the SARS-CoV-2 pandemic, health services introduced numerous changes to clinical practice, service delivery, and assessment methods, among others. There are well-developed research methods and frameworks for rigorous evaluation research into the effectiveness and implementation of these changes. But do we have the knowledge and tools to provide research-informed help to evaluate changes when the evidence is questionable, and while the change is being implemented? For changes where there is good evidence, do researchers and practitioners have the methods and tools to adapt these effectively to a rapidly changing situation?

Given the fluid nature of the pandemic and the health system response, it is likely that many more changes will be made. We propose that combining and developing improvement and implementation methods and tools can help services respond more effectively to the pandemic and its consequences and to other emergencies or evolving crises. We highlight elements of implementation and improvement that can be combined for this purpose, and provide a clearer understanding of the relevant features of each in what can be a confusing field with many different terms and models.

Before the pandemic, we conducted a narrative review of the overlap and differences between improvement and implementation sciences and practices.1, 2 In this article, we suggest combining improvement and implementation science in ways that would be fruitful for developing the applied knowledge and practice needed in this era of change and uncertainty. Combining these sciences can help clinicians, managers, and policymakers bring a range of changes into routine practice more quickly and so respond effectively to the demands of the pandemic. This combined approach can help to adapt proven changes to reduce disparities for vulnerable populations. In addition, combining these sciences in this way provides a method for giving rapid feedback so that changes can be adjusted to reduce harm and become more effective. An appropriate combination of these two applied sciences could be more effective than either individually for reducing suffering, mortality, health disparities, and waste.

WHAT IS IMPROVEMENT AND IMPLEMENTATION SCIENCE AND PRACTICE?

The improvement and implementation sciences are relatively new and generally refer to knowledge about change in organization and individual practice. As such they draw on and overlap with the knowledge domains of organization behavior, systems science, operational research, and the psychology of behavior change, among others. From a sociology of knowledge perspective, they are self-proclaimed “sciences,” involving researchers competing with other knowledge fields for funding and legitimacy, and changing definitions to suit their purpose and interests. Related to both domains is a growing occupation of practitioners who draw on and sometimes contribute to this body of knowledge. This includes clinical and management practitioners, as well as specialist practitioners such as quality improvement facilitators and implementation specialists who form growing occupational groups with professional societies.

IS THERE A DIFFERENCE?

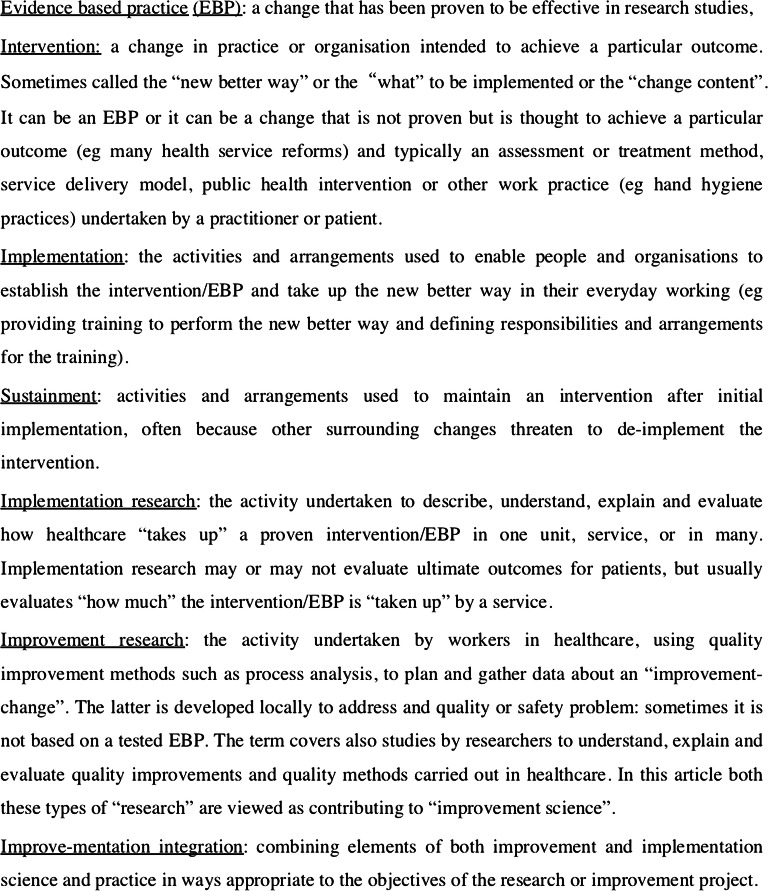

Traditionally, implementation is about establishing “evidence-based practices” in everyday service delivery.3 Improvement is about a project team devising and testing changes locally. The change may be one developed by the project team using a set of quality improvement tools,4 or it may be a change found effective elsewhere. Both sciences seek to enable change in practice and organization and an understanding of which changes are effective. While exchanges between the domains are beginning, one hindrance is the different definitions of terms for similar concepts, due to the separate historical development of each field. The purpose of this article is not to provide a scholarly presentation of the different understandings and definitions of the two fields—these are described in our reviews and summarized in key overviews.1, 2, 5–7 Researchers should define the terms they are using in presentations and papers to improve communication, accumulation, and dissemination of knowledge. Table 1 identifies seven common terms that cross implementation and improvement sciences, and the definitions that we have used in this article (Fig. 1). We use a pragmatic definition of science as “knowledge published in a peer-reviewed academic journal with a high-impact factor rating.”

Table 1.

Common Terms That Cross Implementation and Improvement Sciences (Our Definitions)

| Term | Definition |
|---|---|
| Evidence-based practice (EBP): | A change that has been proven to be effective in a research study published in a credible peer-reviewed scientific journal. |
| Intervention: | A change in practice or organization intended to achieve a particular outcome (sometimes called the “new better way,” the “what” to be implemented, or the “change content”). It can be an EBP or it can be a change that is not proven but is thought to achieve a particular outcome (e.g., many health services reforms). Typically, it is an assessment or treatment method, service delivery model, public health intervention, or other work practice (e.g., hand hygiene practice) undertaken by a practitioner or patient. |
| Implementation: | The activities and arrangements used to enable people and organizations to establish the intervention/EBP and take up this “new better way” in their everyday working (e.g., providing training to perform the “new better way” and defining responsibilities and arrangements for the training). |
| Sustainment: | The activities and arrangements used to maintain an intervention after initial implementation, often because other surrounding changes threaten to de-implement the intervention. |
| Implementation research: | An activity undertaken to describe, understand, explain, and evaluate how healthcare “takes up” a proven intervention/EBP in one unit, service, or in many. Implementation research may or may not evaluate ultimate outcomes for patients, but usually evaluates “how much” the intervention/EBP is “taken up” by a service (e.g., number of times the new practice is performed/opportunities to perform it). |
| Improvement research: | The activity undertaken by workers in healthcare, using quality improvement methods such as process analysis, to plan and gather data about an “improvement-change.” The change is often developed locally to address a quality or safety problem: sometimes it is not based on a tested EBP. The term also covers studies by researchers to understand, explain, and evaluate quality improvements and quality methods carried out in healthcare. In this article, both these types of “research” are viewed as contributing to “improvement science.” |
| Improve-mentation integration: | Combining elements of both improvement and implementation science and practice in ways appropriate to the objectives of the research or improvement project. |

Figure 1.

Common terms that cross implementation and improvement sciences (our definitions).

A common assumption of implementation has been that “exactly copying” a proven intervention, with fidelity to the original evaluated intervention, will achieve similar results in other settings. More recent implementation research has considered intentional—as well as unintentional—adaptations to both the intervention and the implementation strategy, especially in scale-up programs. For interventions with evidence of effectiveness, such as many quality breakthrough collaboratives, fidelity to the intervention may be important.33 But improvement practitioners do not always start with a change already evaluated in research: sometimes improvement teams formulate their own change to address a quality problem, for example, by using a method such as process-flow analysis or failure mode analysis.4 Another significant difference is that improvement practitioners perform iterative tests of change (e.g., plan-do-study-act cycles) while implementation practitioners generally do not.

Many researchers would not consider these iterative tests of change to be sufficiently rigorous to contribute to science. However, practitioner testing using rigorous statistical process control and annotated time series designs can provide critical information about implementation that is not available through traditional health services research designs. Traditional health services research designs can also further constrain the process of continuous intervention improvement.8 Reflecting different understandings, institutional ethics review boards in one institution will classify a quality project as research and in another as improvement.9

Recent implementation studies have considered adaptation of an intervention. For example, where one component of the intervention is educational materials, a small adaptation is translation into Spanish for certain practitioners or patients, and this may or may not be sensitive to cultural meanings. Could implementation researchers test a range of types of adaptations, and if so, can they work closely with practitioners to give timely feedback about the local effectiveness of the adaptations? There are examples of researchers testing adaptations made to implementation strategies to help units that show limited-fidelity implementation of evidence-based practices. In one study, some sites showed limited implementation, so the study added more facilitation assistance to help them copy exactly a new evidence-based practice for patients with mental health challenges.10

Some methods, like evidence-based quality improvement, specifically target and shape a delivery system’s use of QI methods for adapting implementation of evidence-based practices, such as guidelines, to specific settings.11, 12 However, a more common situation is where adaptation of the evidence-based improvement change is required to enable local implementation. There are examples of implementation research documenting adaptations to the improvement change that practitioners make, rather than to the implementation methods. In a few cases, the implementation research evaluated the adaptations, but did not make use of improvement methods that could have strengthened the study.13, 14

Other differences between implementation and improvement science relate to the range of sectors and fields where the research is undertaken. To date, most improvement research has been undertaken in hospitals and has focused on less complex interventions than those studied in implementation research. In contrast, implementation research is carried out across a broad range of welfare and education services as well as in healthcare, and often involves cross-sector and multi-level complex programs.15 Central to implementation research is the theory that outcomes are due to context–intervention interactions: context is viewed not as the “background,” as is often the case in traditional biomedical and health services research, but as an “actor in the play.” This leads to some implementation research using observational research designs and mixed methods approaches to learn more about implementation of interventions within specific contexts.16

Similarities and Overlaps

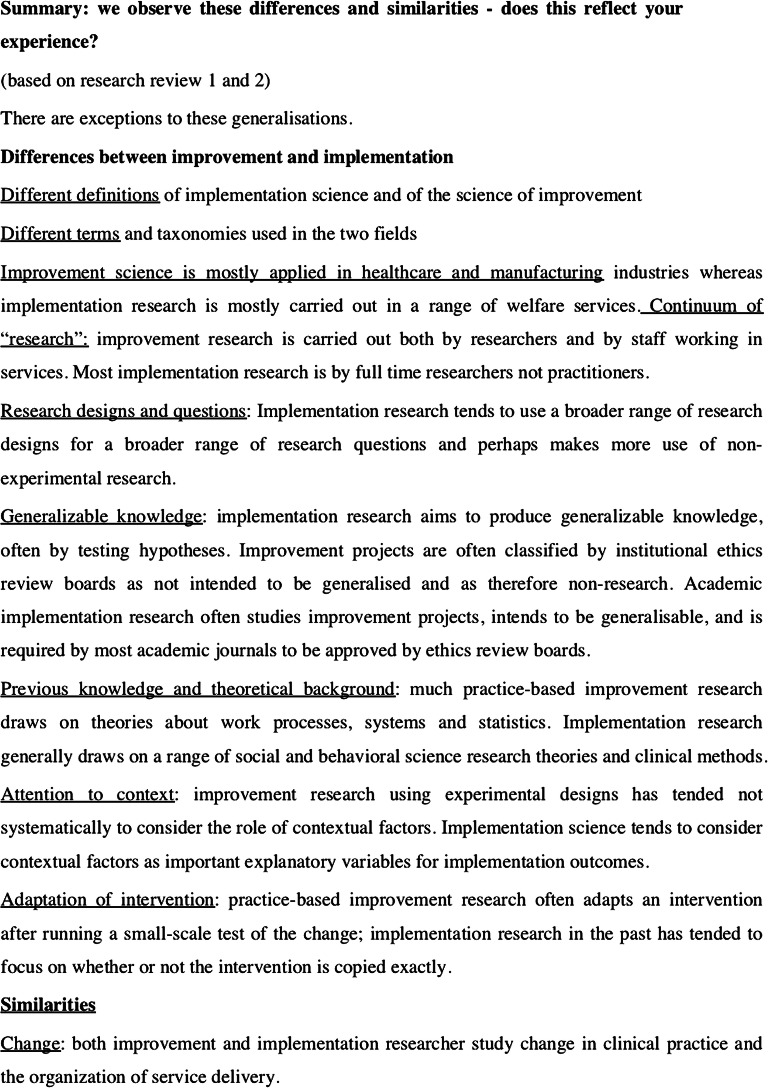

Yet there are also similarities that are often not sufficiently recognized by those working in each field (Table 2 and Fig. 2). Both types of science are concerned with changing clinical practices or changing how services are organized. Also, more recently, both implementation and improvement scientists have learned that complex interventions require adaptation to context. Both fields are concerned with the question of whether the adaptations are more or less effective than the originally tested version, leading to guidance about how best to adapt tested interventions.14

Table 2.

Summary: We Observe These Differences and Similarities—Does This Reflect Your Experience? (Based on Our Two Reviews of Research1, 2)

| Differences | Similarities |
|---|---|
| Different definitions: of implementation science and of the science of improvement | Change: both improvement and implementation researchers study change in clinical practice and the organization of service delivery. |
| Different terms: and taxonomies used in the two fields | Researcher’s role in change: both fields include researchers who observe and study change, but also include researchers who are more involved in making changes in different ways. |
| Application: Improvement science is mostly applied in healthcare and manufacturing industries whereas implementation research is carried out in a range of welfare services. | Applied research with practical aims: both fields of research aim to produce knowledge that is actionable in practice. |
| Continuum of “research”: improvement research is carried out both by researchers and by staff working in services. Most implementation research is by full-time researchers not practitioners. | Under-use of costing and return on investment analysis: neither of the fields pays sufficient attention to estimating the costs of the intervention and its implementation to different parties, and the savings or extra costs to different parties at different time points. (We have found that this information is essential for decision-makers and for tracking pilot studies in order to decide scale-up, and one reason why many practitioners find the research not relevant to their concerns.) |
| Research designs and questions: Implementation research tends to use a broader range of research designs for a broader range of research questions, and makes more use of observational research designs. | Innovation in research methods: compared to a number of other fields, there has been limited innovation in research practice and methods, especially regarding the use of digital data and digital technologies. |
| Previous knowledge and theoretical background: much practice-based improvement research draws on theories about work processes, systems, and statistics. Implementation research generally draws on a range of social and behavioral science research theories and clinical methods. | Systems theory: researchers in both fields have sought to make use of systems theory. |
| Attention to context: improvement research using experimental designs has tended not to consider the role of contextual factors in a systematic way. Implementation science tends to consider contextual factors as important explanatory variables for implementation outcomes. | |
| Adaptation of intervention: practice-based improvement research often adapts an intervention after running a small-scale test of the change; implementation research in the past has tended to focus on whether or not the intervention is copied exactly (fidelity). | |
| Generalizable knowledge: implementation research aims to produce generalizable knowledge, often by testing hypotheses, and is required by most academic journals to be approved by ethics review boards. Improvement projects are often classified by institutional ethics review boards as not intended to be generalized and as therefore non-research. (Academic research into improvement is different and aims to produce generalizable knowledge.) | |

Figure 2.

Summary: we observe these differences and similarities. Does this reflect your experience? (based on our two reviews of research1, 2)

EXCHANGE BETWEEN THE FIELDS AND COMBINATIONS

The recent pandemic and the continuing changes have motivated development and innovation in the practice and science of both implementation and improvement. We suggest four areas for greater exchange and mutual development, so as to be more relevant to the demands posed by the pandemic and its consequences for both short-term investigations and other types of research and practice.

Defining Terms and Using Taxonomies

The development of each science is hindered by different uses of terms,7 and sometimes these differences are not recognized, which causes more confusion. A recent review of 72 Cochrane reviews of improvement strategies concluded that “researchers reported neither fidelity definitions nor conceptual frameworks for fidelity in any articles”.17 To address these issues, taxonomies from both fields could be drawn upon to describe an intervention. Implementation science includes taxonomies of 73 different implementation strategies18 and 93 behavior change techniques,19 both produced from systematic reviews of research. Improvement science, likewise, provides taxonomies of types of quality improvement,20, 21 and also ways to distinguish improvement approaches from clinical interventions such as a tool for assessing QI-specific features of QI publications.22 In the new COVID-19 era, researchers and practitioners can use these taxonomies to widen their choice of changes, and design a strategy more suited to the improvement change or context. We do note, however, that a recent study found that there was no consensus between experts about which strategies would address different contextual and other challenges of implementing different interventions.23

As regards guidance for choosing an implementation strategy for a particular improvement change in a particular context, we endorse the conclusion of this recent study, of the “need for a more detailed evaluation of the underlying determinants of barriers and how these determinants are addressed by strategies as part of the implementation planning process”.23 There are particular problems with the growing number of “readiness assessment tools” and “capacity for change tools” (e.g.,24–26). There is uncertainty about whether these assessments measure all, or some, of the context of an intervention, and which types of intervention each might be most suited for assessing readiness or capacity to change. Researchers from both fields together could collect and assess these tools and provide practical improvers with a guide about which to use, and for which purposes.

Using Research Theories and Frameworks from “the Other Field”

Implementation science provides evidence-based models about context factors that influence implementation.16 Related to this are theories about the interaction between context, implementation, and the intervention, and how this affects outcomes. Improvers can use these frameworks in several ways:

- Before a study:
  - To identify data to gather to describe existing care practices and organization as well as barriers and facilitators to change.
  - To develop a theory or model of how the intervention might work, which can later be refined using data from the study, which can test the theory of the mechanisms hypothesized to be at work (e.g.,27–29).
  - To help to design an intervention based on earlier theories of effective interventions for the purpose.29

- During a study: To decide which data to gather to describe the changes actually made and then to be able to explain outcomes.23

- After a study: Frameworks and theories produced by the study can help address some challenges of generalization by giving guidance to implementers for repeating the intervention elsewhere.30

Implementation science and other research recommend that intervention designs be theory-based,30, 31 but such “program theories” or “logic models” are rarely used. Lack of attention to the theory underlying the intervention can impair the accumulation of evidence and reduce the help that research can give to practical innovators hoping to design effective change.

Improvement science can contribute theories and frameworks to assist research and practice in implementation. These include theories of scale-up or spread and the collaborative break-through approach.32, 33 Many other frameworks are described elsewhere,4 but the evidence basis for each varies greatly, and the scope of interventions and contexts for which they are relevant is not well delineated.

Using Research Methods from Other Fields and the DA-RCT Generic Approach

Pragmatic experimentation or iterative testing is a generic approach used in many fields to test a change on real people or organizational processes. The plan-do-study-act testing cycle is central to quality improvement projects.4, 8, 34 Combined with statistical process control, and the choice of the right outcome data to track, this method is under-used in change projects and research and is one method of choice in the COVID-19 era. Some implementation research has used versions of this iterative testing model to give rapid feedback of the effects of implementation actions.11

We propose a generic model for iterative testing of changes where controlled trials are not possible, and where feedback during implementation can be used quickly to adjust a change to make it more effective. For neutral language that is easily understandable by colleagues in both fields, we have found “digitally assisted rapid cycle testing” (DA-RCT) to be a useful generic approach. The approach is to choose outcomes that can be attributed to the change intervention and that can be easily collected using digital technology, or where digital data already exist (e.g., data on prescribing of antibiotics as an outcome of more appropriate prescribing that is part-attributable to a change intervention). Rapid cycle testing can be performed on small samples using statistical methods and time series or other comparative designs.8 This approach can be used to provide rapid feedback to implementers about the effects of COVID-19 responses, or adaptations. These include adaptations to patient assessment or treatment methods, patient information or self-help interventions, staff daily work practices, organization flow and patient pathway arrangements, supplies logistics changes, IT changes, public health interventions, the effects of policy implementation strategies, and changes to financing systems.
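As one illustration of how such rapid feedback might be computed from routinely collected digital data, the following is a minimal Python sketch of a proportion control chart (p-chart), a common statistical process control tool. It is not a method specified in this article: the `Week` record, the function names, the weekly prescribing counts, and the choice of 3-sigma limits with an eight-point run rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List, Tuple


@dataclass
class Week:
    """One week of hypothetical digital data for a single unit."""
    prescriptions: int  # antibiotic prescriptions issued (numerator)
    consultations: int  # eligible consultations (denominator)


def center_line(baseline: List[Week]) -> float:
    """Pooled baseline proportion, used as the chart's center line."""
    total_rx = sum(w.prescriptions for w in baseline)
    total_n = sum(w.consultations for w in baseline)
    return total_rx / total_n


def control_limits(p_bar: float, n: int) -> Tuple[float, float]:
    """Lower and upper 3-sigma limits for a week with n consultations."""
    sigma = sqrt(p_bar * (1.0 - p_bar) / n)
    return max(0.0, p_bar - 3.0 * sigma), min(1.0, p_bar + 3.0 * sigma)


def signals(baseline: List[Week], follow_up: List[Week],
            run_length: int = 8) -> List[Tuple[int, str]]:
    """Flag follow-up weeks that suggest a real change in prescribing.

    Two assumed rules: a point outside the 3-sigma limits, or
    `run_length` consecutive points below the center line.
    """
    p_bar = center_line(baseline)
    flagged: List[Tuple[int, str]] = []
    run = 0
    for i, week in enumerate(follow_up):
        p = week.prescriptions / week.consultations
        lower, upper = control_limits(p_bar, week.consultations)
        if p < lower or p > upper:
            flagged.append((i, "outside 3-sigma limits"))
        # Count consecutive weeks below the baseline proportion.
        run = run + 1 if p < p_bar else 0
        if run == run_length:
            flagged.append((i, "sustained run below center line"))
    return flagged


if __name__ == "__main__":
    # Invented counts: 8 baseline weeks, then 4 weeks after the change.
    baseline = [Week(40, 100) for _ in range(8)]
    follow_up = [Week(38, 100), Week(36, 100), Week(24, 100), Week(22, 100)]
    for index, reason in signals(baseline, follow_up):
        print(f"follow-up week {index}: {reason}")
```

In a sketch like this, each plan-do-study-act cycle would append new weeks to `follow_up`, and a flagged week would prompt the team to look at what was adjusted in that cycle. Real use would need a baseline long enough to be stable and an outcome that is plausibly attributable to the change.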

In addition, we propose that improvement researchers can increase the range of research designs they use by employing non-experimental or naturalistic methods commonly used in public health, social work, and education implementation research.15, 16

Finally, more researchers from both fields could develop closer partnerships with practitioners, to learn about the change in the researcher role and the new methods needed for practice-engaged research. By exchanging lessons, for example, about when and how to get ethics review board approval, more might be gained by researchers coming together to consider ways forward for practice-based and partnership research.34

“Improve-mentation” Research Teams, or Centers

One combination is to establish new “improve-mentation” research centers with staff having backgrounds in both fields, with the explicit objective of bringing together improvement and implementation research for faster-impact improvements. An alternative would be to reform existing centers for this purpose, although there is the possibility that “carry-over” of existing projects and staff may dilute the focus, or give a bias towards either improvement or implementation research rather than the combination. Another option is to form research teams that combine researchers with skills and knowledge in the two fields for specific studies. The rapid impact research into Stockholm Healthcare response to the pandemic used this approach.35 This option could be assisted with a searchable improve-mentation database of researchers’ skills, which researchers could also use to assemble project teams quickly for preparing or undertaking COVID-19 and other proposals or projects.

CONCLUSIONS

Our purpose was to describe how research and practitioner communities can use and develop a combination of implementation and improvement approaches to enable faster and more effective change in the future, especially where evidence of local effectiveness is limited. We provided a simple overview of two diverse and sometimes confusing fields. More clearly defining terms and measures in scientific articles and presentations could help exchange, give consistent and less confusing terminology, and also help to develop the fundamental concepts of both fields. We presented definitions that we found allowed shared communication in workshops and conferences involving both implementers and improvers, and that have been particularly useful for our work in the first part of 2020 to give rapid assistance for the COVID-19 changes and adaptations and for addressing disparities.

Both research and practical change programs could use frameworks, methods, and designs from the other field to speed improvement and help develop the sciences. We gave our understanding of the differences and some of the consequences of what we now see as an unnecessary separation of the domains, and one not justifiable in the COVID-19 era. Different “improve-mentation” combinations range from minimal exchange over specific subjects, to full integration of the fields, including forming combined science and development units. One aim of the article was to raise for debate the value and costs of combining the sciences and methods of improvement and implementation, both in research and in practical improvement. We look forward to hearing others’ views about whether they perceive an unnecessary separation between the domains, and other ideas for drawing on both fields, as well as how a third domain which we have not considered, “knowledge translation,” may speed improvements and make them more effective.

Funding

This article draws on a project funded in part by the US Department of Veterans Affairs, Veterans Health Administration Quality Enhancement Research Initiative, through core funding to the Center for Implementation Practice and Research Support (Project # TRA 08-379), as well as funding from the Department of Medical Management, Karolinska Institutet, Stockholm, Sweden. Additional support was provided by the Quality Enhancement Research Initiative through its Care Coordination QUERI program (Project #QUE 15-276).

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the U.S. Department of Veterans Affairs or the Karolinska Institutet.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Øvretveit J. Combining improvement and implementation to speed and spread enhanced VA healthcare for Veterans - VA White Paper 1. Available from Center for Implementation Practice and Research Support (CIPRS), Veterans Health Administration, Sepulveda, West LA Medical Center Group, Ca, 2015.

- 2.Øvretveit J, Mittman B, Rubenstein L, Ganz D. Using implementation tools to design and conduct quality improvement projects for faster and more effective improvement. Int J Health Care Qual Assur. 2017;30(8):1–17. doi: 10.1108/IJHCQA-01-2017-0019. [DOI] [PubMed] [Google Scholar]

- 3.Eccles MP, Mittman BS. Welcome to Implementation Science. Implement Sci. 2006;1:1. doi: 10.1186/1748-5908-1-1. [DOI] [Google Scholar]

- 4.Langly G, Nolan K, Nolan T, Norman C, Provost L. The Improvement Guide. San Francisco: Jossey Bass; 1997. [Google Scholar]

- 5.Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, Robinson N. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26:13–24. doi: 10.1002/chp.47. [DOI] [PubMed] [Google Scholar]

- 6.McDonald KM, Chang C, Schultz E. Appendix D Taxonomy of Quality Improvement Strategies, IN Through the Quality Kaleidoscope: Reflections on the Science and Practice of Improving Health Care Quality. Closing the Quality Gap: Revisiting the State of the Science. Methods Research Report 2013 (Prepared by Stanford-UCSF Evidence-based Practice Center under Contract No. 290-2007-10062-I.) AHRQ Publication No. 13-EHC041-EF. Rockville, MD: Agency for Healthcare Research and Quality; February 2013.www.EffectiveHealthCare.ahrq.gov/reports/final.cfm. [PubMed]

- 7.Koczwara B, Stover AM, Davies L, Davis M, Fleisher L, Ramanadhan S, Schroeck FR, Zullig L, Chambers D, Proctor E. Harnessing the synergy between improvement science and implementation science in cancer: A call to action. J Oncol Pract. 2018;14(6):335–340. doi: 10.1200/JOP.17.00083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Speroff T, O'Connor GT. Study designs for PDSA quality improvement research. Qual Manag Health Care. 2004;13(1):17–32. doi: 10.1097/00019514-200401000-00002. [DOI] [PubMed] [Google Scholar]

- 9.Ocrinc G, Nelson W, Adams S, O’hara A. An Instrument to Differentiate between Clinical Research and Quality Improvement, IRB: Ethics and Human Research SEPTEMBER-OCTOBER 2013 available from https://pdfs.semanticscholar.org/867c/775353701304f268b83896ed115bb9914095.pdf accessed 10 august 2019 [PubMed]

- 10.Kilbourne AM, Abraham KM, Goodrich DE, Bowersox NW, Almirall D, Lai Z, Nord KM. Enhancing outreach for persons with serious mental illness: 12-month results from a cluster randomized trial of an adaptive implementation strategy. Implement Sci. 2014;9:163. doi: 10.1186/s13012-014-0163-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Rubenstein L, Meredith L, Parker P, Gordon N, Hickey S, Oken K, Lee M. Impacts of Evidence-Based Quality Improvement on Depression in Primary Care A Randomized Experiment. J Gen Intern Med. 2006;21:1027–1035. doi: 10.1111/j.1525-1497.2006.00549.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Rubenstein J, Stockdale S, Sapir N, Altman L, Dresselhaus T, Salem-Schatz S, Vivell S, Ovretveit J, Hamilton A, Yano E. Patient-Centered Primary Care Practice Approach Using Evidence-Based Quality Improvement: Rationale, Methods, and Early Assessment of Implementation. J Gen Intern Med. 2014;2014:S589–597. doi: 10.1007/s11606-013-2703-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Baumann A, Powell B, Kohl P, Tabak R, Penalba V, Proctor E Domenech-Rodriguez M, Cabassa L, 2015 Cultural adaptation and implementation of evidence-based parent-training: A systematic review and critique [DOI] [PMC free article] [PubMed]

- 14.DHHS 2015 Department of Health and Human Services 2015 Making Adaptations to Evidence-Based Programs Tip Sheet. 2015. www.acf.hhs.gov/sites/default/files/fysb/prep-making-adaptations-ts.pdf. Accessed 3 Nov 2015.

- 15.Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A synthesis of the literature. Tampa: National Implementation Research Network, University of South Florida; 2005. [Google Scholar]

- 16. Brownson RC, Colditz GA, Proctor EK (eds). Dissemination and implementation research in health: translating science to practice. Oxford University Press; 2012.

- 17. Slaughter S, Hill J, Snelgrove-Clarke E. What is the extent and quality of documentation and reporting of fidelity to implementation strategies: a scoping review. Implement Sci. 2015;10:129. doi: 10.1186/s13012-015-0320-3.

- 18. Powell B, Waltz T, Chinman M, Damschroder L, Smith J, Matthieu M, Proctor E, Kirchner J. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21. doi: 10.1186/s13012-015-0209-1.

- 19. Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, Eccles MP, Cane J, Wood CE. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95. doi: 10.1007/s12160-013-9486-6.

- 20. Shojania KG, McDonald KM, Wachter RM, et al. Series Overview and Methodology. Vol. 1 of: Shojania KG, McDonald KM, Wachter RM, Owens DK, editors. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies. Technical Review 9 (Prepared by the Stanford University-UCSF Evidence-based Practice Center under Contract No. 290-02-0017). AHRQ Publication No. 04-0051-1. Rockville, MD: Agency for Healthcare Research and Quality; 2004. www.ncbi.nlm.nih.gov/books/NBK43908/.

- 21. McDonald KM, Chang C, Schultz E. Appendix D: Taxonomy of Quality Improvement Strategies. In: Through the Quality Kaleidoscope: Reflections on the Science and Practice of Improving Health Care Quality. Closing the Quality Gap: Revisiting the State of the Science. Methods Research Report. (Prepared by Stanford-UCSF Evidence-based Practice Center under Contract No. 290-2007-10062-I.) AHRQ Publication No. 13-EHC041-EF. Rockville, MD: Agency for Healthcare Research and Quality; February 2013. www.EffectiveHealthCare.ahrq.gov/reports/final.cfm.

- 22. Hempel S, Shekelle P, Liu J, Danz M, Foy R, Lim Y, Motala A, Rubenstein L. Development of the Quality Improvement Minimum Quality Criteria Set (QI-MQCS): a tool for critical appraisal of quality improvement intervention publications. BMJ Qual Saf. 2015;24:796–804. doi: 10.1136/bmjqs-2014-003151.

- 23. Waltz T, Powell B, Fernández M, Abadie B, Damschroder L. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14:42. doi: 10.1186/s13012-019-0892-4.

- 24. Brach C, Lenfestey N, Roussel A, Amoozegar J, Sorensen A. Will It Work Here? A Decisionmaker’s Guide to Adopting Innovations. Prepared by RTI International under Contract No. 233-02-0090. Agency for Healthcare Research and Quality (AHRQ) Publication No. 08-0051. Rockville, MD: AHRQ; September 2008.

- 25. Weiner B, Amick A, Lee S. Review: Conceptualization and Measurement of Organizational Readiness for Change: A Review of the Literature in Health Services Research and Other Fields. Med Care Res Rev. 2008;65(4):379–436. doi: 10.1177/1077558708317802.

- 26. Helfrich C, Li Y, Sharp N, Sales A. Organizational readiness to change assessment (ORCA): Development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38. doi: 10.1186/1748-5908-4-38.

- 27. Michie S, Abraham C. Identifying techniques that promote health behaviour change: evidence based or evidence inspired? Psychol Health. 2004;19:29–49. doi: 10.1080/0887044031000141199.

- 28. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14:26–33. doi: 10.1136/qshc.2004.011155.

- 29. Walker AE, Grimshaw J, Johnston M, Pitts N, Steen N, Eccles M. PRIME – PRocess modelling in ImpleMEntation research: selecting a theoretical basis for interventions to change clinical practice. BMC Health Serv Res. 2003;3(1):22. doi: 10.1186/1472-6963-3-22.

- 30. The Improved Clinical Effectiveness through Behavioural Research Group (ICEBeRG). Designing theoretically-informed implementation interventions. Implement Sci. 2006;1:4. doi: 10.1186/1748-5908-1-4.

- 31. Eccles M, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the behavior of healthcare professionals: the use of theory in promoting the uptake of research findings. J Clin Epidemiol. 2005;58(2):107–12. doi: 10.1016/j.jclinepi.2004.09.002.

- 32. Massoud MR, Nielsen GA, Nolan K, Nolan T, Schall MW, Sevin C. A Framework for Spread: From Local Improvements to System-Wide Change. IHI Innovation Series white paper. Cambridge, Massachusetts: Institute for Healthcare Improvement; 2006. Available at www.IHI.org. Accessed 11 August 2019.

- 33. Kilo CM. A framework for collaborative improvement: lessons from the Institute for Healthcare Improvement's Breakthrough Series. Qual Manag Health Care. 1998;6(4):1–13. doi: 10.1097/00019514-199806040-00001.

- 34. Øvretveit J, Hempel S, Magnabosco J, Mittman B, Rubenstein L, Ganz D. Guidance for Research-Practice Partnerships (R-PPs) and Collaborative Research. J Health Organ Manag. 2014;28(1):115–126. doi: 10.1108/JHOM-08-2013-0164.

- 35. Ohrling M, Øvretveit J, Lockowandt U, Brommels M. Management of emergency response to SARS-CoV-2 outbreak in Stockholm and winter preparations. J Prim Healthcare. 2020;12(3):207–214. doi: 10.1071/HC20082.