Abstract

Purpose

During the coronavirus disease 2019 pandemic, face-to-face teaching for medical students has been severely disrupted and limited internationally. This study explores the views of medical students and academic medical staff regarding the suitability and limitations of a bespoke chatbot tool to support medical education.

Methods

Sixteen participants, recruited using a convenience sample, took part in five focus groups. The participants included medical students across all year groups and academic staff. The pre-determined focus group topic guide explored how chatbots could augment existing teaching practices. A thematic analysis of the transcripts was conducted to determine key themes.

Results

Thematic analysis identified five main themes: (1) chatbot use as a clinical simulation tool; (2) chatbot use as a revision tool; (3) differential usefulness by medical school year group; (4) standardisation of education and assessment; (5) challenges of use and implementation.

Conclusions

Both staff and students identified clear benefits of using chatbots in medical education. However, they also described possible limitations to their use. The creation of chatbots to support the medical curriculum should be further explored and urgently evaluated to assess their impact on medical students' training, both during and after the global pandemic.

Keywords: Artificial intelligence, digital health, machine learning, technology, education, lifestyle

Introduction

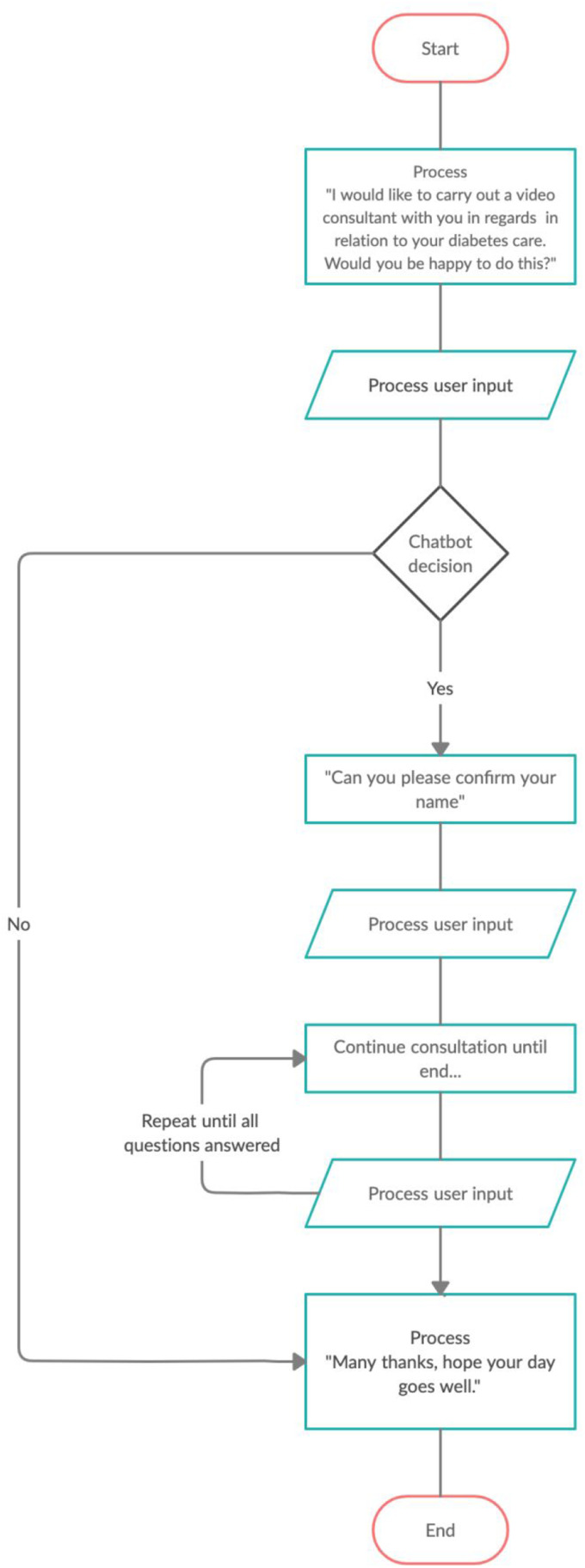

Chatbots are an application of the emerging field of artificial intelligence, which explores whether machines can use evidence-based algorithms to enhance efficiency, communication and dialogue. Chatbots can simulate natural dialogue, either by audio or text, and can perform complex tasks by interacting with human users, as demonstrated by notable examples such as Amazon Alexa.1 The simple flowchart in Figure 1 illustrates a flow of conversation between the user (the medical student) and the chatbot. The chatbot recognises keywords in the user's input and, based on these, decides how to answer, allowing a conversation to flow.

Figure 1.

Flowchart illustrating how a potential chatbot would function to simulate a virtual diabetes clinic consultation with a medical student.
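The keyword-driven flow described above and in Figure 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and is not the chatbot the authors built. The intent names, trigger keywords and scripted patient replies are all hypothetical, and matching is a simple set intersection on lower-cased words. A message with no recognised keyword falls through to a fallback prompt.

```python
import re

# Hypothetical intents for a simulated patient in a virtual diabetes clinic.
# Each intent maps a set of trigger keywords to the patient's scripted reply.
INTENTS = {
    "smoking": ({"smoke", "smoking", "cigarettes"}, "I stopped smoking two years ago."),
    "exercise": ({"exercise", "activity", "walk"}, "I walk the dog most mornings."),
    "eyes": ({"eye", "eyes", "vision", "retinal"}, "My last eye check was over a year ago."),
    "feet": ({"foot", "feet", "numbness"}, "Sometimes my toes feel a bit numb."),
}

FALLBACK = "Sorry, I'm not sure what you mean. Could you ask that another way?"


def tokenise(message: str) -> set[str]:
    """Lower-case the student's message and split it into word tokens."""
    return set(re.findall(r"[a-z]+", message.lower()))


def reply(message: str) -> str:
    """Return the reply of the first intent whose keywords appear in the message."""
    words = tokenise(message)
    for keywords, response in INTENTS.values():
        if words & keywords:  # at least one trigger keyword recognised
            return response
    return FALLBACK  # nothing recognised: ask the student to rephrase
```

For example, `reply("Do you smoke?")` matches the hypothetical `smoking` intent. A question phrased without any listed keyword receives the fallback instead. This is the limitation a Year 3 participant raised about needing "the right trigger words".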

The focus of the study was to qualitatively explore, using focus groups, the views of medical students and medical educators on integrating chatbots into the medical curriculum. The context of the study was the aim of using chatbots to make the simulations currently conducted at one National Health Service (NHS) trust more efficient. Because a chatbot could serve many medical students at one time, the number of students able to complete a simulation would no longer be limited by the number of healthcare professionals available. Simulations would also no longer be confined to a single NHS site.

The healthcare chatbot market is expected to grow by over £410 million between 2019 and 2025,2 particularly in primary care. Currently, healthcare chatbots, such as those developed by Babylon Health,3 are predominantly used to facilitate patient consultations. Despite these advances in patient-facing services, there are no chatbots actively used to support medical students in their education. As coronavirus disease 2019 (COVID-19) is limiting face-to-face teaching for clinical medical students, a chatbot could augment the teaching that medical schools are delivering remotely.

There is an increasing body of research showing how technology-mediated machine learning can improve the quality of learning processes and achieve better outcomes for medical students. Studies have shown a positive effect of using mobile learning devices, such as smartphones and tablets, in clinical environments to support medical students' learning.4

There is substantial evidence that a range of skills can be improved using virtual patient simulations.5 Further studies have shown that a simple chatbot can be created for a tutoring system6 and that patient interviews can be practised using a chatbot.7 Students who used such chatbots also attained higher grades.8 However, a recent paper suggested that teachers need to be willing to promote technology amongst students to encourage its use.9

Although research suggests that chatbots can improve education, their implementation is rarely researched. Our understanding of how to formally incorporate chatbots into medical education curricula is currently limited, owing to a lack of understanding of their application and a lack of evidence for regulators to consider. A study is therefore needed to examine the views of students and staff on the use of chatbots in medical education. To our knowledge, this is the first paper that directly assesses the use of chatbots in British medical education and the first international paper to use a formal focus group methodology.

We performed a pilot implementation and assessment of chatbot technology at Warwick Medical School, a UK medical school located in Coventry with 1900 medical students. At Warwick Medical School, students participate in case-based learning (CBL) exercises and ‘Clinically Observed Medical Education Training (COMET)’10 simulation sessions at one NHS trust base. Feedback on these sessions is very positive. However, they require significant human resources, such as experienced postgraduate tutors, as well as costly mannequins to aid simulation. Each station requires one tutor, and there are on average four stations per COMET tutorial. Topics include emergency care, clinical consultations and practical procedures.

We propose that chatbot technology could be integrated to facilitate such sessions, both at Warwick Medical School and internationally. The aim of this study is to assess the suitability and limitations of creating a chatbot that can be implemented in a medical curriculum. Chatbot technology could be particularly useful in resource-limited environments. It could also help in response to the COVID-19 pandemic, which has caused, and continues to cause, significant disruption to face-to-face medical education training.

Methods

Assessing the perspectives of medical students and medical educators is vital to forming a long-term plan for optimising chatbot design and implementing chatbots widely in medical education curricula. Their views are likely to indicate how well students might engage with, and benefit from, chatbots. We adopted a pedagogical theory approach to understand teaching practices across medical education, which required understanding both the students' and the medical educators' perspectives. Together, these perspectives were expected to show where chatbots could be integrated into the medical education curriculum.

Sampling and recruitment

The study was conducted over a 2-month period at Warwick Medical School. AK recruited medical students from years 1 to 4 and academic medical staff, inviting them to take part via course-wide emails.

Focus groups

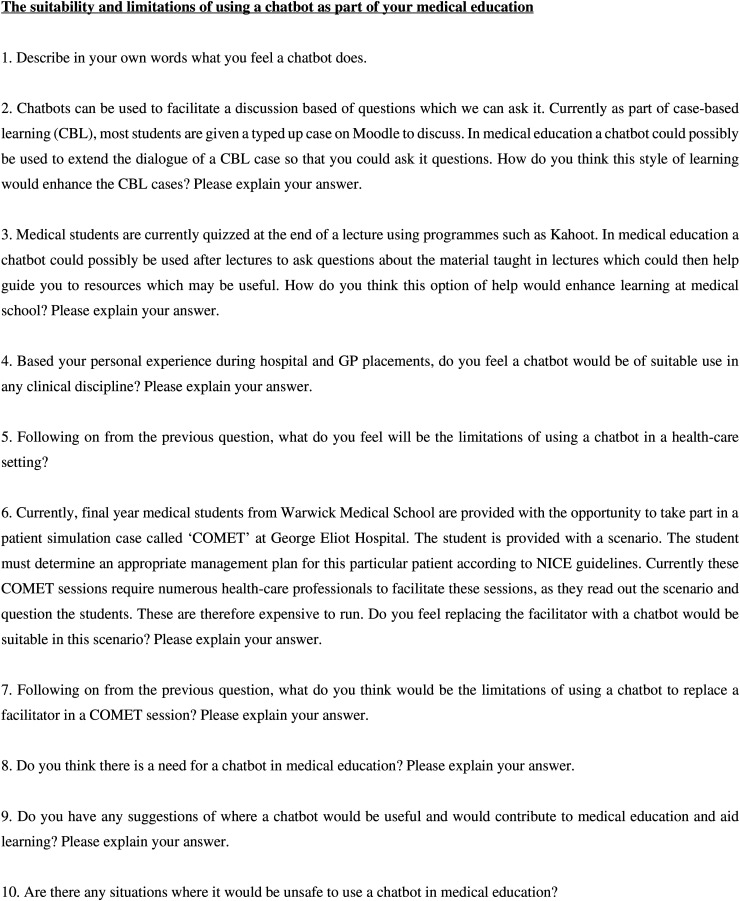

Face-to-face focus groups, averaging three participants per group, took place at Warwick Medical School. The focus groups were semi-structured and supported by a pre-determined topic guide, as shown in Figure 2. The topic guide explored two areas: the uses of chatbots in teaching practices common to the medical school, such as CBL, and participants' perceptions of learning from chatbots instead of traditional methods such as lectures and textbooks. The topic guide questions were designed following a review of the literature and remained iterative, so that questions could change as the focus groups progressed if important new topics emerged. The focus groups lasted between 24 and 36 min and were recorded by AK on an encrypted recording device.

Figure 2.

The topic guide, consisting of 10 pre-set questions asked during the focus groups.

Analysis

Each focus group was transcribed verbatim. AK analysed the data alongside conducting the focus groups. This ensured that themes raised in earlier focus groups were addressed and open for discussion in subsequent ones. The transcripts from the focus groups formed the raw data for the study. Data were analysed using NVivo 11.0 software, and a thematic framework approach11 was used for the thematic analysis in five steps:

Familiarisation with the data collected, by transcribing and re-reading the transcripts.

Identifying common themes amongst the focus groups and coding appropriately.

Indexing particular sections of the discussions which corresponded to a theme or an idea.

Charting the data in illustrative forms respective of the themes identified.

Mapping and analysis of the key characteristics laid out in the charts to draw conclusions.

Results

Following recruitment, 13 medical students and three staff members participated, giving a total of 16 participants in the focus groups, as outlined in Table 1. There appeared to be an overall consensus among students in each focus group, and no participant reported feeling uncomfortable expressing their views. Five main themes were identified, which were further categorised into subthemes, as shown in Table 2.

Table 1.

Description of participants. Each letter represents an individual participant within that category, for example (Year 1, participant a).

| Participant group | Number of participants (identifiers) |
|---|---|
| Year 1 medical students | 3 (a, b, c) |
| Year 2 medical students | 3 (a, b, c) |
| Year 3 medical students | 4 (a, b, c, d) |
| Year 4 medical students | 3 (a, b, c) |
| Academic faculty | 3 (a, b, c) |
| Total | 16 |

Table 2.

Themes identified during thematic analysis of the raw data.

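| Theme | Subthemes |
|---|---|
| Chatbot use as a clinical simulation tool | History taking; hospital patient simulations; professional and personal development |
| Chatbot use as a revision tool | Pharmacology; information retrieval |
| Differential usefulness by medical school year group | |
| Standardisation of education and assessment | |
| Challenges of use and implementation | Positive perception; negative perception |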

Chatbot use as a clinical simulation tool

Participants suggested that one potential use for a chatbot would be as a patient simulator with which a medical student could interact.

It's helping you practice before going into a real patient setting.

(Year 1, participant b)

However, some participants were concerned that the need to use the right trigger words to obtain the chatbot's responses could limit how much students could learn or practise with it.

Depends how descriptive you have to be with your own responses, to get the right trigger words to get the responses.

(Year 3, participant c)

History taking

Participants suggested that one area in which chatbots could be used is history-taking practice.

On the clinical skills side of things, it lets you hone history taking skills.

(Year 1, participant c)

Some participants suggested that audio chatbots could be used to practise telephone consultations.

Would be useful for doing phone consultations, because you lose that human contact straight away and having something to use to practice on before trialling on a patient would be useful.

(Year 3, participant c)

However, some participants described limitations of taking a history from a chatbot that would make it less beneficial for their learning.

You will lose the non-verbal aspect.

(Year 3, participant a)

Cannot replace actually chatting to patients […] Imagine asking it [chatbot] something and it's answering another question instead.

(Year 3, participant d)

I would prefer a real patient than interacting with a chatbot. They will never have the subtilties and the nuances that a patient can present with and that experience.

(Academic faculty, participant 3)

Hospital patient simulations

Participants outlined another limitation. If the facilitators involved in their learning were replaced by chatbots, they would lose out on the personal experience that healthcare professionals bring to teaching sessions.

With real life facilitators the nurses are actually bringing their experience […] The tips and advice that they would be able to provide would be really useful.

(Year 1, participant b)

Participants were also asked about COMET sessions in which a chatbot could potentially replace facilitators. They felt that a chatbot would not be taken seriously and that the exercise would not be beneficial.

If a student asks a question which isn't phrased in a way that the chatbot couldn't understand […] You would then technically be speaking to an inanimate object […] wouldn't exactly be taken seriously or even be helpful.

(Year 1, participant c)

You can't visualise/associate with just a voice in a sphere […] Actually seeing people makes a big difference.

(Academic faculty, participant 3)

Some participants felt that facilitated hospital patient simulations had been beneficial for their learning. They also felt that a chatbot would have limitations that would hinder that learning experience.

If I didn't know the answer they [facilitators] were then teaching me […] that would be a limitation with a chatbot.

(Year 4, participant c)

I had a prescribing station. […] I don't think I would have learnt much from that if it was just a chatbot asking me in comparison to an actual facilitator who explained it all to me.

(Year 4, participant a)

Professional and personal development

Some participants felt that using a chatbot during training would hinder the development of their empathy and their professional development.

With a chatbot you take out that patient doctor element and it turns into a checklist […] For undergraduates who are still training, getting a lack of exposure so early on may be detrimental to their learning and may be less competent in comparison to others who have got more exposure.

(Year 4, participant c)

We are training to treat patients. Even in those settings we have, aren't realistic of what we experience. If you change it to a computer, it's even more unrealistic.

(Year 3, participant d)

Participants were worried about how they would later interact with patients if they were being taught by chatbots for a significant part of their medical education.

If you learn how to use the chatbot and draw all the necessary information out of it […] Then you’re just teaching a student to interact with a chatbot.

(Year 1, participant c)

I wonder about the impact on the students developing their empathy skills, by interacting with something that is so … fixed.

(Academic faculty, participant 3)

However, participants noted that a chatbot would create a safe space in which students could practise early in their training, before seeing real patients.

It does mean we can practice in a safe space without it being stressful […] I don't think that it matters as much, because you’re not taking someone's time and it would be less stressful.

(Year 1, participant b)

It would be useful because not everyone is comfortable asking questions […] if we could ask this chatbot, it would be useful.

(Year 1, participant b)

Chatbot use as a revision tool

Participants expressed interest in a chatbot that they could use as a revision tool, one that could analyse their answers to identify their weaknesses.

For clinical skills we ought to know how to do a certain exam and if we can present it [chatbot] some symptoms and if it told us what we would need to do, it would be useful.

(Year 1, participant b)

I know there are some tests which can analyse your answers and figure out an area of weakness and you can learn from that.

(Year 1, participant c)

Pharmacology

Participants mentioned pharmacology as an area of the curriculum in which a chatbot would be useful for revision.

Pharmacology would be useful if you could develop a chatbot that could ask you MOA [mechanism of action] of drugs and you’d have to say it back.

(Year 4, participant c)

Good for pharmacology mechanism of action and things like that could easily be delivered by a chatbot. It could be a way to make that livelier and more interactive.

(Academic faculty, participant 3)

Information retrieval

Many participants felt that learning would be more efficient if they could locate resources using a chatbot.

It would be good to use as an index to find out more about a presentation which could direct you to internal lectures and textbooks.

(Year 3, participant b)

Moodle is really confusing and if you’re trying to find someone's email, it can sometimes take a very long time. If you had a chatbot you could ask it and it would spit the email right at you.

(Year 4, participant c)

May be useful in discussing what GMC guidelines […] guiding you to the correct guidance and working out what to do.

(Academic faculty, participant 2)

Differential usefulness by medical school year group

Participants felt that first-year students would be better suited than final-year students to using a chatbot in their early training.

If you want to practice taking a history, especially in the earlier years, that would be very useful.

(Year 4, participant c)

The younger years may find it to be useful in a safe space […] the later years it could feel like a waste of time.

(Year 4, participant a)

First years practicing their history skills would be useful because going into second year we hardly had any exposure.

(Year 4, participant b)

Yes because it's basic sciences […] there are definite answers. But I think the subtilties and clinical reasoning as you go into the older years you need a person with emotions.

(Academic faculty, participant 3)

Standardisation of education and assessment

Participants suggested that using a chatbot as part of their training could ensure some level of standardisation across teaching opportunities.

With the chatbot you get a relative standardised test setting.

(Year 2, participant c)

There is a whole subjectivity in marking, it might actually standardise marking and make it more fairer.

(Academic faculty, participant 1)

Challenges of use and implementation

The majority of participants described numerous challenges to the use and implementation of chatbots. Perceptions of using chatbots in medical education were predominantly negative, with only one participant expressing a positive view.

Positive perception

One participant had a positive perception of using chatbots in medical education.

If it was introduced, it could be beneficial. Depends on people. It's quite hard to know.

(Year 4, participant c)

Negative perception

A larger part of medicine, you need to be compassionate, empathetic, maintaining a patient's dignity which I feel would be lost completely, if you’re interacting with a screen.

(Year 1, participant a)

As of now we have to look at a patient in their entirety whereas if you are practicing with a chatbot or the way a patient would use google.

(Year 4, participant b)

I think medical education has coped well without technology and we are producing good doctors. I don't think it would be filling a gap per se, whether it is more an efficient way of delivering, would be a slightly different question.

(Academic faculty, participant 2)

Discussion

The use of chatbots is emerging in a wide variety of industrial sectors, more recently including healthcare. However, their use in medical education is largely untested. This study advances knowledge of the areas in which a chatbot could be integrated into a medical curriculum. In this pilot study, we identified five themes: patient simulation, revision tools, suitable users, standardisation of testing and the perception of chatbots. These themes highlighted strengths and limitations of the potential adaptations and uses of chatbots.

A major theme identified in the study was the use of chatbots for patient simulation. Many participants felt that a chatbot acting as the patient could be used to practise history taking. This is consistent with a meta-analysis5 showing that, compared with traditional education, virtual patient simulations improve clinical reasoning and procedural skills.

Medical students based at an NHS trust often take part in hospital patient simulations. Participants felt that replacing the facilitators with a chatbot would not be ideal, as they learn from the facilitators and from the experiences the facilitators share. Many participants also expressed concern about their professional and empathic development in dealing with patients. This was consistent with the findings of another research group,12 in which physicians felt that chatbots would not be able to comprehend the emotional aspect of dealing with patients. As a result, chatbots would not provide a realistic experience for the student. Participants also felt that practising patient consultations and simulations with a chatbot would hinder their ability to interact with patients in real life, because real consultations are complicated. This view is echoed by previous studies.13

One research group14 has suggested that interacting with chatbots is challenging because of cultural and language differences. However, these challenges can be tackled by exploring the user experience and varying the chatbot's responses according to trigger words in the user's input, such as ‘pain’, so that the chatbot responds in an empathetic manner.
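One way to vary responses by trigger word, as suggested above, is to prepend an empathetic acknowledgement whenever a trigger word appears in the user's message. The sketch below is illustrative only. The trigger word ‘pain’ comes from the text, but the other trigger words and all of the wording are hypothetical. Because the sketch uses exact word matching, variants such as ‘painful’ would need their own entries.

```python
import re

# 'pain' is the trigger word named in the text; the other entries and all
# acknowledgement wording are hypothetical placeholders.
EMPATHY_TRIGGERS = {
    "pain": "I'm sorry to hear you're in pain.",
    "worried": "It sounds like this has been worrying you.",
    "scared": "That sounds frightening.",
}


def empathetic_reply(message: str, base_reply: str) -> str:
    """Prefix base_reply with one acknowledgement per distinct trigger word found."""
    words = re.findall(r"[a-z]+", message.lower())
    # dict.fromkeys removes repeated words while keeping their original order
    acknowledgements = [EMPATHY_TRIGGERS[w] for w in dict.fromkeys(words) if w in EMPATHY_TRIGGERS]
    return " ".join(acknowledgements + [base_reply])
```

If no trigger word is present, the base reply is returned unchanged. The empathetic behaviour is therefore an additive layer on top of whatever keyword logic produces `base_reply`.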

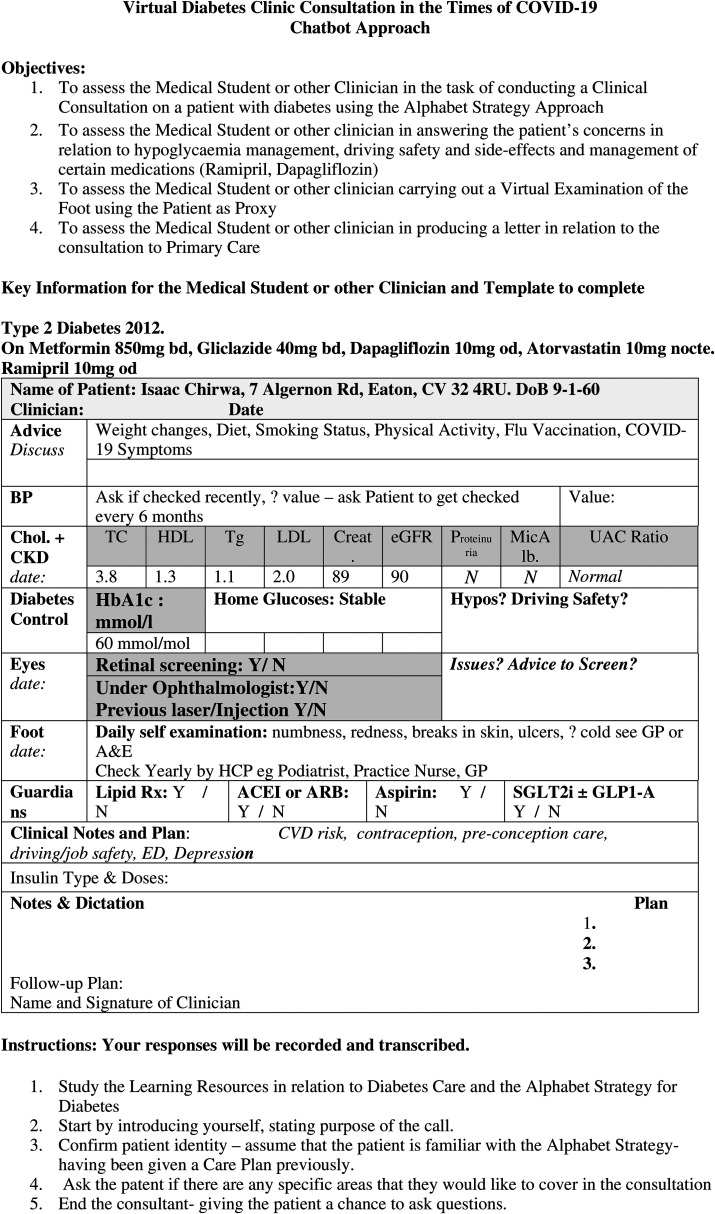

Given the results of the thematic analysis regarding the use of chatbots for patient simulation, we propose a chatbot for virtual diabetes clinics during the COVID-19 pandemic. As face-to-face teaching has been limited, this could be an effective way of preparing medical students to manage diabetes mellitus, which is itself an ongoing global epidemic. Approximately 415 million people worldwide are living with diabetes.15 Providing efficient and effective diabetes management has become a challenge, and shortfalls in management lead to a high burden of complications. Diabetes is particularly suited to the use of digital technologies as teaching aids because of its quantitative nature and its importance across primary, secondary and tertiary care.

The Alphabet strategy16 is a framework for the management of diabetes that incorporates the core components of diabetes care in a simple mnemonic-based checklist. It consists of:

(1) advice on lifestyle changes, such as smoking, exercise, diet and vaccine recommendations;
(2) blood pressure targets;
(3) cholesterol measurement with targets;
(4) glucose control with targets;
(5) annual eye exams;
(6) annual foot exams;
(7) use of guardian drugs: aspirin, angiotensin-converting enzyme inhibitors/angiotensin receptor blockers and statins.

Collectively, this checklist aims to reduce complications of diabetes such as cardiovascular disease, retinopathy and nephropathy.

The novel contribution of this study is that it proposes an approach to preparing medical students to manage patients with diabetes, using a chatbot. The objective of the chatbot would be to run a virtual diabetes clinic consultation in order to assess medical students or clinicians using the Alphabet strategy approach. The Alphabet strategy has already proved to be a useful instrument for patient education. Incorporating the Alphabet strategy into a chatbot provides a technical solution for training medical students and clinicians to achieve effective and efficient diabetes care for all patients.
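To make the proposed assessment more concrete, the Alphabet strategy checklist can be represented as a data structure, and a student's free-text management plan can be checked against it. This is a hypothetical sketch. The seven component names follow the list above, but the detection keywords are assumptions. Simple keyword matching also stands in for whatever language understanding a real chatbot would use.

```python
import re

# The seven Alphabet strategy components as listed in the text.
# The detection keywords for each component are hypothetical.
ALPHABET_COMPONENTS = {
    "Lifestyle advice": {"smoking", "exercise", "diet", "vaccine", "vaccination"},
    "Blood pressure": {"blood pressure", "bp"},
    "Cholesterol": {"cholesterol", "lipid"},
    "Glucose control": {"glucose", "hba1c", "sugar"},
    "Annual eye exam": {"eye", "retinal", "retinopathy"},
    "Annual foot exam": {"foot", "feet"},
    "Guardian drugs": {"aspirin", "ace inhibitor", "arb", "statin"},
}


def alphabet_coverage(plan: str) -> tuple[list[str], list[str]]:
    """Split the checklist into components the plan mentions and those it misses."""
    text = plan.lower()
    covered, missed = [], []
    for component, keywords in ALPHABET_COMPONENTS.items():
        # Whole-word match on each keyword, allowing an optional plural 's'
        hit = any(re.search(rf"\b{re.escape(k)}s?\b", text) for k in keywords)
        (covered if hit else missed).append(component)
    return covered, missed
```

Returning the missed components, rather than a single score, would let the chatbot prompt the student about the specific parts of the checklist they overlooked.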

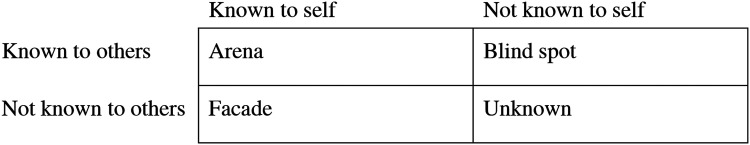

The second major theme identified was the use of a chatbot as a revision tool. Many participants felt that using a chatbot to learn simple pharmacology concepts would make learning more interactive. Participants also felt that an information retrieval chatbot would make revision less time-consuming by helping them locate resources. This is consistent with previous findings6 in which a simple chatbot was created for a tutoring system to answer administrative questions. The ‘Johari Window Model’,17 shown in Figure 3, is applicable to revision. It outlines what is and is not known to oneself and can therefore be applied to the development of a chatbot for medical education. The chatbot could allow students to ‘bypass’ areas of learning in which they feel competent and spend more time on areas that need further development (Figure 4).

Figure 3.

The Johari Window Model.

Figure 4.

Script of running a virtual diabetes clinic using a chatbot.
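The ‘bypass’ idea from the revision-tool discussion can be sketched by combining two signals: what the student believes they know (a self-rating) and what the chatbot observes (quiz performance). This loosely mirrors the known-to-self and known-to-others axes of the Johari Window. Everything here is a hypothetical illustration, including the `Topic` fields, the 0.8 pass mark and the example topics. A topic is bypassed only when both signals agree that the student is competent. A ‘blind spot’, where the student self-rates as competent but scores poorly, therefore stays on the revision list.

```python
from dataclasses import dataclass


@dataclass
class Topic:
    name: str
    self_rated_competent: bool  # what the student believes they know
    quiz_score: float           # chatbot-assessed score from 0.0 to 1.0


PASS_MARK = 0.8  # hypothetical threshold for demonstrated competence


def revision_plan(topics: list[Topic]) -> list[str]:
    """Bypass topics that are both self-rated and demonstrated as competent;
    return the remaining topic names, weakest quiz score first."""
    to_revise = [
        t for t in topics
        if not (t.self_rated_competent and t.quiz_score >= PASS_MARK)
    ]
    return [t.name for t in sorted(to_revise, key=lambda t: t.quiz_score)]
```

For example, a topic rated competent by the student but scoring 0.5 is returned first rather than bypassed, because the quiz exposes a gap the student was not aware of.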

The third theme identified was differential usefulness by medical school year group. The majority of participants felt that first-year students would benefit from using a chatbot to practise history taking. They felt it would provide a safe space and could help build students' confidence before meeting real patients. Interviewing patients using a chatbot has been tested before in the form of a ‘Virtual Patient Bot’,7 to which students typed messages and received replies in text or audio. A subsequent study8 showed that students who used the chatbot attained higher grades than those who did not.

The fourth theme identified was the standardisation of education and assessment. Participants commented that their experiences differ depending on their examiners. A chatbot could therefore provide a standardised experience for each student and limit the personal bias of a human examiner. However, a chatbot would still be limited in the feedback it could provide.

The final theme identified was the challenges of using and implementing a chatbot. Many participants felt that, in a course where patient care is integral to learning, replacing real patients with a chatbot would hinder their development. They felt it would put them at a disadvantage compared with students who had more patient exposure. These perceptions came from participants who had not used a chatbot in their education. Previous research9 has shown that teachers' willingness to promote a technology can positively influence students' response to it, which is relevant to chatbots as an emerging technology.

Strengths and limitations

One strength of the study was the use of focus groups as a methodology. This provided direct interaction with participants, allowing key questions to be asked while exploring a wide range of opinions. The rigorous approach to thematically analysing the dataset provided flexibility to build on and explore ideas in later focus groups. An additional strength was obtaining student perspectives from all year groups of the medical school. Despite shared ideas and opinions, participants from each year contributed personal experiences. These helped identify which student groups, in their opinion, were more likely to benefit from a chatbot and which would not greatly benefit.

There were limitations in the sampling framework used for this study. A convenience sample is a non-probability sampling method, meaning that participants were selected based on their availability. There was therefore a risk that participants would not be representative of the population being investigated.18 However, this was a pilot study and thematic saturation was achieved, as the emerging themes were similar in the later focus groups. No further participants were therefore required.

Despite emailing the entire medical school cohort, uptake of the offer to participate was low. Many of the participants who did take part stated that they were unclear about what a chatbot was and felt intimidated about joining a discussion with limited knowledge. Participants were briefed before the focus groups to reassure them that substantial knowledge about chatbots was not required. In hindsight, this was a limitation, because participants found it difficult to visualise possible uses for a chatbot when certain situations were discussed. Had participants been aware of current chatbot advancements, they might have identified an immediately suitable use of a chatbot for future development. However, as this study was a detailed qualitative analysis, the limited number of participants allowed an in-depth assessment of participants' perceptions.

Another limitation was that no member check was performed. A member check would have allowed participants to validate the data collected, for example by appraising the transcribed discussions from the focus groups.19 However, participants were given opportunities to contact the principal researcher after the focus group.

A final limitation of the study was that the study team conducted the analysis, which introduces a possible element of bias: points may have been selected during analysis to validate a specific theme. However, this risk was reduced because saturation was reached during data collection.

Implications

The conclusions drawn from this pilot qualitative study highlight areas that require further research to confirm its findings. A further qualitative study is needed with a larger sample. This sample should include participants who develop chatbots for education and members of the medical education community who use technology to enhance learning. Such participants would be able to provide clearer insight into the production of a minimum viable product that could be further evaluated. This study has suggested implications for such a product.

The study highlighted that medical students and their educators believe there is scope for integrating a chatbot into the medical curriculum. More importantly, they see potential for it to change or supplement the way medicine is taught today. This study identified a need for medical institutions to promote the use of technology in order to encourage their students to explore the various programmes being developed for students' learning. Medical schools are committed to improving education for their students, and this is one potential way of improving how medicine can be taught outside the lecture hall.

Whilst it is important to understand the limitations and appropriateness of replacing patient contact with a chatbot to improve medical education, it is also important to recognise that students spend a lot of time away from clinical placements. It is widely accepted that medical students learn best by meeting patients and shadowing doctors on the wards. However, there is a clear niche at the beginning of the degree, before clinical placements have been established. A chatbot could help create a safe environment for students to learn and develop their skills before moving into a clinical environment. This study has shed light on areas where a chatbot could be integrated during these transition periods to enhance students' medical school experience.

Conclusion

Chatbots are currently underused in British medical education, and we suggest three reasons for this:

(1) they are not being developed for the specific needs of medical students;
(2) they may not be encouraged by institutions, which often rely on traditional, tried-and-tested methods;
(3) students are unaware that they exist and are therefore unsure how a chatbot could benefit their learning.

The key findings of this study were that there is an opportunity to integrate a chatbot into the British medical curriculum as a patient simulator, a revision tool or a tool to standardise examinations. This study also highlighted that a chatbot would be most useful during the earlier years of medical training rather than the later years. However, owing to the limitations of the study, we may be underestimating the value of chatbots in the later years of medical school, where their value could come from acting as a virtual teaching assistant. Medical students' perceptions of chatbot use need to be urgently addressed through education on their benefits and limitations.

To conclude, the aim of building a chatbot for medical students would be to produce a tool that reduces tutor input and promotes self-directed learning, a goal that many participants expressed during the study. Frequent use of the chatbot would in turn improve competence, as outlined in the General Medical Council's 'Generic Professional Capabilities' framework, particularly in 'creating effective learning opportunities for students and doctors',20 and regular use would increase the performance feedback students receive. This would ultimately improve patient safety. The chatbot could be used as a standard assessment protocol by either (a) the medical school or (b) medical students during independent learning. Chatbots could prove to be valuable tools for medical students. We have demonstrated how a chatbot can be implemented in medical education to run a virtual diabetes clinic, using the Alphabet Strategy to manage patients with diabetes during the COVID-19 pandemic. By engaging with chatbots, students can regularly practise the key skills and knowledge required of them as competent NHS doctors, which is especially important given that the COVID-19 pandemic has disrupted medical education globally.

Footnotes

Contributorship: All listed authors have (1) made substantial contributions to conception and design, acquisition of data, or analysis and interpretation of data; (2) drafted the article or revised it critically for important intellectual content; and (3) approved the final version to be published.

Declaration of Conflicting Interests: The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Disclaimers: This article formed part of the medical studies of AK at the University of Warwick and was submitted as part of an ongoing assessment.

Ethical approval: Ethical approval for the methodology was received from the Biomedical & Scientific Research Ethics Committee (BSREC) at the University of Warwick (ref: BSREC-CDA-SSC2-2019-46 (02/10/2019)). Before the focus groups began, written and verbal consent was obtained from each participant. All focus groups took place in private settings and were recorded on an encrypted recording device issued by Warwick Medical School. All focus groups were transcribed with participants' identities kept anonymous.

ORCID iDs: Anjuli Kaur https://orcid.org/0000-0002-7803-306X

Tim Robbins https://orcid.org/0000-0002-5230-8205

References

- 1. Echo and Alexa Devices by Amazon.co.uk. Amazon.co.uk. https://www.amazon.co.uk/Echo-and-Alexa-Devices/b?ie=UTF8&node=10983873031. Published 2020. Accessed May 31, 2020.

- 2. Healthcare Chatbots Market – Global Opportunity Analysis and Industry Forecast (2019–2025). Meticulous Research. https://www.meticulousresearch.com/product/healthcare-chatbots-market-4962/. Published 2020. Accessed May 31, 2020.

- 3. https://www.babylonhealth.com/product. Published 2020. Accessed May 31, 2020.

- 4. Chase T, Julius A, Chandan J, et al. Mobile learning in medicine: an evaluation of attitudes and behaviours of medical students. BMC Med Educ 2018; 18.

- 5. Kononowicz A, Woodham L, Edelbring S, et al. Virtual patient simulations in health professions education: systematic review and meta-analysis by the digital health education collaboration. J Med Internet Res 2019; 21: e14676.

- 6. Kazi H, Chowdhry S, Memon Z. Medchatbot: an UMLS based chatbot for medical students. Int J Comput Appl 2012; 55: 1–5.

- 7. Webber GM. Data representation and algorithms for biomedical informatics applications. PhD thesis, Harvard University, 2005.

- 8. Kerfoot B, Baker H, Jackson T, et al. A multi-institutional randomized controlled trial of adjuvant web-based teaching to medical students. Acad Med 2006; 81: 224–230.

- 9. P. KB, Too J, Mukwa C. Teacher attitude towards use of chatbots in routine teaching. Univers J Educ Res 2018; 6: 1586–1597.

- 10. Nair R, Morrissey K, Carasco D, et al. COMET: clinically observed medical education tutorial – a novel educational method in clinical skills. Int J Clin Skills 2007; 1: 25–29.

- 11. Ritchie J, Spencer L. Qualitative data analysis for applied policy research. In: The Qualitative Researcher's Companion. 1994, pp. 305–329.

- 12. Palanica A, Flaschner P, Thommandram A, et al. Physicians' perceptions of chatbots in health care: cross-sectional web-based survey. J Med Internet Res 2019; 21: e12887.

- 13. Innes AD, Campion PD, Griffiths FE. Complex consultations and the 'edge of chaos'. Br J Gen Pract 2005; 55: 47–52.

- 14. Fadhil A, Schiavo G. Designing for health chatbots. arXiv.org, 2021. https://arxiv.org/abs/1902.09022. Accessed July 8, 2021.

- 15. Diabetes.co.uk. Diabetes prevalence. 2020. https://www.diabetes.co.uk/diabetesprevalence.html#:~:text=It%20is%20estimated%20that%20415,with%20diabetes%20worldwide%20by%202040. Accessed July 16, 2020.

- 16. Lee J, Saravanan P, Patel V. Alphabet strategy for diabetes care: a multi-professional, evidence-based, outcome-directed approach to management. World J Diabetes 2015; 6: 874.

- 17. Luft J, Ingham H. The Johari window, a graphic model of interpersonal awareness. In: Proceedings of the Western Training Laboratory in Group Development. Los Angeles: University of California, Los Angeles, 1955.

- 18. Elfil M, Negida A. Sampling methods in clinical research; an educational review. Emergency (Tehran, Iran) 2017; 5: e52.

- 19. Birt L, Scott S, Cavers D, et al. Member checking. Qual Health Res 2016; 26: 1802–1811.

- 20. General Medical Council. Generic professional capabilities framework. gmc-uk.org, 2021. https://www.gmc-uk.org/-/media/documents/generic-professional-capabilities-framework--0817_pdf-70417127.pdf#page=10. Accessed July 8, 2021.