Abstract

This Campbell systematic review examines the impact of class size on academic achievement. The review summarises findings from 148 reports from 41 countries. Ten studies were included in the meta‐analysis.

Included studies concerned children in grades kindergarten to 12 (or the equivalent in European countries) in general education. The primary focus was on measures of academic achievement. All study designs that used a well‐defined control group were eligible for inclusion.

A total of 127 studies, reported in 148 papers, met the inclusion criteria. These 127 studies analysed 55 different populations from 41 different countries. A large number of studies (45) analysed data from the Student Teacher Achievement Ratio (STAR) experiment, a class size reduction trial in grades K‐3 conducted in the US in the 1980s. However, only ten studies, including four analysing STAR data, could be included in the meta‐analysis.

Overall, the evidence suggests at best a small effect on reading achievement. There is a negative, but statistically insignificant, effect on mathematics.

For the non‐STAR studies the primary study effect sizes for reading were close to zero but the weighted average was positive and statistically significant. There was some inconsistency in the direction of the primary study effect sizes for mathematics and the weighted average effect was negative and statistically non‐significant.

The STAR results are more positive, but do not change the overall finding. All reported results from the studies analysing STAR data indicated a positive effect of smaller class sizes for both reading and maths, but the average effects are small.

Plain language summary

Small class size has at best a small effect on academic achievement

Reducing class size is seen as a way of improving student performance. But larger class sizes help control education budgets. The evidence suggests at best a small effect on reading achievement. There is a negative, but statistically insignificant, effect on mathematics, so it cannot be ruled out that some children may be adversely affected.

What is this review about?

Increasing class size is one of the key variables that policy makers can use to control spending on education.

But the consensus among many in education research is that smaller classes are effective in improving student achievement, which has led to a policy of class size reductions in a number of US states, the UK, and the Netherlands. This policy is disputed by those who argue that the effects of class size reduction are only modest and that there are other more cost‐effective strategies for improving educational standards.

Despite the important policy and practice implications of the topic, the research literature on the educational effects of class‐size differences has not been clear.

This review systematically reports findings from relevant studies that measure the effects of class size on academic achievement.

What is the aim of this review?

This Campbell systematic review examines the impact of class size on academic achievement. The review summarises findings from 148 reports from 41 countries. Ten studies were included in the meta‐analysis.

What are the main findings of this review?

What studies are included?

Included studies concerned children in grades kindergarten to 12 (or the equivalent in European countries) in general education. The primary focus was on measures of academic achievement. All study designs that used a well‐defined control group were eligible for inclusion.

A total of 127 studies, reported in 148 papers, met the inclusion criteria. These 127 studies analysed 55 different populations from 41 different countries. A large number of studies (45) analysed data from the Student Teacher Achievement Ratio (STAR) experiment, a class size reduction trial in grades K‐3 conducted in the US in the 1980s. However, only ten studies, including four analysing STAR data, could be included in the meta‐analysis.

What are the main results?

Overall, the evidence suggests at best a small effect on reading achievement. There is a negative, but statistically insignificant, effect on mathematics.

For the non‐STAR studies the primary study effect sizes for reading were close to zero but the weighted average was positive and statistically significant. There was some inconsistency in the direction of the primary study effect sizes for mathematics and the weighted average effect was negative and statistically non‐significant.

The STAR results are more positive, but do not change the overall finding. All reported results from the studies analysing STAR data indicated a positive effect of smaller class sizes for both reading and maths, but the average effects are small.

What do the findings of this review mean?

There is some evidence to suggest that there is an effect of reducing class size on reading achievement, although the effect is very small. There is no significant effect on mathematics achievement, though the average is negative, meaning that a possible adverse impact on some students cannot be ruled out.

The overall reading effect corresponds to a 53 per cent chance that a randomly selected score of a student from the treated population of small classes is greater than the score of a randomly selected student from the comparison population of larger classes. This is a very small effect.

Class size reduction is costly. The available evidence points to no or only very small effect sizes of small classes in comparison to larger classes. Moreover, we cannot rule out the possibility that small classes may be counterproductive for some students. It is therefore crucial to know more about the relationship between class size and achievement in order to determine where money is best allocated.

How up‐to‐date is this review?

The review authors searched for studies published up to February 2017. This Campbell systematic review was published in 2018.

Executive Summary/Abstract

BACKGROUND

Increasing class size is one of the key variables that policy makers can use to control spending on education. Reducing class size to increase student achievement is an approach that has been tried, debated, and analysed for several decades. Despite the important policy and practice implications of the topic, the research literature on the educational effects of class‐size differences has not been clear.

The consensus among many in education research that smaller classes are effective in improving student achievement has led to a policy of class size reductions in a number of U.S. states, the United Kingdom, and the Netherlands. This policy is disputed by those who argue that the effects of class size reduction are only modest and that there are other more cost‐effective strategies for improving educational standards.

OBJECTIVES

The purpose of this review is to systematically uncover relevant studies in the literature that measure the effects of class size on academic achievement. We will synthesize the effects in a transparent manner and, where possible, we will investigate the extent to which the effects differ among different groups of students such as high/low performers, high/low income families, or members of minority/non‐minority groups, and whether timing, intensity, and duration have an impact on the magnitude of the effect.

SEARCH METHODS

Relevant studies were identified through electronic searches of bibliographic databases, internet search engines and hand searching of core journals. Searches were carried out to February 2017. We searched to identify both published and unpublished literature. The searches were international in scope. Reference lists of included studies and relevant reviews were also searched.

SELECTION CRITERIA

The intervention of interest was a reduction in class size. We included children in grades kindergarten to 12 (or the equivalent in European countries) in general education. The primary focus was on measures of academic achievement. All study designs that used a well‐defined control group were eligible for inclusion. Studies that utilized qualitative approaches were not included.

DATA COLLECTION AND ANALYSIS

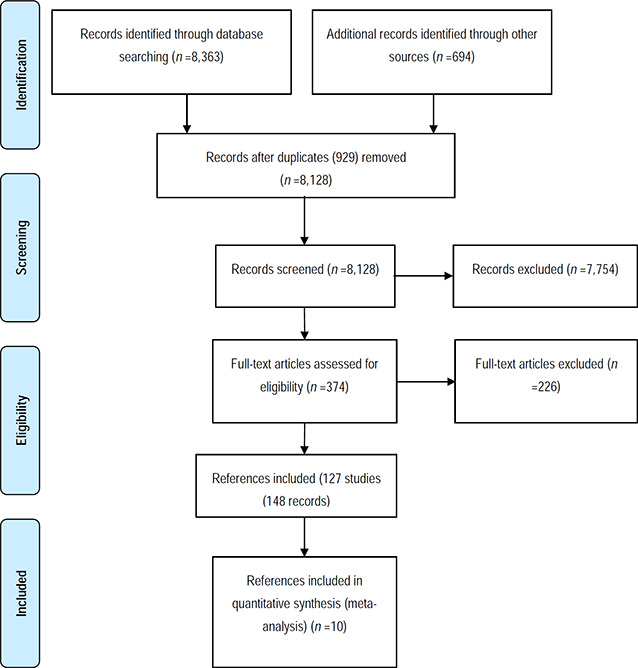

The searches returned a total of 8,128 potentially relevant hits. A total of 127 studies, reported in 148 papers, met the inclusion criteria and were critically appraised by the review authors. The 127 studies analysed 55 different populations from 41 different countries.

A large number of studies (45) analysed data from the STAR experiment (class size reduction in grade K‐3) and its follow up data.

Of the 82 studies not analysing data from the STAR experiment, only six could be used in the data synthesis. Fifty‐eight studies could not be used in the data synthesis as they were judged to have too high a risk of bias, due either to confounding (51), other sources of bias (4) or selective reporting of results (3). Eighteen studies did not provide enough information to enable us to calculate an effect size and standard error, or did not provide results in a form that we could use in the data synthesis.

Meta‐analysis was used to examine the effects of class size on student achievement in reading and mathematics. Random effects models were used to pool data across the studies not analysing STAR data. Pooled estimates were weighted using inverse variance methods, and 95% confidence intervals were estimated. Effect sizes were measured as standardised mean differences (SMD). It was only possible to perform a meta‐analysis by the end of the treatment year (end of the school year).
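The pooling step described above can be sketched as follows. This is a minimal illustration, not the review's actual computation: the DerSimonian‐Laird estimator of the between‐study variance is an assumption (the text only states that random effects models with inverse variance weights were used), and the effect sizes and standard errors in the example are made‐up values, not data from the included studies.

```python
import math

def random_effects_pool(effects, std_errors):
    """Pool standardised mean differences with inverse-variance weights.

    The between-study variance tau^2 is estimated with the
    DerSimonian-Laird method (an assumption made for this sketch).
    Returns (pooled SMD, (lower, upper) 95% CI, tau^2).
    """
    k = len(effects)
    variances = [se ** 2 for se in std_errors]
    w = [1.0 / v for v in variances]                      # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)

    # Cochran's Q measures the heterogeneity around the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's sampling variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    z = 1.959963984540054                                 # 97.5th normal percentile
    return pooled, (pooled - z * se, pooled + z * se), tau2

# Hypothetical study-level SMDs and standard errors (illustration only).
example_effects = [0.05, 0.12, -0.02, 0.20]
example_ses = [0.04, 0.06, 0.05, 0.08]

pooled, (lower, upper), tau2 = random_effects_pool(example_effects, example_ses)
print(f"pooled SMD = {pooled:.3f}, 95% CI {lower:.3f} to {upper:.3f}, tau^2 = {tau2:.4f}")
```

When the estimated between‐study variance is zero, the random effects weights reduce to the fixed‐effect inverse variance weights, so the two models give the same pooled estimate.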

Four of the studies analysing STAR data provided effect estimates that could be used in the data synthesis. The four studies differed in terms of both the chosen comparison condition and decision rules in selecting a sample for analysis. Which of these four studies' effect estimates should be included in the data synthesis was not obvious as the decision rule (concerning studies using the same data set) as described in the protocol could not be used. Contrary to usual practice we therefore report the results of all four studies and do not pool the results with the studies not analysing STAR data except in the sensitivity analysis. We took into consideration the ICC in the results reported for the STAR experiment and corrected the effect sizes and standard errors using ρ = 0.22. No adjustment due to clustering was necessary for the studies not analysing STAR data.
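To indicate why an intraclass correlation of ρ = 0.22 matters, the sketch below applies the generic design effect, 1 + (m − 1)ρ, to a standard error. This is an assumption made for illustration and is not necessarily the correction formula used in the review, which is not given in this section. The cluster size of 15 students and the naive standard error are hypothetical.

```python
import math

def design_effect(avg_cluster_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * rho."""
    return 1.0 + (avg_cluster_size - 1.0) * icc

def cluster_adjusted_se(naive_se, avg_cluster_size, icc):
    """Inflate a standard error that ignored the clustering of students in classes."""
    return naive_se * math.sqrt(design_effect(avg_cluster_size, icc))

RHO = 0.22        # ICC stated in the text
CLASS_SIZE = 15   # hypothetical average number of students per class
NAIVE_SE = 0.05   # hypothetical standard error that ignores clustering

deff = design_effect(CLASS_SIZE, RHO)
print(f"design effect = {deff:.2f}")
print(f"adjusted SE   = {cluster_adjusted_se(NAIVE_SE, CLASS_SIZE, RHO):.3f}")
```

Under these assumptions the variance is inflated roughly fourfold, so a standard error that ignores clustering would understate the uncertainty by about a factor of two.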

Sensitivity analysis was used to evaluate whether the pooled effect sizes were robust across components of methodological quality, in relation to inclusion of a primary study result with an unclear sign, inclusion of effect sizes from the STAR experiment and to using a one‐student reduction in class size in studies using class size as a continuous variable.

RESULTS

All studies not analysing STAR data reported outcomes at the end of the treatment (end of the school year) only. The STAR experiment was a four‐year longitudinal study with outcomes reported at the end of each school year. The experiment was conducted to assess the effectiveness of small classes compared with regular‐sized classes and of teachers' aides in regular‐sized classes on improving cognitive achievement in kindergarten and in the first, second, and third grades. The goal of the STAR experiment was to have approximately 100 small classes with 13‐17 students (S), 100 regular classes with 22‐25 students (R), and 100 regular with aide classes with 22‐25 students (RA).

Of the six studies not analysing STAR data, only five were used in the meta‐analysis as the direction of the effect size in one study was unclear. The studies were from the USA, the Netherlands and France; one was an RCT and five were NRS. The grades investigated spanned kindergarten to grade 3, and one study investigated grade 10. The sample sizes varied; the smallest study investigated 104 students and the largest study investigated 11,567 students. The class size reductions varied from a minimum of one student in four studies, through a minimum of seven students in another study, to a minimum of eight students in the last study.

All outcomes were scaled such that a positive effect size favours the students in small classes, i.e. when an effect size is positive a class size reduction improves the students' achievement.

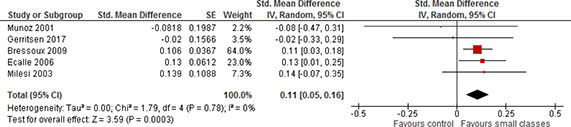

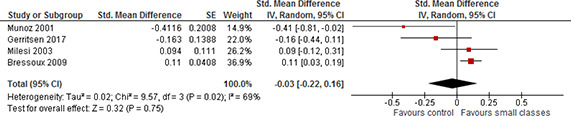

Primary study effect sizes for reading lay in the range ‐0.08 to 0.14. Three of the study‐level effects were statistically non‐significant. The weighted average was positive and statistically significant. The random effects weighted standardised mean difference was 0.11 (95% CI 0.05 to 0.16), which may be characterised as small. There is some inconsistency in the direction of the effect sizes between the primary studies.

Primary study effect sizes for mathematics lay in the range ‐0.41 to 0.11. Two of the study‐level effects were statistically non‐significant. The weighted average was negative and statistically non‐significant. The random effects weighted standardised mean difference was ‐0.03 (95% CI ‐0.22 to 0.16). There is some inconsistency in the direction as well as the magnitude of the effect sizes between the primary studies.

All reported results from the four studies analysing STAR data indicated a positive effect favouring the treated; all of the study‐level effects were statistically significant. The study‐level effect sizes for reading varied between 0.17 and 0.34 and the study‐level effect sizes for mathematics varied between 0.15 and 0.33.

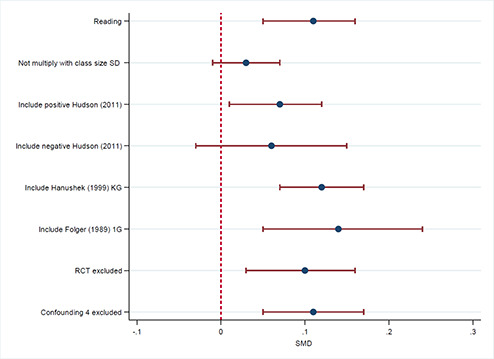

There were no appreciable changes in the results when we included the extremes of the range of effect sizes from the STAR experiment. The reading outcome lost statistical significance when the effect size from the primary study reporting a result with an unclear direction was included with a negative sign and when the results from the studies using class size as a continuous variable were included with a one student reduction in class size instead of a standard deviation reduction in class size.

Otherwise, there were no appreciable changes in the results.

AUTHORS’ CONCLUSIONS

There is some evidence to suggest that there is an effect of reducing class size on reading achievement, although the effect is very small. We found a statistically significant positive effect of reducing the class size on reading. The effect on mathematics achievement was not statistically significant, thus it is uncertain if there may be a negative effect.

The overall reading effect corresponds to a 53 per cent chance that a randomly selected score of a student from the treated population of small classes is greater than the score of a randomly selected student from the comparison population of larger classes. The overall effect on mathematics achievement corresponds to a 49 per cent chance that a randomly selected score of a student from the treated population of small classes is greater than the score of a randomly selected student from the comparison population of larger classes.
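The percentages above can be reproduced from the pooled standardised mean differences with a common conversion, sketched below. It assumes that scores in both populations are normally distributed with equal variance, in which case the probability that a randomly selected treated score exceeds a randomly selected comparison score is Φ(d/√2). The conversion actually used by the review authors is not stated in this section, so this is an assumption; the function name is illustrative.

```python
import math

def probability_of_superiority(smd):
    """P(treated score > comparison score) under equal-variance normality.

    Phi(d / sqrt(2)) written with the error function:
    Phi(x) = 0.5 * (1 + erf(x / sqrt(2))), so Phi(d / sqrt(2)) = 0.5 * (1 + erf(d / 2)).
    """
    return 0.5 * (1.0 + math.erf(smd / 2.0))

# Pooled SMDs reported in the results: 0.11 for reading, -0.03 for mathematics.
for label, smd in [("reading", 0.11), ("mathematics", -0.03)]:
    chance = probability_of_superiority(smd)
    print(f"{label}: SMD {smd:+.2f} -> {100 * chance:.0f} per cent")
```

An effect size of zero corresponds to exactly 50 per cent, which is why values of 53 and 49 per cent indicate very small differences between the two populations.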

Class size reduction is costly and the available evidence points to no or only very small effect sizes of small classes in comparison to larger classes. Taking the individual variation in effects into consideration, we cannot rule out the possibility that small classes may be counterproductive for some students. It is therefore crucial to know more about the relationship between class size and achievement and how it influences what teachers and students do in the classroom in order to determine where money is best allocated.

1 Background

1.1 THE PROBLEM, CONDITION OR ISSUE

Increasing class size is one of the key variables that policy makers can use to control spending on education. The average class size at the lower secondary level is 23 students in OECD countries, but there are significant differences, ranging from over 32 in Japan and Korea to 19 or below in Estonia, Iceland, Luxembourg, Slovenia and the United Kingdom (OECD, 2012). On the other hand, reducing class size to increase student achievement is an approach that has been tried, debated, and analysed for several decades. Between 2000 and 2009, many countries invested additional resources to decrease class size (OECD, 2012).

Despite the important policy and practice implications of the topic, the research literature on the educational effects of class‐size differences has not been clear. A large part of the research on the effects of class size has found that smaller class sizes improve student achievement (for example Finn & Achilles, 1999; Konstantopoulos, 2009; Molnar et al., 1999; Schanzenbach, 2007). The consensus among many in education research that smaller classes are effective in improving student achievement has led to a policy of class size reductions in a number of U.S. states, the United Kingdom, and the Netherlands. This policy is disputed by those who argue that the effects of class size reduction are only modest and that there are other more cost‐effective strategies for improving educational standards (Hattie, 2005; Hedges, Laine, & Greenwald, 1994; Rivkin, Hanushek, & Kain, 2005). There is no consensus in the literature as to whether class size reduction can pass a cost‐benefit test (Dustmann, Rajah & van Soest, 2003; Dynarski, Hyman & Schanzenbach, 2011; Finn, Gerber & Boyd‐Zaharias, 2005; Muenning & Woolf, 2007).

As it is costly to reduce class size, it is important to consider the types of students who might benefit most from smaller class sizes and to consider the timing, intensity, and duration of class size reduction as well. Low socioeconomic status is strongly associated with low school performance. Results from the Programme for International Student Assessment (PISA) point to the fact that most of the students who perform poorly in PISA are from socio‐economically disadvantaged backgrounds (OECD, 2010). Across OECD countries, a student from a more socio‐economically advantaged background outperforms a student from an average background by about one year's worth of education in reading, and by even more in comparison to students with low socio‐economic background. Results from PISA also show that some students with low socioeconomic status excel in PISA, demonstrating that overcoming socio‐economic barriers to academic achievement is indeed possible (OECD, 2010).

Smaller class size has been shown to be more beneficial for students from socioeconomically disadvantaged backgrounds (Biddle & Berliner, 2002). Evidence from the Tennessee STAR randomised controlled trial showed that minority students, students living in poverty, and students who were educationally disadvantaged benefitted the most from reduced class size (Finn, 2002; Word et al., 1994). Further, evidence from a controlled, though not randomised, trial, Wisconsin's Student Achievement Guarantee in Education (SAGE) program, showed that students from minority and low‐income families benefitted the most from reduced class size (Molnar et al., 1999). Thus, rather than implementing costly universal class size reduction policies, it may be more economically efficient to target schools with high concentrations of socioeconomically disadvantaged students for class size reductions.

In the case of the timing of class size reduction, the question is: when does class size reduction have the largest effect? Ehrenberg, Brewer, Gamoran and Willms (2001) hypothesized that students educated in small classes during the early grades may be more likely to develop working habits and learning strategies that enable them to better take advantage of learning opportunities in later grades. According to Bascia and Fredua‐Kwarteng (2008), researchers agree that class size reduction is most effective in the primary grades. Biddle and Berliner (2002) also conclude that empirical research shows class size reduction to be most effective in the early grades, and the evidence from both STAR and SAGE backs this conclusion up (Finn, Gerber, Achilles, & Boyd‐Zaharias, 2001; Smith, Molnar, & Zahorik, 2003). Of course, there is still the possibility that smaller classes may also be advantageous at later strategic points of transition, for example, in the first year of secondary education. Research evidence on this possibility is, however, needed.

For intensity, the question is: how small does a class have to be in order to optimize the advantage? For example, large gains are attainable when class size is below 20 students (Biddle & Berliner, 2002; Finn, 2002) but gains are also attainable if class size is not below 20 students (Angrist & Lavy, 2000; Borland, Howsen & Trawick, 2005; Fredrikson, Öckert & Oosterbeek, 2013; Schanzenbach, 2007). It has been argued that the impact of class size reduction of different sizes and from different baseline class sizes is reasonably stable and more or less linear when measured per student (Angrist & Pischke, 2009, see page 267; Schanzenbach, 2007). Other researchers argue that the effect of class size is not only non‐linear but also non‐monotonic, implying that an optimal class size exists (Borland, Howsen & Trawick, 2005). Thus, the question of whether the size of reduction and initial class size matters for the magnitude of gain from small classes is still an open question.

Finally, researchers agree that the length of the intervention (number of years spent in small classes) is linked with the sustainability of benefits (Biddle & Berliner, 2002; Finn, 2002; Grissmer, 1999; Nye, Hedges & Konstantopoulos, 1999), whereas the evidence on whether more years spent in small classes leads to larger gains in academic achievement is mixed (Biddle & Berliner, 2002; Egelson, Harman, Hood & Achilles, 2002; Finn, 2002; Krueger, 1999). How long a student should remain in a small class before eventually returning to a class of regular size is an unanswered question.

1.2 THE INTERVENTION

The intervention in this systematic review is a reduction in class size. What constitutes a reduced class size? This seemingly simple issue has confounded the understanding of outcomes of the research and it is one of the reasons there is disagreement about whether class size reduction works (Graue, Hatch, Rao & Oen, 2007).

Two terms are used to describe the intervention, class size and student‐teacher ratio, and it is important to distinguish between these two terms. The first, class size, focuses on reducing group size and, hence, is operationalized as the number of students a teacher instructs in a classroom at a point in time. For this definition, a reduced number of students are assigned to a class in the belief that teachers will then develop an in‐depth understanding of student learning needs through more focused interactions, better assessment, and fewer disciplinary problems. These mechanisms are based on the dynamics of a smaller group (Ehrenberg et al., 2001). The second term is student‐teacher ratio and is often used as a proxy for class size, defined as a school's total student enrollment divided by the number of its full time teachers.

From this perspective, lowering the ratio of students to teachers provides enhanced opportunities for learning. The concept of using student‐teacher ratios as a proxy for class size is based on a view of teachers as units of expertise and is less focused on the student‐teacher relationship. Increasing the relative units of expertise available to students increases learning, but does not rely on particular teacher‐student interactions (Graue et al., 2007).

Although class size and student‐teacher ratio are related, they involve different assumptions about how a reduction changes the opportunities for students and teachers. In addition, the discrepancy between the two can vary depending on teachers' roles and the amount of time teachers spend in the classroom during the school day.
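The difference between the two measures can be made concrete with a small calculation. The school below is entirely hypothetical; the point is only that the student‐teacher ratio, computed from enrollment and full‐time teachers as defined above, can be noticeably lower than the number of students a teacher actually faces in a classroom when not every teacher is teaching a class at the same time.

```python
def student_teacher_ratio(enrollment, full_time_teachers):
    """School-level ratio: total enrollment divided by full-time teachers."""
    return enrollment / full_time_teachers

def average_class_size(enrollment, classes_taught_at_once):
    """Average number of students per classroom at a point in time."""
    return enrollment / classes_taught_at_once

# Hypothetical school: 300 students and 15 full-time teachers, of whom
# only 12 are teaching a class at any given time (the others are
# planning, supervising or providing specialist support).
ENROLLMENT = 300
TEACHERS = 15
CLASSES_AT_ONCE = 12

print("student-teacher ratio:", student_teacher_ratio(ENROLLMENT, TEACHERS))
print("average class size:   ", average_class_size(ENROLLMENT, CLASSES_AT_ONCE))
```

In this made‐up case the ratio is 20 students per teacher while the average class holds 25 students, which illustrates why the ratio is only a proxy for class size.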

In this review, the intervention is class size reduction. Studies only considering average class size measured as student‐teacher ratio at school level (or higher levels) will not be eligible. Neither will studies where the intervention is the assignment of an extra teacher (or teaching assistants or other adults) to a class be eligible. The assignment of additional teachers (or teaching assistants or other adults) to a classroom is not the same as reducing the size of the class, and this review focuses exclusively on the effects of class size in the sense of number of students in a classroom.

1.3 HOW THE INTERVENTION MIGHT WORK

Smaller classes allow teachers to adapt their instruction to the needs of individual students. For example, teachers' instruction can be more easily adapted to the development of the individual students. The concept of adaptive education refers to instruction that is adapted to meet the individual needs and abilities of students (Houtveen, Booij, de Jong & van de Grift, 1999). With adaptive education, some students receive more time, instruction, or help from the teacher than other students.

Research has shown that in smaller classes, teachers have more time and opportunity to give individual students the attention they need (Betts & Shkolnik, 1999; Blatchford & Mortimore, 1994; Bourke, 1986; Molnar et al., 1999; Molnar et al., 2000; Smith & Glass, 1980). Additionally, less pressure may be placed upon the physical space and resources within the classroom. Both of these factors may be connected to less of the pupil misbehaviour and fewer of the disciplinary problems detected in larger classes (Wilson, 2002).

In smaller classes, it is possible for students with low levels of ability to receive more attention from the teacher, with the result that not all students necessarily profit equally. More generally, teachers are able to devote more of their time to educational content (the tasks students must complete) and less to classroom management (for example, maintaining order) in smaller classes. An increased amount of time spent on task contributes to enhanced academic achievement.

It has often been pointed out, however, that teachers do not necessarily change the way they teach when faced with smaller classes and therefore do not take advantage of all of the benefits offered by a smaller class size. Research suggests that such situations do indeed exist in practice (e.g. Blatchford & Mortimore, 1994; Shapson, Wright, Eason & Fitzgerald, 1980).

Anderson (2000) addressed the question of why reductions in class size should be expected to enhance student achievement and part of his theory was tested in Annevelink, Bosker and Doolaard (2004). To explain the relationship between class size and achievement, Anderson developed a causal model, which starts with reduced class size and ends with student achievement. Anderson noted that small classes would not, in and of themselves, solve all educational problems. The number of students in a classroom can have only an indirect effect on student achievement. As Zahorik (1999) states: “Class size, of course, cannot influence academic achievement directly. It must first influence what teachers and students do in the classroom before it can possibly affect student learning” (p. 50). In other words, what teachers do matters. Anderson's causal model of the effect of reduced class size on student achievement is depicted in Figure 1.

Figure 1. An explanation of the impact of class size on student achievement (Anderson, 2000).

Anderson's model predicts that a reduced class size will have direct positive effects on the following three variables: 1) Disciplinary problems, 2) Knowledge of student, and 3) Teacher satisfaction and enthusiasm. Each of these variables, in turn, begins a separate path. Fewer disciplinary problems are expected to lead to more instructional time, which in combination with teacher knowledge of the external test, produces greater opportunity to learn. In combination with more appropriate, personalised instruction and greater teacher effort, more instructional time potentially produces greater student engagement in learning as well as more in‐depth treatment of content.

Greater knowledge of students is expected to provide more appropriate personalised instruction, and in combination with more instructional time and greater teacher effort, potentially produces greater student engagement in learning and more in‐depth treatment of content.

Greater teacher satisfaction and enthusiasm are expected to result in greater teacher effort, which in combination with more instructional time and more appropriate, personalised instruction produces greater student engagement in learning and more in‐depth treatment of content.

Finally, greater student achievement is the expected result of a combination of the three variables: greater opportunity to learn, greater student engagement in learning, and more in‐depth treatment of content.

The path from greater knowledge of students through appropriate, personalised instruction and student engagement in learning to student achievement was tested in Annevelink et al. (2004) on students in Grade 1 in 46 Dutch schools in the school year 1999‐2000. Personalised instruction is operationalised as the number of specific types of interactions. Teachers seeking to provide more personalised instruction are expected to engage in fewer organizational and personal interactions and in more task‐directed and praising interactions. These changes in interactions are expected to result in students spending more time on task.

The level of student engagement is operationalised as the amount of time a student spends on task. Students who spend more time on task are expected to achieve higher learning results.

Smaller classes were related to more interactions of all kinds, and more task‐directed and praising interactions resulted in more time spent on task, which in turn was related to higher student achievement, as expected. Note that the increase in organizational and personal interactions in smaller classes was contrary to expectations, whereas the increase in task‐directed and praising interactions was consistent with expectations (Annevelink et al., 2004).

1.4 WHY IT IS IMPORTANT TO DO THE REVIEW

Class size is one of the most researched educational interventions in social science, yet there is no clear consensus on the effectiveness of small class sizes for improving student achievement. While one strand of class size research points to small and insignificant effects of smaller classes, another points to positive and significant effects on student achievement of smaller classes.

The early meta‐analysis by Glass and Smith (1979) analysed the outcomes of 77 studies including 725 comparisons between smaller and larger class sizes on student achievement. They concluded that a class size reduction had a positive effect on student achievement. Hedges and Stock (1983) reanalysed Glass and Smith's data using different statistical methods, but found very little difference in the average effect sizes across the two analysis methods.

However, the updated literature reviews by Hanushek (Hanushek, 1989; 1999; 2003) cast doubt on these findings. His reviews looked at 276 estimates of pupil‐teacher ratios as a proxy for class size, and most of these estimates pointed to insignificant effects. Based on a vote counting method, Hanushek concluded that “there is no strong or consistent relationship between school resources and student performance” (Hanushek, 1989, p. 47). Krueger (2003), however, points out that Hanushek relies too much on a few studies, which reported many estimates from even smaller subsamples of the same dataset. Many of the 276 estimates were from the same dataset but estimated on several smaller subsamples, and these many small sample estimates are more likely to be insignificant. The vote counting method used in Hanushek's original literature review (Hanushek, 1989) is also criticised by Hedges et al. (1994), who offer a reanalysis of the data from Hanushek's reviews using more sophisticated synthesis methods. Hedges et al. (1994) used a combined significance test. They tested two null hypotheses: 1) no positive relation between the resource and output and 2) no negative relation between the resource and output. The tests determine whether the data are consistent with the null hypothesis being true in all studies or whether it is false in at least some of the studies. Further, Hedges et al. (1994) reported the median standardized regression coefficient. The conclusion is that “it shows systematic positive relations between resource inputs and school outcomes” (Hedges et al., 1994, p. 5). Hence, depending on which synthesis method is considered appropriate, conclusions based on the same evidence are quite different.
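The contrast between vote counting and a combined significance test can be illustrated with a small sketch. Fisher's inverse chi‐square method is used here as one well‐known example of a combined significance test; whether it matches the exact procedure of Hedges et al. (1994) is an assumption. The p‐values are hypothetical one‐sided values chosen for illustration and are not taken from any of the reviewed estimates.

```python
import math

def vote_count(p_values, alpha=0.05):
    """Number of estimates that are individually significant at level alpha."""
    return sum(p < alpha for p in p_values)

def fisher_combined_p(p_values):
    """Fisher's method: -2 * sum(ln p) follows chi-square with 2k df under H0.

    For even degrees of freedom the chi-square survival function has the
    closed form exp(-x/2) * sum_{i<k} (x/2)^i / i!, so no external
    statistics library is needed.
    """
    k = len(p_values)
    half_stat = -sum(math.log(p) for p in p_values)   # equals statistic / 2
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half_stat / i
        total += term
    return math.exp(-half_stat) * total

# Five hypothetical small-sample estimates, each with a one-sided p-value
# of 0.10: none is significant on its own at the 5 per cent level.
p_values = [0.10] * 5

print("significant votes:", vote_count(p_values), "of", len(p_values))
print(f"combined p-value:  {fisher_combined_p(p_values):.4f}")
```

In this made‐up example, vote counting records no significant estimates, whereas the combined test rejects the null hypothesis that the effect is absent in all five, which mirrors how the two synthesis methods can reach different conclusions from the same evidence.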

The divergent conclusions of the above‐mentioned reviews are further based on non‐experimental evidence, combining measurements from primary studies that have different specifications and assumptions. According to Grissmer (1999), the different specifications and assumptions, as well as the appropriateness of the specifications and assumptions, account for the inconsistency of the results of the primary studies.

The Tennessee STAR experiment provides rare evidence of the effect of class size from a randomized controlled trial (RCT). The STAR experiment was implemented in Tennessee in the 1980s, assigning kindergarten children to either normal sized classes (around 22 students) or small classes (around 15 students). The study ran for four years, until the assigned children reached third grade. Yet even with this kind of evidence, researchers do not agree about the conclusion.

According to Finn and Achilles (1990), Nye et al. (1999) and Krueger (1999), STAR results show that class size reduction increased student achievement. However, Hanushek (1999; 2003) questions these results because of attrition from the project, crossover between treatments, and selective test taking, which may have violated the initial randomization.

While the class size debate on what can be concluded based on the same evidence is acceptable and meaningful in the research community, it is probably of less help in guiding decision‐makers and practitioners. If research is to inform practice, there must be an attempt to reach some agreement about what the research does and does not tell us about the effectiveness of interventions as well as what conclusions can be reasonably drawn from research. The researchers must reach a better understanding of questions such as: for whom does class size reduction have an effect? When does class size reduction have an effect on student achievement? How small does a class have to be in order to be advantageous?

The purpose of this review is to systematically uncover relevant studies in the literature that measure the effects of class size on academic achievement and synthesize the effects in a transparent manner.

2 Objectives

The purpose of this review is to systematically uncover relevant studies in the literature that measure the effects of class size on academic achievement. We will synthesize the effects in a transparent manner and, where possible, we will investigate the extent to which the effects differ among different groups of students such as high/low performers, high/low income families, or members of minority/non‐minority groups, and whether timing, intensity, and duration have an impact on the magnitude of the effect.

3 Methods

3.1 TITLE REGISTRATION AND REVIEW PROTOCOL

The title for this systematic review was approved by The Campbell Collaboration on October 9, 2012. The systematic review protocol was published on March 3, 2015. Both the title registration and the protocol are available in the Campbell Library at:

3.2 CRITERIA FOR CONSIDERING STUDIES FOR THIS REVIEW

3.2.1 Types of studies

The study designs eligible for inclusion were:

- Controlled trials:
  ○ RCTs ‐ randomized controlled trials
  ○ QRCTs ‐ quasi‐randomized controlled trials where participants are allocated by, for example, alternate allocation, participant's birth date, date, case number or alphabetically
  ○ NRCTs ‐ non‐randomized controlled trials where participants are allocated by other actions controlled by the researcher
  ○ Non‐randomized studies (NRS) where allocation is not controlled by the researcher and two or more groups of participants are compared. Participants are allocated by, for example, time differences, location differences, decision makers, policy rules or participant preferences.

We included study designs that used a well‐defined control group; i.e. the control or comparison condition was students in classes with more students than in the treatment classes.

Non‐randomised studies, where the reduction of class size has occurred in the course of usual decisions outside the researcher's control, must demonstrate pre‐treatment group equivalence via matching, statistical controls, or evidence of equivalence on key risk variables and participant characteristics. These factors are outlined in section 3.4.3 under the subheading of Confounding, and the methodological appropriateness of the included studies was assessed according to the risk of bias model outlined in section 3.4.3.

Different studies used different types of data. Some used test score data on individual students and actual class‐size data for each student. Others used individual student data but average class‐size data for students in that grade in each school. Still others used average scores for students in a grade level within a school and average class size for students in that school. We only included studies that used measures of class size and measures of outcome data at the individual or class level. We excluded studies that relied on measures of class size and measures of outcomes aggregated to a level higher than the class (e.g., school or school district).

Some studies did not have actual class size data and used the average student‐teacher ratio within the school (or at higher levels, e.g. school districts). Studies only considering average class size measured as student‐teacher ratio within a school (or at higher levels) were not eligible.

3.2.2 Types of participants

We included children in grades kindergarten to 12 (or the equivalent in European countries) in general education. Studies that met inclusion criteria were accepted from all countries. We excluded children in home‐school, in pre‐school programs, and in special education.

3.2.3 Types of interventions

The intervention in this review is a reduction in class size, i.e. a comparison of classes with larger and smaller numbers of students. The more precisely class size is measured, the more reliable the findings of a study will be.

Studies only considering the average class size measured as student‐teacher ratio within a school (or at higher levels) were not eligible. Studies where the intervention was the assignment of an extra teacher (or teaching assistants or other adults) to a class were also not eligible. The assignment of additional teachers (or teaching assistants or other adults) to a classroom is not the same as reducing the size of the class, and this review focused exclusively on the effects of reducing class size. We acknowledge that class size can differ by subject or vary during the day. The precision of the class size measure was recorded.

3.2.4 Types of outcome measures

Primary outcomes

The primary focus was on measures of academic achievement. Academic achievement outcomes included reading and mathematics. Outcome measures had to be standardised measures of academic achievement. The primary outcome variables used in the identified studies were standardised reading and mathematics tests (Stanford Achievement Test (SAT), Item Response Theory‐scaled scores, State wide End‐of‐Grade test (EOG) and NovLex (a lexical database for French elementary‐school readers)).

Studies were only included if they considered one or more of the primary outcomes.

Secondary outcomes

We planned to code the following effect sizes as secondary outcomes when available: standardised tests in other academic subjects at primary school level (e.g. in science or second language) and measures of global academic performance (e.g. Woodcock‐Johnson III Tests of Achievement, Stanford Achievement Test (SAT), Grade Point Average). None of these secondary outcomes were reported in studies that could be used in the data synthesis.

3.2.5 Duration of follow‐up

All follow‐up durations reported in the primary studies were recorded.

Time points for measures we planned to consider were:

0 to 1 year follow up

1 to 2 year follow up

More than 2 year follow up

All studies that could be used in the data synthesis reported outcomes in the short run only, i.e. by the end of the school year in which treatment was given.

3.2.6 Types of settings

The eligible settings were classes in grades kindergarten to 12 (or the equivalent in European countries) in regular private, public or boarding schools. Home‐schools would have been excluded.

3.3 SEARCH METHODS FOR IDENTIFICATION OF STUDIES

3.3.1 Bibliographical database searches

The original electronic searches for this review were performed in 2015. Those searches covered content from 1980‐2015. In February 2017 the searches were updated to cover content from 2015‐2017. The 2017 update made minor changes to the electronic resources searched. These changes are described below. The following electronic databases were searched:

ERIC (EBSCO‐host) ‐ searched from 1980‐2017

SocIndex (EBSCO‐host) ‐ searched from 1980‐2017

EconLit (EBSCO‐host) ‐ searched from 1980‐2017

PsycInfo (EBSCO‐host) ‐ searched from 1980‐2017

Academic Search Premier (EBSCO‐host) ‐ searched from 2015‐2017

Teacher Reference Center (EBSCO‐host) ‐ searched from 2015‐2017

Education Research Complete (EBSCO‐host) ‐ searched from 1980‐2015

International Bibliography of the Social Sciences (ProQuest‐host) ‐ searched from 1980‐2015

ProQuest Dissertations & Theses A&I (ProQuest‐host) ‐ searched from 1980‐2015

Social Science Citation Index (ISI Web of Science) ‐ searched from 1980‐2017

Science Citation Index (ISI Web of Science) ‐ searched from 1980‐2017

3.3.2 Searching other resources

We also searched in other electronic resources for relevant publications:

Campbell Collaboration Library ‐ searched from 1980‐2017

Centre for Reviews and Dissemination Databases ‐ searched from 1980‐2017

EPPI‐Centre Systematic Reviews ‐ Database of Education Research ‐ searched from 1980‐2017

Social Care Online ‐ searched from 1980‐2017

Bibliotek.dk (Danish National Library portal) ‐ searched from 1980‐2015

Bibsys.no (Norwegian National Library portal) ‐ searched from 1980‐2015

Libris.kb.se (Swedish National Library portal) ‐ searched from 1980‐2015

3.3.3 Grey literature search

We searched specific electronic repositories for additional grey literature:

What Works Clearinghouse – U.S. Department of Education ‐ searched from 1980‐2017

EDU.au.dk – Danish Clearinghouse for Education ‐ searched from 1980‐2017

European Educational Research Association ‐ searched from 1980‐2017

American Education Research Association ‐ searched from 1980‐2017

Social Science Research Network ‐ searched from 1980‐2017

Google Scholar ‐ searched from 2015‐2017

3.3.4 Hand search

We hand‐searched the following journals for additional references:

Middle School Journal – (2014‐2015)

Elementary School Journal – (2014‐2015)

American Educational Research Journal – (2014‐2015)

Learning Environments Research – (2014‐2015)

3.3.5 Search documentation

Selected search strings from the recent search update as well as the resources searched in the original 2015 search can be found in Appendix 11.1.

3.4 DATA COLLECTION AND ANALYSIS

3.4.1 Selection of studies

Under the supervision of review authors, two review team assistants first independently screened titles and abstracts to exclude studies that were clearly irrelevant. Studies considered eligible by at least one assistant, or studies where there was not enough information in the title and abstract to judge eligibility, were retrieved in full text. The full texts were then screened independently by two review team assistants under the supervision of the review authors. Any disagreements about eligibility were resolved by the review authors. Exclusion reasons for studies that otherwise might be expected to be eligible were documented and presented in the appendix.

The study inclusion criteria were piloted by the review authors (see Appendix 11.3). The overall search and screening process was illustrated in a flow‐diagram. None of the review authors were blind to the authors, institutions, or the journals responsible for the publication of the articles.

3.4.2 Data extraction and management

Two review authors independently coded and extracted data from included studies. A coding sheet was piloted on several studies and no revision was necessary (see Appendix 11.4). Disagreements were minor and were resolved by discussion. Data and information were extracted on: available characteristics of participants, intervention characteristics and control conditions, research design, sample size, risk of bias and potential confounding factors, outcomes, and results. Extracted data were stored electronically. Analysis was conducted in RevMan5 and Stata.

3.4.3 Assessment of risk of bias in included studies

We assessed the methodological quality of studies using a risk of bias model developed by Prof. Barnaby Reeves in association with the Cochrane Non‐Randomised Studies Methods Group.4 This model is an extension of the Cochrane Collaboration's risk of bias tool and covers risk of bias in non‐randomised studies that have a well‐defined control group.

The extended model is organised in the same way and follows the same steps as the risk of bias model according to the 2008 version of the Cochrane Handbook, chapter 8 (Higgins & Green, 2008). The extension to the model is explained in the following three points:

1) The extended model specifically incorporates a formalised and structured approach for the assessment of selection bias in non‐randomised studies by adding an explicit item about confounding. This is based on a list of confounders considered to be important and defined in the protocol for the review. The assessment of confounding is made using a worksheet where, for each confounder, it is marked whether the confounder was considered by the researchers, the precision with which it was measured, the imbalance between groups, and the care with which adjustment was carried out (see Appendix 11.5). This assessment will inform the final risk of bias score for confounding.

2) Another feature of non‐randomised studies that puts them at high risk of bias is that they need not have a protocol in advance of starting the recruitment process. The item concerning selective reporting therefore also requires assessment of the extent to which analyses (and potentially, other choices) could have been manipulated to bias the findings reported, e.g., choice of method of model fitting, potential confounders considered / included. In addition, the model includes two separate yes/no items asking reviewers whether they think the researchers had a pre‐specified protocol and analysis plan.

3) Finally, the risk of bias assessment is refined, making it possible to discriminate between studies with varying degrees of risk. This refinement is achieved with the addition of a 5‐point scale for certain items (see the following section, Risk of bias judgement items for details).

The refined assessment is pertinent when thinking of data synthesis as it operationalizes the identification of studies (especially in relation to non‐randomised studies) with a very high risk of bias. The refinement increases transparency in assessment judgements and provides justification for not including a study with a very high risk of bias in the meta‐analysis.

Risk of bias judgement items

The risk of bias model used in this review is based on nine items (see Appendix 11.5). The nine items refer to:

sequence generation (Judged on a low/high risk/unclear scale)

allocation concealment (Judged on a low/high risk/unclear scale)

confounders (Judged on a 5 point scale/unclear)

blinding (Judged on a 5 point scale/unclear)

incomplete outcome data (Judged on a 5 point scale/unclear)

selective outcome reporting (Judged on a 5 point scale/unclear)

other potential threats to validity (Judged on a 5 point scale/unclear)

a priori protocol (Judged on a yes/no/unclear scale)

a priori analysis plan (Judged on a yes/no/unclear scale)

In the 5‐point scale, 1 corresponds to Low risk of bias and 5 corresponds to High risk of bias. A score of 5 on any of the items assessed on the 5‐point scale translates to a risk of bias so high that the findings will not be considered in the data synthesis (because they are more likely to mislead than inform).
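The exclusion rule above can be illustrated with a minimal sketch. The item names, the dictionary layout and the treatment of "unclear" judgements (stored as `None` and not treated as grounds for exclusion) are assumptions made for illustration; they are not taken from the review's coding sheet.

```python
from typing import Dict, Optional

# Items judged on the 5-point scale; None stands for an "unclear" judgement.
# The key names are hypothetical labels, not the review's own variable names.
FIVE_POINT_ITEMS = (
    "confounders",
    "blinding",
    "incomplete_outcome_data",
    "selective_outcome_reporting",
    "other_threats",
)


def eligible_for_synthesis(scores: Dict[str, Optional[int]]) -> bool:
    """Return False if any 5-point item is scored 5 (1 = low, 5 = high risk)."""
    for item in FIVE_POINT_ITEMS:
        score = scores.get(item)
        if score is None:
            # Assumption: an unclear or missing judgement does not exclude a study.
            continue
        if not 1 <= score <= 5:
            raise ValueError(f"{item}: score must be between 1 and 5, got {score}")
        if score == 5:
            return False
    return True


# Hypothetical study: acceptable on confounding, excluded on selective reporting.
example = {"confounders": 3, "selective_outcome_reporting": 5}
print(eligible_for_synthesis(example))
```

A single score of 5 is sufficient for exclusion, which mirrors the "any of the items" wording of the rule.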

Confounding

An important part of the risk of bias assessment of non‐randomised studies is how the studies deal with confounding factors (see Appendix 11.5). Selection bias is understood as systematic baseline differences between groups and can therefore compromise comparability between groups. Baseline differences can be observable (e.g. age and gender) and unobservable (to the researcher; e.g. motivation). There is no single non‐randomised study design that always deals adequately with the selection problem: Different designs represent different approaches to dealing with selection problems under different assumptions and require different types of data. There can be particularly great variations in how different designs deal with selection on unobservables. The “adequate” method depends on the model generating participation, i.e. assumptions about the nature of the process by which participants are selected into a program. A major difficulty in estimating causal effects of class size on student outcomes is the potential endogeneity of class size, stemming from the processes that match students with teachers, and schools. Not only do families choose neighbourhoods and schools, but principals and other administrators assign students to classrooms. Because these decision makers utilize information on students, teachers and schools, information that is often not available to researchers, the estimators are quite susceptible to biases from a number of sources.

The primary studies must at least demonstrate pre‐treatment group equivalence via matching, statistical controls, or evidence of equivalence on key risk variables and participant characteristics. For this review, we identified the following observable confounding factors to be most relevant: age and grade level, performance at baseline, gender, socioeconomic background and local education spending. In each study, we assessed whether these confounding factors had been considered, and in addition we assessed other confounding factors considered in the individual studies. Furthermore, we assessed how each study dealt with unobservables.

Importance of pre‐specified confounding factors

The motivation for focusing on age and grade level, performance at baseline, gender, socioeconomic background and local education spending is given below.

Generally, the development of cognitive functions relating to school performance and learning is age dependent, and furthermore systematic differences in performance level often refer to systematic differences in preconditions for further development and learning of both cognitive and social character (Piaget, 2001; Vygotsky, 1978).

Therefore, to be sure that an effect estimate is a result from a comparison of groups with no systematic baseline differences it is important to control for the students' grade level (or age) and their performance at baseline (e.g. reading level, mathematics level).

With respect to gender it is well‐known that there exist gender differences in school performance (Holmlund & Sund, 2005). Girls outperform boys with respect to reading and boys outperform girls with respect to mathematics (Stoet & Geary, 2013). Although part of the literature finds that these gender differences have vanished over time (Hyde, Fennema, & Lamon, 1990; Hyde & Linn, 1988), we find it important to include this potential confounder.

Students from more advantaged socioeconomic backgrounds on average begin school better prepared to learn and receive greater support from their parents during their schooling years (Ehrenberg et al., 2001). Further, there is evidence that class size may be negatively correlated with students' socioeconomic backgrounds. For example, in a study of over 1,000 primary schools in Latin America, Willms and Somers (2001) found that the correlation between the pupil/teacher ratio in the school and the socioeconomic level of students in the school was about –.15. Moreover, Willms and Somers (2001) found that schools enrolling students from higher socioeconomic backgrounds tended to have better infrastructures, more instructional materials, and better libraries. The correlations of these variables with school‐level socioeconomic status varied between .26 and .36.

Finally, as outlined in the background section, students with socio‐economically disadvantaged backgrounds perform poorly in school tests (OECD, 2010).

Therefore, the accuracy of the estimated effects of class size will depend crucially on how well socioeconomic background is controlled for. Socioeconomic background factors are, e.g. parents' educational level, family income, minority background, etc.

3.4.4 Measures of treatment effect

For continuous outcomes, effect sizes with 95% confidence intervals were calculated using means and standard deviations where available, or alternatively from mean differences, standard errors and 95% confidence intervals (whichever were available), using the methods suggested by Lipsey & Wilson (2001). Hedges' g was used for estimating standardised mean differences (SMD).
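As a hedged illustration of the calculation from means and standard deviations, the sketch below computes Hedges' g with a 95% confidence interval. The function name and the example numbers are hypothetical, and the standard error uses the common large‐sample approximation; the exact variant applied in the review is not stated in the text.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Hedges' g (small-sample corrected SMD), its SE and a 95% CI."""
    df = n_t + n_c - 2
    # Pooled within-group standard deviation.
    pooled_sd = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Small-sample correction factor (approximation 1 - 3 / (4*df - 1)).
    j = 1 - 3 / (4 * df - 1)
    g = j * d
    # Large-sample approximation to the standard error of g (an assumption here).
    se = math.sqrt((n_t + n_c) / (n_t * n_c) + g**2 / (2 * (n_t + n_c)))
    return g, se, (g - 1.96 * se, g + 1.96 * se)


# Hypothetical example: small classes score 2 points higher on a test with SD 10.
g, se, ci = hedges_g(52.0, 10.0, 100, 50.0, 10.0, 100)
print(round(g, 3), round(se, 3), tuple(round(x, 3) for x in ci))
```

The correction factor shrinks the estimate slightly towards zero, which matters mainly for small samples.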

Software for storing data and statistical analyses were Excel and RevMan 5.0.

3.4.5 Unit of analysis issues

To account for possible statistical dependencies, we examined a number of issues: we assessed whether suitable cluster analysis was used (e.g. cluster summary statistics, robust standard errors, the use of the design effect to adjust standard errors, multilevel models and mixture models), if assignment of units to treatment was clustered, whether individuals had undergone multiple interventions, whether there were multiple treatment groups, and whether several studies were based on the same data source.

Cluster assignment to treatment

We checked for consistency in the unit of allocation and the unit of analysis, as statistical analysis errors can occur when they are different. In cases where study investigators had not applied appropriate analysis methods that control for clustering effects, we estimated the intra‐cluster correlation (Donner, Piaggio, & Villar, 2001) and corrected the effect size and standard error. Based on the analysis in Stockford (2009), we used an intra‐cluster correlation (ρ) of 0.22. We report the corrected results and the non‐corrected results. We used the following formulas (see Hedges, 2007, page 349):

where n is cluster size and NT , NC are treatment and control group sample sizes and N is total sample size.
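For orientation, the sketch below implements a cluster correction of the kind commonly attributed to Hedges (2007) for equal cluster sizes, using the symbols defined above (n, NT, NC, N) and ρ = 0.22. The specific expressions are an assumption based on how that correction is usually written and should be checked against Hedges (2007) before use; the function name is hypothetical.

```python
import math


def cluster_corrected_smd(d, n, n_t, n_c, rho=0.22):
    """Adjust an SMD and its standard error for clustering.

    d    : uncorrected standardised mean difference
    n    : (average) cluster size, e.g. students per class
    n_t  : treatment group sample size (NT)
    n_c  : control group sample size (NC)
    rho  : intra-cluster correlation
    Formulas assumed from the usual presentation of Hedges (2007).
    """
    big_n = n_t + n_c
    # Corrected effect size.
    d_adj = d * math.sqrt(1 - 2 * (n - 1) * rho / (big_n - 2))
    # Corrected variance: design-effect term plus a term depending on d_adj.
    design_term = (big_n / (n_t * n_c)) * (1 + (n - 1) * rho)
    numerator = (
        (big_n - 2) * (1 - rho) ** 2
        + n * (big_n - 2 * n) * rho**2
        + 2 * (big_n - 2 * n) * rho * (1 - rho)
    )
    denominator = 2 * (big_n - 2) * ((big_n - 2) - 2 * (n - 1) * rho)
    variance = design_term + d_adj**2 * numerator / denominator
    return d_adj, math.sqrt(variance)


# Hypothetical example: d = 0.2, classes of 20, 200 students per arm.
print(cluster_corrected_smd(0.2, 20, 200, 200))
```

With ρ = 0 the expressions reduce to the usual uncorrected effect size and variance; with ρ > 0 the effect size shrinks slightly and the standard error grows considerably.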

Multiple Interventions per Individual

There were no studies with multiple interventions per individual.

Multiple Studies using the Same Sample of Data

Five studies analysed the same population, using data from the Third International Mathematics and Science Study (TIMSS) data set from 1995. Three studies used TIMSS data from 2011. Data from the National Educational Longitudinal Study (NELS data from USA) was used in five studies.

Two studies analysed the same US population using the Early Childhood Longitudinal Study‐Kindergarten Class of 1998‐1999 data set. Five studies analysed data from Indiana's Prime Time Project (1984‐1988). Five studies analysed the Student Achievement Guarantee in Education Program (SAGE) implemented in Wisconsin in 1996‐2001. Three studies analysed the same sample of students from Israel. Four studies analysed the same population using the PRIMA survey which contains information on Dutch pupils who were enrolled in grades 2, 4, 6 and 8 in the school‐year 1994/95. Two studies used the same sample of Swedish students from 1998 to 1999. Finally, four studies analysed the British Class Size Study (1996‐1999). We reviewed all studies, but in the meta‐analysis we only included one estimate of the effect from each sample of data in order to avoid dependencies between the “observations” (i.e. the estimates of the effect) in the meta‐analysis. The choice of which estimates to include was based on our risk of bias assessment of the studies. We chose the estimate from each sample of data from the study that we judged to have the least risk of bias due to confounding.
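The selection rule described above (one estimate per data source, taken from the study with the lowest risk of bias due to confounding) can be sketched as follows. The record layout, study labels and scores are hypothetical, and the tie‐breaking behaviour (keep the first study encountered) is an assumption, since the text does not say how ties were handled.

```python
from typing import Dict, Iterable, NamedTuple


class Estimate(NamedTuple):
    study: str             # hypothetical study label
    data_source: str       # sample of data analysed
    confounding_risk: int  # 1 (low) to 5 (high) on the confounding item
    smd: float             # standardised mean difference


def one_estimate_per_source(estimates: Iterable[Estimate]) -> Dict[str, Estimate]:
    """Keep, for each data source, the estimate with the lowest confounding risk."""
    best: Dict[str, Estimate] = {}
    for est in estimates:
        current = best.get(est.data_source)
        # Assumption: on ties the first study encountered is kept.
        if current is None or est.confounding_risk < current.confounding_risk:
            best[est.data_source] = est
    return best


# Hypothetical input: two studies share one data source, one stands alone.
candidates = [
    Estimate("Study A", "Source X", 4, 0.05),
    Estimate("Study B", "Source X", 2, 0.02),
    Estimate("Study C", "Source Y", 3, -0.01),
]
selected = one_estimate_per_source(candidates)
print({source: est.study for source, est in selected.items()})
```

Selecting a single estimate per sample keeps the effect estimates entering the meta‐analysis statistically independent.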

One RCT (the STAR experiment conducted in Tennessee in 1985–1989) was reported in several studies (45 studies reported in 51 papers). We reviewed all studies but it was unclear which study should be judged to have the least risk of bias. We reported all relevant results from the studies analysing STAR but none of the studies were included in the meta‐analysis of non‐STAR studies.

Multiple Time Points

All studies that could be used in the data synthesis reported outcomes in the short run only.

3.4.6 Dealing with missing data

Where studies had missing summary data, such as missing standard deviations, we calculated SMDs from mean differences, standard errors and 95% confidence intervals (whichever were available), using the methods suggested by Lipsey & Wilson (2001). We requested information from the principal investigators (if current contact information could be located) if not enough information was provided to calculate an effect size and standard error.
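A minimal sketch of this kind of conversion is given below, assuming an unadjusted difference between two independent group means with a common standard deviation. The function names and numbers are hypothetical, and the review may have used other conversion formulas from Lipsey & Wilson (2001) depending on what each study reported.

```python
import math


def se_from_ci(lower, upper, z=1.96):
    """Recover a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)


def smd_from_mean_difference(mean_diff, se_diff, n_t, n_c):
    """Convert a raw mean difference and its SE into an SMD.

    Assumes se_diff = pooled_sd * sqrt(1/n_t + 1/n_c), i.e. an unadjusted
    two-group comparison, so the pooled SD can be backed out of the SE.
    """
    pooled_sd = se_diff / math.sqrt(1 / n_t + 1 / n_c)
    return mean_diff / pooled_sd


# Hypothetical study reporting a 2-point difference with a 95% CI only.
se = se_from_ci(-0.772, 4.772)
print(round(se, 4), round(smd_from_mean_difference(2.0, se, 100, 100), 3))
```

If the reported standard error comes from a covariate‐adjusted model, the backed‐out standard deviation is a residual rather than a raw standard deviation, so the resulting SMD would be overstated.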

3.4.7 Assessment of heterogeneity

Heterogeneity among primary outcome studies was assessed with the Chi‐squared (Q) test, and the I‐squared, and τ‐squared statistics (Higgins, Thompson, Deeks, & Altman, 2003). Any interpretation of the Chi‐squared test was made cautiously on account of its low statistical power.
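The three statistics can be sketched as follows. The DerSimonian‐Laird moment estimator is used for τ² here as an assumption; the text does not name the estimator, and the function name and input values are hypothetical.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, I-squared (in per cent) and tau-squared.

    effects   : study effect sizes (e.g. SMDs)
    variances : their sampling variances
    tau-squared uses the DerSimonian-Laird estimator (an assumption).
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    # Fixed-effect (inverse-variance) weighted mean.
    fixed_mean = sum(w * y for w, y in zip(weights, effects)) / sum_w
    # Q: weighted squared deviations from the fixed-effect mean.
    q = sum(w * (y - fixed_mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = sum_w - sum(w**2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c)
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2, tau2


# Hypothetical example with two studies of equal precision.
print(heterogeneity([0.0, 0.4], [0.01, 0.01]))
```

Q is compared with a chi‐squared distribution on k − 1 degrees of freedom; with few studies that test has little power, which is why I² and τ² are reported alongside it.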

3.4.8 Data synthesis

All studies that could be used in the data synthesis reported outcomes in the short run only, i.e. by the end of the school year in which treatment was given. We carried out our meta‐analyses using the standardised mean differences (SMD). All analyses were inverse variance weighted using random effects statistical models that incorporate both the sampling variance and between study variance components into the study level weights. Random effects weighted mean effect sizes were calculated using 95% confidence intervals.
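A minimal sketch of inverse variance weighting under a random effects model is shown below. The between‐study variance τ² is passed in as a given value, and the function name and numbers are hypothetical; this illustrates the weighting scheme rather than reproducing the RevMan or Stata routines.

```python
import math


def random_effects_mean(effects, variances, tau2):
    """Random-effects weighted mean effect size with SE and 95% CI.

    Each study weight is 1 / (sampling variance + between-study variance).
    """
    if len(effects) != len(variances) or not effects:
        raise ValueError("effects and variances must be non-empty and equal length")
    weights = [1.0 / (v + tau2) for v in variances]
    sum_w = sum(weights)
    mean = sum(w * y for w, y in zip(weights, effects)) / sum_w
    se = math.sqrt(1.0 / sum_w)
    return mean, se, (mean - 1.96 * se, mean + 1.96 * se)


# Hypothetical example: two studies, tau-squared assumed to be 0.07.
mean, se, ci = random_effects_mean([0.0, 0.4], [0.01, 0.01], tau2=0.07)
print(round(mean, 3), round(se, 3), tuple(round(x, 3) for x in ci))
```

Because τ² is added to every study's variance, the weights become more equal across studies as heterogeneity grows, and the confidence interval widens accordingly.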

3.4.9 Sensitivity analysis

Sensitivity analysis was used to evaluate whether the pooled effect sizes were robust across components of methodological quality.

For methodological quality, we performed sensitivity analysis for study design and the confounding item of the risk of bias checklists, respectively. Sensitivity analysis was further used to examine the robustness of conclusions in relation to inclusion of a result with an unclear sign, inclusion of effect sizes from the STAR experiment and to multiplying the reported effect with a standard deviation reduction in class size in the studies using class size as a continuous variable.

4 Results

4.1 DESCRIPTION OF STUDIES

4.1.1 Results of the search

The search was performed between 2015 and February 2017.

The results are summarised in Figure 1 in section 11.2. The total number of potential relevant records was 8,128 after excluding duplicates (database: 7,434, grey, hand search, snowballing and other resources: 694). All 8,128 records were screened based on title and abstract; 7,754 were excluded for not fulfilling the first level screening criteria and 374 records were ordered for retrieval and screened in full text. Of these, 226 did not fulfil the second level screening criteria and were excluded. Eighteen records were unobtainable despite efforts to locate them through libraries and searches on the internet. The references are listed in section 8.3.

A total of 127 unique studies, reported in 148 papers were included in the review. Further details of the included and excluded studies are provided in section 10.

4.1.2 Included studies

The search resulted in a final selection of 127 studies, reported in 148 papers, which met the inclusion criteria for this review. The 127 studies analysed 55 different populations. A large number of studies (45) analysed data from the STAR experiment (class size reduction in grade K‐3) and its follow up data.

Of the 82 studies not analysing data from the STAR experiment, only six could be used in the data synthesis. Fifty‐eight studies could not be used in the data synthesis as they were judged to have too high a risk of bias on the confounding item (51), the other bias item (4) or the selective reporting item (3). Eighteen studies did not provide enough information to enable us to calculate an effect size and standard error, or did not provide results in a form that could be used in the data synthesis.

4.1.2.1 STAR studies

A large number of studies (45 studies reported in 51 papers) analysed data from the STAR experiment (class size reduction in grade K‐3) and its follow up data.5

The four‐year STAR experiment was conducted in Tennessee in 1985–1989, to assess the effectiveness of small classes compared with regular‐sized classes and of teachers' aides in regular‐sized classes on improving cognitive achievement in kindergarten and in the first, second, and third grades. According to the Technical report (Word, 1994) and Word et al. (1990),6 the goal of the STAR experiment was to have approximately 100 small classes with 13‐17 students (S), 100 regular classes with 22‐25 students (R), and 100 regular with aide classes with 22‐25 students (RA). In Word et al. (1994) it is reported that in the 1985‐86 year (the first year of the experiment), the STAR project had 128 small classes (approximately 1,900 students), 101 regular classes (approximately 2,300 students), and 99 regular classes with teacher aides (approximately 2,200 students). Both students and teachers were randomised and randomisation was done within schools so at least one of each class type (S, R and RA) was present at each school. Every class was to remain the same type for four years and a new teacher was randomly assigned to each class in each subsequent grade.

Four studies provided results for grades K‐3 that could be used in the data synthesis. The first study, by Folger and Breda (1989), provided effect sizes comparing small classes to regular classes for each grade level (K‐3). The results of the analysis conducted by Folger and reported in Folger and Breda (1989) were also reported in Word et al. (1990) and Word et al. (1994). Both reports by Word et al. provide a summary of original results from the primary analyses of the STAR experiment. The primary analyses were analysis‐of‐variance models conducted by Professor Finn. However, only a summary of the analyses showing significance levels is reported (.05, .01, .001; levels are reported only as ≤ these thresholds and not as exact levels of significance), which cannot be used in the data synthesis. The second study, by Finn, Gerber, Achilles and Boyd‐Zaharias (2001), provided effect sizes comparing small classes to regular classes for each grade level (K‐3) but used different decision rules in selecting a sample for analysis than in Folger and Breda (1989). In addition, Finn et al. (2001) included covariates in the analysis. The third study, by Nye, Achilles, Boyd‐Zaharias, Fulton & Wallenhorst (1992/1994) (Nye, Achilles, Boyd‐Zaharias, Fulton & Wallenhorst (1994) is a published and shorter version of the 1992 paper), provided effect sizes comparing small classes to the average of regular and regular with aide classes. In addition to the different comparison, they also used different decision rules in selecting a sample for analysis than in Folger & Breda (1989). The effect sizes from the analysis in Nye et al. (1992/1994) are also reported in Finn (1998), Finn & Achilles (1999) and Nye, Achilles, Boyd‐Zaharias & Fulton (1993). Finally, effect sizes comparing small classes to the average of regular and regular with aide classes were also provided in the study by Hanushek (1999).

Which of these four studies' effect estimates should be included in the data synthesis is not obvious, as the decision rule described in the protocol cannot be used (all studies analyse the same RCT).

The four studies differed in terms of both the chosen comparison condition and decision rules in selecting a sample for analysis (see table 4.1), and which one should be judged to have the least risk of bias is not obvious. Below we describe the different possibilities for choosing a comparison and selecting a sample for analysis.

Table 4.1.

Characteristics of studies analysing STAR data used in the data synthesis

| | Folger, 1989 | Nye, 1992/1994 | Finn, 2001 | Hanushek, 1999 |
| --- | --- | --- | --- | --- |
| Comparison | R | R + RA | R | R + RA |
| Size of R and RA classes used | 21‐28 | 22‐26 | 22‐26 | 22‐25 |
| Out‐of‐range classes | Excluded | Included | Not reported | Not reported |
| STAR trained teachers | Included | Excluded | Not reported | Not reported |
| Regression with covariate adjustment | No | No | Yes | No |
| Intention to treat/treatment as received | Not reported | Not reported | Not reported | Not reported |

The numbers of S, R and RA classes and students reported in the Technical report (Word et al., 1994) and in Word et al. (1990) are probably the numbers of students and classes that were initially randomised to the three conditions (S, R and RA). However, a considerable proportion of classes did not fall into the size range they were intended to. According to the STAR Database User's Guide (Finn et al., 2007, using a table of the distribution of classes by grade and designation reported in Achilles, 1999), between 18 and 32 per cent of classes each year were ‘out of range’, falling in the range of either 18‐21 students or 26‐30 students (see section 10.3 for details). In addition, a total of 14 regular and regular with aide classes fell in the range of small classes throughout one of the four years but were not considered out of range according to Finn et al. (2007). The four studies providing effect estimates of the STAR experiment either excluded, included or did not report how they handled the out of range classes. In addition, the range of regular sized classes used in the four studies differed; only one study used the range 22‐25 (see table 4.1).
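To illustrate how the choice of range for regular sized classes changes which classes count as out of range, the following is a minimal sketch. The regular ranges are those listed in table 4.1. The small class range of 13‐17 students is an assumption based on the STAR design and is not stated in this section, and the function names are hypothetical. The sketch classifies by actual class size only and ignores the designation a class was randomised to, so it is not a reconstruction of any study's decision rules.

```python
# Regular-class size ranges used by each study, taken from table 4.1.
REGULAR_RANGES = {
    "Folger 1989": (21, 28),
    "Nye 1992/1994": (22, 26),
    "Finn 2001": (22, 26),
    "Hanushek 1999": (22, 25),
}

# Assumption (not stated in this section): small classes hold 13-17 students.
SMALL_RANGE = (13, 17)


def classify(size, regular_range, small_range=SMALL_RANGE):
    """Label a class of `size` students by its actual size only."""
    if small_range[0] <= size <= small_range[1]:
        return "small"
    if regular_range[0] <= size <= regular_range[1]:
        return "regular"
    return "out of range"


def labels_for(size):
    """Return the label a class of `size` students gets under each study's range."""
    return {study: classify(size, rng) for study, rng in REGULAR_RANGES.items()}


if __name__ == "__main__":
    # Print the labels for a few illustrative class sizes.
    for size in (15, 21, 26):
        print(size, labels_for(size))
```

Under these assumptions a class of 26 students counts as regular in three of the studies but as out of range when the 22‐25 range is used, which is one reason the analysis samples are not directly comparable.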

In grade 2 a number of schools and teachers were randomly chosen to receive special STAR training. A second choice in selecting the analysis sample therefore concerns whether to include or exclude the classes whose teachers received STAR training; in addition, it is unclear how many teachers actually received training. According to Word et al. (1990) and Folger and Breda (1989), 57 teachers in grade 2 from 13 randomly chosen schools and another 57 teachers in grade 3 received Project STAR training. According to Word et al. (1994, p. 73), 67 teachers received training in grade 2, whereas on page 117 it is stated that all teachers (57 teachers and 57 classes) from 13 schools received training in grade 2 and all teachers from the same 13 schools (57 classes) received training in grade 3. According to Finn et al. (2007), the training was given to 54 second grade teachers from 15 STAR schools. The four studies either excluded, included or did not report how they handled these classes (see table 4.1).

The four studies also differed in the comparison condition they chose. They compared small classes either to regular classes only or to the average of regular and regular with aide classes. Which comparison is most appropriate for this review is, however, not obvious. At the beginning of grade 1 approximately half of the students in regular and regular with aide classes interchanged classes (see section 10.3 for details). At the beginning of grade 2 and grade 3, 6 and 5 per cent, respectively, of the students in regular and regular with aide classes interchanged classes. Which choice of comparison is appropriate for the analysis of grades 1‐3 is thus unclear.

In addition to the interchange between regular and regular with aide classes, each year students from small classes moved to regular or regular with aide classes, and students from regular and regular with aide classes moved to small classes (6, 4 and 4 per cent at the beginning of grades 1, 2 and 3, respectively). In total, 25 per cent of all students moved class type at some point. It is unclear whether all of these students actually moved classes or whether part of the reported movement of students between classes was due to reclassification of class type (small or regular sized). The reported number of students moving to and from classes with aide cannot be due to reclassification between small and regular sized classes. At least some reclassification must have occurred, though, as the following two pieces of evidence show. First, according to the numbers reported in the Technical report (Word et al., 1994), the distribution of class type was not constant in the 13 schools randomly chosen to receive STAR training. It is reported that there were 21 small classes, 19 regular classes and 17 regular with aide classes in these schools in grade 2. In grade 3 it is reported that there were 25 small, 15 regular and 17 regular with aide classes in the same 13 schools. Thus four classes were apparently reclassified from regular sized to small, even though classes were to remain the same type for four years. Second, according to the Technical report (Word et al., 1994), two schools in grade 3 had incomplete test data and were removed. Compared with the total number of classes in grade 2, however, only the number of regular classes is reduced from second to third grade (by 11). Some classes must have been reclassified, as randomisation was done within schools so that each school had at least one class of each type (S, R and RA). None of the four studies providing effect estimates was explicit about how it handled this movement of students (and classes).
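The first piece of evidence can be checked with simple arithmetic. The short sketch below uses only the class counts quoted above for the 13 STAR training schools; the variable names are illustrative.

```python
# Class counts in the 13 STAR training schools, as quoted from Word et al. (1994).
grade2 = {"small": 21, "regular": 19, "regular_with_aide": 17}
grade3 = {"small": 25, "regular": 15, "regular_with_aide": 17}

# Total number of classes in each grade.
total_grade2 = sum(grade2.values())
total_grade3 = sum(grade3.values())

# Change in the number of classes of each type from grade 2 to grade 3.
change = {kind: grade3[kind] - grade2[kind] for kind in grade2}

if __name__ == "__main__":
    print(total_grade2, total_grade3)
    print(change)
```

The totals agree (57 classes in both grades), while the number of small classes rises by four and the number of regular classes falls by four, which is the pattern expected if four classes changed label rather than being added or removed.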

The four studies are characterised by comparison, selection of sample for analysis and method of estimation in table 4.1. Only the study by Hanushek (1999) used the range 22‐25 for regular sized classes. The study compares small classes to the average of regular and regular with aide classes; otherwise nothing is reported concerning sample selection (out of range classes and STAR trained teachers) or how the treatment was defined (as received or as intended). The study by Folger and Breda (1989) compares small classes to regular classes only but uses a range of 21‐28 students for regular classes. It is reported that STAR trained teachers and their classes are included and out of range classes are excluded, but it is not reported how out of range classes are defined. For example, are the 14 regular and regular with aide classes that fell in the range of small classes excluded, and does the definition of out of range classes differ from that reported in Finn et al. (2007), given the different range of regular classes? The study by Nye et al. (1992/1994) compares small classes to the average of regular and regular with aide classes and uses a range of 22‐26 students for regular sized classes. It is reported that STAR trained teachers and their classes are excluded and out of range classes are included, but it is not reported how out of range classes are categorised. For example, are the 14 regular and regular with aide classes that fell in the range of small classes considered small, and are the classes in the range 18‐21 categorised as small or regular? The study by Finn et al. (2001) compares small classes to regular classes only and uses a range of 22‐26 students for regular classes. Otherwise nothing is reported concerning sample selection (out of range classes and STAR trained teachers) or how the treatment was defined (as received or as intended). The study includes covariates in the analysis.

We find it very difficult to decide which study or effect estimate is the ‘right’ one to include in the data synthesis. Contrary to usual practice, we will therefore not choose one study to include in the data synthesis but will report the results of all four studies in section 4.3 and further examine the robustness of our conclusions when including the extremes (smallest and largest) of the range of effect sizes from the STAR experiment in section 4.3.5.
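The idea of such a robustness check can be sketched as follows. This is a minimal illustration only: it uses a fixed‐effect inverse‐variance weighted average, which is an assumption and not necessarily the model used in section 4.3, and all function names and numbers are hypothetical placeholders rather than results from any included study.

```python
import math


def weighted_average(effects):
    """Fixed-effect inverse-variance weighted mean and its standard error.

    `effects` is a list of (effect_size, standard_error) pairs.
    """
    weights = [1.0 / se ** 2 for _, se in effects]
    total = sum(weights)
    mean = sum(w * es for w, (es, _) in zip(weights, effects)) / total
    return mean, math.sqrt(1.0 / total)


def robustness_range(non_star, star_candidates):
    """Pool the non-STAR effects with the smallest, then the largest, STAR effect."""
    smallest = min(star_candidates, key=lambda e: e[0])
    largest = max(star_candidates, key=lambda e: e[0])
    return (
        weighted_average(non_star + [smallest]),
        weighted_average(non_star + [largest]),
    )


if __name__ == "__main__":
    # Hypothetical (effect size, standard error) pairs, for illustration only.
    non_star = [(0.02, 0.05), (0.00, 0.04)]
    star_candidates = [(0.10, 0.05), (0.20, 0.05), (0.15, 0.05)]
    low, high = robustness_range(non_star, star_candidates)
    print("with smallest STAR estimate:", low)
    print("with largest STAR estimate:", high)
```

If the pooled estimate leads to the same conclusion at both extremes, the conclusion does not depend on which of the STAR effect estimates is taken as the representative one.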

For the follow up study of the STAR experiment (known as the Lasting Benefits Study, LBS), a technical report providing effect estimates for grades 4, 5, 6, 7 and 8 was published each year. However, only one of the technical reports could be located (Nye et al., 1992, reporting results for grade 5). The remaining technical reports (Nye et al., 1991, 1993, 1994 and 1995) for grades 4, 6, 7 and 8 were unobtainable. The results for grade 4 are, however, reported in Finn & Achilles (1989). In addition, the effect sizes from the technical reports for grades 4 and 5 are also reported in Nye, Achilles, Zaharias & Fulton (1993), Achilles, Nye, Zaharias & Fulton (1993) and Finn & Achilles (1999). Finn & Achilles (1999) also report the effect sizes from the technical reports for grades 6 and 7. The effect sizes from the technical report for grade 8 could not be located. Finn, Gerber, Achilles & Boyd‐Zaharias (2001) report effect sizes for grade 8 (and grades 4 and 6) in a reanalysis of the follow up data. However, none of these studies reporting results based on follow up data from the STAR experiment could be used in the data synthesis, because their risk of bias was too high (see section 4.2).

Several other studies reported results from a variety of re‐analyses of the STAR experiment (and follow up data) but none of them could be used in the data synthesis. An overview of the reasons for exclusions from the data synthesis is given in section 10.2.

4.1.2.2 Non‐STAR studies

Of the 82 studies (reported in 97 papers) not analysing data from the STAR experiment (or follow up data), only six could be used in the data synthesis.

Five studies (West & Wößmann, 2006; Wößmann & West, 2006; Wößmann, 2003; Wößmann, 2005b and Pong & Pallas, 2001) analysed the same population, using data from the Third International Mathematics and Science Study (TIMSS) data set from 1995. None of these studies were used in the data synthesis, as all five were judged to have a score of 5 on the risk of bias scale for the confounding item. Three studies used TIMSS data from 2011 (Konstantopoulos & Li, 2016; Li & Konstantopoulos, 2017 and Li, 2015). All three studies were judged 5 on the confounding item and were not included in the analysis. Data from the National Educational Longitudinal Study (NELS data from the USA) were used in five studies (Akerhielm, 1995; Boozer and Rouse, 2001; Dee & West, 2011; Hudson, 2011 and Maasoumi, Millimet & Rangaprasad, 2005). The studies by Boozer and Rouse (2001) and Akerhielm (1995) were judged to have a score of 5 on the risk of bias scale for the confounding item and were excluded from the data synthesis. The studies by Dee and West (2011) and Maasoumi, Millimet and Rangaprasad (2005) did not provide results we could use in the data synthesis (results were reported as differences between subjects and as first or second order stochastic dominance tests, respectively). The study by Hudson (2011) was used in the data synthesis.

Two studies (Milesi & Gamoran, 2006 and Wenfan & Qiuyun, 2005) analysed the same US population using the Early Childhood Longitudinal Study‐Kindergarten Class of 1998‐1999 data set. The study by Wenfan and Qiuyun (2005) was judged to have too high a risk of bias (scored 5 on the confounding item) and was excluded from the data synthesis. The study by Milesi and Gamoran (2006) was used in the data synthesis. Five studies analysed data from Indiana's Prime Time Project (1984‐1988) (Gilman, 1988; Gilman, Swan & Stone, 1988; McGiverin, 1989; Sanogo & Gilman, 1994 and Tillitsky et al., 1988). The four studies by Gilman (1988), Gilman et al. (1988), McGiverin (1989) and Tillitsky et al. (1988) were all rated 5 on the risk of bias scale, and the study by Sanogo and Gilman (1994) did not provide results we could use in the data synthesis (it does not report which types of classes are included). Five studies analysed the Student Achievement Guarantee in Education Program (SAGE) implemented in Wisconsin in 1996‐2001 (Maier et al., 1997; Molnar, Smith & Zahorik, 1997; Molnar, Smith & Zahorik, 1998; Molnar et al., 1999 and Molnar et al., 2001). None of the studies provided results that could be used in the data synthesis (for details see section 10.1). Three studies analysed the same sample of students from Israel (Angrist & Lavy, 1999; Lavy, 2001 and Otsu, Xu & Matsushita, 2015). The two studies by Angrist and Lavy (1999) and Lavy (2001) were both judged to have too high a risk of bias (scored 5 on the confounding item), and in the study by Otsu et al. (2015) the relevant results were presented graphically, so no effect sizes or standard errors could be extracted.

Four studies (Dobbelsteen, Levin & Oosterbeek, 2002; Levin, 2001; Ma & Koenker, 2006 and Gerritsen, Plug & Webbink, 2017) analysed the same population using the PRIMA survey, which contains information on Dutch pupils who were enrolled in grades 2, 4, 6 and 8 in the school year 1994/95. Three of these studies (Dobbelsteen et al., 2002; Levin, 2001 and Ma & Koenker, 2006) were, however, judged to have too high a risk of bias (scored 5 on the confounding item) and were excluded from the data synthesis. The study by Gerritsen et al. (2017) was used in the data synthesis. Another two studies (Krueger & Lindahl, 2002 and Lindahl, 2005) used the same sample of Swedish students from 1998 to 1999. Both were judged to have too high a risk of bias (scored 5 on the confounding item).