Abstract

The incorporation of active learning improves student learning and persistence compared to traditional lecture-based teaching. However, there are numerous active learning strategies and the degree to which each one enhances learning relative to other techniques is largely unknown. I analyzed the effectiveness of the addition of simulations to cooperative group problem-solving assignments in an undergraduate 400-level neurobiology course. One section of the course carried out group problem-solving alone, whereas the other section used neuroscience simulations (Neuronify) as part of the problem-solving assignments. Overall, both groups of students learned course concepts effectively and did not differ in their performance on exams or specific exam questions related to the assignments. Students perceived that the assignments and simulations were helpful in their understanding of course material but did not overwhelmingly recommend including simulations in the future. Students using simulations were more likely to report gaining experience with experimental design, and this may be an effective way to build scientific reasoning in non-laboratory courses. However, student frustration with technology was the primary reason that students reported dissatisfaction with the simulations. Overall, cooperative group problem-solving with or without simulations is very effective at helping students learn neuroscience concepts.

Keywords: simulations, cooperative group learning, problem solving, Neuronify

Active and inquiry-based teaching methods lead students to better understand, use, and retain scientific concepts and skills (Hall and McCurdy, 1990; Luckie et al., 2004; Gehring and Eastman, 2008; Gormally et al., 2009; Keen-Rhinehart et al., 2009; Ferreira and Trudel, 2012; Freeman et al., 2014; Smallhorn et al., 2015). Furthermore, small group learning, especially in formal cooperative learning groups, has been shown to increase both performance and persistence in STEM courses (Springer et al., 1999; Johnson and Johnson, 2002; Tanner et al., 2003; Kyndt et al., 2013). However, there are numerous active learning methods that can be used to engage cooperative learning groups with course material. Cooperative learning strategies can be highly structured, such as process-oriented guided inquiry learning (POGIL), which requires students to explore and apply concepts via scaffolded worksheets, and problem-based learning, which requires students to solve a real-life problem using course concepts (Arthurs and Kreager, 2017; Faust and Paulson, 1998). On the other hand, some cooperative learning strategies are more flexible and can be used with traditional lectures or other activities, including think-pair-share, jigsaw activities (groups work on different, but related, activities), discussions and debates, and active-review sessions (groups answer review questions given by the instructor) (Arthurs and Kreager, 2017; Faust and Paulson, 1998). In most cases, group-based active learning has been compared to traditional lecture-based teaching methods, but there is relatively little information about how various active learning methods compare to one another. I have used cooperative learning groups in my neurobiology course for the past eight years to help students practice applying course concepts to real-life (often medically-relevant) scenarios, analyzing and evaluating figures and experimental methods, and designing experiments.
Recently, simulations, one method of active learning, have been shown to be much more effective when used cooperatively than as an individual learning tool (Chang et al., 2017; Liu et al., 2021; Mawhirter and Garofalo, 2016). Therefore, in Spring 2018, I compared the use of group problems by themselves with the use of group problems that also incorporated computer simulations of neural networks.

Numerous neuroscience simulations are now available and can be incorporated into courses for undergraduates. Students using simulations in courses have shown improvements in assessment scores and tend to rate their experiences positively (Bish and Schleidt, 2008; Crisp, 2012; Schettino, 2014; Latimer et al., 2018). Simulations are particularly influential for teaching cellular and molecular biology, including cellular neuroscience (Lewis, 2014). Although simulations are primarily used in laboratory courses to replace or supplement traditional wet-lab experiments, they have also been used successfully in non-laboratory courses (Wolfe, 2009). The advantage of using simulations in this environment is that they can provide students with a way to build scientific skills and confidence without requiring as many resources or dedicated laboratory time. In particular, students gain the opportunity to design models, test them, and then interpret and evaluate them (Lorenz and Egelhaaf, 2008). Although aspects of experimental design can be captured in other types of assignments, the complete and iterative scientific experience is usually not attainable.

By assigning group problem sets alone in one section of a 400-level neurobiology course and group problem sets with simulations in the other section of the course, I was able to compare student performance on course assessments between the two approaches. I also evaluated student perceptions of the assignments to determine how students perceive and engage with simulations.

MATERIALS AND METHODS

Course

In Spring semester 2018, I assessed the use of simulations as part of group assignments in my 400-level Neurobiology course. This is a 3-hour non-laboratory course; it is required for all neuroscience majors but can also count as an elective towards the biology major. The pre-requisite for the course is a C or better in the second course of a two-semester introductory biology sequence. I taught two sections of the course in Spring 2018: one section used simulations as part of assignments (SIM) and the other section answered questions about the same concepts but not using simulations (PROB).

Students

Students in the course were Mercer University undergraduate students. Mercer’s Institutional Review Board approved this study, and all students signed an informed consent form prior to participation. Based on self-reported information, the two sections of the course were similar in terms of class year and previous courses taken, but the SIM class happened to have more neuroscience majors (Table 1). Although most students had taken (or were currently taking) Introduction to Psychology, many more students in the SIM class had also taken (or were currently taking) Biopsychology because it is also a requirement for neuroscience majors.

Table 1.

Self-reported characteristics of students in the two sections.

| Section | Class year | Major | % having taken Biopsych |
|---|---|---|---|
| SIM (21 students) | 52% seniors, 48% juniors | 57% neuroscience, 33% biology, 5% biochemistry and molecular biology, 5% other | 67% |
| PROB (23 students) | 52% seniors, 39% juniors, 4% sophomores, 4% non-degree-seeking | 22% neuroscience, 65% biology, 4% psychology, 4% biology and psychology double major, 4% other | 30% |

Simulations

Neuronify, a free neural network simulation software, was primarily used (https://ovilab.net/neuronify/; Dragly et al., 2017). Neuronify is available for download on Windows, MacOS, Android, and iOS, which allows students to use the software even if they are only able to bring a smartphone or tablet to class. I also used two assignments at the beginning of the semester partially based on NEURON (https://www.neuron.yale.edu/neuron/), software that simulates intracellular recordings from individual neurons or networks of neurons (see Latimer et al., 2018 for assignments). Students had many technical difficulties installing NEURON software on their computers, and I therefore proceeded only with Neuronify for the remainder of the semester.

Assignments

Eight times during the semester, we spent an entire class period working on a problem set. Both sections of the course had several questions in common, requiring them to analyze a scenario or apply lecture material to a real-life scenario. Then, the SIM section of the class answered additional questions related to the simulations they were asked to perform. The PROB section of the course had additional questions about the same content, but not requiring a simulation (structured similarly to the common problem set questions). Students worked in cooperative groups of three to complete the problem sets. I assigned groups such that each group ideally had at least one student who had completed Biopsychology and a mix of majors. Students worked with the same group for the first half of the semester; I then reassigned groups based on the same parameters and they worked with their new group for the second half of the semester. Each group turned in one assignment, either at the end of the class period (if the group had completed the questions) or at the beginning of the next class period (if they needed additional time).

The Appendix includes sample questions for both sections of the course. These questions were the ones that SIM students commented on most in the end-of-semester surveys.

Assessments and Surveys

I compared performance on each exam between the two sections of the course using independent t-tests. Similarly, performance on specific final exam questions relating directly to simulation/problem questions was also compared in this way. When comparing performance on multiple-choice questions or whether specific information was included in an answer, I used a chi-squared test.
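As a rough illustration of the two tests named above, the following Python sketch computes a pooled-variance independent-samples t statistic and a Pearson chi-squared statistic for a 2 × 2 table using only the standard library. The scores and counts are hypothetical placeholders, not data from this course. The text does not state whether equal variances were assumed or whether a continuity correction was applied, so both choices here are assumptions.

```python
import math
from statistics import mean, variance


def pooled_t(a, b):
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se, df


def chi2_2x2(table):
    """Pearson chi-squared (no continuity correction) for a 2x2 table; returns (chi2, p)."""
    n = sum(sum(r) for r in table)
    row_totals = [sum(r) for r in table]
    col_totals = [sum(c) for c in zip(*table)]
    chi2 = 0.0
    for i, r in enumerate(table):
        for j, observed in enumerate(r):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Hypothetical exam scores (percent) for two small sections.
prob_scores = [82, 91, 87, 79, 93, 85]
sim_scores = [78, 85, 90, 72, 88, 81]
t, df = pooled_t(prob_scores, sim_scores)
print(f"t({df}) = {t:.2f}")

# Hypothetical counts of [correct, incorrect] answers per section.
chi2, p = chi2_2x2([[18, 4], [21, 3]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.3f}")
```

The same `pooled_t` function could be applied to the Likert ratings described below, with the caveat that treating ordinal ratings as interval data is itself an analytic choice.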

At the end of the semester, I asked students to complete anonymous surveys about their experiences with problem sets in the course. On Likert scale questions answered by all students (0 = strongly disagree to 4 = strongly agree), student responses between the two sections of the course were compared using independent t-tests. All students answered the following questions:


1) Students rated the following statements on a 5-point Likert scale (strongly disagree to strongly agree):

Problem sets enhanced my ability to understand course material.

Problem sets enhanced my ability to apply course material to new situations.

Problem sets enhanced my ability to talk with others about neuroscience concepts.

My performance on tests was improved by the time I spent on problem sets.

Problem sets were worth my time and effort.

Problem sets were frustrating.

I would recommend that Dr. Northcutt continue to use problem sets for this course in the future.

2) List 2–3 topics/concepts in the course that problem sets particularly helped you understand.

3) Do you have any suggestions for improving problem sets in the future?

4) Do you have any other comments about the problem sets?

Students in the SIM section also answered the following questions:


5) Students rated the following statements on a 5-point Likert scale (strongly disagree to strongly agree):

Simulations enhanced my ability to understand course material.

Simulations enhanced my ability to apply course material to new situations.

My performance on tests was improved by the time I spent on simulations.

Simulations were worth my time and effort.

Simulations were frustrating.

I would recommend that Dr. Northcutt continue to use simulations for this course in the future.

6) Which simulation was most helpful for you? Why?

7) Which simulation was most problematic for you? Why?

RESULTS

Student Performance on Exams

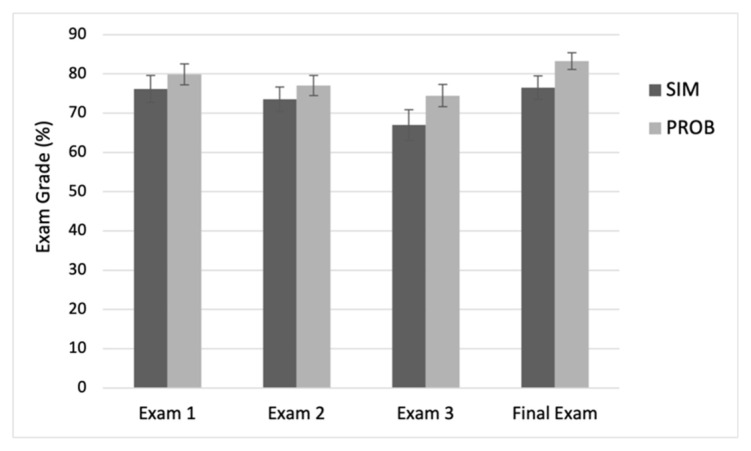

Students in the PROB class had higher exam averages, but there were no significant differences between the two sections on any exam (Figure 1; Exam 1: t(42) = 0.86, p = 0.394; Exam 2: t(42) = 0.87, p = 0.388; Exam 3: t(42) = 1.56, p = 0.127; Final Exam: t(42) = 1.86, p = 0.070). Students earned almost identical grades on problem sets between the two sections.

Figure 1.

Student performance on all four exams in the course, including the cumulative final exam. Although the average was slightly higher for the PROB section on all four exams, there were no significant differences between sections on any exam. Error bars represent one standard error of the mean.

Specific Questions on the Final Exam

When I looked at student performance on specific questions on the final exam, I found that all students performed very well on questions related to simulations or related PROB questions (see Appendix for these problem set questions). On a multiple-choice question requiring students to identify the underlying biological basis of the absolute refractory period, 91% of SIM students and 100% of PROB students selected the correct answer (not significantly different). Similarly, on a multiple-choice question that required students to analyze a scenario and recognize it as an example of spatial summation, 86% of SIM students and 92% of PROB students answered correctly (not significantly different). Interestingly, on a short answer question that asked students to describe the function of the basal ganglia in regulating movement, PROB students performed better than SIM students (out of 3 points, PROB students scored 2.875 ± 0.069 and SIM students scored 2.045 ± 0.213; t(44) = 3.84, p < 0.001). Furthermore, more PROB students included a thorough and accurate description of basal ganglia circuitry in their answer (38% vs. 14%), though the groups were not significantly different.
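The short-answer comparison can be approximately re-derived from the summary statistics alone. The sketch below assumes that the ± values are standard errors of the mean (as in the figure error bars), that a pooled-variance t-test was used, and that the group sizes were 24 (PROB) and 22 (SIM). These sizes are not stated in the text; they are chosen only because they are consistent with the reported 44 degrees of freedom. The function name is hypothetical.

```python
import math


def t_from_summary(mean1, sem1, n1, mean2, sem2, n2):
    """Pooled-variance t statistic from means, SEMs, and group sizes; returns (t, df)."""
    var1 = (sem1 ** 2) * n1  # SD^2 = SEM^2 * n
    var2 = (sem2 ** 2) * n2
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Reported means and SEMs; the group sizes (24, 22) are assumptions.
t, df = t_from_summary(2.875, 0.069, 24, 2.045, 0.213, 22)
print(f"t({df}) = {t:.2f}")
```

Under these assumptions the result lands close to the reported t(44) = 3.84; other assumed group sizes would shift the value slightly.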

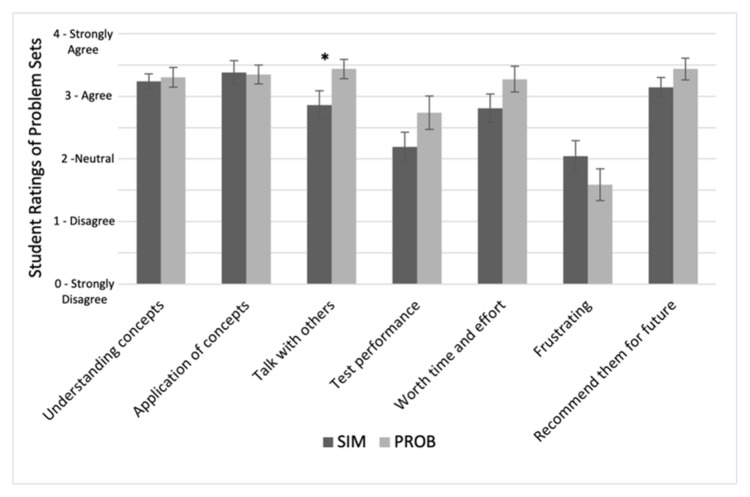

Student Feedback

When asked about the assignments as a whole, students in both sections felt that they were helpful in understanding course concepts, helped them learn to apply course material, were worth spending class time on, and recommended that I continue to use them in the future (Figure 2; Table 2). Interestingly, students in the PROB section felt that assignments enhanced their abilities to talk with others about neuroscience more than those in the SIM group (t(42) = 2.12, p = 0.039). There were no other significant differences in student perceptions between the two sections.

Figure 2.

Student assessment of the degree to which problem sets improved their understanding of course material, ability to apply concepts, ability to talk with others, and test performance, as well as their perception of being worthwhile, frustrating, and recommended for the future. Ratings were on a 5-point Likert scale (0 = strongly disagree, 2 = neutral, 4 = strongly agree). PROB students reported that the problem sets increased their ability to talk with others about course concepts more than SIM students did. Error bars represent one standard error of the mean.

Table 2.

Student comments about problem sets in general on anonymous end-of-semester surveys.

| Question | SIM students | PROB students |
|---|---|---|
| List 2–3 topics/concepts in the course that problem sets particularly helped you understand. **Numbers in parentheses indicate the number of students mentioning this concept/skill.** | Concepts/skills mentioned: Designing experiments (10); Receptive fields (4); Sensory neural pathways (5); Transduction pathways and disruption of these steps (5); Action potentials (4); Hypothetical dysfunctions/related diseases and disorders (2); Sexual differentiation (2); Lateral inhibition (1); Basal ganglia functioning (1); Synaptic plasticity (1); Long-term potentiation (1); Neurotransmitter release and receptor activation (1); Interactions between concepts (putting the pieces together) (2); Apply information to bigger concepts/relatable situations (2) | Concepts/skills mentioned: Designing experiments (6); Receptive fields (5); Sensory neural pathways (11); Transduction pathways and disruption of these steps (2); Action potentials (4); Hypothetical dysfunctions/related diseases and disorders (1); Sexual differentiation (4); Lateral inhibition (1); Basal ganglia functioning (1); Synaptic plasticity (4); Long-term potentiation (2); Neurotransmitter release and receptor activation (2); Hunger regulation (4); Learning and memory (5); Motor systems (1) |
| Do you have any suggestions for improving assignments in the future? | Selected comments: “Focusing more in-depth on trickier parts of material… than trying to cover the breadth [of a topic].” “I think they would be improved by completing them outside of class and coming to class to discuss/compare answers.” “I would recommend using Neuronify for lateral inhibition, but I would not recommend it for other concepts.” “I think Neuronify and NEURON are good learning tools that help visualize this info, but most of the time it took more time to set up the simulation than to understand it. I recommend in the future that you display [simulations] on the projector to the whole class rather than us wasting time getting frustrated setting it up.” “Skip the computer software.” “I am content with the problem sets.” “Make the questions more specific/clear.” | Selected comments: “More take home/individual problem sets.” “I think working on them individually with the option to talk to classmates could be helpful.” “Honestly, I found the problem sets extremely helpful.” “More flow charts and pathway questions.” “I would suggest assigning more problem sets that are shorter as homework to have more time to learn from them.” “Mix up groups every time.” |
| Do you have any other comments about the assignments? | Selected comments: “[They were] very good preparation for written questions on tests because they were less about memorizing and more about understanding and applying.” “Assignments effectively taught me the material and testing strategies while not having the stress a quiz can cause.” “Helped me think about the topics critically.” “They greatly assisted with synthesizing info and comprehension.” “Questions about pathways REALLY helped me put together information and form a sort of story in my head.” “I think they’re very helpful and keep students up to date with material.” | Selected comments: “I would prefer to do them individually so that I can synthesize the info at my own pace.” “I really enjoyed them and found them to be helpful.” “Have more!” “Working in groups was helpful as it allowed for feedback from multiple people.” “They were helpful in requiring integration of information across broad topics.” “Changing groups mid-semester threw a cog in the gear.” |

When specifically asked about the simulations, students reported that the simulations helped them understand course material, but the average student response fell close to “neutral” on the other questions (Figure 3). Student comments were quite illuminating in making sense of these data (Table 3). While some students seemed to recognize a benefit from the simulations, many were frustrated with the software. Interestingly, there was a wide array of answers on which simulation was most helpful.

Figure 3.

SIM student assessment of the degree to which simulations in particular improved their understanding of course material, ability to apply concepts, and test performance, as well as their perception of being worthwhile, frustrating, and recommended for the future. Ratings were on a 5-point Likert scale (0 = strongly disagree, 2 = neutral, 4 = strongly agree). Error bars represent one standard error of the mean.

Table 3.

Student comments about simulations on anonymous end-of-semester problem set surveys.

| Question | Responses |
|---|---|
| Which simulation was most helpful for you? Why? | Frequency of students mentioning the following simulations: “It was helpful to see summation on the simulation because you could get a feel for how quickly it needed to occur.” “The simulation showing lateral inhibition was the most helpful because I could see the firing pattern of each neuron that was difficult to understand during lecture.” “[Lateral inhibition] because it helped me visualize the pathway.” “The basal ganglia pathway. The pathway is overall difficult for me to memorize and seeing how it function helped me to understand it.” “The basal ganglia loop because it was cool to see how all of the different neurons interact – this circuit was much more complicated than the others we simulated.” |
| Which simulation was most problematic for you? Why? | Frequency of students mentioning the following simulations: “Ones where we had to build a whole [network] because the software was hard to navigate.” “Lateral inhibition was annoying because there were so many steps.” “All of them were frustrating.” |

DISCUSSION

Overall, students in both sections of the course mastered course concepts, performed well on assessments, and reported that the cooperative group assignments helped them succeed. Interestingly, there were very few differences between the two sections of the course, suggesting that the incorporation of simulations is a good alternative approach to more traditional problem sets but that neither is inherently superior for student learning in this particular course. In particular, on specific questions that addressed simulation-related content on the final exam, there were few differences between the two groups. On one particular question, about the function of the basal ganglia, the PROB group outperformed the SIM group. I do not know what accounts for this difference; it could be that analyzing a figure in the textbook was more useful than composing a network via the simulation. There could be other factors at play. For example, the PROB section final exam was scheduled a day earlier than the SIM exam, and the SIM class may have been more fatigued and not taken as much time to write thorough answers (this was the second-to-last question on the exam). Overall, however, the data indicate that the use of simulations can be just as effective in this learning environment.

Student perceptions of problem sets were quite similar between the SIM and PROB groups. The only significant difference between the two involved the PROB group reporting that problem sets helped them learn to talk with others about scientific concepts more than the SIM group did. I cannot explain this finding based on the design of the assignments, as both encouraged group discussion of concepts. The difference may lie in the previous experiences of the two groups; the SIM group may have felt more comfortable discussing some of these concepts with their peers prior to taking the course, particularly because two thirds of them had taken Biopsychology (compared to 30% in the PROB group). Additionally, although there was no significant difference in exam performance or perceived help preparing for exams, SIM students did have slightly lower exam averages and reported that problem sets were less helpful preparation. Because these students had already received the first three exam grades for the semester when they completed the survey, student perceptions may have been skewed by this feedback. It would be interesting to add additional surveys prior to each exam to see if this trend continued.

Interestingly, when students reported the 2–3 concepts and skills that they gained most from the assignments, the SIM students mentioned experimental design much more often (45% vs. 25% of students). I did ask some scientific process-related questions on the problem set questions common to both groups, which included asking students to design experiments to test a given hypothesis, predict results, or explain seemingly conflicting evidence. However, the SIM students also had the opportunity to run models, collect data, and visualize experiments. Furthermore, they could get instant feedback on their ideas through trial-and-error, rather than waiting for me to point out potential pitfalls when grading their problem sets. Although I did not directly assess students’ ability to design or analyze experiments, it is encouraging that SIM students recognized their skill development in these areas. Given that many undergraduate neuroscience courses do not include laboratories, simulations may be a particularly effective way to incorporate some of the same skill development that would typically happen in laboratory-based courses.

Student perceptions of the simulations were quite mixed. While SIM students overall reported that simulations tended to help them understand course concepts, they did not rate simulations in particular as highly as the problem sets as a whole (2.77 vs. 3.24). On other measures of how simulations aided their concept and skill development, students reported scores close to “neutral,” and they seemed to be neutral on whether to recommend simulations in the future. Others have found that students respond positively to simulations when they are related to certain types of material, including cellular neurophysiology, but prefer other types of assignments when learning other concepts (Lewis, 2014). The focus of NEURON simulations is electrophysiological properties, whereas Neuronify focuses on network properties. Ideally both simulations could be used in one semester to provide students with these experiences, and it is possible that students would have reported overwhelmingly positive feedback had they been able to use NEURON without glitches. Student preferences for particular simulations varied widely, so it is unclear whether students would have gained more from additional neurophysiology simulations rather than network-based ones. Neural network-based simulations have been beneficial for student conceptual learning (Fink, 2017), and so it is possible that students do not have an accurate perception of the degree to which they benefit from such simulations.

It is also important to note that some SIM students clearly felt more comfortable with the technology than others. This may also have contributed to their reluctance to endorse the use of simulations in the future, as the ease of use does affect student ratings of simulations (Lewis, 2014). Although students seemed to work well with their group members rather quickly, additional scaffolding of student roles within the group may have helped students become acquainted with the technology. For example, Nichols (2015) suggests that when students alternated controlling a MATLAB-based simulation, they became more comfortable than when watching their peers. I encouraged all students to run the simulations or at least take turns, but students had different access to technology (for example, some could not consistently bring a computer or tablet to class and preferred to watch a groupmate run the simulation). In the future, additional structure may improve the experience of some students within the course.

Furthermore, initial student frustration with NEURON may have led to reluctance to use simulations across the board. Approximately half of the students in the class had problems either downloading NEURON or running simulations once it was downloaded. Because my survey questions asked about simulations as a whole instead of having them specifically focus on Neuronify, positive aspects of Neuronify may have been cancelled out by their trouble with NEURON. In the future, having a small group of students troubleshoot the software prior to the beginning of the semester will be critical for success so that students do not have initial frustrations and lose motivation.

Finally, the difference in student populations between the two sections of the course cannot be discounted when examining these results. It is possible that simulations would have been more successful with the PROB group, which contained more biology majors. As neuroscience majors at Mercer typically take fewer laboratory classes and often have less familiarity with virtual labs than biology majors, the learning curve may have been steeper and the time required for success with simulations longer. Student population and familiarity with this type of learning are important to consider when building a course with simulations so that the proper amount of time can be allotted to these assignments. This is also an area for further research, as neuroscience courses often have students coming from diverse academic backgrounds; future studies should examine whether simulations are more effective with students who have more previous biology or laboratory coursework.

In conclusion, cooperative problem-solving, both with and without the incorporation of simulations, can be an effective teaching method in undergraduate neuroscience courses. Simulations do have advantages in that they allow students more opportunities to gain scientific process skills, but they can also frustrate students who are not as familiar with certain types of technology. Structuring simulations and assigning and rotating student roles may overcome some of these limitations.

APPENDIX. SAMPLE QUESTIONS

Note that simulations became more complex as we progressed through the semester and students became more familiar with Neuronify.

Topic: Refractory periods

SIM Instructions/Question (Figure A1)

Open Neuronify. Go to New and select “Tutorial 1: Single cell” (if using the App, go to Examples to find the Tutorial). Read an explanation for all of the parts of this tutorial.

Select the DC current source (the orange box with an up arrow in it) and at the bottom left corner of the window, you should see “DC current source Properties.” Click on this and change the magnitude of current injected into this neuron. What happens?

What is the threshold of current needed to produce an action potential?

Model the relative refractory period: Change the Current output back to 0.3, then select the neuron and change its properties such that its resting membrane potential is −75 mV (this is under the Potentials tab). Then, find the new threshold of current needed to produce an action potential. What is this threshold?

PROB Question

Compare and contrast the absolute and relative refractory periods. Pay particular attention to what’s going on in terms of cellular/molecular events in the neuron to cause these periods. How would you expect the refractory periods to change if voltage-gated sodium channels inactivated more slowly than is typical?

Figure A1.

Neuronify screen showing how neuron properties can be altered. In addition to changing the threshold and resting membrane potential, the duration of the refractory period and capacitance and resistance of the membrane can also be altered.
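The idea behind this exercise can also be sketched outside Neuronify with a generic leaky integrate-and-fire neuron. The Python sketch below is not Neuronify's implementation; the membrane resistance, capacitance, threshold, and current units are hypothetical values chosen for illustration. It scans the injected current upward until the model neuron first spikes, and it shows that lowering the resting potential to −75 mV raises the current needed to reach threshold.

```python
def fires(current_na, v_rest=-65.0, v_thresh=-50.0, r_mohm=100.0,
          c_nf=0.1, t_max_ms=200.0, dt_ms=0.1):
    """Return True if a leaky integrate-and-fire neuron spikes within t_max_ms.

    Units: mV, nA, megaohm, nF, ms (so tau = R * C is in ms). All defaults
    are hypothetical illustration values, not Neuronify defaults.
    """
    v = v_rest
    tau_ms = r_mohm * c_nf
    for _ in range(int(t_max_ms / dt_ms)):
        # Forward-Euler step of tau * dV/dt = -(V - V_rest) + R * I
        v += dt_ms * (-(v - v_rest) + r_mohm * current_na) / tau_ms
        if v >= v_thresh:
            return True
    return False


def threshold_current(v_rest, step_na=0.01, max_na=1.0):
    """Smallest scanned current (nA) that triggers a spike, or None."""
    for k in range(1, int(round(max_na / step_na)) + 1):
        current = k * step_na
        if fires(current, v_rest=v_rest):
            return current
    return None


for rest in (-65.0, -75.0):
    print(f"V_rest = {rest} mV -> threshold current ~ {threshold_current(rest):.2f} nA")
```

In this simplified model the threshold current is approximately (V_thresh − V_rest) / R, so hyperpolarizing the resting potential raises the current needed to fire, which is the qualitative effect the exercise asks students to observe.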

Topic: Synaptic Integration

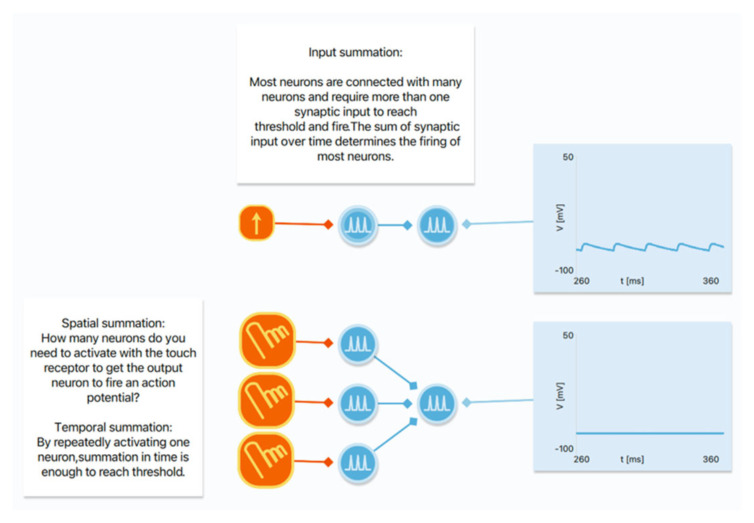

SIM Instructions/Question (Figure A2)

Open Neuronify and open the Summation Example (it’s in the last category under Examples if you’re using the app). You can see that in the top scenario, one presynaptic neuron firing occasional action potentials is not enough to reach action potential threshold in the second neuron.

They’ve added touch activators (the three orange boxes with fingers) so that every time you touch/click on this box, the neuron it’s connected to has an action potential. Play with these so you get a feel for how they work. Using spatial summation, how many neurons must be active (almost simultaneously) to get the postsynaptic neuron to action potential threshold? (experiment with the timing of your clicks)

Using temporal summation, how many sequential trains of action potentials do you need to reach action potential threshold in the postsynaptic neuron?

Add a leaky inhibitory neuron and connect it to one of the existing touch activators and the postsynaptic neuron (when you click on the touch receptor a new line will appear, and you can drag it to the inhibitory neuron; when you click on the new neuron, a new line [axon] will appear and you can drag it to the neuron on the right). What happens when you activate this touch receptor now? Explain this finding.

PROB Question

Compare and contrast the mechanisms and roles of temporal summation with that of spatial summation (don’t forget about inhibitory synapses). Give a scenario in which summation may determine whether or not a specific outcome occurs.
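Spatial and temporal summation can be illustrated with a very small model in which each presynaptic spike adds a fixed postsynaptic potential that then decays back toward rest. The Python sketch below is an assumption-laden toy, not Neuronify's model: the resting potential, threshold, time constant, and 6 mV postsynaptic potential size are hypothetical values, and the function names are invented for this example.

```python
import math
from collections import defaultdict

V_REST, V_THRESH, TAU_MS = -65.0, -50.0, 10.0  # hypothetical values
PSP_MV = 6.0  # hypothetical size of one excitatory postsynaptic potential


def reaches_threshold(events):
    """events: list of (time_ms, psp_mv); negative psp_mv is inhibitory.

    Inputs arriving at the same time are summed first (spatial summation);
    between arrival times the potential decays exponentially toward rest.
    """
    totals = defaultdict(float)
    for t, psp in events:
        totals[t] += psp
    v, last_t = V_REST, None
    for t in sorted(totals):
        if last_t is not None:
            v = V_REST + (v - V_REST) * math.exp(-(t - last_t) / TAU_MS)
        v += totals[t]
        if v >= V_THRESH:
            return True
        last_t = t
    return False


def min_simultaneous_inputs(max_inputs=20):
    """Fewest simultaneous excitatory inputs that reach threshold."""
    for n in range(1, max_inputs + 1):
        if reaches_threshold([(0.0, PSP_MV)] * n):
            return n
    return None


def min_train_length(interval_ms, max_spikes=50):
    """Fewest spikes from ONE input, spaced interval_ms apart, that reach threshold."""
    for n in range(1, max_spikes + 1):
        if reaches_threshold([(k * interval_ms, PSP_MV) for k in range(n)]):
            return n
    return None


print("spatial:", min_simultaneous_inputs())
print("temporal, 2 ms apart:", min_train_length(2.0))
print("temporal, 20 ms apart:", min_train_length(20.0))
print("3 excitatory + 1 inhibitory:",
      reaches_threshold([(0.0, PSP_MV)] * 3 + [(0.0, -PSP_MV)]))
```

With these hypothetical parameters, closely spaced spikes from a single input can sum to threshold while widely spaced spikes cannot, and a simultaneous inhibitory input can cancel one excitatory input.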

Topic: Lateral Inhibition

SIM Instructions/Question (Figure A3)

Open Neuronify and open the Lateral Inhibition 1 model. This illustrates how lateral inhibition works in the somatosensory system.

Explain in your own words what this simulation demonstrates, including the activity of the three neurons in the input layer (the left column, which represents the first neuron in the pathway) and the activity of the three neurons in the output layer (the right column, which represents the neurons that go from the dorsal column nucleus to the thalamus). How does this contribute to our ability to perceive stimuli on our body?

Adjust the properties of inhibitory neurons in the network. How did this alter lateral inhibition? In reality, each neuron inhibits its neighbors, and you can see more of the complexity in Lateral Inhibition 2.

Figure A2. The set-up of the summation simulation in Neuronify. Activation of touch receptors in the bottom half of the simulation can be used to illustrate both spatial and temporal summation. Neuron characteristics can be modified, and neurons (excitatory or inhibitory) can be added or eliminated.

Figure A3. The set-up of the lateral inhibition example in Neuronify. Blue neurons are excitatory and pink neurons are inhibitory. Neuron properties can be modified, and axonal connections can be added or eliminated.

PROB Question

Explain the process of lateral inhibition (include a diagram in your answer). What does this contribute to our ability to perceive somatosensory stimuli? How would you expect perceptual abilities to change if lateral inhibition did not exist?
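The contrast-sharpening effect of lateral inhibition can be shown with a simple firing-rate calculation. This Python sketch is an illustration under stated assumptions, not a reproduction of the Neuronify model: the function name `lateral_inhibition`, the inhibitory weight `w_inhib`, and the input rates are hypothetical. Each output unit is excited by its own input and inhibited in proportion to the activity of its immediate neighbors.

```python
def lateral_inhibition(inputs, w_inhib=0.3):
    """Output rates after each unit is inhibited by its immediate neighbors.

    inputs: firing rates of the input layer (arbitrary units).
    w_inhib: assumed strength of inhibition from each neighbor.
    """
    outputs = []
    n = len(inputs)
    for i, rate in enumerate(inputs):
        neighbors = 0.0
        if i > 0:
            neighbors += inputs[i - 1]
        if i < n - 1:
            neighbors += inputs[i + 1]
        # Firing rates cannot be negative, so clip at zero.
        outputs.append(max(0.0, rate - w_inhib * neighbors))
    return outputs


stimulus = [4.0, 10.0, 4.0]   # strongest touch on the middle receptor
print("without inhibition:", lateral_inhibition(stimulus, w_inhib=0.0))
print("with inhibition:   ", lateral_inhibition(stimulus))
```

With inhibition switched on, the ratio of the center output to the flanking outputs is larger than the same ratio in the input layer, which is the sharpening that supports localization of a stimulus on the skin.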

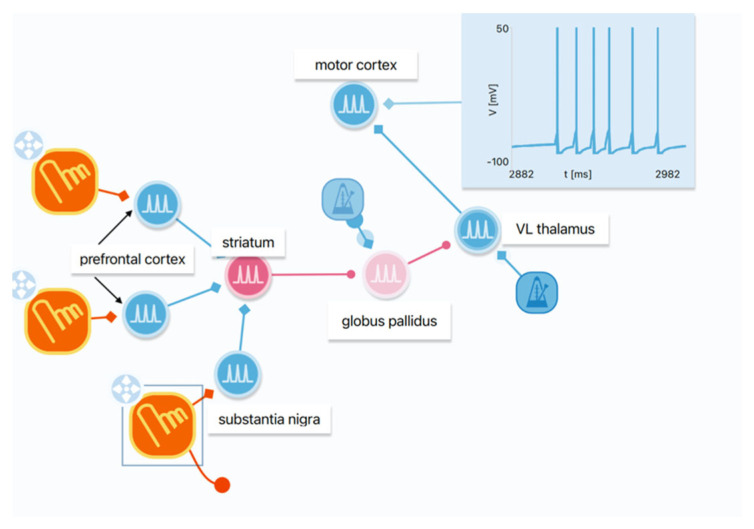

Topic: Basal Ganglia Direct Loop

SIM Instructions/Question (Figure A4)

Using Neuronify, build the direct basal ganglia motor loop, using the figure in your textbook as a guide. All of your neurons should be “leaky” neurons. Attach regular spike generators to the GPi neurons and to the VL thalamus neurons (these are typically spontaneously active). Attach a touch activator to the prefrontal cortex and substantia nigra neurons. Attach a voltmeter to the motor cortex neurons. You will need to adjust the parameters of the spike generators in order to achieve typical basal ganglia function.

Click on the touch activator to excite your prefrontal cortex neuron. When prefrontal cortex neurons sufficiently activate the putamen (think of this as saying “there is sufficient activity to justify this movement”), this facilitates premotor cortex activity to proceed with the movement. Explain this process using your model. Also include a screenshot of your model.

PROB Question

When prefrontal cortex neurons sufficiently activate the putamen (think of this as saying “there is sufficient activity to justify this movement”), this facilitates premotor cortex activity to proceed with the movement. Explain this process and include all of the populations of neurons in the direct basal ganglia loop and how their activity changes to cause this effect.

Figure A4. Example of direct basal ganglia loop model built in Neuronify. The activity of motor cortex neurons is measured; their action potential frequency will drastically increase if inputs to the striatum are activated by clicking on the touch receptors (orange squares).
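The disinhibition at the heart of the direct loop can be sketched as a chain of firing rates. The Python code below is a simplified illustration, not the Neuronify model shown in Figure A4: the function name `direct_loop`, the tonic rates, and the unit connection weights are assumptions, and substantia nigra input is treated as excitatory onto the putamen, as in the standard textbook description of the direct pathway.

```python
def relu(x):
    """Firing rates cannot be negative."""
    return max(0.0, x)


def direct_loop(cortex_drive, snc_drive=0.0, gpi_tonic=8.0, vl_tonic=10.0):
    """Rates (arbitrary units) in a toy direct basal ganglia loop.

    cortex_drive: excitatory input from prefrontal cortex to the putamen.
    snc_drive: assumed excitatory substantia nigra input to the putamen.
    gpi_tonic, vl_tonic: assumed spontaneous rates of GPi and VL thalamus.
    """
    putamen = relu(cortex_drive + snc_drive)   # excited by cortex and substantia nigra
    gpi = relu(gpi_tonic - putamen)            # inhibited by the putamen
    vl_thalamus = relu(vl_tonic - gpi)         # inhibited by GPi
    motor_cortex = vl_thalamus                 # excited by VL thalamus
    return {"putamen": putamen, "gpi": gpi,
            "vl_thalamus": vl_thalamus, "motor_cortex": motor_cortex}


print("at rest:       ", direct_loop(cortex_drive=0.0))
print("cortex active: ", direct_loop(cortex_drive=6.0))
```

Activating the putamen lowers GPi output, which releases VL thalamus from inhibition and raises motor cortex activity; two inhibitory steps in series produce a net facilitation.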

REFERENCES

- Arthurs LA, Kreager BZ. An integrative review of in-class activities that enable active learning in college science classroom settings. Int J Sci Educ. 2017;39(15):2073–2091.

- Bish JP, Schleidt S. Effective use of computer simulations in an introductory neuroscience laboratory. J Undergrad Neurosci Educ. 2008;6(2):A64–A67.

- Chang C, Chang M, Chiu B, Liu C, Chiang SF, Wen C, Hwang F, Wu Y, Chao P, Lai C, Wu S, Chang C, Chen W. An analysis of student collaborative problem solving activities mediated by collaborative simulations. Comput Educ. 2017;114:222–235.

- Crisp KM. A structured-inquiry approach to teaching neurophysiology using computer simulation. J Undergrad Neurosci Educ. 2012;11(1):A132–A138.

- Dragly S-A, Mobarhan MH, Solbrå AV, Tennøe S, Hafreager A, Malthe-Sørenssen A, Fyhn M, Hafting T, Einevoll GT. Neuronify: an educational simulator for neural circuits. eNeuro. 2017;4(2):e0022-17.2017. doi: 10.1523/ENEURO.0022-17.2017.

- Faust JL, Paulson DR. Active learning in the college classroom. J Excell Coll Teach. 1998;9(2):3–24.

- Ferreira MM, Trudel AR. The impact of problem-based learning (PBL) on student attitudes toward science, problem-solving skills, and sense of community in the classroom. J Classr Interact. 2012;47(1):23–30.

- Fink CG. An interactive simulation program for exploring computational models of auto-associative memory. J Undergrad Neurosci Educ. 2017;16(1):A1–A5.

- Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, Wenderoth MP. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci. 2014;111(23):8410–8415. doi: 10.1073/pnas.1319030111.

- Gehring KM, Eastman DA. Information fluency for undergraduate biology majors: applications of inquiry-based learning in a developmental biology course. CBE Life Sci Educ. 2008;7(1):54–63. doi: 10.1187/cbe.07-10-0091.

- Gormally C, Brickman P, Hallar B, Armstrong N. Effects of inquiry-based learning on students’ science literacy skills and confidence. International Journal for the Scholarship of Teaching and Learning. 2009;3(2):16.

- Hall DA, McCurdy DW. A comparison of a biological sciences curriculum study (BSCS) laboratory and a traditional laboratory on student achievement at two private liberal arts colleges. J Res Sci Teach. 1990;27(7):625–636.

- Johnson D, Johnson R. Learning together and alone: overview and meta-analysis. Asia Pacific J Educ. 2002;22(1):95–105.

- Keen-Rhinehart E, Eisen A, Eaton D, McCormack K. Interactive methods for teaching action potentials, an example of teaching innovation from neuroscience postdoctoral fellows in the fellowships in research and science teaching (FIRST) program. J Undergrad Neurosci Educ. 2009;7(2):A74–A79.

- Kyndt E, Raes E, Lismont B, Timmers F, Cascallar E, Dochy F. A meta-analysis of the effects of face-to-face cooperative learning. Do recent studies falsify or verify earlier findings? Ed Res Rev. 2013;10:133–149.

- Latimer B, Bergin D, Guntu V, Schulz D, Nair S. Open source software tools for teaching neuroscience. J Undergrad Neurosci Educ. 2018;16(3):A197–A202.

- Lewis DI. The pedagogical benefits and pitfalls of virtual tools for teaching and learning laboratory practices in the Biological Sciences. Heslington, UK: The Higher Education Academy; 2014.

- Liu CC, Hsieh IC, Wen CT, Chang MH, Chiang SHF, Tsai M, Chang CJ, Hwang FK. The affordances and limitations of collaborative science simulations: the analysis from multiple evidences. Comput Educ. 2021;160:104029.

- Lorenz S, Egelhaaf M. Curricular integration of simulations in neuroscience - instructional and technical perspectives. Brains, Minds & Media. 2008;3:1427.

- Luckie DB, Maleszewski JJ, Loznak SD, Krha M. Infusion of collaborative inquiry throughout a biology curriculum increases student learning: a four-year study of “Teams and Streams”. Adv Physiol Educ. 2004;28(1–4):199–209. doi: 10.1152/advan.00025.2004.

- Mawhirter DA, Garofalo PF. Expect the unexpected: simulation games as a teaching strategy. Clin Simul Nurs. 2016;12(4):132–136.

- Nichols DF. A series of computational neuroscience labs increases comfort with MATLAB. J Undergrad Neurosci Educ. 2015;14(1):A74–A81.

- Schettino LF. NeuroLab: A set of graphical computer simulations to support neuroscience instruction at the high school and undergraduate level. J Undergrad Neurosci Educ. 2014;12(2):A123–A129.

- Smallhorn M, Young J, Hunter N, Burke da Silva K. Inquiry-based learning to improve student engagement in a large first year topic. Student Success. 2015;6(2):65–71.

- Springer L, Stanne ME, Donovan SS. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: a meta-analysis. Rev Ed Res. 1999;69(1):21–51.

- Tanner K, Chatman LS, Allen D. Approaches to cell biology teaching: cooperative learning in the science classroom—beyond students working in groups. Cell Biol Educ. 2003;2(1):1–5. doi: 10.1187/cbe.03-03-0010.

- Wolfe U. Successful integration of interactive neuroscience simulations into a non-laboratory sensation & perception course. J Undergrad Neurosci Educ. 2009;7(2):A69–A73.