Abstract

Based on Salp Swarm Algorithm (SSA) and Slime Mould Algorithm (SMA), a novel hybrid optimization algorithm, named Hybrid Slime Mould Salp Swarm Algorithm (HSMSSA), is proposed to solve constrained engineering problems. SSA can obtain good results in solving some optimization problems. However, it is easy to suffer from local minima and lower density of population. SMA specializes in global exploration and good robustness, but its convergence rate is too slow to find satisfactory solutions efficiently. Thus, in this paper, considering the characteristics and advantages of both the above optimization algorithms, SMA is integrated into the leader position updating equations of SSA, which can share helpful information so that the proposed algorithm can utilize these two algorithms' advantages to enhance global optimization performance. Furthermore, Levy flight is utilized to enhance the exploration ability. It is worth noting that a novel strategy called mutation opposition-based learning is proposed to enhance the performance of the hybrid optimization algorithm on premature convergence avoidance, balance between exploration and exploitation phases, and finding satisfactory global optimum. To evaluate the efficiency of the proposed algorithm, HSMSSA is applied to 23 different benchmark functions of the unimodal and multimodal types. Additionally, five classical constrained engineering problems are utilized to evaluate the proposed technique's practicable abilities. The simulation results show that the HSMSSA method is more competitive and presents more engineering effectiveness for real-world constrained problems than SMA, SSA, and other comparative algorithms. In the end, we also provide some potential areas for future studies such as feature selection and multilevel threshold image segmentation.

1. Introduction

In recent years, metaheuristic algorithms have been widely concerned by a large number of scholars. Compared with other traditional optimization algorithms, the concept of metaheuristic algorithms is simple. Besides, they are flexible and can bypass local optima. Thus, metaheuristics have been successfully applied in different fields to solve various complex optimization problems in the real world [1–3].

Metaheuristic algorithms include three main categories: evolution-based, physics-based, and swarm-based techniques. The inspirations of evolutionary-based methods are the laws of evolution in nature. The most popular evolution-based algorithms include Genetic Algorithm (GA) [4], Differential Evolution Algorithm (DE) [5], and Biogeography-Based Optimizer (BBO) [6]. Physics-based algorithms mimic the physical rules in the universe. There are some representative algorithms such as Simulated Annealing (SA) [7], Gravity Search Algorithm (GSA) [8], Black Hole (BH) algorithm [9], Multiverse Optimizer (MVO) [10], Artificial Chemical Reaction Optimization Algorithm (ACROA) [11], Ray Optimization (RO) [12], Curved Space Optimization (CSO) [13], Sine Cosine Algorithm (SCA) [14], Arithmetic Optimization Algorithm (AOA) [15], and Heat Transfer Relation-based Optimization Algorithm (HTOA) [16]. The third category algorithm is swarm-based techniques, which simulate the social behavior of creatures in nature. Some optimization techniques of this class include Particle Swarm Optimization (PSO) [17] Ant Colony Optimization Algorithm (ACO) [18], Firefly Algorithm (FA) [19], Grey Wolf Optimizer (GWO) [20], Cuckoo Search (CS) Algorithm [21], Whale Optimization Algorithm (WOA) [22], Bald Eagle Search (BES) algorithm [23], and Aquila Optimizer (AO) [24].

It is worth noting that the most widely used swarm-based optimization algorithm is PSO [25]. PSO simulates the behavior of birds flying together in flocks. During the search, they all follow the best solutions in their paths. Cacciola et al. [26] discussed the problem of corrosion profile reconstruction starting from electrical data, in which PSO was utilized to obtain the image of the reconstructed corrosion profile. The result shows that PSO can obtain the optimal solution compared with LSM, and it takes the least time. This allows us to recognize the huge potential of the optimization algorithm.

Salp Swarm Algorithm (SSA) [27] is a swarm-based algorithm proposed in 2017. SSA is inspired by swarm behavior, navigation, and foraging of salps in the ocean. Since SSA has fewer parameters and is easier to be realized in a program than other algorithms, SSA has been applied to many optimization problems, such as feature selection, image segmentation, and constrained engineering problems. However, like other metaheuristic algorithms, SSA may be easy to trap into local minima and lower population density. Therefore, many improved researches have been proposed to enhance the performance of SSA in many fields. Tubishat et al. [28] presented a Dynamic SSA (DSSA), which shows better accuracy than SSA in feature selection. Salgotra et al. [29] proposed a self-adaptive SSA to enhance exploitation ability and convergence speed. Neggaz et al. [30] proposed an improved leader in SSA using Sine Cosine Algorithm and disrupt operator for feature selection. Jia and Lang [31] presented an enhanced SSA with a crossover scheme and Levy flight to improve the movement patterns of salp leaders and followers. There are also other attempts on the hybrid algorithm of SSA. Saafan and El-gendy [32] proposed a hybrid improved Whale Optimization Salp Swarm Algorithm (IWOSSA). The IWOSSA achieves a better balance between exploration and exploitation phases and avoids premature convergence effectively. Singh et al. [33] developed a hybrid SSA with PSO, which integrated the advantages of SSA and PSO to eliminate trapping in local optima and unbalanced exploitation. Abadi et al. [34] proposed a hybrid approach by combining SSA with GA, which could obtain good results in solving some optimization problems.

Slime Mould Algorithm (SMA) [35] is the latest swarm intelligence algorithm proposed in 2020. This algorithm simulates the oscillation mode and the foraging of Slime Mould in nature. SMA has a unique search mode, which keeps the algorithm from falling into local optima, and has superior global exploration capability. The approach has been applied in real-world optimization problems like feature selection [36], parameters optimization of the fuzzy system [37], multilevel threshold image segmentation [38], control scheme [39], and parallel connected multistacks fuel cells [40].

Therefore, based on the capabilities of both above algorithms, we try to do a hybrid operation to improve the performance of SMA or SSA and then propose a new hybrid optimization algorithm (HSMSSA) to speed up the convergence rate and enhance the overall optimization performance. The specific method is that we integrate SMA as the leader role into SSA and retain the exploitation phase of SSA. At the same time, inspired by the significant performance of opposition-based learning and quasiopposition-based learning, we propose a new strategy named mutation opposition-based learning (MOBL), which switches the algorithm between opposition-based learning and quasiopposition-based learning through a mutation rate to increase the diversity of the population and speed up the convergence rate. In addition, Levy flight is utilized to improve SMA's exploration capability and balance the exploration and exploitation phases of the algorithm. The proposed HSMSSA algorithm can improve both the exploration and exploitation abilities. The proposed HSMSSA is tested on 23 different benchmark functions and compared with other optimization algorithms. Furthermore, five constrained engineering problems are also utilized to evaluate HSMSSA's capability on real-world optimization problems. The experimental results illustrate that the HSMSSA possesses the superior capability to search the global minimum and achieve less cost engineering design results than other state-of-the-art metaheuristic algorithms.

The remainder of this paper is organized as follows. Section 2 provides a brief overview of SSA, SMA, Levy flight, and mutation opposition-based learning strategy. Section 3 describes the proposed hybrid algorithm in detail. In Section 4, the details of benchmark functions, parameter settings of the selected algorithms, simulation experiments, and results analysis are introduced. Conclusions and prospects are given in Section 5.

2. Preliminaries

2.1. Salp Swarm Algorithm

In the deep sea, salps live in groups and form a salp chain to move and forage. In the salp chain, there are leaders and followers. The leader moves towards the food and guides the followers. In the process of moving, leaders explore globally, while followers thoroughly search locally [27]. The shapes of a salp and salp chain are shown in Figure 1.

Figure 1.

Individual salp and the swarm of salp [25].

2.1.1. Leader Salps

The front salp of the chain is called the leader, so the following equation is used to perform this action to the salp leader:

| (1) |

| (2) |

where Xj1 and Fj represent the new position of the leader and food source in the jth dimension and r1 and r2 are randomly generated numbers in the interval [0, 1]. It is worth noting that c1 is essential for SSA because it balances exploration and exploitation during the search process. t is the current iteration and T is the max iteration.

2.1.2. Follower Salps

To update the position of the followers, the new concept is introduced, which is based on Newton's law of motion as in the following equation:

| (3) |

where Xji represents the position of ith follower salp in the jth dimension and g and ω0 indicate the acceleration and the velocity, respectively. The updating process of followers can be expressed as in the following equation:

| (4) |

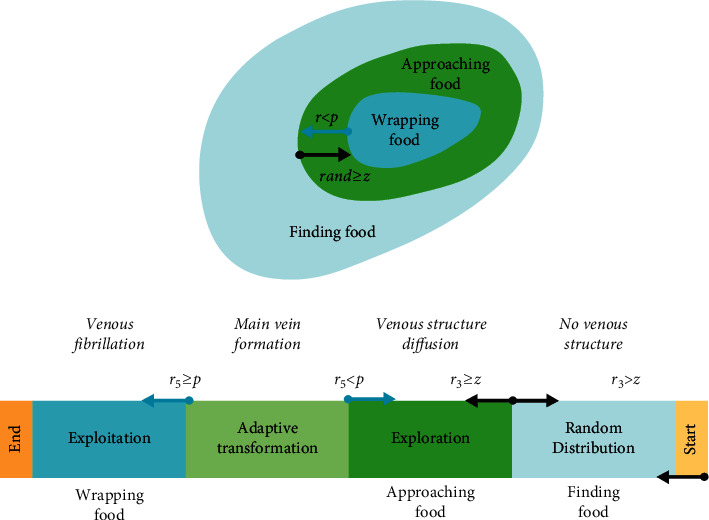

The pseudocode of SSA is presented in Algorithm 1.

Algorithm 1.

Pseudocode of Salp Swarm Algorithm.

2.2. Slime Mould Algorithm

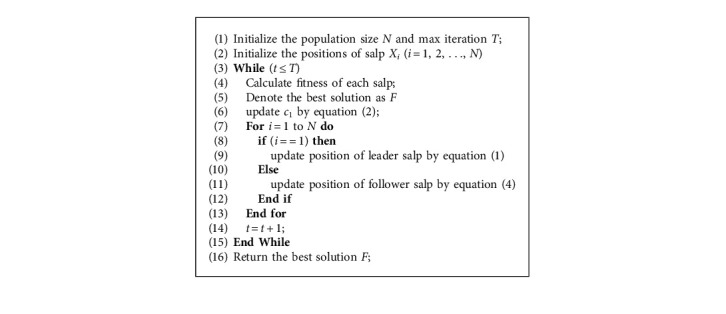

The main idea of SMA is inspired by the behavior and morphological changes of Slime Mould in foraging. Slime Mould can dynamically change search mode based on the quality of food sources. If the food source has a high quality, the Slime Mould will use the region-limited search method. If the food concentration is low, the Slime Mould will explore other food sources in the search space. Furthermore, even if Slime Mould has found a high-quality food source, it still divides some individuals to explore another area in the region [35]. The behavior of Slime Mould can be mathematically described as follows:

| (5) |

where parameters r3, r4, and r5 are random values in the range of 0 to 1. UB and LB indicate the upper and lower bound of search space. z is a constant. represents the best position obtained in all iterations, and represent two individuals selected randomly from the population, and represents the location of Slime Mould. decreases linearly from one to zero, and is an oscillation parameter in the range [−a, a], in which a is calculated as follows:

| (6) |

The coefficient is a very important parameter, which simulates the oscillation frequency of different food concentrations so that Slime Mould can approach food more quickly when they find high-quality food. The formula of is listed as follows:

| (7) |

| (8) |

where i ∈ 1, 2,…, N and S(i) represents the fitness of X. condition indicates that S(i) ranks first half of the Slime Mould, and r6 are random numbers uniformly generated in the interval of [0, 1]. bF represents the optimal fitness obtained in the current iterative process, wF represents the worst fitness value obtained in the iterative process currently, and SmellIndex denotes the sequence of fitness values sorted (ascends in the minimum value problem).

The p parameter can be described as follows:

| (9) |

where DF represents the best fitness over all iterations. Figure 2 visualizes the general logic of SMA.

Figure 2.

The steps of SMA.

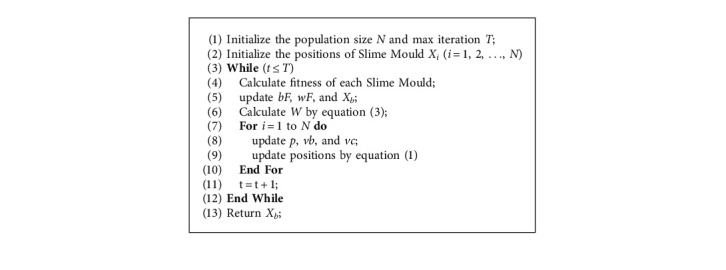

The pseudocode of SMA is presented in Algorithm 2.

Algorithm 2.

Pseudocode of Slime Mould Algorithm.

2.3. Levy Flight

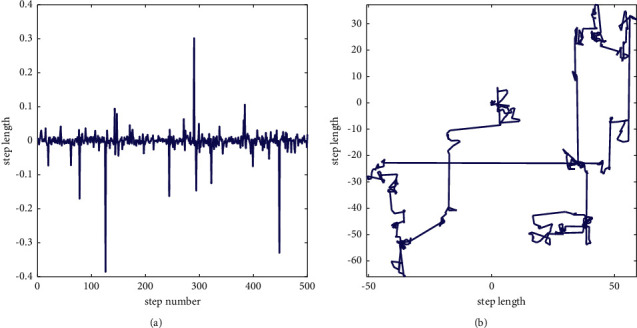

Levy flight is an effective strategy for metaheuristic algorithms, successfully designed in many algorithms [41–44]. Levy flight is a class of non-Gaussian random processes that follows Levy distribution. It alternates between short-distance and occasionally long-distance walking, which can be inferred from Figure 3. The formula of Levy flight is as follows:

| (10) |

where r7 and r8 are random values in the range of [0, 1] and β is a constant equal to 1.5.

Figure 3.

Levy distribution and 2D Levy trajectory.

2.4. Mutation Opposition-Based Learning

Opposition-based learning (OBL) was proposed by Tizhoosh in 2005 [45]. The essence of OBL is selecting the best solution to the next iteration by comparing the current solution and its opposition-based learning solution. The OBL strategy has been successfully used in varieties of metaheuristic algorithms [46–51] to improve the ability of local optima stagnation avoidance, and the mathematical expression is as follows:

| (11) |

Quasiopposition-based learning (QOBL) [52] is an improved version from OBL, which applies quasiopposite points instead of opposite points. These points produced through QOBL have more likelihood of being unknown solutions than the points created by OBL. The mathematical formula of QOBL is as follows:

| (12) |

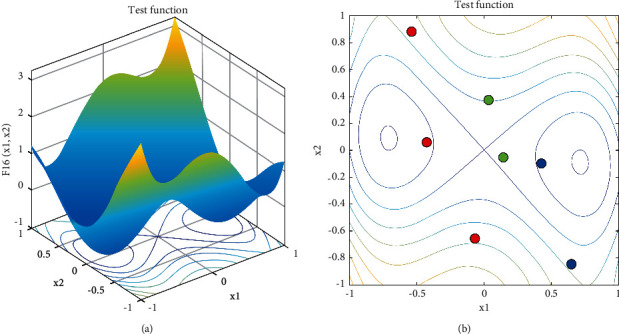

Considering the superior performance of the two kinds of opposition-based learning, we propose mutation opposition-based learning (MOBL) by combining the mutation rate with these two opposition-based learning. By selecting the mutation rate, we can give full play to the characteristics of the OBL and QOBL and effectively enhance the ability of the algorithm to jump out of the local optima. Figure 4 is an MOBL example, in which Figure 4(a) shows an objective function and Figure 4(b) displays three candidate solutions and their OBL solutions or QOBL solutions. The mathematical formula is as follows:

| (13) |

where rate is mutation rate, and we set it to 0.1.

Figure 4.

MOBL example: (a) objective function and (b) candidate solutions (red point), its OBL solution (blue point), and its QOBL solution (green point).

3. The Proposed Algorithm

3.1. Details of HSMSSA

In SSA, the population is divided into leader salps and follower salps: leader salps are the first half of salps in the chain, and follower salps follow the leader. However, the leader salp has poor randomness and is easy to fall into local optima. For the SMA algorithm, Slime Mould selects different search modes according to the positive and negative feedback of the current food concentration and has a certain probability of isolating some individuals to explore other regions in search space. These mechanisms increase the randomness of Slime Mould and enhance the ability to explore. The vb parameter is utilized to realize the oscillation mode of Slime Mould, which is in the range of [−a, a]. However, vb has the drawback of low randomness, which cannot effectively simulate the process of Slime Mould looking for food sources. Therefore, we introduce Levy flight into the exploration phase to further enhance the exploration ability. Next, we integrate SMA into SSA, change the position update method of leader salps, and further improve the randomness of the algorithm through Levy flight. For followers, we propose a mutation opposition-based learning to enhance its population diversity and increase the ability of the algorithm to jump out of the local optima. The mathematical formula of leader salps is as follows:

| (14) |

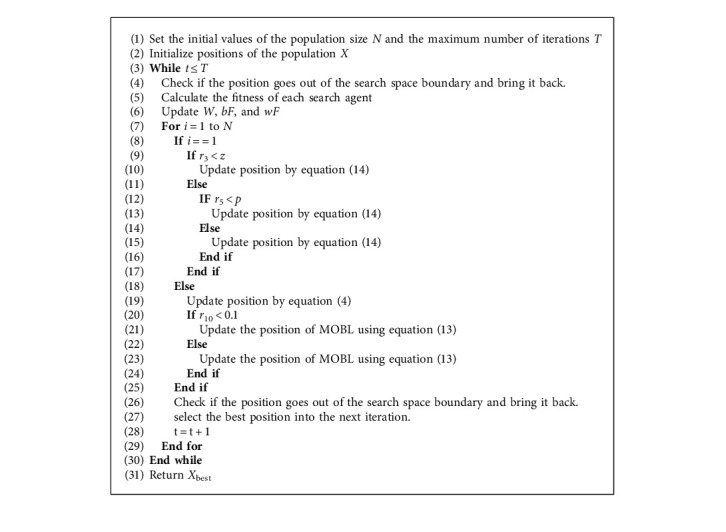

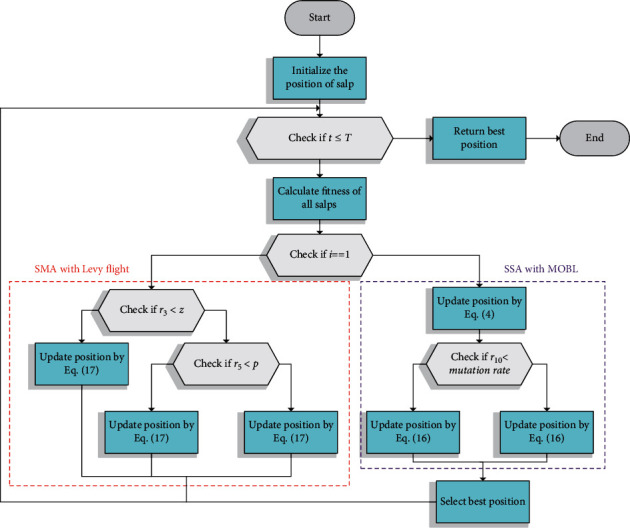

The pseudocode of HSMSSA is given in Algorithm 3, and the summarized flowchart is displayed in Figure 5. As shown in Algorithm 3, the position of the population is initially generated randomly. Then, each individual's fitness will be calculated. For the entire population in each iteration, parameter W is calculated using equation (7). The search agents of population size N are assigned to the two algorithms, which can utilize the advantages of SSA and SMA, and realize the sharing of helpful information to achieve global optimization. If the search agent belongs to the first half of the population, the position will be updated using equation (14) in SMA with Levy flight. Otherwise, the position is determined using equation (4) and MOBL. Finally, if the termination criteria are satisfied, the algorithm returns the best solution found so far; else the previous steps are repeated.

Algorithm 3.

Pseudocode of HSMSSA.

Figure 5.

Flowchart of HSMSSA.

3.2. Computational Complexity Analysis

HSMSSA mainly consists of three components: initialization, fitness evaluation, and position updating. In the initialization phase, the computational complexity of positions generated is O(N × D), where D is the dimension size of the problem. Then, the computational complexity of fitness evaluation for the solution is O(N) during the iteration process. Finally, we utilize mutation opposition-based learning to keep the algorithm from falling into local optima; thus, the computational complexities of position updating of HSMSSA are O(2 × N × D). Therefore, the total computational complexity of the proposed HSMSSA algorithm is O(N × D + N + 2 × N × D).

4. Experimental Results and Discussion

This section compared the HSMSSA with some state-of-the-art metaheuristics algorithms on 23 benchmark functions to validate its performance. Moreover, five engineering design problems are employed as examples for real-world applications. The experimentations ran on Windows 10 with 24 GB RAM and Intel (R) i5-9500. All simulations were carried out using MATLAB R2020b.

4.1. Definition of 23 Benchmark Functions

To assess HSMSSA's ability of exploration, exploitation, and escaping from local optima, 23 benchmark functions, including unimodal and multimodal functions, are tested [27]. The unimodal benchmark functions (F1–F7) are utilized to examine the exploitation ability of HSMSSA. The description of the unimodal benchmark function is shown in Table 1. The multimodal and fixed-dimension multimodal benchmark functions (F8–F23) shown in Tables 2 and 3 are used to test the exploration ability of HSMSSA.

Table 1.

Unimodal benchmark functions.

| Function | Dim | Range | F min |

|---|---|---|---|

| F1(x)=∑i=1nxi2 | 30 | [−100, 100] | 0 |

| F2(x)=∑i=1n|xi|+∏i=1n|xi| | 30 | [−10, 10] | 0 |

| F3(x)=∑i=1n(∑j−1ixj)2 | 30 | [−100, 100] | 0 |

| F4(x)=maxi{|xi|, 1 ≤ i ≤ n} | 30 | [−100, 100] | 0 |

| F5(x)=∑i=1n−1[100(xi+1 − xi2)2+(xi − 1)2] | 30 | [−30, 30] | 0 |

| F6(x)=∑i=1n(xi+5)2 | 30 | [−100, 100] | 0 |

| F7(x)=∑i=1nixi4+random[0,1) | 30 | [−1.28, 1.28] | 0 |

Table 2.

Multimodal benchmark functions.

| Function | Dim | Range | F min |

|---|---|---|---|

| 30 | [−500, 500] | −418.9829 × Dim | |

| F9(x)=∑i=1n[xi2 − 10 cos(2πxi)+10] | 30 | [−5.12, 5.12] | |

| 30 | [−32, 32] | 0 | |

| 30 | [−600, 600] | 0 | |

| 30 | [−50, 50] | 0 | |

| 30 | [−50, 50] | 0 |

Table 3.

Fixed-dimension multimodal benchmark functions.

| Function | Dim | Range | F min |

|---|---|---|---|

| F14(x)=((1/500)+∑j=1251/(j+∑i=12(xi − aij)6))−1 | 2 | [−65, 65] | 0.998 |

| F15(x)=∑i=111[ai − (x1(bi2+bix2)/(bi2+bix3+x4))]2 | 4 | [−5, 5] | 0.00030 |

| F16(x)=4x12 − 2.1x14+(1/3)x16+x1x2 − 4x22+x24 | 2 | [−5, 5] | −1.0316 |

| F17(x)=(x2 − (5.1/4π2)x12+(5/π)x1 − 6)2+10(1 − (1/8π))cos x1+10 | 2 | [−5, 5] | 0.398 |

| 2 | [−2, 2] | 3 | |

| F19(x)=−∑i=14ciexp(−∑j=13aij(xj − pij)2) | 3 | [−1, 2] | −3.86 |

| F20(x)=−∑i=14ciexp(−∑j=16aij(xj − pij)2) | 6 | [0, 1] | −3.32 |

| F21(x)=−∑i=15[(X − ai)(X − ai)T+ci]−1 | 4 | [0, 10] | −10.1532 |

| F22(x)=−∑i=17[(X − ai)(X − ai)T+ci]−1 | 4 | [0, 10] | −10.4028 |

| F23(x)=−∑i=110[(X − ai)(X − ai)T+ci]−1 | 4 | [0, 10] | −10.5363 |

In order to show the experimental results more representative, the HSMSSA is compared with the basic SMA [35] and SSA [27], AO [24], AOA [15], WOA [22], SCA [14], and MVO [10]. For all tests, we set the population size N = 30, dimension size D = 30, and maximum iteration T = 500, respectively, for all algorithms with 30 independent runs. The parameter settings of each algorithm are shown in Table 4. After all, average results and standard deviations are employed to evaluate the results. Note that the best results will be bolded.

Table 4.

Parameter settings for the comparative algorithms.

| Algorithm | Parameters |

|---|---|

| SMA [35] | z = 0.03 |

| SSA [27] | c1 = [1, 0]; c2 ∈ [0, 1]; c3 ∈ [0, 1] |

| AO [24] | U = 0.00565; r1 = 10; ω = 0.005; α = 0.1; δ = 0.1; G1 ∈ [−1, 1]; G2 = [2, 0] |

| AOA [15] | α = 5; μ = 0.5; |

| WOA [22] | a1 = [2, 0]; a2 = [−1, −2]; b = 1 |

| SCA [14] | a = [2, 0] |

| MVO [10] | WEP ∈ [0.2, 1]; TDR ∈ [0, 1]; r1, r2, r3 ∈ [0, 1] |

4.1.1. Evaluation of Exploitation Capability (F1–F7)

As we can see, unimodal benchmark functions have only one global optimum. These functions are allowed to evaluate the exploitation ability of the metaheuristic algorithms. It can be seen from Table 5 that HSMSSA is very competitive with SMA, SSA, and other metaheuristic algorithms. In particular, HSMSSA can achieve much better results than other metaheuristic algorithms except F6. For F1–F4, HSMSSA can find the theoretical optimum. For all unimodal functions except F5, HSMSSA gets the smallest average values and standard deviations compared to other algorithms, which indicate the best accuracy and stability. Hence, the exploitation capability of the proposed HSMSSA algorithm is excellent.

Table 5.

Results of algorithms on 23 benchmark functions.

| Function | HSMSSA | SMA | SSA | AO | AOA | WOA | SCA | MVO | |

|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 0.0000E + 00 | 3.3100E − 303 | 2.1200E − 07 | 3.4900E − 113 | 4.9600E − 06 | 3.0200E − 74 | 2.2039E + 01 | 1.2581E + 00 |

| Std | 0.0000E + 00 | 0.0000E + 00 | 2.3600E − 07 | 1.9100E − 112 | 2.1600E − 06 | 1.6500E − 73 | 3.4334E + 01 | 4.4824E − 01 | |

|

| |||||||||

| F2 | Mean | 0.0000E + 00 | 8.7800E − 161 | 1.9590E + 00 | 5.0900E − 59 | 1.9805E − 03 | 3.1700E − 50 | 2.6402E − 02 | 1.2927E + 00 |

| Std | 0.0000E + 00 | 4.8000E − 160 | 1.4157E + 00 | 2.7900E − 58 | 1.9063E − 03 | 1.5800E − 49 | 2.9329E − 02 | 1.1310E + 00 | |

|

| |||||||||

| F3 | Mean | 0.0000E + 00 | 1.4159e − 313 | 2.1110E + 03 | 3.7000E − 101 | 9.6539E − 04 | 4.3306E + 04 | 8.0776E + 03 | 2.3785E + 02 |

| Std | 0.0000E + 00 | 0.0000E + 00 | 1.5514E + 03 | 2.0200E − 100 | 8.1952E − 04 | 1.6945E + 04 | 4.7486E + 03 | 1.2902E + 02 | |

|

| |||||||||

| F4 | Mean | 0.0000E + 00 | 1.4800E − 150 | 1.1761E + 01 | 1.7300E − 55 | 1.7772E − 02 | 5.2341E + 01 | 3.4937E + 01 | 1.9521E + 00 |

| Std | 0.0000E + 00 | 8.1300E − 150 | 4.0647E + 00 | 9.5000E − 55 | 1.1446E − 02 | 2.8502E + 01 | 1.3547E + 01 | 7.5824E − 01 | |

|

| |||||||||

| F5 | Mean | 8.0753E − 02 | 5.0378E + 00 | 1.0827E + 02 | 5.2141E − 03 | 2.8034E + 01 | 2.8032E + 01 | 7.1843E + 04 | 4.7661E + 02 |

| Std | 2.7660E − 01 | 9.1628E + 00 | 1.4901E + 02 | 7.2117E − 03 | 2.0336E − 01 | 4.1099E − 01 | 1.2854E + 05 | 7.8524E + 02 | |

|

| |||||||||

| F6 | Mean | 8.2900E − 07 | 5.8093E − 03 | 1.3800E − 07 | 1.3859E − 04 | 3.1018E + 00 | 3.6069E − 01 | 1.1633E + 01 | 1.1004E + 00 |

| Std | 4.9600E − 07 | 2.4730E − 03 | 1.5300E − 07 | 2.1774E − 04 | 2.2067E − 01 | 2.2358E − 01 | 9.7843E + 00 | 3.6012E − 01 | |

|

| |||||||||

| F7 | Mean | 6.4400E − 05 | 1.6357E − 04 | 1.6394E − 01 | 7.8800E − 05 | 1.0695E − 04 | 3.0691E − 03 | 1.3682E − 01 | 3.6527E − 02 |

| Std | 6.2600E − 05 | 1.3341E − 04 | 6.1309E − 02 | 6.5100E − 05 | 1.0095E − 04 | 2.8218E − 03 | 2.0698E − 01 | 1.6353E − 02 | |

|

| |||||||||

| F8 | Mean | −1.2569E + 04 | −1.2569E + 04 | −7.1073E + 03 | −9.3742E + 03 | −5.6142E + 03 | −1.0050E + 04 | −3.7684E + 03 | −7.6853E + 03 |

| Std | 2.0511E − 02 | 5.2108E − 01 | 9.0982E + 02 | 3.7928E + 03 | 3.9485E + 02 | 1.6846E + 03 | 2.8948E + 02 | 6.3161E + 02 | |

|

| |||||||||

| F9 | Mean | 0.0000E + 00 | 0.0000E + 00 | 5.3927E + 01 | 0.0000E + 00 | 1.5400E − 06 | 0.0000E + 00 | 3.6516E + 01 | 1.2767E + 02 |

| Std | 0.0000E + 00 | 0.0000E + 00 | 1.6886E + 01 | 0.0000E + 00 | 1.0800E − 06 | 0.0000E + 00 | 3.5214E + 01 | 3.5868E + 01 | |

|

| |||||||||

| F10 | Mean | 8.8800E − 16 | 8.8800E − 16 | 2.5258E + 00 | 2.1600E − 14 | 4.2571E − 04 | 4.3200E − 15 | 1.4566E + 01 | 2.5576E + 00 |

| Std | 0.0000E + 00 | 0.0000E + 00 | 6.8528E − 01 | 1.1400E − 13 | 1.8869E − 04 | 2.7200E − 15 | 8.3427E + 00 | 3.3443E + 00 | |

|

| |||||||||

| F11 | Mean | 0.0000E + 00 | 0.0000E + 00 | 2.0677E − 02 | 0.0000E + 00 | 5.1653E − 04 | 1.9620E − 02 | 9.3797E − 01 | 8.7497E − 01 |

| Std | 0.0000E + 00 | 0.0000E + 00 | 1.4808E − 02 | 0.0000E + 00 | 2.6954E − 03 | 5.4806E − 02 | 3.3652E − 01 | 7.4092E − 02 | |

|

| |||||||||

| F12 | Mean | 2.4700E − 05 | 5.9758E − 03 | 7.1903E + 00 | 3.3600E − 06 | 7.3782E − 01 | 1.7457E − 02 | 1.4353E + 03 | 2.1818E + 00 |

| Std | 5.1900E − 05 | 6.9167E − 03 | 2.7304E + 00 | 3.9400E − 06 | 2.7918E − 02 | 1.2428E − 02 | 7.0295E + 03 | 1.3736E + 00 | |

|

| |||||||||

| F13 | Mean | 4.6300E − 07 | 5.4042E − 03 | 1.2368E + 01 | 1.4900E − 05 | 2.9623E + 00 | 5.1556E − 01 | 1.8722E + 05 | 1.4761E − 01 |

| Std | 1.4800E − 07 | 7.9865E − 03 | 1.2274E + 01 | 2.0600E − 05 | 2.0518E − 02 | 2.3195E − 01 | 4.3886E + 05 | 6.2682E − 02 | |

|

| |||||||||

| F14 | Mean | 9.9800E − 01 | 9.9800E − 01 | 1.2294E + 00 | 2.4408E + 00 | 1.0093E + 01 | 2.9945E + 00 | 1.7920E + 00 | 9.9800E − 01 |

| Std | 5.0400E − 16 | 3.5900E − 13 | 6.7284E − 01 | 2.6435E + 00 | 3.9607E + 00 | 3.2280E + 00 | 9.8831E − 01 | 4.2300E − 11 | |

|

| |||||||||

| F15 | Mean | 3.9234E − 04 | 5.4854E − 04 | 2.8300E − 03 | 4.8202E − 04 | 7.5731E − 03 | 8.4669E − 04 | 1.0574E − 03 | 5.7644E − 03 |

| Std | 1.0289E − 04 | 2.5100E − 04 | 5.9501E − 03 | 1.0743E − 04 | 1.9781E − 02 | 6.5602E − 04 | 3.8453E − 04 | 1.2715E − 02 | |

|

| |||||||||

| F16 | Mean | −1.0316E + 00 | −1.0316E + 00 | −1.0316E + 00 | −1.0311E + 00 | −1.0316E + 00 | −1.0316E + 00 | −1.0316E + 00 | −1.0316E + 00 |

| Std | 1.4300E − 13 | 6.6000E − 10 | 2.3300E − 12 | 4.6089E − 04 | 2.7600E − 11 | 1.0400E − 09 | 4.2000E − 05 | 3.8000E − 07 | |

|

| |||||||||

| F17 | Mean | 3.9789E − 01 | 3.9789E − 01 | 3.9789E − 01 | 3.9808E − 01 | 4.0051E − 01 | 3.9789E − 01 | 3.9921E − 01 | 3.9789E − 01 |

| Std | 2.6700E − 12 | 3.0000E − 08 | 1.3400E − 10 | 2.2131E − 04 | 1.4391E − 02 | 6.9400E − 06 | 1.2200E − 03 | 1.3300E − 07 | |

|

| |||||||||

| F18 | Mean | 3.9000E + 00 | 3.0000E + 00 | 3.0000E + 00 | 3.0365E + 00 | 1.2901E + 01 | 3.0003E + 00 | 3.0001E + 00 | 8.4000E + 00 |

| Std | 4.9295E + 00 | 1.8000E − 10 | 1.5000E − 13 | 3.6597E − 02 | 2.1835E + 01 | 1.5988E − 03 | 9.1200E − 05 | 2.0550E + 01 | |

|

| |||||||||

| F19 | Mean | −3.8628E + 00 | −3.8628E + 00 | −3.8628E + 00 | −3.8560E + 00 | −3.7416E + 00 | −3.8575E + 00 | −3.8512E + 00 | −3.8628E + 00 |

| Std | 4.2800E − 11 | 8.4700E − 07 | 1.4600E − 10 | 7.4968E − 03 | 5.3652E − 01 | 5.7697E − 03 | 8.9408E − 03 | 1.9300E − 06 | |

|

| |||||||||

| F20 | Mean | −3.2823E + 00 | −3.2426E + 00 | −3.2259E + 00 | −3.2075E + 00 | −3.2903E + 00 | −3.1996E + 00 | −2.9186E + 00 | −3.2655E + 00 |

| Std | 5.7048E − 02 | 5.7100E − 02 | 5.9965E − 02 | 7.2462E − 02 | 6.3492E − 02 | 1.2887E − 01 | 3.7689E − 01 | 6.1457E − 02 | |

| F21 | Mean | −1.0153E + 01 | −1.0153E + 01 | −6.3092E + 00 | −1.0142E + 01 | −7.4694E + 00 | −8.1869E + 00 | −2.4287E + 00 | −7.0425E + 00 |

| Std | 1.0300E − 07 | 1.9553E − 04 | 3.5233E + 00 | 2.6961E − 02 | 3.0153E + 00 | 2.6299E + 00 | 2.0334E + 00 | 3.0750E + 00 | |

|

| |||||||||

| F22 | Mean | −1.0403E + 01 | −1.0403E + 01 | −7.8362E + 00 | −1.0392E + 01 | −7.3418E + 00 | −7.6682E + 00 | −3.4206E + 00 | −8.4065E + 00 |

| Std | 1.6600E − 07 | 3.1185E − 04 | 3.4923E + 00 | 1.8669E − 02 | 3.2433E + 00 | 3.4333E + 00 | 1.7953E + 00 | 2.9406E + 00 | |

|

| |||||||||

| F23 | Mean | −1.0536E + 01 | −1.0536E + 01 | −9.4298E + 00 | −1.0525E + 01 | −7.9685E + 00 | −7.2480E + 00 | −3.6694E + 00 | −9.6618E + 00 |

| Std | 9.5800E − 08 | 2.5920E − 04 | 2.5607E + 00 | 1.6743E − 02 | 3.5174E + 00 | 3.2297E + 00 | 1.8159E + 00 | 2.3084E + 00 | |

4.1.2. Evaluation of Exploration Capability (F8–F23)

Unlike unimodal functions, multimodal functions have many local optima. Thus, this kind of test problem turns very useful to evaluate the exploration capability of an optimization algorithm. The results shown in Table 5 for functions F8–F23 indicate that HSMSSA also has an excellent exploration capability. In fact, we can see that HSMSSA can find the theoretical optimum in F9, F11, F16–F17, and F19–F23. These results reveal that HSMSSA can also provide superior exploration capability.

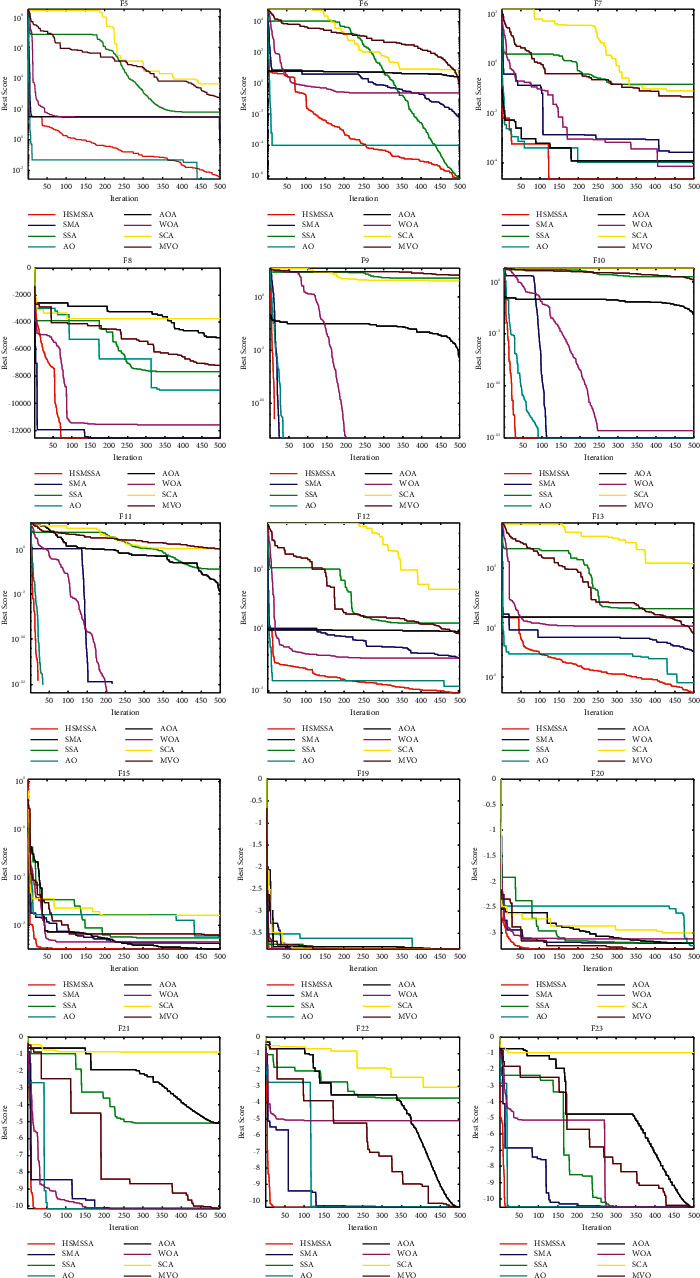

4.1.3. Analysis of Convergence Behavior

The convergence curves of some functions are selected and shown in Figure 6, which show the convergence rate of algorithms. It can be seen that HSMSSA shows competitive performance compared to other state-of-the-art algorithms. The HSMSSA presents a faster convergence speed than all other algorithms in F7–F13, F15, and F19–F23. For other benchmark functions, HSMSSA shows a better capability of local optima avoidance than other comparison algorithms in F5 and F6.

Figure 6.

Convergence curves of 23 benchmark functions.

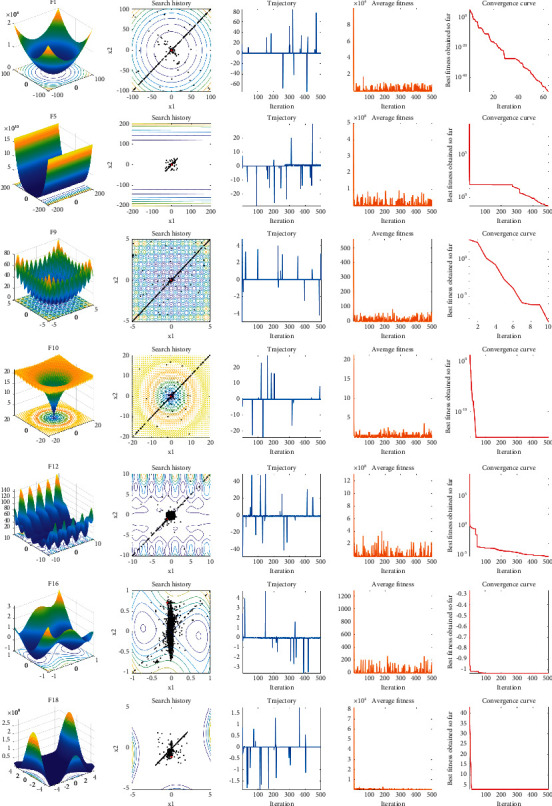

4.1.4. Qualitative Results and Analysis

Furthermore, Figure 7 shows the results of several representative test functions on search history, trajectory, average fitness, and convergence curve. From search history maps, we can see the search agent's distribution of the HSMSSA while exploring and exploiting the search space. Because of the fast convergence, the vast majority of search agents are concentrated near the global optimum. Inspecting trajectory figure in Figure 5, the first search agent constantly oscillates in the first dimension of the search space, which suggests that the search agent investigates the most promising areas and better solutions widely. This powerful search capability is likely to come from the Levy flight and MOBL strategies. The average fitness presents if exploration and exploitation are conducive to improve the first random population, and an accurate approximation of the global optimum can be found in the end.

Figure 7.

Parameter space, search history, trajectory, average fitness, and convergence curves of HSMSSA.

Similarly, it can be noticed that the average fitness oscillates in the early iterations and then decreases abruptly and begins to level off. The average fitness maps also show the significant improvement of the first random population and the final global optimal, accurate approximation acquisition. At last, the convergence curves reveal the best fitness value found by search agents after each iteration. By observing this, the HSMSSA shows breakneck convergence speed.

4.1.5. Wilcoxon Signed-Rank Test

Because the algorithm results are random, we need to carry out statistical tests to prove that the results have statistical significance. We use Wilcoxon signed-ranks (WSR) test results to evaluate the statistical significance of the two algorithms at 5% significance level [53]. The WSR is a statistical test that is applied to two different results for searching the significantly different. As is well-known, a p-value less than 0.05 indicates that it is significantly superior to other algorithms. Otherwise, the obtained results are not statistically significant. The calculated results of the Wilcoxon signed-rank test between HSMSSA and other algorithms for each benchmark function are listed in Table 6. HSMSSA outperforms all other algorithms in varying degrees. This superiority is statistically significant on unimodal functions F2 and F4–F7, which indicates that HSMSSA possesses high exploitation. HSMSSA also shows better results on multimodal function F8–F23, suggesting that HSMSSA has a high capability of exploration. To sum up, HSMSSA can provide better results for almost all benchmark functions than other comparative algorithms.

Table 6.

The results of Wilcoxon's sign rank test for all functions.

| Function | HSMSSA versus | ||||||

|---|---|---|---|---|---|---|---|

| SMA | SSA | AO | AOA | WOA | SCA | MVO | |

| F1 | NaN | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F2 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F3 | NaN | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F4 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F5 | 6.1035E − 05 | 6.1035E − 05 | 4.2725E − 03 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F6 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F7 | 2.1545E − 02 | 6.1035E − 05 | 1.6882E − 01 | 7.1973E − 01 | 1.8311E − 04 | 6.1035E − 05 | 6.1035E − 05 |

| F8 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F9 | NaN | 6.1035E − 05 | NaN | 6.1035E − 05 | NaN | 6.1035E − 05 | 6.1035E − 05 |

| F10 | NaN | 6.1035E − 05 | NaN | 6.1035E − 05 | 1.9531E − 03 | 6.1035E − 05 | 6.1035E − 05 |

| F11 | NaN | 6.1035E − 05 | NaN | 6.1035E − 05 | NaN | 6.1035E − 05 | 6.1035E − 05 |

| F12 | 6.1035E − 05 | 6.1035E − 05 | 3.0518E − 04 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F13 | 6.1035E − 05 | 6.1035E − 05 | 3.0518E − 04 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F14 | 6.1035E − 05 | 5.0000E − 01 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F15 | 1.5259E − 03 | 6.1035E − 05 | 1.2207E − 04 | 4.3721E − 02 | 1.8311E − 04 | 6.1035E − 05 | 1.2207E − 04 |

| F16 | 6.1035E − 05 | 1.3184E − 02 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F17 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 4.2725E − 04 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F18 | 1.7334E − 01 | 6.4697E − 03 | 4.3252E − 02 | 3.5339E − 02 | 4.3252E − 02 | 4.3252E − 02 | 4.3252E − 02 |

| F19 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F20 | 2.5574E − 02 | 1.0254E − 02 | 1.1597E − 03 | 2.2931E − 01 | 4.5359E − 02 | 6.1035E − 05 | 3.5913E − 01 |

| F21 | 6.1035E − 05 | 2.5574E − 02 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F22 | 6.1035E − 05 | 4.2120E − 01 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| F23 | 6.1035E − 05 | 4.2120E − 01 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 | 6.1035E − 05 |

| (W|L|T) | (17|1|5) | (20|3|0) | (19|1|3) | (21|2|0) | (21|0|2) | (23|0|0) | (22|1|0) |

4.2. Experiments on Engineering Design Problems

In this section, HSMSSA is evaluated to solve five classical engineering design problems: pressure vessel design problem, tension spring design problem, three-bar truss design problem, speed reducer problem, and cantilever beam design. To address these problems, we set the population size N = 30 and maximum iteration T = 500. The results of HSMSSA are compared to various state-of-the-art algorithms in the literature. The parameter settings are the same as previous numerical experiments.

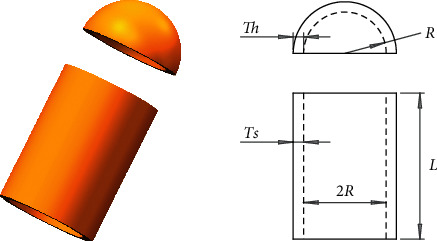

4.2.1. Pressure Vessel Design Problem

The pressure vessel design problem [53] is to minimize the total cost of cylindrical pressure vessel to match pressure requirements and form the pressure vessel shown in Figure 8. Four parameters in this problem need to be minimized, including the thickness of the shell (Ts), the thickness of head (Th), inner radius (R), and the length of the cylindrical section without the head (L), as shown in Figure 8. The constraints and equation are as follows.

Figure 8.

Pressure vessel design problem.

Consider

| (15) |

Minimize

| (16) |

subject to

| (17) |

Variable range is

| (18) |

From the results in Table 7, we can see that HSMSSA can obtain superior optimal values compared with SMA, SSA, AO, AOA, WOA, SCA, and MVO.

Table 7.

Comparison of HSMSSA results with other competitors for the pressure vessel design problem.

| Algorithm | Optimum variables | Optimum cost | |||

|---|---|---|---|---|---|

| T s | T h | R | L | ||

| HSMSSA | 0.8533992 | 0.4183956 | 45.8059 | 135.5385 | 5961.5318 |

| SMA | 0.8744808 | 0.4273835 | 46.83887 | 125.5848 | 6008.4161 |

| SSA | 0.8934774 | 0.4387327 | 47.53594 | 119.2156 | 6047.6543 |

| AO | 0.8828092 | 0.4312524 | 47.26462 | 121.6449 | 6028.1585 |

| AOA | 0.807105 | 0.4426515 | 44.63354 | 147.5659 | 6052.8917 |

| WOA | 0.8310091 | 0.3646671 | 44.00895 | 154.3182 | 6047.0417 |

| SCA | 0.8820038 | 0.4335084 | 47.24144 | 125.7922 | 6139.5293 |

| MVO | 0.8380677 | 0.4992101 | 45.82367 | 135.3623 | 6253.5397 |

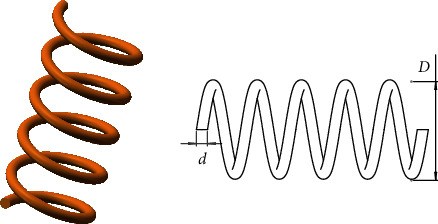

4.2.2. Tension Spring Design Problem

This problem [27] tries to minimize the weight of the tension spring, and there are three parameters that need to be minimized, including the wire diameter (d), mean coil diameter (D), and the number of active coils (N). Figure 9 shows the structure of the tension spring. The mathematical of this problem can be written as follows.

Figure 9.

Tension spring design problem.

Consider

| (19) |

Minimize

| (20) |

subject to

| (21) |

Variable range is

| (22) |

Results of HSMSSA for solving tension spring design problem are listed in Table 8, which are compared with SMA, SSA, AO, AOA, WOA, SCA, and MVO. It is evident that HSMSSA obtained the best results compared to all other algorithms.

Table 8.

Comparison of HSMSSA results with other competitors for the tension spring design problem.

| Algorithm | Optimum variables | Optimum weight | ||

|---|---|---|---|---|

| d | D | N | ||

| HSMSSA | 0.05 | 0.348099 | 10.6486 | 0.011007 |

| SMA | 0.057372 | 0.57232 | 4.1494 | 0.011584 |

| SSA | 0.05936 | 0.63542 | 3.4741 | 0.012256 |

| AO | 0.056912 | 0.55833 | 4.3271 | 0.011442 |

| AOA | 0.052579 | 0.41008 | 8.9843 | 0.012453 |

| WOA | 0.055292 | 0.47277 | 6.929 | 0.012906 |

| SCA | 0.0588 | 0.6172 | 3.65 | 0.012057 |

| MVO | 0.05251 | 0.37602 | 10.33513 | 0.012790 |

4.2.3. Three-Bar Truss Design Problem

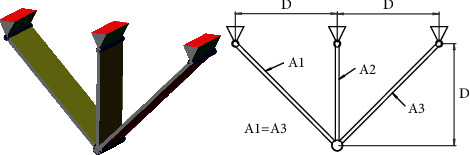

Three-bar truss design is a complex problem in the field of civil engineering [49]. The goal of this problem is to achieve the minimum weight in truss design. Figure 10 shows the design of this problem. The formula of this problem can be described as follows.

Figure 10.

Three-bar truss design problem.

Consider

| (23) |

Minimize

| (24) |

subject to

| (25) |

Variable range is

| (26) |

where l=100 cm, P=2 KN/cm2, and σ=2 KN/cm2.

Results of HSMSSA for solving the three-bar design problem are listed in Table 9, which are compared with SMA, SSA, AO, AOA, WOA, SCA, and MVO. It can be observed that HSMSSA has an excellent ability to solve the problem in confined space.

Table 9.

Comparison of HSMSSA results with other competitors for the three-bar truss design problem.

| Algorithm | Optimum variables | Optimum cost | |

|---|---|---|---|

| x 1 | x 2 | ||

| HSMSSA | 0.78842 | 0.40811 | 263.8523 |

| SMA | 0.79109 | 0.40022 | 263.8668 |

| SSA | 0.77823 | 0.43736 | 263.9363 |

| AO | 0.77899 | 0.43541 | 263.9197 |

| AOA | 0.76342 | 0.48382 | 264.3549 |

| WOA | 0.78357 | 0.42252 | 263.886 |

| SCA | 0.78058 | 0.43766 | 264.5463 |

| MVO | 0.75492 | 0.51216 | 264.7851 |

4.2.4. Speed Reducer Problem

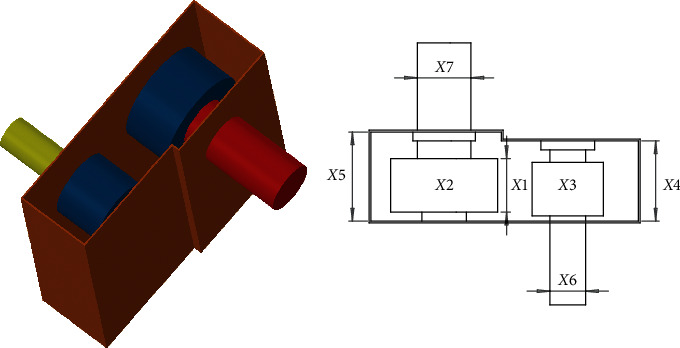

In this problem [15], the total weight of the reducer is minimized by optimizing seven variables. Figure 11 shows the design of this problem, and the mathematical formula is as follows.

Figure 11.

Speed reducer problem.

Minimize

| (27) |

subject to

| (28) |

Variable range is

| (29) |

The comparison results are listed in Table 10, which shows the advantage of HSMSSA in realizing the minimum total weight of the problem.

Table 10.

Comparison of HSMSSA results with other competitors for the speed reducer design problem.

| Algorithm | Optimum variables | Optimum weight | ||||||

|---|---|---|---|---|---|---|---|---|

| x 1 | x 2 | x 3 | x 4 | x 5 | x 6 | x 7 | ||

| HSMSSA | 3.4976 | 0.7 | 17 | 7.3 | 7.8 | 3.35006 | 5.28553 | 2995.4374 |

| SMA | 3.51443 | 0.7 | 17 | 7.32444 | 7.80527 | 3.35235 | 5.28494 | 3002.5941 |

| SSA | 3.49767 | 0.7 | 17 | 7.87797 | 8.09401 | 3.3943 | 5.28574 | 3018.644 |

| AO | 3.5138 | 0.7 | 17 | 7.41461 | 7.81291 | 3.37709 | 5.28457 | 3009.9097 |

| AOA | 3.55989 | 0.7 | 17 | 7.49997 | 8.3 | 3.4623 | 5.28512 | 3061.8731 |

| WOA | 3.49739 | 0.7 | 17 | 7.87039 | 8.0769 | 3.45309 | 5.2854 | 3034.0596 |

| SCA | 3.6 | 0.7 | 17 | 7.3 | 8.3 | 3.37932 | 5.2799 | 3063.9102 |

| MVO | 3.6 | 0.7 | 17 | 8.3 | 8.3 | 3.38276 | 5.36041 | 3111.6609 |

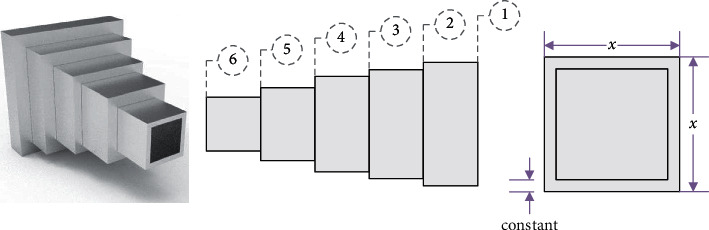

4.2.5. Cantilever Beam Design

Cantilever beam design is a type of concrete engineering problem. This problem aims to determine the minimal total weight of the cantilever beam by optimizing the hollow square cross-section parameters [24]. Figure 12 illustrates the design of this problem, and the mathematical described is as follows.

Figure 12.

Cantilever beam design [24].

Consider

| (30) |

Minimize

| (31) |

subject to

| (32) |

Variable range is as follows: 0.01 ≤ x1, x2, x3, x4, x5 ≤ 100.

The results are shown in Table 11. From this table, we can see that the performance of HSMSSA is better than all other algorithms and the obtained total weight is minimized.

Table 11.

Comparison of HSMSSA results with other competitors for the cantilever beam design problem.

| Algorithm | Optimum variables | Optimum weight | ||||

|---|---|---|---|---|---|---|

| x 1 | x 2 | x 3 | x 4 | x 5 | ||

| HSMSSA | 5.9979 | 5.3289 | 4.4999 | 3.4817 | 2.166 | 1.3400 |

| SMA | 6.0846 | 5.2309 | 4.5602 | 3.4725 | 2.1349 | 1.3405 |

| SSA | 6.1056 | 5.1001 | 4.303 | 3.7365 | 2.3183 | 1.3456 |

| AO | 5.8236 | 5.3813 | 4.5178 | 3.4768 | 2.3159 | 1.3426 |

| AOA | 5.7014 | 5.3722 | 4.6577 | 3.6643 | 2.1551 | 1.3448 |

| WOA | 5.8492 | 5.0587 | 4.8917 | 3.682 | 2.1564 | 1.3469 |

| SCA | 5.9165 | 5.9187 | 4.5133 | 3.3167 | 1.9823 | 1.3508 |

| MVO | 7.1088 | 5.0161 | 4.2203 | 3.4064 | 2.105 | 1.3647 |

As a summary, this section demonstrates the superiority of the proposed HSMSSA algorithm in different characteristics and real case studies. HSMSSA is able to outperform the basic SMA and SSA and other well-known algorithms with very competitive results, which are derived from the robust exploration and exploitation capabilities of HSMSSA. Excellent performance in solving industrial engineering design problems indicates that HSMSSA can be widely used in real-world optimization problems.

5. Conclusion

In this paper, a Hybrid Slime Mould Salp Swarm Algorithm (HSMSSA) is proposed by combining the whole SMA as leaders and the exploitation phase of SSA as followers. At the same time, two strategies, including Levy flight and mutation opposition-based learning, are incorporated to enhance the capabilities of exploration and exploitation of HSMSSA. The 23 standard benchmark functions are utilized to evaluate this algorithm for analyzing its exploration, exploitation, and local optima avoidance capabilities. The experimental results show competitive advantages compared to other state-of-the-art metaheuristic algorithms, proving that HSMSSA has better performance than others. Five engineering design problems are solved as well to verify the superiority of the algorithm further, and the results are also very competitive with other metaheuristic algorithms.

The proposed HSMSSA can produce very effective results for complex benchmark functions and constrained engineering problems. In the future, HSMSSA can be applied to real-world optimization problems such as multiobjective problems, feature selection, multithresholding image segmentation, convolution neural network, or any problem that belongs to NP-complete or NP-hard problems.

Acknowledgments

This research was funded by the Sanming University Introduces High-Level Talents to Start Scientific Research Funding Support Project (20YG01 and 20YG14), the Guiding Science and Technology Projects in Sanming City (2020-S-39, 2020-G-61, and 2021-S-8), the Educational Research Projects of Young and Middle-Aged Teachers in Fujian Province (JAT200638 and JAT200618), the Scientific Research and Development Fund of Sanming University (B202029 and B202009), the Open Research Fund of Key Laboratory of Agricultural Internet of Things in Fujian Province (ZD2101), the Ministry of Education Cooperative Education Project (202002064014), the School Level Education and Teaching Reform Project of Sanming University (J2010306 and J2010305), and the Higher Education Research Project of Sanming University (SHE2102 and SHE2013).

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

All authors declare that there are no conflicts of interest.

References

- 1.Jia H., Lang C., Oliva D., Song W., Peng X. Dynamic harris hawks optimization with mutation mechanism for satellite image segmentation. Remote Sensing. 2019;11(12):1421–1456. doi: 10.3390/rs11121421. [DOI] [Google Scholar]

- 2.Abualigah L., Diabat A. Advances in sine cosine algorithm: a comprehensive survey. Artificial Intelligence Review. 2021;54(4):2567–2608. doi: 10.1007/s10462-020-09909-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Abualigah L., Diabat A. A comprehensive survey of the Grasshopper optimization algorithm: results, variants, and applications. Neural Computing & Applications. 2020;32(19):15533–15556. doi: 10.1007/s00521-020-04789-8. [DOI] [Google Scholar]

- 4.Grefenstette J. J. Genetic algorithms and machine learning. Machine Learning. 1988;3(2-3):95–99. doi: 10.1007/bf00113892. [DOI] [Google Scholar]

- 5.Storn R., Price K. Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. Journal of Global Optimization. 1997;11(4):341–359. doi: 10.1023/A:1008202821328. [DOI] [Google Scholar]

- 6.Simon D. Biogeography-based optimization. IEEE Transactions on Evolutionary Computation. 2008;12(6):702–713. doi: 10.1109/TEVC.2008.919004. [DOI] [Google Scholar]

- 7.Kirkpatrick S., Gelatt C. D., Vecchi M. P. Optimization by simulated annealing. Science. 1983;220(4598):671–680. doi: 10.1126/science.220.4598.671. [DOI] [PubMed] [Google Scholar]

- 8.Rashedi E., Nezamabadi-pour H., Saryazdi S. GSA: a gravitational search algorithm. Information Sciences. 2009;179(13):2232–2248. doi: 10.1016/j.ins.2009.03.004. [DOI] [Google Scholar]

- 9.Hatamlou A. Black hole: a new heuristic optimization approach for data clustering. Information Sciences. 2013;222:175–184. doi: 10.1016/j.ins.2012.08.023. [DOI] [Google Scholar]

- 10.Mirjalili S., Mirjalili S. M., Hatamlou A. Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Computing & Applications. 2015;27(2):495–513. doi: 10.1007/s00521-015-1870-7. [DOI] [Google Scholar]

- 11.Alatas B. ACROA: artificial chemical reaction optimization algorithm for global optimization. Expert Systems with Applications. 2011;38(10):13170–13180. doi: 10.1016/j.eswa.2011.04.126. [DOI] [Google Scholar]

- 12.Kaveh A., Khayatazad M. A new meta-heuristic method: Ray Optimization. Computers & Structures. 2012;112-113:283–294. doi: 10.1016/J.COMPSTRUC.2012.09.003. [DOI] [Google Scholar]

- 13.Moghaddam F. F., Moghaddam R. F., Cheriet M. Curved Space Optimization: a random search based on general relativity theory. 2012. pp. 1–16. https://arxiv.org/abs/1208.2214.

- 14.Mirjalili S. SCA: a sine cosine algorithm for solving optimization problems. Knowledge-Based Systems. 2016;96:120–133. doi: 10.1016/j.knosys.2015.12.022. [DOI] [Google Scholar]

- 15.Abualigah L., Diabat A., Mirjalili S., Abd Elaziz M., Gandomi A. H. The arithmetic optimization algorithm. Computer Methods in Applied Mechanics and Engineering. 2021;376:113609–113647. doi: 10.1016/j.cma.2020.113609. [DOI] [Google Scholar]

- 16.Majidnezhad V., Derakhshi M. R. F., Parsai S. Heat transfer relation-based optimization algorithm (HTOA) Soft Computing. 2021;25:8129–8158. [Google Scholar]

- 17.Eberhart R., Kennedy J. A new optimizer using particle swarm theory. Proceedings of the sixth international symposium on micro machine and human science; Octomber 1995; Nagoya, Japan. pp. 1942–1948. [DOI] [Google Scholar]

- 18.Dorigo M., Birattari M., Stutzle T. Ant colony optimization. IEEE Computational Intelligence Magazine. 2006;1(4):28–39. [Google Scholar]

- 19.Yang X. S. Firefly algorithm, stochastic test functions and design optimization. International Journal of Bio-Inspired Computation. 2013;2:78–84. doi: 10.1504/IJBIC.2010.032124. [DOI] [Google Scholar]

- 20.Mirjalili S., Mirjalili S. M., Lewis A. Grey wolf optimizer. Advances in Engineering Software. 2014;69:46–61. doi: 10.1016/j.advengsoft.2013.12.007. [DOI] [Google Scholar]

- 21.Yang X.-S., Suash Deb S. Cuckoo search via lévy flights. Proceedings of the World Congress on Nature & Biologically Inspired Computing; December 2009; Coimbatore, India. pp. 210–214. [DOI] [Google Scholar]

- 22.Mirjalili S., Lewis A. The whale optimization algorithm. Advances in Engineering Software. 2016;95:51–67. doi: 10.1016/j.advengsoft.2016.01.008. [DOI] [Google Scholar]

- 23.Alsattar H. A., Zaidan A. A., Zaidan B. B. Novel meta-heuristic bald eagle search optimisation algorithm. Artificial Intelligence Review. 2019;53(3):2237–2264. doi: 10.1007/s10462-019-09732-5. [DOI] [Google Scholar]

- 24.Abualigah L., Yousri D., Abd Elaziz M., Ewees A. A., Al-qaness M. A. A., Gandomi A. H. Aquila optimizer: a novel meta-heuristic optimization algorithm. Computers & Industrial Engineering. 2021;157 doi: 10.1016/j.cie.2021.107250.107250 [DOI] [Google Scholar]

- 25.Bonyadi M. R., Michalewicz Z. Particle swarm optimization for single objective continuous space problems: a review. Evolutionary Computation. 2017;25(1):1–54. doi: 10.1162/EVCO_r_00180. [DOI] [PubMed] [Google Scholar]

- 26.Cacciola M., Calcagno S., Morabito F. C., Versaci M. Swarm optimization for imaging of corrosion by impedance measurements in eddy current test. IEEE Transactions on Magnetics. 2007;43(4):1853–1856. doi: 10.1109/TMAG.2007.892513. [DOI] [Google Scholar]

- 27.Mirjalili S., Gandomi A. H., Mirjalili S. Z., Saremi S. Faris H., Mirjalili S. M. Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Advances in Engineering Software. 2017;114:163–191. doi: 10.1016/j.advengsoft.2017.07.002. [DOI] [Google Scholar]

- 28.Tubishat M., Ja’afar S., Alswaitti M., et al. Dynamic Salp Swarm algorithm for feature selection. Expert Systems with Applications. 2021;164 doi: 10.1016/j.eswa.2020.113873.113873 [DOI] [Google Scholar]

- 29.Salgotra R., Singh U., Singh S., Singh G., Mittal N. Self-adaptive salp swarm algorithm for engineering optimization problems. Applied Mathematical Modelling. 2021;89:188–207. doi: 10.1016/j.apm.2020.08.014. [DOI] [Google Scholar]

- 30.Neggaz N., Ewees A. A., Elaziz M. A., Mafarja M. Boosting salp swarm algorithm by sine cosine algorithm and disrupt operator for feature selection. Expert Systems with Applications. 2020;145 doi: 10.1016/j.eswa.2019.113103.113103 [DOI] [Google Scholar]

- 31.Jia H., Lang C. Salp swarm algorithm with crossover scheme and Lévy flight for global optimization. Journal of Intelligent and Fuzzy Systems. 2021;40(5):9277–9288. doi: 10.3233/JIFS-201737. [DOI] [Google Scholar]

- 32.Saafan M. M., El-gendy E. M. IWOSSA: an improved whale optimization salp swarm algorithm for solving optimization problems. Expert Systems with Applications. 2021;176(2) doi: 10.1016/j.eswa.2021.114901.114901 [DOI] [Google Scholar]

- 33.Singh N., Singh S. B., Houssein E. H. Hybridizing salp swarm algorithm with particle swarm optimization algorithm for recent optimization functions. Evolutionary Intelligence. 2020;1(1):1–34. doi: 10.1007/s12065-020-00486-6. [DOI] [Google Scholar]

- 34.Abadi M. Q. H., Rahmati S., Sharifi A., Ahmadi M. HSSAGA: designation and scheduling of nurses for taking care of COVID-19 patients using novel method of hybrid salp swarm algorithm and genetic algorithm. Applied Soft Computing. 2021;108 doi: 10.1016/j.asoc.2021.107449.107449 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Li S., Chen H., Wang M., Heidari A. A., Mirjalili S. Slime Mould algorithm: a new method for stochastic optimization. Future Generation Computer Systems. 2020;111(3):300–323. doi: 10.1016/j.future.2020.03.055. [DOI] [Google Scholar]

- 36.Abdel-Basset M., Mohamed R., Chakrabortty R. K., Ryan M. J., Mirjalili S. An efficient binary slime mould algorithm integrated with a novel attacking-feeding strategy for feature selection. Computers & Industrial Engineering. 2021;153 doi: 10.1016/j.cie.2020.107078.107078 [DOI] [Google Scholar]

- 37.Precup R.-E., David R.-C., Roman R.-C., Petriu E. M., Szedlak-Stinean A.-I. Slime mould algorithm-based tuning of cost-effective fuzzy controllers for servo systems. International Journal of Computational Intelligence Systems. 2021;14(1):1042–1052. doi: 10.2991/ijcis.d.210309.001. [DOI] [Google Scholar]

- 38.Zhao S., Wang P., Heidari A. A., et al. Multilevel threshold image segmentation with diffusion association Slime Mould algorithm and Renyi’s entropy for chronic obstructive pulmonary disease. Computers in Biology and Medicine. 2021;134 doi: 10.1016/j.compbiomed.2021.104427.104427 [DOI] [PubMed] [Google Scholar]

- 39.Izci D., Ekinci S., Ekinci S. Comparative performance analysis of slime mould algorithm for efficient design of proportional-integral-derivative controller. Electrica. 2021;21(1):151–159. doi: 10.5152/electrica.2021.20077. [DOI] [Google Scholar]

- 40.Othman A. M., El-fergany A. A. Optimal dynamic operation and modeling of parallel connected multi-stacks fuel cells with improved slime mould algorithm. Renewable Energy. 2021;175:770–782. doi: 10.1016/j.renene.2021.04.148. [DOI] [Google Scholar]

- 41.Jensi R., Jiji G. W. An enhanced particle swarm optimization with levy flight for global optimization. Applied Soft Computing. 2016;43:248–261. doi: 10.1016/j.asoc.2016.02.018. [DOI] [Google Scholar]

- 42.Iacca G., Santos V. C., Jr., Veloso de Melo V., Melo V. V. An improved Jaya optimization algorithm with Lévy flight. Expert Systems with Applications. 2021;165 doi: 10.1016/j.eswa.2020.113902.113902 [DOI] [Google Scholar]

- 43.Liu Y., Cao B. A novel ant colony optimization algorithm with Levy flight. IEEE Access. 2020;8:67205–67213. doi: 10.1109/ACCESS.2020.2985498. [DOI] [Google Scholar]

- 44.Lu X.-l., He G. QPSO algorithm based on lévy flight and its application in fuzzy portfolio. Applied Soft Computing. 2021;99(1) doi: 10.1016/j.asoc.2020.106894.106894 [DOI] [Google Scholar]

- 45.Tizhoosh H. R. Opposition-based learning: a new scheme for machine intelligence. Proceedings of the Computational Intelligence for Modelling, Control & Automation; November 2005; Vienna, Austria. pp. 695–701. [DOI] [Google Scholar]

- 46.Wang H., Wu Z., Rahnamayan S., Liu Y., Ventresca M. Enhancing particle swarm optimization using generalized opposition-based learning. Information Sciences. 2011;181(20):4699–4714. doi: 10.1016/j.ins.2011.03.016. [DOI] [Google Scholar]

- 47.Ewees A. A., Abd Elaziz M., Houssein E. H. Improved grasshopper optimization algorithm using opposition-based learning. Expert Systems with Applications. 2018;112(12):156–172. doi: 10.1016/j.eswa.2018.06.023. [DOI] [Google Scholar]

- 48.Gupta S., Deep K. A hybrid self-adaptive sine cosine algorithm with opposition based learning. Expert Systems with Applications. 2019;119(4):210–230. doi: 10.1016/j.eswa.2018.10.050. [DOI] [Google Scholar]

- 49.Gupta S., Deep K., Heidari A. A., Moayedi H., Wang M. Opposition-based learning harris hawks optimization with advanced transition rules: principles and analysis. Expert Systems with Applications. 2020;158 doi: 10.1016/j.eswa.2020.113510.113510 [DOI] [Google Scholar]

- 50.Guo Z., Wang S., Yue X., Yang H. Global harmony search with generalized opposition-based learning. Soft Computing. 2017;21(8):2129–2137. doi: 10.1007/s00500-015-1912-1. [DOI] [Google Scholar]

- 51.Sharma T. K., Pant M. Opposition based learning ingrained shuffled frog-leaping algorithm. Journal of Computational Science. 2017;21:307–315. doi: 10.1016/j.jocs.2017.02.008. [DOI] [Google Scholar]

- 52.Fan Q., Chen Z., Xia Z. A novel quasi-reflected harris hawks optimization algorithm for global optimization problems. Soft Computing. 2020;24(19):14825–14843. doi: 10.1007/s00500-020-04834-7. [DOI] [Google Scholar]

- 53.Jia H., Peng X., Lang C. Remora optimization algorithm. Expert Systems with Applications. 2021;185 doi: 10.1016/j.eswa.2021.115665.115665 [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.