Abstract

Research examining the continued influence effect (CIE) of misinformation has reliably found that belief in misinformation persists even after the misinformation has been retracted. However, much remains to be learned about the psychological mechanisms responsible for this phenomenon. Most theorizing in this domain has focused on cognitive mechanisms. Yet some proposed cognitive explanations provide reason to believe that motivational mechanisms might also play a role. The present research tested the prediction that retractions of misinformation produce feelings of psychological discomfort that motivate one to disregard the retraction to reduce this discomfort. Studies 1 and 2 found that retractions of misinformation elicit psychological discomfort, and this discomfort predicts continued belief in and use of misinformation. Study 3 showed that the relations between discomfort and continued belief in and use of misinformation are causal in nature by manipulating how participants appraised the meaning of discomfort. These findings suggest that discomfort could play a key mechanistic role in the CIE, and that changing how people interpret this discomfort can make retractions more effective at reducing continued belief in misinformation.

Keywords: Continued influence effect of misinformation, Discomfort, Mental models

In 1998, a fraudulent study published in the Lancet claimed that the measles, mumps, and rubella vaccine made children more susceptible to developing autism (Rao & Andrade, 2011). This misinformation provided a simple, causal explanation for why children develop autism that likely accounts, in part, for why as many as 33 million Americans believe vaccines are not safe (Reinhart, 2020). Efforts to refute this misinformation and the false causal explanation it offers were undertaken by governmental, scientific, and media sources, but widespread belief in the misinformation remains (Kata, 2010). Increases in this belief have coincided with increases in outbreaks of vaccine-targeted diseases, such as measles (Hall et al., 2017), and more recent antivaccination misinformation threatens to reduce COVID-19 vaccine uptake (Cornwall, 2020).

Research examining the continued influence effect (CIE; Johnson & Seifert, 1994) of misinformation suggests that this is not an isolated problem. Indeed, people continue to be influenced by misinformation even after learning that it is false across a variety of domains (for reviews, see Lewandowsky et al., 2012; Seifert, 2002; Swire & Ecker, 2018). To date, efforts to explain the CIE have largely been cognitive in nature. For instance, some argue that the CIE results from memory processes, whereby people fail to retrieve the retraction from memory when the associated misinformation is activated (Lewandowsky et al., 2012; Swire & Ecker, 2018). Other explanations focus on perceptual fluency, positing that misinformation is generally more familiar than the retraction. This might lead to a greater metacognitive experience of fluency when the misinformation is later encountered, leading people to subsequently rely more on the misinformation than the retraction (Schwarz et al., 2007; Skurnik et al., 2005).

An additional model of particular interest for the present research is the Mental Models (MM) account (Johnson & Seifert, 1994; Wilkes & Leatherbarrow, 1988). This account posits that people create mental models of events that establish how antecedents causally led to final outcomes (e.g., Event A led to Event B, which led to Event C). Thus, a mental model is a mental representation of an event that contains information about the causal sequence of events that led to the final outcome. If a piece of misinformation is centrally located in a mental event model, a retraction of this misinformation creates a causal gap in the model, leaving it unclear how initial causes led to final outcomes (Hamby et al., 2020). According to this account, people then reject correcting information and maintain belief in the misinformation to reestablish causal completeness of the mental model. It is important to note that the reason for this final step is currently unclear. Namely, it is not clear exactly why people reject corrections and continue believing misinformation instead of adopting an incomplete mental model.

A possible cognitive reason stems from the Knowledge Revision Components (KReC; Kendeou & O’Brien, 2014) framework. Because misinformation that confers causality about an event is likely embedded within a rich network of causally related information stored in memory, misinformation might enjoy greater activation when the event is recalled than a retraction that does not confer causality. This relative lack of activation might result in the retraction being disregarded (Kendeou et al., 2014). Alternatively, it has been speculated that having a causally incomplete mental model could be uncomfortable (Lewandowsky et al., 2012), and this discomfort might motivate one to act to reduce it. In many cases, the simplest way to do this could be to disregard the retraction and continue believing that the misinformation is valid. Importantly for the present research, this possible motivational aspect of the MM account has yet to be examined empirically in the CIE literature. Though currently untested, this type of motivational proposition would fit with several theoretical approaches that link causal uncertainty, conflicting cognitions, uncertainty more generally, or threats to meaning maintenance with psychological discomfort. Moreover, these approaches also suggest that such discomfort motivates people to take steps to reduce that discomfort (e.g., Brashers, 2001; Festinger, 1957; Heine et al., 2006; Weary & Edwards, 1996).

Documenting this motivational mechanism is important for several reasons. In addition to providing clarity for how causal incompleteness of a mental model leads one to reject retracting information, it would also suggest a novel means to make retractions more effective. If retractions are rejected because they make people feel uncomfortable, changing how people respond to that discomfort could make them more accepting of retracting information. The present research seeks to both demonstrate the role discomfort plays in the CIE and to show how changing people’s responses to discomfort can make them more accepting of retracting information.

Differentiating belief in misinformation from use of misinformation to make inferences

In addition to the previously discussed theoretical contributions, this research adds granularity to the measurement of psychological mechanisms that might play a role in the CIE. To date, CIE research has largely been divided on the main outcome of interest. Much research has focused on how retractions impact the extent to which participants continue to make inferences about the focal event based on the misinformation (e.g., Ecker & Ang, 2019; Ecker & Antonio, 2021; Ecker, Lewandowsky & Apai, 2011; Ecker et al., 2015; Ecker et al., 2014; Ecker, Lewandowsky, et al., 2011; Ecker et al., 2010; Johnson & Seifert, 1994; Rich & Zaragoza, 2016; Wilkes & Leatherbarrow, 1988). Other research has focused on how retractions impact continued belief that the misinformation is true (e.g., Nyhan & Reifler, 2010; Nyhan et al., 2014; Nyhan et al., 2013; Schwarz et al., 2007; Skurnik et al., 2005; Swire et al., 2017), though some of this research has measured both outcomes.

Although these outcomes might appear similar, they are likely not redundant. For instance, it might be possible for one to believe misinformation less after seeing a retraction, but still make inferences based on the misinformation due to a lack of other knowledge on which to base those inferences. Likewise, one’s belief in misinformation might not be altered by a retraction, but one might make few misinformation-based inferences because one possesses other knowledge one judges as more relevant to those inferences. Furthermore, these variables might not simply be alternative outcomes, but rather belief in misinformation could predict one’s likelihood of making inferences based on that misinformation (Ecker & Antonio, 2021). Therefore, it seems worthwhile to measure both belief in misinformation and the use of the misinformation when making inferences in the CIE context and to test whether belief in misinformation might mediate the impact of other variables on the use of the misinformation to form inferences.

Present research

The present research had three aims: First, Studies 1 and 2 examined the prediction stemming from the MM account of the CIE that causal gaps in one’s mental event model elicit discomfort, and this discomfort then motivates one to disregard the retraction and maintain one’s belief in the misinformation (cf. Johnson & Seifert, 1994; Lewandowsky et al., 2012; Wilkes & Leatherbarrow, 1988). Second, Study 3 tested whether discomfort in response to a retraction causes continued belief in misinformation and whether changing how people respond to discomfort increases the effectiveness of retractions. Third, in all studies we tested whether belief in misinformation might mediate the effects of other variables on the use of misinformation to form inferences.

Study 1

Study 1 used a procedure adapted from one used by Wilkes and Leatherbarrow (1988). Some participants read a report in which a piece of initially presented misinformation about the cause of a fire (combustible materials that had been carelessly stored in a side room) was later retracted, whereas others read a report in which the same piece of misinformation was later confirmed to be true. We chose to use this misinformation confirmation condition as the comparison condition for two reasons. First, we wanted to ask participants in both conditions about how uncomfortable they felt in response to something related to the misinformation that occurred at the same point in both reports. Additionally, we wanted to hold the number of references to the misinformation constant across conditions because past research has suggested that fluency with the misinformation might be involved in the occurrence of the CIE (Schwarz et al., 2007; Skurnik et al., 2005; but see also Ecker et al., 2020). Note that for clarity and consistency across conditions we refer to the information about storage of combustible materials in a side room as misinformation even though participants in the confirmation condition were not told that the misinformation was false.

We predicted that those who saw a retraction of the misinformation would report greater discomfort than those who saw a confirmation. Additionally, we predicted that this discomfort would impact misinformation endorsement and the use of misinformation to inform inferences, but that this impact would be different depending on whether the discomfort came from a retraction or a confirmation. Specifically, we predicted that discomfort felt in response to a retraction would positively predict misinformation endorsement and use, as the discomfort ought to motivate one to disregard the retraction to maintain one’s belief in the misinformation. Conversely, any discomfort felt in response to a confirmation of the misinformation should negatively predict misinformation endorsement and use, as the misinformation itself would be the source of the discomfort. Additionally, we expected that endorsement of the misinformation would be positively associated with use of the misinformation when making inferences and that observed relations between discomfort and the number of misinformation-based inferences would be mediated by misinformation endorsement.

Method

Sample size selection

We based our decisions of how many participants to recruit on previous literature in the CIE domain. Studies using paradigms similar to ours generally have sample sizes ranging from approximately 120 to 160 total participants (Ecker, Lewandowsky & Apai, 2011; Ecker et al., 2015; Ecker et al., 2014; Ecker, Lewandowsky, Swire, et al., 2011). We used these numbers as a rough guide for how many participants we attempted to recruit. However, because the present study involved statistical mediation and was therefore more complex than many previous designs in the CIE literature, we decided to recruit a moderately larger sample for this study. The sample sizes of subsequent studies reported in this paper were determined using these same guidelines. Additionally, there were occasions where a priori exclusions were made resulting in fewer participants being included in analyses than were recruited.

Participants

Participants were 186 (50.5% men, 49% women, 0.5% other; mean age = 37.48, SD = 12.64, range: 20–70) Mechanical Turk workers who participated in exchange for monetary compensation.

Design

Study 1 used a two-condition (retraction vs. confirmation) between-participant design. Dependent measures included discomfort, misinformation endorsement (which was also treated as a mediator in some analyses), and the number of misinformation-based inferences.

Measures and manipulations

Report manipulation

Two report conditions were constructed for this study: a confirmation report in which initially presented misinformation was later confirmed and a retraction report in which the misinformation was later retracted. The reports were closely adapted from those used by Wilkes and Leatherbarrow (1988). Both reports consisted of a series of statements presented one at a time about an event in which a warehouse caught fire at a paper company. There were 13 statements that participants read at their own pace. In both the retraction and confirmation conditions, Statement 4 stated that a short-circuit in a side room started the fire. Statement 5 contained a message from the police stating that combustible materials had been carelessly stored in the side room. The presence of combustible materials in the side room constituted the misinformation. In the confirmation condition, the presence of combustible materials was confirmed by the police in Statement 12, stating that combustible materials were definitely stored in the side room. In the retraction condition, the misinformation was retracted via a message from the police in Statement 12. The police message stated that their earlier statement was incorrect, specifying that combustible materials had not been stored in the side room and that it was empty before the fire occurred. The other statements in the reports provided filler information about the fire, such as the occurrence of several explosions (see the online supplement for complete study materials).

Discomfort measure

Based on measures used by Elliot and Devine (1994) to assess discomfort associated with cognitive dissonance, we created three self-report items to assess participants’ level of discomfort stemming from their exposure to a confirmation or retraction of the misinformation. Participants were asked how uncomfortable, bothered, and uneasy the confirmation or retraction made them feel. An example item for those who read the confirmation report is: “Did it make you feel uncomfortable that the police were correct in their initial assessment of what caused the fire?” (1 = I did not feel uncomfortable at all to 7 = I felt extremely uncomfortable). An example item for those who read the retraction report is: “Did it make you feel uncomfortable that the police were incorrect in their initial assessment of what caused the fire and that they issued a retraction?” We created a single composite measure of discomfort stemming from the presence or absence of a retraction by averaging the responses to each of the three items. Reliability of this measure was high (α = .97).
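The composite and reliability computations described above can be sketched as follows. This is a minimal illustration, assuming the standard formula for Cronbach's alpha; the function names and the ratings are hypothetical and are not the study data.

```python
from statistics import mean, variance

def composite_scores(responses):
    """Average each participant's item responses into one composite score."""
    return [mean(row) for row in responses]

def cronbach_alpha(responses):
    """Cronbach's alpha for a participants-by-items table of responses."""
    k = len(responses[0])                       # number of items
    items = list(zip(*responses))               # one tuple of responses per item
    sum_item_variances = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)

# Hypothetical 1-7 ratings (uncomfortable, bothered, uneasy); NOT study data.
ratings = [
    [1, 1, 2],
    [4, 5, 4],
    [6, 6, 7],
    [2, 2, 2],
    [5, 4, 5],
]

composites = composite_scores(ratings)   # one discomfort score per participant
alpha = cronbach_alpha(ratings)          # internal consistency of the three items
print(composites, round(alpha, 2))
```

Alpha approaches 1 when the three items rise and fall together across participants, which is the pattern the reported value of .97 indicates.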

Misinformation endorsement measure

To assess participants’ endorsement of the misinformation as being true, participants were asked to indicate the extent to which they agreed with the following statement: “Combustible material stored in the side room contributed to the fire” (1 = strongly disagree to 7 = strongly agree). Higher levels of agreement indicated greater belief that the misinformation was true.

Misinformation-based inference measure

Nine open-ended questions used by Wilkes and Leatherbarrow (1988) were used to measure the extent to which participants would use the misinformation as a basis for inferences about what happened during the warehouse fire. Examples of these questions are “Why do you think the fire was particularly intense?” and “What could have caused the explosions?” Responses to these questions were coded by two independent raters to index whether each response directly or indirectly referenced the misinformation that combustible materials were stored in the side room. A response that made an inference based on the misinformation was coded as a 1, whereas a response that was not based in misinformation was coded as a 0. Importantly, if a participant referenced the misinformation but then also acknowledged that the misinformation was false within that response, the response was coded as a 0. If the codes given for a particular inference differed between the two raters (e.g., one rater coded the inference as a 1 and the other coded it as a 0), the response was assigned the value .5. Codes for all nine questions were then summed to provide a measure indexing the total number of misinformation-based inferences (interrater correlation: r = .92).
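The scoring rule described above can be sketched in a few lines. The function names and the example codes are hypothetical; only the rule itself (1 for a misinformation-based inference, 0 otherwise, .5 when the two raters disagree, summed over the nine questions) comes from the text.

```python
def score_response(code_a, code_b):
    """Combine two raters' 0/1 codes; a disagreement is scored 0.5."""
    if code_a not in (0, 1) or code_b not in (0, 1):
        raise ValueError("rater codes must be 0 or 1")
    return float(code_a) if code_a == code_b else 0.5

def inference_score(rater_a, rater_b):
    """Sum the combined codes over all of a participant's responses."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must code the same responses")
    return sum(score_response(a, b) for a, b in zip(rater_a, rater_b))

# Hypothetical codes for one participant's nine responses; NOT study data.
rater_a = [1, 0, 1, 1, 0, 0, 1, 0, 0]
rater_b = [1, 0, 0, 1, 0, 0, 1, 1, 0]

total = inference_score(rater_a, rater_b)
print(total)  # three agreed 1s plus two disagreements at 0.5 each
```

Under this rule, each response's score equals the mean of the two raters' codes, so the total ranges from 0 to 9 in steps of .5.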

Retraction recall measures

To ensure that participants who saw a retraction were aware they had seen a retraction, we employed two measures. The first was an item asking participants if there was any information presented in the report that retracted information provided earlier in the report (dichotomous response: Yes/No). The second item was an open-ended question adapted from Wilkes and Leatherbarrow (1988) asking what the point was of the second message from the police. This item was coded by a third coder independent from those who coded the inference items. Responses that referenced the presence of a retraction were coded as a 1, and those that did not reference the retraction were coded as a 0.

Procedure

All studies reported in this article received ethics approval from the Ohio State University IRB prior to data collection. After providing informed consent, participants were given the cover story that this study was designed to examine how people process and remember reports, so they should read the report carefully. Participants were then randomly assigned to read the report including either the confirmation or the retraction. After this, participants responded to the discomfort measures that corresponded to the report they saw followed by the misinformation endorsement measure. Participants then completed an unrelated 5–10-minute filler task in which they provided their opinions about recycling. The filler task was included to create temporal distance between the report and the inference dependent variable, which is common in research in this domain (Ecker, Lewandowsky, & Apai, 2011; Ecker et al., 2010; Wilkes & Leatherbarrow, 1988). After this, participants completed the inference dependent measure followed by the retraction recall measures. Finally, participants were debriefed and thanked for their participation.

Results

Discomfort

As expected, those who saw a retraction reported significantly greater discomfort (M = 4.27, SD = 1.61) than those who saw a confirmation (M = 1.99, SD = 1.40), t(184) = −10.26, p < .001, 95% CI [−2.718, −1.841], d = 1.51.
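As a rough arithmetic check, the effect size and test statistic can be approximately recovered from the reported means and standard deviations. The sketch below assumes a pooled-variance independent-samples t test and group sizes of 98 (retraction) and 88 (confirmation). These group sizes are not reported directly; they are inferred from the degrees of freedom of this test, t(184), and of the later one-sample test in the retraction condition, t(97).

```python
from math import sqrt

def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))

def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)

def independent_t(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic for the difference m1 - m2."""
    se = pooled_sd(sd1, n1, sd2, n2) * sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se

# Reported descriptives; the group sizes are ASSUMPTIONS inferred from the dfs.
retraction = (4.27, 1.61, 98)
confirmation = (1.99, 1.40, 88)

d = cohens_d(*retraction, *confirmation)
t = independent_t(*retraction, *confirmation)
print(round(d, 2), round(t, 2))
```

The recomputed values agree with the reported d and with the magnitude of the reported t to within rounding of the summary statistics. The sign of t depends only on which group mean is subtracted from which.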

Misinformation endorsement

To test whether the relation between psychological discomfort and misinformation endorsement differed as a function of the report manipulation, a multiple regression model was created. Misinformation endorsement was predicted from report type (effects coded: −1 = confirmation report, 1 = retraction report), discomfort (mean-centered), and the interaction of these two variables. No main effect of discomfort emerged, b = 0.01, SE = .08, t(182) = 0.07, p = .94, 95% CI [−.153, .164], r = .01, but a significant main effect of report type was evident such that those who saw the confirmation endorsed the misinformation more overall than those who saw the retraction, b = −1.44, SE = .15, t(182) = −9.60, p < .001, 95% CI [−1.735, −1.143], r = .58. Importantly, the predicted interaction was significant, b = 0.27, SE = .08, t(182) = 3.42, p < .001, 95% CI [.116, .433], r = .25. For those who saw a confirmation, greater discomfort with the confirmation predicted less endorsement of the misinformation, b = −0.27, SE = .12, t(182) = −2.17, p = .031, 95% CI [−.513, −.024], r = .16. For those who saw a retraction, greater discomfort with the retraction predicted greater endorsement of the misinformation, b = 0.28, SE = .10, t(182) = 2.74, p = .007, 95% CI [.079, .482], r = .20.
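With report type effects coded as −1 and 1, the simple slope of discomfort within each condition is the discomfort coefficient plus the interaction coefficient multiplied by that condition's code. The sketch below illustrates this with the rounded coefficients reported above; the function and variable names are hypothetical.

```python
def simple_slope(b_predictor, b_interaction, moderator_code):
    """Slope of the predictor at a given value of the coded moderator."""
    return b_predictor + b_interaction * moderator_code

# Rounded coefficients as reported in the text.
B_DISCOMFORT = 0.01     # discomfort (mean-centered)
B_INTERACTION = 0.27    # discomfort x report type
REPORT_CODES = {"confirmation": -1, "retraction": 1}   # effects coding

slopes = {
    condition: simple_slope(B_DISCOMFORT, B_INTERACTION, code)
    for condition, code in REPORT_CODES.items()
}
print(slopes)
```

This yields approximately 0.28 for the retraction condition and −0.26 for the confirmation condition. The latter differs from the reported −0.27 only because the inputs here are already rounded to two decimals.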

Misinformation-based inferences

A one-sample t test revealed that the number of misinformation-based inferences made by those who saw a retraction (M = 3.32, SD = 2.25) was still significantly greater than zero, indicating that the retraction did not eliminate reliance on the misinformation, t(97) = 14.60, p < .001, 95% CI [2.870, 3.773], d = 1.47. Additionally, misinformation endorsement and use of misinformation to form inferences were positively correlated, r(184) = .57, p < .001, but not so highly correlated as to suggest they are redundant measures.

We also examined whether the relation between the discomfort and inference measures was moderated by the report manipulation. No main effect of discomfort emerged, b = −0.12, SE = .11, t(182) = −1.03, p = .30, 95% CI [−.338, .106], r = .08, but a significant main effect of report type did emerge. Participants who saw a confirmation made significantly more misinformation-based inferences than those who saw a retraction, b = −1.20, SE = .21, t(182) = −5.68, p < .001, 95% CI [−1.610, −.780], r = .39. The interaction did not reach statistical significance, b = 0.18, SE = .11, t(182) = 1.57, p = .12, 95% CI [−.046, .399], r = .12.

Retraction recall

Analysis of the recall measures was restricted to those in the retraction condition, as we were primarily concerned with whether these participants were aware they had seen a retraction. Within this condition, 77% reported that information had been retracted in response to the dichotomous measure, and 72% referenced the retraction in their open-ended responses. In this study and across all other studies reported in this article, excluding those who failed to accurately recall the retraction in response to one of these measures or the other did not meaningfully change the pattern of results. This was expected, as past research on the CIE generally finds that whether or not participants are able to recall the retraction does not change the observed patterns of results (Ecker et al., 2015; Ecker et al., 2011; Ecker et al., 2010; Johnson & Seifert, 1994). That said, interested readers should see the online supplement for analyses excluding those who failed to recall the retraction.

Mediation analysis

Though the interactive effect of discomfort and the report manipulation on the inference measure did not reach statistical significance, we wanted to examine whether there might be indirect effects of discomfort on inferences through misinformation endorsement. We also wanted to examine whether such indirect effects might differ as a function of the report manipulation.

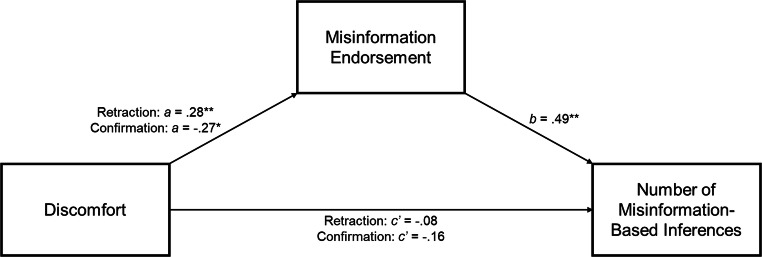

To do so, we specified a moderated mediation model using the PROCESS macro (Hayes, 2017). Discomfort was entered as the focal predictor, misinformation endorsement as the mediator, and the number of misinformation-based inferences as the outcome. Because report type moderated the relation between discomfort and misinformation endorsement (and a similar trending pattern was observed predicting inferences), it was included as a moderator of the paths between discomfort and misinformation endorsement and between discomfort and number of misinformation-based inferences. We used 5,000 bootstrapped samples (i.e., sampling with replacement treating the current data as the population) to test the significance of the indirect effects. Results were consistent with predictions. At both levels of the report manipulation, significant conditional indirect effects emerged (see Fig. 1). A significant positive indirect effect emerged in the retraction condition, b = 0.14, SE = .06, 95% CI [.015, .271]. Discomfort positively predicted misinformation endorsement, b = 0.28, SE = .10, t(182) = 2.74, p = .007, 95% CI [.079, .482], r = .20, and misinformation endorsement positively predicted the number of misinformation-based inferences, b = 0.49, SE = .10, t(181) = 5.00, p < .001, 95% CI [.295, .681], r = .35. The indirect effect was negative in the confirmation condition, b = −0.13, SE = .05, 95% CI [−.231, −.050], because discomfort negatively predicted misinformation endorsement in that condition, b = −0.27, SE = .12, t(182) = −2.17, p = .031, 95% CI [−.513, −.024], r = .16. The index of moderated mediation was significant, indicating that the two conditional indirect effects differed significantly from each other, index = 0.27, SE = .08, 95% CI [.117, .438]. Neither conditional direct effect reached significance (ps > .32).

Fig. 1.

Moderated mediation analysis from Study 1 testing the indirect effect of psychological discomfort on the number of misinformation-based inferences through misinformation endorsement with moderation of the a and c’ paths by report condition. Coefficients for each path appear next to the arrows. For paths moderated by report condition, conditional coefficients for those in the retraction and confirmation report conditions are shown. *p < .05, **p < .01
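The logic of a bootstrapped indirect effect can be illustrated with a simplified sketch. This is not the PROCESS macro and omits the moderator: it estimates an unmoderated indirect effect (the a path multiplied by the b path) and a percentile bootstrap confidence interval by resampling participants with replacement. The data are simulated, and all names and parameter values are assumptions made for illustration.

```python
import random

def _mean(values):
    return sum(values) / len(values)

def _cross(u, v):
    """Sum of cross-products of deviations from the means."""
    mu, mv = _mean(u), _mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))

def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect a*b for X -> M -> Y."""
    a = _cross(x, m) / _cross(x, x)            # slope of M on X
    # Slope of Y on M, controlling for X (two-predictor OLS).
    b = (_cross(m, y) * _cross(x, x) - _cross(m, x) * _cross(x, y)) / (
        _cross(m, m) * _cross(x, x) - _cross(m, x) ** 2
    )
    return a * b

def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap CI: resample cases with replacement, re-estimate."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(indirect_effect(
            [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    tail = (1 - level) / 2
    lower = estimates[round(tail * n_boot)]
    upper = estimates[round((1 - tail) * n_boot) - 1]
    return lower, upper

# Simulated data (NOT study data): X -> M -> Y with no direct X -> Y path.
rng = random.Random(1)
x = [rng.uniform(1, 7) for _ in range(100)]        # e.g., discomfort
m = [0.8 * xi + rng.gauss(0, 0.5) for xi in x]     # e.g., endorsement
y = [0.6 * mi + rng.gauss(0, 0.3) for mi in m]     # e.g., inferences

point = indirect_effect(x, m, y)
ci = bootstrap_ci(x, m, y, n_boot=2000)            # fewer resamples, for speed
print(round(point, 3), [round(v, 3) for v in ci])
```

An indirect effect is treated as significant when the bootstrap interval excludes zero, which is the criterion applied to the conditional indirect effects reported above.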

Study 1 discussion

Study 1 supported the prediction that retractions of misinformation elicit discomfort. Additionally, this discomfort predicted continued belief in the misinformation which, in turn, predicted use of the misinformation to make inferences about the event. These findings align with the MM account of the CIE and are consistent with Lewandowsky et al.’s (2012) speculation that discomfort could be a reason why people disregard retractions that threaten the causal completeness of a mental model. Therefore, it appears possible that the CIE might occur, at least in part, due to underlying motivational factors.

However, there were several potential limitations of Study 1. First, the use of a misinformation confirmation condition as the comparison condition might leave ambiguity regarding whether the presence of a retraction increased discomfort or the presence of a confirmation decreased discomfort. Relatedly, Study 1 does not rule out the possibility that any retraction, and not just those directly related to the mental model, could create discomfort. For instance, retraction of any piece of information might create discomfort if that discomfort stems from the perception that a responsible agency, such as the police, got something wrong and had to retract a previous public statement. Therefore, a more direct comparison might be between two retractions that both indicate the police were wrong, but one invalidates information that is causally central to the focal mental model (the presence of combustible materials) whereas the other invalidates information that is irrelevant to the focal mental model. Such a comparison would address the possibility that the presence of any retraction, and not just one that threatens the causal completeness of a mental model, would create discomfort. Another possible limitation was the discomfort measure phrasing. It is possible that the measure used in the retraction condition captured discomfort in response to the idea that the police were wrong rather than discomfort felt in response to a threat to causal completeness. Discomfort measures more directly tied to a threat to causal completeness would be desirable.

Study 2

Study 2 was designed to replicate the findings of Study 1 and to address its possible limitations. Rather than comparing a retraction of causally central misinformation with a confirmation of the same misinformation, Study 2 compared the retraction of causally central misinformation with a retraction of misinformation not relevant to the causal flow of the event. Additionally, the discomfort measures were changed to rule out the possibility that discomfort with the police being wrong was responsible for the observed results. We predicted that participants who encountered the retraction of the misinformation would report experiencing greater discomfort than those who encountered a retraction of the causally irrelevant information. We also expected that this discomfort would predict continued endorsement and use of the misinformation for those who saw a retraction of the misinformation but not for those who saw a retraction of the causally irrelevant information. Finally, we predicted that associations between discomfort and misinformation-based inferences would be mediated by misinformation endorsement when participants received a retraction of the misinformation.

Method

Participants

Two hundred thirty-one Mechanical Turk workers participated in exchange for monetary compensation. Of these 231 participants, 52 were excluded for being flagged by Qualtrics as a likely bot, failing to correctly answer a basic Winograd question (a question involving a simple scenario that required basic English comprehension and common sense reasoning to answer correctly), and/or providing gibberish responses to open-ended questions. This resulted in a final sample of 179 (41% female, 58% male, 1% other; mean age = 42.8, SD = 13.67, range: 18–77) who were included in the analysis.

Design

Study 2 used a two-condition (misinformation retraction vs. irrelevant retraction) between-participant design. Dependent measures were discomfort, misinformation endorsement (which was also treated as a mediator in some analyses), and the number of misinformation-based inferences.

Measures and manipulations

Report manipulation

The reports were identical to those used in Study 1, except that the confirmation message from the police was replaced with an irrelevant retraction message from the police. To do this, in both the retraction and irrelevant retraction report conditions, participants were told in Statement 3 that the police reported that the night watchman was the person who initially raised the alarm about the fire. In the irrelevant retraction condition, this information was later retracted by the police, stating that their earlier message was incorrect and that the night watchman was not the person who initially raised the alarm. This retraction was presented in the same position as the retraction of the misinformation in the relevant retraction condition.

To ensure that the causally central misinformation (the presence of combustible materials in the side room) was indeed more causally central than the causally irrelevant information (that the night watchman was the person who first raised the alarm about the fire), we adapted procedures from causal network analysis (Trabasso & Sperry, 1985; Trabasso & Van Den Broek, 1985) to code how many of the statements in each report were plausible causal consequences of the focal (causally central) misinformation or the causally irrelevant information. Two independent coders unaware of the hypotheses coded whether the causally central and causally irrelevant information were plausible causes of the contents of each of the other statements within the report. The retractions were omitted from the coding so that the coders would be unaware of the retraction manipulation and that each claim, depending on condition, was said to be false. This left 11 statements to be judged in light of the causally central and irrelevant information. Of the 22 total judgments (11 judgments each for whether the causally central and causally irrelevant information were plausible causes of the contents of the other statements), coders agreed on 19. Coders agreed that 10 of the 11 statements reflected plausible causal consequences of the focal misinformation intended to be causally central (i.e., that there were combustible materials inappropriately stored), and no statements were agreed to not reflect causal consequences of this information. Conversely, coders agreed that nine of the 11 statements did not reflect plausible causal consequences of the information intended to be causally irrelevant (i.e., that the night watchman initially raised the alarm), and no statements were agreed to reflect causal consequences of this information. Thus, it seemed that our manipulation of causal centrality of the information to be retracted across conditions was appropriate.
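The agreement counts reported above can be tallied as a simple consistency check. The sketch below computes raw percent agreement only. A chance-corrected index such as Cohen's kappa cannot be recovered from these counts, because the text does not report which coder gave which code on the three disagreements. The names are hypothetical.

```python
STATEMENTS_PER_TARGET = 11   # statements judged for each piece of information

# Agreed judgments as reported: (agreed "plausible consequence", agreed "not").
agreed = {
    "causally central": (10, 0),
    "causally irrelevant": (0, 9),
}

def agreement_summary(agreed_counts, per_target):
    """Return (agreed judgments, total judgments, proportion agreed)."""
    n_agreed = sum(yes + no for yes, no in agreed_counts.values())
    n_total = per_target * len(agreed_counts)
    return n_agreed, n_total, n_agreed / n_total

n_agreed, n_total, proportion = agreement_summary(agreed, STATEMENTS_PER_TARGET)
print(n_agreed, n_total, round(proportion, 3))
```

The tally reproduces the 19 agreements out of 22 judgments stated in the text, which corresponds to roughly 86% raw agreement.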

Discomfort measure

The discomfort measure was more specifically tied to the presence of a retraction in Study 2. Participants in the relevant retraction condition were asked how uncomfortable, bothered, and uneasy they felt when the police said their earlier message was incorrect and that no combustible materials had been stored in the side room. For those who saw the irrelevant retraction, these items were identical, except that they asked about how uncomfortable, bothered, and uneasy they felt when the police said their earlier message was incorrect and that the night watchman was not the one who initially sounded the alarm. As such, the police were said to be wrong in both conditions, but the conditions differed regarding whether the retraction was of causally relevant versus causally irrelevant information. All scale anchors were the same as used in Study 1. A composite measure was created by averaging the responses, and internal reliability was good (α = .97).
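For readers less familiar with composite scoring, the following is a minimal sketch of how a three-item composite and its Cronbach's α could be computed. The function names and the response values are hypothetical illustrations, not the study's data or analysis code.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` is a list of per-item response lists, each holding one
    response per participant (all lists the same length).
    """
    k = len(items)
    n = len(items[0])
    # Total score per participant (sum across the k items).
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


def composite(items):
    """Average the items within each participant."""
    n = len(items[0])
    return [sum(item[i] for item in items) / len(items) for i in range(n)]


# HYPOTHETICAL responses from five participants (not study data).
uncomfortable = [2, 4, 5, 6, 3]
bothered = [2, 5, 5, 6, 3]
uneasy = [1, 4, 6, 6, 2]

alpha = cronbach_alpha([uncomfortable, bothered, uneasy])
scores = composite([uncomfortable, bothered, uneasy])
```

With real data, each list would hold one response per participant for the corresponding item, and `scores` would serve as the discomfort index.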

Misinformation endorsement measure

Two additional misinformation endorsement items were added to the one used in Study 1 to create an index of misinformation endorsement. These items asked how accurate (−3 = extremely inaccurate to 3 = extremely accurate) and how true (−3 = extremely untrue to 3 = extremely true) it is to say that combustible materials stored in the side room contributed to the fire. The scale of the first item was also changed to range from −3 to 3. This new range was adopted for Study 2 because it better represented the bipolar nature of the items. A composite measure was created by averaging the responses, and internal reliability was good (α = .99).

Misinformation-based inference measure

The inference measure was identical to the one used in Study 1 (interrater correlation: r = .79).

Retraction recall measures

These measures were identical to those used in Study 1, except for several minor wording changes.

Procedure

The procedure was identical to that used in Study 1, except that participants were randomly assigned to see either the relevant or irrelevant retraction report.

Results

Discomfort

As predicted, participants who saw a retraction of the misinformation reported significantly greater discomfort (M = 4.29, SD = 1.76) than those who saw the retraction of the irrelevant information (M = 3.76, SD = 1.74), t(177) = −2.02, p = .045, 95% CI [−1.05, −0.01], d = 0.30. This suggests that retractions that invalidate misinformation central to a causal mental model create greater discomfort than retractions that invalidate information that is not causally central to one’s mental model.
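The reported test statistic and effect size can be approximately reproduced from the summary statistics alone. The sketch below assumes a pooled-variance independent-samples t test. The group sizes are an inference rather than reported values: the one-sample test reported for the misinformation retraction condition in the inference analysis has 81 degrees of freedom, which suggests n = 82 in that condition and therefore n = 97 in the other, given the 177 degrees of freedom here.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Pooled-variance t statistic, degrees of freedom, and Cohen's d."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df)
    se = pooled_sd * math.sqrt(1 / n1 + 1 / n2)
    t_value = (m1 - m2) / se
    cohens_d = (m1 - m2) / pooled_sd
    return t_value, df, cohens_d


# ASSUMPTION: group sizes inferred from the reported degrees of freedom.
n_relevant = 82
n_irrelevant = 97

t, df, d = pooled_t(4.29, 1.76, n_relevant, 3.76, 1.74, n_irrelevant)
```

Under these assumptions the magnitude of `t` and the value of `d` agree with the reported values to two decimals. The sign of `t` depends only on which group mean is subtracted from which.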

Misinformation endorsement

Additionally, a multiple regression was conducted predicting misinformation endorsement from retraction type, discomfort, and their interaction. There was a main effect of retraction type, such that those who saw a retraction of the misinformation endorsed the misinformation significantly less than those who saw a retraction of the irrelevant information, b = −1.75, SE = .10, t(175) = −17.39, p < .001, 95% CI [−1.951, −1.554], r = .80. There was also a significant main effect of discomfort, such that the greater discomfort in response to the retraction seen predicted greater endorsement of the misinformation as true, b = 0.23, SE = .06, t(175) = 4.00, p < .001, 95% CI [.116, .342], r = .29. Importantly and as predicted, there was a significant interaction, b = 0.21, SE = .06, t(175) = 3.75, p < .001, 95% CI [.101, .327], r = .27, such that discomfort positively predicted misinformation endorsement when a retraction of the misinformation was seen, b = 0.44, SE = .08, t(175) = 5.28, p < .001, 95% CI [.275, .609], r = .37, but not when a retraction of the irrelevant information was seen, b = 0.01, SE = .08, t(175) = 0.19, p = .85, 95% CI [−.139, .168], r = .01. As such, it is not discomfort with any retraction that predicts continued misinformation belief, but specifically discomfort in response to retractions of the causally central misinformation itself.
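The simple slopes follow arithmetically from the main effect of discomfort and the interaction term. The sketch below assumes retraction type was effects-coded (+1 = misinformation retraction, −1 = irrelevant retraction). That coding is not stated for this analysis, but it is consistent with the reported coefficients.

```python
def simple_slope(b_focal, b_interaction, moderator_code):
    """Slope of the focal predictor at a given value of the moderator."""
    return b_focal + b_interaction * moderator_code


b_discomfort = 0.23   # reported main effect of discomfort
b_interaction = 0.21  # reported discomfort x retraction type interaction

# ASSUMPTION: effects coding of retraction type (+1 / -1).
slope_relevant = simple_slope(b_discomfort, b_interaction, +1)
slope_irrelevant = simple_slope(b_discomfort, b_interaction, -1)
```

This yields 0.44 for the misinformation retraction condition and 0.02 for the irrelevant retraction condition. The latter differs from the reported 0.01 only by the rounding of the input coefficients.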

Misinformation-based inferences

As in Study 1, the retraction of the misinformation did not eliminate reliance on the misinformation to make inferences. The number of misinformation-based inferences made by those who saw a retraction of the misinformation (M = 3.86, SD = 1.95) remained significantly greater than zero, t(81) = 17.89, p < .001, 95% CI [3.43, 4.29], d = 1.95. Additionally, misinformation endorsement and use of misinformation to form inferences were again positively correlated, r(177) = .52, p < .001.

A multiple regression analysis was conducted predicting the number of misinformation-based inferences from retraction type, discomfort, and the interaction between these variables. There was a significant main effect of retraction type, such that those who saw the retraction of the misinformation made significantly fewer inferences based on the misinformation than those who saw the retraction of the irrelevant information, b = −.99, SE = .14, t(175) = −6.92, p < .001, 95% CI [−1.275, −0.709], r = .46. There was no main effect of discomfort, b = 0.02, SE = .08, t(175) = 0.18, p = .85, 95% CI [−.146, .176], r = .01. The interaction did not reach significance, b = 0.14, SE = .08, t(175) = 1.76, p = .08, 95% CI [−.018, .304], r = .13.

Retraction recall

Within the misinformation retraction condition, 90% indicated that a retraction was present in response to the dichotomous measure, and 62% referenced the retraction in response to the open-ended question.

Mediation analysis

As in Study 1, a similar mediation analysis conducted using Hayes’ Process Macro (Hayes, 2017) examined whether there was an indirect effect of discomfort on the number of misinformation-based inferences through misinformation endorsement, and whether this pattern was moderated by the type of retraction seen. Discomfort was the focal predictor, misinformation endorsement was the mediator, and number of misinformation-based inferences was the outcome measure. Retraction type moderated the paths between discomfort and misinformation endorsement and between discomfort and number of misinformation-based inferences. Five thousand bootstrapped samples were used to calculate the significance of the indirect effects. As predicted, there was a significant positive indirect effect when participants saw a retraction of the causally-central misinformation, b = 0.17, SE = .06, 95% CI [.062, .303], such that discomfort positively predicted misinformation endorsement, b = 0.44, SE = .08, t(175) = 5.28, p < .001, 95% CI [.275, .609], r = .37, which positively predicted the number of misinformation-based inferences, b = 0.38, SE = .10, t(174) = 3.70, p < .001, 95% CI [.179, .589], r = .27. However, when a retraction of the irrelevant information was seen, no indirect effect emerged, b = 0.01, SE = .02, 95% CI [−.031, .042]. This was because discomfort did not predict misinformation endorsement in this condition, b = 0.01, SE = .08, t(175) = 0.19, p = .85, 95% CI [−.139, .168], r = .01. These indirect effect patterns significantly differed from each other, index = .16, SE = .06, 95% CI [.056, .304]. Neither conditional direct effect reached significance (ps > .20).
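As a rough check on the point estimates, each conditional indirect effect is the product of the condition-specific path from discomfort to endorsement and the path from endorsement to inferences. The sketch below uses only the rounded coefficients reported above, so small discrepancies from the reported estimates are expected. It does not reproduce the bootstrapped confidence intervals, and the variable names are illustrative.

```python
# Reported (rounded) path coefficients.
a_relevant = 0.44    # discomfort -> endorsement, misinformation retraction
a_irrelevant = 0.01  # discomfort -> endorsement, irrelevant retraction
b_path = 0.38        # endorsement -> inferences

# Conditional indirect effects as products of coefficients.
indirect_relevant = a_relevant * b_path
indirect_irrelevant = a_irrelevant * b_path

# Difference between the two conditional indirect effects.
indirect_difference = indirect_relevant - indirect_irrelevant
```

The products are approximately 0.17 and 0.004, and their difference of approximately 0.16 matches the reported index within rounding.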

Study 2 discussion

The results of Study 2 replicated those of Study 1. Retractions of misinformation central to a causal mental model were rated as particularly uncomfortable to encounter, and that discomfort predicted continued belief in misinformation, which was also related to use of the misinformation to make inferences about the event. These results suggest that the results of Study 1 were likely not driven by the use of a misinformation-confirmation comparison condition or by the rather global discomfort measures used in that study.

It was unexpected that discomfort significantly interacted with report and retraction type to predict misinformation endorsement in Studies 1 and 2 but did not significantly interact to directly predict the number of misinformation-based inferences in either study. That said, a combined analysis examining this interactive effect on inferences across all relevant data reported in this article (e.g., all data from Studies 1 and 2 along with the data from the control condition in Study 3 discussed below) resulted in a significant interaction (see the online supplement for specifics about this combined analysis), suggesting this pattern can emerge when examined using a larger sample. Also, significant indirect effects were observed between discomfort and misinformation-based inferences through misinformation endorsement within both studies.

The use of a single-item measure of misinformation endorsement in Study 1 might have contributed to differences in the significance of effects between misinformation endorsement and inferences (Swire-Thompson et al., 2020), but that possibility seems less likely for Study 2 given that a three-item composite measure was used. Another possible reason for weaker effects on the inference measure is that this measure was noisier than the misinformation endorsement measure, thereby allowing for effects of discomfort to be more easily observed on endorsement. Additionally, if inferences are more distal to discomfort in a causal sequence than endorsement, observations of a direct relation between discomfort and inferences would often be less likely (MacKinnon et al., 2000; Rucker et al., 2011).

There could also be theoretical reasons why discomfort might be more predictive of misinformation endorsement than the use of misinformation to form inferences. From a mental models perspective, it is the belief that the misinformation is true (and therefore any causal understanding of which it is a part) that is threatened by a retraction, so any resulting discomfort should primarily motivate one to address this threat to one’s belief in the misinformation. Resolving this threat to belief in misinformation might generally precede the creation of inferences about the event in question, and these inferences might also be informed by more than just one’s belief in the misinformation (such as the presence or absence of alternative knowledge that can be used in inference making). For these reasons, discomfort might be expected to have its strongest influences on belief in misinformation while having potentially weaker or only indirect effects on the formation of inferences.

A possible implication of Studies 1 and 2 is that, because discomfort elicited by a retraction predicts continued belief in misinformation, lessening the motivation to avoid this discomfort might make retractions more effective. This would not only demonstrate a novel approach to increase the efficacy of retractions, but it would also show that the link between discomfort and misinformation belief is causal. Indeed, because this relation was only examined non-experimentally in the previous studies, it is possible that the observed discomfort was merely an alternative outcome that correlated with misinformation endorsement, that is, a “read out” on the fact that people continued to believe the misinformation in the face of the retraction and were uncomfortable doing so. Therefore, evidence showing that changes in how people interpret the meaning of discomfort elicited by a retraction also change continued misinformation endorsement and use would help rule out this alternative explanation and demonstrate a causal role for discomfort. Study 3 was designed to provide this evidence.

Study 3

Past research suggests that people can be prompted to positively reappraise aversive feelings, such as anxiety, and that doing so changes how they respond to those feelings. For instance, cognitive reappraisal can change how people interpret anxiety (Hofmann et al., 2009) and bolster performance in anxiety-evoking situations (Brooks, 2014). Given this, it might be possible to prompt people to reappraise discomfort in response to a retraction as being a good thing, which might lessen motivation to reject the retraction and continue believing the misinformation.

In Study 3, some participants were prompted to reappraise discomfort in a positive way, whereas others were not prompted to do so. We predicted that those prompted to reappraise discomfort would endorse the misinformation less and use it less when making inferences about the focal event compared with participants who were not prompted to reappraise discomfort. We also predicted that the reappraisal manipulation would not impact the amount of discomfort felt, so any effects of the manipulation on endorsement and inferences would be because reappraisal instructions changed how participants responded to discomfort and not because those instructions reduced the amount of discomfort felt.

Method

Participants

One hundred ninety-two undergraduates taking introductory psychology courses at our university were recruited to participate in exchange for course credit. One participant did not complete all of the critical measures and was excluded from analysis, resulting in a final sample of 191 (43% men, 55.5% women, 0.5% other; mean age = 19.2, SD = 3.21, range: 18–47).

Design

Study 3 used a two-condition (discomfort reappraisal vs. control) between-participants design. Dependent measures were discomfort, misinformation endorsement (which was also treated as a mediator in some analyses), and the number of misinformation-based inferences.

Measures and manipulations

Reappraisal manipulation

Participants were assigned to either a control or reappraisal condition. In the control condition, participants were told prior to seeing the report that they would see a series of statements related to a warehouse fire event. They were also told that they would be asked questions about what they read to test their memory, so they should read the report as carefully as possible. Participants assigned to the reappraisal condition saw the same initial instructions as those in the control group and were then shown an additional set of instructions. Participants were told that this second set of instructions would help them with the memory test by providing suggestions for how people best form understandings of events. Participants were told that research finds that information surrounding events is often contradictory or incomplete, and that this can be uncomfortable because people want to understand the causes of events. Participants were also told that research suggests feeling uncomfortable in these instances is actually a good thing because it means that one is not jumping to conclusions based on incomplete information. The instructions concluded by saying that participants should remember that it is a good thing to feel uncomfortable and embrace this discomfort in these instances because it means that one is doing what one should be doing to form an accurate understanding of the event. The report in both conditions was identical to the misinformation retraction reports used in Studies 1 and 2 with a slight modification. In this study, the fire department, rather than the police, was said to be conducting the investigation into the fire and was the source of the retraction. This change was made because the study was conducted shortly after widespread protests of police violence occurred, so we wanted to ensure that sentiment about police external to the study could not potentially impact interpretation of the study materials.

Misinformation endorsement

Misinformation endorsement was measured using the same items as in Study 2, modified to fit the narrative that the fire department oversaw the investigation and issued the retraction. Reliability of the items was high, and a composite endorsement measure was created (α = .95).

Misinformation-based inferences measure

This measure was identical to the one used in Studies 1 and 2 except that it was adapted to reflect that the fire department oversaw the investigation. Because the inter-rater correlation between the initial two coders was low (r = .62), a third, independent coder evaluated the inferences on which the initial two coders disagreed. The third coder resolved the disagreements by assigning codes of 1 (if they judged the inference to be based on the misinformation), 0 (if they judged the inference to not be based on the misinformation), or 0.5 (if the inference was too ambiguous to rate as being based on the misinformation or not).
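The resolution rule can be sketched as follows. This is an illustration only: it assumes that the first two coders each assigned a 0 or 1 to every inference, that agreed codes were kept as they were, and that a participant's score is the sum of the final codes. The function name and the example codes are hypothetical.

```python
def resolve_codes(coder_a, coder_b, third_coder):
    """Combine two coders' codes, deferring to a third coder on disagreements.

    `third_coder` maps the index of each disputed inference to 1, 0, or 0.5.
    """
    final = []
    for i, (a, b) in enumerate(zip(coder_a, coder_b)):
        if a == b:
            final.append(a)  # ASSUMPTION: agreed codes are kept unchanged
        else:
            final.append(third_coder[i])
    return final


# HYPOTHETICAL codes for four inferences from one participant.
coder_a = [1, 0, 1, 0]
coder_b = [1, 1, 0, 0]
third_coder = {1: 0.5, 2: 1}  # only the two disputed inferences

final_codes = resolve_codes(coder_a, coder_b, third_coder)
inference_score = sum(final_codes)
```

In this example the second inference is coded as ambiguous (0.5) and the third as misinformation-based (1), giving a score of 2.5.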

Discomfort measure

Psychological discomfort was measured using the same items used in Study 2, modified to reflect that the fire department was the source of the retraction. Because the items were strongly correlated, a composite measure was created (α = .91).¹

Retraction recall measures

The retraction recall measures were identical to those used in Study 2 with minor wording changes to reflect the narrative that the fire department was in charge of the investigation.

Procedure

After providing informed consent, participants were randomly assigned to read either the control or discomfort reappraisal instructions. All participants then read the same report containing the misinformation and a retraction of that misinformation. Next, participants answered the misinformation endorsement items followed by the misinformation-based inferences measure. Discomfort experienced when they received the retraction was then measured. We chose to measure discomfort after endorsement and inferences to ensure that its measurement could not potentially interfere with the reappraisal manipulation prior to gathering responses to the endorsement and inference measures. Participants then responded to the retraction recall measures. Finally, they were debriefed and thanked for participation.

Results

Discomfort

As expected, the amount of discomfort reported by those in the reappraisal condition (M = 4.11, SD = 1.74) did not differ significantly from the amount of discomfort reported by those in the control condition (M = 3.88, SD = 1.65), t(189) = −0.93, p = .35, 95% CI [−.713, .257], d = 0.13. This suggests that any effects of the reappraisal manipulation were due to changes in how people respond to experienced discomfort rather than changes in the level of discomfort.

Misinformation endorsement

Consistent with predictions, those in the reappraisal condition endorsed the misinformation significantly less following the retraction (M = −1.35, SD = 1.94) than those in the control condition (M = −0.74, SD = 2.03), t(187.62) = 2.17, p = .031, 95% CI [.055, 1.156], d = 0.31.

Misinformation-based inferences

As in previous studies, misinformation endorsement and the inference measure were positively correlated, r(189) = .32, p < .001. Though it did not reach statistical significance, those in the reappraisal condition tended to make fewer misinformation-based inferences (M = 3.30, SD = 1.88) than those in the control condition (M = 3.84, SD = 1.94), t(189) = 1.94, p = .054, 95% CI [−.009, 1.083], d = 0.28.

Retraction recall

Ninety-three percent of participants indicated that they had encountered a retraction in response to the dichotomous measure, and 56% referenced the retraction in response to the open-ended question.²

Mediation analysis

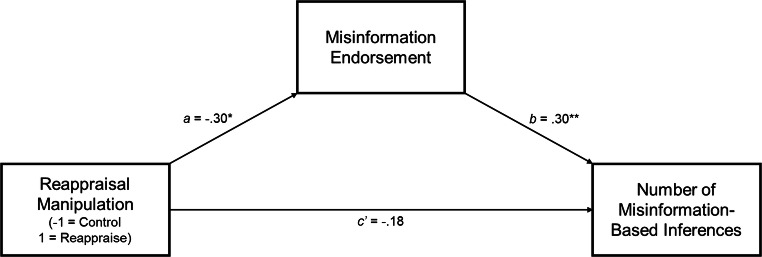

We used a mediation model to examine whether there might be indirect effects of the reappraisal condition through misinformation endorsement on the inference measure. The mediation model treated the reappraisal manipulation (effects-coded: −1 = control condition, 1 = reappraisal condition) as the focal predictor, endorsement as a mediator, and inferences as the dependent variable. Hayes’ Process Macro (Hayes, 2017) was again used to conduct this analysis. As expected, the indirect effect of reappraisal on inferences through endorsement was significant, b = −.09, SE = .05, 95% CI [−.200, −.008]. Those in the control condition endorsed the misinformation significantly more than those in the reappraisal condition, b = −0.30, SE = .14, t(189) = −2.17, p = .032, 95% CI [−.578, −.027], r = .16, and the more the misinformation was endorsed, the more misinformation-based inferences were made (see Fig. 2), b = 0.30, SE = .07, t(188) = 4.38, p < .001, 95% CI [.166, .437], r = .30. The direct effect of the reappraisal manipulation on inferences was not significant, b = −0.18, SE = .13, t(188) = −1.33, p = .19, 95% CI [−.441, .087], r = .10.

Fig. 2.

Mediation analysis from Study 3 testing the indirect effect of the reappraisal manipulation on the number of misinformation-based inferences through misinformation endorsement. Coefficients for each path appear next to the arrows. *p < .05, **p < .01
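Two aspects of this analysis can be illustrated with a short sketch. First, in an ordinary least squares mediation model the total effect equals the direct effect plus the indirect effect, so the reported coefficients imply a total effect of about −0.27, which equals half the difference between the condition means on the inference measure (as expected with −1/1 effects coding). Second, a percentile bootstrap of the indirect effect can be written in a few lines. The code below is not the PROCESS macro: it runs on simulated data, and the group sizes (95 and 96), error variances, intercept, and function names are assumptions made for illustration.

```python
import random


def cov(u, v):
    """Sample covariance (variance when u is v)."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((ui - mu) * (vi - mv) for ui, vi in zip(u, v)) / (len(u) - 1)


def paths(x, m, y):
    """OLS estimates (a, b, c_prime) for the model x -> m -> y."""
    sxx, smm = cov(x, x), cov(m, m)
    sxm, sxy, smy = cov(x, m), cov(x, y), cov(m, y)
    a = sxm / sxx                           # x -> m
    det = sxx * smm - sxm ** 2
    b = (sxx * smy - sxm * sxy) / det       # m -> y, controlling for x
    c_prime = (smm * sxy - sxm * smy) / det  # x -> y, controlling for m
    return a, b, c_prime


def bootstrap_indirect(x, m, y, n_boot=5000, seed=0):
    """Percentile bootstrap 95% CI for the indirect effect a * b."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        if len(set(xs)) < 2:  # skip resamples containing one condition only
            continue
        a, b, _ = paths(xs, [m[i] for i in idx], [y[i] for i in idx])
        estimates.append(a * b)
    estimates.sort()
    lower = estimates[int(0.025 * len(estimates))]
    upper = estimates[int(0.975 * len(estimates)) - 1]
    return lower, upper


# Decomposition implied by the reported (rounded) coefficients.
reported_total = -0.18 + (-0.30) * 0.30

# SIMULATED data; group sizes and noise levels are assumptions.
sim = random.Random(1)
x = [-1] * 95 + [1] * 96
m = [-0.30 * xi + sim.gauss(0, 2) for xi in x]
y = [3.5 + 0.30 * mi - 0.18 * xi + sim.gauss(0, 1.8) for xi, mi in zip(x, m)]

a, b, c_prime = paths(x, m, y)
total = cov(x, y) / cov(x, x)
ci = bootstrap_indirect(x, m, y, n_boot=1000)  # 5000 were used in the article
```

An indirect effect is treated as significant when the resulting interval excludes zero. Because the data here are simulated, the interval produced by this sketch says nothing about the study's results.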

Study 3 discussion

Study 3 suggested, for the first time in the misinformation literature, that discomfort felt in response to a retraction of misinformation (or at least how people interpret that discomfort) causes people to continue believing misinformation, and prompting people to reappraise the meaning of this discomfort increases the efficacy of retractions. Specifically, those prompted to interpret this discomfort positively endorsed the misinformation less after seeing a retraction than those who were not prompted to change their interpretation of the discomfort, which produced indirect effects on the number of misinformation-based inferences made. Such evidence speaks directly to the potential causal role of discomfort in the CIE. Indeed, if discomfort had no causal influence on misinformation belief and was, instead, simply a consequence of continuing to believe something that had been labeled as false, there should have been no influence of the reappraisal manipulation on continued belief in the misinformation.

General discussion

Across three studies, we found that retractions of misinformation do cause people to feel uncomfortable, and that this discomfort predicts continued belief in the misinformation (Studies 1 and 2). Additionally, we found that discomfort (or the interpretation of discomfort) causes continued belief in misinformation. That is, people who positively reappraised the meaning of discomfort believed the misinformation less than those who did not reappraise its meaning (Study 3). Across all studies we also found that greater belief in the misinformation predicted greater use of the misinformation when making inferences.

These findings suggest that motivational factors might play a key role in the MM account, a suggestion that is consistent with motivation theory from social psychology and communications (e.g., Brashers, 2001; Festinger, 1957; Heine et al., 2006; Weary & Edwards, 1996). This implication helps to clarify the MM theoretical perspective. Although it is logical to assert that retractions of misinformation central to a mental model create causal ambiguity within that model, this alone does not account for why retractions would be disregarded. The idea that discomfort motivates individuals to disregard the retraction fills this gap in the MM account (Lewandowsky et al., 2012), and the present findings confirm that such a mechanism can play a role in the CIE. Additionally, the present work extends this theorizing by showing that retractions can be made more effective by changing how people interpret the discomfort they experience.

However, it is important to note that we do not believe that this evidence for a motivational mechanism undermines the likelihood that cognitive mechanisms contribute to the CIE. Rather, it is possible they occur in parallel or at different points in time. For instance, from a retrieval failure or KReC perspective (Kendeou & O’Brien, 2014; Lewandowsky et al., 2012; Swire & Ecker, 2018), failure to activate a retraction when the misinformation is activated might prevent discomfort from being felt and result in a continued influence effect via a cognitive mechanism. Successful retrieval of the retraction could still result in a continued influence effect, however, if the retraction elicits feelings of discomfort. Similarly, from a fluency perspective (Schwarz et al., 2007; Skurnik et al., 2005), it might be especially uncomfortable to learn that familiar misinformation is false, which could lead to heightened continued influence effects. As such, previously proposed cognitive mechanisms and the motivational mechanism we document are not in conflict, and interesting interactions between these mechanisms might play out to create continued influence effects.

Future examinations could further investigate the process by which people reduce discomfort felt in response to a retraction. An assumption made by the MM account of the CIE (Lewandowsky et al., 2012)—and implicit in the present research—is that participants reduce the amount of discomfort felt in response to a retraction by discounting or ignoring the retraction, thereby allowing them to maintain their belief in the misinformation. However, because past work has predominantly focused on continued misinformation endorsement or use rather than belief in the retraction per se (Ecker et al., 2014; Ecker et al., 2011; Ecker et al., 2010; Johnson & Seifert, 1994; Wilkes & Leatherbarrow, 1988), such effects have largely not been examined. Some work has examined belief in the retraction as a predictor of the CIE, but that research has not examined the potential role of discomfort (O’Rear & Radvansky, 2019).

Further, research could examine additional factors that impact the amount of discomfort felt in response to a retraction. One possibility is that retractions from more versus less credible sources might be seen as a greater challenge to one’s causal understanding of an event (Ecker & Antonio, 2021; Guillory & Geraci, 2013), making such retractions especially uncomfortable to encounter. Additionally, in some cases the amount of discomfort felt in response to a retraction might be impacted by considerations of how accepting or rejecting the retraction might impact one’s relationship with the person or entity issuing the retraction (Scoboria & Henkel, 2020).

Implications and concluding remarks

The present research has notable real-world implications. By evidencing a novel mechanism by which the CIE can occur, this work opens the door for new interventions to be developed to reduce the CIE through changing how people interpret and respond to discomfort. Such strategies might be particularly applicable to health contexts where people have a personal stake in understanding the causes of conditions. In cases where causes are unclear, such as conditions like autism, changing how people interpret discomfort might make them more accepting of retractions that refute misinformation about the causes of that condition. However, in cases where misinformation does not provide causal understanding, such as defamatory information about a person where continued influence effects can be less pronounced (Ecker & Rodricks, 2020), discomfort might play less of a role and discomfort-focused interventions might have less of an effect.

In conclusion, the present research reveals several insights into the CIE. First, this research demonstrates that motivational concerns play a key role in the CIE, and that discomfort might contribute to the CIE. Additionally, this research suggests changing how people interpret and respond to discomfort can mitigate the CIE. Integration of these considerations into existing theory in the CIE domain is desirable, as it would allow for the development of a more nuanced understanding of the phenomenology underlying continuing influences of misinformation.

Availability of data and materials

All study materials are publicly available in the online supplemental materials for this article. Because the IRB protocols for this research do not allow for public sharing of individual participants’ raw data, the data for the studies within this article are available in the form of means, standard deviations, and covariance matrices for all studies reported in the online supplementary materials. These data enable interested researchers to conduct regression analyses using structural equation modeling (SEM) that parallel the analyses reported in this article.
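To illustrate why summary statistics suffice for such analyses, the sketch below recovers a simple regression intercept and slope from means, a variance, and a covariance. The numeric values and the function name are hypothetical and are not taken from the supplementary materials. Models with several predictors follow the same logic by solving the normal equations on the covariance matrix.

```python
def regression_from_moments(mean_x, mean_y, var_x, cov_xy):
    """Intercept and slope of y regressed on x, from summary statistics only."""
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope


# HYPOTHETICAL summary statistics (not values from the supplement).
intercept, slope = regression_from_moments(
    mean_x=4.0,   # e.g., mean of a predictor
    mean_y=-1.0,  # e.g., mean of an outcome
    var_x=3.0,
    cov_xy=1.5,
)
```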

Author contributions

M. W. Susmann designed all of the reported studies in consultation with D. T. Wegener. M. W. Susmann conducted all data analyses with input from D. T. Wegener. M. W. Susmann drafted the manuscript and revised it based on feedback from D. T. Wegener.

Funding

No outside funding supported this research.

Declarations

Conflicts of interest

The authors have no conflicts of interest to disclose.

Ethics approval

All research within this article received prior ethics approval from the university IRB.

Consent to participate

All participants provided informed consent prior to taking part in any of the studies reported in this article.

Consent to publish

All participants consented to having their data published as part of the informed consent process.

Code availability

Because the individual raw data for the present studies are not publicly available, the specific code used for the reported data analyses would be of limited use, so it has not been posted publicly. We are happy to provide code to conduct parallel SEM analyses based on the provided means, standard deviations, and covariance matrices upon request.

Footnotes

1. After completing data collection, it was discovered that, for the “bothered” item, the label for value “5” was accidentally set to “4,” meaning that the response scale for that item went “4, 4, 6” rather than the intended “4, 5, 6.” As such, the second “4” was recoded to be a “5” in the data. Excluding this item did not result in a meaningful change in the reliability of the index, nor did inclusion of this item change the significance of any of the analyses. As such, we have reported results that include this item in the discomfort index.

2. We believe this low recall rate stems from an oversight when wording this question. Namely, participants were asked what the point was of the final message from the fire department. Because the final statement in the message (presented after the retraction) was said to come from a fire officer, it is possible many participants thought this question referred to that statement and not the statement that included the retraction. Therefore, we do not think this measure captured retraction awareness well, so we believe the dichotomous measure better reflects the percentage of participants who were aware of the retraction.

Open practices statement

All study materials from the reported studies are available in the online supplementary materials for this article. Because the IRB protocols for this research do not allow for public sharing of individual participants’ raw data, the data for the studies within this article are available in the form of means, standard deviations, and covariance matrices for all studies reported in the online supplementary materials. These data enable interested researchers to conduct regression analyses using structural equation modeling (SEM) that parallel the analyses reported in this article. None of these studies were preregistered.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Brashers DE. Communication and uncertainty management. Journal of Communication. 2001;51(3):477–497. doi: 10.1111/j.1460-2466.2001.tb02892.x. [DOI] [Google Scholar]

- Brooks AW. Get excited: Reappraising pre-performance anxiety as excitement. Journal of Experimental Psychology: General. 2014;143(3):1144–1158. doi: 10.1037/a0035325. [DOI] [PubMed] [Google Scholar]

- Cornwall W. Officials gird for a war on vaccine misinformation. Science. 2020;369:14–19. doi: 10.1126/science.369.6499.14. [DOI] [PubMed] [Google Scholar]

- Ecker UK, Ang LC. Political attitudes and the processing of misinformation corrections. Political Psychology. 2019;40(2):241–260. doi: 10.1111/pops.12494. [DOI] [Google Scholar]

- Ecker UK, Antonio LM. Can you believe it? An investigation into the impact of retraction source credibility on the continued influence effect. Memory & Cognition. 2021;49(4):631–644. doi: 10.3758/s13421-020-01129-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ecker UK, Lewandowsky S, Apai J. Terrorists brought down the plane!—No, actually it was a technical fault: Processing corrections of emotive information. The Quarterly Journal of Experimental Psychology. 2011;64(2):283–310. doi: 10.1080/17470218.2010.497927.

- Ecker UK, Lewandowsky S, Chadwick M. Can corrections spread misinformation to new audiences? Testing for the elusive familiarity backfire effect. Cognitive Research: Principles and Implications. 2020;5(1):1–25. doi: 10.1186/s41235-020-00241-6.

- Ecker UK, Lewandowsky S, Cheung CS, Maybery MT. He did it! She did it! No, she did not! Multiple causal explanations and the continued influence of misinformation. Journal of Memory and Language. 2015;85:101–115. doi: 10.1016/j.jml.2015.09.002.

- Ecker UK, Lewandowsky S, Fenton O, Martin K. Do people keep believing because they want to? Preexisting attitudes and the continued influence of misinformation. Memory & Cognition. 2014;42(2):292–304. doi: 10.3758/s13421-013-0358-x.

- Ecker UK, Lewandowsky S, Tang DT. Explicit warnings reduce but do not eliminate the continued influence of misinformation. Memory & Cognition. 2010;38(8):1087–1100. doi: 10.3758/MC.38.8.1087.

- Ecker UK, Rodricks AE. Do false allegations persist? Retracted misinformation does not continue to influence explicit person impressions. Journal of Applied Research in Memory and Cognition. 2020;9(4):587–601. doi: 10.1016/j.jarmac.2020.08.003.

- Elliot AJ, Devine PG. On the motivational nature of cognitive dissonance: Dissonance as psychological discomfort. Journal of Personality and Social Psychology. 1994;67(3):382–394. doi: 10.1037/0022-3514.67.3.382.

- Festinger, L. (1957). A theory of cognitive dissonance. Row, Peterson.

- Guillory JJ, Geraci L. Correcting erroneous inferences in memory: The role of source credibility. Journal of Applied Research in Memory and Cognition. 2013;2(4):201–209. doi: 10.1016/j.jarmac.2013.10.001.

- Hall V, Banerjee E, Kenyon C, Strain A, Griffith J, Como-Sabetti K, ... Ehresmann K. Measles outbreak—Minnesota April–May 2017. Morbidity and Mortality Weekly Report. 2017;66(27):713–717. doi: 10.15585/mmwr.mm6627a1.

- Hamby A, Ecker U, Brinberg D. How stories in memory perpetuate the continued influence of false information. Journal of Consumer Psychology. 2020;30(2):240–259. doi: 10.1002/jcpy.1135.

- Hayes, A. F. (2017). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. Guilford.

- Heine SJ, Proulx T, Vohs KD. The meaning maintenance model: On the coherence of social motivations. Personality and Social Psychology Review. 2006;10(2):88–110. doi: 10.1207/s15327957pspr1002_1.

- Hofmann SG, Heering S, Sawyer AT, Asnaani A. How to handle anxiety: The effects of reappraisal, acceptance, and suppression strategies on anxious arousal. Behaviour Research and Therapy. 2009;47(5):389–394. doi: 10.1016/j.brat.2009.02.010.

- Johnson HM, Seifert CM. Sources of the continued influence effect: When misinformation in memory affects later inferences. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1994;20(6):1420–1436. doi: 10.1037/0278-7393.20.6.1420.

- Kata A. A postmodern Pandora's box: Anti-vaccination misinformation on the Internet. Vaccine. 2010;28(7):1709–1716. doi: 10.1016/j.vaccine.2009.12.022.

- Kendeou, P., & O’Brien, E. J. (2014). The knowledge revision components (KReC) framework: Processes and mechanisms. In D. Rapp & J. Braasch (Eds.), Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences (pp. 353–377). MIT Press.

- Kendeou P, Walsh E, Smith E, O’Brien E. Knowledge revision processes in refutation texts. Discourse Processes. 2014;51(5/6):374–397. doi: 10.1080/0163853X.2014.913961.

- Lewandowsky S, Ecker UK, Seifert CM, Schwarz N, Cook J. Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest. 2012;13(3):106–131. doi: 10.1177/1529100612451018.

- MacKinnon DP, Krull JL, Lockwood CM. Equivalence of the mediation, confounding and suppression effect. Prevention Science. 2000;1(4):173–181. doi: 10.1023/A:1026595011371.

- Nyhan B, Reifler J. When corrections fail: The persistence of political misperceptions. Political Behavior. 2010;32(2):303–330. doi: 10.1007/s11109-010-9112-2.

- Nyhan B, Reifler J, Richey S, Freed GL. Effective messages in vaccine promotion: A randomized trial. Pediatrics. 2014;133(4):e835–e842. doi: 10.1542/peds.2013-2365.

- Nyhan B, Reifler J, Ubel PA. The hazards of correcting myths about health care reform. Medical Care. 2013;51(2):127–132. doi: 10.1097/MLR.0b013e318279486b.

- O’Rear AO, Radvansky GA. Failure to accept retractions: A contribution to the continued influence effect. Memory & Cognition. 2019:1–18. Advance online publication. doi: 10.3758/s13421-019-00967-9.

- Rao TS, Andrade C. The MMR vaccine and autism: Sensation, refutation, retraction, and fraud. Indian Journal of Psychiatry. 2011;53(2):95–96. doi: 10.4103/0019-5545.82529.

- Reinhart, R. J. (2020). Fewer in U.S. continue to see vaccines as important. https://news.gallup.com/poll/276929/fewer-continue-vaccines-important.aspx

- Rich PR, Zaragoza MS. The continued influence of implied and explicitly stated misinformation in news reports. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2016;42(1):62–74. doi: 10.1037/xlm0000155.

- Rucker DD, Preacher KJ, Tormala ZL, Petty RE. Mediation analysis in social psychology: Current practices and new recommendations. Social and Personality Psychology Compass. 2011;5(6):359–371. doi: 10.1111/j.1751-9004.2011.00355.x.

- Schwarz N, Sanna LJ, Skurnik I, Yoon C. Metacognitive experiences and the intricacies of setting people straight: Implications for debiasing and public information campaigns. Advances in Experimental Social Psychology. 2007;39:127–161. doi: 10.1016/S0065-2601(06)39003-X.

- Scoboria A, Henkel L. Defending or relinquishing belief in occurrence for remembered events that are challenged: A social-cognitive model. Applied Cognitive Psychology. 2020;34(6):1243–1252. doi: 10.1002/acp.3713.

- Seifert CM. The continued influence of misinformation in memory: What makes a correction effective? The Psychology of Learning and Motivation. 2002;41:265–292. doi: 10.1016/S0079-7421(02)80009-3.

- Skurnik I, Yoon C, Park DC, Schwarz N. How warnings about false claims become recommendations. Journal of Consumer Research. 2005;31(4):713–724. doi: 10.1086/426605.

- Swire B, Berinsky AJ, Lewandowsky S, Ecker UK. Processing political misinformation: Comprehending the Trump phenomenon. Royal Society Open Science. 2017;4(3):1–21. doi: 10.1098/rsos.160802.

- Swire, B., & Ecker, U. K. H. (2018). Misinformation and its correction: Cognitive mechanisms and recommendations for mass communication. In B. G. Southwell, E. A. Thorson, & L. Sheble (Eds.), Misinformation and mass audiences. University of Texas Press.

- Swire-Thompson B, DeGutis J, Lazer D. Searching for the backfire effect: Measurement and design considerations. Journal of Applied Research in Memory and Cognition. 2020;9(3):286–299. doi: 10.1016/j.jarmac.2020.06.006.

- Trabasso T, Sperry LL. Causal relatedness and importance of story events. Journal of Memory and Language. 1985;24(5):595–611. doi: 10.1016/0749-596X(85)90048-8.

- Trabasso T, Van Den Broek P. Causal thinking and the representation of narrative events. Journal of Memory and Language. 1985;24(5):612–630. doi: 10.1016/0749-596X(85)90049-X.

- Weary, G., & Edwards, J. A. (1996). Causal-uncertainty beliefs and related goal structures. In R. M. Sorrentino & E. T. Higgins (Eds.), Handbook of motivation and cognition, Vol. 3. The Interpersonal Context (pp. 148–181). Guilford.

- Wilkes AL, Leatherbarrow M. Editing episodic memory following the identification of error. The Quarterly Journal of Experimental Psychology. 1988;40(2):361–387. doi: 10.1080/02724988843000168.