Summary:

Neurons in the medial entorhinal cortex alter their firing properties in response to environmental changes. This flexibility in neural coding is hypothesized to support navigation and memory by dividing sensory experience into unique episodes. However, it is unknown how the entorhinal circuit as a whole transitions between different representations when sensory information is not delineated into discrete contexts. Here, we describe rapid and reversible transitions between multiple spatial maps of an unchanging task and environment. These remapping events were synchronized across hundreds of neurons, differentially impacted navigational cell types, and correlated with changes in running speed. Despite widespread changes in spatial coding, remapping comprised a translation along a single dimension in population-level activity space, enabling simple decoding strategies. These findings provoke a reconsideration of how medial entorhinal cortex dynamically represents space and suggest a remarkable capacity for cortical circuits to rapidly and substantially reorganize their neural representations.

Keywords: medial entorhinal cortex, dynamic coding, behavioral state, population coding, attractor manifolds

eTOC Blurb:

Low et al. record from hundreds of neurons in entorhinal cortex and reveal transitions between distinct neural maps of space in an unchanging task and environment. These transitions are reversible, synchronized across neurons, and correlated with running speed. Alignment of the two activity manifolds enables reliable position decoding across maps.

Graphical Abstract

Introduction

As an animal engages in complex behaviors, it must dynamically integrate external sensory features with internal behavioral state changes. Arousal (Hulse et al., 2017; Salay et al., 2018; Vinck et al., 2015), satiety (Jennings et al., 2019), attention (Kentros et al., 2004), and running speed (Bennett et al., 2013; Hardcastle et al., 2017; Hulse et al., 2017; Niell and Stryker, 2010; Vinck et al., 2015) can all impact cortical processing and influence how an animal interacts with its surroundings (Calhoun et al., 2019; Salay et al., 2018). As an animal navigates the world, encountering a continuous stream of sensory features while experiencing behavioral state changes, navigational centers in the brain may integrate these external and internal factors to encode unique episodes or contexts. The extent to which these navigational centers incorporate changes in an animal’s internal behavioral state, as opposed to reflecting the external sensory features in the world, remains incompletely understood.

One key navigational center is the medial entorhinal cortex (MEC), which is hypothesized to support navigation and memory through neurons that encode the animal’s position and orientation relative to sensory features such as environmental boundaries and objects (Diehl et al., 2017; Gil et al., 2018; Hafting et al., 2005; Høydal et al., 2019; Sargolini et al., 2006; Solstad et al., 2008). When environmental features change, MEC position and orientation cells can “remap” through changes in firing rate and rotations or shifts in firing field locations (Barry et al., 2007; Diehl et al., 2017; Fyhn et al., 2007; Keene et al., 2016; Krupic et al., 2015; Marozzi et al., 2015; Munn et al., 2020; Solstad et al., 2008). This flexibility in MEC neural coding is hypothesized to be an integral part of navigational and memory processes. It may also interface with a similar phenomenon in the hippocampus (Fyhn et al., 2007), a neural substrate involved in memory formation, where neurons also remap in response to changes in sensory features or task context (Bostock et al., 1991; Frank et al., 2000; Jezek et al., 2011; Markus et al., 1995; Moita et al., 2004; Muller and Kubie, 1987; Wood et al., 2000). Recent work in the hippocampus has revealed remapping in response to other latent factors, which may include changes in an animal’s behavioral state (Keinath et al., 2020; Sheintuch et al., 2020; Ziv et al., 2013), suggesting that MEC may be responsive to these factors as well. Consistent with this possibility, changes in task demands can evoke MEC remapping (Boccara et al., 2019; Butler et al., 2019). However, studies have yet to identify the specific impact of latent factors such as behavioral state on MEC remapping. Further, it is not yet known what MEC remapping dynamics might look like when environmental features or task demands are not delineated into distinct episodes.

Until recently, technological constraints limited most studies to small numbers of simultaneously recorded MEC neurons. As a result, previous studies of flexibility in MEC neural coding often focused on how the firing fields of single functionally-defined neurons (e.g. grid, border, or head direction cells) responded to changing environmental features. However, not all MEC neurons fall into discrete functional classes (Hardcastle et al., 2017; Hinman et al., 2016) and many MEC coding properties are proposed to emerge at the population level (Burak and Fiete, 2009; Couey et al., 2013; Fuhs and Touretzky, 2006; McNaughton et al., 2006; Ocko et al., 2018; Pastoll et al., 2013). These features of MEC coding raise the question of how the the MEC circuit as a whole transitions between contextual representations.

Here, we investigated how large populations of MEC neurons transition between spatial representations in an invariant environmental context. Using silicon probes, we simultaneously recorded from hundreds of MEC neurons while mice navigated a virtual reality (VR) environment. We found that remapping events occurred synchronously across the MEC population—recruiting a large swath of cells with heterogeneous coding properties—without any change in environmental features or task demands. Further, we demonstrated that each map corresponded to a distinct activity manifold. Running speed correlated with transitions between these manifolds and the manifolds for different maps were geometrically aligned, which enabled consistent position decoding in spite of remapping. Altogether our findings demonstrate that a single population of neurons can preserve unchanging information (e.g. fixed environmental features), while also encoding changes in internal context, demonstrating a remarkable capacity for both reliability and flexibility in MEC navigational coding.

Results

Spatial representations remap in an invariant VR environment

Head-fixed mice foraged for water rewards along an infinite VR track with landmark cues that repeated every 400 cm (Campbell et al., 2020)(Figure 1A, B; Figure S1). 11 mice navigated a cue rich track (5 landmarks, Figure 1B, top)(n = 43 sessions, i.e., cue rich sessions), 6 mice navigated a cue poor track (2 landmarks, Figure 1B, bottom)(n = 11 sessions, i.e., cue poor sessions), and 5 mice navigated alternating blocks of cue rich and cue poor trials within each each session (n = 13 sessions, i.e., double-track sessions). Mice could lick for water in a visually marked reward zone, which appeared at random locations along the track (Figure S1). In reward zones, mice slowed (difference in running speed, 11.7 ± 1.2 cm/s; p = 3.1×10−11) and licked for water (difference in lick number 8.0 ± 0.2; p < 1×10−11), demonstrating familiarity with the task (Figure 1C–F).

Figure 1: Acute neuropixels recordings during a VR random foraging task.

(A) Schematized recording set-up. (B) Schematized side view of the cue rich (top, pink) and cue poor (bottom, green) environments (colors maintained throughout). (C) Running speed near rewards for an example 100 trials (gray traces, each trial; black line, average). (D) As in (C), for smoothed lick count. (E) Running speed in versus outside of reward zones (mean difference ± standard error of the mean (SEM): 11.7 ± 1.2 cm/s; Wilcoxon signed-rank test, p = 3.1×10−11)(points, individual sessions; for double-track sessions, color indicates which track was first). (F) As in (E), but for fraction of licks (mean difference in lick number ± SEM: 8.0 ± 0.2; Wilcoxon signed-rank test, p < 1×10−11; n = 16,083 reward trials). (G) Number of MEC units recorded in each session. (J) Locations for all recorded MEC units relative to their dorsal-ventral (DV), medial-lateral (ML), and anterior-posterior (AP) location. (I) Rasters (top) and tuning curves (bottom) for four example cells from one session (black lines, average firing rate; shading, SEM). (J) Average spatial correlation of nearby trials (mean moving average correlation, 5 nearest trials ± SEM: 0.441 ± 0.009; mean spatial correlation range: 0.28 to 0.73)(points, individual sessions; bars, individual mice; error bars, SEM across sessions). Double-track sessions show cue rich and cue poor blocks separately (26 blocks from 13 sessions). (E, F, G, H, J) n = 10,440 cells in 67 sessions across 22 mice. Example session in (C, D) is mouse 6a, session 1009_1 and in (I) is mouse 6a, session 1010_1. (See also Figure S1.)

To record neural activity during behavior, we acutely inserted Neuropixels silicon probes (Jun et al., 2017) into MEC for up to six recording sessions per mouse (up to three sessions per hemisphere). Each session was associated with a unique probe insertion and sessions from the same hemisphere were recorded in distinct parts of MEC (Figure S1; STAR Methods). Thus we recorded simultaneously from hundreds of neurons across a large portion of the MEC dorsal to ventral axis in individual mice (n = 10,410 cells)(Figure 1G, H; Figure S1). To quantify spatial coding stability across co-recorded neurons, we estimated each neuron’s position-aligned firing rate on each trial and computed the correlation between all spatial representations for each pair of trials (STAR Methods). Network-wide position coding was typically stable across neighboring trials (moving average correlation, 5 nearest trials: 0.441 ± 0.009)(Figure 1I, J; STAR Methods). In some cases population-wide neural representations appeared untethered from landmarks for part or all of the session (interquartile range min to max: 0.034 to 0.394)(Figures S1, S2).

To characterize the stability of population-wide spatial coding across all trials for each session, we collected the spatial correlations for all trial pairs into a trial-by-trial similarity matrix of network-wide spatial representations (Figure 2B–F right; Figure S2). In many recording sessions, we observed clear changes in these similarity matrices and in the spatial firing patterns of single neurons distributed across MEC (i.e., remapping events) (Figure 2B–F; Figure S2). These remapping events occurred without any change to environmental sensory cues or task demands. Importantly, remapping events did not reflect recording probe movement (Figures S1, S3). MEC cells often switched abruptly between stable spatial representations (i.e., maps)(Figure 2A), with cells returning repeatedly to one of several distinct maps within a single session, resulting in a checkerboard pattern of trial-by-trial similarity (Figure 2B–F; Figures S1, S2). In other sessions, spatial representations underwent a single transition between stable maps or transitioned between spatially stable and unstable coding regimes (Figure 2B, F; Figure S2). While the frequency and stability of remapping was thus heterogeneous across mice and sessions, in all cases remap events appeared to recruit co-recorded neurons all along the dorsal to ventral MEC axis (Figure 2B–F, right; Figures S1, S2).

Figure 2: Spatial representations remap in an invariant VR environment.

(A) Rasters (top) and tuning curves (bottom) for four example cells (40 example trials) from one session (black lines, average firing rate; shading, SEM; same as Fig. 1J). (B) Full rasters (left) and network-wide trial-by-trial similarity matrix (right) from the same example session (arrowheads, transitions in spatial coding; colors alternate light/dark for discernibility; colorbar, trial-by-trial spatial correlation)(n = 142 cells). (C, D) As in B, but for a different example cue poor (C; n = 227 cells) and cue rich (D; n = 139 cells) session. (E, F) As in (B-D), but for one double-track session split into cue poor (E) and cue rich (F) trial blocks (dashed lines indicate breaks between blocks; n = 55 cells). (B-F) Pink arrowheads indicate cue rich track; green, cue poor. (See also Figure S2, S3.)

Data-driven detection of network-wide remapping

To characterize neural remapping not related to changing visual cues, we focused our main analysis on sessions from mice who only experienced one of the two tracks (single-track sessions; n = 54 sessions in 17 mice). Double-track sessions recapitulated all major single-track findings, indicating that the general properties of network-wide remapping were consistent across different VR tasks (Figure S4). To group trials with similar network-wide spatial activity, we applied k-means clustering to each session (Figure 3A). The k-means model assigns a single cluster label to each trial and these cluster labels often visibly matched the checkerboard pattern in trial-by-trial similarity matrices (Figure 3B; Figures S2, S5). Despite making the strict assumption that each trial belongs to a single spatial map, a 2-cluster k-means model consistently approached the performance of a less constrained uncentered PCA model, which allows each trial to contain a blend of multiple spatial maps (Figure 3C). These results suggest that remapping events were well-approximated by discrete transitions between spatial maps.

Figure 3: Data-driven detection of discrete, synchronous remapping.

(A) Schematized k-means clustering strategy: raw spikes (top) are converted to normalized firing rate (center), k-means gives a low-dimensional estimate of the neural activity (bottom). (B) Trial-by-trial similarity (top, as in Figure 2B–F), distance score for population-wide neural activity by trial (middle; a score of 1 indicates that population activity is in the map 1 k-means cluster centroid; score = −1, map 2 centroid), and single neuron distance to k-means cluster centroid across trials, sorted by depth (bottom; colorbar, distance to cluster: gray, midway between maps; black, at or beyond map 1 centroid; white, map 2) for an example session (mouse 1c, session 0430_1, n = 227 cells). (C) 2-factor k-means versus PCA performance (uncentered R2) for all single-track sessions (left). Model performance for uncentered PCA (indigo), k-means (gold), and k-means on shuffled data (gray) for an example session (right)(mouse 1c, session 0501_1, n = 122 cells). (D) Selection criteria for 2-map sessions (gold shading; performance gap with PCA < 70% relative to shuffle, ). (E) Average trial-to-trial spatial similarity for trials from the same map versus across maps for all 2-map sessions (mean change in correlation ± SEM: −32.43 ± 2.57%; Wilcoxon signed-rank test, p = 3.79×10−6)(green, cue poor sessions; pink, cue rich). (F) Percent of all cells that were consistent remappers by location in MEC (4,108/4,984 cells). (C, D) n = 54 sessions, 17 mice; (E, F) n = 4,984 cells, 28 sessions, 13 mice. (See also Figure S2, S5.)

Using the relative performance of k-means to PCA, we identified 28 out of 54 single-track sessions that were adequately fit by a 2-cluster k-means model (Figure 3D, n = 23 cue rich sessions, 5 cue poor sessions; 4,984 cells; 13 mice). In many of these sessions, neural activity repeatedly returned to the same set of maps (13/28 sessions exhibited three or more remap events)(Figure S3). Trials within a given map were more similar to each other than to trials from the other map (p = 3.79×10−6)(Figure 3E). We focused subsequent analysis on these 28 “2-map sessions,” as they were the simplest and most common case. The remaining 26 sessions showed heterogeneous remapping that was not well-captured by this two factor model (Figures 2, S1, S2, S5).

How do these network-wide events recruit single neurons? In 2-map sessions, the majority of cells throughout MEC (82.4%) changed their firing properties in precise agreement with population-derived remapping events (i.e., consistent remappers, average distance to cluster centroid < 1)(Figure 3B bottom, Figure 3F)(STAR Methods). There was some regional variability in the proportion of these consistent remappers, but they always comprised the bulk of the population (Figure 3F). Thus, most MEC neurons remapped abruptly and synchronously.

Entorhinal neurons reversibly and heterogeneously remap

We next asked how remapping influences the coding properties of single MEC neurons. In 2-map sessions, many single neurons exhibited distinct spiking patterns and spatial tuning curves within each k-means identified map (Figure 4A; see Figure S5 for >2-map sessions). To quantify these tuning changes, we calculated the change in peak firing rate (i.e., rate remapping) and the spatial dissimilarity (i.e., global remapping) across the two maps for each cell (Knierim et al., 1998; Muller and Kubie, 1987; O’Keefe and Conway, 1978)(STAR Methods). Across all cells (spatial and non-spatial), we observed diverse remapping responses, with no obvious clustering in type of remapping (Figure 4B, C). Many cells showed some rate remapping (median absolute change in peak firing rate: 1.28-fold), but largely retained their spatial tuning across maps (median spatial dissimilarity: 0.031)(Figure 4A–C). Nevertheless, the dissimilarity distribution was heavy-tailed (Figure 4B), indicating a subpopulation of cells with large changes in spatial tuning across maps (5% of cells had dissimilarity > 0.223)(e.g. Figure 4A, C, cool colors).

Figure 4: Reversible and heterogeneous remapping of MEC representations.

(A) Single-neuron spiking (top) and tuning curves (middle) for example cells from a cue poor, 2-map session, colored/divided by k-means cluster labels (black, map 1; color, map 2), versus averaged over the session (bottom)(solid line, average firing rate; shading, SEM; color scheme denotes cell identity and is preserved in C, D). (B) Absolute fold change in firing rate versus spatial dissimilarity across maps for neural tuning curves from all 2-map sessions (n = 4,982 cells)(points, single cells; histograms, density distribution for each variable; red dashes, median; gold dashes, 95th percentile). (C) Same as (B), but for the example session. (D) Spatial information for single neurons in map 1 versus map 2 for the example session. (E) Percent of MEC neurons from all 2-map sessions that were spatial in one map (gray), both maps (black), or neither map (white)(mean absolute change in spatial information ± SEM: 57.3 ± 1.0%). (F) Distance to k-means cluster centroid by highest average spatial information across maps (black dashes, threshold for consistent remappers). Example session in (A, C, D) is from mouse 1c, session 0430_1 (n = 227 cells). (E, F) n = 4,984 cells from 28 sessions in 13 mice. (See also Figure S5.)

Cells also exhibited changes in spatial information across the two maps (e.g. Figure 4D), resulting in many cells (13 ± 1%) that were significantly spatial in one map, but not the other (p < 0.05)(Figure 4E). Cells with high spatial information in at least one map were likely to remap in coordination with the rest of the population (1,449 out of 1,545 spatial cells)(Figure 4F). Thus remapping reflected changes in the spatial coding properties of MEC neurons and recruited most spatially informative cells.

Navigationally relevant cell types differentially remap

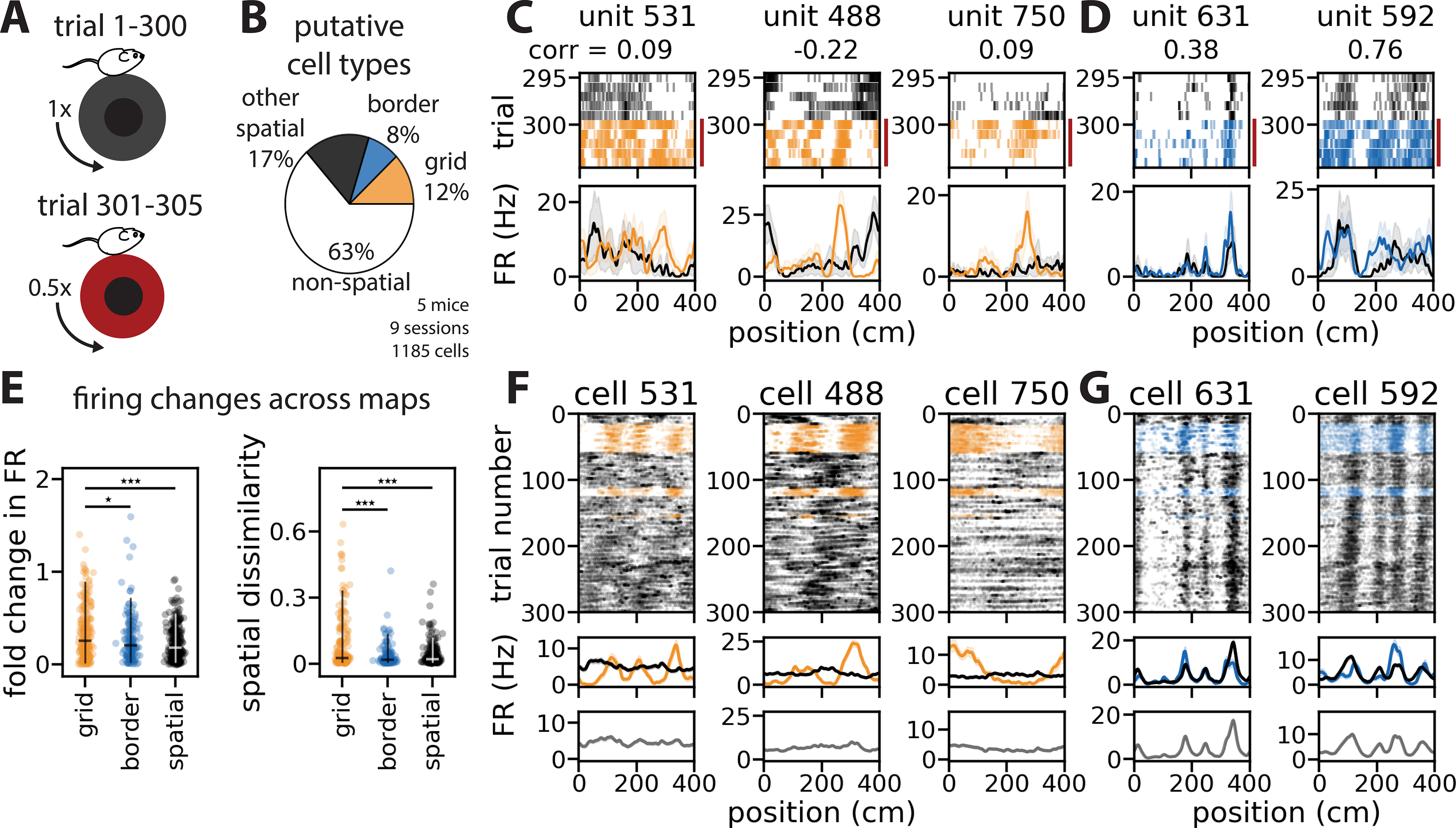

MEC contains functional cell types that exhibit distinct spatial coding statistics (e.g. grid, border, object vector, and head direction cells)(Hafting et al., 2005; Høydal et al., 2019; Kropff et al., 2015; Sargolini et al., 2006; Savelli et al., 2008; Solstad et al., 2008), raising the question of how remapping recruits these cell types. To investigate this question, we leveraged a previous finding that grid cells are responsive to mismatches between visual and motor feedback in 1D VR, while border cells remain stable (Campbell et al., 2018). In a subset of 2-map sessions (n = 9 cue rich sessions), we appended 5 trials in which the mouse had to run twice as far to traverse the same visual landscape (i.e., gain mismatch)(Figure 5A). We classified spatially stable cells that were highly responsive to gain mismatch as putative grid cells (224 out of 1,379 cells) and those that were unaffected by gain mismatch as putative border cells (152 cells)(Figure 5B–D; Figure S6)(referred to here as grid and border cells; STAR Methods). Using this metric, we found that the proportions of each cell type were similar to what is seen in freely moving experiments (Campbell et al., 2018; Mallory et al., 2018; Miao et al., 2015)(Figure 5B, STAR Methods). Grid cells often had spatially periodic firing fields of various spatial frequencies and border cells often had periodic firing fields that qualitatively reflected the tower spacing (Figure 5G; Figure S6). In keeping with our analysis of all 2-map sessions, most grid, border, and other spatial cells were consistent remappers (Figure S6). Grid cells exhibited more extensive remapping than border cells or other spatial cells, demonstrating more global remapping (p = 1.97×10−5)(Figure 5E, right) and more rate remapping than the other cell types (p = 4.6×10−4)(Figure 5E, left). These results indicate that remapping events may differentially impact specific functional cell types.

Figure 5: Navigationally relevant cell types differentially participate in remapping.

(A) Schematized trial structure: 300 trials with a fixed relationship between visual and motor feedback (top, “normal trials”), 5 trials requiring the animal to run twice as far to travel the same visual distance (bottom, “gain change trials”). (B) Distribution of putative cell types (session mean ± SEM: grid cells, 18.26 ± 3.81%; border cells, 13.23 ± 2.07%; spatially stable cells, 24.57 ± 3.12%). (C) Rasters (top) and tuning curves (bottom) from example grid cells for adjacent normal (black) and gain change trials (red bar, colored spikes)(numbers indicate cell identity and correlation of tuning curves across gain change; solid line, average firing rate; shading, SEM). (D) Same as (C), but for example border cells. (E) Absolute fold change in firing rate (left)(median change, 95th percentile: grid cells = 25.5%, 89.2%; border cells = 20.6%, 71.6%; other spatial cells = 18.5%, 55.5%; Kruskal-Wallis H-test, p = 4.6×10−4; Wilcoxon rank-sum test: border vs. grid cells, p = 0.045; other spatial vs. grid cells, p = 8.59×10−5; other spatial vs. border cells, p = 0.217) and cosine dissimilarity (right)(median, 95th percentile: grid cells = 0.027, 0.334; border cells = 0.019, 0.137; spatial cells = 0.022, 0.119; Kruskal-Wallis H-test, p = 1.97×10−5; Wilcoxon rank-sum test: border vs. grid cells, p = 4.13×10−5; other spatial vs. grid cells, p = 1×10−4; other spatial vs. border cells, p = 0.227) across maps for all grid, border, and other spatial cell tuning curves (***, p < 0.001; *, p < 0.05). (F) Rasters (top) and tuning curves (middle) for example grid cells, colored by k-means cluster labels (color, map 1; black, map 2), versus averaged over the session (bottom)(solid line, trial-averaged firing rate; shading, SEM). (G) As in (F), but for example border cells. All example cells are from mouse 9b, session 1207_1 (n = 61 grid cells, 9 border cells, 165 total cells). (B, E) n = 224 grid cells, 152 border cells, 308 other spatial cells out of 1,1185 total excitatory cells from 9 cue rich sessions in 5 mice. Orange color denotes grid cells, blue denotes border cells throughout. (See also Figure S6.)

Positional information is conserved at a population level across distinct spatial maps

MEC neurons project to multiple brain areas involved in spatial information processing (Kerr et al., 2007). To consider how downstream brain areas might make use of positional information encoded in MEC in spite of network-level remapping, we investigated whether linear mechanisms can predict position from MEC neural activity by fitting circular-linear regression models (“decoders”) (Figure 6A–C; see Figure S5 for sessions with >2 maps)(STAR Methods). We found that performance was comparable between models trained and tested on trials from a single map, and models trained and tested on trials from both maps (p = 0.65)(Figure 6D). While remapping could disrupt the performance of a simple linear decoder if each neuron’s spatial tuning was sufficiently different across the maps, this finding may arise because only ~5% of cells showed large changes in spatial tuning across maps (Figure 4B). To simulate an alternative case where each neuron’s spatial tuning changed dramatically across maps, we produced a shuffled “synthetic map” for each of the two maps in which spatial coding was greatly altered (STAR Methods). Linear decoders trained jointly on data from each true map and its synthetic map performed substantially worse than decoders trained on data from the pair of true maps (p < 0.05)(Figure 6D).

Figure 6: Positional information is conserved at a population level across maps.

(A-C) Schematic: decoder training and testing strategy (yellow, map 1; white, map 2). (A) Population-wide spiking activity (hash marks) was divided into k-means clusters; 10% of data was held out for testing (gray). (B) Decoders were trained on data from either map 1 (top), map 2 (middle), or both maps (bottom). (C) Each model was used to predict position on held-out data from either map 1 (top), map 2 (middle), or both maps (bottom). Models trained only on data from one map were also used to predict position using only data from the other map (diagonal arrows from B to C; labels indicate test map→train map). (D) Decoder performance for models trained and tested on data from the same map (map 1, map 2), from both maps, or from each map and its shuffle for all 2-map sessions (score = 0, chance; score = 1, perfect prediction)(mean performance ± SEM: train/test map 1 = 0.75 ± 0.04; train/test map 2 = 0.78 ± 0.04; train/test both maps = 0.74 ± 0.04; Kruskal-Wallis H-test, p = 0.65; mean ± SEM decrease from train/test both to shuffle, 60.84% ± 1.5%). (E, F) Decoder performance within versus across maps for all sessions (grey bars, interquartile range). (G) Decoder performance for models trained on one map and tested on the other, relative to shuffle (0%) and within map performance (100%)(mean relative performance ± SEM, 61.81% ± 4.01%; Wilcoxon signed-rank test, p = 7.55×10−11; n = 56 model pairs). (D-F) Points indicate individual sessions; green, cue poor; pink, cue rich; n = 4,984 cells, 28 sessions, 13 mice.

Models also performed poorly when trained on data from one map and tested on data from the other map (p = 7.55×10−11)(Figure 6E–F), though these decoders still largely outperformed a shuffle control (trained on one map, tested on a the shuffled other map; 3/56 model pairs were no better than shuffle, p ≥ 0.05)(Figure 6G; STAR Methods). Therefore the two maps are different—decoders specialized to a single map struggled to generalize to the other map (Figure 6E–G)—yet, given data from both maps, a single linear decoder performed as well as separate, specialized decoders (Figure 6D). Altogether, these results indicate that, in principle, downstream brain areas can exploit positional information encoded in MEC in the presence of remapping.

Neural activity transitions between geometrically aligned ring attractor manifolds

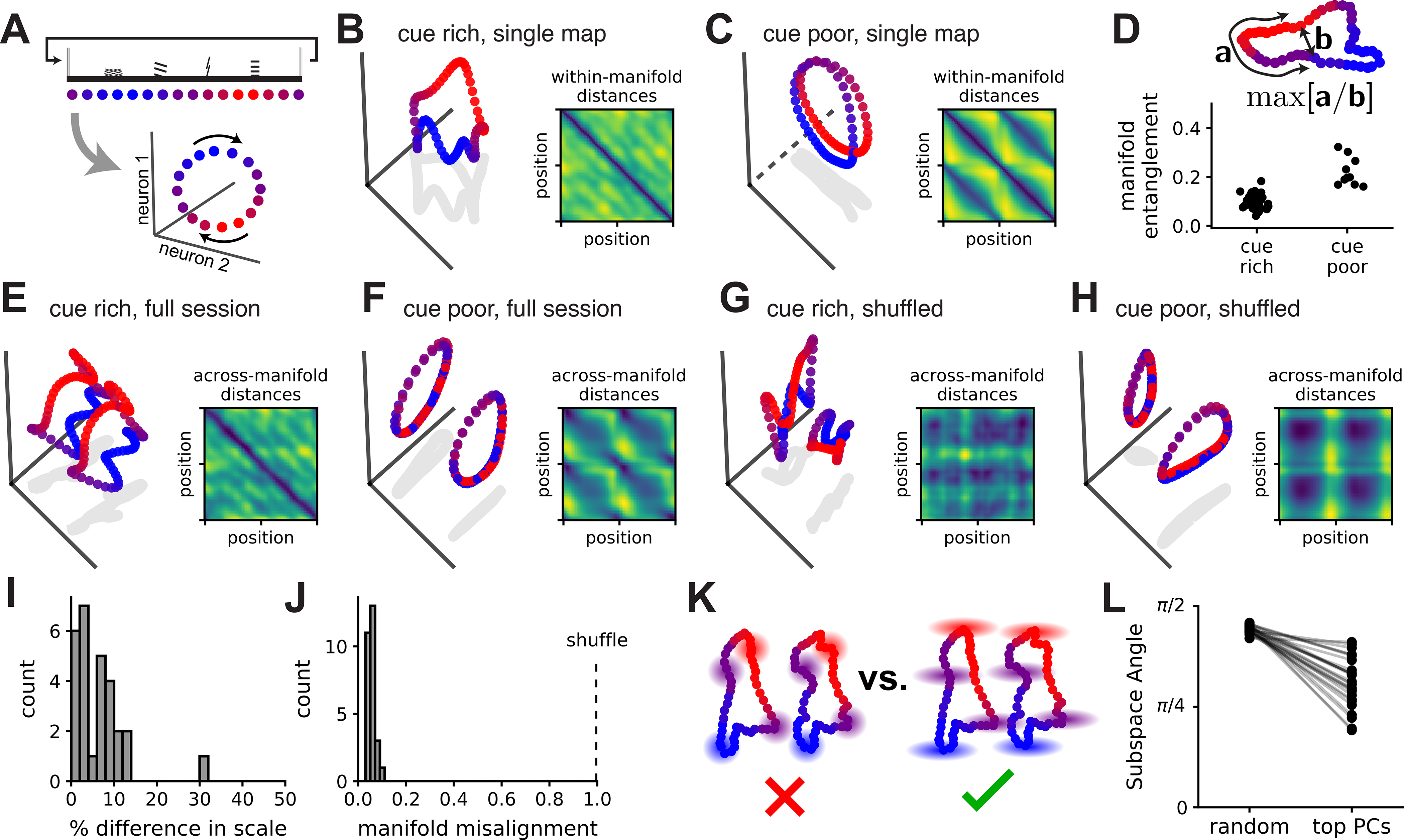

MEC representations of space thus can be both flexible (e.g. spontaneous remapping) and reliable (e.g. consistent decoding performance). To reconcile these two aspects of the circuit, we characterized the geometry of position coding in N-dimensional firing rate space (where N denotes the number of simultaneously recorded neurons). For the continuous 1D VR tracks used in this study, spatially driven network activity should follow a 1D ring manifold as the animal moves through space, reflecting the ring-shaped environment (Figure 7A). Attractor models of spatial navigation predict that circuit dynamics are locally stable around these ring manifolds, enabling persistent internal representations of position (Samsonovich and McNaughton, 1997). Each k-means cluster centroid provides an empirical estimate of this attractor manifold and, indeed, the low-dimensional linear embedding of each centroid had a qualitative ring structure (Figure 7B–C). Of note, we derived these ring-shaped manifolds from all co-recorded MEC neurons, making them distinct from the ring structures that arise in attractor models of co-modular grid cells (Burak and Fiete, 2009; Fuhs and Touretzky, 2006; Guanella et al., 2007; Spalla et al., 2019). Manifolds derived from cue poor environments were tightly wound around themselves, as quantified by an entanglement metric (Figure 7D; n = 10 manifolds from 5 cue poor sessions, 46 manifolds from 23 cue rich sessions)(see STAR Methods), indicating that there may be limited discriminability between the first and second halves of the track in this environment. These results suggest that the number of landmark cues alters the geometry of the spatial map without altering its topology as a 1D ring.

Figure 7: Neural activity transitions between geometrically aligned ring attractor manifolds.

(A) Schematic: the 1D environment (top) should produce a circular 1D trajectory (i.e., ring manifold) in neural activity space (bottom)(color scheme indicates position and is preserved throughout). (B) PCA projection of a single map (k-means centroid) from a cue rich session. (Inset) Pairwise distances in neural activity for all points (position bins) in the manifold (blue, minimum value; yellow, maximum). (C) As in (B), but for a cue poor session. The linear projection uses two principal components and a third orthogonal axis (dashed line) that maximally distinguishes between the first and second half of the track. (D)(Top) Schematic of the entanglement measure, which is the maximum ratio of distance along the manifold (“a”) to extrinsic distance (“b”)(STAR Methods). (Bottom) Manifold entanglement in cue rich and cue poor environments. (E) PCA projection of two manifolds from a 2-map cue rich session. (Inset) Across-manifold distances in neural firing rates for every pair of points (colors as in B, C insets). (F) As in (E), but for a cue poor session. (G, H) As in (E, F), respectively, but after randomly rotating each manifold. (I) Percent difference in manifold size for maps from the same session. (J) Normalized manifold misalignment scores (0, perfectly aligned; 1, shuffle). (K) Schematics: variability around the manifolds is oriented towards the other manifold (right), not isotropically distributed (left). (L) (Right) Angles between the remapping dimension and the top PC subspace for the model residuals (20% of total noise variance), (left) null distribution (random linear subspaces, n = 1,000 samples). All sessions displayed smaller angles than their shuffle control (Wilcoxon rank-sum test, p < 1×10−6). Example in (B, E, G) is session 1005_2 from mouse 6b (n = 149 cells) and in (C, F, H) is session 0502_1 from mouse 1c (n = 227 cells). (D, I, J, L) n = 46 manifolds from 23 cue rich sessions in 10 mice, 10 manifolds from 5 cue poor sessions in 3 mice.

We next used PCA to visualize the two manifolds of each 2-map session in the same low-dimensional space. In both cue rich and cue poor environments, the manifolds appeared geometrically aligned such that position coding was translated along a single dimension in neural activity space (Figure 7E–F), relative to a shuffle control (Figure 7G–H). Using Procrustes shape analysis (STAR Methods), we found that the two manifolds required only modest rescaling (23/28 sessions ≤ 10% difference in scale)(Figure 7I) and rotation (all were within 7% of the optimal rotation)(Figure 7J) to align with one another. This alignment could arise from preserved spatial tuning in some neurons and indicates that single neuron coding changes (Figure 4A–B) did not result in net firing rate differences across maps. Though tuning is correlated within grid cell modules (Hafting et al., 2005), manifold alignment was not dependent on putative grid cells (Figure S6). Thus, remapping largely corresponded to a translation in neural activity space between geometrically aligned ring manifolds.

As the k-means-identified ring manifolds show only the average neural activity within each map, we next asked how single-trial variability around these manifolds was structured. For each session, we applied PCA to the residuals of the k-means model and kept enough components to capture at least 20% of variance in these residuals. These dimensions contain the most “noise” or variability that isn’t captured by the k-means model. We then computed the angle between the PC subspace and the dimension separating the two manifolds (the “remapping dimension”); this was smaller than expected under a null distribution of random linear subspaces, indicating that unexplained neural variability was preferentially oriented in the remapping dimension (Figure 7K–L). The scale of this variability was limited so that the two manifolds were often well-separated (the maps can be considered two separate ring manifolds, rather than a single hollow cylinder). This result suggests that variability in network activity could predispose the network to remap (or jump from one ring manifold to the other), with attractor dynamics locally stabilizing the network activity within each map (Video S3).

A simple model explains the alignment of spatial attractor manifolds

Is the geometric alignment of spatial manifolds (Figure 7E–F) expected under existing theories of navigational circuit dynamics? To address this question, we constructed a bistable ring attractor model by linearly combining the weights of a winner-take-all network and a ring attractor network (Figure S7; STAR Methods). This approach is similar to previous models that have linearly combined decorrelated connectivity matrices to support multiple attractor structures (Romani and Tsodyks, 2010; Roudi and Treves, 2008; Samsonovich and McNaughton, 1997; Stringer et al., 2004). We could induce remapping in this model with perturbations that pushed the neural state into the other map’s basin of attraction (Monasson and Rosay, 2014, 2015). This model was sufficient to reproduce multiple ring manifolds, but it failed to recapitulate the experimentally observed geometrical manifold alignment (Figure S7). This finding demonstrates that geometrically aligned ring manifolds are not an obvious consequence of the attractor model framework.

A minor modification to this model—adding a sub-population of “shared neurons” whose spatial tuning was preserved across maps—was sufficient to produce a pair of bistable ring attractors that were highly aligned (Figure S7). This subpopulation is analogous to the experimentally observed neurons with preserved spatial tuning across maps (Figure 4A–B). The modified model had two additional noteworthy properties. First, perturbing the neural activity in the direction of the other map induced a remap but preserved the positional representation (Figure S7). Second, remapping was well-described by a translation along a single dimension; thus any spatial decoder that is insensitive to this dimension will be robust to remapping. For a linear decoder (as in Figure 6), this means the remapping dimension should lie in the null space of the decoder weights (Kaufman et al., 2014; Rule et al., 2020). Together, these results suggest that neurons with shared coding across maps may result in geometrically aligned ring manifolds, which in turn enables simple mechanisms for remapping and position decoding.

Remapping events and neural variability correlate with slower running speeds

As the task and environment in our experiments did not change within a given session, we next examined whether single-trial variability and network-wide remapping correlated with aspects of the animal’s behavior. We focused on running speed, as speed is known to modulate spatial representations in MEC (Bant et al., 2020; Hardcastle et al., 2017). We first asked whether the animal’s running speed was different on “remap trials” (the two trials book-ending each transition from one map to the other), compared to the intervening “stable blocks” (the block of trials at least two trials away from a remap event, which all reside in the same map). We restricted our analysis to 2-map sessions that contained at least three remap events and to stable blocks of at least five trials (n = 13 sessions in 7 mice; see Figure S5 for sessions with >2 maps)(STAR Methods). Across most of these sessions, the animal’s average running speed on remap trials was slower compared to its average running speed in the preceding stable block (difference in running speed: 9.8 ± 2.2%; p = 1.15×10−4)(Figure 8A, B). Running speed largely did not vary systematically across the two maps (Figure S8)(STAR Methods).

Figure 8: Remapping events and neural variability correlate with slower running speeds.

(A) Average running speed in remap trials versus stable blocks for an example session (points, pairs of remap trials/stable blocks; dashed line, unity; n = 13 pairs) (B) As in (A), but for all 2-map sessions (remap trial speeds were slower in 9 sessions, equal in 2 sessions, faster in 2 sessions; Wilcoxon signed-rank test, p = 1.15×10−4)(points, session average; green, cue poor; pink, cue rich; grey error bars, SEM; dashed line, unity; n = 127 pairs from 13 sessions, 7 mice). (C) (Top and bottom panels) Running speed and distance to the midpoint between k-means clusters for trials from two example stable blocks (black trace, running speed; black shading, map 1; white, map 2; gray, between maps). (Middle panels) Zoom in on the first (left) and last (right) example trials, showing neural activity approaching the midpoint (arrowheads)(across sessions, stable bin distance interquartile range: 0.763 to 1.338). (D) Average distance to the midpoint versus binned running speed for an example session (distance score = 1, activity is in a cluster centroid; 0, equidistant from each map)(black line, average; gray shading, SEM; distance to midpoint is z-scored). (E) as in (D), but for all 2-map sessions; speed is normalized within each session (ordinary least squares regression, R2 = 0.778, p < 1×10−6; n = 10 speed bins per session, 28 sessions, 13 mice, 4,984 cells). (F) Schematic model showing how running speed might encourage neural activity (ball, activity; arrow, trajectory in state space) to transition between manifolds (top) by shaping the energy landscape (black line)(middle, slow speeds; bottom, fast; dashed line, midpoint between clusters). Example session in (A, C, D) is from mouse 1c, session 0430_1 (n = 227 cells). (See also Figure S5, S8.)

We next investigated the moment-by-moment correlation between neural variability and running speed by binning neural activity and speed into 5 cm position bins within each trial. Given the random reward structure, the running speed distribution for each track position was comparable (Figure S8). For each position bin, we calculated how close the neural activity was to the midpoint between manifolds, where activity is most likely to switch between maps (STAR Methods). As expected, neural activity was closer to the midpoint in remap trials compared to stable blocks (p < 1×10−6)(Figure S8). However, we also observed instances where the neural activity approached the midpoint within stable periods, indicating that neural variability does not always provoke a remap event (Figure 8C, arrowheads). Across all position bins, slower running speeds were correlated with a reduced neural distance to the midpoint (p < 1×10−6)(Figure 8D, E). Altogether, these results suggest that neural variability increases in the direction of the other map at slow running speeds, likely increasing the probability of a remap event. If we model the two spatial maps as bistable ring attractors (as discussed in the previous section), then a decrease in running speed could correspond to a reduction in the energetic barrier that separates the two basins of attraction (Figure 8F) or to another change that encourages neural activity to flip to the other attractor. Although the neural distance to the midpoint between clusters was variable across different track positions and appeared to dip slightly in the reward zone, this variability was no greater than expected by chance (p > 0.05)(Figure S8).

Discussion

Here, we report that MEC representations are capable of remapping in VR environments without any changes to sensory cues (Diehl et al., 2017; Fyhn et al., 2007; Marozzi et al., 2015; Solstad et al., 2008) or task demands (Boccara et al., 2019; Butler et al., 2019; Keene et al., 2016). Remapping events were coordinated across the MEC population, recruited many different cell types, and often comprised discrete and reversible switches between spatial maps that persisted over long (roughly 1–30 min) timescales. Remapping comprised diverse coding changes across neurons—including up to three-fold variation in peak firing rate and 50% reconfiguration of spatial coding—and appeared to differentially impact different functional cell types. While previous reports have found that faster running speed is associated with sharpened spatial tuning in single neurons (Bant et al., 2020; Hardcastle et al., 2017), we found that changes in running speed can also correspond to remapping events that produce large and persistent shifts in MEC coding. Finally, neural activity remapped by transitioning between geometrically aligned manifolds, enabling simple, linear decoding strategies. Together, our results suggest that a single population of MEC neurons can rapidly switch between multiple stable representations of a single environment.

While neural activity often alternated between two stable spatial maps, we also observed sessions with more than two stable maps and sessions where activity remapped between spatially stable and unstable coding regimes. We largely focused on single-track sessions with two maps (“2-map sessions”), which allowed us to thoroughly characterize this common form of remapping and provided insight into how neural circuits might store and interpret multiple neural representations more generally. We found that a single decoder could accurately infer position across remapping events, indicating that MEC spatial representations contain both changing contextual information and stable positional information that is segregated into orthogonal linear subspaces (Kaufman et al., 2014; Rule et al., 2020). Our finding that remapping comprised a translation in neural activity space provides a geometric interpretation for this possibility. This geometric arrangement could allow downstream neurons that are insensitive to the remapping dimension to extract consistent positional information regardless of the map, while neurons sensitive to this dimension could extract contextual information.

We further showed that a bistable ring attractor model network (Battaglia and Treves, 1998; Romani and Tsodyks, 2010; Samsonovich and McNaughton, 1997) does not produce aligned rings, but that adding a sub-population of “shared neurons” was sufficient to recapitulate the experimentally observed alignment. Previous work has studied transitions between continuous attractors in the context of hippocampal and co-modular grid cell attractor networks (Monasson and Rosay, 2014, 2015; Spalla et al., 2019). Our modeling results differ by (1) considering the full MEC circuit (not only grid cells) and (2) interpreting each continuous attractor as representing the same external environment (not different environments). Overall, these results illustrate how our experimental findings might be interpreted and integrated into ongoing theoretical research on MEC and hippocampal circuits.

One feature of remapping between aligned manifolds is that it allows the network to preserve unchanging information while rapidly and synchronously updating changing contextual information. Our findings complement previous studies in the hippocampus (Rubin et al., 2015; Taxidis et al., 2020; Ziv et al., 2013) and MEC (Diehl et al., 2019), which found context delineation in a single environment over longer timescales (hours to days) via representational drift that is asynchronous across neurons. This type of remapping over longer timescales may relate to learning dynamics (Taxidis et al., 2020), grouping of temporally adjacent memories (Rubin et al., 2015), and building an episodic timeline for repeated interactions with a single environment (Diehl et al., 2019; Sheintuch et al., 2020; Ziv et al., 2013). The rapid remapping (under 1 min) that we observed across MEC may interact with these slower processes of representational drift to form a rich internal map of temporal and contextual episodes.

Of note, it is possible that the impoverished sensory experience of our virtual environment promoted remapping, perhaps leading the animal to infer multiple different contexts in the same VR track (Ravassard et al., 2013; Sanders et al., 2020). However, complete remapping of hippocampal spatial representations of a single environment was recently observed in freely behaving animals, suggesting that similar remapping can occur under more naturalistic settings (Sheintuch et al., 2020). It is also well-established that MEC neurons can remap in freely behaving animals in response to changing sensory features (Diehl et al., 2017; Fyhn et al., 2007; Marozzi et al., 2015; Solstad et al., 2008) or task demands (Boccara et al., 2019; Butler et al., 2019; Keene et al., 2016). Our findings may represent a related form of MEC remapping that is driven by internal, rather than external factors, which likely interacts with previously established forms of remapping.

Finally, our finding that remapping events correlated with slower running speeds raises specific hypotheses for how behavioral state may drive widespread changes in MEC coding. Running speed can indicate task engagement—e.g., in our task faster speeds lead the animal to “find rewards” more quickly—or of arousal more broadly (Bennett et al., 2013; Niell and Stryker, 2010)(but see Vinck et al., 2015). Behavioral state changes related to running speed and arousal can alter neural processing in complex ways; for example targeted neuromodulation of GABAergic interneurons modulates sensory neuron responses (Ferguson and Cardin, 2020). It is possible that similar or analogous biophysical mechanisms act on MEC neurons and it will be of particular interest for future studies to dissect the mechanisms by which MEC neurons are commonly and differentially impacted by running speed. Moreover, detailed consideration and tracking of multiple behavioral state variables (e.g. pupil dilation and whisking) will be needed to identify which specific variables control remapping in the navigation circuitry.

Altogether, we find that MEC has the capacity to remap in a rapid and reversible fashion, which could support a role for this circuit in dividing the unbroken stream of sensory features encountered during navigation into discrete contextual episodes. Further, our findings align with a larger body of emerging studies demonstrating that cortical activity is highly responsive to behavioral state changes (Jennings et al., 2019; Salay et al., 2018; Stringer et al., 2019). Our results suggest that these behavioral state changes may drive rapid, large-scale reconfigurations of internal representations of the external world in higher-order cortex.

STAR Methods

Resource Availability

Lead Contact:

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Lisa M. Giocomo (giocomo@stanford.edu).

Materials Availability:

This study did not generate new unique reagents.

Data and Code Availability

All data required to reproduce the paper figures have been deposited at Mendeley and are publicly available as of the date of publication. DOIs are listed in the key resources table.

All original code has been deposited at Zenodo and is publicly available as of the date of publication. DOIs are listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Deposited data | ||

| Raw and analyzed data | This paper | https://data.mendeley.com/10.17632/hntn6m2pgk.1 |

| Experimental models: Organisms/strains | ||

| Mouse: C57BL/6 | The Jackson Laboratories | 000664 |

| Software and algorithms | ||

| SciPy ecosystem of open-source Python libraries (numpy, matplotlib, scipy, etc.) | Jones et al., 2001; Hunter, 2007; Harris et al., 2020 | https://www.scipy.org/ |

| scikit-learn | Pedregosa et al., 2011 | https://www.scikit-learn.org/ |

| MATLAB | MathWorks | https://www.mathworks.com/products/matlab.html |

| Kilosort2 | https://github.com/MouseLand/Kilosort2 | |

| Other | ||

| Phase 3B Neuropixels 1.0 silicon probes | Jun et al., 2017 | https://www.neuropixels.org/probe |

| Original code | This paper | https://doi.org/10.5281/zenodo.5062491 |

Experimental Model and Subject Details

Mice

All techniques were approved by the Institutional Animal Care and Use Committee at Stanford University School of Medicine. Recordings were made from 17 C57BL/6 mice aged 4 weeks to 3.5 months at the time of first surgery (15.7–35 g). All mice were female except litters 5 and 9 (6 mice), which were male. Mice were group housed with same-sex littermates, and in one case with the dam (2a, b, c with 3a), unless separation was required due to water restriction, aggression, or disturbance of prep site. Mice were housed in transparent cages on a 12-h light-dark cycle and experiments were performed during the light phase.

Method Details

Training and handling

Mice were handled at least every 2 days following headbar implantation and given an in-cage running wheel. Starting 1 day after headbar implantation, same-sex mice were placed daily in a large (100×100cm), communal environment with enrichment objects including a running wheel, Lego tower, textured floor tape, and crushed chocolate cheerios for between 15 mins and 2 hours. Mice were monitored for aggression and separated as needed. Mice were given free access to water until 3 days after headbar implantation, after which they were water restricted to 1mL of water per day and weighed daily to ensure a body weight of >80% of their starting weight.

After >1 day of water restriction, mice were acclimated to head fixation and trained to drink water from a custom lickport for 10–20 mins over 2 days. Mice were then trained to run on the virtual random forage task (described below) starting with a reward probability of 0.1/cm (essentially one reward per 50 cm), for gradually decreasing reward probability and gradually increasing session length as behavior improved. Mice were trained on the exact track(s) that they were recorded in, either cue poor (litters 1, 2, and 3), cue rich (litters 6, 7, 9 and 10), or both (litters 4 and 5). Mice trained on both tracks were exposed to each track in a counterbalanced fashion, initially alternating tracks over days and ultimately alternating the order of presentation as mice improved sufficiently to run 2 or more sessions per training day. Training continued at least until mice ran >300 trials in 2 hours and demonstrated proficient licking from the lickport in the reward zone; training was sometimes extended in order to stagger recording periods (7 days to 7 weeks; mean ± SEM: 23 ± 3 days; 7 mice never learned the task).

In vivo survival surgeries

For all surgeries, anesthesia was induced with isoflurane (4%; maintained at 0.5–1.5%) followed by injection of buprenorphrine (0.05–0.1 mg/kg). Animals were injected with baytril (10 mg/kg) and rimadyl (5 mg/kg) immediately following each surgery and for 3 days afterwards. In the first surgery, animals were implanted with a custom-built metal headbar containing two holes for head fixation, as well as with a jewelers’ screw with an attached gold pin, to be used as a ground. The craniotomy sites were exposed and marked during headbar implantation and the surface of the skull was coated in metabond. After completion of training, a second surgery was performed to make bilateral craniotomies (~200μm diameter) at 3.7–3.95mm posterior and 3.3–3.4mm lateral to bregma. A small plastic well was implanted around each craniotomy and affixed with metabond. Craniotomy sites were covered with a drop of sterile saline and with silicone elastomer (Kwik-sil, WPI) in between surgery and recordings.

In vivo electrophysiological data collection

All recordings were performed at least 16-h after craniotomy surgery. Mice were head-fixed on the VR recording rig. Craniotomy site was exposed and rinsed with saline—occasionally dura was re-nicked or debris removed using a syringe tip. Recordings were performed using Phase 3B Neuropixels 1.0 silicon probes (Jun et al., 2017) with 384 active recording sites (out of 960 total) along the bottom ~4 mm of a ~10 mm shank (70 μm wide shank diameter, 24 μm thick, 25 μm electrode spacing), and reference and ground shorted together. The probe was positioned over the craniotomy site at 8–14° from vertical and targeted to ~50–300 μm anterior of the transverse sinus using a micromanipulator. On consecutive recording days, probes were targeted medial or lateral of previous recording sites as permitted by the craniotomy and/or probe angle was varied to ensure that we were recording from a new population of cells each day (Figure S1). The reference electrode was then connected to a gold ground pin implanted in the skull. The probe was advanced slowly (~4–10 μm/s) into the brain until it encountered resistance or until activity quieted on channels near the probe tip, then retracted 100–500μm and allowed to sit for at least 30 mins prior to recording. While the probe was implanted, the craniotomy site was covered with sterile saline and silicon oil. Signals were sampled at 30 kHz with gain = 200 (7.63 μV/bit at 10 bit resolution) in the action potential band, digitized with a CMOS amplifier and multiplexer built into the electrode array, then written to disk using SpikeGLX software.

Virtual reality (VR) environment

The VR recording set-up was nearly identical to the set-up in Campbell et al. (Campbell et al., 2018). Head-fixed mice ran on a 15.2-cm-diameter foam roller (ethylene vinyl acetate) constrained to rotate about one axis. The cylinder’s rotation was measured by a high-resolution quadrature encoder (Yumo, 1024 P/R) and processed by a microcontroller (Arduino UNO). The virtual environment was generated using commercial software (Unity 3D) and updated according to the motion signal. VR position traces were synchronized to recording traces on each frame of the virtual scene. The virtual scene was displayed on three 24-inch monitors surrounding the mouse. The gain of the linear transformation from ball rotation to translation along the virtual track was calibrated so that the virtual track was 4 m long. At the end of the track, the mouse was teleported seamlessly back to the start to begin the next trial, such that the track was seemingly infinite (all visual cues were repeated and visible into the distance as the mouse approached the track end).

Cue rich tracks consisted of 5 towers of different heights, widths, and patterns (black and white, neutral luminance), placed at 80 cm intervals starting at 0 cm and a black and white checkerboard on the floor for optic flow (see schematic, Figure 1B, top, and screenshot, Figure S1A). Cue poor tracks contained 2 towers of different patterns placed at 0 and 200 cm and a white to black horizontal sinusoidal pattern on the floor (see schematic, Figure 1B, bottom, and screenshot, Figure S1B). Both tracks had uniform gray walls and sky beyond the towers. For mice that experienced a single track, recording sessions consisted of 57–450 trials (mean ± SEM: 328 ± 14 trials). For mice that experienced both tracks, each track was presented in a block of 75–100 trials with ~1 min of darkness in between tracks (Figure S8). Blocks alternated between cue rich and poor—each track was presented twice (barring rare cases when the mouse failed to complete the session) and which track was presented first was alternated on each day.

In a subset of cue rich, single-track sessions (N = 21 sessions in 5 mice) we appended 5 gain manipulation trials to the end of each session in which the mouse had to run twice as far to traverse the same distance along the virtual track, as in Campbell et al. (Campbell et al., 2018).

Random foraging task

In both cue rich and cue poor tracks, visually marked reward zones appeared at a probability of 0.01–0.001 per cm, titrated to mouse performance, within the middle 300 cm of the track and at least 50 cm apart. Reward zones were 50 cm long and track-width, were patterned with a black and white diamond checkerboard, and hovered slightly above the floor (Figure S1). Upon entering the reward zone, animals could request water by licking and breaking an infrared beam at the mouth of the lickport; if not requested, water was dispensed automatically at the center of the zone. For mice 1c, 4a, and 4b for some recording sessions there were between 1–5 probe trials every 10 trials in which water was only dispensed if requested in the reward zone (no automatic dispensation). Upon water dispensation (or next trial start for missed probe trials), the current reward zone disappeared and the next zone became visible. Water rewards (~1.5 μL) were delivered using a solenoid (Cole-Parmer) triggered from the virtual environment software, generating an audible click with water delivery. Licks were recorded as new breaks in the lickport infrared beam and were processed by a microcontroller (Arduino UNO).

Histology and probe localization

Before each implantation, probes were dipped in fixable lipophilic dye (1mM DiI, DiO, DiD, Thermo Fisher) 10 times at 10 second intervals. Within 7 days of the first probe insertion, mice were killed with an overdose of pentobarbital and transcardially perfused with phosphate-buffered saline (PBS) followed by 4% paraformaldehyde. Brains were extracted and stored in 4% paraformaldehyde for at least 24 h before transfer to 30% sucrose in PBS. Brains were then rapidly frozen, cut into 30-μm sagittal sections with a cryostat, mounted and stained with DAPI. Histological sections were examined and the location of the probe tip and entry into the dorsal MEC for each recording were determined based on the reference Allen Brain Atlas (Allen Institute for Brain Science, 2004)(Figure S1). The location of each recording site along the line delineated by the probe tip and entry point was then determined based on each site’s distance from the probe tip. Only cells within MEC, again based on the reference Allen Brain Atlas (Allen Institute for Brain Science, 2004), were included for analysis (Figure S1). In all cases, “depth” reported is the ventral distance from the dorsal boundary of MEC in the medial section where the probe entered MEC.

Offline spike sorting

Electrophysiological recordings were common-average referenced to the median across channels and high-pass filtered above 150 Hz. Automatic spike sorting was then performed using Kilosort2, a high-throughput spike sorting algorithm that identifies clusters in neural data and is designed to track small amounts of neural drift over time (open source software by Marius Pachitariu, Nick Steinmetz, and Jennifer Colonell, https://github.com/MouseLand/Kilosort2)(see also Kilosort1 (Pachitariu et al., 2016)). After automatic spike-sorting, all clusters with peak-to-peak amplitude over noise ratio < 3 (with noise defined as the standard deviation of voltage traces in a 10ms window preceding detected spike times), total number of spikes < 100, and repeated refractory period violations (0–1 ms autocorrelegram bin > 20% of maximum autocorrelation) were excluded. All remaining clusters were manually examined and labeled as “good” (i.e., stable and likely belonging to a single, well-isolated neural unit), “MUA” (i.e., likely to represent multi-unit activity), or “noise.” Only well-isolated “good” units from within MEC (barring Figure S1I–J, which were non-MEC units) with greater than 400 spikes were included for analysis in this paper (Figure 1G). Sessions with fewer than 10 cells meeting these criteria were excluded.

Behavioral data preprocessing

On each frame of the virtual reality scene, the virtual position and time stamps were recorded and a synchronizing TTL pulse was sent from an Arduino UNO to the electrophysiological recording equipment. These pulses were recorded in SpikeGLX using an auxiliary National Instruments data acquisition card (NI PXIe-6341 with NI BNC-2110). The location of each reward zone, time of each lick, and time of each reward dispensation were also recorded. Thus all time stamps and behavioral factors were synchronized to the neurophysiological data. Time stamps were adjusted to start at 0 and all behavioral data was interpolated to convert the variable VR frame rate to a constant frame rate of 50Hz. As the track was effectively circular and 400 cm long, recorded positions less than 0 or greater than 400 cm were converted to the appropriate position on the circular track (eg. a recorded position of 404 cm would be converted to 4 cm and a recorded position of −4 cm would be converted to 396 cm). Trial transitions were identified as timepoints where the difference in position across time bins was less than −100 cm (i.e., a transition from ~400 cm to ~0 cm) and a trial number was accordingly assigned to each timepoint.

Running speed for each timepoint was computed by calculating the difference in position between that timepoint and the previous, divided by the framerate (speed at the first timepoint was assigned to be equal to that at the second timepoint). Speeds greater than 150 cm/s or less than −5 cm/s were removed. Speed was then interpolated to fill removed timepoints and smoothed with a gaussian filter (standard deviation 0.2 time bins). For all analyses except lick and reward zone analyses (Figure 1C–F), stationary time bins (speed < 2 cm/s) were excluded.

Running speed across maps

Because the k-means cluster labels are arbitrarily assigned, we designated the map with the slower overall average running speed as map 1 for consistency across analyses. To assess possible differences in running speed across maps, we computed the fractional difference in running speed across the k-means identified maps in all 2-map sessions. To control for any systematic changes in running speed over time in the session, we calculated the average running speed for each block of adjacent trials from the same map and compared it to the average running speed of the subsequent block of trials from the other map. We compared the resulting distribution of fractional difference in running speed to a shuffle control in which running speed was randomly shuffled across time bins (re-designating map 1 according to whichever shuffled map had a slower overall average running speed; 1000 shuffles per session)(Figure S8J).

Population analysis and clustering model

The 1D track was divided into 5 cm position bins (total of 80 bins). On each traversal of the track, the empirical firing rate of each neuron—i.e., number of spikes divided by time elapsed—was computed for every position bin. We then smoothed the firing rate traces with a Gaussian filter (standard deviation 5 cm). For each session this resulted in a 3-dimensional array of raw firing rates, with dimensions corresponding to trials, positions, and neurons.

Because these raw firing rates varied widely across neurons, we rescaled them so that the peak firing rate was commensurate across cells. Similar normalization steps or variance-stabilizing transformations have been used in previous population analyses of neural data (Churchland et al., 2012; Williams et al., 2018; Yu et al., 2009), to prevent neurons with high firing rates from washing out low firing rate neurons. Here, we normalized firing rates by first clipping the maximum firing rate of each neuron at its 90th percentile (to exclude large outliers), and then re-scaling each neuron’s firing rate to range between zero and one. That is, if denotes the clipped firing rate on trial i, position bin j, and neuron k, then the normalized firing rate was computed as:

| (1) |

The max(·) and min(·) operations (as well as the 90th percentile clipping operation) are applied on a neuron-by-neuron basis, pooling observations across all trials and timebins. For double-track sessions (which contained 2 blocks of cue rich trials and 2 blocks of cue poor trials, in alternating order) we added an additional per-neuron correction factor to account for drift in firing rates: the mean normalized firing rate for each neuron (across all trials and position bins) was subtracted within each block of trials, and the result was renormalized to values between zero and one, as above.

On each trial, MEC’s representation of position is summarized by a matrix, denoted Xi∷ for trial i, with rows and columns respectively corresponding to position bins and neurons. A simple measure of similarity between two trials, indexed by i and i′, is given by the Pearson correlation between the vectors vec(Xi∷) and . Network-wide trial-by-trial similarity matrices (as in Figure 2, 3, S1, S2, S4, and S5) were found by computing this correlation across all pairs of trials.

Let Xijk denote the I × J × K array, or tensor, of normalized firing rates defined in equation (1). As before the index variables i, j, and k, respectively represent trials, position bins, and neurons. Now consider the following low-rank matrix factorization model (Singh and Gordon, 2008; Udell et al., 2016) of these data:

| (2) |

Where R < min(I, J, K) denotes the number of model components, or the model rank. Equation (2) represents an approximate factorization of the I × JK matricization or tensor unfolding of the data array (Kolda and Bader, 2009; Seely et al., 2016). We will see that k-means clustering arises as a special case of this model, and in this special case R represents the number of clusters (i.e., the number of spatial maps).

The parameters and are optimized according to a least squares criterion:

| (3) |

It is well-known that a rank-R truncated singular value decomposition (SVD) provides a solution to this optimization problem (Eckart and Young, 1936). Further, the solution provided by truncated SVD is closely related to Principal Components Analysis (PCA)—indeed, these two methods are identical for the case of mean-centered data (see, e.g., (Shlens, 2005)). Since the normalized firing rate array Xijk in equation (3) is not mean-centered, we refer to this initial model as “uncentered PCA.” We use the uncentered coefficient-of-determination (uncentered R2) as a normalized measure of model performance associated with equation (3).

The k-means clustering model incorporates an additional constraint into the uncentered PCA model. Specifically, k-means seeks to minimize equation (3),

| (4) |

Thus, if we view as the elements of an R × I matrix, the rows of this matrix are constrained to be standard Cartesian basis vectors of R-dimensional space (“one-hot vectors”). Each of these vectors specifies the cluster assignment label for every trial (see Figure 3A for a schematic illustration for the R = 2 case). Further, we can interpret as elements of an R × J × K array. For a fixed cluster index r, the remaining elements form a J × K matrix, called a “slice” of the original array (Kolda and Bader, 2009). Each slice corresponds to a cluster centroid, which we may interpret as a spatial map—the columns are J-dimensional vectors holding the spatial tuning curves for every neuron, so different slices correspond to different sets of spatial tuning curves (i.e., different spatial maps).

This connection between k-means clustering and other matrix factorization models is well-known and expanded upon in detail by (Singh and Gordon, 2008; Udell et al., 2016). We exploit this connection to assess the k-means model, which is more constrained than uncentered PCA (i.e., truncated SVD) in that each row of is constrained to be a one-hot vector as opposed to an arbitrary vector. Intuitively, this allows us to interpret each trial as belonging to one of R types, as opposed to a linear combination of them. The fact that the more restrictive k-means model performs nearly as well as uncentered PCA gives credence to the multiple-map interpretation. To compare these two models we used a randomized cross-validation procedure in which 10% of the data, representing the validation set, were censored in a speckled holdout pattern (Williams et al., 2018; Wold, 1978). Ten randomized replicates were performed for all models. For the case of R = 2 components, we often observed similar performance (measured by the uncentered R2 averaged over validation sets) between uncentered PCA and k-means for all sessions (Figure 3C). Further, we compared the test performance of k-means on “shuffled” datasets (Figure 3C right, Figure 3D). Firing rates from a behavioral session were shuffled by applying a random rotation (i.e., an orthogonal linear transformation) to Xijk across trials. That is, we sample a random rotation matrix and define

as the new shuffled dataset, which is substituted into the objective function defined in equation (3). This form of shuffling preserves many features of the data, including the overall norm of the data and correlations between neurons and position bins. However, it destroys the sparsity pattern on which is imposed by the k-means model. This procedure is similar in spirit to methods proposed by Elsayed & Cunningham (2017).

Sessions that were well-approximated by the k-means model with R = 2 clusters were classified as “two-map” sessions (Figure 3D). We required that the performance gap (measured by the uncentered R2 averaged over validation sets) between k-means and uncentered PCA and be less than 70% relative to the shuffle control. Further, we required an uncentered R2 of at least 0.63 for the k-means model with R = 2 maps. Sessions not meeting these criteria sometimes displayed more than two maps (see Figure S5), long periods of unstable coding (see Figures S1 and S2), or little to no remapping at all (Figure S2). For all k-means analyses, we ran the clustering model at least 100 times on all neural data from each session to account for model variability, keeping the model with the best fit to the data.

Manifold alignment analysis

We used standard Procrustes analysis methods (Gower and Dijksterhuis, 2004) to assess the degree to which the two ring manifolds, representing spatial maps, were aligned in neural firing rate space. Recall that the k-means centroids provide an estimate of each spatial map—in this case, we restrict our focus to R = 2 maps, so the two maps are given by and . Geometrically, these maps are represented as J points embedded in a K-dimensional space (recall that J denotes the number of position bins and K is the number of simultaneously recorded neurons). Procrustes Analysis begins by centering each of these manifolds at the origin and rescaling them to have unit norm. Let and denote the maps after these preprocessing steps have been applied, i.e.,

Since position bin j in map 1 and position bin j in map 2 correspond to the same location on the track, we consider the root-mean-squared-error (RMSE) between these centered and rescaled maps as the empirically “observed” alignment score (reported in Figure 5J):

The central step of Procrustes analysis is to find the optimal rotation matrix that aligns these two point clouds. That is, we wish to find the matrix that solves the following optimization problem:

This optimization problem admits a closed form solution that is expressed in terms of the singular value decomposition of the K × K matrix (Schönemann, 1966). See Gower & Dijksterhuis (Gower and Dijksterhuis, 2004) for further background material. In Figure 7J, we report the RMSE compared to the optimal rotation (misalignment score = 0) and a random rotation matrix (“shuffle”).

Manifold entanglement calculation

We quantified the entanglement of a manifold as the maximum ratio of intrinsic distance (i.e., distance along the manifold) to extrinsic distance (i.e., Euclidean distance in K-dimensional space) between any two points on the manifold. Concretely, the extrinsic distance between two points corresponding to position bins j and j′ was computed as:

The intrinsic distance, , was the sum of extrinsic distances along the shortest path from j to j′ (see diagram in Figure 7D). Depending on whether one travels clockwise or counterclockwise along the ring, there are two paths connecting any pair of points—the intrinsic distance is given by whichever path is shorter. The triangle inequality implies that for every pair of points along the manifold. When the ratio is large, this implies that the pair of points (j, j′) on the manifold are close together in neural firing rate space despite encoding very different positions on the VR track—speaking loosely, we say these points are “entangled.” Conversely, when is small, the two points are far apart in firing rate space in proportion to their encoded positions on the VR track. Thus, a measure of manifold entanglement is given by , which measures the worst-case entanglement over the full manifold (the max[·] operator is understood to be taken over all pairs of position bins, j and j′). Noting that this raw entanglement score is upper bounded by and lower bounded by one, we can normalize the tangling metric to range between zero (no entanglement) and one (high entanglement) as follows:

We report this normalized entanglement metric in our results.

Distance to cluster calculations

After fitting the k-means model and obtaining two centroids, and , we can quantify how close network activity is to each centroid on a trial-by-trial or neuron-by-neuron basis. In each case we project the activity onto a one-dimensional space where −1 corresponds to one centroid and +1 corresponds to the other centroid. That is, for each trial i, we compute

Note that Pi = 1 when and Pi = −1 when . Further, if the network activity on trial i is at the midpoint, then and so Pi = 0.

We can compute an analogous statistic for each combination of trial i and position bin j:

Likewise, we can compute for each combination of trial i and neuron k:

Note that Pi, Pij, and Pik refer to three different quantities, which are only distinguished on the basis of their indices. This concise, somewhat informal, notation is common in tensor algebra, but is restricted to the present section to prevent potential confusion.

In Figure 3, we use Pik to identify neurons that consistently remap. Let ci denote the cluster label of each trial such that ci = 1 if trial i is in map 1 and ci = −1 if trial i is in map 2. Then provides a measure of distance between the spatial firing pattern of neuron k to the spatial map (i.e., cluster centroid) on trial i. Specifically, this calculation corresponds to a logistic loss function in the context of classification models. Averaging this distance over trials summarizes the remapping strength—intuitively, an average distance close to zero implies that the neuron “agrees with” the rest of the population on each trial, while a large average distance implies that the neuron is inconsistent (e.g., because the neuron exhibits high levels of noise on each trial). We classified neurons as “consistent remappers” when the average distance was less than 1.

In Figures 8 and S8, we used Pij to assess the relationship between running speed and the distance of neural coding to the midpoint between clusters. In Figure 8D, we plotted |Pij|, i.e., the distance to midpoint, in 10 running speed bins for an example session—the first 9 bins were evenly spaced between 0 cm/s and 20 cm/s below the maximum speed; the last bin included all top speeds above this final threshold (this was done to account for rare bursts of high speeds). Similarly, in Figure 8E we plotted |Pij| in 10 running speed bins, normalized within each session. In Figure S8C–E, we use histograms to visualize Pij for all trials and position bins. To account for arbitrary map assignment, we randomly flipped the sign of Pij for each session in Figure S8E. Likewise, the white-to-black heatmaps in Figures 8C and S8H–I visualize Pij for a subset of trials. In all cases, Pij was z-scored to normalize across sessions.

In Figure S8 we performed a similar analysis examining the effect of track position (and therefore landmarks) or reward on the distance of neural coding to the midpoint between clusters. In Figure S8M we examined the average distance to the midpoint within each 5cm position bin along the track, split by cue rich and cue poor session types (to assess the effect of landmarks). We compared these distances to a shuffle control in which neural activity was shifted by a random distance up to 400cm. Similarly, in Figure S8L, we examined the average distance to midpoint for 5cm position bins for 100cm centered on each random reward zone (25cm approaching, 50cm within the zone, and 25cm exiting). We compared these distances to a shuffle control created by the same procedure.

Position decoding analysis

We fit linear models to predict the animal’s position from the spiking activity of all MEC neurons, and call the optimized model a “decoder” following common terminology and practice (Kriegeskorte and Douglas, 2019) (Figure 6). Let yt ∈ [0, 2π) denote the position on the circular track at time t, and let snt denote the number of spikes fired by neuron n at time t after smoothing with a Gaussian filter (standard deviation = 200 ms). Due to the nature of the VR environment, yt is a circular variable—i.e., it should be interpreted as an angle on the unit circle. In the statistics literature, a regression that predicts a circular variable from linear covariates is known as a circular-linear regression model. Several approaches to circular-linear regression have been developed (Fisher and Lee, 1992; Pewsey and García-Portugués, 2020; Sikaroudi and Park, 2019). Here, we used a spherically projected multivariate linear model (Presnell et al., 1998). Two regression coefficients, and , are optimized for each neuron using the expectation maximization routine described by Presnell et al. (Presnell et al., 1998). After fitting the model to a set of training data, the model estimate for a given set of inputs is given by