Significance

Conversation is the platform where minds meet to create and exchange ideas, hone norms, and forge bonds. But how do minds coordinate with each other to build a shared narrative from independent contributions? Here we show that when two people converse, their pupils periodically synchronize, marking moments of shared attention. As synchrony peaks, eye contact occurs and synchrony declines, only to recover as eye contact breaks. These findings suggest that eye contact may be a key mechanism for enabling the coordination of shared and independent modes of thought, allowing conversation to both cohere and evolve.

Keywords: pupillometry, synchrony, eye contact, conversation, shared attention

Abstract

Conversation is the platform where minds meet: the venue where information is shared, ideas cocreated, cultural norms shaped, and social bonds forged. Its frequency and ease belie its complexity. Every conversation weaves a unique shared narrative from the contributions of independent minds, requiring partners to flexibly move into and out of alignment as needed for conversation to both cohere and evolve. How two minds achieve this coordination is poorly understood. Here we test whether eye contact, a common feature of conversation, predicts this coordination by measuring dyadic pupillary synchrony (a corollary of shared attention) during natural conversation. We find that eye contact is positively correlated with synchrony as well as ratings of engagement by conversation partners. However, rather than elicit synchrony, eye contact commences as synchrony peaks and predicts its immediate and subsequent decline until eye contact breaks. This relationship suggests that eye contact signals when shared attention is high. Furthermore, we speculate that eye contact may play a corrective role in disrupting shared attention (reducing synchrony) as needed to facilitate independent contributions to conversation.

Good conversations proceed effortlessly, as if conversation partners share a single mind. This effortlessness obscures the complexities involved. Conversation partners must weave shared understanding from alternating independent contributions (1–4) in an act of spontaneous, dynamic cocreation. Given too few independent insights, conversation stagnates. When there is insufficient common ground, people talk past each other. Even deciding when conversation should end is a feat of social coordination (5). Engaging conversation must continuously negotiate the delicate balance between creating shared understanding and allowing the conversation to move forward and evolve. How do two minds negotiate this balance?

There are several reasons to believe that eye contact may play an instrumental role. Eye contact (when two people look at each other’s eyes) occurs ubiquitously in conversation, often at the ends of turns when partners pass the conversational baton (6–9). The known effects of eye contact on arousal (10–12) and attention (13–15) may nudge partners at critical moments in the conversation that facilitate this exchange. The ubiquity yet brevity of eye contact in natural conversation, averaging 1.9 s (9), also suggests that these attentional nudges are not scattered randomly but occur at precise times to optimize the attention of both parties. Here we tested whether eye contact has a particular relationship with shared attention during natural conversation.

To quantify the dynamic wax and wane of shared attention during natural conversation, we employed dyadic high temporal resolution pupillometry. Under light constancy, pupil diameter fluctuates coincident with neuronal activity in the locus coeruleus (16, 17), which is associated with attention (18–20). Furthermore, an attention-inducing stimulus evokes an associated pupillary response (i.e., a dilation and return to baseline) (21). Monitoring these pupil responses over time provides a continuous index of attention (22–26). When people attend to the same dynamic stimulus, their pupillary time series can be compared as a measure of shared attention, with similar pupillary time series indexing similar time series of attention. Unlike joint attention, shared attention does not require eye contact or gestures followed by attention to a third object. Rather, shared attention can refer to any situation in which individuals are attending to the same stimulus and can occur with or without eye contact (27). While pupil mimicry has been a documented outcome of looking at another’s eyes (28, 29), shared attention can also exist in the absence of any visual stimulus (e.g., two people listening to the same music) (30).

We use the term “pupillary synchrony” to refer to how closely two conversation partners’ pupillary time series covary over time (31). Previous studies have also used this definition of synchrony to measure covariation of other physiological and behavioral responses [e.g., brain blood oxygenation levels (32); neuroelectrical activity (33–35); heart-rate variability (36)]. Here we used pupillary synchrony between conversation partners to capture temporal variation in shared attention over the course of a natural conversation.

We are not the first to examine intersubject synchrony and eye contact. Leong et al. (33) showed that infant–adult dyads exhibit more brain-to-brain synchrony in EEG while making direct gaze than when interacting with their gaze averted. Similarly, students in real classrooms showed more neural synchrony in EEG while learning with classmates with whom they had previously made eye contact (34). Kinreich et al. (35) used EEG to measure synchrony while dyads planned a fun day to spend together and found that moments of eye contact were correlated with neural synchrony. Additionally, research using both functional near-infrared spectroscopy and hyperscanning fMRI paradigms has found neural synchrony during eye contact in a wide variety of scenarios and across multiple brain regions (37–41), leading to the prevailing inference that eye contact enhances synchrony (37). Yet exactly how eye contact and synchrony are linked is not understood. Does eye contact precede synchrony, as enhancement would suggest, or follow it? Here we probe how eye contact is linked to pupillary synchrony by investigating the temporal relationship between the two.

It is also unclear when synchrony in conversation is desirable. The majority of the synchrony literature relies on tasks in which shared attention equates to better performance. For example, when individuals hear the same story or watch the same movie, the degree to which they have similar responses positively predicts their comprehension of (42, 43) and shared memory for (44) the shared stimulus. But conversation involves more than perceiving a shared stimulus: it requires fluidly shifting between shared and independent modes of thought, from comprehension of what is being said to formulating one’s next contribution (45). These independent contributions allow a conversation to move and evolve. We therefore not only test the relationship between eye contact and pupillary synchrony, but also how both relate to success in conversation as measured by ratings of engagement (46, 47) made by the partners themselves.

In the present study, dyads engaged in unstructured conversation while their eyes were tracked. Afterward, they separately rewatched their conversations while continuously rating how engaged they remembered feeling. We show that eye contact is correlated with dyadic pupillary synchrony during these unstructured conversations, consistent with the research summarized above. Furthermore, we find that eye contact has a distinct temporal relationship with pupillary synchrony consistent with (though not proof of) a coordinative role. Conversation partners make eye contact as pupillary synchrony peaks, which may provide a communicative signal that shared attention is high. Pupillary synchrony then immediately and sharply decreases until eye contact stops. This temporal relationship suggests that eye contact (or its correlates) may actually reduce shared attention, perhaps to allow for individual contributions that enable a conversation to evolve. Consistent with this inference, we find that the amount of eye contact, not synchrony, predicts self-reported engagement by conversation partners. Rather than maximizing shared attention, a good conversation may require shifts into and out of a shared attentional state, with these shifts accompanied by eye contact.

Results

Dyads Are More Synchronous during Eye Contact.

A generalized linear mixed-effects analysis was performed in R using the lme4 package (48) to investigate the relationship between dyads’ pupillary synchrony and eye contact during their conversations. Pupillary synchrony was computed in 1-s windows using dynamic time warping (DTW), a well-established algorithm that accounts for small temporal offsets between otherwise similar signals (see Materials and Methods for a full description of this algorithm). For this analysis, DTW scores were log-transformed to reduce heteroscedasticity, and dyads’ time series of eye contact were down-sampled to 1 Hz. The model predicted eye contact (present vs. absent) from a fixed effect of pupillary synchrony, included random intercepts for dyads, and assumed a binomial distribution, in order to determine whether dyads were more synchronous during moments of eye contact than during moments without eye contact.
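The windowed DTW step can be illustrated with a minimal Python sketch. It assumes an absolute-difference local cost, non-overlapping 1-s windows, and a small constant `eps` added before the log; none of these details are specified in this section, and the function names are hypothetical. The sketch leaves DTW as a distance (lower values mean more similar pupil traces); how the scale was oriented for the mixed model is not reproduced here.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j]: minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # advance in a only
                cost[i][j - 1],      # advance in b only
                cost[i - 1][j - 1],  # advance in both
            )
    return cost[n][m]


def windowed_log_dtw(pupil_a, pupil_b, fs, eps=1e-6):
    """One log-DTW score per non-overlapping 1-s window (lower = more similar traces).

    fs is the sampling rate in Hz; eps (hypothetical) avoids log(0) for identical windows.
    """
    win = int(fs)
    n_windows = min(len(pupil_a), len(pupil_b)) // win
    scores = []
    for w in range(n_windows):
        seg_a = pupil_a[w * win:(w + 1) * win]
        seg_b = pupil_b[w * win:(w + 1) * win]
        scores.append(math.log(dtw_distance(seg_a, seg_b) + eps))
    return scores
```

Because warping lets one trace "wait" for the other, `[0, 0, 1, 2]` and `[0, 1, 2, 2]` have a DTW distance of zero even though they differ sample by sample. With 60-Hz SMI data one would pass `fs=60`, and with 200-Hz Pupil Labs data `fs=200`.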

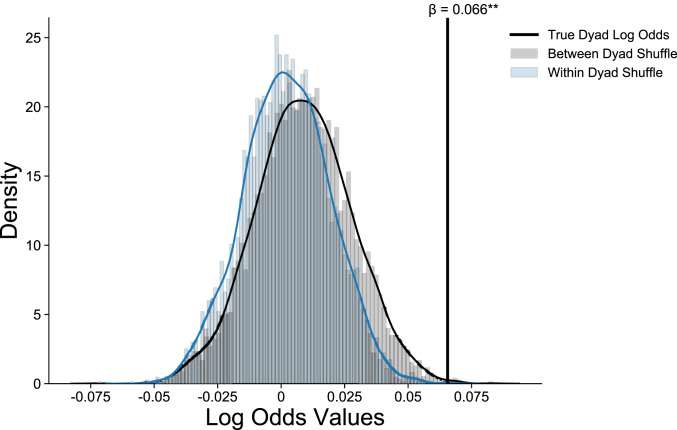

There was a significant effect of pupillary synchrony on eye contact (log odds = 0.066, odds ratio = 1.07, CI = 1.03 to 1.11, P < 0.001), demonstrating that dyads were more synchronous during eye contact than in its absence. To test more robustly whether this effect could have arisen by chance, we compared our true effect estimate to two null distributions: 1) one created by shuffling where moments of eye contact occurred within each dyad’s time series, to determine whether our true estimate could be explained by random variation in eye contact; and 2) one created by shuffling intact eye contact time series between subjects, to determine whether our true estimate could be explained by variation in eye contact common to all conversations. Our true effect fell significantly above both null distributions (Fig. 1), suggesting not only that pupillary synchrony and eye contact are significantly related, but also that this relationship is specific to the structure of each individual dyadic conversation.
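The logic of the two null distributions can be sketched in Python. As a simplifying assumption, the sketch swaps the mixed-model log odds for a much simpler statistic (mean synchrony during eye contact minus mean synchrony without it, averaged over dyads); a faithful version would re-estimate the lme4 model on each permuted dataset. All function names are hypothetical, and the between-dyad null pairs each dyad's synchrony with another dyad's intact eye contact series through a random derangement, truncating both to the shorter series.

```python
import random


def mean_diff(sync, eye):
    """Mean synchrony during eye contact minus mean synchrony without it."""
    on = [s for s, e in zip(sync, eye) if e]
    off = [s for s, e in zip(sync, eye) if not e]
    if not on or not off:
        return 0.0
    return sum(on) / len(on) - sum(off) / len(off)


def pooled_stat(pairs):
    """Average the per-dyad statistic over a list of (sync, eye) pairs."""
    return sum(mean_diff(s, e) for s, e in pairs) / len(pairs)


def within_dyad_null(dyads, n_perm, rng):
    """Null 1: shuffle where eye contact falls within each dyad's own time series."""
    null = []
    for _ in range(n_perm):
        pairs = []
        for sync, eye in dyads:
            shuffled = list(eye)
            rng.shuffle(shuffled)
            pairs.append((sync, shuffled))
        null.append(pooled_stat(pairs))
    return null


def random_derangement(k, rng):
    """Random permutation of range(k) with no fixed points (requires k >= 2)."""
    while True:
        idx = list(range(k))
        rng.shuffle(idx)
        if all(i != j for i, j in enumerate(idx)):
            return idx


def between_dyad_null(dyads, n_perm, rng):
    """Null 2: pair each dyad's synchrony with another dyad's intact eye-contact series."""
    null = []
    for _ in range(n_perm):
        order = random_derangement(len(dyads), rng)
        pairs = []
        for (sync, _), j in zip(dyads, order):
            other_eye = dyads[j][1]
            n = min(len(sync), len(other_eye))  # truncate to the shorter conversation
            pairs.append((sync[:n], other_eye[:n]))
        null.append(pooled_stat(pairs))
    return null


def perm_p(observed, null):
    """One-sided permutation p-value: share of null values >= observed."""
    return (1 + sum(v >= observed for v in null)) / (1 + len(null))
```

The first null destroys the timing of eye contact within a conversation; the second keeps each eye contact series intact but breaks its link to the specific partner pair, which is why surviving both suggests a dyad-specific relationship.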

Fig. 1.

Results of a permutation test comparing the true log odds (β = 0.066) of the association between eye contact and pupillary synchrony to: 1) 5,000 permutations shuffling dyads’ eye contact and pupillary synchrony time series, and 2) 5,000 permutations shuffling eye contact time series within each dyad. The true relationship between pupillary synchrony and eye contact fell significantly outside both distributions.

Eye Contact Follows (Rather than Elicits) Synchrony.

Multilevel vector autoregression.

We investigated the directionality of the relationship between eye contact, pupil size, and pupillary synchrony using a multilevel vector autoregression analysis (mlVAR; see Materials and Methods for full description). mlVAR outputs temporal, contemporaneous, and between-subjects networks to represent the directed and undirected relationships between variables of interest. Because mlVAR was designed for continuous time series, we parsed each binary time series of eye contact, consisting of samples in which eye contact either was or was not being made, into 1-s epochs and averaged eye contact within each epoch, yielding the proportion of eye contact made per 1-s window.
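The conversion from binary samples to per-second proportions can be sketched as below, assuming eye contact is coded 0/1 at the eye tracker's sampling rate. Discarding a trailing partial epoch is an assumption; the handling of incomplete epochs is not stated, and the function name is hypothetical.

```python
def proportion_per_epoch(binary_samples, fs):
    """Collapse a 0/1 eye-contact series into the proportion of eye contact per 1-s epoch.

    fs is the sampling rate in Hz; a trailing partial epoch is dropped (assumption).
    """
    win = int(fs)
    n_epochs = len(binary_samples) // win
    return [sum(binary_samples[k * win:(k + 1) * win]) / win for k in range(n_epochs)]
```

For example, at a toy rate of 4 Hz, the samples `[1, 1, 0, 0, 1, 0, 0, 0]` become `[0.5, 0.25]`.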

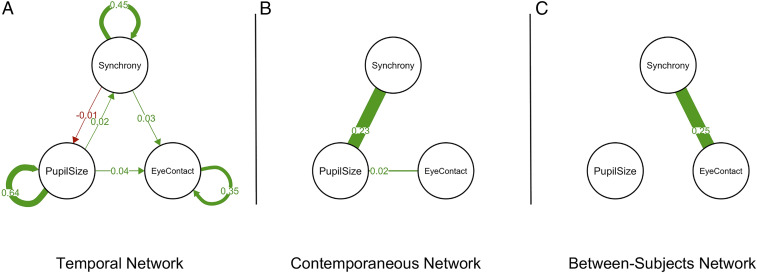

We found a significant between-subjects relationship for eye contact and pupillary synchrony (partial r = 0.25, P = 0.01), suggesting that dyads who made more eye contact overall also showed higher levels of pupillary synchrony over their entire conversations. We also found contemporaneous network relationships between pupil size and eye contact (partial r = 0.02, P = 0.001) and between pupil size and synchrony (partial r = 0.23, P < 0.001), suggesting that in moments when an individual’s pupils dilate, they both make more eye contact and are more synchronous with their conversation partner. Finally, we found temporal network relationships suggesting that both pupil size (partial r = 0.04, P < 0.001) and synchrony (partial r = 0.03, P < 0.001) in one moment lead to eye contact in the next (all results shown in Fig. 2). While these r values are small, this analysis argues against eye contact as a causal mechanism for creating pupillary synchrony. Instead, this temporal network result suggests that rather than being a strategy used to increase pupillary synchrony during conversation, eye contact may be a behavioral marker of alignment already occurring.
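mlVAR itself (available as the R package mlVAR) estimates multilevel lag-1 models. As a much cruder single-dyad illustration of what a temporal edge captures, the Python sketch below compares lagged correlations in the two directions. It does not control for autoregression, pupil size, or dyad-level structure, so it illustrates the idea of temporal precedence rather than substituting for the reported analysis; the function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lag1_directionality(sync, eye):
    """Lag-1 cross-correlations in both directions for one dyad's 1-Hz series.

    forward:  synchrony at t-1 vs. eye contact at t  (synchrony leads)
    backward: eye contact at t-1 vs. synchrony at t  (eye contact leads)
    """
    forward = pearson(sync[:-1], eye[1:])
    backward = pearson(eye[:-1], sync[1:])
    return forward, backward
```

A pattern like the one reported would show up as a forward association without a comparable backward one; the multilevel model adds the controls this sketch lacks.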

Fig. 2.

Gaussian graphical networks depicting the relationships between pupillary synchrony, pupil size, and eye contact. All edges depict significant relationships at α = 0.05. Edge thickness represents the size of each relationship, and specific edge weights are overlaid on top of each edge. Green edges represent positive relationships, and red edges represent negative relationships. (A) Temporal network illustrating the relationship between pupillary synchrony, pupil size, and eye contact at one lag. Pupillary synchrony and pupil size temporally precede eye contact. (B) Contemporaneous network illustrating the simultaneous relationships between pupillary synchrony, pupil size, and eye contact. Pupil size is positively related to both eye contact and to pupillary synchrony. (C) Between-subjects network illustrating the per-subject average relationship between pupillary synchrony, eye contact, and pupil size. Subjects who shared more eye contact during their conversations were more synchronous.

Event-related analysis.

To further examine the temporal relationship between eye contact and pupillary synchrony, we investigated how pupillary synchrony fluctuates, on average, around a single instance of eye contact (see Materials and Methods for full description of this analysis). For example, a dyad could synchronize prior to the instance of eye contact and remain highly aligned for the duration, suggesting that dyadic pupillary synchrony is maintained during eye contact. Conversely, a dyad could synchronize prior to the onset of eye contact, but begin to move out of alignment during the instance, which would suggest that pupillary synchrony is broken during moments of eye contact.
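Extracting event-locked synchrony curves can be sketched as follows. The conventions are assumptions for illustration: 1-Hz series, a ±4-s window (nine points, with the event at position 5 as in Fig. 4), onsets defined as the first second of eye contact, offsets as the first second after it ends, and events too close to the edges of the recording skipped. The function names are hypothetical.

```python
def event_times(eye):
    """Indices where a binary 1-Hz eye-contact series switches on (onsets) or off (offsets)."""
    onsets, offsets = [], []
    for t in range(1, len(eye)):
        if eye[t] and not eye[t - 1]:
            onsets.append(t)
        elif eye[t - 1] and not eye[t]:
            offsets.append(t)
    return onsets, offsets


def event_locked_mean(sync, events, half_width=4):
    """Average synchrony from half_width s before to half_width s after each event.

    Events without a full window inside the recording are skipped.
    """
    length = 2 * half_width + 1
    total = [0.0] * length
    n = 0
    for t in events:
        if t - half_width < 0 or t + half_width >= len(sync):
            continue
        for k in range(length):
            total[k] += sync[t - half_width + k]
        n += 1
    return [v / n for v in total] if n else []
```

Averaging these curves within each dyad gives the per-dyad onset and offset curves that the contrasts below are fit to.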

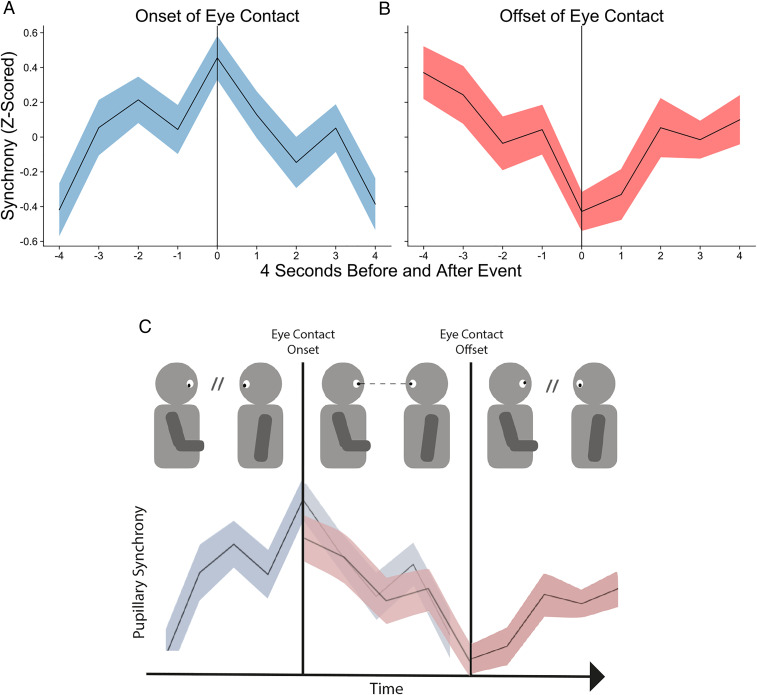

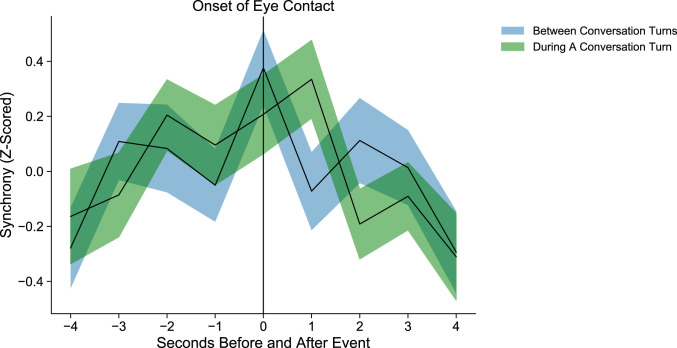

We found that pupillary synchrony begins to increase prior to the start of eye contact, peaks at the onset of eye contact, and decreases immediately thereafter (Fig. 3).

Fig. 3.

Time series of pupillary synchrony (±SE) in the 4 s leading up to and following the (A) onset and (B) offset of eye contact (mean duration of eye contact = 1.07 s, SD = 1.14 s). Peak and trough pupillary synchrony occur at the onset and offset of eye contact, respectively. (C) Illustrative cartoon of how a single instance of eye contact coincides with pupillary synchrony. Prior to eye contact, pupillary synchrony increases until it peaks at eye contact onset (real data from A). As eye contact is maintained, synchrony declines until its trough when eye contact is broken (real data from B).

We statistically tested this effect by computing a linear model predicting the average pupillary synchrony curve per dyad and specifying planned contrasts for how synchrony might vary over time (e.g., linear, quadratic, cubic shapes). This model was significant [F(8, 414) = 3.84, R² = 0.05, P < 0.001], and results confirmed that a quadratic contrast was the best fit for dyadic pupillary synchrony (β = −0.65, CI = −0.93 to −0.37, P < 0.001). These effects also proved to be robust to naturalistic data issues (e.g., irregular spacing and duration of eye contact) (SI Appendix).
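With nine time points, planned polynomial contrasts (linear, quadratic, cubic, and so on) can be built by orthogonalizing successive powers of time, similar in spirit to R's `contr.poly`. The Python sketch below is an illustration under that assumption, not the reported linear model: it projects an average curve onto orthonormal contrasts, so an inverted-U curve yields a negative quadratic score (as at eye contact onsets) and a U-shaped curve a positive one (as at offsets). The function names are hypothetical.

```python
import math


def poly_contrasts(n_points, max_degree):
    """Orthonormal polynomial contrasts for equally spaced time points (Gram-Schmidt)."""
    x = [i - (n_points - 1) / 2 for i in range(n_points)]
    basis = []
    for degree in range(1, max_degree + 1):
        v = [xi ** degree for xi in x]
        mean_v = sum(v) / n_points
        v = [vi - mean_v for vi in v]  # orthogonal to the constant (intercept) term
        for b in basis:  # remove components along lower-degree contrasts
            proj = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - proj * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis


def contrast_scores(curve, basis):
    """Project a synchrony curve onto each contrast (one score per polynomial degree)."""
    return [sum(c * b for c, b in zip(curve, vec)) for vec in basis]
```

For nine points the quadratic contrast is proportional to the familiar integer weights 28, 7, −8, −17, −20, −17, −8, 7, 28.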

We also performed the same event-related analysis of pupillary synchrony surrounding the offset of eye contact: that is, when dyads broke eye contact. Here we found the opposite effect such that pupillary synchrony decreased leading up to the offset, reached its lowest point at the offset, then began to increase following the offset. We statistically tested this effect as well by computing a linear model predicting our average pupillary synchrony curve per dyad and again specifying planned contrasts for how pupillary synchrony might vary over time. This model was also significant [F(8, 414) = 3.08, R² = 0.04, P = 0.002], and a quadratic contrast was also a significant fit for dyads’ pupillary synchrony curves surrounding the offset of eye contact (β = 0.53, CI = 0.25 to 0.81, P < 0.001). These results suggest that although eye contact is positively associated with pupillary synchrony in general, it does not causally increase pupillary synchrony. Rather, eye contact occurs as pupillary synchrony peaks and persists over its decline until pupillary synchrony reaches a nadir. Pupillary synchrony only begins to increase again once the moment of eye contact ends.

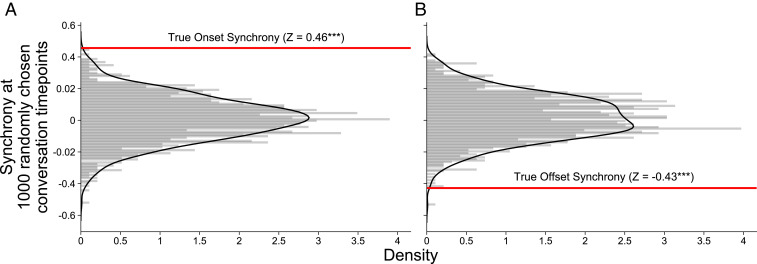

To further test whether this parabolic contour was peculiar to moments surrounding eye contact, we compared true eye contact onsets and offsets to a randomly sampled, equal number of moments in the conversation where eye contact was not made, creating “pseudo-onsets” and “pseudo-offsets.” We calculated dyadic pupillary synchrony curves for the 4 s prior and 4 s following these randomly chosen conversation points, repeating the process 1,000 times for each dyad in order to compare the distribution of pupillary synchrony at pseudo-onsets and -offsets to pupillary synchrony at true eye contact onsets and offsets. For both onsets and offsets, the true pupillary synchrony value fell outside the null distribution (Fig. 4). Thus, eye contact onsets and offsets are associated with more pupillary synchrony (z = 0.46, P < 0.001) and less pupillary synchrony (z = −0.43, P < 0.001), respectively, than what could be expected from natural fluctuations of pupillary synchrony during conversation.
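The pseudo-onset comparison can be sketched as below. Assumptions for illustration: pseudo-events are drawn without replacement from seconds without eye contact at which a full ±4-s window fits, the statistic is mean synchrony at the pseudo-event points (position 5), and the summary z score standardizes the observed value against the null. The exact z computation behind the reported values is not specified in this section, and the function names are hypothetical.

```python
import random
import statistics


def pseudo_event_null(sync, eye, n_events, n_iter, rng, half_width=4):
    """Null distribution of mean synchrony at randomly chosen non-eye-contact moments.

    Each iteration samples n_events seconds without eye contact (where a full
    +/- half_width window fits) and averages synchrony at those seconds.
    """
    candidates = [t for t in range(half_width, len(sync) - half_width) if not eye[t]]
    null = []
    for _ in range(n_iter):
        picks = rng.sample(candidates, n_events)
        null.append(sum(sync[t] for t in picks) / n_events)
    return null


def z_against_null(observed, null):
    """Standardize the observed value against the null distribution."""
    return (observed - statistics.mean(null)) / statistics.stdev(null)
```

Matching `n_events` to the dyad's number of true onsets (or offsets) keeps the null comparable in size to the observed average, as the text describes.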

Fig. 4.

Results of two permutation tests comparing pupillary synchrony at the (A) onset and (B) offset of eye contact with 1,000 randomly chosen 9-s windows in each conversation, per dyad. The distributions were created by taking the pupillary synchrony value at the “onset” and “offset” point (position 5) of each randomly chosen window. The true pupillary synchrony values for the onset and offset of eye contact are represented by the red horizontal lines in each panel. Pupillary synchrony at the onset of eye contact is significantly higher than would be expected by chance, and pupillary synchrony at the offset of eye contact is significantly lower than would be expected by chance.

Eye Contact Is Associated with Pupillary Synchrony within and between Conversation Turns.

Past research has shown that eye contact often occurs as the conversation shifts from one partner to the other (9, 49). We therefore examined whether the relationship between eye contact and pupillary synchrony was similarly constrained to moments when partners passed the conversational baton. We separated instances of eye contact by whether they occurred within or between conversational turns. An instance of eye contact was classified as occurring between turns if it began no more than 1 s before the start of a turn or ended no more than 1 s after the end of a turn (see Materials and Methods for full turn-taking quantification).
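One reading of the between-turn rule is sketched below in Python, with eye contact instances and turns given as (start, end) times in seconds. Whether an onset falling slightly after a turn start should also count is not stated, so the one-sided 1-s windows used here are an assumption, as is the function name.

```python
def classify_eye_contact(instances, turns, tol=1.0):
    """Label each eye-contact instance 'between' or 'within' conversational turns.

    instances: list of (onset_s, offset_s); turns: list of (start_s, end_s).
    'between' if the instance begins at most tol s before some turn starts,
    or ends at most tol s after some turn ends; otherwise 'within'.
    """
    labels = []
    for onset, offset in instances:
        near_start = any(0 <= start - onset <= tol for start, _ in turns)
        near_end = any(0 <= offset - end <= tol for _, end in turns)
        labels.append("between" if near_start or near_end else "within")
    return labels
```

Counting the labels per dyad gives the between- and within-turn totals compared below.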

Consistent with the prior literature, we found that within each conversation, dyads made more eye contact between conversational turns (mean = 63.74, SD = 27.76) than during conversational turns [mean = 33.36, SD = 14.91; t(46) = 7.82, P < 0.001]. Across all dyads, eye contact occurred between turns 64.8% of the time, and within a turn 35.2% of the time.

To test whether pupillary synchrony associated with eye contact differed by when that eye contact occurred, we computed two separate pupillary synchrony time series: one for eye contact between conversation turns and one for eye contact within conversation turns (see Fig. 5 for a visualization of these two time series). We then computed paired two-sided t tests at each of the nine points along the associated pupillary synchrony time series. None of these t tests were significant [t(46) ranged from −1.8 to 1.52, Ps = 0.07 to 0.94]. Together, these findings suggest that while people do tend to make eye contact as they trade turns in conversation, the relationship between eye contact and pupillary synchrony is not confined to these moments.
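The pointwise comparison amounts to a paired t statistic at each of the nine time points, with dyads as the matched units. A standard-library sketch with hypothetical names is below; converting t to a two-sided P value requires the t distribution (for example via `scipy.stats.ttest_rel`), which is omitted to keep the block dependency free.

```python
import math
import statistics


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1


def pointwise_paired_t(curves_between, curves_within):
    """Paired t-test at each time point; each curve is one dyad's synchrony curve."""
    n_points = len(curves_between[0])
    results = []
    for k in range(n_points):
        x = [curve[k] for curve in curves_between]
        y = [curve[k] for curve in curves_within]
        results.append(paired_t(x, y))
    return results
```

With 47 dyads, each test has 46 degrees of freedom, matching the t(46) reported above.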

Fig. 5.

Visualization of pupillary synchrony at the onset of eye contact occurring within a conversation turn (SE plotted in green) and between conversation turns (SE plotted in blue). These two pupillary synchrony curves did not significantly differ from one another.

Greater Eye Contact, Greater Engagement.

Given the nature of the relationship we found between pupillary synchrony and eye contact, we examined how eye contact and pupillary synchrony related to how engaged partners were by the conversation itself. Mean engagement was quantified as the average of the two continuous self-reported engagement ratings that the conversation partners made while rewatching a video of their conversation (Materials and Methods). A linear mixed-effects analysis of the relationship between eye contact, dyads’ pupillary synchrony, and their continuous mean engagement was performed in R using the lme4 package (48). For this analysis, pupillary synchrony was again computed in 1-s windows using DTW and log-transformed to reduce heteroscedasticity, and dyads’ time series of eye contact and mean engagement were down-sampled to 1 Hz. The model included fixed effects for pupillary synchrony and eye contact, their interaction, and random intercepts for dyads, in order to see how the interplay of eye contact and pupillary synchrony related to dyads’ self-reported engagement.
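The construction of the 1-Hz mean engagement series can be sketched as follows. Ratings were recorded at 10 Hz; the sketch assumes down-sampling by averaging non-overlapping blocks of ten samples and then averaging the two partners' series second by second. The order of these steps and the block-averaging method are assumptions, and the function names are hypothetical.

```python
def downsample_mean(samples, factor):
    """Average consecutive blocks of `factor` samples (10 Hz -> 1 Hz with factor=10).

    A trailing partial block is dropped (assumption).
    """
    n_blocks = len(samples) // factor
    return [sum(samples[i * factor:(i + 1) * factor]) / factor for i in range(n_blocks)]


def mean_dyad_engagement(ratings_a, ratings_b, rate_hz=10):
    """Down-sample each partner's continuous rating to 1 Hz, then average the two partners."""
    a = downsample_mean(ratings_a, rate_hz)
    b = downsample_mean(ratings_b, rate_hz)
    n = min(len(a), len(b))  # align to the shorter rating series
    return [(a[i] + b[i]) / 2 for i in range(n)]
```

The resulting series has one value per second, so it lines up with the 1-s synchrony windows and the down-sampled eye contact series entering the mixed model.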

There was a significant main effect of eye contact on mean engagement [t(28,160) = 2.83, β = 0.028, CI = 0.01 to 0.05, P = 0.006], such that dyads were more engaged during eye contact than in the absence of eye contact. This result was also robust to within-subjects (P = 0.002) and between-subjects (P = 0.02) permutation tests, suggesting that not only are eye contact and engagement significantly related to one another, but they are also related to one another in ways that are unique to the individual structure of a dyad’s conversation.

There was also a significant relationship between pupillary synchrony and mean engagement [t(28,170) = 1.965, β = 0.012, CI = 0 to 0.02, P = 0.05], suggesting that more pupillary synchrony also predicted more dyadic engagement. While this result was robust to a within-subjects permutation test (P = 0.02), we found this result was largely driven by the fact that all dyads tended to become both more synchronous and more engaged over the course of their conversations. Therefore, this effect did not survive a between-subjects permutation test (P = 0.56).

Finally, we found a marginal interaction effect between eye contact and pupillary synchrony on engagement [t(28,150) = −1.83, β = −0.017, CI = −0.04 to 0, P = 0.08]. This interaction suggests that eye contact may act as a moderator for the effects of pupillary synchrony on engagement such that when dyads were not making eye contact, pupillary synchrony and engagement were positively related, but when dyads were making eye contact, pupillary synchrony and engagement were negatively related. This result was robust to a within-subjects permutation test (P = 0.04), but not to a between-subjects permutation test (P = 0.12) (see SI Appendix for a more detailed description of permutation tests and visualizations of all permutation distributions).

Discussion

Here, we demonstrate that eye contact marks moments of shared attention, as measured by dyadic pupillary synchrony, in natural conversation. Furthermore, this link is temporally directed: eye contact does not precede pupillary synchrony, but rather commences as pupillary synchrony peaks and persists through its decline. This temporal relationship suggests that the onset of eye contact may not only be coincident with high shared attention (peak pupillary synchrony) but may also provide a signal of the same for conversation partners. The immediate decline in pupillary synchrony, which persists until eye contact breaks, suggests that eye contact may play a role in disrupting shared attention, perhaps to facilitate independent (nonshared) contributions. Finally, we found that dyads reported being more engaged by their conversations when they were making eye contact compared to when they were not. Together, these findings support the idea that eye contact, or its neural corollaries, may facilitate social interaction by signaling moments of high attentional coupling and then aiding attentional decoupling as needed to maintain engagement.

The prevailing theory in the literature is that synchrony, referring to a wide variety of coupled behaviors, is a precursor to successful communication, shared understanding, and many other prosocial benefits (32, 50–52). Synchrony is, indeed, a robust signal of shared understanding and has been shown to causally increase feelings of rapport (53–55). These effects are strong and compelling, even showing that brain-to-brain synchrony while watching videos predicts friendship (56) and underscoring the need to study interacting humans rather than passive stimuli (57). However, it is not clear that more interpersonal synchrony is always better, regardless of the task.

Our findings here are in line with a growing body of work suggesting that moving out of synchrony may also be an important feature of social interaction. The need to occasionally break synchrony may be especially strong when individual exploration and creativity are required, as with acts of cocreation. More securely attached infants and adults tend to show less synchrony during a mirror game when interacting with their mothers and study partners, respectively, suggesting that more security in relationships might allow for more independent exploration (58, 59). In a joint construction task, where dyads were instructed to build a model car, researchers found that synchrony was associated with more constrained construction strategies, and those dyads who worked more asynchronously (i.e., had less synchronous hand movements while building) created superior model cars (60). A study investigating synchrony in a body movement task found that, while dyads who moved more synchronously experienced more positive affect overall, they also had more trouble with self-regulation of that affect (61). Finally, a study investigating interpersonal coordination during conversation found that dyads decoupled their head movements during conversation in a way that tracked with their status as a speaker or listener (62). Synchrony and asynchrony appear to provide differential benefits and work together to create successful interaction.

These findings are consistent with recent papers demonstrating the tendency for dyads to move continuously in and out of synchrony with one another. For example, even when participants were instructed to synchronize their behavior in an improvisational joint mirroring task, researchers found that interaction quality was maximized when participants at times sacrificed synchrony in favor of more novel and challenging mirroring interactions (63), and a model that accounted for periodically entering and exiting synchrony provided the best fit for their joint motion (64). Building on this finding, Mayo and Gordon (65) proposed a model of interpersonal synchrony in which the tendencies to synchronize and to act independently coexist during social interaction, and argued that the ability to move flexibly between these two states is the marker of a truly adaptive social system. Their model incorporates ideas from complex dynamical systems and treats the tendency to move flexibly in and out of synchrony as a metastable system. They provide evidence for their model by demonstrating that individuals with less severe social symptoms of autism spectrum disorders tend to move in and out of eye contact with their conversation partner in a more metastable way. Our findings mirror and extend this work to natural social interaction, suggesting that eye contact may index coordination between synchrony and asynchrony, and thus between shared and independent thought. In this way, while heightened pupillary synchrony may indicate conversational convergence, eye contact may help coordinate this convergence with independent contributions from each conversation partner. Although we found no differences in how eye contact related to pupillary synchrony within versus between conversation turns, it is possible that other coordinative mechanisms of conversation could predict pupillary synchrony [e.g., topic shifts (66), turn taking (6), and even blinking (67)]. In particular, Turn Construction Units (68, 69), segments of conversation within turns that communicate complete thoughts, could also predict moments of shared attention.

Our study used unconstrained, naturalistic conversation to measure how dyads couple and decouple attention while interacting. We found that eye contact, a ubiquitous and universal feature of conversation, predicts shifts in pupillary synchrony, a measure of shared attention. Specifically, our results suggest that eye contact marks breakpoints in pupillary synchrony, perhaps facilitating movement into and out of attentional alignment and, in so doing, facilitating engaging conversation. These findings raise many questions for further research, in both typical and atypical neurological populations, about how attentional states are modulated during interaction and what downstream consequences this modulation has for how minds engage with each other.

Materials and Methods

Materials.

Participants.

In this study, 186 subjects comprising 93 dyads (mean age: 19.38 y; 120 females) participated. Subjects were recruited from Dartmouth College and were compensated either monetarily or with extra course credit for participation. The study was approved by the Committee for the Protection of Human Subjects Institutional Review Board at Dartmouth College, and informed consent was obtained prior to the start of the study. After the study had concluded, subjects indicated consent to the release of the videos obtained during their conversations for use in scientific presentations or publications. All subjects had normal or corrected-to-normal vision.

Eye-tracking data collection.

Pupil dilation data were collected continuously while dyads engaged in conversation. Pupil diameter was recorded from both eyes at 60 Hz using SensoMotoric Instruments (SMI) wearable eye-tracking glasses or at 200 Hz using Pupil Labs wearable eye-tracking glasses. Dyads were seated across from one another at a distance of ∼3 feet in a luminance-controlled testing room. Lux values were recorded at multiple points in the room to ensure that luminance in the room did not exceed the 150 lx necessary to elicit a luminance-induced pupil dilation (70). We aligned the dyads’ eye-tracking recordings in time using audio obtained from each pair of eye-tracking glasses and FaceSync software (71), which computes the temporal offset between two audio signals by correlating them at each lag within a given window and returns the lag that produces the highest correlation between the two signals.
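The alignment principle described above (choose the lag that maximizes the correlation between two audio recordings) can be illustrated with a brute-force Python sketch. This is not FaceSync's implementation or API; the function names are hypothetical, and for real audio at kilohertz sampling rates an FFT-based cross-correlation would be far faster than this direct search over lags.

```python
import math


def _pearson(x, y):
    """Pearson correlation; returns -inf when either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return -math.inf
    return sxy / math.sqrt(sxx * syy)


def best_lag(ref, other, max_lag):
    """Lag (in samples) that maximizes the correlation between two recordings.

    A positive lag means a sound appears `lag` samples later in `other` than in `ref`.
    """
    best, best_r = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = ref, other[lag:]   # pair ref[t] with other[t + lag]
        else:
            x, y = ref[-lag:], other  # pair ref[t - lag] with other[t]
        n = min(len(x), len(y))
        r = _pearson(x[:n], y[:n])
        if r > best_r:
            best, best_r = lag, r
    return best
```

Dividing the recovered lag by the audio sampling rate gives the offset in seconds, which can then be applied to shift one partner's pupil time series onto the other's clock.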

Ratings of engagement.

After their conversation, participants watched a video recording of their conversation and continuously reported how engaged they were at each moment by moving a slider bar (PsychoPy software) (72). Participants’ continuous engagement ratings were recorded at 10 Hz.

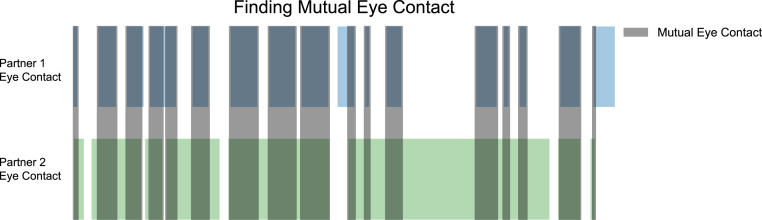

Eye contact annotations.

We annotated moments of eye contact during the conversation using ELAN annotation software (73). Annotations were obtained by watching videos recorded from a camera on the eye-tracking glasses located at each participant's nasion. These videos thus captured the eyes of the participant's conversation partner. Moments of individual eye contact were defined as moments when the partner in the video looked straight at the participant's camera (the video's point of view). Two raters independently annotated each participant's video, and only moments on which the two annotations agreed were accepted as individual eye contact for that participant. Each participant's individual eye contact annotations were then compared with those of their conversation partner to find moments of mutual eye contact (when both participants were looking at each other's eyes at the same time). Henceforth, mutual eye contact will be referred to simply as "eye contact," consistent with the lay use of the term. An illustration of this process can be found in Fig. 6.

Fig. 6.

Annotating instances of eye contact. Individual eye contact for each conversation partner, shown in green and blue, was determined by comparing the moments of individual eye contact marked by each independent annotator and accepting only those moments on which both annotations agreed that eye contact was being made. Mutual eye contact, denoted by the gray bars, was identified by comparing individual eye contact across conversation partners and retaining moments when both partners were looking at each other's eyes.
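Both agreement steps described above (rater agreement within a participant, then agreement across partners) amount to intersecting two sets of time intervals. A minimal sketch, assuming annotations are exported as sorted, non-overlapping (start, end) intervals in seconds; the function name `intersect` is hypothetical and not part of ELAN.

```python
def intersect(a, b):
    """Intersect two sorted lists of non-overlapping (start, end) intervals.

    Returns the intervals covered by both inputs (hypothetical helper).
    """
    out, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:  # keep only overlaps with positive duration
            out.append((start, end))
        # Advance whichever interval finishes first
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return out
```

Usage: `individual_a = intersect(rater1_a, rater2_a)` gives participant A's individual eye contact, and `intersect(individual_a, individual_b)` gives mutual eye contact.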

Because of the way these moments of eye contact were obtained, it is possible that both independent raters mistakenly recorded some moments of face gaze as moments of eye gaze. Previous research has demonstrated that looking at the face area still produces the perception of eye contact (74). If some such moments were included, they would therefore likely have the same dyadic consequences as eye contact, because the recipient would perceive them equivalently.

Procedure.

Conversation phase.

Dyads were seated across from one another and videotaped and eye-tracked while they engaged in a 10-min, unstructured conversation. Before their conversations, dyads received the following instructions:

In this portion of the study, you will be having a conversation. Your eyes will be tracked during this conversation, and the conversation will also be video and audio recorded. Treat this conversation however you normally treat conversations in your daily life. You can talk about anything you would like. I know the glasses look a little funny, but try to ignore them during your conversation. Also, because the glasses don’t work as well when you move around, try to remain still, though you can still gesture and nod your head if that feels natural during your conversation. Continue talking until I come back, around 10 minutes from now.

Engagement rating phase.

After each conversation, a video of the conversation was saved and used to obtain participants’ continuous ratings of how engaged they were during the conversation. Participants were seated at computers in separate rooms and instructed to watch the video of the conversation they just had, while moving a computer mouse-operated slider bar to indicate how engaged they were in the conversation from moment to moment. Participants received the following instructions:

You will be watching the video-taped conversation that you just had. As you watch, think back to how you were feeling during that conversation. Your task is to report how engaging you felt the conversation was. You will make these ratings by moving the computer mouse. These ratings will not be shared with your study partner. Please do your best to accurately report how engaged you felt at each point in time.

Questionnaire phase.

Participants remained seated at the computer in separate rooms and filled out a battery of questions pertaining to their feelings about the conversation and their conversation partner. Participants additionally completed the Autism Spectrum Quotient and the Interpersonal Reactivity Index as measures of individual differences in interpersonal skills.

Data Analysis.

Preprocessing and quality control.

Raw pupil dilation time series were preprocessed for further analysis by first performing linear interpolation over eye blinks and other signal dropout, excluding any subject for whom more than 25% of the data required interpolation (31). The data were then median filtered (fifth order) to remove spikes, low-pass–filtered at 10 Hz, and finally detrended to reduce drift. Preprocessed data were then visually inspected for any remaining spikes, and a final interpolation was performed over these spikes if necessary. Data collected using Pupil Labs eye-tracking glasses were down-sampled to 60 Hz to match the sampling rate of data collected using SMI eye-tracking glasses.
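A minimal sketch of this preprocessing pipeline using NumPy and SciPy, assuming missing samples are coded as NaN. Several parameters are assumptions not given in the text: "fifth order" is read as a 5-sample median kernel, the 10 Hz low-pass is implemented as a fourth-order zero-phase Butterworth filter, detrending is linear, and down-sampling to 60 Hz is applied last with polyphase resampling. The manual inspection step is not modeled, and the function names are hypothetical.

```python
from math import gcd

import numpy as np
from scipy.signal import butter, detrend, filtfilt, medfilt, resample_poly


def interpolate_dropout(x):
    """Linearly interpolate over NaN samples; also return the fraction interpolated."""
    x = np.asarray(x, dtype=float).copy()
    bad = np.isnan(x)
    if bad.any() and not bad.all():
        idx = np.arange(len(x))
        x[bad] = np.interp(idx[bad], idx[~bad], x[~bad])
    return x, bad.mean()


def preprocess_pupil(x, fs, max_interp=0.25, cutoff_hz=10.0, target_fs=60):
    """Return a cleaned series at target_fs, or None if the subject is excluded."""
    x, frac = interpolate_dropout(x)
    if frac > max_interp:
        return None  # more than 25% of samples needed interpolation
    x = medfilt(x, kernel_size=5)                      # suppress spikes
    b, a = butter(4, cutoff_hz, btype="low", fs=fs)    # assumed filter order
    x = filtfilt(b, a, x)                              # zero-phase low-pass
    x = detrend(x, type="linear")                      # remove slow drift
    if fs != target_fs:
        g = gcd(int(fs), int(target_fs))
        x = resample_poly(x, int(target_fs) // g, int(fs) // g)  # e.g. 200 -> 60 Hz
    return x
```

For a 200 Hz Pupil Labs recording, the resampling step reduces to `resample_poly(x, 3, 10)`, which includes its own anti-aliasing filter.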

Because of the naturalistic nature of the experiment, it proved difficult to collect dyads in which both partners fell below the conservative 25% interpolation threshold. Movement during the conversations and individual variation in eye-tracking quality, in addition to the normal dropout due to eye blinks, caused significant data loss for many participants. For two participants, the eye-tracking video data were corrupted, so no eye contact annotations could be obtained for them. From the original dataset, 94 subjects comprising 47 dyads (mean age: 19.78 y; 53 females) were included for further analysis.

Computing pupillary synchrony: DTW.

DTW is an algorithm used to compare signals that may be offset in time (75). We used DTW to measure synchrony between dyads' pupils during conversation, following the method used by Kang and Wheatley (30, 31). The DTW algorithm divides two signals into a user-defined number of segments, each representing a window of time. Within each segment, DTW computes the distance between time points of the two signals; here this was a cosine-based measure (cosine distance, i.e., 1 − cosine similarity), although other distance metrics, such as Euclidean distance, can also be used. DTW then evaluates these distances across all pairings of time points within the segments. The pairing that yields the smallest distance is deemed to be the point at which the content of the two signals best aligns, and both signals are adjusted according to that comparison. Each adjustment incurs a penalty, or a "cost" of realignment. The sum of these penalties yields an overall cost value, which represents the overall effort involved in warping one signal onto the other. Higher cost values indicate greater dissimilarity between two patterns; thus, "synchrony," as defined here, refers to the inverse of the DTW cost.

Because of the way DTW is calculated, the best fit of signal 1 onto signal 2 can be found in a time-varying way, allowing small offsets in time to be measured and understood as synchrony. We chose a 1-s sampling window for calculating DTW to allow for such offsets while also capturing more instances of eye contact than the 3-s window used in previous work computing pupillary synchrony with DTW (31).
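The windowed procedure above can be sketched as a classic dynamic-programming DTW applied to successive 1-s windows, yielding one synchrony value per second. This is an illustrative sketch, not the Kang and Wheatley implementation: because cosine distance between single scalar samples reflects only their signs, it uses the absolute difference (Euclidean distance in one dimension) as the local cost, and it inverts cost by simple negation, which is one assumed choice among several. The function names are hypothetical.

```python
import numpy as np


def dtw_cost(a, b):
    """Accumulated DTW cost between 1-D sequences a and b (absolute-difference local cost)."""
    n, m = len(a), len(b)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: repeat a's point, repeat b's point, or advance both
            acc[i, j] = d + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return acc[n, m]


def windowed_synchrony(x, y, fs=60, win_s=1.0):
    """Split two pupil series into win_s-second windows and return -DTW cost per window."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    w = int(round(fs * win_s))
    n_win = min(len(x), len(y)) // w
    costs = np.array([dtw_cost(x[k * w:(k + 1) * w], y[k * w:(k + 1) * w])
                      for k in range(n_win)])
    return -costs  # higher value = lower warping cost = more synchrony
```

Because the warping path can repeat samples, a partner's pupil response that lags by a fraction of a second still yields a low cost within the window.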

Multilevel vector autoregression.

To investigate the directionality of the relationship between eye contact, pupil size, and pupillary synchrony, we used the R package mlVAR (76), which is used for the analysis of multivariate time series using a network framework. This method allows for the estimation of temporal, contemporaneous, and between-subjects networks of relationships between time series variables, where variables are “nodes” in the network, and the partial correlations between those variables are “edges.” The temporal network is estimated by calculating the regression coefficients obtained by predicting one variable at time t from another variable at time t − 1. Once the temporal network has been estimated, the contemporaneous network is calculated by taking partial correlations of the residuals from the temporal network. Finally, the between-subjects network is calculated by taking partial correlations of mean-centered predictors and adding person-means as level 2 predictors.
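mlVAR itself is an R package; as a single-level illustration of what its temporal and contemporaneous networks estimate, the Python sketch below fits a lag-1 vector autoregression to one multivariate series (centered on its own means) by ordinary least squares, then converts the residual precision matrix into partial correlations. It omits the multilevel random-effects structure and the between-subjects network, treats all variables as continuous, and the function name `lag1_var` is hypothetical.

```python
import numpy as np


def lag1_var(data):
    """Single-subject lag-1 VAR.

    data: (T, k) array, one column per variable (e.g. eye contact, pupil size, synchrony).
    Returns (B, pcor): B[i, j] is the effect of variable j at t-1 on variable i at t
    (temporal network); pcor holds partial correlations of the residuals
    (contemporaneous network).
    """
    X = np.asarray(data, dtype=float)
    X = X - X.mean(axis=0)            # within-person centering
    prev, nxt = X[:-1], X[1:]
    coef, *_ = np.linalg.lstsq(prev, nxt, rcond=None)  # nxt ~ prev @ coef
    B = coef.T
    resid = nxt - prev @ coef
    P = np.linalg.inv(np.cov(resid, rowvar=False))     # precision matrix
    d = np.sqrt(np.diag(P))
    pcor = -P / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return B, pcor
```

Estimating the contemporaneous network from residuals, as the text describes, separates same-moment associations from the lagged effects already captured in `B`.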

Event-related analysis.

To examine fluctuations of synchrony around single instances of eye contact, we performed an event-related analysis, treating each instance of eye contact as an event and computing averages of pupillary synchrony across subjects before and during these instances. First, we extracted all instances of eye contact lasting 1 s or longer from each dyad (mean number of instances per conversation = 71.6, SD = 25.08). We restricted eye contact in this way because our pupillary synchrony time series were calculated at 1 Hz (see Computing pupillary synchrony: DTW, above, for a detailed description of our calculation). At this sampling rate, the onset and offset of an instance of eye contact lasting less than 1 s can fall within the same pupillary synchrony window, in which case the onset and offset pupillary synchrony values are identical. Because we were separately interested in pupillary synchrony at the onset and at the offset of eye contact, including such instances could obscure any true difference between synchrony at onset and synchrony at offset.

We then extracted pupillary synchrony in 1-s epochs for the 4 s before and the 4 s after both the onset and the offset of each eye contact instance. We chose this window after plotting synchrony around eye contact over a range of epoch lengths and finding that, regardless of length, the same 4-s fluctuation around the onset and offset of eye contact remained (SI Appendix). Finally, we z-scored each dyad's averaged pupillary synchrony during these events to highlight the changes surrounding each instance of eye contact.
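A minimal sketch of this epoching for one dyad, assuming the 1 Hz synchrony series is indexed so that sample k covers seconds [k, k + 1), that eye contact instances are (onset, offset) pairs in seconds, and that "4 s before and after" means nine 1-s samples centered on the window containing the event. Epochs that run past the edges of the recording are dropped; these choices and the function name are illustrative assumptions.

```python
import numpy as np


def event_locked_average(sync, events, half_width=4, min_dur=1.0, anchor="onset"):
    """Average synchrony epochs around eye contact onsets (or offsets), then z-score.

    sync: 1 Hz synchrony series for one dyad.
    events: list of (onset_s, offset_s) eye contact instances.
    Returns a z-scored epoch of length 2 * half_width + 1, or None if no events qualify.
    """
    sync = np.asarray(sync, dtype=float)
    epochs = []
    for onset, offset in events:
        if offset - onset < min_dur:
            continue  # onset and offset could share one synchrony window
        t = onset if anchor == "onset" else offset
        k = int(np.floor(t))
        lo, hi = k - half_width, k + half_width + 1
        if lo < 0 or hi > len(sync):
            continue  # epoch would run off the recording
        epochs.append(sync[lo:hi])
    if not epochs:
        return None
    mean_epoch = np.mean(epochs, axis=0)
    return (mean_epoch - mean_epoch.mean()) / mean_epoch.std()
```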

Extracting Conversation Turns.

To investigate whether the observed fluctuations of synchrony around eye contact could be explained by turn-taking, another ubiquitous form of coordination during dyadic conversation, we extracted time points during dyads' conversations where speaking switched from one partner to the other. To do this, we first created transcripts of dyads' conversations using Scribie audio transcription services. These transcripts contained timestamps denoting when turn switches occurred. We used these timestamps to determine whether eye contact had occurred at a turn switch. Because eye contact often did not line up exactly with the onset of a turn switch in our data, and has historically been found to begin before the actual switch from one conversation partner to the other (9), we counted any instance of eye contact occurring within 1 s of a turn switch as eye contact occurring between conversation turns.
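The 1-s tolerance rule can be sketched as follows, assuming turn switches are given as timestamps in seconds and that an instance of eye contact "occurs within 1 s" of a switch when its interval overlaps the window from 1 s before to 1 s after that switch. This overlap criterion and the function name are assumptions for illustration.

```python
import bisect


def eye_contact_at_turns(eye_contact, switches, tolerance=1.0):
    """Flag each (onset, offset) eye contact interval lying within `tolerance` s of a turn switch."""
    switches = sorted(switches)
    flags = []
    for onset, offset in eye_contact:
        # Interval overlaps [s - tol, s + tol] iff onset - tol <= s <= offset + tol
        i = bisect.bisect_left(switches, onset - tolerance)
        flags.append(i < len(switches) and switches[i] <= offset + tolerance)
    return flags
```

Usage: `eye_contact_at_turns([(2.0, 3.0), (20.0, 21.5)], [4.0, 30.0])` flags the first instance, which ends exactly 1 s before the switch at 4.0 s, and not the second.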

Supplementary Material

Acknowledgments

We thank Oriana Gamper, Maria Goldman, Peter Kaiser, and Brody McNutt for assistance with data collection and eye contact annotations.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2106645118/-/DCSupplemental.

Data Availability

All pupillometry, eye contact, and engagement data, de-identified conversation transcript timestamps, and custom scripts associated with this study are available at https://github.com/sophiewohltjen/eyeContact-in-conversation (77).

References

- 1. Frith U., Frith C., The social brain: Allowing humans to boldly go where no other species has been. Philos. Trans. R. Soc. Lond. B Biol. Sci. 365, 165–176 (2010).

- 2. Heldner M., Edlund J., Pauses, gaps and overlaps in conversations. J. Phonetics 38, 555–568 (2010).

- 3. Levinson S. C., Torreira F., Timing in turn-taking and its implications for processing models of language. Front. Psychol. 6, 731 (2015).

- 4. Stolk A., Verhagen L., Toni I., Conceptual alignment: How brains achieve mutual understanding. Trends Cogn. Sci. 20, 180–191 (2016).

- 5. Mastroianni A. M., Gilbert D. T., Cooney G., Wilson T. D., Do conversations end when people want them to? Proc. Natl. Acad. Sci. U.S.A. 118, 1–9 (2021).

- 6. Mortensen C. D., Communication Theory (Transaction Publishers, 2011).

- 7. Hedge B. J., Everitt B. S., Frith C. D., The role of gaze in dialogue. Acta Psychol. (Amst.) 42, 453–475 (1978).

- 8. Hirvenkari L., et al., Influence of turn-taking in a two-person conversation on the gaze of a viewer. PLoS One 8, e71569 (2013).

- 9. Kendon A., Some functions of gaze-direction in social interaction. Acta Psychol. 26, 22–63 (1967).

- 10. Binetti N., Harrison C., Coutrot A., Johnston A., Mareschal I., Pupil dilation as an index of preferred mutual gaze duration. R. Soc. Open Sci. 3, 160086 (2016).

- 11. Jarick M., Bencic R., Eye contact is a two-way street: Arousal is elicited by the sending and receiving of eye gaze information. Front. Psychol. 10, 1262 (2019).

- 12. Mazur A., et al., Physiological aspects of communication via mutual gaze. AJS 86, 50–74 (1980).

- 13. Abney D. H., Suanda S. H., Smith L. B., Yu C., What are the building blocks of parent-infant coordinated attention in free-flowing interaction? Infancy 25, 871–887 (2020).

- 14. Conty L., Tijus C., Hugueville L., Coelho E., George N., Searching for asymmetries in the detection of gaze contact versus averted gaze under different head views: A behavioural study. Spat. Vis. 19, 529–545 (2006).

- 15. Senju A., Hasegawa T., Direct gaze captures visuospatial attention. Vis. Cogn. 12, 127–144 (2005).

- 16. Aston-Jones G., Rajkowski J., Kubiak P., Alexinsky T., Locus coeruleus neurons in monkey are selectively activated by attended cues in a vigilance task. J. Neurosci. 14, 4467–4480 (1994).

- 17. Rajkowski J., Correlations between locus coeruleus (LC) neural activity, pupil diameter and behavior in monkey support a role of LC in attention. Society for Neuroscience Abstracts 19, 974 (1993).

- 18. Alnæs D., et al., Pupil size signals mental effort deployed during multiple object tracking and predicts brain activity in the dorsal attention network and the locus coeruleus. J. Vis. 14, 1 (2014).

- 19. Gilzenrat M. S., Nieuwenhuis S., Jepma M., Cohen J. D., Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cogn. Affect. Behav. Neurosci. 10, 252–269 (2010).

- 20. Joshi S., Li Y., Kalwani R. M., Gold J. I., Relationships between pupil diameter and neuronal activity in the locus coeruleus, colliculi, and cingulate cortex. Neuron 89, 221–234 (2016).

- 21. Hoeks B., Levelt W. J. M., Pupillary dilation as a measure of attention: A quantitative system analysis. Behav. Res. Meth. Instrum. Comput. 25, 16–26 (1993).

- 22. Kang O. E., Huffer K. E., Wheatley T. P., Pupil dilation dynamics track attention to high-level information. PLoS One 9, e102463 (2014).

- 23. Kang O., Banaji M. R., Pupillometric decoding of high-level musical imagery. Conscious. Cogn. 77, 102862 (2020).

- 24. van den Brink R. L., Murphy P. R., Nieuwenhuis S., Pupil diameter tracks lapses of attention. PLoS One 11, e0165274 (2016).

- 25. Smallwood J., et al., Pupillometric evidence for the decoupling of attention from perceptual input during offline thought. PLoS One 6, e18298 (2011).

- 26. Wierda S. M., van Rijn H., Taatgen N. A., Martens S., Pupil dilation deconvolution reveals the dynamics of attention at high temporal resolution. Proc. Natl. Acad. Sci. U.S.A. 109, 8456–8460 (2012).

- 27. Shteynberg G., Shared attention. Perspect. Psychol. Sci. 10, 579–590 (2015).

- 28. Prochazkova E., et al., Pupil mimicry promotes trust through the theory-of-mind network. Proc. Natl. Acad. Sci. U.S.A. 115, E7265–E7274 (2018).

- 29. Van Breen J. A., De Dreu C. K. W., Kret M. E., Pupil to pupil: The effect of a partner's pupil size on (dis)honest behavior. J. Exp. Soc. Psychol. 74, 231–245 (2018).

- 30. Kang O., Wheatley T., Pupil dilation patterns reflect the contents of consciousness. Conscious. Cogn. 35, 128–135 (2015).

- 31. Kang O., Wheatley T., Pupil dilation patterns spontaneously synchronize across individuals during shared attention. J. Exp. Psychol. Gen. 146, 569–576 (2017).

- 32. Hasson U., Frith C. D., Mirroring and beyond: Coupled dynamics as a generalized framework for modelling social interactions. Philos. Trans. R. Soc. Lond. Ser. B, Biol. Sci. 371, 1693 (2016).

- 33. Leong V., et al., Speaker gaze increases information coupling between infant and adult brains. Proc. Natl. Acad. Sci. U.S.A. 114, 13290–13295 (2017).

- 34. Dikker S., et al., Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 27, 1375–1380 (2017).

- 35. Kinreich S., Djalovski A., Kraus L., Louzoun Y., Feldman R., Brain-to-brain synchrony during naturalistic social interactions. Sci. Rep. 7, 17060 (2017).

- 36. Konvalinka I., et al., Synchronized arousal between performers and related spectators in a fire-walking ritual. Proc. Natl. Acad. Sci. U.S.A. 108, 8514–8519 (2011).

- 37. Hirsch J., Zhang X., Noah J. A., Ono Y., Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. Neuroimage 157, 314–330 (2017).

- 38. Kelley M. S., Noah J. A., Zhang X., Scassellati B., Hirsch J., Comparison of human social brain activity during eye-contact with another human and a humanoid robot. Front. Robot. AI 7, 599581 (2021).

- 39. Koike T., et al., Neural substrates of shared attention as social memory: A hyperscanning functional magnetic resonance imaging study. Neuroimage 125, 401–412 (2016).

- 40. Koike T., Sumiya M., Nakagawa E., Okazaki S., Sadato N., What makes eye contact special? Neural substrates of on-line mutual eye-gaze: A hyperscanning fMRI study. eNeuro 6, ENEURO.0284-18.2019 (2019).

- 41. Noah J. A., et al., Real-time eye-to-eye contact is associated with cross-brain neural coupling in angular gyrus. Front. Hum. Neurosci. 14, 19 (2020).

- 42. Silbert L. J., Honey C. J., Simony E., Poeppel D., Hasson U., Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc. Natl. Acad. Sci. U.S.A. 111, E4687–E4696 (2014).

- 43. Stephens G. J., Silbert L. J., Hasson U., Speaker-listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U.S.A. 107, 14425–14430 (2010).

- 44. Chen J., et al., Shared memories reveal shared structure in neural activity across individuals. Nat. Neurosci. 20, 115–125 (2017).

- 45. Bögels S., Magyari L., Levinson S. C., Neural signatures of response planning occur midway through an incoming question in conversation. Sci. Rep. 5, 12881 (2015).

- 46. Redcay E., Schilbach L., Using second-person neuroscience to elucidate the mechanisms of social interaction. Nat. Rev. Neurosci. 20, 495–505 (2019).

- 47. Schilbach L., et al., Toward a second-person neuroscience. Behav. Brain Sci. 36, 393–414 (2013).

- 48. Bates D., Maechler M., Bolker B., Walker S., Fitting linear mixed-effects models using lme4. J. Stat. Software 67, 1–48 (2015).

- 49. Rutter D. R., Stephenson G. M., Ayling K., White P. A., The timing of looks in dyadic conversation. Br. J. Soc. Clin. Psychol. 17, 17–21 (1978).

- 50. Launay J., Tarr B., Dunbar R. I. M., Synchrony as an adaptive mechanism for large-scale human social bonding. Ethology 122, 779–789 (2016).

- 51. Mogan R., Fischer R., Bulbulia J. A., To be in synchrony or not? A meta-analysis of synchrony's effects on behavior, perception, cognition and affect. J. Exp. Soc. Psychol. 72, 13–20 (2017).

- 52. Wheatley T., Kang O., Parkinson C., Looser C. E., From mind perception to mental connection: Synchrony as a mechanism for social understanding. Soc. Personal. Psychol. Compass 6, 589–606 (2012).

- 53. Hove M. J., Risen J. L., It's all in the timing: Interpersonal synchrony increases affiliation. Soc. Cogn. 27, 949–960 (2009).

- 54. Ramseyer F., Tschacher W., Nonverbal synchrony in psychotherapy: Coordinated body movement reflects relationship quality and outcome. J. Consult. Clin. Psychol. 79, 284–295 (2011).

- 55. Wiltermuth S. S., Heath C., Synchrony and cooperation. Psychol. Sci. 20, 1–5 (2009).

- 56. Parkinson C., Kleinbaum A. M., Wheatley T., Similar neural responses predict friendship. Nat. Commun. 9, 332 (2018).

- 57. Hari R., Henriksson L., Malinen S., Parkkonen L., Centrality of social interaction in human brain function. Neuron 88, 181–193 (2015).

- 58. Beebe B., Steele M., How does microanalysis of mother–infant communication inform maternal sensitivity and infant attachment? Attach. Hum. Dev. 15, 583–602 (2013).

- 59. Feniger-Schaal R., et al., Would you like to play together? Adults' attachment and the mirror game. Attach. Hum. Dev. 18, 33–45 (2016).

- 60. Wallot S., Mitkidis P., McGraw J. J., Roepstorff A., Beyond synchrony: Joint action in a complex production task reveals beneficial effects of decreased interpersonal synchrony. PLoS One 11, e0168306 (2016).

- 61. Galbusera L., Finn M. T. M., Tschacher W., Kyselo M., Interpersonal synchrony feels good but impedes self-regulation of affect. Sci. Rep. 9, 14691 (2019).

- 62. Hale J., Ward J. A., Buccheri F., Oliver D., Hamilton A. F. C., Are you on my wavelength? Interpersonal coordination in naturalistic conversations. J. Nonverbal Behav. 44, 63–83 (2020).

- 63. Ravreby I., Shilat Y., Yeshurun Y., Reducing synchronization to increase interest improves interpersonal liking. bioRxiv [preprint] (2021). https://www.biorxiv.org/content/10.1101/2021.06.30.450608v1.full.pdf. Accessed 7 July 2021.

- 64. Dahan A., Noy L., Hart Y., Mayo A., Alon U., Exit from synchrony in joint improvised motion. PLoS One 11, e0160747 (2016).

- 65. Mayo O., Gordon I., In and out of synchrony—Behavioral and physiological dynamics of dyadic interpersonal coordination. Psychophysiology 57, e13574 (2020).

- 66. Egbert M. M., Schisming: The transformation from a single conversation to multiple conversations. Res. Lang. Soc. Interact. 30, 1–51 (1997).

- 67. Hömke P., Holler J., Levinson S. C., Eye blinking as addressee feedback in face to face conversation. Res. Lang. Soc. Interact. 50, 54–70 (2017).

- 68. Clayman S. E., "Turn-constructional units and the transition-relevance place" in The Handbook of Conversation Analysis, Sidell J., Stivers T., Eds. (Blackwell Publishing, 2013), pp. 151–166.

- 69. Sacks H., Schegloff E. A., Jefferson G., "A simplest systematics for the organization of turn taking for conversation" in Studies in the Organization of Conversational Interaction, Schenkein J., Ed. (Elsevier, 1978), pp. 7–55.

- 70. Maqsood F., Effects of varying light conditions and refractive error on pupil size. Cogent Med. 4, 1338824 (2017).

- 71. Cheong J. H., Brooks S., Chang L. J., FaceSync: Open source framework for recording facial expressions with head-mounted cameras. F1000 Res. 8, 702 (2019).

- 72. Peirce J. W., PsychoPy—Psychophysics software in Python. J. Neurosci. Methods 162, 8–13 (2007).

- 73. Brugman H., Russel A., Annotating Multi-Media/Multi-Modal Resources with ELAN (LREC, 2004).

- 74. Rogers S. L., Guidetti O., Speelman C. P., Longmuir M., Phillips R., Contact is in the eye of the beholder: The eye contact illusion. Perception 48, 248–252 (2019).

- 75. Berndt D. J., Clifford J., Using dynamic time warping to find patterns in time series. KDD Workshop 48, 359–370 (1994).

- 76. Epskamp S., Deserno M. K., Bringmann L. F., mlVAR: Multi-level vector autoregression. R Package Version 0.4 (2017). https://CRAN.R-project.org/package=mlVAR. Accessed 3 September 2021.

- 77. Wohltjen S., Wheatley T., eyeContact-in-conversation. GitHub. https://github.com/sophiewohltjen/eyeContact-in-conversation. Deposited 6 April 2021.
