Abstract

Predicting postoperative survival of lung cancer patients (LCPs) is an important problem of medical decision-making. However, the imbalanced distribution of patient survival in the dataset increases the difficulty of prediction. Although the synthetic minority oversampling technique (SMOTE) can be used to deal with imbalanced data, it cannot identify data noise. On the other hand, many studies use a support vector machine (SVM) combined with resampling technology to deal with imbalanced data. However, most studies require manual setting of SVM parameters, which makes it difficult to obtain the best performance. In this paper, a hybrid improved SMOTE and adaptive SVM method is proposed for imbalance data to predict the postoperative survival of LCPs. The proposed method is divided into two stages: in the first stage, the cross-validated committees filter (CVCF) is used to remove noise samples to improve the performance of SMOTE. In the second stage, we propose an adaptive SVM, which uses fuzzy self-tuning particle swarm optimization (FPSO) to optimize the parameters of SVM. Compared with other advanced algorithms, our proposed method obtains the best performance with 95.11% accuracy, 95.10% G-mean, 95.02% F1, and 95.10% area under the curve (AUC) for predicting postoperative survival of LCPs.

1. Introduction

Lung cancer (LC) is the deadliest cancer in the world. More than 85% of lung cancer patients are diagnosed with non-small-cell LC [1]. Surgical resection is the standard and most effective treatment for LC stage I, stage II, and nonsmall cell stage III A [1]. A major problem of the clinical decision on LC operation is to select candidates for surgery based on the patient's short-term and long-term risks and benefits, where survival time is one of the most important measures. Accurately predicting a patient's survival after surgery can help doctors make better treatment decisions. At the same time, it can help patients better understand their conditions to have good psychological expectations and financial preparation.

In recent years, more and more data-driven methods have been used to predict the postoperative survival of LCPs. In terms of statistical methods, Kaplan–Meier curves, multivariable logistic regression, and Cox regression are the three most widely used statistical methods to predict survival or complications for LCPs [2]. However, taking into account the shortcomings of traditional statistical methods and the incompleteness of medical data, data mining and machine learning techniques are introduced in recent years. Mangat and Vig [3] proposed an association rule algorithm based on a dynamic particle swarm optimizer, and the classification accuracy is 82.18%. Saber Iraji [4] compared the accuracy of adaptive fuzzy neural networks, extreme learning machine, and neural networks for predicting the 1-year postoperative survival of LCPs. The results show that sensitivity (90.05%) and specificity (81.57%) of an extreme learning machine are the highest, respectively. Tomczak et al. [5] used the boosted support vector machine (SVM) algorithm to predict the postoperative survival of LCPs. This algorithm combines the advantages of ensemble learning and cost-sensitive SVM, and the G-mean can reach 65.73%. As can be seen from the previous research, most of them ignore the impact of imbalanced data distribution, which may reduce the performance of classifiers.

Class imbalance refers to the phenomenon in which one class of data in a dataset is much larger than the others [6]. Standard machine learning classifiers are effective for balanced data, but they are not good for imbalanced data. Specifically, with the progress of medical technology, the number of long-term survivors after surgery for LCPs is much larger than that of short-term deaths. This will lead to higher prediction accuracy for survivors (majority class) and poorer recognition for deceases (minority class). Therefore, it is necessary to propose a method that has good classification performance for both survivors and deceased ones for predicting postoperative survival of LCPs.

During the past decades, the imbalanced data classification problem has widely become a matter of concern and has been intensively researched. The existing papers on imbalanced data processing methods have two main research directions: data level and algorithm level [7]. The data-level processing methods create a balanced class distribution by resampling the input data. Algorithm-level processing methods mainly involve two aspects: ensemble learning and cost-sensitive learning. Among these imbalanced data processing methods, the synthetic minority oversampling technique (SMOTE) is one of the most widely used methods, as it is relatively simple and effective [8]. However, it is likely to be unsatisfactory or even counterproductive if SMOTE is used alone, which is because its blind oversampling ignores the distribution of samples, such as the existence of noise [9, 10]. To solve this problem, many approaches are proposed to improve SMOTE. Ramentol et al. [11] combined rough set theory with SMOTE and proposed the SMOTE-RSB algorithm. SMOTE-RSB first uses SMOTE for oversampling and then removes noise and outliers in the dataset based on rough set theory. SSMNFOS [12] is a hybrid method based on stochastic sensitivity measurement (SSM) noise filtering and oversampling, which can improve the robustness of the oversampling method with respect to noise samples. The CURE-SMOTE [13] uses CURE (clustering using representatives) to cluster minority samples for removing noise and outliers and then uses SMOTE to insert artificial synthetic samples between representative samples and central samples to balance the dataset. However, most of these methods need to set the noise threshold through prior parameters, which increases the risk of misidentification of noise. In addition, some researchers consider ensemble filtering methods, which have been proven to be generally more efficient than single filters [14]. In this paper, we propose to use the cross-validated committees filter (CVCF) to detect and remove noise before applying SMOTE and record this method as CVCF-SMOTE. CVCF is an ensemble-based filter, which can reduce the risk of error in the threshold setting of prior parameters [15].

In addition, SVM as one of the most advanced classifiers has not been well used to predict postoperative survival of LC. In the previous research, SVM has been widely used in statistical classification and regression analysis due to its excellent performance [16]. Considering the limitations of SVM on imbalanced data, some studies combine resampling technology and SVM to deal with imbalanced data. D'Addabbo and Maglietta [17] proposed a method combining parallel selective sampling and SVM (PSS-SVM) to process imbalanced big data. Experimental results show that the performance of PSS-SVM is better than that of SVM and RUSBoost classifiers. Huang et al. [18] designed an undersampling technique based on clustering and combined it with optimized SVM to deal with imbalanced data. The classification performance of SVM is improved by the linear combination of SVM based on a mixed kernel. Fan et al. [19] proposed a hybrid technology combining principal component analysis (PCA), SMOTE, and SVM to diagnose chiller fault. Experimental results prove that this hybrid technology can improve the overall performance of chiller fault diagnosis.

However, these studies usually require a manual setting of SVM parameters, which may lead to failure to obtain the best experimental results. The standard SVM has a limitation that its performance depends on the selection of initial parameters. Some studies optimize the parameters of SVM through evolutionary calculations which have achieved good results. In these optimization algorithms, the particle swarm optimization- (PSO-) optimized SVM has been widely used with promising results due to its simplicity and fast convergence [20]. With the development of PSO technology, some improved PSO algorithms are used to optimize SVM. Wei et al. [21] proposed a binary PSO-optimized SVM method for feature selection, which overcomes the problem of premature convergence and obtained high-quality features. A switching delayed particle swarm optimization- (SDPSO-) optimized SVM is proposed to diagnose Alzheimer's disease [22]. Experimental results show that the proposed method outperforms several other variants of SVM and has obtained excellent classification accuracy. However, these methods often require parameter settings for PSO or improved PSO, such as particle size and inertial weight. In general, getting the best settings is complicated and time-consuming. If the PSO parameters are set improperly, it will even reduce the performance of the SVM.

In recent years, many new metaheuristics techniques have been proposed, such as Monarch Butterfly Optimization (MBO) [23], slime mould algorithm [24], Moth Search (MS) [25], Hunger Games Search (HGS) [26], and Harris Hawks Optimizer (HHO) [27]. However, most of these methods require users to tune parameters to achieve satisfactory performance. Fuzzy self-tuning PSO (FPSO) is a kind of setting-free adaptive PSO proposed in recent years [28]. The advantage of FPSO is that every particle is adaptively adjusted during the optimization process without any PSO expertise and parameter settings. Moreover, experimental results show that FPSO is better than several previous competitors in convergence speed and finding optimal solution aspects. Based on the above considerations, the FPSO algorithm is exploited to optimize the parameters of SVM, which leads to a novel FPSO-SVM classification algorithm.

Based on the improved SMOTE and FPSO-SVM, we propose a two-stage hybrid method to improve the performance of the postoperative survival prediction of LCPs. In the first stage, CVCF is used to remove noise samples to improve the performance of SMOTE. Then, SMOTE is adopted to handle the imbalanced nature of the dataset. In the second stage, we apply FPSO-SVM to predict the postoperative survival of LCPs. The experimental results show that the proposed hybrid method outperforms other comparative state-of-the-art algorithms. This hybrid method can effectively improve the accuracy of survival prediction after LC surgery and provide reliable medical decision-making support for doctors and patients. Our contributions are summarized as follows:

A novel hybrid method that combines improved SMOTE with adaptive SVM is proposed for predicting postoperative survival of LCPs

We apply CVCF to clean up data noise to improve the performance of SMOTE

FPSO is used to optimize the parameters of SVM and achieve an adaptive SVM

The proposed hybrid method not only performs higher predictive accuracy than other compared algorithms for predicting postoperative survival of LCPs but also has better G-mean, F1, and area under the curve (AUC)

The rest of this paper is as follows: Section 2 shows the materials and methods. The experiment design, performance metrics, and experimental results are described in Section 3. A brief summary is described in Section 4.

2. Materials and Methods

2.1. Data Description

In this paper, the thoracic surgery dataset in Zięba et al. [5], is selected to predict the postoperative survival of LCPs. Data were collected from the Wroclaw Thoracic Surgery Center. These patients underwent lung resection for primary LC from 2007 to 2011. It contains 470 samples with an imbalance rate of 5.71. There are 400 patients who survived more than one year and 70 patients who survived less than one year in this dataset. Table 1 shows the features of the dataset. These features were selected from 36 preoperative predictors by the information gain method and were used to predict the postoperative survival expectancy. Our task is to predict whether the survival time in patients after surgery was greater than one year.

Table 1.

Feature details of the thoracic surgery dataset.

| Feature ID | Description | Type of attribute |

|---|---|---|

| 1 | Size of the original tumor, from OC11 (smallest) to OC14 (largest) | Nominal |

| 2 | Diagnosis (specific combination of ICD-10 codes for primary and secondary as well multiple tumors if any) | Nominal |

| 3 | Forced vital capacity | Numeric |

| 4 | Pain (presurgery) | Binary |

| 5 | Age at surgery | Numeric |

| 6 | Performance status | Nominal |

| 7 | Weakness (presurgery) | Binary |

| 8 | Dyspnoea (presurgery) | Binary |

| 9 | Cough (presurgery) | Binary |

| 10 | Haemoptysis (presurgery) | Binary |

| 11 | Peripheral arterial diseases | Binary |

| 12 | MI up to 6 months | Binary |

| 13 | Asthma | Binary |

| 14 | Volume that has been exhaled at the end of the first second of forced expiration | Numeric |

| 15 | Smoking | Binary |

| 16 | Type 2 diabetes mellitus | Binary |

| 17 | 1-year survival period (true value if died) | Binary |

2.2. Data Preprocessing

2.2.1. CVCF for Noise Cleaning

Although SMOTE is one of the most widely used methods for imbalanced data processing, it has some drawbacks in dealing with data noise. A major concern is that SMOTE may exacerbate the presence of noise in the data, as shown in Figure 1. Given the good performance of CVCF, we consider using it to improve SMOTE.

Figure 1.

Using SMOTE alone may indiscriminately aggravate the noise.

The CVCF algorithm is a well-known representative of an ensemble-based noise filter [29]. It induces multiple single classifiers by means of cross-validation. Afterward, samples mislabeled by all classifiers (or most classifiers) will be marked as noise and removed from the dataset. Choosing an appropriate base classifier is a key operation to ensure the excellent performance of CVCF. In this paper, we choose the C4.5 algorithm as the base classifier of CVCF because it has better robustness to noise data and suitability for ensemble learning [30, 31].

C4.5 is an improved version of the ID3 algorithm [32]. It improves ID3 by handing numeric attributes and missing values and by introducing pruning. In addition, essentially different from the ID3, the information gain ratio is used to select split attributes in C4.5, which can be denoted by

| (1) |

where InfoGainRatio(S, A) represents the information gain ratio of attribute A in dataset S. InfoGain(S, A) is the information gain of dataset S after splitting through attribute A and can be denoted by

| (2) |

where Info(S) is the entropy of dataset S. Info(S, A) is the conditional entropy about attribute A. SpiltInfo(S, A) denotes the splitting information of attribute A and is expressed by

| (3) |

where |S| represents the number of samples of dataset S. |Si| indicates the number of samples of subset i after the original dataset is divided into m subsets according to the attribute value of A.

2.2.2. SMOTE to Balance Data

The core idea of SMOTE is to insert artificial samples of similar values into the minority class, thereby improving the imbalanced distribution of classes. More specifically, the sampling ratio is set firstly, and then, the k nearest neighbors of each minority sample are found. Finally, according to equation (4), one of the neighbors is randomly selected to generate a synthetic sample that is put back into the dataset until the sampling number reaches the set ratio. The synthesized new sample is calculated as follows:

| (4) |

where Xnew represents a new synthetic sample, X is the feature vector for each sample in the minority class, and Xi is the i-th nearest neighbor of sample X. ∂ is a random number between 0 and 1.

2.3. The Proposed FPSO-Optimized SVM (FPSO-SVM)

2.3.1. SVM

SVM is a supervised learning classifier based on statistical theory and structural risk optimization [33]. SVM is not prone to overfitting and can handle high-dimensional data well. The principle of SVM is to map the original data to a high-dimensional space to discover a hyperplane that maximizes the margin determined by the support vectors. Suppose there is a dataset D = {(x1, y1), (x2, y2), ⋯, (xn, yn)}. The optimal hyperplane of dataset D can be expressed as

| (5) |

where aT is the weight vector and b represents the bias.

For nonlinear problems, the above-mentioned optimal hyperplane can be transformed into

| (6) |

where C is the penalty factor and ζi is the slack variable. The above constrained objective function can satisfy the KKT condition by introducing the Lagrange formulation. The original objective function is transformed into

| (7) |

where β is a Lagrangian multiplier. According to the previous experimental experience, a larger value of C means a larger separation interval and a greater generalization risk. Conversely, when the value of C is too small, it is easy to have an underfitting problem.

Finally, the decision function is shown in

| (8) |

where βi∗ and b∗ are the optimal Lagrangian multiplier and optimal value of b, respectively, and sgn(·) represents a symbolic function. K < xi · xj> is a kernel function. Usually, the radial basis function (RBF) kernel function is selected for SVM, which can be expressed as

| (9) |

where γ is the kernel parameter. The classification performance of SVM depends heavily on the setting of penalty factor C and kernel parameter γ. Therefore, parameter setting is a key step in applying SVM.

2.3.2. FPSO-SVM Model

In order to make SVM have better classification performance, we use FPSO to optimize the penalty factor C and kernel parameter γ of SVM, called FPSO-SVM. The classification accuracy is taken as the fitness function of FPSO, which is defined as

| (10) |

where TP, TN, FP, and FN represent four different classification results which are shown in Table 2.

Table 2.

Confusion matrix.

| Actual positive | Actual negative | |

|---|---|---|

| Predicted positive | TP | FP |

| Predicted negative | FN | TN |

FPSO is a fully adaptive version of PSO, which calculates the inertia weight, learning factor, and velocity independently for each particle based on fuzzy logic. The outstanding advantages of FPSO are that it does not require any prior knowledge about PSO and its optimization performance and convergence speed are better than those of PSO.

In FPSO, first, the number of particle swarms is set to based on the heuristic [34, 35]. Here, M is the dimension of the optimization problem. In this paper, since there are two SVM parameters that need to be optimized, M = 2 and N = 12 (round down). After initializing the particles, we need to update them according to the position and velocity of the particles. Let xik and vik be the velocity and position of the i-th particle at the k-th iteration, respectively. At the (k + 1)-th iteration, the velocity vik+1 and position xik+1 of the i-th particle can be defined as

| (11) |

| (12) |

where wik is the inertia weight of particle i at the k-th iteration and csocik and ccogik are social and cognitive factors of particle i at the k-th iteration, respectively. In FPSO, unlike conventional PSO, the values of wik, csocik, and ccogik are not fixed but are calculated separately for different particles at each iteration. r1 and r2 are two random vectors, respectively. bik and gk are the position of the i-th particle and the best global position in the swarm at the k-th iteration.

The maximum velocity (vmaxm) and minimum velocity (vminm) of all particles in the m-th dimension are defined as

| (13) |

| (14) |

where bmaxm and bminm represent upper and lower bounds of the m-th dimension for the optimization problem, respectively. η and λ (η > λ) are two coefficients determined by linguistic variables, in order to clamp vmaxm and vminm of each particle.

In order to get the w, csoc, ccog, η, and λ values of each particle in each iteration, two concepts are introduced: the distance between each particle and the global optimal particle and the fitness increment of each particle relative to the previous iteration.

The distance between any two particles in the k-th iteration is expressed as

| (15) |

The function ϕ represents the normalized fitness increment of particle i for the previous iteration, which is calculated as

| (16) |

where δmax is the diagonal length of the rectangle formed by the search space. fwor is the worst fitness value.

The linguistic variable of function δ is defined as Same, Near, and Far, which is used to measure the distance from a particle to the global best particle. The trapezoid membership function of Same is defined as

| (17) |

The triangle membership function of Near is defined as

| (18) |

The trapezoid membership function of Far is defined as

| (19) |

where δ1 = 0.2 · δmax, δ2 = 0.4 · δmax, and δ3 = 0.6 · δmax.

The linguistic variable of function ϕ is defined as Better, Same, and Worse, which is used to measure the improvement of a particle's fitness value for the previous iteration. The trapezoid membership function of Better can be obtained by

| (20) |

The triangle membership function of Same is expressed as follows:

| (21) |

The triangle membership function of Worse is as follows:

| (22) |

According to the preset fuzzy rules, w, csoc, ccog, η, and λ have three levels including Low, Medium, and High [28]. Table 3 shows the defuzzification values of w, csoc, ccog, η, and λ, which are calculated by the Sugeno inference method [36]. It is defined as follows:

| (23) |

where R represents the number of rules. ρr and zr are the membership degree of the input variable and output value of the r-th rule, respectively.

Table 3.

Defuzzification of w, csoc, ccog, η, and λ.

| Output | Level | ||

|---|---|---|---|

| Low | Medium | High | |

| w | 0.3 | 0.5 | 1.0 |

| c soc | 1.0 | 2.0 | 3.0 |

| c cog | 0.1 | 1.5 | 3.0 |

| λ | 0.0 | 0.001 | 0.01 |

| η | 0.1 | 0.15 | 0.2 |

Then, update the position of each particle based on the obtained values of w, csoc, ccog, η, and λ. Finally, recalculate the fitness of each particle, that is, accuracy of the SVM corresponding to each particle. Repeat the above process until the maximum number of iterations is reached and output SVM with the optimal parameters.

The time complexity of FPOS-SVM consists of two parts: FPSO and SVM. In FPSO, the velocity and position of each particle are calculated in each iteration. Therefore, the computational complexity of FPSO is determined by the number of iterations, the particle swarm size, and the dimensionality of each particle. Thus, FPSO requires O(TNm) time complexity, where T is the number of iterations of FPSO, N is the particle swarm size of FPSO, and m is the dimensionality of the optimization problem. For SVM, the optimal hyperplane is obtained by computing the distance between the support vector and the decision boundary. Then, the time complexity required for SVM is O(dnsv), where d is the input vector dimension and nsv is the number of support vectors. In FPSO-SVM, the number of SVM computations depends on the particle swarm size and the number of iterations of FPSO. Therefore, the time complexity of FPSO-SVM is O(TNm + TNdnsv).

2.4. Specific Steps of the Proposed Hybrid Method for Predicting Postoperative Survival of LCPs

Based on improved SMOTE and FPSO-SVM, we propose a two-stage hybrid method to improve the performance of the postoperative survival prediction of LCPs. In the first stage, CVCF is used to remove noise samples to improve the performance of SMOTE. Then, apply SMOTE to balance data. In the second stage, FPSO-SVM is adopted to predict postoperative survival of LCPs. Figure 2 shows the flowchart of the proposed hybrid method. The specific steps of the hybrid method are presented as follows:

Set CVCF to n-fold cross-validation. Then, the original dataset is divided into n subsets

Take a different subset from the n subsets each time as the testing set and the remaining n − 1 subsets as the training set. Therefore, a total of n different C4.5 classifiers are trained. Then, all the trained C4.5 classifiers will vote for each sample in the dataset. In this way, each sample has a real class label and n labels marked by C4.5

For each sample, determine whether all (or most) labels marked with C4.5 are different from the real one. If all (or most) of them are different from the real class label, the sample will be treated as noise and removed from the dataset. On the contrary, the sample is retained. Finally, all the retained samples make up a cleaned dataset

Oversample from the cleaned dataset with SMOTE until the class distribution of the dataset is balanced

After data preprocessing with CVCF-SMOTE, the new dataset is divided into a training set and a testing set

Set the search range for the penalty factor C and kernel parameter γ. Initialize particle swarm

Evaluate the fitness of each particle based on equation (10). Calculate the linguistic values of Inertia, Social, Cognitive, η, and λ according to equations (13)-(22)

Convert the language values of Inertia, Social, Cognitive, η, and λ into numerical values based on equation (23) and Table 3. Update the velocity and position of each particle based on equations (11) and (12)

Determine whether the maximum number of iterations has been reached. If it is reached, the optimized SVM is output. Otherwise, return to steps (7) and (8)

Apply the optimized SVM on the testing set

Figure 2.

Flowchart of the proposed hybrid method for predicting postoperative survival of LCPs.

3. Experiments and Results

3.1. Experiment Design

To evaluate our proposed hybrid method, we compare it with several state-of-the-art algorithms including PSO-optimized SVM (PSO-SVM), SVM, k-nearest neighbor (KNN) [37], random forest (RF) [38], gradient boosting decision tree (GBDT) [39], and AdaBoost [40]. In addition, we consider six preprocessing approaches, including CVCF-SMOTE, Borderline-SMOTE (B-SMOTE) [41], Safe-Level-SMOTE (SL-SMOTE) [42], SMOTE-TL [43], SMOTE, and no preprocessing (marked as NONE), to explore the performance of our proposed CVCF-SMOTE method. B-SMOTE, SL-SMOTE, and SMOTE-TL are three representative SMOTE extensions, which can handle imbalanced data with noise. In addition, in order to better evaluate the effectiveness of the proposed hybrid method, we tested its performance on two other imbalanced data. The value range of penalty factor C and kernel parameter γ is set to [0, 30], and the maximum number of iterations is set to 30. All of these algorithms are programmed in the Python programming language, except for CVCF-SMOTE which is run in the KEEL software [44]. To eliminate randomness, experiments are repeated 10 times and the average performance is shown in this study.

3.2. Performance Metrics

In this section, we introduce the selected widely used imbalanced data classification performance metrics, including accuracy (defined by equation (10)), G-mean, F1, and AUC. They can be calculated according to the confusion matrix in Table 2.

| (24) |

| (25) |

where precision = TP/(TP + FP) and recall = TP/(TP + FN). Precision can be regarded as a measure of the exactness of a classifier, while recall can be regarded as a measure of the completeness of a classifier.

AUC is defined as the area under the ROC curve and the coordinate axis. AUC is very suitable for the evaluation of imbalanced data classifiers because it is not sensitive to imbalanced distribution and error classification costs, and it can achieve the balance between true positive and false positive [45].

3.3. Result and Discussion

Tables 4–7 demonstrate the accuracy, G-mean, F1, and AUC values of different algorithms under different preprocessing methods for predicting postoperative survival of LCPs, respectively. The best experimental results of different preprocessing methods are marked in bold. We can see from Tables 4–7 that the proposed CVCF-SMOTE+FPSO-SVM model obtains the best performance among all methods with 95.11% accuracy, 95.10% G-mean, 95.02% F1, and 95.10% AUC. This shows that our proposed hybrid method can balance the classification accuracy of the minority class and the majority class while ensuring overall accuracy. That is, the proposed CVCF-SMOTE+FPSO-SVM method has a higher recognition rate for patients who survived after LC surgery for both longer than 1 year and less than 1 year.

Table 4.

Accuracy comparison for different algorithms with different preprocessing methods.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.8440 | 0.7149 | 0.6385 | 0.7378 | 0.8679 | 0.9511 |

| PSO-SVM | 0.8440 | 0.6570 | 0.6217 | 0.6776 | 0.7267 | 0.8643 |

| SVM | 0.8440 | 0.5294 | 0.5561 | 0.4781 | 0.5493 | 0.5204 |

| RF | 0.8369 | 0.7149 | 0.6023 | 0.7388 | 0.8430 | 0.8869 |

| GBDT | 0.8156 | 0.7059 | 0.5864 | 0.7025 | 0.8213 | 0.9276 |

| KNN | 0.8227 | 0.6561 | 0.5833 | 0.6910 | 0.7905 | 0.9005 |

| AdaBoost | 0.7943 | 0.6652 | 0.5615 | 0.6458 | 0.7674 | 0.9095 |

Table 5.

G-mean comparison for different algorithms with different preprocessing methods.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0 | 0.6942 | 0.6148 | 0.7203 | 0.8625 | 0.9510 |

| PSO-SVM | 0 | 0.5832 | 0.5628 | 0.6150 | 0.6567 | 0.8501 |

| SVM | 0 | 0 | 0 | 0.1537 | 0.1015 | 0.1659 |

| RF | 0 | 0.7092 | 0.6017 | 0.7385 | 0.8404 | 0.8868 |

| GBDT | 0.2938 | 0.6901 | 0.5835 | 0.7024 | 0.8154 | 0.9274 |

| KNN | 0 | 0.6572 | 0.5819 | 0.6874 | 0.7919 | 0.9000 |

| AdaBoost | 0.2059 | 0.6550 | 0.5552 | 0.6464 | 0.7597 | 0.9096 |

Table 6.

F1 comparison for different algorithms with different preprocessing methods.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0 | 0.6612 | 0.5549 | 0.7059 | 0.8482 | 0.9502 |

| PSO-SVM | 0 | 0.5089 | 0.4995 | 0.5600 | 0.6022 | 0.8336 |

| SVM | 0 | 0 | 0 | 0.2823 | 0.0605 | 0.0536 |

| RF | 0 | 0.6834 | 0.5713 | 0.7458 | 0.8241 | 0.8889 |

| GBDT | 0.1333 | 0.6524 | 0.5470 | 0.7025 | 0.7950 | 0.9292 |

| KNN | 0 | 0.6545 | 0.5473 | 0.7094 | 0.7760 | 0.9035 |

| AdaBoost | 0.0645 | 0.6186 | 0.5101 | 0.6425 | 0.7323 | 0.9099 |

Table 7.

AUC comparison for different algorithms with different preprocessing methods.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.5000 | 0.7265 | 0.6268 | 0.7400 | 0.8639 | 0.9510 |

| PSO-SVM | 0.5000 | 0.6426 | 0.6069 | 0.6754 | 0.7094 | 0.8631 |

| SVM | 0.5000 | 0.5000 | 0.5000 | 0.4993 | 0.5059 | 0.5138 |

| RF | 0.4958 | 0.7115 | 0.6038 | 0.7397 | 0.8411 | 0.8873 |

| GBDT | 0.5202 | 0.6993 | 0.5857 | 0.7052 | 0.8171 | 0.9281 |

| KNN | 0.4874 | 0.6581 | 0.5842 | 0.6919 | 0.7927 | 0.9010 |

| AdaBoost | 0.4891 | 0.6603 | 0.5582 | 0.6483 | 0.7621 | 0.9097 |

In addition, it is easy to see from Tables 5–7 that the G-mean, F1, and AUC performances of different classifiers for the original dataset without preprocessing are extremely poor. However, it can be found from Table 4 that the classification accuracy of all the classifiers for the original dataset is higher than the accuracy after SMOTE preprocessing. This indicates susceptibility to imbalanced data; although the classifiers perform well in the majority class, it performs very poorly in the minority class. That is to say, these classifiers fail to balance the classification accuracy of LCPs whose survival time after surgery is longer than 1 year and less than 1 year.

For the performance after preprocessing with SMOTE, we found that the G-mean, F1, and AUC values of most classifiers (except SVM) are higher than those of the original dataset. However, as can be seen from Table 4, the accuracy of all classifiers with SMOTE is lower than that of the original dataset. This shows that although SMOTE can balance precision and recall, it leads to a decrease in accuracy. For the three SMOTE extensions SL-SMOTE, SMOTE-TL, and B-SMOTE, we find that B-SMOTE has the most competitive performance. B-SMOTE+FPSO-SVM obtained the experimental results second only to CVCF-SMOTE+FPSO-SVM.

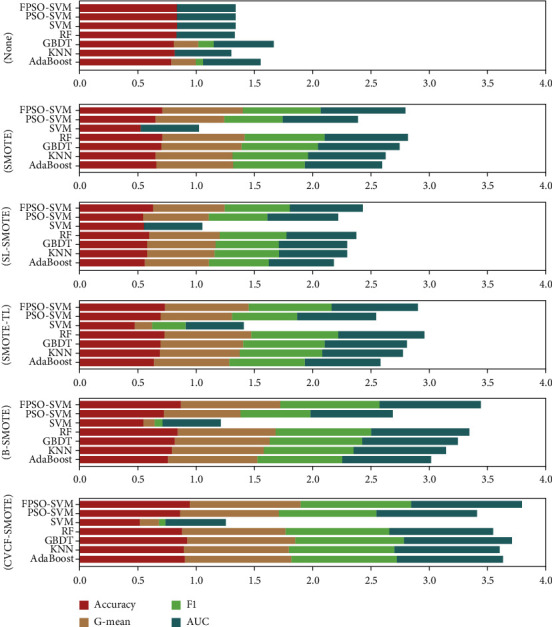

Figure 3 shows the stacked histograms of accuracy, G-mean, F1, and AUC for different algorithms under different preprocessing methods. It can be seen from Figure 3 that our proposed CVCF-SMOTE+FPSO-SVM has the best performance in predicting postoperative survival of LCPs. The main reasons behind the experimental results are as follows: first, CVCF identifies and removes noise to improve the data quality so that blind oversampling can be reduced when applying SMOTE. Second, FPSO-SVM can search the optimal parameters of SVM adaptively, which improves the classification accuracy of SVM.

Figure 3.

Stacked histograms of accuracy, G-mean, F1, and AUC for different algorithms under different preprocessing methods.

In order to further test the difference between CVCF-SMOTE+FPSO-SVM and other combination methods, a paired t-test was conducted among CVCF-SMOTE+FPSO-SVM and the best results under different preprocessing methods. A p value less than 0.05 is considered to be statistically significant in the experiment. From Table 8, it can be seen that CVCF-SMOTE+FPSO-SVM achieves significantly better results than the best results under different preprocessing methods in terms of the accuracy, F1, G-mean, and AUC at the prescribed statistical significance level of 5%.

Table 8.

Paired t-test results of CVCF-SMOTE+FPSO-SVM and the best performance under different preprocessing methods in terms of accuracy, F1, G-mean, and AUC on the thoracic surgery dataset. For CVCF-SMOTE, the p value is the statistic of the best result and the second best result.

| Methods | Accuracy | F1 | G-mean | AUC |

|---|---|---|---|---|

| NONE | 11.034 (0.000) | 25.502 (0.000) | 21.102 (0.000) | 27.01 (0.000) |

| SMOTE | 14.348 (0.000) | 16.01 (0.000) | 10.261 (0.000) | 12.469 (0.000) |

| SL-SMOTE | 29.947 (0.000) | 25.764 (0.000) | 30.349 (0.000) | 31.255 (0.000) |

| SMOTE-TL | 29.815 (0.000) | 30.281 (0.000) | 22.248 (0.000) | 26.895 (0.000) |

| B-SMOTE | 6.541 (0.000) | 5.176 (0.001) | 5.297 (0.000) | 5.997 (0.000) |

| CVCF-SMOTE | 5.237 (0.001) | 4.994 (0.001) | 4.67 (0.001) | 4.719 (0.001) |

We also compare the accuracy of our proposed model with previous studies as shown in Table 9. We can see from Table 9 that the accuracy of the CVCF-SMOTE+FPSO-SVM model is higher than that of other methods of the previous literature. Finally, we compare the ROC curves of different algorithms under different preprocessing methods, as shown in Figure 4. The greater the AUC value, the better the classifier performance. It can be seen that the AUC of our proposed CVCF-SMOTE+FPSO-SVM is the largest, which means that our proposed model is outperforming other comparison methods for predicting postoperative survival of LCPs.

Table 9.

Comparative results with previous studies based on accuracy.

Figure 4.

ROC curve comparison of different algorithms under different preprocessing methods.

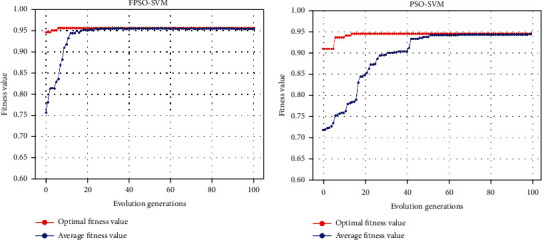

In order to further prove that the performance of our proposed FPSO-SVM is superior to that of PSO-SVM, we draw the fitness curves of these two algorithms. Figures 5(a) and 5(b) show fitness curves of FPSO-SVM and PSO-SVM with CVCF-SMOTE preprocessing. As can be seen from (Figures 5(a) and 5(b)), we can clearly see that compared with PSO-SVM, FPSO-SVM not only has a higher fitting degree but also a faster convergence speed. This shows that our proposed FPSO-SVM algorithm can identify the optimal solution in the search space faster and more accurately than PSO-SVM.

Figure 5.

Fitness curves of FPSO-SVM (a) and PSO-SVM (b) with CVCF-SMOTE.

3.4. Works on Other Datasets

To show the generalization ability of our proposed method, we apply CVCF-SMOTE+FPSO-SVM to the other two imbalanced datasets collected from KEEL (https://sci2s.ugr.es/keel/) [44]. Table 10 shows the details of the two selected datasets.

Table 10.

Details of Haberman and appendicitis datasets.

| Datasets | Case number | Attribute number | Class distribution |

|---|---|---|---|

| Haberman | 306 | 3 | 225/81 |

| Appendicitis | 106 | 7 | 85/21 |

Tables 11 and 12 show the accuracy and AUC of different algorithms in different preprocessing methods on the Haberman dataset. It can be seen from Tables 11 and 12 that under different preprocessing methods, accuracy and AUC of CVCF-SMOTE+FPSO-SVM are higher than those of the comparison classifiers. As shown in Table 13, the results of the paired t-test also show that CVCF-SMOTE+FPSO-SVM is significantly better than the best experimental results under different preprocessing methods on the Haberman dataset. For the appendicitis dataset, it can be seen from Tables 14 and 15 that CVCF-SMOTE+FPSO-SVM also obtains the highest accuracy and AUC value compared to other preprocessing methods and classifier combinations. As can be seen from Table 16, for the appendicitis dataset, CVCF-SMOTE+FPSO-SVM achieves significantly better results than the best performance under NONE, SMOTE, SL-SMOTE, and B-SMOTE. However, it is not a significant difference for the best performance under SMOTE-TL.

Table 11.

Accuracy comparison for different algorithms with different preprocessing methods on the Haberman dataset.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.7402 | 0.6890 | 0.6386 | 0.7396 | 0.7795 | 0.8205 |

| PSO-SVM | 0.7098 | 0.6435 | 0.6504 | 0.6538 | 0.6831 | 0.7205 |

| SVM | 0.7196 | 0.6291 | 0.6409 | 0.6423 | 0.6772 | 0.7165 |

| RF | 0.6989 | 0.6795 | 0.6142 | 0.7315 | 0.7559 | 0.7772 |

| GBDT | 0.6837 | 0.6606 | 0.6299 | 0.7252 | 0.7465 | 0.7764 |

| KNN | 0.7174 | 0.6630 | 0.6417 | 0.7000 | 0.7449 | 0.7992 |

| AdaBoost | 0.7163 | 0.6402 | 0.6331 | 0.6117 | 0.6819 | 0.7559 |

Table 12.

AUC comparison for different algorithms with different preprocessing methods on the Haberman dataset.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.5274 | 0.6813 | 0.6288 | 0.7310 | 0.7748 | 0.8206 |

| PSO-SVM | 0.5012 | 0.6131 | 0.6325 | 0.6669 | 0.6518 | 0.7121 |

| SVM | 0.5077 | 0.6096 | 0.6246 | 0.6598 | 0.6566 | 0.7035 |

| RF | 0.5731 | 0.6815 | 0.6132 | 0.7283 | 0.7588 | 0.7784 |

| GBDT | 0.5492 | 0.6607 | 0.6274 | 0.7226 | 0.7475 | 0.7765 |

| KNN | 0.5737 | 0.6649 | 0.6418 | 0.6997 | 0.7433 | 0.8009 |

| AdaBoost | 0.5809 | 0.6359 | 0.6293 | 0.6118 | 0.6779 | 0.7549 |

Table 13.

Paired t-test results of CVCF-SMOTE+FPSO-SVM and the best performance under different preprocessing methods in terms of accuracy and AUC on the Haberman dataset.

| Methods | Accuracy | AUC |

|---|---|---|

| NONE | 6.603 (0.000) | 18.744 (0.000) |

| SMOTE | 6.555 (0.000) | 10.315 (0.000) |

| SL-SMOTE | 15.959 (0.000) | 15.806 (0.000) |

| SMOTE-TL | 4.506 (0.001) | 3.539 (0.006) |

| B-SMOTE | 2.601 (0.029) | 2.83 (0.02) |

| CVCF-SMOTE | 4.669 (0.001) | 4.392 (0.002) |

Table 14.

Accuracy comparison for different algorithms with different preprocessing methods on the appendicitis dataset.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.8688 | 0.8792 | 0.8208 | 0.9381 | 0.9167 | 0.9511 |

| PSO-SVM | 0.8625 | 0.8713 | 0.7620 | 0.8104 | 0.8714 | 0.9277 |

| SVM | 0.8469 | 0.7979 | 0.7854 | 0.8310 | 0.8813 | 0.9021 |

| RF | 0.8438 | 0.8438 | 0.7271 | 0.8714 | 0.9083 | 0.9106 |

| GBDT | 0.8188 | 0.8479 | 0.7146 | 0.8690 | 0.8917 | 0.9085 |

| KNN | 0.8500 | 0.7708 | 0.7354 | 0.8476 | 0.8708 | 0.8957 |

| AdaBoost | 0.8031 | 0.8396 | 0.7458 | 0.8690 | 0.8896 | 0.9106 |

Table 15.

AUC comparison for different algorithms with different preprocessing methods on the appendicitis dataset.

| Algorithms | NONE | SMOTE | SL-SMOTE | SMOTE-TL | B-SMOTE | CVCF-SMOTE |

|---|---|---|---|---|---|---|

| FPSO-SVM | 0.6878 | 0.8807 | 0.8167 | 0.9411 | 0.9135 | 0.9512 |

| PSO-SVM | 0.5893 | 0.7602 | 0.7708 | 0.9311 | 0.8917 | 0.9239 |

| SVM | 0.6674 | 0.7966 | 0.7832 | 0.8423 | 0.8788 | 0.8982 |

| RF | 0.6930 | 0.8475 | 0.7324 | 0.8755 | 0.9064 | 0.9070 |

| GBDT | 0.6460 | 0.8539 | 0.7207 | 0.8713 | 0.8909 | 0.9092 |

| KNN | 0.6885 | 0.7736 | 0.7374 | 0.8499 | 0.8676 | 0.8954 |

| AdaBoost | 0.6352 | 0.8461 | 0.7492 | 0.8685 | 0.8888 | 0.9102 |

Table 16.

Paired t-test results of CVCF-SMOTE+FPSO-SVM and the best performance under different preprocessing methods in terms of accuracy and AUC on the appendicitis dataset.

| Methods | Accuracy | AUC |

|---|---|---|

| NONE | 6.591 (0.000) | 15.628 (0.000) |

| SMOTE | 4.562 (0.001) | 5.176 (0.001) |

| B-SMOTE | 3.024 (0.014) | 3.373 (0.008) |

| SL-SMOTE | 6.227 (0.000) | 7.009 (0.000) |

| SMOTE-TL | 1.089 (0.304) | 0.785 (0.453) |

| CVCF-SMOTE | 2.764 (0.022) | 2.787 (0.21) |

From the experimental results, we see that CVCF-SMOTE+FPSO-SVM outperforms the compared algorithms for both the thoracic surgery dataset and the other two imbalanced datasets. On the one hand, it is because CVCF-improved SMOTE is well adapted to different datasets. On the other hand, FPSO-SVM automatically adjusts the optimal parameters according to different datasets, thus improving the generalization ability of the SVM.

3.5. Running Time Analysis

We compared the running time of CVCF-SMOTE+FPSO-SVM with the algorithms with the highest accuracy among all the compared methods. For the three datasets thoracic surgery, Haberman, and appendicitis, the algorithms with the highest accuracy among the compared methods are CVCF-SMOTE+GBDT, CVCF-SMOTE+KNN, and SMOTE-TL+FPSO-SVM, respectively. In addition, in order to compare the running time of FPSO-SVM with that of PSO-SVM, CVCF-SMOTE+PSO-SVM is also involved in the comparison. The comparison results are shown in Table 17. It can be seen from Table 17 that the running time for CVCF-SMOTE+FPSO-SVM is less than that of CVCF-SMOTE+PSO-SVM for the three datasets. However, the running time of CVCF-SMOTE+FPSO-SVM is slower than that of CVCF-SMOTE+GBDT, CVCF-SMOTE+KNN, and SMOTE-TL+FPSO-SVM for the thoracic surgery, Haberman, and appendicitis datasets, respectively. Considering the higher classification performance of our proposed method, it can still be considered superior to other algorithms.

Table 17.

Running time (in second) by CVCF-SMOTE+FPSO-SVM and state-of-the-art algorithms.

| Datasets | Algorithms | ||

|---|---|---|---|

| Thoracic surgery | CVCF-SMOTE+GBDT | CVCF-SMOTE+PSO-SVM | CVCF-SMOTE+FPSO-SVM |

| 31.2 | 53.6 | 43.5 | |

|

| |||

| Haberman | CVCF-SMOTE+KNN | CVCF-SMOTE+PSO-SVM | CVCF-SMOTE+FPSO-SVM |

| 18.8 | 27.5 | 24.5 | |

|

| |||

| Appendicitis | SMOTE-TL+FPSO-SVM | CVCF-SMOTE+PSO-SVM | CVCF-SMOTE+FPSO-SVM |

| 13.8 | 22.2 | 17.3 | |

4. Conclusion

In this work, we proposed a hybrid improved SMOTE and adaptive SVM method to predict the postoperative survival of LCPs. In our proposed hybrid model, CVCF is adopted to clear the data noise to improve the performance of SMOTE. Then, we use FPSO-optimized SVM to estimate whether the postoperative survival of LCPs is greater than one year. Experimental results show that our proposed CVCF-SMOTE+FPSO-SVM hybrid method obtains the best accuracy, G-mean, F1, and AUC as compared to other compared algorithms for postoperative survival prediction of LCPs.

Our proposed hybrid method can provide valuable medical decision-making support for LCPs and doctors. Considering the excellent classification performance for the other two imbalanced datasets, in the future, we will try to apply the proposed method to other problems based on imbalanced data, such as disease diagnosis and financial fraud detection. There are two limitations that need to be pointed out: one is that we only consider the 1-year survival after lung cancer surgery. In future studies, we will try to predict survival at other time points, such as survival 3 or 5 years after lung cancer surgery. The other is that the value range of the parameters of SVM in FPSO-SVM needs to be set manually, which may require some experience or experimental attempts. Designing a setting-free SVM is our future research direction.

Acknowledgments

This research is supported by the National Natural Science Foundation of China (71971123).

Data Availability

The dataset for this study can be obtained from the UCI machine learning database (http://archive.ics.uci.edu/ml/datasets/Thoracic+Surgery+Data).

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Rotman J. A., Plodkowski A. J., Hayes S. A., et al. Postoperative complications after thoracic surgery for lung cancer. Clinical Imaging. 2015;39(5):735–749. doi: 10.1016/j.clinimag.2015.05.013. [DOI] [PubMed] [Google Scholar]

- 2.Osuoha C. A., Callahan K. E., Ponce C. P., Pinheiro P. S. Disparities in lung cancer survival and receipt of surgical treatment. Lung Cancer. 2018;122:54–59. doi: 10.1016/j.lungcan.2018.05.022. [DOI] [PubMed] [Google Scholar]

- 3.Mangat V., Vig R. Novel associative classifier based on dynamic adaptive PSO: application to determining candidates for thoracic surgery. Expert Systems with Applications. 2014;41(18):8234–8244. doi: 10.1016/j.eswa.2014.06.046. [DOI] [Google Scholar]

- 4.Iraji M. S. Prediction of post-operative survival expectancy in thoracic lung cancer surgery with soft computing. Journal of Applied Biomedicine. 2017;15(2):151–159. doi: 10.1016/j.jab.2016.12.001. [DOI] [PubMed] [Google Scholar]

- 5.Zięba M., Tomczak J. M., Lubicz M., Świątek J. Boosted SVM for extracting rules from imbalanced data in application to prediction of the post-operative life expectancy in the lung cancer patients. Applied Soft Computing. 2014;14:99–108. doi: 10.1016/j.asoc.2013.07.016. [DOI] [Google Scholar]

- 6.Haixiang G., Yijing L., Shang J., Mingyun G., Yuanyue H., Bing G. Learning from class-imbalanced data: review of methods and applications. Expert Systems with Applications. 2017;73:220–239. doi: 10.1016/j.eswa.2016.12.035. [DOI] [Google Scholar]

- 7.Tsai C.-F., Lin W.-C., Hu Y.-H., Yao G.-T. Under-sampling class imbalanced datasets by combining clustering analysis and instance selection. Information Sciences. 2019;477:47–54. doi: 10.1016/j.ins.2018.10.029. [DOI] [Google Scholar]

- 8.Chawla N. V., Bowyer K. W., Hall L. O., Kegelmeyer W. P. SMOTE: synthetic minority over-sampling technique. Journal of Artificial Intelligence Research. 2002;16:321–357. doi: 10.1613/jair.953. [DOI] [Google Scholar]

- 9.Sáez J. A., Luengo J., Stefanowski J., Herrera F. SMOTE-IPF: addressing the noisy and borderline examples problem in imbalanced classification by a re-sampling method with filtering. Information Sciences. 2015;291:184–203. doi: 10.1016/j.ins.2014.08.051. [DOI] [Google Scholar]

- 10.Douzas G., Bacao F., Last F. Improving imbalanced learning through a heuristic oversampling method based on k-means and SMOTE. Information Sciences. 2018;465:1–20. doi: 10.1016/j.ins.2018.06.056. [DOI] [Google Scholar]

- 11.Ramentol E., Caballero Y., Bello R., Herrera F. SMOTE-RSB: a hybrid preprocessing approach based on oversampling and undersampling for high imbalanced data-sets using SMOTE and rough sets theory. Knowledge and Information Systems. 2011;33(2):245–265. doi: 10.1007/s10115-011-0465-6. [DOI] [Google Scholar]

- 12.Zhang J., Ng W. W. Stochastic sensitivity measure-based noise filtering and oversampling method for imbalanced classification problems. In 2018 IEEE international conference on systems, man, and cybernetics (SMC); 2018; IEEE. pp. 403–408. [Google Scholar]

- 13.Ma L., Fan S. CURE-SMOTE algorithm and hybrid algorithm for feature selection and parameter optimization based on random forests. BMC Bioinformatics. 2017;18(1):169–169. doi: 10.1186/s12859-017-1578-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Luengo J., Shim S.-O., Alshomrani S., Altalhi A., Herrera F. CNC-NOS: class noise cleaning by ensemble filtering and noise scoring. Knowledge-Based Systems. 2018;140:27–49. doi: 10.1016/j.knosys.2017.10.026. [DOI] [Google Scholar]

- 15.Afanasyev D. O., Fedorova E. A. On the impact of outlier filtering on the electricity price forecasting accuracy. Applied Energy. 2019;236:196–210. doi: 10.1016/j.apenergy.2018.11.076. [DOI] [Google Scholar]

- 16.Tao Z., Huiling L., Wenwen W., Xia Y. GA-SVM based feature selection and parameter optimization in hospitalization expense modeling. Applied Soft Computing. 2018;75:323–332. doi: 10.1016/j.asoc.2018.11.001. [DOI] [Google Scholar]

- 17.D’Addabbo A., Maglietta R. Parallel selective sampling method for imbalanced and large data classification. Pattern Recognition Letters. 2015;62:61–67. doi: 10.1016/j.patrec.2015.05.008. [DOI] [Google Scholar]

- 18.Huang B., et al. Systems and Computers: Journal of Circuits; 2020. Imbalanced data classification algorithm based on clustering and SVM. [Google Scholar]

- 19.Fan Y., Cui X., Han H., Lu H. Chiller fault diagnosis with field sensors using the technology of imbalanced data. Applied Thermal Engineering. 2019;159(10):p. 113933. doi: 10.1016/j.applthermaleng.2019.113933. [DOI] [Google Scholar]

- 20.Moradi P., Gholampour M. A hybrid particle swarm optimization for feature subset selection by integrating a novel local search strategy. Applied Soft Computing. 2016;43:117–130. doi: 10.1016/j.asoc.2016.01.044. [DOI] [Google Scholar]

- 21.Wei J., Zhang R., Yu Z., et al. A BPSO-SVM algorithm based on memory renewal and enhanced mutation mechanisms for feature selection. Applied Soft Computing. 2017;58:176–192. doi: 10.1016/j.asoc.2017.04.061. [DOI] [Google Scholar]

- 22.Zeng N., Qiu H., Wang Z., Liu W., Zhang H., Li Y. A new switching-delayed-PSO-based optimized SVM algorithm for diagnosis of Alzheimer's disease. Neurocomputing. 2018;320:195–202. doi: 10.1016/j.neucom.2018.09.001. [DOI] [Google Scholar]

- 23.Wang G. G., Deb S., Cui Z. Monarch butterfly optimization. Neural Computing and Applications. 2015;31 doi: 10.1007/s00521-015-1923-y. [DOI] [Google Scholar]

- 24.Li S., Chen H., Wang M., Heidari A. A., Mirjalili S. Slime mould algorithm: a new method for stochastic optimization. Future Generation Computer Systems. 2020;111:300–323. doi: 10.1016/j.future.2020.03.055. [DOI] [Google Scholar]

- 25.Wang G.-G. Moth search algorithm: a bio-inspired metaheuristic algorithm for global optimization problems. Memetic Computing. 2018;10(2):151–164. doi: 10.1007/s12293-016-0212-3. [DOI] [Google Scholar]

- 26.Yang Y., Chen H., Heidari A. A., Gandomi A. H. Hunger games search: visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Systems with Applications. 2021;177:p. 114864. doi: 10.1016/j.eswa.2021.114864. [DOI] [Google Scholar]

- 27.Heidari A. A., Mirjalili S., Faris H., Aljarah I., Mafarja M., Chen H. Harris hawks optimization: algorithm and applications. Future Generation Computer Systems. 2019;97:849–872. doi: 10.1016/j.future.2019.02.028. [DOI] [Google Scholar]

- 28.Nobile M. S., Cazzaniga P., Besozzi D., Colombo R., Mauri G., Pasi G. Fuzzy self-tuning PSO: a settings-free algorithm for global optimization. Swarm and Evolutionary Computation. 2018;39:70–85. doi: 10.1016/j.swevo.2017.09.001. [DOI] [Google Scholar]

- 29.Verbaeten S., Van Assche A. Ensemble methods for noise elimination in classification problems. In international workshop on multiple classifier systems; 2003; Springer, Berlin, Heidelberg. pp. 317–325. [Google Scholar]

- 30.Lee S.-J., Xu Z., Li T., Yang Y. A novel bagging C4.5 algorithm based on wrapper feature selection for supporting wise clinical decision making. Journal of Biomedical Informatics. 2017;78:144–155. doi: 10.1016/j.jbi.2017.11.005. [DOI] [PubMed] [Google Scholar]

- 31.Garcia L. P. F., Lehmann J., de Carvalho A. C. P. L. F., Lorena A. C. New label noise injection methods for the evaluation of noise filters. Knowledge Based Systems. 2019;163:693–704. doi: 10.1016/j.knosys.2018.09.031. [DOI] [Google Scholar]

- 32.Quinlan J. R. Improved use of continuous attributes in C4.5. Journal of Artificial Intelligence Research. 1996;4(1):77–90. doi: 10.1613/jair.279. [DOI] [Google Scholar]

- 33.Cortes C., Vapnik V. N. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1007/BF00994018. [DOI] [Google Scholar]

- 34.Hansen N., Ros R., Mauny N., Schoenauer M., Auger A. Impacts of invariance in search: when CMA-ES and PSO face ill-conditioned and non-separable problems. Applied Soft Computing. 2011;11(8):5755–5769. doi: 10.1016/j.asoc.2011.03.001. [DOI] [Google Scholar]

- 35.Nobile M. S., Pasi G., Cazzaniga P., Besozzi D., Colombo R., Mauri G. Proactive particles in swarm optimization: a self-tuning algorithm based on fuzzy logic. In 2015 IEEE international conference on fuzzy systems (FUZZ-IEEE); 2015; IEEE. pp. 1–8. [Google Scholar]

- 36.Sugeno M. Industrial Applications of Fuzzy Control. Elsevier Science Inc.; 1985. [Google Scholar]

- 37.Altman N. S. An introduction to kernel and nearest-neighbor nonparametric regression. American Statistician. 1992;46(3):175–185. doi: 10.1080/00031305.1992.10475879. [DOI] [Google Scholar]

- 38.Ho T. K. Random decision forests. In Proceedings of 3rd international conference on document analysis and recognition; IEEE. 1995. pp. 278–282. [Google Scholar]

- 39.Friedman J. H. Greedy function approximation: a gradient boosting machine. Annals of Statistics. 2001;29(5) doi: 10.1214/aos/1013203451. [DOI] [Google Scholar]

- 40.Freund Y. Boosting a weak learning algorithm by majority. Information and Computation. 1995;121(2):256–285. doi: 10.1006/inco.1995.1136. [DOI] [Google Scholar]

- 41.Han H., Wang W. Y., Mao B. H. Borderline-SMOTE: a new over-sampling method in imbalanced data sets learning. In international conference on intelligent computing; 2005; Springer, Berlin, Heidelberg. pp. 878–887. [Google Scholar]

- 42.Bunkhumpornpat C., Sinapiromsaran K., Lursinsap C. Safe-level-SMOTE: safe-level-synthetic minority over-sampling technique for handling the class imbalanced problem. In Pacific-Asia conference on knowledge discovery and data mining; 2009; Springer, Berlin, Heidelberg. pp. 475–482. [Google Scholar]

- 43.Batista G. E., Prati R. C., Monard M. C. A study of the behavior of several methods for balancing machine learning training data. ACM SIGKDD explorations newsletter. 2004;6(1):20–29. doi: 10.1145/1007730.1007735. [DOI] [Google Scholar]

- 44.Alcala-fdez J. KEEL data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. Journal of Multiple Valued Logic & Soft Computing. 2011;17(2-3):255–287. [Google Scholar]

- 45.Veganzones D., Severin E. An investigation of bankruptcy prediction in imbalanced datasets. Decision Support Systems. 2018;112:111–124. doi: 10.1016/j.dss.2018.06.011. [DOI] [Google Scholar]

- 46.Elyan E., Gaber M. M. A genetic algorithm approach to optimising random forests applied to class engineered data. Information Sciences. 2017;384:220–234. doi: 10.1016/j.ins.2016.08.007. [DOI] [Google Scholar]

- 47.Li J., Zhu Q., Wu Q. A self-training method based on density peaks and an extended parameter-free local noise filter for k nearest neighbor. Knowledge-Based Systems. 2019;184:p. 104895. doi: 10.1016/j.knosys.2019.104895. [DOI] [Google Scholar]

- 48.Muthukumar P., Krishnan G. S. S. A similarity measure of intuitionistic fuzzy soft sets and its application in medical diagnosis. Applied Soft Computing. 2016;41:148–156. doi: 10.1016/j.asoc.2015.12.002. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The dataset for this study can be obtained from the UCI machine learning database (http://archive.ics.uci.edu/ml/datasets/Thoracic+Surgery+Data).