Abstract

We describe a neurobiologically informed computational model of phasic dopamine signaling to account for a wide range of findings, including many considered inconsistent with the simple reward prediction error (RPE) formalism. The central feature of this PVLV framework is a distinction between a Primary Value (PV) system for anticipating primary rewards (USs), and a Learned Value (LV) system for learning about stimuli associated with such rewards (CSs). The LV system represents the amygdala, which drives phasic bursting in midbrain dopamine areas, while the PV system represents the ventral striatum, which drives shunting inhibition of dopamine for expected USs (via direct inhibitory projections) and phasic pausing when expected USs are omitted (via the lateral habenula). Our model accounts for data supporting the separability of these systems, including individual differences in CS-based learning (sign-tracking) vs. US-based learning (goal-tracking). Both systems use competing opponent-processing pathways representing evidence for and against specific USs, which can explain data dissociating the processes involved in acquisition vs. extinction conditioning. Further, opponent processing proved critical in accounting for the full range of conditioned inhibition phenomena, and the closely-related paradigm of second-order conditioning. Finally, we show how additional separable pathways representing aversive USs, largely mirroring those for appetitive USs, also have important differences from the positive valence case, allowing the model to account for several important phenomena in aversive conditioning. Overall, accounting for all of these phenomena strongly constrains the model, thus providing a well-validated framework for understanding phasic dopamine signaling.

Keywords: dopamine, reinforcement learning, basal ganglia, Pavlovian conditioning, conditioned inhibition, computational model

Introduction

Phasic dopamine signaling plays a well-documented role in many forms of learning (e.g., Wise, 2004), and understanding the mechanisms involved in generating these signals is of fundamental importance. The temporal difference (TD) framework (Sutton & Barto, 1981, 1990, 1998), building on the reward prediction error (RPE) theory of Rescorla and Wagner (1972), provided a major advance by formalizing phasic dopamine signals in terms of continuously computed RPEs (Montague, Dayan, & Sejnowski, 1996; Schultz, Dayan, & Montague, 1997). To summarize this dopamine reward prediction error hypothesis (DA-RPE; Glimcher, 2011), better-than-expected reward outcomes produce brief, short-latency increases in dopamine cell firing (phasic bursts), while worse-than-expected outcomes produce corresponding phasic decreases (pauses/dips) relative to a tonic firing baseline. These punctate error signals have been shown to function as temporally precise teaching signals for Pavlovian and instrumental learning, and are widely believed to play an important role in the acquisition and performance of many higher cognitive functions, including action selection (Frank, 2006), sequence production (Suri & Schultz, 1998), goal-directed behavior (Goto & Grace, 2005), decision making (Doll & Frank, 2009; St Onge & Floresco, 2009; Takahashi, Matsui, Camerer, Takano, Kodaka, Ideno, Okubo, Takemura, Arakawa, Eguchi, Murai, Okubo, Kato, Ito, & Suhara, 2010), and working memory manipulation (O’Reilly & Frank, 2006; Rieckmann, Karlsson, Fischer, & Backman, 2011).

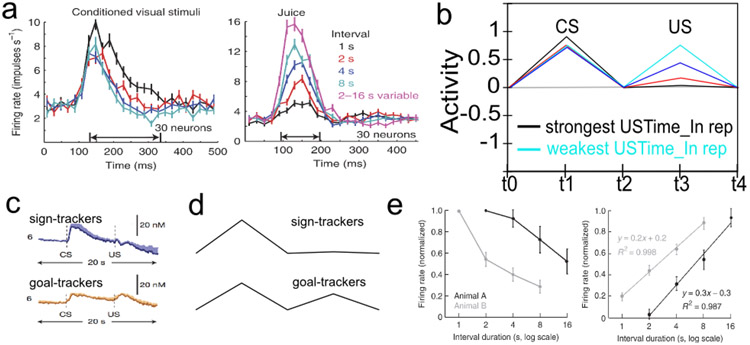

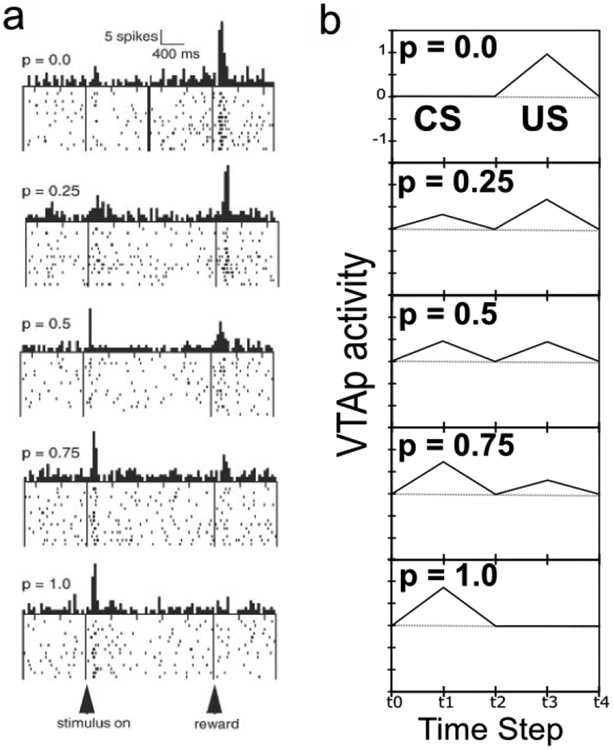

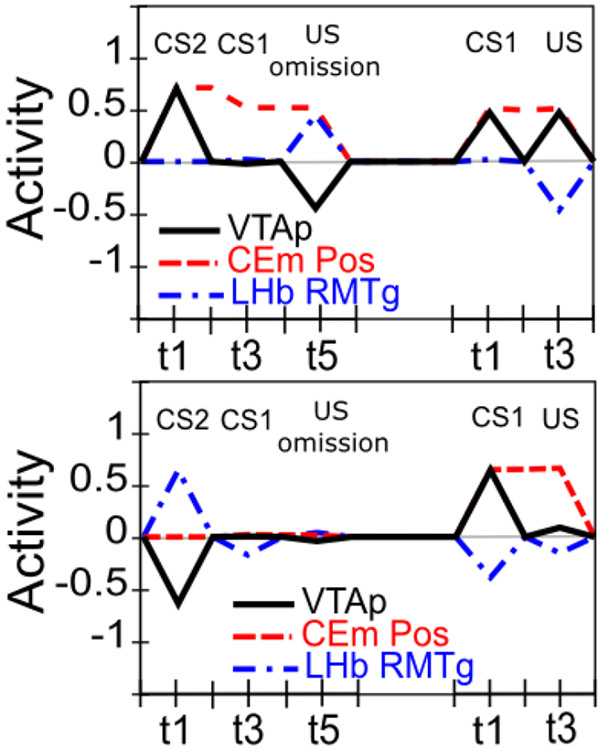

Despite the well-documented explanatory power of this simple idea, it has become increasingly clear that a more nuanced understanding is needed, as there are many aspects of dopamine cell firing that are hard to reconcile within a simple RPE formalism. For example, dopamine cell bursting has long been known to occur robustly at both CS- and US-onset for a period of time early in training (Ljungberg, Apicella, & Schultz, 1992). Moreover, recent work suggests that as the delay between CS-onset and US-onset increases beyond a few seconds, learning reduces dopamine cell bursting at the time of the US progressively less, until it is statistically indistinguishable from the response to randomly delivered reward, even after a task has been thoroughly learned (Fiorillo, Newsome, & Schultz, 2008; Kobayashi & Schultz, 2008). In contrast, CS-triggered bursting is acquired relatively robustly across these same delays, albeit less so as delay increases (i.e., a flatter decay slope; Fiorillo et al., 2008; Kobayashi & Schultz, 2008).

More subtle anomalies include the asymmetrical pattern seen for earlier-than-expected versus later-than-expected rewards (Hollerman & Schultz, 1998), and certain aspects of the conditioned inhibition paradigm, including the lack of an RPE-like dopamine response at the time of omitted reward when a conditioned inhibitor is presented alone at test (Tobler, Dickinson, & Schultz, 2003). Further, extinction learning and related reacquisition phenomena have been shown to involve additional learning mechanisms beyond those involved in initial acquisition, suggesting that the associated pattern of dopamine signaling likely has additional wrinkles as well. Finally, the pattern of phasic dopamine signaling seen under aversive conditioning paradigms is not a simple mirror image of the appetitive case, with evidence for heterogeneous sub-populations of dopamine neurons that respond to primary aversive outcomes in opposite ways (Brischoux, Chakraborty, Brierley, & Ungless, 2009; Bromberg-Martin, Matsumoto, & Hikosaka, 2010b; Lammel, Lim, Ran, Huang, Betley, Tye, Deisseroth, & Malenka, 2012; Lammel, Lim, & Malenka, 2014; Matsumoto & Hikosaka, 2009a; Fiorillo, 2013). In addition, a long-standing controversy has surrounded the phasic bursting often seen for aversive and/or high-intensity stimulation (e.g., Mirenowicz & Schultz, 1996; Horvitz, 2000; Fiorillo, 2013; Schultz, 2016; Comoli, Coizet, Boyes, Bolam, Canteras, Quirk, Overton, & Redgrave, 2003; Dommett, Coizet, Blaha, Martindale, Lefebvre, Walton, Mayhew, Overton, & Redgrave, 2005; Humphries, Stewart, & Gurney, 2006), which has been interpreted as a component of salience or novelty coding in addition to simple RPE coding (Kakade & Dayan, 2002).

Such departures from the simple RPE formalism should not be surprising, however, since that formalism is an abstract, mathematical one corresponding to David Marr’s (1982) algorithmic, or even computational, level of analysis. Thus, the present work can be seen as an attempt to bridge the gap between the biological mechanisms at Marr’s implementational level and the higher-level RPE formalism, providing specific testable hypotheses about how the critical elements of that formalism arise from interactions among distributed brain systems, and the ways in which these neural systems diverge from the simpler high-level formalism. There is an important need for this bridging between levels of analysis, because the neuroscience literature has implicated a large and complex network of brain areas in dopamine signaling, but understanding the precise functional contributions of these diverse areas, and their interrelationships, is difficult without being able to see the interacting system function as a whole. The computational modeling approach provides this ability, along with the ability to test and manipulate areas more systematically to determine their precise contributions to a range of different behavioral phenomena. Furthermore, the considerable divergences between appetitive (reward-defined) and aversive (punishment-defined) processing are particularly challenging and informative, because the same networks of brain areas are involved in both to a large extent, and the abstract RPE formalism makes no principled distinction between them. Thus, our biologically-based model can help provide new principles that make sense of these discrepancies, in ways that could be of interest to those working at the higher, more abstract levels.

There have been various attempts to develop more detailed neurobiological frameworks for understanding phasic dopamine function (e.g., Houk, Adams, & Barto, 1995; Brown, Bullock, & Grossberg, 1999; Suri & Schultz, 1999, 2001; O’Reilly, Frank, Hazy, & Watz, 2007; Redish, Jensen, Johnson, & Kurth-Nelson, 2007; Tan & Bullock, 2008; Hazy, Frank, & O’Reilly, 2010; Vitay & Hamker, 2014; Carrere & Alexandre, 2015), which we build upon here to provide a comprehensive framework that accounts for the above-mentioned empirical anomalies to the simple RPE formalism while also incorporating most of the major biological elements identified to date. This framework builds on our earlier PVLV model (Primary Value, Learned Value; pronounced “Pavlov”) (O’Reilly et al., 2007; Hazy et al., 2010), and includes mechanistically explicit models of the following major brain systems: the basolateral amygdalar complex (BLA); central amygdala (lateral and medial segments: CEl & CEm); pedunculopontine tegmentum (PPTg); ventral striatum (VS, including the Nucleus Accumbens, NAc); lateral habenula (LHb); and of course the midbrain dopaminergic nuclei themselves (ventral tegmental area, VTA; and substantia nigra, pars compacta, SNc). These areas are driven by simplified inputs representing the brain systems encoding appetitive and aversive USs, CSs, variable contexts, and temporally-evolving working memory-like representations of US-defined goal-states mapped to ventral-medial frontal cortical areas, primarily the orbitofrontal cortex (OFC).

Our overall goal is to provide a single comprehensive framework for understanding the full scope of phasic dopamine firing across the biological, behavioral, and computational levels. Although the model is considerably more complex than the single equation at the heart of the RPE framework, it nevertheless is based on two core computational principles that together determine much of its overall function — many more details are required to account for critical biological data, but these are all built upon the foundation established by these core computational principles. The basic learning equations are consistent with the classic Rescorla-Wagner / delta rule framework (Rescorla & Wagner, 1972), but the first core computational principle is that two separate systems are needed to enable this form of learning to account for both the anticipatory nature of dopamine firing (at the time of a CS, which occurs in the LV or learned-value system, associated with the amygdala), and the discounting of expected outcomes at the time of the US (in the PV or primary-value system, associated with the ventral striatum). These two systems give the PVLV model its name, and have remained the central feature of the framework since its inception (O’Reilly et al., 2007; Hazy et al., 2010). The recent discovery of strong individual differences in behavioral phenotypes, termed sign-tracking (CS-focused learning and behavior) vs. goal-tracking (US-focused learning and behavior) is suggestive of this kind of anatomical dissociation (Flagel, Robinson, Clark, Clinton, Watson, Seeman, Phillips, & Akil, 2010; Flagel, Clark, Robinson, Mayo, Czuj, Willuhn, Akers, Clinton, Phillips, & Akil, 2011).
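The division of labor described above can be illustrated with a deliberately minimal sketch. It assumes two independent Rescorla-Wagner (delta rule) learners with hypothetical learning rates, `lv_lr` for a CS-driven LV weight and `pv_lr` for a US-time PV expectation; the CS-onset signal is read out as the LV value, and the US-time signal as reward minus the PV expectation. These are not the actual PVLV equations, only an illustration of why two separately learned quantities need not trade off one-for-one.

```python
def rw_update(w, target, lr):
    """Rescorla-Wagner / delta rule: move prediction w a fraction lr toward target."""
    return w + lr * (target - w)


def two_system_trials(n_trials=30, lv_lr=0.3, pv_lr=0.1, reward=1.0):
    """Toy LV/PV pair trained on repeated CS -> US pairings (hypothetical parameters)."""
    lv = 0.0  # LV-like (amygdala) value assigned to the CS
    pv = 0.0  # PV-like (ventral striatum) expectation of the US at its usual time
    history = []
    for _ in range(n_trials):
        da_cs = lv           # CS-onset burst reflects the learned CS value
        da_us = reward - pv  # US-onset burst is the part of the reward not yet expected
        history.append((da_cs, da_us))
        lv = rw_update(lv, reward, lv_lr)
        pv = rw_update(pv, reward, pv_lr)
    return history


for trial, (cs, us) in enumerate(two_system_trials()[:6]):
    print(f"trial {trial}: CS burst {cs:.2f}, US burst {us:.2f}")
```

With these settings the CS and US bursts coexist for several trials and their sum exceeds the reward; making `pv_lr` very small in this toy leaves the US burst largely intact even after the CS value has been acquired.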

The second core computational principle, which cuts across both the LV and PV systems in our model, is the use of opponent-processing pathways based on the reciprocal functioning of dopamine D1 versus D2 receptors (Mink, 1996; Frank, Loughry, & O’Reilly, 2001; Frank, 2005; Collins & Frank, 2014). The value of opponent-processing has long been recognized, in terms of enabling fundamentally relative (instead of absolute) comparisons (e.g., in color vision), and allowing more flexible forms of learning, for example learning a broad positive association with specific negative exceptions. Furthermore, the dopamine modulation of these pathways supports both the opposite valence-orientation of appetitive vs. aversive conditioning, as well as acquisition vs. extinction learning, across both systems. The importance of this opponent-processing framework is particularly evident in the extinction learning case, where the context-specificity of extinction can be understood as the learning of context-specific exceptions in the opponent pathway relative to the retained initial association.
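A minimal sketch of how context-specific extinction can fall out of opponent pathways follows. It assumes a hypothetical `OpponentValue` class in which positive prediction errors strengthen a context-free Go (D1-like) weight for each CS, while negative errors strengthen a NoGo (D2-like) weight keyed by both CS and context; the net value is Go minus NoGo. The learning rate, trial counts, and stimulus/context labels are illustrative assumptions, not PVLV parameters.

```python
from collections import defaultdict


class OpponentValue:
    """Hypothetical Go/NoGo value learner; not the PVLV equations."""

    def __init__(self, lr=0.2):
        self.lr = lr
        self.go = defaultdict(float)    # CS -> evidence for the US (acquisition)
        self.nogo = defaultdict(float)  # (CS, context) -> evidence against the US (extinction)

    def value(self, cs, context):
        return max(0.0, self.go[cs] - self.nogo[(cs, context)])

    def trial(self, cs, context, reward):
        delta = reward - self.value(cs, context)  # dopamine-like prediction error
        if delta > 0:
            self.go[cs] += self.lr * delta  # bursts strengthen the context-free Go weight
        else:
            self.nogo[(cs, context)] -= self.lr * delta  # dips add a context-specific exception
        return delta


model = OpponentValue()
for _ in range(50):
    model.trial("tone", "context A", 1.0)  # acquisition in context A
for _ in range(50):
    model.trial("tone", "context B", 0.0)  # extinction in context B
print(model.value("tone", "context B"), model.value("tone", "context A"))
```

Extinction in context B drives the value there toward zero while the acquisition weight is retained, so returning to context A restores the response: a toy version of ABA renewal.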

Thus, it is important to appreciate that we did not just add biological mechanisms in an ad hoc manner to account for specific data — our goal was to simplify and exploit essential computational mechanisms, while remaining true to the known biological and behavioral data. As the famous saying attributed to Einstein goes: “Everything should be made as simple as possible, but not simpler” — here we place more weight on the “but not simpler” part relative to the abstract RPE framework and associated models, in order to account for relevant biological data. Nevertheless, neuroscientists may still regard our models as overly abstract and computational — it is precisely this middle ground that we seek to occupy, so that we can build bridges between these levels, even though it may not fully satisfy many on either side. As such, this model represents a suitable platform for generating numerous novel, testable predictions across the spectrum from biology to behavior, and for understanding the dysfunctions that can arise within the dynamics of these brain systems, which have been implicated in a number of major mental disorders.

As noted earlier, PVLV builds upon various neural-level implementational models that have been proposed for the phasic dopamine system, integrating proposed neural mechanisms that explain the effects of both timing (Vitay & Hamker, 2014; Houk et al., 1995) and reward magnitude and probability on phasic dopamine responses (Tan & Bullock, 2008; Montague et al., 1996), as well as the neural mechanisms underlying inhibitory learning that contribute to extinction of responses to reward (Pan, Schmidt, Wickens, & Hyland, 2005; Redish et al., 2007). Several models also integrate timing with magnitude and probability signals, proposing that separate neural pathways may be involved in each type of computation (Brown et al., 1999; Contreras-Vidal & Schultz, 1999).

Also relevant, although not explicitly about the phasic dopamine signaling system, are recent neural models of fear conditioning in the amygdala. These models have highlighted the circuitry that contributes to the learning and extinction of responses to negative-valence stimuli, including neural circuits implementing the effects of context on learning and extinction (Moustafa, Gilbertson, Orr, Herzallah, Servatius, & Myers, 2013; Krasne, Fanselow, & Zelikowsky, 2011; Carrere & Alexandre, 2015). Relative to this wealth of prior neural modeling work, the PVLV model provides additional explanatory power by incorporating both the positive and negative valence pathways, along with excitatory and inhibitory learning in both systems and their effects on the phasic dopamine system, all grounded in a wide range of neural data that support the computations attributed to each part of the model.

Motivating Phenomena

Several empirical phenomena — and related neuro-computational considerations — have especially guided our thinking about phasic dopamine signaling as a functioning neurobiological system. These are briefly summarized here, with additional details provided later in the relevant sections.

The acquisition of phasic dopamine bursting for CSs, and its reduction for expected USs, are dissociable phenomena. The dissociation between these two aspects of phasic dopamine function is central to the PVLV model, as noted above, and reviewed extensively in our earlier papers (O’Reilly et al., 2007; Hazy et al., 2010). The evidence for this dissociation includes: 1) phasic bursting at both CS and US onset co-exists for a period of time before the latter is lost (e.g., Ljungberg et al., 1992); 2) at interstimulus intervals greater than about four seconds, very little loss of US-triggered bursting is observed in spite of extensive overtraining – even though substantial bursting to CS-onset is acquired (Fiorillo et al., 2008; Kobayashi & Schultz, 2008); and, 3) under probabilistic reward schedules the acquired CS signals come to reflect the expected value of the outcomes, but US-time signals adjust to reflect the range or variance of outcomes that occur (Tobler, Fiorillo, & Schultz, 2005). Thus, CS- and US-triggered bursting are neither mutually exclusive nor conserved, in contrast to simple TD models that predict a fixed-sum backward-chaining of phasic signals. There now seems to be a consensus among biologically-oriented modelers that there are two distinct (though interdependent) subsystems with multiple sites of plasticity (e.g., Tan & Bullock, 2008; Hazy et al., 2010; Vitay & Hamker, 2014). Under the PVLV framework, the acquisition of phasic dopamine cell bursting at CS-onset (i.e., LV learning) is mapped to the amygdala, while the loss of phasic bursting at US-onset (PV learning) is mapped to the ventral striatum (VS, including the Nucleus Accumbens, NAc). In the present version of the model, we also include an explicit lateral habenula (LHb) component that is driven by the VS to cause phasic pauses in dopamine cell firing, e.g., for omissions of expected rewards.
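For contrast, the fixed-sum property attributed to simple TD models above can be checked with a small tabular TD(0) sketch. It assumes a deterministic chain of post-CS time steps, no discounting (gamma = 1), a pre-CS state whose value stays at zero because CS onset is unpredictable, and reward delivered on the final transition; `n_steps`, `alpha`, and the trial count are arbitrary choices.

```python
import numpy as np


def run_td(n_steps=5, n_trials=200, alpha=0.2, reward=1.0):
    """Tabular TD(0) on a CS -> US chain; returns per-trial prediction errors."""
    V = np.zeros(n_steps)  # values of post-CS time steps (pre-CS value fixed at 0)
    traces = []
    for _ in range(n_trials):
        deltas = [V[0] - 0.0]  # CS onset: jump from the zero-valued pre-CS state
        for t in range(n_steps):
            r = reward if t == n_steps - 1 else 0.0        # US on the last transition
            v_next = V[t + 1] if t + 1 < n_steps else 0.0  # terminal value is 0
            delta = r + v_next - V[t]                      # undiscounted TD error
            V[t] += alpha * delta
            deltas.append(delta)
        traces.append(deltas)
    return np.array(traces)


traces = run_td()
print("first trial:", np.round(traces[0], 2))
print("last trial: ", np.round(traces[-1], 2))
print("per-trial sums:", np.unique(np.round(traces.sum(axis=1), 6)))
```

Because the within-trial errors telescope, every trial's signals sum to the reward: training moves the burst from the US to the CS, and any signal gained at the CS is matched by signal lost elsewhere in the trial.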

Rewards that occur earlier than expected produce phasic dopamine cell bursting, but no pausing at the usual time of reward, whereas rewards that occur late produce both signals. While a simple RPE formalism predicts that both early and late rewards should exhibit both bursts and pauses, the empirically observed result (Hollerman & Schultz, 1998; Suri & Schultz, 1999) actually makes better sense ecologically: once an expected reward is obtained an agent should not continue to expect it. We interpret this within a larger theoretical framework in which a temporally-precise goal-state representation for a particular US develops in the OFC as each CS-US association is acquired. The occurrence of a CS activates this OFC representation, which is then maintained via robust frontal active-maintenance mechanisms, and it is cleared when the US actually occurs (i.e., when the goal outcome is achieved). It is the clearing of this expectation representation that prevents the pause from occurring after early rewards. This role of OFC active maintenance in bridging between the two systems in PVLV (LV / CS and PV / US) replaces the temporal chaining dynamic in the TD model, and provides an important additional functional and anatomical basis for the specialization of these systems: the PV (VS) system depends critically on OFC input for learning when to expect US outcomes, while the LV (amygdala) system is more strongly driven by sensory inputs that then acquire CS status through learning. In other words, the LV / amygdala system is critical for sign tracking while the PV / VS system is critical for goal tracking (Flagel et al., 2010; see General Discussion). In the present model, we do not explicitly simulate the active maintenance dynamics of the OFC system, but other models have done so (Frank & Claus, 2006; Pauli, Hazy, & O’Reilly, 2012; Pauli, Atallah, & O’Reilly, 2010).
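The role proposed here for clearing the OFC expectation can be caricatured in a few lines. In this sketch (the function name, the discrete time steps, and the unit-sized signals are illustrative assumptions), a single expectation is switched on at CS onset; an on-time US is fully discounted, any other US produces a burst, and a pause occurs at the expected time only if the expectation is still active. Setting `clear_on_us=False` removes the clearing step and reproduces the simple RPE prediction of a pause after early rewards.

```python
def dopamine_trace(us_time, expected_time, n_steps=10, clear_on_us=True):
    """Toy phasic signal per time step: +1 burst, -1 pause, 0 baseline."""
    expecting = True  # hypothetical OFC goal state, switched on at CS onset (t = 0)
    trace = [0.0] * n_steps
    for t in range(n_steps):
        if t == us_time:
            # an on-time, expected US is fully discounted; otherwise it is a surprise
            trace[t] = 0.0 if (expecting and t == expected_time) else 1.0
            if clear_on_us:
                expecting = False  # goal achieved: the expectation is cleared
        elif t == expected_time and expecting:
            trace[t] = -1.0  # expected US did not arrive: pause
    return trace


print("early:", dopamine_trace(us_time=2, expected_time=5))
print("late: ", dopamine_trace(us_time=7, expected_time=5))
print("early, no clearing:", dopamine_trace(2, 5, clear_on_us=False))
```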

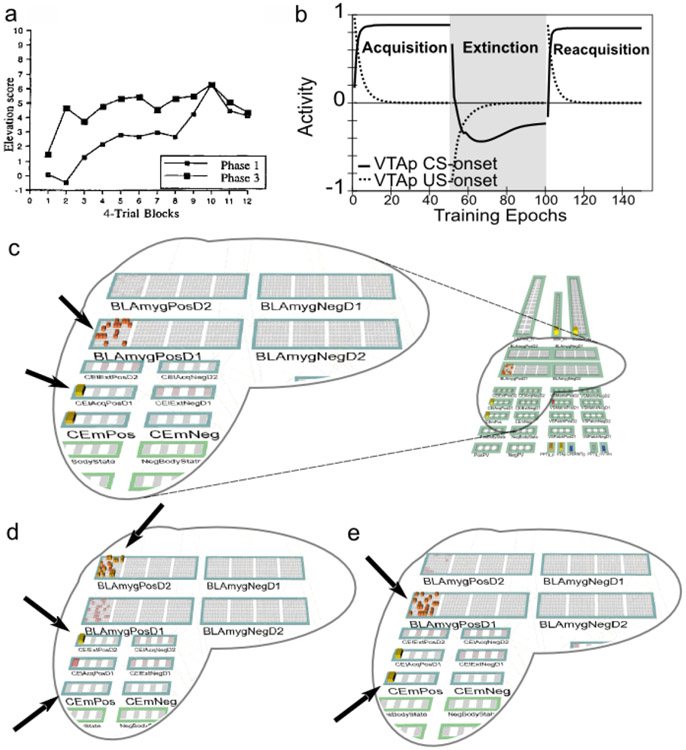

Extinction is not simply the unlearning of acquisition. Extinction and the related phenomena of reacquisition, spontaneous recovery, renewal, and reinstatement exhibit clear idiosyncrasies in comparison with initial acquisition. For example, reacquisition generally proceeds faster after extinction than does original acquisition (rapid reacquisition; Pavlov, 1927; Ricker & Bouton, 1996; Rescorla, 2003), and a single unpredicted presentation of a US after extinction can reinstate CRs to near pre-extinction levels (reinstatement; Pavlov, 1927; Bouton, 2004). In addition, extinction learning has a significantly stronger dependency on context than does initial acquisition, as demonstrated in the renewal paradigm (Bouton, 2004; Corcoran, Desmond, Frey, & Maren, 2005; Krasne et al., 2011). The clear implication is that extinction learning is not the symmetrical weakening of weights previously strengthened during acquisition, which a simple RPE formalism typically assumes, but instead involves the strengthening of a different set of weights that serve to counteract the effects of the acquisition weights. In support of this inference, much empirical evidence implicates extinction-related plasticity in different neurobiological substrates from those implicated in initial acquisition (e.g., Bouton, 2004; Herry, Ciocchi, Senn, Demmou, Müller, & Lüthi, 2008; Quirk & Mueller, 2008; Bouton, 2011). These phenomena support the use of opposing pathways — one for acquisition and another for extinction — within both the LV-learning amygdala subsystem and the PV-learning VS subsystem.
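The contrast between unlearning and counteracting weights can be made concrete with a toy comparison of trials-to-criterion. Both learners below are illustrative assumptions: `single_weight` is a plain delta rule in which extinction erases the acquisition weight, while `opponent` keeps a Go weight and learns a separate NoGo weight during extinction. The opponent version additionally assumes that positive errors weaken NoGo as well as strengthen Go; this is one simple way to obtain rapid reacquisition, not a claim about the specific mechanism used in PVLV.

```python
def single_weight(phases, lr=0.2):
    """Plain delta rule: extinction simply unlearns the acquisition weight."""
    w, out = 0.0, []
    for reward, n_trials in phases:
        vals = []
        for _ in range(n_trials):
            vals.append(w)  # prediction at the start of the trial
            w += lr * (reward - w)
        out.append(vals)
    return out


def opponent(phases, lr=0.2):
    """Hypothetical Go/NoGo pair: extinction grows NoGo instead of erasing Go."""
    go, nogo, out = 0.0, 0.0, []
    for reward, n_trials in phases:
        vals = []
        for _ in range(n_trials):
            v = max(0.0, go - nogo)
            vals.append(v)
            delta = reward - v
            if delta > 0:
                go += lr * delta
                nogo = max(0.0, nogo - lr * delta)  # assumption: bursts also weaken NoGo
            else:
                nogo -= lr * delta  # dips strengthen NoGo
        out.append(vals)
    return out


def trials_to_criterion(vals, criterion=0.9):
    """Number of training trials before the prediction first reaches criterion."""
    return next(i for i, v in enumerate(vals) if v >= criterion)


phases = [(1.0, 40), (0.0, 40), (1.0, 40)]  # acquisition, extinction, reacquisition
for name, learner in [("single weight", single_weight), ("opponent", opponent)]:
    acq, _, reacq = learner(phases)
    print(name, trials_to_criterion(acq), trials_to_criterion(reacq))
```

With these settings, reacquisition takes as long as original acquisition for the single-weight learner (11 trials each), but only 5 trials for the opponent learner.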

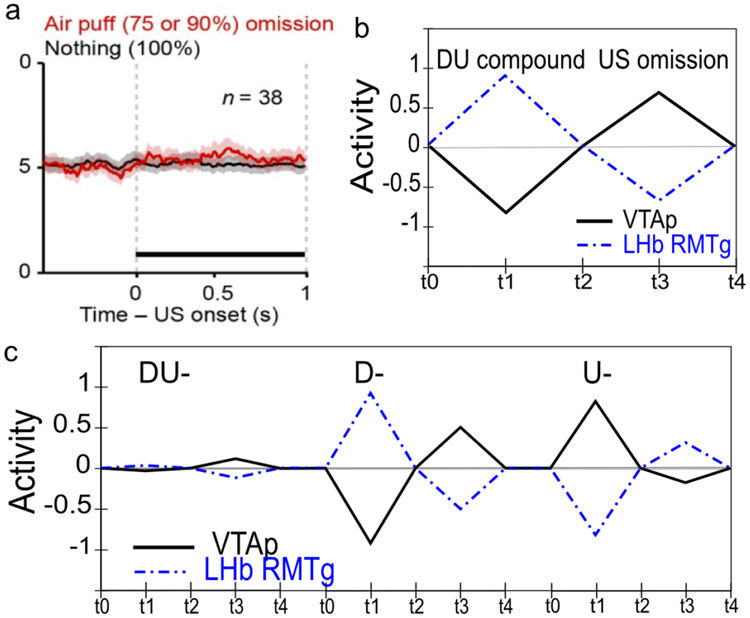

Although logically related, the loss of bursting at the time of an expected reward and pausing when rewards are omitted are dissociable phenomena. There is evidence that the mechanisms involved in the former are relatively temporally imprecise, compared to the latter, which are necessarily more punctate since they cannot begin until it has been determined that a reward has, in fact, been omitted. Rewards delivered early show progressively more bursting the earlier they are, implying the mechanisms involved in blocking expected rewards are ramping up before the expected time of reward (Fiorillo et al., 2008; Kobayashi & Schultz, 2008). Further, there is a slight, but statistically significant, ramping decrease in tonic firing rate prior to expected rewards (Bromberg-Martin, Matsumoto, & Hikosaka, 2010a). On the other hand, the mechanisms implicated in producing pauses for omitted rewards are more temporally precise, with an abrupt, discretized onset (Matsumoto & Hikosaka, 2009b), and no apparent sign of early increases in firing in the lateral habenula (LHb; Matsumoto & Hikosaka, 2009b). This dissociation, along with congruent anatomical data, motivates a distinction between the inhibitory shunting of phasic bursts (hypothesized to be accomplished by known VS inhibitory projections directly onto dopamine neurons; Joel & Weiner, 2000), and a second, probably collateral pathway through the LHb (and RMTg) that is responsible for pausing tonic firing. This latter pathway enables the system to make the determination that a specific expected event has not in fact occurred (Brown et al., 1999; O’Reilly et al., 2007; Tan & Bullock, 2008; Hazy et al., 2010; and see Vitay & Hamker, 2014, for an excellent review and discussion of this important problem space).
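The proposed contrast between a gradually ramping shunt and a punctate omission signal can be sketched as two separate functions. The linear ramp, its 4-step width, and the expected US time of 10 are purely illustrative assumptions (the text establishes only that the blocking mechanism ramps up before the expected time); the sketch covers rewards delivered up to the expected time and omissions at that time, not late rewards.

```python
def shunt(t, expected_t=10.0, ramp=4.0):
    """Hypothetical VS shunting strength: ramps linearly from 0 to 1 before expected_t."""
    return min(1.0, max(0.0, (t - (expected_t - ramp)) / ramp))


def us_burst(t, reward=1.0, expected_t=10.0, ramp=4.0):
    """Residual burst for a reward delivered at time t <= expected_t."""
    return reward * (1.0 - shunt(t, expected_t, ramp))


def omission_pause(t, expected_t=10.0):
    """Hypothetical LHb-driven pause: punctate, only at the expected US time."""
    return -1.0 if t == expected_t else 0.0


for t in (2.0, 6.0, 8.0, 10.0):
    print(t, us_burst(t), omission_pause(t))
```

Earlier rewards escape more of the ramping shunt and so yield larger bursts, whereas the omission signal has no graded lead-in.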

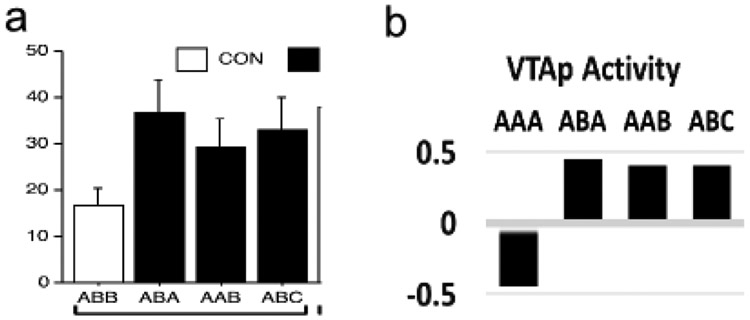

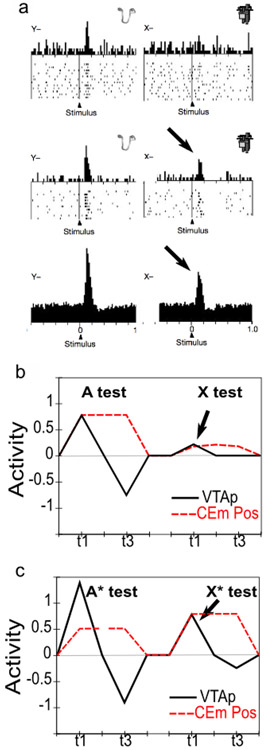

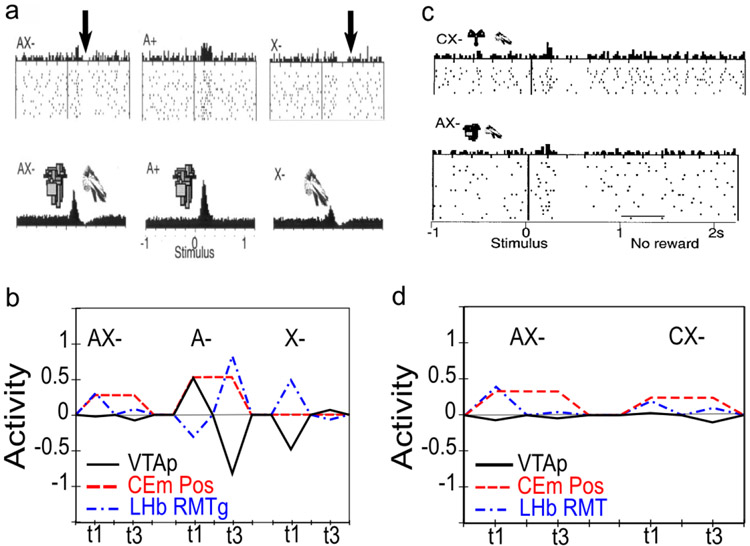

Conditioned inhibitors acquire the ability to generate phasic pauses in dopamine cell firing when presented alone. When a novel stimulus (conditioned inhibitor, CI, denoted X) is presented along with a previously trained CS (denoted A), and trained with the non-occurrence of an expected appetitive outcome (i.e., AX−), the CI takes on a negative valence association and produces a phasic pause in dopamine firing (Tobler et al., 2003). This represents an important point of overlap between appetitive and aversive conditioning, since a CI stimulus (X−) behaves very much like a CS directly paired with an aversive US, as reported by, e.g., Mirenowicz and Schultz (1996). However, in the CI case, there is no overt negative US involved — only the absence of a positive US. Thus, the conditioned inhibition paradigm helps inform ideas about the role of USs in driving CS learning. In our framework, aversive CSs come to excite the LHb via the striatum (and pallidum), to produce dopamine cell pauses. Biologically, there is a pathway through the striatum to the LHb, in addition to well-documented direct US inputs to LHb, and electrophysiological results consistent with the role of the striatal pathway in driving pauses in dopamine firing via the LHb (Hong & Hikosaka, 2013). Preliminary direct evidence for a role of the LHb in conditioned inhibition has recently been reported (Laurent, Wong, & Balleine, 2017).

In Rescorla’s (1969) summation test of conditioned inhibition, conditioned inhibitors tested with a different conditioned stimulus can immediately prevent both the expression of acquired conditioned responses and phasic dopamine pauses. Specifically, this paradigm involves first training A+ and separately B+; then training AX− (i.e., conditioned inhibition training), but not BX−; and then, finally, testing BX−. At the otherwise expected time of the B+ US, there is no dopamine pause for the BX− case (Tobler et al., 2003), indicating that X has acquired a generalized ability to negate the expectation of the US and is not just specific to the AX compound. Furthermore, presentation of the BX compound at test also prevents the expression of acquired B+ CRs (e.g., salivation, food-cup approach) (Tobler et al., 2003), implying that the acquired X inhibitory representation has reached deep subcortical behavioral pathways.

Conditioned inhibitors do not produce bursting at the expected time of the US when presented alone. According to a simple RPE formalism of conditioned inhibition, the X stimulus should acquire negative value itself and also serve to drive learning that predicts its occurrence, all trained by the dopamine pauses. Subsequently, when the X is presented by itself (without A-driven expectation of getting a reward), an unopposed expectation of the negative (reward omission) outcome should trigger a positive dopamine burst at the time when the US would have otherwise occurred. This is analogous to the modest relief bursting reported when a trained CS is presented but the aversive US is omitted at test (Matsumoto & Hikosaka, 2009a; Matsumoto, Tian, Uchida, & Watabe-Uchida, 2016), or when a sustained aversive US is terminated (Brischoux et al., 2009). In fact, however, no such X− relief burst was detected by Tobler et al. (2003) — even though they explicitly looked for one.
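The simple RPE predictions described in the last two paragraphs can be reproduced with a standard Rescorla-Wagner simulation, using the stimulus labels from the text. The learning rate, trial counts, and the interleaving of A+ with AX− trials are illustrative assumptions. The learned value of X alone stands in for the expectation at the usual US time, so the error on an X-alone test trial is the relief burst that such a model predicts and that Tobler et al. (2003) did not observe.

```python
from collections import defaultdict


def train(V, stimuli, reward, n_trials, lr=0.2):
    """Rescorla-Wagner: all present stimuli share one summed prediction error."""
    for _ in range(n_trials):
        delta = reward - sum(V[s] for s in stimuli)
        for s in stimuli:
            V[s] += lr * delta


V = defaultdict(float)
train(V, ("A",), 1.0, 100)        # A+
train(V, ("B",), 1.0, 100)        # B+
for _ in range(200):              # conditioned inhibition training
    train(V, ("A",), 1.0, 1)      # A+ trials keep A's value up
    train(V, ("A", "X"), 0.0, 1)  # AX- trials drive X negative

summation_error = 0.0 - (V["B"] + V["X"])  # BX- test: no pause predicted
relief_error = 0.0 - V["X"]                # X alone: RPE predicts a relief burst
print(round(V["X"], 3), round(summation_error, 3), round(relief_error, 3))
```

The model matches the summation result (no pause for BX−) but also predicts a burst of roughly the full reward magnitude for X alone, which is the prediction that failed empirically.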

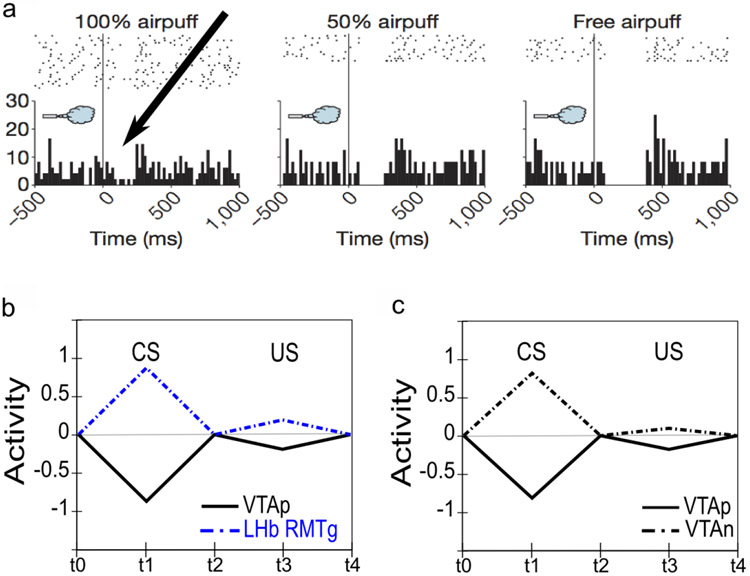

Phasic dopamine responses to aversive outcomes include both pauses and bursts, with distinct subpopulations identifiable. The nature of phasic dopamine responses to primary aversive outcomes has been a topic of long-standing controversy with multiple studies reporting either pauses (e.g., Mirenowicz & Schultz, 1996), bursts (Horvitz, Stewart, & Jacobs, 1997; Horvitz, 2000), or a mixture of both including cells exhibiting a biphasic response pattern (Matsumoto & Hikosaka, 2009a). Although there is now a clear consensus that bursting responses for aversive events do occur, the interpretation remains controversial (e.g., Fiorillo, 2013; Schultz, 2016). All things considered, the most parsimonious interpretation may be that different populations of dopamine neurons have different response profiles, with a majority (generally more laterally-located) displaying a predominantly valence-congruent (RPE-consistent) response profile (i.e., pausing for aversive outcomes), while a smaller (more medial) subpopulation responds with bursting for aversive outcomes. Functionally, it may be that both forms of response make sense: for instrumental learning based on reinforcing actions that produce “good” outcomes and punishing those leading to “bad” ones (e.g., Thorndike, 1898, 1911; Frank, 2005), valence-congruent dopamine signaling would seem essential to prevent confusion across both appetitive and aversive contexts; on the other hand, one or more smaller specialized subpopulation(s) displaying bursting responses for aversive outcomes may be important for learning to suppress freezing and enable behavioral exploration for active avoidance learning.
In line with this latter idea, it now appears there may be at least two small subpopulations of dopamine cells that respond with unequivocal bursting to aversive events: 1) a small subpopulation of posteromedial VTA neurons that projects narrowly to subareas of the accumbens shell and to certain ventromedial prefrontal areas that may play a role in the suppression of freezing (Maier & Watkins, 2010; Moscarello & LeDoux, 2013; Lammel et al., 2012); and, 2) even more recently, a second subpopulation of aversive-bursting dopamine cells has been described in the posterolateral aspect of the SNc, with this population projecting only to the caudal tail of the dorsal striatum and seemingly involved in simple avoidance learning (Menegas, Bergan, Ogawa, Isogai, Venkataraju, Osten, Uchida, & Watabe-Uchida, 2015; Menegas, Babayan, Uchida, & Watabe-Uchida, 2017; Menegas, Akiti, Uchida, & Watabe-Uchida, 2018). Aversive-bursting dopamine cells are included in the PVLV framework as a second, distinct dopamine unit, as discussed in Neurobiological Substrates and Mechanisms.

Dopamine pauses to aversive outcomes appear not to be fully discounted through learned expectations. For the subset of dopamine neurons that exhibit valence-congruent pauses to aversive outcomes and CSs, these pauses seem not to be fully predicted away (Matsumoto & Hikosaka, 2009a; Fiorillo, 2013). Behaviorally, it makes sense not to fully suppress aversive outcome signals since these outcomes remain undesirable, even potentially life-threatening, and an agent should continue to be biased to learn to avoid them. In contrast, the discounting of expected appetitive outcomes would seem to serve the beneficial purpose of biasing the animal toward exploring for even better opportunities. Thus, there are several fundamental asymmetries between the appetitive and aversive cases that sensibly ought to be incorporated into functional models.
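The asymmetry described here can be written as a one-line difference in how a learned expectation discounts the US-time signal. In this sketch, `aversive_discount` is a hypothetical fraction (0.5 is arbitrary) controlling how much of an expected aversive outcome is predicted away; appetitive outcomes are fully discounted, as in the standard RPE account.

```python
def us_signal(magnitude, expected, valence, aversive_discount=0.5):
    """Toy valence-congruent US-time dopamine signal (positive = burst, negative = pause)."""
    if valence == "appetitive":
        return magnitude - expected  # fully discounted when fully expected
    if valence == "aversive":
        # only part of the expected punishment is predicted away
        return -(magnitude - aversive_discount * expected)
    raise ValueError(f"unknown valence: {valence!r}")


for valence in ("appetitive", "aversive"):
    print(valence, us_signal(1.0, 0.0, valence), us_signal(1.0, 1.0, valence))
```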

Both appetitive and aversive processing involve many of the same neurobiological substrates — in particular the amygdala and the lateral habenula. Overwhelming empirical evidence shows that the amygdala, ventral striatum, and lateral habenula all participate in both appetitive and aversive processing (Paton, Belova, Morrison, & Salzman, 2006; Lee, Groshek, Petrovich, Cantalini, Gallagher, & Holland, 2005; Cole, Powell, & Petrovich, 2013; Belova, Paton, Morrison, & Salzman, 2007; Shabel & Janak, 2009; Roitman, Wheeler, & Carelli, 2005; Setlow, Schoenbaum, & Gallagher, 2003; Donaire, Morón, Blanco, Villatoro, Gámiz, Papini, & Torres, 2019; Matsumoto & Hikosaka, 2009b; Stopper & Floresco, 2013). This implies that the processing of primary aversive events must coexist without disrupting the processing of appetitive events in these substrates, despite all the important differences between these basic situations as noted above. Properly integrating yet differentiating these two different valence contexts within a coherent overall framework presents an important challenge for any comprehensive model of the phasic dopamine signaling system. We find that an opponent processing framework — based on the opposite effects of D1 and D2 dopamine receptors on cells in the striatum and amygdala — can go a long way towards meeting this challenge, combined with an architecture that specifically segregates the processing of individual USs.

Pavlovian conditioning generally requires a minimum 50-100 msec interval between CS-onset and US. Our original PVLV model emphasized the problem that a phasic dopamine signal generated by CS onset could create a positive feedback loop of further learning to that CS, leading to saturated synaptic weights (O’Reilly et al., 2007; Hazy et al., 2010). We now account for data indicating CSs must precede USs by a minimum of 50-100 msec to drive conditioned learning (Schneiderman, 1966; Smith, 1968; Smith, Coleman, & Gormezano, 1969; Mackintosh, 1974; Schmajuk, 1997). With this constraint in place, it is not possible for CS-driven dopamine to reinforce itself, preventing the positive feedback problem. Incorporating this change now allows our model to include the effects of phasic dopamine on CS learning in the amygdala (in addition to the important role that US inputs play in driving learning there, as captured in the prior models), supporting phenomena such as second-order conditioning in the BLA (Hatfield, Han, Conley, & Holland, 1996).
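The positive-feedback problem, and the way a minimum CS-US interval removes it, can be shown with a toy CS-only simulation. The sketch assumes (hypothetically) that a CS evokes a burst proportional to its current value at a lag of `da_lag_ms` after CS onset, and that learning is enabled only when the teaching signal follows CS onset by at least `min_lag_ms`. The 50 ms value is the lower end of the interval cited above; the zero default lag is a simplification, not a measured latency.

```python
def cs_only_trials(n_trials, min_lag_ms, da_lag_ms=0.0, lr=0.1, w0=0.5):
    """Repeated CS presentations with no US; returns the final CS weight."""
    w = w0  # initial CS value, e.g., left over from earlier conditioning
    for _ in range(n_trials):
        da = w  # CS onset drives a burst proportional to its learned value
        if da_lag_ms >= min_lag_ms:  # is the burst late enough to act as a teaching signal?
            w += lr * da  # the CS-evoked burst reinforces the CS itself
    return w


print("no minimum interval:", round(cs_only_trials(50, min_lag_ms=0.0), 2))
print("50 ms minimum:      ", cs_only_trials(50, min_lag_ms=50.0))
```

Without the constraint, the weight grows geometrically (0.5 times 1.1 to the 50th power here), which is the runaway saturation described in the text. With the constraint, the CS cannot train itself, and its value could only change through later-arriving teaching signals, none of which are simulated here.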

Conceptual Overview of the PVLV Model

In this section we provide a high-level, conceptual overview of the PVLV model and how all the different parts fit together. Figure 1 shows how the fundamental LV vs. PV distinction cuts through a standard hierarchical organization of brain areas at three different levels: cortex, basal ganglia (BG), and brain stem. Cortex is generally thought to represent higher-level, more abstract, dynamic encodings of sensory and other information, which provides a basis for learning about the US-laden value of different states of the world (in standard reinforcement learning terminology). The basolateral amygdala (BLA) is described as having a cortex-like histology in its neural structure (e.g., Pape & Pare, 2010), but it also receives direct US inputs from various brain stem areas. Thus, it serves nicely as a critical hub / connector area that learns to associate these cortical state representations with US outcomes, which is the core of the LV function in the PVLV framework. In contrast, the central amygdala (CEA) has cell types and connectivity characteristic of the striatum of the basal ganglia (Cassell, Freedman, & Shi, 1999), and according to classic BG models (e.g., Mink, 1996; Frank et al., 2001; Frank, 2005; Collins & Frank, 2014), it should be specialized for selecting the best overall interpretation of the situation by separately weighing evidence-for (Go, direct pathway, CElON) vs. evidence-against (NoGo, indirect pathway, CElOFF) in a competitive, opponent-process dynamic (Ciocchi, Herry, Grenier, Wolff, Letzkus, Vlachos, Ehrlich, Sprengel, Deisseroth, Stadler, Müller, & Lüthi, 2010; Li, Penzo, Taniguchi, Kopec, Huang, & Li, 2013).

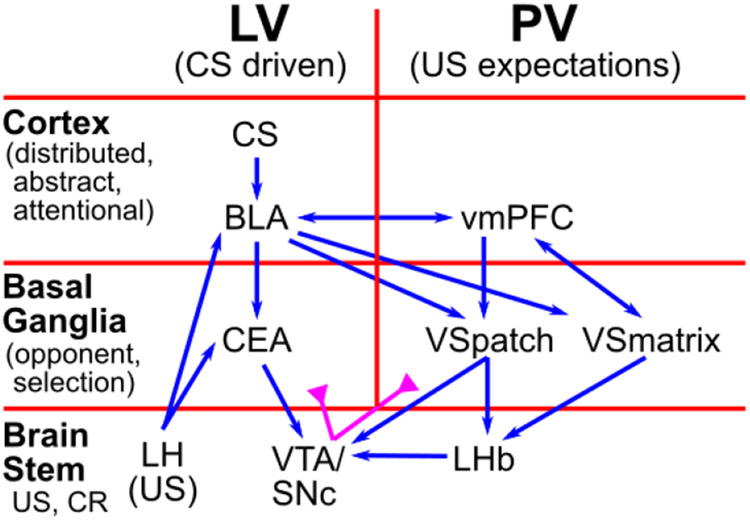

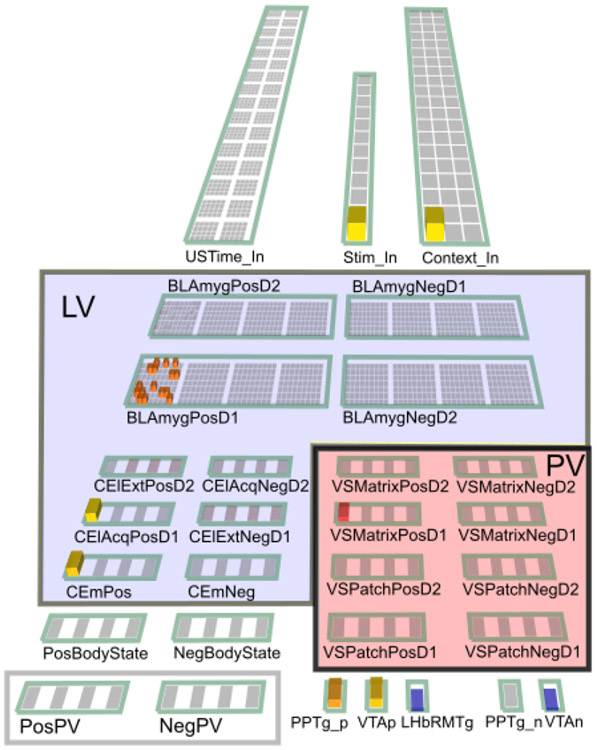

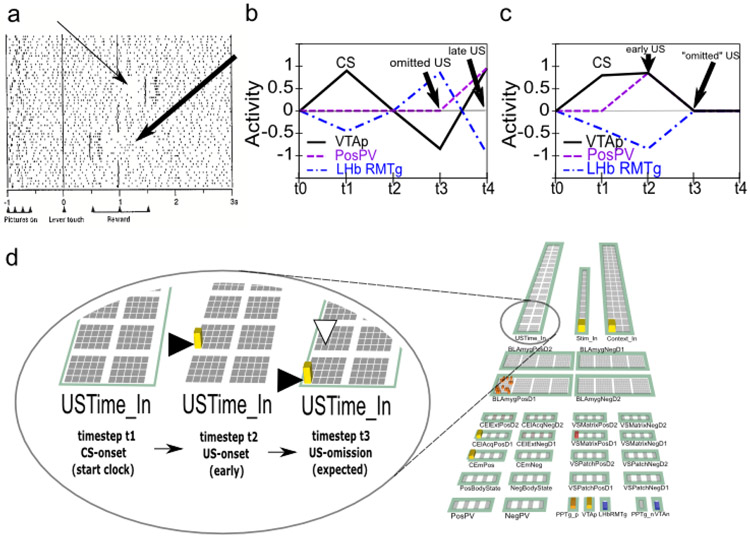

Figure 1:

Overview of PVLV: The main division into LV (learned value) and PV (primary value) cuts across a hierarchy of function in cortical, basal ganglia, and brain stem areas. The cortex provides high-level, abstract, dynamic state representations, and the basolateral amygdala (BLA), which has a cortex-like histology, links these with specific US outcomes. The basal-ganglia-like central amygdala (CEA) quantitatively evaluates the overall evidence for the occurrence of reward or punishment using opponent-processing pathways, and drives phasic dopamine bursts in the midbrain dopamine areas (VTA, SNc) if this evaluation is in favor of expected rewards. BLA also triggers updating of US expectations in ventral / medial prefrontal cortex (vmPFC), especially the OFC (orbitofrontal cortex), which then drives another opponent-process evaluation in the ventral striatum patch-like areas (VSpatch), the results of which can shunt dopamine bursts for expected USs, and drive pauses in dopamine firing when an expected US fails to arrive, via projections to the lateral habenula (LHb). Various brain stem areas (e.g., the lateral hypothalamus, LH) drive US inputs into the system, and are also driven to activate conditioned responses (CRs).

Thus, the CEA in our model takes the higher-dimensional, distributed, contextualized representations from BLA and boils them down to a simpler, quantitative evaluation of how likely a particular US outcome is given the current cortical state representations. When this evaluation results in an increased expectation of positive outcomes, it drives phasic bursting in the VTA/SNc dopamine nuclei. This occurs via direct connections, and via the pedunculopontine tegmental nucleus (PPTg), which may help in driving bursting as a function of changes in expectations, as sustained activity in BLA does not appear to drive further phasic dopamine bursting (e.g., Ono, Nishijo, & Uwano, 1995). In summary, through these steps, this stack of LV areas is responsible for driving phasic dopamine bursting in response to CS inputs.
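One way to read the change-detection role attributed to the PPTg is as a positive-rectified temporal difference of CEm output. The minimal sketch below assumes exactly that form (the function name and discrete time steps are hypothetical): a burst occurs only when CEm activity increases, so sustained activity adds no further bursts and a falling signal produces no dip on this pathway.

```python
def lv_bursts(cem_trace):
    """Positive-rectified change in CEm activity across successive time steps.

    Hypothetical sketch of PPTg-style change detection: a burst occurs only when
    CEm activity rises; sustained or falling activity contributes nothing.
    """
    bursts = []
    prev = 0.0
    for x in cem_trace:
        bursts.append(max(0.0, x - prev))
        prev = x
    return bursts


# CS comes on at step 2, is held through step 4, then goes off
print(lv_bursts([0.0, 0.0, 0.8, 0.8, 0.8, 0.0]))
# -> [0.0, 0.0, 0.8, 0.0, 0.0, 0.0]
```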

The opponent organization scheme in the amygdala also serves to address the subtly challenging problem of learning about the absence of an expected US outcome, as occurs during extinction training. This is challenging from a learning perspective because the absence of a US is a “non-event” and thus cannot drive learning in the traditional activation-based manner; further, it remains unclear which of the indefinitely many non-occurring events should direct learning. The explicit representation of absence in the opponent-processing scheme solves this problem by using selective modulatory, permissive connections from acquisition-coding to extinction-coding units, so that only USs with some expectation of occurrence can accumulate evidence about non-occurrence. Thus, only at the last step in the pathway is the US-specific nature of the representations abstracted away to the pure value-coding nature of the effectively scalar phasic dopamine signal, in contrast to many other computational models that only deal with this abstract value signal (e.g., standard TD models). In addition, learning constrained to separate representations for different types of rewards (punishments) can directly account for phenomena such as unblocking by reward type, which is otherwise challenging for value-only models like TD (e.g., Takahashi, Batchelor, Liu, Khanna, Morales, & Schoenbaum, 2017) and which depends on the activity of dopamine neurons (Chang, Gardner, Di Tillio, & Schoenbaum, 2017).
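The permissive gating idea can be illustrated with a toy learner that keeps one acquisition weight and one extinction weight per US. In the sketch below, which is a hypothetical rule and not PVLV's implementation, extinction learning on US-absent trials is scaled by the current acquisition weight, so a US that was never expected accumulates no evidence of absence. Setting `gated=False` shows the alternative in which every absent US is "learned about".

```python
def simulate(phases, lr=0.3, gated=True):
    """Toy acquisition/extinction learning for several US-specific channels.

    phases: list of (n_trials, us_present), where us_present holds 0/1 per US and
    the same CS is present on every trial. Names and the learning rule are hypothetical.
    """
    n_us = len(phases[0][1])
    acq = [0.0] * n_us  # acquisition-coding weights (evidence for each US)
    ext = [0.0] * n_us  # extinction-coding weights (evidence against each US)
    for n_trials, us_present in phases:
        for _ in range(n_trials):
            for i in range(n_us):
                if us_present[i]:
                    # US occurrence drives acquisition learning
                    acq[i] += lr * (1.0 - acq[i])
                else:
                    # US absent: with gating, extinction learning is scaled by the
                    # acquisition weight, so never-expected USs are ignored
                    gate = acq[i] if gated else 1.0
                    ext[i] += lr * gate * (1.0 - ext[i])
    net = [max(0.0, a - e) for a, e in zip(acq, ext)]
    return acq, ext, net


# US 0 is paired with the CS, then extinguished; US 1 never occurs
phases = [(10, [1, 0]), (20, [0, 0])]
acq, ext, net = simulate(phases)
print(ext[1], net[0] < 0.05)  # ext[1] stays 0.0; the expectation of US 0 is cancelled
_, ext_ungated, _ = simulate(phases, gated=False)
print(ext_ungated[1] > 0.9)   # without gating, "absence" of US 1 is learned as well
```

Note that the acquisition weight for US 0 is left intact during extinction; the expectation is cancelled by an added layer of extinction learning rather than erased.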

Bridging the CS-driven US expectations into the PV side of the system, the BLA also drives areas in the orbitofrontal cortex (OFC) and ventromedial prefrontal cortex (vmPFC), particularly the OFC (Figure 1). Projections from this cortical level to ventral striatum drive a BG-like evaluation of evidence for and against the imminent occurrence of specific USs at particular points in time. Cells in the patch-like compartment of the VS send direct inhibitory projections to the midbrain dopamine cells so as to produce a shunt-like inhibition that blocks dopamine bursts that would otherwise arise from an appetitive US. Furthermore, via a pallidal pathway, the VSpatch also drives a more temporally precise activation (disinhibition) of the LHb that causes pausing (dips) of tonic dopamine firing if not offset by excitatory drive from an actual US occurrence. In summary, this PV stack of areas works together to anticipate and cancel expected US outcomes.

There is another pathway through the VS that does not fit as cleanly within the simple LV / PV distinction, which we hypothesize is mediated by the matrix-like compartments within the VS (VSmatrix). This pathway is necessary for supporting the ability of CS inputs to drive phasic dipping / pausing of dopamine firing, which appears to be exclusively driven by the LHb in response to VS inputs (Christoph, Leonzio, & Wilcox, 1986; Ji & Shepard, 2007; Matsumoto & Hikosaka, 2007; Hikosaka, Sesack, Lecourtier, & Shepard, 2008; Matsumoto & Hikosaka, 2009b; Hikosaka, 2010). We are not aware of any evidence supporting a direct projection from the amygdala to the LHb (Herkenham & Nauta, 1977), which would otherwise be a more natural pathway for CS activation of phasic dipping according to the overall PVLV framework. An important further motivation for this VSmatrix pathway is that, by hypothesis, it is also responsible for gating information through the thalamus so as to produce robust maintenance of US outcome / goal state representations in OFC (Frank & Claus, 2006; Pauli et al., 2012; Pauli et al., 2010). Such working-memory-like goal state representations are hypothesized to be important for supporting goal-directed (vs. habitual) instrumental behavior, which is known to depend on intact OFC (e.g., Gallagher, McMahan, & Schoenbaum, 1999). Thus, the very same plasticity events occurring at corticostriatal synapses onto VSmatrix cells could be responsible for learning to gate US information into OFC working memory in response to a particular CS, while also acquiring an ability to drive phasic dopamine signals (via LHb) in response to those same CS events.

Appetitive / Aversive and Acquisition / Extinction Pathways

The above overview is framed in terms of appetitive conditioning, as that is the simplest and most well-established case. However, a critical feature of the current model is that it incorporates pathways within the LV and PV systems for processing aversive USs as well, leveraging the same opponent-process dynamics, with an appropriate sign flip, as described above. Figure 2 shows the full set of pathways and areas in the PVLV model. As in the BG, each pathway is characterized by a preponderance of dopamine D1 vs. D2 receptors, which then drives learning from phasic bursts (D1) or dips (D2) (e.g., Mink, 1996; Frank et al., 2001; Frank, 2005; Gerfen & Surmeier, 2011). Thus, assuming the standard RPE form of dopamine firing, D1-dominated pathways are strengthened by unexpected appetitive outcomes, while D2-dominated ones are strengthened by unexpected aversive outcomes. This differential dopamine receptor expression can therefore account for the differential responses of appetitive- vs. aversive-coding neurons in the amygdala (LV), as shown in Figure 2. Although the BLA is not strongly topographically organized, we assume a similar opponency between subsets of neurons, as is more clearly demonstrated for the central amygdala CElON vs. CElOFF cells (Ciocchi et al., 2010; Li et al., 2013). In addition to these lateral pathway neurons, we include a final medial output pathway (CEm) that computes the net balance between on vs. off for each valence pathway (appetitive and aversive).
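The receptor-dependent learning just described can be summarized as a three-factor update whose sign depends on D1 vs. D2 dominance, plus a CEm readout of the on/off balance. The sketch below assumes a simple multiplicative form (presynaptic × postsynaptic × dopamine), weights clipped to [0, 1], and a CEm output floored at zero; all of these, and the function names, are hypothetical simplifications rather than the model's actual equations.

```python
def opponent_update(w, pre, post, da, receptor, lr=0.1):
    """Three-factor weight update with a receptor-dependent sign (hypothetical sketch).

    D1-dominant units are strengthened by bursts (da > 0) and weakened by dips;
    D2-dominant units show the reverse. Weights are clipped to [0, 1] (an assumption).
    """
    sign = 1.0 if receptor == "D1" else -1.0
    w_new = w + lr * sign * da * pre * post
    return min(1.0, max(0.0, w_new))


def cem_output(on, off):
    """Net evidence for a US in one valence pathway: on minus off, floored at zero."""
    return max(0.0, on - off)


print(opponent_update(0.5, 1.0, 1.0, +0.5, "D1"))  # burst strengthens a D1 unit
print(opponent_update(0.5, 1.0, 1.0, -0.5, "D2"))  # dip strengthens a D2 unit
print(opponent_update(0.5, 1.0, 1.0, +0.5, "D2"))  # burst weakens a D2 unit
print(cem_output(0.7, 0.2))
```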

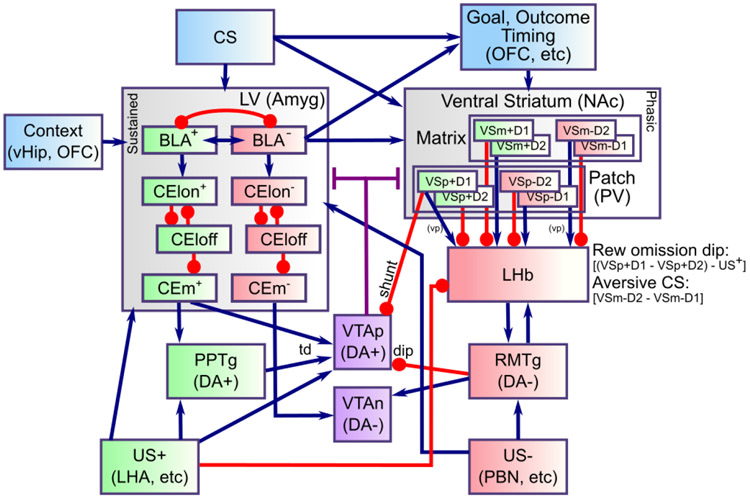

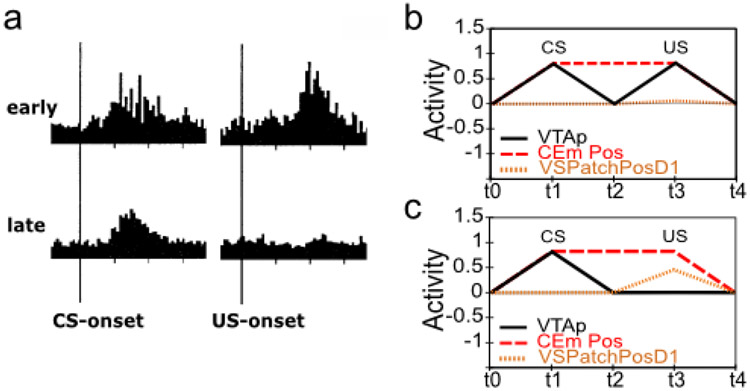

Figure 2:

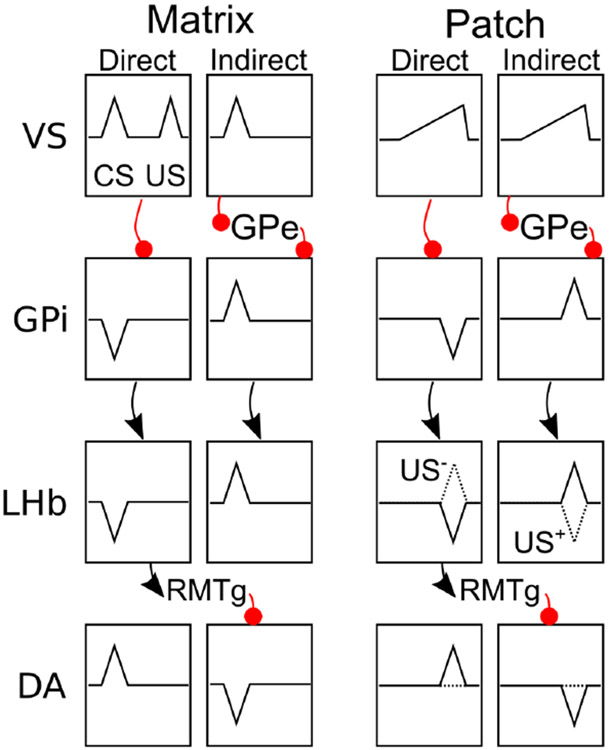

Detailed components of PVLV, showing the opponent processing pathways within the PV and LV systems, which separately encode the strength of support for and against each US, and with opposite dynamics for appetitive versus aversive valence. BLA has pathways for appetitive and aversive USs, along with distinctions between acquisition and extinction learning, all of which engage in broad inhibitory competition. The BLA projects to central amygdala (CEl, CEm) neurons that integrate the evidence for-and-against a given US, and communicate this net value to the VTA (and SNc, not shown). The ventral striatum (VS) has matrix and patch subsystems, where matrix (VSm) receives modulatory inputs from corresponding BLA neurons and represents CSs in a phasic manner, and patch (VSp) anticipates and cancels USs. Both have a full complement of opposing D1- and D2-dominant pathways, which have opposing effects for appetitive versus aversive USs.

The VS (PV) system is likewise organized according to standard D1 vs. D2 pathways, within the US-coding Patch areas and the CS-coding Matrix areas, again with separate pathways for appetitive vs. aversive, with the sign of D1 vs. D2 effects flipped as appropriate. For example, VSpatch aversive-pathway D2 neurons learn from unexpected aversive outcomes, and thereby learn to anticipate such outcomes. The complementary D1 pathway there learns from any dopamine bursts associated with the non-occurrence of these aversive outcomes, such that the balance between these pathways reflects the net expectation of the aversive outcome. Figure 2 shows how each VS pathway sends a corresponding net excitation or inhibition to the LHb (via a pallidal pathway), with excitation of the LHb causing inhibition of VTA / SNc tonic firing via the RMTg (rostromedial tegmental nucleus — in our model, we combine the LHb and RMTg into a single functional unit).

In addition, the VSpatch D1 appetitive pathway sends direct shunting inhibition to these midbrain dopamine areas to block excitatory firing from expected USs. Although this pathway may seem redundant with the LHb inhibition, the differential timing of these two functions motivates the need for separate mechanisms. On the one hand, completely inhibiting a burst requires an input that arrives at least slightly before the time of reward; otherwise, at least a little activity will necessarily occur on the front end. On the other hand, an omission-signaling input (for pausing) cannot arrive until at least slightly after the expected time of reward, because an agent can determine that an expected event did not occur only once the time it was expected has passed, and computing and transmitting the omission signal takes some finite amount of time. Indeed, omission pauses are empirically seen to have greater latency than corresponding bursts.
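The timing argument can be sketched in discrete time. In the toy below, a VSpatch shunt of size `expected` must already be active at the US step to cancel the burst, while the LHb omission signal is only evaluated `omission_lag` steps after the expected US and is offset if the US actually arrived. Step indices, parameter names, and the all-or-none shunt are hypothetical simplifications; real shunting would be graded in continuous time.

```python
def pv_dopamine_trace(expected, us_present, us_step=5, shunt_onset=4,
                      omission_lag=2, n_steps=10):
    """Discrete-time toy of VSpatch shunting vs. LHb-mediated omission pauses.

    Hypothetical sketch. A US of size 1 arrives at us_step if us_present. VSpatch
    shunting (size = expected) is active from shunt_onset through us_step; the LHb
    omission signal is evaluated omission_lag steps after us_step.
    """
    trace = []
    for t in range(n_steps):
        us = 1.0 if (us_present and t == us_step) else 0.0
        shunt = expected if shunt_onset <= t <= us_step else 0.0
        burst = max(0.0, us - shunt)
        dip = 0.0
        if t == us_step + omission_lag:
            # Omission is only detectable after the expected time; a delivered US offsets it
            dip = max(0.0, expected - (1.0 if us_present else 0.0))
        trace.append(burst - dip)
    return trace


print(pv_dopamine_trace(1.0, True))                  # expected and delivered: flat
print(pv_dopamine_trace(1.0, False))                 # omitted: delayed pause at step 7
print(pv_dopamine_trace(1.0, True, shunt_onset=6))   # shunt too late: burst leaks at step 5
```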

Finally, apropos of the asymmetries between appetitive and aversive conditioning discussed above, the two differ in several respects in the model. For example, appetitive, but not aversive, pathways in the amygdala can directly drive dopamine burst firing, consistent with our overall hypothesis (and extant data) that the LHb is exclusively responsible for driving all phasic pausing in dopamine cell firing. This has an important functional implication: it allows the amygdala dopamine pathway to be positively rectified, i.e., it only reports when the amygdala estimates the current situation to be better than the preceding one. Furthermore, as suggested by the available empirical data (Matsumoto & Hikosaka, 2009a), the extent to which VSpatch expectancy representations can block dopamine pauses associated with expected aversive outcomes is significantly less than their ability to block bursts for expected appetitive outcomes.

Differences From Previous Versions of PVLV

The present model represents a significant elaboration and refinement of the PVLV framework since our prior publication (Hazy et al., 2010), as briefly summarized here:

Earlier versions of PVLV included only a central nucleus amygdalar component (CEA; formerly CNA). In the current version we have added a basolateral amygdalar complex (BLA), which serves as a primary site for CS-US pairing during acquisition (acquisition-coding cells) and, critically, for the pairing of CSs with the non-occurrence of expected USs (extinction-coding cells). This addition is especially important for accounting for extinction-related phenomena, which reflect the idea that extinction is an additional layer of learning and not just the unlearning (weakening) of acquisition learning. Importantly, it also underlies the ability of the current version to account for the differential sensitivity of extinction to context (see simulation 2b).

Earlier versions of PVLV treated the inhibitory PV component as unitary, with no distinction between a shunting effect onto dopamine cells that prevents bursting at the time of expected rewards and the pausing effect that occurs when expected rewards are omitted. Since then, it has been established that the LHb plays a critical role in the latter phenomenon and may serve as the sole substrate responsible for producing pauses in dopamine cell firing, whatever their cause. Accordingly, the new version adds an LHb component, which receives disynaptic collaterals from the same VSpatch cells that provide direct shunting inhibition onto dopamine cells. These collaterals result in net excitatory inputs onto LHb cells. Critically, the LHb also receives direct (excitatory) inputs for aversive USs, as well as net inhibitory inputs associated with both rewarding outcomes and expectations of reward. The LHb component is important for producing the dissociation between shunting inhibition and overt pauses; it also enables the new model to produce (modest) disinhibitory positive dopamine signals at the time of expected-but-omitted punishment (see simulation 4b).
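A minimal sketch of how the LHb could integrate these inputs at the time of an expected outcome follows. It assumes that `reward_expect` stands for the VSpatch collateral input (net excitatory, per the text), that aversive expectations reach the LHb with a flipped (inhibitory) sign that only partly cancels aversive USs (`aversive_block < 1`, reflecting the weaker blocking of expected punishments), and that negative LHb drive yields a small disinhibitory burst. The gains and function name are hypothetical, and direct US excitation of dopamine cells and VSpatch shunting are deliberately left out.

```python
def lhb_dopamine(reward_us, reward_expect, aversive_us, aversive_expect,
                 aversive_block=0.5, disinhibit_gain=0.3):
    """LHb-mediated dopamine component at the time of an expected outcome.

    Hypothetical sketch. Positive LHb drive yields a pause (negative value) via the
    combined LHb/RMTg unit; negative drive yields a small disinhibitory burst.
    """
    # Excitatory inputs: VSpatch reward-expectation collaterals and aversive USs
    excite = reward_expect + aversive_us
    # Inhibitory inputs: actual rewards, plus aversive expectations (assumed
    # sign-flipped) that only partly cancel aversive USs
    inhibit = reward_us + aversive_block * aversive_expect
    drive = excite - inhibit
    if drive > 0:
        return -drive
    return disinhibit_gain * max(0.0, -drive)


print(lhb_dopamine(0, 1, 0, 0))  # expected reward omitted: full pause
print(lhb_dopamine(1, 1, 0, 0))  # expected reward delivered: no LHb contribution
print(lhb_dopamine(0, 0, 1, 1))  # expected punishment delivered: residual pause
print(lhb_dopamine(0, 0, 0, 1))  # expected punishment omitted: modest burst
```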

Like TD, and RPE models generally, earlier versions of PVLV really only contemplated the appetitive context, i.e., the occurrence and omission of positively valenced reward; they largely ignored learning in aversive contexts (e.g., fear conditioning). In the current version, additional complementary channels for appetitive vs. aversive processing (and associated learning) have been incorporated throughout the model, with their convergence occurring only at two distinct sites where population coding is largely, but not exclusively, unitary: 1) the LHb (which projects to the VTA/SNc); and 2) the dopamine cells themselves in the VTA/SNc. Incorporating aversive processing channels alongside appetitive ones is important for demonstrating that the core idea underlying the DA-RPE theory can survive the integration of all these parallel processing pathways and their significant convergence onto most dopamine cells. This extension enabled the current PVLV version to simulate basic aspects of aversive conditioning (see simulation 4a,b), and provides a richer, more accurate account of conditioned inhibition.

Also like TD and RPE, earlier versions of PVLV treated reward as a single scalar value throughout the model without distinguishing between different kinds of reward (or punishment), e.g., food vs. water, or shock vs. nausea. By representing different kinds of reward separately in both the amygdala and ventral striatum, learning in the current version of PVLV can also produce separate expectancy representations about different rewards. This provides a direct mechanism that can help account for the phenomenon of unblocking-by-identity (e.g., see simulation 3a).
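The contrast between a single scalar value and US-specific expectancies can be illustrated with standard Rescorla-Wagner learning, which is used here purely as a stand-in and is not the PVLV learning rule. CS A is first paired with food; then the compound A+B is paired with water of equal value. With one scalar channel, A already predicts the full value, so B is blocked; with separate food and water channels, the water channel produces a prediction error and B acquires a water association. The food channel's negative error on compound trials also drives B's food weight negative, which in this toy simply plays the role of evidence against food.

```python
def rw_train(trials, n_cs, n_us, lr=0.2):
    """Rescorla-Wagner learning with one weight per (CS, US) pair.

    trials: list of (active_cs_indices, us_vector). With n_us == 1 this reduces
    to the usual scalar-value model.
    """
    w = [[0.0] * n_us for _ in range(n_cs)]
    for active, us in trials:
        for j in range(n_us):
            error = us[j] - sum(w[i][j] for i in active)  # US-specific prediction error
            for i in active:
                w[i][j] += lr * error
    return w


A, B = 0, 1
# Phase 1: A -> food. Phase 2: A+B -> water of equal value.
scalar = rw_train([([A], [1.0])] * 30 + [([A, B], [1.0])] * 30, n_cs=2, n_us=1)
identity = rw_train([([A], [1.0, 0.0])] * 30 + [([A, B], [0.0, 1.0])] * 30,
                    n_cs=2, n_us=2)
print(round(scalar[B][0], 3))    # B is blocked: the value-only error is near zero
print(round(identity[B][1], 3))  # B acquires a water-specific association
```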

Overview of Remainder of Paper

The next two sections examine first the neurobiology that constrains various aspects of the PVLV framework, and then the actual computational implementation of the model. After that, the Results section describes and discusses twelve simulations that cover several well-established Pavlovian conditioning phenomena and, especially, serve to highlight the most important features of the overall framework. The paper concludes with a General Discussion in which we highlight the main contributions of the PVLV framework, compare our approach with others in the literature, and identify several unresolved questions for future research.

Neurobiological Substrates and Mechanisms

In this section, we provide a neurobiological-level account of the computational model outlined above, followed in the subsequent section by a computationally-focused description. To that end, we provide a selective review of salient biological and behavioral data most influential in informing the overall framework, and we focus specifically on data that go beyond the foundations covered in earlier papers (O’Reilly et al., 2007; Hazy et al., 2010).

The Amygdala: Anatomy, Connectivity, & Organization

The amygdala is composed of a dozen or so distinct nuclei and/or subareas (Amaral, Price, Pitkanen, & Carmichael, 1992), each of which can exhibit several subdivisions (McDonald, 1992). Despite such anatomical complexity, however, the literature has largely conceptualized amygdalar function in terms of two main components: a deeper/inferior basolateral amygdalar complex (BLA) more involved in the processing of inputs; and a more superficial/superior central amygdalar nucleus (CEA) that has long been implicated in driving many of the more primitive manifestations of emotional expression (changes in heart rate, breathing, blood pressure; freezing, and so on; Figure 3a). BLA and CEA each contain glutamatergic and GABAergic cells (including both local interneurons and projection neurons), with considerable topographic patchiness in their relative proportions; for example, the lateral segment of the CEA (CEl) seems to be almost exclusively GABAergic. Importantly, the amygdala is richly innervated by all four neuromodulatory systems, including a dense, heterogeneously distributed dopaminergic projection (Amaral et al., 1992; Fallon & Ciofi, 1992). Both main classes of dopamine receptors (D1-like, D2-like) are richly expressed, although not homogeneously (Bernal, Miner, Abayev, Kandova, Gerges, Touzani, Sclafani, & Bodnar, 2009; de la Mora, Gallegos-Cari, Arizmendi-García, Marcellino, & Fuxe, 2010; de la Mora, Gallegos-Cari, Crespo-Ramirez, Marcellino, Hansson, & Fuxe, 2012; Lee, Kim, Kwon, Lee, & Kim, 2013).

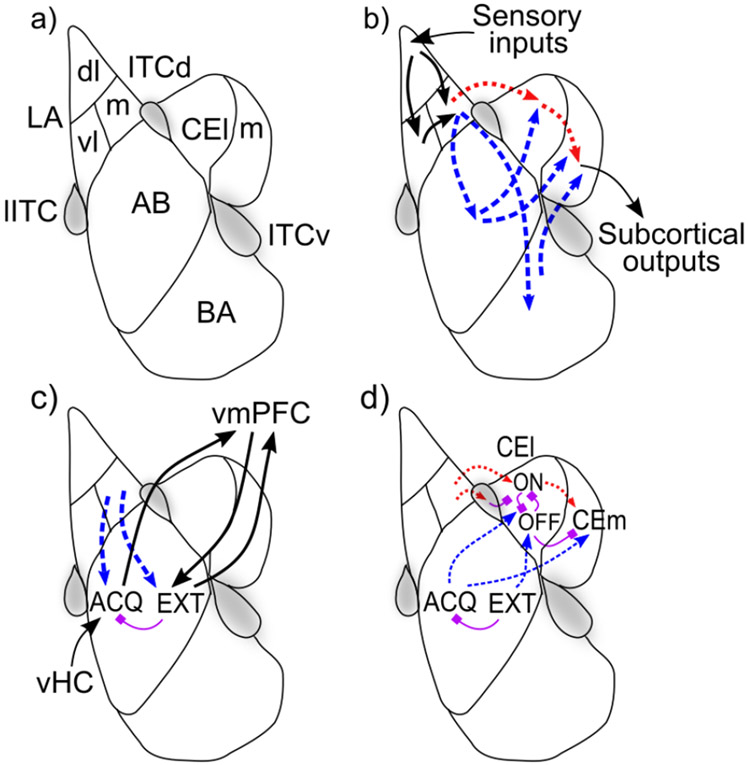

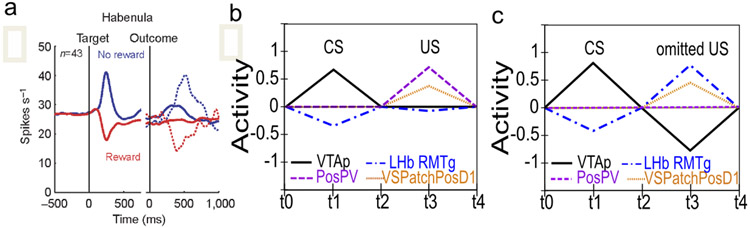

Figure 3:

Basic organization, information flow, and opponent processing in the amygdala. a) Schematic diagram of a coronal section of a unilateral amygdala, with the most prominent nuclei outlined according to one common scheme. The BLA is composed of the lateral (LA), basal (BA), and accessory basal (AB) nuclei. The central nucleus is composed of lateral (CEl) and medial (CEm) segments. Three collections of GABAergic cells make up the intercalated cell masses (ITCs): the lateral paracapsular (lITC), dorsal (ITCd), and ventral (ITCv). b) Basic information flow through the amygdala: sensory information enters via the LA, predominantly flowing from the dorsolateral (LAdl) to the ventrolateral (LAvl) and medial (LAm) divisions. From there, two parallel pathways reach the central amygdala: 1) directly from LA to CEA (via CEl) (red dotted arrows); and 2) via the basal (BA) and accessory basal (AB) nuclei (blue dashed arrows). c) Opponent processing in the BLA following the scheme of Herry et al., 2008: acquisition-coding cells (ACQ) receive context inputs from the ventral hippocampus (vHC) and project to the ventromedial PFC, which connects reciprocally with extinction-coding cells (EXT) in the BLA, with the vmPFC providing additional context information relevant for extinction. d) Opponent processing in the CEl following the scheme of Pare & Duvarci, 2012, with CElON = acquisition and CElOFF = extinction.

Figure 3 shows the major areas and connectivity. The BLA receives dense afferents from much of the cerebral cortex, including the higher areas in all sensory modalities, as well as associative and affective cortex, and from corresponding thalamic nuclei and subcortical areas (Pitkanen, 2000; Doyère, Schafe, Sigurdsson, & LeDoux, 2003; LeDoux, 2003; Uwano, Nishijo, Ono, & Tamura, 1995). The lateral nucleus (LA) receives the preponderance of sensory input, preferentially into its dorsolateral division (Pitkanen, 2000), and projects to CEA both directly and indirectly via the basal and accessory basal nuclei (Pitkanen, 2000). The basal and accessory basal nuclei exhibit extensive local and contralateral interconnectivity, and also send feedback projections to two of the divisions of the LA (Pitkanen, 2000), whereas the LA has relatively little local or contralateral interconnectivity. The BLA also projects heavily to the ventral striatum and to much of the cortical mantle (Amaral et al., 1992; Pitkanen, 2000), including a strong reciprocal interconnection with the orbitofrontal cortex (OFC; Schoenbaum, Chiba, & Gallagher, 1999; Ongür & Price, 2000) and parts of ventromedial prefrontal cortex including the anterior cingulate cortex (ACC; Ongür & Price, 2000). Based on neural recording studies, there seems to be little discernible local topographical organization of different cell responses in the BLA (i.e., a salt-and-pepper distribution; Herry et al., 2008; Maren, 2016), with one notable exception: a recently described positive-negative valence gradient in a posterior-to-anterior direction (Kim, Pignatelli, Xu, Itohara, & Tonegawa, 2016).

The CEA can be functionally divided into medial (CEm) and lateral (CEl) segments (Figure 3a), with the CEl exerting a tonic inhibitory influence on the CEm that, when released, performs a kind of gating function for CEm outputs analogous to that seen in the basal ganglia. Both CEl and, especially, CEm send efferents to subcortical visceromotor areas (autonomic processing) as well as to certain primitive motor effector sites involved in such affective behaviors as freezing (Koo, Han, & Kim, 2004; Veening, Swanson, & Sawchenko, 1984; Li et al., 2013). Importantly, among the subcortical efferents from CEm are projections to the VTA/SNc, both directly, and via the pedunculopontine tegmental nucleus (PPTg; Everitt, Cardinal, Hall, & Parkinson, 2000; Fudge & Haber, 2000), and stimulation of the CEm has been shown to drive phasic dopamine cell bursting and/or dopamine release in downstream terminal fields (Rouillard & Freeman, 1995; Fudge & Haber, 2000; Ahn & Phillips, 2003; Stalnaker & Berridge, 2003; see Hazy et al. (2010) for detailed discussion). The CEA also receives broad cortical and thalamic afferents directly (Amaral et al., 1992; Pitkanen, 2000); these direct inputs are presumably responsible for the result that the CEA can support first-order Pavlovian conditioning independent of the BLA (Everitt et al., 2000).

Division-of-Labor Between BLA and CEA: Analogy With the Cortical – Basal Ganglia System

In addition to the long-held view of basic amygdalar organization that posits the BLA as the input side and the CEA as the output side, we also embrace emerging ideas (e.g., Duvarci & Pare, 2014; Holland & Schiffino, 2016) that posit that the two areas may have distinct functional roles analogous to the distinction between those of the cortex (i.e., BLA) and the basal ganglia (CEA; Figure 1). The BLA has long been described as cortex-like (McDonald, 1992), while the CEA is more basal-ganglia-like, particularly its lateral segment (CEl), whose principal cells bear a strong resemblance to the medium spiny neurons (MSNs) of the neostriatum, with which it is contiguous laterally (McDonald, 1992; Cassell et al., 1999). Thus, one can think of the BLA as computing complex, high-dimensional representations of current states of the world (including both external and internal components) that are anchored by expectations about the imminent occurrence of specific USs; in contrast, the CEA involves simpler, low-dimensional representations about particular primitive actions to be taken based on those US-anchored anticipatory states (e.g., fear, food anticipation). Both BLA and CEA subserve both input and output roles and function partially in parallel as well as serially, with a major distinction between their output projections. The BLA projects to neocortex and basal ganglia (especially ventral striatum) and exerts a more modulatory effect, while the CEA projects almost exclusively to subcortical areas (excluding the basal ganglia) and is a strong driver of subcortical visceromotor and primitive motor effectors.

Electrophysiological recording shows that BLA neurons exhibit a wide range of selectivity to different CSs, USs, and contexts (Muramoto, Ono, Nishijo, & Fukuda, 1993; Ono et al., 1995; Toyomitsu, Nishijo, Uwano, Kuratsu, & Ono, 2002; Herry et al., 2008; Johansen, Hamanaka, Monfils, Behnia, Deisseroth, Blair, & LeDoux, 2010a; Johansen, Tarpley, LeDoux, & Blair, 2010b; Repa, Muller, Apergis, Desrochers, Zhou, & LeDoux, 2001; Roesch, Calu, Esber, & Schoenbaum, 2010; Beyeler, Namburi, Glober, Simonnet, Calhoon, Conyers, Luck, Wildes, & Tye, 2016). By adulthood, a significant proportion of the principal cells in both BLA and CEA appear to stably represent specific kinds of primary rewards and punishments and do not undergo significant change thereafter. For example, discriminative- and reversal-learning experiments have shown that CS-US associative pairings can undergo rapid remapping when environmental contingencies change, leaving the underlying US-specific representational scheme intact (Schoenbaum et al., 1999). A simple model for Pavlovian conditioning is that previously neutral CSs acquire the ability to activate these US-coding cells by strengthening the synapses they send to them (Muramoto et al., 1993; Ono et al., 1995; Toyomitsu et al., 2002). More recent studies examining larger population-level samples suggest that learning in the BLA is complex, high-dimensional, and distributed, consistent with a cortex-like system (Beyeler et al., 2016; Grewe, Gründemann, Kitch, Lecoq, Parker, Marshall, Larkin, Jercog, Grenier, Li, Lüthi, & Schnitzer, 2017). Nevertheless, the essential function of BLA in linking CSs and USs remains a useful overarching model.

In addition to a strong US-anchored organization for amygdala representations, there are also cells in both BLA and CEA that reflect evidence against the imminent occurrence of particular US outcomes. For example, Herry et al. (2008) showed that a distinct set of BLA neurons progressively increased in activity in response to CS-onset over multiple US omission trials (extinction training), in contrast with those (acquisition-coding) neurons that had acquired activity in response to CS-onset during fear acquisition. Similarly, Ciocchi et al. (2010) showed opponent coding of aversive US presence versus absence in separate populations of CElON versus CElOFF neurons. These CEl neurons are exclusively GABAergic and have mutually inhibitory connections, producing a direct opponent-processing dynamic. This pattern of opponent organization, which is one of two core computational principles in our model, is essential for supporting extinction learning from the absence of expected USs, and also for probabilistic learning paradigms (Esber & Haselgrove, 2011; Fiorillo, Tobler, & Schultz, 2003).
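The mutually inhibitory CElON vs. CElOFF dynamic can be sketched with two rectified rate units. In the toy below, each unit's input is its own drive minus `w_inh` times the other unit's activity, and the system is integrated with forward Euler; the inhibition strength, time step, and function name are hypothetical. With inhibition this strong, the unit with the larger drive suppresses the other, so the pair reports which side of the evidence currently dominates.

```python
def settle_opponents(drive_on, drive_off, w_inh=1.5, dt=0.1, steps=200):
    """Two rate units with mutual inhibition, integrated with forward Euler.

    Hypothetical sketch of CEl ON/OFF competition: each unit's input is its own
    drive minus w_inh times the other unit's activity, rectified at zero.
    """
    on = off = 0.0
    for _ in range(steps):
        # Inputs use both units' previous values (synchronous update)
        in_on = max(0.0, drive_on - w_inh * off)
        in_off = max(0.0, drive_off - w_inh * on)
        on += dt * (in_on - on)
        off += dt * (in_off - off)
    return on, off


print(settle_opponents(1.0, 0.6))  # ON wins; OFF is suppressed
print(settle_opponents(0.6, 1.0))  # OFF wins; ON is suppressed
```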

Extinction Learning and the Role of Context

Considerable behavioral data strongly supports the idea that extinction learning is particularly sensitive to changes in both external and internal context, and that areas in the vmPFC play an important role in contextualizing extinction learning (Quirk, Likhtik, Pelletier, & Paré, 2003; Laurent & Westbrook, 2010). Further, Herry et al. (2008) looked specifically at the connectivity of extinction-coding versus acquisition-coding cells in the BLA and found that only the former receive connections from vmPFC. This has been incorporated into the PVLV framework in the form of contextual inputs to the model that connect exclusively to the extinction-coding layers of the BLA. Somewhat surprisingly, Herry et al. (2008) also reported that hippocampal inputs to the BLA (long implicated in conditioned place preference and aversion) connected only with acquisition-coding cells; this rather paradoxical situation is discussed in the General Discussion, in a section on the role and nature of context representations. In essence, it is hard to avoid the conclusion that the hippocampus and vmPFC must convey distinctly different forms of context information to the amygdala. Simulation 2b in the Results section explores the differential context sensitivity of extinction versus acquisition learning.
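Why routing context only into extinction-coding units makes extinction, but not acquisition, context-sensitive can be seen in a small renewal-style toy: acquire in context A, extinguish in context B, then test in both. The sketch treats acquisition weights as context-independent for simplicity and gives each context its own extinction weight, gated by the acquisition expectation; the names and learning rule are hypothetical and this is not the Simulation 2b implementation.

```python
def renewal_test(lr=0.3, n_acq=20, n_ext=20):
    """Acquire in context A, extinguish in context B, then test in A and B.

    Hypothetical sketch: acquisition weights ignore context (a simplification),
    while extinction weights come only from context inputs.
    """
    acq = 0.0
    ext = {"A": 0.0, "B": 0.0}  # context -> extinction-coding weight
    for _ in range(n_acq):      # CS + US trials in context A
        acq += lr * (1.0 - acq)
    for _ in range(n_ext):      # CS-alone trials in context B
        # Extinction learning is gated by the acquisition expectation
        ext["B"] += lr * acq * (1.0 - ext["B"])
    # Net US expectation to the CS in each test context
    return {ctx: max(0.0, acq - w) for ctx, w in ext.items()}


print(renewal_test())  # expectation returns in A but stays near zero in B
```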

There are likely differential contributions of the BLA vs. CEA to extinction learning, in part due to the greater innervation of BLA by contextual inputs. For example, limited evidence suggests that the CEA may not be able to support extinction learning by itself and instead depends on learning in the BLA (Falls, Miserendino, & Davis, 1992; Lu, Walker, & Davis, 2001; Lin, Yeh, Lu, & Gean, 2003; Quirk & Mueller, 2008; Zimmerman & Maren, 2010). However, muscimol inactivation of BLA at different stages of extinction learning demonstrates that extinction can persist in the absence of BLA activation (Herry et al., 2008). Although not currently implemented in PVLV, this can potentially be explained in terms of BLA driving learning in vmPFC which can in turn drive extinction via direct projections into CEA (e.g., Anglada-Figueroa & Quirk, 2005). Finally, the intercalated cells (ITCs) have been widely discussed as suppressing fear expression under various circumstances (Royer, Martina, & Paré, 1999; Marowsky, Yanagawa, Obata, & Vogt, 2005; Likhtik, Popa, Apergis-Schoute, Fidacaro, & Paré, 2008; Ehrlich, Humeau, Grenier, Ciocchi, Herry, & Luthi, 2009; Maier & Watkins, 2010; Pare & Duvarci, 2012). However, some conflicting data has emerged in this regard (Adhikari, Lerner, Finkelstein, Pak, Jennings, Davidson, Ferenczi, Gunaydin, Mirzabekov, Ye, Kim, Lei, & Deisseroth, 2015). Nonetheless, it seems likely that ITCs participate somehow in the opponent-processing scheme for acquisition vs. extinction coding in the amygdala. Their role is currently subsumed within the basic extinction-coding function in PVLV and not explicitly modeled.

Dopamine Modulation of Acquisition Versus Extinction Learning

Dopamine has been shown to be important for plasticity induction in the amygdala (Bissière, Humeau, & Lüthi, 2003; Andrzejewski, Spencer, & Kelley, 2005). While the other three neuromodulatory systems (ACh, NE, 5-HT) are undoubtedly important (e.g., Carrere & Alexandre, 2015), they are not currently included in the PVLV framework. There are both D1-like and D2-like receptors in the BLA (de la Mora et al., 2010), and blocking D2 receptors in the BLA impaired acquisition of fear learning, reducing conditioned responses to a CS such as freezing (Guarraci, Frohardt, Falls, & Kapp, 2000; LaLumiere, Nguyen, & McGaugh, 2004) and fear-potentiated startle (Nader & LeDoux, 1999; de Oliveira, Reimer, de Macedo, de Carvalho, Silva, & Brandão, 2011). Similarly, Chang, Esber, Marrero-Garcia, Yau, Bonci, and Schoenbaum (2016) reported that optogenetically driven pauses in DA firing produce effects consistent with aversive conditioning, while antagonism of D1 receptors blocked fear extinction (Hikind & Maroun, 2008). In the positive valence domain, antagonism of D1 receptors in the amygdala attenuated the ability of a cue paired with cocaine to reinstate conditioned responding (Berglind, Case, Parker, Fuchs, & See, 2006). Similarly consistent D1 and D2 receptor effects have been documented in CEl as well (De Bundel, Zussy, Espallergues, Gerfen, Girault, & Valjent, 2016).

Extending the results and model of Herry et al. (2008), the PVLV framework accounts for the differential learning of acquisition versus extinction cells in the BLA (and acquisition only in CEl) in terms of a 2 X 2 matrix of valence X dopamine receptor dominance. For example, acquisition for appetitive Pavlovian conditioning is trained by (appetitive) US occurrence and modulated by phasic dopamine bursting effects on D1-expressing positive US-coding cells, while extinction learning is mediated by phasic dopamine pausing effects on corresponding D2-expressing cells. Conversely, aversive acquisition is trained by (aversive) US occurrence and phasic dopamine pausing at D2-expressing, negative US-coding cells, while aversive extinction is mediated by phasic dopamine bursting at corresponding D1-expressing cells. Considerable circumstantial, but not yet direct, evidence supports something like this basic 2 X 2 framework.
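The 2 X 2 scheme can be summarized as a simple sign rule: the same phasic dopamine signal strengthens CS inputs onto D1-dominant cells when it is a burst and onto D2-dominant cells when it is a pause. The Python sketch below is a minimal illustration under that assumption. The role labels, the function names (`weight_change`, `strengthened_roles`), and the learning rate are hypothetical, and the US-driven teaching input that also trains acquisition cells is omitted.

```python
# Hypothetical sketch of the 2 X 2 valence X receptor scheme; role labels and the
# simple sign rule are illustrative assumptions, not code from the PVLV model.
ROLES = {
    ("positive", "D1"): "appetitive acquisition",
    ("positive", "D2"): "appetitive extinction",
    ("negative", "D2"): "aversive acquisition",
    ("negative", "D1"): "aversive extinction",
}


def receptor_sign(receptor: str) -> float:
    """D1-dominant cells are strengthened by bursts, D2-dominant cells by pauses."""
    return 1.0 if receptor == "D1" else -1.0


def weight_change(receptor: str, da: float, cs_input: float, lrate: float = 0.1) -> float:
    """Dopamine-modulated change in a CS input weight (da > 0 burst, da < 0 pause)."""
    return lrate * receptor_sign(receptor) * da * cs_input


def strengthened_roles(da: float) -> list[str]:
    """Cell types whose active CS inputs would be strengthened by this dopamine signal."""
    return sorted(
        role
        for (_valence, receptor), role in ROLES.items()
        if weight_change(receptor, da, cs_input=1.0) > 0
    )


print(strengthened_roles(+1.0))  # ['appetitive acquisition', 'aversive extinction']
print(strengthened_roles(-1.0))  # ['appetitive extinction', 'aversive acquisition']
```

A single signed dopamine term thus drives all four cell types, with the receptor type alone determining whether a burst or a pause is the effective teaching signal.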

As noted earlier, the relative timing of phasic dopamine effects is critical for our model, to prevent CS-driven bursts from reinforcing themselves. Behaviorally, it has long been recognized that excitatory Pavlovian conditioning does not generally occur at CS-US interstimulus intervals (ISIs) of less than approximately 50 msec (Schneiderman, 1966; Smith, 1968; Smith et al., 1969; Mackintosh, 1974; Schmajuk, 1997), and that it becomes progressively weaker and more difficult at ISIs exceeding 500 msec or so, although the optimal ISI varies a great deal across different CRs and can extend to several seconds for some (Mackintosh, 1974). Importantly, virtually all of the evidence bearing on optimal ISIs appears to involve the delay conditioning paradigm, in which the CS remains on until the time of US onset; this fosters stronger and/or more reliable conditioning relative to trace paradigms, in which there is a gap between CS offset and US onset. Although not in the amygdala, recent optogenetic studies have documented a temporal window of roughly 50-2000 msec after striatal MSN activity during which phasic dopamine can be effective in inducing synaptic plasticity, which serves as a proof of concept for this kind of timing constraint (Yagishita, Hayashi-Takagi, Ellis-Davies, Urakubo, Ishii, & Kasai, 2014; Fisher, Robertson, Black, Redgrave, Sagar, Abraham, & Reynolds, 2017).
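One way to express such a timing constraint is as an eligibility window: a dopamine event can modify only those synapses whose inputs became active within a bounded interval before it. The sketch below assumes a hard window whose defaults (50 and 2000 msec) are borrowed from the striatal findings above. It is illustrative only and does not reproduce how PVLV itself schedules learning. Note that a dopamine signal coincident with an input's onset, or arriving within the minimum delay, leaves that input unchanged.

```python
# Hypothetical eligibility window for dopamine-modulated plasticity. The default
# bounds echo the ~50-2000 msec window reported for striatal MSNs; they are
# illustrative assumptions, not parameters of the amygdala or of PVLV.
MIN_DELAY_MS = 50.0
MAX_DELAY_MS = 2000.0


def is_eligible(pre_time_ms: float, da_time_ms: float,
                min_delay_ms: float = MIN_DELAY_MS,
                max_delay_ms: float = MAX_DELAY_MS) -> bool:
    """True if a dopamine event can modify a synapse whose input became active earlier."""
    delay = da_time_ms - pre_time_ms
    return min_delay_ms <= delay <= max_delay_ms


def eligible_inputs(onsets_ms: dict[str, float], da_time_ms: float) -> list[str]:
    """Names of inputs whose onset falls inside the window preceding the dopamine event."""
    return sorted(name for name, t in onsets_ms.items() if is_eligible(t, da_time_ms))


# A CS at 0 msec and a US at 500 msec: the US-time dopamine event can reinforce the CS,
# but not an input that began only 20 msec earlier, the US itself, or a long-past cue.
onsets = {"CS": 0.0, "late_cue": 480.0, "US": 500.0, "old_cue": -3000.0}
print(eligible_inputs(onsets, da_time_ms=500.0))  # ['CS']
```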

Amygdala-Driven Phasic Dopamine and the PPTg

The medial segment of the central amygdalar nucleus (CEm) has been shown to project to the midbrain dopamine nuclei both directly (Wallace, Magnuson, & Gray, 1992; Fudge & Haber, 2000) and indirectly via the pedunculopontine tegmental nucleus (PPTg; Takayama & Miura, 1991; Wallace et al., 1992; Fudge & Haber, 2000), and stimulation of the CEm has been shown to produce bursting of dopamine cells (Rouillard & Freeman, 1995; Fudge & Haber, 2000; Ahn & Phillips, 2003). It seems likely that the PPTg pathway (along with its functionally-related neighbor the laterodorsal tegmental nucleus, LDTg) plays a particularly important role in bursting behavior (e.g., Floresco, West, Ash, Moore, & Grace, 2003; Lodge & Grace, 2006; Omelchenko & Sesack, 2005; Pan & Hyland, 2005; Grace, Floresco, Goto, & Lodge, 2007), via direct efferents to the VTA and SNc (Watabe-Uchida, Zhu, Ogawa, Vamanrao, & Uchida, 2012). The PPTg and LDTg are located in the brainstem near the substantia nigra and both have additionally been implicated in a disparate set of functions including arousal, attention, and aspects of motor output (Redila, Kinzel, Jo, Puryear, & Mizumori, 2015). The PPTg projects preferentially to the SNc while the LDTg projects more to the VTA (Watabe-Uchida et al., 2012).

Both the PPTg and LDTg contain glutamatergic, GABAergic, and cholinergic cells (Wang & Morales, 2009), and all appear to be involved in the projection to the dopamine nuclei, although the specific functions assignable to each remain poorly characterized (Lodge & Grace, 2006). Recently, subpopulations of cells in PPTg have been shown to code separately for primary rewards and their predictors, and it has been suggested that the PPTg may play a key role in calculating RPEs (Kobayashi & Okada, 2007; Hazy et al., 2010; Okada, Nakamura, & Kobayashi, 2011; Okada & Kobayashi, 2013). The current PVLV framework implements a non-learning version of this basic idea by having the PPTg compute the positive-rectified temporal derivative of its ongoing excitatory inputs from the amygdala (where the learning occurs). The positive rectification restricts the effect of all amygdala-PPTg input on dopamine cells to positive-only signaling (i.e., bursting).
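The positive-rectified derivative can be written in a few lines. In this hypothetical sketch, amygdala drive is a list of per-time-step activations assumed to be zero before the first step. The function name `pptg_output` is illustrative and not taken from the PVLV code.

```python
def pptg_output(amygdala_drive: list[float]) -> list[float]:
    """Positive-rectified temporal derivative of amygdala input, one value per time step.

    Assumes drive is zero before the first step. Increases in drive pass through;
    decreases are clipped to zero, so this pathway can only signal bursts.
    """
    output = []
    previous = 0.0
    for current in amygdala_drive:
        output.append(max(current - previous, 0.0))
        previous = current
    return output


# Sustained CS-driven amygdala activity yields a single onset transient and no offset dip.
print(pptg_output([0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]))
# [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

Because only changes in drive are passed on, a CS that keeps the amygdala active for several time steps produces one phasic burst at onset rather than a tonic elevation, and the drop in drive at CS offset cannot produce a pause through this pathway.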

Homogeneity and Heterogeneity in Phasic Dopamine Signaling

The midbrain dopamine system consists of a continuous population of dopamine cells generally divided into three groups based on location and connectivity: retrorubral area (RRA; A8; most caudal and dorsal), substantia nigra, pars compacta (SNc; A9), and ventral tegmental area (VTA; A10; most ventromedial; Joel & Weiner, 2000). Early electrophysiological studies emphasized the relative homogeneity of responding to reward-related events, with roughly 75% of identified dopamine cells displaying the now-iconic pattern of burst firing for unexpected rewards and reward-predicting stimuli (e.g., Schultz, 1998). However, it is now clear that there is considerable heterogeneity in response patterns within this broad homogeneity (e.g., Brischoux et al., 2009; Bromberg-Martin et al., 2010b; Lammel et al., 2012; Lammel et al., 2014; Menegas et al., 2015; Menegas et al., 2017; Menegas et al., 2018). For example, a greater proportion of the more laterally situated dopamine cells of the SNc appear to exhibit a reliable, early salience-driven excitatory response irrespective of the valence of the US. In the case of aversive USs, this results in a distinct, biphasic burst-then-pause response pattern (Matsumoto & Hikosaka, 2009a).

Furthermore, Brischoux et al. (2009) described a small subpopulation of putative dopamine cells clustered in the ventrocaudal VTA, in and near the paranigral nucleus, that respond with robust bursting to primary aversive events and were likely not recorded from previously. These authors speculated that the cells might participate in a specialized subnetwork distinct from the preponderance of dopamine cells, based on some older studies reporting that cells in the paranigral nucleus project densely and selectively to the vmPFC and NAc shell (Abercrombie, Keefe, DiFrischia, & Zigmond, 1989; Kalivas & Duffy, 1995; Brischoux et al., 2009). However, some caution is warranted before concluding that these cells are actually dopaminergic, as several studies have now characterized a heterogeneous population of glutamatergic projection cells intermingled throughout the dopamine cell population, including in the VTA, where they are particularly concentrated near the midline (see Morales & Root, 2014, for review). Some of these cells project to the vmPFC and NAc shell, and some respond with excitation to aversive stimuli (Morales & Root, 2014; Root, Mejias-Aponte, Qi, & Morales, 2014; Root, Estrin, & Morales, 2018a). Thus, further studies are needed to confirm that the cells described by Brischoux et al. (2009) are indeed dopaminergic. In any case, these aversively-bursting cells are largely out of scope for the current framework and are included in the model mainly for illustrative purposes; their efferents are not used by any downstream components, for learning or otherwise (see Simulation 4a and related discussion). A possible role for such an aversive-specific subnetwork in the learning of safety signals is discussed in the General Discussion.

The Ventral Striatum

The ventral striatum (VS) is a theoretical construct based on functional considerations. As usually defined, the VS is composed of the entirety of the nucleus accumbens (NAc) as well as ventromedial aspects of the neostriatum (caudate and putamen). The NAc is further subdivided into a core, which is histologically indistinguishable from, and continuous with, ventromedial aspects of the neostriatum (Heimer, Alheid, de Olmos, Groenewegen, Haber, Harlan, & Zahm, 1997), and a shell, which is histologically distinct from the core. The shell is itself internally heterogeneous, composed of multiple subareas that participate in many distinct subnetworks involving primitive processing pathways (Reynolds & Berridge, 2002). For the purposes of the current framework, we focus only on the non-shell aspects of the ventral striatum.

The principal and projecting cells of the striatum are known as medium-spiny neurons (MSNs). By hypothesis, VS MSNs can be partitioned into eight phenotypes according to a 2 X 2 X 2 cubic matrix. The first two axes are identical to those used to partition the principal cells of the amygdala, namely the valence of the US defining the current situation (positive/negative) and the dominant dopamine receptor expressed by the MSN (D1/D2). To these is added a third, orthogonal axis reflecting the compartment of the striatum in which an MSN resides: patch (striosomes) versus matrix (matrisomes). The definitive work identifying this latter compartmental partitioning has been done in the neostriatum (e.g., Gerfen, 1989; Fujiyama, Sohn, Nakano, Furuta, Nakamura, Matsuda, & Kaneko, 2011), but the same subdivisions have been established histologically for the NAc core as well (e.g., Joel & Weiner, 2000; Berendse, Groenewegen, & Lohman, 1992), although the patch and matrix compartments are more closely intermixed in the ventral as compared to the dorsal striatum. Both D1- and D2-expressing MSNs have been shown to reside in both compartments of the neostriatum (Rao, Molinoff, & Joyce, 1991), and individual cells have been found in the VS that code selectively for appetitive or aversive USs (Roitman et al., 2005). Nonetheless, despite the considerable circumstantial evidence, our proposal for partitioning VS MSNs into eight functional phenotypes remains speculative.
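The proposed partitioning is simply the Cartesian product of the three binary axes. The sketch below enumerates the eight hypothesized phenotypes. The role strings paraphrase the patch versus matrix division of labor described in the next paragraph, and all names are illustrative rather than taken from the model.

```python
from itertools import product

# Hypothetical enumeration of the proposed 2 X 2 X 2 VS MSN phenotypes. The
# compartment roles paraphrase the division of labor hypothesized in the text.
VALENCES = ("positive", "negative")
RECEPTORS = ("D1", "D2")
COMPARTMENT_ROLE = {
    "patch": "temporally-specific US expectation",
    "matrix": "immediate report of CSs predicting USs",
}


def vs_phenotypes() -> list[dict[str, str]]:
    """One record per valence X receptor X compartment combination."""
    return [
        {"valence": v, "receptor": r, "compartment": c, "role": COMPARTMENT_ROLE[c]}
        for v, r, c in product(VALENCES, RECEPTORS, COMPARTMENT_ROLE)
    ]


for cell in vs_phenotypes():
    print(f"{cell['compartment']:6} {cell['valence']:8} {cell['receptor']}: {cell['role']}")
```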

The positive / negative valence and D1 / D2 distinctions work essentially the same in VS as described for the amygdala. As noted in the above model overview, we hypothesize that the patch MSNs learn to represent temporally-specific expectations for when specific USs should occur (based largely on external cortical inputs rather than on timing mechanisms intrinsic to the striatum, as hypothesized by Brown et al., 1999). By contrast, matrix MSNs are hypothesized to learn to respond immediately to CS inputs that indicate the possibility of imminent specific USs, producing a gating-like updating signal to OFC and vmPFC areas while simultaneously modulating phasic dopamine via projections to the LHb. The following sections present some key empirical data that motivate this basic division of labor.

VS Patch MSNs Learn Temporally-Specific US Expectations

A strong constraint distinguishing the function of patch versus matrix subtypes comes from studies showing that at least some MSNs in the patch compartment, but not the matrix, synapse directly onto dopamine cells of the VTA and SNc, and this is particularly the case for VS patch cells (Joel & Weiner, 2000; Bocklisch, Pascoli, Wong, House, Yvon, Roo, Tan, & Lüscher, 2013; Fujiyama et al., 2011). Further, it appears that the MSNs that synapse directly onto dopamine cells express D1 receptors (Bocklisch et al., 2013; Fujiyama et al., 2011). Thus, as described in our earlier paper (Hazy et al., 2010) and elsewhere (Houk et al., 1995; Brown et al., 1999; Vitay & Hamker, 2014), D1-expressing MSNs of the VS patch compartment that synapse onto dopamine cells are in a position to prevent bursting of dopamine cells for primary appetitive events (i.e., USs) as these become predictable. This produces a negative feedback loop in which phasic dopamine bursts drive learning in these D1 patch neurons, causing them to inhibit further bursting for expected rewards. This loop corresponds directly to the classic Rescorla-Wagner learning mechanism and constitutes the PV system in PVLV.
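The negative feedback loop can be illustrated with a minimal delta-rule simulation, assuming a single timing input that is fully active at the expected US time and a reward of fixed magnitude on every trial. Because direct shunting can cancel but not invert a burst, the dopamine signal is rectified at zero here; pauses for omitted rewards are left to the LHb pathway. The function name and parameters are hypothetical.

```python
def train_pv(n_trials: int, reward: float = 1.0, lrate: float = 0.2) -> list[float]:
    """Phasic dopamine burst at US time on each trial of a fixed, fully predictable schedule."""
    w_d1_patch = 0.0  # learned US expectation carried by D1 patch MSNs (timing input = 1)
    bursts = []
    for _ in range(n_trials):
        expectation = w_d1_patch
        # Direct shunting inhibition can cancel a burst but cannot by itself produce a pause.
        burst = max(reward - expectation, 0.0)
        bursts.append(burst)
        # Bursts potentiate D1 patch synapses: a Rescorla-Wagner delta rule.
        w_d1_patch += lrate * burst
    return bursts


bursts = train_pv(30)
print([round(b, 3) for b in bursts[:4]])  # [1.0, 0.8, 0.64, 0.512]
```

With these settings the burst shrinks geometrically, by a factor of (1 - lrate) per trial, as the patch expectation approaches the reward magnitude.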

We extend this core model by suggesting that these same D1-expressing VS patch MSNs also send US expectations to the lateral habenula (LHb), enabling the latter to drive pauses in dopamine cell firing when expected rewards are omitted. Complementarily, some D2-expressing VS patch MSNs serve as an extinction-coding, or evidence-against, counterweight to this D1-anchored pathway, reducing the strength of the expectation in cases such as probabilistic reward schedules (see Simulation 2c in Results) and conditioned inhibition training (Simulation 3c).
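The opponent arrangement can be sketched by letting bursts strengthen the D1 (evidence-for) weight and pauses strengthen the D2 (evidence-against) weight, with the net expectation given by their difference. Under a 50% reward schedule the net expectation settles near the reward probability, while omissions continue to produce pauses. This is a simplified illustration, not the PVLV learning rule: neither weight is bounded or decays, so both grow slowly over long runs even as their difference stabilizes, and a fuller model would need some form of normalization. Names and parameters are hypothetical.

```python
def train_opponent_patch(outcomes: list[float], lrate: float = 0.1) -> tuple[float, list[float]]:
    """Return the final net US expectation and the dopamine signal at US time per trial."""
    w_d1 = 0.0  # evidence for the US (acquisition), D1 patch MSNs
    w_d2 = 0.0  # evidence against the US (extinction), D2 patch MSNs
    da_trace = []
    for reward in outcomes:
        expectation = w_d1 - w_d2           # net expectation, also conveyed to the LHb
        da = reward - expectation           # > 0: residual burst; < 0: LHb-driven pause
        da_trace.append(da)
        if da > 0:
            w_d1 += lrate * da              # bursts strengthen the D1 pathway
        else:
            w_d2 += lrate * -da             # pauses strengthen the D2 counterweight
    return w_d1 - w_d2, da_trace


# 50% reward schedule, alternating rewarded and omitted trials.
net, da_trace = train_opponent_patch([1.0, 0.0] * 100)
print(round(net, 2))  # 0.47
```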

In essential symmetry with the appetitive case, a second subpopulation of D2-expressing patch MSNs is hypothesized to provide the key substrate responsible for learning a temporally-explicit expectation of aversive outcomes. Again, dopamine cell pauses provide the appropriate plasticity-inducing signals to strengthen thalamo- and corticostriatal synapses onto these D2-expressing MSNs. In this case, however, there is no direct shunting of dopamine cells; instead, the critical cancellation of expected punishment occurs in the LHb. The integration of these signals with other inputs is discussed in the section on the lateral habenula below.
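For the aversive case, the same delta-rule logic applies, but the expectation is subtracted within the LHb rather than by direct shunting of dopamine cells. In this hypothetical sketch, LHb activity is rectified at zero, so any relief signaling that might accompany an omitted expected punishment is not represented. The function name and parameters are illustrative.

```python
def train_aversive_patch(n_trials: int, punishment: float = 1.0, lrate: float = 0.2) -> list[float]:
    """Dopamine signal at aversive-US time per trial (negative values are pauses)."""
    w_neg_d2 = 0.0  # learned expectation of the aversive US, D2 patch MSNs
    da_trace = []
    for _ in range(n_trials):
        # No direct shunting of dopamine cells: the expectation is subtracted in the LHb.
        lhb = max(punishment - w_neg_d2, 0.0)
        da = -lhb                            # LHb excitation drives a dopamine pause
        da_trace.append(da)
        w_neg_d2 += lrate * lhb              # pauses potentiate D2 patch synapses
    return da_trace


pauses = train_aversive_patch(30)
print([round(d, 3) for d in pauses[:3]])  # [-1.0, -0.8, -0.64]
```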

VS Matrix MSNs Immediately Report CSs