Abstract

The recent introduction of wireless head-mounted displays (HMDs) promises to enhance 3D image visualization by immersing the user in 3D morphology. This work introduces a prototype holographic augmented reality (HAR) interface for the 3D visualization of magnetic resonance imaging (MRI) data for planning neurosurgical procedures. The computational platform generates a HAR scene that fuses pre-operative MRI sets, segmented anatomical structures, and a tubular tool for planning an access path to the targeted pathology. The operator can manipulate the presented images and segmented structures and perform path planning using voice and gestures. On-the-fly, the software uses predefined forbidden regions to prevent the operator from harming vital structures. In silico studies using the platform with a HoloLens HMD assessed its functionality, as well as the computational load and memory use for different tasks. A preliminary qualitative evaluation revealed that holographic visualization of high-resolution 3D MRI data offers an intuitive and interactive perspective of the complex brain vasculature and anatomical structures. This initial work suggests that immersive experiences may be an unparalleled tool for planning neurosurgical procedures.

Keywords: Magnetic resonance imaging, Holographic visualization, Surgery, Intervention planning, Neurosurgery, HoloLens

Introduction

The ever-growing evolution and adoption of image-guided surgeries and interventions (IGI) underscore the need for effective and intuitive visualization and use of three-dimensional (3D) or multi-slice imaging sets [1–7] for diagnosis and procedure planning. Procedure planning requires accurate mapping of the spatial relationships between anatomical structures to target the tissue-of-interest precisely and avoid harming healthy tissue or vital structures (such as blood vessels). In neurosurgery, magnetic resonance imaging (MRI) is a valuable modality offering features important for surgical planning: a plethora of contrast mechanisms, operator-selected orientation and position of multi-slice and true-3D scanning, and an inherent coordinate system generated by the native magnetic field gradients of the scanner [8–12]. State-of-the-art scanners further enable interactive computer control of the imaging parameters on-the-fly while images are collected. This level of interactivity during procedures makes MRI unique [13–19].

While MRI guidance offers a vast volume of 3D information, clinical practitioners need to view and plan on two-dimensional (2D) displays. A significant challenge for clinicians is to mentally extract 3D features and their spatial relationships by viewing multiple 2D MRI slices from 3D or multi-slice sets [17, 20–22]. Understanding the complex 3D architecture of the tissue, especially with multi-contrast 3D imaging data sets, is challenging and time-consuming. Many groundbreaking rendering techniques have been introduced to enable 3D visualization, such as maximum intensity projections in angiography and virtual colonoscopy or angioscopy (e.g., [2–4] and references therein), but 2D visualization remains the standard practice. Augmented reality (AR) visualization has been hailed as a potential solution to these challenges. AR contextualizes information by fusing and co-registering images, segmented anatomical structures, patient models, vital signs, and other data into a combined model projected onto the physical world. Most recently, this concept of operator immersion into information has been further enhanced with AR holographic scenes delivered through head-mounted displays (HMDs). Because these devices are wireless, they are practical for use in the operating room (OR). A growing number of pioneering studies demonstrate the potential of AR through HMDs in different medical domains, including IGI [1–7, 23].

This work introduces a generic computational platform that establishes a data and command pipeline integrating the MRI scanner/data, computational modules for image processing and rendering, and the operator via a holographic AR (HAR) interface for performing MRI-guided interventions. The platform's software-architecture and computational features were selected based on operational needs and criteria set by collaborating clinicians: (i) speed of data access, (ii) interactive manipulation of images and objects, and (iii) interaction with the system front-end that is as hands-free as possible. Building on these criteria, the platform was designed around the concept that the HMD acts as the interface between the human and the data, while a separate processor (the Host-PC) performs most of the processing to reduce latencies and enable efficient computation. Specifically, in this work, the HMD is used to immerse the operator in a holographic surgical scene (HoloScene) and to manipulate MRI data interactively for planning neurosurgical procedures. The HoloScene combines the original MRI data (DICOM format), renderings of anatomical structures segmented from the MRI data, and virtual graphical objects, such as paths, annotations, and forbidden regions. Moreover, to ensure safe planning, we implemented virtual fixtures extracted from the MRI sets, which in turn were fed into the collision detection module.

While the interface is platform-independent, in this work it was implemented on and optimized for the commercially available Microsoft HoloLens HMD [24]. Using the native HoloLens voice and hand-gesture control, the interface enables the operator to interactively, i.e., on-the-fly, select the presented objects and manipulate them to appreciate 3D anatomies. The operator can select MRI slices and segmented structures on-the-fly and perform standard visualization actions, such as 3D rotation and zooming. The HoloScene can also be rescaled, allowing the operator to walk inside the brain structures. In our implementation, all objects in the HoloScene are inherently co-registered to the MRI space. In addition to adding realism, the direct matching of holographic and MRI spaces can be used in planning as well as in intraoperative co-registration of the HoloScene and tracked interventional tools [23, 25–27]. The platform was tested in silico by clinical and research personnel at the four collaborating sites for latencies and functionality in the specific neurosurgical paradigm of accessing a brain meningioma with a needle-based tool. Specific workflow protocols were developed and are reported in this paper.

Methods

Holographic Augmented Reality Platform

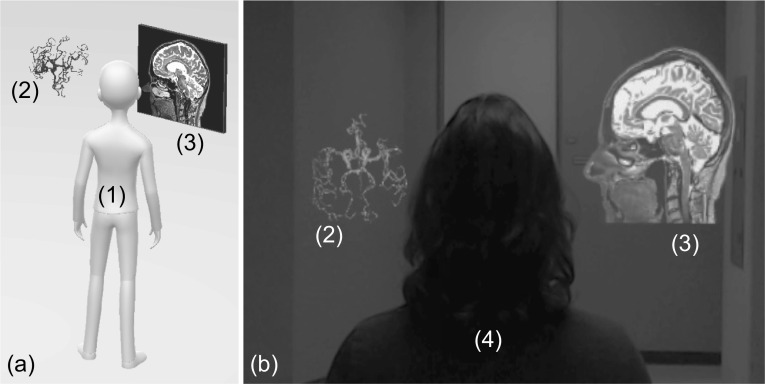

Figure 1a shows a cartoon impression of the topology of the entities in the holographic scene, including the hologram and a virtual 2D display, positioned around the operator and anchored to specific locations inside the room (a default functionality of the HoloLens device). Figure 1b is a capture of the HoloLens output, i.e., what the operator sees, including the hologram, the 2D virtual display, and the real world. The virtual 2D display is used inside the HAR scene for conventional visualization of individual slices on which the operator may prefer to perform certain tasks, such as annotating targets, setting trajectories, or marking boundaries.

Fig. 1.

Cartoon impression (a) and single frame captured from the HoloLens HMD (b) of the HAR scene depicting the operator (1), holographic structures (2), and an embedded 2D virtual window (3). In (b), a volunteer subject (4) stands in front of the operator who wears the HMD; note how the augmented reality objects are fused with real space

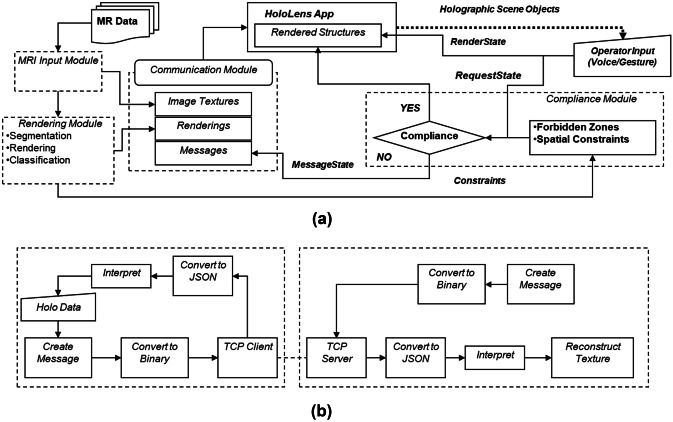

The system’s computational component was deployed in a two-CPU fashion: part runs on the HoloLens and part runs on an external PC (Host-PC) connected to the HoloLens via a two-way Wi-Fi TCP/IP connection. The Host-PC, rather than a single-CPU HoloLens-only implementation, was adopted to address the limited capabilities of the HoloLens processor and to enable real-time interactive manipulation of the HAR scene based on a computational framework called the Framework for Interactive Immersion into Imaging Data (FI3D), described in Velazco-Garcia et al. [28]. The Host-PC was a laptop running Windows 10 Pro (Intel Core i7-7820HQ quad-core processor, 2.9/3.9 GHz; 64 GB RAM) with an NVIDIA Quadro P5000 GPU (2560 NVIDIA CUDA® cores and 16 GB GDDR5X RAM). The Microsoft HoloLens HMD has a custom Microsoft holographic processing unit, an Intel 32-bit architecture CPU, and 2 GB of RAM, and it runs the Windows Mixed Reality operating system. The FI3D framework handles most foundational computational tasks, e.g., communication between the two devices and rendering, allowing us to focus on visualization and interactions with the holograms. This dual-CPU implementation offers (i) a platform-independent implementation of the computational core and (ii) expansion and customization with additional image processing and planning facilities (as shown in [26, 28–31]). Figure 2a and b illustrate the two-way communication between the Host-PC and the HoloLens based on messages that (i) carry data (i.e., MRI images in the form of textures, segmented anatomical structures, and messages to the operator) from the Host-PC to the HoloLens and (ii) carry instructions (commands and parameters) from the HoloLens to the Host-PC. The feed received by the HoloLens is supplied to the HoloScene application, which updates the HAR scene presented to the operator. The instructions from the operator are supplied to the modules that perform the activated task.
The Host-PC is composed of three primary modules: MRI input, rendering, and communication. See the attached Video 1 for a demonstration of the software features.

Fig. 2.

(a) The architecture of the HAR computational core depicting its modules and their interconnectivity and (b) the communication of the Host-PC (left dashed box) and HoloLens (right dashed box)

MRI Input Module

The MRI input module receives data either from a storage device or directly from the MRI scanner via a dedicated TCP/IP connection with the scanner’s local area network. First, the module extracts spatial information (position and orientation of each slice relative to the MRI scanner coordinate system) from the corresponding DICOM header. The extracted information is fed to the rendering module, which generates the objects presented in the HAR scene, and to the communication module, which prepares and sends the data to the HoloLens.
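As an illustration of how slice geometry can be read from a DICOM header, the following minimal Python sketch maps a pixel (row, column) to millimetre coordinates using the standard ImagePositionPatient (0020,0032), ImageOrientationPatient (0020,0037), and PixelSpacing (0028,0030) attributes, following the mapping defined for the Image Plane Module in DICOM PS3.3. It assumes these values have already been parsed from the header (e.g., by a DICOM library) and that the DICOM patient coordinate system stands in for the scanner coordinate system; the class name `SliceGeometry` is hypothetical and not part of the authors' software.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SliceGeometry:
    """Geometry attributes of one slice, as parsed from its DICOM header (hypothetical container)."""

    image_position: Vec3                # ImagePositionPatient (0020,0032): centre of first pixel, mm
    image_orientation: Sequence[float]  # ImageOrientationPatient (0020,0037): row cosines, then column cosines
    pixel_spacing: Tuple[float, float]  # PixelSpacing (0028,0030): (row spacing, column spacing), mm

    def pixel_to_scanner(self, row: int, col: int) -> Vec3:
        """Map pixel (row, col) to millimetre coordinates (DICOM PS3.3 Image Plane Module)."""
        rx, ry, rz, cx, cy, cz = self.image_orientation
        row_spacing, col_spacing = self.pixel_spacing
        sx, sy, sz = self.image_position
        # Stepping along a row (increasing col) follows the row direction cosines
        # and uses the column spacing; stepping down a column uses the row spacing.
        return (
            sx + rx * col_spacing * col + cx * row_spacing * row,
            sy + ry * col_spacing * col + cy * row_spacing * row,
            sz + rz * col_spacing * col + cz * row_spacing * row,
        )

    def normal(self) -> Vec3:
        """Slice normal: cross product of the row and column direction cosines."""
        rx, ry, rz, cx, cy, cz = self.image_orientation
        return (ry * cz - rz * cy, rz * cx - rx * cz, rx * cy - ry * cx)
```

For a transverse slice with orientation (1, 0, 0, 0, 1, 0), the normal is the +z axis, so the slice's position along z places it within the stack.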

Rendering Module

Segmentation and rendering are performed in this module based on the work in Kensicher et al. [32]. In brief, the module includes three routines for the segmentation of the tumor, skin, and vessels. The tumor was extracted from a post-contrast T1-weighted fast field echo (post-T1FFE) multi-slice dataset using a manually seeded region-growing algorithm. The tumor was then segmented using the criteria introduced by Pohle and Toennies [33], and its surface was smoothed using a morphological closing with a spherical mask to erase small gaps produced by noise. The blood vessels were extracted from a time-of-flight (TOF) multi-slice set based on high-pass filtering with two manual threshold levels: the first was applied to the processed image, and the second was applied to the output of a Frangi filter to capture detailed vessels [33]. The third routine extracted the patient skin (it was applied to all data sets of the same patient to verify head motion between scans). Skin extraction included two steps: (i) high-pass filtering with a manual threshold to segment the whole visible skin and (ii) morphological closing with a spherical mask to eliminate any Rician noise surrounding the skin [34]. All structures were displayed using surface rendering.
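The manually seeded region-growing step can be illustrated with a generic sketch. The Python code below grows a 6-connected region from an operator-selected seed voxel, accepting neighbours whose intensity falls inside a fixed [low, high] interval. This fixed-interval criterion is a simplifying assumption: it does not reproduce the criteria of Pohle and Toennies [33], and `region_grow` is a hypothetical name.

```python
from collections import deque
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]  # (z, y, x) index into volume[z][y][x]
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def region_grow(volume: List[List[List[float]]], seed: Voxel,
                low: float, high: float) -> Set[Voxel]:
    """Return all voxels 6-connected to `seed` whose intensity lies in [low, high]."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])

    def accepted(v: Voxel) -> bool:
        z, y, x = v
        inside = 0 <= z < nz and 0 <= y < ny and 0 <= x < nx
        # Short-circuit keeps out-of-bounds (including negative) indices from being read.
        return inside and low <= volume[z][y][x] <= high

    if not accepted(seed):
        return set()  # the seed itself fails the intensity criterion
    region = {seed}
    queue = deque([seed])
    while queue:  # breadth-first flood fill
        z, y, x = queue.popleft()
        for dz, dy, dx in NEIGHBOURS:
            nb = (z + dz, y + dy, x + dx)
            if nb not in region and accepted(nb):
                region.add(nb)
                queue.append(nb)
    return region
```

Diagonal neighbours are not connected under this 6-connectivity choice, so a bright voxel touching the region only at a corner stays outside it.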

Communication Module

The communication module manages the connection and interaction between the Host-PC and the HoloLens. These two elements are in constant communication: the HoloLens continuously sends its current status, and the Host-PC responds with the requested information. The functionalities described in Table 1 are mapped to a set of gestures described in Table 2. When the user triggers one of these gestures, the HoloLens translates it into a request message formatted in JSON [35] and sends it to the Host-PC. The Host-PC then decodes the JSON string, performs the required tasks, and transmits the result (e.g., a contrast change to an image) to the HoloLens.
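A minimal sketch of this request/response exchange is shown below, assuming a hypothetical message schema of the form `{"command": ..., "params": {...}}`; the actual FI3D message format is described in [28] and may differ. The host-side `HostDispatcher` decodes the JSON string, calls the handler registered for the command, and returns a JSON reply. All names here are illustrative.

```python
import json
from typing import Any, Callable, Dict


def encode_request(command: str, **params: Any) -> str:
    """HoloLens side: serialize a gesture/voice request (hypothetical schema)."""
    return json.dumps({"command": command, "params": params})


class HostDispatcher:
    """Host-PC side: decode a JSON request, run its handler, and encode the reply."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[..., Any]] = {}

    def register(self, command: str, handler: Callable[..., Any]) -> None:
        self._handlers[command] = handler

    def handle(self, message: str) -> str:
        try:
            request = json.loads(message)
            command = request["command"]
            handler = self._handlers[command]
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, missing "command" field, non-object payload, or unregistered command.
            return json.dumps({"status": "error", "detail": repr(exc)})
        result = handler(**request.get("params", {}))
        return json.dumps({"status": "ok", "command": command, "result": result})
```

For example, registering a hypothetical `"set_window"` handler and passing it `encode_request("set_window", length=40, width=80)` returns an `"ok"` reply carrying the handler's result, while an unknown command or a non-JSON string yields an `"error"` reply instead of an exception.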

Table 1.

Purpose and functionality of HAR features

| Purpose | Functionalities |
|---|---|
| 2D Virtual Windows | |
| • Manipulation of single MRI slice • Selection of parameters for a function or algorithm • Selection of MRI protocol | • Adjust contrast, lighting, gamma • Segmentation (band-pass thresholding) • Apply actions to all slices of MRI dataset • Region-of-interest definition • Customized size and position definition |
| AR/MRI co-registration | |
| Spatial co-registration of HAR objects and the MR images for planning and real-space manipulations | • All coordinates are in the MRI scanner coordinate system • In HAR: select a point, slice, or volume; then mark it on the MRI dataset • In MRI: select a point or slice; then mark it on the HAR • Record coordinates of any selections |
| Voice and gesture control | |
| Hands-free interaction | • Select orientations of MR images (transverse, sagittal, coronal) • Adjust contrast |

Table 2.

Voice and gesture command purposes

| Voice Command | Parameter Adjustment | Function |
|---|---|---|
| Lock | N/A | Disable all interactions |
| Image adjustment | | |
| Sagittal | x-axis scroll | Toggle visibility and adjustment of the image in the sagittal plane |
| Transverse | z-axis scroll | Toggle visibility and adjustment of the image in the transverse plane |
| Coronal | y-axis scroll | Toggle visibility and adjustment of the image in the coronal plane |
| Length | x-axis scroll | Enable adjustment of the window-length |
| Width | x-axis scroll | Enable adjustment of the window-width |
| HAR interaction | | |
| Image | Pinch and move | Move the image within the holographic scene |
| Robot | Pinch and move | Move the robot within the holographic scene |
| Vessels | N/A | Toggle rendered vessels in the hologram ON/OFF |
| Tumor | N/A | Toggle rendered tumor in the hologram ON/OFF |
| Skin | N/A | Toggle rendered skin in the hologram ON/OFF |
| Trajectory planning | | |
| Target | Pinch and move | Move target point within the holographic scene |
| Insert | x-axis scroll | Insert the needle |

An important aspect of planning surgical procedures or interventions is the inherent coordinate system of the MRI. In compliance with clinical practice, all calculations, segmentations, and objects in the holographic scene are defined relative to the MRI scanner coordinate system. This is essential for three reasons: (i) The MRI represents real space for the interventionists; therefore, the holographic scene is scaled accordingly. (ii) During planning, the selected target structures and paths are known in patient space, and after the patient is registered with some form of tracking device, they become known relative to the operating room space. (iii) When the MRI scanner is controlled on-the-fly by the operator, any area selected in the holographic space for additional scanning can immediately be sent to the MRI scanner for updated data collection. These benefits of the inherent MRI coordinate system are generic and apply to a variety of scenarios, including (a) online use of the system with the MRI scanner intraoperatively, e.g., in sessions that interleave imaging and interventional steps, and (b) offline use for pre-operative planning with data from an earlier imaging session. Note that all the presented studies used pre-acquired MRI data sets, without a patient inside the MRI scanner; the system used the coordinate system of the scanner on which the data were acquired, reconstructed from pertinent information in the DICOM file headers (e.g., slice position, orientation, in-plane spatial resolution, and slice thickness).

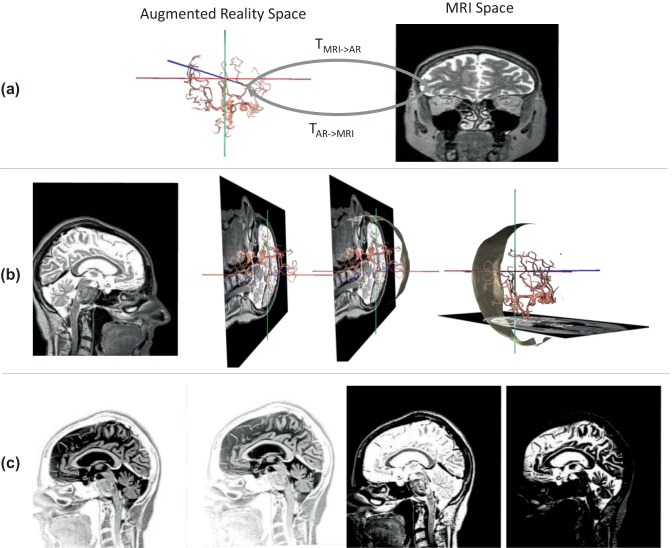

Figure 3a illustrates the two coordinate systems, the MR image/MRI scanner coordinate system and the holographic scene coordinate system, which are related by a rigid-body transformation. As a result, any point selected in the MRI space (e.g., on any 2D image) is known in the holographic space, and vice versa.
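Because the two coordinate systems are related by a rigid-body transformation, mapping a point in either direction reduces to a 4 × 4 homogeneous matrix and its inverse. The Python sketch below uses a hypothetical MRI-to-hologram pose (a 90° rotation about z plus a 10-mm translation) purely for illustration; the actual pose depends on where the hologram is placed in the room. Rescaling the HoloScene would turn this into a similarity transform, which the sketch omits.

```python
import math
from typing import List, Sequence, Tuple

Mat4 = List[List[float]]
Vec3 = Tuple[float, float, float]


def rot_z(deg: float) -> List[List[float]]:
    """3x3 rotation matrix about the z-axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rigid_transform(rotation: Sequence[Sequence[float]], translation: Vec3) -> Mat4:
    """Build a 4x4 homogeneous matrix [R | t; 0 0 0 1]."""
    top = [list(rotation[i]) + [translation[i]] for i in range(3)]
    return top + [[0.0, 0.0, 0.0, 1.0]]


def apply(m: Mat4, p: Vec3) -> Vec3:
    """Transform a 3D point with a homogeneous matrix."""
    return tuple(sum(m[i][j] * p[j] for j in range(3)) + m[i][3] for i in range(3))


def invert_rigid(m: Mat4) -> Mat4:
    """Inverse of a rigid transform: [R^T | -R^T t] (no general matrix inversion needed)."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t = [m[i][3] for i in range(3)]
    new_t = tuple(-sum(rt[i][j] * t[j] for j in range(3)) for i in range(3))
    return rigid_transform(rt, new_t)
```

Using the transpose rather than a general inverse exploits the orthogonality of the rotation block, so a point selected on an MR image and mapped into the hologram can be mapped back exactly (up to floating-point error).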

Fig. 3.

Operator-controlled functionalities of the HAR using MRI and the renderings of segmented structures from a healthy subject. (a) The transformation between the coordinate system of the MRI scanner (into which all images are inherently registered and used for planning) and the coordinate system of the HAR scene. In (b), there are four examples of holographic outputs showing (from left to right) a sagittal MRI in the 2D virtual display, the same slice with the vessels rendered, the same scene with the patient skin, and the same scene but with a transverse slice at its corresponding position (also depicted in embedded 2D). The views in (b) and (c) were generated and altered on-the-fly by voice control. In (c), an example of contrast manipulation showing the same sagittal slice with four different contrast settings is demonstrated

HoloLens Control and Communication

The holographic module was developed in C# using Unity 3D v5.6 [36]; this entailed developing and uploading an application (APP) to the HoloLens HMD. Currently, the input comes from a file containing the pixel intensity values, stored as color information, for every slice. A script reads the file and saves the information in 2D textures. After the textures are generated, a mesh is loaded and rendered at its corresponding slice location. When a user switches to a different plane, new textures are generated without affecting the original set of textures (i.e., those initially generated from the MRI data files). However, when the user changes the contrast setting, the system must regenerate the original set of textures.
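The reslicing step can be sketched as follows, assuming the loaded set is stored as a volume indexed `volume[z][y][x]`, with transverse slices along z, coronal along y, and sagittal along x (an assumed convention). Each request copies the selected plane so that the original slice data are left untouched, mirroring the behavior described above. `extract_plane` and `to_texture_bytes` are hypothetical helpers, and the single-channel 8-bit row-major buffer stands in for whatever texture format the Unity application actually uses.

```python
from typing import List

Volume = List[List[List[int]]]  # assumed layout: volume[z][y][x], 8-bit intensities
Image = List[List[int]]


def extract_plane(volume: Volume, plane: str, index: int) -> Image:
    """Return a copy of one orthogonal plane so the source slices stay untouched."""
    nz, ny = len(volume), len(volume[0])
    if plane == "transverse":  # fixed z
        return [row[:] for row in volume[index]]
    if plane == "coronal":  # fixed y
        return [volume[z][index][:] for z in range(nz)]
    if plane == "sagittal":  # fixed x
        return [[volume[z][y][index] for y in range(ny)] for z in range(nz)]
    raise ValueError(f"unknown plane: {plane!r}")


def to_texture_bytes(image: Image) -> bytes:
    """Flatten an image row by row into a single-channel 8-bit buffer."""
    return bytes(v for row in image for v in row)  # raises ValueError if a value exceeds 255
```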

Interactive Control of the HAR Scene

Interactive manipulation of the holographic scene and objects was implemented using a hands-free approach based on the native gesture and voice recognition features of the HoloLens to perform the procedures described above. This approach was adopted in response to a primary design objective: streamlined operation without the need to sterilize input devices (keyboards, mice, etc.), for the operator to reposition to reach them, or for an assistant. Table 2 lists the voice and gesture commands and the functions performed when each command is spoken or each gesture is performed. Note that the HoloLens tools allow the developer or the operator to implement other voice or gesture commands, depending on operational needs or preferences. Apart from the voice and gesture commands, no other form of human-machine interfacing was used or deemed necessary at any point in these studies.
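One way to organize the voice and gesture commands of Table 2 is a small state machine in which a voice command selects what the next scroll gesture adjusts. The Python sketch below is hypothetical: the class and field names are illustrative, the default values are arbitrary, and making "Lock" a toggle (so that saying it again re-enables interaction) is an assumption, since Table 2 only states that it disables all interactions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

SCROLL_PLANES = ("sagittal", "transverse", "coronal")
LAYERS = ("vessels", "tumor", "skin")


@dataclass
class HoloSceneState:
    """Hypothetical scene state driven by voice words and scroll steps."""

    locked: bool = False
    planes_visible: Dict[str, bool] = field(default_factory=lambda: dict.fromkeys(SCROLL_PLANES, False))
    slice_index: Dict[str, int] = field(default_factory=lambda: dict.fromkeys(SCROLL_PLANES, 0))
    layers_visible: Dict[str, bool] = field(default_factory=lambda: dict.fromkeys(LAYERS, True))
    window: Dict[str, float] = field(default_factory=lambda: {"length": 40.0, "width": 80.0})
    scroll_target: Optional[str] = None  # what the next scroll gesture adjusts

    def on_voice(self, word: str) -> None:
        word = word.lower()
        if word == "lock":
            self.locked = not self.locked  # assumption: "Lock" toggles
            return
        if self.locked:
            return  # all other interactions are disabled while locked
        if word in SCROLL_PLANES:
            self.planes_visible[word] = not self.planes_visible[word]
            self.scroll_target = word
        elif word in ("length", "width"):
            self.scroll_target = word
        elif word in LAYERS:
            self.layers_visible[word] = not self.layers_visible[word]

    def on_scroll(self, steps: int) -> None:
        if self.locked or self.scroll_target is None:
            return
        if self.scroll_target in SCROLL_PLANES:
            # Clamp at the first slice; the upper bound depends on the loaded set.
            current = self.slice_index[self.scroll_target]
            self.slice_index[self.scroll_target] = max(0, current + steps)
        else:
            value = self.window[self.scroll_target] + steps
            if self.scroll_target == "width":
                value = max(1.0, value)  # keep the window width positive
            self.window[self.scroll_target] = value
```

Separating "what to adjust" (voice) from "by how much" (scroll) keeps the gesture vocabulary small, which matches the single scrolling gesture reused across several rows of Table 2.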

Regarding content manipulation, the operator can select and view, in both the hologram and the virtual 2D display, any of the three principal scanner orientations using the voice commands “Coronal,” “Transverse,” and “Sagittal.” Figure 3b illustrates the two output entities: the hologram, which includes the rendered vascular tree together with the MRI coordinate system, and the embedded 2D virtual window, as selected by the operator. The operator can switch among the principal orientations and scroll through the slices of the loaded MRI multi-slice set using the HoloLens scrolling gesture, i.e., moving one’s hand in the horizontal direction. As the operator changes orientations and/or scrolls through slices, the focused slice is automatically updated in both (1) the embedded 2D window (which remains at the same location in the HAR scene) and (2) the 3D hologram, where the slice is fused with the rendered vascular tree in the MRI coordinate system. As shown in Fig. 3c, the operator can additionally adjust the contrast by changing the window-length and window-width with the voice commands “Length” and “Width,” respectively. Their values are set with the scrolling gesture, and the image is updated in real time as the operator adjusts the contrast.
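Window-length and window-width define a linear intensity window. The sketch below assumes that the window-length plays the role usually called the window level (or center) and maps raw intensities to 0-255 grayscale, clamping values outside [length − width/2, length + width/2]. It is a simplified linear window, not the exact VOI LUT function defined in the DICOM standard, and `apply_window` is a hypothetical name.

```python
from typing import List


def apply_window(pixels: List[List[float]], length: float, width: float) -> List[List[int]]:
    """Map raw intensities to 0-255 grayscale with a linear window centred on `length`."""
    if width <= 0:
        raise ValueError("window width must be positive")
    lower = length - width / 2.0
    out = []
    for row in pixels:
        out_row = []
        for v in row:
            t = (v - lower) / width      # 0 at the lower window edge, 1 at the upper edge
            t = min(1.0, max(0.0, t))    # clamp intensities outside the window
            out_row.append(round(t * 255))
        out.append(out_row)
    return out
```

Narrowing the width steepens the ramp and increases contrast within the window, while moving the length shifts which tissue intensities fall inside it.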

Planning and Safety Control

As reported in Table 2 (under Trajectory planning), selection of a stereotactic access trajectory entails two steps: (i) selecting the target point T and (ii) interactively setting the trajectory to this point by grabbing and moving a virtual trajectory graphical object, which defines the insertion point I on the skin. The latter movement changes the Euler angles, i.e., the x, y, and z rotational values, of the virtual trajectory, whose distal tip remains anchored at point T. During planning, as the operator adjusts the orientation of the trajectory, a collision module continuously runs two tests: it checks (i) whether any point of the virtual trajectory collides with or passes through a structure classified as forbidden and (ii) whether the distance from point T to point I is longer than the insertable length of the interventional tool. If either condition is detected, the operator is warned by (i) a pop-up window, (ii) a change in the needle’s color to red, and (iii) a change in the color of the collision area on the forbidden structure to red. Concurrently, the software updates all virtual structures in the hologram, as well as on the embedded 2D virtual display.
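The two checks can be sketched as follows. The code assumes that the Euler angles rotate the initial +z direction (the default trajectory orientation in Table 3) as Rz·Ry·Rx, that forbidden structures are stored as a set of voxel indices on an isotropic grid whose origin coincides with the coordinate origin, and that the trajectory is sampled at a fixed step. These are illustrative assumptions, not the authors' collision module, and all function names are hypothetical.

```python
import math
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def direction_from_euler(rx_deg: float, ry_deg: float, rz_deg: float) -> Vec3:
    """Unit direction obtained by rotating +z with Rz(rz) @ Ry(ry) @ Rx(rx) (assumed order)."""
    a, b, c = (math.radians(v) for v in (rx_deg, ry_deg, rz_deg))
    return (
        math.cos(c) * math.sin(b) * math.cos(a) + math.sin(c) * math.sin(a),
        math.sin(c) * math.sin(b) * math.cos(a) - math.cos(c) * math.sin(a),
        math.cos(b) * math.cos(a),
    )


def check_trajectory(target: Vec3, insertion: Vec3, forbidden: Set[Voxel],
                     voxel_mm: float, tool_length_mm: float,
                     step_mm: float = 0.25) -> dict:
    """Sample the segment T->I; report forbidden voxels hit and whether T-I exceeds the tool length."""
    length = math.dist(target, insertion)
    n = max(1, math.ceil(length / step_mm))
    hits: List[Voxel] = []
    for k in range(n + 1):
        s = k / n
        p = [t + s * (i - t) for t, i in zip(target, insertion)]
        voxel = tuple(math.floor(coord / voxel_mm) for coord in p)
        if voxel in forbidden and voxel not in hits:
            hits.append(voxel)  # sample falls inside a forbidden structure
    return {
        "collision": bool(hits),              # would trigger the red needle and warning
        "hit_voxels": hits,
        "too_long": length > tool_length_mm,  # T-I distance exceeds insertable length
    }
```

A fixed sampling step can miss voxels that the segment only grazes at a corner; an exact voxel-traversal algorithm would avoid this at the cost of slightly more code.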

Testing

Three sets of studies were performed: (i) ergonomics, (ii) assessment of protocols for stereotactic neurosurgical planning, and (iii) characterization of system latencies. A total of seven operators tested the platform: three clinical personnel and four faculty members and graduate students from the computer science and engineering schools. The subjects were first trained to interact with the HAR using gestures (such as the bloom and air-tap gestures) and voice commands. They were then guided, as well as left to explore alone, in using gesture and voice commands to manipulate the HAR objects, including images, rendered structures, and virtual tools. The subjects were also familiarized with viewing a 3D hologram, guided to walk around the HAR scene, and encouraged to get used to the idea of a virtual object placed in real space. To alleviate potential challenges associated with the testing environment and user practices of the HoloLens 1 HMD, we followed two conditions. First, because the goal of this work was to implement the software and demonstrate feasibility, we performed all studies under regulated lighting conditions that were optimized in pilot tests to ensure that the HoloLens 1 did not lose its localization and orientation. Second, to ensure that the HoloLens 1 did not lose co-registration with the room, we instructed the users to wear the HoloLens continuously and not to remove it. During operation of the system, we recorded what action was taken, for what purpose, and the operator’s comments on whether the action was (a) inferior, equal, or superior and (b) slower, the same, or faster compared with standard practice. These evaluations were subjective; no quantitative metrics were used. Testing of the system further included the evaluation of possible workflows for performing neurosurgical needle-based interventions.
Clinical operators went through numerous iterations of simulated procedures, and the resulting workflows were merged to find the most streamlined workflow supported by the HAR and to identify improvements that could be implemented in the software. The third type of testing assessed the system’s performance by documenting the latencies of the different tasks performed on the HoloLens. These tests included measuring the CPU time required for different tasks using the Visual Studio Performance Profiler.

MRI Data

In these studies, we used MRI data sets collected during clinical imaging sessions of two meningioma patients on a 1.5-T Multiva scanner (Philips Medical Systems). Specifically, the vessels were extracted from a time-of-flight (TOF) magnetic resonance angiography (MRA) 2D multi-slice gradient recalled echo sequence that collected 150 slices with a 1.2-mm slice thickness and a 0.6-mm spacing between slices, TR/TE = 23/6.905 ms, an excitation angle of 20°, an acquisition matrix size of 400 × 249, and an in-plane resolution of 0.46 × 0.46 mm (pixel spacing). The meningiomas and patient skin were segmented from a T1-weighted 3D gradient recalled echo sequence that collected 330 slices with a 1.1-mm slice thickness and a 0.55-mm spacing between slices, TR/TE = 23/7.654 ms, an excitation angle of 23°, an acquisition matrix size of 232 × 229, and an in-plane resolution of 0.47 × 0.47 mm (pixel spacing).

Results

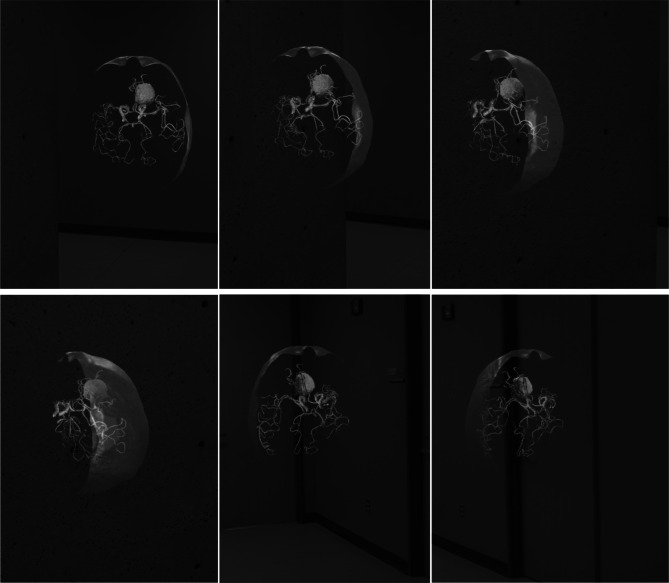

Figure 4 shows representative captures from the visualization of the HoloScene. While the interface enables the operator to rotate the hologram to appreciate the 3D structures, we observed that the operators preferred to move around the hologram while also tilting their heads. The holographic AR visualization was subjectively found to be superior to desktop-based volume rendering with regard to (i) 3D appreciation of the spatial relationship of segmented structures, (ii) detection and collision avoidance of critical structures (vessels), and (iii) ergonomics and ease in selecting a trajectory in stereotactic planning.

Fig. 4.

An example holographic output of a meningioma patient showing the blood vessels, meningioma, and patient skin extracted from the MRI data set. The operator walks around the hologram (the 2D virtual window is toggled OFF) to assess the 3D vascular tree in the vicinity of the meningioma

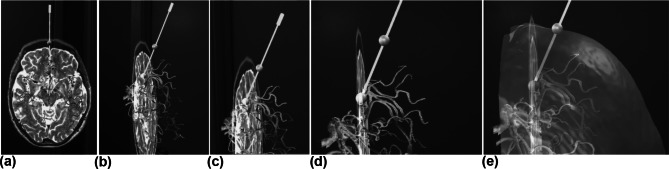

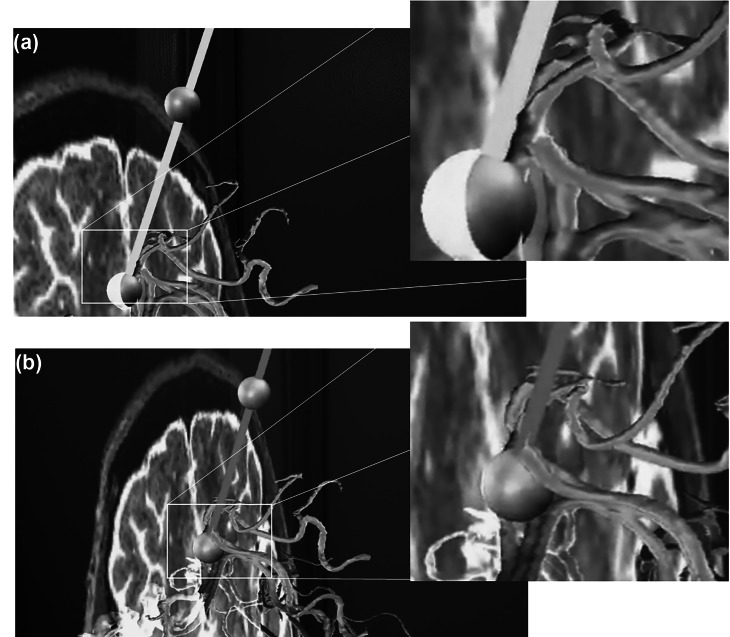

Figure 5 shows representative frames from stereotactic planning with the HAR interface. The operator first selected the target point and delineated it with a green sphere. While the distal tip of the virtual needle was anchored to the target point, i.e., at the center of the marking sphere, the operator moved the needle in the holographic space, i.e., changed the Euler angles of the needle relative to a coordinate system centered on the target point and parallel to the MR scanner principal axes. While testing, the operators moved their bodies and heads relative to the scene to better view the trajectory. This behavior suggests the value provided by the AR holographic interface: the operators were intuitively moving in 3D space, immersed in the scene. The clinical personnel stressed the benefit of intuitive ergonomics and the speed of interactively setting the trajectory while viewing the entire vascular tree (forbidden zones). This conclusion was based on their previous experience with desktop 3D visualization (e.g., maximum intensity projections for vessels) or with the conventional mental extraction of 3D features by inspecting numerous 2D MRI slices.

Fig. 5.

Representative captures from the HoloLens output for a case of safe stereotactic planning, depicting a transverse slice, the segmented vessels, the selected target and insertion points delineated with green spheres, and the virtual needle (green, since there is no collision). In (a to d), the different shots were collected while the operator was moving around the hologram to appreciate different perspectives. In (e), the operator requests via a voice command the superposition of the skin (with adjusted transparency). Note that the insertion point lies on the skin boundary, as expected from its definition as the crossing point of the trajectory with the skin

In Fig. 6a, the trajectory is green since there is no collision with a forbidden zone (blood vessels), whereas in (b), the trajectory is presented in red since there is a collision with a blood vessel. When a trajectory was fixed, an insertion point was assigned that was the intersection of the trajectory and the head skin mask and was delineated by a green sphere.
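The insertion point can be found by marching from the target T along the trajectory direction until the path leaves the head. The Python sketch below assumes that the skin segmentation is available as a filled head mask (a set of voxel indices on an isotropic grid starting at the coordinate origin) and returns the last sampled point still inside the mask; the function name, step size, and search limit are hypothetical choices rather than the authors' implementation.

```python
import math
from typing import Optional, Set, Tuple

Vec3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def insertion_point(target: Vec3, direction: Vec3, head_mask: Set[Voxel],
                    voxel_mm: float, max_mm: float = 300.0,
                    step_mm: float = 0.25) -> Optional[Vec3]:
    """March from T along `direction`; return the last sample inside the head mask."""

    def voxel_of(p: Vec3) -> Voxel:
        return tuple(math.floor(c / voxel_mm) for c in p)

    norm = math.sqrt(sum(c * c for c in direction))
    u = tuple(c / norm for c in direction)  # unit direction
    if voxel_of(target) not in head_mask:
        return None  # the target must lie inside the head
    previous = target
    for k in range(1, int(max_mm / step_mm) + 1):
        p = tuple(t + k * step_mm * c for t, c in zip(target, u))
        if voxel_of(p) not in head_mask:
            return previous  # the trajectory has just crossed the skin boundary
        previous = p
    return None  # no exit found within max_mm
```

The returned point is accurate to within one sampling step of the true skin crossing; a smaller `step_mm` trades speed for precision.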

Fig. 6.

Representative close-up shots from the HoloLens output during stereotactic trajectory planning from a target to an insertion point (green spheres). The trajectory in (a) is safe, depicted as a green needle, with no point in the needle colliding with the forbidden regions (blood vessels). The trajectory in (b) resulted in a collision of the needle with a vessel and as a result the color of the needle is shown in red to warn the operator

We performed numerous simulations of planning stereotactic procedures with the HAR. In this process, we iterated through the workflow outlined in Table 3. This workflow serves as a starting point for future evaluations; it also indirectly demonstrates how the HAR interface, delivered through an HMD, can be used to investigate and optimize interventional protocols in silico under realistic spatial conditions. The user studies also underscored the need for customizable training: some users were immersed and navigated with ease right after the basic instructions, whereas others initially found the air-tap gesture challenging. In these studies, the FI3D framework transmitted and rendered 512 × 512 grayscale images once per second and could modify visual properties, such as translation, rotation, and color, once every 16 ms [28].
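As a rough worked example of these rates, assuming 8 bits per grayscale pixel (the bit depth is not stated here), the image stream and the property-update rate are:

```latex
\begin{aligned}
512 \times 512\ \text{pixels} \times 1\ \tfrac{\text{byte}}{\text{pixel}} &= 262{,}144\ \text{bytes} \approx 256\ \text{KiB per image (per second at one image/s)},\\
\frac{1}{16\ \text{ms}} &= 62.5\ \text{property updates per second}.
\end{aligned}
```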

Table 3.

Planning workflow

| Step |
|---|
| • Load MRI data sets, pre-segmented models, and structure classification |
| • Generate textures (Host-PC and voice/HoloLens) |
| • Activate imaging 2D virtual display and present the first selected image set (voice) |
| • Activate hologram and superimpose forbidden virtual fixtures, per classification |
| • Inspect hologram and images on the 2D virtual display to identify the targeted pathologic foci |
| • Select the target point T on 2D virtual display (gesture); software records its coordinates in the HoloScene coordinate system (xT, yT, zT) and replicates it in the hologram.ᵃ |
| • The software generates and presents as a hologram and in the 2D virtual display a virtual trajectory (initially set along the MRI z-axis) that starts from the point (xT, yT, zT). |
| • The operator interactively adjusts the virtual trajectory (with its distal tip anchored at point T).ᵇ |
| • On-the-fly constraints are applied to avoid collision with forbidden zones and ensure the constraints imposed by the needle’s length are met. |
| • Calculate insertion point I.ᶜ |

ᵃThis is a workflow suggested for the HAR by the work in this paper; other workflows may be appropriate for other procedures and by other operators.

ᵇAll calculations and maneuvering are performed in the MRI coordinate system; these measures can be transformed to any coordinate system, such as that of the operating room, using the appropriate rigid-body transformation, as illustrated in Fig. 3a.

ᶜCalculation of the insertion point on the skin could correspond, for example, to identification of a drilling point on the patient’s scalp.

Discussion

The rapid evolution of low-cost user-to-information immersion interfaces, such as the current generation of wireless HMDs, has attracted significant attention from clinicians. In particular, HMD holographic interfaces enable operator immersion into the imaging data and imaging-based 3D or 4D segmentations [4–7, 23]. This work is motivated by the potential of merging imaging and holographic visualization to achieve more ergonomic and intuitive planning of procedures, which, in turn, may enable new practices and improve patient outcomes and cost-effectiveness. The presented system can be used in several scenarios. One is using the HAR to plan stereotactic neurosurgical procedures and to extract coordinates and spatial data for use on a stereotactic frame. Notably, in this scenario, planning and intervention are two separate procedures, with the intervention performed later. Another scenario is the real-time use of the HAR system to guide a stereotactic procedure, which may require co-registration of the patient and the HAR scene. This is a special case in which the neurosurgical room includes an MRI scanner used for interleaving imaging and surgical steps. In this scenario, real-time MRI, processing, rendering, and HAR co-registration need to occur on-the-fly, and special safety and operational precautions must be followed; for example, the HoloLens should remain outside the 5-gauss line of the scanner. These and similar scenarios may be implemented in the future, assuming clinical merit is proven.

Our system’s design was based on addressing practical on-site needs, such as how the MR data should be presented and how one can perform planning that includes selecting the point for drilling through the scalp and avoiding vital structures. While this work is at an early stage, it has already become apparent that streamlining the workflow, reducing the workload, and minimizing the learning curve are critical features. The current implementation of the interface is the result of iterative user feedback, which focused primarily on two key aspects. The first related to the visualization features of the rendered elements (such as color and opacity), which depended on the individual user and on the application needs (e.g., the number of distinct visuals needed for proper contrast). Based on this input, we enabled users to select either customized or default parameters. The second aspect was the interaction with the holograms. To activate a hologram’s pointing-based features, the user must point at the element (the selection mechanism available on the Microsoft HoloLens); as a result, the user must look away from the hologram, which strips away part of the immersion. To address this, we incorporated voice commands using the native HoloLens voice control functionalities.
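Users asked to be able to choose either default or customized visual parameters, such as color and opacity, for each rendered element. The sketch below shows one way to organize this, assuming each segmented structure has a default style and any user override replaces individual fields. The structure names, default colors, and the `resolve_style` helper are all hypothetical and do not reproduce the platform’s actual settings.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional, Tuple

RGB = Tuple[float, float, float]


@dataclass(frozen=True)
class Style:
    color: RGB          # RGB components in [0, 1]
    opacity: float      # 0 = fully transparent, 1 = fully opaque
    visible: bool = True


# Hypothetical defaults, chosen so that neighbouring structures contrast.
DEFAULTS: Dict[str, Style] = {
    "vessels": Style(color=(0.8, 0.1, 0.1), opacity=1.0),
    "tumor": Style(color=(0.9, 0.8, 0.1), opacity=0.7),
    "ventricles": Style(color=(0.2, 0.4, 0.9), opacity=0.5),
}


def _validate(style: Style) -> Style:
    """Reject values outside the renderable range."""
    if not all(0.0 <= c <= 1.0 for c in style.color):
        raise ValueError(f"color components must be in [0, 1]: {style.color}")
    if not 0.0 <= style.opacity <= 1.0:
        raise ValueError(f"opacity must be in [0, 1]: {style.opacity}")
    return style


def resolve_style(name: str, overrides: Optional[Dict[str, dict]] = None) -> Style:
    """Return the default style for `name`, with user-supplied fields replacing defaults.

    Unknown field names raise TypeError (from dataclasses.replace).
    """
    base = DEFAULTS[name]
    user_fields = (overrides or {}).get(name, {})
    return _validate(replace(base, **user_fields))


# Example: one user prefers a more transparent tumor; other structures keep defaults.
user_prefs = {"tumor": {"opacity": 0.3}}
print(resolve_style("tumor", user_prefs))
print(resolve_style("vessels", user_prefs))
```

Keeping the defaults immutable and storing only each user’s differences makes it simple to return to the default appearance and to keep separate profiles for different operators.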

In response to these directives, several architectural and functional aspects were incorporated into the work presented here. First, the dual Host-PC/HMD architecture provides the computational resources needed for current as well as future tasks (e.g., maintaining 60 fps even during demanding image manipulation, and integrating with real-time MRI reconstruction). Second, the holographic interface offers superior visualization of complex structures, which, when paired with virtual fixtures, enhances the effective negotiation of vital structures in both stereotactic and man-in-the-loop interactive freehand control. Third, touch-less, hands-free interaction between the physician and the system through the HMD’s hand-gesture and voice commands was praised and found highly desirable by all study subjects. The work further enabled us to identify preliminary workflow protocols for performing image-guided interventions with holographic immersion. While the platform was only assessed in silico for an MRI-guided neurosurgical procedure, it is adaptable to other modalities, such as CT or ultrasound, and to other procedures, including interventions in the spine, prostate, and breast.
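The virtual fixtures flag a planned straight path that comes too close to a forbidden region. The text does not detail how this test is computed. The sketch below assumes that the tubular tool is a cylinder of radius r along the segment from the entry point to the target, and that each forbidden region is represented by sample points, for example voxel centers of a segmented vessel. Under these assumptions, a path is flagged when the minimum point-to-segment distance is not greater than r plus a safety margin. The function names, the millimeter units, and the margin are hypothetical.

```python
import math
from typing import Iterable, Sequence


def point_segment_distance(p: Sequence[float], a: Sequence[float],
                           b: Sequence[float]) -> float:
    """Euclidean distance from point p to the closed segment a-b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    denom = sum(c * c for c in ab)
    # Parameter of the orthogonal projection onto the line, clamped to [0, 1]
    # so the closest point stays on the segment (degenerate segment -> t = 0).
    if denom == 0.0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, sum(ap[i] * ab[i] for i in range(3)) / denom))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)


def path_clearance(entry: Sequence[float], target: Sequence[float],
                   forbidden: Iterable[Sequence[float]]) -> float:
    """Smallest distance between the path axis and any forbidden sample point."""
    return min((point_segment_distance(q, entry, target) for q in forbidden),
               default=math.inf)


def path_is_safe(entry: Sequence[float], target: Sequence[float],
                 forbidden: Iterable[Sequence[float]],
                 tool_radius: float, margin: float = 0.0) -> bool:
    """True if every forbidden point lies farther than tool_radius + margin from the axis.

    Because the segment is clamped, the tool is effectively modeled as a capsule
    (a cylinder with hemispherical ends), which is slightly conservative at the ends.
    """
    return path_clearance(entry, target, forbidden) > tool_radius + margin


# Example (mm): a 100 mm path along z and two sampled vessel points.
entry, target = (0.0, 0.0, 0.0), (0.0, 0.0, 100.0)
vessel = [(3.0, 0.0, 50.0), (0.0, 0.0, 105.0)]
print(path_clearance(entry, target, vessel),
      path_is_safe(entry, target, vessel, tool_radius=1.0, margin=1.0))
```

Checking every sample point costs O(N) per query. To keep interactive rates such as the 60 fps target with large segmented structures, a spatial index or a precomputed distance map would likely be needed.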

While the described implementation provides a proof-of-concept and a roadmap for focusing future efforts, the presented work has certain limitations that will be the subject of future work. First, only three clinical personnel contributed to setting the platform’s specifications and assessing its functionality, and only three cases were used (a healthy volunteer and two meningioma patients). Second, this preliminary work did not include a quantitative assessment of the platform’s functionality and ergonomics. We are designing quantitative studies that incorporate metrics for comparing functionality and ergonomics, and multi-site studies are planned with the next software update. Third, in this work the “reality” of the HAR studies was not a patient in the operating room but rather the laboratory rooms where the system was evaluated; the ultimate target is use of the system in the operating room.

These studies also showed that future development must be directed towards identifying a more intuitive alternative to voice control in the joint space and incorporating image-based force feedback for improved immersion with respect to 3D and 4D information (as shown previously by Navkar et al. [37]). We are currently incorporating appropriate image processing protocols and optimizing them with multithreaded implementation [25] and GPU acceleration [38]. Future studies will also investigate the effect of environmental conditions and operator practices, as discussed in [39].

Conclusion

A holographic augmented reality interface was implemented for interactive visualization of 3D MRI data and for planning straight-path trajectories to targeted tissue. Preliminary qualitative evaluation indicated that the interface is intuitive and effective for assessing 3D anatomical scenes, and especially for planning interventions with the virtual fixture collision detection feature.

Funding

This work was supported by National Science Foundation awards CNS-1646566, DGE-1746046, and DGE-1433817. NVT and IS also received partial support from the Stavros Niarchos Foundation, administered by the Institute of International Education (IIE). All opinions, findings, conclusions, or recommendations expressed in this work are those of the authors and do not necessarily reflect the views of our sponsors.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cristina M. Morales Mojica and Jose D. Velazco-Garcia contributed equally to this work.

Contributor Information

Cristina M. Morales Mojica, Email: cmmoralesmojica@uh.edu

Jose D. Velazco-Garcia, Email: jdvelazcogarcia@uh.edu

Eleftherios P. Pappas, Email: elepappas@phys.uoa.gr

Theodosios A. Birbilis, Email: birbilist@med.duth.gr

Aaron Becker, Email: atbecker@central.uh.edu

Ernst L. Leiss, Email: eleiss@uh.edu

Andrew Webb, Email: a.webb@lumc.nl

Ioannis Seimenis, Email: iseimen@med.uoa.gr

Nikolaos V. Tsekos, Email: nvtsekos@central.uh.edu

Supplementary Information

The online version contains supplementary material available at 10.1007/s10278-020-00412-3.

References

1. Kersten-Oertel M, Gerard IJ, Drouin S, et al. Towards augmented reality guided craniotomy planning in tumour resections. Cham: Springer; 2016. pp. 163–174.

2. Cutolo F, Freschi C, Mascioli S, et al. Robust and Accurate Algorithm for Wearable Stereoscopic Augmented Reality with Three Indistinguishable Markers. Electronics. 2016;5(3):59. doi: 10.3390/electronics5030059.

3. Kim KH. The Potential Application of Virtual, Augmented, and Mixed Reality in Neurourology. Int Neurourol J. 2016;20(3):169–170. doi: 10.5213/inj.1620edi005.

4. Kuhlemann I, Kleemann I, Jauer P, et al. Towards X-ray Free Endovascular Interventions – Using HoloLens for On-line Holographic Visualisation. Healthc Technol Lett. 2017;4(5):184–187. doi: 10.1049/htl.2017.0061.

5. Chen AD, Lin SJ. Discussion: Mixed Reality with HoloLens: Where Virtual Reality Meets Augmented Reality in the Operating Room. Plast Reconstr Surg. 2017;140(5):1071–1072. doi: 10.1097/PRS.0000000000003817.

6. Tepper OM, Rudy HL, Lefkowitz A, et al. Mixed Reality with HoloLens: Where Virtual Reality Meets Augmented Reality in the Operating Room. Plast Reconstr Surg. 2017;140(5):1066–1070. doi: 10.1097/PRS.0000000000003802.

7. Qian L, Barthel A, Johnson A, et al. Comparison of Optical See-through Head-mounted Displays for Surgical Interventions with Object-anchored 2D-display. Int J Comput Assist Radiol Surg. 2017;12(6):901–910. doi: 10.1007/s11548-017-1564-y.

8. Nabavi A, Gering DT, Kacher DF, et al. Surgical Navigation in the Open MRI. Acta Neurochir Suppl. 2003;85:121–125. doi: 10.1007/978-3-7091-6043-5_17.

9. Kral F, Mehrle A, Kikinis R, et al. Using CAVE Technology for Educating and Training Residents. Otolaryngol Head Neck Surg. 2004;131(2):P210. doi: 10.1016/j.otohns.2004.06.400.

10. Fedorov A, Beichel R, Kalpathy-Cramer J, et al. 3D slicer as an image computing platform for the quantitative imaging network. Magn Reson Imaging. 2012;30(9):1323–1341. doi: 10.1016/j.mri.2012.05.001.

11. Jolesz FA, Nabavi A, Kikinis R, et al. Integration of interventional MRI with computer-assisted surgery. J Magn Reson Imaging. 2001;13(1):69–77. doi: 10.1002/1522-2586(200101)13:1<69::AID-JMRI1011>3.0.CO;2-2.

12. Black PM, Moriarty T, Alexander E, et al. Development and implementation of intraoperative magnetic resonance imaging and its neurosurgical applications. Neurosurgery. 1997;41(4):831–845. doi: 10.1097/00006123-199710000-00013.

13. Guttman MA, Lederman RJ, Sorger JM, et al. Real-time volume rendered MRI for interventional guidance. J Cardiovasc Magn Reson. 2002;4(4):431–442. doi: 10.1081/JCMR-120016382.

14. George AK, Derbyshire JA, Saybasili H, et al. Visualization of active devices and automatic slice repositioning (‘SnapTo’) for MRI-guided interventions. Magn Reson Med. 2010;63(4):1070–1079. doi: 10.1002/mrm.22307.

15. McVeigh ER, Guttman MA, Kellman P, et al. Real-time, interactive MRI for cardiovascular interventions. Acad Radiol. 2005;12(9):1121–1127. doi: 10.1016/j.acra.2005.05.024.

16. Wacker FK, Elgort D, Hillenbrand CM, et al. The catheter-driven MRI scanner: a new approach to intravascular catheter tracking and imaging-parameter adjustment for interventional MRI. Am J Roentgenol. 2004;183(2):391–395. doi: 10.2214/ajr.183.2.1830391.

17. Christoforou E, Akbudak E, Ozcan A, et al. Performance of interventions with manipulator-driven real-time MR guidance: implementation and initial in vitro tests. Magn Reson Imaging. 2007;25(1):69–77. doi: 10.1016/j.mri.2006.08.016.

18. Velazco Garcia JD, Navkar NV, Gui D, et al. A platform integrating acquisition, reconstruction, visualization, and manipulator control modules for MRI-guided interventions. J Digit Imaging. 2019;32(3):420–432.

19. Guttman MA, McVeigh ER. Techniques for fast stereoscopic MRI. Magn Reson Med. 2001;46(2):317–323. doi: 10.1002/mrm.1194.

20. Gobbetti E, Pili P, Zorcolo A, et al. Interactive Virtual Angioscopy. In: Proceedings Visualization ’98 (Cat. No. 98CB36276); 1998. pp. 435–438.

21. Muntener M, Patriciu A, Petrisor D, et al. Magnetic resonance imaging compatible robotic system for fully automated brachytherapy seed placement. Urology. 2006;68(6):1313–1317. doi: 10.1016/j.urology.2006.08.1089.

22. Gomez-Rodriguez M, Peters J, Hill J, et al. Closing the sensorimotor loop: haptic feedback facilitates decoding of motor imagery. J Neural Eng. 2011;8(3).

23. Morales Mojica CM, Navkar NV, Tsekos NV, et al. Holographic interface for three-dimensional visualization of MRI on HoloLens: a prototype platform for MRI guided neurosurgeries. In: Proceedings - 2017 IEEE 17th International Conference on Bioinformatics and Bioengineering, BIBE 2017.

24. Microsoft. The leader in mixed reality technology | HoloLens. 2017. Available: https://www.microsoft.com/en-us/hololens. Accessed 16 Nov 2019.

25. Navkar NV, Deng Z, Shah DJ, et al. A framework for integrating real-time MRI with robot control: application to simulated transapical cardiac interventions. IEEE Trans Biomed Eng. 2013;60(4):1023–1033. doi: 10.1109/TBME.2012.2230398.

26. Velazco-Garcia JD, Leiss EL, Karkoub M, et al. Preliminary evaluation of robotic transrectal biopsy system on an interventional planning software. In: Proceedings - 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering, BIBE; 2019. pp. 357–362.

27. Morales Mojica CM, Velazco-Garcia JD, Navkar NV, et al. A prototype holographic augmented reality interface for image-guided prostate cancer interventions. Proc Eurographics Work Vis Comput Biol Med; 2018. pp. 17–21.

28. Velazco-Garcia JD, Shah DJ, Leiss EL, et al. A modular and scalable computational framework for interactive immersion into imaging data with a holographic augmented reality interface. Comput Methods Programs Biomed. 2020;198:105779. doi: 10.1016/j.cmpb.2020.105779.

29. Velazco-Garcia JD, Navkar NV, Balakrishnan S, et al. End-user evaluation of software-generated intervention planning environment for transrectal magnetic resonance-guided prostate biopsies. Int J Med Robot Comput Assist Surg. 2020.

30. Velazco-Garcia JD, Navkar NV, Balakrishnan S, et al. Evaluation of interventional planning software features for MR-guided transrectal prostate biopsies. In: Proceedings - 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering, BIBE; 2020.

31. Molina G, Velazco-Garcia JD, Shah D, et al. Automated segmentation and 4D reconstruction of the heart left ventricle from CINE MRI. In: Proceedings - 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering, BIBE; 2019.

32. Kensicher T, Leclerc J, Biediger D, et al. Towards MRI-guided and actuated tetherless milli-robots: preoperative planning and modeling of control. In: IEEE International Conference on Intelligent Robots and Systems; 2017. vol. 2017-Septe, pp. 6440–6447.

33. Pohle R, Toennies KD. Segmentation of medical images using adaptive region growing. In: Medical Imaging 2001: Image Processing; 2001. vol. 4322, pp. 1337–1346.

34. Gudbjartsson H, Patz S. The Rician distribution of noisy MRI data. Magn Reson Med. 1995;34(6):910–914. doi: 10.1002/mrm.1910340618.

35. JSON. 2019. Available: http://www.json.org/. Accessed 16 Nov 2019.

36. Unity Technologies. Unity real-time development platform | 3D, 2D VR and AR visualizations. 2019. Available: https://unity.com/. Accessed 24 Sep 2019.

37. Navkar NV, Deng Z, Shah DJ, et al. Visual and force-feedback guidance for robot-assisted interventions in the beating heart with real-time MRI. In: Proceedings - IEEE International Conference on Robotics and Automation; 2012. pp. 689–694.

38. Rincon-Nigro M, Navkar NV, Tsekos NV, et al. GPU-accelerated interactive visualization and planning of neurosurgical interventions. IEEE Comput Graph Appl. 2014;34(1):22–31. doi: 10.1109/MCG.2013.35.

39. Microsoft. HoloLens environment considerations | Microsoft Docs. Available: https://docs.microsoft.com/en-us/hololens/hololens-environment-considerations. Accessed 25 Nov 2020.
