Abstract

The incidence of skin cancer (SC) is rising every year, making it a critical health issue worldwide. Early and accurate diagnosis of SC is key to reducing these rates and improving survival. However, manual diagnosis is exhausting, complicated, expensive, prone to diagnostic error, and highly dependent on the dermatologist's experience and abilities. Thus, there is a vital need for automated dermatologist tools capable of accurately classifying SC subclasses. Recently, artificial intelligence (AI) techniques, including machine learning (ML) and deep learning (DL), have demonstrated the success of computer-assisted dermatologist tools in the automatic detection and diagnosis of SC. Previous AI-based dermatologist tools rely on either high-level features obtained with DL methods or low-level features obtained with handcrafted operations, and most of them were constructed for binary classification of SC. This study proposes an intelligent dermatologist tool that automatically and accurately diagnoses multiple skin lesion types. The tool incorporates manifold categories of radiomics features: high-level features extracted from ResNet-50, DenseNet-201, and DarkNet-53, and low-level features including the discrete wavelet transform (DWT) and local binary pattern (LBP). The results show that merging manifold features of different categories has a strong influence on classification accuracy, and they are superior to those of other related AI-based dermatologist tools. Therefore, the proposed tool can help dermatologists accurately diagnose the SC subcategory. It can also overcome the limitations of manual diagnosis, help lower mortality, and improve survival rates.

1. Introduction

The World Health Organization (WHO) has declared cancer a leading cause of death globally and estimates that the number of people diagnosed with cancer will double over the next two decades [1]. Among cancer types, skin cancer (SC) is one of the most common deadly tumors in both women and men; in the United States, almost 9% of them are diagnosed with SC [2]. Over the last few decades, countries such as Canada and Australia have experienced a large increase in the number of patients diagnosed with SC [3–5]. Moreover, in Brazil, according to the Brazilian National Cancer Institute (INCA), SC accounts for 33% of all cancer cases [6]. SC incidence and mortality are still growing. These rates can be reduced if cancer is detected and treated at an early stage. Early detection of SC is the keystone to better outcomes and is associated with a great improvement in survival rates. However, if the disease spreads beyond the skin, survival rates become poor [7].

SC occurs when skin cells are damaged, for instance, by overexposure to the sun's ultraviolet radiation. SC lesions can be classified into two main categories: melanocytic and nonmelanocytic. The former category includes melanoma and nevus, which are the malignant and benign forms, respectively, whereas nonmelanocytic lesions include both malignant types, such as basal cell carcinoma and squamous cell carcinoma (SCC), and benign types. Actinic keratosis (ak) is an early form of SCC. Furthermore, vascular lesions, benign keratosis, and dermatofibroma are recognized as nonmelanocytic benign lesions [8, 9]. In current medical routine, the traditional methods for diagnosing and detecting SC subtypes are manual screening and visual examination. These procedures are exhausting, complicated, prone to diagnostic error, and highly dependent on the dermatologist's experience and abilities [10]. Misdiagnosis arises from the complex patterns of skin lesions in images [8]. Moreover, to analyze and interpret skin lesions, the lesion pixels must be identified explicitly, which is hard for several reasons [11]. First, skin lesion images often contain hair, oils, and blood vessels that disturb the segmentation process. Second, the low contrast between the lesion and the surrounding regions makes accurate segmentation of the lesion challenging. Lastly, lesions commonly vary in shape, size, and color, which makes it harder to classify lesion subtypes precisely. These challenges create a strong need for automated intelligent systems for skin lesion analysis [12].

Recently, artificial intelligence (AI)-based assistant systems have offered solutions that can revolutionize medicine and health care. AI techniques have shown impressive results in numerous medical fields, including the diagnosis of breast cancer [13, 14], brain tumors [15, 16], gastrointestinal diseases [17], lung diseases [18], and heart complications [19–22]. They have also shown remarkable success in healthcare applications such as telerehabilitation [23], health monitoring [24], and assisting people with disabilities [25, 26]. Furthermore, recent surveys [10, 27, 28] have documented the success of AI-based dermatologist tools in the automatic detection and diagnosis of SC. These automated systems can support clinicians in making fast and accurate decisions about the SC subtype, thus avoiding the challenges of manual diagnosis. They can also offer a user-friendly environment for less experienced dermatologists and provide a second opinion that leads to a more confident decision [29].

Radiomics is an evolving field in quantitative medical image analysis [30], also referred to as the extraction of quantitative image features. It relates a large number of significant features extracted from medical images to biological or clinical endpoints [31]. Integrating radiomics with AI techniques has facilitated the accurate diagnosis of cancer types [32], because radiomics can quantify the texture and other fundamental characteristics of a tumor from medical images, which helps AI methods achieve accurate classification or diagnostic results [33]. This paper proposes an intelligent dermatologist tool for the automatic classification of several SC subtypes that integrates AI and radiomics feature extraction techniques [34]. The motivation behind this work and the novelty of the proposed tool are discussed in the next section, and the details of the tool are described in the methods section.

The paper is organized as follows. Section 2 provides background on AI-enabled tools for SC diagnosis. Section 3 describes the dataset, the deep learning methods, and the proposed intelligent tool. Section 4 presents the evaluation metrics. Section 5 presents and discusses the results of the proposed tool, and Section 6 concludes the paper.

2. Background on Artificial Intelligence in Skin Cancer Diagnosis

Over the past years, several automated tools have been introduced for SC detection and diagnosis. These tools can be divided into two classes: conventional and deep learning (DL)-based methods. Conventional methods rely on traditional machine learning pipelines comprising image preprocessing, image segmentation, and feature extraction, which mines low-level radiomics features using handcrafted approaches. Monica et al. [35] proposed an automated system that used low-level radiomics features, such as the gray-level co-occurrence matrix (GLCM) and some statistical features, to train a support vector machine (SVM) classifier that classified 8 subclasses of SC with an accuracy of 96.25%. Likewise, Arora et al. [36] fused several low-level features using a bag of features (BoF) with SURF features to classify skin images as cancerous or noncancerous; using an SVM classifier, they obtained an accuracy of 85.7%. Kumar et al. [37] implemented a system for differentiating cancerous and noncancerous skin lesions using low-level features. First, the authors preprocessed images using a median filter. Then, they segmented the lesions using fuzzy C-means clustering. Next, they extracted textural features, such as GLCM and local binary pattern (LBP) features, as well as color features. Finally, an artificial neural network trained with the differential evolution algorithm classified the skin lesions with an accuracy of 97.7%.
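The GLCM features mentioned above can be illustrated with a minimal sketch. It assumes an image already quantized to integer gray levels in [0, levels) and a single non-negative pixel offset; the function names `glcm` and `glcm_features` are hypothetical and are not taken from [35] or [37].

```python
import numpy as np


def glcm(img, levels, dy=0, dx=1):
    """Normalised, symmetric gray-level co-occurrence matrix (GLCM).

    img    : 2D array of integer gray levels in [0, levels)
    dy, dx : non-negative offset of the neighbour (default: right-hand neighbour)
    """
    img = np.asarray(img, dtype=np.int64)
    h, w = img.shape
    ref = img[:h - dy, :w - dx]   # reference pixels
    nbr = img[dy:, dx:]           # their neighbours at offset (dy, dx)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    counts = counts + counts.T    # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """A few classic Haralick-style statistics of a normalised GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum((i - j) ** 2 * p)),
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
    }


p = glcm([[0, 1], [1, 0]], levels=2)
print(glcm_features(p))
```

In practice, GLCMs are often computed for several offsets (for example, 0°, 45°, 90°, and 135°) and the resulting statistics concatenated into one feature vector.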

On the other hand, DL-based techniques are the most recent branch of machine learning and are commonly used in image processing, owing to their great capacity to diagnose diseases from images even without preprocessing, segmentation, and feature extraction. They can also serve as feature extractors that mine high-level radiomics features from medical images [38–40] for use in classification. Rodrigues et al. [41] designed an automated system based on DL and the Internet of Things (IoT) to help doctors distinguish between the nevus and melanoma SC subclasses. The authors used VGG, Inception, ResNet, Inception-ResNet, Xception, MobileNet, DenseNet, and NASNet convolutional neural networks (CNNs) as feature extractors, and the high-level features of each CNN were used separately to train numerous classifiers. The highest performance (accuracy of 96.805%) was attained using the deep radiomics features of DenseNet-201 with a k-nearest neighbor (KNN) classifier. Similarly, Khamparia et al. [42] proposed a framework that can remotely classify skin tumors as malignant or benign using DL techniques. The authors extracted high-level deep features from four CNNs (ResNet-50, VGG-19, Inception, and SqueezeNet) using transfer learning (TL). These features were then fed into the fully connected layer of a CNN for classification using dense and max-pooling operations, attaining a maximum accuracy of 99.6%. Khan et al. [43] presented a novel framework for diagnosing SC subclasses consisting of two main stages: segmentation and classification. In the segmentation stage, a mask region-based CNN (R-CNN) built on ResNet-50 and a feature pyramid network was employed. In the classification stage, a 24-layer CNN with a Softmax output was constructed, achieving an accuracy of 86.5%. Later, Khan et al. [44] preprocessed images using a decorrelation deformation algorithm and then employed a mask R-CNN to segment the skin lesions. Next, deep features from the pooling and fully connected layers of DenseNet were extracted and combined. Finally, optimal features were selected using an entropy-controlled least-squares SVM, attaining an accuracy of 88.5%.

Alternatively, some authors combined several high-level deep features. For example, in [45], the authors mined high-level features from pretrained AlexNet and VGG-16, concatenated them, and reduced them using principal component analysis (PCA). The reduced features were then used to train several classifiers to classify skin tumors as malignant or benign; the bagged tree classifier obtained the highest accuracy of 98.71%. Similarly, Toğaçar et al. [46] introduced an intelligent system to differentiate malignant and benign skin tumors. Images were first reconstructed using an autoencoder and then used to train a MobileNet, while the original images were used to train another MobileNet. The high-level features extracted from the two MobileNets were combined, and a spiking neural network (SNN) performed the classification, reaching an accuracy of 95.27%. Conversely, the authors of [47] extracted low-level radiomics features based on textural analysis, such as GLCM and LBP features, reduced them using PCA, and used the reduced features to train several individual classifiers to distinguish malignant from benign skin lesions. In parallel, they extracted high-level features from VGG-19 and a customized CNN to classify images as malignant or benign using individual classifiers. Finally, the predictions obtained with both levels of features were merged using a voting ensemble classifier, reaching an accuracy of 97.5%.
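The voting step described for [47] can be sketched as hard (majority) voting over the labels predicted by several classifiers. This is a minimal illustration assuming every classifier outputs integer class labels; [47] may use a different voting rule, and `majority_vote` is a hypothetical helper.

```python
import numpy as np


def majority_vote(predictions, n_classes):
    """Hard-voting ensemble.

    predictions : (n_classifiers, n_samples) array of integer class labels
    returns     : (n_samples,) most-voted label per sample; ties go to the
                  smallest label because argmax returns the first maximum
    """
    predictions = np.asarray(predictions)
    # one_hot[m, s, c] is True when classifier m predicted class c for sample s
    one_hot = predictions[..., None] == np.arange(n_classes)
    votes = one_hot.sum(axis=0)          # (n_samples, n_classes) vote counts
    return votes.argmax(axis=1)


# Three classifiers voting on three samples
print(majority_vote([[0, 1, 2], [0, 2, 2], [1, 1, 0]], n_classes=3))
```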

The aforementioned techniques have several drawbacks. First, most of them were constructed for binary classification problems, such as differentiating between benign and malignant lesions, cancerous and noncancerous lesions, or two skin lesion subclasses; only a few classify several of the SC subtypes discussed earlier. Second, most of them are based on either low-level or high-level features, except for [47], which fused both levels to perform binary classification. These shortcomings motivated us to propose a new intelligent dermatologist tool to classify seven skin cancer categories. The proposed tool examines the influence of combining two low-level radiomics feature sets. It also studies the effect of merging several high-level deep feature sets. Finally, it investigates the impact of fusing manifold low-level and high-level features.

3. Methods and Materials

3.1. Feature Extraction Methods

3.1.1. High-Level Radiomics Features Based on Deep Learning Techniques

ResNet is one of the most powerful CNNs and is commonly used in the medical field. It won first place in the ILSVRC and COCO 2015 competitions [48]. It converges effectively within adequate computation time even as the number of layers grows. This advantage stems from its architecture, introduced by He et al. [48], which is built from deep residual blocks. Each block adds a shortcut connection that skips over some layers of the conventional deep CNN, so that the block learns a residual that is added to its input; this greatly accelerates convergence during training [18]. The pretrained ResNet employed in this paper has 50 layers.
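The residual idea can be shown with a toy block. This sketch is an assumption-level simplification: matrix multiplications stand in for ResNet's convolutions and batch normalization is omitted, and `residual_block` is a hypothetical name. It shows that the block outputs the residual branch plus the unchanged input.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x), where the residual branch F(x) = W2 @ ReLU(W1 @ x).

    Fully connected stand-in for ResNet's convolutional block: the identity
    shortcut carries x around the two weight layers unchanged.
    """
    residual = w2 @ relu(w1 @ x)
    return relu(residual + x)


x = np.array([1.0, 2.0])
zeros = np.zeros((2, 2))
print(residual_block(x, zeros, zeros))   # identity when the branch outputs zero
```

With zero weights the block reduces to the identity (for non-negative inputs), which illustrates why adding more blocks need not make optimization harder.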

DenseNet: several studies have reported that CNNs can be substantially deeper, more accurate, and more efficient to train if they contain shorter connections between layers close to the input and layers close to the output. Building on such short connections, Huang et al. [49] introduced the Dense Convolutional Network (DenseNet). DenseNet connects each layer to every other layer in a feed-forward fashion: each layer receives the feature maps of all preceding layers as input, and its own feature maps are passed as input to all subsequent layers. The DenseNet used in this study has 201 layers.
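Dense connectivity can be illustrated on a 1D feature vector. In this hypothetical toy, randomly initialized fully connected ReLU layers replace DenseNet's convolutions; the point it shows is that each layer sees the concatenation of everything before it, so the output grows by a fixed "growth rate" per layer.

```python
import numpy as np


def dense_block(x, n_layers, growth_rate, seed=0):
    """Toy dense block on a 1D feature vector.

    Layer l receives the concatenation of x and the outputs of layers 0..l-1
    and emits `growth_rate` new features, so the output has
    len(x) + n_layers * growth_rate entries.
    """
    rng = np.random.default_rng(seed)
    features = [np.asarray(x, dtype=float)]
    for _ in range(n_layers):
        inputs = np.concatenate(features)                  # all preceding feature maps
        weights = rng.normal(size=(growth_rate, inputs.size))
        features.append(np.maximum(weights @ inputs, 0.0))  # ReLU layer output
    return np.concatenate(features)


out = dense_block(np.ones(3), n_layers=4, growth_rate=2)
print(out.shape)   # 3 + 4 * 2 features
```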

DarkNet was introduced by Redmon and Farhadi [50] in 2017 as the backbone of YOLOv2. It consists of a cascade of 3 × 3 and 1 × 1 convolutional layers, with the number of filters doubled after each pooling step. DarkNet uses 1 × 1 convolutions to compress the feature representation between the 3 × 3 convolutional layers and a global average pooling layer to produce the final predictions. The DarkNet used in this study has 53 layers.

3.1.2. Low-Level Features Based on Handcrafted Techniques

The discrete wavelet transform (DWT) analyzes input data using orthogonal basis functions termed “wavelets” [51]. For 1D input data, such as the deep radiomics features mined in earlier phases, the DWT is performed by convolving the input with a low-pass and a high-pass filter [52]. The filter outputs are then downsampled by a factor of 2 [53], producing two sets of coefficients: the approximation coefficients CA1 and the detail coefficients CD1 [54]. For 2D images, the filtering and downsampling are applied along both rows and columns, yielding one approximation subband and three (horizontal, vertical, and diagonal) detail subbands per decomposition level.
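The filter-then-downsample procedure can be sketched for a single decomposition level. The sketch swaps in the Haar wavelet because its two-tap filters are short enough to write by hand; the paper itself uses db4 (Section 3.3.2), and `dwt1` is a hypothetical name.

```python
import numpy as np

# Orthonormal Haar analysis filters (db4 would use 8-tap filters instead).
LOW_PASS = np.array([1.0, 1.0]) / np.sqrt(2.0)
HIGH_PASS = np.array([-1.0, 1.0]) / np.sqrt(2.0)


def dwt1(x):
    """One DWT level: convolve with low/high-pass filters, keep every 2nd sample."""
    x = np.asarray(x, dtype=float)
    if x.size % 2:
        raise ValueError("this sketch expects an even-length input")
    approx = np.convolve(x, LOW_PASS)[1::2]    # CA1: (x[2k] + x[2k+1]) / sqrt(2)
    detail = np.convolve(x, HIGH_PASS)[1::2]   # CD1: (x[2k] - x[2k+1]) / sqrt(2)
    return approx, detail


ca1, cd1 = dwt1([1, 2, 3, 4])
print(ca1, cd1)
```

Applying `dwt1` again to the approximation coefficients gives the next decomposition level. Because the filters are orthonormal, the total energy of the coefficients equals that of the input.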

The local binary pattern (LBP), proposed by Ojala et al. [55], is a feature extractor that captures local texture information from pixel neighborhoods. It transforms an image into a map of local texture codes: each neighbor of a center pixel is compared with the center value, which acts as the threshold, and is assigned 1 if it is greater than or equal to the center and 0 otherwise. The resulting bits, read in a fixed circular order, form the binary label of the center pixel.

3.2. Dataset

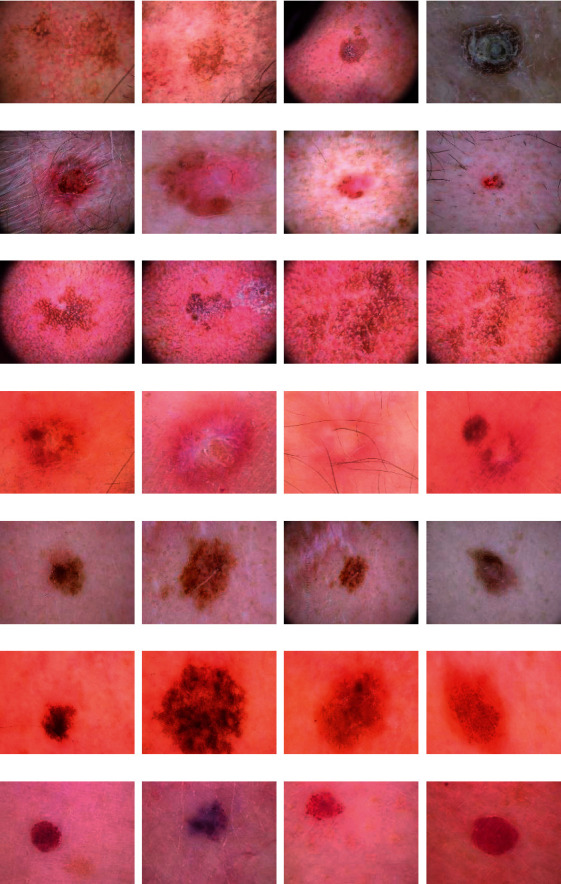

The dataset used in this work is HAM10000 [56]. It contains images of seven SC subclasses: melanoma (mel), nevus (nv), basal cell carcinoma (bcc), actinic keratosis (ak), vascular lesions (vasc), benign keratosis (bkl), and dermatofibroma (df). The HAM10000 dataset used here consists of 10,008 dermoscopic images: 514 bcc, 327 ak, 6705 nv, 1095 bkl, 1110 mel, 115 df, and 142 vasc. Samples of these images are shown in Figure 1.

Figure 1.

Samples from the HAM10000 dataset: (a) ak, (b) bcc, (c) bkl, (d) df, (e) mel, (f) nv, and (g) vasc SC classes.

3.3. Proposed Intelligent Dermatologist Tool

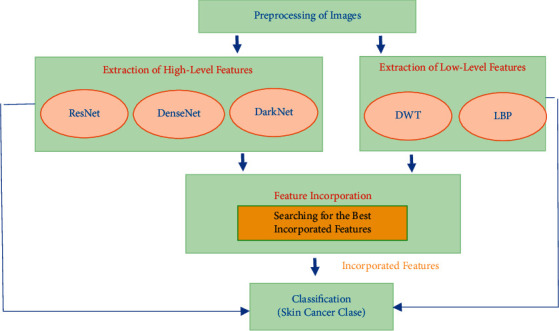

The proposed intelligent tool consists of four steps: preprocessing of the dermoscopic images, feature mining, feature incorporation and selection, and classification. First, the images are resized and augmented. Then, in the feature mining step, low-level features are extracted using two traditional (handcrafted) feature extraction methods, and high-level features are mined using three DL techniques. Afterward, features of different levels are integrated, examined, and reduced in the feature incorporation and selection step. Finally, three support vector machine (SVM) classifiers are used to classify multiple SC subclasses. The block diagram of the proposed intelligent dermatologist tool is shown in Figure 2.

Figure 2.

The block diagram of the proposed intelligent dermatologist tool.

3.3.1. Preprocessing of Dermoscopic Images

The dermoscopic images in the HAM10000 dataset have different sizes; therefore, they are resized to the input dimensions of each CNN used in this work (224 × 224 × 3 for ResNet-50 and DenseNet-201, and 256 × 256 × 3 for DarkNet-53). Furthermore, as noted in Section 3.2, the number of images per class is highly imbalanced; therefore, several augmentation techniques were used to balance the dataset, including shearing, rotation, and top-hat and bottom-hat filtering. After augmentation, the dataset contains 1028 bcc, 981 ak, 1050 nv, 1095 bkl, 1110 mel, 920 df, and 994 vasc images; the majority nv class was thus reduced from 6705 to 1050 images, while bkl and mel kept their original counts.
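The filtering-based augmentations can be sketched with plain NumPy. This is an illustrative assumption, not the authors' pipeline: it uses a 3 × 3 flat square structuring element, 90° rotations, and a crude wrap-around row shear, and it operates on a single grayscale channel (a color image would be processed channel by channel). All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window_filter(img, size, reduce):
    """Min or max over a size x size flat square neighbourhood (edge-padded)."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    return reduce(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def top_hat(img, size=3):
    """White top-hat: image minus its opening (erosion followed by dilation)."""
    opening = _window_filter(_window_filter(img, size, np.min), size, np.max)
    return img - opening


def bottom_hat(img, size=3):
    """Bottom-hat (black top-hat): closing (dilation then erosion) minus image."""
    closing = _window_filter(_window_filter(img, size, np.max), size, np.min)
    return closing - img


def shear_rows(img, factor=0.1):
    """Crude horizontal shear: shift row r by round(factor * r) pixels, wrapping."""
    out = np.empty_like(img)
    for r in range(img.shape[0]):
        out[r] = np.roll(img[r], int(round(factor * r)))
    return out


def augment(img):
    """Hypothetical augmentation set for one grayscale image."""
    img = np.asarray(img, dtype=float)
    return [np.rot90(img, 1), np.rot90(img, 2), shear_rows(img),
            top_hat(img), bottom_hat(img)]
```

The top-hat keeps small bright details that are narrower than the structuring element, and the bottom-hat keeps small dark details, such as hairs.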

3.3.2. Feature Mining

In this step, two categories of radiomics features are mined: low-level and high-level features. For the low-level features, two handcrafted feature extraction methods, LBP [57] and DWT [58], are used. Both are based on texture analysis, which often yields good classification performance, particularly when the features are merged [59]. The DWT uses three decomposition levels with the Daubechies 4 (db4) mother wavelet, and the level-3 approximation coefficients (CA3) and the three sets of level-3 detail coefficients (CD3) are used as low-level features.
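Two quick checks help connect this setup to Table 1. Assuming a non-periodic boundary extension (as in the default symmetric modes of PyWavelets and MATLAB), one level of the 8-tap db4 transform turns n samples into floor((n + 7)/2) coefficients per axis. Three levels then map 256 → 131 → 69 → 38, and 38² = 1444 matches the DWT subband size in Table 1, whereas 224-pixel inputs would give 34² = 1156; this suggests, although the text does not state it, that the DWT was applied to 256 × 256 images. The sketch also shows a three-level 2D decomposition, again with the Haar wavelet swapped in for db4 for brevity; which detail subband is labeled "horizontal" or "vertical" differs between libraries. All names are hypothetical.

```python
import numpy as np


def dwt_coeff_len(n, filter_len=8):
    """Coefficients per axis after one DWT level with a non-periodic boundary
    extension; filter_len=8 corresponds to the db4 wavelet."""
    return (n + filter_len - 1) // 2


def haar_dwt2(img):
    """One 2D Haar level: approximation plus three detail subbands."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    approx = (a + b + c + d) / 2.0
    detail_1 = (a + b - c - d) / 2.0   # top rows minus bottom rows
    detail_2 = (a - b + c - d) / 2.0   # left columns minus right columns
    detail_3 = (a - b - c + d) / 2.0   # diagonal differences
    return approx, (detail_1, detail_2, detail_3)


def haar_wavedec2(img, level=3):
    """Repeat the 2D step on the approximation; return the level-`level` subbands."""
    approx = np.asarray(img, dtype=float)
    details = None
    for _ in range(level):
        if approx.shape[0] % 2 or approx.shape[1] % 2:
            raise ValueError("image sides must be divisible by 2**level")
        approx, details = haar_dwt2(approx)
    return approx, details


n = 256
for _ in range(3):
    n = dwt_coeff_len(n)
print(n, n * n)   # per-axis size and subband size after three db4 levels
```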

The high-level features, on the other hand, are extracted with three DL approaches: the ResNet-50, DenseNet-201, and DarkNet-53 CNNs. To mine these features, transfer learning (TL) [60] is first applied to the three CNNs pretrained on the ImageNet dataset so that they can classify the seven skin lesion categories. Next, a few training parameters are adjusted for each CNN, and the three CNNs are trained with the resized and augmented HAM10000 images. Finally, high-level features are extracted from the last average pooling layer of each CNN. The dimensions of the high-level and low-level features are shown in Table 1.
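Extracting features "from the last average pooling layer" amounts to averaging the final convolutional activations over their spatial positions. The sketch below assumes the standard final feature-map shapes of the three backbones (a total stride of 32, giving 7 × 7 maps for 224-pixel inputs and 8 × 8 maps for 256-pixel inputs); averaging each channel then yields exactly the 2048, 1920, and 1024 features listed in Table 1. The names are hypothetical.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average an (H, W, C) activation tensor over its spatial axes -> (C,) vector."""
    return np.asarray(feature_maps, dtype=float).mean(axis=(0, 1))


# Final feature-map shapes assumed for the standard architectures
# (stride-32 backbones: 224 / 32 = 7 and 256 / 32 = 8).
ASSUMED_LAST_MAPS = {
    "ResNet-50": (7, 7, 2048),
    "DenseNet-201": (7, 7, 1920),
    "DarkNet-53": (8, 8, 1024),
}

for name, shape in ASSUMED_LAST_MAPS.items():
    print(name, global_average_pool(np.ones(shape)).shape)
```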

Table 1.

The size of low-level and high-level features.

| Feature type | Size |
|---|---|
| Low-level features | |
| DWT-A | 1444 |
| DWT-V | 1444 |
| DWT-H | 1444 |
| DWT-D | 1444 |
| LBP | 59 |
| High-level features | |
| ResNet-50 | 2048 |
| DenseNet-201 | 1920 |
| DarkNet-53 | 1024 |

To reproduce the high-level features, some training parameters of the three CNNs must first be set: a learning rate of 0.003, 30 epochs, a validation frequency of 20, and a mini-batch size of 4. Next, TL is employed: the CNNs pretrained on the ImageNet dataset are adapted by changing the size of the output layer to seven classes. The three CNNs are then trained on the HAM10000 dataset using stochastic gradient descent with momentum. Finally, the high-level features are extracted from the last average pooling layer of each CNN. Some of these features comply with the 174 standard features of the Image Biomarker Standardization Initiative (IBSI) [61, 62], while others do not; Table S1 in the supplementary material discusses the compliance or noncompliance of these features.
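The stochastic gradient descent with momentum (SGDM) update used for training can be illustrated on a toy quadratic loss. The learning rate of 0.003 is taken from the text; the momentum value of 0.9 is an assumption (a common default), full gradients stand in for mini-batch gradients, and `sgdm` is a hypothetical helper rather than the paper's training code.

```python
import numpy as np


def sgdm(grad, w0, lr=0.003, momentum=0.9, steps=2000):
    """Minimise a loss with gradient descent with momentum (heavy-ball form).

    grad     : function returning the (mini-batch) gradient at w
    lr       : learning rate (0.003, as in the text)
    momentum : momentum coefficient (0.9 is an assumed default)
    """
    w = np.asarray(w0, dtype=float).copy()
    velocity = np.zeros_like(w)
    for _ in range(steps):
        # v <- momentum * v - lr * g;  w <- w + v
        velocity = momentum * velocity - lr * grad(w)
        w = w + velocity
    return w


# Toy loss L(w) = 0.5 * ||w - target||^2, whose gradient is w - target.
target = np.array([1.0, -2.0])
w_final = sgdm(lambda w: w - target, np.zeros(2))
print(w_final)
```

The velocity term accumulates past gradients, so with a small learning rate such as 0.003 the effective step along a consistent gradient direction is roughly ten times larger when the momentum is 0.9.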

3.3.3. Feature Incorporation and Selection

The feature incorporation step is accomplished in three phases. In the first phase, the low-level features extracted in the feature mining step are integrated by concatenation. In the second phase, the high-level features are fused by concatenation. In the third phase, each combination of low- and high-level feature sets is concatenated to determine the influence of incorporating manifold feature categories and to select the integrated combination with the best classification performance. After these phases, the integrated feature set with the highest classification performance undergoes feature selection to reduce the large dimension of the fused features, using the minimum redundancy maximum relevance (mRMR) procedure [63].
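The serial (concatenation) fusion and the mRMR selection can be sketched as follows. Concatenating the three deep feature sets with DWT-A and LBP gives 2048 + 1920 + 1024 + 1444 + 59 = 6495 columns, the fused dimension reported in Section 5.3. For mRMR, the sketch swaps in a common correlation-based variant (often called FCQ): relevance is the one-way ANOVA F-statistic of each feature against the class labels, and redundancy is the mean absolute Pearson correlation with the already selected features. The original mRMR formulation [63] uses mutual information instead, and all names are hypothetical.

```python
import numpy as np


def fuse(*feature_sets):
    """Serial fusion: concatenate per-image feature matrices column-wise."""
    return np.hstack(feature_sets)


def anova_f(X, y):
    """One-way ANOVA F-statistic of every column of X against labels y."""
    classes = np.unique(y)
    n, k = len(y), len(classes)
    grand_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in classes:
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        between += len(Xc) * (mc - grand_mean) ** 2
        within += ((Xc - mc) ** 2).sum(axis=0)
    return (between / (k - 1)) / (within / (n - k) + 1e-12)


def mrmr_fcq(X, y, n_select):
    """Greedy mRMR (FCQ variant): maximise relevance / mean |corr| redundancy."""
    relevance = anova_f(X, y)
    corr = np.abs(np.corrcoef(X, rowvar=False))
    selected = [int(np.argmax(relevance))]
    remaining = set(range(X.shape[1])) - set(selected)
    while len(selected) < n_select and remaining:
        cand = np.array(sorted(remaining))
        redundancy = corr[np.ix_(cand, selected)].mean(axis=1)
        score = relevance[cand] / (redundancy + 1e-12)
        best = int(cand[np.argmax(score)])
        selected.append(best)
        remaining.remove(best)
    return selected


widths = [2048, 1920, 1024, 1444, 59]   # ResNet-50, DenseNet-201, DarkNet-53, DWT-A, LBP
print(fuse(*(np.zeros((2, w)) for w in widths)).shape)
```

For 6495 features, the full correlation matrix has about 42 million entries; a larger-scale implementation would compute only the correlation columns it needs.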

3.3.4. Classification

In the classification step, the well-known SVM classifier is used to classify the seven SC subclasses, with linear, quadratic, and cubic kernel functions. Five-fold cross-validation (CV) is used to validate the classification results of the proposed dermatologist tool. The dataset is first split into 5 equal folds; 4 folds are used to train the SVM classifiers, and the remaining fold is used for testing. This process is repeated 5 times, each time holding out a different fold for testing. The performance metrics described in the next section are calculated for each test fold and averaged over the 5 folds.
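Five-fold CV can be sketched as follows. The quadratic and cubic SVMs correspond to polynomial kernels of degree 2 and 3; the form (1 + a·b)^d used here is an assumption about the kernel's offset. Because training an SVM from scratch would dominate the example, a nearest-centroid classifier stands in as a hypothetical placeholder for `fit_predict`; any classifier with the same signature can be plugged in.

```python
import numpy as np


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)              # k (nearly) equal folds
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


def cross_val_accuracy(X, y, fit_predict, k=5, seed=0):
    """Average test-fold accuracy over k folds."""
    scores = []
    for train, test in kfold_indices(len(y), k, seed):
        predicted = fit_predict(X[train], y[train], X[test])
        scores.append(np.mean(predicted == y[test]))
    return float(np.mean(scores))


def polynomial_kernel(A, B, degree):
    """(1 + a . b) ** degree for all row pairs; degree 2 -> quadratic, 3 -> cubic."""
    return (1.0 + A @ B.T) ** degree


def nearest_centroid(X_train, y_train, X_test):
    """Hypothetical stand-in classifier (not an SVM): predict the closest class mean."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]


X = np.vstack([np.zeros((10, 2)), np.full((10, 2), 10.0)])
y = np.array([0] * 10 + [1] * 10)
print(cross_val_accuracy(X, y, nearest_centroid))
```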

4. Metrics of Performance

Several metrics are used to measure the performance of the proposed intelligent dermatologist tool: classification accuracy (CA), F1-score, sensitivity, precision, and specificity [16]. They are computed using formulas (1)–(5):

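$$\mathrm{CA} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{F1-score} = \frac{2\,TP}{2\,TP + FP + FN} \tag{2}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{4}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{5}$$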

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.
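For a multiclass problem, these formulas are applied per class in a one-vs-rest manner, which is how per-class values such as those in Table 3 are usually obtained from a confusion matrix. The sketch assumes rows hold the true classes and columns the predicted classes. It reports overall multiclass accuracy as the trace divided by the total count, which differs from applying formula (1) to a single class. `per_class_metrics` is a hypothetical name.

```python
import numpy as np


def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp      # true class c, predicted as another class
    fp = cm.sum(axis=0) - tp      # predicted as c, true class is another class
    tn = cm.sum() - tp - fn - fp  # all remaining samples
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "accuracy": tp.sum() / cm.sum(),  # overall multiclass accuracy
    }


cm = [[3, 1, 0],
      [0, 2, 0],
      [1, 0, 3]]
print(per_class_metrics(cm))
```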

5. Results and Discussion

This section presents and discusses the results of the proposed dermatologist tool. It first discusses the classification results obtained with low-level features, then those obtained with high-level features, and next those obtained by integrating manifold radiomics feature categories. Finally, it compares the results of the proposed intelligent dermatologist tool with recent related works constructed with the same dataset to verify its competence.

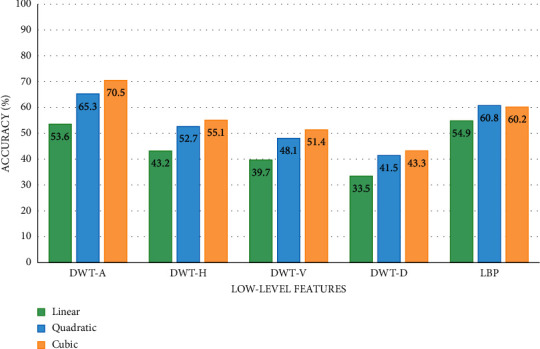

5.1. Results of Low-Level Features

The classification results of the SVM classifiers trained with the low-level DWT and LBP features are shown in Figure 3, where DWT-A, DWT-H, DWT-V, and DWT-D denote the approximation, horizontal, vertical, and diagonal DWT coefficients, respectively. As Figure 3 shows, the SVM classifiers trained with low-level features achieve classification accuracies between 33.5% and 70.5%, with the highest accuracy obtained by the cubic SVM classifier trained with DWT-A features. These results indicate that low-level features alone are not sufficient for accurate SC classification.

Figure 3.

The SVM classifiers' accuracy (%) obtained using the low-level features.

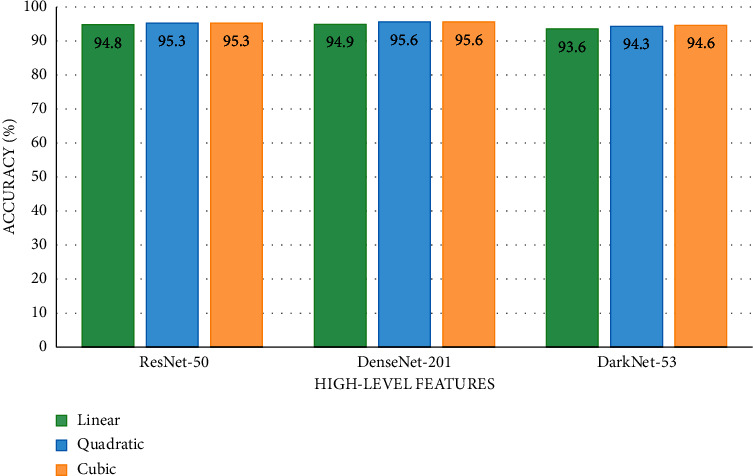

5.2. Results of High-Level Features

The accuracies of the SVM classifiers trained with the high-level features of the DenseNet-201, ResNet-50, and DarkNet-53 CNNs are shown in Figure 4. The highest accuracies of 95.6%, 95.6%, and 94.9% are obtained by the cubic, quadratic, and linear SVM classifiers, respectively, trained with DenseNet-201 features. Slightly lower accuracies (95.3%, 95.3%, and 94.8%) are achieved by the same classifiers trained with ResNet-50 features. The DarkNet-53 features yield accuracies of 94.6%, 64.3%, and 93.65% with the cubic, quadratic, and linear SVM classifiers, respectively. Comparing Figure 4 with Figure 3 shows that high-level features yield higher classification accuracy than low-level features.

Figure 4.

The SVM classifiers' accuracy (%) obtained using the high-level features.

5.3. Results of Incorporating Manifold Feature Categories and Feature Selection

The classification accuracies of the SVM classifiers trained with the incorporated manifold feature levels are shown in Table 2. Table 2 first shows the accuracy attained by each combination of a single high-level feature set with low-level features. Fusing each high-level feature set with one or two low-level feature sets improves classification accuracy, with peak accuracies of 97.5%, 97.9%, and 97.9% (linear, quadratic, and cubic SVM, respectively) obtained with DenseNet-201 + DWT-A + LBP features. These accuracies are higher than those attained using either individual high-level features or individual low-level features, shown in Figures 3 and 4.

Table 2.

Classification accuracy of incorporating manifold feature levels.

| Incorporated manifold feature sets | Linear SVM (%) | Quadratic SVM (%) | Cubic SVM (%) |
|---|---|---|---|
| Single high-level features incorporated with low-level features | | | |
| ResNet-50 + DWT-A | 95.7 | 96.1 | 96.2 |
| ResNet-50 + LBP | 96.1 | 96.4 | 96.4 |
| ResNet-50 + LBP + DWT-A | 96.5 | 96.9 | 96.8 |
| DarkNet-53 + DWT-A | 94.6 | 94.9 | 95.1 |
| DarkNet-53 + LBP | 95.8 | 96 | 96.1 |
| DarkNet-53 + DWT-A + LBP | 95.7 | 95.9 | 96 |
| DenseNet-201 + DWT-A | 96.5 | 97.1 | 97.2 |
| DenseNet-201 + LBP | 97.1 | 97.5 | 97.4 |
| DenseNet-201 + DWT-A + LBP | 97.5 | 97.9 | 97.9 |
| Two high-level feature sets incorporated | | | |
| ResNet-50 + DarkNet-53 | 95.9 | 96.3 | 96.6 |
| ResNet-50 + DenseNet-201 | 97.7 | 98 | 98.1 |
| DenseNet-201 + DarkNet-53 | 97.9 | 98.1 | 98 |
| Two high-level feature sets incorporated with low-level feature sets | | | |
| ResNet-50 + DenseNet-201 + DWT-A | 98.2 | 98.5 | 98.5 |
| ResNet-50 + DenseNet-201 + LBP | 97.8 | 98.1 | 98.1 |
| ResNet-50 + DenseNet-201 + DWT-A + LBP | 98.2 | 98.6 | 98.5 |
| ResNet-50 + DarkNet-53 + DWT-A | 96.4 | 96.9 | 96.9 |
| ResNet-50 + DarkNet-53 + LBP | 96.7 | 96.9 | 97 |
| DenseNet-201 + DarkNet-53 + DWT-A | 98.2 | 98.4 | 98.5 |
| DenseNet-201 + DarkNet-53 + LBP | 97.9 | 98.4 | 98.4 |
| DenseNet-201 + DarkNet-53 + DWT-A + LBP | 98.3 | 98.6 | 98.6 |
| Three high-level feature sets incorporated | | | |
| ResNet-50 + DenseNet-201 + DarkNet-53 | 98.2 | 98.5 | 98.5 |
| Three high-level feature sets incorporated with low-level feature sets | | | |
| ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A | 98.6 | 98.8 | 98.8 |
| ResNet-50 + DenseNet-201 + DarkNet-53 + LBP | 98.5 | 98.8 | 98.7 |
| ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP | 98.7 | 99 | 99 |

Next, Table 2 presents the results of fusing every pair of high-level feature sets, with and without low-level features. Combining two high-level feature sets improves accuracy, reaching 97.9%, 98.1%, and 98% (linear, quadratic, and cubic SVM, respectively) with DenseNet-201 + DarkNet-53 features. Adding the two low-level feature sets to two high-level feature sets raises the accuracies further, to maxima in this scenario of 98.3%, 98.6%, and 98.6% with DenseNet-201 + DarkNet-53 + DWT-A + LBP features, which are higher than those obtained when a single high-level feature set is combined with low-level features.

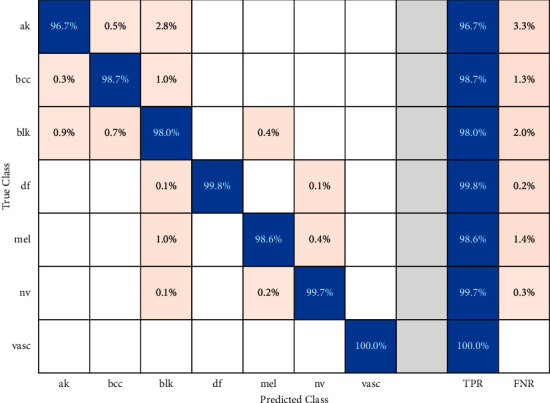

Finally, Table 2 presents the classification accuracies obtained by fusing the three high-level feature sets, both alone and together with low-level features. These results show that incorporating manifold features of different categories has a strong impact on classification accuracy: merging the three high-level feature sets (ResNet-50 + DenseNet-201 + DarkNet-53) with the low-level DWT-A + LBP features boosts the accuracy to 98.7%, 99%, and 99% (linear, quadratic, and cubic SVM, respectively). This improvement indicates the capacity of the proposed intelligent dermatologist tool to classify skin cancer subclasses. Figure 5 shows the confusion matrix of the cubic SVM classifier trained with the manifold features of ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP.

Figure 5.

Confusion matrix for the cubic SVM classifier trained with ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP features.

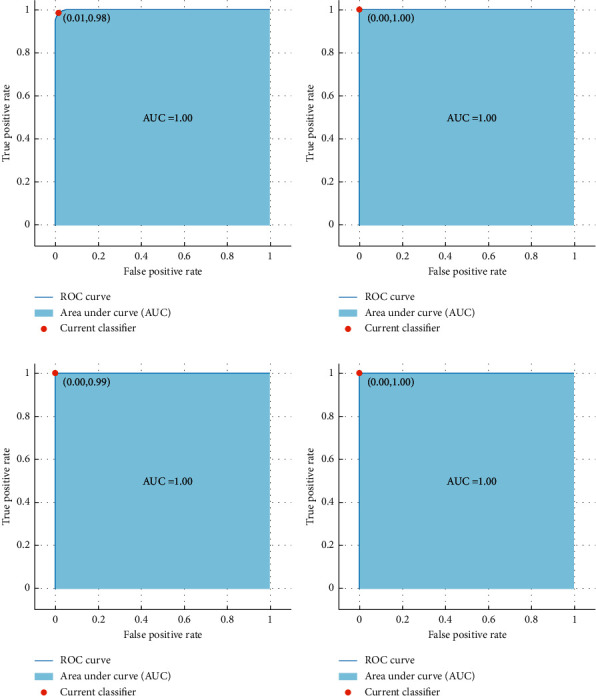

The sensitivity, specificity, precision, and F1-score of the cubic SVM classifier trained with ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP features are shown in Table 3. The mean specificity, sensitivity, precision, and F1-score over the seven SC classes are 0.9969, 0.9854, 0.9884, and 0.9883, respectively. These results indicate that the proposed dermatologist tool is reliable since, as stated in [64–66], a reliable medical system requires precision and specificity above 0.95 and sensitivity above 0.8. The receiver operating characteristic (ROC) curves and the area under the curve (AUC) are displayed in Figure 6.

Table 3.

Performance metrics for the cubic SVM classifier trained with ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP features.

| Class | Specificity | Sensitivity | Precision | F1-score |
|---|---|---|---|---|
| ak | 0.9979 | 0.9674 | 0.98765 | 0.9768 |
| bcc | 0.9979 | 0.9874 | 0.9874 | 0.9874 |
| bkl | 0.9918 | 0.9799 | 0.9555 | 0.9675 |
| df | 1 | 1 | 1 | 1 |
| mel | 0.999 | 0.9865 | 0.9946 | 0.9905 |
| nv | 0.9992 | 0.9971 | 0.9952 | 0.9962 |
| vasc | 1 | 1 | 1 | 1 |
| mean | 0.9969 | 0.9854 | 0.9884 | 0.9883 |

Figure 6.

ROC curves and AUC values for the quadratic SVM classifier, with (a) bkl, (b) df, (c) nv, and (d) vasc as the positive class.

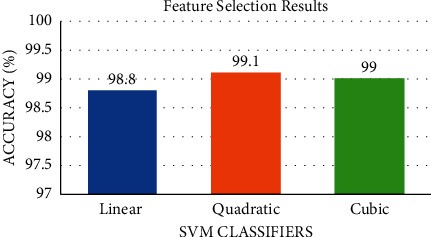

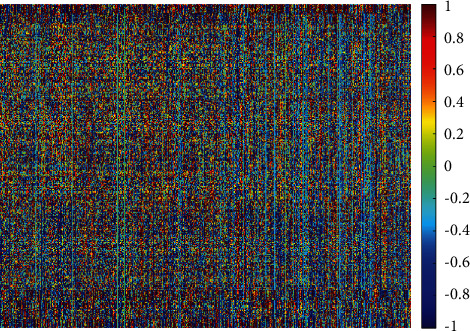

The results after applying mRMR feature selection are shown in Figure 7. The classification accuracy of the quadratic and linear SVM classifiers increases to 99.1% and 98.8%, respectively, whereas that of the cubic SVM remains the same (99%). The mRMR procedure reduces the number of features from 6495 (the combined manifold features of ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP) to 2500. Figure 8 shows a heat map analysis of the selected radiomics features.

Figure 7.

The classification accuracy after the mRMR feature selection procedure for the three SVM classifiers.

Figure 8.

Heat map analysis of the selected radiomics features.

5.4. Comparing the Performance of the Proposed Tool with Related Works

To verify the competence of the proposed intelligent dermatologist tool, its performance is compared with recent related studies based on the HAM10000 dataset in Table 4. The proposed tool outperforms the related works: its accuracy, sensitivity, specificity, precision, and F1-score are 99%, 98.54%, 99.69%, 98.84%, and 98.83%, respectively, which are higher than those reported in all the other studies. This outperformance is attributed to the tool's incorporation of manifold feature categories. The tool first examined three individual high-level feature sets and two low-level handcrafted feature sets. It then investigated the influence of incorporating several high- and low-level features and searched for the best integrated combination. The results showed that merging manifold features of different categories has a great impact on classification accuracy. The related studies in Table 4, by contrast, are based on either low-level or high-level features, and most of them employ individual feature sets without examining the influence of feature fusion.

Table 4.

Performance comparison between the proposed intelligent tool and related works based on the HAM10000 dataset.

| Article | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1-score (%) |
|---|---|---|---|---|---|
| [67] | 85.8 | — | — | — | — |
| [43] | 86.5 | 85.57 | — | 87.01 | 86.28 |
| [44] | 88.5 | — | — | 88.66 | 88.66 |
| [68] | 90.72 | — | — | — | — |
| [69] | 90.67 | 90.2 | — | — | — |
| [70] | 92.08 | 92.53 | — | 93.73 | 92.74 |
| [35] | 96.25 | — | — | — | — |
| [37] | 97.4 | 92 | 90 | — | — |
| Proposed tool | 99 | 98.54 | 99.69 | 98.84 | 98.83 |
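Table 4 reports accuracy alongside sensitivity, specificity, precision, and F1-score. For a multiclass problem such as HAM10000, the last four are usually computed one-vs-rest for each class and then averaged. The paper does not state its averaging scheme, so the sketch below assumes unweighted (macro) averaging of per-class values computed from a confusion matrix whose rows are true classes and whose columns are predicted classes. `macro_metrics` is a hypothetical helper, not the authors' code, and the matrix in the usage example is toy data.

```python
def macro_metrics(cm):
    """Accuracy plus macro-averaged one-vs-rest metrics from a square confusion matrix.

    cm[i][j] is the number of samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    per_class = {"sensitivity": [], "specificity": [], "precision": [], "f1": []}
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp  # other classes predicted as c
        tn = total - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        prec = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        for name, value in (("sensitivity", sens), ("specificity", spec),
                            ("precision", prec), ("f1", f1)):
            per_class[name].append(value)
    # Macro average: every class counts equally, regardless of its size.
    result = {name: sum(vals) / k for name, vals in per_class.items()}
    result["accuracy"] = correct / total
    return result


# Toy two-class example (not data from the paper).
m = macro_metrics([[8, 2], [1, 9]])
print({name: round(value, 4) for name, value in m.items()})
```

Here the macro F1-score is the mean of the per-class F1 scores, which is generally not identical to the harmonic mean of macro precision and macro sensitivity; studies that make different choices here are not strictly comparable.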

Early detection of SC is very important to prevent its progression. It also helps in choosing appropriate treatment and follow-up plans and in decreasing death rates. This study proposed an intelligent tool for the automatic classification of skin lesion types. The results achieved using the proposed intelligent tool are promising and suggest that it could be an effective aid in clinical practice. A key advantage of the proposed tool is its accessibility: it can easily be used in many regions, especially those that suffer from a lack of skilled dermatologists. In addition, the tool enables dermatologists to diagnose the SC subclass automatically and to avoid the challenges they face during manual examination due to the complex patterns of skin lesions in SC images [8]. It also eases and speeds up the diagnosis procedure compared with manual diagnosis.

Moreover, accurate classification of the lesion using the proposed tool can spare patients with a noncancerous lesion from unnecessary hospital visits, as standard medication can treat them without exposure to radiation or chemotherapy. Conversely, the tool can accurately identify a patient's specific SC category, which helps doctors select a suitable treatment procedure. Several studies have examined individual feature extraction methods, including traditional low-level features and high-level features based on deep learning, to diagnose SC; however, fusing these features is of great importance, as the results of the proposed tool showed that integrating them enhances performance. These results indicate that the proposed tool adds value to the healthcare sector, because it diagnoses the SC category more accurately than the related methods compared in Table 4.
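The feature fusion discussed above can be illustrated as feature-level (early) fusion: the vectors produced by each extractor for the same image are concatenated into one longer vector, so a combined set such as ResNet-50 + DenseNet-201 + DarkNet-53 + DWT-A + LBP would be formed this way under that assumption. The sketch below is hypothetical: each block is assumed to be a list of per-sample feature lists, and each block is standardized column-wise before concatenation, a step the paper does not describe. `zscore_columns` and `fuse_features` are illustrative names, and the toy values are not data from the study.

```python
from statistics import mean, pstdev


def zscore_columns(block):
    """Standardize every column of a (samples x features) block to zero mean, unit variance."""
    columns = list(zip(*block))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [
        [(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]
        for row in block
    ]


def fuse_features(*blocks):
    """Feature-level fusion: concatenate standardized blocks sample by sample."""
    n = len(blocks[0])
    if any(len(b) != n for b in blocks):
        raise ValueError("all feature blocks must describe the same samples")
    normed = [zscore_columns(b) for b in blocks]
    # Row i of the result is block1[i] + block2[i] + ... (list concatenation).
    return [[v for blk in normed for v in blk[i]] for i in range(n)]


# Hypothetical toy blocks: two "deep" features and one "LBP" feature for two images.
deep = [[1.0, 2.0], [3.0, 4.0]]
lbp = [[10.0], [20.0]]
print(fuse_features(deep, lbp))  # [[-1.0, -1.0, -1.0], [1.0, 1.0, 1.0]]
```

Standardizing first keeps a block with large raw values (for example, histogram counts) from dominating the fused vector. In a cross-validated pipeline, the mean and standard deviation should be estimated on the training folds only and then applied to the test fold, to avoid leaking information from test samples.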

6. Conclusion

Skin cancer (SC) is one of the most widespread malignant tumors in human populations. Its growing incidence and mortality can be reduced if it is accurately diagnosed and treated during its initial stages. This paper proposed a dermatologist tool based on AI methods and manifold radiomics feature categories to enable doctors to accurately diagnose the SC subtype, which could facilitate choosing appropriate follow-up and treatment plans. The proposed intelligent tool is based on several deep learning and machine learning techniques. It incorporates manifold radiomics feature categories, including three high-level feature sets from ResNet-50, DenseNet-201, and DarkNet-53 and two low-level radiomics feature sets from DWT and LBP. This study showed that integrating both levels of radiomics features boosted the performance of the dermatologist tool compared with using either high-level or low-level features alone. The performance of the intelligent dermatologist tool was compared with related AI-based dermatologist tools, and this comparison verified its superiority over them; thus, the proposed intelligent tool can be used to assist dermatologists in the accurate diagnosis of the SC subcategory and to avoid the complications of manual diagnosis. Future work will consider additional deep learning techniques, other radiomics techniques, segmentation methods, and other integration techniques. The main limitation of this study is that performance was validated only with 5-fold cross-validation on a single dataset; cross-center validation using other datasets is still required. Therefore, future work will use another dataset for cross-center validation.
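The limitation noted above concerns how evaluation folds are drawn. In stratified 5-fold cross-validation, every sample appears in exactly one test fold and each fold roughly keeps the class proportions of the full dataset, but all folds still come from the same image source; cross-center validation would instead hold out every image from one center or dataset at a time. The stdlib-only sketch below generates stratified splits. `stratified_kfold` is a hypothetical helper, the round-robin assignment is one simple choice among several, and it is not presented as the splitting code used in this study.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_indices, test_indices) for k stratified folds.

    Samples of each class are shuffled and dealt round-robin into the k folds,
    so every sample is tested exactly once and class proportions are roughly
    preserved in each fold.
    """
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)  # fixed seed for reproducible folds
    folds = [[] for _ in range(k)]
    for cls in sorted(by_class):
        members = by_class[cls]
        rng.shuffle(members)
        for pos, i in enumerate(members):
            folds[pos % k].append(i)
    for fold in folds:
        test = sorted(fold)
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        yield train, test


labels = [0] * 10 + [1] * 5  # toy, imbalanced labels
for train, test in stratified_kfold(labels, k=5):
    print(len(train), len(test), [labels[i] for i in test])
```

A cross-center variant would replace the per-class dealing with a grouping by source center or dataset, so that no center contributes images to both the training and the test side of a split.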

Data Availability

The dataset employed in the paper can be found in Kaggle (https://www.kaggle.com/kmader/skin-cancer-mnist-ham10000). Codes are available at the following link: https://drive.google.com/file/d/1ifD8xzUm-lxzvvLghrjbeo55uar8Xio8/view?usp=sharing.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

Supplementary Materials

Some of the radiomics features extracted in this study comply with the 174 standards of the Image Biomarker Standardisation Initiative (IBSI). Table S1 lists the compliance or noncompliance of each of these features.

References

- 1.Naeem A., Farooq M. S., Khelifi A., Abid A. Malignant melanoma classification using deep learning: datasets, performance measurements, challenges and opportunities. IEEE Access. 2020;8:110575–110597. doi: 10.1109/access.2020.3001507.

- 2.Sung H., Ferlay J., Siegel R. L., et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: A Cancer Journal for Clinicians. 2021;71(3):209–249. doi: 10.3322/caac.21660.

- 3.Sinclair C., Foley P. Skin cancer prevention in Australia. British Journal of Dermatology. 2009;161:116–123. doi: 10.1111/j.1365-2133.2009.09459.x.

- 4.Marrett L. D., De P., Airia P., Dryer D. Cancer in Canada in 2008. Canadian Medical Association Journal. 2008;179(11):1163–1170. doi: 10.1503/cmaj.080760.

- 5.O’Sullivan D. E., Brenner D. R., Demers P. A., Paul J. V., Christine M. F., Will D. K. Indoor tanning and skin cancer in Canada: a meta-analysis and attributable burden estimation. Cancer Epidemiology. 2019;59:1–7. doi: 10.1016/j.canep.2019.01.004.

- 6.Marcelo de P. C., Dubuisson P. An overview of the ultraviolet index and the skin cancer cases in Brazil. Photochemistry and Photobiology. 2003;78. doi: 10.1562/0031-8655(2003)078<0049:aootui>2.0.co;2.

- 7.Apalla Z., Lallas A., Sotiriou E., Lazaridou E., Ioannides D. Epidemiological trends in skin cancer. Dermatology Practical & Conceptual. 2017;7:1–6. doi: 10.5826/dpc.0702a01.

- 8.Esteva A., Kuprel B., Novoa R. A., et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–118. doi: 10.1038/nature21056.

- 9.Han S. S., Kim M. S., Lim W., Park G. H., Park I., Chang S. E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. Journal of Investigative Dermatology. 2018;138(7):1529–1538. doi: 10.1016/j.jid.2018.01.028.

- 10.Adegun A., Viriri S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: a survey of state-of-the-art. Artificial Intelligence Review. 2021;54(2):811–841. doi: 10.1007/s10462-020-09865-y.

- 11.Vestergaard M. E., Macaskill P., Holt P. E., Menzies S. W. Dermoscopy compared with naked eye examination for the diagnosis of primary melanoma: a meta-analysis of studies performed in a clinical setting. British Journal of Dermatology. 2008;159:669–676. doi: 10.1111/j.1365-2133.2008.08713.x.

- 12.Masood A., Ali Al-Jumaily A. Computer aided diagnostic support system for skin cancer: a review of techniques and algorithms. International Journal of Biomedical Imaging. 2013;2013:22. doi: 10.1155/2013/323268.

- 13.Ragab D. A., Attallah O., Sharkas M., Ren J. A framework for breast cancer classification using multi-DCNNs. Computers in Biology and Medicine. 2021;131:104245. doi: 10.1016/j.compbiomed.2021.104245.

- 14.Ragab D. A., Sharkas M., Attallah O. Breast cancer diagnosis using an efficient CAD system based on multiple classifiers. Diagnostics. 2019;9. doi: 10.3390/diagnostics9040165.

- 15.Attallah O. MB-AI-His: histopathological diagnosis of pediatric medulloblastoma and its subtypes via AI. Diagnostics. 2021;11:359–384. doi: 10.3390/diagnostics11020359.

- 16.Attallah O. CoMB-deep: composite deep learning-based pipeline for classifying childhood medulloblastoma and its classes. Frontiers in Neuroinformatics. 2021;15:p. 21. doi: 10.3389/fninf.2021.663592.

- 17.Attallah O., Sharkas M. GASTRO-CADx: a three stages framework for diagnosing gastrointestinal diseases. PeerJ Computer Science. 2021;7:p. e423. doi: 10.7717/peerj-cs.423.

- 18.Attallah O., Ragab D. A., Sharkas M. MULTI-DEEP: a novel CAD system for coronavirus (COVID-19) diagnosis from CT images using multiple convolution neural networks. PeerJ. 2020;8:e10086. doi: 10.7717/peerj.10086.

- 19.Attallah O., Ma X. Bayesian neural network approach for determining the risk of re-intervention after endovascular aortic aneurysm repair. Proceedings of the Institution of Mechanical Engineers, Part H: Journal of Engineering in Medicine. 2014;228(9):857–866. doi: 10.1177/0954411914549980.

- 20.Attallah O., Karthikesalingam A., Holt P. J., et al. Using multiple classifiers for predicting the risk of endovascular aortic aneurysm repair re-intervention through hybrid feature selection. Proceedings of the Institution of Mechanical Engineers, Part H: Journal of Engineering in Medicine. 2017;231(11):1048–1063. doi: 10.1177/0954411917731592.

- 21.Attallah O., Karthikesalingam A., Holt P. J. E., et al. Feature selection through validation and un-censoring of endovascular repair survival data for predicting the risk of re-intervention. BMC Medical Informatics and Decision Making. 2017;17(1):115–133. doi: 10.1186/s12911-017-0508-3.

- 22.Karthikesalingam A., Attallah O., Ma X., et al. An artificial neural network stratifies the risks of reintervention and mortality after endovascular aneurysm repair; a retrospective observational study. PLoS One. 2015;10(7):e0129024. doi: 10.1371/journal.pone.0129024.

- 23.Baraka A., Shaban H., Abou El-Nasr M., Attallah O. Wearable accelerometer and sEMG-based upper limb BSN for tele-rehabilitation. Applied Sciences. 2019;9(14):2795–2816. doi: 10.3390/app9142795.

- 24.Ayman A., Attalah O., Shaban H. An efficient human activity recognition framework based on wearable IMU wrist sensors. Proceedings of the 2019 IEEE International Conference on Imaging Systems and Techniques (IST); December 2019; Abu Dhabi, UAE. pp. 1–5.

- 25.Attallah O., Abougharbia J., Tamazin M., Nasser A. A. A BCI system based on motor imagery for assisting people with motor deficiencies in the limbs. Brain Sciences. 2020;10(11):864–888. doi: 10.3390/brainsci10110864.

- 26.Abougharbia J., Attallah O., Tamazin M. A novel BCI system based on hybrid features for classifying motor imagery tasks. Proceedings of the 2019 Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA); November 2019; Istanbul, Turkey. pp. 1–6.

- 27.Oliveira R. B., Papa J. P., Pereira A. S., Tavares J. M. R. S. Computational methods for pigmented skin lesion classification in images: review and future trends. Neural Computing & Applications. 2018;29(3):613–636. doi: 10.1007/s00521-016-2482-6.

- 28.Goyal M., Knackstedt T., Yan S., Hassanpour S. Artificial intelligence-based image classification methods for diagnosis of skin cancer: challenges and opportunities. Computers in Biology and Medicine. 2020;127:104065. doi: 10.1016/j.compbiomed.2020.104065.

- 29.Nami N., Giannini E., Burroni M., Fimiani M., Rubegni P. Teledermatology: state-of-the-art and future perspectives. Expert Review of Dermatology. 2012;7(1):1–3. doi: 10.1586/edm.11.79.

- 30.Le V.-H., Kha Q.-H., Hung T. N. K., Le N. Q. K. Risk score generated from CT-based radiomics signatures for overall survival prediction in non-small cell lung cancer. Cancers. 2021;13(14):p. 3616. doi: 10.3390/cancers13143616.

- 31.Avanzo M., Wei L., Stancanello J., et al. Machine and deep learning methods for radiomics. Medical Physics. 2020;47:e185–e202. doi: 10.1002/mp.13678.

- 32.Le N. Q. K., Hung T. N. K., Do D. T., Lam L. H. T., Dang L. H., Huynh T.-T. Radiomics-based machine learning model for efficiently classifying transcriptome subtypes in glioblastoma patients from MRI. Computers in Biology and Medicine. 2021;132:104320. doi: 10.1016/j.compbiomed.2021.104320.

- 33.Afshar P., Mohammadi A., Plataniotis K. N., Oikonomou A., Benali H. From handcrafted to deep-learning-based cancer radiomics: challenges and opportunities. IEEE Signal Processing Magazine. 2019;36(4):132–160. doi: 10.1109/msp.2019.2900993.

- 34.Le N. Q. K., Do D. T., Chiu F.-Y., Yapp E. K. Y., Yeh H.-Y., Chen C.-Y. XGBoost improves classification of MGMT promoter methylation status in IDH1 wildtype glioblastoma. Journal of Personalized Medicine. 2020;10(3):p. 128. doi: 10.3390/jpm10030128.

- 35.Monika M. K., Arun Vignesh N., Usha Kumari C., Kumar M. N. V. S. S., Lydia E. L. Skin cancer detection and classification using machine learning. Materials Today: Proceedings. 2020;33:4266–4270. doi: 10.1016/j.matpr.2020.07.366.

- 36.Arora G., Dubey A. K., Jaffery Z. A. Bag of feature and support vector machine based early diagnosis of skin cancer. Neural Computing & Applications. 2020:1–8. doi: 10.1007/s00521-020-05212-y.

- 37.Kumar M., Alshehri M., AlGhamdi R., Sharma P., Deep V. A DE-ANN inspired skin cancer detection approach using fuzzy c-means clustering. Mobile Networks and Applications. 2020;25(4):1319–1329. doi: 10.1007/s11036-020-01550-2.

- 38.Attallah O., Sharkas M. A., Gadelkarim H. Deep learning techniques for automatic detection of embryonic neurodevelopmental disorders. Diagnostics. 2020;10(1):27–49. doi: 10.3390/diagnostics10010027.

- 39.Ragab D. A., Attallah O. FUSI-CAD: coronavirus (COVID-19) diagnosis based on the fusion of CNNs and handcrafted features. PeerJ Computer Science. 2020;6:e306. doi: 10.7717/peerj-cs.306.

- 40.Attallah O., Anwar F., Ghanem N. M., Ismail M. A. Histo-CADx: duo cascaded fusion stages for breast cancer diagnosis from histopathological images. PeerJ Computer Science. 2021;7:e493. doi: 10.7717/peerj-cs.493.

- 41.Rodrigues D. d. A., Ivo R. F., Satapathy S. C., Wang S., Hemanth J., Filho P. P. R. A new approach for classification skin lesion based on transfer learning, deep learning, and IoT system. Pattern Recognition Letters. 2020;136:8–15. doi: 10.1016/j.patrec.2020.05.019.

- 42.Khamparia A., Singh P. K., Rani P., Debabrata S., Ashish K., Bharat B. An internet of health things-driven deep learning framework for detection and classification of skin cancer using transfer learning. Transactions on Emerging Telecommunications Technologies. 2020;7e3963.

- 43.Khan M. A., Zhang Y.-D., Sharif M., Akram T. Pixels to classes: intelligent learning framework for multiclass skin lesion localization and classification. Computers & Electrical Engineering. 2021;90:106956. doi: 10.1016/j.compeleceng.2020.106956.

- 44.Khan M. A., Akram T., Zhang Y.-D., Sharif M. Attributes based skin lesion detection and recognition: a mask RCNN and transfer learning-based deep learning framework. Pattern Recognition Letters. 2021;143:58–66. doi: 10.1016/j.patrec.2020.12.015.

- 45.Amin J., Sharif A., Gul N., et al. Integrated design of deep features fusion for localization and classification of skin cancer. Pattern Recognition Letters. 2020;131:63–70. doi: 10.1016/j.patrec.2019.11.042.

- 46.Toğaçar M., Cömert Z., Ergen B. Intelligent skin cancer detection applying autoencoder, MobileNetV2 and spiking neural networks. Chaos, Solitons & Fractals. 2021;144:110714.

- 47.Alizadeh S. M., Mahloojifar A. Automatic skin cancer detection in dermoscopy images by combining convolutional neural networks and texture features. International Journal of Imaging Systems and Technology. 2021;31.

- 48.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); June 2016; Las Vegas, NV, USA.

- 49.Huang G., Liu Z., Van Der Maaten L., Weinberger K. Q. Densely connected convolutional networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July 2017; Honolulu, HI, USA. pp. 4700–4708.

- 50.Redmon J., Farhadi A. YOLO9000: better, faster, stronger. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July 2017; Honolulu, HI, USA. pp. 7263–7271.

- 51.Antonini M., Barlaud M., Mathieu P., Daubechies I. Image coding using wavelet transform. IEEE Transactions on Image Processing. 1992;1(2):205–220. doi: 10.1109/83.136597.

- 52.Demirel H., Ozcinar C., Anbarjafari G. Satellite image contrast enhancement using discrete wavelet transform and singular value decomposition. IEEE Geoscience and Remote Sensing Letters. 2009;7:333–337.

- 53.Hatamimajoumerd E., Talebpour A. A temporal neural trace of wavelet coefficients in human object vision: an MEG study. Frontiers in Neural Circuits. 2019;13:p. 20. doi: 10.3389/fncir.2019.00020.

- 54.Attallah O., Sharkas M. A., Gadelkarim H. Fetal brain abnormality classification from MRI images of different gestational age. Brain Sciences. 2019;9(9):231–252. doi: 10.3390/brainsci9090231.

- 55.Ojala T., Pietikainen M., Maenpaa T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2002;24(7):971–987. doi: 10.1109/tpami.2002.1017623.

- 56.Tschandl P., Rosendahl C., Kittler H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Scientific Data. 2018;5:180161–180169. doi: 10.1038/sdata.2018.161.

- 57.Guo Z., Zhang L., Zhang D. A completed modeling of local binary pattern operator for texture classification. IEEE Transactions on Image Processing. 2010;19(6):1657–1663. doi: 10.1109/tip.2010.2044957.

- 58.Zhang D. Fundamentals of Image Data Mining. Berlin, Germany: Springer; 2019. Wavelet transform, texts in computer science; pp. 35–44.

- 59.Bharati M. H., Liu J. J., MacGregor J. F. Image texture analysis: methods and comparisons. Chemometrics and Intelligent Laboratory Systems. 2004;72(1):57–71. doi: 10.1016/j.chemolab.2004.02.005.

- 60.Pan S. J., Yang Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering. 2009;22:1345–1359.

- 61.Zwanenburg A., Leger S., Vallières M. Image biomarker standardisation initiative. 2016. http://arxiv.org/abs/1612.07003.

- 62.Zwanenburg A., Vallières M., Abdalah M. A., et al. The image biomarker standardization initiative: standardized quantitative radiomics for high-throughput image-based phenotyping. Radiology. 2020;295(2):328–338. doi: 10.1148/radiol.2020191145.

- 63.Radovic M., Ghalwash M., Filipovic N., Obradovic Z. Minimum redundancy maximum relevance feature selection approach for temporal gene expression data. BMC Bioinformatics. 2017;18:9–14. doi: 10.1186/s12859-016-1423-9.

- 64.Colquhoun D. An investigation of the false discovery rate and the misinterpretation of p-values. Royal Society Open Science. 2014;1(3):140216. doi: 10.1098/rsos.140216.

- 65.Ellis P. D. The Essential Guide to Effect Sizes: Statistical Power, Meta-Analysis, and the Interpretation of Research Results. Cambridge, UK: Cambridge University Press; 2010.

- 66.Attallah O. An effective mental stress state detection and evaluation system using minimum number of frontal brain electrodes. Diagnostics. 2020;10(5):292–327. doi: 10.3390/diagnostics10050292.

- 67.Huang H. W., Hsu B. W. Y., Lee C. H., Tseng V. S. Development of a light-weight deep learning model for cloud applications and remote diagnosis of skin cancers. The Journal of Dermatology. 2021;48(3):310–316. doi: 10.1111/1346-8138.15683.

- 68.Srinivasu P. N., SivaSai J. G., Ijaz M. F., Bhoi A. K., Kim W., Kang J. J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors. 2021;21(8):p. 2852. doi: 10.3390/s21082852.

- 69.Khan M. A., Sharif M., Akram T., Damaševičius R., Maskeliūnas R. Skin lesion segmentation and multiclass classification using deep learning features and improved moth flame optimization. Diagnostics. 2021;11(5):p. 811. doi: 10.3390/diagnostics11050811.

- 70.Hosseinzadeh Kassani S., Hosseinzadeh Kassani P. A comparative study of deep learning architectures on melanoma detection. Tissue and Cell. 2019;58:76–83. doi: 10.1016/j.tice.2019.04.009.
