Abstract

This article tells the story of how a public charter school serving students with autism spectrum disorder (ASD) adopted Direct Instruction (DI) as its primary form of instruction. The journey from recognizing the need for an evidence-based curriculum focused on academic skills to integrating DI on a daily basis is outlined using a common implementation framework. We measured the effects of the implementation process on student outcomes using reading scores obtained from the Kaufman Test of Educational Achievement (KTEA-II Brief). Results for 67 students who participated in a DI reading program for at least 2 years suggest that the implementation of DI led to significantly improved reading scores, with some students demonstrating greatly accelerated rates of learning for their age. Our study suggests that the road to adoption of DI may be long, but the results are powerful for the individuals served. We offer our steps to implementation as a guide and resource to educators and behavior analysts eager to utilize DI in their settings.

Keywords: autism, Direct Instruction, implementation, reading, teachers

In the 2018–2019 school year, the National Center for Education Statistics (2020) reported that over 750,000 students with autism spectrum disorder (ASD) between the ages of 3 and 21 years were served under Part B of the Individuals with Disabilities Education Act (IDEA, 2004). Individuals with ASD present with varying degrees of impairment in the areas of social interaction, social communication, rigid and repetitive behaviors, and associated problematic behaviors (American Psychiatric Association, 2013). In addition to describing eligibility for special education services, IDEA (2004) outlined standards for the use of evidence-based practices (EBPs) when serving these students. Mandating the use of EBPs was a critical step forward in recognizing the rights of individuals with ASD and other specialized needs (Cook & Odom, 2013). However, as noted by Odom et al. (2010), “the devil in the details” (p. 276) becomes apparent as schools are challenged with identifying and ultimately implementing research-based procedures in classroom settings.

Direct Instruction (DI) is an EBP with strong empirical support that has crossed the threshold from research to classroom implementation (Hattie, 2009). The success of DI is not altogether surprising given that it was developed with the explicit aim of giving all students the opportunity to access high-quality instruction at a pace that may afford them the chance to catch up with more advantaged peers (Engelmann et al., 1988). The two main rules of DI are: “Teach more in less time [and] . . . Control the details of what happens” (Engelmann et al., 1988, p. 303). These rules are exemplified by DI’s core instructional tactics. DI teachers describe the content of the lessons prior to instruction, present the content by modeling desired responses, provide individual and choral response opportunities to students, and provide differential consequences for responding (Engelmann et al., 1988). Instruction is provided to groups of students with matched abilities, allowing for more efficiency in instruction and close monitoring of student-specific progress (Watkins & Slocum, 2004). To support teachers’ implementation of instruction, curricula are developed with embedded scripts to follow and materials to use for a variety of different content areas. DI programs are designed to “control the details” (Engelmann et al., 1988) and promote both consistent implementation across instructors and a reduced amount of daily teacher preparation time. Over time, DI curricula have expanded to address educational areas such as reading (Engelmann & Osborn, 2008a; Engelmann et al., 2002), math (Engelmann et al., 2012), writing (Engelmann & Silbert, 1983), and language (Engelmann & Osborn, 2008b).

The efficacy and effectiveness of DI have been well established for a number of years (Hattie, 2009; Stockard et al., 2018). Increased academic performance has been shown for learners across age groups, from preschool to high school (Becker & Gersten, 1982; Weisberg, 1988), from various socioeconomic backgrounds (Gersten & Carnine, 1984; Gersten et al., 1988), and with varying disabilities (Horner & Albin, 1988). Hattie’s (2009) meta-analysis yielded an average effect size of .59 across 300 studies and over 400,000 students. These findings suggest that DI can work for a variety of learners and can be adopted in the settings in which students receive their education.

Over the past several decades there has been increasing evidence that DI may be efficacious for learners with ASD (Frampton et al., in press). Initial studies examined the efficacy of specific strands or components of DI programs. For example, Flores and Ganz (2007) successfully taught skills related to inference, use of facts, and analogies to elementary students with ASD by implementing selected strands from within the Corrective Reading Thinking Basics (Engelmann et al., 2002) program. Ganz and Flores (2009) used selected strands from the Language for Learning (Engelmann & Osborn, 2008b) program to teach elementary students with autism to identify materials from which items were made. Flores and Ganz (2009) demonstrated a functional relationship between explicit instruction using selected strands from the Corrective Reading Thinking Basics (Engelmann et al., 2002) program and subsequent improvements on curriculum-based assessment measures for reading comprehension skills related to analogies, induction, and deduction with elementary school students with ASD.

Subsequent studies have extended the line of research into the effectiveness of DI for individuals with ASD by incorporating more complete components of DI programs as independent variables, such as entire lessons delivered without modification, or DI programs delivered in their entirety. Flores et al. (2013) found that whole lessons from the Corrective Reading Thinking Basics (Engelmann et al., 2002) program and Language for Learning (Engelmann & Osborn, 2008b) program, delivered without modification over the course of a 4-week extended school year program, resulted in statistically significant improvements in reading comprehension and language skills on curriculum-based assessments. Shillingsburg et al. (2015) examined the efficacy of using the entirety of the Language for Learning (Engelmann & Osborn, 2008b) program with 18 children with ASD. Participants received 1:1 intervention using the program for 3 hours per week across 4 consecutive months. Results comparing preintervention and postintervention scores on a battery of curriculum-based assessments revealed statistically significant improvements in targeted language skills.

Unfortunately, DI programs have struggled to achieve widespread implementation within educational settings despite repeated evidence of effectiveness (Viadero, 1999; Kim & Axelrod, 2005; Stockard et al., 2018). Head et al. (2018) summarized the importance of their research findings by expressing frustration with the contrast between the growing body of research demonstrating the efficacy of DI programs for individuals on the autism spectrum and the dismal state of DI implementation in school settings, stating, “It is disheartening that, given the extensive research regarding the effectiveness of DI, this methodology and curricula is underutilized in educational settings” (p. 190). This sentiment echoes findings from Odom et al. (2010) that identification of EBPs is not enough. We need evidence-based methods to implement EBPs.

The gap between scientifically identified best practices and actual implementation has received growing attention across fields (Cook & Odom, 2013; Fixsen et al., 2013). Smith et al. (2007) identified multiple phases of research ranging from studies demonstrating basic effects, to manualization, to randomized controlled trials (RCTs), to implementation in community settings. Each phase of research has unique aims and requires different methods to achieve those aims. The efficacy of DI for individuals with ASD has been established in multiple single-subject and group designs across research groups (see Steinbrenner et al., 2020). It appears this line of research is ready for evaluations of community effectiveness in schools serving students with ASD.

One evidence-based practice for the introduction and implementation of EBPs is the Exploration, Preparation, Implementation, and Sustainment framework (EPIS; Moullin et al., 2019). Implementation frameworks have arisen within the field of implementation science as tools that provide context and guide the manner in which implementation of an evidence-based practice can be structured and executed (Bauer et al., 2015). During the exploration phase, an organization is aware of a clinical or public health need and has started examining ways to address the need (Aarons et al., 2011; Moullin et al., 2019). As an EBP is identified by the group, potential barriers are then identified in the preparation phase. As each setting is unique, and no EBP is perfect, this phase is critical to identify whether adaptations to the EBP are warranted and identify facilitators who can work effectively to overcome barriers. The preparation phase entails creating detailed plans for training and support that will be deployed in the later phases by the facilitators. As needed, the preparation phase may also include specific steps to acclimate the individuals who will be participating in the change process. In particular, the goal is to clarify that implementation of the EBP is imminent, endorsed by the leaders/administrators, and that implementation will be rewarded (Aarons et al., 2014).

The EBP is initiated and closely monitored during the implementation phase. Adjustments are made as needed to align implementation with the plans created in the preparation phase. In the sustainment phase, the EBP continues to be implemented and progress related to key metrics is evaluated. In other words, during the sustainment phase the effects of the EBP on the referring problem are evaluated. The EPIS framework has been used to guide implementation projects across a broad range of public sector settings, including public health, child welfare, mental or behavioral health, substance use, rehabilitation, juvenile justice, education, and school-based nursing. Between 2011 and 2017, a total of 67 peer-reviewed articles were published outlining research projects that have used the EPIS framework (Moullin et al., 2019).

We used the EPIS framework to describe how DI was implemented throughout a midwestern public charter school serving students with ASD. The implementation process we followed was highly aligned with the recommendations and procedures in EPIS. In sharing our experience with this process, we hope to extend the DI literature with individuals with ASD in two ways. First, this study may serve as an example of how DI may be brought into the final phases of adoption, as illustrated by Smith et al. (2007). The study includes a step-by-step description of a successful site-wide implementation effort. This type of detailed description may provide valuable insight to support future implementation efforts, in particular for practitioners who may be unfamiliar with the critical components of DI implementation. In addition, operationalizing and sharing implementation processes may support a continuous improvement process related to DI implementation, leading to increasingly efficient processes. Second, we present reading outcomes for 67 students who completed 2 consecutive years of DI reading instruction as part of the comprehensive site-wide implementation of DI to contribute to the growing evidence base regarding the effectiveness of DI for students with ASD.

Method

Setting and Participants

This site-wide implementation of DI took place at a public charter school located in the midwestern United States, which serves students on the autism spectrum. Throughout the implementation, 115 students with ASD and other developmental disabilities ranging in age from 5 to 22 years old were enrolled at the school. Approximately 70% of the students qualified as economically disadvantaged. Each classroom within the school was staffed with a licensed special education teacher and one to five paraprofessionals. Paraprofessionals were allocated to classrooms based on the learning and supervision needs of the students. Each classroom served six or seven students. Throughout implementation, DI lessons were delivered by both the special education teachers and the classroom paraprofessionals. In total, there were 14 teachers and 25 paraprofessionals involved in the delivery of DI lessons across the 5-year implementation.

Students were identified for participation in various DI curricula based on outcomes of individually administered curriculum-based placement tests, consistent with typical procedures guiding use of DI programs. The Kaufman Test of Educational Achievement, Second Edition, Brief Form (KTEA-II Brief Form) was administered annually to all students who participated in at least one DI program (regardless of curricular focus). Following 5 years of comprehensive site-wide DI implementation, an analysis of student outcomes was conducted utilizing the reading Growth Scale Value (GSV) scores obtained from the KTEA-II Brief Form assessments. We selected GSV scores as the primary evaluation metric because they allow performance to be compared over time, whereas raw scores and standard scores do not provide a like-for-like comparison (Kaufman & Kaufman, 2005).

Reading scores were selected for use in the evaluation of student outcomes on the basis that more students participated in DI reading instruction during the implementation effort than in any other curricular area. Scores for all students who had participated in at least 2 consecutive years of DI reading instruction using either Headsprout Early Reading©, Reading Mastery (Engelmann & Osborn, 2008a), Corrective Reading-Decoding (Engelmann et al., 2002), or Corrective Reading-Comprehension over the course of the 5-year site-wide implementation effort were included in the analysis. In total, 67 students met inclusion criteria. Participation in other DI programs (i.e., curricula focusing on other English language arts skills) was variable across the 67 participants over the course of the study. This variability was a result of participant performance on DI placement tests and natural course progression as participants demonstrated mastery.
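The inclusion rule described above (at least 2 consecutive years of DI reading instruction in one of the four named reading programs) can be illustrated with a minimal sketch. The record layout, the function name `eligible_students`, and the sample records are hypothetical and are not drawn from the study data. The sketch also assumes that consecutive years may span different reading programs, which the text does not state explicitly.

```python
from collections import defaultdict

# Reading programs named in the inclusion criteria.
READING_PROGRAMS = {
    "Headsprout Early Reading",
    "Reading Mastery",
    "Corrective Reading-Decoding",
    "Corrective Reading-Comprehension",
}


def eligible_students(enrollments, min_consecutive_years=2):
    """Return IDs of students with enough consecutive years of DI reading.

    `enrollments` is an iterable of (student_id, year, program) tuples,
    where `year` is an integer implementation year (hypothetical layout).
    """
    # Collect the years in which each student received a DI reading program.
    years_by_student = defaultdict(set)
    for student_id, year, program in enrollments:
        if program in READING_PROGRAMS:
            years_by_student[student_id].add(year)

    eligible = set()
    for student_id, years in years_by_student.items():
        run = 0
        previous = None
        for year in sorted(years):
            # Extend the run if this year directly follows the previous one.
            if previous is not None and year == previous + 1:
                run += 1
            else:
                run = 1
            previous = year
            if run >= min_consecutive_years:
                eligible.add(student_id)
                break
    return eligible


# Made-up records for illustration only; not study data.
sample = [
    ("S01", 1, "Reading Mastery"),
    ("S01", 2, "Corrective Reading-Decoding"),
    ("S02", 1, "Headsprout Early Reading"),
    ("S02", 3, "Reading Mastery"),           # gap in year 2
    ("S03", 2, "Language for Learning"),     # other ELA content
    ("S03", 3, "Language for Learning"),
]
print(sorted(eligible_students(sample)))
```

In this sketch, a student who received only other English language arts curricula (such as the hypothetical S03) is excluded, mirroring the distinction the study draws between reading programs and other ELA content.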

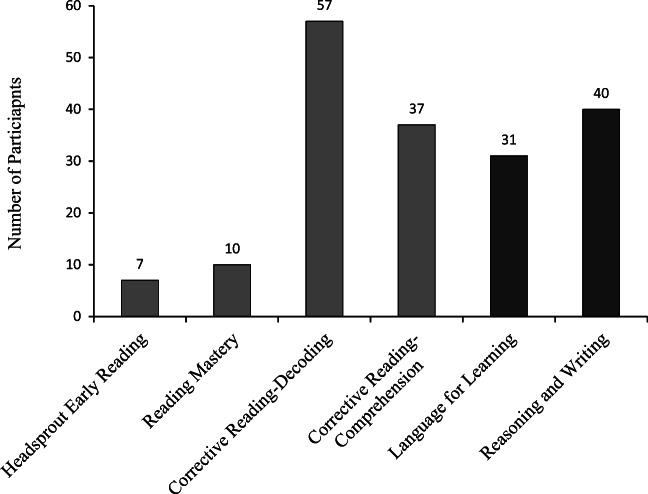

Figure 1 displays the number of participants who received instruction in each of the DI reading curricula, as well as DI curricula focusing on other English language arts content, at any point in time during which their reading scores were assessed as part of this study. Of the 67 students included in the analysis, 63 qualified for special education under the eligibility criteria for autism (94%), 1 qualified for special education for a cognitive disability, 1 for an emotional disability, 1 as other health impaired, and 1 as a student with a speech/language disorder. Students included in the evaluation of outcomes ranged in age from 6 to 19 years at the onset of instruction, with a mean age of 11.7 years. Results of the Clinical Evaluation of Language Fundamentals, 5th edition (Wiig et al., 2013), conducted with each student in the sample, indicated that 65% of the students presented with a severe language delay, 16% presented with a mild/moderate language delay, and 19% presented with language skills in the average range.

Fig. 1.

Number of Participants Receiving Instruction in Each DI Curriculum. Note: Data representing participation in Language for Learning and Reasoning and Writing have been darkened because they represent "other ELA content" and were not part of the participant inclusion criteria used for the study.

Implementation Team

Implementation rollout was led by two master’s-level board certified behavior analysts (BCBAs). The implementation team consisted of the school director (a parent of children on the autism spectrum and a licensed dietician), two administrative support staff, one licensed speech-language pathologist (SLP), and one licensed occupational therapist (OTR/L). The two BCBAs, the SLP, and the OTR/L collectively formed an interdisciplinary team that fulfilled a supervisory role for the school’s 19 classrooms.

DI Implementation Phases

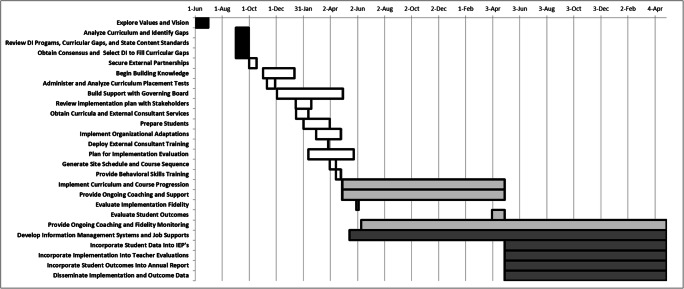

A comprehensive review of organizational records (e.g., meeting notes, emails, electronic files) was completed to create a detailed and chronologically organized description of the implementation steps that were used to roll out, evaluate, and sustain the DI implementation. These steps align with the phases of implementation identified within the EPIS framework. Use of the EPIS framework to describe the process adds important context with which to examine and effectively communicate a complex process of systemic change. Figure 2 illustrates the overall implementation project, with each implementation step illustrated in terms of its occurrence within the process, approximate duration, and implementation phase according to the EPIS framework.

Fig. 2.

Timeline of Implementation Activities According to Implementation Phase within the EPIS Implementation Framework. Note: Exploration activities are shown in black. Preparation activities are shown in white. Implementation activities are shown in light gray. Sustainment activities are shown in dark gray.

Exploration Phase (4 Months Duration)

The EPIS framework identifies the first phase of implementation as the exploration phase. During this phase of implementation, an organization is aware of a clinical or public health need and starts examining ways to address the need (Aarons et al., 2011; Moullin et al., 2019). Specific steps completed as part of the school’s exploration process related to DI implementation are described below:

Step 1: Explore values and vision

The implementation process began when the implementation team facilitated a series of internal meetings with teachers. The meetings focused on reviewing the organization’s mission (i.e., values clarification), reviewing the current service delivery model, identifying pain points currently experienced by teachers, examining a description of an alternative service-delivery model (i.e., a vision), and exploring perceived advantages and disadvantages associated with a potential shift. The description of the alternative service-delivery model incorporated many aspects of a site-wide implementation of DI, including grouping and regrouping students based on instructional content and student ability, but did not mention DI by name.

Perceived advantages shared by teachers throughout the meetings included potential increases in opportunities for collaboration/teamwork, opportunities to improve programming for generalization of student skills, increased opportunities to work with a variety of students, increased variety of activities for students, increased exposure to peers, and increased opportunities to practice flexibility. Perceived disadvantages shared by teachers included increased requirements to trust other teachers to effectively manage students on another teacher’s caseload, potential increases in behavioral challenges if the current system were to be changed too quickly, the possibility that increased transitions could diminish close relationships between teachers and their students, the possibility of increased stress for students, and difficulty implementing behavior support plans with students across various settings. All teacher feedback from these meetings was documented and subsequently distributed to meeting participants.

Step 2: Analyze curriculum and identify gaps

Members of the implementation team, in partnership with several classroom teachers, facilitated a systematic review of the school’s current curriculum. The process occurred within the context of a volunteer Curriculum Committee that met monthly. The analysis revealed that the school’s curriculum had relative strengths in terms of tools for assessing and teaching adaptive behavior, but it exposed a significant gap in the availability of evidence-based curricular materials to address academic content areas.

Step 3: Review DI programs, curricular gaps, and state content standards

Members of the implementation team had previous exposure to DI curricula and identified them as a good fit to fill the current curricular gap. To facilitate additional buy-in from various stakeholders, a systematic review of various DI programs and their alignment with the state’s academic content standards was completed. The results of the review indicated strong alignment between various DI programs and state content standards. Results of the review were shared with relevant stakeholders (including teachers and school board members) at various stages throughout the implementation process.

Step 4: Obtain consensus and select DI to fill curricular gap

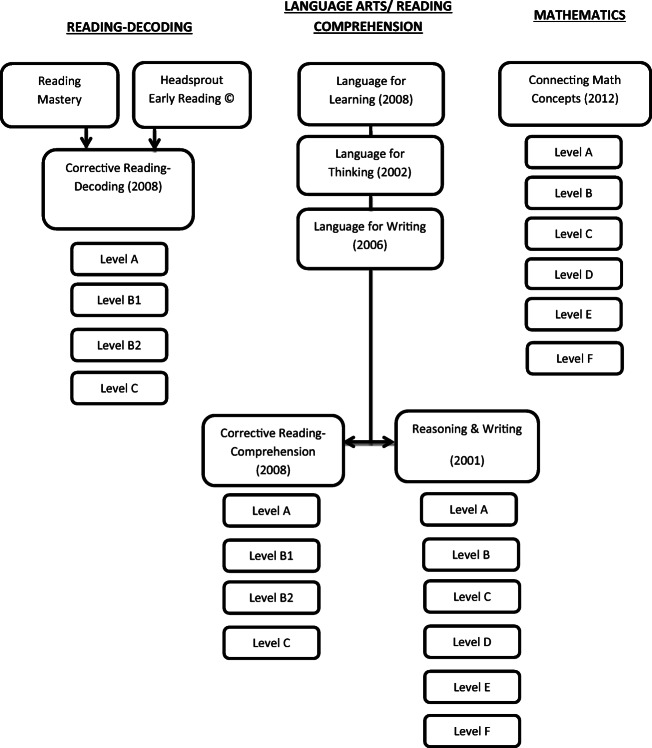

Upon the recommendation of the implementation team, and after reviewing alignment with the state content standards, the Curriculum Committee reached consensus to initiate implementation of DI. The following DI curricula were selected for implementation: Language for Learning (Engelmann & Osborn, 2008b), Language for Thinking (Engelmann & Osborn, 2002), Language for Writing (Engelmann & Osborn, 2006), Headsprout Early Reading©, Reading Mastery (Engelmann & Osborn, 2008a), Corrective Reading-Decoding (Engelmann et al., 2002), Corrective Reading-Comprehension, Reasoning and Writing (Engelmann et al., 2002), and SRA Connecting Math Concepts (Engelmann et al., 2012).

Preparation Phase (7 Months Duration)

The EPIS framework describes the preparation phase as the point at which implementation efforts shift from identifying an evidence-based practice to preparing for implementation. Efforts in this phase are focused on identification of barriers and facilitators to implementation, identification of potential adaptation needs, and the development of a detailed implementation plan. Specific steps completed as part of the school’s preparation process prior to the onset of DI implementation are described below.

Step 1: Secure external partnerships

Members of the implementation team initiated a collaborative partnership with a local university professor who had extensive experience with DI programs. Over the course of the implementation process, guidance provided through this partnership included the provision of sample curricular materials for teachers to examine, relevant literature regarding effectiveness of DI, a recommendation to hire an external consultant to provide initial training, a recommendation of an evaluation tool that could be used to monitor student outcomes, and the provision of ongoing moral support to the implementation team.

Step 2: Begin building knowledge

The implementation team distributed literature related to DI to all teachers to formally introduce them to DI, its features, and its effectiveness with individuals on the autism spectrum. Materials were shared via email for voluntary consumption and included Barbash (2012) and Flores and Ganz (2009). At the same time, samples of the DI programs were made available on-site for teachers to begin exploring.

Step 3: Administer and analyze curriculum placement tests

Teachers were provided with placement tests for all programs targeted for rollout along with instructions for test administration. Completed placement tests were turned in to the implementation team and analyzed to arrange initial student groupings, assign instructional staff for each group, and guide initial curricula purchasing decisions. Initial instructional groupings included a lead teacher and at least one paraprofessional to provide support for each group.

Step 4: Build support with governing board

The implementation team began delivering a series of monthly presentations to the school’s Board of Directors. The content of these monthly presentations advanced over the course of the implementation process but ultimately included: (1) a review of research literature related to DI and its effectiveness across a variety of populations—including students with autism; (2) an overview of curricular analysis and gaps in programming; (3) anticipated rollout costs for initial purchase of curricular materials and professional development with an external consultant; (4) an overview of the implementation plan including staff training activities, methods for developing instructional groupings, methods for evaluating student outcomes, and methods for evaluating fidelity of program implementation; and (5) implementation progress updates.

Step 5: Review implementation plan with stakeholders

The implementation team began holding weekly rollout meetings with teachers. The content of these meetings evolved over the course of the implementation process but ultimately included (1) an overview of the implementation plan; (2) discussion and planning of organizational adaptations required to support implementation (e.g., following a master schedule of classes, rearranging scheduled activities that would conflict with DI classes, activating a transition cue to signal class changes); and (3) ongoing discussion of questions and concerns. The implementation plan that was shared with teachers included key staff training activities that would occur, methods used for developing instructional groupings, methods to be used for evaluating student outcomes and guiding decisions to continue implementation beyond the initial year, methods for supporting fidelity of implementation, and a timeline of when key events were planned to occur.

The implementation team also initiated communication with parents of students at this time to provide an overview of the upcoming curricular adjustments. An informational memo provided to parents included (1) a brief overview of the history of DI; (2) an overview of some of the key features of DI; and (3) links to websites for the National Institute for Direct Instruction and the Association for Direct Instruction for parents to seek out additional information.

Step 6: Obtain curricula and external consultant services

The implementation team began working with a representative from McGraw-Hill Education to identify and secure curricular materials as well as a DI consultant to provide on-site in-service training. Results of placement testing were used to guide initial purchases.

Step 7: Prepare students

The implementation team organized a series of teacher meetings to discuss specific methods to begin preparing students for successful participation in DI programs. Sample lesson plans (Appendix A) focusing on teaching students how to chorally respond to teacher questions and instructions were distributed to teachers. Teachers were instructed to begin implementing the lesson plans with their students. Ongoing coaching and support from members of the implementation team was provided to teachers to support implementation of the choral response lesson plans and to ensure the regular occurrence of practice.

Step 8: Implement organizational adaptations

The implementation team began overseeing a variety of adaptations to everyday school procedures to remove barriers to site-wide DI implementation. Specific adaptations that were required included modifications to scheduled school-wide activities (e.g., gym, lunch, recess) to ensure all students were available for instruction at the same time, testing of the school’s public address system to signal class changes, and role-played practice sessions with students to support their ability to make the transition between various classrooms in response to the public address system.

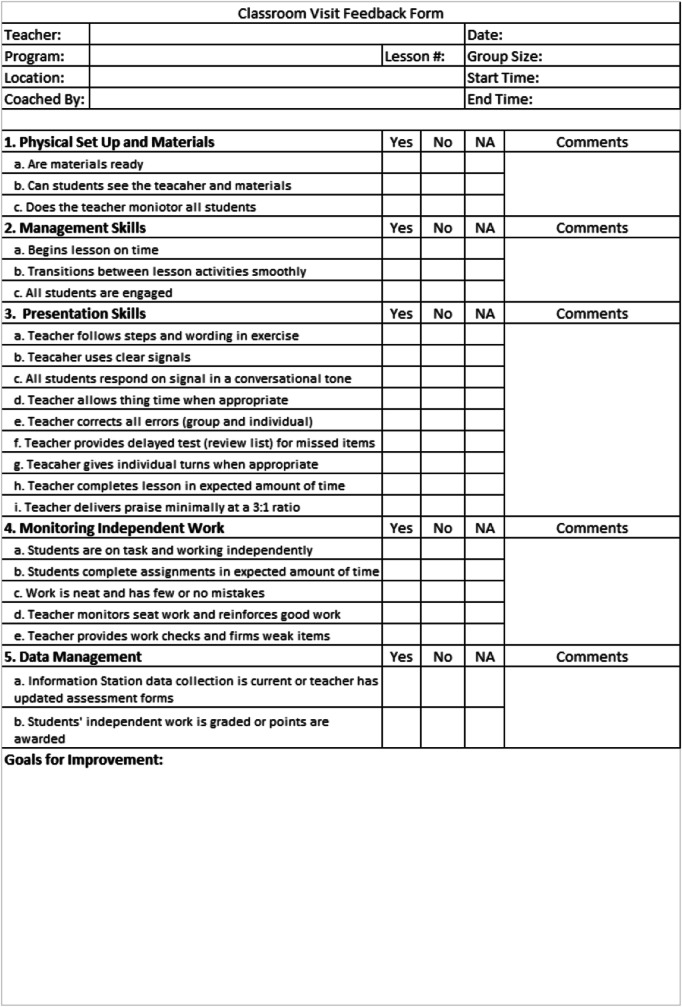

Step 9: Deploy external consultant training

An 8-hour in-service was provided by a DI consultant from McGraw-Hill Education. The in-service included an overview of DI curricula to be used in the school, demonstration of components of DI lesson delivery (including presentation of the script, signaling, and error correction methods), and opportunities for role-playing of lesson delivery with immediate feedback from the consultant. At this time, the consultant also provided the implementation team with a 20-item fidelity checklist (Appendix B) that would be used to guide coaching and feedback sessions throughout the implementation effort.

Step 10: Plan for implementation evaluation

Prior to the initial delivery of DI curricula to students, the implementation team began outlining a plan to evaluate the success of the implementation effort. Two specific variables were identified as important within the evaluation plan: (1) evaluation of DI implementation fidelity following initial rollout, and (2) evaluation of student outcomes. Efforts to support implementation fidelity were also outlined at this time and were to include ongoing classroom observations and coaching sessions completed by the internal implementation team. These observations and coaching sessions would be guided by the 20-item fidelity checklist provided by the external DI consultant. The checklist focused on the following areas: physical set-up and materials, classroom management skills, DI presentation skills, and monitoring of independent work. In addition, the DI consultant who provided the initial DI in-service was contracted for a second on-site visit to conduct an independent evaluation of initial implementation fidelity.

The evaluation plan for student outcomes included both formative and summative measures of student achievement. For the formative assessment of student achievement, ongoing comparison of pre- and posttest measures from DI mastery tests would be used. For the summative measure, the implementation team planned annual administrations of the KTEA-II Brief Form. One month prior to the implementation of DI curricula, all participating students were administered the KTEA-II Brief Form to obtain baseline scores in reading, writing, and mathematics.

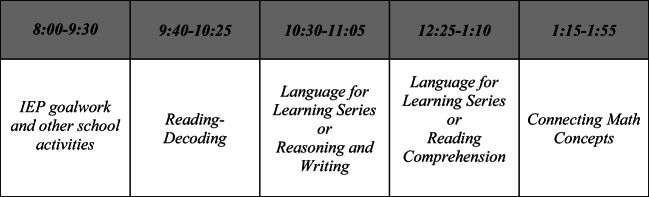

Step 11: Generate site schedule and course sequence

Because the implementation included multiple DI programs to be implemented simultaneously on a school-wide basis, a master schedule was developed to indicate when particular curricula would be implemented and for how long each day. The schedule was developed to match recommended durations within the manuals for each of the DI curricula selected. The daily schedule for each classroom also included 1.5 hr allotted for supplemental instruction focused on individual IEP goals and other classroom group activities such as art, gym, and social games. Figure 3 shows the daily schedule used throughout this implementation. It should be noted that two separate schedules were implemented, resulting in different levels of intervention intensity. DI programming occurred 5 days per week for participating school-age students and 3 days per week for participating students who were in classrooms serving transition-age students. This distinction was made to ensure adequate instructional time for community and work-based experiences for older students. In addition, a course progression map was created to outline the sequence in which students would progress through curricula (see Figure 4).

Fig. 3.

Daily DI Schedule Used within Site-Wide Implementation

Fig. 4.

DI Course Sequence Map Used within Site-Wide Implementation

Step 12: Provide behavioral skills training

Prior to delivery of DI content to students, the implementation team conducted four separate 1-hr training sessions to allow all DI instructors to practice delivering 15-min sample DI lessons. Training sessions occurred in a group format with one instructor delivering a lesson and the remaining group members role-playing as students. Each DI instructor completed two 15-min sample lessons that included an opportunity to practice error correction procedures. Following each lesson, immediate performance feedback was provided to the trainee by other trainees as well as members of the implementation team.

Implementation Phase (12 Months Duration)

The EPIS framework describes the implementation phase as the point at which implementation of the selected evidence-based practice begins to occur under the guidance of the support activities that occurred during the preparation phase (Moullin et al., 2019). Specific steps completed as part of the school’s implementation of DI programs are described below.

Step 1: Implement curriculum and course progression. Teachers began implementation of DI curricula according to the planned school schedule. Students progressed through the DI curricula upon passing each program’s mastery tests and according to the planned course progression map.

Step 2: Provide ongoing coaching and support. Immediately upon the onset of DI curriculum implementation, members of the implementation team began ongoing coaching and support activities. Activities completed included regular classroom observations and coaching sessions guided by the 20-item DI fidelity checklist that was provided by the external consultant. Following an observation, if critical steps related to DI curriculum implementation were not observed to be occurring correctly, behavioral skills training (BST; Parsons et al., 2012) was provided. BST consisted of reviewing critical implementation components, modeling correct implementation, providing an immediate opportunity to practice, and delivering immediate performance feedback. Related to implementation fidelity, it should be noted that there were no predetermined fidelity criteria that DI instructors were required to meet prior to being assigned to deliver DI lessons to students. Upon roll-out, DI curricula became the school’s core academic curricula. As such, even if implementation fidelity were observed to be poor with a particular instructor, withholding instruction until fidelity improved was not an option.

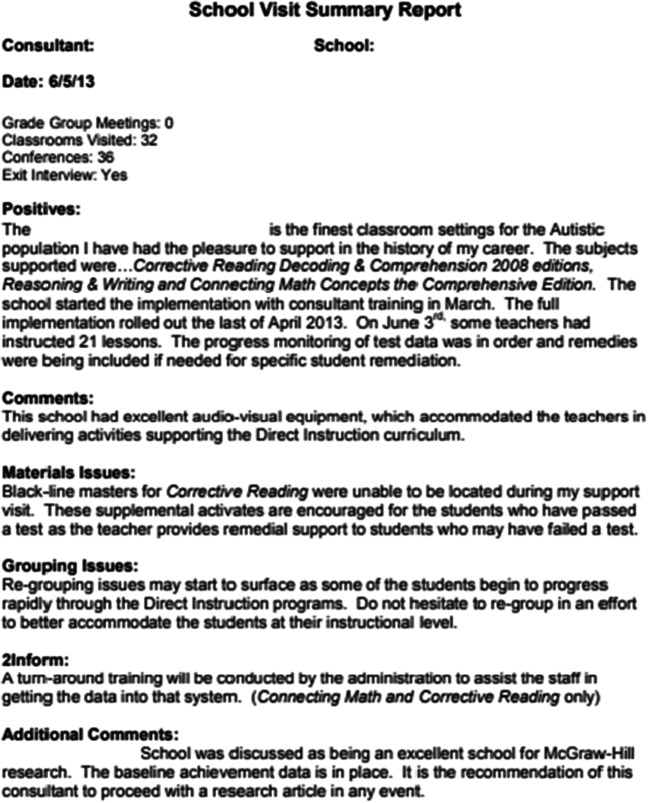

Step 3: Evaluate implementation fidelity. As outlined in Step 10 of the preparation phase, the external DI consultant returned to the school to conduct a 4-day independent evaluation of the school’s DI implementation. Prior to the visit, the implementation team developed an observation schedule to ensure that all staff members who were delivering DI content would have an opportunity to be observed multiple times during the visit, and across implementation of various DI programs. During the 4-day evaluation, the DI consultant completed a series of DI classroom observations using the 20-item DI fidelity checklist to provide immediate feedback to instructional staff. In addition to items on the checklist, the form provided space for specific comments from the observer. In total 36 observations of DI instruction were completed across the 4-day evaluation. Following the visit, the DI consultant provided an independent report to the school summarizing the visit. Although quantitative fidelity data were not provided within the report, qualitative information indicated that the school’s DI implementation was exceptional. A redacted copy of that report is provided in Figure 5. It should be noted that within the consultant’s report, there was a recommendation that McGraw-Hill consider the school for research. A follow-up email recommending research be conducted at the school was sent by the DI consultant to a representative at McGraw-Hill shortly after completing the evaluation.

Step 4: Evaluate student outcomes. Formative evaluation of student outcomes began immediately upon the implementation of DI programs. Teachers administered each DI mastery test to students twice, once prior to delivering instruction on the lessons that would be covered on the mastery test and once after students had completed instruction on those lessons. Results of the pre- and posttests provided immediate opportunities for teachers to examine student outcomes.

Fig. 5.

Redacted Fidelity Report from External DI Consultant Evaluation

Summative evaluation began 1 year after implementation of DI curriculum was initiated and continued on an annual basis thereafter. Teachers re-administered the KTEA-II Brief Form assessment to each student and results were compared across annual administrations.

Sustainment Phase (Ongoing)

The EPIS framework describes the sustainment phase as the point at which an organization’s implementation efforts shift to ongoing analysis of implementation variables, processes, and supports to ensure that the evidence-based practice can continue to be delivered (Aarons et al., 2011; Moullin et al., 2019). Specific steps in the school’s sustainment efforts supporting ongoing implementation of DI are described below.

Step 1: Provide ongoing coaching and fidelity monitoring. The implementation team developed a schedule to ensure ongoing coaching and fidelity monitoring continued in the absence of the external DI consultant. Each member of the implementation team was responsible for conducting regular observations guided by the DI fidelity checklist, with follow-up coaching provided as needed. As trends were noted related to implementation fidelity issues, larger group meetings were held to conduct additional training/coaching.

Step 2: Develop information management systems and job supports. The implementation team developed a series of additional processes and job supports to help guide various aspects of DI implementation. For example, a system of monthly data collection was developed to track the instructional pacing of each DI group. This information was helpful in regrouping students due to differences in rates of skill acquisition over the course of the implementation. In addition, a variety of checklists were developed to standardize the means by which teachers organized and shared student records related to DI coursework, such as pre- and posttest records for various DI mastery tests. Lastly, mock student IEP documents were developed to serve as models for teachers to support and guide their inclusion of specific information related to DI courses into student IEPs in a consistent manner.

Step 3: Incorporate student data into IEP. Implementation team members ensured that data related to pre- and posttest scores on DI mastery tests as well as results of annual KTEA-II Brief Form testing were included in the student profile section of every student’s IEP. The organizational expectation for including this information into student IEPs began to tie specific DI implementation steps to federally mandated special education processes, which was also intended to help sustain DI implementation.

Step 4: Incorporate DI implementation into teacher evaluations. The implementation team began incorporating information from classroom observations focused on DI implementation into state-mandated annual teacher evaluations. This helped tie organizational expectations for DI implementation to state-level educational processes. This step was intended to reinforce for teachers the value of DI implementation fidelity.

Step 5: Incorporate student outcomes into an annual report. At an organizational level, annual analysis of student outcomes using data from the KTEA-II Brief Form became an item that was regularly included in the organization’s annual report. Because the organization is a nonprofit (501(c)(3)), it is federally required to file annual reports updating the Internal Revenue Service on its activities, income, and financial status.

Step 6: Disseminate implementation and outcome data. Data from DI implementation efforts, including information related to roll-out and student outcomes, were shared at professional conferences. Dissemination efforts, including potential for publication of the organization’s DI implementation process and outcome data, highlight the importance of implementing evidence-based educational strategies.

Results

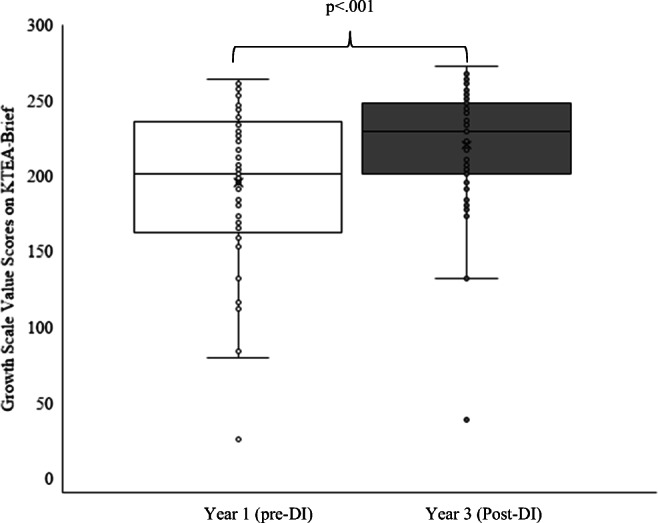

Figures 6, 7, and 8 and Table 1 show the results of the DI implementation process on student outcome measures in reading. Figure 6 shows the distribution of all individual student KTEA-II Brief Form GSV scores for reading (N = 67), before and after 2 years of DI reading instruction. At year 1, before DI, the average student GSV score for reading was 195.1 (range: 25–264). At year 3, after 2 years of DI, the average student GSV score for reading was 220.3 (range: 38–273). To determine if the differences in group scores were significant, a paired samples t-test was conducted using Microsoft EXCEL©. Results indicated that after 2 years of DI the difference in scores reached statistical significance, t(66) = 7.72, p < .001.
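As a rough consistency check, a paired-samples t value can be recomputed from summary statistics alone: t is the mean of the paired differences divided by its standard error. The sketch below assumes that the change-score mean and standard deviation reported later in the Results (M = 25.2, SD = 26.8) describe the same 67 paired differences. The function name is illustrative, and this is not the authors' Excel workflow.

```python
import math


def paired_t_from_summary(mean_diff, sd_diff, n):
    """Return (t, degrees of freedom) for a paired-samples t-test,
    computed from the mean and SD of the paired differences."""
    standard_error = sd_diff / math.sqrt(n)
    return mean_diff / standard_error, n - 1


# Summary values as printed in the article (rounded to one decimal place).
t_value, df = paired_t_from_summary(mean_diff=25.2, sd_diff=26.8, n=67)
print(f"t({df}) = {t_value:.2f}")
```

With these rounded inputs the result is approximately 7.70 on 66 degrees of freedom, close to the reported statistic. A gap of this size is what one would expect from rounding in the published summary values.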

Fig. 6.

Comparison of KTEA-II Growth Scale Value Scores. Note. Each student was evaluated at varying times within the 5-year evaluation period.

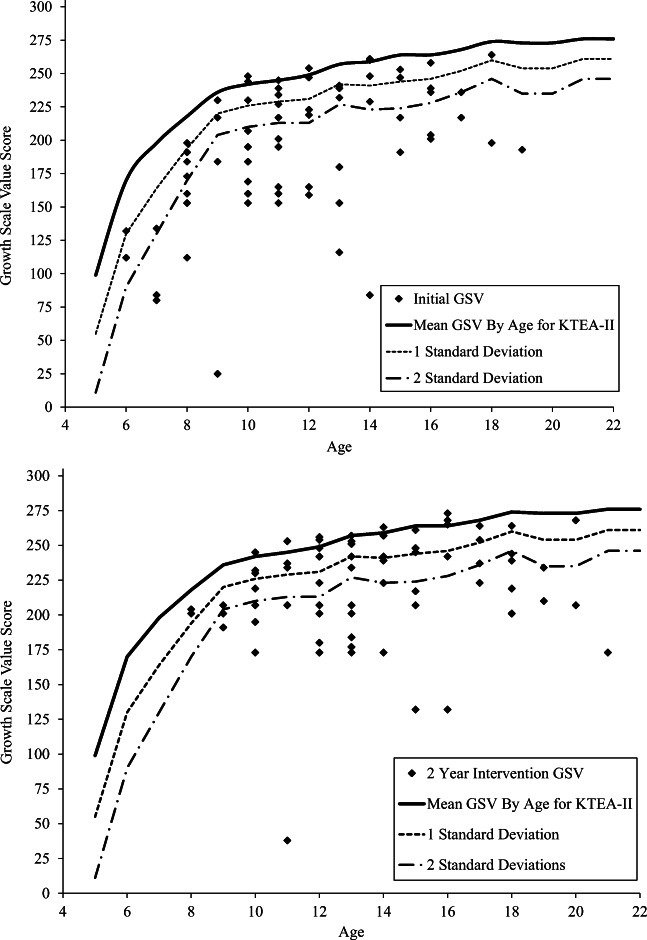

Fig. 7.

KTEA-II Brief Form GSV Scores for Students by Age, Pre- (top), and Post-DI (bottom)

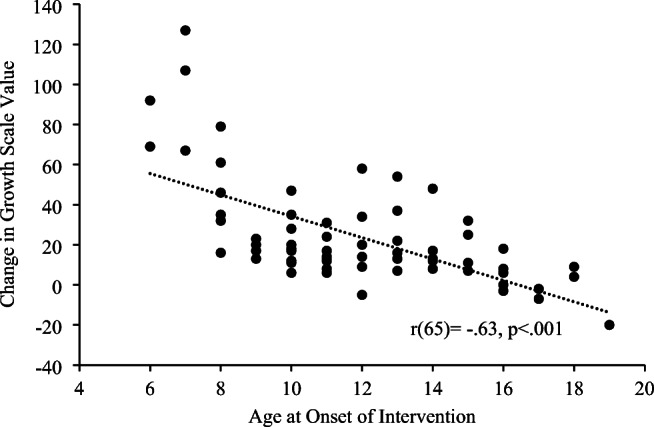

Fig. 8.

Relationship between Age at Onset of Intervention and Change in Growth Scale Value Score

Table 1.

KTEA-II Brief Form GSV Scores—Participant Makeup

| | Initial Assessment | Postintervention | Change |
|---|---|---|---|
| Number of GSV Scores at or above Mean | 6 | 9 | +3 |
| Number of GSV Scores within 1 SD of Mean | 12 | 20 | +8 |
| Number of GSV Scores below 1 SD of Mean | 49 | 38 | -11 |
| Total | 67 | 67 | |

Figure 7 shows the same student scores arranged according to age on the x-axis and GSV value on the y-axis. The thick black line represents the mean GSV for reading by age according to the KTEA-II Brief Form manual. Dotted lines illustrate 1 and 2 standard deviations (SDs) from the mean, respectively, as found in the KTEA-II Brief Form manual. Results indicate that upon initial assessment (top panel) with the KTEA-II Brief Form 6 participants (9%) had reading GSV scores that were at or above the mean for their age, 12 participants (18%) had reading GSV scores within 1 SD of the mean for their age, and 49 participants (73%) had reading GSV scores that were below 1 SD of the mean for their age. Results indicate that following 2 years of intervention with DI reading curricula (bottom panel), 9 participants (13%) had reading GSV scores that were at or above the mean, 20 participants (30%) had reading GSV scores that were within 1 SD of the mean, and 38 participants (57%) had reading GSV scores below 1 SD of the mean.

Table 1 further explores the changes observed in reading GSV scores between initial assessment and postintervention. Participant GSV scores were sorted following initial assessment, and again postintervention, into one of three categories: (1) at or above the normative sample mean for their age group, (2) within 1 SD below the normative sample mean for their age group, or (3) more than 1 SD below the normative sample mean for their age group. Results indicate that the number of participants with reading GSV scores more than 1 SD below the mean for their age group decreased by 11 across initial and postintervention assessments. At the same time, the number of participants with reading GSV scores within 1 SD below the mean for their age group increased by 8 and the number of participants with reading GSV scores at or above their age group mean increased by 3. These data indicate that at least 11 of the participants experienced changes in reading GSV scores large enough to move them into a higher category relative to their age group norms, which may indicate higher than expected rates of learning.
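The three-way sort described above can be written as a small function. In the sketch below, the age-group mean and SD passed to `classify_gsv` are made-up placeholders, because the KTEA-II Brief Form manual values are not reproduced in this article. Treating a score exactly 1 SD below the mean as "within 1 SD" is also an assumption. The category counts come from Table 1, and the code recomputes the percentage of the 67 participants and the net change for each category.

```python
def classify_gsv(score, norm_mean, norm_sd):
    """Sort a GSV score into one of the three categories used in Table 1.
    Boundary handling (>=) is an assumption, not taken from the article."""
    if score >= norm_mean:
        return "at_or_above_mean"
    if score >= norm_mean - norm_sd:
        return "within_1_sd_below"
    return "more_than_1_sd_below"


# Hypothetical norms for a single age group (NOT from the KTEA-II manual).
example = classify_gsv(score=205, norm_mean=230, norm_sd=20)

# Category counts reported in Table 1: (initial assessment, postintervention).
counts = {
    "at_or_above_mean": (6, 9),
    "within_1_sd_below": (12, 20),
    "more_than_1_sd_below": (49, 38),
}
n = 67

for name, (pre, post) in counts.items():
    print(f"{name}: {pre / n:.0%} -> {post / n:.0%} (change {post - pre:+d})")

# Every participant is counted once at each time point, so the changes sum to 0.
net_change = sum(post - pre for pre, post in counts.values())
```

Because each participant falls in exactly one category at each assessment, the three changes (+3, +8, -11) cancel out. The counts therefore show net movement between categories, not which individual students moved.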

A Pearson’s r correlation was conducted using Microsoft EXCEL© to evaluate the strength of the relationship between age at the onset of DI (M = 11.7, SD = 3.2) and change in the GSV score (M = 25.2, SD = 26.8). As shown in Figure 8, there was a significant negative correlation between age at onset of DI and change on the reading GSV, r(65) = -.63, p < .001. In other words, age was inversely related to change in reading GSV score: younger participants experienced larger changes in reading GSV than older students. However, increases in reading GSV were still observed for students who were up to 17 years old at the onset of DI reading intervention.
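To illustrate how the significance of a Pearson correlation follows from r and the sample size, the sketch below converts the reported r = -.63 with 65 degrees of freedom into a t statistic using t = r * sqrt(df / (1 - r^2)). It also computes r^2, the share of variance in GSV change associated with age at onset. The function name is illustrative, and this is only a check from the published summary values, not a reconstruction of the authors' Excel analysis.

```python
import math


def t_from_r(r, df):
    """Convert a Pearson correlation into the equivalent t statistic,
    where df = n - 2 for a simple bivariate correlation."""
    return r * math.sqrt(df / (1.0 - r * r))


r, df = -0.63, 65          # values as reported in the article
t_value = t_from_r(r, df)
r_squared = r * r          # proportion of shared variance

print(f"t({df}) = {t_value:.2f}, r^2 = {r_squared:.2f}")
```

The resulting t of about -6.54 is far larger in magnitude than the two-tailed critical value for p = .001 at this sample size, which is consistent with the reported p < .001. An r^2 of about .40 suggests that age at onset is associated with roughly 40% of the variance in GSV change. Because the analysis was not prospective or controlled, this is an association and does not establish a causal effect.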

Discussion

The purposes of this article are twofold. First, as the evidence-base supporting the efficacy of DI for individuals with ASD continues to grow, the article aimed to provide valuable insight into the detailed steps taken to initiate and sustain a successful comprehensive site-wide DI implementation effort within a public school setting. For readers seeking to initiate implementation of DI within their own practice settings, the steps outlined within the current article may serve as a beneficial model and help promote successful implementation and long term sustainment.

Second, the current article aimed to add to the evidence-base regarding the effectiveness of DI by providing “real world” outcome data for 67 students who participated in at least 2 years of reading intervention with DI reading programs, delivered by special education teachers and paraprofessionals, within a public school setting. Resulting outcome data suggest that DI reading programs led to improved scores on standardized measures of academic achievement in the area of reading at a statistically significant level. We found that multiple students made progress at rates beyond what would be expected for their age, indicated by scores nearing or exceeding the mean for the KTEA-II Brief Form after DI intervention. These findings are promising, given that students with ASD need to learn at higher rates than their peers to “catch up.” Results from our study also indicated that age may be a significant variable in the effectiveness of DI. This finding must be interpreted with caution, however, because this result was not obtained as a part of a prospective, controlled analysis. Overall, these data indicate that DI can be effective when delivered within a “real world” setting that serves students of diverse ages and needs, and when delivered by licensed special education teachers and paraprofessionals.

Examples of systems to integrate DI into broad educational services have existed for decades in the National Project Follow Through (Engelmann et al., 1988) and Morningside Academy model (Johnson & Street, 2012). Yet, this is the first large-scale demonstration of DI with a population of students with ASD. Consistent with Smith et al.’s (2006) description of community effectiveness research, the implementers of DI in this evaluation were the actual classroom teachers and paraprofessionals native to the school. The facilitators were the supervisors, administrators, and key personnel also native to the school. External expert support was leveraged to ensure that the delivery of DI was of sufficient quality, but that support was used in strategic, short-term doses. These factors are important as they lend support to the notion that DI can be implemented without expert-level support on an ongoing basis, even when serving individuals with ASD with complex learning needs.

It should be noted that this is the largest pool of participants with ASD to be included in a study of DI. In this case, size matters as a strong demonstration of the generality of the effectiveness of DI. Participants were not specifically recruited nor excluded from evaluation. All students who attended the school during the evaluation period, and qualified for participation in DI programs based on results of individually administered curriculum-based placement tests, were included in the analysis of outcomes. The population of the present study is also unique because it includes individuals of various ages, developmental levels, and socioeconomic status. DI has been demonstrated as effective across diverse populations in terms of race, ethnicity, learning needs, and ages (Hattie, 2009; Stockard, in press). DI literature with learners with ASD has yet to achieve this degree of generality, but this study offers a meaningful contribution in this direction.

Results from the present study are highly promising because they demonstrate effectiveness on broad measures of achievement. Much of the DI literature with children with ASD has utilized the lesson tests or skills pulled from the lesson tests embedded in the curriculum as the dependent measure (e.g., Flores & Ganz, 2007, 2009; Ganz & Flores, 2009; Flores et al., 2013; Shillingsburg et al., 2015). This is logical, as DI intervention should lead to improvements on DI lesson tests. However, the importance of these findings may not be as immediately clear for learners with ASD as it is unclear to what extent progress on DI leads to progress outside of DI for members of this population. Evaluating progress on DI lesson tests alone is akin to the faulty practice of train and hope that Stokes and Baer (1977) cautioned against. Results with learners with and without disabilities have shown that DI can yield outcomes on broader achievement measures (Hattie, 2009). This study adds additional support by showing significant changes on the reading measures of the KTEA-II Brief Form. In a similar effort, Kamps et al. (2016) showed improvement on a measure from the Woodcock Johnson Reading Mastery Test (Woodcock et al., 2001) and a measure from the Dynamic Indicators of Basic Early Literacy Skills-DIBELS (Kaminski & Good, 1998). Future studies should build on these demonstrations by including measures considered important to stakeholders and aligned with relevant educational outcomes. Use of these measures will allow for meaningful comparisons to other educational procedures and practices, which will be useful as a means to support a decision-making process for educators facing an array of intervention choices (Cook & Odom, 2013; Odom et al., 2010).

Limitations

There are several limitations to this study that must be considered. To begin, the study was completed entirely within a “real-world” public charter school over a period of 5 years. During this time, there was a range of variables that may have affected (at least on a temporary basis) the fidelity with which DI was implemented at any given time. These variables include staff turnover, staff and student absenteeism, and changes in the frequency of demands placed on the implementation team to provide non-DI related support, such as increased time spent providing intensive support for newly enrolled students or students experiencing significantly challenging behavior. In addition, there was limited quantitative evaluation of DI fidelity across the duration of the study. For instance, although ongoing coaching and feedback were provided to individuals implementing DI throughout the implementation, the frequency and outcomes of the coaching sessions were not recorded. Furthermore, the measures of DI fidelity provided in the report from the external evaluator at the onset of DI implementation were strictly qualitative in nature. Although these are certainly limitations to providing a scientific measurement of procedural fidelity, in general we believe them to be consistent with practices of “real world” settings serving individuals on the autism spectrum. Although outcome data should be interpreted with caution in light of these unknowns, the positive results observed in terms of student growth may provide additional evidence of the overall utility and effectiveness of DI, even in “messy” or less than perfectly controlled circumstances.

A second limitation relates to the KTEA-II Brief Form assessments that were administered to participants as outcome measures within this study. Although these assessments were delivered by licensed special education teachers, no measures of procedural fidelity were undertaken to monitor the accuracy or fidelity of their administration. It should be noted that although this is a limitation of the current study, we do not believe it to be a common educational practice to monitor the fidelity of the administration of academic achievement assessments within typical public school settings.

A third limitation relates to the use of reading scores obtained on the KTEA-II Brief alone to evaluate outcomes of DI implementation for participants. Although implementation involved a series of DI programs focused on the areas of reading, oral language comprehension, critical thinking, and mathematics, participants were included in the outcomes analysis based solely on their involvement in a DI reading program for at least 2 years. Participant involvement in other DI programs was variable across individual study participants. We suggest that future studies could attempt to compare the effects of simultaneous participation in multiple DI programs versus participation in a single DI program.

A fourth limitation relates to the intensity with which participants received DI. As mentioned, school-age participants received DI 5 days per week. Students who were served in classrooms for transition-age students received DI 3 days per week to allow time for participation in community-based instruction and job training activities. Unfortunately, school records did not allow for precise sorting of participant data to accurately determine the extent that a given participant received 5-day-per-week DI or 3-day-per-week DI. Varying degrees of improvements in GSV scores for students over the age of 14 should therefore be viewed with this consideration in mind.

Lastly, although early implementation steps included an investigation into perceived advantages and disadvantages of DI instruction by various stakeholders prior to implementation, specific measures of social validity were not included postimplementation. Future studies might further analyze whether or not there are changes to stakeholder perspectives of DI following successful implementation and sustainment efforts. Likewise, the addition of social validity measures examining stakeholder perspectives related to the overall implementation effort (as well as individual steps included in the process) might advance our understanding of how best to arrange implementation processes in the most efficient manner.

Practical Considerations

With respect to the DI implementation process presented in this article, a few considerations related to the unique organizational characteristics of the setting are notable. First, the current implementation effort was carried out within a public charter school setting. Charter schools, by design, involve fewer bureaucratic processes and can therefore respond more readily to change initiatives such as the one discussed here. Likewise, incorporating DI implementation proficiency and student outcome data into teacher evaluations was a key step in the sustainment efforts described in this article. Similar steps, if attempted within a traditional public school setting, may encounter different barriers that could prevent their inclusion in the implementation process.

Second, this implementation effort was carried out at a school that employed two full-time BCBAs as curricular leaders. Both BCBAs had previous exposure to DI programs as well as experience with effective training methods such as BST. This organizational leadership characteristic may not be present in other settings and may have an impact on implementation efforts.

Third, the school in which the implementation effort occurred endorsed specific values within their mission statement that may have further influenced the willing adoption of DI programs. For example, the school’s mission statement explicitly stated that the school believed educational programs should be held accountable for providing effective programs and achieving outcomes that are socially valuable, functional, and acceptable. These values are largely consistent with many of the key philosophical principles of DI, such as the belief that all children can be taught, all children can improve academically and in terms of self-image, and all teachers can succeed if provided with adequate training and materials (Engelmann et al., 1988).

The current study attempts to add to the literature base regarding the effectiveness of DI when implemented on a large scale within a public school setting serving students with ASD. Results presented here, in conjunction with previous research that has experimentally demonstrated the efficacy of various DI lessons, strands, and whole programs, further support the categorization of DI as an evidence-based practice for individuals on the autism spectrum (see Steinbrenner et al., 2020). In addition, the current study attempts to describe explicit steps used within the implementation process in hopes that such a description can provide guidance to other practitioners. We hope this work serves to accelerate important conversations that may one day begin to bridge the gap that exists between the evidence of efficacy of DI with the ASD population and its unfortunate absence from widespread usage in school settings (Kim & Axelrod, 2005; Stockard et al., 2018; Viadero, 1999). Although the results presented here were positive with respect to both implementation and student outcomes, additional research will be needed to further refine understanding of specific variables and their impact on the successful implementation of DI.

Author’s Note

Joel Vidovic and Alice Shillingsburg contributed to study conception and manuscript preparation. Study design, material preparation, and data collection were performed by Joel Vidovic and Mary Cornell. Data analysis and written description were performed by Sarah Frampton and Joel Vidovic. The first draft of the manuscript was written by Joel Vidovic with major contributions from Sarah Frampton. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript. The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

The authors thank the following individuals for their contributions and support of the work described in this article: Mary Walters, Ed Cancio, Amy Mullins, Julie Carter, Alison Thomas, Tony Baird, Jeana Kirkendall, The Autism Model School Board of Directors, and the outstanding students, parents, teachers, and paraprofessionals of the Autism Model School.

Sample Lesson Plan to Teach Choral Response Skills

Objective:

Students will learn to chorally respond to a teacher-initiated question or instruction immediately upon hearing/seeing a designated teacher signal to do so.

Rationale:

This skill will rapidly increase the number of active responses that students can exhibit within a given lesson.

Active student response has been linked to student outcomes as well as increases in on-task behavior (or decreases in off-task behavior).

Choral response is a key component of Direct Instruction curricula.

Teaching Strategies/Procedures:

Throughout the school day and just prior to transitions to preferred activities (lunch, dismissal, breaks, recess, etc.), students will be asked to respond in unison, following a teacher signal, to known questions using choral response.

The teacher will inform all students of the signal that will indicate that it is time for them to speak. The teacher will also demonstrate the signal for students in advance of the first trial.

The signal will be one of the following: clap hands, snap fingers, ring a bell, tap word on board with finger, etc.

Each trial will consist of the teacher presenting a question that contains a short answer (one or two words), a small amount of wait time for students to formulate their response, immediately followed by the teacher giving the designated signal.

A token system will be displayed on a board in front of the classroom for students to observe progress towards completion of the task. Each practice opportunity will require students to earn between three and five tokens.

CORRECT RESPONSE

Correct responses will be considered to have occurred when ALL students respond in unison. Teacher will respond to correct responses by praising students and placing an X in one of the boxes on the token board.

INCORRECT RESPONSE

Incorrect responses will be considered to have occurred when NOT ALL of the students respond in unison. Teacher will respond to incorrect responses by praising several students who did respond and reminding all students that they will earn an X when ALL of the students respond. Teacher will NOT deliver attention (i.e., reminders or reprimands) to individual students who did not respond.

20-Item Fidelity Checklist

Declarations

Ethics Approval

This research study (Project # 2020 HS-13) was approved by May Institute Institutional Review Board for an exempt status under Federal regulation Category 1: 45 CFR 46.104(d)(1). It is deemed acceptable according to the Belmont Principles and the American Psychological Association’s Ethical Guidelines for the use of Human Participants.

Conflicts of Interest

No funding was received to assist with the preparation of this manuscript. The authors have no relevant financial or nonfinancial interests to disclose.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration & Policy in Mental Health & Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- Aarons, G. A., Ehrhart, M. G., Farahnak, L. R., & Sklar, M. (2014). Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annual Review of Public Health, 35, 255-274. 10.1146/annurev-publhealth-032013-182447.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). American Psychiatric Publishing.

- Barbash, S. (2012). Clear teaching: With Direct Instruction, Siegfried Engelmann discovered a better way of teaching. Education Consumers Foundation.

- Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychology. 2015;3(1):32. doi: 10.1186/s40359-015-0089-9.

- Becker WC, Gersten R. A follow-up of Follow Through: The later effects of the Direct Instruction Model on children in fifth and sixth grades. American Educational Research Journal. 1982;19(1):75–92. doi: 10.3102/00028312019001075.

- Cook BG, Odom SL. Evidence-based practices and implementation science in special education. Exceptional Children. 2013;79(3):135–144. doi: 10.1177/001440291307900201.

- Engelmann, S., Becker, W. C., Carnine, D., & Gersten, R. (1988). The direct instruction follow through model: Design and outcomes. Education & Treatment of Children, 303–317.

- Engelmann, S., Haddox, P., Hanner, S., & Osborn, J. (2002). Corrective reading thinking basics. Science Research Associates/McGraw-Hill.

- Engelmann, S., Engelmann, O., Carnine, D., & Kelly, B. (2012). SRA connecting math concepts: Comprehensive edition. McGraw-Hill Education.

- Engelmann, S., & Osborn, J. (2006). Language for writing. SRA/McGraw-Hill.

- Engelmann, S., & Osborn, J. (2008a). Reading mastery: Signature edition. Science Research Associates/McGraw-Hill.

- Engelmann, S., & Osborn, J. (2008b). Language for learning. Science Research Associates.

- Engelmann, S., & Silbert, J. (1983). Expressive writing I. Science Research Associates.

- Fixsen D, Blase K, Metz A, Van Dyke M. Statewide implementation of evidence-based programs. Exceptional Children. 2013;79(2):213–230. doi: 10.1177/0014402913079002071.

- Flores MM, Ganz JB. Effectiveness of direct instruction for teaching statement inference, use of facts, and analogies to students with developmental disabilities and reading delays. Focus on Autism & Other Developmental Disabilities. 2007;22(4):244–251. doi: 10.1177/10883576070220040601.

- Flores MM, Ganz JB. Effects of direct instruction on the reading comprehension of students with autism and developmental disabilities. Education & Training in Developmental Disabilities. 2009;44(1):39–53. doi: 10.1177/10883576070220040601.

- Flores MM, Nelson C, Hinton V, Franklin TM, Strozier SD, Terry L, Franklin S. Teaching reading comprehension and language skills to students with autism spectrum disorders and developmental disabilities using direct instruction. Education & Training in Autism & Developmental Disabilities. 2013;48(1):41–48.

- Frampton, S., Munk, G. T., Shillingsburg, L. A., & Shillingsburg, M. A. (in press). A systematic review and quality appraisal of applications of direct instruction with individuals with autism spectrum disorder. Perspectives on Behavior Science.

- Ganz JB, Flores MM. The effectiveness of direct instruction for teaching language to children with autism spectrum disorders: Identifying materials. Journal of Autism & Developmental Disorders. 2009;39(1):75–83. doi: 10.1007/s10803-008-06002-2.

- Gersten R, Carnine D. Direct instruction mathematics: A longitudinal evaluation of low-income elementary school students. Elementary School Journal. 1984;84(4):395–407. doi: 10.1086/461372.

- Gersten R, Darch C, Gleason M. Effectiveness of a direct instruction academic kindergarten for low-income students. Elementary School Journal. 1988;89(2):227–240. doi: 10.1086/461575.

- Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge.

- Head CN, Flores MM, Shippen ME. Effects of direct instruction on reading comprehension for individuals with autism or developmental disabilities. Education & Training in Autism & Developmental Disabilities. 2018;53(2):176–191.

- Horner, R. H., & Albin, R. W. (1988). Research on general-case procedures for learners with severe disabilities. Education & Treatment of Children, 11(4), 375–388.

- Individuals with Disabilities Education Act (IDEA). (2004). 20 U.S. C. §§ 34 CFR 300.307 (2004).

- Johnson K, Street EM. From the laboratory to the field and back again: Morningside Academy's 32 years of improving students' academic performance. Behavior Analyst Today. 2012;13(1):20–40. doi: 10.1037/h0100715.

- Kaminski, R. A., & Good, R. H. (1998). Assessing early literacy skills in a problem solving model: Dynamic indicators of basic early literacy skills. In M. R. Shinn (Ed.), Advanced applications of curriculum-based measurement (pp. 113–142). Guilford.

- Kamps D, Heitzman-Powell L, Rosenberg N, Mason R, Schwartz I, Romine RS. Effects of reading mastery as a small group intervention for young children with ASD. Journal of Developmental & Physical Disabilities. 2016;28(5):703–722. doi: 10.1007/s10882-016-9503-3.

- Kaufman, A. S., & Kaufman, N. L. (2005). Kaufman Test of Educational Achievement, Second Edition, Brief Form. Bloomington, MN: NCS Pearson.

- Kim T, Axelrod S. Direct Instruction: An educators' guide and a plea for action. Behavior Analyst Today. 2005;6(2):111–120. doi: 10.1037/h0100061.

- Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the exploration, preparation, implementation, sustainment (EPIS) framework. Implementation Science. 2019;14(1):1. doi: 10.1186/s13012-018-0842-6.

- National Center for Education Statistics. (2020). Digest of education statistics 2019. Institute of Education Sciences. https://nces.ed.gov/programs/digest/d19/tables/dt19_204.30.asp?current=yes

- Odom SL, Collet-Klingenberg L, Rogers SJ, Hatton DD. Evidence-based practices in interventions for children and youth with autism spectrum disorders. Preventing School Failure: Alternative Education for Children & Youth. 2010;54(4):275–282. doi: 10.1080/10459881003785506.

- Parsons MB, Rollyson JH, Reid DH. Evidence-based staff training: A guide for practitioners. Behavior Analysis in Practice. 2012;5(2):2–11. doi: 10.1007/BF03391819.

- Shillingsburg MA, Bowen CN, Peterman RK, Gayman MD. Effectiveness of the direct instruction language for learning curriculum among children diagnosed with autism spectrum disorder. Focus on Autism & Other Developmental Disabilities. 2015;30(1):44–56. doi: 10.1177/1088357614532498.

- Smith T, Scahill L, Dawson G, Guthrie D, Lord C, Odom S, Rogers S, Wagner A. Designing Research Studies on Psychosocial Interventions in Autism. Journal of Autism and Developmental Disorders. 2007;37:354–366. doi: 10.1007/s10803-006-0173-3.

- Steinbrenner JR, Hume K, Odom SL, Morin KL, Nowell SW, Tomaszewski B, Szendrey S, McIntyre NS, Yücesoy-Özkan Ş, Savage MN. Children, youth, and young adults with autism. 2020.

- Stockard, J. (in press). Building a more effective, equitable, and compassionate educational system: The role of direct instruction. Perspectives on Behavior Science.

- Stockard J, Wood TW, Coughlin C, Rasplica Khoury C. The effectiveness of direct instruction curricula: A meta-analysis of a half century of research. Review of Educational Research. 2018;88(4):479–507. doi: 10.3102/0034654317751919.

- Stokes TF, Baer DM. An implicit technology of generalization. Journal of Applied Behavior Analysis. 1977;10(2):349–367. doi: 10.1007/BF03392465.

- Viadero D. Scripting success. Teacher Magazine. 1999;11(2):20–23.

- Watkins, C., & Slocum, T. (2004). The components of direct instruction. In N. E. Marchand-Martella, T. A. Slocum, & R. C. Martella (Eds.), Introduction to direct instruction (pp. 28–65). Allyn & Bacon. 10.1080/10573560490264125

- Weisberg, P. (1988). Direct Instruction in the preschool. Education & Treatment of Children, 11(4), 349–363.

- Wiig, E. H., Semel, E., & Secord, W. A. (2013). Clinical evaluation of language fundamentals (5th ed.; CELF-5). NCS Pearson.

- Woodcock, R. W., McGrew, K. S., & Mather, N. (2001). Woodcock Johnson III tests of achievement (WJ-III). Riverside Publishing.