Abstract

Like many Title I schools in the United States, the host site for this study in rural South Carolina reflects a widespread literacy crisis in the U.S. public education system. At this school, only 20% of third graders demonstrated proficient reading skills. Although highly effective precision teaching–based literacy intervention programs, such as the Fit Learning™ model, have been developed in the private sector, the extensive time and cost of training classroom teachers in those methods are prohibitive for struggling schools on tight budgets. The current study therefore sought to develop and test the feasibility of a truncated version of the Fit Learning™ model, dubbed Fit Lite™. Fourteen students identified by the school as “high risk” for literacy difficulties received the Fit Lite™ model in their after-school program. With expert oversight and only 1 week of training, 4 implementers with no prior experience using precision teaching or implementing Fit Lite™ produced promising reading improvements. Over approximately 12 weeks, the 14 students improved by an average of 16 percentile points relative to national norms on standardized progress-monitoring tools. Details of the Fit Lite™ model, the results achieved in this study, and considerations for future replications are described.

Keywords: at risk, early literacy, precision teaching, Read to Succeed

A literacy crisis persists in the United States: nearly two thirds of fourth and eighth graders nationwide cannot read proficiently, and only one in five Black students is a proficient reader (U.S. Department of Education, National Center for Education Statistics, 2019). Over the past 12 years, more than 30 states have passed third-grade reading laws incorporating prevention, intervention, and retention strategies (National Center for Learning Disabilities, 2019). These laws are intended to disrupt the premature transition from learning to read to reading to learn that often occurs when students advance from third to fourth grade. In kindergarten through third grade, reading instruction emphasizes the development of reading skills such as decoding and recognizing sight words (i.e., learning to read). In fourth grade, the focus shifts to using presumably developed reading skills to learn new information (i.e., reading to learn). Although there is some debate as to whether this transition truly occurs in schools, concern about what happens to struggling third-grade readers is grounded in troubling statistics. For example, children who do not read proficiently by the end of third grade are four times more likely to drop out of school, and children of color who are not reading proficiently in third grade are twice as likely as comparable White peers not to graduate from high school (Fiester, 2010).

In South Carolina, where only 32% of fourth graders read on grade level (U.S. Department of Education, National Center for Education Statistics, 2019), the Read to Succeed Act was enacted to address the problem by ensuring that all students read proficiently by the end of third grade. Beginning in the 2017–2018 school year, third graders who fail to demonstrate proficiency on the state’s summative reading assessment by the end of the year are retained (South Carolina Department of Education, 2019). Fifteen other states and Washington, DC, have also adopted mandatory retention policies (National Conference of State Legislatures, 2019), despite evidence that retention may have a myriad of negative effects on students’ academic achievement in all subject areas, including reading and language arts, as well as on their social, emotional, and behavioral outcomes (Hattie, 2009). Retention has been identified as the second greatest predictor of school dropout (Foster, 1993) and occurs most often among economically disadvantaged African American and Hispanic male students (Hattie, 2009), who are among the student groups with the highest high school dropout rates (U.S. Department of Education, National Center for Education Statistics, 2019).

In contrast to the extensive knowledge about practices with negative effects, such as retention, strong empirical evidence supports effective methods (Hattie, 2009). The National Reading Panel (NRP), convened at the request of Congress in 1997, conducted the largest, most comprehensive evidence-based review to date on how children learn to read (U.S. Department of Health and Human Services, 2019). The panel identified phonemic awareness, phonics, fluency, vocabulary, and comprehension as the five pillars of effective reading instruction (National Institute of Child Health and Human Development, 2000). The panel has not reconvened since, likely because the way in which children learn to read has not changed over the last 20 years.

Numerous examples of effective educational practices and models informed by behavior analysis exist. Perhaps the most notable is Project Follow Through (PFT), the largest and most expensive government-funded educational experiment to date. PFT was launched in 1967 in the wake of President Johnson’s War on Poverty, with the charge of identifying the most effective teaching methods for at-risk youth (Watkins, 1988). More than 22 educational models were evaluated with over 200,000 students. After 9 years, the results of the experiment were analyzed. One model, Direct Instruction (DI), yielded significantly better outcomes on measures of achievement than the other educational models.

DI, an instructional approach that uses planned, sequential, and systematic teaching of specified learning objectives, is built on the premise that controlling the details of content to minimize misinterpretation maximizes learning (National Institute for Direct Instruction, 2015). Accordingly, DI is well known for its evidence-based curricular programs that provide educators with scripted lessons and designed sequences for introducing concepts. Uppercase “DI” denotes empirically validated DI programs; lowercase “di” denotes a set of instructional variables inherent in the DI approach. These variables include student engagement and active responding, as well as specific and immediate feedback to students (Rosenshine, 1976).

Precision teaching (PT) is another widely applied behavior-analytic technology in education. Because it is a measurement approach and set of decision-making strategies, PT can be applied to any curriculum or teaching scenario (Binder & Watkins, 1990; Kubina & Yurich, 2012). Several guiding principles of PT have been instrumental in its effectiveness and in its adoption as a system for teaching in behavior analysis (Kubina & Yurich, 2012). Central to PT is the viewpoint that the learner knows best. From this perspective, a failure to learn is not attributable to internal traits or characteristics of a student; rather, a failure to learn indicates a failure to teach effectively. Additional principles that guide PT include a focus on observable behavior, the use of frequency as the primary measure of behavior, and the use of the standard celeration chart (SCC) to record those frequencies.

One of the most notable discoveries from PT research is the relationship between component-skill strength and the acquisition or emergence of related composite repertoires. Component skills involve the response constituents that come together in the execution of more elaborate skills. Haughton (1972) discovered that both frequency and accuracy of a component skill, rather than accuracy alone, were important for more complex learning when the component skill was necessary for the performance of more advanced skills. For example, it was discovered that classroom students who could read and write digits at a frequency of 100/min were more likely to acquire and master addition and subtraction (cf. Binder, 1996; Haughton, 1972; Starlin, 1972). In a similar manner, a variety of component skills comprise the terminal behavior of reading, such as the identification of letter names, letter sounds, and the skill of blending sounds into words. These components, when at sufficient strength (i.e., frequency and accuracy), facilitate a learner’s ability to decode unknown words.

One of the most noteworthy large-scale implementations of PT took place in Great Falls, Montana, in 1975. Student achievement was compared between control classrooms and experimental classrooms, in which students received a PT intervention in addition to regular instruction. In the PT classrooms, daily, timed practice on academic skills (e.g., solving math facts); standard celeration charting; and frequent decision making supplemented instruction. After 3 years, students in the experimental sites scored between 20 and 40 percentile points higher on standardized achievement tests than students in the control sites (Beck & Clement, 1991).

Several studies support the efficacy of PT for improving reading skills. Hughes, Beverley, and Whitehead (2007) evaluated the effects of a PT intervention targeting the frequency and accuracy of sight-word reading on standardized measures of reading. Compared to “treatment as usual” (i.e., standard reading support), the PT intervention improved sight-word reading for all participants, and two participants showed significant improvements on the standardized measures employed. In contrast, none of the participants in the control group improved on the standardized reading tests. Similarly, Lambe, Murphy, and Kelly (2015) used a PT intervention targeting sight-word reading to improve reading fluency. The accuracy and frequency of targeted skills improved for all participants, providing further support for the effectiveness of PT for improving reading skills.

More recently, Brosnan et al. (2016) employed a PT intervention to improve early reading skills in kindergarten students identified as at risk for reading difficulties. Namely, the intervention focused on building accuracy and frequency in four critically important components for reading: letter sounds, blending sounds into words, sight-word reading, and word decoding. These components reflect an emphasis on the NRP’s pillars of phonics, phonemic awareness, and fluency. Their results provide support for the effectiveness of PT for building accuracy and frequency in foundational reading skills. Moreover, the intervention yielded statistically significant improvements in the frequency of targeted component skills, and the effects were maintained months after the intervention was removed.

Curriculum-based measurement (CBM) is a progress-monitoring strategy largely influenced by behavior analysis and PT, and more than 30 years of research support its validity in predicting academic success (Deno, 1985, 1997, 2003). As a process of ongoing academic assessment, progress monitoring permits an analysis of the effectiveness of classroom instruction at the level of the individual student. CBM is one type of progress-monitoring approach that provides a distal lens for directly assessing and quantifying academic growth on content closely related to classroom instruction. CBM practices have been adopted into educational practice on a large scale within the response to intervention (RTI) and multitiered systems of support (MTSS) movements (Johnson & Street, 2013; Kubina & Yurich, 2012).

The influence of behavior analysis on CBM is reflected in the use of count-per-time measures of academic performance displayed graphically with real calendar time on the horizontal axis. With the use of time-series displays, frequent CBM assessments allow an educator to evaluate whether or not a student is responding to academic intervention through the comparison of past performance with current performance. This enables the educator to engage in expeditious instructional decision making to ensure students are making progress. In conjunction with independent variables of DI and PT, CBM as a dependent variable can create a powerful, evidence-based approach to education.

The Fit Learning™ Model

The Fit Learning™ model is an extensively researched program developed and refined over the past 20 years, initially by students and faculty in the behavior analysis program at the University of Nevada, Reno, and subsequently as an independent entity. The program combines behavior analysis, DI, PT, and CBM into one comprehensive approach. The method isolates core learning skills and teaches them to fluency, a measure of true mastery, through one-on-one instruction with a fully individualized curriculum. Each student serves as his or her own case study; thus, the scientific method is brought to bear on the educational process at the individual level. The Fit Reading™ program incorporates the NRP’s five pillars of effective reading education and consistently produces growth of one to two grade levels in 40 hr of instruction. The model is typically delivered in private learning laboratories by certified learning coaches, under the careful advisement of a clinical team.

Fit Lite™ Model

Designed for struggling readers and/or nonreaders in kindergarten through third grade, the Fit Lite™ model is a truncated version of the Fit Learning™ model. Fit Lite™ uses a personalized system of instruction (PSI) paradigm that allows each learner to progress through a curriculum at his or her own pace. Progression through targeted skills related to phonics, phonemic awareness, and fluency is determined by the achievement of specific measures of competency. The intended result of contact with the Fit Lite™ model is for learners to acquire an emerging, foundational literacy repertoire that will improve their ability to benefit from standard classroom instruction. Unlike the full Fit Reading™ model, which is supported by hundreds of case studies demonstrating its effectiveness for improving reading, the Fit Lite™ model does not yet have an empirical foundation. Thus, this investigation is the first effort to provide empirical support for the use of Fit Lite™ in reading intervention. The results of Brosnan et al. (2016) support the effectiveness of PT for kindergarten learners at risk for reading difficulty, and the current investigation seeks to extend the empirical basis for PT in two ways. First, we sought to replicate the emphasis in PT research on the pillars of phonics, phonemic awareness, and fluency, as embodied in the Fit Lite™ model, to improve reading. Second, we sought to extend the intervention to a population of third-grade students at risk for grade retention based on their reading abilities.

Method

Participants and Setting

This project took place in an elementary school in rural South Carolina where only 20% of third-grade students were reading proficiently according to standardized test score data found on the South Carolina Department of Education website. Fourteen third-grade students at risk for failure to pass the state’s summative assessment were selected for inclusion by the school’s principal and literacy coach. Twelve of the participants were male, and two were female. All of the participants were Black with the exception of one White male. All participants demonstrated basic vocal expressive and receptive communication skills and a following-instructions repertoire, and none displayed problem behaviors that would require physical redirection, such as aggression, self-injurious behavior, property destruction, or elopement. Fit Lite™ sessions were implemented in an unoccupied classroom at the school during after-school hours.

Independent Variable and Procedures

The Fit Lite™ model shares many key characteristics with the full Fit Reading™ model. Specifically, the shared features are (a) isolation and mastery of critical component skills for reading, (b) fluency-based mastery criteria, (c) a mechanism for mastery-based progression through lessons, (d) a one-on-one instructional format, (e) oversight by personnel certified in Fit Learning™, (f) a reinforcement system based on a token economy, and (g) common materials, including worksheets and other learning aids. However, in the current preliminary investigation, several features of the full model were removed to meet the needs of the host school in terms of implementation time and the availability of school personnel for training. The most notable deviations from the standard model were (a) sessions shortened from 50–55 min to 20 min; (b) fixed curriculum sequences instead of customized programming; (c) fixed fluency aims for all students rather than individualized aims; (d) post hoc analysis of data instead of real-time decision making; and (e) delivery of services by local university students who were trained by the first author but were intentionally not given the full scope of training required for certification as Fit Learning™ learning coaches, which typically takes hundreds of hours to achieve.

Facilitator training and oversight

Four undergraduate psychology majors from a local university were trained by a certified Fit Learning™ trainer (i.e., the first author) as facilitators to deliver the Fit Lite™ model. Facilitators participated in a 4-day behavioral skills training workshop during which they practiced until they demonstrated proficiency with the literacy components of the Fit Lite™ model. See Table 1 for the specific literacy components targeted and the associated frequency aims used to define proficiency for both facilitators and student participants. The framework of facilitator training involved a competency-based progression through the various skills required to implement the Fit Lite™ model with fidelity. Specific facilitator skills built to fluency included (a) a demonstration of fluency in the basic skills included in the Fit Lite™ curriculum as “the learner”; (b) a demonstration of fluency in the delivery of concept instruction using a DI approach; (c) the execution of practice exercises with appropriate materials, correct learning channels, timing lengths, and within-timing coaching strategies; (d) the use of between-timing feedback and error-correction procedures; (e) the use of the digital program Chartlytics for data recording on the SCC; (f) the use of standard procedures for CBM administration; and (g) the administration of the token economy system.

Table 1.

Behavioral Pinpoints and Frequency Aims

| Pinpoint | Frequency aim (correct/min) |
|---|---|
| Reading Readiness and Phonics | |
| F/S alphabet letter names | 200+ |
| S/S letter names | 80+ |
| S/S consonant letter sounds | 80+ |
| S/S vowel letter sounds | 80+ |
| H/S consonant letter names from sounds | 60+ |
| H/S vowel letter names from sounds | 60+ |
| S/S Fry sight words | 80+ |
| S/S words in repeated reading passages | 120+ |
| Phonetic Pattern 1: CVC/CVCE | |
| H/S blend word from sound | 10+ |
| S/S segment sounds / blend words | 120+ |
| S/S read words | 80+ |
| Phonetic Pattern 2: Consonant Blends/Digraphs | |
| H/S blend word from sound | 10+ |
| S/S segment sounds / blend words | 120+ |
| S/S read words | 80+ |

Note. F/S = free/say; S/S = see/say; H/S = hear/say; H/P/S/S = hear/point/see/say; CVC = consonant vowel consonant; CVCE = consonant vowel consonant e. Frequency aims are all reported as a count of correct responses per minute.

Immediately following the workshop, the first author supervised the launch of the after-school program, providing in situ behavioral skills training to the facilitators during the first 3 days of implementation. In situ behavioral skills training consisted of the first author providing additional instructions, modeling, role-play, and feedback as needed during live Fit Lite™ sessions. Subsequent oversight was conducted remotely. During the second and third weeks of implementation, the first author observed livestreamed after-school sessions for approximately 30 min per facilitator (i.e., 2 hr of observation in total). Specific praise was provided for aspects of the Fit Lite™ model that were implemented correctly (e.g., “Great job providing her points for hitting the goal!”), and coaching statements were provided for aspects implemented incorrectly or in need of prompting (e.g., “Remember to enter the data from that timing into Chartlytics.”). Throughout the nearly 12 weeks of implementation, the first author monitored the quality and fidelity of Fit Lite™ implementation through 30–60 min of data review and analysis per week and an additional 1-hr weekly meeting with the facilitators and their faculty advisor. Additionally, the first author met with the lead facilitator as needed for a total of 4 hr of student-specific instructional decision-making support. In all, the first author provided the facilitators with approximately 100 hr of in-person and remote training and supervision.

DI and rate building to performance standards

Participants were enrolled in an after-school program, and three groups of three students were pulled from the program daily, Monday through Thursday, to work one-on-one with facilitators for approximately 20 min each. Students received an average of 20 sessions (6.6 hr) with the Fit Lite™ model (range 13–25 sessions). Each session included DI in phonics and phonemic awareness, followed by timed rate-building trials designed to build fluency in the literacy components and reading skills. Each daily session consisted of a series of “instructional cycles” organized around each targeted skill. An instructional cycle began with several 1- to 2-min timings of DI exercises in which the facilitator delivered information about the target skill (e.g., the irregular vowel pair rule) and prompted high rates of active responding from the student. The DI exercises were not trained to specific fluency aims (and are therefore omitted from Table 1); rather, facilitators sought accuracy and stability in student responding to DI questions. Following concept instruction with DI methods, three to five rate-building timed trials of 15–30 s were conducted. For example, timed trials for letter sounds were 15 s, and timed trials for blending sounds were 30 s. The student practiced the skill during each timed trial while the facilitator counted correct and incorrect responses. Practice exercises were guided by Fit Lite™ facilitators and repeated daily. When a student achieved the fluency criterion on a given component skill, a new skill was introduced into the student’s daily programming.
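Because timings lasted 15–30 s while the aims in Table 1 are stated per minute, each count has to be scaled before it is compared with an aim. The sketch below illustrates that arithmetic only; it is not Fit Lite™ software. The aims are copied from Table 1, the function names are hypothetical, and treating a single timing at or above the aim as meeting the criterion is an assumption, since the text does not state how many timings at aim were required.

```python
# Fixed fluency aims (correct responses per minute) for a few Table 1 pinpoints.
FLUENCY_AIMS = {
    "S/S consonant letter sounds": 80,
    "S/S vowel letter sounds": 80,
    "S/S segment sounds / blend words": 120,
}


def per_minute(count: int, seconds: float) -> float:
    """Scale a count from a timing of `seconds` length to a count per minute."""
    if seconds <= 0:
        raise ValueError("timing length must be positive")
    return count * 60.0 / seconds


def meets_aim(pinpoint: str, correct: int, seconds: float) -> bool:
    """True if one timing's correct-response frequency reaches the aim.

    Hypothetical simplification: one timing at aim counts as meeting criterion.
    """
    return per_minute(correct, seconds) >= FLUENCY_AIMS[pinpoint]


# A 15-s letter-sound timing with 22 correct responses scales to 88 per minute.
print(per_minute(22, 15))                                      # 88.0
print(meets_aim("S/S consonant letter sounds", 22, 15))        # True
# A 30-s blending timing with 55 correct responses scales to 110 per minute.
print(meets_aim("S/S segment sounds / blend words", 55, 30))   # False
```

Scaling to a common per-minute unit is what lets one set of aims apply across timings of different lengths.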

Feedback and error correction

At the end of each rate-building timed trial, the facilitator provided feedback on performance to the learner. Verbal praise and points were provided for improved performance (i.e., an increased rate of correct responses and/or a decreased rate of incorrect responses). Points were tracked via a handheld tally counter and implemented as a token economy (i.e., points were delivered differentially contingent on target behaviors representing benchmarks of improved performance and exchanged for backup reinforcers such as small toys and edibles). After acknowledging improved performance, the facilitator systematically corrected any errors made during the timed trial. If no improvement occurred during a timed trial, facilitators still attempted to “catch the student being good”; that is, praise was provided contingent on other relevant behavior, such as sitting up straight, reading quickly, listening to instructions, and making a continued effort. The learner’s performance data were then plotted on the SCC. Data collected in sessions were regularly reviewed by both personnel certified in Fit Learning™ and the Fit Lite™ implementation team. Sessions continued until the student achieved the mastery criterion across the sequence of literacy component skills, or until the end of the school year.
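The between-timing feedback described above amounts to a small decision rule. The sketch below is hypothetical: `Timing`, `feedback_step`, and the one-point award are illustrative names and values not given in the text, and it assumes that error correction and charting follow every timing whether or not performance improved.

```python
from dataclasses import dataclass

# Hypothetical value: the text does not state how many points an improvement earned.
POINTS_PER_IMPROVEMENT = 1


@dataclass
class Timing:
    """Per-minute frequencies from one rate-building timed trial."""
    correct_per_min: float
    incorrect_per_min: float


def improved(previous: Timing, current: Timing) -> bool:
    """Improvement as defined in the text: more corrects and/or fewer errors per minute."""
    return (current.correct_per_min > previous.correct_per_min
            or current.incorrect_per_min < previous.incorrect_per_min)


def feedback_step(previous: Timing, current: Timing) -> tuple[int, list[str]]:
    """Return (points awarded, ordered feedback actions) for one timing."""
    if improved(previous, current):
        points = POINTS_PER_IMPROVEMENT
        actions = ["praise the improvement", "award points"]
    else:
        # No improvement: "catch the student being good" on other relevant behavior.
        points = 0
        actions = ["praise other relevant behavior"]
    # Assumed to follow in both cases: error correction, then charting on the SCC.
    actions += ["correct errors from the timing", "plot the timing on the SCC"]
    return points, actions


points, actions = feedback_step(Timing(64, 4), Timing(72, 4))
print(points, actions[0])  # 1 praise the improvement
```

Framing improvement relative to the previous timing, rather than relative to the aim, mirrors the text's emphasis on reinforcing each step of progress.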

Dependent Variables

Oral reading fluency

CBM was conducted with R-CBM, a set of brief (1-min) standardized assessments of oral reading fluency licensed from aimswebPlus©. On average, each participating student completed R-CBM once weekly, with occasional disruptions of the weekly schedule due to absences or other unexpected school events. Each assessment yields a raw score of words per minute (wpm) read, a projected rate-of-improvement (ROI) metric, and a percentile ranking assigned to the wpm score by the aimswebPlus© normative database. The wpm, ROI, and percentile gains observed in participating students therefore served as additional dependent variables to track the overall impact of component-skill acquisition.
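One common way to summarize a series of weekly R-CBM scores as a rate of improvement is the ordinary least-squares slope of words per minute on week number. The sketch below assumes that definition; aimswebPlus© computes its own projected ROI, and its exact procedure is not described here and may differ. The function name is hypothetical, and the scores in the example are invented for illustration, not study data.

```python
def rate_of_improvement(weeks: list[float], wpm: list[float]) -> float:
    """Ordinary least-squares slope of words per minute on week number (wpm/week)."""
    n = len(weeks)
    if n != len(wpm) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(weeks) / n
    mean_y = sum(wpm) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("observations must span more than one week")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, wpm))
    return sxy / sxx


# Hypothetical weekly R-CBM scores for one student (not study data).
weeks = [0, 1, 2, 3, 4]
scores = [80, 82, 81, 85, 87]
print(rate_of_improvement(weeks, scores))  # 1.7 (wpm per week)
```

A fitted slope uses every weekly probe rather than only the first and last, which makes it less sensitive to a single unusually high or low score.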

Component-skill fluency

Rate data from students’ acquisition of literacy component-skill targets were entered into a digital standard celeration charting program called Chartlytics following each timed practice. The number of component skills meeting fluency criteria by the end of the intervention, and the celerations of those skills, provide one set of dependent variables.

Case Study Design and Analysis

Novel R-CBMs were administered as pre-/posttests in addition to being used as progress-monitoring tools. Data on all targeted pinpoints were displayed on a count-per-day SCC, and data on R-CBM probes were displayed on a count-per-week SCC. All celeration and multiplier values were calculated using the data analysis software Chartlytics (Chartlytics, n.d.).
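Celeration on a daily SCC is conventionally the multiplicative change in frequency per week, estimated from a straight line fitted to the logarithm of frequency. The sketch below fits an ordinary least-squares line to log10(frequency) as an assumption; Chartlytics may use a different fitting method, and the function names are hypothetical. It also shows standard ×/÷ notation, in which a multiplier below 1, such as 0.58, is written as ÷1.72.

```python
import math


def _ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


def celeration(days: list[float], counts_per_min: list[float],
               period_days: float = 7.0) -> float:
    """Multiplicative change in frequency per `period_days` (per week by default).

    Zero counts are dropped because log10(0) is undefined (a simplifying assumption).
    """
    pairs = [(d, f) for d, f in zip(days, counts_per_min) if f > 0]
    if len({d for d, _ in pairs}) < 2:
        raise ValueError("need nonzero frequencies on at least two different days")
    slope = _ols_slope([d for d, _ in pairs], [math.log10(f) for _, f in pairs])
    return 10 ** (slope * period_days)


def format_celeration(value: float) -> str:
    """Standard celeration notation: × for growth, ÷ for decay."""
    return f"×{value:.2f}" if value >= 1 else f"÷{1 / value:.2f}"


# Hypothetical data: a frequency that doubles every week gives a celeration of ×2.
days = [0, 2, 4, 7]
freqs = [40 * 2 ** (d / 7) for d in days]
print(format_celeration(celeration(days, freqs)))  # ×2.00
print(format_celeration(0.58))                     # ÷1.72
```

Fitting on the log scale is what makes celeration a multiplier: equal ratios of change, not equal differences, plot as equal slopes on the SCC.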

Results

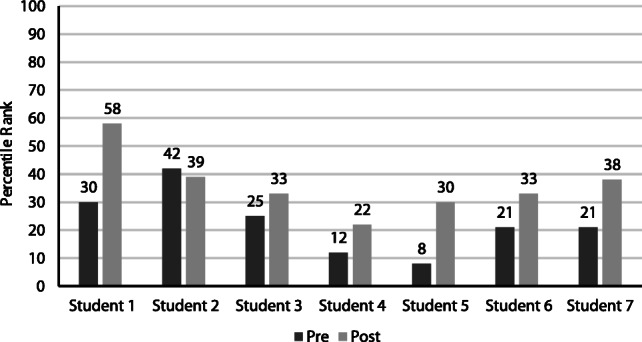

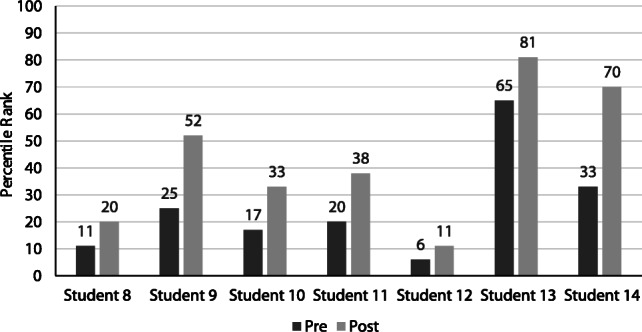

CBM: Progress Monitoring

Following an average of 400 min of contact with the Fit Lite™ model, a mean gain of 16 percentile points was observed, or 1 percentile point per 25 min of contact. The average baseline reading speed was 81.5 wpm (range 48–120). Following the Fit Lite™ intervention, the average reading speed was 100 wpm (range 60–146). The average ROI for the sample was an increase of 2.8 wpm/hr. The average baseline percentile ranking was the 25th percentile (range 6th to 65th). Following intervention, the average percentile ranking was the 41st percentile (range 11th to 81st). Individual pre/post scores for all participating students are shown in Figure 1 (Students 1–7) and Figure 2 (Students 8–14).
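The rates reported above can be reproduced approximately from the group means. This is a consistency check under stated assumptions, not the authors' calculation: it assumes the ROI of 2.8 wpm/hr is expressed per hour of contact with the model (400 min, or about 6.67 hr). Because a mean of individual ratios need not equal the ratio of the means, small discrepancies would be expected.

$$
\frac{400\ \text{min}}{(41 - 25)\ \text{percentile points}} = 25\ \text{min per percentile point},
\qquad
\frac{(100 - 81.5)\ \text{wpm}}{400\ \text{min} \div 60\ \text{min/hr}} = \frac{18.5\ \text{wpm}}{6.67\ \text{hr}} \approx 2.8\ \text{wpm/hr}.
$$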

Fig. 1.

Pre/post R-CBM scores for Students 1–7.

Fig. 2.

Pre/post R-CBM scores for Students 8–14.

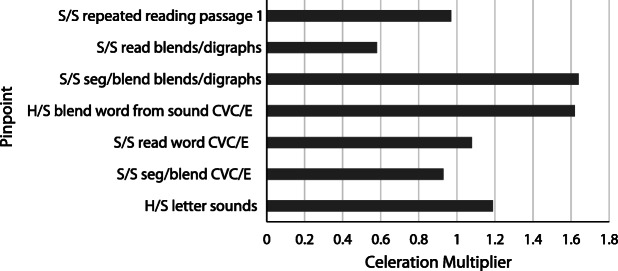

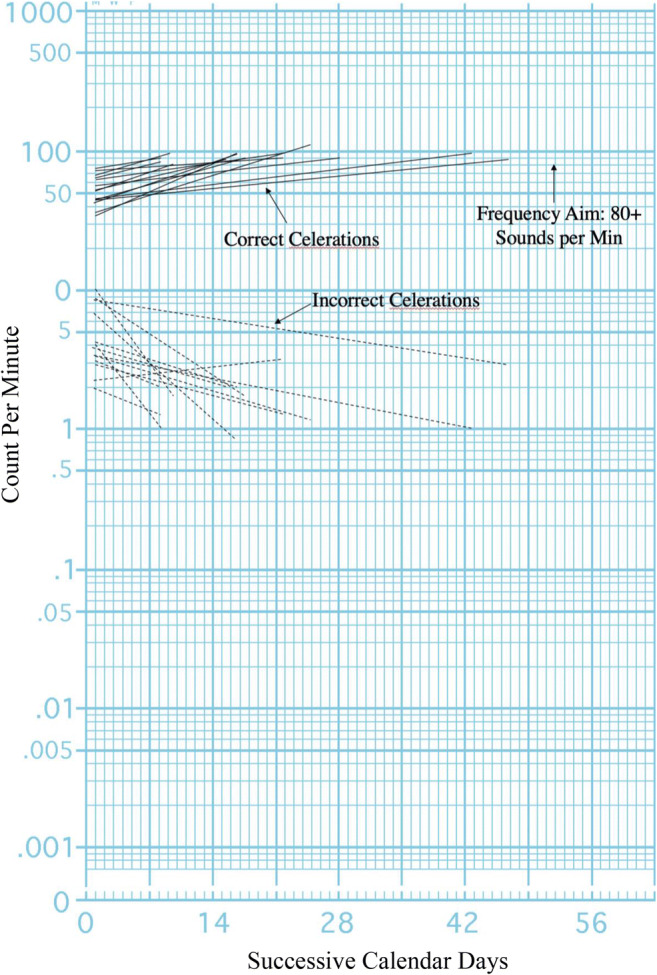

Component-Skill Acceleration

Averaged across students, celerations of correct responses on component skills ranged from ×0.58 to ×1.64 (the lower value is a deceleration, equivalent to ÷1.72). Figure 3 summarizes the average celerations across students on a sample of targeted component skills. As is expected in a competency-based personalized system of instruction (PSI) progression, some students progressed further in the curriculum than others; only a sample of pinpoints is therefore provided, namely those contacted by the majority of participants. Figure 4 shows a celeration collection across students for one component skill: see/say consonant sounds. This pinpoint was selected because all students contacted it and most mastered it by achieving the fluency aim of 80+ sounds/min. Thirteen of the 14 participants reached the aim during enrollment in the program, and the remaining student achieved a rate of 72 sounds/min. On average, participants achieved the fluency criterion in 20.9 sessions (range 9–45). Capturing data on the same pinpoint across students in this way facilitates evaluation of the strength of the functional relations between component-skill fluency and CBM outcomes.

Fig. 3.

Average celerations across pinpointed skills combined across students; S/S = see/say; H/S = hear/say; seg/blend = segment sounds / blend words; CVC/E = consonant vowel consonant / consonant vowel consonant silent e phonetic pattern.

Fig. 4.

Celeration collection for see/say consonant sounds across students.

Discussion

In the present study, an educational approach informed by behavior analysis and pioneered in the private sector yielded promising results in a brief period of time. The evidence-based approach of DI, the guiding principles of PT, and the use of CBM as a distal lens for evaluating a student’s progress coalesce in the Fit Lite™ reading intervention employed. As a result, all participants made meaningful gains in reading achievement. The average improvement obtained with participants (a gain of 2.8 wpm/hr) is notable when compared with the national ROI for third graders of 1 wpm/week. The Fit Lite™ intervention, therefore, was both efficient and effective for improving the reading skills of at-risk students.

The results produced through the Fit Lite™ model have implications for the design of mainstream classroom practices and MTSS models. Classroom instruction for reading, for instance, could be designed to incorporate practice periods to build frequencies on essential tool skills such as letter sounds and decoding. There is evidence that adding daily, timed practice on academic skills, standard celeration charting, and frequent decision making to mainstream classrooms improves educational achievement. The results of the Great Falls PT project provide one such example. Morningside Academy, a laboratory school in Seattle, Washington, provides another example of an educational model that incorporates PT (Johnson & Street, 2004). Its students engage in daily practice on component skills and chart the data on the SCC to make data-based decisions about their performance. At Morningside Academy, students consistently gain 2 years of academic growth in 1 academic year (Johnson & Street, 2004). Taken together, these two models and the results obtained in the current study suggest that most students would benefit from the integration of a PT approach to component-skill building in the mainstream classroom.

Beyond informing mainstream classroom practices, the Fit Lite™ intervention can inform the process and content of RTI or MTSS models for improving educational achievement (see McIntosh & Goodman, 2016, for a full discussion of RTI and MTSS). Students in mainstream education who fail to respond to core classroom instruction (Tier 1) escalate to Tier 2 or Tier 3 levels of support. These tiers often involve increased instructional intensity to improve learning gains. Although there are core features inherent to MTSS focused on improving academic skills (e.g., integrated data, increased intensity of instruction, small group or one-on-one instruction, and data evaluation), these models often lack a process for identifying what to teach (i.e., individual pinpoints), how to teach (i.e., instructional strategies and tactics), and how to measure whether those methods are effective for a particular student. As Ysseldyke, Burns, Scholin, and Parker (2010) note, “considerable precision is needed in the measurement of student progress toward instructional goals or outcomes” (p. 56). The elements of the Fit Lite™ model, therefore, offer a framework for guiding content and process in MTSS models. The inclusion of DI and CBM nestled in a PT approach to skill building and decision making adds the precision necessary to ensure that the intervention is appropriate and successful for the individual student.

The Fit Lite™ intervention employed is akin to a Tier 2 MTSS intervention given the relatively brief amount of practice that participants received. Although all participants showed marked improvement, some showed a more robust response to the intervention than others. It is possible that a more intensive intervention akin to Tier 3 (e.g., longer sessions with additional pinpoints) would yield more substantial gains for the lower performers. Future research might seek to identify predictors or moderators of treatment effects that would guide student placement in the appropriate tier of support.

The current investigation has several limitations. First, the study lacked the experimental design elements necessary to rule out confounding variables and demonstrate functional control. However, the study was pragmatic in nature and achieved its primary goal of developing foundational reading skills among students at risk for academic failure. Second, no formal measures of procedural fidelity were collected. Although the facilitators were required to demonstrate proficiency in each of the pinpoints and received ongoing implementation oversight, data on their adherence to Fit Lite™ session procedures are not available. Moreover, the first author (i.e., the facilitator-trainer) was a certified Fit Learning™ trainer, and procedural fidelity data were not collected on her delivery of the facilitator training. Therefore, both the facilitator training and the Fit Lite™ session procedures may be difficult to replicate.

Notwithstanding these limitations, the Fit Lite™ model highlights the feasibility of an intervention package that can be readily translated to the classroom to improve reading achievement. The Fit Lite™ model fits well within a scientist-practitioner framework in which pragmatism guides data-based decisions. Facilitators incorporated evidence-based techniques into daily practice with their students, who achieved an average gain of 16 percentile points on reading fluency CBMs. Moreover, these results were obtained with as little as 20 min/day of practice, which allows the Fit Lite™ model to be integrated easily into regular classroom instruction or into MTSS for students at risk. The results herein, therefore, provide a model of effective instruction that may be employed to begin eradicating the literacy crisis in the United States.

Compliance with Ethical Standards

Conflict of interest

The authors know of no conflicts of interest that would result in any financial or nonfinancial gain from the publication of this manuscript.

Ethical approval

All procedures in this study involving human participants were conducted in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Funding

The undergraduate student facilitators were compensated for their involvement by a Francis Marion University REAL Grant obtained by their student advisor. A license from Chartlytics was provided for use of the software, and a license from Fit Learning™ was donated. All authors are owners of Fit Learning Laboratories but were not financially compensated for their work on this project.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Footnotes

A faculty member at the local university was awarded a research grant used to compensate the undergraduate interventionists for their work on the project. Chartlytics™ donated the graphing software. A license to use the Fit Learning™ model was donated by Fit Learning™. All of the authors are owners of Fit Learning Laboratories, but none were financially compensated for their work on this project.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Aimsweb. (n.d.). Progress monitoring and improvement system. Retrieved May 6, 2020, from http://www.aimsweb.com

- Beck, R., & Clement, R. (1991). The Great Falls Precision Teaching Project: An historical examination. Journal of Precision Teaching, 8, 8–12.

- Binder, C. (1996). Behavioral fluency: Evolution of a new paradigm. The Behavior Analyst, 19(2), 163–197. https://doi.org/10.1007/BF03393163

- Binder, C., & Watkins, C. L. (1990). Precision teaching and direct instruction: Measurably superior instructional technology in schools. Performance Improvement Quarterly, 3(4), 74–96. https://doi.org/10.1111/j.1937-8327.1990.tb00478.x

- Brosnan, J., Moeyaert, M., Brooks Newsome, K., Healy, O., Heyvaert, M., Onghena, P., & Van den Noortgate, W. (2018). Multilevel analysis of multiple-baseline data evaluating precision teaching as an intervention for improving fluency in foundational reading skills for at risk readers. Exceptionality, 26(3), 137–161. https://doi.org/10.1080/09362835.2016.1238378

- Chartlytics. (n.d.). Retrieved from https://app.chartlytics.com/login

- Deno, S. (1985). Curriculum-based measurement: The emerging alternative. Exceptional Children, 52, 219–232. https://doi.org/10.1177/001440298505200303

- Deno, S. (1997). Whether thou goest: Perspectives on progress monitoring. In E. Kame'enui, J. Lloyd, & D. Chard (Eds.), Issues in educating students with disabilities (pp. 77–99). Mahwah, NJ: Erlbaum.

- Deno, S. (2003). Developments in curriculum-based measurement. Journal of Special Education, 37(3), 184–192. https://doi.org/10.1177/00224669030370030801

- Fiester, L. (with Smith, R.). (2010). Early warning! Why reading by the end of third grade matters. Baltimore, MD: The Annie E. Casey Foundation. Retrieved from https://www.aecf.org/resources/early-warning-why-reading-by-the-end-of-third-grade-matters/

- Foster, J. E. (1993). Retaining children in grade. Childhood Education, 70, 38–43. https://doi.org/10.1080/00094056.1993.10520982

- Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. New York, NY: Routledge.

- Haughton, E. (1972). Aims: Growing and sharing. In J. B. Jordan & L. S. Robbins (Eds.), Let's try doing something else kind of thing (pp. 20–39). Arlington, VA: Council for Exceptional Children.

- Haughton, E. C. (1981). R/APS and REAPS. Data-Sharing Newsletter, 33, 4–5.

- Hughes, J. C., Beverley, M., & Whitehead, J. (2007). Using precision teaching to increase fluency of word reading with problem readers. European Journal of Behaviour Analysis, 8, 221–238. https://doi.org/10.1080/15021149.2007.11434284

- Johnson, K., & Street, E. M. (2004). The Morningside model of generative instruction: An integration of research-based practices. In D. J. Moran & R. W. Malott (Eds.), Evidence-based educational methods (pp. 247–265). Cambridge, MA: Elsevier Academic Press.

- Johnson, K. R., & Street, E. M. (2013). Response to intervention and precision teaching: Creating synergy in the classroom. New York, NY: Guilford Press.

- Kubina, R. M., & Yurich, K. K. L. (2012). The precision teaching book. Lemont, PA: Greatness Achieved.

- Lambe, D., Murphy, C., & Kelly, M. E. (2015). The impact of a precision teaching intervention on the reading fluency of typically developing children. Behavioral Interventions, 30(4), 364–377. https://doi.org/10.1002/bin.1418

- McIntosh, K., & Goodman, S. (2016). Integrated multi-tiered systems of support: Blending RTI and PBIS. New York, NY: Guilford Press.

- National Center for Learning Disabilities. (2019). Third-grade reading laws. Retrieved October 31, 2019, from https://www.ncld.org/archives/action-center/what-we-ve-done/third-grade-reading-laws-their-impact-on-students-with-learning-attention-issues

- National Conference of State Legislatures. (2019). Third-grade reading legislation. Retrieved October 31, 2019, from http://www.ncsl.org/research/education/third-grade-reading-legislation.aspx

- National Institute for Direct Instruction. (2015). Basic philosophy of direct instruction. Retrieved October 31, 2019, from https://www.nifdi.org/what-is-di/basic-philosophy.html

- Rosenshine, B. (1976). Recent research on teaching behaviors and student achievement. Journal of Teacher Education, 27(1), 61–64. https://doi.org/10.1177/002248717602700115

- South Carolina Department of Education. (2019). Read to Succeed. Retrieved October 31, 2019, from https://ed.sc.gov/instruction/early-learning-and-literacy/read-to-succeed1/

- Starlin, A. (1972). Sharing a message about curriculum with my teacher friends. In J. B. Jordan & L. S. Robbins (Eds.), Let's try doing something else kind of thing (pp. 13–18). Arlington, VA: Council for Exceptional Children.

- U.S. Department of Education, National Center for Education Statistics. (2019). The nation’s report card. Retrieved November 15, 2019, from https://nces.ed.gov/nationsreportcard/

- Watkins, C. L. (1988). Project Follow Through: A story of the identification and neglect of effective instruction. Youth Policy, 10(7), 7–11.

- Ysseldyke, J., Burns, M. K., Scholin, S. E., & Parker, D. C. (2010). Instructionally valid assessment within response to intervention. TEACHING Exceptional Children, 42(4), 54–61. https://doi.org/10.1177/004005991004200406