Abstract

Say All Fast Minute Every Day Shuffled (SAFMEDS) is one behaviorally based teaching tactic. Like flash cards, SAFMEDS helps build familiarity with course objectives and can be used to promote fluency in the corresponding verbal repertoire. However, SAFMEDS differs from flash cards in that it follows specific design features and the acronym specifies how to practice with the cards. Students might practice in the traditional see-say learning channel used with SAFMEDS, or they could practice in a see-type learning channel (i.e., Type All Fast Minute Every Day Shuffled [TAFMEDS]), as the precision teaching community has sought to bring digital technology to their teaching, using computerized standard celeration charts and programs that present flash cards in a digital format. The present study explored the use of computerized charting and a see-type learning channel program developed for TAFMEDS in several sections of an undergraduate Introduction to Behavior Principles course. Course instructors explored the correlations between daily TAFMEDS practice with behavior-analytic terminology and student performance. After 3 weeks of daily practice, the study concluded with a series of 4 checkouts that examined endurance, application, stability, retention (when possible), and performance in different learning channels. Results indicated a correlation between daily practice and higher daily performance frequencies and longer term outcomes, including maintenance, endurance, stability, application, and generativity. The findings are discussed in terms of bringing frequency-building activities to university settings and the advantages and disadvantages of bringing technological advancements into frequency-based instruction.

Keywords: computer-based instruction, flash cards, frequency building, higher education, SAFMEDS, TAFMEDS, university students

Lindsley (1992) defined precision teaching (PT) as “basing educational decisions on changes in continuous self-monitored performance frequencies displayed on ‘standard celeration charts’”1 (p. 51). PT is a behavior monitoring and measurement system directly descended from B. F. Skinner’s laboratory science that in turn was based on rate of response and standard visual displays. PT itself has never been a complete system of instruction in the same sense that Keller’s (1968) personalized system of instruction, Skinner’s (1968) programmed instruction, Becker and Engelmann’s direct instruction (Engelmann, Becker, Carnine, & Gersten, 1988), and more recently the Morningside model of Generative Instruction (MMGI; Johnson & Street, 2012) have been. Lindsley (1992) frequently stressed that PT’s behavior monitoring and change system could be applied to any existing instructional system, a distinction that speaks to the versatility and generality of PT. For example, the MMGI system incorporates PT in the sense that Lindsley envisioned it, building in evaluations of fluency outcomes such as retention, endurance, stability, and application.

Some instructional tactics developed by precision teachers (e.g., frequency building, 1-min timings) have been generalized to encompass what other behavior analysts view as the entire field of PT. One such tactic is “Say All Fast Minute Every Day Shuffled,” or SAFMEDS (pronounced saff-meds). Sometime in the late 1970s or early 1980s, Lindsley coined the acronym that specified some behaviors students engage in with flash cards and presented behaviors students often do not engage in with flash cards. In point of fact, SAFMEDS are simply flash cards, designed in a specific way (cf. Graf & Auman, 2005), but with a set of instructions for how to use them indicated by the acronym. Prior to coining the acronym, for 6 years Lindsley and colleagues (e.g., Starlin & Lindsley, 1980) referred to the cards used for instructional purposes simply as “flash cards.”

Flash cards historically have been paper cards with some text and/or graphics on one side, designated as the front, and some text on the other side, the back side. Generally, they were not necessarily used in timed practices2 and not necessarily shuffled, and the responses were not necessarily spoken out loud. Overall, methods of using flash cards have varied and may not have included any overt actions beyond flipping from one side to the other and back again. Given the varied practices in education, teachers and students have worked with flash cards to teach and study, respectively. As a cultural practice within education, flash cards, as Kubina (2018) shared, have a history in American education that extends back about two centuries. Even though flash cards were never “proven” to work via any controlled research, their longevity as a cultural practice within education suggests inductively that they have a sustained pragmatic value and likely provide some instructional benefits. If they did not work at all, or perhaps not well, then presumably as a cultural practice they would have gone extinct (see, e.g., Glenn, 1986, 2004; Glenn et al., 2016). Lindsley’s SAFMEDS suggestions were intended to improve upon this centuries-old instructional practice.

With SAFMEDS, the primary learning channel (Haughton, 1972) is “see-say”; the first term describes the input channel or the sense mode that the stimulus interacts with (e.g., seeing the term on one side of a card), and the second term describes the output channel or the response (e.g., saying the definition). The basic instructional task runs as follows: First, SAFMEDS is conducted in relatively brief timed practices, often 1 min in duration. Such brief practices are called “timings.” Second, before a timing begins, the learner shuffles the set of cards in order to prevent serial learning. Third, given any card, the learner sees what is on the front of the card, then says a response out loud. Fourth, the learner flips the card over and checks whether the given spoken response matches the text on the back. Fifth, after checking for a match, the learner then places the card onto a “corrects” pile or onto an “incorrects” pile. Sixth, the learner proceeds to the next card in the stack. Typically, a learner follows and repeats this chain of behaviors until a timer sounds.

As a general rule, the learner, rather than someone else, holds the cards, so as to not impose a ceiling on the response rate (e.g., free operant; Skinner, 1938). Practice sessions are frequent (“every day”). Timings are short (“a minute”) but can be of any duration, and responses are made out loud (“say”), though how loud they are said can vary (and can be a source of further research).

When designing SAFMEDS, two relatively important instructional design considerations are to keep the verbiage (a) fairly brief and (b) about the same length (number of words) across all cards (Eshleman, 2000; Graf & Auman, 2005). These considerations arise because one generally counts cards, not words read or words spoken. A card itself functions as something of a clear response definer and thus shares a functional similarity with a manipulandum. Separately counting words spoken would likely defeat the purpose of using cards.

Graf and Auman (2005) further differentiated SAFMEDS from flash cards. SAFMEDS, for example, are typically generated by the instructor, whereas flash cards may be generated by either the learner or the instructor (see Cihon, Sturtz, & Eshleman, 2012, for an exception). SAFMEDS are typically given as an entire set and practiced all at once (see Adams, Cihon, Urbina, & Goodhue, 2017, and Urbina, Cihon, & Baltazar, 2019, for exceptions), whereas flash cards may be all or only partially available and may be practiced in chunks of cards. As previously mentioned, with SAFMEDS learners say the response out loud, whereas with flash cards, learners typically think of the response or say it to themselves. Flash cards frequently do not involve timed practice sessions like SAFMEDS, nor do they involve the learner anticipating the answer; with flash cards, learners typically read the response, whereas with SAFMEDS, they recall the response without looking. Additionally, SAFMEDS are practiced every day, whereas the practice for flash cards is typically sporadic, often only on the night before an exam or quiz. Flash cards may be practiced with or without shuffling, but shuffling is an important step in the practice and testing requirements for SAFMEDS. Finally, with SAFMEDS, testing occurs in the same learning channels, whereas the learning channels for practice and testing with flash cards often shift when students are asked to respond instead to multiple-choice or true/false questions, for example, on quizzes and exams.

Some researchers may contend that a worthwhile scientific question would be one that seeks to compare SAFMEDS to regular flash cards. Whether SAFMEDS works any better than traditional flash card methods certainly appears to be debatable. Moreover, no clear resolution in answering such a question seems likely, mainly because such a research question would entail all of the problems inherent in comparison studies (Johnston, 1988). There are probably better research questions than those that incur the problems of comparison studies. Thus, despite various concerns and issues regarding SAFMEDS (see, e.g., Quigley, Peterson, Frieder, & Peck, 2017, for a critical review of the literature exploring SAFMEDS as an instructional strategy), at the very least, SAFMEDS as an instructional strategy extends earlier traditional flash card practices. Additionally, SAFMEDS as an instructional strategy may make flash cards more effective under some conditions and may produce the benefit of increased learning efficiency (Adams, Cihon, et al., 2017; Beverley, Hughes, & Hastings, 2009; Cihon et al., 2012; Eshleman, 1985; Mason, Rivera, & Arriaga, 2017; Meindl, Ivy, Miller, Neef, & Williamson, 2013; Stockwell & Eshleman, 2010; Urbina et al., 2019). Furthermore, no evidence exists that SAFMEDS works less well than traditional flash cards, or that it inhibits learning or represents a cause for concern. In terms of behavior function, flash cards, and SAFMEDS as an extension of them, may lead to fluency in a verbal repertoire. Thus, a viable research question would be to what extent SAFMEDS does so, or what kinds of learning efficiencies can result from its use. 
Such general research questions demand greater clarification about what SAFMEDS is, and numerous studies have been conducted to evaluate the effects of SAFMEDS on student performance in university settings (Adams, Cihon, et al., 2017; Cihon et al., 2012; Eshleman, 1985; Mason et al., 2017; Meindl et al., 2013; Stockwell & Eshleman, 2010; also see Quigley et al., 2017, for a recent review of studies that have been conducted on the effectiveness of SAFMEDS as an instructional strategy in a variety of settings).

In the past decade, interest in computerizing PT has increased. The standard celeration chart (SCC) has been put into Microsoft Excel format (Harder, Neely, & Edinger, 2005, starting in March 2002) and into proprietary systems, including AimChart (in December 2001), Chartlytics3 (in November 2014), and AimStar (in August 2018). Interest in putting SAFMEDS into digital format has increased as well (Fluency Flashcard App, Tucci Learning Systems, Tucci & Johnson, 2012), perhaps alongside the development of flash card technologies such as Quizlet and Anki and the quiz functions in online course management systems such as Canvas and Blackboard.

However, such programs and applications have also presented several challenges for instructors who want to incorporate PT, and SAFMEDS specifically, into their courses. For example, Quizlet often imposes a ceiling on students’ responses in situations in which an insufficient number of cards are provided to sustain a full 1-min timed practice session. Additionally, online course management systems often restrict students’ response opportunities or require students to complete all responses before feedback is given, rather than providing the opportunity to check a response after it is emitted. Moreover, many of these platforms do not provide a permanent product of students’ responses to the tasks (i.e., students emit the responses covertly or to themselves, as in traditional flash card practice), which may contribute to data fabrication.

Nonetheless, there is a history of computer-based SAFMEDS going back over 30 years, even before these virtual platforms were established. One notable example is Eshleman’s (1988) development of computer-based SAFMEDS. Because computers then, as now, could only respond to voice-activated input that occurs at a certain speed and clarity, the learning channel was not see-say, but rather see-type. Eshleman (1988) coined the acronym TAFMEDS, where the acronym was mostly the same but with the T standing for “Type.” A learner would see text and/or images on a computer screen and type a response on a keyboard. An extension of this early form of TAFMEDS was the Precision Learning System (PLS) developed in the early 1990s. PLS was a subsidiary of Aubrey Daniels & Associates, Inc. The PLS included responses typed on a keyboard and point-and-click responses using a computer mouse as an input device such that one could move a pointer around the computer screen and click on buttons or on graphic objects. Also, during the same era came Parsons’s (2005) ThinkFast, a system in which learners would say responses out loud (as with SAFMEDS) but would be on the honor system to score the correctness of their responses. A long-term hope has been that voice recognition and input would advance so that learners could say responses out loud and the computer itself could judge the correctness. Alas, even though technological advances have been made, some 30 years later it is still difficult to capture responses at such a high rate with great accuracy. Moreover, as learners become more fluent, they often change the way they form their responses (e.g., softer, faster, perhaps more mumbled, removing all sounds that cause fluency barriers). Thus, in the meantime, it makes sense that applications of SAFMEDS to a computer format still follow more of a TAFMEDS format, which may be stronger for grading accuracy and decreasing the likelihood of data fabrication.

As recently suggested by Adams, Eshleman, and Cihon (2017), implementing SAFMEDS or TAFMEDS along with an online platform for charting data on SCCs like Chartlytics (Chartlytics, 2018) could assist college educators in making chart-based decisions along with their students. Chartlytics allows students and teachers to record and view data from anywhere they have Internet access, making charting more practical and more sustainable than using paper charts.4 Such a combination could be a useful, more practical approach to encouraging daily timed practice in the university setting when practice occurs outside the classroom environment. Specifically, required daily practice that is directly imported to Chartlytics would allow course instructors to not only emphasize the importance of daily practice to students but also monitor the students’ daily practice. Additionally, certain kinds of practice procedures (with the help of digital technologies) could improve the accuracy of recording and decrease the previously mentioned concerns related to data fabrication. Moreover, access to SCCs online can give course instructors (and students) easy access to students’ learning pictures (see Fig. 1 for examples of learning pictures), which would allow instructors to quickly interpret patterns in students’ performance and make recommendations to improve performance (Binder & Watkins, 1990; Kazdin, 1976; West, Young, & Spooner, 1990). These features of TAFMEDS are more closely aligned with Lindsley’s (1990) recommendations for the use of PT that stressed the importance of self-recording, sharing data, and daily frequency monitoring.

Fig. 1.

Examples of common learning pictures one might see on a standard celeration chart and suggested interventions for each. Green arrows on the chart represent celeration correct, whereas red arrows represent celeration error. The top row shows learning pictures requiring no change. The middle row shows learning pictures with suggestions to slice back and/or shorten timings. The bottom row shows learning pictures that suggest stepping back the curriculum (bottom left), teaching to errors (bottom middle), and drilling the errors (bottom right). © 2000 by John Eshleman and reprinted with permission.

The purpose of this article is to describe an exploration of the use of TAFMEDS in several sections of an undergraduate Introduction to Behavior Principles course. Course sections met on different days and at different times and were taught by one of three course instructors, but consisted of the same course material, overall course structure, and course activities.5 Course instructors included, as part of the course design, TAFMEDS for a set of technical behavior-analytic terms and definitions. Students could earn points toward their course grade for completing daily TAFMEDS practice. Course instructors were interested in how required daily practice would affect students’ rate of learning (i.e., celeration) on a see term–type definition task in behavior-analytic terminology and on the corollary effects often reported as outcomes of fluent responding (e.g., MESsAGe6; Johnson & Street, 2012), as well as in gauging the students’ interest in TAFMEDS.

Researchers sought to answer the following questions:

How might instructor-provided celeration aims influence undergraduate students’ rate of learning (celeration) of behavior-analytic terminology using TAFMEDS?

What correlation, if any, might exist between students’ celerations over the course of a 3-week practice period and the number of definitions entered correctly in a single, cumulative TAFMEDS timing at the end of that practice period?

How do students’ celerations on TAFMEDS over the course of a 3-week practice period correlate with the number of days a student engaged in daily timed practice with the TAFMEDS program during that practice period?

What correlation, if any, might exist between the number of days a student practiced TAFMEDS in a 3-week practice period and the student’s subsequent performance on in-class cumulative timings at the end of that practice period?

Are students’ TAFMEDS performance frequencies at the end of a 3-week period of practice with a set of terms and definitions predictive of performance on fluency-derived outcomes—including maintenance, endurance, stability, application, and generativity—related to those terms and definitions studied using TAFMEDS?

What are students’ perceptions regarding required daily TAFMEDS practice in an undergraduate Introduction to Behavior Principles course?

Through addressing each of these questions, experimenters hoped to gain some insight on the use of TAFMEDS in producing fluency outcomes and encouraging daily practice, as well as on the use of celeration aims to potentially drive student performance in terms of celeration and frequency of correct definitions typed per minute. Additionally, experimenters sought to confirm the value of daily practice for producing higher rates of performance frequencies and evaluate the social validity of such procedures.

Method

Participants and Setting

Experimenters analyzed the data for 146 undergraduate students enrolled in one of five sections of an introductory behavior analysis course in the fall of 2017 at a large public university in the southwest region of the United States. Each of the course sections met twice per week for 1.5 hr each class period. One of three graduate teaching fellows (TFs) taught each course section under the supervision of a faculty adviser.7 A group of graduate and undergraduate student academic assistants (SAAs) facilitated each course section and were responsible for data collection, course implementation, and grading (graduate-level SAAs only) for a group of students (hereafter referred to as a caseload). Each section of the course enrolled between 13 and 38 students and was supported by two to five undergraduate or graduate SAAs in addition to the TF (see Table 1). In addition to assisting the TF with other course components, SAAs assigned points for students’ completed TAFMEDS timings, assisted students with in-class TAFMEDS timings, responded to students’ questions regarding the TAFMEDS procedures or forwarded such questions to the experimenters, and assisted the primary investigator in conducting and facilitating each of the activities involved in the cumulative checks (described in the section below entitled “Cumulative checks”). SAAs also monitored their caseload during daily timings to ensure that timings were completed correctly and to assist students as needed. SAAs monitored the charts for each student on their caseload to make decisions about student progress based on learning pictures (see the section on training for course personnel). Researchers did not exclude student data based on age, race, experience, or area of study.

Table 1.

Number of Participants in Each Course Section

| | Section 001 | Section 002 | Section 003 | Section 004 | Section 005 |
|---|---|---|---|---|---|
| # Students | 39 | 24 | 37 | 36 | 14 |
| # SAAs | 5 | 3 | 4 | 3 | 2 |
| Student/SAA ratio | 8/1 | 8/1 | 9/1 | 12/1 | 7/1 |

Note. SAAs = student academic assistants.

Each course section met in a technology-based classroom that was designed with podlike desks where students sat in groups of six to seven with laptop computers and large monitors available to each pod. In addition to the technology-based classroom, students could complete TAFMEDS timings at home, in the university computer labs, and in the departmental office where office hours were conducted for the course.

Materials

The materials included a SAFMEDS deck of 72 paper cards on which the terms and definitions were printed. Researchers selected terms from the key terms in the course textbook (Ledoux, 2014), as well as commonly used terms within the field of behavior analysis from the perspective of the course instructors. Each card included a behavior-analytic term on the front of the card and a short definition of the term, as well as the slice (described next) number and the number of syllables in the definition, on the back of the card (see Fig. 2). Course instructors and the faculty adviser controlled for the number of syllables per definition (the average number of syllables per card was 10 [range 4–15]). Organizing slices based on syllables tightened the sensitivity of the count.

Fig. 2.

Example of a SAFMEDS card. SAFMEDS cards were paper cards with the term on the front (left) and the definition, slice number, and number of syllables on the back (right).

The deck of 72 cards was divided into several smaller units, referred to as “slices,” resulting in 12 slices of six cards each. Slices were then combined to make four “chunks” (three slices of six terms resulting in 18 terms per chunk). The terminology included in each slice and chunk corresponded to the unit in the course at the time that slice or chunk was assigned for daily practice. For example, the fifth slice consisted of the terms “positive,” “negative,” “consequence,” “contingencies of reinforcement,” “negative reinforcement,” and “positive punishment” and corresponded to the course unit on the “Three-Term Contingency.” The terms and definitions included on the SAFMEDS cards were part of a deck that had undergone six revisions in order to revise terms and definitions that students found confusing, to create a smaller deck (from 131 terms to 72 terms), and to shorten the definitions as much as possible, adhering to suggestions from Graf and Auman (2005). During the semester in which TAFMEDS was implemented, course instructors also worked to balance syllables and characters across cards. Revisions always ensured that shortened definitions maintained the critical features of the original definition.
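The slice and chunk arithmetic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `partition_deck`, its parameters, and the placeholder card names are hypothetical, and the sketch assumes that slices are grouped into chunks in their original order.

```python
def partition_deck(cards, slice_size=6, slices_per_chunk=3):
    """Split an ordered deck into slices, then group consecutive slices into chunks."""
    if len(cards) % slice_size != 0:
        raise ValueError("deck size must be a multiple of slice_size")
    slices = [cards[i:i + slice_size] for i in range(0, len(cards), slice_size)]
    if len(slices) % slices_per_chunk != 0:
        raise ValueError("slice count must be a multiple of slices_per_chunk")
    chunks = []
    for i in range(0, len(slices), slices_per_chunk):
        group = slices[i:i + slices_per_chunk]
        # Flatten the grouped slices into one list of cards per chunk.
        chunks.append([card for one_slice in group for card in one_slice])
    return slices, chunks


# Placeholder card names; the actual 72 terms are not listed here.
deck = [f"term_{n:02d}" for n in range(1, 73)]
slices, chunks = partition_deck(deck)
print(len(slices), len(chunks))  # 12 slices and 4 chunks for a 72-card deck
```

With the defaults, each slice holds 6 cards and each chunk holds 18, matching the deck structure reported in the text.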

The same terms and definitions, controls, slices, and chunks were used for TAFMEDS. Students completed TAFMEDS practice on computers using a program that was created in Visual Basic using Visual Studio. The program was converted for use with Mac computers in Visual Studio for Mac. To prevent students from accessing other websites or programs to view definitions, the program was designed to fail if students shifted the keyboard or mouse focus away from it. From an instructional perspective, this prevented students from engaging in copy-text behavior, which was not the goal of the TAFMEDS practice. When a student logged in, the program first presented a prompt that required the student to enter a unique identification number, referred to as a TAFMEDS ID, based on the section number for that student’s class, which allowed the data to be plotted on the correct chart in Chartlytics.

Course instructors received the TAFMEDS program file, an encryption/decryption program, and the list of terms and definitions. SAAs and TFs updated and edited the terms and definitions as necessary before encrypting the file. The TAFMEDS terms and definitions were imported into the program from a .xlsx file created using Microsoft Excel that contained the term, the correct definition, the slice number, the date assigned, and the chunk number. Students did not have access to this file. The program presented only the terms that matched the currently assigned terms based on the assigned date provided within this file. Terms were numbered and randomized with a random number generator first; then they were shuffled four times before a term was displayed. Experimenters used a TAFMEDS encryption/decryption program to encrypt the TAFMEDS terms and definitions file, generating a new file. The encrypted terms and definitions file could not be edited by students, and the TAFMEDS program ran successfully only if it referenced this encrypted file. This encrypted file, the TAFMEDS program, and instructional documents were distributed to students via Blackboard™. Students were instructed to download the program and the encrypted terms file into the same directory/folder.
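The selection and randomization steps described above can be sketched as follows. This is a minimal illustration in Python, not the Visual Basic program used in the course. The `Term` record, the function names, the exact-date matching rule, the sample dates, and the placeholder definitions are all assumptions made for the example.

```python
import random
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Term:
    # Fields mirror the columns reported for the terms file.
    term: str
    definition: str
    slice_number: int
    date_assigned: date
    chunk_number: int


def terms_for(day, all_terms):
    """Keep only terms assigned for `day` (exact-date match is an assumption)."""
    return [t for t in all_terms if t.date_assigned == day]


def presentation_order(terms, rng, passes=4):
    """Number the terms, order them by a random key, then shuffle `passes` times."""
    keyed = [(rng.random(), index, term) for index, term in enumerate(terms)]
    keyed.sort(key=lambda item: item[:2])  # sort on (random key, index) only
    order = [term for _, _, term in keyed]
    for _ in range(passes):
        rng.shuffle(order)
    return order


# Hypothetical rows; the definitions and dates are placeholders.
all_terms = [
    Term("positive", "(definition text)", 5, date(2017, 10, 2), 2),
    Term("negative", "(definition text)", 5, date(2017, 10, 2), 2),
    Term("consequence", "(definition text)", 5, date(2017, 10, 9), 2),
]
todays = terms_for(date(2017, 10, 2), all_terms)
order = presentation_order(todays, random.Random())
```

Passing the random number generator in as an argument keeps the sketch testable with a fixed seed. A single shuffle is already sufficient to randomize the order, so the four passes are kept here only to mirror the description.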

If no TAFMEDS practice was assigned for that day, then the program presented a window stating “No terms assigned today” and then closed. Similarly, the program closed if no terms file was available in the same directory as the TAFMEDS program file, and it displayed a message stating “No terms file found. Please download the terms file and/or make sure it is in the same directory as the TAFMEDS program.” To obtain assistance, students were instructed to e-mail an experimenter a screenshot of any error at the time it occurred. If an error occurred because the terms file was not working correctly, then the program closed after displaying a message stating “Critical error: terms file has been edited or corrupted! Please download a new copy.” An error of this type may have occurred if students edited the encrypted terms and definitions file or if SAAs and TFs uploaded the incorrect or unencrypted file. These checks ensured that students’ timings reflected practice with the assigned terms and definitions only.
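The three exit conditions described above can be summarized as a small decision function. The message strings are quoted from the text, but the function name, its parameters, and the order in which the checks run are assumptions, as the original program's control flow is not reported.

```python
from typing import Optional

NO_FILE = ("No terms file found. Please download the terms file and/or make "
           "sure it is in the same directory as the TAFMEDS program.")
CORRUPT = ("Critical error: terms file has been edited or corrupted! "
           "Please download a new copy.")
NO_TERMS = "No terms assigned today"


def startup_message(file_present: bool, file_valid: bool,
                    assigned_count: int) -> Optional[str]:
    """Return the message shown before closing, or None if a timing can start."""
    if not file_present:      # assumed to be checked first
        return NO_FILE
    if not file_valid:        # file was edited, corrupted, or left unencrypted
        return CORRUPT
    if assigned_count == 0:   # nothing scheduled for today
        return NO_TERMS
    return None


print(startup_message(True, True, 0))  # prints "No terms assigned today"
```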

At the end of a timing, the program generated an encrypted output file that was automatically uploaded to Chartlytics. The data were imported to Chartlytics, and timings were displayed on the students’ SCCs within Chartlytics. The program also automatically sent a copy of the file to a university e-mail address associated with this experiment. If for any reason the program failed to send the file to either Chartlytics or the university e-mail address, the program generated a message directing students to e-mail the file to the university e-mail address. All files generated by the program (output files) were placed in a directory. If necessary, course instructors could view these files later. Output files included students’ unique TAFMEDS ID; the date; the total number of terms presented; the number correct; the number incorrect; the number skipped; baseline characters per minute; the total time spent working on the program; a list of terms; a list of correct definitions; a list of student responses; a list of responses that were correct, incorrect, or skipped; a list of time taken per term; and a list of characters per minute per term. The output files also retained a count of how many attempts the students had engaged in when they submitted the daily practice for grading.

Data from each TAFMEDS timing (see Data Collection and Analysis) were exported directly to Chartlytics as soon as the timing ended, and Chartlytics automatically plotted the count per minute (rate) for correct and incorrect definitions and the celeration based on the raw data received from the TAFMEDS program, and displayed them on a daily per minute SCC for each student (Fig. 3). Students could view their timing performance on their charts immediately upon completion of their daily timing, at home or in class, by logging in to the Chartlytics website with the unique username and password associated with their SCC; however, they were not required to do so. Hovering over the celeration line that Chartlytics plotted on the SCC allowed a user to see the current celeration for correct responses and incorrect responses. SAAs and TFs could also view the timings on students’ charts immediately upon completion of a timing. SAAs had access to the charts for the students in their caseload, whereas TFs had access to all of the charts for the students enrolled in their course section(s). If a student completed more than one practice timing in a day, a setting within Chartlytics allowed for the best daily timing to be displayed on the SCC. The best timing was defined as the practice timing having the highest number of correct responses per minute regardless of the number of skips or errors made. This setting was applied to all student charts, but any other timings for each day were available for viewing by scrolling beneath the chart where each timing was displayed in a table format. The table displayed the total number of correct definitions and incorrect definitions, the counting time (duration of the practice timing), the date and time when the practice timing occurred, and the rate of correct and incorrect definitions per minute as calculated by Chartlytics (Fig. 3, bottom). 
Students used university-provided Dell laptop computers or personal computers during in-class timings (see Daily TAFMEDS practice timings below) and their personal computers to run at-home timings. The program required an Internet connection in order for data to be automatically exported to Chartlytics.
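The rate calculation and the best-daily-timing rule described above can be illustrated with a short Python sketch, together with one conventional way of estimating celeration. How Chartlytics computes these values internally is not described here, so the `Timing` record, the function names, and the least-squares fit on log-transformed rates are assumptions. Celeration is expressed below as the multiplicative change in rate per week.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Timing:
    day: int          # day index within the practice period (hypothetical field)
    correct: int
    incorrect: int
    minutes: float    # counting time

    @property
    def correct_per_min(self) -> float:
        return self.correct / self.minutes

    @property
    def incorrect_per_min(self) -> float:
        return self.incorrect / self.minutes


def best_daily_timings(timings):
    """Per day, keep the timing with the highest correct rate, ignoring errors."""
    best = {}
    for t in timings:
        current = best.get(t.day)
        if current is None or t.correct_per_min > current.correct_per_min:
            best[t.day] = t
    return [best[d] for d in sorted(best)]


def weekly_celeration(days, rates):
    """Fit log10(rate) against day by least squares; return the factor per 7 days."""
    if len(days) < 2 or len(set(days)) < 2:
        raise ValueError("need timings on at least two different days")
    if any(r <= 0 for r in rates):
        raise ValueError("zero rates need a separate charting convention")
    logs = [math.log10(r) for r in rates]
    mean_x = sum(days) / len(days)
    mean_y = sum(logs) / len(logs)
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, logs))
    return 10 ** (7 * sxy / sxx)


# Hypothetical data: two timings on day 0, one on day 7.
timings = [Timing(0, 4, 2, 1.0), Timing(0, 6, 3, 1.0), Timing(7, 12, 1, 1.0)]
best = best_daily_timings(timings)
celeration = weekly_celeration([t.day for t in best],
                               [t.correct_per_min for t in best])
```

In this made-up example the best correct rate rises from 6 to 12 per minute over 7 days, so the estimated celeration is approximately 2 (a doubling per week).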

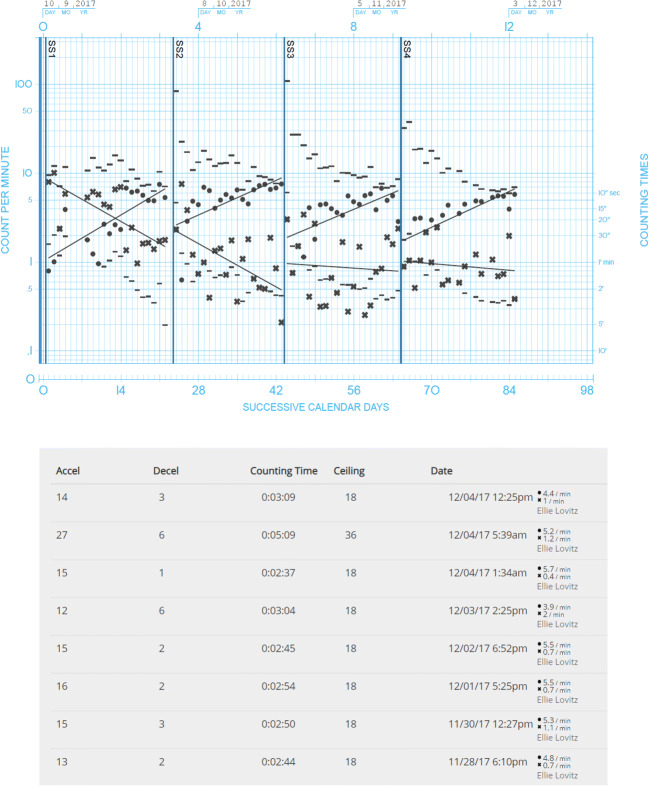

Fig. 3.

Example Chartlytics student SCC. Daily counts per minute for the number of correct TAFMEDS definitions typed (accel./dots) and the number of incorrect TAFMEDS definitions typed (decel./x’s) are plotted on the SCC. A table displayed below the SCC shows the number of correct definitions and incorrect definitions typed, the counting time, the number of terms displayed during the timing (ceiling), the date and time of the timing, as well as the calculated rate per minute for correct and incorrect definitions. This sample SCC shows the entire semester. Phase change lines (labeled SS1, SS2, SS3, and SS4) indicate when the cumulative check occurred and a new slice and/or chunk was introduced.

Experimenters created task analyses to teach undergraduate students, TFs, and SAAs how to run TAFMEDS practice timings. Experienced SAAs created videos to demonstrate the correct technique for running TAFMEDS timings.8 Task analyses, videos, and job aids were available to SAAs and TFs within a course-specific Google Drive, and a printed document was available for review and reference as needed in the course office.

Procedure

Training for course personnel

Experimenters conducted all trainings with SAAs and TFs in large and small groups during two 3-hr weekly meetings prior to the onset of the TAFMEDS activities in the course. The first training consisted of an introduction to the pragmatics of running the TAFMEDS course components with a focus on what students should be doing during this component of class time. This training also included information about using Chartlytics, grading student assignments, and coding data.

The second training component consisted of a brief review of PT literature and an extensive training on chart-based decision making based on Dr. John Eshleman’s Learning Pictures and Change Effects materials available at the PT hub and wiki (Eshleman, 2008). Training consisted of a short introductory lecture on the various learning pictures SAAs and TFs might encounter during the semester and the corresponding interventions recommended for each (see Fig. 1).9 This was followed by extensive small-group practice exercises in which TFs and SAAs looked at sample SCCs and determined if an intervention was needed, what the intervention would be, and how and why the intervention should be implemented. Figure 1 displays suggested interventions for each learning picture.

No formal measures of staff proficiency were taken, and data were not taken on training to criterion or on how frequently SAAs and TFs discussed learning pictures with each student; however, TFs in each course section and the faculty adviser monitored SAAs’ interaction with their caseload during class. Additionally, SAAs were encouraged to make chart-based decisions, but they could defer to the TF and faculty adviser whenever they were unsure of the next step to take. No one without experience doing so made chart-based decisions without the help of an experienced SAA, TF, or the faculty adviser. SAAs and TFs were considered experienced if they had been a part of the TSL for more than one semester and were familiar with the SCC. During weekly lab meetings, SAAs and TFs brought up any charts with concerns to be discussed. Significant concerns might have included several missed days of practice, extreme numbers of corrects per minute, and flatline charts with no acceleration of corrects or deceleration of incorrects. Chart-based decisions were discussed as a group, and SAAs and TFs could subsequently work with students to make necessary changes. Specific procedures for aim setting were adhered to, but implementation of chart-based decisions and feedback was the responsibility of individual instructional personnel using guidelines for continuing or making changes to how a student was engaging in daily practice.

Continue

SAAs and TFs were instructed to continue with no recommended changes when the frequency of correct responses was accelerating toward the aim—in other words, if students were meeting or exceeding the current celeration aim and the frequency of errors was maintaining below the record floor (as defined by the students’ baseline typing speed determined by that day’s typing warm-up) or decelerating. When students were meeting the celeration aim, SAAs and TFs were instructed to praise their performance while looking at the SCC and assign full points for the daily timing.

Change

SAAs and TFs were instructed to intervene and encourage a change in the way the student was practicing if the frequency of correct responses decelerated while the frequency of incorrect responses accelerated, if the celeration of correct responses maintained below the aim while the frequency of incorrect responses accelerated, if the frequency of correct responses decelerated even if the frequency of incorrect responses maintained, if the frequency of correct responses decelerated even if the frequency of incorrect responses also decelerated, or if the frequency of correct and incorrect responses both accelerated. Suggested interventions included dividing decks into smaller slices for practice, practicing in another learning channel, practicing “see term, read definition,” identifying and practicing the terms that were incorrect or skipped during the timing, and practicing typing skills.
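The continue and change guidelines above can be condensed into a small decision function. This is one possible reading of the guidelines, not a tool used in the course. The `Trend` enum, the `chart_decision` function, and the "discuss" outcome are hypothetical. The "discuss" outcome covers learning pictures that the text does not assign to either category, on the assumption that such charts would be raised at the weekly lab meeting.

```python
from enum import Enum


class Trend(Enum):
    ACCELERATING = "accelerating"
    MAINTAINING = "maintaining"
    DECELERATING = "decelerating"


def chart_decision(corrects, incorrects, meets_aim, errors_below_floor):
    """Classify a learning picture as 'continue', 'change', or 'discuss'.

    corrects / incorrects: Trend of each frequency on the SCC.
    meets_aim: True if the celeration of corrects meets or exceeds the aim.
    errors_below_floor: True if incorrects are holding below the record floor.
    """
    # Every "change" picture in the text involves decelerating corrects
    # or accelerating incorrects (or both).
    if corrects is Trend.DECELERATING or incorrects is Trend.ACCELERATING:
        return "change"
    errors_acceptable = incorrects is Trend.DECELERATING or (
        incorrects is Trend.MAINTAINING and errors_below_floor
    )
    if meets_aim and errors_acceptable:
        return "continue"
    # Assumption: pictures not covered by the stated rules go to group discussion.
    return "discuss"
```

For example, corrects accelerating at the aim with incorrects decelerating yields "continue", whereas corrects and incorrects both accelerating yields "change" even when the aim is met.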

Student performance contingencies

Students engaged in daily practice count-up timings with TAFMEDS in class and at home. Count-up timings referred to variable-duration practice timings with no time limit in which students responded to each term presented by typing the definition or pressing Enter to skip. Instructors used a count-up timing rather than a count-down timing, in which there is a set amount of time to respond to as many terms as possible in that time period, in order to provide students with the opportunity to engage in responding to each term regardless of their rate of performance. Had a count-down timing been used, students performing at lower rates may not have engaged with all of the terms and therefore may not have had the opportunity to learn each term and definition, which is problematic from an instructional perspective. Rate was calculated per minute after the timing had been completed based on the total duration of the timing.
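Because the duration of a count-up timing varies, counts are comparable across timings only after conversion to a per-minute rate. The following sketch shows that conversion; the function name and the example values are illustrative assumptions, not data from the study.

```python
def rate_per_minute(count, duration_seconds):
    """Convert a count from a variable-duration (count-up) timing to a per-minute rate."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return count * 60 / duration_seconds


# Hypothetical timing: 18 terms presented, 15 correct and 3 incorrect in 90 s.
corrects_rate = rate_per_minute(15, 90)    # 10.0 correct definitions per minute
incorrects_rate = rate_per_minute(3, 90)   # 2.0 incorrect definitions per minute
```

A student who needed 180 s to produce the same 15 correct definitions would be charted at 5.0 per minute, although both students responded to all 18 terms.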

In-class timings occurred at the beginning of each class meeting. On days in which students did not attend class, including weekends, they were required to complete at-home practice timings. Students earned up to five points for each daily practice timing. Points were contingent on whether students maintained an instructor-provided celeration aim. Students, SAAs, and TFs confirmed that students were meeting the celeration aim by hovering over the celeration line on the SCCs displayed in Chartlytics. Instructors encouraged students to check their charts after each timing to be sure the timing had been plotted correctly and that they had met their celeration aim. If they did not meet their aim on the first try, students had the opportunity to engage in more practice timings until the aim was met. Even though unlimited time was not available for timings in class, students could earn daily practice points by later completing the practice timing at home. SAAs assigned points for in-class timings once students had completed their timing and showed their SCCs displayed on Chartlytics to their assigned SAA. This ensured that students contacted their charts and allowed SAAs and TFs the opportunity to discuss charts and any potential changes with students at least twice per week.

TAFMEDS accounted for 500 of 1,600 total points in the course. Other activities included in-class guided notes, in-class reading checks, in-class shaping activities, and a behavior-change project with associated study guides, in-class vocal checkouts, in-class workshops, and a final paper. Corresponding point values for each course activity are shown in Table 2. Course instructors assigned TAFMEDS grades based on the celeration that Chartlytics calculated for each student based on the student’s past and present performance. For practice timings that occurred during the weeks leading to the first and second cumulative checks, students earned zero, one, two, three, or five points based on their celeration; for practice timings that occurred during the weeks leading to the third and fourth cumulative checks, students earned zero, one, three, five, or six points based on their celeration (see Table 3). Initially, in order for students to earn the highest number of points possible for the daily practice timing, five points, they must have maintained the celeration aim of x2, essentially doubling their performance frequency across 1 week. In other words, if a student typed four correct definitions per minute on Tuesday, the following Tuesday, the student would need to type eight correct definitions per minute in order to earn full points for that day’s TAFMEDS timing. The celeration ranges and associated point values (see Table 3) were determined based on guidelines related to aim values and their corresponding significance for accuracy improvement set forth in Kubina and Yurich (2012, p. 223), with corresponding significance ranging from very slight accuracy improvement for a celeration range between x1 and x1.2 to exceptional accuracy improvement for a celeration range between x2 and x3. 
A celeration aim, rather than a frequency aim, was chosen in order to provide students the opportunity to earn points by demonstrating increased proficiency regardless of the particular frequency at which they performed, due to the experimental nature of TAFMEDS. This allowed students to earn points based on their rate of learning and avoided penalizing students for low frequencies, as there are no established frequency aims for TAFMEDS. The course instructors’ goal for TAFMEDS was to see consistently improving student performance, not a specific frequency of correct definitions typed per minute.
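The arithmetic behind a celeration aim can be sketched as follows. This is a simplified two-point calculation offered for illustration only; it assumes that celeration is expressed as a multiplicative change per week and does not reproduce how Chartlytics fits a celeration line to a series of timings. Both function names are hypothetical.

```python
def weekly_celeration(first_rate, later_rate, days_apart):
    """Two-point estimate of celeration as a multiplier per week (x2 = doubling)."""
    if first_rate <= 0 or later_rate <= 0 or days_apart <= 0:
        raise ValueError("rates and days_apart must be positive")
    return (later_rate / first_rate) ** (7 / days_apart)


def rate_needed(first_rate, weekly_aim, days_apart=7):
    """Rate required after days_apart days to stay on a given weekly celeration aim."""
    if first_rate <= 0 or weekly_aim <= 0 or days_apart <= 0:
        raise ValueError("arguments must be positive")
    return first_rate * weekly_aim ** (days_apart / 7)


# The example from the text: 4 per minute on one Tuesday, 8 per minute the next.
weekly_celeration(4, 8, 7)   # 2.0, i.e., a x2 celeration
rate_needed(4, 2.0)          # 8.0 correct definitions per minute
```

Because the aim is a ratio rather than a fixed count, a student starting at 2 per minute and a student starting at 10 per minute face the same proportional requirement.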

Table 2.

Course Point Summary

| Course Component | Points |
|---|---|
| Guided notes | 150 |
| Reading checks | 280 |
| TAFMEDS | 500 |
| Shaping activities | 200 |
| Behavior-change project | 470 |
| Total | 1,600 |

Table 3.

Point Contingencies for TAFMEDS

| Celeration Range | CC1 Points | CC2 Points | CC3 Points | CC4 Points |
|---|---|---|---|---|
| <x1 | 0 | 0 | 0 | 0 |
| x1–x1.1 | 1 | 1 | 1 | 1 |
| x1.2–x1.4 | 2 | 2 | 3 | 3 |
| x1.5–x1.9 | 3 | 3 | 5 | 5 |
| >x1.9 | 5 | 5 | 6 | 6 |

Note. CC = cumulative check
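The point contingencies in Table 3 can be expressed as a lookup, sketched below. The function name is hypothetical. The sketch assumes that celerations are rounded to one decimal place before lookup, which closes the gaps between the printed ranges (e.g., between x1.1 and x1.2). The source does not state how such values were handled.

```python
# (lower bound in tenths, points for CC1-CC2, points for CC3-CC4), from Table 3.
POINT_BANDS = [
    (20, 5, 6),  # > x1.9
    (15, 3, 5),  # x1.5 to x1.9
    (12, 2, 3),  # x1.2 to x1.4
    (10, 1, 1),  # x1 to x1.1
]


def points_for_celeration(celeration, cumulative_check):
    """Return the daily practice points for a celeration before a given cumulative check."""
    if cumulative_check not in (1, 2, 3, 4):
        raise ValueError("cumulative_check must be 1, 2, 3, or 4")
    # Assumption: celerations are compared after rounding to one decimal place.
    tenths = round(celeration * 10)
    column = 1 if cumulative_check <= 2 else 2
    for band in POINT_BANDS:
        if tenths >= band[0]:
            return band[column]
    return 0  # celeration below x1
```

For instance, a x1.5 celeration earns three points before Cumulative Checks 1 and 2 but the full five points before Cumulative Checks 3 and 4.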

As there is no established template for how to conduct TAFMEDS in the classroom, instructors relied on experience with SAFMEDS to inform the instructional design for TAFMEDS, which at times led to the need for instructional changes to be made based on students’ performance and feedback. Instructors adjusted point values between Cumulative Checks 2 and 3 based on student performance and the exploratory nature of assigning point values based on celeration. Because the majority of students failed to meet the preestablished aim of x2 celeration during the practice leading up to the first and second cumulative checks, instructors adjusted the aim to x1.5 after the second cumulative check. In other words, a student who typed four definitions correct per minute on Tuesday needed to type six definitions correct per minute on the following Tuesday in order to earn full points for that timing. Instructors gave one extra-credit point if a student maintained a celeration higher than x1.9, in accord with Kubina and Yurich’s (2012, p. 23) designation of this celeration range as exceptional performance.

Higher stakes cumulative checks occurred in class after students completed in-class and at-home daily practice for each chunk (three slices of terms and definitions) over a 3-week practice period. Students could earn up to 25 points for participating in all of the activities associated with the higher stakes cumulative checks (stability checks, endurance checks, tests for generativity, application checks, and tests for learning channel transfer) regardless of their performance in each activity (described in more detail below in the sections with the headers that correspond to each type of check listed).

Daily TAFMEDS practice timings

Daily practice timings for TAFMEDS included both at-home and in-class conditions. Students completed daily practice in class each day that a class was scheduled when there was not a scheduled cumulative check. At-home practice timings occurred on all days of the week that students did not attend class.

Students began by opening the TAFMEDS program, entering their TAFMEDS ID in the response window, and clicking “OK.” If the TAFMEDS timing was the first timing completed that day, then students completed a baseline timing before their actual TAFMEDS timing. The baseline timing was presented as a typing warm-up exercise and consisted of a series of third grade–level words. The program instructed students to type a definition or synonym in the box below the term in order to generate a baseline typing speed. Students could not skip these terms. This provided a baseline typing speed for each student per day that served as the record floor and gave students the opportunity to have a typing warm-up before they began practicing their terms and definitions. At the end of the typing baseline, or after entering the TAFMEDS ID for all timings following the first timing of the day, a new prompt was displayed that instructed students to type the definition in the box below the displayed TAFMEDS term or press Enter to skip. Students clicked “OK” when they were ready to begin. Students saw the term on the screen, typed the definition in the response box below the term, and pressed the Enter key either when they were finished with a definition or to skip a term.

Once each term assigned had been displayed once, the program displayed the results in a table that included the terms the student answered incorrectly and the terms the student skipped, along with the correct definitions for each, as well as the total number of corrects, incorrects, and skips. The program reminded students to log in to Chartlytics upon completing a timing in order to view their SCC and verify that their timing was correctly plotted and that the celeration met the aim for the day.

For in-class timings, after the completion of a timing, SAAs and TFs verified that students had completed a timing and that their data were plotted correctly on their SCCs. SAAs and TFs also verified that students had met the celeration aim to earn points for that practice timing. This also allowed for the opportunity for SAAs and TFs to view each student’s chart and discuss learning pictures and any changes that might be necessary for any individual student, as described previously. About 10 min of each class period were dedicated to TAFMEDS timings, and students could use the time to complete extra timings if they did not meet their aim on the first timing or to discuss their SCC with their assigned SAA or the TF.

Instructors assigned a new slice each subsequent week. For example, in the first week of TAFMEDS, students practiced Slice 1; in the following week, students practiced Slices 1–2. This procedure was followed until students completed the first chunk (Slices 1–3) of TAFMEDS terms and definitions. Course instructors initially assigned a new slice each week in accordance with procedures that were followed in prior semesters for SAFMEDS activities, and they adjusted the procedure as student performance and feedback indicated the need. For Chunks 2 through 4, instructors assigned all 18 terms at the beginning of the 3-week period during which students would engage in practice with those terms, removing a barrier for students to reach higher performance frequencies. For example, during the week in which Slice 1 was assigned, only six terms were presented in the TAFMEDS program. If a student typed all definitions correctly in the first timing, the only way for the student to maintain the celeration aim was to speed up each subsequent timing at a x2 or x1.5 rate. A ceiling effect in terms of how quickly students could type affected their ability to meet the celeration aim when they had fewer terms presented and thus fewer response opportunities. Instructors attempted to remedy this by presenting all 18 terms and definitions for every timing during the entire period of time leading to the cumulative check.
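The ceiling effect described above follows from the multiplicative nature of a celeration aim: the required rate grows geometrically, whereas typing speed does not. The sketch below projects the week in which the required rate would first exceed a typing ceiling. The function name, the starting rate, and the ceiling of 12 definitions per minute are hypothetical values chosen for illustration and are not data from the study.

```python
def first_week_above_ceiling(start_rate, weekly_aim, ceiling_rate, max_weeks=52):
    """Return the first week (0 = starting week) in which the rate required by the
    celeration aim exceeds the ceiling, or None if that does not happen in max_weeks."""
    if start_rate <= 0 or ceiling_rate <= 0 or weekly_aim <= 1:
        raise ValueError("rates must be positive and weekly_aim must exceed 1")
    required = start_rate
    for week in range(max_weeks + 1):
        if required > ceiling_rate:
            return week
        required *= weekly_aim  # the aim multiplies the required rate each week
    return None


# Hypothetical student starting at 4 correct per minute with a ceiling of 12.
first_week_above_ceiling(4, 2.0, 12)   # 2: required rates are 4, 8, 16
first_week_above_ceiling(4, 1.5, 12)   # 3: required rates are 4, 6, 9, 13.5
```

Under these assumed values, lowering the aim from x2 to x1.5 delays the ceiling by only 1 week, which is consistent with the instructors' decision to also increase the number of response opportunities per timing.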

Cumulative checks

Cumulative checks occurred four times during the semester, each on the first class day after the third slice of a chunk had been assigned. Stability, endurance, and application checks, as well as tests for generativity and transfer across learning channels, occurred during the cumulative checks. Students received 25 points for completing all five activities associated with these fluency outcomes; instructors allocated points based on participation and not on performance. Instructors set up five stations, one for each of the five activities, in the classroom before the cumulative check started. During the cumulative check, students rotated through each station at their own pace and received SAA or TF initials on a checkout form verifying completion of each station. Checkout forms also included a space to record the data.

Data recorded on the checkout form included the number of corrects, incorrects, and skips; the duration for the endurance, application, generativity, and stability checks; and the duration for the test for learning channel transfer. The first author was present during all cumulative checks for all course sections across the semester, and SAAs and TFs ensured the accuracy of the recorded data and initialed the student’s checkout form before the student moved to the next station. SAAs and TFs each had an assigned station during the cumulative check and received a brief training on that particular station immediately before the cumulative check, as well as a printed task analysis to which they could refer for any questions. If the course section did not have enough SAAs to cover each station, volunteer SAAs or TFs were recruited from other course sections, or the faculty adviser would help.

Stability

Stability checks followed the same format as the daily practice timings; however, during the stability checks, students used headphones to listen to an audiobook recording of Walden Two (Skinner, 1948) read aloud by B. F. Skinner. After completing the timing, students recorded the number of correct, incorrect, and skipped definitions, as well as the time to complete the stability timing on the checkout form. The assigned SAA or TF at this station assisted students in playing the audiobook, ensured that students were listening to the audiobook during the entire timing, and verified that the correct information was plotted on the student’s SCC and recorded on the data sheet at the end of the timing. The stability check provided the opportunity to assess any differences in performance on TAFMEDS in the face of distraction between students who had performed at higher rates on their daily TAFMEDS timings and those that had performed at lower rates on their daily TAFMEDS timings.

Endurance

Endurance checks also followed the general format of the daily practice timings with one exception: each of the 18 terms appeared twice within the TAFMEDS program for a total of 36 response opportunities. Upon completing the timing, students recorded the number of correct, incorrect, and skipped definitions, as well as the time to complete the endurance timing on the checkout form. The assigned SAA or TF at this station verified that students completed the endurance timing and that the correct information was plotted on the student’s SCC and recorded on the data sheet at the end of the timing. The endurance check provided the opportunity to assess any differences in rates of performance on TAFMEDS over a longer duration of time and whether any differences between students’ performance on the endurance check correlated with differences in their rate of performance on daily TAFMEDS timings.

Application

For the application check, students completed a pen-and-paper handout that included definitions for 10 of the terms that differed from the definitions students had studied for TAFMEDS.10 Correct responding on the application check demonstrated students’ ability to apply what they learned when studying the terms with TAFMEDS in a new context by responding with the correct term when given a definition that was not identical to the one with which they had previous experience from their TAFMEDS practice. Experimenters selected terms for inclusion on the application check randomly from the 18 terms that students had studied in the weeks leading to that cumulative check. One of the main differences between TAFMEDS definitions and those present on the application check was the length of definitions, but wording also differed (see Table 4 for examples). Experimenters obtained definitions from the glossaries of various behavior-analytic textbooks (e.g., Cooper, Heron, & Heward, 2007; Ledoux, 2014; Miller, 2006). SAAs and TFs instructed the students to set a timer before beginning the application check and then to write the term they had studied with TAFMEDS that best corresponded to the presented definition. Upon completion of the activity, students recorded the total time to complete the activity on the checkout form, and the first author scored the number of correct, incorrect, and skipped terms and calculated correct responses per minute at a later time.

Table 4.

Example Application Check Definitions and Their Corresponding TAFMEDS Definitions

| Term | Application Check Definition | TAFMEDS Definition |
|---|---|---|
| Overt | Behavior that is publicly observable and measurable | Outside the skin |
| Independent variable | A condition or procedure that is systematically varied to observe its effect on behavior | Environmental change |
| Science | The systematic study of relations between phenomena, and the formulation of those relations into scientific laws that predict when events occur and the conditions required | Systematic approach to understanding events |
| Operant | The behavior that operates on the environment to produce a change, effect, or consequence | Behavior controlled by antecedents and consequences |
| Respondent | The response component of a reflex; behavior that is elicited, or induced, by antecedent stimuli | Behavior controlled by antecedents |
| Negative reinforcement | The procedure of following a behavior with an event that, when terminated or prevented by a behavior, increases the rate of that behavior | Removed consequences increase behavior |
| Reinforcement | Occurs when a stimulus change immediately follows a response and increases the future frequency of that type of behavior in similar conditions | Process by which consequences increase behavior |
| Extinction burst | An increase in the frequency of responding when an extinction procedure is initially implemented | Brief behavior increase at start of extinction |
| Radical behaviorism | A thoroughgoing form of behaviorism that attempts to understand all human behavior, including private events such as thought and feelings, in terms of controlling variables in the history of the person and the species | Skinner; covert events are behavior |
| Methodological behaviorism | A philosophical position that views behavioral events that cannot be publicly observed as outside the realm of science | Watson; covert events are not behavior |

Generativity

Students completed a pen-and-paper handout consisting of fill-in-the-blank questions or word problems associated with the TAFMEDS terms and definitions.11 Correct responding on the generativity check demonstrated a student’s ability to solve novel problems that were not explicitly trained using the terms and definitions the student had studied with TAFMEDS. SAAs and TFs instructed students to set a timer and begin working on the handout, answering each question to the best of their ability. Experimenters selected terms from the most recently practiced TAFMEDS timings. The first author scored the number of correct, incorrect, and skipped terms and calculated correct responses per minute at a later time. Instructors were interested in whether the daily TAFMEDS rate of performance correlated with students’ ability to perform at a high rate of correct responses on a novel task using the terms and definitions they had studied with TAFMEDS.

Learning channel assessment

Students completed count-up timings in the see-say learning channel associated with SAFMEDS. SAAs and TFs served as listeners and scorers. The SAA or TF set a timer and ensured that students shuffled the deck of 18 terms and definitions. Students covertly read the term on the front of the card and either attempted to say the definition or placed the card in a skip pile. The SAA or TF sorted the cards based on whether they were correct, incorrect, or skipped; correct, incorrect, and skipped definitions were counted at the end of the timing. The timing ended when the student had responded to or skipped each of the 18 cards. Corrects, incorrects, and skips were recorded on the checkout form, along with the duration of the timing. The first author calculated responses per minute at a later time. Instructors were interested in whether the rate of performance on TAFMEDS correlated with the rate of performance on SAFMEDS, indicating possible learning channel transfer between the see-say learning channel of SAFMEDS and the see-type learning channel of TAFMEDS.

Maintenance

Tests for maintenance occurred during the following semester for those students enrolled in the next course in the sequence, Introduction to Behavior Principles 2. Thirty students participated in the maintenance check. The TAFMEDS maintenance check included all 72 terms from the prior semester, and the TAFMEDS program recorded the number of correct, incorrect, and skipped definitions and reported the duration of the timing. Students earned 5 extra-credit points for participating in the maintenance check.

Dependent Variables

Although data on several variables were collected throughout the implementation of the instructional sequence, the following dependent variables were the focus of this experiment. The dependent variables included the total number of correct definitions typed per minute during daily practice timings and during the retention check in the following semester, as well as each of the dependent measures collected during the cumulative checks. Data collected during the cumulative checks included the total number of correct definitions typed per minute during the TAFMEDS timing and the stability and endurance timings, the total number of correct definitions spoken per minute during the SAFMEDS timing, and the total number of correct responses written per minute on the application and generativity checks.

Data Collection and Analysis

The TAFMEDS program collected data automatically for TAFMEDS daily practice, cumulative checks (stability and endurance), and the maintenance check. Students, supervised by trained SAAs and TFs, collected data for stability, generativity, application, and learning channel transfer checks. SAAs and TFs collected data during cumulative checks, including the number of student responses that were correct, the number of student responses that were incorrect, and the number of student responses that were skipped, as well as the total time spent on SAFMEDS timings, stability timings, and endurance timings. Additionally, the computer program reported data for characters per minute for correct and incorrect definitions for TAFMEDS, and SAAs and TFs recorded syllables per minute for correct and incorrect definitions for SAFMEDS. SAAs and TFs recorded the total time spent on the application and generativity checks as students completed those activities. At a later date, the first author scored application and generativity checks in order to report correct, incorrect, and skipped terms.

In the SAFMEDS procedure, which was used for learning channel transfer checks and checks for response generalization for a short period at the onset of the semester,12 corrects were counted if the student vocally responded with a definition that matched (had point-to-point correspondence; Skinner, 1957) the definition written on the back of the card. Errors were counted if the student vocally responded with a definition that did not match the definition written on the back of the card (did not have point-to-point correspondence). Skips were counted if the student did not attempt to vocally respond for a card or said “skip,” “pass,” or an equivalent vocal response.

In the TAFMEDS procedure, corrects were counted if the definitions that were entered into the response window below the term matched the programmed definition. This requirement excluded any punctuation within the definition (e.g., “using an event to ‘explain’ itself” would not require participants to type the quotation marks) and accounted for simple spelling errors. Skips were counted for those terms for which the student made no attempt at a definition but instead pressed the Enter key. Errors were counted for those terms for which students entered definitions that did not meet the criteria for corrects.
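A scoring rule of this kind could be sketched as follows. This is not the TAFMEDS program’s code. The function names, the use of `difflib.SequenceMatcher`, and the 0.9 similarity threshold are assumptions introduced for illustration, because the source does not describe how simple spelling errors were detected.

```python
import string
from difflib import SequenceMatcher

# ASCII punctuation plus curly quotation marks, all ignored when scoring.
_PUNCTUATION = string.punctuation + "“”‘’"
_STRIP_TABLE = str.maketrans("", "", _PUNCTUATION)


def normalize(text):
    """Lowercase the text, drop punctuation, and collapse runs of whitespace."""
    return " ".join(text.translate(_STRIP_TABLE).lower().split())


def score_response(typed, programmed, tolerance=0.9):
    """Score one typed definition as 'correct', 'incorrect', or 'skip'.

    Assumption: a response counts as a simple spelling error (still correct)
    when its similarity ratio to the programmed definition is >= tolerance.
    """
    if not typed.strip():
        return "skip"  # the student pressed Enter without attempting a definition
    attempt, target = normalize(typed), normalize(programmed)
    if attempt == target:
        return "correct"
    if SequenceMatcher(None, attempt, target).ratio() >= tolerance:
        return "correct"
    return "incorrect"
```

With this sketch, typing the definition from the example above without its quotation marks is scored as correct, and an empty response is scored as a skip. A fixed similarity threshold is a blunt instrument, and a production scorer would likely need term-specific checks to avoid accepting near-miss definitions that change the meaning.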

Experimenters and/or Chartlytics used these raw data to calculate individual rates and celerations for students’ performances for daily checks and cumulative checks (stability, endurance, generativity, response generalization to a different learning channel, and application), as well as the maintenance check.

Experimental Design

A traditional single-subject experimental design was not employed. Experimenters were interested in several correlations between some of the procedural variables employed with TAFMEDS (e.g., daily practice), students’ performance frequencies and celerations during the use of TAFMEDS, and the outcomes frequently reported as measures of fluency (i.e., MESsAGe). Each of the correlations calculated related to one or more of the research questions asked at the onset of the study.

Experimenters calculated the following Pearson correlations:

the correlation between the number of correct definitions typed per minute on the last TAFMEDS timing before a cumulative check and the celeration at the time of the cumulative check;

the correlation between the total number of days a student engaged in TAFMEDS practice in the 3-week period before the cumulative check and the corresponding celeration as displayed on the TAFMEDS SCC at the time of the cumulative check;

the correlation between the total number of days a student engaged in TAFMEDS practice in the 3-week period before the cumulative check and the number of correct definitions typed per minute on a TAFMEDS timing during that cumulative check;

the correlation between a student’s performance on the TAFMEDS timing during the cumulative check and their corresponding performance on the stability, endurance, application, generativity, and SAFMEDS timings during that cumulative check; and

the correlation between the average number of correct responses per minute on all of the TAFMEDS timings over the course of the fall 2017 semester and performance frequencies on maintenance checks that occurred during the following semester for those students enrolled in the second half of the Behavior Principles course sequence.
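For reference, each of the correlations listed above is a Pearson product-moment coefficient computed over paired student measures. The following minimal sketch shows the computation together with the degrees of freedom reported in the r(df) notation. The function name and the data in the example are hypothetical and are not values from the study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return (r, df) for paired observations, where df = n - 2."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must be the same length")
    n = len(xs)
    if n < 3:
        raise ValueError("at least three pairs are required")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sum_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sum_xx = sum((x - mean_x) ** 2 for x in xs)
    sum_yy = sum((y - mean_y) ** 2 for y in ys)
    if sum_xx == 0 or sum_yy == 0:
        raise ValueError("r is undefined when either variable is constant")
    return sum_xy / sqrt(sum_xx * sum_yy), n - 2


# Hypothetical data: days of practice and correct definitions per minute.
days_practiced = [5, 9, 12, 15, 18, 21]
corrects_per_min = [2.0, 3.5, 3.0, 5.5, 6.0, 7.5]
r, df = pearson_r(days_practiced, corrects_per_min)
```

In the r(df) notation, a result reported as r(144) is based on 146 paired observations.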

Social Validity

Course-wide social validity questionnaires given to students at the end of the semester provided some measures of social validity. Students earned 10 extra-credit points for completing the questionnaire. Questionnaires prompted students to respond to questions that included what their most preferred course component was as it compared with TAFMEDS and why, as well as what their least preferred course component was as it compared with TAFMEDS and why. Students also answered questions regarding their use of any additional study tools, such as SAFMEDS, when studying the terms and definitions and whether they used any study aids while completing at-home practice timings.

Results

Results indicated that student performance generally followed instructor-provided celeration aims (see Tables 3 and 5). Students’ celerations were grouped across five categories (below a x1 per minute per week celeration, between x1 and x1.1, between x1.2 and x1.4, between x1.5 and x1.9, and greater than x2). Table 5 depicts the number of students who fell into each of the five celeration ranges for each cumulative check. In general, more students fell in those celeration ranges above x1.1 across all cumulative checks than in celeration ranges below x1.1, suggesting that learning was taking place for the majority of the students in the class.

Table 5.

Number of Students in Each Celeration Range per Cumulative Check

| Celeration range | CC1 | CC2 | CC3 | CC4 | Mean |
|---|---|---|---|---|---|
| <x1 | 10 | 8 | 8 | 4 | 7.5 |
| x1–x1.1 | 20 | 6 | 9 | 13 | 12 |
| x1.2–x1.4 | 49 | 26 | 31 | 28 | 33.5 |
| x1.5–x1.9 | 37 | 50 | 46 | 30 | 40.8 |
| >x2 | 29 | 51 | 43 | 55 | 44.5 |

Note. CC = Cumulative check
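The claim that most students fell in ranges above x1.1 can be checked directly from the counts in Table 5. The short sketch below is illustrative only: the dictionary layout and the helper name `share_above` are assumptions, while the counts are copied from the table.

```python
# Counts of students per celeration range, one entry per cumulative check (CC1-CC4).
table5 = {
    "<x1":       [10, 8, 8, 4],
    "x1-x1.1":   [20, 6, 9, 13],
    "x1.2-x1.4": [49, 26, 31, 28],
    "x1.5-x1.9": [37, 50, 46, 30],
    ">x2":       [29, 51, 43, 55],
}
ABOVE_X1_1 = ("x1.2-x1.4", "x1.5-x1.9", ">x2")

def share_above(table, above_labels):
    """Return, for each cumulative check, the proportion of students in the given ranges."""
    n_checks = len(next(iter(table.values())))
    shares = []
    for i in range(n_checks):
        total = sum(counts[i] for counts in table.values())
        above = sum(table[label][i] for label in above_labels)
        shares.append(above / total)
    return shares

shares = share_above(table5, ABOVE_X1_1)
for i, share in enumerate(shares, start=1):
    print(f"CC{i}: {share:.1%} of students above x1.1")
```

On these counts, roughly four out of five students or more fall above x1.1 at every cumulative check.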

Results of the Pearson correlation for students across all sections of the course indicated that across Cumulative Checks 1, 2, 3, and 4, there was no statistically significant correlation between students’ celerations and the number of definitions that students entered correctly per minute during the last TAFMEDS timing before the cumulative check, r(144) = .07, p = .40; r(139) = .19, p = .02; r(135) = .08, p = .35; and r(128) = .22, p = .01, respectively (see Fig. 4). Similarly, results of the Pearson correlation indicated that across Cumulative Checks 1, 2, 3, and 4, there was not a statistically significant correlation between the number of days of practice and students’ celerations except in Cumulative Check 4, r(144) = .06, p = .48; r(139) = .07, p = .38; r(135) = .11, p = .22; and r(128) = .28, p < .01, respectively (see Fig. 5). However, during Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of days of practice and the number of definitions correctly entered per minute, r(144) = .44, p < .001; r(139) = .43, p < .001; r(126) = .41, p < .001; and r(128) = .46, p < .001, respectively (see Fig. 6).

During Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of definitions entered correctly per minute on the TAFMEDS timing and the number of definitions correctly entered per minute on the stability timing, r(128) = .86, p < .001; r(121) = .84, p < .001; r(126) = .86, p < .001; and r(121) = .79, p < .001, respectively (see Fig. 7). During Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of definitions correctly entered per minute on a standard TAFMEDS timing and the number correct on the endurance timing, r(128) = .84, p < .001; r(120) = .92, p < .001; r(124) = .89, p < .001; and r(122) = .79, p < .001, respectively (see Fig. 8). During Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of definitions correctly entered per minute on a standard TAFMEDS timing and the number correct on the application check, except in Cumulative Check 1, r(134) = .15, p = .07; r(114) = .38, p < .001; r(124) = .27, p = .002; and r(120) = .46, p < .001, respectively (see Fig. 9). During Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of definitions correctly entered per minute on a standard TAFMEDS timing and the number correct on the generativity check, r(134) = .38, p < .001; r(114) = .42, p < .001; r(124) = .28, p = .002; and r(120) = .48, p < .001, respectively (see Fig. 10). During Cumulative Checks 1, 2, 3, and 4, results of the Pearson correlation indicated a statistically significant positive correlation between the number of definitions correctly entered per minute on a standard TAFMEDS timing and the number correct on the SAFMEDS timing, r(129) = .61, p < .001; r(120) = .77, p < .001; r(121) = .74, p < .001; and r(118) = .62, p < .001, respectively (see Fig. 11). These data are summarized in Table 6.
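For readers who want to see how statistics of the form r(df) = value, p = value are obtained, the following standard-library sketch computes a Pearson coefficient from paired scores and a two-tailed p value from r and its degrees of freedom (df = n − 2). It is a minimal illustration rather than the authors' analysis procedure: the function names are hypothetical, and the p value is approximated by numerically integrating the Student t density, where a statistics package would normally be used instead.

```python
import math

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length lists with at least 3 pairs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(t * t / df))

def two_tailed_p(r, df, steps=2000):
    """Approximate two-tailed p value for a correlation r with df = n - 2.

    Converts r to a t statistic, then integrates the t density from 0 to |t|
    with Simpson's rule (steps must be even).
    """
    if abs(r) >= 1:
        return 0.0
    t = abs(r) * math.sqrt(df / (1 - r * r))
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3
    return max(0.0, 1 - 2 * central_area)

# Reported value for TAFMEDS correct/min vs. celeration at Cumulative Check 1:
# r(144) = .07, which should give a p value close to the reported .40.
print(round(two_tailed_p(0.07, 144), 2))
```

Because p depends only on r and df, the same function can be used to confirm that a reported r and p pair are mutually consistent.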

Fig. 4.

Correlation between TAFMEDS correct per minute and celeration. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between the students’ celeration at the time of the cumulative check and students’ performance on the TAFMEDS timing at the time of the cumulative check for all students who participated in the cumulative check. The dotted line represents a celeration of x1.1. There is no correlation between the students’ celeration and their performance on the TAFMEDS timing, but the majority of students do fall above the x1.1 celeration line.

Fig. 5.

Correlation between the number of days of practice and celeration at the time of the cumulative check. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between the total number of days each student engaged in practice in the 3 weeks prior to the cumulative check and each student’s performance celeration at the time of the cumulative check for all students who participated in the cumulative check. There is no correlation between the number of days of practice and the students’ celeration at the time of the cumulative check.

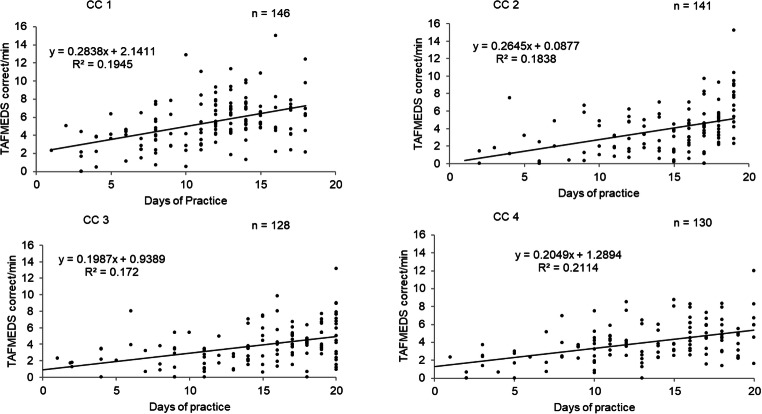

Fig. 6.

Correlation between the number of days of practice and performance on TAFMEDS timings. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between the total number of days each student engaged in practice in the 3 weeks prior to the cumulative check and that student’s performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between the number of days of practice and the number of correct responses per minute on the TAFMEDS timing for each of the four cumulative checks.

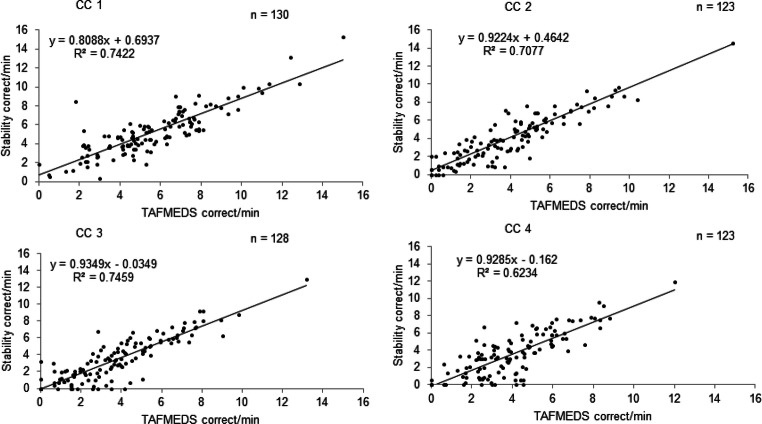

Fig. 7.

Correlation between performance on TAFMEDS timings and performance on stability timings. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between the students’ performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute and performance on the stability timing component of the cumulative check in terms of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between student performance on TAFMEDS timings and performance on stability timings for all four cumulative checks.

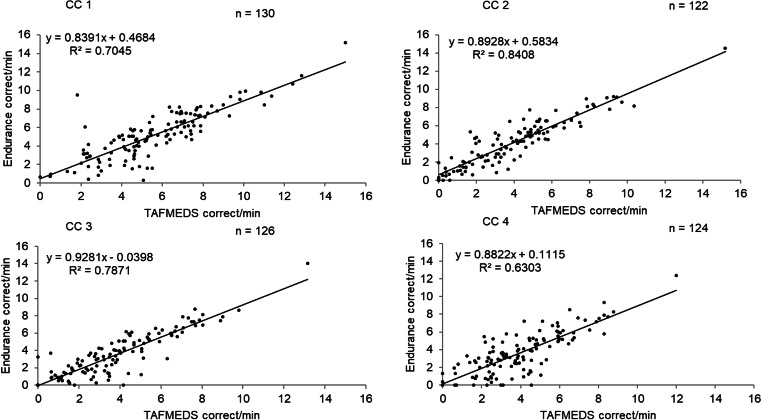

Fig. 8.

Correlation between performance on TAFMEDS timings and performance on endurance timings. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between the students’ performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute and performance on the endurance timing component of the cumulative check in terms of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between student performance on TAFMEDS timings and performance on endurance timings for all four cumulative checks.

Fig. 9.

Correlation between performance on TAFMEDS timings and performance on application checks. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between students’ performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute and performance on the application check component of the cumulative check in terms of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between student performance on TAFMEDS timings and performance on the application check for all four cumulative checks.

Fig. 10.

Correlation between performance on TAFMEDS timings and performance on generativity checks. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between students’ performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute and performance on the generativity check component of the cumulative check in terms of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between student performance on TAFMEDS timings and performance on the generativity check for all four cumulative checks.

Fig. 11.

Correlation between performance on TAFMEDS timings and performance on SAFMEDS timings. Each quadrant represents a cumulative check (CC1, 2, 3, and 4). Each graph depicts the correlation between students’ performance on the TAFMEDS timing at the time of the cumulative check in terms of the number of correct responses per minute and performance on the SAFMEDS timing component of the cumulative check in terms of correct responses per minute for all students who participated in the cumulative check. There is a positive correlation between student performance on TAFMEDS timings and performance on SAFMEDS timings for all four cumulative checks.

Table 6.

Pearson’s Correlation Data for Each Cumulative Check

| Comparison | n | r | p |
|---|---|---|---|
| Cumulative Check 1 | | | |
| TAFMEDS correct/min vs. celeration | 146 | .07 | .40 |
| Days of practice vs. celeration | 146 | .06 | .48 |
| Days of practice vs. TAFMEDS correct/min | 146 | .44 | <.001 |
| TAFMEDS correct/min vs. stability correct/min | 130 | .86 | <.001 |
| TAFMEDS correct/min vs. endurance correct/min | 130 | .84 | <.001 |
| TAFMEDS correct/min vs. application | 136 | .15 | .07 |
| TAFMEDS correct/min vs. generativity | 136 | .38 | <.001 |
| TAFMEDS correct/min vs. SAFMEDS correct/min | 131 | .61 | <.001 |
| Cumulative Check 2 | | | |
| TAFMEDS correct/min vs. celeration | 141 | .19 | .02 |
| Days of practice vs. celeration | 141 | .07 | .38 |
| Days of practice vs. TAFMEDS correct/min | 141 | .43 | <.001 |
| TAFMEDS correct/min vs. stability correct/min | 123 | .84 | <.001 |
| TAFMEDS correct/min vs. endurance correct/min | 122 | .92 | <.001 |
| TAFMEDS correct/min vs. application | 116 | .38 | <.001 |
| TAFMEDS correct/min vs. generativity | 116 | .42 | <.001 |
| TAFMEDS correct/min vs. SAFMEDS correct/min | 122 | .77 | <.001 |
| Cumulative Check 3 | | | |
| TAFMEDS correct/min vs. celeration | 137 | .08 | .35 |
| Days of practice vs. celeration | 137 | .11 | .21 |
| Days of practice vs. TAFMEDS correct/min | 128 | .41 | <.001 |
| TAFMEDS correct/min vs. stability correct/min | 128 | .86 | <.001 |
| TAFMEDS correct/min vs. endurance correct/min | 126 | .89 | <.001 |
| TAFMEDS correct/min vs. application | 126 | .27 | .002 |
| TAFMEDS correct/min vs. generativity | 126 | .28 | .002 |
| TAFMEDS correct/min vs. SAFMEDS correct/min | 123 | .74 | <.001 |
| Cumulative Check 4 | | | |
| TAFMEDS correct/min vs. celeration | 130 | .22 | .01 |
| Days of practice vs. celeration | 130 | .28 | .001 |
| Days of practice vs. TAFMEDS correct/min | 130 | .46 | <.001 |
| TAFMEDS correct/min vs. stability correct/min | 123 | .79 | <.001 |
| TAFMEDS correct/min vs. endurance correct/min | 124 | .79 | <.001 |
| TAFMEDS correct/min vs. application | 122 | .46 | <.001 |
| TAFMEDS correct/min vs. generativity | 122 | .48 | <.001 |
| TAFMEDS correct/min vs. SAFMEDS correct/min | 120 | .62 | <.001 |

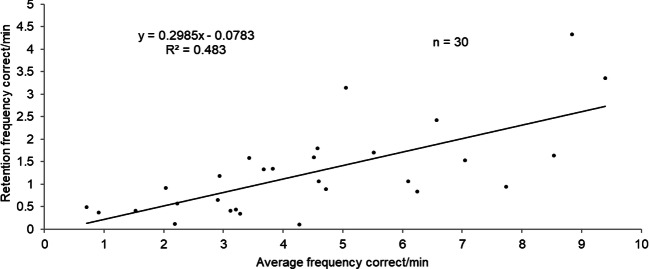

Results of the Pearson correlation for the maintenance check indicated a statistically significant positive correlation between the average frequency of definitions correct per minute across all TAFMEDS timings in the Introduction to Behavior Principles 1 course and the frequency of correct definitions per minute on the maintenance checks in the subsequent semester, r(28) = .69, p < .001 (see Fig. 12).

Fig. 12.

Correlation between average performance on TAFMEDS timings in fall 2017 and performance on maintenance checks in spring 2018. This graph represents the correlation between the average number of correct responses per minute on all TAFMEDS timings in the fall 2017 semester for 30 students and those students’ performance on a maintenance check that occurred in the following semester, spring 2018. There is a positive correlation between the average number of correct responses per minute across all TAFMEDS timing in fall 2017 and the number of correct responses per minute on the maintenance check in spring 2018.

Fifty-eight total social validity questionnaires were available for scoring.13 One student out of 58 reported that TAFMEDS was the most preferred of the three course activities, whereas 44 students reported that TAFMEDS was their least preferred course activity, and 13 students reported that TAFMEDS was neither the most nor the least preferred course activity. Twenty-three students out of 58 reported that they used additional study tools such as SAFMEDS when studying the terms and definitions, and 41 students out of 58 reported that study tools were used to aid in completion of at-home practice timings.

Discussion

The current study provided an analysis of performance outcomes that occurred with the use of TAFMEDS rather than the typical SAFMEDS flash card–based paradigm. Additionally, the researchers explored the outcomes associated with placing performance aims and grade contingencies on celerations rather than on frequencies, which are more commonly reported in the PT literature (Lindsley, 2000) and especially in the SAFMEDS literature (Urbina et al., 2019). Limitations, implications for instruction and course design, and suggestions for future research are discussed in what follows.

In general, students’ performance improved over time with respect to the number of TAFMEDS definitions entered correctly in each timing, as well as across fluency-derived outcomes of maintenance, endurance, stability, application, and generativity. Additionally, higher performances correlated with an increased number of daily practice timings. This finding suggests that those students who engaged in regular daily practice with TAFMEDS performed better on their TAFMEDS timings than those who engaged in fewer instances of daily practice. These students also performed better on maintenance, endurance, stability, application, and generativity checks.