Abstract

This study evaluated the effects of a staff training package on the frequency (rate) of trial presentations to children diagnosed with autism. The training package consisted of three phases: repeated timings with feedback, modeling plus frequency building, and in vivo modeling with the client plus frequency building. In each phase, the experimenters conducted 20-min training (frequency-building) sessions in which staff completed 1-min timings of trial presentation. Following the final phase (in vivo modeling and feedback), all three participants presented trials at a higher frequency than in baseline.

Keywords: Autism, Behavioral skills training, Frequency building, Staff training, Trial presentation

The quality of services that a child with autism receives relies heavily on the quality of training that direct staff members have received (Reed & Henley, 2015). There are a variety of training methods, one of which is behavioral skills training (BST; Nigro-Bruzzi & Sturmey, 2010). The components of BST are providing instructions that describe the skill, modeling the skill, rehearsing the skill, and providing repeated feedback on performance (Ward-Horner & Sturmey, 2012). Nigro-Bruzzi and Sturmey (2010) used BST to train staff on mand-training procedures; both staff performance and the number of unprompted mands emitted by the children increased, demonstrating that staff training procedures can lead to collateral gains in client performance.

Another way to improve child outcomes is to increase the number of learning opportunities presented and to increase fluency in responding (Greer & McDonough, 1999). Fluency in responding is important for both the learner and the staff member. Research has shown that performance below fluency on basic skills results in failure to perform more complex behavior (Binder, 1996). Fluent behavior includes maintenance of a skill over time (retention), the ability to perform the skill for longer periods (endurance), the ability to use the skill in distracting environments (stability), and the ability to apply the skill to novel situations or novel clients (application), known collectively as RESA in the precision-teaching (PT) community (Johnson & Layng, 1996). The ability to provide fast-paced instruction in any environment and across learners is particularly important given that higher rates of responding result in greater academic gains (Berens, Boyce, Berens, Doney, & Kenzer, 2003). If fluency is the goal for staff members, then instruction that aims to produce fluent behavior should be incorporated into staff training.

Fluency-based instruction, also known as frequency building, is an intervention that uses timed practice sessions with ongoing feedback and performance standards known as aims (Binder, 1996). Researchers have used frequency building as an effective parent-training tool: Haughton, Maloney, and Desjardins (1980) demonstrated that a single 20-min training session increased the speed and accuracy with which a parent implemented a skill acquisition program. Despite these outcomes, there is limited research on using frequency building for staff training. The purpose of the current study was to determine whether frequency building combined with BST could increase the number of trials presented by applied behavior analysis (ABA) therapists to children diagnosed with autism.

Method

Participants

Three female participants completed the study. Participant 1 was 24 years old and had worked with the agency for 7 months. She held a master’s degree in special education with a concentration in ABA. She had 1 year of experience in a special education classroom using the principles of ABA. Participant 2 was 26 years old and had worked with the agency for 6 months. She held a bachelor’s degree in recreational therapy and was in her final semester of a master’s program in ABA. She had 2.5 years of experience as an ABA therapist for other agencies serving children diagnosed with autism. Participant 3 was 40 years old and had worked with the agency for 11 months. She held a bachelor’s degree in history and had 2 years of previous experience in providing early intervention services to children with autism diagnoses.

Setting

Researchers conducted the study in a private center that provided ABA therapy to children aged 2 to 6 years. Baseline and probe sessions took place in the child’s individualized ABA therapy room or in the center’s playroom; training sessions were also conducted in the cafeteria and in the conference room. All rooms contained tables, chairs, individualized program materials, and toys. The playroom and therapy rooms contained art and craft supplies, blocks, cars, a pretend kitchen, puzzles, books, and a variety of other age-appropriate preschool toys that were used for instruction.

Design

A multiple-probe design (Horner & Baer, 1978) was used to assess the effectiveness of the intervention. Baseline probes measured the frequency of trials presented during therapy sessions prior to participants receiving training. Initial baseline probes continued until the data demonstrated a stable level of responding or a deceleration (a PT term for a decreasing trend) in trials; at that point, the researchers introduced the intervention to the first participant. Once the first participant completed the first training phase and the posttraining probe demonstrated an increase in trials, the second participant started training. Following an increase in trials in the second participant’s posttraining probe, the third participant entered the training phase.

Dependent Variable and Measurement

The dependent variable was the number of trials presented during one-on-one ABA therapy sessions. A trial was defined as a teacher-directed acquisition task to which the student responded and for which the teacher provided a consequence. An acquisition task was defined as a task that directly corresponded to a current treatment plan target selected by the behavior analyst. For example, while sitting at a table with the client, the therapist said, “Tell me one thing in this room that has stripes” (one of the child’s current targets was identifying items by feature). A nonexample would be the therapist saying “clap your hands” when hand clapping is a mastered skill not on the current data sheet. Researchers recorded the frequency of trials for the specified duration of each baseline probe, training timing, and posttraining probe by tallying every trial presented on a data sheet and totaling the tally marks at the end of the session. Error correction did not count as a new trial. All data were plotted on a standard celeration chart as count per minute.
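Converting a tallied trial count into the count per minute plotted on the standard celeration chart is a single division by the observation length. The sketch below assumes a hypothetical observer log in which each tally is recorded as a string, with error corrections logged separately so that they are excluded, as the trial definition requires; the function name and event labels are illustrative and are not part of the study's materials.

```python
from collections import Counter


def trials_per_minute(events: list[str], minutes: float) -> float:
    """Return the count per minute of trials in one observation or timing.

    events: hypothetical observer log; each entry is "trial" or
        "error_correction" (labels are illustrative assumptions).
    minutes: length of the observation or timing in minutes.
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    counts = Counter(events)
    # Error correction does not count as a new trial, so only "trial" entries are used.
    return counts["trial"] / minutes


# Hypothetical 10-min probe: 18 trials plus 3 error corrections.
probe = ["trial"] * 18 + ["error_correction"] * 3
print(trials_per_minute(probe, 10))  # 1.8
```

The same function covers both the 10-min probes and the 1-min training timings; for a 1-min timing the count and the rate are the same number.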

Procedures

Baseline and Posttraining Probes

The experimenter observed the participant providing one-on-one ABA therapy in the child’s individualized therapy room. The child observed in a participant’s first baseline probe was observed in all follow-up probes throughout the study. Two children were involved in the study; Participants 2 and 3 worked with the same child. Observations lasted 10 min, and the experimenter told the participant when each observation began and ended. No programmed consequences or feedback were given. Before each observation, the experimenter made a copy of the child’s daily data sheet for data collection. Posttraining probes were conducted after the completion of each treatment phase, using the same procedure as the baseline probes.

Training

All sessions started with 3 min to review the data sheet, set up materials, and ask clarifying questions if needed. Each 1-min timing measured the number of trials the participant presented from the client’s program, and the timer started after the participant presented the first trial. Following each timing, the researcher gave the participant feedback based on the current treatment phase, and timings continued until the training session reached the 20-min limit or the participant met the criterion for that training phase. The experimenter charted the results on the timings chart after each timing, and participants could see their charts throughout the training session. The training phases differed as follows.

Feedback. Participants received daily data sheets and the corresponding therapy materials for at least three different clients, without the clients present. After each timing, they received a brief verbal comment on the number of trials presented and then began another 1-min timing.

Modeling and feedback. Participants received a copy of their client’s daily data sheet and the corresponding therapy materials without the client present. The experimenter modeled or coached the participant through how to best arrange and present therapy materials. After each 1-min timing, the experimenter provided feedback on the number of trials presented and coached or modeled ways to increase the speed of trial presentations.

In vivo training with modeling and feedback. Participants received a copy of their client’s daily data sheet and the corresponding therapy materials with the client present in the therapy room or cafeteria. A senior-level therapist demonstrated setting up a session and presenting trials with the client at a rate of at least four trials per minute for 1 min. Following the demonstration, the participant completed a 1-min timing with the client. When the minute concluded, the participant received feedback on her rate of trial presentation and on potential ceilings she was placing on her performance. After the feedback, the participant completed another 1-min timing. Once her performance reached at least four trials per minute, the participant and client moved to a new location to continue training. The participant and experimenter continued conducting 1-min timings with feedback with the child until the 20-min training session was complete or until the participant demonstrated four to six trials per minute across at least two environments.
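The in vivo phase combines two decision rules: change location once a timing reaches at least four trials per minute, and end the session at the 20-min limit or once the participant has reached the aim in at least two environments. The sketch below encodes those rules under stated assumptions: every timing lasts exactly 1 min (so the trial count equals the rate), any rate of four or more is treated as meeting the "four to six trials per minute" range, and the `Timing` record and function names are hypothetical.

```python
from dataclasses import dataclass

SESSION_LIMIT_MIN = 20  # training sessions were capped at 20 min
AIM_LOW = 4  # lower bound of the 4-6 trials/min range, used here as the aim (assumption)
REQUIRED_ENVIRONMENTS = 2  # aim must be met in at least two locations


@dataclass
class Timing:
    location: str  # e.g., "therapy room" or "cafeteria"
    trials: int  # trials presented in one 1-min timing, so trials == rate


def should_change_location(latest: Timing) -> bool:
    """Move to a new location once a timing reaches at least four trials per minute."""
    return latest.trials >= AIM_LOW


def session_should_end(timings: list[Timing], elapsed_min: float) -> bool:
    """End at the 20-min limit or once the aim has been met in two or more locations."""
    if elapsed_min >= SESSION_LIMIT_MIN:
        return True
    locations_at_aim = {t.location for t in timings if t.trials >= AIM_LOW}
    return len(locations_at_aim) >= REQUIRED_ENVIRONMENTS


# Hypothetical session log.
log = [Timing("therapy room", 3), Timing("therapy room", 4), Timing("cafeteria", 5)]
print(should_change_location(log[1]))  # True
print(session_should_end(log[:2], elapsed_min=9))  # False: aim met in one location only
print(session_should_end(log, elapsed_min=12))  # True: aim met in two locations
```

Tracking locations in a set means repeated timings in the same room never count twice toward the two-environment requirement.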

Social Validity

A social validity questionnaire was e-mailed to the participants following the completion of the study. Participants were asked to return it, without their name, to the experimenter’s mailbox so that responses remained anonymous. The questionnaire contained seven questions rated on a 5-point scale ranging from strongly disagree to strongly agree, addressing the training and how participants perceived its procedures and effects. Questions focused on the extent to which participants felt that their skills had improved as a result of the training, that they enjoyed the training, and that their assigned child’s performance had improved as a result of the training.

Interobserver Agreement

Interobserver agreement (IOA) was assessed by having a second, independent observer record the frequency of trials during 36% of probe sessions, either from video recordings or live as the session occurred. Mean IOA was 94% (range, 91%–100%).
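The IOA formula is not stated above. For frequency data, one common choice is total count IOA: the smaller of the two observers' counts divided by the larger, multiplied by 100. The sketch below assumes that method; the function names are hypothetical, and the observer counts in the usage line are invented for illustration and are not the study's data.

```python
def total_count_ioa(count_a: int, count_b: int) -> float:
    """Total count IOA (%) for one session: smaller count / larger count x 100."""
    if count_a == count_b:  # also covers both observers recording zero trials
        return 100.0
    return min(count_a, count_b) / max(count_a, count_b) * 100


def summarize_ioa(pairs: list[tuple[int, int]]) -> tuple[float, float, float]:
    """Return (mean, minimum, maximum) IOA across sessions scored by both observers."""
    scores = [total_count_ioa(a, b) for a, b in pairs]
    return sum(scores) / len(scores), min(scores), max(scores)


# Hypothetical trial counts (primary observer, secondary observer) for two probes.
mean_ioa, low, high = summarize_ioa([(10, 12), (15, 15)])
print(f"Mean IOA {mean_ioa:.0f}% (range {low:.0f}%-{high:.0f}%)")
```

Total count IOA only compares session totals, so two observers could agree on the total while disagreeing about individual trials; interval-based methods are stricter if that distinction matters.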

Results

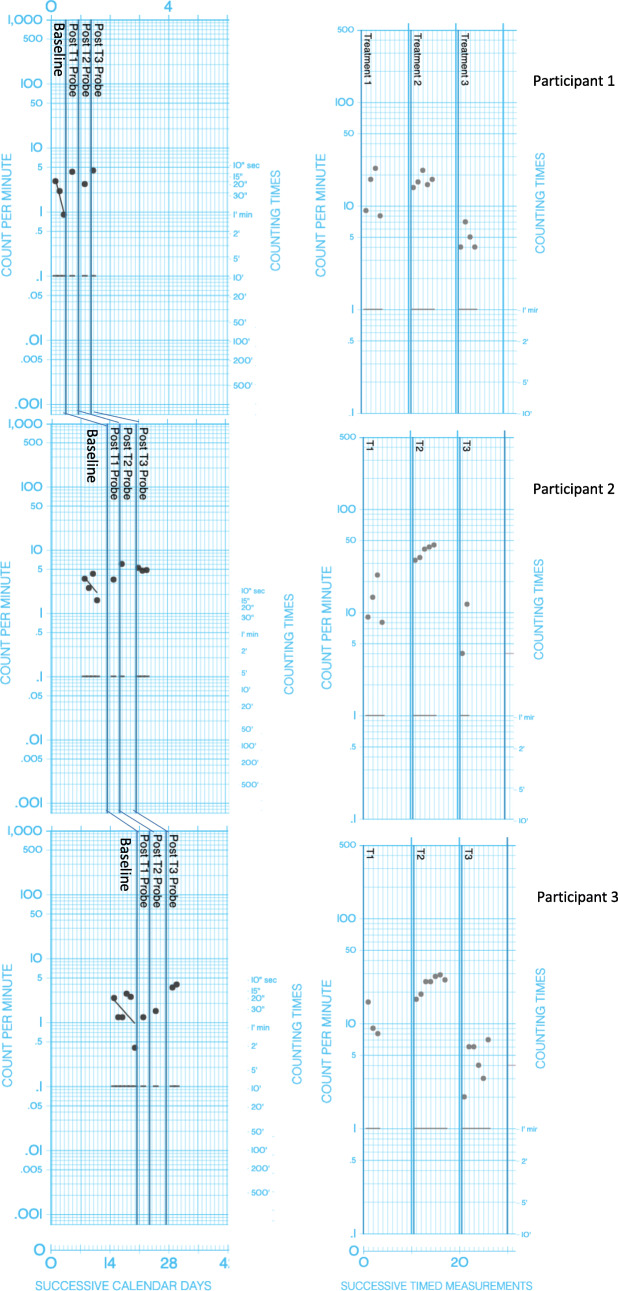

The results of the posttraining probes are displayed in Figure 1. Baseline showed a deceleration for all three participants. Baseline for Participants 2 and 3 also showed bounce (the PT term for variability) of ×2.3 and ×4, respectively. The level, or mean, of trial presentation during baseline was 1.8, 2.8, and 1.5 per minute for Participants 1, 2, and 3, respectively. Following the feedback phase (T1 in the figure), the frequency of trials increased from baseline levels by 2.4 and 0.6 per minute for Participants 1 and 2, respectively, and decreased by 0.3 per minute for Participant 3. Following the modeling and feedback phase (T2 in the figure), the frequency of trials increased from baseline levels by 0.9 and 3.2 per minute for Participants 1 and 2, respectively. The frequency of trial presentation stayed the same for Participant 3. Following the last phase, in vivo modeling and feedback (T3 in the figure), all three participants showed an increase in the frequency of trials presented from baseline levels, by 2.6, 2.1, and 2.4 per minute for Participants 1, 2, and 3, respectively.
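Level, celeration, and bounce can all be estimated from the count-per-minute values plotted on the chart. The sketch below is one simplified approach, not necessarily the one used in this study: level is the arithmetic mean (as defined above), celeration comes from a least-squares line fitted to log10(count per minute) against calendar day and is expressed as the multiplicative change per week, and bounce is the ratio between two lines parallel to the celeration line that pass through the highest and lowest points. PT practitioners often draw these lines visually or with the quarter-intersect method, so values may differ. All rates must be greater than zero, and the function names are hypothetical.

```python
import math


def level(rates: list[float]) -> float:
    """Level as the arithmetic mean count per minute."""
    return sum(rates) / len(rates)


def celeration_and_bounce(days: list[float], rates: list[float]) -> tuple[float, float]:
    """Return (celeration per week, bounce) from a log-linear least-squares fit.

    days: calendar day of each data point; rates: count per minute (> 0).
    """
    if len(set(days)) < 2:
        raise ValueError("need data on at least two different days")
    logs = [math.log10(r) for r in rates]  # the chart's vertical axis is logarithmic
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, logs))
    slope = sxy / sxx  # change in log10(rate) per day
    intercept = mean_y - slope * mean_x
    celeration = 10 ** (slope * 7)  # multiplicative change per week
    residuals = [y - (intercept + slope * x) for x, y in zip(days, logs)]
    # Ratio of the upper to the lower line parallel to the fitted celeration line.
    bounce = 10 ** (max(residuals) - min(residuals))
    return celeration, bounce


def format_change(factor: float) -> str:
    """Write a multiplicative factor in PT notation: × for growth, ÷ for decay."""
    return f"×{factor:.2f}" if factor >= 1 else f"÷{1 / factor:.2f}"
```

A celeration below 1 is a deceleration and prints with ÷ (for example, a weekly factor of 0.5 prints as ÷2.00), which matches how the baseline trends above are described.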

Fig. 1.

Count per minute of trials presented on a standard celeration chart. The first set of charts is on a daily per-minute chart. The second set is on a timings chart. T1 = feedback; T2 = modeling and feedback; T3 = in vivo modeling and feedback

Results from the social validity questionnaire are presented in Table 1. Responses to all seven questions ranged from 4 to 5 (agree to strongly agree). All three participants responded to the questionnaire, and all rated “I think that my client’s skills have increased due to this training procedure” the highest.

Table 1.

Social Validity Questionnaire Results

| Statement | Average rating |
|---|---|
| My knowledge of discrete-trial training has increased. | 4.67 |
| My overall therapy skills have increased. | 4.67 |
| My therapy skills with the clients used in this training have increased. | 4.67 |
| I found this training procedure to be enjoyable. | 4.67 |
| I like this training procedure better than others that I have received in the past. | 4.00 |
| I would like to use this training procedure with other clients. | 4.67 |
| I think that my client’s skills have increased due to this training procedure. | 5.00 |

Note. Ratings use a Likert scale from 1 to 5: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, and 5 = strongly agree
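Because all three participants returned the questionnaire, each average in Table 1 is the sum of three integer ratings divided by 3, so the possible underlying ratings can be recovered by enumeration. The short check below (function name hypothetical) shows that an average of 4.67 can only arise from ratings of 4, 5, and 5, and that an average of 4, combined with the reported response range of 4 to 5, implies that all three participants selected 4.

```python
from itertools import combinations_with_replacement


def rating_sets_for_mean(mean: float, respondents: int = 3, places: int = 2) -> list[tuple[int, ...]]:
    """List every unordered set of 1-5 Likert ratings whose rounded mean equals `mean`."""
    return [
        combo
        for combo in combinations_with_replacement(range(1, 6), respondents)
        if round(sum(combo) / respondents, places) == mean
    ]


print(rating_sets_for_mean(4.67))  # [(4, 5, 5)]
print(rating_sets_for_mean(4))  # [(2, 5, 5), (3, 4, 5), (4, 4, 4)]
```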

Discussion

The purpose of this study was to determine whether feedback and modeling combined with frequency-building instruction would be an effective staff training model for increasing the number of trials presented in an ABA therapy session. The results for all three participants showed an increase in the number of trials from baseline levels to the final phase of treatment. During baseline, all three participants’ behavior decelerated. The researchers hypothesized that this deceleration was due to initial reactivity to being observed by the experimenter. After the ABA therapists acclimated to the experimenter’s observations, their rate of trial presentation decreased to what was presumably the rate at which they typically presented trials throughout their therapy sessions. Frequent observations are a typical control for reactivity when conducting research with staff (Parsons, Rollyson, & Reid, 2012).

In the posttraining probe following the first training phase, feedback on the number of trials presented was enough by itself to increase frequency above the last baseline data point for two of the participants. The experimenters predicted that modeling combined with feedback would result in greater gains, based on findings in the BST literature (Ward-Horner & Sturmey, 2012) and on the expectation that simply being told the number of trials presented and the goal number of trials is not enough to improve performance.

It should be noted that, by the third training phase, the participants had also accumulated more practice sessions overall, which could account for some of the increase. In addition, combining frequency building with the modeling and feedback components of BST could have contributed to the immediate increase in trial presentation following the first training. Frequency building provides a large number of practice opportunities in a short amount of time: in a 20-min training session, the participants practiced anywhere from 30 to 150 trials. A hallmark of frequency-building instruction is repeated practice (Pennypacker, Koenig, & Lindsley, 1972); thus, staff training might be conducted in fewer sessions, requiring less trainer time while producing greater gains. All three participants increased their rate of trial presentation during the training, which required a total of only 60 min of the trainer’s time.

One limitation of this study was the small number of training sessions conducted. Participant 1 was unable to complete additional training sessions in the third phase because the child she was working with moved out of state. The largest limitation was the lack of an empirically derived frequency aim (i.e., a mastery criterion for fluent behavior) for this skill, which makes it difficult to determine whether staff reached fluent trial presentation in their sessions. The data suggest that the participants were not fluent, because their rate of trial presentation decreased when they moved to a more distracting environment. To teach trial presentation as a fluent behavior, a frequency aim needs to be established. Future research should empirically validate the range of frequencies that produces RESA (fluent responding) in training skills. With an established aim, this study would have continued until the participants reached the criterion, and application and stability checks would then have been conducted to determine whether the participants were fluent in the skill.

It is also recommended that future research compare frequency-building instruction with typical didactic or discrete-trial-based staff instruction to determine whether one method leads to faster gains in instructional skills. This comparison is especially needed because this study found high social validity among employees, and employers should consider employee satisfaction when determining which staff training method to use.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Berens KN, Boyce TE, Berens NM, Doney JK, Kenzer AL. A technology for evaluating relations between response frequency and academic performance outcomes. Journal of Precision Teaching and Celeration. 2003;19(1):20–34.

- Binder C. Behavioral fluency: Evolution of a new paradigm. The Behavior Analyst. 1996;19(2):163–197. doi: 10.1007/BF03393163.

- Greer RD, McDonough SH. Is the learn unit a fundamental measure of pedagogy? The Behavior Analyst. 1999;22:5–16. doi: 10.1007/BF03391973.

- Haughton EC, Maloney M, Desjardins A. The tender loving care chart. Journal of Precision Teaching. 1980;1(2):22–25.

- Horner RD, Baer DM. Multiple-probe technique: A variation on the multiple baseline. Journal of Applied Behavior Analysis. 1978;11:189–196. doi: 10.1901/jaba.1978.11-189.

- Johnson K, Layng TVJ. On terms and procedures: Fluency. The Behavior Analyst. 1996;19:281–288. doi: 10.1007/BF03393170.

- Nigro-Bruzzi D, Sturmey P. The effects of behavioral skills training on mand training by staff and unprompted vocal mands by children. Journal of Applied Behavior Analysis. 2010;43(4):757–761. doi: 10.1901/jaba.2010.43-757.

- Parsons MB, Rollyson JH, Reid DH. Evidence-based staff training: A guide for practitioners. Behavior Analysis in Practice. 2012;5:2–11. doi: 10.1007/BF03391819.

- Pennypacker HS, Koenig CH, Lindsley OR. The handbook of the standard behavior chart. Kansas City, KS: Behavior Research Co.; 1972.

- Reed FDD, Henley AJ. A survey of staff training and performance management practices: The good, the bad, and the ugly. Behavior Analysis in Practice. 2015;8(1):16–26. doi: 10.1007/s40617-015-0044-5.

- Ward-Horner J, Sturmey P. Component analysis of behavior skills training in functional analysis. Behavioral Interventions. 2012;27:75–92. doi: 10.1002/bin.1339.