Abstract

Over the years, many clinical and engineering methods have been adapted for testing and screening for the presence of diseases. The most commonly used methods for diagnosis and analysis are computed tomography (CT) and X-ray imaging. Manual interpretation of these images is the current gold standard but is tedious, time-consuming, and subject to human error. Incorporating machine learning (ML) and deep learning (DL) algorithms could expedite the process and improve efficiency and productivity. This article aims to review the role of artificial intelligence (AI) and its contribution to data science as well as various learning algorithms in radiology. We will analyze and explore the potential applications of AI in image interpretation and radiological advances. Furthermore, we will discuss the usage of these concepts in radiology, the methodology implemented, their future, and their limitations and challenges.

Keywords: artificial intelligence, data science, data science in radiology, deep learning, machine learning, machine learning in radiology, radiology

Introduction

In a world of fast-advancing technology, a vast and constantly increasing amount of data is generated.1 Given appropriate tools, these data can be captured and could provide a very insightful resource. Data capture for this purpose has already been implemented in various fields such as defense, healthcare, media and communication, cybersecurity, and banking, to name a few.

Evolution in hardware and software applications has led to an escalating number of tasks performed by machines that were initially unimaginable.2 The most noteworthy tool has been the introduction of learning algorithms. Tasks that were previously limited to humans can now be performed by machines, indicating that these algorithms have improved significantly in recent years.3 Among the myriad methods available, it is comparatively less challenging to train deep learning (DL) algorithms; however, the downsides are vast data requirements and the high level of supervision needed during the interpretation of results.4 For example, in DL systems, the rectified linear unit (ReLU) activation function has outperformed the sigmoid function. To understand this better, the ReLU function is applied to the weighted sum of a neuron’s input values: if the sum falls below 0, the output is 0; otherwise, the output is the same as the input.5–7
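
To illustrate the behavior of the ReLU function described above, the following is a minimal sketch in Python. The function names, weights, bias, and input values are hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
import math

def relu(z: float) -> float:
    """ReLU: output 0 for negative input, otherwise return the input unchanged."""
    return max(0.0, z)

def sigmoid(z: float) -> float:
    """Sigmoid: squash any real input into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Compute the weighted sum of the inputs, then apply the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical inputs and weights (illustrative values only).
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.6]
bias = 0.1

relu_out = neuron(inputs, weights, bias, relu)        # weighted sum is negative, so ReLU gives 0
sigmoid_out = neuron(inputs, weights, bias, sigmoid)  # sigmoid gives a value between 0 and 0.5
print(relu_out, sigmoid_out)
```

Because the weighted sum in this example is negative, ReLU returns 0, whereas the sigmoid returns a small positive value.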

In addition, DL algorithms have been able to outperform other AI approaches and medical experts in specific tasks such as recognizing pneumonia on imaging scans.8–10 In 2012, a DL algorithm first entered this field and dramatically reduced the error rate from 0.258 to 0.153 in 1 year. Three years later, in 2015, DL methods had reduced the error rate to the level of the lowest value obtained by human observers.11

While radiology is an expanding field, these novel learning algorithms could improve its standards and clinical efficiency by achieving swift clinical and economic benefits. Healthcare today is an evolving technological landscape in which data-generating devices are increasingly ubiquitous, creating what is commonly referred to as ‘Big Data’. Researchers are investigating how these quantifications can benefit radiology, and some promising pioneering work has emerged. In current applications, the methodology in which a vast number of quantitative features are extracted from radiographic medical images using mathematical equations is known as radiomics.12 Radiomics features can be categorized into texture features (wavelet texture, Laws’ texture, run-length encoding, gray-level co-occurrence matrix, etc.), first- and second-order statistics features (homogeneity, entropy, standard deviation, skewness, etc.), and shape-size features (volume, longest diameter, etc.). Radiomics can offer a comprehensive understanding of the radiographic qualities of the underlying tissues. The acquired information can be used throughout the clinical care path to improve diagnosis and treatment planning, as well as to assess the potential and subsequent response to treatment.12 Figure 1 shows the patient journey in the conventional versus the artificial intelligence (AI) setup for diagnosis and prediction of the disease.

Figure 1.

Patient journey: conventional versus artificial intelligence.
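
To make the first-order statistics features of radiomics mentioned above more concrete, the following is a minimal sketch, assuming that a region of interest has already been segmented and flattened into a list of gray-level intensities. The function name, the number of histogram bins, and the sample values are hypothetical and do not correspond to any particular radiomics package.

```python
import math
from collections import Counter

def first_order_features(intensities, n_bins=8):
    """Compute a few first-order statistics from a flat list of gray levels."""
    n = len(intensities)
    mean = sum(intensities) / n
    variance = sum((v - mean) ** 2 for v in intensities) / n
    std = math.sqrt(variance)
    # Skewness: third central moment normalized by the cubed standard deviation.
    skewness = (sum((v - mean) ** 3 for v in intensities) / n) / std ** 3 if std > 0 else 0.0
    # Entropy of the intensity histogram (assumed fixed number of equal-width bins).
    lo, hi = min(intensities), max(intensities)
    width = (hi - lo) / n_bins or 1.0
    bins = Counter(min(int((v - lo) / width), n_bins - 1) for v in intensities)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())
    return {"mean": mean, "std": std, "skewness": skewness, "entropy": entropy}

# Hypothetical gray levels from a small region of interest.
roi = [10, 12, 12, 14, 30, 31, 29, 11]
features = first_order_features(roi)
print(features)
```

Texture and shape-size features require the spatial arrangement of the voxels and are therefore not covered by this sketch.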

In this article, previously used learning algorithms will be explained and those most useful to the field of radiology will be described, examining current and upcoming applications. We will endeavor to evaluate how this noteworthy new tool will be able to benefit radiologists in the future.13 The concept of data science and various learning algorithms, including their application in radiology, will be reviewed. Furthermore, we will discuss the various roles these techniques play, their pitfalls, advantages, and scope in the field of radiology.

Methods

Search strategy and article selection

A non-systematic review of all relevant English-language literature in Radiology that was published in the last decade (2010–2020) was conducted in June 2020 using MEDLINE, Elsevier, Springer, RSNA, Scopus, and Google Scholar. Our search strategy involved creating a search string based on a combination of keywords. They were as follows: ‘Data Science’, ‘radiology’, ‘Artificial intelligence’, ‘AI’, ‘Machine learning’, ‘ML’, ‘ANN’, ‘Neural networks’, ‘convolutional networks’, ‘Deep Learning’, ‘DL’, ‘MRI’, ‘CT scans’, ‘X-Ray’, ‘healthcare’, ‘diagnosis’, ‘medicine’, ‘algorithms’, ‘image processing’, ‘image recognition’, and ‘image reconstruction’. This review includes only original research work published in English.

Inclusion criteria

Articles on Radiology that address data science, DL, and machine learning (ML).

Full-text original articles on all aspects of analysis, algorithms, and outcomes of radiological diagnosis.

Exclusion criteria

Commentaries, articles with no full-text context, and book chapters.

Articles without image processing and articles that do not address radiology directly but are generic healthcare articles.

The literature review was performed in conformance with the inclusion and exclusion criteria. Titles and abstracts were screened, and the full text of the articles that satisfied the inclusion criteria was then evaluated. Furthermore, the authors manually reviewed the reference lists of the selected articles to screen for any additional work of interest. Disagreements about eligibility were resolved by consensus after discussion among the authors.

Results

Contemporary history of data science and learning algorithms

AI is defined as ‘the capacity of computers or other machines to exhibit or simulate intelligent behavior’, and it is now a flourishing area and the subject of extensive research in several fields.14,15 ML, which is the ability of an AI system to extract information from unprocessed (raw) data and learn from experience, has grown alongside it. Thus, the need for ‘human operators to formally define all the information needed by computers’ was avoided to an extent.16

ML algorithms were developed and used to evaluate healthcare data sets. ML provides a number of vital tools for performing intelligent data analysis. The digital revolution has made data collection and storage extremely inexpensive and accessible. Modern hospitals have state-of-the-art monitoring and data collection systems. In contrast to rule-based algorithms, ML takes advantage of increased exposure to large and new data sets and has the ability to improve and learn with experience.14

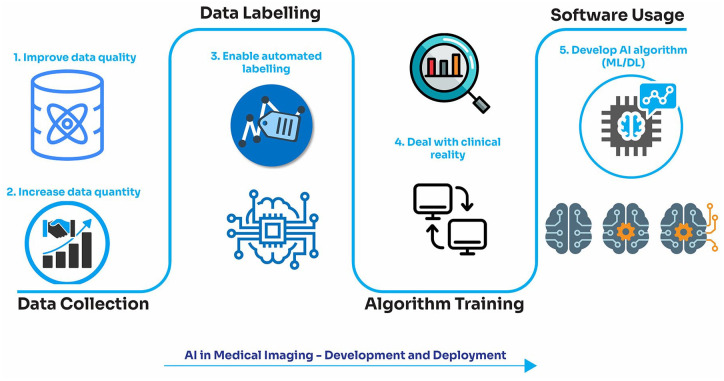

DL is often referred to as one of the most complex collections of algorithms. Although DL has made considerable strides in recent times, some may consider DL algorithms a hindrance to healthcare and radiology.16 Nevertheless, DL is a useful tool, depending on the requirement and usage, and is comparatively easy to train. Figure 2 shows the pathway of artificial intelligence (AI) in various medical imaging modalities.

Figure 2.

Pathway of artificial intelligence in medical imaging.

To understand the above terms, one must be able to differentiate DL algorithms from ML algorithms, especially the data presentation technique in the system. ML is a branch of AI that enables machines to learn the shape of data as well as adapt and improve their performance without the need for human intervention. DL is a class of ML algorithms that gradually extracts higher level features from the raw data using several layers. Almost always, ML algorithms require structured data, whereas DL networks rely on artificial neural network (ANN) layers. The biological neural network of the human brain is the inspiration for ANNs. An ANN is made up of numerous artificial neurons, each with its own weight, that form a layer (or level). A multilevel representation or network, which is a fully connected network, is created by connecting neurons on one level to neurons on the next level in a multitude of ways. An ANN is built up of input (shape of input data), hidden (one or multiple level), and output layers (number of class labels). Because each successive layer is interconnected in an ANN, the number of weights will rapidly increase as the number of layers increases, affecting the scaling and learning of ANN. For image analysis, this problem can be solved by applying a series of small filters (convolution filters) to the input image and subsampling the filter activation space until there are enough high-level features to use ANN, which leads to Convolutional Neural Networks (CNNs). CNNs are currently a very successful solution for image classification and recognition applications. A CNN can be built with a few convolutional layers with some activation function, which are frequently accompanied by a max-pooling layer for subsampling. Following the acquisition of high-level features via subsampling and convolution, the high-level features are fed through the fully connected layers (hidden layer and output layer) to achieve the final decision. 
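
The convolution, activation, and max-pooling steps of a CNN described above can be sketched as follows. This is a minimal, untrained illustration in plain Python, assuming a single hand-picked 2 × 2 filter and a toy 5 × 5 image; the function names and all values are hypothetical. In a real CNN, the filter weights are learned during training rather than set by hand.

```python
def conv2d(image, kernel):
    """Slide a small filter over the image (no padding, stride 1).
    As in most DL frameworks, this is technically a cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu_map(feature_map):
    """Apply the ReLU activation to every element of a feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Subsample by keeping the maximum of each non-overlapping size x size block."""
    return [[max(feature_map[i + a][j + b]
                 for a in range(size) for b in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

# Toy 5 x 5 image with a vertical edge between the second and third columns.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [[-1.0, 1.0],
          [-1.0, 1.0]]

activated = relu_map(conv2d(image, kernel))   # 4 x 4 feature map
pooled = max_pool(activated)                  # 2 x 2 after subsampling
flattened = [v for row in pooled for v in row]  # input to the fully connected layers
print(pooled)
```

The pooled feature map is much smaller than the input image, which is how convolution and subsampling limit the number of weights needed in the subsequent fully connected layers.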

Lately, DL has gained significant recognition in the consumer world and the healthcare community, while ML algorithms have been a research subject for several years.17 The difference between DL and ‘traditional’ ML in functionality and capability is extensively discussed. The distinction is critical, especially in the context of medical imaging. In traditional ML, the first step is typically feature extraction: in order to classify an item, one must first establish which qualities are significant and then implement algorithms that can classify the object,18 whereas DL utilizes several layers of algorithms to find patterns and imitate human cognition. DL algorithms have recently seen a resurgence of interest after a 10% decrease in the top-5 error on the ImageNet data set was observed.17 DL outperforms traditional ML as data volume increases but does not perform well when the data set is small, because a large volume of data is required for DL algorithms to fully understand the data. High-end devices are required for the majority of DL algorithms, while traditional ML algorithms can run on low-end machines. Mathematical operations such as matrix multiplication are inherent to DL algorithms.

It usually takes a long time to train a DL algorithm because so many parameters are involved. For example, training the state-of-the-art DL system ‘ResNet’ takes approximately 2 weeks. Traditional ML algorithms, by contrast, can be trained in a matter of seconds to a few hours.

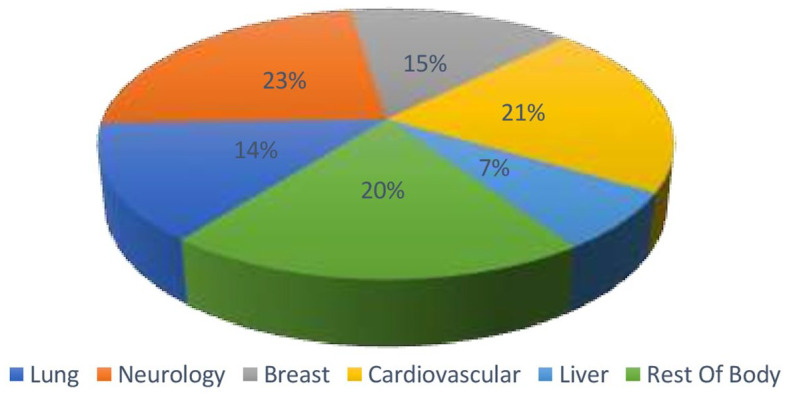

The era of modern medicine

Statistics is a critical component of ML, and statistical inference is the basis of ML algorithms. Different ML models are built on different statistical models, and choosing among them is a crucial step in making the right assumptions when training on the data. Statistics alone may not be able to bring about a change in radiology, but its application in promising fields such as ML will give better insights when used in radiology. The development of AI-driven applications in healthcare has significantly increased the demand for image identification tools. ML and DL algorithms are widely used in various domains of healthcare and are applied to images to extract features that provide valuable insights into diagnosis and patient treatment (Figure 3).

Figure 3.

Global market for machine learning (ML)-based image analysis.

Radiology has dealt with accelerated technological advancement almost as much as any other clinical field. Since radiology is fundamentally a data-driven discipline, it is particularly suited to the use of data processing methods. The world market for algorithms in image analysis is growing rapidly, and progress in ML and its applications, including medical imaging, seems to be quite promising.19 ML enables computers to learn as humans do and to extract or identify patterns. Therefore, machines can process more data sets and derive more data characteristics than we can manually.20 One of the most challenging tasks during clinical image interpretation is identifying the disease and swiftly separating anomalies from normal anatomy.

Google’s Peter Norvig has demonstrated that vast volumes of information and data can address weak points in ML algorithms.21 Narrow-scope ML algorithms may not need vast amounts of data but might need high-quality ground-truth training data. In medical imaging research, as with some other forms of ML, the volume of data required differs significantly according to the task at hand. For instance, segmentation tasks may need only limited data; however, classification tasks such as classifying a liver lesion as malignant or benign may require much more labeled data. This ultimately depends on the number of classification methods used to differentiate and classify a lesion as malignant or benign.22

Learning algorithms in radiology: a breakthrough

DL approaches and ML algorithms, in general, have a tremendous opportunity to shape the radiology practice. Disease identification is one of the most challenging and time-consuming tasks, especially when interpreting images and identifying them as normal or abnormal findings. The type of data used in radiology is mostly ‘labeled data’. Since we are dealing with labeled data, the supervised learning model is preferred in most cases of radiology. The algorithm is trained with enough labeled data to build an optimal model. A substrate for developing ‘learning algorithms’ is provided by a substantial quantity of image and report data, now available in digital form (‘Big Data’). The inability to compile a sufficiently large training set is a possible setback, resulting in less reliable or generalizable tests. Figure 4 shows the learning algorithms and their practical utility that are implemented in radiology applications.23

Figure 4.

Clinical applications of machine learning in radiology.
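
The supervised learning approach described above, in which an algorithm is trained on labeled data and then applied to new cases, can be sketched with a very simple classifier. The nearest-centroid method used here is chosen only for brevity and is not the method of any cited study; the two features (lesion diameter in mm and normalized mean intensity), the labels, and all values are hypothetical.

```python
def fit_centroids(features, labels):
    """Training step: compute the mean feature vector (centroid) of each label."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for k, v in enumerate(x):
            acc[k] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Prediction step: assign the label whose centroid is closest to x."""
    def squared_distance(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: squared_distance(centroids[y]))

# Hypothetical labeled training data: [diameter_mm, mean_intensity].
train_x = [[4.0, 0.20], [5.0, 0.30], [6.0, 0.25],
           [18.0, 0.80], [20.0, 0.70], [22.0, 0.90]]
train_y = ["normal", "normal", "normal",
           "abnormal", "abnormal", "abnormal"]

model = fit_centroids(train_x, train_y)
print(predict(model, [19.0, 0.75]))  # unseen case close to the abnormal examples
print(predict(model, [5.5, 0.30]))   # unseen case close to the normal examples
```

With only six training cases, such a model would not be reliable in practice, which reflects the limitation of small training sets noted above.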

Image acquisition

ML can make imaging applications smart and time-efficient. ML-based data analysis models can minimize imaging time. Thus, intelligent imaging systems may eliminate needless imaging, improve positioning, and improve the characterization of detected findings. For example, a smart magnetic resonance imaging (MRI) system could identify a lesion and propose changes to the subsequent sequences to obtain better lesion characterization.24 Patients would therefore benefit from faster scanning and improved scan reports.

Automated detection and interpretation of findings

ML can detect significant incidental findings. Fields that may benefit from ML include cancer detection on mammograms and diagnosis of pulmonary nodules. Examination of bone age and automated assessment of anatomical age based on medical imaging have great utility for pediatric radiology and endocrinology. Additional ML studies have identified important findings such as pneumothoraces, fractures, organ lacerations, and stroke.25–31

Whether such techniques could or would eventually fully replace radiologists in the interpretation of scans is a frequent topic of discussion and contention. The interpretation of detected findings ‘requires a high level of expert knowledge, experience, clinical judgment and correlation based on each clinical scenario’. Most opinion leaders in this field have indicated that radiologists should utilize these techniques to increase their reporting accuracy rather than be replaced by them. With ML, features extracted from breast MRI might enhance the analysis of breast cancer diagnostic results.26 An ML approach based on radiological attributes (semantic characteristics such as contour, texture, and margin) of incidental pulmonary nodules was shown to enhance cancer prediction accuracy and diagnostic interpretation.27

Post-processing: image segmentation, registration, quantification

With the accessibility of large image data sets and the use of ML, medical imaging has made substantial advances in post-processing activities such as image recognition, segmentation, and quantification. Studies have demonstrated how to use ML to segment images and aid radiologists with image synthesis, image analysis, and image quantification. For example, a CNN-based algorithm can segment the volume of adipose tissue on computed tomography (CT) scan images, and CT images can even be estimated from the accompanying MR images using a generative adversarial network.32 ML can also be applied for the quantitative assessment of three-dimensional (3D) structures in cross-sectional imaging.33,34

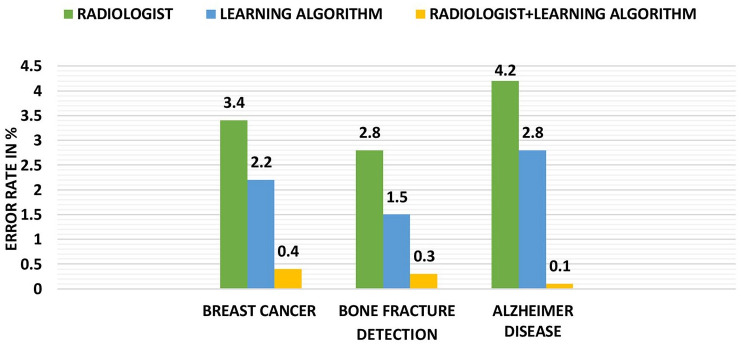

Image quality analytics

Image quality and accuracy are crucial in radiology (Figure 5). However, the larger the number of images, the greater the time required for analysis. To solve this issue, numerical observers in ML (also known as model observers) have been developed as a proxy for human observers in image quality measurement. With ML and DL advancements, it is now possible to detect or even anticipate poor image quality immediately, enabling radiologists to resolve these irregularities before reviewing the images.35

Figure 5.

Graphical representation of detection versus error in prediction.

Although significant technical advancements in ML have been made in recent years, including improvements in DL algorithms, further progress in the performance of graphics processing units (GPUs) and memory will enhance its capabilities.

Thinking beyond image analysis

Learning algorithms aid radiologists in image analysis and interpretation. However, they also have uses beyond imaging. For instance, the introduction of speech recognition and text translation for final radiology reports has been advantageous to patients, as the reports can be translated into various languages and the potential for errors can be reduced.1,36–38 These algorithms are continuously learning and can handle various tasks apart from image analysis (Table 1).

Table 1.

Beyond image analysis: detection of medical conditions using learning algorithms.

| Authors | Objective | Model and algorithm | Outcome | Results |
|---|---|---|---|---|
| Wu et al.39 | Diagnosis of breast cancer | • A three-layer ANN is used • The interpretation is based on features retrieved from mammograms by radiologists | The performance of ANN was better than that of radiologists alone, to distinguish between benign and malignant lesions | Yielding a value of 0.95 for the area under the curve (AUC) |
| McBee et al.40 | Detect metastatic disease | A five-layer CNN is developed to detect metastatic disease | This result was complementary to the low false-positive rates obtained by researchers using a DL algorithm for ophthalmologists | The model achieved an image-level AUC score above 97% |
| | Diabetic retinopathy | A five-layer CNN with two different validation sets and different set points | It achieved 90.3% and 87.0% sensitivity and 98.1% and 98.5% specificity | |
| Wang and Summers20 | Neurological disease diagnosis | • Kernel-based learning methods in radiology are used in computer-aided design (CAD) • SVMs for detection of microcalcifications on mammography | ML algorithm allows radiologists to make decision on data like traditional X-rays, CT, MRI, PET, and radiology records | It is likely to be applied in reinforcement learning. The CAD system reported 80% sensitivity. |
| | Text analysis of radiology reports | • Converting text into a structured format using NLP. • This enabled computers to derive meaning from human language. | Tests on a set of 230 radiology reports showed high accuracy and recall in text recovery. | |
| Hussain et al.41 | Prostate cancer detection | • The Gaussian kernel method is used • It is used to solve specific applications involving many, heterogeneous types of data with the help of support vector machine (SVM) | 80 cancerous tissues were perceived efficiently with a high specificity and sensitivity | Accuracy of 98.34% with AUC of 0.999, specificity of 90–95%, and sensitivity of 92–96% |
| Jnawali et al.42 | Intracranial hemorrhage (ICH) detection | • A DL algorithm consists of 1D CNN, long short-term memory (LSTM) units • Trained and tested on a data set CT radiological report | LSTMs are able to extract key ICH features, already been proved using a weakly labeled data set in the computer vision problems | The AUC is 0.94, which is very promising |
| Al-antari et al.43 | Breast cancer detection | • First model: It randomly extracts four regions of interest with a size of 32 × 32 pixels from a detected mass • Second model: The whole detected breast mass is utilized • Using deep belief network (DBN). | The outcomes prove that the proposed DBN outperforms the traditional classifiers | The overall accuracies of DBN are 92.86% and 90.84% for the two ROI techniques, respectively. |
| Dikaios et al.44 | Prostate cancer detection | • A layer-wise unsupervised pre-training model, followed by supervised fine-tuning • Each layer is pre-trained using a restricted Boltzmann machine (RBM) | DBN outperformed the other models; when extended to an individual patient cohort, their performance was not significantly reduced | DBN performed better than the other models, demonstrating value of ROC-AUC = 0.811 |
| Pavithra and Pattar45 | Pneumonia or lung cancer detection | • Two stages of feature extraction and classification; feature extraction is done using Gabor filter • Classification is using neural network. • Feed-forward network (FFN) and radial basis function (RBF) | Three types of ANN classifiers were studied, out of which, FFN and RBF network have shown best performance | Accuracy been obtained is about 94.8% for FFN. Accuracy is 94.82% for RBF network |

ANN, artificial neural network; AUC, area under curve; CAD, computer-aided design; CNN, convolutional neural network; CT, computed tomography; DBN, deep belief network; DL, deep learning; FFN, feed-forward network; ICH, intracranial hemorrhage; LSTM, long short-term memory; ML, machine learning; MRI, magnetic resonance imaging; NLP, natural language processing; PET, positron emission tomography; RBF, radial basis function; RBM, restricted Boltzmann machine; ROC, receiver operating characteristic; ROI, region of interest; SVM, support vector machine.
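
The sensitivity, specificity, and AUC values reported in Table 1 can be computed from a set of ground-truth labels and model scores, as in the following minimal sketch. The labels, scores, and the 0.5 decision threshold are hypothetical and are not taken from any of the studies in the table. The AUC is computed here as the proportion of positive-negative pairs in which the positive case receives the higher score, which is equivalent to the area under the ROC curve.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly;
    ties count as half a correct ranking."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical ground truth (1 = disease present) and model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.5, 0.2, 0.1, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

sens, spec = sensitivity_specificity(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(sens, spec, auc)
```

Unlike sensitivity and specificity, the AUC does not depend on the chosen threshold, which is one reason it is frequently reported when comparing models.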

Optimize MR scanner utilization

MRI scanning is particularly time-consuming because of the variety and number of sequences often required. Improvements in efficiency and in the number of scans or sequences obtained per unit of time could reduce cost. An ongoing preliminary study used neural networks to help determine the ideal time allotment per scan based on different input parameters (scan protocol, patient age, contrast use, and the per-protocol mean of unplanned sequence repeats). This can optimize scanner usage and reduce costs.46

Image reconstruction

Recently, a deep image prior (DIP) framework was proposed, which showed that CNNs have the intrinsic ability to regularize various problems without pre-training. Since no prior training pairs are needed, random noise can be employed as the network input to generate denoised images.47,48 In addition to using training pairs to perform supervised learning, many methods focus on exploiting images acquired from the same patient from a previous scan to improve the quality of the image. To test the effectiveness of the conditional DIP framework, an experiment was performed by using either the uniform random noise or the patient’s MR image from before as the network input. ML reconstruction of the real brain data at the 60th iteration was treated as the label image and 300 epochs were run.49 When the input was random noise, the image was smooth, but some cortex structures could be recovered. When the input was MR image, more cortex structures showed up.49 As per the studies, compared with the ML-plus-filter method and the CNN Penalty method, the kernel method and the DIP Recon method can recover more cortex details, and the image noise in the white matter is much reduced.49

In another method, DL was used to rapidly perform phase recovery and reconstruct complex-valued images of the specimen using a single intensity-only hologram. This process was less time-consuming and required approximately 3.11 s on a GPU-based laptop or computer to recover the phase and amplitude images of a specimen.50

Radiology reporting and analytics

By applying ML methods to natural language processing (NLP), researchers can extract information from free-text radiology reports, obtain additional data and measurements from descriptive radiology reports, and even track recommendations made by radiologists to referring physicians. With progress in NLP, there have been efforts to extract clinically significant information from such text, including important findings that could help in the detection of breast cancer, such as Breast Imaging-Reporting and Data System (BI-RADS) categories and findings, and Fleischner Society recommendations, frequently utilizing heuristic methods.51–55 ML and NLP algorithms could help track radiologists’ recommendations and assist in follow-up.56
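
As an illustration of the heuristic methods mentioned above, the following minimal sketch extracts a BI-RADS category from a free-text report using a regular expression. The pattern, the function name, and the sample report sentences are hypothetical and deliberately simplified (for example, subcategories such as 4A are not handled); this is not the approach of any cited study.

```python
import re

# Simplified, assumed pattern: "BI-RADS" or "BIRADS", an optional "category"
# or "cat." keyword, an optional separator, then a single digit from 0 to 6.
BIRADS_PATTERN = re.compile(
    r"\bBI-?RADS\s*(?:category|cat\.?)?\s*[:\-]?\s*([0-6])\b",
    re.IGNORECASE,
)

def extract_birads(report: str):
    """Return the first BI-RADS category found in the report, or None."""
    match = BIRADS_PATTERN.search(report)
    return int(match.group(1)) if match else None

# Hypothetical report sentences.
reports = [
    "Impression: irregular mass in the left breast. BI-RADS category 4.",
    "Benign-appearing calcifications. BIRADS: 2",
    "No suspicious findings.",
]
categories = [extract_birads(r) for r in reports]
print(categories)
```

Rule-based extraction of this kind is brittle when the wording of reports varies, which is one motivation for the ML-based NLP methods discussed in this section.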

Creation of study protocols

Creating study protocols is a part of a radiologist’s role.57 It includes referring to health records, information on the clinical details and imaging request, previous images, and radiology reports. It is a crucial step and has radiation dose implications for patients. By applying data interpretation to the available information, these learning algorithms can precisely ascertain imaging protocols, saving time and money.58,59

Scheduling, screening, and optimization

One significant way radiomics is changing healthcare is through ‘well man or woman’ screening, which improves general health by using AI to recognize patients at risk of harm. These advances can likewise help improve ongoing screening. AI applications can help in understanding well-being via patient screening60 or add an addendum to existing reports, which can potentially change radiology practice.61

Automated radiation dose estimation

ML algorithms could support radiologists and technologists by estimating doses before examinations and helping to reduce the dosage. Many studies aimed at improving image quality and decreasing radiation dose in CT scans are being carried out. Reducing CT radiation comes at the cost of increased image noise and lower image quality because of the limitations of the commonly used filtered back-projection reconstruction methods. Some of the more recent iterative reconstruction techniques have reduced noise in images produced with lower doses.62 DL can decrease radiation dose significantly. The idea is to use DL strategies to train a classifier that maps ‘noisy’ images produced by ultra-low-dose CT protocols to high-quality images from standard protocols.63,64

Complications and future of learning algorithms in radiology

While a significant number of trained models are available for image-related problems, the data sets for clinical images are usually very small, with the number of patients typically on the scale of hundreds. The huge number of parameters in a deep neural network that require optimization brings a great danger of over-training and consequent poor performance on data sets that were not used in the training procedure. Given the likelihood of over-training and over-fitting, there is a high probability that the reported performance does not reflect the actual capacity of a model to classify, predict, or segment when validation is not handled appropriately. Every new development contributes to computational speed, although this does not mean fewer flaws. The inability to gather an adequately sized training set is a potential limitation that could affect the outcomes, making them less precise or generalizable.4 This complication of over-fitting has already been noted.50
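
One common way of handling validation so that reported performance is not inflated is to hold out a validation set that the model never sees during training. The following minimal sketch additionally assumes that all images from a given patient should be kept on the same side of the split, so that a model is never validated on a patient it has already seen. The function name, the record layout, and the 25% validation fraction are hypothetical.

```python
import random

def patient_level_split(records, val_fraction=0.25, seed=0):
    """Split image records into training and validation sets by patient,
    so that no patient contributes images to both sets."""
    patients = sorted({r["patient_id"] for r in records})
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(patients)
    n_val = max(1, round(len(patients) * val_fraction))
    val_ids = set(patients[:n_val])
    train = [r for r in records if r["patient_id"] not in val_ids]
    val = [r for r in records if r["patient_id"] in val_ids]
    return train, val

# Hypothetical data set: 8 patients with 3 images each.
records = [{"patient_id": f"P{p}", "image": f"P{p}_img{k}"}
           for p in range(8) for k in range(3)]

train, val = patient_level_split(records)
train_patients = {r["patient_id"] for r in train}
val_patients = {r["patient_id"] for r in val}
print(len(train), len(val), train_patients & val_patients)
```

A large gap between training and validation performance on such a split is a typical sign of the over-fitting discussed above.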

Concerning the future scope of learning algorithms, there is a general understanding that DL will have a significant role in the practice of radiology, especially for MRI. There will be no lack of data as they are continuously generated; learning algorithms can extract information and make useful predictions. Some anticipate that DL algorithms will handle enormous tasks, leaving radiologists with more opportunity to concentrate on clinical duties such as multidisciplinary team (MDT) meetings and intellectually taxing tasks. Others believe that radiologists and DL algorithms will work together to produce a performance that is better than either could achieve alone. Finally, some foresee that DL algorithms will supplant radiologists (at least in image interpretation) altogether. It seems that the future of AI is intricately linked to radiology, and specialist scan reporting will increasingly use AI to work synergistically.

Conclusion

This article discusses current practices using ML algorithms and advancements in this field. Although the extent of their influence on radiology is unclear, these algorithms offer a promising new set of methods for interrogating image data, which ought to be evaluated rigorously. Radiology has had to cope with technological change as much as any other branch of healthcare, and radiologists have profited enormously from working with advanced digital systems. Yet concerns exist about machines taking these jobs away from humans, which presents a potential, even likely, cultural barrier to the acceptance of DL in radiology.

Although some envision that machines may replace radiologists in the near future, most healthcare leaders do not accept that idea. It is believed that machines may help to improve accuracy but will not eliminate the need for radiologists. The job of a radiologist is not only to analyze images and identify pathology; it extends well beyond image interpretation into clinical knowledge and correlation. Therefore, although machines can perform many sophisticated tasks, they may not be able to replace medical practitioners in roles beyond image diagnosis.

In addition, if the quantity of available labeled images is limited for a given application, it will be difficult to train DL algorithm frameworks or systems, and there is a danger of ‘over-fitting’ the data, with a resulting loss of generalization. Data science and learning algorithms contribute extensively to the healthcare field, and there is positive growth in this field. More healthcare practitioners are becoming aware of these techniques, and the true potential of these tools will be harnessed in the years to come.

Footnotes

Author contributions: BKS, BPR, NN, VP, SM, and GV were involved in study conception and design. BMZH, GP, PS, SZR, and HK were involved in acquisition of data. NN, BMZH, VP, RP, PS, and PC were involved in analysis and interpretation of data. BMZH, GP, VP, SZR, and HK were involved in drafting of manuscript. BKS, SM, RP, BPR, GV, and PC were involved in critical revision.

Conflict of interest statement: The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iDs: Nithesh Naik  https://orcid.org/0000-0003-0356-7697

Bhaskar K. Somani  https://orcid.org/0000-0002-6248-6478

Contributor Information

B.M. Zeeshan Hameed, Department of Urology, Father Muller Medical College, Mangalore, Karnataka, India; Department of Urology, Kasturba Medical College, Manipal, Manipal Academy of Higher Education, Manipal, India; KMC Innovation Centre, Manipal Academy of Higher Education, Manipal, Karnataka, India; International Training and Research in Uro-oncology and Endourology (iTRUE) Group, Manipal, India; Curiouz Techlab Private Limited, Manipal Government of Karnataka Bioincubator, Manipal, Karnataka, India.

Gayathri Prerepa, Department of Electronics and Communication, Manipal Institute of Technology, Manipal Academy of Higher Education, Manipal, Karnataka, India.

Vathsala Patil, Department of Oral Medicine and Radiology, Manipal College of Dental Sciences, Manipal, Manipal Academy of Higher Education, Manipal, Karnataka 576104, India.

Pranav Shekhar, Department of Computer Science and Engineering, Manipal Institute of Technology, Manipal Academy of Higher Education, Manipal, Karnataka, India.

Syed Zahid Raza, Department of Urology, Dr. B.R. Ambedkar Medical College, Bengaluru, Karnataka, India.

Hadis Karimi, Manipal College of Pharmaceutical Sciences, Manipal Academy of Higher Education, Manipal, Karnataka, India.

Rahul Paul, Department of Radiation Oncology, Massachusetts General Hospital, Harvard Medical School, Boston, MA, USA.

Nithesh Naik, International Training and Research in Uro-oncology and Endourology (iTRUE) Group, Manipal, India; Curiouz Techlab Private Limited, Manipal Government of Karnataka Bioincubator, Manipal, India; Faculty of Engineering, Manipal Institute of Technology, Manipal Academy of Higher Education, Manipal, Karnataka, India.

Sachin Modi, Department of Interventional Radiology, University Hospital Southampton NHS Foundation Trust, Southampton, UK.

Ganesh Vigneswaran, Department of Interventional Radiology, University Hospital Southampton NHS Foundation Trust, Southampton, UK.

Bhavan Prasad Rai, International Training and Research in Uro-oncology and Endourology (iTRUE) Group, Manipal, India; Department of Urology, Freeman Hospital, Newcastle upon Tyne, UK.

Piotr Chłosta, Department of Urology, Jagiellonian University in Kraków, Kraków, Poland.

Bhaskar K. Somani, International Training and Research in Uro-oncology and Endourology (iTRUE) Group, Manipal, India; Department of Urology, University Hospital Southampton NHS Foundation Trust, Southampton, UK.

References

- 1. Thrall JH, Li X, Li Q, et al. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol 2018; 15: 504–508.

- 2. Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw 2015; 61: 85–117.

- 3. Yue W, Wang Z, Chen H, et al. Machine learning with applications in breast cancer diagnosis and prognosis. Designs 2018; 2: 13.

- 4. Cho J, Lee K, Shin E, et al. How much data is needed to train a medical image deep learning system to achieve necessary high accuracy? arXiv preprint arXiv 2015: 1511.06348.

- 5. Srivastava N, Hinton G, Krizhevsky A, et al. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 2014; 15: 1929–1958.

- 6. Shang W, Sohn K, Almeida D, et al. Understanding and improving convolutional neural networks via concatenated rectified linear units. In: Proceedings of the international conference on machine learning, 19–24 June 2016, pp. 2217–2225. New York: ICML.

- 7. Glorot X, Bordes A, Bengio Y. Deep sparse rectifier neural networks. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics (JMLR workshop and conference proceedings), Fort Lauderdale, FL, 11–13 April 2011, pp. 315–323. http://proceedings.mlr.press/v15/glorot11a/glorot11a.pdf

- 8. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 2012; 25: 1097–1105.

- 9. Dodge S, Karam L. A study and comparison of human and deep learning recognition performance under visual distortions. In: Proceedings 2017 26th international conference on computer communication and networks (ICCCN), Vancouver, BC, Canada, 31 July–3 August 2017, pp. 1–7. New York: IEEE.

- 10. Rajpurkar P, Irvin J, Zhu K, et al. CheXNet: radiologist-level pneumonia detection on chest x-rays with deep learning. arXiv preprint arXiv 2017: 1711.05225.

- 11. He K, Zhang X, Ren S, et al. Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the IEEE international conference on computer vision, Santiago, Chile, 7–13 December 2015, pp. 1026–1034. New York: IEEE.

- 12. Aerts HJ. The potential of radiomic-based phenotyping in precision medicine: a review. JAMA Oncol 2016; 2: 1636–1642.

- 13. Ravì D, Wong C, Deligianni F, et al. Deep learning for health informatics. IEEE J Biomed Health 2016; 21: 4–21.

- 14. Kononenko I. Machine learning for medical diagnosis: history, state of the art and perspective. Artif Intell Med 2001; 23: 89–109.

- 15. Saba L, Biswas M, Kuppili V, et al. The present and future of deep learning in radiology. Eur J Radiol 2019; 114: 14–24.

- 16. Goodfellow I, Bengio Y, Courville A, et al. Deep learning. Cambridge: MIT Press, 2016.

- 17. Morid MA, Borjali A, Del Fiol G. A scoping review of transfer learning research on medical image analysis using ImageNet. Comput Biol Med 2020; 128: 104115.

- 18. Mazurowski MA, Buda M, Saha A, et al. Deep learning in radiology: an overview of the concepts and a survey of the state of the art with focus on MRI. J Magn Reson Im 2019; 49: 939–954.

- 19. Erickson BJ, Korfiatis P, Akkus Z, et al. Machine learning for medical imaging. Radiographics 2017; 37: 505–515.

- 20. Wang S, Summers RM. Machine learning and radiology. Med Image Anal 2012; 16: 933–951.

- 21. Choy G, Khalilzadeh O, Michalski M, et al. Current applications and future impact of machine learning in radiology. Radiology 2018; 288: 318–328.

- 22. Figueroa RL, Zeng-Treitler Q, Kandula S, et al. Predicting sample size required for classification performance. BMC Med Inform Decis 2012; 12: 1–10.

- 23. 8 key clinical applications of machine learning in radiology. Radiology Business, 26 June 2018, http://www.radiologybusiness.com/topics/artificial-intelligence/8-key-clinical-applications-machine-learning-radiology (accessed 16 February 2021).

- 24. Kohli M, Prevedello LM, Filice RW, et al. Implementing machine learning in radiology practice and research. Am J Roentgenol 2017; 208: 754–760.

- 25. Reza Soroushmehr SM, Davuluri P, Molaei S, et al. Spleen segmentation and assessment in CT images for traumatic abdominal injuries. J Med Syst 2015; 39: 87–81.

- 26. Pustina D, Coslett HB, Turkeltaub PE, et al. Automated segmentation of chronic stroke lesions using LINDA: lesion identification with neighborhood data analysis. Hum Brain Mapp 2016; 37: 1405–1421.

- 27. Maier O, Schröder C, Forkert ND, et al. Classifiers for ischemic stroke lesion segmentation: a comparison study. PLoS ONE 2015; 10: e0145118.

- 28. Liu S, Xie Y, Reeves AP. Automated 3D closed surface segmentation: application to vertebral body segmentation in CT images. Int J Comput Assist Radiol Surg 2016; 11: 789–801.

- 29. Do S, Salvaggio K, Gupta S, et al. Automated quantification of pneumothorax in CT. Comput Math Methods Med 2012; 2012: 736320.

- 30. Herweh C, Ringleb PA, Rauch G, et al. Performance of e-ASPECTS software in comparison to that of stroke physicians on assessing CT scans of acute ischemic stroke patients. Int J Stroke 2016; 11: 438–445.

- 31. Burns JE, Yao J, Muñoz H, et al. Automated detection, localization, and classification of traumatic vertebral body fractures in the thoracic and lumbar spine at CT. Radiology 2016; 278: 64–73.

- 32. Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. In: Ghahramani Z, Welling M, Cortes C, et al. (eds) Advances in Neural Information Processing Systems (NIPS). San Diego, CA: NeurIPS, 2014, pp. 2672–2680.

- 33. Wang Y, Qiu Y, Thai T, et al. A two-step convolutional neural network based computer-aided detection scheme for automatically segmenting adipose tissue volume depicting on CT images. Comput Methods Programs Biomed 2017; 144: 97–104.

- 34. Akkus Z, Galimzianova A, Hoogi A, et al. Deep learning for brain MRI segmentation: state of the art and future directions. J Digit Imaging 2017; 30: 449–459.

- 35. Esses SJ, Lu X, Zhao T, et al. Automated image quality evaluation of T2-weighted liver MRI utilizing deep learning architecture. J Magn Reson Im 2018; 47: 723–728.

- 36. Hannun A, Case C, Casper J, et al. Deep speech: scaling up end-to-end speech recognition. arXiv preprint arXiv 2014: 1412.5567.

- 37. Hannaford N, Mandel C, Crock C, et al. Learning from incident reports in the Australian medical imaging setting: handover and communication errors. Br J Radiol 2013; 86: 20120336.

- 38. Morgan TA, Heilbrun ME, Kahn CE Jr. Reporting initiative of the Radiological Society of North America: progress and new directions. Radiology 2014; 273: 642–645.

- 39. Wu Y, Giger ML, Doi K, et al. Artificial neural networks in mammography: application to decision making in the diagnosis of breast cancer. Radiology 1993; 187: 81–87.

- 40. McBee MP, Awan OA, Colucci AT, et al. Deep learning in radiology. Acad Radiol 2018; 25: 1472–1480.

- 41. Hussain L, Ahmed A, Saeed S, et al. Prostate cancer detection using machine learning techniques by employing combination of features extracting strategies. Cancer Biomark 2018; 21: 393–413.

- 42. Jnawali K, Arbabshirani MR, Ulloa AE, et al. Automatic classification of radiological report for intracranial hemorrhage. In: Proceedings of the 2019 IEEE 13th international conference on semantic computing (ICSC), Newport Beach, CA, 2019, pp. 187–190. New York: IEEE.

- 43. Al-antari MA, Al-masni MA, Park SU, et al. An automatic computer-aided diagnosis system for breast cancer in digital mammograms via deep belief network. J Med Biol Eng 2018; 38: 443–456.

- 44. Dikaios N, Johnston EW, Sidhu HS, et al. Deep learning to improve prostate cancer diagnosis. In: Joint Annual Meeting ISMRM-ESMRMB, vol. 3, Paris, 16–21 June 2018, http://cds.ismrm.org/protected/17MPresentations/abstracts/0669html

- 45. Pavithra R, Pattar SY. Detection and classification of lung disease-pneumonia and lung cancer in chest radiology using artificial neural network. Int J Sci Res Publ 2015; 5: 128–132.

- 46. Morey JM, Haney NM, Kim W. Applications of AI beyond image interpretation. In: Ranschaert E, Morozov S, Algra P. (eds) Artificial intelligence in medical imaging. Cham: Springer, 2019, pp. 129–143.

- 47. Ulyanov D, Vedaldi A, Lempitsky V. Deep image prior. In: Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, 18–23 June 2018, pp. 9446–9454. New York: IEEE.

- 48. Radford A, Metz L, Chintala S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv 2015: 1511.06434.

- 49. Gong K, Catana C, Qi J, et al. PET image reconstruction using deep image prior. IEEE T Med Imaging 2018; 38: 1655–1665.

- 50. Rivenson Y, Zhang Y, Günaydın H, et al. Phase recovery and holographic image reconstruction using deep learning in neural networks. Light Sci Appl 2018; 7: 17141–17141.

- 51. Larson DB, Towbin AJ, Pryor RM, et al. Improving consistency in radiology reporting through the use of department-wide standardized structured reporting. Radiology 2013; 267: 240–250.

- 52. Pons E, Braun LM, Hunink MM, et al. Natural language processing in radiology: a systematic review. Radiology 2016; 279: 329–343.

- 53. Lakhani P, Kim W, Langlotz CP. Automated detection of critical results in radiology reports. J Digit Imaging 2012; 25: 30–36.

- 54. Sippo DA, Warden GI, Andriole KP, et al. Automated extraction of BI-RADS final assessment categories from radiology reports with natural language processing. J Digit Imaging 2013; 26: 989–994.

- 55. Lacson R, Prevedello LM, Andriole KP, et al. Factors associated with radiologists’ adherence to Fleischner Society guidelines for management of pulmonary nodules. J Am Coll Radiol 2012; 9: 468–473.

- 56. Oliveira L, Tellis R, Qian Y, et al. Follow-up recommendation detection on radiology reports with incidental pulmonary nodules. Stud Health Technol Inform 2015; 216: 1028–1028.

- 57. Sachs PB, Gassert G, Cain M, et al. Imaging study protocol selection in the electronic medical record. J Am Coll Radiol 2013; 10: 220–222.

- 58. Lakhani P, Prater AB, Hutson RK, et al. Machine learning in radiology: applications beyond image interpretation. J Am Coll Radiol 2018; 15: 350–359.

- 59. Singh R, Homayounieh F, Vining R, et al. The value in artificial intelligence. In: Silva C, von Stackelberg O, Kauczor HU. (eds) Value-based radiology. Cham: Springer, 2019, pp. 35–49.

- 60. Marella WM, Sparnon E, Finley E. Screening electronic health record–related patient safety reports using machine learning. J Patient Saf 2017; 13: 31–36.

- 61. Fong A, Howe JL, Adams KT, et al. Using active learning to identify health information technology related patient safety events. Appl Clin Inform 2017; 8: 35–46.

- 62. Yasaka K, Katsura M, Akahane M, et al. Model-based iterative reconstruction for reduction of radiation dose in abdominopelvic CT: comparison to adaptive statistical iterative reconstruction. SpringerPlus 2013; 2: 209–209.

- 63. Chen H, Zhang Y, Zhang W, et al. Low-dose CT via convolutional neural network. Biomed Opt Express 2017; 8: 679–694.

- 64. Chen H, Zhang Y, Kalra MK, et al. Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE T Med Imaging 2017; 36: 2524–2535.