SUMMARY

Rates of simultaneous liver kidney (SLK) transplantation in the United States have progressively risen. On 8/10/17, the Organ Procurement and Transplantation Network implemented a policy defining criteria for SLK, with a “Safety Net” to prioritize kidney allocation to liver recipients with ongoing renal failure. We performed a retrospective review of the United Network for Organ Sharing (UNOS) database to evaluate policy impact on SLK, kidney after liver (KAL) and kidney transplant alone (KTA). Rates and outcomes of SLK and KAL transplants were compared, as was utilization of high-quality kidney allografts with Kidney Donor Profile Indices (KDPI) <35%. Here, SLK transplants comprised 9.0% and 4.5% of total post-policy liver and kidney transplants compared to 10.2% and 5.5% prior. Policy enactment did not affect 1-year graft or patient survival for SLK and KAL populations. Fewer post-policy SLK transplants utilized high-quality kidney allografts; in all transplant settings, outcomes using high-quality grafts remained stable. These findings suggest that policy implementation has reduced kidney allograft use in SLK transplantation, although both SLK and KAL rates have recently increased. Despite decreased high-quality kidney allograft use, SLK and KAL outcomes have remained stable. Additional studies and long-term follow-up will ensure optimal organ access and sharing.

Keywords: multiorgan, organ allocation, policy, safety net, simultaneous liver kidney transplant, United Network for Organ Sharing

Introduction

Simultaneous liver and kidney (SLK) transplantation in the United States (US) has steadily increased since the Model for End-Stage Liver Disease (MELD) score became the primary determinant of liver allograft allocation [1,2]. Until recently, no policy existed regarding SLK use over liver transplant alone (LTA) in patients with end-stage liver disease (ESLD) and renal dysfunction. Instead, dual listing was determined by clinician preference and supported by data demonstrating a 6–7.4% increase in 5-year survival for ESLD patients receiving SLK compared to LTA [3]. With no regulation in place, SLK rates in the US increased from 3% of all liver transplants in 2001 to 11% in 2017 [4,5].

Despite these trends, proposed regulations on SLK transplantation have been met with resistance. Those supporting more stringent kidney allocation noted that upwards of 15% of SLK transplants were performed in nondialysis-dependent patients with a pre-transplant creatinine of <2.5 mg/dl [6]. Additionally, primary non-function (PNF) of the kidney still occurred in up to 20.7% of SLK transplants [7] despite more frequent use of high-quality kidneys with Kidney Donor Profile Indices (KDPI) of <35% [1]. Proponents of standard practice, conversely, have cited an average waitlist time of 6 years for subsequent kidney transplantation in liver recipients with ongoing renal failure, additionally noting a threefold increase in waitlist mortality compared to those awaiting isolated kidney transplant [8].

In June 2016, after several years of ongoing revisions, the United Network for Organ Sharing (UNOS) approved a policy officially regulating SLK transplantation [1]. Effective August 10, 2017, patients would have to meet one of three criteria to be listed for SLK transplant. The first is chronic renal insufficiency, defined by a glomerular filtration rate (GFR) <60 ml/min for 90 consecutive days with a GFR <35 ml/min or maintenance dialysis at time of listing. The second involves sustained acute kidney injury, defined by six consecutive weeks of dialysis dependence or documented GFR <25 ml/min. The third is the presence of a metabolic disease associated with development of renal failure, including hyperoxaluria, atypical hemolytic uremic syndrome, familial non-neuropathic systemic amyloidosis, or methylmalonic aciduria. While these criteria serve to limit SLK recipients to patients with renal failure without likelihood of renal recovery, the new policy also recognizes that patients who may benefit from renal transplant may not meet initial criteria and would “fall through the cracks” [1]. To address this population, a “Safety Net” was implemented to allow priority listing of post-liver transplant patients with persistent renal failure for kidney transplantation. The incidence of newly developed end-stage renal disease (ESRD) is highest 6 months after liver transplant [9]; to capture these patients and exclude those with early post-transplant renal recovery, the Safety Net allowed patients with ongoing renal failure to be prioritized for kidney transplantation if they met criteria and were listed 60–365 days after liver transplant.
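The listing criteria and the Safety Net window described above can be read as a simple decision rule. The following is a minimal sketch of that rule in Python, not the OPTN policy text itself: the class name, field names and function names are hypothetical, and it assumes that the six-week requirement for sustained acute kidney injury applies equally to dialysis dependence and to documented GFR <25 ml/min.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class KidneyStatus:
    """Hypothetical summary of a liver candidate's renal status at listing."""
    days_gfr_below_60: int               # consecutive days with GFR < 60 ml/min
    gfr_at_listing: float                # ml/min at time of listing
    maintenance_dialysis: bool           # on maintenance dialysis at listing
    weeks_dialysis_or_gfr_below_25: int  # consecutive weeks (assumed reading)
    qualifying_metabolic_disease: bool   # e.g. hyperoxaluria


def meets_slk_criteria(s: KidneyStatus) -> bool:
    """Return True if any one of the three SLK listing criteria is met."""
    chronic = s.days_gfr_below_60 >= 90 and (
        s.gfr_at_listing < 35 or s.maintenance_dialysis
    )
    sustained_aki = s.weeks_dialysis_or_gfr_below_25 >= 6
    return chronic or sustained_aki or s.qualifying_metabolic_disease


def in_safety_net_window(liver_transplant: date, kidney_listing: date) -> bool:
    """Return True if kidney listing falls 60-365 days after liver transplant."""
    days = (kidney_listing - liver_transplant).days
    return 60 <= days <= 365
```

Under these assumptions, a candidate with 120 days of GFR <60 ml/min and a GFR of 30 ml/min at listing qualifies for SLK, whereas a liver recipient listed for kidney transplant 31 days after liver transplant falls outside the Safety Net window.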

Post-implementation surveillance of the newly enacted policy requires consideration of liver and kidney allograft allocation and careful monitoring of post-transplant outcomes in ESLD and ESRD populations. While policy efficacy may be determined in a number of different contexts, this study evaluated success by its ability to improve overall kidney utilization while preserving outcomes in patients with concomitant liver and renal failure. Here we evaluated the extent to which the SLK allocation policy has impacted these metrics.

Materials and methods

Patient population

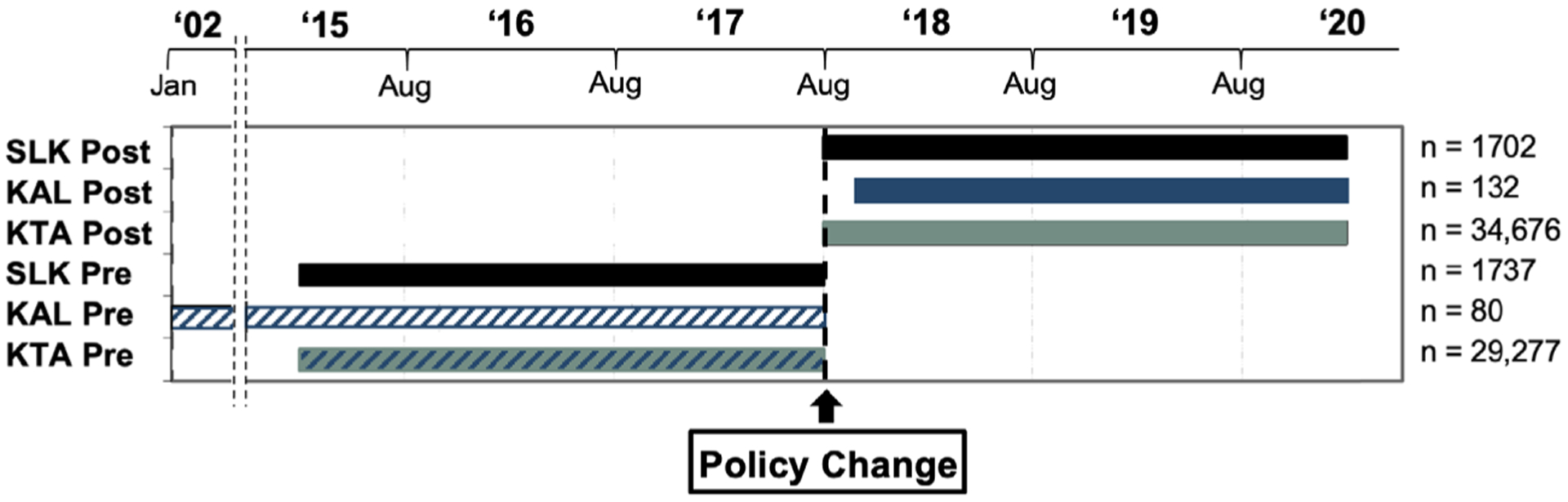

We performed a retrospective review of the UNOS Organ Procurement and Transplantation Network (OPTN) database for adult (≥18 years old) deceased donor liver and deceased donor kidney transplant recipients. Those receiving multiorgan transplants other than SLK were excluded. Patients were divided into six separate groups to compare transplant populations before and after policy implementation (Figure 1). The pre-policy SLK group comprised 1737 patients receiving SLK in the 2.5 years prior to policy implementation (2/10/15–8/9/17), while 1702 patients in the subsequent 2.5 years (8/10/17–2/9/20) made up the post-policy SLK cohort. To compare outcomes related to the Safety Net, KAL patients were similarly compared before and after policy implementation. Prior to the Safety Net, few patients receiving KAL transplants would have fit the temporal requirements for KAL listing and even fewer would have been transplanted. The pre-Safety Net KAL group, then, was derived from KAL recipients from 2/27/02–8/9/17 who were listed for kidney transplant between 60 and 365 days after their liver transplant (n = 80). Safety Net KAL patients comprised those who met criteria for KAL transplantation according to the Safety Net and received a kidney transplant in the 2.5 years following policy implementation (10/10/17–2/9/20; n = 132). Deceased donor kidney transplant alone (KTA) patients were examined from the 2.5 years prior to policy implementation (n = 29 277) and the 2.5 years after (n = 34 676).

Figure 1.

Composition of study populations. SLK Post = Postpolicy SLK transplant (8/10/17–2/9/20); KAL Post = Safety Net KAL transplant (10/9/17–2/9/20); KTA Post = Postpolicy kidney transplant alone (8/10/17–2/9/20); SLK Pre = Prepolicy SLK transplant (2/10/15–8/9/17); KAL Pre = Pre-Safety Net KAL transplant (2/27/02–8/9/17); KTA Pre = Prepolicy kidney transplant alone (2/10/15–8/9/17).

Ethical statement

In accordance with the ethical standards laid down by the 2000 Declaration of Helsinki and the 2008 Declaration of Istanbul, this study has been reviewed by the Thomas Jefferson Institutional Review Board, who have given approval to conduct this analysis. No informed consent was required as the data source utilized federally maintained de-identified patient information.

Study design

We evaluated the impact of the SLK allocation policy and Safety Net on allograft utilization through three hypotheses. The first asserted that SLK use would decrease as a proportion of total deceased donor liver and kidney transplants. The second was that the policy would not adversely impact outcomes for liver transplant recipients with chronic renal failure. Finally, we hypothesized that policy implementation would reduce rates of high-quality renal allograft use for SLK and improve overall utilization of these grafts in non-SLK settings.

To test the first hypothesis that policy implementation would decrease SLK use, we compared pre- and post-policy trends in SLK transplantation. Here we reported the net difference in total number of SLK transplants before and after policy implementation, the proportion of SLK use relative to all liver transplants and the ratio of kidneys utilized for SLK transplantation relative to all deceased donor kidney transplants.

To test our second hypothesis that policy implementation would not adversely affect outcomes in liver transplant recipients with chronic renal failure, we compared both SLK and KAL populations before and after policy implementation. As post-policy KAL recipients were no longer in liver failure and represented a population similar to that of isolated renal failure, these patients were compared both to selected pre-Safety Net KAL recipients and to KTA recipients in the post-policy era. To reduce the impact of inherent variability between KAL and KTA patients, post-policy KTA patients were propensity score matched (PSM) to Safety Net KAL patients and compared. Here, primary outcomes assessed were patient survival, kidney allograft survival and, for SLK recipients, liver allograft survival. Secondary outcomes included rates of PNF and acute rejection for both liver and kidney as well as kidney-specific delayed graft function (DGF). We analyzed utilization of highest quality kidney allografts across periods in SLK, KAL and KTA transplant settings, and outcomes related to kidney allograft quality in SLK transplantation. Primary outcomes in each analysis were patient survival as well as liver and kidney graft survival, while secondary outcomes included kidney-related outcomes.

Statistical analysis

Continuous variables were evaluated for normality using the Shapiro–Wilk test. Non-normally distributed variables were compared with a Wilcoxon rank-sum test and were represented as median (interquartile range (IQR)). Categorical variables were compared using a χ² test and were represented as number (percentage of population). PSM of post-policy KTA to Safety Net KAL recipients was performed using 1:1 nearest-neighbor matching with a caliper width of 0.2. Variables used in the matching process were: recipient age, ethnicity, sex, renal failure diagnosis, body mass index (BMI), dialysis dependence, calculated panel reactive antibody (cPRA), waitlist duration, donor Kidney Donor Profile Index (KDPI) and cold ischemia time. Bias reduction was determined by graphical representation of each cohort’s propensity scores as well as by comparing standardized mean differences. Post-transplant survival was reported graphically with Kaplan–Meier curves and numerically by time-varying Cox proportional hazards ratios (HRs) and 95% confidence intervals (95% CIs). A P-value of <0.05 was considered statistically significant. All statistical analyses were performed using Stata/MP 16.1 (StataCorp, College Station, TX, USA).
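To illustrate the matching step, the following is a minimal Python sketch of greedy 1:1 nearest-neighbor matching without replacement. It is not the Stata routine used in this study. It assumes that propensity scores have already been estimated, that distance is measured on the logit of the propensity score, and that the caliper of 0.2 is expressed in standard deviations of that logit (a common convention; the units of the caliper are not specified above). The function name and the patient identifiers are hypothetical.

```python
import math
import statistics


def logit(p: float) -> float:
    """Log-odds of a propensity score strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def match_nearest_neighbor(treated, controls, caliper_sd=0.2):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: dicts mapping patient id -> propensity score.
    Returns a list of (treated_id, control_id) pairs; treated patients
    with no control inside the caliper are left unmatched.
    """
    t_logit = {k: logit(v) for k, v in treated.items()}
    c_logit = {k: logit(v) for k, v in controls.items()}
    # Caliper in logit units: a fraction of the pooled standard deviation.
    pooled = list(t_logit.values()) + list(c_logit.values())
    caliper = caliper_sd * statistics.pstdev(pooled)

    available = dict(c_logit)
    pairs = []
    for tid, t_val in t_logit.items():
        if not available:
            break
        # Closest remaining control on the logit scale.
        cid = min(available, key=lambda c: abs(available[c] - t_val))
        if abs(available[cid] - t_val) <= caliper:
            pairs.append((tid, cid))
            del available[cid]  # each control is used at most once
    return pairs


# Illustrative scores only; these are not study data.
kal = {"KAL1": 0.30, "KAL2": 0.55, "KAL3": 0.90}
kta = {"KTA1": 0.28, "KTA2": 0.50, "KTA3": 0.52, "KTA4": 0.10}
pairs = match_nearest_neighbor(kal, kta)
```

In this toy example the third KAL patient has no KTA candidate within the caliper and remains unmatched. A caliper therefore trades sample size for closer balance, which is why a matched cohort can contain fewer pairs than there are treated patients.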

Results

Assessing relative SLK use before and after policy implementation

SLK transplants comprised 10.2% of liver transplants in the 2.5 years prior to policy implementation (n = 1737). In the first year after implementation, 9.0% of all deceased donor liver transplants were SLK transplants (n = 647); in the second year this was 8.7% (n = 669), and in the first half of the third year it was 9.6% (n = 386). Similarly, SLK transplants comprised 5.5% of all deceased donor kidney transplants in the 2.5 years prior to policy implementation; this was 4.7%, 4.4% and 4.6% in each successive year after the policy was enacted.

Evaluating SLK and KAL outcomes before and after policy implementation

We first evaluated SLK recipient characteristics. In the post-policy era, recipients were less frequently transplanted from the ICU (16.9% vs. 21.1%, P < 0.01; Table 1). Post-policy SLK recipients also demonstrated lower MELD scores (28 vs. 29, P < 0.01), were less likely to be functionally disabled requiring assistance with >50% of activities of daily living (53.8% vs. 57.6%, P = 0.03) and had a lower prevalence of hepatitis C virus (HCV; 14.3% vs. 21.5%, P < 0.01). Transplants performed in the post-policy SLK setting also had shorter cold ischemia times for liver (5.8 vs. 6.0 h, P = 0.01) but longer for kidney (10.5 vs. 10.2 h, P < 0.01). No other statistically significant baseline donor or transplant-related differences were noted between groups. Outcomes between SLK groups were largely similar (Table 2), with a 1-year patient survival of 90.0% in post-policy SLK recipients compared to 91.4% in the pre-policy group (HR = 1.12, 95% CI = 0.89–1.41, P = 0.30). Liver and kidney allograft survival were also comparable at 1 year across groups, as were incidences of liver and kidney PNF and DGF.

Table 1.

Baseline recipient, donor and transplant characteristics of SLK transplants before and after policy implementation.

| | SLK - Pre | SLK - Post | P-value |
|---|---|---|---|
| Number | 1737 | 1702 | |
| Recipient characteristics | | | |
| Age | 59 (51–64) | 59 (52–64) | 0.10 |
| Female Sex | 667 (38.4%) | 662 (38.9%) | 0.78 |
| Race | | | 0.13 |
| White | 1102 (63.4%) | 1037 (60.9%) | |
| Black | 266 (15.3%) | 246 (14.5%) | |
| Asian | 55 (3.2%) | 66 (3.9%) | |
| Other | 314 (18.1%) | 353 (20.7%) | |
| BMI | 26.6 (23.1–31.2) | 26.7 (23.5–31.3) | 0.25 |
| ICU status at transplant | 367 (21.1%) | 287 (16.9%) | <0.01 |
| Days on waitlist | 69 (16–273) | 88 (18–289) | 0.07 |
| MELD | 29 (23–36) | 28 (23–34) | <0.01 |
| Disabled functional status | 1001 (57.6%) | 916 (53.8%) | 0.03 |
| HCC | 224 (12.9%) | 185 (10.9%) | 0.07 |
| Diabetes mellitus | 746 (43.0%) | 735 (43.2%) | 0.89 |
| Portal vein thrombosis | 207 (12.0%) | 238 (14.1%) | 0.08 |
| History of SBP | 189 (10.9%) | 214 (12.6%) | 0.12 |
| TIPS | 169 (9.7%) | 138 (8.1%) | 0.11 |
| Hemodialysis | 1194 (68.7%) | 1191 (70.0%) | 0.44 |
| Previous liver transplant | 113 (6.5%) | 111 (6.5%) | 0.99 |
| Previous kidney transplant | 53 (3.0%) | 57 (3.3%) | 0.63 |
| Primary etiology of liver disease | | | <0.01 |
| NASH | 353 (21.2%) | 400 (24.5%) | |
| HCV | 359 (21.5%) | 234 (14.3%) | |
| EtOH | 475 (28.5%) | 498 (30.5%) | |
| Other | 480 (28.8%) | 501 (30.7%) | |
| Etiology of renal failure | | | 0.77 |
| ATN/HRS | 456 (26.3%) | 458 (26.9%) | |
| Diabetes mellitus | 824 (47.4%) | 788 (46.3%) | |
| Hypertension | 338 (19.4%) | 348 (20.5%) | |
| Other | 119 (6.9%) | 108 (6.3%) | |
| Donor characteristics | | | |
| Age | 33 (24–45) | 34 (25–46) | 0.06 |
| Female Sex | 667 (38.4%) | 662 (38.9%) | 0.78 |
| BMI | 26.0 (23.0–29.9) | 26.4 (23.1–30.4) | 0.09 |
| Creatinine (mg/dL) | 0.90 (0.70–1.20) | 0.90 (0.70–1.25) | 0.76 |
| Bloodstream Infection | 182 (10.5%) | 162 (9.5%) | 0.36 |
| LDRI | 1.81 (1.62–2.09) | 1.84 (1.64–2.13) | 0.07 |
| KDPI (%) | 28 (12–50) | 30 (13–53) | 0.06 |
| DCD | 108 (6.2%) | 115 (6.7%) | 0.53 |
| Cause of death - CVA | 391 (22.5%) | 337 (19.8%) | 0.06 |
| Transplant details | | | |
| CIT - Liver (hours) | 6.0 (4.8–7.5) | 5.8 (4.7–7.2) | 0.01 |
| CIT - Kidney (hours) | 10.2 (7.8–14.0) | 10.5 (8.1–16.1) | <0.01 |

Values are listed as median +/− interquartile range unless otherwise stated

BMI, body mass index; cPRA, calculated panel reactive antibodies; ATN, acute tubular necrosis; HRS, hepatorenal syndrome; CVA, cerebrovascular accident; KDPI, Kidney Donor Profile Index.

Table 2.

SLK outcomes before and after allocation policy implementation.

| | SLK - Pre | SLK - Post | HR | 95% CI | P-value |
|---|---|---|---|---|---|
| Number | 1737 | 1702 | | | |
| One-year survival | 91.4% | 90.0% | 1.12 | 0.89–1.41 | 0.30 |
| Liver-related outcomes | | | | | |
| Primary non-function | 12 (0.7%) | 13 (0.8%) | - | - | 0.84 |
| Acute rejection within 1 year | 90 (6.5%) | 73 (8.1%) | - | - | 0.16 |
| One-year graft survival | 90.4% | 89.1% | 1.24 | 0.92–1.43 | 0.22 |
| Kidney-related outcomes | | | | | |
| Primary non-function | 40 (2.3%) | 29 (1.7%) | - | - | 0.22 |
| Delayed graft function | 467 (26.9%) | 439 (25.8%) | - | - | 0.49 |
| Acute rejection within 1 year | 63 (4.8%) | 33 (3.8%) | - | - | 0.29 |
| Three-month graft survival | 94.2% | 93.1% | 1.20 | 0.92–1.58 | 0.18 |
| One-year graft survival | 89.0% | 88.9% | 1.01 | 0.81–1.24 | 0.92 |

Values are listed as median +/− interquartile range unless otherwise stated.

A total of 132 patients received kidney after liver transplant after implementation of the Safety Net, translating to an average of 4.7 KAL transplants per month in the first 2.5 years since policy implementation. These patients waited a median of 57 (IQR 19–149) days from time of listing to kidney transplant. Prior to the Safety Net, 80 KAL transplants were performed (0.4 per month) with a median waitlist time of 470 (IQR 105–932) days. KAL recipients since the Safety Net were less frequently diagnosed with acute tubular necrosis or hepatorenal syndrome and less likely to require dialysis (65.1% vs. 90.0%, Table 3). Coinciding with their prolonged waitlist times, the interval from liver to kidney transplant was longer in the pre-Safety Net KAL group (737 vs. 314 days, P < 0.01). Safety Net KAL transplants also had shorter cold ischemia times (11.6 vs. 17.2 h, P < 0.01). Despite these differences, outcomes between KAL recipients remained comparable (Table 4). Comparing KAL before and after the Safety Net revealed that patient survival was similar at one year (92.7% vs. 93.1%, P = 0.54), as was graft survival (90.9% vs. 95.0%, P = 0.21). DGF incidence and rates of acute rejection within 1 year of transplant were also similar.
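The monthly KAL rates quoted above follow from dividing each cohort size by the length of its study window. The short Python sketch below reproduces that arithmetic, assuming an average month of 365.25/12 days and the cohort dates given in the Patient population section; the function name is hypothetical.

```python
from datetime import date

AVG_DAYS_PER_MONTH = 365.25 / 12  # assumed month length, about 30.44 days


def transplants_per_month(n: int, start: date, end: date) -> float:
    """Average number of transplants per month between two dates."""
    months = (end - start).days / AVG_DAYS_PER_MONTH
    return n / months


# Safety Net KAL cohort: 132 transplants, 10/10/17 to 2/9/20.
safety_net_rate = transplants_per_month(132, date(2017, 10, 10), date(2020, 2, 9))

# Pre-Safety Net KAL cohort: 80 transplants, 2/27/02 to 8/9/17.
pre_rate = transplants_per_month(80, date(2002, 2, 27), date(2017, 8, 9))

print(round(safety_net_rate, 1), round(pre_rate, 1))  # 4.7 0.4
```

Because the pre-Safety Net window spans more than 15 years and the Safety Net window 28 months, the per-month rate is the more informative comparison than the raw counts of 80 and 132.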

Table 3.

Baseline recipient, donor and transplant characteristics in pre- and post-Safety Net KAL transplants.

| | KAL - Pre-Safety Net | KAL - Safety Net | P-value |
|---|---|---|---|
| Number | 80 | 132 | |
| Recipient characteristics | | | |
| Age | 59 (52–63) | 58 (51–65) | 0.59 |
| Female Sex | 28 (35.0%) | 42 (31.8%) | 0.26 |
| Race | | | 0.16 |
| White | 64 (80.0%) | 88 (66.7%) | |
| Black | 6 (7.5%) | 11 (8.3%) | |
| Asian | 2 (2.5%) | 3 (2.3%) | |
| Other | 8 (10.0%) | 30 (22.7%) | |
| BMI | 27.1 (23.5–30.7) | 26.7 (23.1–31.0) | 0.02 |
| cPRA | 0 (0–50) | 0 (0–31) | 0.77 |
| Days on waitlist | 470 (105–932) | 57 (19–149) | 0.01 |
| Days between liver and kidney transplant | 737 (349–1225) | 446 (332–619) | <0.01 |
| Hemodialysis | 72 (90.0%) | 86 (65.1%) | <0.01 |
| Previous kidney transplant | 2 (2.5%) | 3 (2.3%) | 0.14 |
| Etiology of renal failure | | | <0.01 |
| ATN/HRS | 37 (46.2%) | 36 (27.3%) | |
| Diabetes mellitus | 23 (28.8%) | 69 (52.3%) | |
| Hypertension | 8 (10.0%) | 22 (16.7%) | |
| Other | 12 (15.0%) | 5 (3.7%) | |
| Donor characteristics | | | |
| Age | 37 (24–47) | 41 (34–48) | 0.14 |
| Female Sex | 29 (36.3%) | 63 (47.7%) | 0.56 |
| BMI | 27.5 (23.3–30.6) | 27.5 (23.5–32.2) | 0.74 |
| Diabetes mellitus | 7 (8.8%) | 6 (4.6%) | 0.52 |
| Hypertension | 21 (26.2%) | 27 (20.4%) | 0.72 |
| Creatinine (mg/dl) | 0.90 (0.70–1.35) | 1.07 (0.80–1.41) | 0.05 |
| Bloodstream infection | 2 (2.5%) | 15 (11.4%) | 0.21 |
| KDPI (%) | 41 (22–63) | 44 (32–58) | 0.21 |
| Cause of death - CVA | 21 (26.3%) | 36 (27.3%) | 0.74 |
| Transplant details | | | |
| Cold ischemia time (hours) | 17.2 (10.4–21.5) | 11.6 (8.2–15.8) | <0.01 |

Values are listed as median +/− interquartile range unless otherwise stated.

BMI, body mass index; cPRA, calculated panel reactive antibodies; ATN, acute tubular necrosis; HRS, hepatorenal syndrome; CVA, cerebrovascular accident; KDPI, Kidney Donor Profile Index.

Table 4.

Kidney-related outcomes in pre- and post-Safety Net KAL transplants.

| | KAL - Pre-Safety Net | KAL - Safety Net | HR | 95% CI | P-value |
|---|---|---|---|---|---|
| Number | 80 | 132 | | | |
| Primary non-function | 0 (0.0%) | 2 (1.5%) | - | - | 0.53 |
| Delayed graft function | 16 (20.0%) | 19 (14.4%) | - | - | 0.34 |
| Acute rejection within 1 year | 5 (7.5%) | 4 (7.5%) | - | - | 0.99 |
| Three-month graft survival | 97.50% | 93.50% | 2.56 | 0.53–12.33 | 0.24 |
| One-year graft survival | 95.00% | 92.70% | 1.85 | 0.56–6.06 | 0.31 |
| One-year patient survival | 93.10% | 92.70% | 1.46 | 0.43–5.10 | 0.54 |

Values are listed as median +/− interquartile range unless otherwise stated.

We identified 104 matched pairs after propensity score matching KTA patients after policy implementation to Safety Net KAL recipients. No significant differences were observed in relation to recipient or transplant characteristics (Table 5). Donors were also comparable and KDPI similar (KAL: 44% vs. KTA: 46%, P = 0.61). Outcomes, too, were largely similar (Table 6): 1-year patient survival in the KAL group was 92.9% vs. 95.5% in KTA recipients (HR: 1.42, CI: 0.38–5.29, P = 0.60), while graft survival at 1 year was 89.7% vs. 94.2% (HR: 1.85, CI: 0.60–5.68, P = 0.28). PNF rates were comparably low (1.9% vs. 0.0%, P = 0.50), as were rates of acute rejection within 1 year (7.5% vs. 10.3%, P = 0.73). DGF was less common in the KAL population than in the KTA population (13.5% vs. 28.9%, P = 0.01).

Table 5.

Baseline recipient, donor and transplant characteristics in Safety Net KAL transplants and propensity matched postpolicy KTA transplants.

| | KTA | KAL - Safety Net | P-value |
|---|---|---|---|
| Number | 104 | 104 | |
| Recipient characteristics | | | |
| Age | 61 (50–66) | 57 (49–66) | 0.23 |
| Female Sex | 33 (31.7%) | 33 (31.7%) | 0.99 |
| Race | | | 0.21 |
| White | 72 (69.2%) | 64 (61.5%) | |
| Black | 13 (12.5%) | 11 (10.6%) | |
| Asian | 0 (0.0%) | 3 (2.9%) | |
| Other | 19 (18.3%) | 26 (25.0%) | |
| BMI | 27.7 (24.0–32.6) | 27.2 (23.7–31.7) | 0.60 |
| cPRA | 0 (0–25) | 0 (0–37) | 0.73 |
| Days on waitlist | 61 (16–175) | 59 (19–164) | 0.91 |
| Hemodialysis | 71 (68.3%) | 72 (69.2%) | 0.99 |
| Previous kidney transplant | 5 (4.8%) | 3 (2.9%) | 0.72 |
| Etiology of renal failure | | | 0.86 |
| ATN/HRS | 32 (30.8%) | 36 (34.6%) | |
| Diabetes mellitus | 41 (39.4%) | 41 (39.4%) | |
| Hypertension | 27 (26.0%) | 22 (21.1%) | |
| Other | 4 (3.8%) | 5 (4.8%) | |
| Donor characteristics | | | |
| Age | 41 (26–53) | 41 (33–48) | 0.59 |
| Female Sex | 39 (37.5%) | 48 (46.1%) | 0.26 |
| BMI | 27.1 (24.2–31.1) | 28.5 (23.7–33.5) | 0.10 |
| Diabetes mellitus | 12 (11.5%) | 6 (5.8%) | 0.22 |
| Hypertension | 29 (28.4%) | 22 (21.1%) | 0.26 |
| Creatinine (mg/dl) | 1.00 (0.73–1.41) | 1.01 (0.79–1.50) | 0.46 |
| Bloodstream infection | 6 (5.8%) | 11 (10.6%) | 0.31 |
| KDPI (%) | 46 (20–72) | 44 (32–58) | 0.61 |
| Cause of death - CVA | 27 (26.0%) | 29 (27.9%) | 0.88 |
| Transplant details | | | |
| CIT (hours) | 12.6 (8.5–16.3) | 13.0 (8.9–16.2) | 0.54 |

Values are listed as median +/− interquartile range unless otherwise stated.

BMI, body mass index; cPRA, calculated panel reactive antibodies; ATN, acute tubular necrosis; HRS, hepatorenal syndrome; CVA, cerebrovascular accident; KDPI, Kidney Donor Profile Index.

Table 6.

Kidney-related outcomes in Safety Net KAL and propensity matched postpolicy KTA transplants.

| | KTA | KAL - Safety Net | HR | 95% CI | P-value |
|---|---|---|---|---|---|
| Number | 104 | 104 | | | |
| Primary non-function | 0 (0.0%) | 2 (1.9%) | - | - | 0.50 |
| Delayed graft function | 30 (28.9%) | 14 (13.5%) | - | - | 0.01 |
| Acute rejection within 1 year | 6 (10.3%) | 3 (7.5%) | - | - | 0.73 |
| Three-month graft survival | 97.8% | 93.0% | 3.21 | 0.65–15.89 | 0.15 |
| One-year graft survival | 94.2% | 89.7% | 1.85 | 0.60–5.68 | 0.28 |
| One-year patient survival | 95.5% | 92.9% | 1.42 | 0.38–5.29 | 0.60 |

Values are listed as median +/− interquartile range unless otherwise stated.

Comparing kidney allograft utilization

We then sought to determine whether implementation of both the SLK allocation policy and the KAL Safety Net affected the usage of the highest quality (KDPI < 35%) deceased donor kidney allografts. This was performed on a transplant-specific basis, where trends and outcomes of SLK, KAL and KTA using <35% KDPI allografts were individually compared across policy implementation. Before policy implementation, 7.1% of KDPI < 35% allografts were used in SLK compared to 6.2% after (P < 0.01); 0.2% of KDPI < 35% grafts were used for KAL before compared to 0.3% after (P = 0.39), while 92.7% of high-quality grafts were used in KTA before compared to 93.5% after (P < 0.01).
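As an illustration of how such a comparison of proportions can be checked, the Python sketch below applies a Pearson χ² test to a 2 × 2 table built from the KDPI < 35% transplant counts reported in Table 7 (SLK vs. all other settings, before vs. after the policy). It assumes no continuity correction, since whether one was applied in the original analysis is not stated, and the function name is hypothetical.

```python
import math


def chi2_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-squared test (1 degree of freedom) for the table [[a, b], [c, d]].

    Returns (statistic, p_value). No continuity correction is applied.
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of a chi-squared variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# KDPI < 35% transplants by setting, counts from Table 7.
pre_slk, pre_kal, pre_kta = 992, 34, 12938
post_slk, post_kal, post_kta = 917, 38, 13698

stat, p_value = chi2_2x2(
    pre_slk, pre_kal + pre_kta,     # pre-policy: SLK vs. non-SLK
    post_slk, post_kal + post_kta,  # post-policy: SLK vs. non-SLK
)
print(f"chi2 = {stat:.2f}, P = {p_value:.4f}")
```

Under these assumptions the test gives a P-value below 0.01, in line with the significance level reported for the change in SLK share of high-quality grafts.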

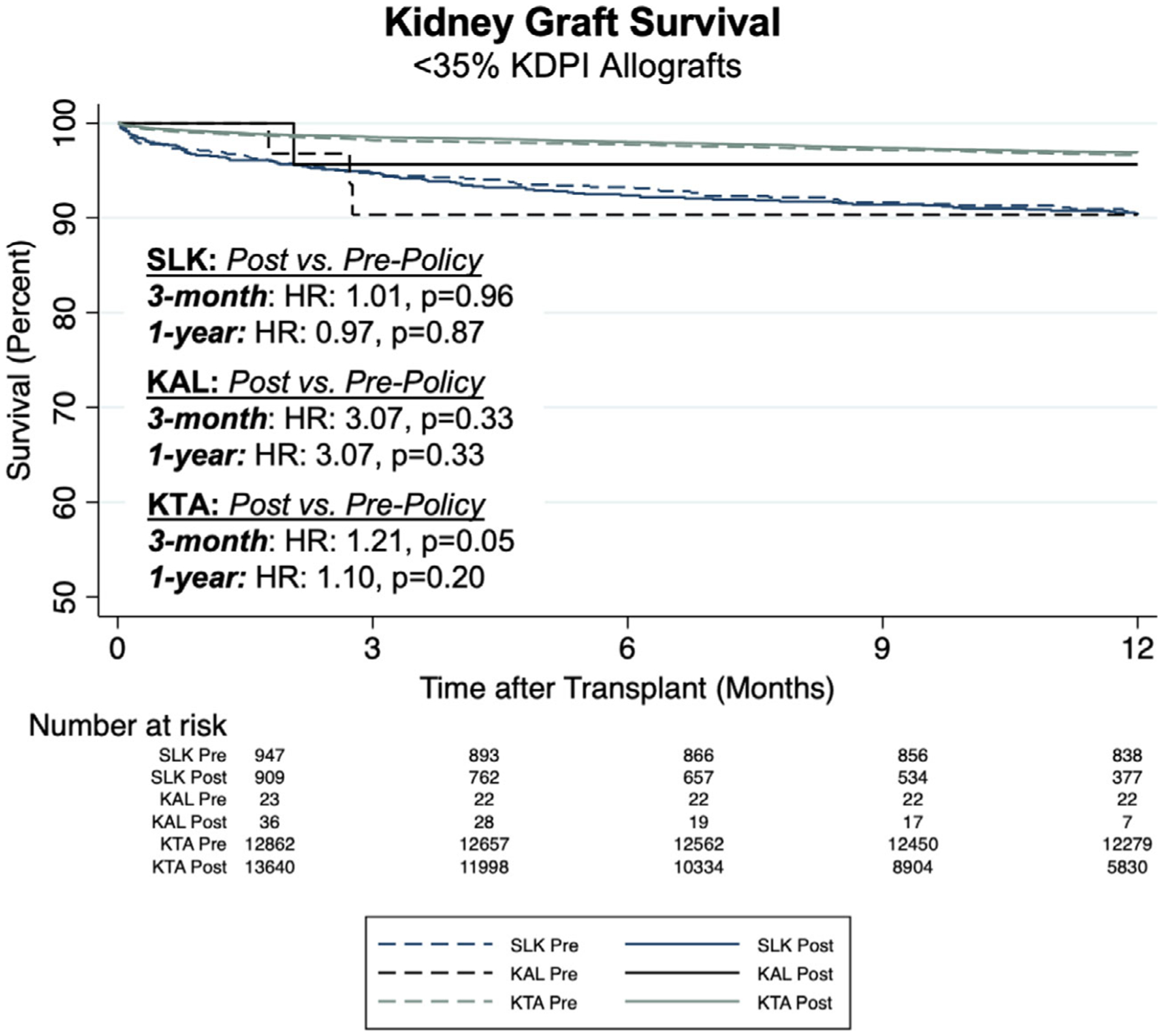

We first compared baseline recipient characteristics in SLK, KAL and KTA transplants using <35% KDPI allografts before and after policy implementation (Table 7). Similar to the aggregate, quality-independent analyses, outcomes in each setting remained comparable across policy eras (Table 8, Figure 2). SLK kidney graft survival at 1 year was 90.5% after policy implementation compared to 90.4% before (P = 0.55); in KAL transplants this was 90.9% vs. 96.5% (P = 0.39) and in KTA 96.6% vs. 96.9% (P = 0.20). PNF and DGF rates did not differ significantly across time periods in any of the three settings.

Table 7.

Baseline characteristics of recipients of <35% KDPI renal allografts in SLK, KAL and KTA transplant settings before and after policy implementation.

| | SLK Prepolicy | SLK Postpolicy | SLK P-value | KAL Prepolicy | KAL Postpolicy | KAL P-value | KTA Prepolicy | KTA Postpolicy | KTA P-value |
|---|---|---|---|---|---|---|---|---|---|
| Number (% of total KDPI <35% transplants in era) | 992 (7.1%) | 917 (6.2%) | <0.01 | 34 (0.2%) | 38 (0.3%) | 0.39 | 12 938 (92.7%) | 13 698 (93.5%) | <0.01 |
| Age | 58 (50–64) | 58 (51–64) | 0.76 | 57 (49–62) | 59 (45–63) | 0.93 | 45 (33–57) | 45 (33–57) | 0.27 |
| Female Sex | 382 (38.5%) | 380 (41.4%) | 0.21 | 13 (38.2%) | 13 (34.2%) | 0.81 | 5229 (40.4%) | 5665 (41.4%) | 0.12 |
| Ethnicity | | | 0.31 | | | 0.23 | | | <0.01 |
| White | 660 (66.5%) | 576 (62.8%) | | 28 (82.4%) | 24 (63.2%) | | 4843 (37.4%) | 4963 (36.2%) | |
| Black | 134 (13.5%) | 128 (14.0%) | | 1 (2.9%) | 5 (13.2%) | | 4426 (34.2%) | 4390 (32.1%) | |
| Asian | 29 (2.9%) | 29 (3.1%) | | 1 (2.9%) | 1 (2.6%) | | 831 (6.4%) | 978 (7.1%) | |
| Other | 169 (17.1%) | 184 (20.1%) | | 4 (11.8%) | 8 (21.0%) | | 2838 (22.0%) | 3367 (24.6%) | |
| BMI | 26.7 (23.3–31.6) | 26.8 (23.5–31.1) | 0.98 | 24.8 (23.1–28.8) | 28.2 (23.8–31.2) | 0.24 | 27.1 (23.1–31.6) | 27.3 (23.2–31.8) | 0.15 |
| cPRA | 0 (0–0) | 0 (0–0) | 0.28 | 0 (0–87) | 0 (0–17) | 0.18 | 0 (0–50) | 0 (0–45) | 0.02 |
| Days on waitlist | 33 (8–163) | 44 (11–188) | 0.03 | 457 (104–1001) | 57 (20–116) | <0.01 | 596 (160–1313) | 505 (126–1294) | <0.01 |
| Hemodialysis | 468 (47.2%) | 436 (47.5%) | 0.89 | 31 (91.1%) | 26 (68.4%) | 0.02 | 10 943 (84.6%) | 11 105 (81.1%) | <0.01 |
| Previous kidney transplant | 26 (2.6%) | 31 (3.4%) | 0.35 | 1 (2.9%) | 3 (7.9%) | 0.62 | 1400 (10.8%) | 1149 (8.4%) | <0.01 |
| Etiology of renal failure | | | 0.43 | | | <0.01 | | | 0.25 |
| ATN/HRS | 263 (26.5%) | 263 (28.7%) | | 17 (50.0%) | 9 (23.7%) | | 7526 (58.2%) | 7973 (58.2%) | |
| Diabetes Mellitus | 483 (48.7%) | 436 (47.5%) | | 10 (29.4%) | 21 (55.3%) | | 61 (0.5%) | 65 (0.5%) | |
| Hypertension | 195 (19.7%) | 162 (17.7%) | | 0 (0.0%) | 7 (18.4%) | | 2368 (18.3%) | 2613 (19.1%) | |
| Other | 51 (5.1%) | 56 (6.1%) | | 7 (20.6%) | 1 (2.6%) | | 2983 (23.0%) | 3047 (22.2%) | |

Values are listed as median +/− interquartile range unless otherwise stated.

Table 8.

Outcomes of SLK, KAL and KTA transplants using renal allografts with <35% KDPI before and after policy implementation.

| | SLK Prepolicy | SLK Postpolicy | SLK P-value | KAL Prepolicy | KAL Postpolicy | KAL P-value | KTA Prepolicy | KTA Postpolicy | KTA P-value |
|---|---|---|---|---|---|---|---|---|---|
| Number | 992 | 917 | | 34 | 38 | | 12 938 | 13 698 | |
| Primary non-function | 12 (1.2%) | 10 (1.1%) | 0.83 | 0 (0.0%) | 1 (2.6%) | 0.99 | 44 (0.3%) | 36 (0.3%) | 0.26 |
| Delayed graft function | 238 (24.0%) | 194 (21.2%) | 0.14 | 3 (8.8%) | 5 (13.2%) | 0.71 | 2637 (20.4%) | 2734 (20.0%) | 0.39 |
| Acute rejection within 1 year | 37 (5.2%) | 20 (4.2%) | 0.68 | 0 (0.0%) | 0 (0.0%) | 0.99 | 866 (8.1%) | 553 (6.9%) | <0.01 |
| Three-month kidney graft survival | 94.7% | 94.7% | 0.96 | 97.0% | 90.9% | 0.33 | 98.5% | 98.2% | 0.05 |
| One-year kidney graft survival | 90.4% | 90.5% | 0.55 | 97.0% | 90.9% | 0.33 | 96.9% | 96.6% | 0.20 |
| One-year patient survival | 91.5% | 90.5% | 0.55 | 97.0% | 93.9% | 0.57 | 98.0% | 97.8% | 0.38 |

Values are listed as median +/− interquartile range unless otherwise stated.

Figure 2.

Comparison of kidney graft survival in SLK, KAL and KTA using renal allografts with <35% KDPI before and after policy implementation.

Rates of acute rejection also remained comparable in the SLK and KAL populations, while they were lower in KTA in transplants performed since policy implementation (6.9% vs. 8.1%, P < 0.01).

Discussion

The implementation of the OPTN SLK allocation policy and Safety Net set out to regulate SLK transplantation and improve overall kidney allocation. Now based on clearly defined criteria, simultaneous liver and kidney allocation and priority listing of liver recipients with ongoing renal failure intend to better prioritize both liver and kidney failure populations’ access to organs.

Recent studies have sought to define the specific ESLD population who best benefit from dual organ transplantation. These showed that SLK improved graft survival in patients with prolonged dialysis-dependent renal failure [6,10] but did not in non-dialysis-dependent patients [6,11,12]. The ongoing concern is that unregulated renal allograft allocation for SLK reduces organ availability for renal failure patients requiring isolated kidney transplant. This may ultimately increase waitlist times and waitlist mortality for patients in isolated renal failure and reduce their access to high-quality kidneys. Additionally, each kidney allocated for SLK effectively represents one less graft which carries a 7.2-year survival benefit when utilized in a patient with isolated renal failure [13]. With one in twenty pre-policy deceased donor kidneys allocated to SLK transplants, optimizing SLK allocation represents an epidemiologic priority in the transplant community.

Success of the OPTN policy depends on its overall, cumulative impact on patients in liver and renal failure. Before policy implementation, SLK transplant patterns were highly variable from center to center [14]. Previous Markov simulation models demonstrated the potential variability in SLK use after policy implementation: if widely accepted and more commonly used in previously low-volume SLK centers, SLK use could actually increase [15]. While our overall findings showed that SLK transplantation represented a smaller proportion of both deceased donor liver and kidney transplants, we did observe two important temporal trends that require careful ongoing monitoring. The first is that SLK rates, after decreasing from 10.4% of all deceased donor liver transplants the year prior to policy implementation to 9.0% the year after, remained stable at 8.7% the following year but increased to 9.6% in the final 6 months of follow-up. The second is related to the increasingly common use of the Safety Net. A concern regarding early evaluation of policy efficacy is that transplant centers’ growing comfort with the KAL Safety Net may effectively offset the reduced rates of SLK transplantation. Our study observed an overall rate of 4.7 KAL transplants performed per month after Safety Net implementation; while this number itself does not suggest that reduced SLK rates will be offset by KAL transplants, the trend in KAL usage does. When subdivided into successive 6-month intervals after introduction of the Safety Net, the KAL transplantation rate increased from 0.5 to 2.4 to 4.8 to 8.2 per month, although this decreased to 5.3 per month during the last 6 months of study follow-up. KAL waitlist additions continued to rise from 1.8 to 8.2 to 14.3 to 15.3 to 19.5 per month in each successive 6-month period. These increases, however, have been partially balanced by a reduction in living donor KAL transplants, which represented 10.9% of all KAL transplants in the 2.5 years prior to Safety Net implementation compared to 3.8% after.

A formal policy dictating SLK transplant has succeeded in changing the overall composition of the SLK recipient population. While pre-policy SLK recipients were derived from ESLD populations suffering both acute and chronic renal failure and transplanted at the discretion of the surgeon and transplant center, the intent of the policy was to reduce kidney use for acute, reversible renal failure. Our findings indicate that the policy has succeeded in doing just that. We observed a post-policy SLK population composed of recipients who were less likely to be transplanted from the ICU, less frequently functionally disabled, and had lower MELD scores. Additionally, more transplants in the post-policy era were performed for a diagnosis of nonalcoholic steatohepatitis (NASH), which is an increasingly prevalent ESLD etiology and carries a high rate of chronic renal failure [16,17] along with other systemic comorbidities in its recipient population [18]. In fact, the impact of policy implementation on SLK rates may be dampened given the increasing prevalence of NASH in the ESLD population [19], and ongoing analyses must consider the evolving liver failure landscape.

Ultimately, and regardless of pre- and post-policy cohort characteristics, outcomes related to patient survival and liver and kidney graft function remained comparable for patients meeting criteria for up-front SLK and KAL transplantation. Prior liver transplantation did not appear to affect early outcomes related to kidney transplantation either, as Safety Net KAL recipients performed equally to propensity-matched KTA recipients of the same time period with regard to patient and graft survival. KAL patients also showed significantly lower rates of DGF than the matched KTA recipients, even when transplanted with similar quality grafts and comparable cold ischemia times.

A significant point of contention in SLK practice is related to the quality of kidney allografts used in these transplants. Allocation of isolated renal allografts attempts to preserve kidneys with the lowest KDPI for highly sensitized patients, pediatric patients, or healthy listed patients with an Estimated Post-Transplant Survival score ≤ 20. SLK transplants, however, have historically drawn from this pool [17,20,21]. Prior to OPTN policy, median KDPI for SLK transplants was 36% vs. 46% for kidney transplant alone [17]. This practice has been justified by previous reports demonstrating inferior outcomes in SLK transplantation as KDPI increases [21,22]; in particular, the lowest quality grafts with KDPI > 85% were associated with a hazard ratio of 1.83 for patient mortality compared to those with KDPI < 85% [21]. Conversely, renal allograft survival in SLK transplantation was on average one year shorter than that of the paired organ used in an isolated kidney transplant setting [23].

The importance of kidney quality in KAL transplantation has been addressed in the tiered organ allocation system defined by the Safety Net, although OPTN policy does not regulate kidney allocation in SLK based on renal allograft quality. It is critical, then, to assess the impact of policy implementation on kidney utilization in SLK and in the context of overall kidney allograft utility. Our analysis of overall kidney utilization before and after policy implementation demonstrated a reduction in kidney transplants using allografts from donors with KDPI < 35%; this was observed both globally and within the KTA population and, while not statistically significant, was similarly observed in SLK and KAL settings. While defining kidney quality on a binary scale oversimplifies nuances behind individual graft utility, previous large-scale analysis has noted improved allograft survival when comparing kidneys with KDPI < 35% to those with KDPI ≥ 35% [24]. Our study also noted improved kidney graft survival in SLK transplants with higher quality allografts after policy implementation, although outcomes in SLK and KAL using <35% KDPI allografts did not change from pre- to post-policy eras.

Our study did suffer from several limitations. As our populations were derived only from patients who were transplanted, the effect of the policy on those in renal failure not receiving a transplant cannot be determined. This is due to the nature of our data source, which only tracks outcomes for patients listed for transplant or transplanted. Granularity within the database is also limited, and patient information is provided only at discrete time points. Furthermore, our data did not provide specific information regarding transplant eligibility according to the OPTN policy, and thus certain exceptions could not be tracked. Similarly, patients eligible for the Safety Net priority listing were inferred based on their meeting criteria listed by the OPTN, as longitudinal monitoring of dialysis dependence, creatinine and eGFR is limited in the OPTN database. Measuring renal function itself is a controversial topic, and prior studies have noted that creatinine as a marker of renal function in patients with anasarca and sarcopenia secondary to liver failure is not reliably comparable to creatinine in patients with renal failure alone [25]. This represents a potential shortcoming both in the policy itself and in the data gathered for policy analysis. Additionally, assessing Safety Net KAL transplants required appropriately matched controls. Our study attempted to address this by comparing Safety Net KAL transplants to two distinct control groups: a propensity-matched KTA population and a pre-Safety Net KAL cohort listed for transplant within the same window as the Safety Net. Ultimately, while these comparisons allowed for more equitable evaluation of KAL Safety Net transplants, our findings must be taken in the context of residual heterogeneity between groups. Finally, our findings were limited by the relatively short duration since policy implementation. This study therefore focused on analyzing early outcomes related to the policy; further studies with longer-term follow-up are necessary to assess the full impact of the policy.

Overall, this study provides an early investigation into the utility of the OPTN SLK allocation policy and Safety Net. With an ongoing organ supply-demand mismatch, there are inevitable concessions that must be made to optimize global liver and kidney allocation. This analysis demonstrates that the OPTN policy has been able to reduce kidney use in SLK transplantation while maintaining SLK outcomes, although there has been a trend toward increasing rates of both SLK and KAL transplants of late. Additionally, kidney-related outcomes were preserved in both SLK and KAL transplantation compared to pre-policy transplants despite using higher KDPI allografts. Finally, we found that policy implementation did not affect outcomes when using high-quality kidney allografts in any transplant setting. In order to better define and prognosticate renal dysfunction in patients with liver failure and ensure equitable access to organs across all patients with liver and renal failure, further longitudinal and granular studies are needed to determine the true impact of the allocation policy and the role for SLK transplantation itself. Early analyses reflect favorably on policy efficacy, although close monitoring is necessary and policy refinement inevitable.

Funding

This work was supported in part by Health Resources and Services Administration contract 234-2005-370011C. The content is the responsibility of the authors alone and does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government. PJA was supported by National Institutes of Health institutional training grant T32GM008562.

Conflicts of interest

The authors associated with this work have no conflicts of interest, no relevant financial disclosures and no funding sources pertinent to the study.

Footnotes

This manuscript was presented at the 2020 American Transplant Congress Virtual Meeting on June 1, 2020.

REFERENCES

1. Boyle G. Simultaneous Liver Kidney (SLK) allocation policy. Available at: https://optn.transplant.hrsa.gov/media/1192/0815-12SLKAllocation.pdf.

2. Miles CD, Westphal S, Liapakis A, Formica R. Simultaneous liver-kidney transplantation: impact on liver transplant patients and the kidney transplant waiting list. Curr Transplant Rep 2018; 5: 1.

3. Fong TL, Khemichian S, Shah T, Hutchinson IV, Cho YW. Combined liver-kidney transplantation is preferable to liver transplant alone for cirrhotic patients with renal failure. Transplantation 2012; 94: 411.

4. United States Department of Health & Human Services. Organ Procurement and Transplantation Network. United Network for Organ Sharing transplant trends: Transplants by Liver. National Data as of June 3, 2020. Available at: https://optn.transplant.hrsa.gov/data/view-data-reports/national-data/#

5. Port FK. Organ donation and transplantation trends in the United States, 2001. Am J Transplant 2003; 3(Suppl 4): 7.

6. Formica RN, Aeder M, Boyle G, et al. Simultaneous liver-kidney allocation policy: a proposal to optimize appropriate utilization of scarce resources. Am J Transplant 2016; 16: 758.

7. Lunsford KE, Bodzin AS, Markovic D, et al. Avoiding futility in simultaneous liver-kidney transplantation: analysis of 331 consecutive patients listed for dual organ replacement. Ann Surg 2017; 265: 1016.

8. Cassuto JR, Reese PP, Sonnad S, et al. Wait list death and survival benefit of kidney transplantation among nonrenal transplant recipients. Am J Transplant 2010; 10: 2502.

9. Israni AK, Xiong H, Liu J, et al. Predicting end-stage renal disease after liver transplant. Am J Transplant 2013; 13: 1782.

10. Pita A, Kaur N, Emamaullee J, et al. Outcomes of liver transplantation in patients on renal replacement therapy: considerations for simultaneous liver kidney transplantation versus safety net. Transplant Direct 2019; 5: e490.

11. Tanriover B, MacConmara MP, Parekh J, et al. Simultaneous liver kidney transplantation in liver transplant candidates with renal dysfunction: importance of creatinine levels, dialysis, and organ quality in survival. Kidney Int Rep 2016; 1: 221.

12. Yadav K, Serrano OK, Peterson KJ, et al. The liver recipient with acute renal dysfunction: a single institution evaluation of the simultaneous liver-kidney transplant candidate. Clin Transplant 2018; 32(1): e13148.

13. Locke JE, Warren DS, Singer AL, et al. Declining outcomes in simultaneous liver-kidney transplantation in the MELD era: ineffective usage of renal allografts. Transplantation 2008; 85: 935.

14. Luo X, Massie AB, Haugen CE, et al. Baseline and center-level variation in simultaneous liver-kidney listing in the United States. Transplantation 2018; 102: 609.

15. Cheng XS, Kim WR, Tan JC, Chertow GM, Goldhaber-Fiebert J. Comparing simultaneous liver-kidney transplant strategies: a modified cost-effectiveness analysis. Transplantation 2018; 102: e219.

16. Molnar MZ, Joglekar K, Jiang Y, et al. Association of pretransplant renal function with liver graft and patient survival after liver transplantation in patients with nonalcoholic steatohepatitis. Liver Transpl 2019; 25: 399.

17. Cheng XS, Stedman MR, Chertow GM, Kim WR, Tan JC. Utility in treating kidney failure in end-stage liver disease with simultaneous liver-kidney transplantation. Transplantation 2017; 101: 1111.

18. Byrne CD, Targher G. NAFLD as a driver of chronic kidney disease. J Hepatol 2020; 72: 785.

19. Povsic M, Wong OY, Perry R, Bottomley J. A structured literature review of the epidemiology and disease burden of non-alcoholic steatohepatitis (NASH). Adv Ther 2019; 36: 1574.

20. Reese PP, Veatch RM, Abt PL, Amaral S. Revisiting multi-organ transplantation in the setting of scarcity. Am J Transplant 2014; 14: 21.

21. Sharma P, Xu S, Schaubel DE, Sung RS, Magee JC. Propensity score-based survival benefit of simultaneous liver-kidney transplant over liver transplant alone for recipients with pretransplant renal dysfunction. Liver Transpl 2016; 22: 71.

22. Jay C, Pugh J, Halff G, Abrahamian G, Cigarroa F, Washburn K. Graft quality matters: survival after simultaneous liver-kidney transplant according to KDPI. Clin Transplant 2017; 31: e12933.

23. Choudhury RA, Reese PP, Goldberg DS, Bloom RD, Sawinski DL, Abt PL. A paired kidney analysis of multiorgan transplantation: implications for allograft survival. Transplantation 2017; 101: 368.

24. Gupta A, Francos G, Frank AM, Shah AP. KDPI score is a strong predictor of future graft function: moderate KDPI (35–85) and high KDPI (> 85) grafts yield similar graft function and survival. Clin Nephrol 2016; 86: 175.

25. Francoz C, Glotz D, Moreau R, Durand F. The evaluation of renal function and disease in patients with cirrhosis. J Hepatol 2010; 52: 605.