Abstract

Background

Accurate segmentation of the lung lobes on routine computed tomography (CT) images of patients with locally advanced lung cancer undergoing radiotherapy can help radiation oncologists implement lobar-level treatment planning, dose assessment, and efficacy prediction. We aimed to establish a novel 2D–3D hybrid convolutional neural network (CNN) that provides reliable lung lobe auto-segmentation in the clinical setting.

Methods

We retrospectively collected and evaluated thorax CT scans of 105 locally advanced non-small-cell lung cancer (NSCLC) patients treated at our institution from June 2019 to August 2020. The CT images were acquired with 5 mm slice thickness. Two CNNs were used for lung lobe segmentation, a 3D CNN for extracting 3D contextual information and a 2D CNN for extracting texture information. Contouring quality was evaluated using six quantitative metrics and visual evaluation was performed to assess the clinical acceptability.

Results

For the 35 cases in the test group, the Dice Similarity Coefficient (DSC) of all lung lobe contours exceeded 0.75, which met the pass criterion for the segmentation result. Our model achieved high performance, with mean DSCs of 0.9579, 0.9479, 0.9507, 0.9484, and 0.9003 for the left upper lobe (LUL), left lower lobe (LLL), right upper lobe (RUL), right lower lobe (RLL), and right middle lobe (RML), respectively. The proposed model yielded accuracy, sensitivity, and specificity (%) of 99.57, 98.23, and 99.65 for LUL; 99.60, 96.14, and 99.76 for LLL; 99.67, 96.13, and 99.81 for RUL; 99.72, 92.38, and 99.83 for RML; and 99.58, 96.03, and 99.78 for RLL, respectively. The clinician's visual assessment showed that 164/175 lobe contours met the requirements for clinical use; only 11 contours needed manual correction.

Conclusions

Our 2D–3D hybrid CNN model achieved accurate automatic segmentation of lung lobes on conventional slice-thickness CT of locally advanced lung cancer patients, and has good clinical practicability.

Keywords: Artificial intelligence, Computed tomography, Automatic segmentation, Lung lobe, Convolutional neural network

Background

Lung cancer is one of the most devastating tumors, with high incidence and mortality worldwide [1]. Radiotherapy is considered the main treatment option for patients with locally advanced lung cancer. Although radiotherapy improves locoregional control and survival in patients with lung cancer, radiation-induced lung injury (RILI) is a common treatment-related toxicity that can be fatal in severe cases. Because of the large tumor volume and extensive lymph node involvement, patients at a locally advanced stage risk inadequate dose delivery to the target owing to dose limitations arising from adjacent critical organs. Some of them may even lose the opportunity to receive curative treatment. Intensity-modulated radiotherapy produces accurate dose homogeneity around targets and less toxicity to normal organs by optimizing 3D dose distributions based on dose constraints prescribed for the target and normal tissues. In previous studies, most of the standard dosimetric constraints treat both lungs as a single functional unit [2–6]. Some recent studies [7–9] suggest that lobar-level treatment planning and radiation dose assessment may be an accessible way to improve treatment planning and reduce the incidence of radiation-induced lung injury. Radiation oncologists often need to manually delineate the tumor and normal tissues slice by slice on CT images acquired for radiotherapy planning. Manual segmentation of the lung lobes is time-consuming and poorly reproducible among observers. Therefore, a fully automatic method that produces reliable lung lobe segmentation in the clinical setting is needed.

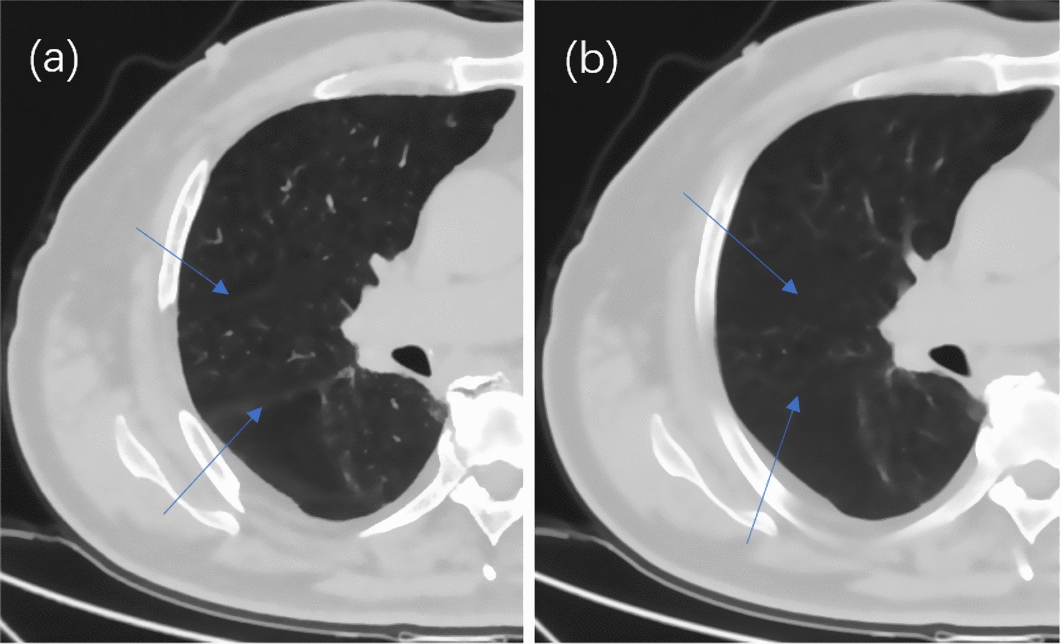

For locally advanced lung cancer patients undergoing radiotherapy, the treatment planning CT is usually acquired with 5 mm slice thickness. This adds to the difficulty of lung lobe auto-contouring, since slice thickness generally affects the tissue contours in the images. The lungs have five partitions called lobes. The left lung is divided into upper and lower lobes, and the right lung is divided into upper, middle, and lower lobes [10]. The boundaries between the lobes are the fissures. Thin-section CT is beneficial for recognizing the fissures. As shown in Fig. 1, the interlobar fissures often appear on a standard CT as a region without the intersection of the vascular tree or the bronchial tree, whereas they are displayed as lines on thin-section CT [11]. Most of the existing technologies rely on pulmonary blood vessel/airway segmentation or semi-automated methods [12]. However, the segmentation of lung airways and blood vessels is relatively complicated and not always reliable, especially in the presence of disease.

Fig. 1.

Axial view of right lung for one patient. Blue arrows point to the fissures in right lung. a 1 mm slice CT, b 5 mm slice CT

In recent years, various deep learning-based image segmentation algorithms built on 2D or 3D convolutional neural networks (CNNs) have been proposed. Some studies [13–16] reported the use of deep learning in lung lobe segmentation. George et al. [14] employed a 2D fully convolutional network combined with a 3D random walker refinement. This approach achieves high accuracy without reliance on prior segmentation of the airway or vessel. However, it relied on prior segmentation of the lobar boundaries, and the 3D random walker algorithm needs the initialization of seeds and weights. Harrison et al. [13] adapted the holistically nested network (HNN) with a progressive constraint on multi-scale pathways to overcome issues with HNN output ambiguity and the coarsening resolution of fully convolutional networks. Imran et al. [15] introduced a fast and fully automated lung lobe segmentation method based on a Progressive Dense V-Network. This method can segment lung lobes in one forward pass of the network. Park et al. [16] used a lung lobe segmentation method with a 3D U-Net architecture and achieved high accuracy of lobe segmentation on the chest CT scans of mild-to-moderate COPD patients. These studies provide an important basis for further research. Training a deep neural network that generalizes well to new data is a challenging problem. The reported automatic segmentation algorithms are usually developed and tested on carefully selected high-resolution public data sets, with slice thickness ranging from 0.50 to 1.50 mm. However, experiments on conventional imaging data show that algorithms that perform well on public data sets cannot produce accurate and reliable segmentation in clinical CT images of patients with severe diseases [17].

Unlike CT images for diagnostic purposes, radiotherapy planning CT, as the basis for treatment planning, needs to consider the efficiency of treatment and the accuracy of target delineation. There are usually standard slice thicknesses of CT scans for tumors of different volumes at different sites. When patients with locally advanced non-small-cell lung cancer are treated with radiation therapy, a 5-mm slice thickness is generally used to obtain CT images for radiation therapy planning. The increased slice thickness of clinical standard CT affects the image quality, which results in segmentation accuracy reduction. To the best of our knowledge, lung lobe auto-contouring by deep learning method in 5 mm slice CT of lung cancer patients has not been previously investigated.

For such conventional but imperfect clinical data, the network architecture needs to be modified to improve the model’s performance. A 2D network is inefficient and cannot capture inter-slice correlations. To learn volumetric information in CT images, the convolution kernels are extended from 2D to 3D, so that the network can take full advantage of the 3D context for better performance. However, a 3D CNN has more parameters than a 2D CNN and is computationally expensive to train, which limits the construction of very deep networks. In 5 mm slice CT images, the voxel spacing along the Z-axis is much larger than that in the XY plane. Directly performing 3D convolutions with isotropic kernels on these anisotropic volumetric images could be problematic.
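As a rough illustration of this anisotropy, the short Python sketch below computes the physical extent covered by an isotropic 3 × 3 × 3 kernel. The 1 mm in-plane spacing is a hypothetical value chosen for illustration; only the 5 mm slice thickness comes from the text.

```python
def kernel_extent_mm(kernel_size, spacing_mm):
    """Physical extent (mm) spanned by a kernel along each axis."""
    return tuple(k * s for k, s in zip(kernel_size, spacing_mm))

# Assumed voxel spacing (x, y, z): 1 mm in-plane is hypothetical,
# 5 mm is the slice thickness of the planning CT.
spacing = (1.0, 1.0, 5.0)
extent = kernel_extent_mm((3, 3, 3), spacing)
print(extent)  # (3.0, 3.0, 15.0)
```

Under these assumed spacings, the same kernel spans five times more tissue along Z than in-plane.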

In this study, we propose a 2D–3D hybrid segmentation network based on a convolutional neural network to automatically segment lung lobes from 5 mm slice-thickness computed tomography (CT) images. The proposed network combines the advantages of both 2D and 3D CNNs to improve model accuracy, and it achieved high performance on this challenging data set. Its purpose is to assist clinicians in their decision-making with lobar-level treatment planning, radiation dose assessment, and efficacy prediction, which may help to improve treatment outcomes and reduce toxicity for locally advanced lung cancer patients undergoing radiotherapy.

Results

The tumors of 70 patients in the training & validation set and 35 patients in the test set were distributed in 5 lobes. The tumor location information of all cases is shown in Table 1.

Table 1.

Tumor location information of all cases

| Location | Entire cohort | Training-validation cohort | Test cohort |
|---|---|---|---|
| LUL | 32 | 22 | 10 |
| LLL | 12 | 9 | 3 |
| RUL | 22 | 16 | 6 |
| RML | 14 | 4 | 10 |
| RLL | 25 | 19 | 6 |
| Total | 105 | 70 | 35 |

LUL left upper lobe, LLL left lower lobe, RUL right upper lobe, RML right middle lobe, RLL right lower lobe.

For the 35 patients in the test group, the DSC of the auto-contour of all lobes was over 0.75, which met the pass standard of segmentation result.

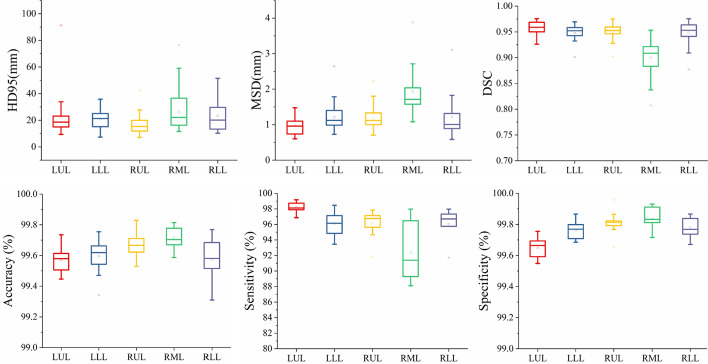

All the automatic segmentation profiles were divided into five groups according to the left upper lobe, left lower lobe, right upper lobe, right middle lobe, and right lower lobe. Six different quantitative indexes, HD95, MSD, DSC, Sensitivity, Specificity, and Accuracy were used for evaluation. Figure 2 shows that DSC results of all the other lobes were around 0.95 except for the mean DSC of the right middle lobe of 0.9003 ± 0.0331. Quantitative parameters for lung lobe contouring are shown in Table 2.

Fig. 2.

Boxplot of HD95, MSD, DSC, accuracy, sensitivity and specificity

Table 2.

Quantitative parameters for lung lobe segmentation

| Metric (N = 35) | LUL | LLL | RUL | RML | RLL |
|---|---|---|---|---|---|
| HD95 (mm) | 22.3584 ± 17.2096 | 20.9913 ± 7.1894 | 16.9986 ± 7.8134 | 26.553 ± 13.995 | 23.4818 ± 11.1656 |
| MSD (mm) | 0.9754 ± 0.2355 | 1.2095 ± 0.3613 | 1.1752 ± 0.2935 | 1.9358 ± 0.7122 | 1.2164 ± 0.5285 |
| DSC | 0.9579 ± 0.0125 | 0.9479 ± 0.0157 | 0.9507 ± 0.0133 | 0.9003 ± 0.0331 | 0.9484 ± 0.0225 |
| Accuracy (%) | 99.5715 ± 0.0928 | 99.5951 ± 0.1209 | 99.6668 ± 0.0892 | 99.7161 ± 0.0707 | 99.5753 ± 0.1421 |
| Sensitivity (%) | 98.2261 ± 0.6801 | 96.1441 ± 1.5422 | 96.1279 ± 1.7629 | 92.3785 ± 3.6881 | 96.0335 ± 2.0398 |
| Specificity (%) | 99.6506 ± 0.0652 | 99.7638 ± 0.0603 | 99.8104 ± 0.0756 | 99.8346 ± 0.0762 | 99.7793 ± 0.0638 |

LUL left upper lobe, LLL left lower lobe, RUL right upper lobe, RML right middle lobe, RLL right lower lobe.

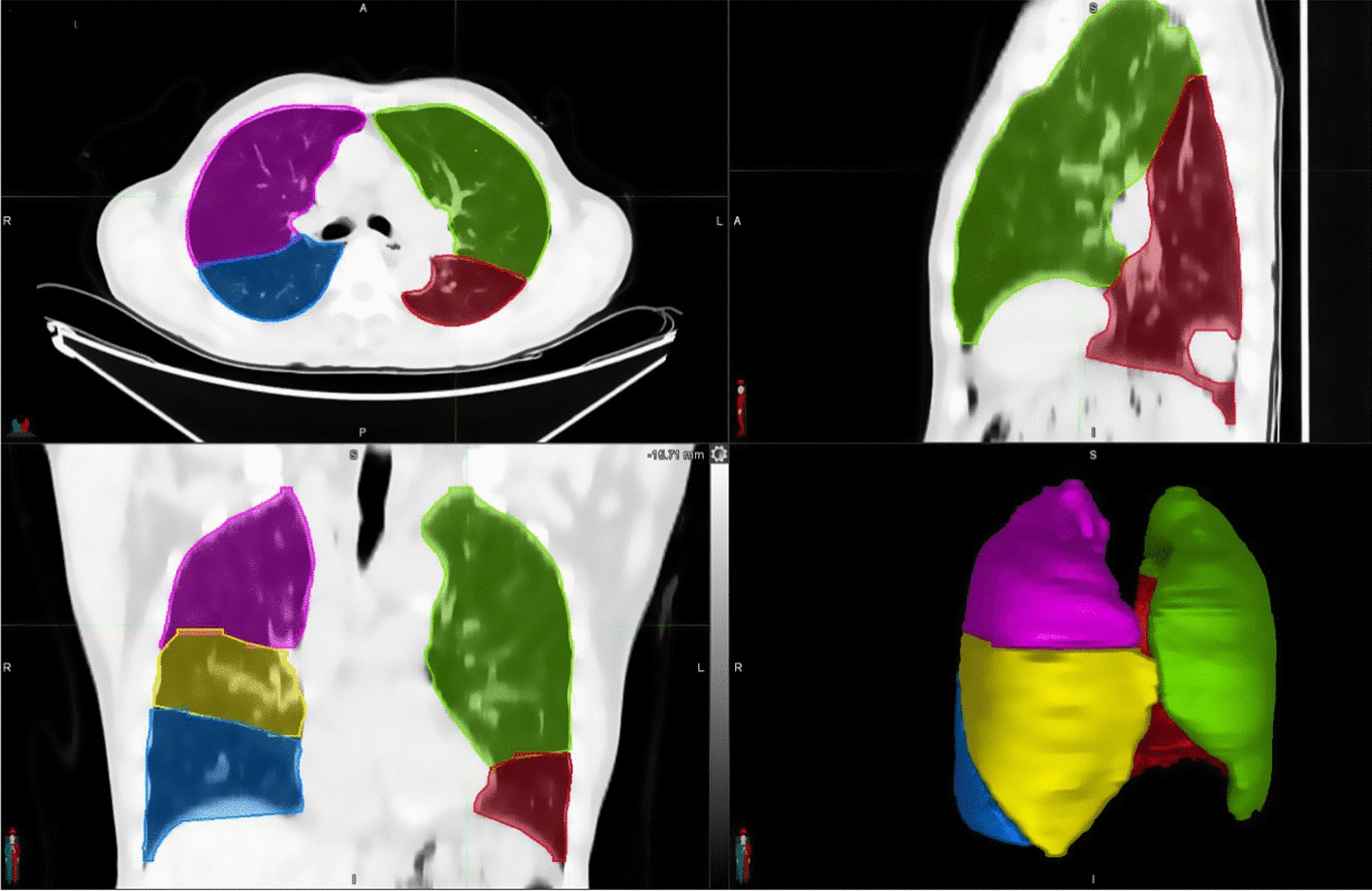

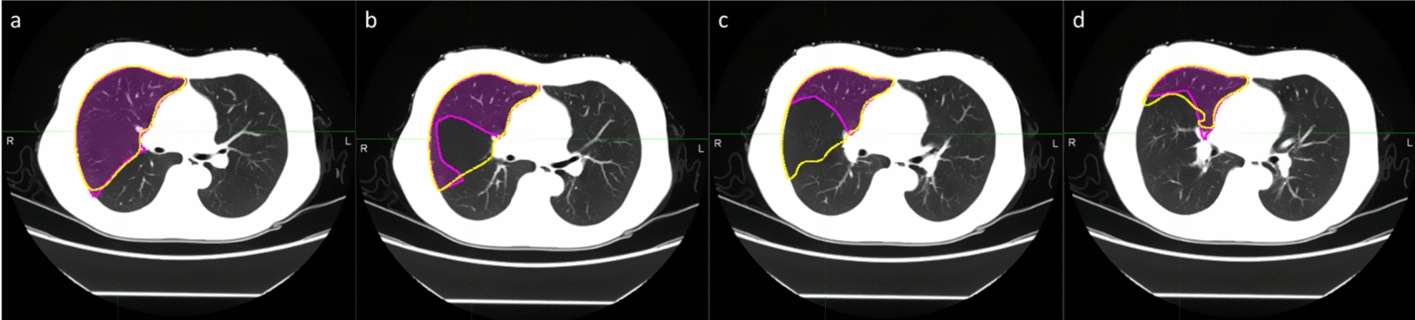

Figure 3 shows an example of lung lobe contours from our 2D–3D hybrid auto-segmentation network. A radiation oncologist evaluated the quality of the segmentation results (accepted as is / needs manual correction / failed) for the 35 cases in the test group in slice (axial, coronal, sagittal) and 3D views. Among the 175 lung lobe contours from 35 cases, 164 (93.7%) were accepted as is; only 11 (6.3%), distributed over a total of 28 slices, needed manual correction before clinical use; and 0 (0%) failed.

Fig. 3.

Example of lung lobe contours from our 2D–3D segmentation network

Figure 4 shows an example case in which the automatic segmentation and the manual contour of RUL differ. Figure 4a–d shows four consecutive slices in the same CT sequence. Because of the sudden appearance of fissures, the right upper lobe contours vary greatly between consecutive slices, and the automatic segmentation network failed to accurately identify these changes until the contour of the right middle lobe appears in slice (d).

Fig. 4.

Example of an auto-segmentation that requires manual correction

Discussion

In this study, we proposed a 2D–3D hybrid CNN model for lung lobe segmentation on conventional 5 mm slice-thickness CT images of locally advanced NSCLC patients. The segmentation results were assessed using quantitative indexes, and all contours passed the criterion of DSC ≥ 0.75. The segmentation results were also visually evaluated by a clinician. Most were clinically acceptable as is; only a few (11/175 contours) needed minor manual correction. Our model greatly alleviates the workload of the clinician in manually contouring the lung lobes and provides help for lobar-level contouring, treatment planning, dose distribution prediction, and evaluation on 5 mm slice-thickness CT images of locally advanced lung cancer patients.

Before locally advanced NSCLC patients undergo radiotherapy, planning CT images are obtained on the simulation CT, the target and organs at risk (OARs) are delineated on the planning CT, and the CT images and RT structures are then used for treatment planning. Since the treatment volume of these patients includes tumor and lymph nodes, and the irradiation volume is large, their planning CT images are usually acquired with a slice thickness of 5 mm. Compared with CT images of thinner slices, the partial volume effect (PVE) along the Z-axis reduces the resolution and contrast of the images and affects the thin front and back connecting lines in the images [18]. In addition, a tumor in the lung can damage the structure of the adjacent lung lobe, which increases the difficulty of automatic segmentation. Although some commercial automatic contouring software provides auto-segmentation of some normal tissues on radiotherapy planning CT images, as far as we know, no commercial software can automatically contour the lung lobes on this type of CT image well enough to meet the needs of clinical applications.

In recent years, some studies [13, 15, 16, 19] have reported the application of deep learning models based on convolutional neural networks in lung lobes segmentation, and many have obtained satisfactory results. Most of the models in these studies use public data sets for model training and verification. Taking into account the difficulties in the automatic segmentation of lung lobes in conventional radiotherapy planning CT images for patients with locally advanced NSCLC, these algorithms that perform well on public data sets cannot produce accurate and reliable segmentation when directly applied to clinical standard images, so we tried to establish a new automatic segmentation model that can meet this clinical need.

The 2D–3D hybrid CNN segmentation model we proposed uses a 3D CNN to learn 3D volumetric information [14] while taking advantage of a 2D CNN to extract edge information within slices [13], maximizing the potential of the algorithm on limited data and thereby improving segmentation accuracy. Our results on a test set containing 35 cases and 175 segmentation contours show that the DSCs of LUL, LLL, RUL, RML, and RLL are 0.9579 ± 0.0125, 0.9479 ± 0.0157, 0.9507 ± 0.0133, 0.9003 ± 0.0331, and 0.9484 ± 0.0225, respectively. The PDV-Net model reported by Imran et al. [15] was trained on chest CT data selected from the LIDC data set, in which the slice thickness of the scans ranged from 0.50 to 1.50 mm and the in-plane resolution varied between 0.53 and 0.88 mm. The DSC results of lung lobe segmentation for that model were 0.966 ± 0.014, 0.966 ± 0.037, 0.937 ± 0.031, 0.882 ± 0.057, and 0.956 ± 0.017. Park et al. [16] adopted a 3D U-Net model on chest CT scans of patients with mild-to-moderate chronic obstructive pulmonary disease (COPD), with slice thickness ranging from 0.625 mm to 0.80 mm, and reported results of 0.9556 ± 0.013, 0.9701 ± 0.010, 0.9697 ± 0.007, 0.9306 ± 0.030, and 0.9697 ± 0.007. Compared with these previous studies, although the slice thickness of our image data is as large as 5 mm, our hybrid segmentation model achieves satisfactory results on a clinical data set with less information in the images and large boundary variation between successive slices.

As reported in previous studies, the DSC value of RML was lower than those of the other lobes. The reasons may be as follows. First, in terms of volume, RML is much smaller than the upper and lower lobes, about 30–50% of the upper or lower lobe, so small errors in the auto-segmented volume can lead to large differences in the Dice value and reduce the overall performance. Second, segmentation errors generally occur at the starting slices where the RML or RLL appears, especially for CT images with a thickness of 5 mm. Because the fissures between RML and RUL or RLL are more difficult to recognize than on thin-section CT, where they are displayed as lines, the boundary of a middle or lower lobe in its starting slice is often not identified by the segmentation network (Fig. 4), which makes accurate segmentation of RML a challenge. In the proposed hybrid network, the advantages of 2D and 3D networks are combined to improve the segmentation accuracy of RML. In our results, the mean DSC of RML reached 0.90, which is comparable to the results of other models on CT images with 1–2 mm slice thickness. Among the 175 contours, 11 required manual correction by the physician. We analyzed the cases that differed significantly from the manual contours in the evaluation results. The main differences lie in consecutive slices where the contour of the lung lobes changes greatly in the superior–inferior direction, especially in the slices where the fissures between adjacent lung lobes appear. However, most of the differences were considered acceptable for clinical use after visual assessment.
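The volume argument can be made concrete with a small worked example. The sketch below assumes a prediction that misses a fixed number of voxels from the ground truth and adds none; the voxel counts are hypothetical and chosen only so that the smaller structure is 40% of the larger one.

```python
def dice_with_missed_voxels(true_voxels, missed_voxels):
    """DSC when the prediction equals the ground truth minus `missed_voxels`."""
    predicted = true_voxels - missed_voxels  # prediction is a subset of the truth
    overlap = predicted                      # so the overlap is the whole prediction
    return 2 * overlap / (predicted + true_voxels)

# Hypothetical voxel counts: the same absolute error on two lobe sizes.
large_lobe = dice_with_missed_voxels(1000, 100)
small_lobe = dice_with_missed_voxels(400, 100)
print(round(large_lobe, 3), round(small_lobe, 3))  # 0.947 0.857
```

The same 100-voxel error costs the smaller structure far more overlap score than the larger one.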

Our research has some limitations. Our main goal was to establish a hybrid model that achieves automatic lung lobe segmentation on clinical standard CT images of locally advanced NSCLC patients undergoing radiotherapy and to evaluate its clinical applicability; therefore, we did not perform much post-processing optimization, which may have affected some of our segmentation results. We will further optimize our model in future work to achieve more precise lung lobe segmentation.

Conclusion

The 2D–3D hybrid CNN segmentation model proposed in this study can achieve relatively accurate automatic segmentation of lung lobes on conventional chest CT of locally advanced lung cancer patients, and results that meet the needs of clinical applications can be obtained with minimal manual intervention. Our model reduces the impact of the heavy, time-consuming manual segmentation workload on the clinical workflow and provides a basis for the implementation of lobar-level contouring, treatment planning, dose evaluation, and treatment outcome prediction for locally advanced lung cancer patients.

Methods

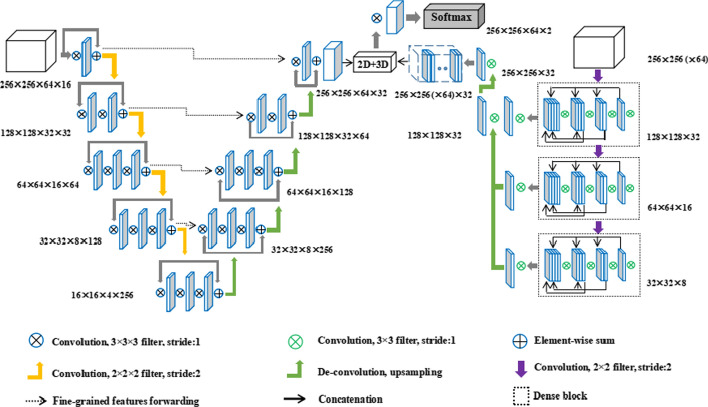

2D–3D hybrid CNN

Our model is an end-to-end trainable hybrid convolutional neural network, which combines a 3D CNN to learn the long-range 3D contextual information of CT images, and a 2D CNN to capture intra-slice semantic information. The complete network structure diagram is shown in Fig. 5.

Fig. 5.

Schematic diagram of network structure

2D CNN adopts dense connection to ensure maximum information flow, which could capture more details in each layer for edge detection. The gradually decreasing number of channels in the encoding path increases the weight of the low-level information, which is beneficial to the network to focus on extracting the edge information.

2D features are effectively fused with 3D features through a hybrid features fusion module, which combines the complementary information of the two networks and integrates the information on different spatial scales. It solves the problem that 2D CNN cannot extract the volume information of CT image context, and alleviates the problem that 3D CNN is not sufficient in extracting intra-layer features. The Dice Loss function used by the network effectively alleviates the common problem of category imbalance in segmentation.

3D CNN

We employ the V-Net model proposed by Milletari [20] to extract the context information of lung lobes on CT images. The training volumes were first normalized and then rescaled to 512 × 512 × 64. The model has an encoder–decoder structure: the encoder extracts features and reduces their resolution at the end of each stage, and the decoder gradually restores the low-resolution features generated by the encoder to the same resolution as the input image through transposed convolution. The final output feature map of the 3D CNN was 256 × 256 × 64 × 32. Down-sampling is performed by convolution with a kernel size of 2 × 2 × 2 and stride 2, so the resolution of the feature map is halved in all three directions (XYZ) with each down-sampling. The parametric rectified linear unit (PReLU) activation function is used in the network.
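The down-sampling step described above can be sketched in NumPy (used here instead of PyTorch purely to keep the illustration dependency-light). Because the kernel size equals the stride, the convolution acts on non-overlapping 2 × 2 × 2 blocks. The single-channel setting, the averaging kernel, the fixed PReLU slope of 0.25, the three stages, and the small input size are all assumptions for illustration, not the configuration of the trained network.

```python
import numpy as np

def strided_conv_2x2x2(volume, kernel):
    """Single-channel 3D convolution with a 2x2x2 kernel and stride 2."""
    d, h, w = volume.shape
    if d % 2 or h % 2 or w % 2:
        raise ValueError("each dimension must be even")
    # Split the volume into non-overlapping 2x2x2 blocks.
    blocks = volume.reshape(d // 2, 2, h // 2, 2, w // 2, 2)
    # Weighted sum of every block with the kernel.
    return np.einsum("aibjck,ijk->abc", blocks, kernel)

def prelu(x, slope=0.25):
    """PReLU with a fixed slope (the slope is a learned parameter in practice)."""
    return np.where(x > 0, x, slope * x)

kernel = np.full((2, 2, 2), 1.0 / 8.0)  # assumed averaging kernel
features = np.ones((16, 16, 16))         # small stand-in volume (Z, Y, X)
for _ in range(3):                       # assumed number of down-sampling stages
    features = prelu(strided_conv_2x2x2(features, kernel))
print(features.shape)  # (2, 2, 2)
```

Each pass halves every axis, which is the behaviour the text attributes to the 2 × 2 × 2, stride-2 convolution.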

2D CNN

The 2D CNN proposed in this paper has an encoding path similar to the V-Net structure. Three dense blocks are set on the encoding path to extract features. The dense connection scheme promotes feature propagation and enhances feature reuse, so that the 2D CNN can fully exploit the 2D texture information. We trained the network with axial slices from all the training volumes, each sized 256 × 256 and normalized to values between 0 and 1. To avoid over-fitting to the background, only the axial slices in which at least one lung lobe is present were used. Unlike V-Net, both the size of the feature map and the number of channels are reduced after every down-sampling, which helps the network concentrate on extracting the edge information of lung lobes on CT images. The decoding path eliminates most convolution processing for low-resolution feature maps and directly restores the resolution of the feature maps through transposed convolution, which improves the operating efficiency of the network.
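The slice selection and intensity normalization described above might look like the following NumPy sketch. The function names are hypothetical, and min-max scaling over the selected slices is an assumption; the text only states that values are normalized to lie between 0 and 1.

```python
import numpy as np

def select_lobe_slices(volume, labels):
    """Keep only axial slices whose label map contains at least one lobe voxel."""
    has_lobe = labels.reshape(labels.shape[0], -1).any(axis=1)
    return volume[has_lobe], labels[has_lobe]

def min_max_normalize(slices):
    """Scale intensities to [0, 1] (assumed min-max scheme)."""
    slices = slices.astype(np.float32)
    lo, hi = slices.min(), slices.max()
    if hi == lo:
        return np.zeros_like(slices)
    return (slices - lo) / (hi - lo)

# Toy volume with 3 axial slices of 2 x 2 pixels; slice 0 has no lobe label.
volume = np.arange(12).reshape(3, 2, 2) * 100 - 1000
labels = np.zeros((3, 2, 2), dtype=np.int64)
labels[1, 0, 0] = 1   # a lobe voxel in slice 1
labels[2, 1, 1] = 4   # a lobe voxel in slice 2

kept, kept_labels = select_lobe_slices(volume, labels)
normalized = min_max_normalize(kept)
print(kept.shape, normalized.min(), normalized.max())
```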

2D–3D fusion

Before the final segmentation map is obtained, the features extracted by the 2D CNN and those extracted by the 3D CNN need to be fused, so the feature maps of the two networks must be consistent in size and dimension. The feature map extracted by the 2D CNN becomes 256 × 256 × 32 after up-sampling, and the outputs of the 2D CNN for 64 consecutive CT slices are stacked to generate a tensor that can be used for 3D convolution. The size and channel number of this tensor are 256 × 256 × 64 × 32, the same as those of the 3D CNN output. The two-dimensional and three-dimensional features are then concatenated to form hybrid features. The hybrid features are refined through a 3 × 3 × 3 convolution kernel to generate feature maps of two channels. Finally, a softmax layer is applied to generate the final segmentation.
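A minimal NumPy sketch of the fusion step follows. It assumes a channel-first layout (C, D, H, W), uses a reduced 4 × 4 in-plane size to keep the example small, and replaces the 3 × 3 × 3 refinement convolution with a random per-voxel linear projection as a stand-in, so it illustrates only the stacking, concatenation, and softmax.

```python
import numpy as np

def fuse_features(feat2d_slices, feat3d):
    """Stack per-slice 2D features along depth and concatenate with 3D features."""
    feat2d = np.stack(feat2d_slices, axis=1)          # (C, D, H, W)
    if feat2d.shape != feat3d.shape:
        raise ValueError("2D and 3D feature tensors must match in shape")
    return np.concatenate([feat2d, feat3d], axis=0)   # (2C, D, H, W)

def softmax(logits, axis=0):
    """Numerically stable softmax along the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
channels, depth, height, width = 32, 64, 4, 4          # in-plane size reduced for the demo
slices_2d = [rng.standard_normal((channels, height, width)) for _ in range(depth)]
features_3d = rng.standard_normal((channels, depth, height, width))

hybrid = fuse_features(slices_2d, features_3d)
# Stand-in for the 3x3x3 refinement convolution: a per-voxel linear projection.
weights = rng.standard_normal((2, hybrid.shape[0]))
logits = np.einsum("oc,cdhw->odhw", weights, hybrid)
probs = softmax(logits, axis=0)
print(hybrid.shape, probs.shape)  # (64, 64, 4, 4) (2, 64, 4, 4)
```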

Loss function: To capture the local and global relationship between different output pixel predictions of this hybrid network, the context information based on the Dice coefficient was used to correct the lobe shape information in both networks:

where $x_i$ is the predicted probability for voxel $i$ and $y_i$ is the corresponding binary ground-truth label.
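For illustration, a soft Dice loss over predicted probabilities and binary labels can be sketched as below. The squared-sum denominator follows the formulation commonly associated with V-Net and is an assumption here, as are the function name and the smoothing term `eps`; the exact expression used in this study may differ.

```python
import numpy as np

def soft_dice_loss(probs, target, eps=1e-6):
    """1 - soft Dice between predicted probabilities and binary labels.

    Assumed form: 1 - 2*sum(x*y) / (sum(x^2) + sum(y^2)), with eps for stability.
    """
    probs = np.asarray(probs, dtype=np.float64).ravel()
    target = np.asarray(target, dtype=np.float64).ravel()
    intersection = np.sum(probs * target)
    denominator = np.sum(probs ** 2) + np.sum(target ** 2)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

perfect = soft_dice_loss([1.0, 0.0, 1.0], [1, 0, 1])   # prediction equals the labels
disjoint = soft_dice_loss([1.0, 0.0], [0, 1])          # no overlap at all
print(perfect, disjoint)
```

Because the loss depends on the overlap ratio rather than on per-voxel counts, a small foreground class is not swamped by the background, which is why Dice-type losses are often used for imbalanced segmentation.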

Data set

A total of 105 patients with pathologically confirmed stage IIIA (N2) locally advanced non-small-cell lung cancer were retrospectively selected from the case database from June 2019 to August 2020. CT was acquired with the patient supine on a standard curved diagnostic CT couch, with the arms raised above the head. The reconstructed images have a resolution of 512 × 512 and a slice thickness of 5 mm and were transferred to MIM 7.0.4 (MIM vista Corp, Cleveland, OH, USA). One experienced radiation oncologist contoured the five lung lobes on each CT scan, and the contours were saved in DICOM RT Structure files. A total of 350 three-dimensional lung lobe contours, 70 per lobe, were used for training and validation after image data augmentation, to ensure the amount of data needed for model training.

Implementation

Each CT scan was resampled to the same resolution (1 × 1 × 3 mm), and the slices were cropped to 256 × 256. For each CT sequence, 64 × H × W 3D images were synthesized from 64 consecutive slices with a stride of 1. The 105 patients were randomly divided into a training group (50 cases) to optimize the network, a validation group (20 cases) to select the best-performing model, and a test group (35 cases) to test and evaluate the model after complete training. The total data set includes 7090 CT slices: 4705 slices for the training-validation cohort and 2385 slices for the test cohort. Data augmentation was applied to the input to avoid overfitting and improve the generalization capability of the deep neural network. Random translation, scaling, rotation, and other augmentation techniques were used to increase the sample size.
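The windowing and cohort split described above can be sketched with the Python standard library. The function names, the fixed random seed, and the choice to return no window for scans with fewer than 64 slices are assumptions made for illustration.

```python
import random

def sliding_windows(num_slices, depth=64, stride=1):
    """(start, stop) slice ranges of `depth` consecutive slices.

    Returns an empty list when the scan has fewer than `depth` slices.
    """
    return [(s, s + depth) for s in range(0, num_slices - depth + 1, stride)]

def split_patients(patient_ids, n_train=50, n_val=20, n_test=35, seed=0):
    """Randomly split patients into training, validation, and test groups."""
    ids = list(patient_ids)
    if len(ids) != n_train + n_val + n_test:
        raise ValueError("group sizes must add up to the number of patients")
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]

windows = sliding_windows(70)            # a hypothetical 70-slice scan
train, val, test = split_patients(range(105))
print(len(windows), windows[0], windows[-1])  # 7 (0, 64) (6, 70)
print(len(train), len(val), len(test))        # 50 20 35
```

Splitting by patient rather than by slice keeps all windows of one scan in the same group.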

The proposed CNN was trained on two NVIDIA 1080Ti GPUs, each with 11 GB of memory, for 1000 epochs. The code was written in Python using the PyTorch library. Considering the GPU memory limitation, the batch size was set to 1. For optimization, we used the Adam optimizer with a learning rate of 0.001 and a weight decay of 10⁻⁸.

Evaluation metrics

Multiple metrics were used to quantitatively evaluate the accuracy of the proposed segmentation method. The manual contours delineated by a single expert (RO) were considered the ground truth in this study. The Dice Similarity Coefficient (DSC) [21, 22] is the main evaluation metric; it quantifies the spatial overlap between the ground truth and the automated contours and is defined as

$$\mathrm{DSC}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|}$$

where X is the set of segmentation results and Y is the set of ground-truth delineation. The value of Dice varies from 0 to 1, and a higher value usually implies a better match between the two contours. A Dice score of 0.75 was considered an acceptable match in this study.
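The overlap metric can be computed from two binary masks as in the NumPy sketch below. The function name and the convention of returning 1.0 when both masks are empty are assumptions; the toy masks are hypothetical.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """DSC = 2|X ∩ Y| / (|X| + |Y|) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denominator = pred.sum() + truth.sum()
    if denominator == 0:
        return 1.0  # assumed convention: two empty masks match perfectly
    return 2.0 * np.logical_and(pred, truth).sum() / denominator

pred_mask = [[1, 1], [0, 0]]
truth_mask = [[1, 0], [0, 0]]
dsc = dice_coefficient(pred_mask, truth_mask)   # 2 * 1 / (2 + 1)
print(round(dsc, 4), dsc >= 0.75)  # 0.6667 False
```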

Hausdorff distance (HD) [22] is a measure of the surface distance between two point sets, defined as

$$\mathrm{HD}(X, Y) = \max\left\{ \max_{x \in X} \min_{y \in Y} d(x, y),\; \max_{y \in Y} \min_{x \in X} d(x, y) \right\}$$

where X and Y denote the boundary-surface sets of the automated contours and the ground truth, and d(x, y) is the Euclidean distance between voxels x and y. The Hausdorff distance is the maximum distance over all surface voxels and is therefore sensitive to small outlying objects. The 95th-percentile Hausdorff distance (HD95) describes the largest surface-to-surface separation among the 95th percentile of surface points of the automated contours and the ground truth, and it was employed to exclude the outliers. A smaller value of HD95 usually implies a better result.
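A brute-force NumPy sketch of these surface distances is given below. It takes surface points as coordinate arrays in millimetres and, as an assumption, defines HD95 as the larger of the two directed 95th-percentile distances; other conventions exist, and the one used in this study is not specified. Surface extraction from masks is omitted.

```python
import numpy as np

def directed_distances(a, b):
    """For every point in `a`, the Euclidean distance to its nearest point in `b`."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)

def hausdorff(a, b, percentile=100.0):
    """Symmetric Hausdorff distance; percentile=95 gives the assumed HD95 variant."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    d_ab = np.percentile(directed_distances(a, b), percentile)
    d_ba = np.percentile(directed_distances(b, a), percentile)
    return float(max(d_ab, d_ba))

# Hypothetical surface points (mm); the last point of `truth` is an outlier.
auto = [[0, 0, 0], [1, 0, 0]]
truth = [[0, 0, 0], [1, 0, 0], [1, 0, 10]]
hd = hausdorff(auto, truth)
hd95 = hausdorff(auto, truth, percentile=95.0)
print(hd, hd95)  # the percentile version is less affected by the outlier
```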

Mean surface distance (MSD) [23] was defined as follows:
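One commonly used symmetric form of the mean surface distance, written with the same symbols as the Hausdorff distance (X and Y the boundary-surface sets, d(x, y) the Euclidean distance), is given below. It is stated here as an assumption for orientation; the exact expression used in this study may differ, for example by averaging the two directed means instead of pooling the points.

$$\mathrm{MSD}(X, Y) = \frac{1}{|X| + |Y|}\left( \sum_{x \in X} \min_{y \in Y} d(x, y) + \sum_{y \in Y} \min_{x \in X} d(x, y) \right)$$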

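Sensitivity, Specificity, and Accuracy [24] were also used as performance assessment parameters:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}, \qquad \mathrm{Specificity} = \frac{TN}{TN + FP}, \qquad \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN denote true positive, true negative, false positive, and false negative, respectively.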
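These voxel-wise rates can be computed from two binary masks as in the NumPy sketch below. The function name and toy masks are hypothetical, values are returned as fractions rather than percentages, and the sketch assumes that both foreground and background voxels are present in the ground truth.

```python
import numpy as np

def confusion_metrics(pred, truth):
    """Sensitivity, specificity, and accuracy of a binary mask against the truth."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)      # lobe voxels correctly labelled
    tn = np.sum(~pred & ~truth)    # background voxels correctly labelled
    fp = np.sum(pred & ~truth)     # background labelled as lobe
    fn = np.sum(~pred & truth)     # lobe voxels missed
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return float(sensitivity), float(specificity), float(accuracy)

truth_mask = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred_mask = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]   # one miss, one false alarm
sens, spec, acc = confusion_metrics(pred_mask, truth_mask)
print(sens, round(spec, 4), acc)  # 0.75 0.8333 0.8
```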

Statistical analysis

All statistical comparisons were performed using SPSS software (version 22.0; IBM, Inc., Armonk, NY, USA). A value of P < 0.05 indicated statistical significance.

Acknowledgements

This study was financially supported by the Nurture projects for basic research of Shanghai Chest Hospital (No.2019YNJCM05). Guarantor: The scientific guarantor of this publication is Zhiyong Xu, Dr.

Abbreviations

- 2D, 3D: Two-dimensional, three-dimensional
- CNN: Convolutional neural network
- CT: Computed tomography
- NSCLC: Non-small-cell lung cancer
- DSC: Dice Similarity Coefficient
- LUL: Left upper lobe
- LLL: Left lower lobe
- RUL: Right upper lobe
- RML: Right middle lobe
- RLL: Right lower lobe
- RILI: Radiation-induced lung injury
- HD: Hausdorff distance
- HD95: 95th-percentile Hausdorff distance
- MSD: Mean surface distance
- PVE: Partial volume effect
- COPD: Chronic obstructive pulmonary disease

Authors’ contributions

HG was involved in conceptualization, data curation, data analysis, investigation, methodology, and writing; WG was involved in conceptualization, data curation, data analysis, and methodology; CZ was involved in conceptualization, data analysis and methodology; AF, HW, YH, HC, YS and YD were involved in methodology, resources, supervision. ZX was involved in conceptualization, data analysis, methodology, project administration, supervision, and writing—review/editing.

Funding

Sponsored by the Nurture projects for basic research of Shanghai Chest Hospital (No.2019YNJCM05).

Availability of data and materials

The data sets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Siegel RL, Miller KD, Jemal A. Cancer statistics, 2020. CA Cancer J Clin United States. 2020;70:7–30. doi: 10.3322/caac.21590. [DOI] [PubMed] [Google Scholar]

- 2.Hernando ML, Marks LB, Bentel GC, Zhou S-M, Hollis D, Das SK, et al. Radiation-induced pulmonary toxicity: a dose-volume histogram analysis in 201 patients with lung cancer. Int J Radiat Oncol. 2001;51:650–659. doi: 10.1016/S0360-3016(01)01685-6. [DOI] [PubMed] [Google Scholar]

- 3.Rodrigues G, Lock M, D’Souza D, Yu E, van Dyk J. Prediction of radiation pneumonitis by dose–volume histogram parameters in lung cancer—a systematic review. Radiother Oncol. 2004;71:127–138. doi: 10.1016/j.radonc.2004.02.015. [DOI] [PubMed] [Google Scholar]

- 4.Barriger RB, Fakiris AJ, Hanna N, Yu M, Mantravadi P, McGarry RC. Dose-volume analysis of radiation pneumonitis in non–small-cell lung cancer patients treated with concurrent cisplatinum and etoposide with or without consolidation docetaxel. Int J Radiat Oncol Biol Phys. 2010;78:1381–1386. doi: 10.1016/j.ijrobp.2009.09.030. [DOI] [PubMed] [Google Scholar]

- 5.Barriger RB, Forquer JA, Brabham JG, Andolino DL, Shapiro RH, Henderson MA, et al. A dose-volume analysis of radiation pneumonitis in non-small cell lung cancer patients treated with stereotactic body radiation therapy. Int J Radiat Oncol. 2012;82:457–462. doi: 10.1016/j.ijrobp.2010.08.056. [DOI] [PubMed] [Google Scholar]

- 6.Tang X, Li Y, Tian X, Zhou X, Wang Y, Huang M, et al. Predicting severe acute radiation pneumonitis in patients with non-small cell lung cancer receiving postoperative radiotherapy: development and internal validation of a nomogram based on the clinical and dose–volume histogram parameters. Radiother Oncol. 2019;132:197–203. doi: 10.1016/j.radonc.2018.10.016. [DOI] [PubMed] [Google Scholar]

- 7.Bailey DL, Farrow CE, Lau EM. V/Q SPECT—normal values for lobar function and comparison with CT volumes. Non-PE Uses Lung Scan. 2019;49:58–61. doi: 10.1053/j.semnuclmed.2018.10.008. [DOI] [PubMed] [Google Scholar]

- 8.Ramella S, Trodella L, Mineo TC, Pompeo E, Stimato G, Gaudino D, et al. Adding ipsilateral V20 and V30 to conventional dosimetric constraints predicts radiation pneumonitis in stage IIIA–B NSCLC treated with combined-modality therapy. Int J Radiat Oncol Biol Phys. 2010;76:110–115. doi: 10.1016/j.ijrobp.2009.01.036. [DOI] [PubMed] [Google Scholar]

- 9.Zhu J, Simon A, Haigron P, Lafond C, Acosta O, Shu H, et al. The benefit of using bladder sub-volume equivalent uniform dose constraints in prostate intensity-modulated radiotherapy planning. OncoTargets Ther. 2016;9:7537–7544. doi: 10.2147/OTT.S116508. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Xu Y, Gan F, Xia C, Wang Z, Zhao K, Li C, et al. Discovery of lung surface intersegmental landmarks by three-dimensional reconstruction and morphological measurement. Transl Lung Cancer Res. 2019;8:1061–1072. doi: 10.21037/tlcr.2019.12.21.

- 11.Hayashi K, Aziz A, Ashizawa K, Hayashi H, Nagaoki K, Otsuji H. Radiographic and CT appearances of the major fissures. Radiographics. 2001;21:861–874. doi: 10.1148/radiographics.21.4.g01jl24861.

- 12.Bragman FJS, McClelland JR, Jacob J, Hurst JR, Hawkes DJ. Pulmonary lobe segmentation with probabilistic segmentation of the fissures and a groupwise fissure prior. IEEE Trans Med Imaging. 2017;36:1650–1663. doi: 10.1109/TMI.2017.2688377.

- 13.Harrison AP, Xu Z, George K, Lu L, Summers RM, Mollura DJ. Progressive and multi-path holistically nested neural networks for pathological lung segmentation from CT images. In: Descoteaux M, Maier-Hein L, Franz A, Jannin P, Collins DL, Duchesne S, editors. Medical Image Computing and Computer Assisted Intervention—MICCAI 2017. Cham: Springer International Publishing; 2017. pp. 621–629.

- 14.George K, Harrison AP, Jin D, Xu Z, Mollura DJ, et al. Pathological pulmonary lobe segmentation from CT images using progressive holistically nested neural networks and random walker. In: Cardoso MJ, Arbel T, Carneiro G, Syeda-Mahmood T, Tavares JMRS, Moradi M, et al., editors. Deep learning in medical image analysis and multimodal learning for clinical decision support. Cham: Springer International Publishing; 2017. pp. 195–203.

- 15.Imran A-A-Z, Hatamizadeh A, Ananth SP, Ding X, Terzopoulos D, Tajbakhsh N, et al. Automatic segmentation of pulmonary lobes using a progressive dense V-network. In: Stoyanov D, Taylor Z, Carneiro G, Syeda-Mahmood T, Martel A, Maier-Hein L, et al., editors. Deep learning in medical image analysis and multimodal learning for clinical decision support. Cham: Springer International Publishing; 2018. pp. 282–290.

- 16.Park J, Yun J, Kim N, Park B, Cho Y, Park HJ, et al. Fully automated lung lobe segmentation in volumetric chest CT with 3D U-Net: validation with intra- and extra-datasets. J Digit Imaging. 2020;33:221–230. doi: 10.1007/s10278-019-00223-1.

- 17.Lenchik L, Heacock L, Weaver AA, Boutin RD, Cook TS, Itri J, et al. Automated segmentation of tissues using CT and MRI: a systematic review. Acad Radiol. 2019;26:1695–1706. doi: 10.1016/j.acra.2019.07.006.

- 18.Monnin P, Sfameni N, Gianoli A, Ding S. Optimal slice thickness for object detection with longitudinal partial volume effects in computed tomography. J Appl Clin Med Phys. 2017;18:251–259. doi: 10.1002/acm2.12152.

- 19.Imran A-A-Z, Hatamizadeh A, Ananth SP, Ding X, Tajbakhsh N, Terzopoulos D. Fast and automatic segmentation of pulmonary lobes from chest CT using a progressive dense V-network. Comput Methods Biomech Biomed Eng Imaging Vis. 2020;8:509–518. doi: 10.1080/21681163.2019.1672210.

- 20.Milletari F, Navab N, Ahmadi S-A. V-Net: fully convolutional neural networks for volumetric medical image segmentation. In: 2016 Fourth International Conference on 3D Vision (3DV). Stanford, CA, USA: IEEE; 2016 [cited 2020 Oct 29]. pp. 565–571. http://ieeexplore.ieee.org/document/7785132/

- 21.Taha AA, Hanbury A. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Med Imaging. 2015;15:29. doi: 10.1186/s12880-015-0068-x.

- 22.Choi MS, Choi BS, Chung SY, Kim N, Chun J, Kim YB, et al. Clinical evaluation of atlas- and deep learning-based automatic segmentation of multiple organs and clinical target volumes for breast cancer. Radiother Oncol. 2020;153:139–145. doi: 10.1016/j.radonc.2020.09.045.

- 23.Vrtovec T, Močnik D, Strojan P, Pernuš F, Ibragimov B. Auto-segmentation of organs at risk for head and neck radiotherapy planning: from atlas-based to deep learning methods. Med Phys. 2020;47:e929–e950. doi: 10.1002/mp.14320.

- 24.Akter O, Moni MA, Islam MM, Quinn JMW, Kamal AHM. Lung cancer detection using enhanced segmentation accuracy. Appl Intell. 2021;51:3391–3404. doi: 10.1007/s10489-020-02046-y.

Associated Data

Data Availability Statement

The data sets generated and/or analysed during the current study are available from the corresponding author on reasonable request.