Abstract

PURPOSE

Accurate recording of diagnosis (DX) data in electronic health records (EHRs) is important for clinical practice and learning health care. Previous studies show statistically stable patterns of data entry in EHRs that contribute to inaccurate DX, likely because of a lack of data entry support. We conducted qualitative research to characterize the preferences of oncological care providers regarding cancer DX data entry in EHRs during clinical practice.

METHODS

We conducted semistructured interviews and focus groups to uncover common themes on DX data entry preferences and barriers to accurate DX recording. Then, we developed a survey questionnaire sent to a cohort of oncologists to verify the generalizability of our initial findings. We constrained our participants to a single specialty and institution to ensure similar clinical backgrounds and clinical experience with a single EHR system.

RESULTS

A total of 12 neuro-oncologists and thoracic oncologists were involved in the interviews and focus groups. The survey developed from these two initial phases was distributed to 19 clinicians, yielding a 94.7% response rate. Clinicians reported similar user interface experiences, barriers, and dissatisfaction with current DX entry systems, including repetitive entry operations, difficulty finding specific DX options, time-consuming interactions, and the need for workarounds to maintain efficiency. The survey revealed inefficient DX search interfaces and challenging entry processes as core barriers.

CONCLUSION

Current interfaces seem to force oncologists to trade off specific DX data entry against time efficiency, and oncologists feel hindered by the burdensome and repetitive nature of EHR data entry. Oncologists' top concern in adopting data entry support interventions is that such interventions provide significant time savings and increase workflow efficiency. Future interventions should account for time efficiency, beyond ensuring data entry effectiveness.

INTRODUCTION

The adoption of electronic health records (EHRs) into clinical practice has not only provided a wealth of opportunities to improve patient care1,2 but also had unintended consequences (eg, increased clinical process complexity3,4 and clinicians’ administrative burden5,6). Still, secondary analysis of data from EHRs is fundamental to building learning health systems1,2,7 and care quality–improving research practices (eg, precision genomic medicine).8-10 Accurate structured diagnosis (DX) data are key to supporting secondary clinical data uses11 via accurate patient cohort selection.12,13 However, DX data have been fraught with data quality limitations14,15 due, in part, to nonspecific DX coding system definitions and their inconsistent implementation across EHR systems.11,16-18 More recently, these data quality issues have been linked to limitations in EHR usability19,20 and the burdensome task of selecting a precise DX code.21,22 These findings align with the increased charting burden placed on clinicians by complex and repetitive EHR interactions.3-6,21,23 DX data entry, although relatively simple compared with other data entry tasks, is ever-present in clinical workflows because of clinical billing requirements24,25 and may contribute significantly to clinicians' perceived EHR data entry burden.21,26,27

CONTEXT

Key Objective

To understand and characterize the barriers faced by oncology care providers when attempting to enter clinically accurate structured diagnosis (DX) data in electronic health records (EHRs) and uncover their preferences in receiving data entry support within EHR interfaces to support this repetitive task.

Knowledge Generated

Current EHR user interfaces seem to force oncologists to compromise between specific DX data entry and time efficiency. The core barrier seems to be the burdensome and repetitive nature of EHR data entry. Our participants preferred entry support via an unobtrusive list of DX suggestions intelligently derived from existing EHR DX data.

Relevance

Oncologists' top concern for data entry improvement and intervention adoption was obtaining significant time savings while improving efficiency. Current EHR designs seem to affect both oncologists, during data entry, and patients, when existing data are reused to support their care.

Although DX data entry challenges affect most of health care, their complexity is compounded in oncology charts in at least two ways. First, the DX codes in the International Statistical Classification of Diseases and Related Health Problems, 10th Revision are designed for general medical use rather than for oncology DX classification (eg, they do not encode histology) and provide limited DX descriptions.28 This can bias clinicians toward charting in clinical notes rather than in structured data entry fields, which complicates data retrieval while also increasing the burden on other clinicians, who are forced to search for information in unstructured text.14,29 Second, EHR systems often fail to support precise structured DX recording consistently across EHR data entry workflows and EHR users.20,30 This lack of system support is likely to make the DX logging process burdensome for oncologists.19,21,31

These findings suggest a need for EHR interventions to support precise and consistent structured DX data recording, which could reduce clinician burden while improving clinical data quality.32,33 However, a system capable of suggesting accurate and precise DX codes is unlikely, on its own, to guarantee successful adoption into clinical practice or a reduction in clinician burden. Many EHR-based interventions (eg, drug-drug interaction alerts, best practice advisories, and automatically triggered screening forms) support EHR tasks but fail to reduce burden and instead overload clinicians with information, leading to the well-known phenomenon of alert fatigue.19,34-36 It is challenging to define an ideal mode of delivering support information, but a necessary first step is to define oncologists' preferences for DX data entry support. This paper reports our initial, exploratory findings in characterizing oncology care providers' data entry preferences. This work informs the development of EHR data entry support interventions aimed at reducing clinician EHR burden in oncology care. Our findings underscore the impact of EHR interface design37 on clinician burden,6 resulting data quality,20,30 and the need for clinician-supportive EHR data entry interfaces.

METHODS

We employed qualitative methods based on grounded theory to develop our understanding of oncologists' preferences for the development of a DX data entry support intervention. Specifically, we conducted two semistructured interviews to uncover the preferences of practicing oncologists using our EHR system, followed by two clinician-led focus groups and then a survey questionnaire sent to a larger population of clinicians. Participants for all three studies were recruited via e-mail invitation. We employed a purposive sampling strategy38 to focus on oncology health care providers' opinions of EHR DX entry. Our study population was limited to our cancer center to ensure homogeneity of clinical workflows and of the EHR system. We included practitioners in neuro-oncology and thoracic oncology to assess potential differences and similarities across subspecialties. These subspecialties were selected because of physician interest in research on improving DX EHR data entry, subject accessibility, and established collaborative relationships. Our study was approved by our Institutional Review Board (IRB No.: 00044728) before any contact with participants. All participants were provided written informed consent information and signed informed consent forms where needed.

Semistructured Clinician Interviews

We conducted semistructured interviews to contextualize oncologists' views, habits, data entry processes, and EHR interaction workflows while recording structured DX data into our local EHR. A secondary aim was to uncover potential challenges faced when entering DX data during clinical practice. This helped define the broad lines of established user-system interaction processes in the form of recurring interview themes. The interviews asked clinicians to describe their default actions when entering structured DX data for clinical and billing purposes. Clinicians carried out data entry using a think-aloud approach39 in four EHR entry interfaces (ie, encounter, problem list, order, and an oncology-specific module DX entry) (Appendix 1). Clinicians were also asked to share ideas on how to support accurate and specific DX entry in this system. Our interview guide was developed by the informaticians in our study team and then circulated to the rest of the study team twice for feedback. The third version was circulated to our local qualitative research shared resource team for feedback. Each interview was audio-recorded, and computer screen interactions were recorded; recordings were summarized as field notes. A thematic analysis38 was carried out by our local qualitative research shared resource team to reveal common themes in both interviews. These themes were used to develop our focus group guide.

Clinician-Led Focus Groups

We conducted clinician-led focus groups to broaden our understanding of DX logging practices and the challenges of entering specific DX. We followed the same development process as for our interview guide (ie, informatics team development, two review rounds, and qualitative research team review). The focus group moderator guide is included in Appendix 2. Two focus group sessions, one with thoracic oncologists and one with neuro-oncologists, were each moderated by a clinician from the corresponding specialty. Each focus group was audio-recorded and transcribed verbatim. A thematic analysis38 was carried out by our local qualitative shared resource team, revealing common themes in both focus groups. These findings were used to develop our survey questionnaire.

Clinician Preference Surveys

We administered an electronic survey via REDCap40 to assess the generalizability of the interview and focus group results. We developed a 12-question survey based on findings from our clinician interviews and focus groups and on previous findings,20,22,28,30 using the same three-round feedback process (ie, initial internal development, collaborator team feedback, and qualitative shared resource team feedback). The survey questionnaire is included in Appendix 3. Survey data analysis was conducted using Tableau (version 2020.1, Tableau Software Inc, Seattle, WA).

RESULTS

Clinicians throughout the study reported similar user interface experiences, barriers, and dissatisfaction with DX entry systems (Table 1). The interviews revealed that clinicians were dissatisfied with DX data entry interfaces and had to come up with standardized data entry interaction processes for the sake of efficiency. Focus groups confirmed this, revealing that the system forced repetitive, time-consuming data entry. The survey also confirmed our initial findings and revealed additional barriers to efficient data entry.

TABLE 1.

Participant Quotes for Semistructured Clinician Interviews and Clinician-Led Focus Groups

Semistructured Clinician Interviews

Two semistructured clinician interviews were conducted in person by a research team member in September 2018. Both clinicians were practicing oncologists, one in neuro-oncology and one in thoracic oncology. Interview lengths were 46 and 26 minutes, respectively. Both clinicians reported using the EHR daily, seeing upward of 20 patients per week, entering approximately two to five DX codes per patient, and having used the EHR system for at least 3 years. Neither clinician felt that they had received adequate EHR data entry training upon adoption (Table 1, Quote [Q] 1, 2). They reported having gained familiarity with the EHR mainly through hands-on use. Both clinicians expressed dissatisfaction with the EHR's DX data entry process and interface (Table 1, Q 3, 4). They described developing their own systematic EHR interactions to streamline the entry process (Table 1, Q 5-7) and mentioned that they often select more general DX to reduce the search interaction burden for the sake of efficiency (Table 1, Q 8, 9). One suggested improvement was DX selection consistency across EHR data entry workflows and autopopulation throughout the chart (Table 1, Q 10).

Clinician-Led Focus Groups

The first focus group consisted of six thoracic oncologists and was conducted in October 2018. The session lasted approximately 63 minutes and was moderated by a practicing thoracic oncologist. The second focus group consisted of six neuro-oncologists and was conducted in November 2018. The session lasted approximately 55 minutes and was moderated by a practicing neuro-oncologist. Participants reported that they would like to record precise DX code descriptions into the EHR but did not believe that existing clinical workflow combined with the existing EHR data entry systems would allow them to enter and maintain them in a time-efficient manner.

Beyond the frequent and repetitive nature of DX data entry during oncology visits, clinicians reported three core barriers to accurate and precise oncological DX code selection: challenges in DX search, a lack of discernible order in DX search results, and an overabundance of data entry modes that need to be used frequently. First, clinicians in both groups reported difficulty finding specific DX codes in the EHR because of the very large number of options returned by the existing search feature. They suggested exploring alternatives to a search-based interface (Table 1, Q 11). Second, participants mentioned that there is no hierarchy to the search results returned by the DX data entry interfaces and suggested that a DX search feature returning results ordered by specificity (ie, general DX to specific DX options), popularity (ie, common DX to uncommon DX), or usage (ie, most used to least used DX) would help improve data entry (Table 1, Q 12). Finally, clinicians reported that the variety of interaction modes available to enter DX data, and the frequency with which DX codes needed to be entered, were a barrier both because they consumed time and because they threatened data entry consistency across providers. The group reported that some clinicians enter DX only when placing orders, some enter DX into the problem list, and others enter DX as the visit DX (Table 1, Q 13-15). Participants envisioned a solution in which the EHR would learn from previous entries and test results and pull DX information from them into a prompt. Their ideal prompt would be unobtrusive but store relevant DX for selection and entry as needed (Table 1, Q 16).
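The three orderings participants proposed for DX search results can be expressed as sort keys. The following is a minimal sketch, not an EHR vendor API: the `DxOption` record, its `popularity` (institution-wide entry count) and `usage` (per-clinician entry count) fields, and the use of ICD-10-CM code length as a proxy for specificity are all assumptions made for illustration, and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DxOption:
    """Hypothetical DX search result; not an actual EHR data structure."""
    code: str          # ICD-10-CM code, e.g. "C71.1"
    description: str
    popularity: int    # hypothetical: times entered across the institution
    usage: int         # hypothetical: times entered by the current clinician


def specificity(code: str) -> int:
    """Crude proxy: longer ICD-10-CM codes (ignoring the dot) are more specific."""
    return len(code.replace(".", ""))


def rank_results(options: list[DxOption], by: str = "specificity") -> list[DxOption]:
    """Order search results as participants suggested; ties are broken by code."""
    if by == "specificity":
        key = lambda o: (specificity(o.code), o.code)  # general -> specific
    elif by == "popularity":
        key = lambda o: (-o.popularity, o.code)        # common -> uncommon
    elif by == "usage":
        key = lambda o: (-o.usage, o.code)             # most -> least used
    else:
        raise ValueError(f"unknown ordering: {by!r}")
    return sorted(options, key=key)


# Illustrative results for a brain neoplasm search (counts are made up).
results = [
    DxOption("C71.1", "Malignant neoplasm of frontal lobe", popularity=40, usage=12),
    DxOption("C71.9", "Malignant neoplasm of brain, unspecified", popularity=95, usage=3),
    DxOption("C71", "Malignant neoplasm of brain", popularity=10, usage=0),
    DxOption("D49.6", "Neoplasm of unspecified behavior of brain", popularity=25, usage=5),
]

for ordering in ("specificity", "popularity", "usage"):
    print(ordering, [o.code for o in rank_results(results, by=ordering)])
```

Code length alone cannot distinguish C71.1 (frontal lobe) from C71.9 (unspecified), so a realistic specificity ordering would likely need the code hierarchy plus an explicit flag for "unspecified" codes.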

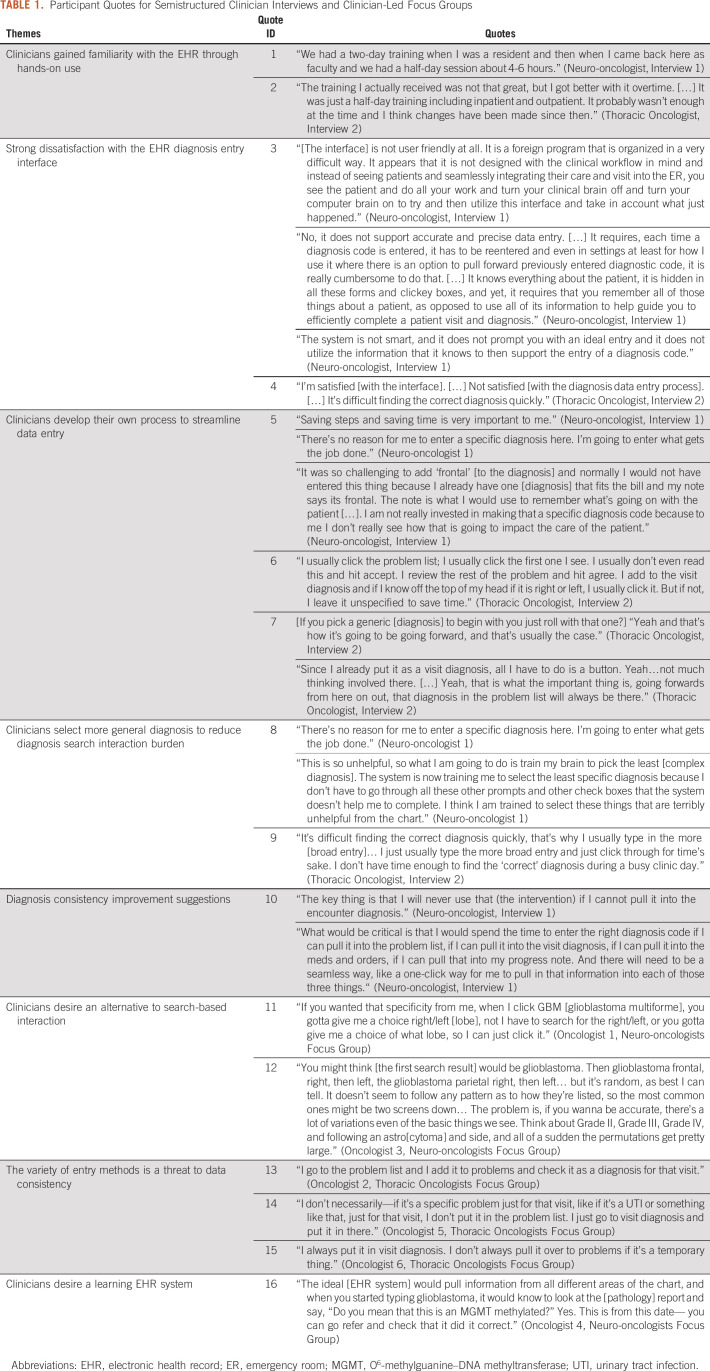

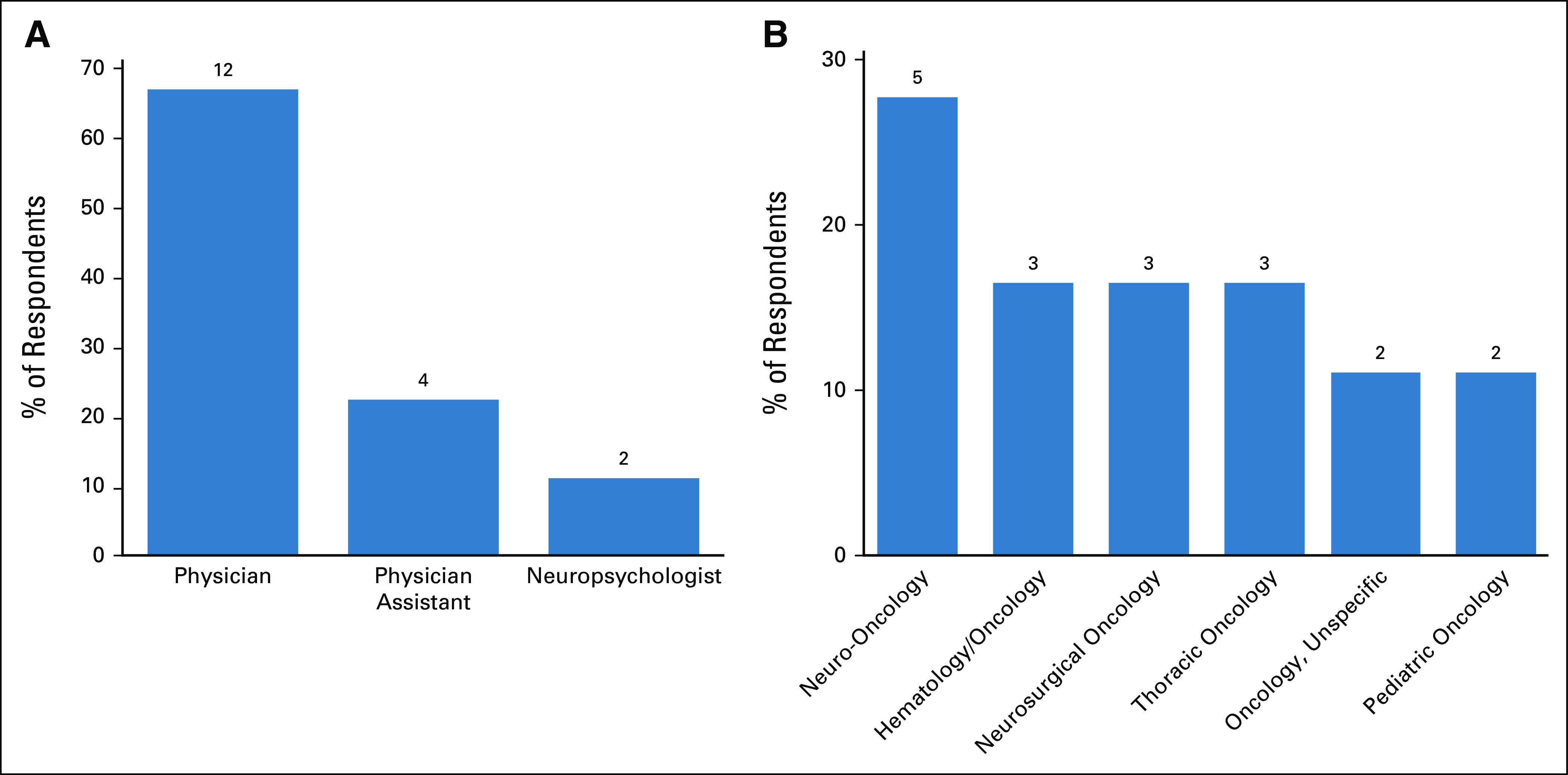

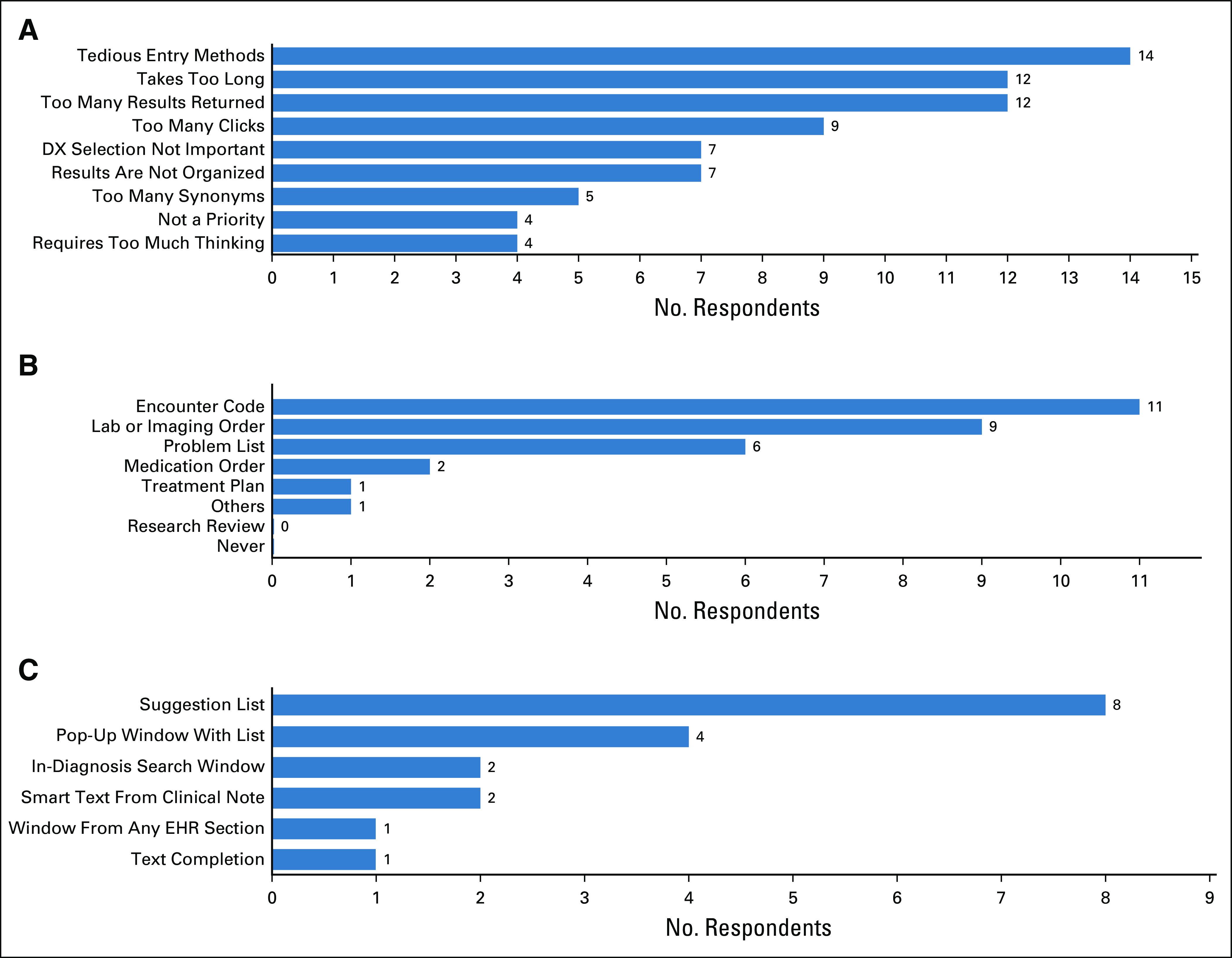

Clinician Preference Surveys

This survey was distributed electronically to 19 members of the thoracic and neuro-oncology departments in February 2019, followed by four weekly reminders to participants who had not responded. Eighteen participants responded (response rate = 94.7%). Survey responses predominantly reflected sentiments established in the interviews and focus groups. Respondents were 67% physicians, 22% physician assistants, and 11% neuropsychologists (Fig 1). Specialties included neuro-oncology (44.4%), hematology and/or oncology (16.7%), and thoracic oncology (16.7%), with additional respondents in pediatric oncology and unspecified oncology specialty (11.1% each) (Fig 1). Clinicians identified numerous barriers to success with the current system (Fig 2). The most frequently cited barriers were tedious entry methods and the inability to find the desired DX using the search feature (77.8% each). Clinicians entered their initial DX in all sections of the EHR, but predominantly as encounter DX (61.1%) and attached to lab or imaging orders (50%), followed by problem list entries (33.3%) (Fig 2). Most clinicians acknowledged that they do not search for the most precise DX possible (72.2%). However, respondents overwhelmingly indicated willingness to enter the most precise DX if the system would autopopulate it throughout the chart (88.9%) or make it available in a flow sheet–like module for reuse in other components such as lab or imaging orders or progress notes (94.4%). All survey respondents (100%) preferred an interface-provided suggestion list of relevant DX over using the search feature. The most popular interface prompt methods for DX entry support were a suggestion list (44.4%) and a pop-up window (22.2%) (Fig 2).
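Because every reported survey percentage is a multiple of 1/18, the underlying counts can be recovered and the rounding checked. The sketch below assumes standard rounding to one decimal place; the counts are back-calculated from the percentages reported above and are not given directly in the text.

```python
RESPONDENTS = 18
INVITED = 19


def pct(count: int, total: int = RESPONDENTS) -> float:
    """Percentage of `total`, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Counts inferred from the reported percentages (n = 18); not reported directly.
roles = {"Physician": 12, "Physician assistant": 4, "Neuropsychologist": 2}
specialties = {
    "Neuro-oncology": 8,
    "Hematology and/or oncology": 3,
    "Thoracic oncology": 3,
    "Pediatric oncology": 2,
    "Unspecified oncology": 2,
}
# Multiple-response item: these counts need not sum to 18.
dx_entry_sections = {"Encounter DX": 11, "Lab or imaging orders": 9, "Problem list": 6}

print("Response rate:", pct(RESPONDENTS, INVITED))
for label, counts in [("Role", roles), ("Specialty", specialties),
                      ("DX entry section", dx_entry_sections)]:
    for name, n in counts.items():
        print(f"{label}: {name} = {n}/{RESPONDENTS} ({pct(n)}%)")
```

The single-choice items (role, specialty) sum to the 18 respondents, whereas the EHR section item allowed several answers per respondent.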

FIG 1.

(A) Clinical role and (B) specialty distribution of survey respondents.

FIG 2.

(A) Reported barriers to precise DX data entry in EHRs. (B) EHR sections most frequently used for DX entry and (C) preferences for precise DX suggestion modes are also reported. DX, diagnosis; EHR, electronic health record.

DISCUSSION

We employed a combination of quantitative and qualitative research methods to characterize oncologists' data entry support preferences. We found that participating oncologists had similar views on the EHR data entry user interface, faced similar barriers, and showed similar points of dissatisfaction with their existing interface across interviews, focus groups, and surveys. Our participants reported their willingness to undertake precise DX code selection if the EHR system simplified and unified DX data entry throughout each patient's chart. The key barrier to adoption was the need to ensure that the intervention would save time and make data entry workflows significantly more efficient. This further supports the link between data entry and EHR-induced oncologist burden.

Our findings are in line with existing literature and extend it in four ways. First, the entry of generic DX codes had been noted in secondary analyses in previous research,20,28,30 yet, to our knowledge, this is the first study to link this phenomenon to clinician-EHR workarounds aimed at reducing data entry time and EHR-induced burden for DX data entry in oncology specifically.21,27,41 Second, our study uncovered the importance of presenting search results that match clinicians' expectations and mental models.42,43 This is one of the key heuristics of usable interface design34,44 and is known to contribute to safer, more usable EHR interfaces.34,45 This had not previously been shown for oncologists' EHR data entry. Third, our findings confirm that complex EHR interactions place additional burden on oncologists, in line with existing findings.21,27 Together with previous quantitative findings of poor data quality resulting from burdensome system designs28 and its interrelation with workflow and user factors,20 our findings provide initial evidence of the link between system interface design, clinical workflows, data quality, clinician preference, and clinician burden. Finally, our results confirm existing knowledge of the burden placed on clinicians by data entry tasks21,23,27 while reporting findings specific to oncological DX data entry.

Our findings underscore the impact of EHR design on oncological workflows and practice. Oncologists in this initial study conveyed a clear imperative: EHR interventions that support clinical practice must deliver interaction efficiency and time savings. Their dissatisfaction with the EHR data entry interface stemmed from its complexity, lack of adaptability, and failure to support visual search by providing ordered DX search results. This is in line with previous research21,27 and seems to be a recurring issue affecting clinical practice. These findings have two important implications. First, current interfaces disincentivize the selection and entry of precise DX codes, which limits the accuracy, reliability, and usability of secondary analyses that rely on structured DX coding data. Second, they undermine the accuracy of structured DX codes and call into question whether these data can be trusted. Given the link between EHR interface design and clinician burden, satisfaction, and burnout,5,6,27,34,45-47 a more precise understanding of the limitations of current systems in relation to clinical workflows and real oncological practice conditions is necessary.

Current oncology practice standards require clinicians to spend a large portion of their time entering data into EHR systems using repetitive and sometimes complex operations.48 Although these standards can improve the care of future patients with cancer, they also add clinician burden that can lead to decreased satisfaction27,46-48 and burnout,6,49 particularly in workflows where clinicians are required to conduct data entry during clinical hours.50,51 Challenging data entry interactions exacerbate these conditions and lead providers to adopt workarounds that degrade the quality of data entry.20,22,28,30 As a result, the imprecise and inaccurate DX codes that are entered dramatically complicate the retrieval of patient data13 and prevent the reliable reuse of clinical data in real time.33,52,53 Our findings are a necessary first step toward the development of an unobtrusive, supportive interface that would help clinicians enter high-quality data efficiently to enable learning health care.1,9,53 We propose that such an interface would not only improve clinician satisfaction but also reduce adverse effects of EHRs such as e-iatrogenesis,54 documentation delays,33,52,53 and provider burden.5,55

Our study has four core limitations stemming from its design as an initial exploration of oncologists’ preferences and its purposive sampling.38 First, our interview study included few participants. Still, the initial interviews were used only as a launching point for the subsequent studies. Each additional study confirmed and expanded on initial findings, supporting their internal validity. The focus groups and survey also increased participant coverage. Second, few participants were available for our focus groups and surveys because of the highly specialized nature of our study population. Recruitment was difficult because of both the scarcity of eligible participants and oncologists' busy schedules. Still, this small sample size was adequate for an initial research project that aims to define the broad strokes that will frame future studies. Third, we did not include all oncological subspecialties. However, we included two distinct subspecialties (ie, neuro-oncology and thoracic oncology), and neither our focus groups nor our surveys found any obvious differences in EHR data entry between them. Fourth, our study was conducted at a single site, which may limit generalizability. However, this site is one of the largest oncology centers in its region and uses one of the most broadly adopted EHR systems in the country. Future work will address these limitations by expanding our study to a larger number of oncologists from other sites and subspecialties to confirm the generalizability and external validity of these initial findings.

In conclusion, current user interfaces seem to force oncologists to compromise between specific DX data entry and time efficiency. The core barrier seems to be the burdensome and repetitive nature of EHR data entry. This initial survey suggests three simple guidelines for interface improvement: avoid DX search interfaces, favor DX code suggestion interfaces, and deliver suggestions via an interface-integrated suggestion list. Future work will explore the external validity of these findings by replicating this research at multiple sites and in additional oncological subspecialties.
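The suggestion-list guideline can be illustrated with a minimal sketch of how a list might be derived from DX data already on the chart, in the spirit of participants' request that the EHR learn from previous entries. Everything here is hypothetical: the `ChartDx` record, the grouping by three-character ICD-10-CM category, and the rule of keeping the most specific (then most recent) code per category are illustrative assumptions, not a design described or validated in this study.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ChartDx:
    """Hypothetical DX entry already recorded somewhere in the chart."""
    code: str      # ICD-10-CM code
    source: str    # e.g. "encounter", "problem list", "order"
    entered: date


def specificity(code: str) -> int:
    """Crude proxy: number of code characters, ignoring the dot."""
    return len(code.replace(".", ""))


def suggest(chart: list[ChartDx], limit: int = 5) -> list[str]:
    """Return up to `limit` codes: the most specific (then most recent) code
    per three-character ICD-10-CM category, most recently entered first."""
    best: dict[str, ChartDx] = {}
    for entry in chart:
        category = entry.code[:3]  # e.g. "C71" for "C71.1"
        current = best.get(category)
        if current is None or (specificity(entry.code), entry.entered) > (
            specificity(current.code), current.entered
        ):
            best[category] = entry
    ranked = sorted(best.values(), key=lambda e: e.entered, reverse=True)
    return [e.code for e in ranked[:limit]]


chart = [
    ChartDx("C71.9", "order", date(2018, 9, 1)),
    ChartDx("G40.909", "encounter", date(2018, 8, 20)),
    ChartDx("C71.1", "problem list", date(2018, 9, 10)),
]
print(suggest(chart))
```

Because such a list draws on codes already entered in any workflow (encounter, problem list, or orders), the same suggestions could be shown at every entry point, which is one possible way to address the cross-workflow inconsistency participants described.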

ACKNOWLEDGMENT

The authors acknowledge the use of the services and facilities funded by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (UL1TR001420). The authors also acknowledge the support of the Qualitative and Patient-Reported Outcomes Developing Shared Resource of the Wake Forest Baptist Comprehensive Cancer Center's NCI Cancer Center Support Grant P30CA012197 and the Wake Forest Clinical and Translational Science Institute's NCATS Grant UL1TR001420.

APPENDIX 1. Semistructured Interview Guide

Study Introduction

Thank you for agreeing to participate in today's interview. The purpose of the interview is for us to gather information we can use to improve the ease of logging DX data in the EHR. The interview will last approximately one hour and will be recorded for data analysis purposes. During the interview, you will be asked questions about your experience logging diagnosis (DX) data in our EHR system. The interview questions will be related to your reasoning process during such interactions, the challenges you encounter, and potential ways of supporting your DX data entry in practice. I will also ask you to interact with the system and enter data for a few mock cases. While you interact with the system, the computer screen will be recorded so we can later analyze your data entry patterns.

Please remember that there are no right or wrong answers to my questions. I am interested in hearing your thoughts, as you are the expert in this area. Everything you say will be kept confidential.

Let's get started. Is it okay for me to start the audio and computer recording?

General EHR questions

We're going to start out by discussing our EHR and your use of it in general.

How often do you use our EHR?

How many patients do you see per week, on average?

How long have you been using our EHR?

Tell me about the training you've received to use our EHR for patient care.

Do you feel adequately trained to use our EHR for patient care?

Overall, are you satisfied or dissatisfied with our EHR's interface?

Our EHR DX data entry questions

Now we're going to discuss our EHR's diagnosis data entry system.

How many structured DX codes and descriptions do you enter in our EHR per day?

Overall, are you satisfied or dissatisfied with our EHR's DX data entry interface?

Do you feel that our EHR supports you in entering accurate and precise clinical data?

Any particular shortcomings of this system you'd like to discuss?

Do you feel that the system enables you to select DX entries precisely? Consistently over time? Across care teams and workflows?

Any particular barrier, display, data, or system issues you have noticed that you'd like to discuss?

Is there anything else about the DX data entry system you'd like to tell me?

Think aloud session

Now I'm going to give you a few different scenarios and have you enter them into our EHR. As you're doing so, I'd like you to walk me through what you are doing by talking out loud. Remember to explain each specific step to me.

Scenario 1: Enter an encounter DX, a problem list DX, and an order for patient A, who's currently being treated for a frontal brain neoplasm.

Follow-up questions:

Tell me why you selected…?

What was your reasoning for selecting…?

Were you able to make the selection you intended to? If not, why not?

Is this the way you usually do it?

Scenario 2: Enter a DX for patient B, who has a brain neoplasm identified on imaging but no biopsy record available.

Follow-up questions:

Tell me why you selected…?

What was your reasoning for selecting…?

Were you able to make the selection you intended to? If not, why not?

Is this the way you usually do it?

Scenario 3: Enter a DX for patient C, who just returned after their first biopsy, which revealed a parietal glioma.

Follow-up questions:

Tell me why you selected…?

What was your reasoning for selecting…?

Were you able to make the selection you intended to? If not, why not?

Is this the way you usually do it?

Scenario 4: Patient D was referred to [our institution] after having had a biopsy and a brain neoplasm diagnosed at a different health care system. Enter the DX for their first visit and describe the process.

Follow-up questions:

Tell me why you selected…?

What was your reasoning for selecting…?

Were you able to make the selection you intended to? If not, why not?

Is this the way you usually do it?

Closing questions

Now I'm going to ask you a few questions about how we can improve the EHR.

Overall, what ideas do you have for improvement to the DX data entry process in the EHR?

Are there any design features that you feel are hindering you from finding the best DX?

Where could suggestions be offered to improve the selection of the most accurate DX? To select it consistently?

Any particular data available in the EHR that would help you make the best possible call to select the best DX option?

How could this additional info be presented within the DX-selection screen?

Are these issues problematic to your day-to-day work? Are they bad enough that you'd want to modify the system?

Okay, that wraps up our interview today. Thank you for participating.

APPENDIX 2. Clinician-Led Focus Group Moderator's Guide

Greeting and Roles

Thank you for agreeing to participate in today's focus group. We really appreciate you taking the time to participate in this discussion. My name is [Name] and I will be the moderator for our discussion today.

My role today will be to ask some specific questions and to keep the conversation going. We have a lot to cover, so I may need to change the subject or move ahead with the discussion. But, please stop me if you want to add anything or if you have any questions. Our discussion today will last about an hour.

We are fortunate to have some help today. The note taker for today is [Name]. [Their] job will be to take notes during the discussion. We want to be sure to get all the important things you say.

I'd like to introduce you to our co-moderator, [Name]. [They] may ask some clarifying questions as questions come up. [They] will also go over the consent form with you now. It's in front of you.

{read consent document}

Study Introduction

The purpose of this focus group is to gather information we can use to improve the ease of logging DX data in our EHR. The focus group will last approximately one hour and will be recorded for data analysis purposes. You will be asked to discuss questions as a group about your experience logging diagnosis (DX) data in our EHR system. Examples of logging diagnosis data include entering a “Visit Diagnosis,” creating a diagnosis in the “Problem List,” or selecting a diagnosis when ordering labs or imaging studies. During this focus group, we are interested in understanding how you currently log diagnosis data, the workflow you use in the EHR to do this, the challenges you encounter, and potential ways of supporting your diagnosis data entry in daily practice.

Please remember that there are no right or wrong answers to my questions. We are interested in hearing your thoughts, as you all are the experts in this area. Everything you say will be kept confidential and nothing you say will be connected with your name.

How to Participate

Today, you will be participating in a group discussion. It's not an interview where I ask a question and each person answers the question and we move on to the next one. Instead, we'll be presenting topics with the goal of everyone participating in the discussion with each other. We would like you to speak freely, sharing your ideas and opinions even if they are different from others.

There are no right or wrong answers to these questions. All your views are important, whether positive or negative. We want to get as many different points of view as we can.

Ground Rules

We do have certain topics we need to cover, but everyone will have the chance to share their opinions or experiences. We want this to be a very open discussion. There are just a few ground rules we want to make certain you are aware of. These are to help everything go smoothly.

Please talk one at a time, in a voice that can be heard by everyone. This is important to allow our audio recordings to capture the discussion clearly.

Please don't have side conversations with your neighbor. Instead, share those thoughts with the group. What you have to say is very important to us.

You do not need to talk directly to me. You can respond directly to the person who has made a point, but please do so respectfully. And you do not have to be called on to talk.

Please check now to make sure your phones are on silent. Please refrain from using them during the focus group.

Does anyone have any questions before we begin?

Introduction and Ice Breaker

To help us get to know each other, please tell us your first name only, and one thing you like to do in your free time.

Section A: Introduction

We're going to start out by discussing OUR EHR's interface in general. We will present three data entry workflows to make sure we are on the same page about which EHR feature we are discussing. These features are Visit, Order, and Problem List DX entry.

Visit Diagnosis: [Screenshot Showing Visit Diagnosis Data Entry]

Order Diagnosis: [Screenshot Showing Order Diagnosis Data Entry]

Problem List Diagnosis: [Screenshot Showing Problem List Diagnosis Data Entry]

Section B: Workflow

Now, we're going to discuss OUR EHR's diagnosis data entry system.

Tell me about the typical process that you go through to enter most of your diagnosis codes for patients.

a. When do you enter DX codes?

Tell me how your process for entering diagnosis codes changes based on where you are entering the code.

a. For lab ordering?

b. For imaging ordering?

c. For treatment plans?

d. For other things?

Where do you record the most precise DX information within OUR EHR?

Do you rely on structured DX data to review a patient chart or manage patient care? Why or why not?

So, what I'm hearing is that… [moderator to briefly summarize discussion and allow for any clarification or additional thoughts].

Section C: Diagnosis code precision

Now, we're going to discuss precision of the diagnosis codes you enter into OUR EHR.

Is it important that the diagnosis code be precise when you are entering diagnosis data? Why or why not?

a. Are there areas in OUR EHR where you want a precise diagnosis code?

b. Are there areas in OUR EHR where you would rather have a more general diagnosis code?

If OUR EHR were able to prompt you with the most precise diagnosis code in each of these places, would you select it? Why or why not?

What reasons would motivate you to change your workflow to start entering the most precise diagnosis codes?

a. Would you change your workflow to start entering the most precise diagnosis codes if these data could be:

i. Inserted into your clinic note? Why or why not?

ii. Used to identify patients for clinical trials? Why or why not?

iii. Used to help the learning healthcare system determine best practices and prompt you with those for your patients? Why or why not?

iv. Are there other applications that would make you change your practice for entering diagnosis data? Why or why not?

Do the DX codes reflect what is clinically important?

So, what I'm hearing is that… [moderator to briefly summarize discussion and allow for any clarification or additional thoughts].

Section D: Barriers to diagnosis code entry

Now we're going to discuss barriers to entering precise diagnosis codes.

Tell me about how you go about searching for a diagnosis code.

a. Is there a diagnosis that you just tend to go for?

What do you think about the DX search feature?

a. Does it support entering concordant DX across workflows? Why or why not?

What are the barriers to your current workflow for DX data entry?

What are the barriers to entering the most precise DX data?

How do you feel about the amount of information displayed in OUR EHR's interface? Is it helpful or not helpful?

So, what I'm hearing is that… [moderator to briefly summarize discussion and allow for any clarification or additional thoughts].

Section E: Suggestions for re-designing diagnosis code entry

Now we're going to discuss your suggestions for re-designing OUR EHR to better support precise diagnosis codes.

If you could design a DX code entry method, what would it look like?

a. When would you enter it in the encounter?

b. Where would it exist within OUR EHR?

c. How would you navigate to it?

d. When would you want to pull that DX code? Into what?

What would make it easiest for you to enter precise DX codes?

Would allowing you to enter structured DX data within the clinical note entry interface make it easier to enter the most precise DX available?

So, what I'm hearing is that… [moderator to briefly summarize discussion and allow for any clarification or additional thoughts].

Conclusion

Is there anything else we have not yet discussed that anyone would like to mention related to what we've been talking about?

Great, we are finished. Thank you again for participating today.

APPENDIX 3. Survey Questionnaire

Introduction

Dear Clinician,

We are gathering feedback to improve the diagnosis data entry process within [our health record system]. We have shown that diagnosis codes in our electronic health record (EHR) system vary even after biopsy reports are recorded and are not as precise as they could be. Some of our personnel have perceived the need for an informatics intervention that supports the recording of structured diagnosis data to improve this process.

To make this intervention useful in clinical practice, we need your feedback through this 15-minute survey, in which you will be asked about your perceptions, preferences, and experience using the [our EHR] diagnosis entry interface. We will use this feedback to further develop an interactive prototype to improve data entry in our EHR system. We kindly request your expert feedback to support these efforts to build our learning healthcare system by improving our clinical data.

You may ask questions if you need help deciding whether to join the study. The person in charge of this study is [Principal Investigator Name]. If you have questions, suggestions, or concerns regarding this study, or if you want to withdraw from the study, his/her contact information is:

[Contact Information]

If you have any questions, suggestions or concerns about your rights as a volunteer in this research, contact the Institutional Review Board at [Phone Number] or the Research Subject Advocate at [Phone Number].

Completion of the survey implies consent.

Sincerely,

[Signature]

Background Section

What is your clinical role?

a. Physician

b. Physician Assistant

c. Nurse Practitioner

d. Nurse

e. Other (Please Specify)

What is your department? [Free Text]

What is your specialty? [Free Text]

EHR Workflow Section

When reviewing the chart during a patient's visit, for what reasons do you use structured diagnosis code data? (Check all that apply)

a. To select an encounter code for a visit

b. To order a lab test, imaging study, or procedure

c. To track patient diagnoses

d. For patient treatment in general

e. To order medications

f. To review patient charts for research

g. Other (Please Specify)

h. Never

Where do you most frequently enter diagnosis codes for patients?

a. Problem List

b. Visit Diagnosis

c. Medication Orders

d. Lab Orders

e. Imaging Orders

f. Treatment Plans

g. Other (Please Specify)

Do you have a “go-to” diagnosis code for your specialty or specific conditions?

a. Yes (Please specify)

b. No

In your experience, where in the chart would you look to find the most accurate and precise diagnosis code information? (Check all that apply)

a. Problem List

b. Visit Diagnosis

c. Clinical Note

d. Medication Orders

e. Lab Orders

f. Imaging Orders

g. Treatment Plans

h. Other (Please Specify)

Based on your use of clinical charts, where in the chart would it be desirable to have more generic diagnosis codes and code descriptive text? (Check all that apply)

a. Problem List

b. Visit Diagnosis

c. Clinical Note

d. Medication Orders

e. Lab Orders

f. Imaging Orders

g. Treatment Plans

h. Other (Please Specify)

i. Nowhere

Diagnosis Code Precision Section

When you are entering a diagnosis code, do you always search for the most precise diagnosis code description possible?

a. Yes

b. No

In what areas in [the EHR] do YOU consistently want a precise diagnosis code? (Check all that apply)

a. Problem List

b. Visit Diagnosis

c. Clinical Note

d. Medication Orders

e. Lab Orders

f. Imaging Orders

g. Treatment Plans

h. Other (Please Specify)

i. None

In what areas in [the EHR] would YOU rather select or record a more general diagnosis code? (Check all that apply)

a. Problem List

b. Visit Diagnosis

c. Clinical Note

d. Medication Orders

e. Lab Orders

f. Imaging Orders

g. Treatment Plans

h. Other (Please Specify)

i. None

Barriers to Diagnosis Code Entry Section

Which barriers do you face when entering the most precise diagnosis data? (Check all that apply)

a. Tedious data entry

b. Too many clicks

c. Search function fails to return specific diagnoses

d. Too many results returned by search function

e. Search results not organized in an understandable way

f. It takes too long

g. I don't always find it important to enter the most precise diagnosis data

h. I have to think too much to find the right item

i. It isn't a clinical priority

j. Available synonyms of codes

DISCLAIMER

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Cancer Institute.

SUPPORT

Supported in part by the Cancer Center Support Grant from the National Cancer Institute to the Comprehensive Cancer Center of Wake Forest Baptist Medical Center (P30 CA012197), funded by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (UL1TR001420); by the National Institute of General Medical Sciences' Institutional Research and Academic Career Development Award program (K12-GM102773); and by WakeHealth's Center for Biomedical Informatics' first Pilot Award.

AUTHOR CONTRIBUTIONS

Conception and design: Franck Diaz-Garelli, Brian J. Wells, Umit Topaloglu

Financial support: Franck Diaz-Garelli

Administrative support: Franck Diaz-Garelli

Provision of study materials or patients: Franck Diaz-Garelli, Roy Strowd, Umit Topaloglu

Collection and assembly of data: Franck Diaz-Garelli, Roy Strowd, Tamjeed Ahmed, Umit Topaloglu

Data analysis and interpretation: Franck Diaz-Garelli, Roy Strowd, Thomas W. Lycan, Sean Daley, Brian J. Wells, Umit Topaloglu

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Roy Strowd

Consulting or Advisory Role: Monteris Medical, Novocure

Research Funding: Southeastern Brain Tumor Foundation, Jazz Pharmaceuticals, NIH, American Board of Psychiatry and Neurology, Alpha Omega Alpha

Other Relationship: American Academy of Neurology (AAN)

Thomas W. Lycan

Travel, Accommodations, Expenses: Incyte

Open Payments Link: https://openpaymentsdata.cms.gov/physician/4354581

Brian J. Wells

Research Funding: Boehringer Ingelheim

Umit Topaloglu

Stock and Other Ownership Interests: CareDirections

No other potential conflicts of interest were reported.
