Abstract

This study tested the hypotheses that (1) adolescents with cochlear implants (CIs) experience impaired spectral processing abilities, and (2) those impaired spectral processing abilities constrain acquisition of skills based on sensitivity to phonological structure but not those based on lexical or syntactic (lexicosyntactic) knowledge. To test these hypotheses, spectral modulation detection (SMD) thresholds were measured for 14-year-olds with normal hearing (NH) or CIs. Three measures each of phonological and lexicosyntactic skills were obtained and used to generate latent scores of each kind of skill. Relationships between SMD thresholds and both latent scores were assessed. Mean SMD threshold was poorer for adolescents with CIs than for adolescents with NH. Both latent lexicosyntactic and phonological scores were poorer for the adolescents with CIs, but the latent phonological score was disproportionately so. SMD thresholds were significantly associated with phonological but not lexicosyntactic skill for both groups. The only audiologic factor that also correlated with phonological latent scores for adolescents with CIs was the aided threshold, but it did not explain the observed relationship between SMD thresholds and phonological latent scores. Continued research is required to find ways of enhancing spectral processing for children with CIs to support their acquisition of phonological sensitivity.

I. INTRODUCTION

After roughly 30 years of pediatric cochlear implantation, a consistent picture of long-term language outcomes has emerged. Children born with severe-to-profound hearing loss who receive these devices are able to acquire language skills in their early years close to those of their peers with normal hearing (NH), allowing them to enter mainstream educational environments seemingly on track for typical language development and thus academic success. It is becoming increasingly apparent, however, that later language learning does not always progress as smoothly. Children with cochlear implants (CIs) often show escalating difficulty with language as it becomes more complex through the grade levels (Geers and Hayes, 2011; Kronenberger and Pisoni, 2019; Nittrouer and Lowenstein, 2021). Of concern to the current study is the consistent finding that these children have disproportionately large deficits with any language function that depends on being able to rapidly and accurately recover word-internal, phonological structure from the speech signal (Dillon and Pisoni, 2006; Nittrouer et al., 2014; Nittrouer et al., 2018). This specific deficit in recognizing phonological structure undoubtedly contributes strongly to the later language-learning difficulties of children with CIs, because it becomes increasingly important to be able to access that level of structure efficiently and accurately. Without that kind of phonological fluency, children have difficulty acquiring the progressively more technical vocabulary and processing the increasingly complex language of higher grade levels (Lowenstein and Nittrouer, 2021; Pisoni and Kronenberger, 2021; Smith et al., 2019). Thus, the diminished ability to perform rapid and efficient phonological coding presents a clear risk to academic success for children with CIs. The purpose of the study reported here was to investigate one potential source of that phonological deficit.

A. Why spectral processing

According to several prominent models of language acquisition, infants initially recover words from the ongoing speech signal by attending to relatively broad patterns of acoustic structure that recur repeatedly in the language the child hears. At these early ages, the child's lexicon can be described as consisting of holistic representations, most akin to word units and unanalyzed according to phonological elements (Ferguson and Farwell, 1975; Menyuk and Menn, 1979; Vihman and Velleman, 1989; Waterson, 1971). As long as the child has few lexical entries to store and recall, these entries are adequately represented with broad acoustic structure (Charles-Luce and Luce, 1990). But as the child gets older and adds more words to the lexicon, those word forms necessarily become more similar acoustically, mandating that finer-grained acoustic structure be utilized in storage. It is then that the lexicon is restructured according to phonemic constituents (Metsala, 1997; Storkel, 2002; Walley, 1993; Walley et al., 2003), allowing the lexicon to be better organized for efficient storage and retrieval. Not only does this lexical restructuring support further vocabulary development, it also facilitates other language processes, such as durable storage of linguistic material in short-term memory buffers through the operations of the phonological loop (Baddeley and Hitch, 2019; Gathercole and Baddeley, 1989). Nonetheless, the fact that young children can acquire vocabularies of reasonable size without relying on precisely specified phonological representations demonstrates that individuals can perform linguistic functions without necessarily invoking that level of structure. In fact, there is at least one group of individuals who appear to operate across the lifespan without well-specified phonological representations, and they are individuals with dyslexia (Ramus et al., 2003; Snowling, 2000; Vellutino et al., 2004). 
Even so, the ability to process language rapidly and accurately benefits greatly from being able to recover phonological structure from the ongoing speech signal.

The premise of the current study is that the acquisition of sensitivity to phonological structure depends on having keen sensitivity to acoustic patterns in speech, especially in the spectral domain. Spectral patterns in the speech signal serve to define both vowels (e.g., Hillenbrand et al., 1995; Peterson and Barney, 1952) and consonants (e.g., Harris, 1958; Stevens and Blumstein, 1978). The ability to recover these spectral patterns is undoubtedly a prerequisite for sensitivity to that phonological structure. Work with NH infants reveals that spectral processing abilities are immature at birth (Horn et al., 2017b) but are honed across childhood (Horn et al., 2017a; Kirby et al., 2015; Peter et al., 2014). Unfortunately, such developmental improvement in spectral processing has not been observed for children with CIs (DiNino and Arenberg, 2018; Horn et al., 2017a; Jung et al., 2012), which might explain their large phonological deficits. In the study reported here, congenitally deaf children who used CIs and age-matched peers with NH served as participants. These children had all reached adolescence, which should have provided ample time for both the auditory and language skills under investigation to have developed, even if on a delayed timetable.

The sensitivity of these adolescents to spectral patterns in acoustic signals was examined. The question of interest was whether that spectral processing is related to the acquisition of lexicosyntactic or phonological skills. Based on the work described above, two specific hypotheses were posited. First, it was hypothesized that prelingually deaf adolescents with CIs would be less sensitive to spectral modulation than adolescents with NH. Second, it was hypothesized that sensitivity to spectral modulation would be significantly correlated with the acquisition of phonological skills for adolescents with CIs, but not with the acquisition of lexicosyntactic skills. A similar relationship might be obtained for adolescents with NH, but a strong hypothesis concerning that relationship was difficult to formulate before the study. Spectral processing abilities might be optimal for all these adolescents, resulting in highly restricted variability that would reduce the likelihood that correlational analysis could reveal a significant relationship.

B. Measuring spectral processing

Three experimental methods have been used to assess spectral processing. In spectral modulation discrimination (also termed spectral ripple discrimination), the depth of modulation is held constant, and the number of spectral prominences is varied adaptively, which has the effect of modifying the width of those prominences (Drennan et al., 2014; Henry and Turner, 2003; Henry et al., 2005; Horn et al., 2017a; Jeon et al., 2015; Peter et al., 2014; Won et al., 2007). In this paradigm, an oddball stimulus has inverted spectral prominences compared to a standard stimulus, and thresholds are obtained for the maximum number of prominences for which the listener can discriminate this change in shape. With relatively deep modulations (e.g., 20–30 dB), NH adults can typically recognize close to ten prominences per octave (Henry et al., 2005; Horn et al., 2017a; Peter et al., 2014; Shim et al., 2019). Developmental increases in the number of prominences per octave that can be recognized are found for children with NH up to adolescence (Allen and Wightman, 1994; Horn et al., 2017a; Peter et al., 2014), but when this procedure is implemented with CI listeners, both adults and children generally show thresholds of less than two prominences per octave (Horn et al., 2017a). When these measures have been examined in relation to speech perception, correlations have been obtained. For example, Horn et al. (2017a) found significant relationships between spectral modulation discrimination thresholds and the signal-to-noise ratio associated with 50% correct word recognition.
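To make the standard/oddball contrast concrete, the sketch below expresses a spectral ripple as a sinusoid in level (dB) along a log-frequency axis; the inverted oddball is the same ripple shifted by half a cycle, so peaks and valleys trade places. The function name `ripple_levels_db`, the 0.1–5.0 kHz range, the density of 2 ripples per octave, and the peak-to-valley definition of depth are illustrative assumptions, not parameters taken from the studies cited above.

```python
import numpy as np


def ripple_levels_db(freqs_hz, density_rpo, depth_db, phase_rad, f_ref_hz=100.0):
    """Component levels (dB re: the mean level) for a sinusoidal spectral ripple.

    density_rpo: ripple density, in ripples (spectral prominences) per octave.
    depth_db: peak-to-valley depth in dB (an assumed convention).
    """
    octaves = np.log2(np.asarray(freqs_hz, dtype=float) / f_ref_hz)
    return (depth_db / 2.0) * np.sin(2.0 * np.pi * density_rpo * octaves + phase_rad)


freqs = np.geomspace(100.0, 5000.0, 800)
standard = ripple_levels_db(freqs, density_rpo=2.0, depth_db=30.0, phase_rad=0.0)
# The oddball has inverted prominences: the same ripple shifted by half a cycle (pi).
oddball = ripple_levels_db(freqs, density_rpo=2.0, depth_db=30.0, phase_rad=np.pi)
```

In an adaptive discrimination run of this kind, `density_rpo` would be the tracked variable while `depth_db` stays fixed.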

A modified version of the spectral modulation discrimination task has spectral prominences change dynamically in phase (i.e., drift) over the course of a single stimulus presentation (Aronoff and Landsberger, 2013). The purpose of this modification is to guard against listeners attending to only a narrow frequency slice of the stimulus, in the case of listeners with NH, or to the output of only a single electrode, in the case of listeners with CIs; it also guards against listeners basing this discrimination on the spectral centroid. Although this signal processing introduces temporal modulation, the stimuli remain sensitive to spectral processing abilities. Using this method, Landsberger et al. (2018) observed that spectral modulation discrimination develops across childhood (up to age 13 years) for children with NH but does not change over childhood for children with CIs; they also found that, for children with CIs, it was not related to age at implantation or years of implant experience. DiNino and Arenberg (2018) observed that thresholds obtained using the spectral-temporal modulation test of Aronoff and Landsberger are well correlated with vowel identification for children with NH and CIs alike. In a different study using the same method with children who use hearing aids (Kirby et al., 2019), significant correlations were found between spectro-temporal discrimination thresholds and both nonverbal intelligence and visual working memory. That result was interpreted as suggesting that cognitive abilities influence task performance. Because both additional tasks involved complex visual images, however, it is equally possible that these significant correlations indicate that a modality-independent ability involving perceptual organization was a common factor in both the auditory and visual tasks.
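A minimal sketch of the drifting-ripple idea follows: because the ripple phase advances with time, each component's level changes over the stimulus, so no single frequency region carries a stable cue. It is only loosely modeled on the description above; the function name, the 200 components, the 20-dB depth, the 5-Hz drift rate, and the frequency range are assumptions for illustration and are not the published parameters of Aronoff and Landsberger's test.

```python
import numpy as np


def drifting_ripple(density_rpo, drift_hz, depth_db=20.0, n_comp=200,
                    f_low=100.0, f_high=5000.0, dur_s=0.5, fs=44100, seed=0):
    """Waveform of a spectral ripple whose peaks glide along log frequency over time."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(round(dur_s * fs))) / fs
    freqs = np.geomspace(f_low, f_high, n_comp)
    octaves = np.log2(freqs / f_low)
    carrier_phases = rng.uniform(0.0, 2.0 * np.pi, n_comp)
    x = np.zeros_like(t)
    for f, oct_pos, ph in zip(freqs, octaves, carrier_phases):
        # Ripple phase advances with time, so this component's level varies over the stimulus.
        level_db = (depth_db / 2.0) * np.sin(2.0 * np.pi * (density_rpo * oct_pos + drift_hz * t))
        x += 10.0 ** (level_db / 20.0) * np.sin(2.0 * np.pi * f * t + ph)
    return x / np.sqrt(np.mean(x ** 2))  # rms-normalize


stim = drifting_ripple(density_rpo=2.0, drift_hz=5.0)
```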

In the spectral modulation detection (SMD) task, which was used in the study reported here, the rate of modulation (i.e., number of prominences) is held constant while the depth of modulation is varied. Adaptive procedures are used to measure the threshold for detection of modulation in a signal when compared to unmodulated signals of the same duration, bandwidth, and overall amplitude. These thresholds have been found to correlate with measures of speech recognition for adults with NH as well as for those with hearing loss, regardless of whether they used CIs (Anderson et al., 2012; Litvak et al., 2007) or hearing aids (Davies-Venn et al., 2015). Such thresholds, however, have never been measured in children, although Gifford et al. (2018) used a quick version of the test in which both modulation rate and depth are held constant within a set, and percent correct recognition scores are obtained. In this case, the speech recognition of children (5–17 years) with prelingual deafness who wore CIs was not found to correlate with spectral processing abilities, a finding that conflicted with results for adults with either postlingual or prelingual deafness showing that these measures were correlated for those listeners. However, interpretation was made difficult by the juxtaposition of the findings that the children had poorer overall spectral processing abilities than adults, as evaluated by the quick SMD task, but better overall speech recognition than the adults to whom they were compared.

C. Current study

Overall, the review above illustrates that most work investigating the relationship between spectral processing and speech perception has used acoustically complex stimuli to measure spectral processing: either both standard and target stimuli were composed of spectrally modulated structure (spectral modulation discrimination), or modulation was imposed in two dimensions (spectro-temporal modulation discrimination). When results of these tasks have been compared to speech processing, the measures of speech processing have been limited to recognition. The primary difference between the current study and previous similar studies is that this one focused on how sensitivity to spectral modulation affects language acquisition, rather than speech recognition, for adolescents with congenital hearing loss (HL) who use CIs. Therefore, a straightforward measure of sensitivity to spectral modulation seemed desirable, and the SMD task was selected. A low rate of modulation, 0.5 cycles per octave (cpo), was chosen because thresholds at low rates have been found to correlate with speech recognition (Anderson et al., 2012; Gifford et al., 2014; Litvak et al., 2007). Also, a low modulation rate should enhance the probability that all adolescents with CIs would be able to recognize the modulation, even if only with deep modulation: Horn et al. (2017a) reported that children between six and eight years of age recognized stimuli with a depth of 10 dB only at low modulation rates. Furthermore, the acoustic theory of speech production (Fant, 1960) demonstrates that the resonances of a uniform 17.5-cm tube closed at one end, roughly the length of a male vocal tract, are found at 500, 1500, 2500 Hz, etc., which corresponds to a low rate of spectral modulation. For all these reasons, this choice of modulation rate seemed appropriate and likely to elicit reliable and meaningful results.
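The 500, 1500, 2500 Hz figures follow from the quarter-wavelength resonances of a uniform tube closed at one end, F_n = (2n - 1)c/(4L). The short check below assumes a speed of sound of 35,000 cm/s, a conventional approximation for warm, humid air in the vocal tract; the function name is hypothetical.

```python
def tube_resonances(length_cm, n_resonances=3, c_cm_per_s=35000.0):
    """Resonances of a uniform tube closed at one end: F_n = (2n - 1) * c / (4 * L)."""
    return [(2 * n - 1) * c_cm_per_s / (4.0 * length_cm)
            for n in range(1, n_resonances + 1)]


formants_hz = tube_resonances(17.5)
print(formants_hz)  # [500.0, 1500.0, 2500.0]
```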
The first specific hypothesis tested by this study was that adolescents with CIs would demonstrate higher (i.e., poorer) thresholds for detecting spectral modulation in these signals than adolescents with NH.

The second specific hypothesis tested in this study was that adolescents with CIs would show relationships between SMD thresholds and the measures of phonological sensitivity, but not between those thresholds and measures of lexicosyntactic abilities. Three measures of lexicosyntactic skills and three measures of phonological skills were included in this study to provide adequate sampling of skills across these two domains. The lexicosyntactic skills evaluated were comprehension of syntactic structures, vocabulary knowledge, and grammaticality judgments. The last of these measures might also rely on sensitivity to phonological structure to some extent because the test instrument includes some judgments involving grammatical morphemes, which are often structured as additional (bound) phonological segments attached to free morphemes. Nonetheless, it seemed a reasonable skill to assess and expanded the range of lexicosyntactic skills under study. All three of those measures were standardized test instruments. The phonological skills that were evaluated included a test of sensitivity to word-internal phonemic structure, a test of phonological processing abilities, and a standardized measure of word segmentation abilities.

Although not an explicit hypothesis, another goal of this study was to examine whether any specific audiologic factor accounted for spectral processing abilities in the adolescents with CIs. The fundamental assumption going into this study was that CI users have poor spectral processing because of the degraded signal they receive. Therefore, it would be of heuristic value to identify the specific factors largely responsible for that poor spectral processing.

II. METHOD

A. Participants

One hundred eight adolescents participated in this study, immediately following completion of eighth grade. All these adolescents were participants in a longitudinal study of development in children with hearing loss, and most had been tested since they were infants (Nittrouer, 2010). Of these adolescents, 56 had NH (28 males), meaning that thresholds for the octave frequencies between 0.25 and 8.0 kHz were better than 20 dB hearing level in both ears. Another 52 adolescents wore CIs (23 males). Unaided thresholds were not collected at the time of testing for these adolescents.

Socioeconomic status (SES) is a factor that has been found to affect language performance such that children from lower SES homes perform more poorly (Hart and Risley, 1995; Hoff and Tian, 2005; Nittrouer, 1996; Nittrouer and Burton, 2005). In this study, SES was assessed using an index that has been used before (e.g., Nittrouer and Burton, 2005). On this scale, occupational status and highest educational level are ranked on scales from 1 to 8, from lowest to highest, for each parent in the home. These scores are multiplied together, for each parent, and the highest value obtained is used as the SES metric for the family. On this scale, scores of 30 and above indicate that at least one parent had a four-year university degree or better and a job commensurate with that level of education.
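The SES computation described above can be written out as follows. This is a sketch that assumes each parent's occupational and educational ranks are already coded on the 1–8 scales; the function name and the example household are hypothetical.

```python
def ses_index(parents):
    """parents: list of (occupation_rank, education_rank) tuples, each ranked 1-8.

    Each parent's score is the product of the two ranks; the family's SES
    metric is the highest score among the parents in the home (range 1-64).
    """
    scores = []
    for occupation, education in parents:
        if not (1 <= occupation <= 8 and 1 <= education <= 8):
            raise ValueError("ranks must be between 1 and 8")
        scores.append(occupation * education)
    return max(scores)


# Hypothetical two-parent household
print(ses_index([(6, 6), (4, 5)]))  # 36
```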

Cognitive abilities, especially working memory, are often cited as factors that contribute to variability in speech recognition for listeners with HL (Figueras et al., 2008; Kronenberger et al., 2014; Moberly et al., 2018; Pisoni et al., 2011), and one group of investigators (Kirby et al., 2019) found cognitive abilities to correlate with spectral modulation discrimination. Many measures of cognitive ability, however, require language either for giving directions or for collecting responses, which confounds the assessment of cognitive abilities with language abilities. To assess cognitive skills in a strictly nonlinguistic manner, the Leiter International Performance Scale–Revised (Roid and Miller, 2002) was used. In this assessment instrument, no verbal instructions are given, and all responses are nonverbal. Four subtests form what is termed the “brief IQ” score. These subtests assess (1) figure-ground perception, involving the location of figures embedded in complex visual backgrounds; (2) form completion, involving the mental assembly of fragmented pictures to derive composite visual forms; (3) sequencing abilities, involving the identification of appropriately placed forms in visual sequences; and (4) repeated patterns, involving the identification of correct items to complete figural patterns. Thus, all these tasks involve perceptual organization of complex visual patterns. This brief IQ score was used in this study to measure nonverbal cognitive abilities in these adolescents and will be termed nonverbal IQ.

Working memory can be defined as the ability to temporarily store information for mental operations, and variability in this function can also help explain language outcomes in adolescents with HL, especially those who receive CIs (Cleary et al., 2000; Geers et al., 2013; Nittrouer et al., 2013). The forward digit span test of the Wechsler Intelligence Scale for Children (Wechsler, 1991) is commonly used to assess working memory and was used in this study. Unlike most administrations, however, a computer program was used to present the digits, which were audio-recorded by a male talker. After the digits were presented auditorily, they appeared at the top of a touchscreen monitor, and the adolescent's task was to tap them in the order recalled.

Although digit span is a commonly used task of working memory, it involves verbal working memory and, as such, depends on subjects' abilities to recover phonological structure in the phonological loop for coding in a working memory buffer (Baddeley and Hitch, 2019). Consequently, a strictly visual measure of working memory was also obtained. This task was similar to a Corsi-block task but did not measure memory span. Instead, the adolescent sat in front of a computer monitor that displayed a 3 × 2 matrix of blocks. Each of the six blocks lit up in sequence, one at a time, with a display time of 1 s and an onset-to-onset interval of 1.5 s. The adolescent's task was then to touch the blocks in the order in which they had lit up. Ten trials were administered, for a total of 60 block presentations. The dependent measure was the percentage of the 60 blocks touched in the correct serial position.
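Scoring by serial position can be sketched as below. The sketch assumes each trial presents each of the six blocks once (10 trials × 6 blocks = 60 presentations) and that a block is credited only when it is touched in the same position it occupied in the lit sequence; the function name, the block labels 0–5, and the example responses are hypothetical.

```python
def spatial_memory_score(trials):
    """trials: list of (presented_order, touched_order) pairs of block labels.

    Returns the percentage of presented blocks touched in the correct serial position.
    """
    presented_total = 0
    correct = 0
    for presented, touched in trials:
        presented_total += len(presented)
        for position, block in enumerate(presented):
            # Credit only a match at the same serial position.
            if position < len(touched) and touched[position] == block:
                correct += 1
    return 100.0 * correct / presented_total


order = [0, 1, 2, 3, 4, 5]
# Nine perfect trials plus one trial with the second and third blocks swapped
trials = [(order, order)] * 9 + [(order, [0, 2, 1, 3, 4, 5])]
print(round(spatial_memory_score(trials), 1))  # 96.7
```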

Table I displays means and SDs for each group for five demographic variables: age, SES, nonverbal IQ, forward digit span, and visual spatial memory. The only one of these variables that differed significantly across groups was forward digit span, t(106) = 4.55, p < 0.001, Cohen's d = 0.86.
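As a rough consistency check, Cohen's d for forward digit span can be recomputed from the rounded means and SDs in Table I. This sketch assumes the pooled-SD form of d; because the tabled values are rounded, it reproduces d = 0.86 but yields a t of roughly 4.5 rather than exactly 4.55.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled SD of two independent groups."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled_sd


# Forward digit span: NH (n = 56) versus CI (n = 52), values from Table I
d = cohens_d(6.5, 1.3, 56, 5.5, 1.0, 52)
t = d / math.sqrt(1 / 56 + 1 / 52)   # equivalent independent-samples t, df = 106
print(round(d, 2))  # 0.86
```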

TABLE I.

Mean (M) scores (and SDs) for each group for demographic variables. Socioeconomic status is on a 64-point scale. Nonverbal IQ is from the Leiter International Performance Scale, and standard scores are shown. Visual spatial memory is the percentage of blocks identified in the correct serial position out of 60. N = 56 for adolescents with normal hearing and 52 for adolescents with cochlear implants.

| | Normal hearing M | Normal hearing SD | Cochlear implants M | Cochlear implants SD |
|---|---|---|---|---|
| Age (years, months) | 14, 5 | 0, 6 | 14, 8 | 0, 5 |
| Socioeconomic status | 37 | 14 | 33 | 11 |
| Nonverbal IQ | 106 | 13 | 103 | 14 |
| Forward digit span | 6.5 | 1.3 | 5.5 | 1.0 |
| Visual spatial memory | 88.0 | 9.4 | 85.3 | 9.8 |

Because these adolescents had participated in the longitudinal study, audiometric and treatment data were available for those with CIs. Median ages (and SDs) of reaching four specific age-related benchmarks were as follows: (1) identification of hearing loss = 3.0 months (6.6 months); (2) receiving a first hearing aid (HA) = 5.0 months (6.5 months); (3) beginning early intervention = 6.0 months (7.0 months); and (4) receiving a first CI = 14.5 months (29.2 months). All these adolescents wore HAs prior to receiving a first CI. The median pure-tone average threshold (three-frequency average for 0.5, 1.0, and 2.0 kHz) in the better ear just prior to implantation was 100 dB hearing level (16 dB), and the median pure-tone aided threshold (four-frequency average for 0.5, 1.0, 2.0, and 4.0 kHz), measured free-field at the time of testing, was 20 dB hearing level (4.8 dB). Fifteen of these adolescents with CIs had just one CI and did not use a HA on the other ear. Of those 15 adolescents, five had a CI in the unaided ear but chose not to use it; in all cases, it was the second CI received. Median unaided pure-tone average threshold (three-frequency) in the unaided ear for those 15 adolescents was 110 dB hearing level (17 dB). Thirty-four adolescents had bilateral CIs, and three adolescents used a CI in one ear and a HA in the other ear. The median age of receiving a second CI, for those who had a second, was 46.5 months (37.3 months). Exactly half of the adolescents with CIs wore a HA on the other ear for a year or more after receiving their first CI; the other half either received bilateral CIs simultaneously or did not have any amplification for the unaided ear between sequential CI surgeries.
Of the 26 adolescents who had bimodal experience at the time of receiving a first CI, five adolescents simply stopped wearing a HA at some point, 18 adolescents got a CI in the ear with the HA before the time of this testing, and three adolescents continued to use a bimodal configuration. Parents of all adolescents kept up with advances in processors as well as they could and had the maps of their children's CIs checked annually. Table II shows CI manufacturers and processors for adolescents with one and two CIs as well as for those with bimodal stimulation at the time of this testing.
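The two pure-tone averages mentioned above differ only in the frequencies included. A minimal sketch follows; the function name and the audiogram values are hypothetical and serve only to show the arithmetic.

```python
def pure_tone_average(thresholds_db_hl, freqs_khz):
    """Mean threshold (dB HL) across the requested audiometric frequencies (kHz)."""
    missing = [f for f in freqs_khz if f not in thresholds_db_hl]
    if missing:
        raise KeyError(f"no threshold at {missing} kHz")
    return sum(thresholds_db_hl[f] for f in freqs_khz) / len(freqs_khz)


THREE_FREQ = (0.5, 1.0, 2.0)        # three-frequency average
FOUR_FREQ = (0.5, 1.0, 2.0, 4.0)    # four-frequency average
audiogram = {0.25: 90, 0.5: 95, 1.0: 100, 2.0: 105, 4.0: 110}  # hypothetical ear
print(pure_tone_average(audiogram, THREE_FREQ))  # 100.0
print(pure_tone_average(audiogram, FOUR_FREQ))   # 102.5
```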

TABLE II.

Implant manufacturers and processor configurations for the adolescents with cochlear implants. Note that one adolescent was implanted with one Cochlear Corporation implant (Nucleus 5 processor) and one Advanced Bionics implant (Auria processor).

| Manufacturer | Two CIs | One CI | Bimodal |
|---|---|---|---|
| Cochlear Corporation | 18 adolescents | 7 adolescents | 1 adolescent |
| | Nucleus 6: 12 | Nucleus 7: 3 | Nucleus 6: 1 |
| | Nucleus 5: 4 | Nucleus 5: 2 | Phonak HA: 1 |
| | Nucleus 7: 1 | Nucleus 6: 1 | |
| | Freedom: 1 | Freedom: 1 | |
| Advanced Bionics | 14 adolescents | 6 adolescents | 2 adolescents |
| | Naida: 9 | Naida: 5 | Naida: 2 |
| | Auria: 2 | Neptune: 1 | Phonak HA: 2 |
| | Harmony: 2 | | |
| | Neptune: 1 | | |
| MedEl | 1 adolescent | 2 adolescents | |
| | Opus 2: 1 | Opus 2: 1 | |
| | | Sonnet: 1 | |

The etiologies of hearing loss for many of these adolescents were unknown (N = 33). Eleven had a connexin 26 deficit. Six were diagnosed with enlarged vestibular aqueduct syndrome, although all were identified at or near birth. One had a mitochondrial mutation, and one was diagnosed with prenatal cytomegalovirus. Most importantly, children were excluded at the time of potential enrollment if they presented with any obvious etiology that could, on its own, be suspected of increasing the risk of developmental language delay.

All these children were mainstreamed in their local schools and had been since they began kindergarten. Prior to entering kindergarten, they had all received intervention services at least once a week from a provider with at least a master's degree in an area related to hearing loss, such as teacher of the deaf or speech-language pathology; from three years to the start of kindergarten, this intervention took the form of preschool. All parents of these children confirmed that it was their intention for their children to grow up with spoken language as their primary mode of communication, although 18 of the children received sign language support in their early intervention programs (including preschools) in addition to listening and spoken language. All these children had parents who had NH or hearing corrected to normal with HAs, and English was the only language spoken to the children in the home.

B. Equipment

All testing was conducted in a soundproof booth. All acoustic stimuli were presented through a computer equipped with a Creative Labs (Singapore) Soundblaster soundcard, a Samson (Hicksville, NY) C-Que 8 mixer, and a Roland (Hamamatsu, Japan) MA-12C powered speaker. The speaker was placed 1 m in front of the adolescent at 0° azimuth. This system used 16-bit digitization and had a flat frequency response. All acoustic signals were presented at 68 dB sound pressure level.

Stimuli for two of the lexicosyntactic tasks (sentence comprehension and grammaticality judgments) were video-recorded by a female talker and presented in audio-video format on a computer rather than by live voice. Materials for two of the phonologic tasks (final consonant choice and backward words) were video-recorded by a male talker, and materials for the third phonologic task (word segmentation) were video-recorded by a female talker. All were presented in audio-video format on a widescreen monitor at a rate of 1500 kilobits/s. Presenting stimuli in audio-video format ensured that all adolescents received identical presentations and helped ensure that all adolescents could recognize the stimuli.

Adolescents were video-recorded during testing of all lexicosyntactic and phonologic tasks. This was done so that the scoring of the examiner at the time of testing could be checked later by an independent laboratory member. To do this, a SONY (Tokyo, Japan) HDR-XR550V video recorder was used. Adolescents wore SONY FM transmitters, with the signal going to the video recorder, to ensure good sound quality.

MATLAB code was written for stimulus presentation and response recording for the following tasks: forward digit span, visual working memory, SMD, and two of the phonologic tasks.

C. Stimuli and procedures

All procedures were approved by the local institutional review board. Adolescents visited the laboratory on two successive days, in groups of three to six. They were tested in six 1-h sessions across the two days, with 1-h breaks between sessions. In addition to the measures reported here, data were also collected on reading proficiency, oral and written narrative abilities, speech-in-noise recognition, and socio-emotional development.

1. SMD

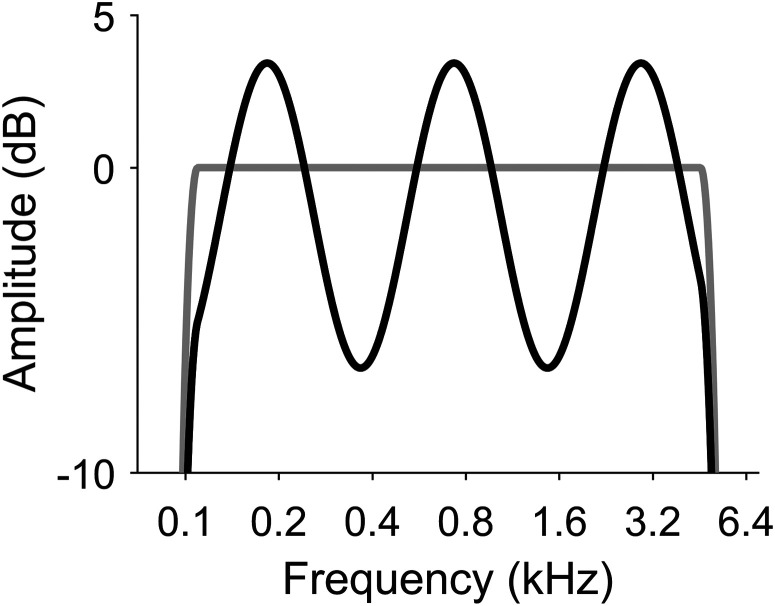

Stimuli were generated with 800 random-phase sinusoidal components, logarithmically spaced between 0.1 and 5.0 kHz, using a sampling rate of 44.1 kHz. For all stimuli, the amplitudes of the components between 0.1 kHz and 1/4 octave above it, and between 5.0 kHz and 1/4 octave below it, were shaped with cosine-squared ramps to eliminate the possibility of audible artifacts associated with the spectral edges. All stimuli were 500 ms long, with 20-ms on and off ramps. The standard stimuli were unmodulated. Target stimuli were spectrally modulated at a rate of 0.5 cpo. On each trial, the starting phase of the modulation envelope was chosen randomly with uniform probability in a range from 0 to 2π. A speech-shaped filter was applied to all stimuli, as others have done (e.g., Henry and Turner, 2003; Henry et al., 2005). Root mean square (rms) amplitude remained constant across standard and target stimuli within each trial. Figure 1 shows an unmodulated signal as a gray line and a modulated signal as a black line, although the speech-shaped filter is not shown on these spectra.
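The stimulus description above can be sketched in Python with NumPy as follows. This is not the authors' MATLAB code; it assumes that modulation depth is the peak-to-valley level difference in dB, that the spectral-edge taper rises as a cosine-squared shape from zero at each band edge, and that the 20-ms temporal ramps are raised-cosine. The speech-shaped filter is omitted, and all names are hypothetical.

```python
import numpy as np

FS = 44100                      # sampling rate (Hz)
N_COMP = 800                    # number of random-phase sinusoids
F_LOW, F_HIGH = 100.0, 5000.0   # band edges (Hz)
DUR_S, RAMP_S = 0.5, 0.02       # 500-ms stimuli with 20-ms onset/offset ramps
RATE_CPO = 0.5                  # modulation rate (cycles per octave)


def edge_taper(freqs, width_oct=0.25):
    """Cosine-squared gain: 0 at each band edge, reaching 1 at 1/4 octave inside it."""
    dist_oct = np.minimum(np.log2(freqs / F_LOW), np.log2(F_HIGH / freqs))
    x = np.clip(dist_oct / width_oct, 0.0, 1.0)
    return np.sin(0.5 * np.pi * x) ** 2


def smd_stimulus(depth_db, rng):
    """One observation interval; depth_db = 0 yields the unmodulated standard."""
    n = int(round(DUR_S * FS))
    t = np.arange(n) / FS
    freqs = np.geomspace(F_LOW, F_HIGH, N_COMP)   # logarithmic spacing
    # Ripple in level (dB) along log frequency, with a random starting phase.
    env_phase = rng.uniform(0.0, 2.0 * np.pi)
    level_db = (depth_db / 2.0) * np.sin(
        2.0 * np.pi * RATE_CPO * np.log2(freqs / F_LOW) + env_phase)
    amps = edge_taper(freqs) * 10.0 ** (level_db / 20.0)
    comp_phases = rng.uniform(0.0, 2.0 * np.pi, N_COMP)
    x = np.zeros(n)
    for a, f, p in zip(amps, freqs, comp_phases):
        x += a * np.sin(2.0 * np.pi * f * t + p)
    # Raised-cosine onset and offset ramps (ramp shape is an assumption).
    n_ramp = int(round(RAMP_S * FS))
    ramp = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    x[:n_ramp] *= ramp
    x[-n_ramp:] *= ramp[::-1]
    # Equalize rms so overall level cannot cue the modulated interval.
    return x / np.sqrt(np.mean(x ** 2))


rng = np.random.default_rng(2024)
standard_a = smd_stimulus(0.0, rng)
standard_b = smd_stimulus(0.0, rng)
target = smd_stimulus(12.0, rng)   # e.g., a 12-dB modulation depth
```

In a three-interval trial, the target would be placed at a random position among the two standards before presentation.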

FIG. 1.

Schematic illustration of stimuli in the SMD task. The gray line represents unmodulated signals (standards), and the black line represents the target, modulated signals. Speech-shaped filters are not depicted.

Adolescents were tested on this task with free-field presentation. Adolescents with CIs used their customary CI settings. Unimplanted ears of adolescents with unilateral CIs were not plugged, because it was concluded that none of these adolescents had sufficient residual hearing to process stimuli in those ears. A three-alternative, forced-choice procedure was used in an adaptive paradigm designed to find the 70.7% threshold, which meant that a two-down, one-up procedure was followed (Levitt, 1971). Starting modulation depth was 30 dB. Step size was 4 dB for the first four reversals and changed to 2 dB for the last eight reversals. Threshold was calculated as the mean of those last eight reversals. The SD of the eight reversal values used to compute each threshold (SMD-SD) served as an index of attention to the task; it was computed for each adolescent individually and should not be confused with SDs computed on group means. SMD-SD would be expected to be higher for adolescents who were less attentive. Each adolescent was tested twice, with testing on other tasks in between. Final SMD thresholds (in dB) used in analyses were the means across the two tests, and the final SMD-SDs (in dB) were the mean SMD-SDs across the two tests.
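The adaptive rule above can be sketched as follows. The sketch records a reversal at the depth where the track changes direction, uses the sample SD for SMD-SD, and floors depth at 0 dB; those conventions, the function names, and the simulated listener are assumptions for illustration rather than details reported for this study.

```python
import math
import random
import statistics


def run_staircase(respond, start_db=30.0, big_step=4.0, small_step=2.0,
                  n_big=4, n_small=8, floor_db=0.0, max_trials=500):
    """Two-down, one-up adaptive track (converges near 70.7% correct).

    respond(depth_db) returns True when the listener picks the modulated interval.
    Returns (threshold, sd) computed over the last n_small reversals.
    """
    depth = start_db
    n_correct = 0
    direction = 0        # -1: last step lowered depth (harder); +1: raised it (easier)
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_big + n_small:
            break
        if respond(depth):
            n_correct += 1
            if n_correct < 2:
                continue             # need two correct in a row before stepping down
            n_correct = 0
            new_direction = -1
        else:
            n_correct = 0
            new_direction = +1       # a single error steps the depth back up
        if direction != 0 and new_direction != direction:
            reversals.append(depth)  # depth at which the track changed direction
        direction = new_direction
        step = big_step if len(reversals) < n_big else small_step
        depth = max(floor_db, depth + new_direction * step)
    if len(reversals) < n_big + n_small:
        raise RuntimeError("track ended before enough reversals were collected")
    last = reversals[-n_small:]
    return statistics.mean(last), statistics.stdev(last)


rng = random.Random(7)


def simulated_listener(depth_db, true_threshold_db=10.0, slope=0.5):
    # Hypothetical psychometric function with a 1/3 guessing floor (3AFC).
    p_detect = 1.0 / (1.0 + math.exp(-slope * (depth_db - true_threshold_db)))
    return rng.random() < 1.0 / 3.0 + (2.0 / 3.0) * p_detect


runs = [run_staircase(simulated_listener) for _ in range(2)]
final_threshold = statistics.mean(r[0] for r in runs)   # mean across the two tests
final_sd = statistics.mean(r[1] for r in runs)          # mean SMD-SD across tests
```

With a deterministic listener who detects any depth of 10 dB or more, the last eight reversals alternate between 8 and 10 dB, giving a threshold of 9 dB.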

2. Lexicosyntactic stimuli

Three standardized measures of lexicosyntactic knowledge and processing abilities were examined in this study.

a. Sentence comprehension.

Comprehension of syntactic structures was assessed through the Sentence Comprehension of Syntax subtest from the Comprehensive Assessment of Spoken Language (CASL) (Carrow-Woolfolk, 1999). In this test, pairs of sentences differing in syntactic structure are presented. Each of the 21 test items consists of two pairs of sentences (i.e., four sentences per item). The first sentence in each pair is the same, but the second sentence differs. After being presented with a single pair of sentences, the participant must indicate whether the sentences have the same meaning with a “yes” or “no” response. The participant must correctly respond to both pairs in an item to get credit for that item. Testing stops after five consecutive errors. Two practice pairs are provided before testing. This subtest is sensitive to comprehension of complex syntax.

b. Vocabulary.

Vocabulary was assessed using the Expressive One-Word Picture Vocabulary Test–4 (Martin and Brownell, 2011). In this task, participants are shown a series of pictures, and they must label each one in turn by saying the word represented. Testing is discontinued after six consecutive errors.

c. Grammaticality judgments.

Finally, the Grammaticality Judgment subtest of the CASL was administered. This task consists of the presentation of single sentences that may or may not be grammatically correct. The child must decide if the sentence is correct and provide a corrected version if it is reported as being incorrect. Participants receive a point for accurately identifying a sentence as correct or incorrect and a further point for providing a corrected version of an incorrect item. The test has 57 items (46 incorrect and 11 correct), making a total of 103 possible points. This task differs from the sentence comprehension subtest in that grammatical morphemes are often manipulated to render sentences inaccurate. That manipulation has been found to result in scores on this task loading higher on a phonologic latent factor than scores on either the vocabulary or sentence comprehension task (Nittrouer et al., 2018). Testing is discontinued after errors on five consecutive items. Three practice sentences are presented before testing.

3. Phonological stimuli

Three measures were used to assess adolescents' sensitivity to phonological structure and their abilities to manipulate that structure. The first two measures have been used previously and are known to be reliable (e.g., Nittrouer et al., 2011; Nittrouer et al., 2016; Stanovich et al., 1984); the third measure derives from a standardized instrument. Again, stimuli for all three tasks were presented in audio-video format, so adolescents were able to recognize stimuli accurately.

a. Phonological sensitivity.

The final consonant choice task measured adolescents' sensitivity to phonological structure at the ends of words. In this task, a target word spoken by a talker was audio-visually presented, and the adolescent repeated it. If the adolescent mis-repeated it, the target was replayed up to three times until the adolescent correctly repeated it. This was an uncommon event: the median number of targets that needed to be repeated (out of 48) for adolescents with NH was less than one, and the median number of targets that needed to be repeated for adolescents with CIs was 2. After the adolescent correctly repeated the target, three words were presented, again in audio-video format. The adolescent had to select the word that ended in the same sound as the target. This task had a total of 48 test items and six practice items. Testing was discontinued after six consecutive errors. Test items can be found in Appendix A.

b. Phonological processing.

The backward words task assessed adolescents' abilities to manipulate phonological structure. On each trial, the adolescent was presented with a target word and repeated it; again, the target could be replayed up to three times. For this task, the median number of target words that needed to be replayed (out of 48) was less than one for adolescents with NH and one for adolescents with CIs. Next, the adolescent had to produce the word that resulted from reversing the order of phonemes within the target word. This task had a total of 48 test items and three practice items. Testing was discontinued after six consecutive errors. Test items can be found in Appendix B.

c. Word segmentation.

The third task was the Segmenting Words subtest from the Comprehensive Test of Phonological Processing (Wagner et al., 1999). In this task, the adolescent was presented with a word, repeated it, and then said each of the phonemes in the word separated by pauses, demonstrating the ability to isolate phonemes. The subtest has 20 words, varying in length from one to three syllables and containing a total of 89 phonemes, plus five practice words. Typically, testing is discontinued after three consecutive errors, and the test is scored as the number of whole words segmented correctly before that discontinue criterion is reached. In this experiment, however, all target words were presented, and the score was the number of individual phonemes the adolescent segmented correctly, even when not all phonemes in a word were segmented appropriately. This scoring provided a more sensitive measure of the skill because adolescents could receive points for partially correct responses.
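The difference between the two scoring schemes can be illustrated with a small sketch. The per-word representation (phonemes segmented correctly, phonemes in the word), the function names, and the responses are all hypothetical; the sketch only shows why phoneme-level credit over all 20 words is more sensitive than whole-word credit with a discontinue rule.

```python
def whole_word_score(words, ceiling=3):
    """Standard-style scoring: fully segmented words until `ceiling` consecutive misses."""
    score = misses = 0
    for correct, total in words:
        if correct == total:
            score, misses = score + 1, 0
        else:
            misses += 1
            if misses == ceiling:
                break      # discontinue criterion reached
    return score


def phoneme_score(words):
    """Scoring as described for this study: credit for every correctly segmented phoneme."""
    return sum(correct for correct, _ in words)


# Hypothetical responses: (phonemes segmented correctly, phonemes in word)
responses = [(2, 2), (3, 3), (1, 3), (2, 4), (0, 2), (3, 3)]
print(whole_word_score(responses), phoneme_score(responses))  # prints 2 11
```

Here the whole-word score stops at 2 after three consecutive partial misses, whereas the phoneme score also credits the partial segmentations and the word presented after the discontinue point.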

4. Scoring and analyses

Audio-video recordings of the lexicosyntactic and phonologic tasks, which were scored by the experimenter present during testing, were reviewed by a laboratory staff member who had not been present at the time of testing and was not familiar with the adolescent being tested. This staff member watched the recording and assessed whether the experimenter's scoring was accurate. Any discrepancy was resolved in favor of this second reviewer, who could replay the recording as necessary. Few scores needed to be changed, and those that did were typically due either to the adolescent self-correcting after the experimenter had entered a response or to the experimenter accidentally hitting a wrong key.

All data were entered into a data file by one staff member and subsequently checked by a second staff member. All scores in the form of proportions, which included the final consonant choice and backward words tasks, were transformed to rationalized arcsine units (RAUs; Studebaker, 1985). Data were screened for normality of distribution and homogeneity of variance; all met appropriate criteria, so no further transformations were applied. Group differences were analyzed with t-tests, with effect sizes given as Cohen's ds, computed as the difference between means divided by the pooled SD. Pearson product-moment correlation coefficients represented the relationship between two variables. Confirmatory factor analysis was performed, and latent factors of lexicosyntactic and phonological measures were derived.
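For reference, the standard Studebaker (1985) transformation of X correct out of N items is θ = arcsin√(X/(N + 1)) + arcsin√((X + 1)/(N + 1)) (in radians), RAU = (146/π)θ − 23. A minimal sketch follows; applying it to raw item counts in exactly this way is an assumption about the study's procedure, and the function name `rau` is hypothetical.

```python
import math


def rau(n_correct, n_items):
    """Rationalized arcsine transform (Studebaker, 1985) of n_correct out of n_items."""
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1)))
             + math.asin(math.sqrt((n_correct + 1) / (n_items + 1))))  # radians
    return (146.0 / math.pi) * theta - 23.0


# 48-item tasks (final consonant choice, backward words)
mid_score = rau(24, 48)    # exactly 50 RAU
top_score = rau(48, 48)    # about 116.3 RAU
```

The transform keeps mid-range scores close to percent correct while stretching the scale near floor and ceiling, which is what makes proportions better suited to t-tests and correlations.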

III. RESULTS

A. SMD

Mean SMD threshold (and SD) was 7.5 dB (2.8 dB) for adolescents with NH and 9.8 dB (5.3 dB) for adolescents with CIs. A t-test revealed a significant difference between groups, t(106) = –2.77, p = 0.007, Cohen's d = –0.53.

The reliability of these measures was assessed next. First, differences across the two test runs were examined. Mean absolute differences (and SDs) between runs were 2.3 dB (2.9 dB) for adolescents with NH and 2.7 dB (2.7 dB) for adolescents with CIs, and a t-test did not reveal a significant difference between groups. The overall mean difference of 2.5 dB between runs, only slightly larger than the final 2-dB step size, was considered reasonable and an indication that the methods produced reliable results.

The next concern addressed had to do with variability of responding within a test run. In particular, a reasonable concern could be raised that if adolescents with CIs were not attending as well as NH adolescents to the task, their SMD thresholds could have been higher due to a general lack of attention. The measure of SMD-SD was examined as a way to assess this concern, with the view that adolescents who were inattentive would show greater variability around their thresholds. Mean SMD-SDs (and SDs) were 3.5 dB (0.5 dB) for adolescents with NH and 3.5 dB (0.7 dB) for adolescents with CIs, so there was no difference across groups. This overall mean SMD-SD of 3.5 dB was considered reasonable and an indication that adolescents in both groups paid attention to the task.

Finally, the potential effects of demographic and audiologic factors were examined. First, demographic factors were examined for both groups. To do this, Pearson product-moment correlation coefficients between SMD thresholds and those factors were generated, for adolescents with NH and those with CIs separately. Although means and SDs were similar for both groups on the factors of interest (except for forward digit span), it was possible that these factors could exert different effects across groups. The demographic variables of SES, age at the time of testing, nonverbal IQ scores, digit span, and spatial memory were examined. Of these, only the correlation between nonverbal IQ and SMD threshold was significant, and only for the adolescents with NH, r(56) = –0.372, p = 0.005. Subsequently, this relationship was examined for a potential influence on any observed relationship between SMD thresholds and either lexicosyntactic or phonological abilities; those outcomes are reported in Sec. III C1. Gender was examined for potential differences in SMD thresholds using t-tests, but no significant difference was observed for either group.

For adolescents with CIs, potential effects were examined for the five age-related benchmarks described in Sec. II A, as well as for pre-implant unaided pure-tone average thresholds and aided thresholds at the time of testing. None of these audiologic measures was correlated with SMD thresholds. SMD thresholds did not differ as a function of CI manufacturer, nor between adolescents who had a period of bimodal experience when they received their first implant and those who did not. There was a significant difference, however, between adolescents who used one CI at the time of testing without an HA on the other ear (mean = 12.3 dB, SD = 7.4 dB) and those who used two CIs (mean = 8.8 dB, SD = 3.9 dB), t(47) = 2.166, p = 0.035, Cohen's d = 0.59. The mean SMD threshold for the three adolescents using bimodal stimulation (mean = 8.3 dB, SD = 3.0 dB) was similar to that of the adolescents with two CIs.

B. Lexicosyntactic and phonological skills

The first step in the analysis of these dependent measures was a confirmatory factor analysis to determine whether the characterization of specific measures as lexicosyntactic or phonologic was accurate. To this end, a principal components analysis with varimax rotation was performed on six measures: sentence comprehension raw scores, vocabulary raw scores, grammaticality judgment raw scores, final consonant choice RAUs, backward words RAUs, and word segmentation raw scores. Raw scores rather than standard scores were used for measures for which standard scores could be derived, because standard scores are normed on the chronological age of the child. Although all these adolescents were tested at the end of eighth grade, their chronological ages spanned roughly two years, so age norming would constrain the true variability in skill across these grade-matched adolescents. Table III shows the results of this analysis and indicates that each measure loaded primarily on a factor that can be associated with its proposed category: lexicosyntactic or phonologic.

TABLE III.

Results from principal components analysis performed on six measures. Bold numbers indicate the primary factor loading for each measure.

| | Component 1 | Component 2 |
|---|---|---|
| Lexicosyntactic skills | | |
| Sentence comprehension | **0.820** | 0.203 |
| Vocabulary | **0.841** | 0.122 |
| Grammaticality judgments | **0.768** | 0.358 |
| Phonological skills | | |
| Final consonant choice | 0.144 | **0.821** |
| Backward words | 0.315 | **0.810** |
| Word segmentation | 0.194 | **0.823** |
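An analysis of this kind can be sketched generically: principal components are extracted from the correlation matrix of the measures, two components are retained, and the loadings are rotated with the widely used SVD-based varimax iteration. This is not the authors' software; the function names and the synthetic two-factor data below are hypothetical, chosen only to show the block structure that Table III reports.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Unrotated principal-component loadings from the correlation matrix (rows = subjects)."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)            # eigenvalues in ascending order
    keep = np.argsort(eigvals)[::-1][:n_components]    # indices of the largest ones
    return eigvecs[:, keep] * np.sqrt(eigvals[keep])   # scale eigenvectors into loadings


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation via the common SVD-based iteration."""
    n_vars, n_factors = loadings.shape
    rotation = np.eye(n_factors)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        col_ss = np.sum(rotated ** 2, axis=0)          # column sums of squared loadings
        gradient = loadings.T @ (rotated ** 3 - rotated * col_ss / n_vars)
        u, s, vt = np.linalg.svd(gradient)
        rotation = u @ vt
        new_criterion = s.sum()
        if criterion and new_criterion < criterion * (1 + tol):
            break                                      # criterion stopped improving
        criterion = new_criterion
    return loadings @ rotation


# Hypothetical data: three measures driven by factor A, three by factor B, plus noise
rng = np.random.default_rng(0)
factors = rng.standard_normal((500, 2))
data = np.hstack([0.8 * factors[:, [0]] + 0.6 * rng.standard_normal((500, 3)),
                  0.8 * factors[:, [1]] + 0.6 * rng.standard_normal((500, 3))])
rotated_loadings = varimax(pca_loadings(data, 2))
primary = np.argmax(np.abs(rotated_loadings), axis=1)  # component each measure loads on
print(np.round(rotated_loadings, 2))
print(primary)
```

Varimax keeps the components orthogonal while pushing each measure toward one dominant loading, which is what allows each row of a table like Table III to be read as belonging to one factor.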

Next, group differences in the observed measures were examined. Table IV displays means and SDs. This table includes mean raw scores for the lexicosyntactic measures, which are the scores used in further analyses. Mean standard scores, however, provide a more interpretable snapshot of overall language proficiency, so Table V displays mean standard scores (and SDs) for sentence comprehension, expressive vocabulary, and grammaticality judgments. Mean scaled scores (and SDs) for word segmentation are also shown; these were derived using the standard test protocol, including the discontinue rule of three consecutive incorrect responses. Although mean scores for the adolescents with CIs were at or near normative means, adolescents in this group were not performing as well as their well-matched peers with NH.

TABLE IV.

Mean (M) scores (and SDs) for each group, for lexicosyntactic and phonological measures. Raw scores are shown for sentence comprehension, vocabulary, grammaticality judgments, and word segmentation. Rationalized arcsine units are shown for final consonant choice and backward words. N = 56 for adolescents with normal hearing, and 52 for adolescents with cochlear implants.

| | Normal hearing, M | Normal hearing, SD | Cochlear implants, M | Cochlear implants, SD |
|---|---|---|---|---|
| Lexicosyntactic skills | | | | |
| Sentence comprehension | 17.63 | 2.58 | 15.54 | 4.52 |
| Vocabulary | 142.29 | 13.80 | 132.37 | 17.15 |
| Grammaticality judgments | 68.71 | 9.30 | 57.65 | 15.20 |
| Phonological skills | | | | |
| Final consonant choice | 93.64 | 9.43 | 73.79 | 20.38 |
| Backward words | 76.15 | 21.12 | 61.03 | 28.70 |
| Word segmentation | 57.95 | 23.91 | 52.15 | 25.33 |

TABLE V.

Mean (M) standard scores (and SDs) for each group for sentence comprehension, vocabulary, and grammaticality judgments; mean scaled scores (and SDs) for word segmentation. N = 56 for adolescents with normal hearing, and 52 for adolescents with cochlear implants.

| | Normal hearing, M | Normal hearing, SD | Cochlear implants, M | Cochlear implants, SD |
|---|---|---|---|---|
| Sentence comprehension | 107 | 13 | 99 | 17 |
| Vocabulary | 113 | 16 | 101 | 18 |
| Grammaticality judgments | 100 | 11 | 87 | 17 |
| Word segmentation | 10.0 | 2.2 | 9.0 | 2.6 |

Table VI shows the outcomes of t-tests performed on raw scores or RAUs. Only the measure of word segmentation failed to show a significant overall effect for group. For all other measures, adolescents with CIs performed more poorly than adolescents with NH.

TABLE VI.

Outcomes of t-tests performed on each measure and Cohen's ds. Degrees of freedom = 106 for all analyses. Raw scores were used for sentence comprehension, vocabulary, grammaticality judgments, and word segmentation. Rationalized arcsine units were used for final consonant choice and backward words.

| | t | p | Cohen's d |
|---|---|---|---|
| Lexicosyntactic skills | | | |
| Sentence comprehension | 2.97 | 0.004 | 0.57 |
| Vocabulary | 3.32 | 0.001 | 0.64 |
| Grammaticality judgments | 4.60 | <0.001 | 0.88 |
| Phonological skills | | | |
| Final consonant choice | 6.57 | <0.001 | 1.25 |
| Backward words | 3.13 | 0.002 | 0.60 |
| Word segmentation | 1.22 | 0.224 | — |
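The t and d values in Table VI can be approximated from the summary statistics in Table IV. The sketch below assumes Student's pooled-variance t-test and a degrees-of-freedom-weighted pooled SD; the function name is hypothetical, and because the tabled means and SDs are rounded (and pooling conventions vary), recomputed values may differ in the second decimal for some measures.

```python
import math


def t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (pooled variance) and Cohen's d from summary statistics."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    diff = m1 - m2
    d = diff / pooled_sd                                    # difference in pooled-SD units
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))     # difference over its standard error
    return t, df, d


# Vocabulary raw scores from Table IV: NH (n = 56) vs. CI (n = 52)
t, df, d = t_and_d(142.29, 13.80, 56, 132.37, 17.15, 52)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # prints t(106) = 3.32, d = 0.64
```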

C. How skills are supported by SMD

Scores were derived for the latent measures of lexicosyntactic and phonological skills. The group of adolescents with NH served as the reference for standardization because these adolescents represent typical language development: latent scores were scaled so that the NH group had a mean of zero and an SD of 1.0. Mean latent scores for both groups are shown in Table VII, and Table VIII shows the outcomes of t-tests performed on each of the latent lexicosyntactic and phonologic scores. As expected, these outcomes reveal that adolescents with CIs had larger deficits (i.e., scored further below their NH peers) on the latent phonologic scores than on the latent lexicosyntactic scores.
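The rescaling step can be sketched as a single linear map estimated from the NH group and applied to both groups, so CI scores are expressed in NH SD units. The input values and the function name are hypothetical, and the use of the sample SD (n − 1 denominator) is an assumption.

```python
from statistics import mean, stdev


def standardize_to_reference(reference, *others):
    """Rescale scores so `reference` has mean 0 and SD 1; apply the same map to `others`."""
    mu, sd = mean(reference), stdev(reference)

    def rescale(xs):
        return [(x - mu) / sd for x in xs]

    return [rescale(reference)] + [rescale(xs) for xs in others]


# Hypothetical unscaled latent scores for an NH and a CI group
nh_z, ci_z = standardize_to_reference([1.0, 2.0, 3.0, 4.0, 5.0], [0.0, 1.0, 2.0])
```

Because the map is fixed by the NH group, a CI mean of −1.09 (Table VII) reads directly as about one NH SD below the NH mean.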

TABLE VII.

Mean (M) scores (and SDs) for each group for the latent measures of lexicosyntactic and phonologic abilities. N = 56 for adolescents with normal hearing and 52 for adolescents with cochlear implants.

| | Normal hearing, M | Normal hearing, SD | Cochlear implants, M | Cochlear implants, SD |
|---|---|---|---|---|
| Latent lexicosyntactic scores | 0.00 | 1.00 | −0.75 | 1.44 |
| Latent phonologic scores | 0.00 | 1.00 | −1.09 | 1.46 |

TABLE VIII.

Outcomes of t-tests performed on latent lexicosyntactic and phonologic measures and Cohen's ds. Degrees of freedom = 106 for both analyses.

| | t | p | Cohen's d |
|---|---|---|---|
| Latent lexicosyntactic scores | 3.15 | 0.002 | 0.60 |
| Latent phonologic scores | 4.54 | <0.001 | 0.87 |

Next, the relationships of spectral processing abilities to these latent scores were examined using Pearson product-moment correlation coefficients. Because lower SMD thresholds indicate better spectral processing, negative coefficients indicate that better spectral processing accompanied higher latent scores. With all adolescents included, the correlation of SMD thresholds with latent lexicosyntactic scores was r(108) = –0.218, p = 0.024, and with latent phonologic scores was r(108) = –0.495, p < 0.001. Thus, spectral processing abilities were strongly related to phonological sensitivity. Although the correlation for lexicosyntactic scores was significant, the coefficient of –0.218 represents a weak relationship. Furthermore, correlation coefficients computed across groups could arise primarily from group differences in the two measures being correlated. Evidence of a within-group relationship is required to conclude that spectral processing is indeed related to phonological sensitivity, and obtaining that evidence was the next step.
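The concern that a pooled correlation can arise purely from group differences can be illustrated with a toy example. In the hypothetical data below, neither group shows any within-group relationship, yet pooling them yields a strong negative coefficient because one group is higher on x and lower on y, the same pattern the CI group showed relative to the NH group on SMD thresholds and latent scores.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical groups with no within-group relationship; group B is shifted
# up on x (like higher SMD thresholds) and down on y (like lower latent scores).
a_x, a_y = [1, 2, 1, 2], [1, 1, 2, 2]
b_x, b_y = [x + 5 for x in a_x], [y - 5 for y in a_y]
r_a, r_b = pearson(a_x, a_y), pearson(b_x, b_y)
r_pooled = pearson(a_x + b_x, a_y + b_y)
print(r_a, r_b, round(r_pooled, 3))  # prints 0.0 0.0 -0.962
```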

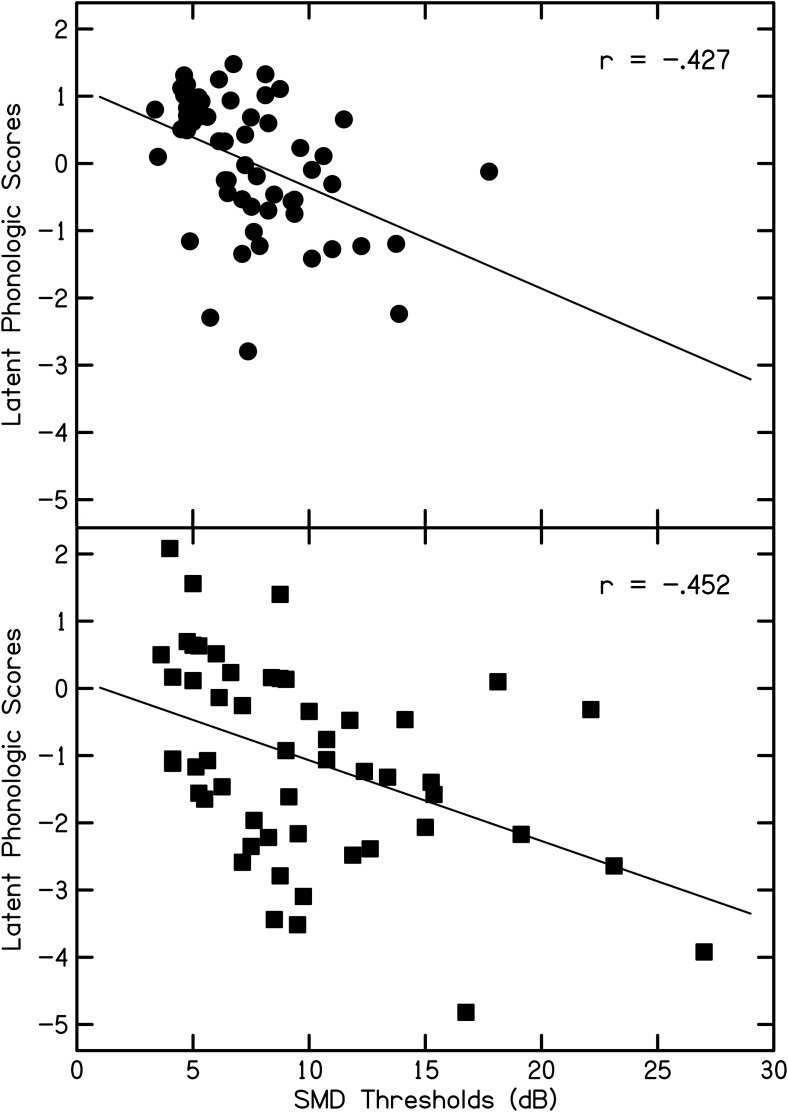

Pearson product-moment correlation coefficients were then computed separately for adolescents with NH and those with CIs; Table IX shows these coefficients for each group and each latent measure. As hypothesized, correlations with SMD thresholds were larger for latent phonologic scores than for latent lexicosyntactic scores. Values were similar for the two groups, indicating that these relationships hold regardless of whether an adolescent has NH or CIs.

TABLE IX.

Pearson product-moment correlation coefficients between SMD thresholds and each latent measure for adolescents with NH and CIs.

| | NH, r | NH, p | CIs, r | CIs, p |
|---|---|---|---|---|
| Latent lexicosyntactic scores | −0.196 | 0.148 | −0.138 | 0.331 |
| Latent phonologic scores | −0.427 | 0.001 | −0.452 | 0.001 |
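The significance of each coefficient in Table IX can be checked with the usual t statistic for a Pearson r, t = r√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The sketch assumes this conventional test was the one used; the function name is hypothetical. For the NH phonologic coefficient it gives t ≈ −3.47 on 54 df, consistent with the tabled p = 0.001.

```python
import math


def r_significance_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0 from a Pearson r on n pairs."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2), df


# NH group, SMD threshold vs. latent phonologic score (Table IX)
t, df = r_significance_t(-0.427, 56)
print(f"t({df}) = {t:.2f}")  # prints t(54) = -3.47
```

A two-tailed p can then be obtained from a t distribution, for example with `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available.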

1. Adolescents with NH

The top of Fig. 2 illustrates the relationship between SMD thresholds and latent phonologic scores for the adolescents with NH. This figure reveals that one adolescent might be considered an outlier, as the SMD threshold for this adolescent is higher than for any other adolescent with NH, but the latent phonologic score is average. To investigate the effects of this potential outlier, the correlation coefficient was re-computed without scores from this adolescent. In this case, r(55) = –0.481, p < 0.001, for the correlation of SMD threshold and the latent phonologic factor, indicating an even stronger relationship. With this adolescent's data point removed, the relationship of SMD threshold with the latent lexicosyntactic factor remained weak, r(55) = –0.231, p = 0.089, so it was concluded that this one adolescent's data could not account for the pattern of results observed.

FIG. 2.

Relationship between SMD thresholds and latent phonologic scores for 56 adolescents with NH (top) and for 52 adolescents with CIs (bottom).

Nonverbal IQ scores were found to be significantly related to SMD thresholds for these adolescents with NH, with r = –0.372. Therefore, concern arose that perhaps nonverbal intelligence could account for the relationship found for SMD thresholds and latent phonologic scores. When nonverbal IQ scores were correlated with latent phonologic scores, a significant relationship was found, r(56) = 0.500, p < 0.001, suggesting that variance in latent phonologic scores explained jointly by SMD thresholds and nonverbal intelligence might be exaggerating the contribution of spectral processing to phonological sensitivity for these adolescents. To evaluate this concern, a partial correlation coefficient was obtained for SMD thresholds and latent phonologic scores, controlling for nonverbal IQ scores. A significant correlation was observed, r(53) = –0.300, p = 0.026, although of reduced magnitude. Consequently, the relationship between spectral processing and phonological sensitivity is not as robust for these adolescents with NH as at first thought. Instead, much of this relationship may be explained by nonverbal intelligence.
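Using the zero-order correlations reported for the NH group (SMD threshold with latent phonologic score, r = −0.427 from Table IX; SMD threshold with nonverbal IQ, r = −0.372; nonverbal IQ with latent phonologic score, r = 0.500), the standard first-order partial correlation formula reproduces the reported value. The sketch assumes this conventional formula; the function name is hypothetical.

```python
import math


def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# NH group: x = SMD threshold, y = latent phonologic score, z = nonverbal IQ
r = partial_r(-0.427, -0.372, 0.500)
print(f"{r:.3f}")  # prints -0.300; tested on n - 3 = 53 df
```

Removing the variance that nonverbal IQ shares with both measures shrinks the shared variance from about 18% (r² for −0.427) to about 9% (r² for −0.300).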

2. Adolescents with CIs

The bottom of Fig. 2 illustrates the relationship between SMD thresholds and latent phonologic scores for the adolescents with CIs. This figure clearly reveals the strong relationship between these two measures for these adolescents.

Although none of the demographic variables or audiologic factors was significantly correlated with SMD thresholds, their relationships to latent phonologic scores were evaluated with Pearson product-moment correlation coefficients. Digit span was excluded from this analysis because it depends heavily on phonological sensitivity and would therefore be expected to correlate strongly with the latent phonological factor. Only two variables were significantly correlated with the latent phonologic scores: nonverbal IQ, r(52) = 0.339, p = 0.014, and aided thresholds, r(52) = –0.397, p = 0.004. When partial correlation coefficients were computed, controlling for each of these variables in separate analyses, the relationship between SMD thresholds and latent phonologic scores remained strong: controlling for nonverbal IQ, r(49) = –0.409, p = 0.003; controlling for aided thresholds, r(49) = –0.422, p = 0.002. Consequently, for these adolescents with CIs, there was a genuinely strong relationship between spectral processing abilities and phonological sensitivity, one that was not explained by nonverbal IQ or aided thresholds acting as a third variable.

The analyses described above support the assertion that good spectral processing abilities are necessary if children with CIs are to acquire good phonological sensitivity. Another question, however, is whether good spectral processing is sufficient. To address that question, outcomes were examined for the adolescents in each group who demonstrated the best spectral processing abilities, defined as an SMD threshold better (lower) than the median threshold for adolescents with NH. That median was 7.19 dB; 20 adolescents with CIs had thresholds better than this value, and, by definition, 28 adolescents with NH did. Table X shows mean latent lexicosyntactic and phonologic scores for these two smaller groups, as well as for the adolescents in each group whose thresholds were worse than the NH median. Several trends are apparent in this table. First, adolescents with CIs who had SMD thresholds better than the NH median had much better latent phonological scores than their peers with CIs who did not meet that criterion; no clear difference is seen for latent lexicosyntactic scores. This observation is supported by t-tests comparing these two subgroups of adolescents with CIs: a significant difference was found for the phonological latent score, t(50) = 3.659, p = 0.001, Cohen's d = 1.06, but not for the lexicosyntactic latent score. This effect size indicates that good spectral processing abilities strongly supported the acquisition of good phonological sensitivity. Spectral processing abilities, however, were not associated with lexicosyntactic knowledge, a finding consistent with the lack of a significant within-group correlation.

TABLE X.

Mean (M) latent lexicosyntactic and phonologic scores (and SDs) for each group, divided between adolescents with SMD thresholds better than or worse than the median threshold for adolescents with normal hearing. N = 28 for each of the two groups of adolescents with normal hearing, 20 for adolescents with cochlear implants with better thresholds, and 32 for adolescents with cochlear implants with worse thresholds.

| | NH better than, M | NH better than, SD | NH worse than, M | NH worse than, SD | CIs better than, M | CIs better than, SD | CIs worse than, M | CIs worse than, SD |
|---|---|---|---|---|---|---|---|---|
| Latent lexicosyntactic scores | 0.29 | 0.96 | −0.29 | 0.97 | −0.61 | 1.18 | −0.83 | 1.59 |
| Latent phonologic scores | 0.38 | 0.88 | −0.38 | 0.98 | −0.25 | 1.18 | −1.61 | 1.38 |
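The subgrouping behind Table X can be sketched as a split of each adolescent's (threshold, latent score) pair at the NH median threshold. The function name and values are hypothetical, and assigning thresholds exactly equal to the median to the "worse than" subgroup is an assumption; the text only specifies "better than the median."

```python
from statistics import median


def split_by_reference_median(reference_thresholds, thresholds, scores):
    """Split scores at the reference group's median threshold.

    Lower SMD thresholds are better, so 'better' means strictly below the cut;
    ties go to the 'worse' subgroup (an assumption).
    """
    cut = median(reference_thresholds)
    better = [s for t, s in zip(thresholds, scores) if t < cut]
    worse = [s for t, s in zip(thresholds, scores) if t >= cut]
    return cut, better, worse


# Hypothetical values: NH thresholds define the cut; CI pairs are then split
nh_thresholds = [5.0, 6.0, 7.0, 8.0]
cut, better, worse = split_by_reference_median(
    nh_thresholds, [4.0, 7.0, 6.5, 9.0], [1.0, 2.0, 3.0, 4.0])
```

Using the NH median as the cut for both groups gives a common, externally defined criterion for "good spectral listeners" instead of a separate median for each group.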

Phonological and lexicosyntactic abilities can also be compared across groups for only those adolescents whose thresholds were better than the NH median. When latent scores are compared between adolescents with NH and adolescents with CIs in this subset, both comparisons are significant: for the phonological latent score, t(46) = 2.104, p = 0.041, Cohen's d = 0.60, and for the lexicosyntactic latent score, t(46) = 2.896, p = 0.006, Cohen's d = 0.83. Thus, adolescents with CIs who demonstrated good spectral processing abilities (thresholds in the top half of performance for adolescents with NH) had better phonological sensitivity than their peers with CIs who did not have good spectral processing abilities. Nonetheless, their phonological sensitivity was still poorer than that of peers with NH who had similar SMD thresholds.

IV. DISCUSSION

A. General outcomes

Children with CIs have consistently been found to have larger deficits in phonological sensitivity than in lexical or syntactic knowledge when compared to children with NH. This trend led to the general hypothesis that the degraded spectral representations provided by CIs might disproportionately hamper learning about phonological structure. Children with CIs may be able to construct reasonably sized lexicons based on relatively coarse acoustic structure and learn the language-specific rules regarding how words should be ordered to convey information about relationships among those words. In contrast, the acquisition of precise phonological representations—especially phonemic representations—likely requires the ability to recover refined spectral and temporal structure; the first of these kinds of structure was examined in this study, with the testing of two specific hypotheses. First, it was hypothesized that adolescents with CIs would have poorer spectral processing abilities than adolescents with NH. Second, it was hypothesized that at least for adolescents with CIs, spectral processing abilities would be correlated with phonological sensitivity but not with lexicosyntactic skills.

The hypotheses tested in this study were largely supported. First, adolescents with CIs had higher (poorer) mean thresholds for detecting spectral modulation than adolescents with NH. This finding suggests that the diminished spectral processing of the adolescents with CIs arises from some aspect of CI use. When specific factors were examined, however, the only CI-related factor that affected SMD thresholds was whether adolescents used one or two CIs: adolescents with just one CI had higher (poorer) thresholds.

Second, when SMD thresholds were correlated with latent measures of lexicosyntactic knowledge and phonological sensitivity across all subjects, both correlation coefficients were significant, but the relationship was stronger for phonological sensitivity than lexicosyntactic knowledge. When these relationships were examined within each group separately, it was found for adolescents with NH and CIs alike that SMD thresholds were significantly correlated with the latent measure of phonological sensitivity, but not with the latent measure of lexicosyntactic knowledge. This lack of within-group correlation for SMD thresholds and lexicosyntactic knowledge means that the significant relationship found when groups were combined reflects group differences: adolescents with CIs performed more poorly than adolescents with NH on both measures, but that does not mean these measures are related beyond group effects.

Other findings revealed that adolescents with CIs generally performed more poorly than adolescents with NH on all language measures, and these deficits were largest for language measures that depended on phonological sensitivity, which primarily meant the final consonant choice task. The backward words task depended more on the ability to process—or manipulate—phonemes, something that children with CIs seem able to do, as long as they can recognize the separate phonemic units. Grammaticality judgments, which loaded strongly on a lexicosyntactic factor, however, had a relatively large effect size as well. This outcome likely reflected the fact that sensitivity to word-internal phonological structure is required to recognize bound morphemes, and the grammaticality judgments test examines recognition of those morphemes. Therefore, some part of performance on that task does require phonological sensitivity. Overall, the disproportionately large phonological deficit observed for these adolescents with CIs matches previous results (Lowenstein and Nittrouer, 2021; Nittrouer et al., 2018) and, in fact, served as the basis of the current study.

The suggestion that follows naturally from these two central findings (that children with CIs have poor spectral processing abilities, and that spectral processing abilities are well correlated with phonological sensitivity) is that children with CIs would develop keener sensitivity to phonological structure if they could develop better spectral processing abilities. To explore that suggestion in more depth, latent phonological scores were examined for the adolescents with the best SMD thresholds, defined as better than the NH median. That analysis also divided the adolescents with CIs into two groups: “good spectral listeners” and “poor spectral listeners.” A large difference in phonological sensitivity was found between those two groups, d = 1.06. Thus, it can be concluded that keen spectral processing abilities can support the acquisition of phonological sensitivity for children with CIs. Nevertheless, even when the comparison was restricted to adolescents (with NH or CIs) whose SMD thresholds were better than the NH median, scores on the latent phonological measure remained significantly worse for the adolescents with CIs than for their peers with NH. That outcome indicates that factors other than those identified in the current study contribute to the acquisition of phonological sensitivity by children who learn language through CIs. Future investigations should examine additional potential sources of variability in phonological sensitivity. The relationship between spectral processing abilities and phonological sensitivity should also be examined in younger children with CIs. Their spectral processing abilities may be even poorer, relative to those of children with NH, at young ages; that would impede the early acquisition of phonological sensitivity and could create a deficit that is difficult to overcome at later ages.

One difference between this study and at least one previous study (Kirby et al., 2019) is that the previous study found spectro-temporal processing abilities to be strongly related to cognitive abilities, specifically visual working memory and nonverbal IQ, for children with HAs. In the current study, only the SMD thresholds of adolescents with NH were related to nonverbal intelligence; SMD thresholds were not related to visual working memory in either group. Kirby et al. concluded that the significant relationships they found might reflect cognitive demands of the psychophysical task itself, but the current study revealed no evidence of that. Instead, their findings may reflect features of the tasks they used. In that study, the stimuli were manipulated in both the spectral and the temporal domains, so they were acoustically more complex than those used in the current study. The visual working memory task was cognitively demanding, requiring storage of multiple visual positions derived from sequences of oddity choices, and the nonverbal IQ task involved complex visual images. Thus, the findings of Kirby et al. may reflect the operation of a central mechanism responsible for organizing complex sensory inputs. As in that experiment, the nonverbal IQ task used in the current study involved complex visual images, and performance on that task correlated with SMD thresholds, but only for adolescents with NH. Moreover, that relationship was weaker in the current study than in Kirby et al.: –0.372 versus 0.48, respectively. The weaker relationship might reflect the lower complexity of the acoustic stimuli in the current experiment compared with those of the earlier one.
The absence of a relationship between nonverbal IQ scores and SMD thresholds for the adolescents with CIs could indicate that, for these deaf listeners, perceptual organization of auditory signals is less well developed than that of visual signals, to degrees that vary across the group. Future studies could therefore examine perceptual organization explicitly in congenitally deaf CI users.
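The comparison of –0.372 with 0.48 above concerns strength rather than sign. Assuming both values are correlation coefficients, the sketch below (a plain Pearson r, with a hypothetical function name) shows why: lower SMD thresholds mean better spectral processing, so an association between good spectral processing and high IQ appears as a negative r when raw thresholds are used, and reversing the scoring direction of either variable flips the sign of r without changing its magnitude.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    # Cross-products and sums of squares of deviations from the means.
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Negating one variable (e.g., scoring "sensitivity" instead of "threshold")
# negates r exactly, so |r| is the quantity to compare across studies.
thresholds = [1.0, 2.0, 3.0, 4.0]
iq_like = [2.0, 4.0, 5.0, 9.0]
r_raw = pearson_r(thresholds, iq_like)
r_flipped = pearson_r([-t for t in thresholds], iq_like)
```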

B. Clinical significance

CIs have radically changed our expectations of how well children with severe-to-profound hearing loss can develop spoken language. Nonetheless, this progress has been uneven. Children who receive CIs are performing much better, relative both to past performance and to their peers with NH, on measures of lexicosyntactic abilities than on language tasks requiring keen sensitivity to phonological structure (Harris et al., 2017; Nittrouer and Caldwell-Tarr, 2016), and this poor phonological sensitivity is likely responsible for academic problems encountered at higher grade levels (Geers and Hayes, 2011; Kronenberger and Pisoni, 2019; Lowenstein and Nittrouer, 2021). The primary purpose of the current study was to examine a potential source of this uneven progress: that poor spectral processing, arising from the highly degraded spectral structure provided by CIs, could affect phonological processing and representations more than the processing of lexical and syntactic structures. The evidence collected in this investigation supported this fundamental hypothesis. The question now is how this knowledge can serve the field clinically as we attempt to advance these children's mastery of phonological processes.

At the most basic level, the outcomes of this investigation have implications for diagnostic procedures. Horn et al. (2017b) developed methods for testing spectral processing in pre-lexical infants. Given that capability and the findings of the current study, SMD measures might be used to help assess the risk of phonological deficits among children with CIs early in life, even before they have started talking. This study suggests that spectral processing abilities support the development of phonological skills. If effective treatment methods can be developed, early testing of spectral processing abilities could allow clinicians to implement those treatments when they can best facilitate phonological acquisition.

C. Limitations of the current study

The primary limitations of this study concern the restricted acoustic stimuli used. Only one measure of spectral processing was implemented: SMD. Although starting phase was varied so that the adolescents could not complete the task by monitoring loudness in a single channel or narrow frequency band, future work should use other measures of spectral processing, such as spectral modulation discrimination, to determine whether similar relationships are obtained with phonological sensitivity, but not with lexicosyntactic skills. It would also be useful to investigate how the processing of temporal modulation by children or adolescents with congenital hearing loss who use CIs influences phonological sensitivity.
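Why randomizing starting phase defeats single-band loudness monitoring can be sketched with the spectral envelope of a rippled stimulus. This sketch assumes the common definition of a sinusoidal spectral ripple along a log2-frequency axis, with depth given as the peak-to-valley difference in dB; the function names, reference frequency, and depth convention are illustrative assumptions, not the exact stimulus parameters of this study. Because the phase is redrawn for every stimulus, the level at any one frequency changes unpredictably across trials, so only the overall spectral shape signals the modulation.

```python
import math
import random
from typing import Sequence


def ripple_envelope_db(
    freqs_hz: Sequence[float],
    rate_cpo: float,
    depth_db: float,
    phase_rad: float,
    ref_hz: float,
) -> list[float]:
    """Hypothetical helper: level (dB re: flat spectrum) of a sinusoidal spectral ripple.

    The ripple is sinusoidal on a log2-frequency axis at rate_cpo cycles per
    octave; depth_db is assumed to be peak-to-valley, so levels span +/- depth_db / 2.
    """
    return [
        (depth_db / 2.0)
        * math.sin(2.0 * math.pi * rate_cpo * math.log2(f / ref_hz) + phase_rad)
        for f in freqs_hz
    ]


def random_start_phase(rng: random.Random) -> float:
    """Draw a fresh ripple starting phase, uniform between 0 and 2*pi, for each stimulus."""
    return rng.uniform(0.0, 2.0 * math.pi)


# Level in one fixed band (1000 Hz here) across three trials with random phases:
rng = random.Random(0)
one_band_levels = [
    ripple_envelope_db([1000.0], 1.0, 10.0, random_start_phase(rng), 500.0)[0]
    for _ in range(3)
]
```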

Another limitation of the current study was that only one modulation rate was used. Future studies should include other modulation rates, such as 1.0 and 2.0 cpo, to determine whether thresholds at those rates are more (or less) strongly related to lexicosyntactic abilities and phonological sensitivity. Such investigations could shed light on the relationship between spectral processing and phonological sensitivity by identifying the scale of spectral modulation that is related to that sensitivity. They could also inform the design of diagnostic tasks by identifying the modulation rate most sensitive to phonological acquisition.
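The “scale of spectral modulation” tied to a rate in cycles per octave (cpo) can be made concrete with a little arithmetic: one ripple cycle spans 1/rate octaves, and a passband from f_low to f_high holds rate × log2(f_high/f_low) cycles. The sketch below uses hypothetical function names and an illustrative 250 to 4000 Hz band (exactly 4 octaves) that is not taken from this study.

```python
import math


def ripple_period_octaves(rate_cpo: float) -> float:
    """Width of one ripple cycle (peak-to-peak spacing) in octaves."""
    return 1.0 / rate_cpo


def ripple_cycles_in_band(rate_cpo: float, f_low_hz: float, f_high_hz: float) -> float:
    """Number of ripple cycles spanning a passband from f_low_hz to f_high_hz."""
    return rate_cpo * math.log2(f_high_hz / f_low_hz)


# Illustrative 4-octave band (250-4000 Hz is an assumption, not the study's band).
for rate in (1.0, 2.0):
    print(rate, ripple_period_octaves(rate), ripple_cycles_in_band(rate, 250.0, 4000.0))
```

Over that 4-octave band, 1.0 cpo places 4 peaks one octave apart and 2.0 cpo places 8 peaks half an octave apart, so higher rates demand finer frequency resolution.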

The third limitation to this study concerned details of device configurations. Possible sources of variability in spectral processing abilities associated with factors such as depth of electrode insertion could not be examined because that information was not available. Future studies will need to examine these device-related factors.

V. SUMMARY

Linguistic structure exists at two levels. First, meaningful words are represented in a durable, long-term mental lexicon and are combined into sentences in real time according to language-specific syntactic rules to convey information about the relationships among those words. Second, meaningless phonological elements make up words, are recovered from the speech signal in real time, and are utilized in language-related functions such as short-term, or working, memory and novel word learning. The current study tested two hypotheses: (1) adolescents with CIs experience diminished sensitivity to spectral modulation in the speech signal due to the signal processing constraints of CIs; (2) that diminished sensitivity inhibits language processes involving the phonological level of structure but not processes involving lexicosyntactic structure. To test these hypotheses, SMD thresholds were measured for 14-year-old adolescents in two groups (NH or CIs), and three measures each of lexicosyntactic and phonological skills were obtained and used to compute latent scores. Results showed a higher (poorer) mean SMD threshold for adolescents with CIs than for adolescents with NH. These poor thresholds could not be traced to any factor related to their CIs, leaving the source of the poor spectral processing in question. Nonetheless, SMD thresholds were found to be related to phonological sensitivity but not to lexicosyntactic knowledge. Overall, outcomes suggest that enhanced spectral processing would allow adolescents with CIs to develop sensitivity to phonological structure that is adequate for typical language processing, but further research is strongly needed to find ways of enhancing that spectral processing.
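Combining three measures into one latent score can be illustrated with a first-principal-component composite. The method used to compute the latent scores is not specified in this section, so principal components analysis here is a swapped-in stand-in, and the function name is hypothetical. The sketch standardizes each measure, takes the eigenvector of the correlation matrix with the largest eigenvalue as the weights, and fixes the arbitrary eigenvector sign so that higher composite scores track higher raw scores (assuming every measure is scored so that higher is better).

```python
import numpy as np


def first_component_scores(measures: np.ndarray) -> np.ndarray:
    """Hypothetical helper: first-principal-component scores of standardized measures.

    measures: array of shape (n_participants, n_measures), e.g., three
    phonological tasks per adolescent, each scored so higher = better.
    """
    # Standardize each measure (column) to mean 0, SD 1.
    z = (measures - measures.mean(axis=0)) / measures.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    _, eigvecs = np.linalg.eigh(corr)  # eigenvalues returned in ascending order
    loadings = eigvecs[:, -1]  # eigenvector for the largest eigenvalue
    if loadings.sum() < 0:  # eigenvector sign is arbitrary; align with raw scores
        loadings = -loadings
    return z @ loadings
```

A confirmatory factor model or a unit-weighted mean of z scores would be reasonable alternatives; all three give closely related composites when the measures share a strong common factor.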

ACKNOWLEDGMENTS

This work was supported by Grant No. R01 DC015992 from the National Institutes of Health, National Institute on Deafness and Other Communication Disorders, to S.N. The authors thank staff, students, and families who participated in the collection of these data.

APPENDIX A: FINAL CONSONANT CHOICE TASK

The practice and test trial items for the final consonant choice task are listed in Table XI.

TABLE XI.

Final consonant choice task. Testing should be discontinued after six consecutive errors. The underlined word is the correct response.

| Practice items | | | | Practice items | | | |
|---|---|---|---|---|---|---|---|
| 1. rib | mob | phone | heat | 4. lamp | rock | juice | tip |
| 2. stove | hose | stamp | cave | 5. fist | hat | knob | stem |
| 3. hoof | shed | tough | cop | 6. head | hem | rod | fork |
| Test trials | | | | Test trials | | | |
| 1. truck | wave | bike | trust | 25. desk | path | lock | tube |
| 2. duck | bath | song | rake | 26. home | drum | prince | mouth |
| 3. mud | crowd | mug | dot | 27. leaf | suit | roof | leak |
| 4. sand | sash | kid | flute | 28. thumb | cream | tub | jug |
| 5. flag | cook | step | rug | 29. barn | tag | night | pin |
| 6. car | foot | stair | can | 30. doll | pig | beef | wheel |
| 7. comb | cob | drip | room | 31. train | grade | van | cape |
| 8. boat | skate | frog | bone | 32. bear | shore | clown | rat |
| 9. house | mall | dream | kiss | 33. pan | skin | grass | beach |
| 10. cup | lip | trash | plate | 34. hand | hail | lid | run |
| 11. meat | date | sock | camp | 35. pole | land | poke | |
| 12. worm | price | team | soup | 36. ball | clip | steak | pool |
| 13. hook | mop | weed | neck | 37. park | bed | lake | crown |
| 14. rain | thief | yawn | sled | 38. gum | shoe | gust | lamb |
| 15. horse | lunch | bag | ice | 39. vest | cat | star | mess |
| 16. chair | slide | chain | deer | 40. cough | knife | log | dough |
| 17. kite | bat | mouse | grape | 41. wrist | risk | throat | store |
| 18. crib | job | hair | wish | 42. bug | bus | leg | rope |
| 19. fish | shop | gym | brush | 43. door | pear | dorm | food |
| 20. hill | moon | bowl | hip | 44. nose | goose | maze | zoo |
| 21. hive | glove | light | hike | 45. nail | voice | chef | bill |
| 22. milk | block | mitt | tail | 46. dress | tape | noise | rice |
| 23. ant | school | gate | fan | 47. box | face | mask | book |
| 24. dime | note | broom | cube | 48. spoon | cheese | back | fin |

APPENDIX B: BACKWARD WORDS TASK

The practice and test trial items for the backward words task are listed in Table XII.

TABLE XII.

Backward words task. Testing should be discontinued after six consecutive errors.

| Practice examples | | | |
|---|---|---|---|
| A. nip | pin | | |
| B. eat | tea | | |
| C. dice | side | | |
| Test trials | Answer | Test trials | Answer |
| 1. nap | pan | 25. chip | pitch |
| 2. sub | bus | 26. make | came |
| 3. tip | pit | 27. pans | snap |
| 4. cab | back | 28. nuts | stun |
| 5. gum | mug | 29. claw | walk |
| 6. pal | lap | 30. loops | spool |
| 7. meat | team | 31. spin | nips |
| 8. peek | keep | 32. swap | paws |
| 9. pool | loop | 33. pets | step |
| 10. leaf | feel | 34. stack | cats |
| 11. cat | tack | 35. sleep | peels |
| 12. right | tire | 36. cans | snack |
| 13. deer | reed | 37. spill | lips |
| 14. face | safe | 38. slim | mills |
| 15. time | might | 39. stone | notes |
| 16. pass | sap | 40. nicks | skin |
| 17. jab | badge | 41. plug | gulp |
| 18. gas | sag | 42. spans | snaps |
| 19. wall | law | 43. stops | spots |
| 20. peach | cheap | 44. snoops | spoons |
| 21. sob | boss | 45. lambs | small/smell |
| 22. shack | cash | 46. spins | snips |
| 23. name | mane | 47. leaver | reveal |
| 24. mile | lime | 48. turkeys | secret |
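Both Table XI and Table XII state that testing is discontinued after six consecutive errors. A minimal scoring sketch under stated assumptions: test items are administered in the listed order, practice items are not scored, the score is the number of correct responses, and items not administered after the discontinue point count as incorrect. The function name and return format are hypothetical.

```python
from typing import Sequence


def score_with_discontinue(
    responses: Sequence[bool], max_consecutive_errors: int = 6
) -> tuple[int, int]:
    """Hypothetical scorer: count correct test-trial responses with a discontinue rule.

    Stops as soon as max_consecutive_errors errors occur in a row.
    Returns (number_correct, number_of_items_administered).
    """
    correct = 0
    error_run = 0
    administered = 0
    for is_correct in responses:
        administered += 1
        if is_correct:
            correct += 1
            error_run = 0  # any correct response resets the error run
        else:
            error_run += 1
            if error_run >= max_consecutive_errors:
                break  # discontinue testing; remaining items are not given
    return correct, administered
```

For example, a child who answers item 1 correctly and then misses items 2 through 7 scores 1, with testing stopped after the seventh item.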

Portions of these data were presented in “Spectral modulation detection in adolescents with normal hearing or cochlear implants predicts some language skills, but not others,” 176th meeting of the Acoustical Society of America, Victoria, British Columbia, Canada, November 2018.

References

- Allen, P., and Wightman, F. (1994). “Psychometric functions for children's detection of tones in noise,” J. Speech Hear. Res. 37, 205–215. 10.1044/jshr.3701.205

- Anderson, E. S., Oxenham, A. J., Nelson, P. B., and Nelson, D. A. (2012). “Assessing the role of spectral and intensity cues in spectral ripple detection and discrimination in cochlear-implant users,” J. Acoust. Soc. Am. 132, 3925–3934. 10.1121/1.4763999

- Aronoff, J. M., and Landsberger, D. M. (2013). “The development of a modified spectral ripple test,” J. Acoust. Soc. Am. 134, EL217–EL222. 10.1121/1.4813802

- Baddeley, A. D., and Hitch, G. J. (2019). “The phonological loop as a buffer store: An update,” Cortex 112, 91–106. 10.1016/j.cortex.2018.05.015

- Carrow-Woolfolk, E. (1999). Comprehensive Assessment of Spoken Language (CASL) (Pearson Assessments, Bloomington, MN).

- Charles-Luce, J., and Luce, P. A. (1990). “Similarity neighbourhoods of words in young children's lexicons,” J. Child Lang. 17, 205–215. 10.1017/S0305000900013180

- Cleary, M., Pisoni, D. B., and Kirk, K. I. (2000). “Working memory spans as predictors of spoken word recognition and receptive vocabulary in children with cochlear implants,” Volta Rev. 102, 259–280; available at https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3111028/.

- Davies-Venn, E., Nelson, P., and Souza, P. (2015). “Comparing auditory filter bandwidths, spectral ripple modulation detection, spectral ripple discrimination, and speech recognition: Normal and impaired hearing,” J. Acoust. Soc. Am. 138, 492–503. 10.1121/1.4922700

- Dillon, C. M., and Pisoni, D. B. (2006). “Nonword repetition and reading skills in children who are deaf and have cochlear implants,” Volta Rev. 106, 121–145; available at https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3111020/.

- DiNino, M., and Arenberg, J. G. (2018). “Age-related performance on vowel identification and the spectral-temporally modulated ripple test in children with normal hearing and with cochlear implants,” Trends Hear. 22, 233121651877095. 10.1177/2331216518770959