Abstract

Background

As governing bodies design new curricula that seek to further incorporate principles of competency-based medical education within time-based models of training, questions have been raised regarding the continued centrality of existing CanMEDS competencies. Although efforts have been made to align these new curricula with CanMEDS, we don’t yet know to what extent these competencies are meaningfully integrated.

Methods

A content analysis approach was used to systematically evaluate national Canadian curricula for 18 residency-training programs and determine the number of times each enabling CanMEDS competency was represented.

Results

Clear trends emerged across all programs. Medical Expert and Collaborator competencies were well integrated into the curricula (81% and 86% mapped to assessment, respectively), whereas competencies related to the Leader, Professional, and Health Advocate roles were less frequently mapped to assessment (41%, 36%, and 43%); the remainder (59%, 64%, and 57%) were either not required to be assessed or absent from the new curricula altogether.

Conclusion

Deliberate planning in curriculum development affords the early identification of gaps. These gaps can inform current assessment practice and future curricular development by providing direction for innovation. If we are to ensure that any new curricula meaningfully address all CanMEDS roles, we need to think carefully about how to best teach and assess underrepresented competencies.


Introduction

Competency-based medical education (CBME) has been adopted as the central organizing principle for several medical education systems throughout the world.1 Many approaches to CBME use the concept of roles to describe the competencies required of physicians. With roots in addressing societal need through improved patient care,2,3 CanMEDS describes these competencies as seven roles (Medical Expert, Communicator, Collaborator, Health Advocate, Leader, Scholar, and Professional), mirroring the clinical approach to the patient as a whole person rather than a person with a specific disease.4 Competency-based approaches such as CanMEDS promised to ensure that attention was paid to the full range of skills required of a 21st-century physician. CanMEDS represented a major step forward for CBME: it assigned a new status to skills that had been historically marginalized, such as health advocacy and professionalism, and it provided a language for developing curriculum and assessment around these roles. However, as governing bodies design new curricula that seek to further incorporate principles of CBME and move away from time-based models of training, we run the risk of eroding the hard-won gains of earlier innovations, such as CanMEDS, that were designed to ensure that medical education developed in physicians the broad spectrum of abilities required “to provide the highest quality care.”5

While CanMEDS has been the basis of Canadian specialty training for 15 years, the recent introduction of a new, nationally developed competency-based model of medical education has raised questions about the continued centrality of the existing CanMEDS competencies. Although efforts have been made to align this new model with CanMEDS by mapping the existing competencies to the new curricular elements, we do not yet know to what extent these competencies are meaningfully integrated. Without tracing the degree to which CanMEDS roles are represented within the new curriculum, we risk eroding the holistic curriculum that we have worked so hard to achieve.

Canadian setting and context

Canadian residency programs are transitioning to an approach to competency-based medical education that the specialty training governing body, the Royal College of Physicians and Surgeons of Canada (RCPSC), has called Competence by Design (CBD). Within this curriculum, workplace-based assessments are founded on entrustable professional activities (EPAs), the core work-related tasks of a discipline,6,7 which represent the organizing framework for assessment in this system. Each EPA contains observable markers of an individual’s ability, called milestones, that provide explicit direction for learners as well as clear teaching and assessment goals for educators. EPAs and their associated milestones are thus not only tools for fostering learning and the ongoing development of competence, but also summative benchmarks of the learning that has taken place.

In moving from a curriculum based on a series of CanMEDS-linked competencies to one built on EPAs and milestones, efforts have been made to link pre-existing competencies to the new milestones; however, the extent to which this has been successful is currently unknown. The purpose of this study was to systematically map how CanMEDS competencies are represented within the new curricula for post-graduate programs. Although we chose to examine the Canadian national curricula, our approach to examining curricular change is relevant to any country or program that is undertaking curricular innovation or transitioning to a competency-based approach to medical education.

Methods

For this research, we drew on principles from the program evaluation literature, specifically the conceptual framework adopted by the International Association for the Evaluation of Educational Achievement (IEA), which makes explicit “the conceptual links between aspects of curriculum and the learning students attain.”8 This framework differentiates between the intended curriculum (what society intends individuals to learn), the implemented curriculum (what is actually taught), and the attained curriculum (what individuals learn).9 The framework assumes that the primary determinant of learning is Opportunity to Learn (OTL), which is predicated on the intended and implemented curriculum.

In this study, we used content analysis10 to identify how CanMEDS competencies are represented within the new national curriculum for specialty post-graduate training programs. Content analysis is a flexible methodology that uses a set of systematic procedures to make empirical observations about the content of texts.11 Within education research, content analysis is the most common methodological approach used to identify the OTL afforded by curriculum.8,12,13 Content analyses of curriculum are predominantly undertaken for two purposes: to understand differences in achievement between institutions or countries8 and to explore the extent to which certain topics are well covered, minimally covered, or excluded from the curriculum.13 We were most interested in the second purpose: the extent to which CanMEDS roles were covered or excluded during CBD design and implementation.

Given that our aim was to explore the quantitative representation of CanMEDS competencies (i.e., how many are present, how many learning opportunities each role is afforded, and what gaps, if any, exist in the representation of the CanMEDS roles), we elected to use a deductive, manifest content analysis approach. This approach aims to reduce textual phenomena to manageable, objective, numeric data from which inferences can be drawn about the phenomena themselves.14 It requires that researchers systematically appraise the content of their texts (in our case, curriculum documents) to discover their manifest, rather than latent or connotative, meanings.14 Put simply, this approach to content analysis involves the systematic examination of texts to determine whether particular variables are represented.

Data collection and analysis

Our data set consisted of the national curriculum documents for each of the 18 post-graduate programs at our institution that transitioned to CBD between 2017 and 2019 (Table 1). The national curriculum documents we examined form the basis of the new curriculum and express the intended curriculum by representing national learning expectations. Conceptually, national curricula bridge the intended curriculum to the teaching and learning experiences that lead to the implemented curriculum.8 While there have been efforts to ensure that the CanMEDS competencies have not been lost in this curricular transformation, the new curriculum has not yet been systematically examined to ensure that OTL remains identifiable for the full range of competencies. A systematic content analysis approach provides important insight into the OTLs afforded to residents by identifying the CanMEDS competencies that are supported by ample OTLs and those that may not be sufficiently supported. Given the educational quality improvement nature of this work, our institution’s research ethics board (REB) exempted the study from full ethical review.

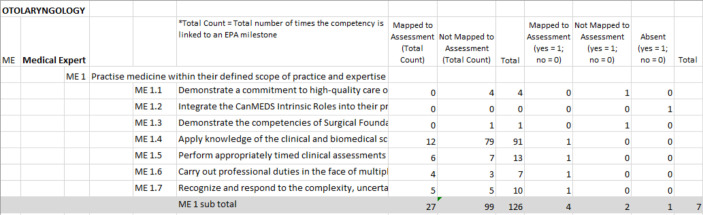

For each of the 18 postgraduate programs that had completed their implementation of CBD, we examined two sets of documents: 1) the document that outlines the specific CanMEDS competencies trainees are required to cultivate before they enter independent practice (i.e., the existing curriculum); and 2) the document that details the new, nationally created EPA- and milestone-based curriculum (i.e., the new curriculum). Because the former document represents the outcomes desired from training, the competencies it outlines became the variables for our manifest content analysis. To guide the analysis, we created a data extraction form for each program that listed each of the CanMEDS competencies outlined in its existing curriculum (document 1); these competencies were treated as the variables in our manifest content analysis of that program’s new curriculum. We systematically hand-searched each program’s new curriculum document (document 2) variable by variable (e.g., Medical Expert 1.1, Medical Expert 1.2) to determine whether each competency was present. Competencies that were present and required to be assessed within the context of a specific EPA were coded as mapped to assessment; competencies that were present but not required to be assessed within a specific EPA were coded as not mapped to assessment; and competencies that did not appear in the document were coded as absent. We distinguished between mapped and not mapped to assessment because, in education, items that are linked to an assessment assume greater importance.15,16 See Figure 1 for a visual representation of our data collection tool. This approach enabled us to systematically evaluate how well the existing CanMEDS competencies were represented within the new EPA curriculum.

Figure 1.

Data extraction tool
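The three-way coding rule described above can be summarized as a small decision function. This is a minimal sketch for illustration only: the study coded documents by hand, and the names `Code` and `code_competency`, the short competency labels (e.g., "ME 1.1"), and the two input sets are hypothetical representations, not the authors' tooling.

```python
from enum import Enum


class Code(Enum):
    """Codes on the data extraction form (hypothetical representation)."""
    MAPPED = "mapped to assessment"
    NOT_MAPPED = "not mapped to assessment"
    ABSENT = "absent"


def code_competency(competency: str,
                    present_in_document: set[str],
                    required_in_an_epa: set[str]) -> Code:
    """Assign a code to one CanMEDS enabling competency.

    present_in_document: competencies found anywhere in the new EPA document.
    required_in_an_epa: competencies required to be assessed within at least
    one specific EPA (assumed to be a subset of present_in_document).
    """
    if competency in required_in_an_epa:
        return Code.MAPPED
    if competency in present_in_document:
        return Code.NOT_MAPPED
    return Code.ABSENT


# Hypothetical example for three Medical Expert competencies (not study data).
present = {"ME 1.1", "ME 1.2"}
required = {"ME 1.1"}
for comp in ["ME 1.1", "ME 1.2", "ME 1.3"]:
    print(comp, "->", code_competency(comp, present, required).value)
# ME 1.1 -> mapped to assessment
# ME 1.2 -> not mapped to assessment
# ME 1.3 -> absent
```

Checking the assessment requirement first mirrors the rule that a competency counts as mapped only when a specific EPA requires it to be assessed; mere presence in the document yields "not mapped".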

We conducted this hand-search for each of the 18 programs. Two researchers (RP and EF) conducted the search and entered the extracted numeric information into our data extraction form. To ensure consistency in the hand-searching and data extraction process, both researchers analyzed the documents for the first four programs; any inconsistencies identified between coders were resolved by a second hand-search for the variable in question. The remaining programs were coded by one researcher (RP or EF) only.
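For the double-coded programs, comparing the two coders' forms competency by competency produces the list of variables that needed a second hand-search. The sketch below assumes each form is a dictionary from a competency label to a code string; `find_disagreements` and the example forms are hypothetical, and the study does not report a formal agreement statistic, so none is computed here.

```python
def find_disagreements(coder_a: dict[str, str],
                       coder_b: dict[str, str]) -> list[str]:
    """Return competencies the two coders coded differently, sorted by label.

    A competency that appears on only one form also counts as a
    disagreement, because the other coder's value is then missing (None).
    """
    all_competencies = sorted(coder_a.keys() | coder_b.keys())
    return [c for c in all_competencies if coder_a.get(c) != coder_b.get(c)]


# Hypothetical forms for one program (not study data).
coder_1 = {"HA 1.1": "mapped", "HA 1.2": "absent", "PR 1.1": "not mapped"}
coder_2 = {"HA 1.1": "mapped", "HA 1.2": "not mapped", "PR 1.1": "not mapped"}
print(find_disagreements(coder_1, coder_2))  # ['HA 1.2']
```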

Once the data extraction forms were completed, we tabulated the number of times each specific CanMEDS competency occurred within the entire EPA document for each program, and the total number of competencies that were represented under each CanMEDS role. For example, in Otolaryngology – Head & Neck Surgery there are 19 Medical Expert competencies identified as training outcomes in the intended curriculum, so we mapped how many of these variables were represented in the new EPA-based curriculum.
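The tabulation step amounts to counting, for each CanMEDS role, how many of its competencies received each code and expressing those counts as a percentage of the role's total. A minimal sketch follows, assuming a hypothetical labelling convention in which the role abbreviation precedes the competency number (e.g., "ME 1.1"); the function name and the example form are illustrative, not study data.

```python
from collections import Counter, defaultdict

CODES = ("mapped", "not mapped", "absent")


def role_percentages(form: dict[str, str]) -> dict[str, dict[str, float]]:
    """Percentage of each role's competencies that received each code.

    form maps a competency label such as 'ME 1.1' to one of CODES; the role
    is taken to be the text before the first space (hypothetical convention).
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for competency, code in form.items():
        role = competency.split(" ", 1)[0]
        counts[role][code] += 1

    result = {}
    for role, counter in counts.items():
        total = sum(counter.values())
        # Counter returns 0 for codes that never occurred in this role.
        result[role] = {code: 100 * counter[code] / total for code in CODES}
    return result


# Hypothetical extraction form for one program (not study data).
form = {
    "ME 1.1": "mapped", "ME 1.2": "mapped", "ME 1.3": "mapped", "ME 1.4": "absent",
    "PR 1.1": "mapped", "PR 1.2": "not mapped", "PR 1.3": "absent", "PR 1.4": "absent",
}
summary = role_percentages(form)
print(summary["ME"])  # 75% mapped, 0% not mapped, 25% absent
print(summary["PR"])  # 25% mapped, 25% not mapped, 50% absent
```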

Our extracted data were then analyzed using simple descriptive statistics to characterize the distribution, central tendency, and dispersion of the data. As the purpose of this study was to identify broad trends across the EPA documents, we computed the distribution of the competencies associated with each CanMEDS role across the entire sample of programs. Range and standard deviation were also calculated to reflect the dispersion of the data for each role across the entire national sample.
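Once each program has a percentage per role and code, summary statistics of the kind reported in Tables 2 and 3 are the mean, standard deviation, and range of those percentages across programs. The sketch below uses Python's standard `statistics` module with hypothetical input values; the paper does not state whether a sample or population standard deviation was used, so the choice of `statistics.stdev` (sample, n − 1 denominator) is an assumption.

```python
import statistics


def summarize(percentages: list[float]) -> dict[str, float]:
    """Mean, standard deviation, and range of per-program percentages."""
    return {
        "mean": statistics.mean(percentages),
        # Assumption: sample SD; use statistics.pstdev for a population SD.
        "sd": statistics.stdev(percentages),
        "min": min(percentages),
        "max": max(percentages),
    }


# Hypothetical 'mapped to assessment' percentages for one role in five programs.
mapped = [70.0, 75.0, 80.0, 85.0, 90.0]
stats = summarize(mapped)
print(f"Mean {stats['mean']:.1f}, SD {stats['sd']:.1f}, "
      f"range {stats['min']:.0f}–{stats['max']:.0f}")
# prints: Mean 80.0, SD 7.9, range 70–90
```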

Results

We found that the CanMEDS competencies, the desired outcomes of specialty training, have not been entirely integrated into the EPA curriculum for the 18 Canadian post-graduate specialties we analyzed. As Table 2 illustrates, on average 55.6% of all CanMEDS competencies were mapped to assessment, 13.3% were not mapped to assessment, and 31.1% were absent from the curriculum entirely.

Table 2.

Percentage of total competencies across programs

| | Mapped to assessment (%) | Not mapped to assessment (%) | Absent (%) |
|---|---|---|---|
| Mean | 55.6 | 13.3 | 31.1 |
| Standard deviation | 10.33 | 7.32 | 12.57 |
| Range | 40–83 | 0–29 | 0–54 |

CBD is in the process of being implemented across all 67 specialty and subspecialty postgraduate training programs; Table 1 lists the 18 programs sampled in this study.

Table 1.

Specialty programs sampled

| Year of transition to CBD | Program |
|---|---|
| 2017 | Anesthesiology, Otolaryngology-Head and Neck Surgery |
| 2018 | Emergency Medicine, Urology, Surgical Foundations, Nephrology, and Medical Oncology |
| 2019 | Anatomical Pathology, Cardiac Surgery, Critical Care Medicine – Adult & Pediatrics, Gastroenterology, General Internal Medicine, Geriatric Medicine, Internal Medicine, Neurosurgery, Obstetrics & Gynecology, Radiation Oncology, and Rheumatology |

Across the sample of 18 programs, there was heterogeneity in how well the CanMEDS competencies were represented. For example, as Table 2 shows, the percentage of competencies absent from a program’s EPA document ranged from 0% to 54%.

There were no clear patterns in CanMEDS competency gaps across types of specialty (e.g., surgical vs. non-surgical programs); each program had its own unique gaps. Despite these differences among programs, however, an analysis of the dataset as a whole revealed some clear and compelling patterns of coverage. In what follows, we describe these patterns and point out notable outliers.

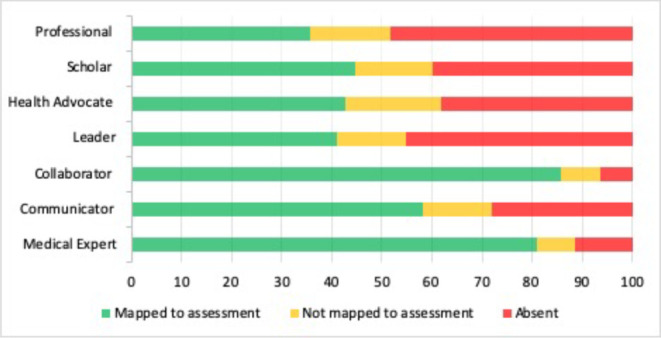

While the extent to which competencies were represented within each program’s EPA document varied, some patterns held across specialties. These high-level trends are represented in Figure 2, which displays the percentage of enabling competencies for each CanMEDS role that were mapped to assessment, not mapped to assessment, or absent across all 18 programs.

Figure 2.

CanMEDS competency integration across programs

Medical Expert and Collaborator competencies were well integrated into EPA assessment protocols, with 81.1% and 85.8%, respectively, mapped to assessment. In contrast, competencies related to the Professional, Leader, Health Advocate, and Scholar roles were less frequently mapped to EPA assessments (35.8%, 41.1%, 42.8%, and 44.8%, respectively).

Table 3 reports the mean percentage of competencies mapped to assessment, not mapped to assessment, and absent from the EPA documents for each CanMEDS role, along with the dispersion of the data around each mean. Competencies from the Medical Expert and Collaborator roles were mapped to assessment consistently across programs, with relatively small standard deviations and concentrated ranges. The Collaborator role was particularly well represented, with eight programs achieving 100% representation (e.g., Rheumatology, Internal Medicine, Surgical Foundations, and Cardiac Surgery). There were, however, two outliers: Anatomical Pathology and Geriatric Medicine each represented only 57% of the Collaborator competencies. Competencies from the Health Advocate, Scholar, Professional, and Leader roles, on the other hand, were much more variable, with much larger standard deviations and ranges across programs. Although the Professional role had a large standard deviation, the majority of programs represented only 23% to 31% of its competencies; Emergency Medicine was a notable outlier, integrating 62%. Programs also consistently struggled to represent the Health Advocate role. The highest representation was 67%, achieved by only two programs (Nephrology and Geriatric Medicine), and some programs had distinctly lower representation of Health Advocate competencies than others (e.g., Critical Care, 12%; Neurosurgery, 17%).

Table 3.

Percentage of competencies across programs by CanMEDS role

| | Mapped to assessment (%) | Not mapped to assessment (%) | Absent (%) |
|---|---|---|---|
| Medical Expert | | | |
| Mean | 81.1 | 7.6 | 11.3 |
| Standard deviation | 8.4 | 6.9 | 6.3 |
| Range | 70–94 | 0–23 | 0–28 |
| Communicator | | | |
| Mean | 58.3 | 13.6 | 28.1 |
| Standard deviation | 20.5 | 10.4 | 17.8 |
| Range | 29–94 | 0–41 | 6–64 |
| Collaborator | | | |
| Mean | 85.8 | 7.9 | 6.3 |
| Standard deviation | 15.5 | 10.1 | 10.1 |
| Range | 57–100 | 0–29 | 0–29 |
| Leader | | | |
| Mean | 41.1 | 13.6 | 45.3 |
| Standard deviation | 21.1 | 12.1 | 21.2 |
| Range | 18–90 | 0–36 | 0–73 |
| Health Advocate | | | |
| Mean | 42.8 | 18.9 | 38.3 |
| Standard deviation | 15.0 | 15.25 | 18.5 |
| Range | 12–67 | 0–50 | 0–63 |
| Scholar | | | |
| Mean | 44.8 | 15.4 | 39.8 |
| Standard deviation | 22.9 | 10.7 | 25.8 |
| Range | 6–78 | 0–44 | 0–94 |
| Professional | | | |
| Mean | 35.8 | 15.9 | 48.3 |
| Standard deviation | 18.5 | 16.4 | 22.7 |
| Range | 15–92 | 0–61 | 0–85 |

Discussion

The formidable process of revising curricula in a forward-thinking way has required tremendous collaborative work on the part of numerous stakeholders. We recognize that such a complex undertaking will never produce the ideal product on the first try; iterative quality improvement is a necessary part of the process. We also acknowledge that EPAs are not intended to represent the entirety of the intended curriculum, but during this transition they may, in practice, come to do so. Our focus on competencies attached to EPAs is informed by the knowledge that competencies represented in EPAs gain ‘status’ through this association, and by the possibility that competencies without such status may be seen as less valued in the new curriculum. Thus, the insights we raise in this paper are intended to inform the iterative process of curriculum renewal and provide stakeholders with direction.

The results of our study lend credence to the concern that certain valuable curricular elements risk being lost in this process of curriculum redesign. We showed, for example, that within the new national curricula for 18 residency training programs, the Health Advocate, Professional, Scholar, and Leader roles were consistently underrepresented. While authentic acknowledgement and integration of the non-Medical Expert CanMEDS roles (termed intrinsic roles in Canada) into residency training has been challenging as a whole, the underrepresented roles identified in this study are notably the same roles that have been the most vulnerable and, in some ways, the hardest to assimilate into the practice of medical education in the first place.17,18,19,20 In particular, the Health Advocate role has been challenging to teach and assess.21,22,23 Advocacy is seen as an essential responsibility of physicians’ work and an integral component of their overarching covenant with society,24,25 yet fewer than half of the Health Advocate competencies are directly mapped to assessment within the new EPA curricula. The lack of accessible OTL for health advocacy within the new curriculum is curious given the clear communication in the literature of the pressing need for physicians to serve as meaningful health advocates.22,26 The fact that this critical need has not translated into the new curriculum raises important questions about the potential for widening gaps in health advocacy competencies.

In the same vein, our results demonstrate that competencies related to professionalism had an even lower rate of integration into assessment than those related to health advocacy (35.8% and 42.8%, respectively). As with health advocacy, medical educators have struggled to teach and assess this competency.27,28 The importance of this role raises the stakes of that challenge: professionalism is the bedrock of the medical field’s contract with society.29,30 The absence of professionalism competencies is particularly concerning given the known detrimental effects on patient safety, patient satisfaction, and the performance and well-being of health care teams that arise when physician professionalism is lacking.30 Given the importance of professionalism and the clear deleterious effects of unprofessional behaviour, it is surprising that this role does not have a more central or formal place in the new curriculum. In the absence of a clear curriculum, residents are left to learn by example. Role modelling prevails as a teaching tool, yet in one study, faculty members’ own professionalism breaches, and their inability to address such breaches in colleagues, were perceived as the predominant barrier to teaching this role.31

Similar complications abound in teaching and assessing the other intrinsic CanMEDS roles,19 and work remains to be done to understand the full impact on both educational outcomes and patient care. Given the well-documented challenges of integrating the intrinsic roles into the previous model of time-based training, innovative approaches are required to ensure that the competencies associated with these roles are meaningfully integrated into the new EPA-based curriculum. Knowledge of the existing gaps in the curriculum is required to drive this innovation. Overall, this curriculum renewal effort must be, and remain, informed by the driving intention of competency-based medical education: the development of well-rounded, socially responsive physicians. Instead of supporting this aim, the new curricula afford Medical Expert competencies more robust OTLs than most of the intrinsic roles. This raises a critical question about whether we are reverting to the practice of privileging the Medical Expert role over other physician competencies, despite the known importance of a well-rounded physician to both physician wellness and patient care.

The way forward

Broadly, the goal of CBME is to ensure that all graduates have the knowledge and skills needed to be competent physicians, capable of responding to the needs of patients and society.32,33 CBME has been described as an educational framework that ensures desired physician outcomes, inclusive of all roles, are reliably taught and assessed. Despite these laudable aims, the results of this study reveal gaps in the competencies present in the new Canadian curricula designed to further them. From an educational perspective, we must think carefully about the content of the curriculum and the OTLs it affords to ensure that the outcomes we all desire are meaningfully enabled.

From the genesis of this curriculum reform, programs have had clear direction that the EPAs are not meant to be completely comprehensive, and yet the complete absence of representation of certain competencies in the curriculum creates challenges. Clearly, supplemental content is needed to ensure comprehensive teaching and assessment of all CanMEDS competencies. However, our early experience in implementing the new curriculum demonstrates that programs are struggling to accumulate sufficient EPA assessments as it is, so the expectation that they will also attend to non-EPA assessments may be unrealistic at this juncture.

From a practical perspective, front-line implementation of EPAs alone has been a tremendous challenge for many programs, requiring a great deal from physicians: culture change, faculty and resident training, curriculum mapping, and programmatic assessment, to name a few. Layering on the identification of missing CanMEDS competencies and the revision of curriculum to address them may be asking too much. To alleviate some of this burden, Pero et al.34 investigated the role of educational consultants (ECs) at one Canadian university. The ECs, who held graduate degrees in education, were leveraged to lighten the workload of clinicians during curricular transformation by “liaising with stakeholders, co-creating educational development and education technology activities, and leading training and research coordination.”34 After only one year, decanal leadership determined that ongoing funding of ECs was a priority because of the practical benefits they provided, such as the design and delivery of curriculum, including assessment forms and curriculum mapping.34 Widespread use of ECs to help programs identify missing competencies and develop educational approaches to address them would go a long way toward ensuring that the original vision of CanMEDS is maintained in the new curriculum.

At our university, we have used the results of this analysis to inform program directors of CanMEDS competencies that are not being assessed or are missing from their EPA-based curriculum. In particular, one surgical program worked extensively with an EC to identify whether, and where, these competencies already existed within the training experiences offered to its residents. In many cases, competencies that were not assessed or not present in the EPAs did indeed exist elsewhere in the program’s comprehensive curriculum, so we used this opportunity to ensure that the OTLs needed to achieve these competencies were accurately reflected in its curriculum map. In other cases, the process identified clear gaps, which allowed the program director and EC to thoughtfully plan their integration through teaching and assessment opportunities.

Our study has some important limitations. We examined two sets of national curriculum documents for 18 Canadian specialty programs: the CanMEDS competency documents and the EPA documents. We recognize that more than 18 Canadian specialty programs have launched CBD since 2017; however, we investigated only those programs offered at our centre and used only the original versions of their documents. We also acknowledge that these documents do not represent the entirety of the intended curriculum, although they comprise its largest and most visible component. Although many CanMEDS competencies appear in the EPA documents, our coding specifically distinguished between competencies that are required to be assessed and those that are not. Generally, in education, items that are not linked to an assessment assume less importance, and our coding choice reflects this. Additionally, because programs are struggling to implement even the fundamentals of EPAs, we chose to focus on the curriculum as written. Understanding what competency gaps exist within the EPA curriculum therefore has practical importance for post-graduate programs, and this information can help to meaningfully drive the development of supplementary curriculum.

Conclusion

In the process of curriculum renewal, it is necessary to ensure that the curriculum aligns with the outcomes we intend to achieve, whatever those outcomes are. Integrating the CanMEDS competencies, with their societal orientation, adds another layer of complexity, but comprehensive coverage ensures a holistic approach to the training of future physicians. We hope this study will prompt national conversations about the presence and meaningful integration of the desired outcomes of training in competency-based curricula; the purposeful match between competencies and assessment; the availability of appropriate OTLs to gain competencies; and the role of ECs in supporting curricular implementation. There is now a great opportunity to evaluate current approaches to curriculum development, thoughtfully and purposefully ensuring the thorough integration of all the competencies we want our graduates to possess.

Footnotes

Conflicts of Interest: None declared.

Funding: None declared.

References

- 1.Frank JR. Competency-based medical education: theory to practice. Med Teach. 2010; 32(8):638-45. 10.3109/0142159X.2010.501190 [DOI] [PubMed] [Google Scholar]

- 2.Neufeld VR, Maudsley RF, Pickering RJ, et al. Educating future physicians for Ontario. Acad Med. 1998;73(11):1133-1148. 10.1097/00001888-199811000-00010 [DOI] [PubMed] [Google Scholar]

- 3.Butt H, Duffin J. Educating future physicians for Ontario and the physicians’ strike of 1986: the roots of Canadian competency-based medical education. CMAJ. 2018;190(7):E196-E198. 10.1503/cmaj.171043 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.McWhinney IR. Primary care: core values core values in a changing world. Br Med J. 1998;316:1807. 10.1136/bmj.316.7147.1807 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Frank JR. A history of CanMEDS – chapter from Royal College of Physicians of Canada 75th Anniversary History. 2004. Available from https://www.efomp.org/uploads/b4cad47f-00a5-4128-a460-f0851bd7beed/CanMEDShistoryforRoyalCollege75thanniversary2004byJRFrank.pdf

- 6.ten Cate O, Schelle F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542-547. 10.1097/acm.0b013e31805559c7 [DOI] [PubMed] [Google Scholar]

- 7.ten Cate O, Snell L, Carraccio C. Medical competence: the interplay between individual ability and the health care environment. Med Teach. 2010;32(8):669-75. 10.3109/0142159x.2010.500897 [DOI] [PubMed] [Google Scholar]

- 8.Cogan L, Schmidt W. The concept of opportunity to learn (OTL) in international comparisons of education. In: Stacy K, Turner R, editors. Assess Math Lit PISA Exp. New York: Springer; 2015.p. 207-216. [Google Scholar]

- 9.Mullis I, Martin M, Ruddock G, O’Sullivan C, Preuschoff C. TIMSS 2011 assessment framework. Boston, MA:TIMSS & PRILS International Study Centre; 2009. [Google Scholar]

- 10.Neuendorf KA. The content analysis guidebook. 2nd ed. Sage Publications; 2017. [Google Scholar]

- 11. Weber R. Basic content analysis. 2nd ed. Sage Publications; 1990.

- 12. Tamir P. Assessment and evaluation in science education: opportunities to learn and outcomes. In: Fraser B, Tobin K, editors. International handbook of science education. New York: Kluwer Academic Publishers; 1998. p. 761–789.

- 13. Floden R. The measurement of opportunity to learn. In: Porter A, Gamoran A, editors. Methodological advances in cross-national surveys of educational achievement. Washington, DC: National Academies Press; 2002. p. 229–266.

- 14. Riffe D, Lacy S, Watson B, Fico F. Analyzing media messages. 4th ed. Taylor & Francis; 2019.

- 15. Van der Vleuten C. The assessment of professional competence: developments, research, and practical implications. Adv Health Sci Educ. 1996;1:41–67. 10.1007/BF00596229

- 16. Wormald BW, Schoeman S, Somasunderam A, Penn M. Assessment drives learning: an unavoidable truth? Anat Sci Educ. 2009;2(5):199–204. 10.1002/ase.102

- 17. Chou S, Cole G, McLaughlin K, Lockyer J. CanMEDS evaluation in Canadian postgraduate training programmes: tools used and programme director satisfaction. Med Educ. 2008;42(9):879–886. 10.1111/j.1365-2923.2008.03111.x

- 18. Zibrowski EM, Singh I, Goldszmidt MA, et al. The sum of the parts detracts from the intended whole: competencies and in-training assessments. Med Educ. 2009;43(8):741–748. 10.1111/j.1365-2923.2009.03404.x

- 19. Whitehead C, Martin D, Fernandez N, et al. Integration of CanMEDS expectations and outcomes. Members of the FMEC PG Consortium; 2011. p. 1–24.

- 20. Hubinette M, Dobson S, Towle A, Whitehead C. Shifts in the interpretation of health advocacy: a textual analysis. Med Educ. 2014;48(12):1235–1243. 10.1111/medu.12584

- 21. Verma S, Flynn L, Seguin R. Faculty’s and residents’ perceptions of teaching and evaluating the role of health advocate: a study at one Canadian university. Acad Med. 2005;80(1):103–108. 10.1097/00001888-200501000-00024

- 22. Gill PJ, Gill HS. Health advocacy training: why are physicians withholding life-saving care? Med Teach. 2011;33(8):677–679. 10.3109/0142159X.2010.494740

- 23. Jones MD, Lockspeiser TM. Proceed with caution: implementing competency-based graduate medical education. J Grad Med Educ. 2018;10(3):276–278. 10.4300/JGME-D-18-00311.1

- 24. Dharamsi S, Ho A, Spadafora SM, Woollard R. The physician as health advocate: translating the quest for social responsibility into medical education and practice. Acad Med. 2011;86(9):1108–1113. 10.1097/ACM.0b013e318226b43b

- 25. Feldman AM. Advocacy: a new arena for the translational scientist. Clin Transl Sci. 2011;4(2):73–75. 10.1111/j.1752-8062.2011.00277.x

- 26. Frenk J, Chen L, Bhutta ZA, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010;376(9756):1923–1958. 10.1016/S0140-6736(10)61854-5

- 27. Snell L. Teaching professionalism and fostering professional values during residency. In: Cruess R, Cruess S, Steinert Y, editors. Teaching medical professionalism. New York: Cambridge University Press; 2008. p. 246–262.

- 28. Warren AE, Allen VM, Bergin F, et al. Understanding, teaching and assessing the elements of the CanMEDS professional role: Canadian program directors’ views. Med Teach. 2014;36(5):390–402. 10.3109/0142159X.2014.890281

- 29. ABIM Foundation, American Board of Internal Medicine. Medical professionalism in the new millennium: a physician charter. Ann Intern Med. 2002;136(3):243–246.

- 30. Canadian Medical Protective Association. Physician professionalism: is it still relevant? 2012. Available from: https://www.cmpa-acpm.ca/en/advice-publications/browse-articles/2012/physician-professionalism-is-it-still-relevant (accessed 2020 Jan 30).

- 31. Bryden P, Ginsburg S, Kurabi B, Ahmed N. Professing professionalism: are we our own worst enemy? Faculty members’ experiences of teaching and evaluating professionalism in medical education at one school. Acad Med. 2010;85(6):1025–1034. 10.1097/ACM.0b013e3181ce64ae

- 32. Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007;29(7):642–647. 10.1080/01421590701746983

- 33. Iobst WF, Sherbino J, ten Cate O, et al. Competency-based medical education in postgraduate medical education. Med Teach. 2010;32(8):651–656. 10.3109/0142159X.2010.500709

- 34. Pero R, Pero E, Marcotte L, Dagnone JD. Educational consultants: fostering an innovative implementation of competency-based medical education. Med Educ. 2019;53(5):524–525. 10.1111/medu.13868