Abstract

Background:

Remote patient monitoring can shift important data collection opportunities to low-cost settings. Here, we evaluate whether the quality of blood-samples taken by patients at home differs from samples taken from the same patients by clinical staff. We examine the effects of socio-demographic and patient reported outcomes (PRO) survey data on remote blood sampling compliance and quality.

Methods:

Samples were collected both in-clinic by study-staff and remotely by subjects at home. During cataloguing the samples were graded for quality. We used chi-squared tests and logistic regressions to examine differences in quality and compliance between samples taken in-clinic versus samples taken by subjects at-home.

Results:

64.6% of in-clinic samples and 69.7% of samples collected remotely at home received a Good (compared to Not Good) quality grade (chi2=4.91; p=0.03). Regression analysis found remote samples had roughly 1.5 times higher odds of being Good quality compared to samples taken in-clinic (OR 1.55; p=.001; 95% CI 1.18–2.03). Increased anxiety reduced odds of contributing a Good sample (OR .97; p=.04; 95% CI .95–1.00). Response rates were significantly higher for in-clinic sampling (95.8% vs 89.8%; p<.001).

Conclusion:

Blood-samples taken by patients at home using a microsampling device yielded higher quality samples than those taken in-clinic.

INTRODUCTION

Remote, longitudinal health monitoring via mobile health technologies (mHealth) holds promise for improving health care research and outcomes. By enabling simultaneous data collection across multiple modalities, including those measuring physiologic, behavioral, social, and biological factors, these technologies allow researchers to explore longitudinal linkages between formerly disparate data with greater statistical power, reliability, and validity.1 When linked across research or health care contexts, such data can reveal novel correlates and predictors at lower costs compared to controlled studies or care conducted in clinical settings.1–3

The demand for more frequent biochemical assessment amid the rising costs of face-to-face contact has challenged clinicians and researchers to develop less expensive blood collection approaches. The cost-prohibitive nature of venipuncture undertaken by trained staff, combined with the challenge of collecting blood in large epidemiologic studies where subjects are dispersed all over the country, constitutes an opportunity for remote collection.4 It is now possible, for instance, that subjects can collect blood samples themselves and mail them back to study staff for storage, preparation, and analysis. The low cost of such blood collection facilitates increased sample size for large studies and increased accessibility for smaller scaled studies otherwise unable to afford biospecimen collection.5

Before remote blood sampling of this nature can be widely deployed, however, the feasibility, acceptability, and validity of the processes and devices involved must be established. If study subjects or patients are reluctant to provide blood samples, or the sampling techniques yield low quality samples for analysis, then remote sampling will not be preferred to sampling in clinical environments. If compliance varies by sociodemographic factors or study outcome measures, or if sample quality varies systematically by the same, then remote blood collection methods may introduce bias.4,5 Identifying these potential sources of bias, confirming feasibility, and taking steps toward establishing validity can aid protocol development and study design, or alert researchers to problems with device usability and performance in advance.

Our recent study, the Prediction, Risk, and Evaluation of Major Adverse Cardiac Events (PRE-MACE) sponsored by the California Initiative for the Advancement of Precision Medicine (CIAPM), provided an opportunity to assess the feasibility of patient-centric remote dried blood sampling in the context of a longitudinal analysis of major adverse cardiac event predictors. The Mitra microsampling device by Neoteryx (Torrance, CA, USA) is an FDA-listed class 1 device (D254956) that enables home- or clinic-based, longitudinal, volumetric blood sample collection using a simple finger prick.6 The device has an absorbent polymeric tip that draws up a fixed volume (10 µL) of blood, tolerates sample heterogeneity, and overcomes hematocrit bias issues associated with dried blood spots.7 Blood samples can be collected and stored in-clinic or collected by subjects and patients at home before being enclosed in a plastic clamshell, sealed in an aluminum foil pouch, and returned via mail. The analytes of interest in the PRE-MACE study are stable at room temperature for 13 weeks and for long term storage at −80 degrees centigrade for up to 22 weeks,8 making Mitra a potentially viable option for remote blood collection.

Because the PRE-MACE study involved a repeated-measures design wherein subjects contributed 4 blood micro-samples over a 3 month period, it provided a unique opportunity to compare response rates and sample quality in the same subjects across two different settings: 1) in-clinic sampling conducted by a trained technician; and 2) remote sampling conducted by the subject and returned by mail. The co-availability of socio-demographic data and patient reported outcomes (PRO) data allowed us to examine covariates influencing blood sample compliance and quality. The results suggest that remote blood sampling achieves equivalent or better sample quality, response rates, acceptability among study subjects, and overall feasibility compared to in-clinic sample collection.

METHODS

Recruitment and Study-Summary

The Prediction, Risk, and Evaluation of Major Adverse Cardiac Events (PRE-MACE) study is a longitudinal, prospective cohort study involving 200 patients with stable ischemic heart disease. Subjects were recruited from a large, academic hospital-based cardiac rehabilitation center, a tertiary care women’s heart center, and independent physician panels. Subjects were required to own or have access to a smartphone, tablet, or desktop computer capable of connecting to the internet via web browser. Additionally, that device had to allow connection to monitoring devices via Bluetooth or USB cable.

The details of the scientific rationale, eligibility criteria, design, and methods of the study have been previously published.9 Briefly, 200 stable ischemic heart disease subjects were enrolled and attended an in-clinic appointment where they were equipped with and trained on 4 different activities: 1) the Fitbit Charge 2 (Fitbit Inc., San Francisco, CA, USA), a heart rate and activity tracking device; 2) the AliveCor KardiaPro (Mountain View, CA, USA), a single-channel electrocardiogram rhythm strip used weekly or when experiencing cardiac symptoms; 3) the Mitra® microsampling device (Neoteryx, Torrance, CA, USA) for monthly blood collection; and 4) Patient Reported Outcomes (PROs), collected via web-based questionnaires. Monitoring with the Fitbit was undertaken continuously throughout the 12-week study, interrupted only by bathing, swimming, or other activities involving water, or while the device was charging in its cradle. PROs were collected at baseline and during weekly follow up and included the Patient-Reported Outcomes Measurement Information System (PROMIS) global health, depression, emotional distress/anxiety, physical function, sleep disturbance and social isolation short form questionnaires. Each questionnaire was completed weekly via GetWellLoop (Bethesda, MD, USA), a cloud-based questionnaire platform. Compensation for participation totaled $50-$200 per subject.

Biochemical Biomarker Monitoring and Collection Device

We conducted remote blood microsample collection for purposes of detecting possible protein correlates to known MACE biomarkers. Collection proceeded at baseline and exit at the hospital, and at two monthly time points (day 30 and day 60) via self-administration from home, with the option of having those collections done at the hospital as well. Device collection consisted of 4 Mitra tips collected in-clinic by a trained technician at each of baseline (day 0) and exit (day 90), and 2 Mitra tips collected by subjects themselves and returned by mail at each of day 30 and day 60 (Supplemental Figure 1). Thus, a total of 8 in-clinic micro-samples collected by technicians and as many as 4 at-home micro-samples collected by patients created the two conditions (remote home-based collection vs on-site technician collection) on which to compare compliance rates and sample quality.

Each kit contained printed instructions, lancets, alcohol wipes, bandage, two or four Mitra® tips in a protective clam shell, a foil bag with desiccant, and a prepaid envelope with return address to the core lab. Email reminders for home Mitra® blood collection (day 30 and day 60) were sent to subjects one day prior to scheduled draw.

To take a remote blood micro-sample with the Mitra device, the finger is lanced and blood is wicked up into the device, after which the blood should dry for three hours. The device is then placed inside a Teflon bag with desiccant and, if sampling is remote, into an envelope with prepaid postage. The microsamples can then be mailed via standard mail directly to the laboratory for storage and subsequent analysis.

Quality Assessment

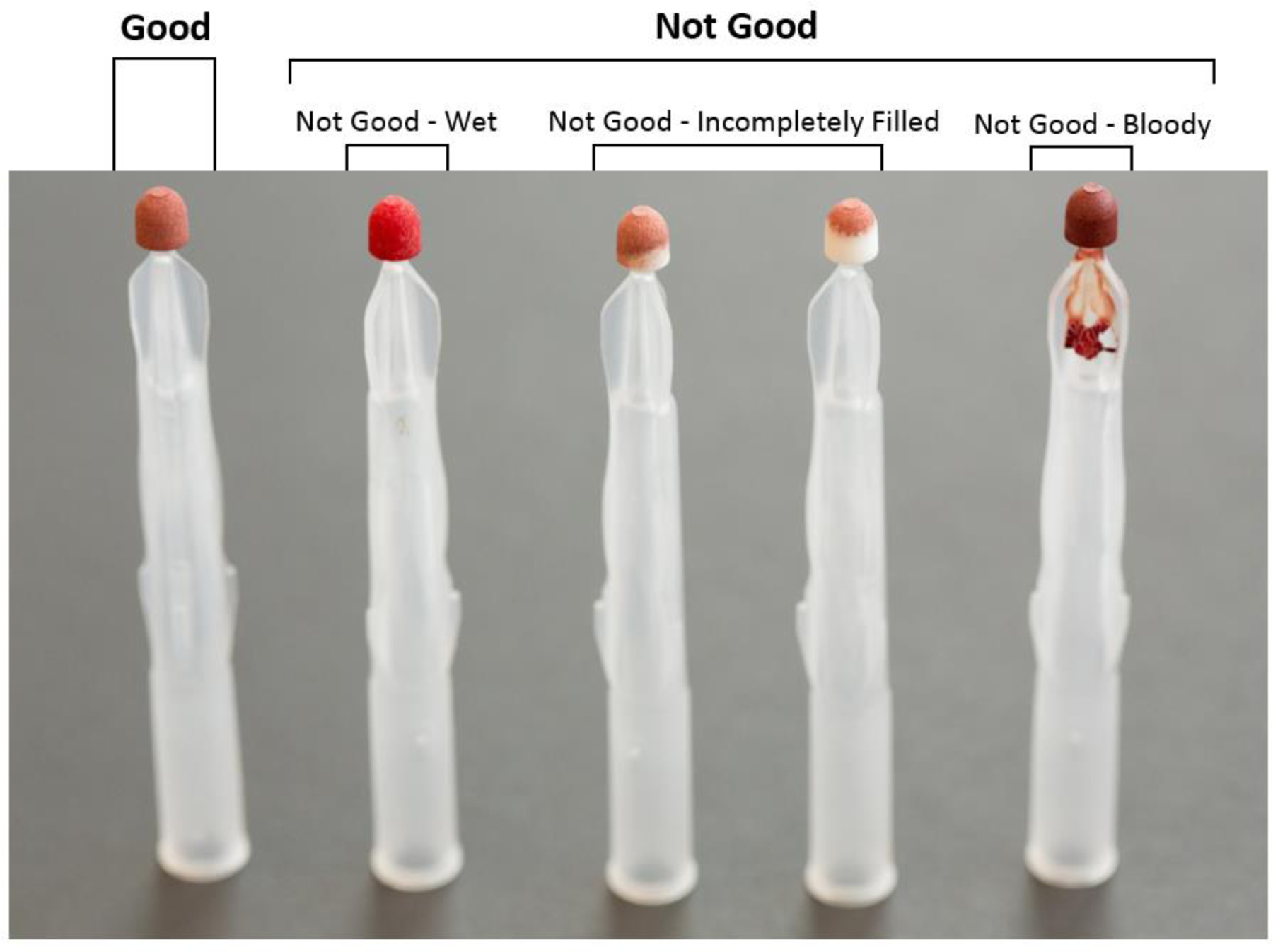

All Mitra® microsampling devices collected in-clinic or sent in by mail were bar coded upon receipt and catalogued accordingly. During cataloguing, the de-identified samples were graded for quality and assigned one of the following grades indicating acceptability for further analysis: Good, i.e. the tip was properly filled and dried per instructions; Not Good – Wet, meaning the tip had not been given sufficient time to dry; Not Good – Incompletely filled, meaning a low volume sample was collected; or Not Good – Bloody, meaning a sample with excess blood around tip was observed (Figure 1). For purposes of the present analysis, these quality assessments were collapsed to a binary quality metric: Good vs Not Good.

Figure 1.

During cataloguing, samples were graded for quality and assigned one of the following grades indicating acceptability for further analysis: Good; Not Good – Wet; Not Good – Incompletely filled; or Not Good – Bloody. These grades were collapsed to a binary measure – Good versus Not Good – that served as the dependent variable describing sample quality.
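The collapse from four grades to a binary outcome can be sketched as follows. This is a minimal illustration and not the study's cataloguing code: the grade strings mirror the labels above, while the function name `is_good` and the example tips are hypothetical.

```python
# Grade labels follow the text; the function and example data are hypothetical.
GRADES = {
    "Good",
    "Not Good - Wet",
    "Not Good - Incompletely filled",
    "Not Good - Bloody",
}


def is_good(grade: str) -> bool:
    """Collapse a four-level quality grade to the binary Good vs Not Good outcome."""
    if grade not in GRADES:
        raise ValueError(f"unknown grade: {grade!r}")
    return grade == "Good"


# Hypothetical tips for illustration only (not study data).
example_tips = ["Good", "Not Good - Wet", "Good", "Not Good - Bloody"]
binary_quality = [is_good(g) for g in example_tips]
share_good = sum(binary_quality) / len(binary_quality)
print(binary_quality, share_good)
```

Rejecting unknown labels, rather than silently treating them as Not Good, keeps a cataloguing typo from being counted as a poor-quality sample.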

Outcomes and Statistical Methods

The unit of observation in this study was the individual Mitra tip, but data were aggregated at the subject level. The two outcomes of interest were sample quality and compliance, both operationalized as binary measures (e.g. Good vs Not Good and Missing vs Returned).

For both outcomes, we first tested for differences in distributions of covariates using Pearson chi-squared tests for categorical variables and t-tests for continuous variables. Because these bivariate tests ignore the hierarchical nature of these data, results for each potential covariate are provided as preliminary evidence of differences in distributions only.
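For a binary covariate crossed with a binary outcome, the Pearson chi-squared statistic reduces to a closed form on the 2x2 table of counts. The sketch below assumes no continuity correction (the text does not say whether one was applied), and the counts in the example are hypothetical, not the study's data.

```python
def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-squared statistic (no continuity correction) for the table
        [[a, b],
         [c, d]]
    e.g. rows = sample origin (in-clinic, at-home), columns = (Good, Not Good).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if min(row1, row2, col1, col2) == 0:
        raise ValueError("every row and column needs at least one observation")
    # Shortcut form, algebraically equal to sum((observed - expected)^2 / expected).
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)


# Hypothetical counts for illustration only (NOT the study's data).
chi2_example = pearson_chi2_2x2(10, 20, 30, 40)
print(round(chi2_example, 4))  # 0.7937
```

Because the statistic treats every tip as independent, it shares the limitation noted above: repeated tips from the same subject are not accounted for.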

To account for likely autocorrelation resulting from repeated measures for patients contributing multiple samples, we used multilevel mixed-effects logistic regression to model both tip quality (Good vs Not Good) and compliance (Missing vs Returned). In the analysis of predictors of sample-quality, the primary regressor of interest was the sample-origin variable (in-clinic vs. at-home sample). The sample for this analysis included all monitoring periods: Baseline (Day 0), First Month (Day 30), Second Month (Day 60), and Exit (Day 90). A variable for monitoring period was included in the regressions to control for any patterns that might have issued from improvements (or decrements) in collection techniques over time or with repeated practice.

In the analysis of predictors of compliance, the sample was limited to just the Day 30 and Day 60 monitoring periods, as these were conducted remotely and were at greatest risk for non-compliance. With no primary regressor under investigation for this particular model, all variables were considered on the basis of their directionality, magnitude, and significance level. All computations were performed using the xtmelogit command and the logit command with cluster-robust standard errors in Stata, version 15 (Stata Corp). To confirm the appropriateness of these models, we calculated an Intraclass Correlation Coefficient and examined the results of Hausman tests. To summarize inferences we present odds ratios (ORs) for the predictors together with their 95% CIs and associated P-values.
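One common way to write the sample-quality model described above is as a random-intercept logistic regression. The specification below is a sketch under that assumption: the text does not spell out the random-effects structure, and all symbols are introduced here for illustration only.

$$
\operatorname{logit}\,\Pr(Y_{ij}=1) = \beta_0 + \beta_1\,\mathrm{Home}_{ij} + \boldsymbol{\gamma}^{\top}\mathbf{p}_{ij} + \boldsymbol{\delta}^{\top}\mathbf{x}_{ij} + u_i, \qquad u_i \sim \mathcal{N}(0, \sigma_u^2)
$$

Here $Y_{ij}=1$ if tip $j$ from subject $i$ is graded Good, $\mathrm{Home}_{ij}$ indicates sample origin (at-home vs in-clinic), $\mathbf{p}_{ij}$ holds the monitoring-period indicators, $\mathbf{x}_{ij}$ holds the socio-demographic and PRO covariates, and $u_i$ is a subject-level intercept that absorbs the correlation among tips from the same subject. The reported odds ratios correspond to exponentiated coefficients, for example $\mathrm{OR}_{\mathrm{Home}} = e^{\beta_1}$. Under this formulation, a commonly used latent-scale intraclass correlation is

$$
\rho = \frac{\sigma_u^2}{\sigma_u^2 + \pi^2/3},
$$

where $\pi^2/3$ is the variance of the standard logistic distribution. The compliance model would take the same form without the $\mathrm{Home}_{ij}$ term, since it is restricted to the at-home periods.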

RESULTS

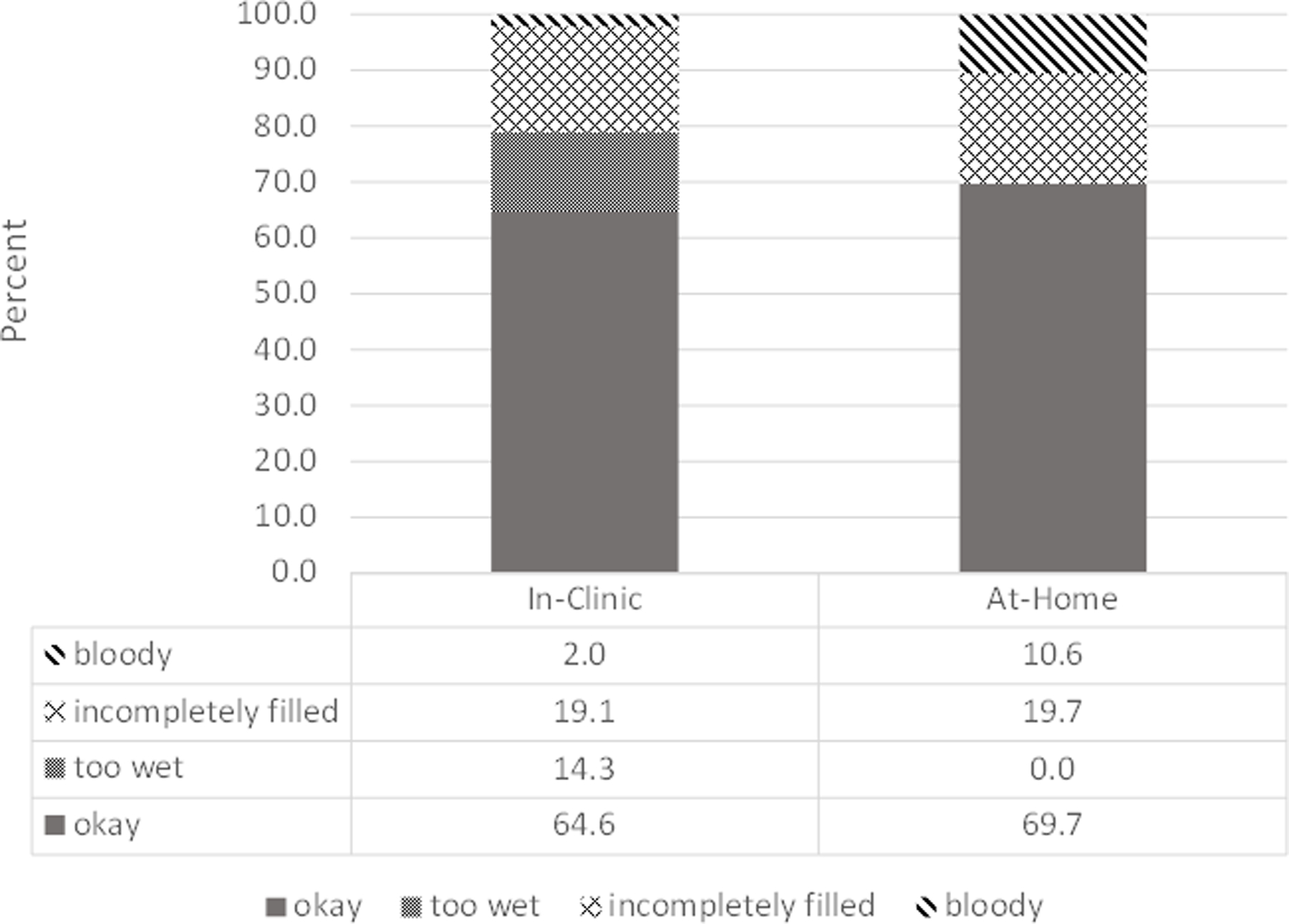

Two hundred eligible subjects entered the study, with 2 declining to submit blood micro-samples at any time. A third subject failed to respond to any PROs, yielding an analysis sample of 197 for the full study. Table 1 provides baseline demographic and clinical characteristics for the sample. Response rates for baseline (Day 0) and exit (Day 90) (both in-clinic sample periods) were significantly higher than response rates for Day 30 and Day 60 (both remote sampling dates) (95.8% vs 89.8%, respectively; p<.001). In all, 2,132 Mitra samples were collected over the course of the study. The results of quality grading are organized by sample setting and displayed in Figure 2.

Table 1.

Baseline demographic and clinical characteristics for subjects submitting at least one blood sample via the Mitra device (n=197).

| | Freq/Mean | Percent/SD |
|---|---|---|
| Age | 64.7 | 10.8 |
| Sex | | |
| Female | 79 | 40.1 |
| BMI | 28.5 | 7.0 |
| Race | | |
| American Indian and Alaska Native | 2 | 1.0 |
| Asian | 13 | 6.6 |
| Black or African American | 20 | 10.2 |
| Native Hawaiian and Other Pacific Islander | 2 | 1.0 |
| White | 146 | 74.1 |
| Other | 14 | 7.1 |
| Hispanic ethnicity | | |
| Hispanic | 22 | 11.2 |
| Marital Status | | |
| Never married | 22 | 11.2 |
| Divorced or separated/Widowed | 49 | 24.9 |
| Presently married/“Marriage like relationship” | 124 | 62.9 |
| Missing | 2 | 1.0 |
| Income | | |
| $100,000 or more | 86 | 43.6 |
| $50,000 to 99,000 | 43 | 21.8 |
| Under $50,000 | 48 | 24.5 |
| Don’t Know/Missing | 20 | 10.1 |
| PROMIS Measures | | |
| PROMIS – Physical Function | 46.1 | 8.3 |
| PROMIS – Mental Function | 50.0 | 8.3 |
| PROMIS – Depression | 48.8 | 8.0 |
| PROMIS – Anxiety | 52.5 | 8.6 |

Figure 2.

Results of quality grading organized by sample setting. Day 0 and Day 90 represent in-clinic only sampling periods, while Day 30 and Day 60 include at-home sampling periods.

When dichotomized as Good vs. Not Good sample quality, we found that 64.6% of in-clinic samples and 69.7% of samples collected remotely by participants at home received a grade of Good. A Pearson chi-square test indicated samples collected remotely by patients were rated Good at a significantly higher proportion than samples taken in-clinic by trained personnel (chi2=4.91; p=0.03).
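The reported p-value can be checked directly from the reported statistic. A comparison of two groups on a binary outcome has 1 degree of freedom, and in that case the chi-squared tail probability has a closed form in terms of the complementary error function. The sketch below uses only the Python standard library; the function name is hypothetical.

```python
import math


def chi2_pvalue_1df(chi2: float) -> float:
    """Upper-tail p-value of a chi-squared statistic with 1 degree of freedom.

    With 1 df, chi2 is the square of a standard normal deviate, so
    P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    if chi2 < 0:
        raise ValueError("chi-squared statistic must be non-negative")
    return math.erfc(math.sqrt(chi2 / 2.0))


# Statistic reported in the text for the Good vs Not Good comparison.
p_quality = chi2_pvalue_1df(4.91)
print(round(p_quality, 2))  # 0.03
```

The unrounded value is a little under 0.027, which agrees with the p=0.03 reported above.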

Of the 2,132 samples collected, 1,691 samples were collected in periods with complete data from subjects and thus included in the regression analysis. Bivariate comparisons showed that subjects not included in this analysis due to incomplete data did not comprise a significantly different population from those subjects with complete data, nor did proportions of Good/Not-Good quality differ between the two samples. The mixed effects logistic regression confirmed the difference found in unadjusted comparisons: samples taken remotely by patients had roughly 1.5 times higher odds of being good quality compared to samples taken in-clinic (p=.001; 95% CI 1.18–2.03) (Table 2). A higher anxiety score on the PROMIS Anxiety Short Form assessment was a significant predictor of lower odds of contributing a good quality sample (p=.04; 95% CI .95–1.00).
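The odds ratio, confidence interval, standard error, and p-value reported for the home-sample effect are tied together arithmetically, which allows a quick consistency check. The sketch below assumes a Wald interval that is symmetric on the log-odds scale and an OR-scale standard error obtained by the delta method (OR times the log-scale SE). These are assumptions about how the estimates were reported, not statements from the text, and the function name is hypothetical.

```python
import math


def wald_summary(odds_ratio: float, ci_low: float, ci_high: float,
                 z_crit: float = 1.959964):
    """Recover the log-scale SE, OR-scale SE (delta method), z statistic,
    and two-sided p-value from an odds ratio and its Wald 95% CI."""
    se_log = (math.log(ci_high) - math.log(ci_low)) / (2.0 * z_crit)
    z = math.log(odds_ratio) / se_log
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail
    se_or = odds_ratio * se_log             # delta-method SE on the OR scale
    return se_log, se_or, z, p


# Home-sample estimate as reported: OR 1.55, 95% CI 1.18-2.03.
se_log, se_or, z, p = wald_summary(1.55, 1.18, 2.03)
print(round(se_or, 2), round(z, 2), p)
```

Under these assumptions the recovered OR-scale standard error rounds to .21 and the p-value falls between .001 and .002. Because the inputs are themselves rounded to two decimals, this agrees with the reported p=.001 only to within rounding.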

Table 2.

Results of multi-level logistic regression clustered by patient with sample-quality of the Mitra devices (Good versus Not Good) as the dependent variable (n=197).

| | OR (95% CI) | SE | P-value |
|---|---|---|---|
| Home Sample | 1.55 (1.18–2.03) | .21 | .001 |
| Age | 1.01 (.99–1.03) | .90 | .39 |
| Sex | | | |
| Female | .88 (.57–1.36) | .20 | .57 |
| Male | Ref | | |
| BMI | 1.01 (.97–1.04) | .02 | .72 |
| Race | | | |
| African American | 1.82 (.86–3.89) | .70 | .12 |
| Asian | .46 (.21–1.02) | .19 | .06 |
| American Indian | .41 (.06–2.94) | .41 | .37 |
| Hawaiian/Pacific Islander | 2.54 (.31–20.65) | 1.72 | .38 |
| White | Ref | | |
| Income Category | | | |
| $100k+ | 1.22 (.68–2.17) | .36 | .50 |
| $50k-$100k | .64 (.34–1.19) | .20 | .16 |
| Under $50k | Ref | | |
| Don’t Know/Missing Income | 1.59 (.72–3.49) | .64 | .25 |
| PROMIS – Physical Function | .98 (.96–1.01) | .01 | .13 |
| PROMIS – Mental Function | .98 (.95–1.00) | .01 | .08 |
| PROMIS – Depression | .98 (.95–1.02) | .02 | .32 |
| PROMIS – Anxiety | .97 (.95–1.00) | .01 | .04 |
| N subjects | 197 | | |
| Observations | 1,691 | | |

For the compliance analysis examining predictors of returned vs unreturned micro-samples for at-home sampling periods, 594 samples collected across 170 subjects had complete data. Bivariate comparisons showed that subjects not included in this analysis due to incomplete data did not comprise a significantly different population from those subjects with complete data. Among subjects with complete data, Pearson chi-square tests revealed that subjects making less than $50k in annual income returned samples at higher rates than those making more than $50k (chi2=4.16; p=0.04). T-tests revealed that patients returning samples had a higher average age than patients failing to return samples (65.4 years vs 61.8 years; p=0.001). A logistic regression analysis did not reinforce these results or reveal any other potential predictors of import (Table 3). The coefficient for the variable controlling for Native Hawaiian and Other Pacific Islander racial status was statistically significant, but small cell sizes prevent meaningful interpretation.

Table 3.

Results of multi-level logistic regression clustered by patient with compliance of the Mitra device (“Returned” versus “Not Returned”) as the dependent variable (n=170). Sample periods were limited to Days 30 and Day 60, as those were the periods in which compliance was determined by the patient.

| | OR (95% CI) | SE | P-value |
|---|---|---|---|
| Age | 1.02 (.97–1.08) | .03 | .39 |
| Sex | | | |
| Female | .81 (.32–2.07) | .39 | .67 |
| Male | Ref | | |
| BMI | .97 (.91–1.03) | .03 | .28 |
| Race | | | |
| African American | 1.72 (.30–9.82) | 1.53 | .54 |
| Asian | 1.77 (.19–16.68) | 2.03 | .04 |
| American Indian | (omitted) | | |
| Hawaiian/Pacific Islander | .04 (.00–.43) | .05 | <.01 |
| White | Ref | | |
| Income Category | | | |
| $100k+ | .55 (.15–1.98) | .36 | .34 |
| $50k-$100k | .45 (.10–2.03) | .35 | .30 |
| Under $50k | Ref | | |
| Don’t Know/Missing Income | .23 (.05–1.17) | .19 | .08 |
| PROMIS – Physical Function | .98 (.93–1.04) | .03 | .59 |
| PROMIS – Mental Function | 1.02 (.94–1.10) | .04 | .64 |
| PROMIS – Depression | 1.09 (.99–1.2) | .05 | .07 |
| PROMIS – Anxiety | .94 (.87–1.02) | .04 | .16 |
| N subjects | 170 | | |
| Observations | 594 | | |

DISCUSSION

In this study, we found that blood microsamples collected remotely and returned by mail using the Mitra microsampling device, for purposes of detecting possible protein correlates to known MACE biomarkers, were successfully received 89.8% of the time. When comparing samples taken at-home to samples taken in-clinic, we found samples taken at-home had lower odds of being returned but higher odds of meeting quality standards. There were no significant demographic predictors of non-compliance, though patients with higher anxiety scores suffered decreased odds of contributing good quality samples, all else equal. This is an important finding that may signal a need to target subjects and/or patients with anxiety disorders with interventions designed to promote adherence to protocol and improve sample-taking technique.

Overall, and compared to other studies involving home-collection of bio-samples,10–13 compliance rates were high in the present study. While these high rates are encouraging, we might expect better than average compliance given the population was recruited from within a cardiac rehab center, and likely consisted of highly motivated patients primed to exhibit high adherence to protocol. Though compensation was not tied directly to the return of blood micro-samples, the presence of compensation for participation in the study might also have contributed to that end. Still, tradeoffs in the time and effort associated with traveling to a clinic vs self-sampling at home, coupled with the high usability of the device, may very well have driven high compliance independently of these other forces.

Much of the difference in quality between samples taken at-home and samples taken in-clinic consisted of an increased proportion of Not Good – Wet grades arising in the latter group. This result likely stems from the increased time-in-transit that is a feature of at-home sampling: return via mail provided for increased drying time in the remote sampling group. Future training materials and protocols for in-clinic sampling will look to address the need for increased drying times, and so this advantage of remote sampling may be mitigated in future trials.

The cost savings associated with blood micro-samples taken at home and returned by mail, though not explicitly analyzed here, are likely significant. Unlike in-clinic collection, collection with the Mitra device at home does not require trained clinical staff. While compliance rates are lower for home collection, the cost savings inherent to the method might easily be diverted to support larger sample sizes. How much larger a cohort could be supported, how frequently samples should be collected, and whether these increases would be enough to account for differences in compliance encountered in less motivated populations all merit further investigation.

CONCLUSIONS

Our study suggests that blood micro-samples taken at home and returned by mail constitute a feasible, usable, and potentially valid solution for blood-sample collection. If the validity of analyses of micro-samples achieves par with that of in-clinic samples, then remote micro-sampling may be preferred generally for studies involving blood collection. Similarly, blood collection via micro-sampling might be ideal for geographically diverse samples. The technique may lower barriers to adoption, allowing introduction of remote micro-sampling alongside other data-collection modalities. Overall, home collection via Mitra device appears to offer a high quality alternative to in-clinic sampling, potentially making it a preferred strategy for data-collection in epidemiological studies.


Funding:

This work was supported by the National Center for Advancing Translational Sciences Grant UL1TR000124 and UL1TR000064, The California State Government: California Initiative to Advance Precision Medicine, San Francisco, California, Cedars-Sinai Precision Health Grants; The Barbra Streisand Women’s Cardiovascular Research and Education Program, Cedars-Sinai Medical Center, Los Angeles, California, The Society for Women’s Health Research (SWHR), Washington, D.C., The Linda Joy Pollin Women’s Heart Health Program, and the Erika J. Glazer Women’s Heart Health Project, Cedars-Sinai Medical Center, Los Angeles, California, The Cedars-Sinai Center for Outcomes Research and Education (CS-CORE), Cedars-Sinai Medical Center, Los Angeles, California, The Division of Informatics, Cedars-Sinai Medical Center, Los Angeles, California, The Advanced Clinical Biosystems Research Institute, Cedars-Sinai Smidt Heart Institute, Cedars-Sinai Medical Center, Los Angeles, California, The National Heart, Lung, and Blood Institute R56HL135425 and K23HL127262–01A1.

REFERENCES

1. Kumar S, Nilsen WJ, Abernethy A, Atienza A, Patrick K, Pavel M, … & Hedeker D. (2013). Mobile health technology evaluation: the mHealth evidence workshop. American journal of preventive medicine, 45(2), 228–236.

2. Hobcraft J. Reflections on the incorporation of biomeasures into longitudinal social surveys: An international perspective. Biodemography and Social Biology. 2009;55(2):252–269.

3. Lindau ST, McDade TW. Minimally Invasive and Innovative Methods for Biomeasure Collection in Population-Based Research. In: National Research Council (US) Committee on Advances in Collecting and Utilizing Biological Indicators and Genetic Information in Social Science Surveys; Weinstein M, Vaupel JW, Wachter KW, editors. Biosocial Surveys. Washington (DC): National Academies Press (US); 2008. 13. Available from: https://www.ncbi.nlm.nih.gov/books/NBK62423/

4. Gatny HH, Couper MP, & Axinn WG (2013). New strategies for biosample collection in population-based social research. Social science research, 42(5), 1402–1409.

5. Rockett John C., et al. “The value of home-based collection of biospecimens in reproductive epidemiology.” Environmental Health Perspectives 112.1 (2004): 94.

6. Blood Collection Devices. https://www.neoteryx.com/micro-sampling-capillary-blood-collection-devices. Accessed December 17, 2019.

7. van den Broek I, Fu Q, Kushon S, et al. Application of volumetric absorptive microsampling for robust, high-throughput mass spectrometric quantification of circulating protein biomarkers. Clinical Mass Spectrometry. 2017;4–5:25–33.

8. van den Broek I, Fu Q, Kushon S, Kowalski MP, Millis K, Percy A, & Van Eyk JE (2017) Application of volumetric absorptive microsampling for robust, high-throughput mass spectrometric quantification of circulating biomarkers. Clinical Mass Spectrometry 4(2017):25–33.

9. Shufelt C, Dzubur E, Joung S, Fuller G, Mouapi KN, Van Den Broek I, … & Mastali M. (2019). A protocol integrating remote patient monitoring patient reported outcomes and cardiovascular biomarkers. NPJ Digital Medicine, 2(1), 1–6.

10. Sakhi AK, Bastani NE, Ellingjord-Dale M, Gundersen TE, Blomhoff R, & Ursin G. (2015). Feasibility of self-sampled dried blood spot and saliva samples sent by mail in a population-based study. BMC cancer, 15(1), 265.

11. Bhatti P, Kampa D, Alexander BH, McClure C, Ringer D, Doody MM, et al. Blood spots as an alternative to whole blood collection and the effect of a small monetary incentive to increase participation in genetic association studies. BMC Med Res Methodol. 2009;9:76.

12. Williams SR, McDade TW. The use of dried blood spot sampling in the national social life, health, and aging project. J Gerontol B Psychol Sci Soc Sci. 2009;64:I131–6.

13. Hansen Thomas V. O., et al. Collection of blood, saliva, and buccal cell samples in a pilot study on the Danish nurse cohort: comparison of the response rate and quality of genomic DNA. Cancer Epidemiology and Prevention Biomarkers 16.10 (2007): 2072–2076.
