Abstract

The ability to rapidly process others’ emotional signals is crucial for adaptive social interactions. However, to date it is still unclear how observing emotional facial expressions affects the reactivity of the human motor cortex. To provide insight into this issue, we employed single-pulse transcranial magnetic stimulation (TMS) to investigate corticospinal motor excitability. Healthy participants observed pictures of happy, fearful and neutral facial expressions while receiving TMS over the left or right motor cortex at 150 and 300 ms after picture onset. In the early phase (150 ms), we observed an enhancement of corticospinal excitability for the observation of happy and fearful emotional faces compared to neutral expressions specifically in the right hemisphere. Interindividual differences in the disposition to experience aversive feelings (personal distress) in interpersonal emotional contexts predicted the early increase in corticospinal excitability for emotional faces. No differences in corticospinal excitability were observed at the later time (300 ms) or in the left M1. These findings support the notion that emotion perception primes the body for action and highlight the role of the right hemisphere in implementing a rapid and transient facilitatory response to emotionally arousing stimuli, such as emotional facial expressions.

Keywords: emotional facial expressions, transcranial magnetic stimulation, motor evoked potentials, early motor reactions, empathic traits

1. Introduction

Emotional facial expressions are a gold mine of social information, and the ability to accurately perceive and respond to them is crucial to social success. Humans show remarkable abilities to identify and judge others’ expressions even in difficult and ambiguous conditions, e.g., when faces are presented for less than one tenth of a second [1,2,3,4]. Meta-analyses addressing the neural bases of facial perception have shown that the processing of emotional faces is associated with increased activation in a number of visual, limbic, temporoparietal and prefrontal areas, as well as in several motor structures [5,6,7]. Modulations of these areas occur rapidly, as shown by several electroencephalography studies [8,9], in keeping with the notion that emotional signals rapidly engage neural resources to efficiently process and react to stimuli relevant for survival.

Scholars have commonly proposed that activation in motor areas during perception of emotional faces could reflect either the activation of motor resonance processes—which would support covert/overt mirroring of the observed expression in the observer [10,11,12]—and/or the activation of motor programs to implement adaptive motor responses (e.g., orienting/freezing or fight/flight responses) [13,14,15]. However, because imaging and electrocortical techniques suffer respectively from relatively low temporal and spatial resolution, and both methods can hardly distinguish between excitatory and inhibitory processes, previous works using these approaches were unable to establish the functional meaning of motor activations during observation of the emotional faces.

Transcranial magnetic stimulation (TMS) is a valuable method to investigate the temporal dynamics of the motor system during perception of emotional faces, via stimulation of the primary motor cortex (M1) and the consequent induction of motor-evoked potentials (MEPs) in target muscles. The amplitudes of TMS-induced MEPs provide an instantaneous readout of the excitability of the corticospinal system, allowing researchers to probe distinct motor representations with high temporal resolution and, importantly, to distinguish between excitatory (MEP increase) and inhibitory (MEP decrease) motor processes.

However, to date, TMS has been mostly used to investigate the involvement of the motor system in processing emotional signals during observation of complex emotional scenes [16,17,18,19,20,21] or emotional body postures [21,22,23,24,25,26,27,28,29], rather than observation of emotional faces (see below). A review of this work is nevertheless useful to understand the relation between emotional signals and motor processes across different classes of stimuli. Studies using complex natural scenes have typically selected stimuli from the International Affective Picture System (IAPS) and reported increased MEPs when participants were presented with both pleasant and unpleasant scenes [16,17,18]. A few studies using the same set of IAPS stimuli reported higher facilitation for unpleasant scenes, although this effect was probably due to the higher arousal of these scenes [19,20,21]. These facilitatory modulations have been commonly interpreted in terms of increased motor readiness to relevant arousing stimuli, reflecting preparation of adaptive motor responses [13,14,15]. Notably, in most of these studies, motor excitability was tested in a relatively late time window (i.e., at >300 ms after stimulus onset; but see [21]), when the magnitude of brain response to emotional scenes is typically similar for positive and negative stimuli and likely reflects enhanced resource allocation to motivationally relevant cues [30,31,32]. Only one study, by Borgomaneri et al. [21], explored an earlier time point (i.e., 150 ms) and found increased excitability in the left M1 for negative scenes associated with higher arousal.

One potential issue in these previous studies is that emotional scenes very often conveyed emotional meanings through the facial expressions of the depicted actors, suggesting that enhanced motor readiness could be triggered by emotional faces alone rather than (or in addition to) emotional contextual cues. Even more critically, emotional scenes often showed not only the faces but also the actors’ dynamic motor actions, whereas neutral scenes typically involved neutral contexts with no humans, raising the possible concern that increased motor excitability for emotional scenes could be due to the effect of observing human actions, i.e., motor resonance (see discussion in [22]), rather than any emotion-related neural modulations.

While studies testing natural scenes have reported increased motor excitability for emotional scenes, research investigating MEP response to the observation of emotional body expressions has commonly reported reduced motor excitability for emotional bodies [23,24,25,26,29]. These studies have used sets of validated pictures showing body postures in isolation with no facial or contextual cues, while participants were asked to actively recognize fearful and happy body postures (with comparable arousal), emotionally neutral dynamic body postures (with implied motion comparable to emotional postures) and neutral static postures. Notably, these studies explored early time windows and reported that seeing emotional bodies reduced motor excitability quite early in time (i.e., at 70–150 ms from picture onset) when the right M1 was targeted [23,24,25,26], with a tendency for fearful bodies to induce the earliest responses [25].

The early suppression of motor output has been interpreted as reflecting an orienting/freezing mechanism supporting the monitoring of relevant emotional signals [23,24,25,26,29]. However, it remains unclear whether early orienting is specific to emotional bodies, as a study using IAPS found no MEP reduction at 150 ms for emotional scenes [21]. At a later point (i.e., at 300 ms), two studies reported motor facilitation for dynamic expressions compared to static body postures, no matter whether the dynamic expressions were emotional or neutral, suggesting that these responses reflected a motor mapping of the observed action (i.e., motor resonance) rather than emotion-related modulations [22,23].

In sum, the literature addressing MEPs during perception of emotional scenes and body postures generally supports the notion that the observers’ motor system could reflect both emotion-related adaptive responses to arousing stimuli (increased readiness, or orienting/freezing) and motor resonance. However, does the perception of emotional faces induce similar processes in the observers’ motor system? Despite the relevance of emotional facial expressions in our daily life, to our knowledge only two studies have investigated corticospinal excitability during the observation of emotional faces [33,34]. In a first study, Schutter and colleagues recorded MEPs by stimulating the left M1 at 300 ms from the presentation of pictures of happy, fearful and neutral facial expressions during passive viewing. Results showed an increase in MEP amplitudes to fearful facial expressions compared to happy and neutral expressions [33], although the study did not check whether fearful expressions elicited higher arousal in the observers. Another TMS study, which focused on left M1 corticohypoglossal excitability (i.e., with MEPs recorded from the tongue), showed no consistent modulation of left M1 corticospinal excitability (i.e., with MEPs recorded from a forearm muscle) tested in a late time window (1100–1400 ms from picture presentation) during the observation of neutral facial expressions and arousal-matched happy and disgusted expressions [34]. No other studies have investigated how emotional faces affect motor excitability at early vs. later time points and to what extent the two hemispheres are engaged.

Thus, the novel goal of the present work is to investigate the time course of the motor system involvement in processing emotional facial expressions and to explore the different functional modulations of M1 in the two hemispheres. MEPs to single-pulse TMS of M1 were recorded from hand muscles during presentation of pictures of happy, fearful and neutral facial expressions during an active recognition task. In two sessions, we probed corticospinal excitability of the two hemispheres by targeting both the left M1 and the right M1 during the task. To investigate both early and late modulations of corticospinal excitability [21,23], we stimulated M1 at both 150 and 300 ms after stimuli presentation. We selected visual stimuli from a validated database [35] and assessed the valence and arousal of the stimuli as well as the subjective perception of motion implied in the picture. In this way, we could check whether any differential modulation for positive and negative stimuli could be merely due to higher arousal or implied motion rather than valence.

Our study allowed us to test alternative predictions derived from the literature. First, it allowed us to clarify the facilitatory/inhibitory nature of earlier and later motor responses to emotional faces. If early and later responses to emotional faces reflect enhanced motor readiness to arousing stimuli [16,17,18,19,20,21], we predicted larger MEPs at 150 ms and 300 ms for fearful and happy facial expressions and no difference between the two types of pictures, as we selected stimuli with comparable potential for arousal. Investigating MEPs at 150 ms allowed us to test the alternative hypothesis of reduced motor reactivity to emotional faces at this earlier timing, which could reflect an early orienting/freezing response to emotional signals, as suggested by research on emotional bodies [23]. In a similar vein, if MEPs at 300 ms mainly reflect motor resonance rather than any emotion-related processes [22,23], we expected changes in motor excitability only at 150 ms and no motor modulation at 300 ms, as in the present study we recorded MEPs from the hand, not from the face, and motor resonance effects at this timing are muscle specific [36,37,38].

Notably, by targeting both the left and right M1, our design also allowed us to provide further insights on the processes underlying early MEP changes. According to the classical hypothesis of right hemisphere dominance [39,40,41,42], we predicted larger emotion-related effects over the right M1, as the right hemisphere is more involved in processing arousing stimuli. On the other hand, according to a purely “motor” hypothesis, larger effects could be expected over the left M1, controlling the right hand, as adaptive motor reactions could in principle engage the dominant hand to a greater extent [21,23]. Although not supported by previous MEP studies, our design also allowed us to test the valence-specific [40,43,44] or the motivation-specific hypothesis [43,45,46], according to which the right M1 and left M1 would be more sensitive to fearful and happy emotions, respectively.

Finally, emotional arousing social stimuli may also trigger empathy-related processing or personal distress [21,23,47,48,49] and indeed, studies have shown that motor reactivity during social perception can be predicted by stable empathy or personal distress dispositions [21,23,26,29,50,51,52,53]. Thus, an additional novel goal of the study is to test whether interindividual differences in empathy and personal distress dispositions predict the magnitude of motor response to facial expressions.

2. Materials and Methods

2.1. Participants

Twelve healthy subjects took part in the study (6 men, mean age ± S.D.: 23.5 y ± 0.7). All participants were right-handed according to a standard handedness inventory [54], had normal or corrected-to-normal visual acuity and were free from any contraindication to TMS [55]. They gave their written informed consent to take part in the study, which was approved by the Bioethical committee at the University of Bologna and was carried out in accordance with the ethical standards of the 1964 Declaration of Helsinki. No discomfort or adverse effects during TMS were reported or noticed.

2.2. Visual Stimuli and Pilot Experiments

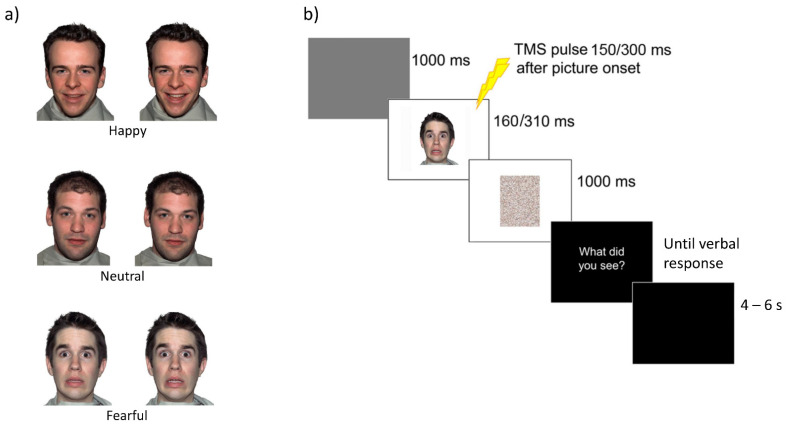

Visual stimuli consisted of 54 face pictures (1000 × 1500 pixels) taken from the Nimstim database [35], depicting five different male actors showing emotional facial expressions (happy and fearful) or without any expression (neutral) (Figure 1a). A total of 18 neutral, 18 fearful and 18 happy images of facial expressions were presented on a 19-inch screen located about 80 cm away from the participant.

Figure 1.

(a) Examples of visual stimuli. (b) Trial sequence.

In order to increase our pool of stimuli and to create faces showing different degrees of happiness or fear (with matched intensity and recognition accuracy), we morphed the expressions of the five actors and generated a sample of 150 stimuli using the Fantamorph software (Abrosoft, PR, Italy; https://www.fantamorph.com (accessed on 1 March 2010)). The software allowed us to morph pairs of pictures of each actor and create transitions from neutral to emotional faces. For each model, two experimenters initially selected transitions near the two extremes (e.g., neutral and happy expressions or neutral and fearful expressions), so as to start from a pool of pictures showing slightly different, but not ambiguous, facial expressions. These 150 stimuli were initially presented to a sample of 15 participants (2 males, mean age 25.1 y) who were asked to judge the intensity of happiness and fearfulness conveyed by each face using a 9-point Likert-like scale (from 1 = no emotion/neutral to 9 = maximal emotional intensity). Based on the participants’ evaluation, we selected 105 stimuli where fearful and happy expressions were matched for emotional intensity. These 105 stimuli were entered in a second validation experiment, in which 24 participants (10 males, mean age 24.9 y) were requested to categorize the facial expression (3 forced choices: happy, fearful and neutral). Based on this further test, we selected the final sample of 54 pictures (18 for each expression) to be used in the TMS experiment. The selected pictures were accurately recognized with comparable percentages of correct responses (happy faces: 91%; neutral faces: 92%; fearful faces: 92%) and congruent ratings of happiness (happy faces: 5.66; neutral faces: 1.74; fearful faces: 1.17) and fear (happy faces: 1.06; neutral faces: 1.46; fearful faces: 6.21).

2.3. Transcranial Magnetic Stimulation and Electromyography Recording

MEPs were collected in two separate sessions testing motor excitability of the right (M1right session) and left M1 (M1left session). Both sessions started with the electrode montage, detection of optimal scalp position and measurement of resting motor threshold. To explore motor excitability, single TMS pulses were delivered over the right and left M1, and MEPs were recorded from the first dorsal interosseous (FDI) muscles, contralateral and ipsilateral to the stimulated hemisphere, with a Biopac MP-35 (BIOPAC Systems Inc., Goleta, CA, USA) electromyography (EMG) system. EMG signals were band-pass filtered (30–500 Hz), sampled at 5 kHz, digitized and stored on a computer for off-line analysis. Pairs of silver-chloride surface electrodes were placed in a belly-tendon montage with ground electrodes on the wrist.

MEPs were induced using a Magstim Rapid2 stimulator (Magstim, Whitland, Dyfed, UK) connected to a figure-of-eight coil (70 mm diameter; peak magnetic field 2.2 Tesla). The intersection of the coil was placed tangentially to the scalp with the handle pointing backward and laterally at a 45° angle from the midline, to induce a posterior-anterior current flow approximately perpendicular to the line of the central sulcus. Detection of optimal scalp position and resting motor threshold (rMT) was performed as follows. Optimal scalp position was identified by using a slightly suprathreshold stimulus intensity. The coil was moved over the target hemisphere to determine the optimal position from which maximal amplitude MEPs were elicited in the contralateral FDI muscle. In the M1right and M1left experiments, the intensity of magnetic pulses was set at 125% of the rMT, which was defined as the minimal intensity of stimulator output that produces 5 MEPs with an amplitude of at least 50 μV in the relaxed muscle in a series of 10 stimuli [56]. Mean stimulation intensities (mean % of maximal stimulator output ± S.D.) were similar for M1right (71.8% ± 6.2) and M1left (70.3% ± 7.6; Wilcoxon matched pairs test: Z = 1.78, p = 0.09).

The absence of voluntary contraction was visually verified continuously throughout the experiments in both the left and right FDI simultaneously. When muscle tension was detected in one of the two muscles, the experiment was briefly interrupted and the subject was invited to relax.

2.4. Procedure and Experimental Design

The experiment was programmed using Matlab software to control picture presentation and to trigger TMS pulses. In both sessions (M1right and M1left stimulation) MEPs were collected in four blocks. The first and the last blocks served as baseline: for each block 10 MEPs were recorded with an inter-pulse interval of 10 s while subjects kept their eyes closed with the instruction to imagine watching a sunset at the beach [22,57,58]. This number of trials (n = 10) provides stable MEP measurement [59]. In the other two blocks, consisting of 54 trials each, subjects were presented with the face pictures and were asked to categorize them as either a happy, fearful or neutral facial expression. Each trial started with a gray screen (1 s duration), followed by the test picture projected at the center of the screen (Figure 1b). In half the trials, stimuli were presented for 160 ms and TMS was delivered at 150 ms from stimulus onset. In the remaining trials, stimuli were presented for 310 ms and TMS was delivered at 300 ms from stimulus onset. The minimal asynchrony between the TMS pulse and picture offset (i.e., 10 ms) ensured that pictures were still on the screen when M1 was stimulated. The duration of the test stimuli was randomly distributed in the blocks. For each session (M1right, M1left), stimulus exposure (150 ms, 300 ms) and condition (happy, fearful and neutral facial expressions), we collected 18 MEPs. This number of trials provides stable MEP measurement [59] and it is well in keeping with prior TMS work on emotional faces [33,34], bodies [22,23,24,25,26,29] and scenes [16,18,19].
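The trial counts follow from the design: 3 expressions × 18 pictures × 2 TMS delays give 108 trials per session, i.e., two blocks of 54 trials and 18 MEPs per cell. A minimal Python sketch of such a trial list is given below. It assumes that each picture is shown once at each delay within a session and that randomization is a plain shuffle over the whole session; both are illustrative assumptions, and all names are hypothetical (the original experiment was programmed in Matlab).

```python
import random
from collections import Counter

EXPRESSIONS = ("happy", "fearful", "neutral")
TMS_DELAYS_MS = (150, 300)
PICTURES_PER_EXPRESSION = 18
OFFSET_AFTER_TMS_MS = 10  # the picture stays on screen 10 ms past the pulse


def build_session_trials(seed=None):
    """Return two blocks of 54 trials for one stimulation session."""
    rng = random.Random(seed)
    trials = []
    for expression in EXPRESSIONS:
        for picture_id in range(PICTURES_PER_EXPRESSION):
            for delay in TMS_DELAYS_MS:
                trials.append({
                    "expression": expression,
                    "picture_id": picture_id,
                    "tms_delay_ms": delay,
                    "picture_duration_ms": delay + OFFSET_AFTER_TMS_MS,
                })
    rng.shuffle(trials)  # assumption: simple shuffle, no per-block balancing
    half = len(trials) // 2
    return trials[:half], trials[half:]


block_1, block_2 = build_session_trials(seed=1)
cells = Counter((t["expression"], t["tms_delay_ms"]) for t in block_1 + block_2)
print(len(block_1), len(block_2), set(cells.values()))
```

A shuffle over the whole session does not guarantee that the two delays are evenly split within each block; a stricter scheme would shuffle within balanced blocks.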

After the picture presentation, a random-dot mask (obtained by scrambling the corresponding sample stimulus by means of a custom-made image segmentation software) appeared for 1 s. Then the question “What did you see?” was presented on the screen, and the subject had to provide a verbal response (forced choice). Possible choices were happy, fearful or neutral. An experimenter collected the answer by pressing a computer key. To avoid changes in excitability due to the verbal response [60,61], participants were invited to answer only during the question screen, a few seconds after the TMS pulse [58]. After the response, the screen appeared black for 4–6 s, ensuring an inter-pulse interval greater than 10 s and thereby avoiding changes in motor excitability due to TMS per se [62]. This was directly confirmed by the lack of changes in FDI MEP amplitudes between the first and the last baseline blocks in both the M1right (mean ± S.D. = 1.08 mV ± 0.75 vs. 1.14 mV ± 0.46; Wilcoxon test: Z = 0.15, p = 0.88) and the M1left sessions (1.51 mV ± 0.89 vs. 1.63 mV ± 0.66; Wilcoxon test: Z = 1.02, p = 0.31). However, the two averaged baseline values differed between the two sessions, with larger MEPs in the M1left session than in the M1right session (M1left: 1.57 mV ± 0.75 vs. M1right: 1.11 mV ± 0.53; Wilcoxon test: Z = 2.35, p = 0.019).

To reduce the initial transient-state increase in motor excitability, before each block two magnetic pulses were delivered over the targeted M1 (inter-pulse interval >10 s). Each block lasted about 10 min. The order of the stimulated hemisphere was counterbalanced across participants.

2.5. Subjective Measures

After TMS, subjects were presented with all the stimuli (shown in a randomized order) and asked to evaluate arousal, valence and perceived movement using a 10 cm visual analogue scale (VAS). Each rating was collected separately during successive presentation of the whole set of stimuli, in order to minimize artificial correlations between the different judgments. Afterwards, to assess empathy and personal distress dispositions, participants were asked to fill out the interpersonal reactivity index (IRI) [63], a 28-item self-report survey consisting of four subscales, namely perspective taking (PT, which assesses the tendency to spontaneously imagine and assume the cognitive perspective of another person), fantasy scale (FS, which assesses the tendency to project oneself into the place of fictional characters in books and movies), empathic concern (EC, which assesses the tendency to feel sympathy and compassion for others in need) and personal distress (PD, which assesses the extent to which an individual feels distress in emotionally distressing interpersonal contexts). PT and FS allow the evaluation of cognitive components of empathy, while EC and PD correspond to the notions of other-oriented empathy reaction and self-oriented emotional distress, respectively [63].

2.6. Data Analysis

Neurophysiological and behavioral data were processed off-line. Mean MEP amplitudes in each condition were measured peak-to-peak (in mV). MEPs associated with incorrect answers were excluded from the analysis (less than 6% in both sessions). It is well established that background EMG affects motor excitability [64]; to minimize this issue, we computed the mean rectified EMG signal across a 100-ms interval preceding the TMS artifact and discarded MEPs with preceding mean EMG signal deviating from the mean of the distribution of the relevant condition by more than 2 S.D. (less than 6%). This allowed us to remove motor activations that could affect MEP amplitudes [64]. In none of the participants did the TMS artifact affect measurement of EMG background or MEP amplitudes. Mean accuracy in both experiments was high (right M1: mean 94.2% ± 5%; left M1: mean 95.4% ± 4.1%) and comparable across the two sessions (Wilcoxon test: Z = 0.98; p = 0.33).
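The two preprocessing steps described above (peak-to-peak measurement and rejection of trials with deviant background EMG) can be sketched in a few lines of Python. This is an illustration under stated assumptions: the sample standard deviation is used (the text does not say whether sample or population S.D. was applied), and the function names and example values are hypothetical. At the 5 kHz sampling rate reported above, the 100-ms pre-pulse window corresponds to 500 samples.

```python
from statistics import mean, stdev


def peak_to_peak(mep_window_mv):
    """Peak-to-peak MEP amplitude (mV) within the post-pulse window."""
    return max(mep_window_mv) - min(mep_window_mv)


def background_emg(pre_pulse_mv):
    """Mean rectified EMG over the samples preceding the TMS artifact."""
    return mean([abs(x) for x in pre_pulse_mv])


def trials_to_keep(background_levels, n_sd=2.0):
    """Indices of trials whose background EMG lies within n_sd S.D.
    of the mean of the condition (assumption: sample S.D.)."""
    m = mean(background_levels)
    sd = stdev(background_levels)
    return [i for i, b in enumerate(background_levels)
            if abs(b - m) <= n_sd * sd]


# Hypothetical background levels (mV) for ten trials of one condition;
# the last trial shows clear pre-activation.
levels = [0.01] * 9 + [0.10]
print(trials_to_keep(levels))
```

Applying the rule separately within each condition, as described in the text, keeps the rejection criterion from being driven by differences between conditions.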

Due to the small sample size of the study, the analysis on MEPs was carried out by nonparametric Friedman ANOVA, with Condition (right-150-happy, right-150-neutral, right-150-fearful, right-300-happy, right-300-neutral, right-300-fearful, left-150-happy, left-150-neutral, left-150-fearful, left-300-happy, left-300-neutral, left-300-fearful) as the within-subjects factor. Further Friedman ANOVAs and Wilcoxon matched pairs tests were carried out to detect the source of significant modulations. Nonparametric effect sizes (r) based on Wilcoxon tests were computed following the recommendation of Rosenthal [65]. By convention, r effect sizes of 0.1, 0.3, and 0.5 are considered small, medium, and large, respectively.
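For reference, the effect size r for a Wilcoxon test is commonly obtained as r = Z/√N. The sketch below assumes that N is the number of participants (12 in this study); with that assumption, a Z of 2.04 yields an r of about 0.59. The function names are illustrative.

```python
import math


def wilcoxon_effect_size(z, n):
    """Effect size r = |Z| / sqrt(N).

    Assumption: N is the number of participants (paired observations).
    """
    return abs(z) / math.sqrt(n)


def effect_size_label(r):
    """Conventional labels: 0.1 small, 0.3 medium, 0.5 large."""
    if r >= 0.5:
        return "large"
    if r >= 0.3:
        return "medium"
    if r >= 0.1:
        return "small"
    return "negligible"


r = wilcoxon_effect_size(2.04, 12)
print(round(r, 2), effect_size_label(r))
```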

Mean VAS ratings for arousal, valence and perceived movement induced by the different images were analyzed by means of three different Friedman ANOVAs with ‘type of expression’ as within-factor (happy, fearful and neutral) and Wilcoxon matched pairs tests for follow-up analyses.
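As an illustration of the test used for the ratings, the Friedman statistic ranks the three expressions within each participant and compares the rank sums across conditions. The Python sketch below is a simplified version: ties receive average ranks but no tie correction is applied, and the p-value helper is valid only for three conditions (2 degrees of freedom), where the chi-square survival function reduces to exp(−χ²/2). The data are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks from 1..k, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def friedman_chi2(rows):
    """Friedman chi-square for rows = participants, columns = conditions
    (no tie correction in this sketch)."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)


def p_value_df2(chi2):
    """Chi-square upper-tail probability for exactly 2 degrees of freedom."""
    return math.exp(-chi2 / 2.0)


# Hypothetical ratings: 12 participants who all order the conditions the same way.
ratings = [[1.5, 5.0, 6.5]] * 12
chi2 = friedman_chi2(ratings)
print(chi2, p_value_df2(chi2))
```

With 12 participants and 3 conditions the statistic cannot exceed n(k − 1) = 24, which is reached when every participant produces the same ordering, as in the example.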

Friedman ANOVA on MEPs recorded when the TMS pulse was delivered over the right M1 at 150 ms from picture onset showed an increase in motor excitability for the faces expressing emotion compared to the neutral faces. To test whether this effect was related to individual differences in cognitive and emotional empathy, an index of the early and lateralized motor modulation was computed (mean MEP amplitude on right M1 at 150 ms for happy and fearful expressions minus the mean MEP amplitude on right M1 at 150 ms for neutral expressions) and was entered as the dependent variable in separate Spearman correlation analyses, with individual questionnaire scores from the IRI subscales entered as predictors.
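The index and its rank correlation with a questionnaire score can be sketched as follows. The Spearman coefficient is computed here as the Pearson correlation of average ranks; the helper names and the per-participant numbers are hypothetical and serve only to show the computation, not to reproduce the study data.

```python
from statistics import mean


def early_index(happy_mv, fearful_mv, neutral_mv):
    """Mean of happy and fearful MEP amplitudes minus the neutral amplitude
    (right M1, 150 ms)."""
    return (happy_mv + fearful_mv) / 2.0 - neutral_mv


def average_ranks(values):
    """Ranks from 1..n, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-participant values: (happy, fearful, neutral) in mV and PD scores.
meps = [(1.6, 1.5, 1.4), (1.2, 1.3, 1.2), (2.0, 2.1, 1.7), (0.9, 0.9, 1.0)]
pd_scores = [14, 10, 19, 8]
index = [early_index(h, f, n) for h, f, n in meps]
print(spearman(index, pd_scores))
```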

3. Results

3.1. Subjective Measures

The Friedman ANOVA carried out on valence ratings was significant (χ2 = 18.50, p < 0.001; Table 1). Wilcoxon tests showed that valence ratings were lower for fearful (2.23 ± 1.34) compared to happy (7.02 ± 1.51) and neutral (4.76 ± 0.62) facial expressions (all Z ≥ 3.06, all p < 0.01); moreover, valence ratings were higher for happy compared to neutral expressions (Z = 2.67, p < 0.01).

Table 1.

Mean ± S.D. of arousal, valence and implied motion ratings of the stimuli.

| | Happy Expression | Fearful Expression | Neutral Expression |
|---|---|---|---|
| Valence | 7.02 ± 1.51 | 2.23 ± 1.34 | 4.76 ± 0.62 |
| Arousal | 5.35 ± 1.72 | 6.45 ± 1.93 | 1.50 ± 1.08 |
| Implied motion | 6.37 ± 1.53 | 6.72 ± 1.55 | 1.03 ± 0.71 |

The Friedman ANOVA on arousal ratings was significant (χ2 = 18.17, p < 0.001; Table 1); Wilcoxon tests showed higher scores for happy (5.35 ± 1.72) and fearful (6.45 ± 1.93) compared to neutral facial expressions (1.50 ± 1.08; all Z ≥ 3.06, all p < 0.01). Moreover, arousal ratings were not significantly different between fearful and happy expressions (Z = 1.41, p = 0.16).

Finally, the Friedman ANOVA conducted on implied motion ratings was also significant (χ2 = 18.67, p < 0.0001; Table 1); Wilcoxon tests showed higher scores for happy (6.37 ± 1.53) and fearful (6.72 ± 1.55) compared to neutral (1.03 ± 0.71) facial expressions (all Z ≥ 3.06, all p < 0.01); moreover, implied motion scores were comparable between happy and fearful expressions (Z = 1.25, p = 0.21).

3.2. Neurophysiological Data

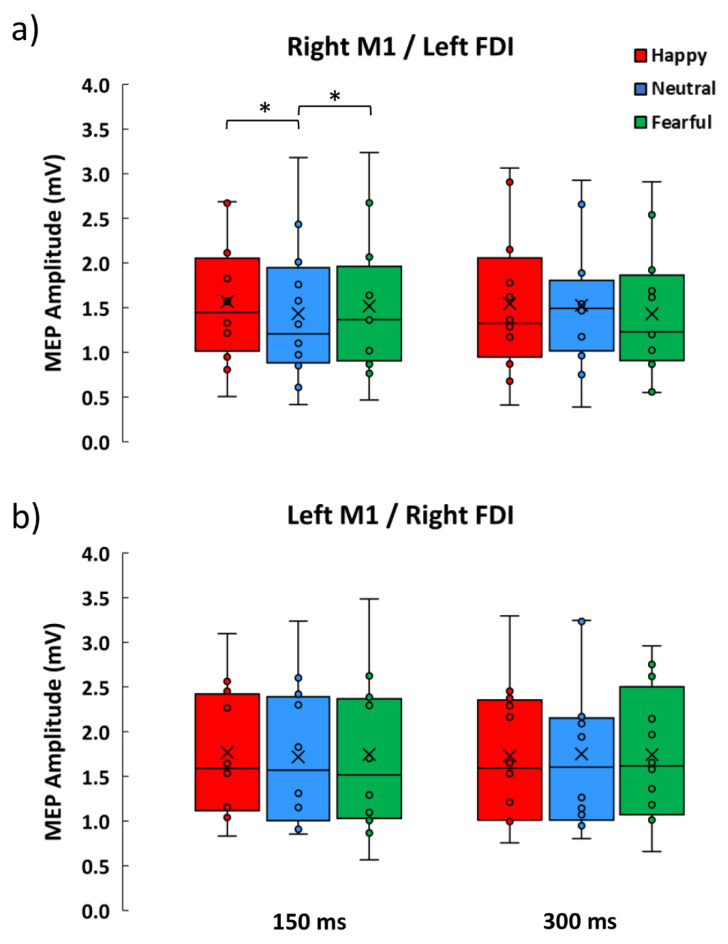

The Friedman ANOVA conducted on MEP amplitudes was significant (χ2 = 21.76, p = 0.026; Figure 2). To explore these findings, we conducted two separate analyses, one for each stimulation session. The Friedman ANOVA on data from the M1left session was not significant (χ2 = 3.52, p = 0.62; Figure 2b), whereas the Friedman ANOVA on data from the M1right session was significant (χ2 = 12.43, p = 0.029; Figure 2a), showing consistent modulations across early time conditions (150 ms: χ2 = 8.67, p = 0.013), but not at later time conditions (300 ms; χ2 = 3.5, p = 0.17). Wilcoxon matched pairs tests showed that MEPs induced by right M1 stimulation at early timing were greater in the happy (1.57 mV ± 0.69) and fearful (1.52 mV ± 0.81) conditions compared to the neutral condition (1.43 mV ± 0.80; all Z ≥ 2.04, all p ≤ 0.04, all effect size r ≥ 0.59); moreover, happy and fearful conditions were statistically comparable (Z = 1.02, p = 0.31). MEPs across the six experimental conditions of the M1right session were larger than the corresponding baseline MEPs (all Z ≥ 2.35, all p ≤ 0.019, all effect size r ≥ 0.61). In contrast, no amplitude difference was observed between the active conditions of the M1left session and the corresponding baseline (all Z ≤ 2.35, all p ≥ 0.08).

Figure 2.

Boxplots showing MEP amplitudes recorded during the presentation of happy, neutral and fearful facial expressions at 150 and 300 ms from the stimulus onset. (a) Data from the right M1 experiment showing an early increase of MEPs for emotional facial expressions. (b) Data from the left M1 showing no MEP modulation. Asterisks denote significant Wilcoxon comparisons (p < 0.05).

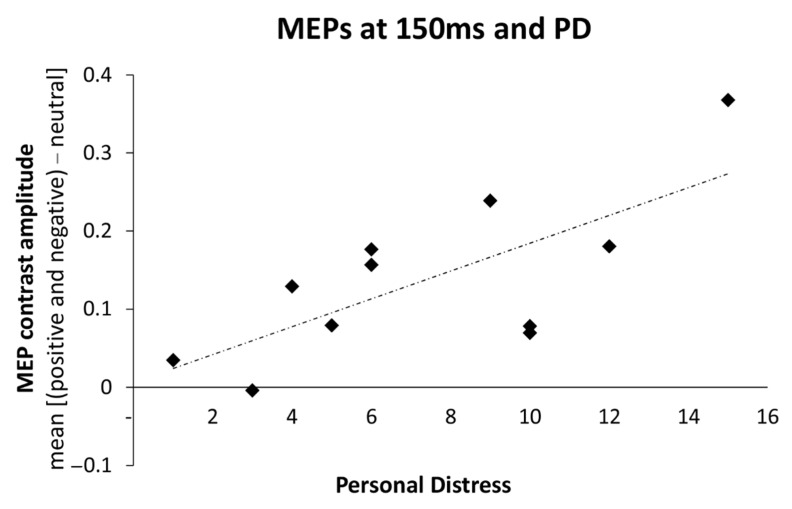

3.3. Relations between Changes in Motor Excitability and Dispositional Empathy

To test whether individual dispositions in empathy correlate with the physiological changes induced by the processing of emotional facial expressions, Spearman’s rho correlations were used to assess the relationship between the index of early MEP modulation for emotional faces (i.e., the increase of MEPs induced by right M1 stimulation collected in the 150 ms condition for emotional faces compared to the neutral face) and the scores on the IRI subscales. Initially, there were no significant correlations between the scores on the IRI subscales and the MEP index (0.01 ≤ Spearman r ≤ 0.48; all p ≥ 0.11). However, after the removal of a statistical outlier with a standardized residual greater than two standard deviations, the magnitude of motor facilitation for emotional faces positively correlated with the personal distress (PD) subscale (Spearman r = 0.63; p = 0.037; Figure 3), suggesting that participants with higher PD scores show a greater MEP increase for emotional faces. Other correlations remained non-significant even after the removal of the outlier (–0.02 ≤ Spearman r ≤ 0.40; all p ≥ 0.22).

Figure 3.

Simple correlation between MEP contrasts at 150 ms (amplitude during happy and fearful facial expressions minus neutral conditions) and the personal distress subscale of the Interpersonal Reactivity Index.

4. Discussion

Decoding and rapidly reacting to emotional facial expressions represents a fundamental human ability for effective social interactions. Due to the crucial importance of reacting to emotional facial expressions, it is reasonable to expect these stimuli to affect the motor system of an observer quite early in time. However, previous TMS investigations failed to test early reactivity to emotional faces. To fill this gap, we tested this hypothesis by using single-pulse TMS to monitor early (at 150 ms) and later (at 300 ms) modulations in corticospinal excitability of the right and the left M1, while participants actively categorized emotional (happy or fearful) and neutral facial expressions.

Our results show that in general, the emotion recognition task enhanced motor excitability in the right M1 compared to baseline values, whereas no similar increase was observed for the left M1, suggesting that the emotion recognition task engaged the right M1 to a greater extent than the left M1, across all conditions. Even more importantly, during the emotion recognition task, we observed an early motor facilitation for emotional faces that was selective to the right M1: when TMS was administered at 150 ms from picture presentation, left FDI MEPs during the observation of happy and fearful faces were greater than left FDI MEPs during the observation of neutral faces. No difference was found between happy and fearful face stimuli, and subjective ratings ensured that these two classes of stimuli had different valence but comparable arousal and implied motion. No similar modulations were observed at the later time (300 ms) in the left FDI or in the right FDI, indicating no changes in corticospinal excitability in these conditions. Moreover, we found that the interindividual differences in the disposition to experience aversive feelings in interpersonal emotional contexts (i.e., personal distress, as tapped by the IRI’s PD scores) predicted interindividual differences in the magnitude of early right M1 facilitation for emotional faces. These findings expand on previous research by demonstrating an early and transient facilitatory corticospinal response to emotional faces within the right M1 and showing that this response is larger in participants with greater interpersonal anxiety-related personality traits.

Our findings suggest that corticospinal excitability in the right M1 is sensitive to facial emotional expressions during an active recognition task, whereas the left M1 showed no such sensitivity. Traditionally, two main theories have linked emotion perception to the issue of hemispheric laterality. According to one view, the right hemisphere has a pivotal role in processing all emotions, whereas other views, usually known as the valence-specific hypothesis [40,43,44] or the related motivation-specific hypothesis [43,45,46], suggest that the right and the left hemispheres are relatively specialized in processing different types of emotions (i.e., negatively and positively valenced emotions, or emotion based on withdrawal or approach motivation). By demonstrating that the observation of both happy and fearful facial expressions modulates the motor excitability in the right hemisphere only, our results appear more in line with the right hemisphere dominance hypothesis than with the valence- or motivation-specific hypotheses. Moreover, we found no support for a purely motor hypothesis according to which arousing stimuli would prime the dominant (left) hand for action.

The theory of right hemisphere dominance in the processing of emotion [39,40,41,42] was originally supported by several clinical as well as experimental findings, and it is still supported by recent evidence (for a review see [66]). However, meta-analytic neuroimaging work [5,7] indicates that both hemispheres are engaged during the processing of emotional faces and other stimuli. Emotions are the result of activations in networks which are interrelated but may have differential lateralization patterns, and classical proposals such as right-hemisphere dominance or valence/motivation lateralization could reflect different aspects of emotion processing [67]. A recent review highlights the possibility that the brain is right-biased in emotional and neutral face perception by default, but that task conditions can activate a more distributed and bilateral brain network [68]. In light of this, our findings may suggest that motor networks within the right hemisphere are particularly engaged during recognition of emotional faces. However, we focused on M1 only, and it therefore remains to be investigated how the right M1 is embedded into a wider cortico-subcortical network involved in processing emotional faces, and what its specific role is in the network.

Our findings show larger MEPs when seeing emotional faces, in keeping with prior evidence of a facilitatory response to emotional stimuli using the IAPS database [16,17,18,19,20,21] or facial expressions, as in the study of Schutter et al. [33]. Our findings expand on these previous works by showing that the facilitatory response to emotional faces is comparable for happy and fearful expressions and can occur at an early timing, that is, 150 ms. Two previous works investigated MEP modulation at that specific timing. Using IAPS, Borgomaneri et al. [21] reported increased excitability for negative scenes only, although these scenes were more arousing than positive scenes. Taken together, this prior study and the present one indicate that emotional faces and emotional scenes induce an early increase in motor excitability (possibly reflecting increased readiness) and suggest that the level of arousal is a key factor in driving changes in motor excitability. In a second study, MEPs from the left and right M1 were collected at 150 ms from stimulus presentation, while participants were asked to actively categorize pictures of emotional body postures [23]. In line with the present findings, both fearful and happy expressions modulated the right M1 to a similar extent (and this response was predicted by interindividual differences in personal distress), whereas no consistent modulation was observed over the left M1. However, in contrast to the present findings, emotional body postures inhibited motor excitability, and this inhibition was interpreted as reflecting an orienting response toward emotionally salient cues. Thus, while all these studies converge in showing early motor modulations, the opposite sign of the change in motor excitability remains to be accounted for.
This difference may be ascribed to the different kinds of stimuli, since body postures can be conceived as more complex stimuli than facial expressions and may, therefore, require more resources or time to be processed (see the latency of the ERP component N170 elicited by faces versus the N190 elicited by bodies; [69,70]). In this vein, one could speculate that early inhibitory motor modulations reflecting orienting are specific to bodies (or to any ambiguous emotional signal), as these require more resources to be decoded. Alternatively, inhibitory modulations could also be detected for stimuli depicting facial expressions, but at an earlier timing. Future studies could directly test these alternative possibilities. Indeed, it is important to mention that here we tested only two time points; thus, other modulatory effects may be visible at different timings. In keeping with previous research [21,22,23,24,25,26,29,33] and to limit the duration of the experiment, we focused on happy, neutral and fearful expressions. Thus, future studies should test MEPs using additional facial expressions to understand whether the early increase in motor excitability is specific to certain expressions or is a common feature of emotional face processing. Moreover, we should also consider that our sample was relatively small and that the analyses implemented were not corrected for multiple comparisons, although the critical comparisons were associated with large effect sizes. Our findings therefore warrant replication in larger cohorts, possibly testing more facial expressions and time points.

Our design allowed us to provide some insights into the late motor modulations observed in prior work using emotional scenes and bodies, specifically at 300 ms from picture presentation [21,22,23]. This previous work showed that emotional IAPS stimuli increased motor excitability compared to neutral scenes [21]. However, as discussed in the introduction, emotional scenes often show facial and body dynamic cues (i.e., motor actions), whereas IAPS neutral scenes typically depict neutral contexts with no humans, raising the possible concern that motor facilitation to emotional scenes may be due to motor resonance rather than emotion-related modulations. On this matter, two previous studies showed that motor facilitation at 300 ms is observed not only for emotional body expressions but also for neutral body movements [22,23]. Our study further supports the possibility that MEP changes at this timing might reflect motor resonance rather than emotion-related motor modulations. Indeed, there is extensive evidence that motor resonance is muscle specific [36,37,71,72], particularly around these temporal windows [38], and this can explain why facilitations of hand motor representations were observed for moving bodies [22,23], but not facial expressions (present study). In sum, our study suggests that during active emotion recognition tasks, emotion-related motor modulations are likely to be observed at an early (150 ms) rather than a later (300 ms) time. These findings motivate further exploration of the dynamic modulations of motor excitability using different stimulus types to disentangle the functional meaning of motor modulations. Moreover, our findings suggest that future studies should also disentangle the contribution of facial expressions and dynamic bodies in complex emotional scenes.

Lastly, we found that the magnitude of early motor facilitation to emotional faces was predicted by the IRI’s PD scores. The PD scale assesses aversive, self-focused emotional reactions of personal anxiety and distress when seeing the misfortunes of others [63]. While personal distress may counteract mature forms of empathy [47,73,74], imaging studies have reported that participants who score high on the PD scale show enhanced reactivity of the insula when seeing both happy and disgusted facial expressions [75], as well as painful expressions [76], suggesting that high personal distress levels are associated with a general increase in emotional reactivity to others’ emotions. These findings are in line with electrocortical and imaging evidence showing that stronger visual cortex sensitivity to social and emotional information is linked with interpersonal anxiety-related dispositions [77,78,79]. Such a link between inter-individual differences in PD scores and the magnitude of the motor cortex reactivity was also observed in other experimental conditions, i.e., with complex negative scenes and emotional body postures [21,23], as well as during the observation of the pain of others [74], in keeping with the notion that anxiety-related traits are associated with greater motor excitability [80] and weaker motor control when facing emotional stimuli [51]. Taken together, these findings provide further support to the view that anxiety-related traits influence the way in which social and emotional signals are processed in the brain [29,53,81,82,83,84,85].

5. Conclusions

In conclusion, our findings provide novel evidence of an early facilitatory response to emotional faces, with greater reactivity in participants with higher personal distress. Our study highlights the importance of exploring motor system involvement in both hemispheres and with high temporal resolution, and of considering interindividual differences in emotional disposition.

Author Contributions

Conceptualization, A.A.; methodology, S.B. (Sara Borgomaneri) and A.A.; software, S.B. (Sara Borgomaneri); formal analysis, F.V.; investigation, S.B.; data curation, F.V.; writing—original draft preparation, S.B. (Sara Borgomaneri) and F.V.; writing—review and editing, S.B. (Sara Borgomaneri), S.B. (Simone Battaglia) and A.A.; visualization, S.B. (Simone Battaglia); supervision, S.B. (Sara Borgomaneri) and A.A.; project administration, S.B. (Sara Borgomaneri) and A.A.; funding acquisition, S.B. (Sara Borgomaneri) and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by grants from the Bial Foundation [347/18] and the Ministero dell’Istruzione, dell’Università e della Ricerca [2017N7WCLP] awarded to Alessio Avenanti and by grants from the Ministero della Salute, Italy [GR-2018-12365733] awarded to Sara Borgomaneri.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board (or Ethics Committee) of the University of Bologna (Protocol Code 2412022014 approved on 4.5.2010).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data that support the findings of this study are available in the Open Science Framework at the following online repository: https://osf.io/34cvg/?view_only=182a524ec3a84453ae17a12502461076 (accessed on 16 July 2021). Further material requests should be addressed to Sara Borgomaneri.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Olson I.R., Marshuetz C. Facial attractiveness is appraised in a glance. Emotion. 2005;5:498–502. doi: 10.1037/1528-3542.5.4.498.

- 2. Pessoa L., Japee S., Ungerleider L.G. Visual awareness and the detection of fearful faces. Emotion. 2005;5:243–247. doi: 10.1037/1528-3542.5.2.243.

- 3. Bar M., Neta M., Linz H. Very first impressions. Emotion. 2006;6:269–278. doi: 10.1037/1528-3542.6.2.269.

- 4. Willis J., Todorov A. First impressions: Making up your mind after a 100-ms exposure to a face. Psychol. Sci. 2006;17:592–598. doi: 10.1111/j.1467-9280.2006.01750.x.

- 5. Fusar-Poli P., Placentino A., Carletti F., Landi P., Allen P., Surguladze S., Benedetti F., Abbamonte M., Gasparotti R., Barale F., et al. Functional atlas of emotional faces processing: A voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 2009;34:418–432.

- 6. Fusar-Poli P., Placentino A., Carletti F., Allen P., Landi P., Abbamonte M., Barale F., Perez J., McGuire P., Politi P.L. Laterality effect on emotional faces processing: ALE meta-analysis of evidence. Neurosci. Lett. 2009;452:262–267. doi: 10.1016/j.neulet.2009.01.065.

- 7. Vytal K., Hamann S. Neuroimaging support for discrete neural correlates of basic emotions: A voxel-based meta-analysis. J. Cogn. Neurosci. 2010;22:2864–2885. doi: 10.1162/jocn.2009.21366.

- 8. Vuilleumier P., Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–194. doi: 10.1016/j.neuropsychologia.2006.06.003.

- 9. Schindler S., Bublatzky F. Attention and emotion: An integrative review of emotional face processing as a function of attention. Cortex. 2020;130:362–386. doi: 10.1016/j.cortex.2020.06.010.

- 10. Keysers C., Gazzola V. Expanding the mirror: Vicarious activity for actions, emotions, and sensations. Curr. Opin. Neurobiol. 2009;19:666–671. doi: 10.1016/j.conb.2009.10.006.

- 11. Wood A., Rychlowska M., Korb S., Niedenthal P. Fashioning the face: Sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 2016;20:227–240. doi: 10.1016/j.tics.2015.12.010.

- 12. Paracampo R., Tidoni E., Borgomaneri S., di Pellegrino G., Avenanti A. Sensorimotor network crucial for inferring amusement from smiles. Cereb. Cortex. 2017;27:5116–5129. doi: 10.1093/cercor/bhw294.

- 13. Izard C.E. Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychol. Bull. 1994;115:288–299. doi: 10.1037/0033-2909.115.2.288.

- 14. Ekman P., Davidson R.J. The Nature of Emotion: Fundamental Questions. Oxford University Press; New York, NY, USA: 1994.

- 15. Frijda N.H. Emotion experience and its varieties. Emot. Rev. 2009;1:264–271. doi: 10.1177/1754073909103595.

- 16. Baumgartner T., Willi M., Jäncke L. Modulation of corticospinal activity by strong emotions evoked by pictures and classical music: A transcranial magnetic stimulation study. Neuroreport. 2007;18:261–265. doi: 10.1097/WNR.0b013e328012272e.

- 17. Hajcak G., Molnar C., George M.S., Bolger K., Koola J., Nahas Z. Emotion facilitates action: A transcranial magnetic stimulation study of motor cortex excitability during picture viewing. Psychophysiology. 2007;44:91–97. doi: 10.1111/j.1469-8986.2006.00487.x.

- 18. Coombes S.A., Tandonnet C., Fujiyama H., Janelle C.M., Cauraugh J.H., Summers J.J. Emotion and motor preparation: A transcranial magnetic stimulation study of corticospinal motor tract excitability. Cogn. Affect. Behav. Neurosci. 2009;9:380–388. doi: 10.3758/CABN.9.4.380.

- 19. Coelho C.M., Lipp O.V., Marinovic W., Wallis G., Riek S. Increased corticospinal excitability induced by unpleasant visual stimuli. Neurosci. Lett. 2010;481:135–138. doi: 10.1016/j.neulet.2010.03.027.

- 20. van Loon A.M., van den Wildenberg W.P.M., van Stegeren A.H., Hajcak G., Ridderinkhof K.R. Emotional stimuli modulate readiness for action: A transcranial magnetic stimulation study. Cogn. Affect. Behav. Neurosci. 2010;10:174–181. doi: 10.3758/CABN.10.2.174.

- 21. Borgomaneri S., Gazzola V., Avenanti A. Temporal dynamics of motor cortex excitability during perception of natural emotional scenes. Soc. Cogn. Affect. Neurosci. 2014;9:1451–1457. doi: 10.1093/scan/nst139.

- 22. Borgomaneri S., Gazzola V., Avenanti A. Motor mapping of implied actions during perception of emotional body language. Brain Stimul. 2012;5:70–76. doi: 10.1016/j.brs.2012.03.011.

- 23. Borgomaneri S., Gazzola V., Avenanti A. Transcranial magnetic stimulation reveals two functionally distinct stages of motor cortex involvement during perception of emotional body language. Brain Struct. Funct. 2015;220:2765–2781. doi: 10.1007/s00429-014-0825-6.

- 24. Borgomaneri S., Vitale F., Gazzola V. Seeing fearful body language rapidly freezes the observer’s motor cortex. Cortex. 2015;65:232–245. doi: 10.1016/j.cortex.2015.01.014.

- 25. Borgomaneri S., Vitale F., Avenanti A. Early changes in corticospinal excitability when seeing fearful body expressions. Sci. Rep. 2015;5:14122. doi: 10.1038/srep14122.

- 26. Borgomaneri S., Vitale F., Avenanti A. Early motor reactivity to observed human body postures is affected by body expression, not gender. Neuropsychologia. 2020;146:107541. doi: 10.1016/j.neuropsychologia.2020.107541.

- 27. Hortensius R., de Gelder B., Schutter D.J.L.G. When anger dominates the mind: Increased motor corticospinal excitability in the face of threat. Psychophysiology. 2016;53:1307–1316. doi: 10.1111/psyp.12685.

- 28. Engelen T., Zhan M., Sack A.T., de Gelder B. The influence of conscious and unconscious body threat expressions on motor evoked potentials studied with continuous flash suppression. Front. Neurosci. 2018;12:480. doi: 10.3389/fnins.2018.00480.

- 29. Borgomaneri S., Vitale F., Avenanti A. Behavioral inhibition system sensitivity enhances motor cortex suppression when watching fearful body expressions. Brain Struct. Funct. 2017;222:3267–3282. doi: 10.1007/s00429-017-1403-5.

- 30. Cuthbert B.N., Schupp H.T., Bradley M.M., Birbaumer N., Lang P.J. Brain potentials in affective picture processing: Covariation with autonomic arousal and affective report. Biol. Psychol. 2000;52:95–111. doi: 10.1016/S0301-0511(99)00044-7.

- 31. Keil A., Bradley M.M., Hauk O., Rockstroh B., Elbert T., Lang P.J. Large-scale neural correlates of affective picture processing. Psychophysiology. 2002;39:641–649. doi: 10.1111/1469-8986.3950641.

- 32. Olofsson J.K., Nordin S., Sequeira H., Polich J. Affective picture processing: An integrative review of ERP findings. Biol. Psychol. 2008;77:247–265. doi: 10.1016/j.biopsycho.2007.11.006.

- 33. Schutter D.J.L.G., Hofman D., Van Honk J. Fearful faces selectively increase corticospinal motor tract excitability: A transcranial magnetic stimulation study. Psychophysiology. 2008;45:345–348. doi: 10.1111/j.1469-8986.2007.00635.x.

- 34. Vicario C.M., Rafal R.D., Borgomaneri S., Paracampo R., Kritikos A., Avenanti A. Pictures of disgusting foods and disgusted facial expressions suppress the tongue motor cortex. Soc. Cogn. Affect. Neurosci. 2017;12:352–362. doi: 10.1093/scan/nsw129.

- 35. Tottenham N., Tanaka J.W., Leon A.C., McCarry T., Nurse M., Hare T.A., Marcus D.J., Westerlund A., Casey B.J., Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Res. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- 36. Fadiga L., Fogassi L., Pavesi G., Rizzolatti G. Motor facilitation during action observation: A magnetic stimulation study. J. Neurophysiol. 1995;73:2608–2611. doi: 10.1152/jn.1995.73.6.2608.

- 37. Avenanti A., Candidi M., Urgesi C. Vicarious motor activation during action perception: Beyond correlational evidence. Front. Hum. Neurosci. 2013;7:185. doi: 10.3389/fnhum.2013.00185.

- 38. Naish K.R., Houston-Price C., Bremner A.J., Holmes N.P. Effects of action observation on corticospinal excitability: Muscle specificity, direction, and timing of the mirror response. Neuropsychologia. 2014;64:331–348. doi: 10.1016/j.neuropsychologia.2014.09.034.

- 39. Gainotti G. Emotional behavior and hemispheric side of the lesion. Cortex. 1972;8:41–55. doi: 10.1016/S0010-9452(72)80026-1.

- 40. Silberman E.K., Weingartner H. Hemispheric lateralization of functions related to emotion. Brain Cogn. 1986;5:322–353. doi: 10.1016/0278-2626(86)90035-7.

- 41. Bryson S.E., McLaren J., Wadden N.P., MacLean M. Differential asymmetries for positive and negative emotion: Hemisphere or stimulus effects? Cortex. 1991;27:359–365. doi: 10.1016/S0010-9452(13)80031-7.

- 42. Borod J.C. Interhemispheric and intrahemispheric control of emotion: A focus on unilateral brain damage. J. Consult. Clin. Psychol. 1992;60:339–348. doi: 10.1037/0022-006X.60.3.339.

- 43. Davidson R.J., Hugdahl K. Brain Asymmetry. MIT Press; Cambridge, MA, USA: 1995.

- 44. Borod J.C. The Neuropsychology of Emotion. Oxford University Press; Oxford, UK: 2000.

- 45. Harmon-Jones E. Contributions from research on anger and cognitive dissonance to understanding the motivational functions of asymmetrical frontal brain activity. Biol. Psychol. 2004;67:51–76. doi: 10.1016/j.biopsycho.2004.03.003.

- 46. Carver C.S., Harmon-Jones E. Anger is an approach-related affect: Evidence and implications. Psychol. Bull. 2009;135:183–204. doi: 10.1037/a0013965.

- 47. Lamm C., Batson C.D., Decety J. The neural substrate of human empathy: Effects of perspective-taking and cognitive appraisal. J. Cogn. Neurosci. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42.

- 48. Lamm C., Porges E.C., Cacioppo J.T., Decety J. Perspective taking is associated with specific facial responses during empathy for pain. Brain Res. 2008;1227:153–161. doi: 10.1016/j.brainres.2008.06.066.

- 49. Morelli S.A., Rameson L.T., Lieberman M.D. The neural components of empathy: Predicting daily prosocial behavior. Soc. Cogn. Affect. Neurosci. 2012;9:39–47. doi: 10.1093/scan/nss088.

- 50. Gazzola V., Aziz-Zadeh L., Keysers C. Empathy and the somatotopic auditory mirror system in humans. Curr. Biol. 2006;16:1824–1829. doi: 10.1016/j.cub.2006.07.072.

- 51. Ferri F., Stoianov I.P., Gianelli C., D’Amico L., Borghi A.M., Gallese V. When action meets emotions: How facial displays of emotion influence goal-related behavior. PLoS ONE. 2010;5:e13126. doi: 10.1371/journal.pone.0013126.

- 52. Lepage J.-F., Tremblay S., Théoret H. Early non-specific modulation of corticospinal excitability during action observation. Eur. J. Neurosci. 2010;31:931–937. doi: 10.1111/j.1460-9568.2010.07121.x.

- 53. Hortensius R., Schutter D.J.L.G., de Gelder B. Personal distress and the influence of bystanders on responding to an emergency. Cogn. Affect. Behav. Neurosci. 2016;16:672–688. doi: 10.3758/s13415-016-0423-6.

- 54. Oldfield R.C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- 55. Rossi S., Hallett M., Rossini P.M., Pascual-Leone A., Nasreldin M., Nakatsuka M., Koganemaru S., Fawi G., Group T.S. of T.C. Safety, ethical considerations, and application guidelines for the use of transcranial magnetic stimulation in clinical practice and research. Clin. Neurophysiol. 2009;120:2008–2039. doi: 10.1016/j.clinph.2009.08.016.

- 56. Rossini P.M., Burke D., Chen R., Cohen L.G., Daskalakis Z., Di Iorio R., Di Lazzaro V., Ferreri F., Fitzgerald P.B., George M.S., et al. Non-invasive electrical and magnetic stimulation of the brain, spinal cord, roots and peripheral nerves: Basic principles and procedures for routine clinical and research application. An updated report from an I.F.C.N. Committee. Clin. Neurophysiol. 2015;126:1071–1107. doi: 10.1016/j.clinph.2015.02.001.

- 57. Fourkas A.D., Ionta S., Aglioti S.M. Influence of imagined posture and imagery modality on corticospinal excitability. Behav. Brain Res. 2006;168:190–196. doi: 10.1016/j.bbr.2005.10.015.

- 58. Tidoni E., Borgomaneri S., di Pellegrino G., Avenanti A. Action simulation plays a critical role in deceptive action recognition. J. Neurosci. 2013;33:611–623. doi: 10.1523/JNEUROSCI.2228-11.2013.

- 59. Bastani A., Jaberzadeh S. A higher number of TMS-elicited MEP from a combined hotspot improves intra- and inter-session reliability of the upper limb muscles in healthy individuals. PLoS ONE. 2012;7:e47582. doi: 10.1371/journal.pone.0047582.

- 60. Tokimura H., Tokimura Y., Oliviero A., Asakura T., Rothwell J.C. Speech-induced changes in corticospinal excitability. Ann. Neurol. 1996;40:628–634. doi: 10.1002/ana.410400413.

- 61. Meister I.G., Boroojerdi B., Foltys H., Sparing R., Huber W., Töpper R. Motor cortex hand area and speech: Implications for the development of language. Neuropsychologia. 2003;41:401–406. doi: 10.1016/S0028-3932(02)00179-3.

- 62. Chen R., Classen J., Gerloff C., Celnik P., Wassermann E.M., Hallett M., Cohen L.G. Depression of motor cortex excitability by low-frequency transcranial magnetic stimulation. Neurology. 1997;48:1398–1403. doi: 10.1212/WNL.48.5.1398.

- 63. Davis M.H. Empathy: A Social Psychological Approach. Westview Press; Boulder, CO, USA: 1996.

- 64. Devanne H., Lavoie B.A., Capaday C. Input-output properties and gain changes in the human corticospinal pathway. Exp. Brain Res. 1997;114:329–338. doi: 10.1007/PL00005641.

- 65. Rosenthal R. Parametric measures of effect size. In: Hedges L.V., Cooper H., editors. The Handbook of Research Synthesis. Russell Sage Foundation; New York, NY, USA: 1994. pp. 231–244.

- 66. Gainotti G. Emotions and the right hemisphere: Can new data clarify old models? Neuroscientist. 2019;25:258–270. doi: 10.1177/1073858418785342.

- 67. Palomero-Gallagher N., Amunts K. A short review on emotion processing: A lateralized network of neuronal networks. Brain Struct. Funct. 2021 doi: 10.1007/s00429-021-02331-7. Online ahead of print.

- 68. Stanković M. A conceptual critique of brain lateralization models in emotional face perception: Toward a hemispheric functional-equivalence (HFE) model. Int. J. Psychophysiol. 2021;160:57–70. doi: 10.1016/j.ijpsycho.2020.11.001.

- 69. Thierry G., Pegna A.J., Dodds C., Roberts M., Basan S., Downing P. An event-related potential component sensitive to images of the human body. Neuroimage. 2006;32:871–879. doi: 10.1016/j.neuroimage.2006.03.060.

- 70. Borhani K., Borgomaneri S., Làdavas E., Bertini C. The effect of alexithymia on early visual processing of emotional body postures. Biol. Psychol. 2016;115:1–8. doi: 10.1016/j.biopsycho.2015.12.010.

- 71. Avenanti A., Bolognini N., Maravita A., Aglioti S.M. Somatic and motor components of action simulation. Curr. Biol. 2007;17:2129–2135. doi: 10.1016/j.cub.2007.11.045.

- 72. Urgesi C., Maieron M., Avenanti A., Tidoni E., Fabbro F., Aglioti S.M. Simulating the future of actions in the human corticospinal system. Cereb. Cortex. 2010;20:2511–2521. doi: 10.1093/cercor/bhp292.

- 73. Batson C.D., Polycarpou M.P., Harmon-Jones E., Imhoff H.J., Mitchener E.C., Bednar L.L., Klein T.R., Highberger L. Empathy and attitudes: Can feeling for a member of a stigmatized group improve feelings toward the group? J. Pers. Soc. Psychol. 1997;72:105–118. doi: 10.1037/0022-3514.72.1.105.

- 74. Avenanti A., Minio-Paluello I., Bufalari I., Aglioti S.M. The pain of a model in the personality of an onlooker: Influence of state-reactivity and personality traits on embodied empathy for pain. Neuroimage. 2009;44:275–283. doi: 10.1016/j.neuroimage.2008.08.001.

- 75. Jabbi M., Swart M., Keysers C. Empathy for positive and negative emotions in the gustatory cortex. Neuroimage. 2007;34:1744–1753. doi: 10.1016/j.neuroimage.2006.10.032.

- 76. Saarela M.V., Hlushchuk Y., Williams A.C., Schürmann M., Kalso E., Hari R. The compassionate brain: Humans detect intensity of pain from another’s face. Cereb. Cortex. 2007;17:230–237. doi: 10.1093/cercor/bhj141.

- 77. Kolassa I.-T., Miltner W.H.R. Psychophysiological correlates of face processing in social phobia. Brain Res. 2006;1118:130–141. doi: 10.1016/j.brainres.2006.08.019.

- 78. Rossignol M., Campanella S., Maurage P., Heeren A., Falbo L., Philippot P. Enhanced perceptual responses during visual processing of facial stimuli in young socially anxious individuals. Neurosci. Lett. 2012;526:68–73. doi: 10.1016/j.neulet.2012.07.045.

- 79. Schulz C., Mothes-Lasch M., Straube T. Automatic neural processing of disorder-related stimuli in social anxiety disorder: Faces and more. Front. Psychol. 2013;4:282. doi: 10.3389/fpsyg.2013.00282.

- 80. Wassermann E.M., Greenberg B.D., Nguyen M.B., Murphy D.L. Motor cortex excitability correlates with an anxiety-related personality trait. Biol. Psychiatry. 2001;50:377–382. doi: 10.1016/S0006-3223(01)01210-0.

- 81. Lawrence E.J., Shaw P., Giampietro V.P., Surguladze S., Brammer M.J., David A.S. The role of “shared representations” in social perception and empathy: An fMRI study. Neuroimage. 2006;29:1173–1184. doi: 10.1016/j.neuroimage.2005.09.001.

- 82. Moriguchi Y., Ohnishi T., Lane R.D., Maeda M., Mori T., Nemoto K., Matsuda H., Komaki G. Impaired self-awareness and theory of mind: An fMRI study of mentalizing in alexithymia. Neuroimage. 2006;32:1472–1482. doi: 10.1016/j.neuroimage.2006.04.186.

- 83. Azevedo R.T., Macaluso E., Avenanti A., Santangelo V., Cazzato V., Aglioti S.M. Their pain is not our pain: Brain and autonomic correlates of empathic resonance with the pain of same and different race individuals. Hum. Brain Mapp. 2013;34:3168–3181. doi: 10.1002/hbm.22133.

- 84. Borgomaneri S., Bolloni C., Sessa P., Avenanti A. Blocking facial mimicry affects recognition of facial and body expressions. PLoS ONE. 2020;15:e0229364. doi: 10.1371/journal.pone.0229364.

- 85. Kret M.E., Denollet J., Grèzes J., de Gelder B. The role of negative affectivity and social inhibition in perceiving social threat: An fMRI study. Neuropsychologia. 2011;49:1187–1193. doi: 10.1016/j.neuropsychologia.2011.02.007.
