Abstract

To better understand the effectiveness of Direct Instruction (DI), this article analyzes the empirical base related to DI’s instructional design components (explicit teaching, judicious selection and sequencing of examples) and principles (identifying big ideas, teaching generalizable strategies, providing mediated instruction, integrating skills and concepts, priming background knowledge, and providing ample review). Attention is given to the converging evidence supporting the design characteristics of DI, which have broad applicability across different disciplines, teaching methodologies, and perspectives.

Keywords: Direct instruction, Instructional design, Empirical evidence

Direct Instruction (DI) teaches so efficiently that all students learn the material in a minimal amount of time. Such highly effective teaching requires a laser-like focus on the design, organization, and delivery of instruction. Every DI program identifies the concepts, rules, strategies, and “big ideas” to be taught; communicates these clearly through carefully crafted instructional sequences; organizes instruction to provide each student with appropriate and sufficient practice (through scheduling, grouping, and ongoing progress monitoring); and ensures active student engagement and lesson mastery through specific forms of student–teacher interaction. Every DI strategy, tactic, technique, or procedure is carefully designed and tested. In Theory of Instruction: Principles and Applications (hereafter, Theory of Instruction), Engelmann and Carnine (1982, 1991) lay out how DI, and perhaps all instructional programs, should be designed.

What is Instructional Design?

Simply put, instructional design (ID) is the creation of materials and experiences that result in a learner’s acquisition of new knowledge and skills. A more formal description is that ID is “the process of systematically applying instructional theory and empirical findings to the planning of instruction” (Dick, 1987, p. 183) “in a consistent and reliable fashion” (Reiser & Dempsey, 2007, p. 11). As a discipline, ID is concerned with understanding, developing, improving, and applying methods of instruction, thus creating optimal models, blueprints, or programs for teaching (Reigeluth, 1983). Although ID is a field in its own right, many other fields (e.g., education, psychology, philosophy, and performance improvement) also concern themselves with thinking, teaching, and learning, giving rise to a range of theories on how people learn.

Learning theories identify the conditions of learning and provide a conceptual framework and vocabulary for interpreting examples of learning. Instructional designers turn to learning theories as a source of strategies for facilitating learning and of suggested solutions to learning problems (Ertmer & Newby, 2013; Joyce et al., 1997; Markle, 1978). Learning theories abound and typically represent one of three philosophical frameworks: behaviorism, cognitivism, and constructivism (Ertmer & Newby, 2013; Schunk, 2007). In brief, behavioral learning theory holds that learning outcomes are indicated by observable, measurable behavior and are greatly influenced by “environmental events” (see Skinner, 1954). Cognitive theory traditionally focuses on the thought processes behind behavior; changes in behavior are treated as indicators of activity in the learner’s mind (see Phillips, 1995). Constructivist theory contends that learners construct their own individual perspectives of the world based on experience and schemas (internal knowledge structures; see Anderson, 1983). Although distinctions are often made among these three types of learning theory, the instructional design procedures derived from behavioral, cognitive, and constructivist approaches have more in common than is typically described, as evidenced by each field’s work in analytical thinking, reasoning, and problem solving (for examples, see Markle, 1981; Markle & Droege, 1980; Mechner et al., 2013; Robbins, 2011).

We concern ourselves with the design of instruction because we want our teaching to be effective. How people learn, and how they learn best, form the basis of instructional design. With the expanding multitude of instructional design theories, systems, products, and graduate programs, it would seem logical to simply consult the evidence base of best practices in instructional design to determine which model or program to use. However, the field of instructional design (in particular, the process of systematically applying instructional theory and empirical findings to the design and delivery of instruction) has yet to achieve that level of empirical consensus. Although theories abound, few have undergone a comprehensive formative or summative evaluation process replicated across learners, content, or contexts. As a model, Direct Instruction (DI) may be one of the few exceptions.

Over 50 years of research shows DI to be highly effective with diverse learners across a wide range of content, including basic skills, complex learning tasks, and social-emotional skills such as self-concept and confidence (see Mason & Otero, 2021; Stockard et al., 2018; Stockard, 2021). Why is DI so successful? An analysis of the instructional design components and principles essential to DI programs may contribute to an understanding of the model’s overall effectiveness. This article focuses on research investigating the design features of DI.

Design Principles Evident in Direct Instruction

Regardless of academic content, Direct Instruction programs feature readily observable teaching practices that define DI for many educators. DI teachers use scripted lessons, clear instructional language, signals for students to respond in unison, frequent questioning to achieve high rates of meaningful responding, and specific feedback with targeted error correction (Binder & Watkins, 1990; Stein et al., 1998; Watkins & Slocum, 2004). Other salient features include the identification of teaching goals, ongoing monitoring of student performance, and small-group instruction so that teachers work with groups of students who have similar skills.

Each of these teaching practices has a robust evidence base of its own, regardless of the name of the instructional model in which it is embedded (see Embry & Biglan, 2008, for an analysis of evidence-based educational tactics). Each also contributes to the DI research base, because all DI programs contain these highly effective elements. As Carnine et al. (1988) noted, students perform better when teachers use materials that devote substantial time to active instruction and that distill complex skills and concepts into smaller, easy-to-understand steps (ideally the largest attainable step). Student progress is often seen when teachers teach in a systematic fashion, provide immediate feedback, and conduct much of their instruction in small groups to allow for frequent interactions (Brody & Good, 1986; Rosenshine & Stevens, 1986).

DI is more than a container for individually effective instructional practices. It integrates such practices with a careful and logical analysis of content so that learners can accurately and systematically develop important background knowledge and apply it to building new knowledge and knowledge frameworks (see Kame’enui, 2021; Stein et al., 1998). In a comprehensive review of DI programs, Adams and Engelmann (1996) noted, “‘effective’ practices without a systematic instructional sequence will not necessarily lead to highly effective teaching” (p. 31). One of the most significant facets of DI is its design and sequencing. With Theory of Instruction as its foundation, DI reflects a meticulous and sophisticated analysis of curriculum, organization, and sequence of instructional content. Kinder and Carnine (1991) identified four design principles supporting DI: (1) explicit teaching of rules and strategies, (2) example selection, (3) example sequencing, and (4) covertization. Each of these principles and a review of the related research are described below.

Explicit Teaching of Rules and Strategies

DI programs make learning maximally effective through carefully designed instructional “communications” (i.e., what the teacher presents to the student) that do not rely on inherent characteristics of the learner (Carnine, 1983). The goal of DI is “faultless communication” between teachers and students; the communication is precise if and only if the learner “understands” the information conveyed (Carnine, 1983; Kinder & Carnine, 1991; Twyman, 2021).

Explicit teaching involves guiding student attention and responding toward a specific learning objective within a structured teaching environment. Curriculum content is typically taught in a logical order, with the teacher providing demonstration, explanation, and opportunities for practice (often referred to as “Model, Lead, Test,” “I do, We do, You do,” or some variation). As the following example from Joseph et al. (2016) illustrates, many of the teaching practices used within explicit instruction (e.g., modeling, prompting, frequent opportunities to respond with targeted feedback) are closely aligned with both DI principles and applied behavior analytic teaching:

. . . teaching students to spell words correctly may involve the following learning trials: (1) the teacher orally presents a word printed on a flashcard and prompts the student to look at the word and spell it along with him or her (stimulus), (2) the student spells the word with the teacher (response), and (3) the teacher provides immediate performance feedback (consequence). (p. 2)

DI’s explicit teaching also involves specific design components, which are discussed individually below. Since the late 1960s, research on explicit instruction has shown positive effects when rules and strategies are taught openly, overtly, and clearly (e.g., step-by-step instructions for a science experiment, or thinking “out loud” while solving a problem, known as “talk aloud problem solving” [TAPS]; Whimbey & Lochhead, 1982).

Improved outcomes have been demonstrated across grades (preschool through adult), student abilities (in special and general education settings), and subject matter (e.g., mathematics, language arts, social studies, science, art, and music; Archer & Hughes, 2010; Hall, 2002; Hughes et al., 2017). Pearson and Dole (1987) synthesized the research on three explicit instruction strategies for comprehension instruction (reciprocal teaching, process training, and inference training) and found that explicit instruction taught comprehension more effectively than traditional basal reading approaches (i.e., mentioning, practicing, and assessing). In a meta-analysis of explicit strategies for teaching critical thinking, Bangert-Drowns and Bankert (1990) reported that 18 of the 20 studies that met the review criteria showed positive effects of explicit instruction on critical thinking.

Other components of explicit instruction, such as those found in DI programs, have been shown to be highly successful across a range of student and teacher populations and classroom settings (Adams & Engelmann, 1996; Ellis & Worthington, 1994; Rosenshine & Stevens, 1986). Results from this extensive research base indicate that all students, not only those with learning difficulties or in special classrooms, benefit from well-designed explicit instruction (Hall, 2002). The explicit teaching of rules and strategies in DI is well supported by research and further strengthens the case for DI as an evidence-based practice.

Example Selection

As noted by Layng (2016), our sense of the world is founded on our understanding of simple and complex concepts. The preponderance of curriculum content and instruction in K–12 and higher education involves concept teaching. A concept is defined by the common attributes found in every example of the concept. Concept teaching relies on the analysis of examples and nonexamples (see Layng, 2019, for a tutorial). The selection of examples (and how the range of examples could be improved) is fundamental to DI. Consistent instructions communicate that the examples are the same and prompt the learner to induce the ways in which they are the same (Twyman, 2021).

A concept cannot be taught with a single example (Markle & Tiemann, 1970, 1971). A wide range of positive examples (instances that are the concept) must be presented. These should vary greatly, yet be treated the same way (as members of the concept). Negative examples (instances that are not the concept) are also required; they help identify the boundaries of accurate generalization. Although negative examples (aka nonexamples) overlap with positive examples in various ways, the differences that make them negative should be clear.

Theory of Instruction explains four critical facts about presenting examples:

It is impossible to teach a concept through the presentation of one example. A single example allows for any number of possible interpretations.

It is impossible to present a group of positive examples that communicates only one interpretation. A set of positive examples still allows for more than one possible interpretation.

Any sameness shared by both positive and negative examples rules out a possible interpretation. The communication given with positive and negative examples differs (e.g., “This is red” vs. “This is not red.”), thus any sameness observed between the positive and negative examples narrows the possible interpretations by ruling out the shared characteristic.

A negative example rules out the maximum number of interpretations when the negative example is least different from some positive example. The more shared characteristics between positive and negative examples, the narrower the possible interpretation (more interpretations get ruled out). (Engelmann & Carnine, 1991, p. 37)
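The logic of these four facts can be made concrete with a toy model. What follows is an illustrative sketch under stated assumptions, not a procedure from Theory of Instruction. Each example is treated as a set of observable features, and each candidate interpretation is a single feature the learner might take as the defining one. A positive example keeps only the interpretations it exhibits, and a negative example rules out any feature it shares with the positives. The function name and feature sets are hypothetical.

```python
def remaining_interpretations(positives, negatives=()):
    """Return the single-feature interpretations consistent with the examples.

    Hypothetical toy model: requires at least one positive example.
    """
    # A positive example is consistent only with features it exhibits,
    # so keep the features shared by every positive example.
    candidates = set.intersection(*(set(p) for p in positives))
    # A negative example ("This is not ...") rules out every feature it
    # shares with the positives.
    for neg in negatives:
        candidates -= set(neg)
    return candidates


# Every example is a physical object, so "object" is a feature of all of them.
red_ball = {"object", "red", "round", "small", "rubber"}
red_block = {"object", "red", "square", "small", "wooden"}
red_car = {"object", "red", "large", "metal"}
blue_ball = {"object", "blue", "round", "small", "rubber"}       # least different from red_ball
green_crate = {"object", "green", "square", "large", "wooden"}   # far from red_ball

print(sorted(remaining_interpretations([red_ball])))
# ['object', 'red', 'round', 'rubber', 'small']
print(sorted(remaining_interpretations([red_ball, red_block, red_car])))
# ['object', 'red']
print(sorted(remaining_interpretations([red_ball], [green_crate])))
# ['red', 'round', 'rubber', 'small']
print(sorted(remaining_interpretations([red_ball], [blue_ball])))
# ['red']
```

Because every example here is an object, positive examples alone can never rule out the interpretation “object,” which mirrors the second fact. The least-different negative example (the blue ball) leaves only “red,” whereas the far-different green crate rules out a single interpretation.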

The emphasis on selecting positive and negative examples in Theory of Instruction overlaps greatly with an analysis of “critical and variable attributes.” To be considered an instance of the concept, an example must have certain defining, critical attributes. An example missing any of the critical attributes is not an instance of the concept. Variable attributes are characteristics or features that an example may have, but which have no effect on the example’s membership within a concept (Layng et al., 2011; Markle & Tiemann, 1969; Tiemann & Markle, 1990).
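The critical/variable distinction amounts to a simple membership test. This minimal sketch assumes an example can be described as a dictionary of attribute values. The concept “square” and its attribute names are hypothetical illustrations, not material from the cited sources.

```python
# Hypothetical concept "square": every critical attribute must be present.
SQUARE_CRITICAL = {"sides": 4, "equal_sides": True, "right_angles": True}


def is_instance(example: dict, critical: dict) -> bool:
    """Return True only if the example has every critical attribute value.

    All other keys (color, size, rotation) are variable attributes. They are
    ignored because they do not affect membership in the concept.
    """
    return all(example.get(attr) == value for attr, value in critical.items())


big_red_square = {"sides": 4, "equal_sides": True, "right_angles": True,
                  "color": "red", "size": "large"}
tilted_blue_square = {"sides": 4, "equal_sides": True, "right_angles": True,
                      "color": "blue", "rotation_deg": 45}
# A close-in nonexample: it lacks one critical attribute (equal sides).
rectangle = {"sides": 4, "equal_sides": False, "right_angles": True,
             "color": "red", "size": "large"}

print(is_instance(big_red_square, SQUARE_CRITICAL))      # True
print(is_instance(tilted_blue_square, SQUARE_CRITICAL))  # True
print(is_instance(rectangle, SQUARE_CRITICAL))           # False
```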

Harlow’s (1949) work with humans and nonhuman primates in the development and testing of “learning sets” provides an early model of the careful selection of examples. Harlow proposed that humans and other highly intelligent mammals not only learn isolated relationships but also notice patterns that make learning more efficient, becoming faster at solving new problems after experience with similar classes of problems (Harlow & Warren, 1952; see also Critchfield & Twyman, 2014). This “learning to learn” phenomenon seems to be directly related to the “sameness” principle described in Theory of Instruction. When various examples are treated the same way in instruction, the learner begins to identify what is the same about them and to treat new instances the same way; this is true even for problem-solving behavior.

Several empirical investigations have tested methods of example selection, especially during program design or revision. Working with 47 first- through fifth-grade students who had some knowledge of fractions but none of decimals, Carnine (1980a) found significantly greater transfer in accurately converting fractions with a denominator of 100 to decimals when demonstrations included a range of positive examples with single-, double-, and triple-digit numerators (e.g., 7/100 = .07, 26/100 = .26, and 159/100 = 1.59) than in a similar group of students who received the same training with only one type of positive example (double-digit numerators, e.g., 26/100 = .26, 43/100 = .43, 78/100 = .78). The selection of negative examples is also integral to concept learning (Tennyson, 1973). In a series of studies teaching line angles to preschoolers, Williams and Carnine (1981) found clear differences in skill transfer when example sets contained both positive and negative examples of angles compared with positive examples alone.
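The rule exemplified by Carnine’s (1980a) fraction demonstrations can be expressed compactly. This hypothetical illustration does not reproduce the study’s materials. It converts a nonnegative whole-number numerator over 100 to the decimal notation used in the text by placing the decimal point before the last two digits, and it reports which numerator lengths each demonstration set samples.

```python
def hundredths_to_decimal(numerator: int) -> str:
    """Write numerator/100 in decimal form, e.g. 7 -> ".07", 159 -> "1.59".

    Assumes a nonnegative whole-number numerator. The rule: pad to at least
    two digits, then place the decimal point before the last two digits.
    """
    if numerator < 0:
        raise ValueError("this sketch handles nonnegative numerators only")
    digits = str(numerator).zfill(2)
    return f"{digits[:-2]}.{digits[-2:]}"


wide_set = [7, 26, 159]    # single-, double-, and triple-digit numerators
narrow_set = [26, 43, 78]  # double-digit numerators only

for n in wide_set:
    print(f"{n}/100 = {hundredths_to_decimal(n)}")
# 7/100 = .07
# 26/100 = .26
# 159/100 = 1.59

# Numerator lengths sampled by each demonstration set
print(sorted({len(str(n)) for n in wide_set}))    # [1, 2, 3]
print(sorted({len(str(n)) for n in narrow_set}))  # [2]
```

Only the wide set exercises the zero-padding case (7 becomes .07) and the case with a digit in the ones place (159 becomes 1.59). This is one way to see why a demonstration limited to double-digit numerators leaves those cases untaught.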

To investigate the effects of the degree of difference between positive and negative examples, Carnine (1980b) developed five sets of examples, each containing the same positive examples but different negative examples. The negative examples varied in the degree to which they differed from the positive examples (least to most different). Participants who received the set with the least difference between positive and negative examples required fewer training trials to criterion (7.6) than those who received the set with the maximum difference (26.0). This finding supports the design component of using close-in negative examples to reduce unintended interpretations of a concept.

Regarding concept teaching, Becker et al. (1975) analyzed four procedures designed to establish stimulus control of operant behavior (i.e., teaching the general case) and demonstrated that a concept cannot be established with a single positive or a single negative example. Rather, a set of positive examples that exhibited all the relevant concept characteristics and a set of negative examples that exhibited some or none of those characteristics were both necessary to teach the concept. Irrelevant characteristics also had to be varied across positive and negative examples. As noted by Carnine (1980a, p. 109), “Minimal stimulus variations between positive and negative examples can clearly define a concept boundary, and maximal relevant variations among positive examples shows how the full range of positive examples should be treated.”

General case instruction

Engelmann and Carnine (1991) stipulated that generalized responding requires the systematic selection of multiple examples that sample the range of stimulus and response variation. This methodology, often called “general case instruction,” has shown great utility in the applied research literature, perhaps because general case strategies use the smallest possible number of examples to produce the largest possible gains in learning (Horner et al., 2005). In general case instruction, teachers use a wide range of comparative examples (e.g., different species of plants, different minerals) to prepare students for novel situations outside of the classroom (Kozloff et al., 1999). The general case model evolved from DI principles and procedures and shares its careful selection and sequencing of examples (see Becker et al., 1975; O’Neill, 1990).

In addition to its successful use in DI programs, general case programming has been shown to be effective with students with moderate (Gersten & Maggs, 1982) to severe disabilities (Horner et al., 1982; Horner & Albin, 1988) and with adults (Ducharme & Feldman, 1992). It has been applied across a variety of concepts and skills, such as solving math story problems (Neef et al., 2003), requesting (Chadsey-Rusch & Halle, 1992), cooking (Trask-Tyler et al., 1994), operating vending machines (Sprague & Horner, 1984), vocational skills (Horner & McDonald, 1982), and dressing (Day & Horner, 1986). Applying general case instruction not only supports careful identification and sequencing of teaching examples, but also promotes generalization and more efficient learning. For example, when general case strategies are used to teach 40 sounds and blending skills, students can learn a generalized decoding skill that applies to at least half of the words in written English (Binder & Watkins, 1990).

The example selection and sameness-and-difference approach is used in many models of concept formation, knowledge instruction, and extension to “real world” activities. As Markle and Tiemann (1970) noted, “[t]he key to producing the kind of generalization and discrimination that we want lies in the selection of a special rational set of examples and non-example” (p. 43), based on an analysis of how subject matter experts respond to the concept in the real world.

Likewise, Klausmeier (1980) focused on the attributes of examples and nonexamples of concepts, identifying four levels of concept learning: (1) concrete: recall of critical attributes; (2) identity: recall of examples; (3) classification: generalizing to new examples; and (4) formalization: discriminating new instances. Klausmeier’s earlier work with colleagues identified a schema for testing concept mastery that focused not only on examples and nonexamples of the concept, but also on examples and nonexamples of each critical attribute (Frayer et al., 1969; Klausmeier et al., 1974). Concept learning is considered to have occurred when a learner can discriminate the attributes of a concept and evaluate new examples for inclusion in the concept category (Klausmeier & Feldman, 1975).

A thorough analysis has gone into the selection of examples and nonexamples used within DI programs. The method and rationale for example selection have both direct empirical support (research-guided and research-tested evidence) and strong parallels in the concept-building literature (research-informed support). Together, these findings provide strong empirical support for the example selection methodology used within DI.

Example Sequencing

Although careful example selection is essential for teaching concepts, the sequence in which those examples and nonexamples are presented is also critical. Early research on concept teaching revealed that the order in which a set of examples is presented influences what is perceived about the examples, such as how they are the same or different (Klausmeier & Feldman, 1975; Markle, 1970; Tennyson & Park, 1980). Theory of Instruction provides five guidelines (called juxtaposition principles) for effectively presenting examples and nonexamples:

The wording principle. To make the sequence of examples as clear as possible, use the same wording on juxtaposed examples (or wording that is as similar as possible).

The set-up principle. To minimize the number of examples needed to demonstrate a concept, juxtapose examples that share the greatest possible number of features.

The difference principle. To show differences between examples, juxtapose examples that are minimally different and treat the examples differently.

The sameness principle. To show sameness across examples, juxtapose examples that are greatly different and indicate that the examples have the same label.

The testing principle. To test the learner, juxtapose examples that bear no predictable relationship to each other (Engelmann & Carnine, 1991, pp. 38–40).
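The difference and sameness principles can be read as constraints on adjacent pairs of examples. This sketch assumes each example is a (features, label) pair. The cutoffs are hypothetical thresholds chosen for illustration and are not values specified by Engelmann and Carnine: exactly one differing feature stands for “minimally different,” and all but at most one differing feature stands for “greatly different.”

```python
def count_differences(a: dict, b: dict) -> int:
    """Count the features whose values differ between two examples."""
    keys = set(a) | set(b)
    return sum(a.get(k) != b.get(k) for k in keys)


def juxtaposition_role(first, second) -> str:
    """Classify an adjacent pair of (features, label) examples.

    Hypothetical thresholds: "minimally different" means exactly one feature
    differs; "greatly different" means all but at most one feature differ.
    """
    (feat_a, label_a), (feat_b, label_b) = first, second
    diffs = count_differences(feat_a, feat_b)
    total = len(set(feat_a) | set(feat_b))
    if label_a != label_b and diffs == 1:
        return "difference"  # minimally different, treated differently
    if label_a == label_b and diffs >= max(1, total - 1):
        return "sameness"    # greatly different, same label
    return "other"           # serves neither principle


# Hypothetical line examples for the concept "horizontal".
line1 = ({"angle": 0, "color": "red", "length": "short", "width": "thin"}, "horizontal")
line2 = ({"angle": 30, "color": "red", "length": "short", "width": "thin"}, "not horizontal")
line3 = ({"angle": 0, "color": "blue", "length": "long", "width": "thick"}, "horizontal")

print(juxtaposition_role(line1, line2))  # difference
print(juxtaposition_role(line1, line3))  # sameness
print(juxtaposition_role(line2, line3))  # other
```

A sequence that alternates “difference” and “sameness” pairs reflects the two principles. Under the testing principle, the order would instead be shuffled so that adjacent examples bear no predictable relationship to each other.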

Readers interested in example sequencing and related topics from an applied perspective are encouraged to view the articles by Johnson and Bulla, Slocum and Rolf, Rolf and Slocum, Spencer, and Twyman and Hockman in the special section on Direct Instruction in the September 2021 issue of Behavior Analysis in Practice.

An effective means of juxtaposing examples is continuous conversion (i.e., changing one example into the next). Creating examples through continuous conversion focuses the learner’s attention on the relevant changes between the examples (e.g., holding one’s hands apart, then moving them farther apart to show “wider”). Because continuous conversion emphasizes change, learners can focus on the critical details involved in the change (Engelmann & Carnine, 1991). Also known as the adjustment method, continuous conversion may be the oldest and perhaps most effective procedure for teaching dimensional concepts that require a comparison (see Goldiamond & Thompson, 2004). In laboratory experiments using this method, the participant either adjusts a stimulus until it meets some criterion or makes an indicator response when a continually varying stimulus matches a constant standard.

These rules also influence the methods used to broaden the membership of a concept, class, or set. Although demonstrating sameness and difference remains critical, the operations of interpolation, extrapolation, and stipulation help set the occasion for generalization (see Engelmann & Carnine, 1991, Ch. 14). In brief, interpolation involves changes along a single stimulus dimension, such as color hue or the addition or removal of parts. When a learner is presented with three examples that differ along a single dimension, the learner will include any intermediate example as part of the set. For example, after light blue, blue, and dark blue have all been identified as “blue,” the learner treats any shade that falls on the continuum between those examples as “blue.”
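Interpolation can be sketched as a range check along one dimension. This minimal illustration assumes that shades can be placed on a numeric lightness scale, which is a hypothetical stand-in for the shade continuum in the blue example. Interpolation licenses only intermediate examples, so for values outside the taught span the function returns None (undetermined) rather than a negative label.

```python
from typing import List, Optional


def interpolate(positive_values: List[float], probe: float) -> Optional[bool]:
    """Interpolation sketch along one stimulus dimension.

    Returns True when the probe lies between the lowest and highest taught
    positive examples. Outside that span interpolation says nothing, so the
    sketch returns None (undetermined) rather than False.
    """
    if min(positive_values) <= probe <= max(positive_values):
        return True
    return None


# Hypothetical lightness values (0 = black, 100 = white) for three shades
# labeled "blue": dark blue, blue, and light blue.
taught_blues = [20.0, 50.0, 80.0]

print(interpolate(taught_blues, 35.0))  # True: between dark blue and blue
print(interpolate(taught_blues, 95.0))  # None: outside the taught span
```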

Extrapolation involves changes from positive to negative examples (or vice versa). If a small change makes a positive example negative, then any greater change along the same dimension will also make the example negative. For example, if two pictures are hung on the same horizontal plane (signaling “level”), moving one picture up 2 inches signals “not level.” Moving the picture up 2 feet would then also be treated as “not level.”
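Extrapolation can be sketched in the same way, assuming a single numeric dimension. Here that dimension is a hypothetical vertical offset, in inches, between the two pictures. The function treats any probe that changes at least as much as the negative example, in the same direction, as a negative (False) and leaves other values undetermined (None). Restricting the rule to the demonstrated direction of change is an assumption of this sketch.

```python
from typing import Optional


def extrapolate(positive: float, negative: float, probe: float) -> Optional[bool]:
    """Extrapolation sketch along one stimulus dimension.

    If a change from the positive example to the negative example made the
    example negative, any probe that changes at least as much in the same
    direction is also negative (False). Other probes are left as None.
    """
    if negative == positive:
        raise ValueError("the negative example must differ from the positive one")
    direction = 1 if negative > positive else -1
    if (probe - negative) * direction >= 0:
        return False
    return None


# Hypothetical offsets, in inches, of one picture above the other.
level, not_level = 0.0, 2.0
print(extrapolate(level, not_level, 24.0))  # False: 2 feet up is "not level"
print(extrapolate(level, not_level, 1.0))   # None: smaller than the demonstrated change
```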

Stipulation involves repeatedly demonstrating a set of highly similar examples and presenting only those examples. This implies that any example outside that range is not the same (and should be treated differently). Stipulation relies on multiple presentations of the selected examples (such as numerous presentations of long objects held upright, signaling “vertical”). When one of the objects is then presented at a discernible angle, the learner treats it differently (“not vertical”). Stipulation is somewhat related to responding by exclusion, first identified by Dixon (1977). Exclusion involves a match-to-sample conditional discrimination procedure in which a novel sample stimulus (one not previously related to any other stimulus) is presented with a novel comparison stimulus and at least one previously known comparison stimulus. Across basic and applied studies, participants almost always select the novel comparison stimulus, excluding comparisons from previously learned sample-comparison pairs (e.g., Grassmann et al., 2015; Langsdorff et al., 2017).
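Stipulation differs from interpolation in what it implies about values outside the presented range, because those values are treated as not the same. The following minimal sketch assumes that tilt from upright can be measured in degrees. All values are hypothetical.

```python
from typing import List


def stipulate(presented: List[float], probe: float) -> bool:
    """Stipulation sketch: only values inside the narrow range that was
    repeatedly presented count as the same. Everything else is treated as
    different (False), unlike interpolation, which leaves such values open."""
    return min(presented) <= probe <= max(presented)


# Hypothetical tilt from upright, in degrees, of objects repeatedly shown
# and labeled "vertical".
shown_vertical = [0.0, 1.0, 0.0, 0.5, 1.0, 0.0]

print(stipulate(shown_vertical, 0.5))   # True: treated as "vertical"
print(stipulate(shown_vertical, 15.0))  # False: a discernible angle, "not vertical"
```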

Carnine and colleagues formatively evaluated variables related to continuous conversion, juxtaposition, and other example-sequencing procedures before and during the development of DI curricula. These procedures also have a history in the basic work of behavioral experimenters (see, e.g., Azrin et al., 1961; Baum, 2012; Goldiamond & Malpass, 1961). Carnine (1980b) compared continuous conversion with procedures recommended by other researchers, who suggested that examples are best presented by changing the emphasized features after every second set of examples. Compared with both the Tennyson set-up procedure (Tennyson, 1973; Tennyson et al., 1972; both cited in Carnine, 1980b) and a noncontinuous conversion procedure, continuous conversion produced a statistically significant improvement in training efficiency (i.e., fewer trials to criterion). In two separate experiments evaluating the effects of juxtaposition principles, Granzin and Carnine (1977) found that minimally different positive and negative training examples resulted in fewer trials to criterion than maximally different examples. Carnine and Stein (1981) demonstrated the importance of juxtaposition in inducing a single transformation relationship when teaching related math facts to 6-year-olds, showing that properly juxtaposed transformation procedures resulted in greater efficiency and generality. Stipulation procedures have been used effectively with students with severe intellectual disabilities (Sprague & Horner, 1984), early elementary students (L. Carnine, 1979, cited in Carnine, 1983), and students with motor skills deficits (Horner & McDonald, 1982). All of these studies demonstrated that students can learn that examples outside a predetermined range are not the same and are therefore treated differently.

A clear set of logical rules that can be applied to any interaction involving communication through examples is essential for any well-designed instructional program. This foundational, consistent rationale for selecting and sequencing examples was outlined by Markle (1969) and is evident in Gilbert’s (1962, 1969) mathetics and in Mechner’s (1967) content and concept analysis protocols used in programmed instruction. More recent examples can be seen in internet-delivered instruction, such as Headsprout Early Reading and Headsprout Reading Comprehension (see Layng et al., 2003, 2004; Leon et al., 2011).

The methods of analytical example sequencing in DI programs have strong research-tested support in the education literature. The five juxtaposition principles, along with the use of continuous conversion, extrapolation, interpolation, and stipulation, illustrate how DI programming principles guide the design and implementation of instruction (Binder & Watkins, 1990; Twyman & Hockman, 2021).

Covertization

Although explicit teaching procedures such as talk aloud problem-solving strategies are effective in teaching problem solving and other reasoning skills (Robbins, 2011; Whimbey & Lochhead, 1982, 1999), strategies that are made explicit during teaching do not have to remain explicit. The overt routines established during initial instruction make problem solving easier; however, these routines should be shifted to interactions that contain few instructions or prompts, and ultimately to covert responding by the learner.

Engelmann and Carnine (1991) provide guidelines for “covertizing” routines, including systematic reduction of overt responses over time and keeping the transition simple and relatively errorless from step to step. This can be done by dropping steps, regrouping a chain of instructions and responses, providing new “inclusive” wording (that provides less detail or combines steps), or providing equivalent pairs of instructions (used during covertization when later instructions do not appear earlier in the routine). Specific procedures may be governed by the type of task and can be combined. For a comprehensive description of covertization routines, see Engelmann and Carnine (1991), Chapter 21.

The process of covertization has been empirically evaluated. In a study of miscues in early reading by kindergarten through third-grade children, Carnine et al. (1984) found that teacher-directed exercises on sentence and story reading promoted covertization of a specific set of reading skills (such as anticipating meaning or self-correcting nonsensical words). Paine et al. (1982) investigated the need for covertization with third graders who were asked to generalize a math operation to a new situation. The researchers found that novel, independent student performance returned to mastery levels only after students worked through covertization routines.

Evidence-Based Design Components of Direct Instruction

Within a DI program, explicit teaching relies on specific design components. Carnine and Kame’enui (1992) and later Stein et al. (1998) described several design practices central to DI: (1) identify big ideas; (2) teach explicit and generalizable (conspicuous) strategies; (3) provide mediated or scaffolded instruction; (4) integrate skills and concepts strategically; (5) prime background knowledge; and (6) provide adequate, judicious review.

Identify Big Ideas

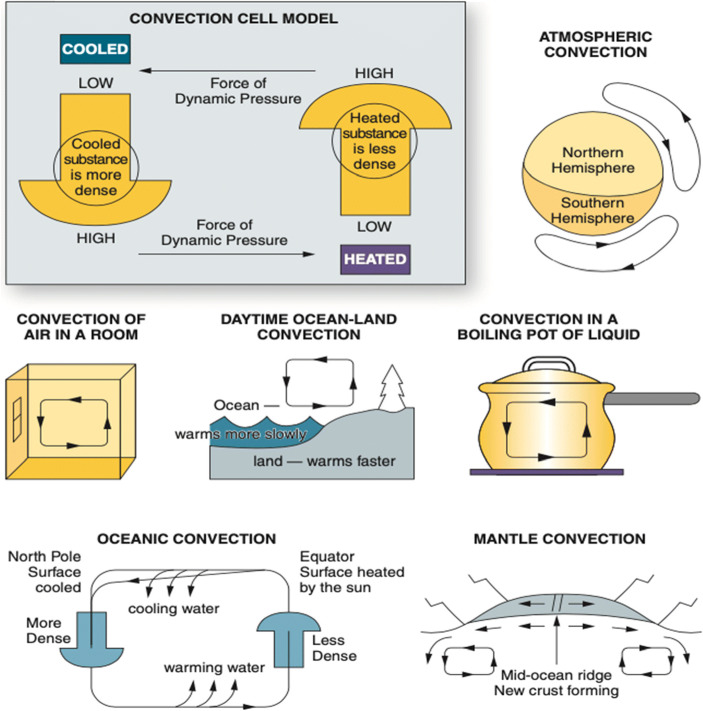

Direct Instruction focuses on “big ideas.” Big ideas are the essential concepts or principles within a content area that facilitate the broadest acquisition of knowledge in the most efficient manner and that aid in the organization and interrelation of information (Carnine, 1997; Carnine et al., 1996; Slocum, 2004; Stein et al., 1998). Big ideas untangle a content area for a broad range of diverse learners by providing thorough knowledge of its most important aspects (Kame’enui et al., 1998). Kame’enui and Carnine (1998) provide the following examples of big ideas in a DI science curriculum: “the nature of science, energy transformations, forces of nature, flow of matter and energy in ecosystems, and the interdependence of life” (p. 119). Big ideas “are essential in building a level of scientific literacy among all students that is necessary for understanding and problem-solving within the natural and created world” (pp. 121–122). To see how a big idea is integrated across concepts, consider instruction on each of the properties of density, heat, and pressure as a strand (see Fig. 1). These strands overlap, leading to a larger integrated concept (e.g., the convection cell). As a big idea, convection is illustrated as air circulating in a room. Integration of the concept is shown when convection is applied to liquid boiling in a pot, or to mantle, ocean, and ocean–land convection (Grossen et al., 2011).

Fig. 1. Convection as a Big Idea

Another comprehensive example of the integration of a big idea is illustrated within a DI history curriculum. “Change” is a common theme through history, and the big idea of “problem-solution-effect” primes and generates understanding of numerous historical movements (Carnine et al., 1994). Big ideas facilitate the generalization of knowledge to other areas and become part of the prior knowledge into which students integrate new learning (Kozloff et al., 1999). Big ideas enable instructional designers to organize instruction in ways that promote conceptual understanding (Stein et al., 1998). The goal is for students to acquire knowledge that is comprehensive, rich in detail, cohesive, and generalizable.

An overabundance of standards, educational goals, and teaching objectives is now available to educators. Early methods of determining what to teach emphasized an analysis of the knowledge or verbal repertoire of experts on a given topic (Markle, 1969). Subject matter expertise and the identification of critical knowledge came from this approach, but subject matter in any given area can be quite vast. In the 1960s, psychologists such as Gagné and Bruner postulated schemas for dealing with classes (concepts), hierarchies of classes (conceptual structures), and relationships between the classes (Bruner, 1960, 1966; Gagné, 1962, 1965, 1985; see also Smith, 2002). Gagné’s (1985) “knowledge hierarchy” proposes a ranked list of all intellectual skills and learning, progressing from the simplest to the most complex: associations and chains, discriminations, concepts, rules and generalizations, and higher-order rules, each a prerequisite for the one that follows. In Gagné’s hierarchical model, higher-level information can be learned or understood only when all the appropriate lower-level knowledge has been mastered. This is similar to one of the most frequently used hierarchies in education over the past 50 years: Bloom’s taxonomy of educational objectives (Bloom, 1956). Bloom et al. (2001) categorized educational goals along a continuum from simple to complex and concrete to abstract, in six major categories: knowledge, comprehension, application, analysis, synthesis, and evaluation. Big ideas, as theorized in DI, are intended to support all six.

Although there are many theories surrounding content organization and concept teaching (e.g., Merrill et al., 1992; Prater, 1993; Reigeluth & Darwazeh, 1982; Rowley & Hartley, 2008; Tennyson & Park, 1980), the research empirically evaluating the effectiveness of these theories is relatively thin. Most evaluations entail a comparison to a theoretical framework or a set of design criteria (see Jitendra & Kame’enui, 1994). Johnson and Stratton (1966) evaluated concept learning using four single methods (logical classification, defining the concept, using it in a sentence, and providing synonyms) and a mixed method. The mixed method resulted in the greatest learning of new concepts, and the four single methods were equally effective. In a review of concept-teaching procedures in five basal language programs for the early grades, Jitendra and Kame’enui (1988) found that the programs rarely met 11 design-of-instruction criteria drawn from empirically based models of concept teaching. The lack of well-controlled studies highlights the need for empirical evaluations of both the effectiveness and the efficiency of teaching “big ideas” and other forms of concept learning.

Teach Explicit (Conspicuous) and Generalizable Strategies

Strategies are one way in which learners apply knowledge to a novel context. A strategy is a general framework or process used by learners to analyze content and solve problems. For example, students may use the TINS method (Owen, 2003) when learning to solve math word problems. TINS stands for the overt steps used to solve the problem: T-Think (Think about what operation you need to do to solve this problem and circle the key words); I-Information (Write the information needed to solve the problem/draw a picture to represent the problem); N-Number (Write a number sentence to represent the problem); S-Solution (Write a solution sentence that explains your answer).

When learned overtly, cognitive routines aid in solving complex problems. DI programs teach generalizable strategies in visible and unambiguous ways. Cognitive routines are presented in explicit fashion, making them more similar to physical routines in that they can be observed, prompted, corrected, or reinforced. Once a strategy has been learned and applied to various (cross-curricular) examples, the overt instruction is gradually withdrawn and the learner moves to performing the strategy covertly (Carnine, 1983; Carnine et al., 1988; Carnine et al., 1996).

When thinking is converted into doing, the instructional communication becomes clear. However, explicit cognitive routines are much more than simply making the covert overt. To serve successfully as a bridge, the overt routine must completely and solely account for the outcome or solution to the problem. In other words, it must be consistent with a single generalization and cover the full range of problem types to which it is to be applied. Generalizable strategies not only support students across a range of problem types but also assist them in gaining new knowledge. (For a description of the formation of explicit strategies in DI, see Carnine, 1983; Carnine et al., 1988; Carnine et al., 1996; or Stein et al., 1998.)

There is a sizable body of research evidence supporting DI’s use of explicit strategies. Following a survey of the existing literature, Falk and Wehby (2005) reported that the type of explicit and systematic instruction found in DI can improve academic and school outcomes for students with emotional/behavioral disorders. Darch et al. (1984) examined the effectiveness of teaching fourth graders to write mathematical equations from word story problems. They compared an explicit step-by-step teaching procedure to procedures identified in basal textbooks and found significant positive effects for the explicit teaching procedure.

In a controlled demonstration of the utility of conspicuous strategies, Ross and Carnine (1982) investigated the role of making strategies overt by comparing three teaching strategies (discovery, rule-plus-discovery, and rule-plus-overt-steps) within and across groups of second, third, and fifth graders. The discovery groups were given an example and asked whether it fit the rule, with no explanation given. Students in the rule-plus-discovery groups were given the same examples but were told the rule/explanation beforehand. The rule-plus-overt-steps groups were presented the rule and, for each example, were asked and told specifically why it did or did not fit the rule. In all grades, the discovery groups performed significantly more poorly on the training criterion. In the younger grades, 20% of the rule-plus-discovery children met the criterion, compared to 83% of the children who received the rule-plus-overt-steps. Carnine et al. (1982) demonstrated that with “sophisticated” learners the presentation of a rule without application may be sufficient; however, to make the learning perfectly clear, the presentation of the rule should be followed by its application to a (carefully identified and sequenced) range of examples. These findings reiterate the effectiveness of the “Ruleg” procedure of presenting a rule followed by examples and nonexamples, first advocated by Evans et al. (1962; see also Hermann, 1971, for a comparison of “Ruleg” vs. “EgRule”).

The research base also documents the positive effects of explicit strategies in general. In an investigation of explicit-strategy instruction, whole-language instruction, and a combination of the two, Butyniec-Thomas and Woloshyn (1997) found that the experimental conditions that included explicit-strategy instruction were generally superior to the whole-language condition, suggesting that third-grade children learn to spell best when they are explicitly taught a repertoire of effective spelling strategies in a meaningful context. Reading instruction that provides direct and explicit teaching of specific skills and strategies has been shown to be most effective across both grades and ages (Biancarosa & Snow, 2006; National Institute of Child Health & Human Development, 2000; Torgesen et al., 2007).

In an experiment examining the impact of explicit instruction in heuristic strategies, Schoenfeld (1979) carefully controlled conditions (training in problem solving, equal time working on the same problems, identical problem solutions) and showed half the students explicitly how five strategies were used. The heuristics group significantly outperformed the other students. When evaluating secondary students’ ability to link facts, concepts, and problem solving in an unfamiliar learning domain, Hollingsworth and Woodward (1993) found that the explicit-strategy group performed significantly better on transfer measures. Lastly, in an extensive and important review of the literature comparing guided models of instruction (e.g., DI) with minimally guided models (i.e., discovery, inquiry, experiential, and constructivist learning), Kirschner et al. (2006) found that providing only minimal guidance during instruction “simply does not work” (as paraphrased from the title: Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching). There is a substantial, clear empirical base suggesting that all students benefit from having effective strategies made apparent for them during instruction (Adams & Engelmann, 1996; Hall, 2002; Hattie, 2009, 2012; Hattie & Yates, 2013; Pearson & Dole, 1987). The explicit teaching of strategies is a critical design component of DI, and its empirical base provides ample evidence of the strength of DI programs.

Scaffolded or Mediated Instruction

DI programs move instruction from a more teacher-led format to one requiring less teacher guidance. This process is often called mediated scaffolding (Kame’enui et al., 1998). Scaffolding reduces task complexity by structuring knowledge into manageable chunks to increase successful task completion, and it supports the learner’s development just beyond the level of what the learner can do alone (Larkin, 2001; Olson & Platt, 2000; Raymond, 2000). Scaffolds occur most often during initial learning and may take the form of prompts, cues, steps, or tasks provided either in person (by teachers or peers) or embedded in the materials (via the sequence and selection of tasks). Mediated scaffolding involves the ongoing calibration of teacher assistance and of the examples used in teaching, based on the student’s current knowledge or abilities (Coyne et al., 2007). Scaffolding assists students by teaching problem-solving strategies, helping them distinguish essential from nonessential details, and fading the assistance provided by the teacher or the materials (Becker & Carnine, 1981). An important consideration when scaffolding instruction is that the scaffolds are temporary; the type or degree of scaffolding is individualized and thus varies with the evolving abilities of the learner, the goals of instruction, and the complexity of the task. As the learner’s competence increases, teacher-provided support decreases. The purpose of scaffolding is to help all students become independently successful in activities (Hall, 2002; Kame’enui et al., 2002).

Instructional scaffolding enjoys broad support in the education literature. Scaffolding theory was first introduced by Bruner (1978) to describe how parents help their young children acquire oral language. Most historians associate scaffolding as a teaching strategy with Vygotsky’s sociocultural theory and the concept of the zone of proximal development. Vygotsky wrote, “The zone of proximal development is the distance between what children can do by themselves and the next learning that they can be helped to achieve with competent assistance” (quoted in Raymond, 2000, p. 176). Scaffolds help learners through the zone of proximal development by supporting their ability to build on prior knowledge and internalize new information (Chang et al., 2002). According to Vygotsky, external scaffolds can be removed because the learner has gained “. . . more sophisticated cognitive systems, related to fields of learning such as mathematics or language, the system of knowledge itself becomes part of the scaffold or social support for the new learning” (Raymond, 2000, p. 176). The constructivist literature has recently repackaged instructional scaffolding as “cognitive apprenticeship.” Building on the practice of apprenticeship and its long history of use in transmitting physical expertise (e.g., in learning a trade or a craft), a cognitive apprenticeship facilitates learning by using experts to help novices develop cognitive skills through participation in “authentic” learning experiences. Collins et al. (1989, p. 456, cited in Dennon, 2004) describe it as “learning-through-guided-experience on cognitive and metacognitive, rather than physical, skills and processes” (see also Collins et al., 1991).

With regard to prompts and cues within instruction and the fading of these stimuli, the behavior-analytic literature is replete with studies of their procedures and overall effectiveness (Cooper et al., 2020; MacDuff et al., 2001). The goal of these tactics is the transfer of stimulus control from the prompt or cue to the stimulus itself. Early behavioral research began with Terrace’s classic work on the transfer of stimulus control using fading and the superimposition of stimuli (Terrace, 1963) and continued with Touchette’s work on the systematic delay of the delivery of prompts or cues (Touchette, 1971).
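The idea of systematically delaying a prompt can be sketched as a simple schedule. This is a minimal sketch under stated assumptions: the class name `PromptDelaySchedule`, the 1-second step, the 5-second ceiling, and the rule of stepping back after an error are illustrative choices and are not parameters reported by Touchette (1971) or the other studies cited above. The sketch shows the logic of the tactic: after each correct trial the prompt is held back a little longer, so the learner increasingly has the opportunity to respond to the stimulus itself before the prompt appears.

```python
from dataclasses import dataclass


@dataclass
class PromptDelaySchedule:
    """Hypothetical progressive delay-to-prompt schedule.

    All parameter values are illustrative assumptions, not values
    taken from Touchette (1971) or any other cited study.
    """

    step_s: float = 1.0       # how much the delay grows after a correct trial
    max_delay_s: float = 5.0  # ceiling on the wait before the prompt is given
    delay_s: float = 0.0      # start with the prompt delivered immediately

    def record_trial(self, correct: bool) -> float:
        """Update and return the delay (in seconds) for the next trial."""
        if correct:
            # Lengthen the wait so the stimulus, not the prompt, can control responding.
            self.delay_s = min(self.delay_s + self.step_s, self.max_delay_s)
        else:
            # Step back toward an earlier prompt after an error.
            self.delay_s = max(self.delay_s - self.step_s, 0.0)
        return self.delay_s


def unprompted(response_latency_s: float, delay_s: float) -> bool:
    """A response counts as unprompted if it occurs before the prompt is delivered."""
    return response_latency_s < delay_s
```

For example, starting from a 0-second delay, two correct trials followed by an error give delays of 1.0, 2.0, and then 1.0 seconds. A response made 1.5 seconds into a 2-second delay counts as unprompted, which is the kind of observation a teacher might treat as evidence that stimulus control is transferring from the prompt to the stimulus.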

Empirical studies have specifically investigated scaffolding procedures and confirmed the utility of specific, concise, structured methods that translate to independent skills (Darch & Carnine, 1986). In a study investigating an interactive teaching strategy designed to expedite reading comprehension, Bos et al. (1989) found that when junior high students with learning disabilities were given a prompt to self-monitor comprehension, their performance increased to equal that of average students. Within a one-to-one setting, Wertsch and Stone (1984) demonstrated how adult language directed a child with learning disabilities to strategically monitor his actions and clearly showed the transfer of responsibility from teacher-regulated to student-regulated behaviors. Given the spread of online education, Jumaat and Tasir (2014) provide an interesting meta-analysis of the types of scaffolding that could be used in an online learning environment and offer suggestions for validating students’ success in that setting.

The research base on scaffolding shows a sizeable body of evidence indicating improved student learning with thoughtfully guided instruction (McKeough et al., 1995; Moreno, 2004; Rosenshine & Meister, 1995). Additional research or parametric studies investigating the various types of scaffolding (teacher delivered, via materials, or embedded within computer-based instruction) and how scaffolds are mediated or faded over time would be beneficial. Although additional investigations would be helpful in evaluating specific scaffolding procedures within DI programs, the existing empirical data on scaffolding clearly indicate it is another solid research-based component of DI programs.

Integrate Skills and Concepts Strategically

As noted previously, DI relies on clear, logical, and unambiguous communications tied to “big ideas” and their related “systems of knowledge classification” (Carnine et al., 1988). Concepts are not taught in isolation but instead are integrated within and across content areas. Strategic integration is used to illustrate how these analyses and applications can direct student progression toward higher-order thinking skills. Strategic integration links critical information together to promote new and more complex knowledge, resulting in multiple benefits for the learner, including learning when to apply knowledge.

Characteristics of strategic integration include (1) curriculum design that provides opportunities to combine several big ideas, (2) content applicable to multiple contexts, and (3) incorporation of concepts once mastered (Hall, 2002). Integration allows students to examine relationships among various concepts, and ultimately to combine relevant information to acquire new and more complex knowledge. Once a skill has been identified as important for teaching, designers must consider how to integrate it into a meaningful context. As noted by Stein et al. (1998), “A key role of an instructional designer is to determine those concepts that highlight relationships both within a content area and across different disciplines and to design instruction that facilitates understanding of those relationships” (p. 231). High quality, strategic integration (1) facilitates the assimilation of concepts and knowledge, and (2) aids the learner in applying new knowledge and “big ideas” to multiple contexts, including those beyond classroom applications (Hall, 2002; Kame'enui et al., 1998).

Graphic organizers (tables, charts, maps, or other visual schemas) are frequently used within DI programs to convey big ideas and illustrate how information is interrelated. Early work on this type of organization can be traced back to Ausubel’s (1960, 1968) “advance organizer” system. Used to convey large amounts of information within a subject area, advance organizers start with a visual representation of the most general ideas within the concept and then proceed to introduce more detail and specificity. Graphic organizers often provide templates or frames for highlighting essential content, organizing information, and recording connections between facts, concepts, and ideas (Fountas & Pinnell, 2001; Lovitt & Horton, 1994).

There is a relatively strong research base supporting the use of graphic or other visual organizers to enhance content comprehension (Barton-Arwood & Little, 2013; Boyle & Weishaar, 1997; Gardill & Jitendra, 1999; Horton et al., 1990). Students at all grades and levels have been shown to benefit from the use of graphic organizers across a variety of content areas, because these organizers provide support when previous information is reviewed and new information is presented (Dye, 2000; Gagnon & Maccini, 2000). When investigating the use of graphic organizers to teach conceptual content, Darch and Carnine (1986) and Darch et al. (1986) found that such systems were highly related to increased student performance. In a study examining the relative effectiveness of visual-spatial displays in enhancing comprehension during instruction, Darch and Eaves (1986) found that adolescent students with learning disabilities showed greater performance on short-term recall tests when taught with the visual displays (no differences were found on transfer or maintenance tests). Likewise, Carnine and Kinder (1985) found that low-performing students made sizable improvements in generative and schema strategies for narrative and expository materials even after brief use of these strategies. The authors emphasized the importance of carefully designed, concentrated instruction that increases students’ attention to text.

A robust empirical research base supports the integration of skills and concepts, in particular with the use of visual and graphic organizers (see Hall & Strangman, 2002). The research has grown to include the computer-assisted use of visual organizers (e.g., Anderson-Inman & Horney, 1997; Lin et al., 2004). Further study is needed to determine the transfer and generalization of these types of integration techniques presented via the computer. Advance organizers go beyond chapter summaries by using visual systems to bridge the gap between new material and the knowledge the student already has. Advance organizers help prime the student for learning and are a frequently used design component within DI.

Prime Background Knowledge

Direct Instruction programs stress the importance of building new knowledge from what is already known or just taught. “The primary goal of [DI] is to increase not only the amount of student learning but also the quality of that learning by systematically developing important background information and explicitly applying it to new knowledge” (Stein et al., 1998, p. 228). Research indicates that learning new skills or gaining new knowledge is largely dependent upon the knowledge a learner brings to the situation (Carnine et al., 1996; Marzano, 2004), making prior knowledge an essential variable in learning (Dochy et al., 1999). The utility of that previous knowledge is directly related to the accuracy of the information and the learner’s ability to use that information (Hall, 2002). Students with insufficient background knowledge (or those unable to use this knowledge) often have difficulty progressing within the general curriculum (Strangman & Hall, 2004). To improve knowledge acquisition for all learners, the effective priming of background knowledge is an important design component of DI.

Direct Instruction programs prime background knowledge by connecting new information to what students have previously learned, by making comparisons between known items and the new concept, or by relating the topic to events or knowledge that are familiar to students. Some see priming as a method of addressing any memory or strategy deficits learners may bring to new tasks, because priming emphasizes critical features, activates current knowledge, and thus increases the likelihood of learner success on new tasks (Hall, 2002). In fact, priming background knowledge may be essential for many students, as an “analysis of history textbooks showed that texts assumed unrealistic levels of students’ prior knowledge and these unrealistic levels negatively influenced student understanding” (McKeown & Beck, 1990). Priming also has an interesting connection to analyses of dimensional versus instructional guidance of behavior (see Goldiamond, 1966), where dimensional control (SDd) could be considered a “what” discrimination, whereas instructional control (SDi) could be considered a “how” discrimination (Layng et al., 2011). When presented with a hat, a learner may say “hat” (a tact); however, when the presentation is paired with varying instructions, such as “What color is it?,” “Is it soft or hard?,” or “Who wears a hat like this?,” the responses may change to “red,” “hard,” and “a fireman.” In each case the SDi (the instruction) narrows the response alternatives without changing the SDd (the hat; example derived from Layng et al., 2011). It would be interesting to determine whether and how manipulating SDi guidance, while highlighting connections to prior knowledge, might assist in priming a learner’s background knowledge.

Preteaching, or emphasizing critical concepts and how they relate to what is already known prior to teaching new material, is one way of priming background knowledge. A number of applied studies have shown that explicit preteaching using background knowledge substantially increases student understanding of relevant reading material (Graves et al., 1983; McKeown et al., 1992; Stevens, 1980). Stevens (1982) found that students who received specific instruction on the pertinent background related to an expository text demonstrated significantly greater reading comprehension of the text than those who received irrelevant instruction. Likewise, Dole et al. (1991) showed that students who learned relevant background information had significantly greater performance on comprehension questions than students without background knowledge instruction. In a component analysis of a DI math program, Kame’enui and Carnine (1986) found that preteaching improved the subtraction performance of second graders, as compared to teaching component skills concurrently. Pearson et al. (1979) evaluated the comprehension of textually explicit and inferable information by above-average second-grade readers and found a significant prior-knowledge effect on inferable knowledge but not on explicitly stated information. Thus, priming background knowledge seems to promote the development of inferential knowledge based on new material. With regard to concept formation, Pazzani (1991) demonstrated that prior knowledge influences the rate of concept learning by college undergraduates and also found that prior causal knowledge can influence the logical form being acquired.

Numerous classroom-based studies support the positive effects of priming background knowledge, and various techniques have been developed to do so effectively and efficiently (Dochy et al., 1999; Singleton & Filce, 2015). These include instruction on background knowledge, student recording of background knowledge, and contact with background knowledge through questioning (Strangman & Hall, 2004). A potential line of research could involve the use of augmented and virtual reality applications to create new experiences for learners, which then become “background knowledge” for a new concept or skill to be learned. Additionally, research is needed in the areas of student characteristics (e.g., familiarity with a content area and the accuracy of their prior knowledge) and how to provide instruction that clarifies and expands prior knowledge. The current research base provides ample direct support for instructional strategies that build and activate prior knowledge, making it a viable research-tested component of DI’s instructional design.

Provide Adequate Judicious Review

When building concepts and acquiring new knowledge, effective review of new information in novel contexts or at different times aids in the transfer, maintenance, and generalization of learning (Hall, 2002). Planned, thoughtful, specific (i.e., judicious) review is crucial to the solid understanding and application of the “big ideas” learned throughout DI programs. These programs carefully embed review into the teaching sequence, so that it is cumulative across knowledge, distributed across content, varied for practice across contexts (Carnine et al., 1996), and sufficient to cover the material presented (Stein et al., 1998). Regular progress monitoring allows teachers to individualize when, what, and how much information to review.

Computers can provide effective opportunities for review. Johnson et al. (1987) compared two computer-based vocabulary programs for teaching 50 vocabulary words to high school students identified as learning disabled. Twenty-five students were randomly assigned to either the small-set condition (teaching and practice exercises on small sets of 10 words, with cumulative review exercises on all words) or the large-set condition (teaching and practice exercises on two sets of 25 words, with no cumulative review). Of the 12 students assigned to the small-set condition, 10 achieved mastery more quickly than those in the large-set condition, suggesting that frequent review is more efficacious. In another computer-based investigation related to the review of chunks of information, Gleason et al. (1991) found that the cumulative presentation of smaller chunks of information, when compared to the rapid introduction of new information, resulted in shorter learning time to mastery, fewer errors, and less student frustration for 60 elementary and middle school students (many with learning disabilities).
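The contrast between the two conditions in Johnson et al. (1987) can be sketched as two ways of scheduling the same 50-word list. This is a simplified, hypothetical model: the function names, the placeholder word list, and the idea of a “session” holding a teach set and a review set are assumptions for illustration, and the sketch does not reproduce the software or session structure used in the study. It shows the key design difference: in the small-set schedule every previously taught word keeps returning for review, whereas in the large-set schedule earlier words are not revisited.

```python
from typing import Dict, List


def small_set_schedule(words: List[str], set_size: int = 10) -> List[Dict[str, List[str]]]:
    """Hypothetical small-set condition: teach a small set, then review every word taught so far."""
    taught: List[str] = []
    sessions = []
    for start in range(0, len(words), set_size):
        new_set = words[start:start + set_size]
        taught.extend(new_set)
        # Cumulative review: the review list grows as each new set is added.
        sessions.append({"teach": list(new_set), "review": list(taught)})
    return sessions


def large_set_schedule(words: List[str], set_size: int = 25) -> List[Dict[str, List[str]]]:
    """Hypothetical large-set condition: practice covers only the current set (no cumulative review)."""
    sessions = []
    for start in range(0, len(words), set_size):
        new_set = words[start:start + set_size]
        sessions.append({"teach": list(new_set), "review": list(new_set)})
    return sessions


words = [f"word{i}" for i in range(1, 51)]  # placeholder for the 50 vocabulary words
small = small_set_schedule(words)
large = large_set_schedule(words)
print([len(s["review"]) for s in small])  # [10, 20, 30, 40, 50]
print([len(s["review"]) for s in large])  # [25, 25]
```

The growing review list in the small-set schedule is what makes the review cumulative: by the final session, all 50 words are practiced together, while in the large-set schedule the first 25 words are never practiced again once the second set begins.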

Mayfield and Chase (2002) compared three methods of teaching basic algebra rules to college students. The same procedures were used to teach the rules; the kinds of practice provided during the four review sessions were the only differences between methods. On the final test, the cumulative review group scored highest on application and problem-solving items and completed the problem-solving items significantly more quickly than the two other groups. The authors suggest that cumulative practice of component skills is an effective method of training problem solving. Additional studies support the use of cumulative practice and review to promote new knowledge, especially within traditional academic domains. Frequent, cumulative review has become commonly recommended as an instructional methodology, especially for students who struggle to learn (as evidenced by its recommendation as a “best practice” by the Institute of Education Sciences [Gersten et al., 2009]).

Although the benefits of frequent, cumulative review have been established within the education community, the specific parameters of cumulative, distributed, varied, and sufficient review have received less direct empirical scrutiny. Although derived in general from the research base on review, these specific forms of review used in DI programs would benefit from a more direct investigation of the efficacy of each of the components.

Conclusion

The theory of instruction underpinning DI rests on fundamental philosophical notions about knowledge, meaning, logic, and rigor, including the core idea that “instruction is inductive in nature” (Engelmann & Carnine, 1991, p. 368). DI programs make these notions actionable through a careful, systematic blend of content analysis, logical analysis, and behavior analysis.

A critically important, yet often underused, form of instructional design comes from empirical evaluations of principles and procedures during program development (Layng et al., 2006; Markle, 1967). Engelmann and Carnine developed the principles and procedures elucidated in Theory of Instruction based on logic. However, logic by itself was (and is) insufficient for developing a cohesive, workable, effective instructional technology. Empirical validation is vital. Engelmann (2009) reported that each time the authors formulated a principle, strategy, or tactic, they asked each other, “Do you know of any studies confirming this?” If not, Carnine and colleagues conducted formal experiments testing the logic, resulting in nearly 50 published studies evaluating components described in Theory of Instruction (see reviews or summaries in Becker, 1992; Becker & Carnine, 1980, 1981; Weisberg et al., 1981). Thus, they continuously validated the logical analyses¹ via applied and field test studies, providing empirical support for specific design principles and teaching practices, and ultimately using the results to determine whether communications were having their intended effect . . . or if, where, or how they might need to be refined (Binder & Watkins, 1990; Engelmann, 2009).

Numerous meta-analyses of the research base firmly support DI components or teaching strategies (Adams & Engelmann, 1996; Becker & Gersten, 1982; Ehri et al., 2001; Gersten, 1985; Marzano et al., 2001; Mason & Otero, 2021; White, 1988). As noted by Binder and Watkins (1990), “Direct Instruction principles and procedures are supported by general principles derived from basic behavioral research as well as by the literature of effective teaching practices” (p. 90). This type of scientifically validated instruction is critical for helping resolve our educational crisis (NICHHD, 2000; No Child Left Behind Act, 2001). However, the merit of any instructional program is not based on how rigorously it was developed. A program’s ultimate value is determined by the outcomes it produces in educational settings across the range of teachers and students found within our schools. A summary of the research reviews related to DI indicates that it (1) is consistent with known effective instructional practices; (2) has been found by independent evaluation to have strong positive effects; and (3) has been shown effective across student populations, grade levels, and content areas.

Reform in education has often focused on the goals of teaching and less on how those goals can be attained (Cuban, 1990). The focus on evidence-based practices has shifted the spotlight to specific teaching practices and to determining which practices are most likely to be effective with which populations under which conditions (see Layng et al., 2006). This shift has tremendous implications for those who design curricula and instructional programs, underscoring the critical need to:

base instructional design and curriculum development on the best “state-of-the-science” practices known to date (i.e., research-informed);

conduct multiple iterations of “formative evaluation” assessments of each component of instruction (i.e., research-guided); and

subject the instructional program to multiple variations of “summative evaluation” to determine the field conditions under which it does and does not work (i.e., research-tested).

It is clear from the evidence base that the theory, model, programs, and practices of DI have addressed all three.

The effectiveness of an instructional program should be determined by its empirically derived evidence base. The research base associated with the principles and design features of DI verifies their effects across all three forms of evidence (research-informed, research-guided, and research-tested instruction), establishing Direct Instruction as an evidence-based practice.

Declarations

Conflicts of Interest

The author has no known conflicts of interest to disclose.

Footnotes

Engelmann (2009) reports that only one component was not validated: beginning instruction with a negative example (e.g., “This is not glurm”). Tests of the procedure produced mixed results: law students learned with it, whereas preschoolers did not (reportedly because the preschool participants did not attend to the negative example).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Adams GL, Engelmann S. Research in Direct Instruction: 25 years beyond DISTAR. Educational Achievement Systems; 1996.

- Anderson JR. The architecture of cognition. Harvard University Press; 1983.

- Anderson-Inman L, Horney M. Computer-based concept mapping: Enhancing literacy with tools for visual thinking. Journal of Adolescent & Adult Literacy. 1997;40(4):302–306.

- Archer AL, Hughes CA. Explicit instruction: Effective and efficient teaching. Guilford Press; 2010.

- Ausubel DP. The use of advance organizers in the learning and retention of meaningful verbal material. Journal of Educational Psychology. 1960;51(5):267–272. doi: 10.1037/h0046669.

- Ausubel DP. Educational psychology: A cognitive view. Holt, Rinehart & Winston; 1968.

- Azrin NH, Holz W, Ulrich R, Goldiamond I. The control of the content of conversation through reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4(1):25. doi: 10.1901/jeab.1961.4-25.

- Bangert-Drowns RL, Bankert E. Meta-analysis of effects of explicit instruction for critical thinking (ED328614). ERIC; 1990.

- Barton-Arwood SM, Little MA. Using graphic organizers to access the general curriculum at the secondary level. Intervention in School & Clinic. 2013;49(1):6–13. doi: 10.1177/1053451213480025.

- Baum WM. Rethinking reinforcement: allocation, induction, and contingency. Journal of the Experimental Analysis of Behavior. 2012;97(1):101–124. doi: 10.1901/jeab.2012.97-101.

- Becker WC. Direct Instruction: A twenty-year review. In: West RP, Hamerlynck LA, editors. Designs for excellence in education: The legacy of B. F. Skinner. Sopris West; 1992. pp. 71–112.

- Becker WC, Carnine DW. Direct Instruction: An effective approach to educational intervention with the disadvantaged and low performers. In: Lahey BB, Kazdin AE, editors. Advances in clinical and child psychology. Plenum; 1980.

- Becker WC, Carnine DW. Direct Instruction: A behavior theory model for comprehensive educational intervention with the disadvantaged. In: Bijou SW, Ruiz R, editors. Behavior modification: Contributions to education. Lawrence Erlbaum Associates; 1981.

- Becker WC, Gersten R. A follow-up of follow through: The later effects of the direct instruction model on children in fifth and sixth grades. American Educational Research Journal. 1982;19(1):75–92. doi: 10.3102/00028312019001075.

- Becker WC, Engelmann S, Thomas DR. Teaching 2: Cognitive learning and instruction. Science Research Associates; 1975.

- Biancarosa G, Snow C. Reading next—A vision for action and research in middle and high school literacy: A report to the Carnegie Corporation of New York. 2nd ed. Alliance for Excellent Education; 2006.

- Binder C, Watkins CL. Precision Teaching and Direct Instruction: Measurably superior instructional technology in schools. Performance Improvement Quarterly. 1990;3(4):74–96. doi: 10.1111/j.1937-8327.1990.tb00478.x.

- Bloom BS. Taxonomy of educational objectives. Vol. 1: Cognitive domain. David McKay Co Inc.; 1956.

- Bloom BS, Airasian P, Cruikshank K, Mayer R, Pintrich P, Raths J, Wittrock M. A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives. Prentice Hall; 2001.

- Bos CS, Anders PL, Filip D, Jaffe LE. The effects of an interactive instructional strategy for enhancing reading comprehension and content area learning for students with learning disabilities. Journal of Learning Disabilities. 1989;22(5):384–390. doi: 10.1177/002221948902200611.

- Boyle JR, Weishaar M. The effects of expert-generated versus student-generated cognitive organizers on the reading comprehension of students with learning disabilities. Learning Disabilities Research & Practice. 1997;12(4):228–235.

- Brophy J, Good T. Teacher behavior and student achievement. In: Wittrock M, editor. Handbook of research on teaching. 3rd ed. Macmillan; 1986. pp. 328–375.

- Bruner J. The process of education. Harvard University Press; 1960.

- Bruner JS. Toward a theory of instruction. Belknap Press of Harvard University Press; 1966.

- Butyniec-Thomas B, Woloshyn VE. The effects of explicit-strategy and whole-language instruction on students' spelling ability. Journal of Experimental Education. 1997;65(4):293–302. doi: 10.1080/00220973.1997.10806605.

- Carnine D. Relationships between stimulus variation and the formation of misconceptions. Journal of Educational Research. 1980;74(2):106–110. doi: 10.1080/00220671.1980.10885292.

- Carnine D. Three procedures for presenting minimally different positive and negative instances. Journal of Educational Psychology. 1980;72(4):452–456. doi: 10.1037/0022-0663.72.4.452.

- Carnine D. Direct Instruction: In search of instructional solutions for educational problems. In: Carnine D, Elkind D, Meichenbaum D, Sieben RL, Smith F, editors. Interdisciplinary voices in learning disabilities and remedial education. Pro-Ed; 1983. pp. 1–66.

- Carnine D. Instructional design in mathematics for students with learning disabilities. Journal of Learning Disabilities. 1997;30(2):130–141. doi: 10.1177/002221949703000201.

- Carnine D, Kame’enui EJ. Higher order thinking: Designing curriculum for mainstreamed students. Pro-Ed; 1992.

- Carnine D, Kinder D. Teaching low-performing students to apply generative and schema strategies to narrative and expository material. Remedial & Special Education. 1985;6(1):20–30. doi: 10.1177/074193258500600104.

- Carnine DW, Stein M. Organizational strategies and practice procedures for teaching basic facts. Journal for Research in Mathematics Education. 1981;12(1):65–69. doi: 10.2307/748659.

- Carnine DW, Kame’enui EJ, Maggs A. Components of analytic assistance: Statement saying, concept training and strategy training. Journal of Educational Research. 1982;75(6):374–377. doi: 10.1080/00220671.1982.10885412.

- Carnine LM, Carnine D, Gersten R. Analysis of oral reading errors made by economically disadvantaged students taught with a synthetic phonics approach. Reading Research Quarterly. 1984;19(3):343–356. doi: 10.2307/747825.

- Carnine D, Granzin A, Becker W. Direct Instruction. In: Graden J, Zins J, Curtis M, editors. Alternative educational delivery systems: Enhancing instructional options for all students. National Association of School Psychologists; 1988. pp. 327–349.

- Carnine D, Crawford D, Harniss MK, Hollenbeck K. Understanding U.S. history: Vol. 1. Through the Civil War. Considerate Publishing; 1994.

- Carnine D, Caros J, Crawford D, Hollenbeck K, Harniss MK. Designing effective U.S. history curricula for all students. Advances in Research on Teaching. 1996;6:207–256.

- Chadsey-Rusch J, Halle J. The application of general-case instruction to the requesting repertoires of learners with severe disabilities. Journal of the Association for Persons with Severe Handicaps. 1992;17(3):121–132. doi: 10.1177/154079699201700301.

- Chang K, Sung Y, Chen I. The effect of concept mapping to enhance text comprehension and summarization. Journal of Experimental Education. 2002;71(1):5–23. doi: 10.1080/00220970209602054.

- Collins A, Brown JS, Holum A. Cognitive apprenticeship: Making thinking visible. American Educator. 1991;15(3):6–11.

- Cooper JO, Heron TE, Heward WL. Applied behavior analysis. 3rd ed. Pearson Education; 2020.

- Coyne MD, Kame’enui EJ, Carnine DW. Effective teaching strategies that accommodate diverse learners. 3rd ed. Pearson Education; 2007.

- Critchfield TS, Twyman JS. Prospective instructional design: Establishing conditions for emergent learning. Journal of Cognitive Education & Psychology. 2014;13(2):201–217. doi: 10.1891/1945-8959.13.2.201.

- Cuban L. Reforming again, again, and again. Educational Researcher. 1990;19(1):3–13. doi: 10.2307/1176529.

- Darch C, Carnine D. Teaching content area material to learning disabled students. Exceptional Children. 1986;53(3):240–246. doi: 10.1177/001440298605300307.

- Darch C, Eaves RC. Visual displays to increase comprehension of high school learning-disabled students. Journal of Special Education. 1986;20(3):309–318. doi: 10.1177/002246698602000305.

- Darch C, Carnine D, Gersten R. Explicit instruction in mathematics problem solving. Journal of Educational Research. 1984;77(6):350–359. doi: 10.1080/00220671.1984.10885555.

- Darch C, Carnine D, Kame’enui E. The role of graphic organizers and social structure in content area instruction. Journal of Reading Behavior. 1986;18(4):275–295. doi: 10.1080/10862968609547576.

- Day HM, Horner RH. Response variation and the generalization of a dressing skill: Comparison of single instance and general case instruction. Applied Research in Mental Retardation. 1986;7(2):189–202. doi: 10.1016/0270-3092(86)90005-6.

- Dennen VP. Cognitive apprenticeship in educational practice: Research on scaffolding, modeling, mentoring, and coaching as instructional strategies. In: Jonassen DH, editor. Handbook of research on educational communications and technology. 2nd ed. Lawrence Erlbaum Associates; 2004. pp. 813–828.

- Dick W. A history of instructional design and its impact on educational psychology. In: Glover JA, Ronning RR, editors. Historical foundations of educational psychology. Plenum Press; 1987. pp. 183–202.

- Dixon LS. The nature of control by spoken words over visual stimuli selection. Journal of the Experimental Analysis of Behavior. 1977;27(3):433–442. doi: 10.1901/jeab.1977.27-433.

- Dochy F, Segers M, Buehl MM. The relation between assessment practices and outcomes of studies: The case of research on prior knowledge. Review of Educational Research. 1999;69(2):145–186. doi: 10.3102/00346543069002145.

- Dole JA, Valencia SW, Greer EA, Wardrop JL. Effects of two types of prereading instruction on the comprehension of narrative and expository text. Reading Research Quarterly. 1991;26(2):142–159. doi: 10.2307/747979.

- Ducharme JM, Feldman MA. Comparison of staff training strategies to promote generalized teaching skills. Journal of Applied Behavior Analysis. 1992;25(1):165–179. doi: 10.1901/jaba.1992.25-165.

- Dye G. Graphic organizers to the rescue! Teaching Exceptional Children. 2000;32(1):1–5. doi: 10.1177/004005990003200311.

- Ehri LC, Nunes SR, Stahl SA, Willows DM. Systematic phonics instruction helps students learn to read: Evidence from the National Reading Panel’s meta-analysis. Review of Educational Research. 2001;71(3):393–447. doi: 10.3102/00346543071003393.

- Ellis ES, Worthington LA. Research synthesis of effective teaching principles and the design of quality tools for educators (Technical Report No. 5). University of Oregon, National Center to Improve the Tools of Educators; 1994.