Supplemental Digital Content is available in the text.

Keywords: Clinical burden, Community infection risk, COVID-19, Multilevel regression and poststratification

Abstract

Throughout the coronavirus disease 2019 (COVID-19) pandemic, government policy and healthcare implementation responses have been guided by reported positivity rates and counts of positive cases in the community. The selection bias of these data calls into question their validity as measures of the actual viral incidence in the community and as predictors of clinical burden. In the absence of any successful public or academic campaign for comprehensive or random testing, we have developed a proxy method for synthetic random sampling, based on viral RNA testing of patients who present for elective procedures within a hospital system. We present an approach, based on multilevel regression and poststratification, to collecting and analyzing data on viral exposure among patients in a hospital system and to performing statistical adjustment in order to estimate true viral incidence and trends in the community; the adjustment code has been made publicly available. We apply our approach to tracking viral behavior in a mixed urban–suburban–rural setting in Indiana. This method can be easily implemented in a wide variety of hospital settings. Finally, we provide evidence that this model predicts the clinical burden of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) earlier and more accurately than currently accepted metrics. See video abstract at http://links.lww.com/EDE/B859.

Early knowledge of incidence and trends of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) transmission in communities is crucial, but in the absence of universal screening or random testing, interested parties have been left to extrapolate impressions of community viral behavior from nonrepresentative data. Public health professionals have relied on state-sourced positivity rates and raw numbers of positive tests in any given jurisdiction as proxies for the true SARS-CoV-2 burden. Unfortunately, these presumed proxies are subject to substantial selection bias, as most testing protocols understandably target symptomatic and presumed-exposed populations. Further, tests have been applied with different criteria over time and geography according to test availability, perceived community SARS-CoV-2 burden, and disparate clinical or political testing norms. The uncontrolled nature of the data raises questions about their validity as determinants of policy that delimits clinical or economic behavior. Alleva et al1 provide a review of strategies and experiences currently in progress to estimate the SARS-CoV-2 incidence in the community. Briefly, the existing approaches include massive test campaigns without a formal sampling design, diagnostic tests through a probabilistic sample, volunteer massive surveys, and supplements of existing sample surveys. Absent randomized testing of the population or community, we need a means of normalizing currently available data to better track trends in the true underlying incidence, either as a more reliable metric or as a reassurance of the validity of our current ones in predicting clinical behavior of SARS-CoV-2.

In the present article, we apply multilevel regression and poststratification, a standard adjustment method used in survey research that is particularly effective when sample sizes are small in some demographic or geographic slices of the data.2,3 Multilevel regression and poststratification has increasingly been shown to be useful in public health surveys, and even to be successful with highly unrepresentative probability or nonprobability samples.4–6 We work with data from the Community Hospital group in Indiana, which serves an urban–suburban–rural mix of patients. Coronavirus disease (COVID) testing was already being performed for patients in this hospital system, and it was relatively costless to augment this data collection with the statistical analysis presented here. For this reason, we believe that this method can be easily implemented in a wide variety of hospital settings.

METHODS

Study Data and Sample

On reopening without restriction to elective medical and surgical procedures after the early spring COVID-19 outbreak, clinical professionals in our hospital system were sufficiently concerned about asymptomatic viral shedding to test all patients for acute SARS-CoV-2 infection before performing any such procedure. All elective patients for invasive procedures are presumptively asymptomatic, as any potential surgical patient acknowledging symptoms or presenting a recent history of known viral exposure would have the procedure canceled or deferred. All prospective surgical (and other invasive procedure) patients were subjected to a preoperative evaluation of these issues and excluded if they showed evidence of symptoms or exposure.

This population presented a potentially valuable resource. All patients used the same test administered within a health system by similar health care professionals. There is a broad age, racial/ethnic, and economic diversity to this group, and its only overt correlation to disease status is that it is specifically selected for a lack of symptoms and a negative exposure history. By way of contrast, cumulative state-wide data include testing data from multiple sources (private clinics, large hospitals, pop-up clinics, large employers, universities, etc), with different types of tests, different levels of training for testers, different test settings (clinic office or drive through clinic), where the test results are valuable but likely much more variable than those under consideration. More importantly, the criteria used in the cumulative state data to determine whether to test individuals in the first place are subject to varying prior assumptions. As an example, an outpatient exposed to a family member suspected of active COVID has a substantially different prior than a dyspneic patient admitted to the emergency department, yet the implication of the state data trends is that these positive cases should be handled similarly.

Although not ideal, our test group is therefore a promising proxy for the general community. SARS-CoV-2 has clearly shown the ability to spread throughout the population via both asymptomatic and symptomatic infection. If we were to assume the as yet unverified but reasonable hypothesis that, for any uniform demographic, the ratio of asymptomatic-to-symptomatic viral infection is constant, then the asymptomatic population in a community would vary in a strict ratio with overall prevalence, and could therefore serve as an excellent proxy for true viral incidence. The trending of asymptomatic infection would be expected to be strictly proportional to clinical infection. To the degree to which external comorbidities or other factors might contribute to variation in this ratio across the sample, we anticipate that much of this variation would be captured by our demographic adjustments.

Of crucial importance, our sample group varies from a true random sample in predictable ways. It is selected rigorously for asymptomatic/nonexposed status, and age, racial/ethnic, and geographic demographics are well documented in the hospital electronic health records (EHR). It remains only to normalize our sample to the demographics of the larger community to represent the general population.

The University of Michigan Institutional Review Board (IRB) has determined that the project is exempt from the requirement for IRB review and approval.

Measures

We subjected all patients to polymerase chain reaction (PCR) testing for viral RNA, 4 days before their intended procedure. Samples were submitted to LabCorp for analysis using the Roche cobas system. This testing regime was used throughout the study interval and continues to be employed without change to the present day. A 70% clinical sensitivity is presumed for this test, based on near 100% internal agreement with positive controls on in vitro analytics7 and broadly observed clinical performance of PCR testing throughout the pandemic8; however, asymptomatic and presymptomatic patients may be harder to detect than predicted by these analytic data, as dates of infection as well as symptom status/onset are known to have a large effect on sensitivity.9 These effects would need to be acknowledged and, to the degree possible, accounted for in the model. Specificity is near 100%, with false positives likely generated only by cross-contamination or switched samples. These false positives become important when underlying prevalence is near zero,10 as was the case for our community this summer, and we have applied a Bayesian procedure to account for the false positivity. We evaluate whether the estimated trends and magnitudes are robust against the sensitivity and specificity parameters.
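To make the role of sensitivity and specificity concrete, the following is a minimal sketch of the standard point-estimate correction that relates an observed positive rate to the underlying prevalence. It is not the Bayesian procedure used in our analysis, which treats sensitivity and specificity as unknown; the function name and the specificity value of 0.995 are assumptions chosen purely for illustration.

```python
def corrected_prevalence(observed_rate, sensitivity, specificity):
    """Invert p_obs = pi * sens + (1 - pi) * (1 - spec) for the prevalence pi.

    The result is clipped to [0, 1], because a raw positive rate below the
    false-positive rate would otherwise give a negative estimate.
    """
    denom = sensitivity + specificity - 1.0
    if denom <= 0:
        raise ValueError("test must beat chance: sensitivity + specificity > 1")
    pi = (observed_rate + specificity - 1.0) / denom
    return min(1.0, max(0.0, pi))


# 1% observed positivity with the presumed 70% sensitivity.
perfect_spec = corrected_prevalence(0.01, 0.70, 1.000)

# Same observation under a HYPOTHETICAL specificity of 99.5%: half of the
# observed positives would then be attributable to false positives.
imperfect_spec = corrected_prevalence(0.01, 0.70, 0.995)

# When the observed rate falls below the false-positive rate, the point
# estimate collapses to zero, which is why low-prevalence periods call for
# a fully Bayesian treatment rather than this simple inversion.
near_zero = corrected_prevalence(0.001, 0.70, 0.995)
```

The sketch shows why small departures from perfect specificity matter most when prevalence is near zero, while sensitivity mainly rescales the estimate.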

Statistical Analysis

We are interested in rates of SARS-CoV-2 infection in two populations: (1) Individuals undergoing care within the hospital system as patients, and (2) the community from which the hospital draws as a whole. In addition to adjusting for measurement error associated with PCR testing for SARS-CoV-2 infection, we need to generate standardized estimates that reflect prevalence in the populations of interest rather than merely our sample of elective surgery patients we are drawing on. We anticipate that this sample of asymptomatic patients is a fairly representative group with minor discrepancy selected from the community at large, but also expect that poststratification to the target population with matching sociodemographics would help enhance the accuracy of our conclusions. We acknowledge that those who seek even elective hospital-based procedures may further vary from the overall community with respect to their comorbidities (hence, their susceptibility to symptomatic COVID-19), but believe it is reasonable to infer that normalization of their age, race/ethnicity, gender, and geography with multilevel regression and poststratification will account for much of that discrepancy.

We use a Bayesian approach to account for unknown sensitivity and specificity and apply multilevel regression and poststratification to testing records for population representation, here using the following adjustment variables: reported gender, age (0–17, 18–34, 35–64, 65–74, and 75+), race (white, black, and other), and county (Lake and Porter). This method has two key steps: (1) fit a multilevel model for the prevalence with the adjustment variables based on the testing data; and (2) poststratify using the population distribution of the adjustment variables, yielding prevalence estimates in the target population.

We poststratify to two different populations: patients in the hospital database (those who have historically and currently obtained care in our regional hospital system) and residents of Lake/Porter County, Indiana. For the hospital, we use the EHR database to represent the population of patients from three hospitals in the Community Health System (Community Hospital, St. Catherine Hospital, and St. Mary Medical Center). For the community, we use the American Community Survey 2014–2018 data from the two counties.
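The poststratification step can be sketched as a population-weighted average of cell-level estimates. The example below is illustrative only: it collapses the cells to age alone, uses the age shares reported in the Table as weights, and plugs in hypothetical cell prevalences in place of the fitted multilevel model output. The names `cell_prevalence` and `poststratify` are assumptions and do not come from our released code.

```python
from itertools import product

# Adjustment variables described in the text; the full analysis crosses all four.
GENDER = ["female", "male"]
AGE = ["0-17", "18-34", "35-64", "65-74", "75+"]
RACE = ["white", "black", "other"]
COUNTY = ["Lake", "Porter"]
n_cells = len(list(product(GENDER, AGE, RACE, COUNTY)))  # 2 * 5 * 3 * 2 cells

# Age shares (%) taken from the Table: tested asymptomatic sample vs community.
sample_share = {"0-17": 3, "18-34": 10, "35-64": 46, "65-74": 24, "75+": 17}
community_share = {"0-17": 24, "18-34": 21, "35-64": 40, "65-74": 9, "75+": 6}

# HYPOTHETICAL cell-level prevalences standing in for step 1 (the multilevel
# model fit). These numbers are made up and are not study results.
cell_prevalence = {"0-17": 0.004, "18-34": 0.015, "35-64": 0.010,
                   "65-74": 0.008, "75+": 0.012}


def poststratify(cell_estimates, cell_weights):
    """Step 2: weight each cell estimate by its share of the target population."""
    total = sum(cell_weights.values())
    return sum(cell_estimates[c] * cell_weights[c] for c in cell_estimates) / total


sample_weighted = poststratify(cell_prevalence, sample_share)        # raw-like
community_weighted = poststratify(cell_prevalence, community_share)  # adjusted
```

With these made-up inputs the community-weighted figure differs from the sample-weighted one only because the age mix differs, which is exactly the discrepancy the adjustment is meant to remove. Swapping in the hospital EHR shares would give the hospital-population estimate in the same way.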

We particularly care about changes in SARS-CoV-2 incidence over time. Indeed, even if our demographic and geographic adjustment is suspect (given systematic differences between sample and populations), the greatest clinical utility lies in being able to predict how much the clinical burden present today is likely to change in the future. Here, the adjustment may be particularly important, as the mix of patients has changed somewhat during the study interval. The statistical details are included in the eAppendix; http://links.lww.com/EDE/B832. We perform all computations in R11; data and code are publicly available at https://github.com/yajuansi-sophie/covid19-mrp.

Assumptions and Conjectures

We began the data collection with a few hypotheses or speculations. First, we expected that the ratio between asymptomatic and symptomatic patients would be relatively constant, for a uniform demographic distribution specific to age, gender, and race/ethnicity. Second, we anticipated that changes in PCR positivity among asymptomatic individuals would precede changes in symptomatic PCR-detected infections by several days, because of the known temporal relationship of viral shedding to the onset of clinical disease.12 Third, these hypotheses would imply that trends in our asymptomatic SARS-CoV-2 infections would predict the behavior of the virus within the community as a whole. To this end, we aim to determine whether our model mirrors or predicts hospitalization rates as a proxy for clinical viral burden.

In summary, we anticipated that appropriate modeling of the PCR dataset would allow us to measure changes in acute infection incidence as an early warning metric to grasp the developing trend of the disease, or at least in concert with any changes. The procedure provides accurate assessment of trends, rather than incidence, and offers more temporally relevant information than the current use of percent testing positive. Further, we aimed to evaluate the validity of positivity and counts of positive cases as metrics to predict clinical burden.

RESULTS

Demographic Stability

We collected the preoperative PCR test times and results of patients in the hospital system, together with demographic and geographic information including gender, age, race, and county. As one of our study interests was to compare our analytic method to established symptomatic testing metrics, we collected the records for both asymptomatic presurgical and symptomatic patients tested within our hospital system, where the asymptomatic patients serve as our proxy sample for the target population. The symptomatic group is represented exclusively by outpatients tested with a positive answer to one or more queries about COVID-19 symptoms as defined by the Centers for Disease Control and Prevention (CDC); these queries have only changed over time in concert with changes made by CDC itself. Our data include daily records from 28 April 2020 to 15 February 2021, representing 30,116 asymptomatic and 13,960 symptomatic patients who received PCR tests. We poststratified the patients with tests to the 35,838 hospital EHR records in 2019 and the 654,890 community residents in Lake and Porter counties. The Table summarizes the test results and sociodemographic distributions, as well as the sociodemographics in the hospital system and the community, thus illustrating the discrepancy between the sample and the population.

TABLE.

Descriptive Summary of Test Results and Sociodemographic Distributions

| Variable | Asymptomatic PCR | Symptomatic PCR | Hospital | Community |
|---|---|---|---|---|
| Size (n) | 30,116 | 13,960 | 35,838 | 654,890 |
| Prevalence (%) | 1 | 26 | NA | NA |
| Female (%) | 59 | 60 | 57 | 51 |
| Male (%) | 41 | 40 | 43 | 49 |
| Age 0–17 (%) | 3 | 15 | 9 | 24 |
| Age 18–34 (%) | 10 | 20 | 12 | 21 |
| Age 35–64 (%) | 46 | 44 | 30 | 40 |
| Age 65–74 (%) | 24 | 12 | 20 | 9 |
| Age 75+ (%) | 17 | 9 | 29 | 6 |
| White (%) | 72 | 75 | 65 | 69 |
| Black (%) | 14 | 10 | 19 | 19 |
| Other (%) | 14 | 15 | 16 | 12 |
| Lake (%) | 84 | 84 | 88 | 74 |
| Porter (%) | 16 | 16 | 12 | 26 |

PCR indicates polymerase chain reaction.

The observed incidence rates are quite naturally different between the PCR tests: 1% for asymptomatic patients and 26% for symptomatic patients. As compared with the hospital system patients, asymptomatic patients with PCR tests are more often female, middle-aged (35–64) or older (65–74), and white. Neither the hospital patients nor the asymptomatic patients serve as a precise representation of the community population, in particular with an under-coverage of young, male, and nonwhite residents. These differences are not large (Table); nonetheless, they are potential sources of error if not accounted for in our statistical model, and can also interfere with estimates of trends if the demographic breakdown of hospital patients varies over time. Furthermore, the county representation is unbalanced. Some patients are from south Cook County, Illinois, and are grouped into Lake County as a proxy. Fortunately for our analysis, these contiguous communities have similar socioeconomic and ethnic demographics. The demographic discrepancy can be caused by unmeasured factors of the asymptomatic patients seeking elective surgeries, such as comorbidity status and healthcare utilization measures, the direct adjustment of which is impractical without their population distribution. However, this confounding bias merely enhances the need to poststratify demographics.

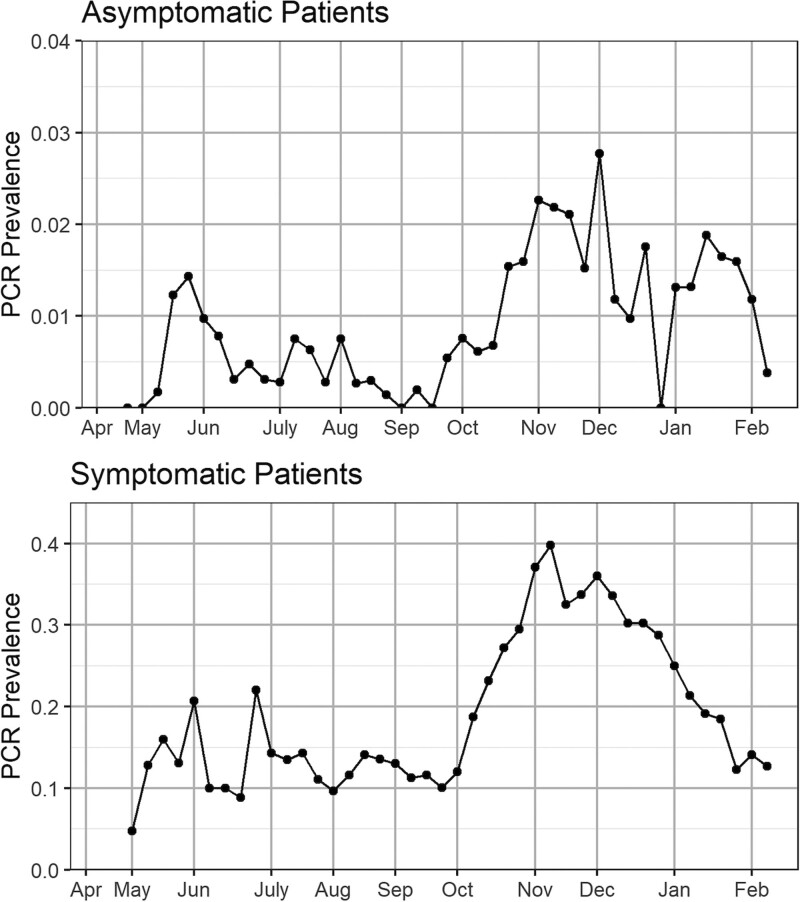

Figure 1 presents the observed PCR test incidence over time for asymptomatic and symptomatic patients. The two groups present different prevalence magnitudes and trends. The prevalence changed over time with low values until September, then we see an increasing trend, with a spike at the end of October, a decrease in November and December, and a bounce back in January.

FIGURE 1.

Observed weekly PCR test incidence for asymptomatic and symptomatic patients in the Community Hospital system. Note the different scales on the two graphs. The positions of the months on the x axis correspond to the week of data containing the first of that month. PCR indicates polymerase chain reaction.

We present the weekly number of asymptomatic patients seeking elective surgeries in the hospital system, which shows stable sample sizes. We also examine the observed sociodemographic distributions of asymptomatic and symptomatic patients receiving PCR tests over time and find that the demographic profile of the asymptomatic patients is stable, whereas the composition of the symptomatic sample changes over time. Details are presented in eFigures 1–2; http://links.lww.com/EDE/B832. This discrepancy supports our prestudy hypothesis that we should treat the asymptomatic samples as a substantially better proxy sample of the target hospital or community population than the corresponding symptomatic data.

The variation of prevalence could be due to various sample decompositions across time, but variation in thresholds for testing symptomatic people over time and demographics is certainly a likely factor. Overall, our analysis here calls into question relying on symptomatic data trends—as is currently the norm—in understanding the underlying true viral trends in the community and argues that asymptomatic testing is likely to be a superior proxy.

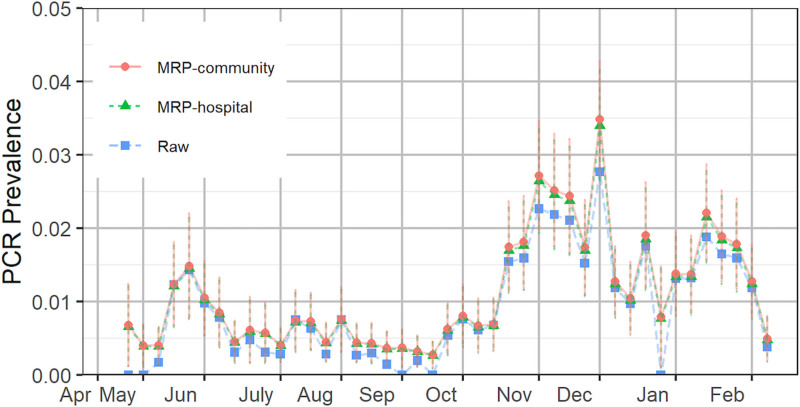

To correct for discrepancies between the sample demographics and those of the community at large, as necessitated by the above observations, we next apply multilevel regression and poststratification to model the incidence and poststratify to the hospital and community population for representative prevalence estimates. The outputs are given in Figure 2. For asymptomatic patients, the estimated positive PCR test prevalence is lower than the raw value after a spike between 19 May and 25 May and generally lower than 0.5% through 28 September. These findings reflect a low observed clinical burden of COVID-19 in our community after the initial March-April outbreak; see District 1 hospitalization13 in eFigure 3 in the eAppendix; http://links.lww.com/EDE/B832. We observe an increasing trend in October and then a decrease throughout November, with rates adjusted by multilevel regression and poststratification inflated substantially. The trend has spikes in December and January and has decreased since mid-January.

FIGURE 2.

Estimated prevalence of the hospital system and community based on asymptomatic patients. The error bars represent one standard deviation of uncertainty. The positions of the months on the x axis correspond to the week of data containing the first of that month. MRP indicates multilevel regression and poststratification; PCR, polymerase chain reaction.

Prediction Metrics of Clinical Burden

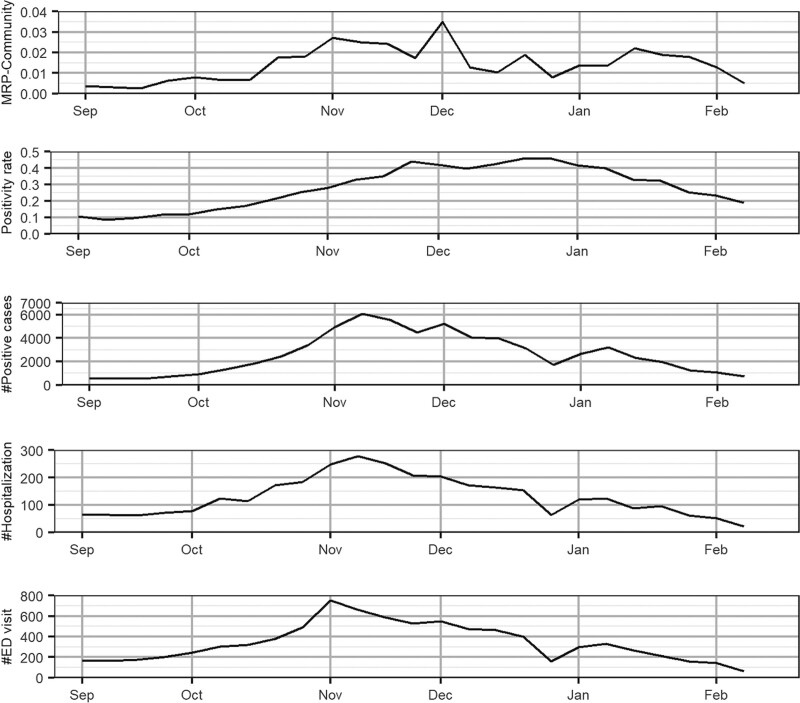

We are interested in evaluating whether the multilevel regression and poststratification-adjusted prevalence of asymptomatic COVID-19 could track with SARS-CoV-2–related hospitalization rates—as measured by counts of hospitalizations and emergency department (ED) visits—better than the currently applied metrics within our counties: positivity rate and counts of positive cases. Our expectation was that hospitalization census would lag viral incidence by a week or more and that COVID-19 related ED visits would track actual viral incidence, perhaps with a few days’ lag. These inferences follow from known lag times from exposure to symptoms to serious illness.12 To test this conjecture, we focus on the September 2020 to February 2021 interval as that timeframe encompasses all of the observed growth in viral burden after very low levels throughout late spring and the summer of 2020.
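The lead-lag reasoning above can be examined with a simple lagged correlation between a candidate indicator and a burden series. The sketch below uses hypothetical weekly values, constructed so that the outcome trails the indicator by exactly one week; it is not the comparison procedure behind Figure 3, and the function names are assumptions for illustration.

```python
from math import sqrt


def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def lagged_correlation(leading, outcome, lag):
    """Correlate leading[t] with outcome[t + lag] for a lag of >= 0 weeks."""
    if lag == 0:
        return pearson(leading, outcome)
    return pearson(leading[:-lag], outcome[lag:])


# HYPOTHETICAL weekly series (not study data): the outcome is the indicator
# scaled by 10 and delayed by one week.
indicator = [1, 2, 4, 7, 9, 6, 4, 3, 2, 2]
hospitalizations = [10] + [10 * v for v in indicator[:-1]]

# Pick the lag (0 to 3 weeks) at which the indicator best tracks the outcome.
best_lag = max(range(4),
               key=lambda k: lagged_correlation(indicator, hospitalizations, k))
```

Applied to real series, the lag that maximizes the correlation gives a rough estimate of how far ahead a metric runs relative to hospitalization or ED visits. With only a few dozen weekly points, such estimates are noisy and should be read alongside the plotted trends.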

Our side-by-side analysis is illustrated in Figure 3. Each plot shows the week-to-week trend of the available metrics (multilevel regression and poststratification-normalized prevalence estimates of asymptomatic COVID-19, positivity rate, and number of positive cases) and those of hospitalization rates (the number of hospitalizations and ED visits) within Lake and Porter Counties. Comparison with district and state data are in the eAppendix; http://links.lww.com/EDE/B832.

FIGURE 3.

Comparison of MRP-normalized estimates based on asymptomatic cases (MRP community) with reported hospitalization counts, ED visits, positivity rate, and the number of positive cases in Lake and Porter counties. The vertical dashed lines indicate the peak values. Note the different scales on the five graphs. The positions of the months on the x axis correspond to the week of data containing the first of that month. ED indicates emergency department; MRP, multilevel regression and poststratification.

All three metrics parallel hospitalization through September up until mid-October, after which the growth in positivity and counts of positive cases far outstrips the growth in hospitalization while the multilevel regression and poststratification-estimated data remain in strict parallel throughout. Further, these estimates track even better with the ED visits. The hospitalization data, on the other hand, show a 1-week lag of the peak in November. Indeed, we begin to see some decrease in the adjusted asymptomatic positives in November and December that parallels a decrease in hospitalization while the generally accepted state metrics continue to increase and even accelerate. These data suggest that ongoing increases in District 1 positive testing metrics may simply be artifacts of the test selection process, rather than actual growth in the viral spread. Overall, a comparison of trends shows that symptomatic positive cases only begin to decrease at the time hospitalization does, and fully a week after ED visits do. Positivity rate does not identify the apparent decrease in clinical burden and has in fact accelerated through that decrease.

DISCUSSION

Our analysis indicates that applying our multilevel regression and poststratification normalization to data on the prevalence of asymptomatic SARS-CoV-2 infection produces a valuable leading indicator of hospital and community risk. When we set out to create a model for tracking viral incidence, we recognized substantial shortcomings in the available testing and its interpretation. Although state data have become much richer and testing protocols more uniform since we started applying our model, selection bias is still a substantial concern. Our goal for this study was to develop an easily implemented testing strategy—available to any hospital system—that, after demographic and geographic adjustment, could reasonably approximate a representative sample. In so doing, we hoped to assess the reliability of currently accepted metrics in their prediction of virus trends and, if possible, to improve our ability to anticipate those trends.

The asymptomatic preoperative patients we identify with our protocol are a favorable group to build upon. All sizeable hospital systems have a ready-made group of such patients who can produce a large number of data points quite rapidly. As patients continue to seek medical procedures, the population continues to naturally expand over time, and lends itself to trending data. In our nearly 900-bed hospital system, we have thus far generated over 30,000 data points over 43 weeks, representing a community of approximately 700,000 residents. The weekly number of data points has been fairly stable over time, and that observation is likely similar to many hospital systems. We have demonstrated that this sample population is fairly representative of the community demographics as a whole and that there has been minimal sample decomposition over time. That this population stability is not matched by similar demographic stability in the symptomatic population and that we are able to employ multilevel regression and poststratification to account for any demographic skew and instability in our own protocol both strongly argue that our model is far more representative of random sampling than the currently employed positive case and positivity data. We argue that hospital-based asymptomatic testing with this method is a more reliably random metric than any currently available and is easily generated from the routine testing of patients before their scheduled procedures.

Having established a reasonable statistical validity for our model, we wished to use it to measure the reliability of current state-based metrics. Our analysis finds that, in our community, all of the metrics trend similarly during viral surges. We would support the current view that numbers of positive cases and positivity both remain relatively stable during periods that our pseudorandom proxy method predicts to be stable and have increased during periods that our proxy predicts show true viral increases. Since the beginning of our study in early May, there have been substantial changes in test availability and certainly anecdotal evidence that the indications for testing have changed quite a bit as well. Consequently, the number of tests and clinical indications for testing have almost certainly both increased considerably over that interval, but the patterns cited above have remained stable. For that reason, we feel that there are good reasons to believe that the validity of positive case counts and positivity as metrics for viral spread is, in the event, relatively insensitive to test numbers, test availability, and clinical thresholds for testing, at least in our community.

Finally, we wanted to test each of these metrics as predictors of clinical burden. During the entire study period, we have used our model to predict clinical needs: staffing, bed and ventilator availability, personal protection equipment supplies, and so forth. Our general observation was that this proxy provided us some useful lead time to prepare for the virus. When we compare our model’s behavior to that of the standard metrics, we find it to be generally a better predictor of clinical burden. The effect is best seen in our November data. During the week of November 3–10, we were able to predict that viral transmission was decreasing and that our hospitalization was likely to be at or near its peak. Comparison of our model with COVID ED presentations in our area demonstrates a precise correlation, and that these changes occur about a week before positive cases and hospitalization census data change. Further, we see positivity rates continue to rise in our area well past the time that our metric and numbers of positive cases have declined. Given that ED visits and hospitalization census rates have also declined in that interval, we find that our model and the number of positive cases seem to be much better and more current predictors of the true viral clinical burden than positivity rates are.

In dealing with a case surge, the extra time of preparedness has been useful and nontrivial. Great benefit may also accrue from recognizing decreased transmission earlier, as we feel the model is able to do, as it may allow for opening up of needed clinical services and socioeconomic commerce in a community earlier than might otherwise be contemplated. In this sense, adherence to positivity rates may be particularly damaging.

We believe our model to be easily generalizable to many hospital systems. As discussed, the sample population and testing regime are readily available, likely to be reasonably representative and stable demographically over time, and easily normalized to true community demographics using the multilevel regression and poststratification code that we have made available. This approach represents a simple proxy for random sampling for any community that chooses to employ it. Further benefits might be gained by combining information from different hospital systems. If individual hospitals and medical groups gathered and analyzed their data as we propose in this article, with all the (deidentified) data shared in a common public repository, researchers could learn more by analyzing trends as they develop in the pooled dataset. This could be similar to other national data pooling efforts such as in the United States and Israel.14,15

We demonstrate the clinical utility of less rigorous approaches as well. Should a system choose to track its patients according to our testing protocol, but not incorporate the multilevel regression and poststratification adjustments, the relative stability of the population demographics suggests that the trends remain quite valid. Our regression models have shown potentially strong effects of age and racial/ethnic status on our metric such that one would need to ensure at least reasonable stability of those particular traits to trust observed raw trends without formal adjustment by multilevel regression and poststratification. We also find that while results depend strongly on the sensitivity of the test being employed, the trends in the results do not (details in the eAppendix; http://links.lww.com/EDE/B832).

This finding is encouraging for longer-term monitoring. Very inexpensive antigen testing is now becoming broadly available. These tests may be less sensitive or more time-specific than the PCR-based RNA testing we have been using and therefore less able to verify the true magnitude of viral spread. Nonetheless, our data show that they will likely function perfectly well to follow viral transmission and clinical burden trends, especially if normalized by multilevel regression and poststratification. Practically speaking, these trends are the prime concern of most healthcare entities.

Footnotes

This study was supported by the Michigan Institute of Data Science, National Science Foundation and National Institutes of Health.

Code is publicly available at https://github.com/yajuansi-sophie/covid19-mrp. The data are confidential and cannot be released to the public.

The authors report no conflicts of interest.

Supplemental digital content is available through direct URL citations in the HTML and PDF versions of this article (www.epidem.com).

REFERENCES

- 1. Alleva G, Arbia G, Falorsi PD, Nardelli V, Zuliani A. A sampling approach for the estimation of the critical parameters of the SARS-CoV-2 epidemic: an operational design. 2020. Available at: https://arxiv.org/pdf/2004.06068.pdf. Accessed 6 July 2021.

- 2. Gelman A, Little TC. Poststratification into many categories using hierarchical logistic regression. Surv Methodol. 1997;23:127–135.

- 3. Si Y, Trangucci R, Gabry JS, Gelman A. Bayesian hierarchical weighting adjustment and survey inference. Surv Methodol. 2020;46:181–214.

- 4. Downes M, Gurrin LC, English DR, et al. Multilevel regression and poststratification: a modeling approach to estimating population quantities from highly selected survey samples. Am J Epidemiol. 2018;187:1780–1790.

- 5. Zhang X, Holt JB, Lu H, et al. Multilevel regression and poststratification for small-area estimation of population health outcomes: a case study of chronic obstructive pulmonary disease prevalence using the behavioral risk factor surveillance system. Am J Epidemiol. 2014;179:1025–1033.

- 6. Wang W, Rothschild D, Goel S, Gelman A. Forecasting elections with non-representative polls. Int J Forecast. 2015;31:980–991.

- 7. Roche cobas 6800/8800. Test performance in individual samples. 2020. Available at: https://diagnostics.roche.com/us/en/products/params/cobas-sars-cov-2-test.html. Accessed 6 July 2021.

- 8. Woloshin S, Patel N, Kesselheim AS. False negative tests for SARS-CoV-2 infection - challenges and implications. N Engl J Med. 2020;383:e38.

- 9. Kucirka LM, Lauer SA, Laeyendecker O, Boon D, Lessler J. Variation in false-negative rate of reverse transcriptase polymerase chain reaction-based SARS-CoV-2 tests by time since exposure. Ann Intern Med. 2020;173:262–267.

- 10. Gelman A, Carpenter B. Bayesian analysis of tests with unknown specificity and sensitivity. J R Stat Soc Ser C (Appl Stat). 2020;69:1269–1283.

- 11. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; 2020.

- 12. Lauer SA, Grantz KH, Bi Q, et al. The incubation period of coronavirus disease 2019 (COVID-19) from publicly reported confirmed cases: estimation and application. Ann Intern Med. 2020;172:577–582.

- 13. Indiana State Department of Health. COVID-19 Region-Wide Test, Case, and Death Trends. 2020. Available at: https://hub.mph.in.gov/dataset/covid-19-region-wide-test-case-and-death-trends. Accessed 6 July 2021.

- 14. Rosenfeld R, Tibshirani R, Brooks L, et al. COVIDcast. 2020. Available at: https://covidcast.cmu.edu/index.html. Accessed 6 July 2021.

- 15. Rossman H, Keshet A, Shilo S, et al. A framework for identifying regional outbreak and spread of COVID-19 from one-minute population-wide surveys. Nat Med. 2020;26:634–638.
