Abstract

Instrumental (operant) behavior can be goal directed, but after extended practice it can become a habit triggered by environmental stimuli. There is little information, however, about the variables that encourage habit learning, or about the development of discriminated habits that are actually triggered by specific stimuli. (Most studies of habit in animal learning have used free-operant methods.) In the present experiments, rats received training in which a lever press was reinforced only in the presence of a discrete stimulus (S) and the status of the behavior as goal-directed or habitual was determined by reinforcer devaluation tests. Experiment 1 compared lever insertion and an auditory cue (tone) in their ability to support habit learning. Despite prior speculation in the literature, the “salient” lever insertion S was not better than the tone at supporting habit, although the rats learned more rapidly to respond in its presence. Experiment 2 then examined the role of reinforcer predictability with the brief (6-s) tone S. Lever pressing during the tone was reinforced on either every trial or on 50% of trials; habit was observed only with the highly predictable (100%) relationship between S and the reinforcer. Experiment 3 replicated this effect with the tone in a modified procedure and found that lever insertion contrastingly encouraged habit regardless of reinforcer predictability. The results support an interactive role for reinforcer predictability and stimulus salience in discriminated habit learning.

Keywords: goal-directed action, habit, reinforcer devaluation, reinforcer predictability, salience

Contemporary theories of instrumental (operant) behavior suggest that separable goal-directed and habit processes can control the response (e.g., Daw, Niv, & Dayan, 2005; Dickinson, 1985; 2012). According to these views, the goal-directed process allows the instrumental response to adjust to a change in the value of the reinforcing outcome. In contrast, the habit process allows antecedent stimuli to control efficient action that is insensitive to the value of the reinforcer. Both goal-directed and habit processes are thought to play a role in decision making (Balleine & O’Doherty, 2010; Daw, 2018; Verplanken, 2018; Wood, 2019), and several investigators have suggested that an imbalance between the two characterizes a range of psychiatric conditions including addiction, schizophrenia, depression, and obsessive-compulsive disorder (e.g., Robbins, Vaghi, & Banca, 2019).

One of the most well-known variables that influences goal-directed and habit processes is the amount of training or practice. Specifically, goal-directed actions can convert to habits when training is extended (Adams, 1982; Dickinson, Balleine, Watt, Gonzalez, & Boakes, 1995; Holland, 2004; Thrailkill & Bouton, 2015). However, the mechanisms that lead to the eventual conversion to habit are not yet clear. Thorndike (1898; 1911) suggested that habit strength is determined by the number of times a reinforcer is paired with a response; the reinforcement process was assumed to strengthen a direct association between some environmental stimulus (S) and the response (R) (see also, e.g., Wood, 2019; Wood & Rünger, 2016). Dickinson (1985; 1989) has emphasized a correlational perspective in which a weak experienced correlation between response rate and reinforcer rate allows habit to form. Early in training, or under a random-ratio schedule of reinforcement (e.g., Dickinson, Nicholas, & Adams, 1983), variability in behavior allows experience of its correlation with reinforcer rate. In contrast, after extensive training, or under a random-interval schedule, behavior rate varies less and the correlation between response rate and reinforcer rate is experienced less, allowing habit to form (Dickinson, 1985). More recently, experiments from our own laboratory suggested a role for reinforcer predictability and attention to the response in habit learning (Thrailkill, Trask, Alcalá, Vidal, & Bouton, 2018). We suggested that habit develops because increasingly predictable reinforcers allow the organism to pay less and less attention to its behavior. The present experiments were conducted in part to assess the generality of those initial findings.

Habits are typically distinguished from goal-directed actions with reinforcer devaluation methods (e.g., Adams, 1982). Most experiments consist of instrumental training followed by a phase without the response in which the value of the outcome is changed through taste aversion conditioning or sensory-specific satiety. After devaluation, the response is tested without the outcome (extinction). Goal-directed performance reflects the changed outcome value, so that a goal-directed action will be suppressed after the reinforcer has been devalued. In contrast, habitual performance is not affected by devaluation; the response instead occurs as if the outcome value has not changed.

It has long been accepted that operant responses are occasioned by discriminative stimuli (Skinner, 1938; Thorndike, 1911), and habits in the natural world are usually assumed to be occasioned by specific trigger stimuli (e.g., James, 1890; Wood, 2019). However, until very recently, habit learning has rarely been studied in the animal laboratory with discriminated operant procedures. Instead, habit has been studied with free-operant methods in which the experimental chamber, rather than a discrete cue, is assumed to function as the discriminative stimulus (Adams, 1982; Dickinson et al., 1995; see Thrailkill & Bouton, 2015). To begin to fill this gap in knowledge, our recent experiments were designed to explore discriminated habit learning with a discriminated operant method (Thrailkill et al., 2018). The experiments initially established that habit, as indexed by insensitivity to reinforcer devaluation, can be difficult to observe in some discriminated operant procedures. In the initial experiments, rats received training in which presentations of a 30-s tone (S) signaled that lever presses could earn a pellet reinforcer according to a random-interval (RI) 30-s schedule. The lever was also present during intertrial intervals, but presses were not reinforced then. This combination of reinforcement schedule and S duration has been widely used to study discriminated operants in this laboratory (Bouton, Todd, & León, 2014; Bouton, Trask, & Carranza-Jasso, 2016; Todd, Vurbic, & Bouton, 2014) and in others (Colwill, 1991; 1993; 1994; 2001; Colwill & Delamater, 1995; Colwill & Rescorla, 1988; 1990a; 1990b; Colwill & Triola, 2002; Rescorla, 1990a; 1990b; 1992; 1993; 1997; Rescorla & Colwill, 1989). Nonetheless, with this procedure there were clear reinforcer devaluation effects, and hence evidence of goal-directed responding, following brief (4 sessions), moderate (22 sessions), and very extensive (66 sessions) amounts of instrumental training. The most extensive training involved approximately 830 occasions in which the response was reinforced in S, a number that exceeds the amount usually required for generating habit in free-operant procedures (Adams, 1982; Dickinson et al., 1995; Thrailkill & Bouton, 2015).

Thrailkill et al. (2018) went on to discover that habit did develop when the duration of S was increased from 30 s (short) to 8 min (long). Although this result suggested a role for S duration in habit learning (and can be seen as consistent with the success of free-operant studies), the long S also guaranteed that the response would be reinforced in every S. In contrast, in the combination of a 30-s S with an RI 30-s reinforcement schedule, responses were reinforced on only half the trials, because the RI 30-s schedule generated intervals that were both less than and greater than 30 s with equal frequency. Thus, it was not clear whether habit learning was the result of a long stimulus duration or the experience of more predictable reinforcement in the S.

To separate these possibilities, a final experiment compared predictable versus unpredictable reinforcers within the 30-s S. Here, a partially reinforced group received training with the 30-s S and an RI 30-s schedule as in the previous experiments; results confirmed that roughly half the trials therefore contained a reinforced response and half did not. In contrast, a continuously reinforced group received training with the 30-s S and a modified variable-interval (VI) schedule that arranged for one reinforcer to be possible in every S. After this training, there was clear evidence of habit in the continuously reinforced group and goal-directed action in the partially reinforced group. Reinforcer predictability thus played a role in whether habit was learned in this discriminated operant task. The results were notably inconsistent with the Law of Effect and the correlation-based accounts of habit: Habit learning differed even though training resulted in the same number of response-reinforcer pairings, equally strong stimulus control of the response, and the same experienced response-reinforcer rate correlations.

The present experiments were designed to further explore habit learning in discriminated operant procedures. They also further studied the influence of reinforcer predictability. The design of each is shown in Table 1. We began by replicating and extending a novel discriminated operant procedure introduced by Vandaele, Pribut, and Janak (2017) that appears to generate habit. In their method, rats received trials in which a lever was inserted into the chamber and the fifth response on it was always reinforced (the lever was withdrawn at the same time). The procedure generated habit learning, which Vandaele et al. suggested might be due to the high salience of the lever-insertion S (e.g., Holland et al., 2014; Meyer, Cogan, & Robinson, 2014). Experiment 1 compared this procedure with a second procedure in which the lever was continuously available but similar trials were signaled by a tone. Both Ss supported habit with sufficient training. We then asked in two experiments whether habit learning was prevented by making the reinforcer unpredictable from trial to trial. Overall, the results further confirm that habit can develop in discriminated operant methods, provide new support for the role of reinforcer predictability, and enhance our understanding of how stimulus salience might also contribute to habit formation.

Table 1.

General Experimental Design

| Discriminated operant training | Aversion conditioning | Test |
|---|---|---|
| S:R+ | Pellet-LiCl (Paired) or Pellet, LiCl (Unpaired) | S:R− |

Note. The plus symbol (+) indicates reinforced; the minus symbol (−) indicates nonreinforced (extinction). S = discriminative stimulus; R = response; LiCl = lithium chloride.

Experiment 1

In the Vandaele et al. (2017) experiments, habit was acquired when insertions of the lever (S) signaled availability of a reinforcer and both the primary reinforcer and lever retraction were contingent on a fixed-ratio 5 (FR 5) schedule. The authors suggested that lever insertion may promote habit because it is an especially salient stimulus. In Experiment 1, half the rats received a lever-insertion procedure modeled on the Vandaele et al. (2017) method. The other half received a similar contingency, but the lever was continuously present in the chamber and the FR 5 schedule was signaled by presentation of a tone. Half the rats in each condition then received taste aversion conditioning with the pellet reinforcer prior to a test with S under extinction. Experiment 1a examined sensitivity to devaluation after an amount of training that was similar to that used by Vandaele et al. (2017). Experiment 1b then compared sensitivity to devaluation after more extended training. If the salience of the lever insertion promotes habit, we expected to observe habit (insensitivity to reinforcer devaluation) more readily in the group trained with the lever than in the group trained with the tone.

Method

Subjects

Sixty-four female Wistar rats (32 in Experiment 1a and 32 in Experiment 1b) were purchased from Charles River, St. Constance, Canada. The rats were aged 75 to 90 days at the beginning of the experiment and were individually housed in a climate-controlled room with a 16:8 light-dark cycle and unlimited access to water. Experimental sessions were conducted during the light portion of the cycle at approximately the same time each day. Rats were maintained at 80% of their free-feeding weights for the duration of the experiment, with supplementary feeding approximately 2 hr postsession when necessary.

Apparatus

Two unique sets of four conditioning chambers (ENV008-VP; Med Associates, Fairfax, VT) were housed in separate rooms of the laboratory. Each chamber was in its own sound attenuation chamber. All boxes measured 30.5 by 24.1 by 23.5 cm (length by width by height). The side walls and ceiling were made of clear acrylic plastic, and the front and rear walls were made of brushed aluminum. A recessed food cup measured 5.1 cm by 5.1 cm and was centered on the front wall approximately 2.5 cm above the grid floor. A retractable lever (ENV-112CM, Med Associates) was located on the front wall on the left side of the food cup. It was 4.8 cm long, 6.3 cm above the grid floor, and protruded 1.9 cm from the front wall when extended. The chambers were illuminated by a 7.5-W incandescent bulb mounted to the ceiling of the sound attenuation chamber, 34.9 cm from the grid floor; ventilation fans provided background noise of 65 dBA. The two sets of chambers had unique features that allowed them to serve as different contexts but were not used for that purpose here. In one set of boxes, the floor grids were spaced 1.6 cm apart (center to center). In the other set of boxes, the floor consisted of alternating stainless-steel grids with different diameters (0.5 and 1.3 cm, spaced 1.6 cm apart). There were no other distinctive features between the two sets of chambers. Reinforcement consisted of the delivery of one 45-mg sucrose pellet into the food cup (5TUT; TestDiet, Richmond, IN). A 2,900-Hz tone (80 dB) could be delivered through a Sonalert tone generator (ENV-223AM; Med Associates) centered on the front wall above the food cup. The apparatus was controlled by computer equipment in an adjacent room.

Procedure

Experiment 1a.

Food restriction began one week prior to the beginning of training. Sessions were then conducted seven days a week at approximately the same time each day.

Magazine training.

On the first day, all rats received a single 30-min session of magazine training with the lever retracted. Sucrose pellets were delivered after 60 s on average according to a random-time (RT) 60-s schedule.
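As a rough illustration of what a random-time schedule implies, the sketch below draws pellet delivery times whose gaps average 60 s within a 30-min session. The exponential gap distribution, the function name `rt_delivery_times`, and the seed are assumptions made for illustration; the text does not describe how the control software generated the intervals.

```python
import random

def rt_delivery_times(mean_interval_s=60.0, session_s=30 * 60, seed=0):
    """Return pellet delivery times (in s) for a random-time schedule.

    Assumption: gaps between deliveries are exponentially distributed
    with the given mean; deliveries do not depend on behavior.
    """
    rng = random.Random(seed)
    times = []
    t = rng.expovariate(1.0 / mean_interval_s)
    while t < session_s:
        times.append(t)
        t += rng.expovariate(1.0 / mean_interval_s)
    return times

times = rt_delivery_times()
print(len(times), "deliveries scheduled in the 30-min session")
```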

Acquisition.

For half the rats, lever insertion into the chamber was the discriminative stimulus (S; Group Lever). For the remaining half, the tone was the S (Group Tone). Instrumental training began on Day 2. For Group Lever, the session consisted of 20 insertions of the lever, upon which one press resulted in the delivery of a pellet (fixed ratio 1; FR 1) and immediate retraction of the lever. For Group Tone, the lever was present during the entire session. The first 20 presses delivered a pellet (FR 1); this was followed by 20 presentations of the tone during which a single lever press resulted in the delivery of a pellet and offset of the tone (FR 1). For each group, Day 3 consisted of 20 S presentations in which the response was reinforced according to FR 1. The S presentations were separated by a variable intertrial interval (ITI) that averaged 60 s. Throughout training, failure to complete the FR requirement within 60 s resulted in the termination of the S without reinforcement and start of the next ITI. The FR requirement was increased to FR 3 on Day 4 and to its final value of FR 5 on Day 5. Training ended after 16 sessions, which allowed the possibility of 320 reinforced responses.
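The trial contingency described above can be sketched as a small function: the S ends with reinforcement when the ratio is completed, or ends without reinforcement at the 60-s limit. The function name, the input format (press times measured from S onset), and the treatment of a press at exactly 60 s as too late are assumptions for illustration only.

```python
def run_fr_trial(press_times_s, ratio=5, timeout_s=60.0):
    """Return (reinforced, s_duration_s) for one discriminated FR trial.

    press_times_s: hypothetical lever-press times measured from S onset.
    The S ends at the press that completes the ratio, or at timeout_s
    if the ratio has not been completed by then.
    """
    valid = sorted(t for t in press_times_s if 0 <= t < timeout_s)
    if len(valid) >= ratio:
        return True, valid[ratio - 1]
    return False, timeout_s

print(run_fr_trial([0.8, 1.5, 2.1, 2.9, 3.4, 4.0]))  # (True, 3.4)
print(run_fr_trial([5.0, 20.0]))                     # (False, 60.0)
```

Passing `ratio=1` or `ratio=3` gives the contingencies used on the earlier training days.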

Reinforcer devaluation.

On the next day, half the rats in each of the Lever and Tone groups were assigned to receive the sucrose pellets paired with an injection of lithium chloride (LiCl; 20 ml/kg, 0.15 M, i.p.), and the other half were assigned to receive them unpaired. Devaluation took place in the operant chambers in six 2-day cycles, each consisting of a LiCl injection on Day 1 and no injection on Day 2. The tone and lever were absent in all sessions. On Day 1, Groups Lever Paired and Tone Paired received 50 noncontingent pellets according to an RT 60-s schedule, and Groups Lever Unpaired and Tone Unpaired were placed in the chamber for the same amount of time without pellet deliveries. After the session, all rats were removed from the chamber, immediately injected with LiCl, and returned to their home cages. On Day 2, the Unpaired groups received 50 noncontingent pellets according to the RT 60-s schedule, and the Paired groups were placed in the operant chambers but did not receive pellets. After the session, rats were returned to their home cages without a LiCl injection. In each of the following cycles, the groups received the mean number of pellets eaten by the Paired groups in the preceding cycle in order to maintain equivalent pellet exposure.
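The paired/unpaired arrangement can be laid out as a simple session plan. This is only a sketch of the design as described: the function and field names are hypothetical, and pellet counts are omitted because they depended on consumption in the preceding cycle.

```python
def devaluation_schedule(n_cycles=6):
    """List the session plan for each condition across 2-day cycles."""
    plan = []
    for cycle in range(1, n_cycles + 1):
        for day in (1, 2):
            for group in ("Paired", "Unpaired"):
                plan.append({
                    "cycle": cycle,
                    "day": day,
                    "group": group,
                    # Paired rats get pellets on Day 1; Unpaired rats on Day 2.
                    "pellets": (group == "Paired") == (day == 1),
                    # Every rat is injected after the Day 1 session only.
                    "licl": day == 1,
                })
    return plan

plan = devaluation_schedule()
for row in plan[:4]:
    print(row)
```

Both conditions therefore receive the same number of LiCl injections and the same pellet exposure; only the temporal pairing of pellets with LiCl differs.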

Test.

On the day following the last aversion-conditioning cycle, all rats received a test session containing 10 presentations of their training S (lever insertion or tone) separated by a variable 60-s ITI. Lever presses resulted in the termination of S according to FR 5, but no pellets were delivered (extinction). Trials ended after 60 s if the ratio requirement was not met. On the following two days, rats received two additional tests to confirm the effectiveness of the reinforcer devaluation treatment. First, pellet consumption was assessed by placing the rats in the chamber without the lever and delivering 10 pellets on an RT 60-s schedule. On the final day, the ability of the pellet to support instrumental responding was assessed in a reacquisition session in which rats were allowed to press the lever to earn food pellets according to their previous discriminated operant procedures.

Experiment 1b.

Experiment 1b used the same procedure as Experiment 1a except that all rats received 24 days of operant training and each training session consisted of 60 trials (three times as many as in Experiment 1a). Training allowed a total of 1,440 possible reinforced responses. Reinforcer devaluation and test procedures were the same as in Experiment 1a.

Data analysis.

The computer recorded the number of lever presses made during each S as well as during each ITI period. For groups trained with the tone S, responding during both periods was of interest, and both are reported here. (Groups trained with the lever S could not make pre-S responses.) The pre-stimulus period for each trial was defined as the period immediately before each S that had a duration equal to the mean S duration that had been obtained in that session. Individuals were excluded as outliers if their score differed from the group mean by more than 2 standard deviations (SD; i.e., a z-score beyond ±2) (Field, 2005). Analyses of variance (ANOVAs) were used to assess differences based on between-group (e.g., training S type, devaluation) and within-subject factors (e.g., stimulus period, session, 4-trial block). Planned orthogonal contrasts were conducted for all devaluation effects. The results of the pellet consumption and reacquisition tests always confirmed devaluation effects and are available in a supplemental file. Nonparametric tests were used to compare groups when data violated the normality assumption. The rejection criterion was set at .05 for all statistical tests. When relevant to the hypotheses, we report effect sizes with confidence intervals calculated according to the method suggested by Steiger (2004).
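The outlier rule can be illustrated with a minimal sketch. The function name and the example scores are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption; the text does not specify which estimator was used.

```python
from statistics import mean, stdev

def exclude_outliers(scores, criterion=2.0):
    """Split scores into (kept, dropped) using a |z| > criterion rule.

    Assumption: z-scores use the group mean and the sample SD.
    """
    m = mean(scores)
    sd = stdev(scores)
    if sd == 0:
        return list(scores), []
    kept = [x for x in scores if abs(x - m) / sd <= criterion]
    dropped = [x for x in scores if abs(x - m) / sd > criterion]
    return kept, dropped

# Hypothetical response rates for one group of eight rats.
kept, dropped = exclude_outliers([10, 12, 11, 13, 12, 11, 10, 40])
print(kept, dropped)  # [10, 12, 11, 13, 12, 11, 10] [40]
```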

Results

Experiment 1a.

Acquisition.

The rats acquired the discriminated operant response occasioned by the lever and the tone without incident. Data from the training phase are shown in Figure 1a. To facilitate a comparison of responding to the lever and tone at this time, responding in the tone was expressed as an elevation score calculated as response rate in the tone minus response rate in the pre-S period as defined above. (Pre-S responding in the Lever condition was always zero, so the elevation score in that group was response rate in S.) A Group (Lever, Tone) by Devaluation (Paired, Unpaired) by Session (16) ANOVA on the elevation scores found a significant effect of session, F(15, 420) = 35.43, MSE = 264.42, p < .001, and a significant group by session interaction, F(15, 420) = 2.93, p < .001. No other effects or interactions reached statistical significance, largest F(1, 28) = 2.62, MSE = 6,118.37.
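For concreteness, the elevation score can be computed as in the sketch below. The function names and example counts are hypothetical, and expressing rates in responses per minute is an assumption made for illustration.

```python
def response_rate_per_min(n_responses, duration_s):
    """Convert a response count over a period (in s) to responses per minute."""
    return 60.0 * n_responses / duration_s

def elevation_score(s_responses, s_duration_s, pre_responses, pre_duration_s):
    """Response rate in S minus response rate in the matched pre-S period."""
    return (response_rate_per_min(s_responses, s_duration_s)
            - response_rate_per_min(pre_responses, pre_duration_s))

# Tone example: 5 presses in a 4-s S and 1 press in the matched 4-s pre-S period.
print(elevation_score(5, 4.0, 1, 4.0))  # 60.0
# Lever example: no pre-S presses are possible, so the score equals the S rate.
print(elevation_score(5, 4.0, 0, 4.0))  # 75.0
```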

Figure 1.

Results of Experiment 1a. Panel a shows the results of the training phase for each group. Panel b shows the results of the response test for Group Lever (left) and Group Tone (right). “S” refers to the stimulus-on period and “pre” refers to the equivalent period prior to the presentation of the stimulus. Error bars are the standard error of the mean.

The group by session interaction suggests that the rats initially responded more rapidly to the lever, but the difference decreased as training progressed. To test this, Group by Devaluation by Session (4) ANOVAs were applied to blocks of 4 sessions across the training phase. Responding was higher in the Lever groups in the first two blocks, where there were significant effects of group, F(1, 28) = 29.27, MSE = 533.97, p < .001 and F(1, 28) = 4.45, MSE = 1,549.31, p = .044. There were also significant effects of session, F(3, 84) = 50.27, MSE = 93.18, p < .001 and F(3, 84) = 8.05, MSE = 1,141.22, p < .001. No effects or interactions involving the devaluation factor approached significance, largest Fs(3, 84) = 1.44 and 1.51. The group by session interaction was significant in blocks 1 and 3, F(3, 84) = 4.39, p = .006 and F(3, 84) = 3.66, MSE = 161.49, p = .016. There were no other significant effects or interactions in block 3, largest F(3, 84) = 2.50. If anything, responding was higher here in the tone group than the lever group. In the final block, there were no significant effects or interactions, largest F(3, 84) = 1.67, MSE = 162.66.

Elevation scores in Group Tone were not complicated by group differences in pre-S response rates. Response rate during the S and pre-S periods (not shown) was compared in a Devaluation by Stimulus Period (S, pre-S) by Session (16) ANOVA. There were significant effects of stimulus period, F(1, 14) = 16.38, MSE = 4,530.88, p < .001, session, F(15, 210) = 16.42, MSE = 345.90, p < .001, and a stimulus period by session interaction, F(15, 210) = 16.12, MSE = 214.61, p < .001. No effect involving devaluation approached significance, Fs < 1.

Over the course of instrumental training, the groups earned a mean of 316.1 (SEM = 1.2) total pellets. A Group by Devaluation ANOVA found no significant effects, largest F(1, 28) = 1.46, MSE = 55.59.

Test.

Reinforcer devaluation proceeded without incident; rats in the Paired groups learned to reject the sucrose pellets. On the last trial of the devaluation phase, Groups Lever Paired and Tone Paired ate a mean of 0.3 (SEM = 0.2) and 2.4 (SEM = 0.8) pellets. Results of the lever press extinction test are shown in Figure 1b. Groups Lever Paired and Tone Paired made fewer responses in the S than the corresponding Unpaired groups. The devaluation effect was not influenced by training stimulus. Response rates in the S were compared in a Group (Lever, Tone) by Devaluation (Paired, Unpaired) ANOVA. There was a significant effect of devaluation, F(1, 28) = 19.06, MSE = 431.19, p < .001, η2 = .41, 95% CI [.12, .59]. There was a nonsignificant trend suggesting more responding in Group Lever than Group Tone, F(1, 28) = 3.28, p = .081, and no group by devaluation interaction, F < 1. For Group Tone, a separate analysis compared response rate during the S and pre-S period. A Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) ANOVA found significant effects of devaluation, F(1, 14) = 12.11, MSE = 272.02, p = .004, η2 = .46, 95% CI [.07, .68], stimulus period, F(1, 14) = 15.10, MSE = 195.58, p = .002, and an interaction, F(1, 14) = 9.26, MSE = 195.58, p = .009, η2 = .40, 95% CI [.03, .63]. A follow-up comparison of response rate in S and the pre-S period revealed an effect of devaluation in each stimulus period, smallest F(1, 14) = 10.61, MSE = 10.39, p = .006, η2 = .43, 95% CI [.05, .65]. Finally, planned orthogonal contrasts compared Paired and Unpaired test response rates in S in Groups Lever and Tone. Each contrast was significant, indicating lower response rate in Groups Lever Paired and Tone Paired, smallest F(1, 28) = 7.67, MSE = 431.19, p = .010, η2 = .22, 95% CI [.01, .44].

The results of the pellet consumption and reacquisition tests verified that the pellet no longer served as a reinforcer after aversion conditioning. Groups Lever Paired and Tone Paired ate a mean of 0.4 (SEM = 0.2) and 0.5 (SEM = 0.2) of the 10 pellets offered. Groups Lever Unpaired and Tone Unpaired consumed all 10 pellets. The following day, the reacquisition test further confirmed that the pellets no longer supported lever pressing in Groups Lever Paired and Tone Paired.

Experiment 1b.

Acquisition.

Groups Lever and Tone again acquired discriminated operant responding without incident. Response rates and elevation scores across sessions of the training phase are shown in Figure 2a. (Recall that there were 60 trials in each session here as opposed to 20 in Experiment 1a.) A Group by Devaluation by Session ANOVA found a significant effect of session, F(23, 644) = 32.69, MSE = 682.85, p < .001, as well as a group by session interaction, F(23, 644) = 3.17, p < .001. No other effects or interactions reached statistical significance, largest F(1, 28) = 1.20, MSE = 42,038.33.

Figure 2.

Results of Experiment 1b. Panel a shows the results of the training phase for each group. Panel b shows the results of the response test for Group Lever (left) and Group Tone (right). “S” refers to the stimulus-on period and “pre” refers to the equivalent period prior to the presentation of the stimulus. Error bars are the standard error of the mean.

As before, the group by session interaction suggested that the rats learned more rapidly to respond to the lever, but this difference decreased as training progressed. We applied Group by Devaluation by Session (4) ANOVAs to blocks of 4 sessions across the training phase. On Sessions 1–4, there was an effect of group, F(1, 28) = 9.84, MSE = 1,509.54, p = .004, session, F(3, 84) = 7.98, MSE = 593.64, p < .001, and a Group by Session interaction, F(3, 84) = 8.71, p < .001. Responding was higher in the Lever groups at this time. There were no effects or interactions involving the group or devaluation factors in any of the remaining four-session blocks, largest F(1, 28) = 3.27, MSE = 4,493.77. For Sessions 5–8, 9–12, and 13–16, there was a significant effect of session, smallest F(3, 84) = 4.88, MSE = 213.55, p = .004, and for Sessions 17–20 and 21–24, there were no significant effects, largest F(3, 84) = 1.82, MSE = 217.31.

A separate analysis compared response rate in Group Tone during the S and pre-S periods. A Devaluation by Stimulus Period (S, pre-S) by Session (24) ANOVA found significant effects of stimulus period, F(1, 14) = 56.56, MSE = 27,479.60, p < .001, session, F(23, 322) = 22.46, MSE = 659.30, p < .001, and a stimulus period by session interaction, F(23, 322) = 17.38, MSE = 473.60, p < .001. No effect involving devaluation approached significance, Fs < 1.

The groups earned a mean of 1,436.3 (SEM = 2.3) total pellets during acquisition. A one-way ANOVA compared obtained pellets in Groups Lever Unpaired, Tone Paired, and Tone Unpaired (Group Lever Paired was excluded due to a lack of variance). The analysis found no evidence of a difference between the groups, F(2, 21) = 1.96, MSE = 70.64, p = .166.
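To put the obtained pellet totals in context, the short calculation below compares the reported means with the maximum number of reinforcers each procedure allowed (sessions × trials per session). The function name is hypothetical; the input values are those reported in the text.

```python
def proportion_earned(mean_pellets, sessions, trials_per_session):
    """Return (possible reinforcers, proportion of them actually earned)."""
    possible = sessions * trials_per_session
    return possible, mean_pellets / possible

for label, pellets, sessions, trials in [
    ("Experiment 1a", 316.1, 16, 20),
    ("Experiment 1b", 1436.3, 24, 60),
]:
    possible, prop = proportion_earned(pellets, sessions, trials)
    print(f"{label}: {pellets} of {possible} possible ({prop:.1%})")
# Experiment 1a: 316.1 of 320 possible (98.8%)
# Experiment 1b: 1436.3 of 1440 possible (99.7%)
```

In both experiments the rats therefore earned nearly every available reinforcer.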

Test.

Devaluation again proceeded without incident. On the last trial of the devaluation phase, Groups Lever Paired and Tone Paired ate a mean of 0.4 (SEM = 0.2) and 0.1 (SEM = 0.1) pellets, respectively. Response rates in the test session are shown in Figure 2b. In both the Lever (left) and Tone (right) conditions, the Paired and Unpaired groups responded similarly. This insensitivity to reinforcer devaluation (i.e., habit) did not depend on the type of S during training. A Group (Lever, Tone) by Devaluation (Paired, Unpaired) ANOVA found no significant effects or interactions, largest F(1, 28) = 2.61, MSE = 1,668.72, p = .117. A separate analysis compared response rate in the Paired and Unpaired Tone groups during the S and pre-S periods. A Devaluation by Stimulus Period ANOVA found an effect of stimulus period, F(1, 14) = 7.65, MSE = 1,075.13, p = .015. Neither the effect of devaluation nor the interaction approached significance, Fs < 1. Planned orthogonal contrasts of Paired and Unpaired groups were conducted for S response rates in Groups Lever and Tone. Neither contrast was significant, largest F(1, 28) = 1.18, MSE = 1,668.72. Despite its lack of effect on instrumental responding, the effectiveness of the reinforcer devaluation treatment was confirmed by the consumption and reacquisition tests. Groups Lever Paired and Tone Paired rejected all pellets offered and the Unpaired groups ate all 10. The following day, the reacquisition test further confirmed that the pellet no longer supported lever pressing in Groups Lever Paired and Tone Paired.

Discussion

Rats successfully acquired discriminated operant responding with either lever insertion or the tone as the S. Lever insertion controlled more responding than the tone early during conditioning, perhaps consistent with the possibility that it was more salient. However, under the present conditions, the type of S did not influence whether the response became a habit or a goal-directed action. In Experiment 1a, rats received reinforcer devaluation and testing after the amount of training used by Vandaele et al. (2017, e.g., Figure 4). In contrast to their findings, we observed sensitivity to reinforcer devaluation, indicating goal-directed action, after training with the lever-insertion S (as well as the tone). In Experiment 1b, after more extensive training, reinforcer devaluation did not reduce responding occasioned by either lever-insertion or tone. The results confirm that habit can be created with procedures involving discrete stimuli (e.g., Thrailkill et al., 2018; Vandaele et al., 2017). But there was no evidence that lever and tone differ in their ability to support goal-directed and habitual behavior under the conditions examined here. Overall, the results were highly similar with either stimulus.

Experiment 2

The goal of the second experiment was to test whether reinforcer predictability influences habit formation using the discriminated operant procedure with the tone that was developed in Experiment 1. (The selection of the tone as S was arbitrary.) Following Thrailkill et al. (2018), we compared a procedure in which every S presentation potentially predicted an earned reinforcer (continuous reinforcement; CRF) with one in which S predicted a reinforcer only half the time (partial reinforcement; PRF). The procedure differed from that of Thrailkill et al. (2018) in how it arranged PRF and CRF training and used substantially shorter trial and reinforcement schedule durations. All rats received the same number of reinforced trials in each session as in Experiment 1b. For one group, partial reinforcement was created by adding nonreinforced trials to the ITI between reinforced trials. A second group received continuous reinforcement (Group CRF) consisting of S presentations whose timing was yoked to the onset of the reinforced trials in Group PRF. If habit development assessed by reinforcer devaluation depends on receiving reinforcers predictably in each trial (Thrailkill et al., 2018, Experiment 4), we should observe goal-directed responding in Group PRF and habitual responding in Group CRF. To avoid continuous reinforcement in the PRF group that might be produced by response-contingent stimulus offset (a possible conditioned reinforcer), Experiment 1’s procedure was modified so that the tone terminated on every trial in a manner that was not contingent on the response.

Method

Subjects and apparatus

Thirty-two naïve female Wistar rats were purchased from the same supplier and maintained under the same conditions as in Experiment 1. The apparatus consisted of the same conditioning chambers used in Experiment 1.

Procedure

Magazine training.

On the first day of training, all rats received a 30-min session of magazine training in the operant chambers. Sucrose pellets were delivered freely on an RT 60-s schedule.

Acquisition.

On the next day, rats received a 30-min session of lever-press training in which the lever was continuously present and presses were reinforced according to an RI 30-s schedule. Discriminated operant training began the following day and continued for the remaining 24 sessions of the phase. Sixteen rats assigned to Group PRF received 120 presentations of a 6-s tone and were reinforced with a sucrose pellet, on average, on 50% of the trials according to a VI 3-s schedule of reinforcement. The ITI was variable around 30 s. The remaining 16 rats, assigned to Group CRF, received only the reinforced trials yoked to a master rat in Group PRF. That is, tone presentations in this group occurred at the same points in time that reinforced trials did in Group PRF. Group CRF therefore received 60 trials separated by a variable 60-s ITI and could earn a sucrose pellet on every trial. The VI parameter was based on the mean trial length obtained by Group Tone in Experiment 1b during the final session of the training phase. The VI was modified to limit intervals to a range of 1 s to 4 s. The tone duration was fixed at 6 s, and its offset was thus not contingent on a response. This change from Experiment 1 was designed to avoid conditioned reinforcement that could have otherwise occurred on every trial resulting from response-contingent offset of S (Locurto, Terrace, & Gibbon, 1978).
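The yoking arrangement can be illustrated with a minimal sketch. It is not the control code used in the experiments: the function names, the uniform ITI distribution, and the random choice of exactly 60 reinforced trials are assumptions made only for illustration. The sketch shows how the tone onsets for a Group CRF rat are taken from the onsets of the reinforced trials of its master rat in Group PRF.

```python
import random


def make_prf_schedule(n_trials=120, n_reinforced=60, tone_s=6.0,
                      iti_range=(15.0, 45.0), seed=0):
    """Return (onsets, reinforced_flags) for a hypothetical PRF session.

    Assumption: ITIs are drawn uniformly around a 30-s mean; the
    distribution actually used is not specified here.
    """
    rng = random.Random(seed)
    reinforced = set(rng.sample(range(n_trials), n_reinforced))
    onsets, flags, t = [], [], 0.0
    for i in range(n_trials):
        t += rng.uniform(*iti_range)  # variable ITI before each tone
        onsets.append(t)
        flags.append(i in reinforced)
        t += tone_s                   # fixed 6-s tone, offset not response-contingent
    return onsets, flags


def yoke_crf(onsets, flags):
    """CRF tone onsets are the onsets of the master rat's reinforced PRF trials."""
    return [t for t, is_reinforced in zip(onsets, flags) if is_reinforced]


prf_onsets, prf_flags = make_prf_schedule()
crf_onsets = yoke_crf(prf_onsets, prf_flags)
```

Under these assumptions the CRF rat receives half as many tones as its master, each at a moment when the master's tone was a reinforced trial.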

Reinforcer devaluation.

Rats received six 2-session cycles of reinforcer devaluation in the same manner as in Experiment 1.

Test.

As in Experiment 1, testing occurred across three days. On Day 1, lever responding was tested in a session containing 10 presentations of the 6-s tone S separated by a variable 42.4-s ITI. The ITI duration was the geometric mean of 30 s and 60 s, chosen to reduce possible differences in generalization decrement from training ITI to test ITI between Groups CRF and PRF (Church & Deluty, 1977). Lever presses did not result in a pellet (extinction). On Day 2, the strength of reinforcer devaluation was tested by delivering 10 pellets on an RT 60-s schedule. Finally, on Day 3, all rats received a session in which they could press the lever to earn food pellets according to their previous discriminated operant procedures.
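The choice of test ITI can be checked with a short calculation. The sketch below is illustrative and its variable names are ours. It shows that the geometric mean of the two training ITIs stands in the same proportion to each, so the proportional change from training to test is matched across groups.

```python
import math

train_iti_prf = 30.0  # s, training ITI in Group PRF
train_iti_crf = 60.0  # s, training ITI in Group CRF

# Geometric mean of the two training ITIs
test_iti = math.sqrt(train_iti_prf * train_iti_crf)

# Proportional change from training to test in each group
ratio_prf = test_iti / train_iti_prf   # test ITI is longer than PRF training ITI
ratio_crf = train_iti_crf / test_iti   # test ITI is shorter than CRF training ITI

print(round(test_iti, 1))  # 42.4
```

Both ratios equal the square root of 2 (about 1.41): the test ITI is longer than the PRF training ITI by the same factor by which it is shorter than the CRF training ITI.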

Results

Data from a rat in Group CRF Unpaired and a rat from Group PRF Paired were excluded because their response totals in the test session were greater than 2 SD above the group means (Zs = 3.69 and 7.26; Field, 2005). Since the S duration was fixed at 6 s, the pre-stimulus period was the 6 s before each S. For the test session, we analyzed the number of responses averaged over 10 trials.

Acquisition.

The rats acquired the discriminated operant response without incident. The left panels of Figure 3 show mean responses during the S and pre-S periods across sessions of the training phase. For Group PRF, we focused on the reinforced trials. The training procedures resulted in similar responding in the reinforced S, though responding tended to be higher in Group PRF during both the S and the pre-S period. A Group (CRF, PRF) by Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) by Session (24) ANOVA found significant effects of group, F(1, 26) = 14.75, MSE = 36.29, p = .001, stimulus period, F(1, 26) = 112.69, MSE = 15.04, p < .001, and session, F(23, 598) = 4.65, MSE = 0.49, p < .001. It also found several interactions: group by stimulus period, F(1, 26) = 9.39, p = .005, stimulus period by session, F(23, 598) = 6.27, MSE = 0.25, p < .001, group by stimulus period by session, F(23, 598) = 2.35, p < .001, and the four-way interaction, F(23, 598) = 2.30, p = .001. The group by session interaction approached significance, F(23, 598) = 1.50, p = .064. To understand the pattern, we analyzed responding in S and pre-S periods in separate Group by Devaluation by Session ANOVAs. In the S periods, there was a significant effect of session, F(23, 598) = 10.77, MSE = 0.29, p < .001, and a group by session interaction, F(23, 598) = 1.82, p = .011. The other effects and interactions did not reach significance; the largest Fs were for group and the group by devaluation interaction, F(1, 26) = 3.50, MSE = 17.82, p = .071, and F(1, 26) = 3.99, p = .056. In the pre-S periods, there were significant effects of group, F(1, 26) = 18.30, MSE = 33.50, p < .001, session, F(23, 598) = 1.59, MSE = 0.46, p = .041, and a group by session interaction, F(23, 598) = 1.76, p = .016. There was more responding in the pre-S period in the PRF groups. The other effects or interactions did not reach significance, largest F = 1.10.

Figure 3.

Results of Experiment 2. Top. Results of the training (Left) and test (Right) phases for Group PRF (partial reinforcement). Bottom. Results of the training (Left) and test (Right) phases for Group CRF (continuous reinforcement). “S” refers to the stimulus-on period and “pre” refers to the equivalent period prior to the presentation of the stimulus. Error bars are the standard error of the mean.

In the final session, a Group by Devaluation by Stimulus Period ANOVA found significant effects of group, F(1, 26) = 15.53, MSE = 2.12, p < .001, stimulus period, F(1, 26) = 100.41, MSE = 0.87, p < .001, and their interaction, F(1, 26) = 6.78, p = .015. The other effects and interactions were not significant, largest F = 1.67. To understand the interaction, we conducted separate Group by Devaluation ANOVAs for the S and pre-S periods. For S, there was an effect of group, F(1, 26) = 5.09, MSE = 1.07, p = .033, consistent with a higher response rate in Group PRF, and no other significant effects, largest F = 2.54. For pre-S, there was also an effect of group, F(1, 26) = 17.43, MSE = 1.91, p < .001, indicating greater pre-S responding in Group PRF. There were no other significant effects, Fs < 1. Elevation scores in the final session were 3.2 (SD = 1.1), 2.9 (SD = 0.6), 1.7 (SD = 1.9), and 1.8 (SD = 1.4) in Groups CRF Paired, CRF Unpaired, PRF Paired, and PRF Unpaired. A Group by Devaluation ANOVA found a significant effect of group, F(1, 26) = 6.78, MSE = 1.74, p = .015, and no other significant effects or interaction, Fs < 1. The analysis suggests that the tone occasioned fewer responses in Group PRF than Group CRF at the end of training.

By the end of training, the groups had earned a mean of 1,366.5 (SEM = 7.4) pellets. A Group by Devaluation ANOVA found no significant effects, Fs < 1.

Test.

Aversion conditioning proceeded without incident. Groups CRF Paired and PRF Paired ate a mean of 1.3 (SEM = 0.5) and 0.3 (SEM = 0.2) pellets in the final aversion conditioning trial. (The Unpaired groups ate all pellets offered.) Results from the test of lever pressing are shown in the right panels of Figure 3. Following devaluation, responding in the S was not reduced in Group CRF, suggesting habit, but it was suppressed in Group PRF, suggesting goal direction. Response rate in the pre-S periods was similar in each group at this time. A Training (CRF, PRF) by Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) ANOVA confirmed these observations. There was a significant effect of stimulus period, F(1, 26) = 82.04, MSE = 1.50, p < .001. Importantly, there was a significant training by devaluation by stimulus period interaction, F(1, 26) = 10.25, p = .004, η2 = .28, 95% CI [.04, .50]. The group by devaluation interaction approached significance, F(1, 26) = 3.64, MSE = 3.95, p = .078. No other effects approached significance, largest F = 1.27. Separate Training by Devaluation ANOVAs compared responding in each stimulus period. In S, there was a significant training by devaluation interaction, F(1, 26) = 6.96, MSE = 4.12, p = .014, η2 = .21, 95% CI [.01, .44], and no other effects, Fs < 1. In the pre-S periods, there were no effects or interaction, largest F = 2.01, MSE = 1.34. Planned orthogonal contrasts of Paired and Unpaired groups in the S confirmed no devaluation effect in Group CRF, F(1, 26) = 1.40, p = .247, but a significant one in Group PRF, F(1, 26) = 6.48, MSE = 4.12, p = .017, η2 = .20, 95% CI [.01, .43]. The results are consistent with habit in Group CRF and goal-directed action in Group PRF.

The effectiveness of the devaluation treatment was confirmed in the subsequent pellet consumption test: Groups CRF Paired and PRF Paired ate a mean of 3.4 (SEM = 1.2) and 0.6 (SEM = 0.4) of the 10 pellets offered, while Groups PRF Unpaired and CRF Unpaired ate all 10 pellets. The reacquisition test further confirmed that the pellets no longer functioned to support lever pressing in Groups CRF Paired and PRF Paired.

Discussion

Rats acquired the discriminated operant response under continuous and partial reinforcement. During testing, the lack of a devaluation effect in the CRF groups replicated the results of Experiment 1b. The modified method, which arranged the same average delay to reinforcement as in Experiment 1 but without the response-contingent termination of S on every trial, thus continued to produce habit. But more important, the reinforcer devaluation effect evident in the PRF groups suggested the presence of goal-direction rather than habit. That result extends the findings of Thrailkill et al. (2018) and suggests generality for the role of reinforcer predictability in habit learning.

One additional result was the higher pre-S responding in Group PRF relative to Group CRF during the acquisition phase. The result may not be surprising from the perspective of theories of associative learning: The nonreinforced trials delivered in the PRF procedure might weaken the conditioning of S, and S would therefore be less effective at competing with (blocking) contextual conditioning, which presumably controls responding in the pre-S period. However, differences in pre-S responding also make it difficult to know the strength of conditioning and operant responding in S. This could introduce the possibility that simple differences in the level of conditioning in S, rather than reinforcer predictability per se, account for the difference in sensitivity to devaluation between CRF and PRF groups. Thrailkill et al. (2018) reported similar action in PRF and habit in CRF in groups that did not differ in levels of conditioning to S. And consistent with that, there was equivalent responding in S, and no pre-S differences, observed during the lever press test in the present experiment. We nonetheless conducted Experiment 3 with methods that were designed to further reduce this possibility.

Experiment 3

Experiment 3 arranged a different PRF procedure by deleting reinforced trials scheduled in the CRF procedure. (In the previous experiment, PRF was created by adding nonreinforced trials between scheduled reinforced trials.) The new method of creating PRF increases the time between reinforced trials and the time within S before each reinforcer by the same factor. According to some theories (e.g., Gallistel & Gibbon, 2000), it may therefore be expected to create equivalent conditioning to S and therefore instrumental responding in S in each group. It might also therefore create equivalent competition with the context, and hence equivalent pre-S responding. Using this method, Experiment 3a compared sensitivity to reinforcer devaluation in CRF and PRF groups trained with the tone S, and Experiment 3b tested with the lever insertion as the S. Note that the latter method prevented pre-S responding, and thus allowed us to examine conditioning in S free of potential complications resulting from differences in pre-S responding.
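The scaling argument can be made concrete with a simplified calculation. This is a sketch under stated assumptions, not a statement of the Gallistel and Gibbon (2000) model: it uses the 6-s stimulus and 30-s ITI of Experiment 3a, counts the whole stimulus duration as time within S, and ignores within-trial VI timing. The function name is hypothetical.

```python
def per_reinforcer_times(stim_s, iti_s, p_reinforced):
    """Return (time in S per reinforcer, total session time per reinforcer)."""
    trials_per_reinforcer = 1.0 / p_reinforced
    s_time = stim_s * trials_per_reinforcer
    cycle_time = (stim_s + iti_s) * trials_per_reinforcer
    return s_time, cycle_time


crf_s, crf_cycle = per_reinforcer_times(6.0, 30.0, 1.0)  # every trial reinforced
prf_s, prf_cycle = per_reinforcer_times(6.0, 30.0, 0.5)  # half the trials reinforced

# Both quantities double under PRF, so their ratio is unchanged.
crf_ratio = crf_cycle / crf_s
prf_ratio = prf_cycle / prf_s
```

With these values, time in S per reinforcer goes from 6 s to 12 s and time between reinforcers from 36 s to 72 s, leaving the ratio at 6 in both groups.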

Method

Subjects and apparatus

Sixty-four naïve female Wistar rats (32 in Experiment 3a and 32 in Experiment 3b) were purchased from the same supplier and maintained under the same conditions as in the previous experiments. The apparatus was also the same.

Procedure

Experiment 3a.

Magazine, initial response, and acquisition.

Initial magazine and lever press training were the same as in Experiment 2. The lever was continuously present throughout the session and a 6-s tone (2,900 Hz; 80 dB, Sonalert) served as the S. Group CRF received 60 presentations of the tone and could earn a reinforcer on every trial according to the modified VI 3-s schedule. The ITI averaged 30 s. (The ITI was half as long as that experienced by Group CRF in Experiment 2.) Group PRF received the same trial schedule except that half the reinforcers were omitted. PRF sessions consisted of 120 trials; 60 trials in which a reinforcer could be earned according to the modified VI 3-s schedule, and 60 nonreinforced trials. The distribution of runs of reinforced and nonreinforced trials was the same as that experienced by Group PRF in Experiment 2. Sessions lasted approximately 64 min and 32 min for Groups PRF and CRF, respectively. Sessions were conducted once a day for 24 days.

Reinforcer devaluation.

Rats received six 2-session cycles of reinforcer devaluation in the same manner as in Experiment 1. There was a Paired and an Unpaired group.

Test.

As in Experiments 1 and 2, rats were tested for lever pressing in extinction, pellet consumption, and reacquisition. On Day 1, all rats received a session consisting of 10 presentations of the 6-s tone S separated by a variable 30-s ITI. Lever presses did not result in reinforcement (extinction). On Day 2, the strength of reinforcer devaluation was tested by delivering 10 pellets on an RT 60-s schedule. On Day 3, rats received a reacquisition session with the training contingencies reinstated.

Experiment 3b.

Magazine training.

Initial magazine training was the same as in Experiment 1. Pellets were delivered on an RT 60-s schedule.

Acquisition.

As in Experiment 1, the second session consisted of 20 insertions of the lever, upon which one press resulted in the delivery of a pellet (FR1) and retraction of the lever. The lever otherwise retracted after 60 s without a press. Lever insertions were separated by a variable 30-s ITI beginning with lever retraction. For the subsequent sessions, each trial consisted of the 6-s insertion of the lever. Successive trials were separated by a variable 30-s ITI. For Group PRF, a reinforcer could be earned in 60 of the 120 trials on the same modified VI 3-s schedule as in Experiment 2. The distribution of runs of reinforced and nonreinforced trials was the same as that programmed for Group PRF in Experiment 2. Group CRF received 60 presentations of the lever and could earn a reinforcer on each trial according to the modified VI 3-s schedule. Sessions lasted approximately 64 min and 32 min for Groups PRF and CRF, respectively. Rats received one session a day for 24 days.

Reinforcer devaluation.

Paired and Unpaired groups received six 2-session cycles of reinforcer devaluation in the same manner as in Experiment 1.

Test.

As in Experiments 1 and 2, rats were tested for lever pressing, pellet consumption, and reacquisition. On Day 1, all rats received a session consisting of ten 6-s presentations of the lever separated by a variable 30-s ITI. Lever presses had no programmed consequence. On Day 2, rats received a consumption test with 10 pellets delivered on an RT 30-s schedule without the lever. On Day 3, rats received an opportunity to reacquire responding under the training conditions.

Results

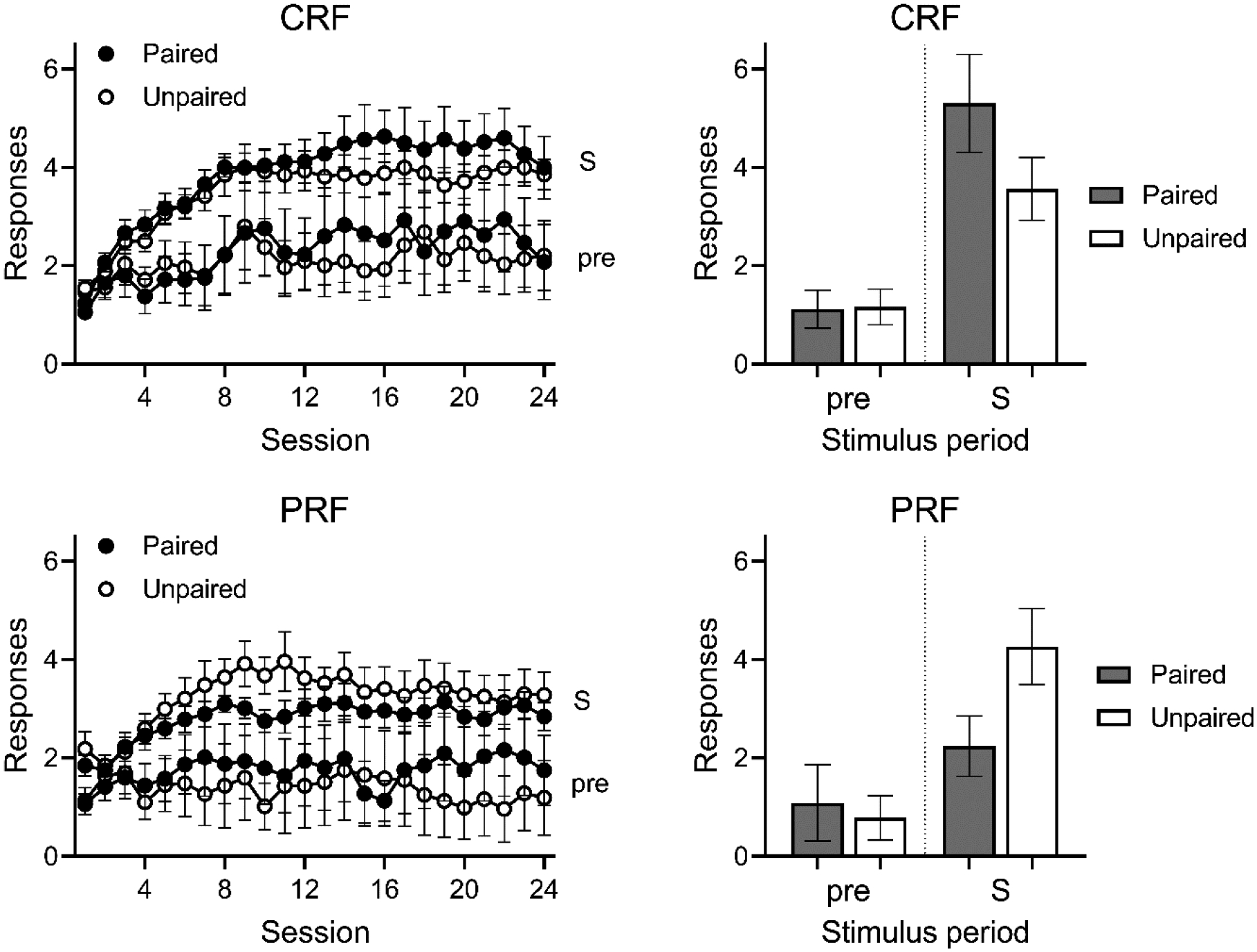

Experiment 3a

Acquisition.

The results of Experiment 3a are summarized in Figure 4. The rats again acquired the operant response occasioned by the tone (at left). Two rats in Group PRF Unpaired responded at an abnormally high rate during training (z = 3.29 and 2.46) and two rats in Group CRF Paired failed to acquire a taste aversion to the sucrose pellet after 6 pairings. These four rats were excluded from all analyses, leaving ns of 6, 8, 8, and 6 for groups CRF Paired, CRF Unpaired, PRF Paired, and PRF Unpaired. The analysis again focused on the reinforced trials. A Group (CRF, PRF) by Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) by Session (24) ANOVA found significant effects of stimulus period, F(1, 24) = 26.52, MSE = 26.87, p < .001, session, F(23, 552) = 10.18, MSE = 0.88, p < .001, and a stimulus period by session interaction, F(23, 552) = 5.79, p < .001. There was also a significant devaluation (a dummy factor during training) by session interaction, F(23, 552) = 2.00, MSE = 0.86, p = .004. No other effect or interaction approached significance, largest F(1, 24) = 2.39, MSE = 58.18. Responding during the S and pre-S periods was analyzed with separate Group by Devaluation by Session ANOVAs. For the pre-S periods, there was an effect of session, F(23, 552) = 1.86, MSE = 0.86, p = .009, and no other significant effects, Fs < 1. Thus, the method of scheduling the nonreinforced trials was successful at eliminating the baseline difference between CRF and PRF that was present in Experiment 2. For responses in S, the analysis found significant effects of group, F(1, 24) = 5.23, MSE = 13.81, p = .031, session, F(23, 552) = 22.12, MSE = 0.45, p < .001, and a group by session interaction, F(23, 552) = 2.77, p < .001. No effects involving devaluation were significant, largest F(1, 24) = 1.85.
Finally, a Group by Devaluation ANOVA comparison of responding in the S from the final session of training found higher responding in Group CRF, F(1, 24) = 4.31, MSE = 1.19, p = .049, but no effect of devaluation or interaction, Fs < 1. The same analysis of pre-S period responding found no significant effects, Fs < 1.

Figure 4.

Results of Experiment 3a. Top. Results of the training (Left) and test (Right) phases for Group PRF (partial reinforcement). Bottom. Results of the training (Left) and test (Right) phases for Group CRF (continuous reinforcement). “S” refers to the stimulus-on period and “pre” refers to the equivalent period prior to the presentation of the stimulus. Error bars are the standard error of the mean.

The groups earned a mean total of 1,352.0 (SEM = 11.0) pellets during the training phase. Levene’s test indicated that variances were not equal; we therefore compared pellets earned in each group using a nonparametric Kruskal-Wallis test, which did not find a statistically significant difference in pellets earned among the groups, H(3) = 7.58, p = .056.

Test.

Aversion conditioning proceeded without incident. Paired rats in Groups CRF and PRF ate a mean of 0.5 (SEM = 0.2) and 0.5 (SEM = 0.2) pellets, respectively, in the final conditioning trial. Results from the test are shown at right in Figure 4. Responding in Group PRF was reduced in the Paired group compared to the Unpaired group, suggesting a goal-directed action. In contrast, Group CRF showed a trend in the opposite direction (if anything), again suggesting habit. A Group (CRF, PRF) by Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) ANOVA found a significant effect of stimulus period, F(1, 24) = 53.29, MSE = 2.56, p < .001, a group by devaluation interaction, F(1, 24) = 4.69, MSE = 4.12, p = .040, η2 = .16, 95% CI [.00, .40], and a group by devaluation by stimulus period interaction, F(1, 24) = 6.22, p = .020, η2 = .21, 95% CI [.00, .44]. The main effects of group and devaluation did not approach significance, largest F = 2.19. In order to understand the interactions, separate Group by Devaluation ANOVAs were conducted on responding during the S and pre-S periods. For S, there was a significant group by devaluation interaction, F(1, 24) = 6.03, MSE = 5.83, p = .022, η2 = .20, 95% CI [.00, .44]. Neither main effect approached significance, largest F = 1.07. For pre-S, a similar analysis found a nonsignificant trend suggesting greater pre-S responding in Group CRF rather than PRF, F(1, 24) = 3.65, MSE = 0.85, p = .068, but no effect of devaluation or interaction, Fs < 1. Planned orthogonal contrasts of test responding in S found no effect in Group CRF, F(1, 24) = 2.71, MSE = 3.81, p = .113, and a marginal devaluation effect in Group PRF, F(1, 24) = 3.70, p = .066, η2 = .13, 95% CI [.00, .37]. The effectiveness of the devaluation treatment was confirmed in the pellet consumption test: Paired rats in Groups CRF and PRF ate a mean of 2.3 (SEM = 1.0) and 0.7 (SEM = 0.3) of the 10 pellets offered, while unpaired rats consumed all 10 pellets.
A final reacquisition test further confirmed that the pellets could no longer support lever pressing in the paired rats.

Figure 5.

Combined test results of the CRF (continuous reinforcement) and PRF (partial reinforcement) groups in Experiments 2 and 3a. “S” refers to the stimulus-on period and “pre” refers to the equivalent period prior to the presentation of the stimulus. Error bars are the standard error of the mean.

Combined analysis of Experiments 2 and 3a.

To further evaluate the hypotheses that the PRF and CRF procedures produced sensitivity and insensitivity to devaluation, respectively, we analyzed the data after combining Experiments 2 and 3a. The combined test data from the Paired (n = 14) and Unpaired (n = 15) CRF groups and Paired (n = 15) and Unpaired (n = 14) PRF groups are shown in the top panels of Figure 5. An Experiment (2, 3a) by Group (CRF, PRF) by Devaluation (Paired, Unpaired) by Stimulus Period (S, pre-S) ANOVA assessed the mean responding in the test. There was a group by devaluation interaction, F(1, 50) = 5.49, MSE = 4.21, p = .023, η2 = .10, 95% CI [.00, .27], as well as significant effects of stimulus period, F(1, 50) = 154.02, MSE = 1.49, p < .001, and a group by devaluation by stimulus period interaction, F(1, 50) = 19.98, p < .001, η2 = .29, 95% CI [.09, .46]. The remaining effects did not reach significance, largest F(1, 50) = 2.19, MSE = 4.21. Separate ANOVAs compared responding in S and pre-S periods. For responding in the S, there was a significant group by devaluation interaction, F(1, 50) = 13.28, MSE = 3.97, p = .001, η2 = .21, 95% CI [.04, .39], and no other effects, largest F = 1.93. To evaluate our hypothesis regarding the effect of CRF vs. PRF training on devaluation, we compared each training group in an Experiment (2, 3a) by Devaluation (Paired, Unpaired) ANOVA. For Group CRF, no effect reached significance. There was a nonsignificant trend suggesting greater responding in Group CRF Paired than Unpaired, F(1, 25) = 3.90, MSE = 4.07, p = .059. For Group PRF, there was a significant effect of devaluation, F(1, 25) = 10.20, MSE = 3.87, p = .004, η2 = .29, 95% CI [.04, .51], and no other effects, Fs < 1. For the pre-S period, no effects approached significance, largest F = 1.33, MSE = 1.73. The main conclusion is that continually reinforcing S in the CRF groups produced habit, and partially reinforcing S in the PRF groups maintained a goal-directed action.

The bottom row of Figure 5 shows test responses in S as a proportion of baseline responding (mean responses per trial from the final four training sessions). These data were evaluated because acquisition in Experiments 2 and 3a suggested small between-group trends that seemed to carry over into the test results. However, even when expressed as a proportion of baseline responding, Paired rats responded less than Unpaired rats in Group PRF, but not in the CRF condition. This description is supported by an Experiment (2, 3a) by Group (CRF, PRF) by Devaluation (Paired, Unpaired) ANOVA, which confirmed a significant group by devaluation interaction, F(1, 50) = 8.77, MSE = 0.21, p = .005, η2 = .15, 95% CI [.02, .32]. There was a significant devaluation effect in Group PRF, F(1, 50) = 8.36, MSE = 0.21, p = .006, η2 = .14, 95% CI [.01, .32], and none in Group CRF, F(1, 50) = 1.68. This analysis suggests that trending differences in response rate present in the training phases, while not significant, do not account for the finding of goal-directed action in Group PRF and habit in Group CRF.
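The proportion-of-baseline measure can be sketched as follows. This is an illustration only: the function name and the numbers are hypothetical, and we assume the score is a rat's mean responses per test trial in S divided by its mean responses per trial in S over the final four training sessions.

```python
from statistics import mean


def proportion_of_baseline(test_per_trial, training_session_means, n_baseline=4):
    """Mean test responding in S divided by baseline from the last sessions."""
    baseline = mean(training_session_means[-n_baseline:])
    if baseline == 0:
        raise ValueError("baseline responding is zero; proportion undefined")
    return mean(test_per_trial) / baseline


# Hypothetical data for a single rat (not values from the experiments)
training = [3.8, 4.1, 4.0, 4.2, 3.9, 4.3]  # mean responses per trial, by session
test = [2.0, 2.5, 1.5, 2.0]                # responses in S on each test trial

score = proportion_of_baseline(test, training)
```

A score below 1 indicates less responding in the test than at the end of training; comparing Paired and Unpaired rats on this score removes between-group differences in terminal response rate from the devaluation comparison.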

Experiment 3b

Acquisition.

The rats learned to lever press upon lever insertion without incident (see left side of Figure 6). A Group (CRF, PRF) by Devaluation (Paired, Unpaired) by Session (24) ANOVA found a significant effect of session, F(23, 644) = 7.29, MSE = 0.52, p < .001. There were no effects or interactions involving group or devaluation, largest F(23, 644) = 1.22. A comparison of mean responses on the final session found no differences between the groups, Fs < 1. The CRF and PRF procedures thus produced equivalent levels of responding. Rats earned a mean of 1,391.6 (SEM = 3.8) pellets during the phase. A Group by Devaluation ANOVA found no significant effects, largest F(1, 28) = 3.34, MSE = 436.30.

Figure 6.

Results of Experiment 3b. Top. Results of the training (Left) and test (Right) phases for Group PRF (partial reinforcement). Bottom. Results of the training (Left) and testing (Right) phases for Group CRF (continuous reinforcement). Error bars are the standard error of the mean.

Test.

Aversion conditioning proceeded without incident. Paired rats in Groups CRF and PRF ate a mean of 0.5 (SEM = 0.2) and 0.2 (SEM = 0.1) pellets, respectively, in the final trial. Results from the lever-press test are shown at right in Figure 6. They clearly suggest similar levels of responding in each training group and no difference between the Paired and Unpaired groups. A Group (CRF, PRF) by Devaluation (Paired, Unpaired) ANOVA found no significant effects, Fs < 1. Planned orthogonal contrasts of Paired and Unpaired groups also found no evidence of differences after CRF and PRF training, Fs < 1. The effectiveness of the devaluation treatment was confirmed in the subsequent pellet consumption test: Paired rats in Groups CRF and PRF ate a mean of 0.9 (SEM = 0.3) and 0.6 (SEM = 0.2) of the 10 pellets offered, while unpaired rats ate all 10 pellets. A final reacquisition test further confirmed that the pellets no longer supported responding in the paired rats.

Discussion

Operant responding was again robust in each training condition. The new method of scheduling reinforced and nonreinforced trials successfully eliminated differences in pre-S responding between the CRF and PRF groups. In Experiment 3a, there was a modestly higher response rate in Group CRF than PRF, which may suggest slightly better conditioning in Group CRF. This result is not predicted by models of conditioning that emphasize rate of reinforcement across accumulating time in the stimulus (Gallistel & Gibbon, 2000). On the other hand, there was no effect of CRF vs. PRF in the lever insertion experiment (Experiment 3b). The test results provided several new insights. First, the results with the tone (Experiment 3a) provide further support for a role for reinforcer predictability in habit formation. The combined analysis indicates that the CRF procedure yielded habit, whereas the PRF procedure yielded goal-directed action after pre-S responding was equated during training. Second, the results of Experiment 3b suggest that responding to the lever S was less influenced by reinforcer predictability. Habit was observed there with a PRF procedure that had yielded goal-directed action with the tone in Experiments 2 and 3a. Thus, a variable that distinguishes lever from the tone, such as the lever’s stronger salience (Experiment 1), may have overridden the effect of partial reinforcement on habit learning in Experiment 3b.

General Discussion

As noted in the Introduction, the study of habit learning in discriminated operant (as opposed to free operant) situations is rare despite the idea that everyday habits are controlled by discrete trigger stimuli (e.g., James, 1890; Lally & Gardner, 2013; Wood, 2019). Experiment 1 therefore adapted a new discriminated operant method introduced by Vandaele et al. (2017) and compared the effectiveness of two such trigger stimuli, lever insertion (as used by Vandaele et al.) and tone, that set the occasion for lever pressing. Lever insertion is often assumed to be especially salient (Beckmann & Chow, 2015; Holland et al., 2014; Meyer et al., 2014), and consistent with that view, it promoted lever pressing more quickly than the tone did over trials. (We would note that this comparison is slightly complicated by the fact that the Tone groups could make nonreinforced lever presses during the ITI and the Lever groups could not.) However, both Ss came to control lever pressing at comparable rates during later trials. And both supported goal-directed action after a modest amount of training (Experiment 1a) and habit after more extended training (Experiment 1b). Despite their possible difference in salience, lever and tone supported similar action and habit processes with the continuous-reinforcement method used in Experiment 1.

Experiments 2 and 3 then provided new tests of the hypothesis that reinforcer predictability plays a role in discriminated habit learning (Thrailkill et al., 2018). In their key experiment, Thrailkill et al. had found that habit was learned when a 30-s tone signaled reinforcement (on VI 30) on every trial, but not when it signaled reinforcement on only 50% of the trials. Experiments 2 and 3 likewise compared 100% (CRF) and 50% (PRF) conditions, but with a briefer 6-s stimulus that was inspired by the relatively short stimulus duration and average delay to reinforcement that emerged in Experiment 1. To avoid the continuous conditioned reinforcement that would be created by using the response-contingent stimulus offset procedure of Experiment 1, we eliminated the offset contingency and instead presented the cue consistently for 6 s; the response was reinforced on a VI 3-s schedule within that period. In Experiment 2, the method produced habit in a CRF group that earned a reinforcer on every trial, but goal-directed action in a PRF group that received a reinforcer on only half the trials (as in Thrailkill et al., 2018). Those results were extended in Experiment 3, where a different method of trial spacing better equated pre-S responding in the PRF and CRF groups. Here again habit was produced in Group CRF and goal-directed action was produced in Group PRF. Thus, over a range of conditions, training a discriminated operant response with a PRF schedule prevented habit learning that otherwise developed under CRF. A discriminated habit thus develops when the reinforcer is consistently predicted by S.

In the acquisition phases of Experiments 2 and 3a, responding occasioned by S in PRF groups appeared weaker than responding in CRF groups. However, the groups earned a similar number of reinforcers during training and never differed in responding during testing. Responding in S in both conditions was also always strong. Moreover, our previous results found that PRF supported goal-directed action and CRF supported habit when responding in the S and pre-S was equivalent during acquisition (Thrailkill et al., 2018, Experiment 4). These observations suggest that weaker conditioning after PRF training may not be a crucial factor in explaining why PRF did not support habit learning.

Experiment 3b went on to use Experiment 3a’s method with the lever-insertion stimulus instead of the tone. This time, habit was observed in both PRF and CRF conditions, suggesting that habit learning with lever insertion might be less affected by reinforcer predictability. Lever insertion has become a common CS for studying Pavlovian phenomena such as autoshaping and sign-tracking in rats (e.g., Boakes, 1977; Robinson & Flagel, 2009), and recent results from other laboratories also suggest that it may be an especially salient stimulus. For example, a lever-insertion CS is resistant to blocking by an auditory CS (Holland et al., 2014). Based on these and other findings, Holland has suggested that lever insertion has unique properties as a Pavlovian stimulus (but see Derman, Schneider, Juarez, & Delamater, 2018). The results of Experiment 3b may be consistent with the idea that the salient properties of lever insertion might overpower the influence of partial reinforcement on preventing habit learning. Recall that there was no difference in Experiment 1 between the lever and the tone in goal-direction and habit learning when training had occurred on a continuous reinforcement schedule. Instead, the fact that lever and tone produced different results with partial reinforcement (Experiment 3) implies that stimulus salience might interact with the effects of nonreinforced trials, perhaps by reducing their impact. Future research will be necessary to further understand why this might occur. But consistent with the possibility, there was little difference in responding between the CRF group and the PRF group (with its nonreinforced trials) during acquisition when lever insertion was the stimulus in Experiment 3b.

Role of Pavlovian contingencies

Lever insertion has been widely used to study Pavlovian learning processes (e.g., Flagel, Akil, & Robinson, 2009), and this raises the possibility that the lever-insertion procedure used by Vandaele et al. (2017) and us to study habit introduces an additional role for Pavlovian learning. However, the evidence suggests that a purely Pavlovian perspective cannot explain the current findings. A key result was that lever pressing was unaffected by reinforcer devaluation after extended training (Experiment 1; see also Experiments 2 and 3). The development of insensitivity to reinforcer devaluation is consistent with a role for habit, but not Pavlovian conditioning, because Pavlovian responding does not appear to become insensitive to reinforcer devaluation after extensive training (e.g., Holland, 1990, 2005). Although there are some reports of Pavlovian sign-tracking to lever insertion becoming less affected by devaluation after extensive training (Morrison, Bamkole, & Nicola, 2015; Nasser, Chen, Fiscella, & Nicola, 2016; Patitucci, Nelson, Dwyer, & Honey, 2016), Amaya, Stott, and Smith (2020) recently concluded that most of those studies used weak devaluation procedures that, for example, involved taste aversion conditioning in a different context (see also Derman et al., 2018). Amaya et al. (2020) found that aversion conditioning in a different context did not transfer well to the context in which sign-tracking occurred (see also Bouton, Allan, Tavakkoli, Steinfeld, & Thrailkill, 2021). And when they used taste aversion conditioning in the same context, Amaya et al. (2020) found a robust reinforcer devaluation effect on sign-tracking after extensive training. Overall, the weight of the evidence suggests that the insensitivity to reinforcer devaluation that developed in the present experiments reflects instrumental habit, and not purely Pavlovian learning.

A related purely Pavlovian account might emphasize the possibility that the reinforcer devaluation effect seen after minimal training might be due to direct, potentiated aversion conditioning to the context (Jonkman, Kosaki, Everitt, & Dickinson, 2010) rather than actual action (R-O) learning. Aversion conditioning with either the context or the S might weaken the invigoration of responding they otherwise provide through Pavlovian-instrumental transfer (e.g., Holmes, Marchand, & Coutureau, 2010). Such a possibility could call into question whether it is appropriate to speak of action (and therefore habit) learning in the present experiments. However, the fact that responding became insensitive to devaluation after extensive training again argues against a simple role for such a process because, as noted in the previous paragraph, it provides no clear account of the loss of the devaluation effect with extended training. Overall, the results seem best explained by the modulation of action and habit learning by factors like the amount of training and the partial- or continuous-reinforcement history of S.

Further analysis of the role of reinforcer predictability

Our conclusion that habit develops when reinforcers are predictable may appear to contrast with the results of free-operant experiments that have found evidence for habit after training on variable-interval (VI) reinforcement schedules but action after training on fixed-interval (FI) schedules (DeRusso et al., 2010; Garr, Bushra, Tu, & Delamater, 2020). Since reinforcers delivered on FI schedules are arguably more “predictable” than reinforcers delivered on VI schedules, such results seem incompatible with the present conclusions. We note, however, that reinforcer predictability was created by the relation between the S and the reinforcer in our discriminated operant methods (see also Thrailkill et al., 2018), and not by the operant schedule of reinforcement. Moreover, VI and FI schedules also produce well-known differences in the timing of responses (Catania & Reynolds, 1968), and therefore in the degree of contiguity between the response and the reinforcer. Specifically, because of the typical FI scallop, FI schedules result in many more responses occurring close to the reinforcer in time. Importantly, short response-reinforcer intervals preserve goal-directed action, and thus interfere with habit learning, whereas longer intervals support habit (Urcelay & Jonkman, 2019). Thus, more habit learning with VI than FI schedules could be the simple product of longer average delays between response and reinforcer.

Our experiments appear to have broken the confound between reinforcer predictability and response-reinforcer contiguity that exists when comparing FI and VI schedules. First, in Thrailkill et al. (2018, Experiment 4), reinforcer predictability influenced habit learning even when response-reinforcer contiguity was matched in CRF- and PRF-trained groups. Second, in the present Experiments 2 and 3a, PRF training did weaken response-reinforcer contiguity compared to CRF training, because the animals made many responses in trials that were remote from (did not yield) a reinforcer. Yet, despite the weaker contiguity, the response remained a goal-directed action (rather than becoming a habit) with PRF training. Such considerations may add more weight to the reinforcer predictability account. It is also worth noting that the idea that habits develop because of reinforcer uncertainty is not consistent with habits as we know them in everyday life: With common habits such as smoking, for example, there is high certainty, not low certainty, that the habitual response will be reinforced.

Theories of habit learning

The present results are not compatible with classic habit theories that emphasize that the stimulus-response (habit) association is merely strengthened with each reinforcement, as in the Law of Effect (James, 1890; Thorndike, 1911; see also Wood, 2019; Wood & Rünger, 2016). Such a view does not anticipate the difference between the effects of partial and continuous reinforcement schedules that are equated on the number of reinforced trials, as they were in Experiments 2 and 3. The results also contrast with dual-system theories that maintain that habit strength develops through a similar reinforcement process (de Wit & Dickinson, 2009; Dickinson & Balleine, 1993) or through a model-free reinforcement learning algorithm that also views habit as a product of the number of S, R, and reinforcer conjunctions (see below; Daw et al., 2005; Perez & Dickinson, 2020). The present PRF and CRF groups were equated on the number of those conjunctions. Further, these theories often imply that S-R habit strength should develop simultaneously with the S’s ability to evoke the response. But this does not appear to be the case. In PRF training procedures, goal-direction remained strong even though the stimulus acquired strong control over the response. Habit and stimulus control do not necessarily go hand in hand.
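The point about equated conjunctions can be made concrete with a toy simulation. The sketch below assumes a simple strengthening rule in which habit strength grows by a fixed fraction of its remaining distance to asymptote on each reinforced trial and is left unchanged on nonreinforced trials. The function name, the learning rate, the trial counts, and the strict alternation of reinforced and nonreinforced PRF trials are all illustrative assumptions; they are not parameters of any cited theory or of the present experiments.

```python
def habit_strength(trials, rate=0.05):
    """Toy strengthening rule: the S-R bond grows only on reinforced trials.

    `trials` is a sequence of booleans (True = reinforced trial).
    `rate` is a hypothetical learning-rate parameter.
    """
    h = 0.0
    for reinforced in trials:
        if reinforced:
            h += rate * (1.0 - h)  # grow toward an asymptote of 1.0
        # Nonreinforced trials leave h unchanged under this assumed rule.
    return h


n_reinforced = 100  # hypothetical number of reinforced trials

# CRF: every trial is reinforced.
crf_trials = [True] * n_reinforced
# PRF: twice as many trials, every other one reinforced (50%).
prf_trials = [True, False] * n_reinforced

crf_h = habit_strength(crf_trials)
prf_h = habit_strength(prf_trials)
print(f"CRF habit strength: {crf_h:.4f}")
print(f"PRF habit strength: {prf_h:.4f}")
```

Under this assumed rule the two schedules end training with the same habit strength, so an account based only on the number of reinforced S-R conjunctions has no obvious way to produce habit after CRF but goal direction after PRF.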

The results may also be problematic for the idea that habit develops when the organism experiences a low correlation between response rate and reinforcer rate (e.g., Dickinson, 1985). This view has been incorporated into a dual-system theory as another cause of habit formation (Dickinson & Balleine, 1993; de Wit & Dickinson, 2009; Dickinson, 2012; Perez & Dickinson, 2020). One problem for such a view may be the fact that habits can be acquired at all in discriminated operant situations (present results; Thrailkill et al., 2018; Vandaele et al., 2017). The problem arises because discriminated operant responding can maintain a strong correlation between response rate and reinforcer rate, which are both high when S is present and low when it is not. A second problem is that the response correlation view is not equipped to explain the effect of reinforcer predictability in S. In the present Experiments 2 and 3a, the PRF schedules arranged twice as much time in S as was experienced in CRF while they equated the number of earned reinforcers. Animals made many responses in nonreinforced Ss and thus experienced a weaker response-reinforcer correlation in PRF, but were still goal directed. Third, our previous study (Thrailkill et al., 2018, Experiment 4) arranged identical exposure to S and thus identical response-reinforcer correlations in PRF- and CRF-trained groups. The finding that PRF sustains goal-directed control, while CRF does not, may be difficult to reconcile with a rate correlation view. A fourth problem for at least one version of correlation theory (Perez & Dickinson, 2020) is other evidence suggesting that the acquisition of an S-R habit does not appear to erase or destroy previously-acquired action (R-O) knowledge (e.g., Bouton, Broomer, Rey, & Thrailkill, 2020; Steinfeld & Bouton, 2020; Trask, Shipman, Green, & Bouton, 2020).
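The first two problems can be illustrated with a toy calculation. The sketch below computes a Pearson correlation between response counts and reinforcer counts across successive S and no-S periods of a session. It is not an implementation of any of the cited correlation theories; the helper names and all counts (10 responses per S period, 1 response per no-S period, 10 reinforcers per session) are hypothetical values chosen only for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def session(n_s_periods, reinforced_every):
    """Build per-period response and reinforcer counts for one session.

    S periods alternate with equally long no-S periods. All counts are
    hypothetical: 10 responses per S period, 1 response per no-S period.
    """
    responses, reinforcers = [], []
    for i in range(n_s_periods):
        # S period: high responding; reinforced on every k-th S period.
        responses.append(10)
        reinforcers.append(1 if i % reinforced_every == 0 else 0)
        # No-S period: low responding, never reinforced.
        responses.append(1)
        reinforcers.append(0)
    return responses, reinforcers


crf = session(n_s_periods=10, reinforced_every=1)  # 10 reinforcers
prf = session(n_s_periods=20, reinforced_every=2)  # also 10 reinforcers

r_crf = pearson(*crf)
r_prf = pearson(*prf)
print(f"CRF response-reinforcer correlation: {r_crf:.2f}")
print(f"PRF response-reinforcer correlation: {r_prf:.2f}")
```

With these assumed counts the correlation is strongly positive under both schedules and is lower under PRF than under CRF. On a correlational view, then, neither schedule should readily produce habit, and PRF should if anything favor it more than CRF, which is the opposite of what was observed.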

Existing models from the reinforcement learning (RL) tradition also do not appear to capture the present results. In these models, the goal of a simulated subject or “agent” is to maximize reinforcement using as few resources as possible. This is accomplished by modeling the task as a series of discrete choice points or “states” and calculating the utility or value of the available actions at each state. Agents can base their responding on either a model-free system that uses a running average or “cached” value of the response or a model-based system that evaluates the outcomes of all possible responses prior to selecting the action and thus requires more extensive resources (Daw et al., 2005; Dolan & Dayan, 2013). The model-free/model-based distinction is often mapped onto the habitual/goal-directed distinction (e.g., Balleine & O’Doherty, 2010; Niv, 2009). However, cached value within the model-free system is acquired according to a learning algorithm that responds to S-R-O conjunctions but does not discriminate between predictable and unpredictable outcomes.

The present findings might be useful in helping guide the development of RL models of habit learning. RL models generally account for habit performance (as opposed to habit learning) by relying on an “arbitration” process that determines whether the model-based or model-free system is in control. After overtraining, arbitration finds the model-free system to have greater value or utility than the model-based system, and chooses it accordingly. Various models differ on why the arbitration process chooses the model-free system. Some dimensions that have been considered are efficiency (Anderson, 2007; Keramati, Dezfouli, & Piray, 2011), strength (Miller, Shenhav, & Ludvig, 2019), reliability (Daw et al., 2005; Lee, Shimojo, & O’Doherty, 2014), and expected versus obtained reinforcers (e.g., Dezfouli & Balleine, 2013). Some models also allow the two systems to interact dynamically to determine control on a trial-by-trial basis (e.g., Gershman, Otto, & Markman, 2014). The present results, along with those of Thrailkill et al. (2018), suggest that either the learning algorithm or the manner in which the model-free (and thus habit) system is chosen might be influenced by reinforcer predictability and stimulus salience. The data may thus be useful in constraining future RL accounts of habit learning.

We have alternatively suggested that habit develops when the training conditions allow the organism to pay less and less attention to the response (Thrailkill et al., 2018). According to the Pearce-Hall model of Pavlovian conditioning (Pearce & Hall, 1980), attention to, or processing of, a conditioned stimulus (CS) decreases during conditioning as it becomes a better and better predictor of the unconditioned stimulus (US). The model also predicts that attention will be maintained to a CS if the CS is unpredictably paired with a US on only half the trials, because this maintains the surprisingness of the US. The prediction has been supported empirically (e.g., Kaye & Pearce, 1984; Pearce, Kaye, & Hall, 1982). We suggest a parallel between Pavlovian and instrumental learning. When reinforcers become well predicted in discriminated instrumental conditioning, they lead to reduced attention to the instrumental response, allowing it to be emitted more automatically. In PRF conditions, the reinforcer during any S becomes less predictable and more surprising, thus maintaining attention to both the stimulus and the response. Other recent free-operant studies from this laboratory have produced results that can be viewed from this perspective (Bouton et al., 2020; Trask et al., 2020). Bouton et al. (2020) trained rats on schedules of reinforcement that typically result in habit (Dickinson et al., 1995; Thrailkill & Bouton, 2015). Prior to a test for sensitivity to reinforcer devaluation, half the rats received exposure to a different reinforcer. In comparison to untreated rats, which demonstrated habit, rats that received the surprising outcome were sensitive to devaluation, and thus demonstrated goal direction. Similar results were found in experiments that arranged the unexpected reinforcers to be contingent or not contingent on a second response (Trask et al., 2020). As noted by Bouton and colleagues (Bouton et al., 2020; Trask et al., 2020), the results are consistent with the idea that an unpredicted, or surprising, outcome results in an increase in attention to the response in a manner similar to the increase in CS processing envisaged by Pearce and Hall (1980). In the free-operant studies, surprise allowed rats to switch from habit back to action, while in the present studies it prevented habit from developing under PRF.
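The attentional account can be sketched with a small simulation. The code below is a simplified illustration in the spirit of the Pearce-Hall model, not the original formulation: the prediction of the reinforcer is updated with a single error-driven rule rather than separate excitatory and inhibitory associations, and attention is a running average of recent absolute prediction error. The function name and the values of `s`, `gamma`, `alpha0`, and the number of trials are assumptions made only for illustration, and treating the resulting quantity as attention to the response simply follows the parallel suggested above.

```python
import random


def simulate_attention(outcomes, s=0.3, gamma=0.3, alpha0=1.0):
    """Track attention (alpha) across trials under a simplified rule.

    outcomes: sequence of 1 (reinforced) or 0 (nonreinforced) trials.
    s, gamma, alpha0: hypothetical salience, averaging weight, and
    starting attention; none are fitted to data.
    Returns the list of alpha values after each trial.
    """
    v = 0.0          # current prediction of the reinforcer
    alpha = alpha0   # current attention / associability
    history = []
    for outcome in outcomes:
        error = outcome - v                 # surprise on this trial
        v += s * alpha * error              # error-driven prediction update
        # Attention tracks a running average of recent absolute surprise.
        alpha = gamma * abs(error) + (1.0 - gamma) * alpha
        history.append(alpha)
    return history


n_reinforced = 200  # hypothetical; equated across the two schedules

crf_outcomes = [1] * n_reinforced
prf_outcomes = [1] * n_reinforced + [0] * n_reinforced
random.Random(0).shuffle(prf_outcomes)  # fixed seed for reproducibility

crf_alpha = simulate_attention(crf_outcomes)
prf_alpha = simulate_attention(prf_outcomes)

late_crf = sum(crf_alpha[-50:]) / 50
late_prf = sum(prf_alpha[-50:]) / 50
print(f"late-training attention, CRF: {late_crf:.3f}")
print(f"late-training attention, PRF: {late_prf:.3f}")
```

Under these assumptions attention declines toward zero with CRF, where the reinforcer comes to be fully predicted, but remains substantial with PRF, where half of the trials continue to generate surprise even though the number of reinforced trials is the same.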