See the article by Lu et al., Issue 9, pp. 1560–1568

Stereotactic radiosurgery (SRS) is increasingly used to treat patients with brain metastases and benign primary brain tumors, fueled in part by rapid technological development in SRS delivery platforms. Yet a detailed examination of the individual steps shows that the process itself still lacks standardization, automation, and integration of artificial intelligence (AI). In fact, the critical steps (importing images into treatment planning systems, coregistering diagnostic images to the treatment planning studies used for dosimetry calculation, target volume delineation, treatment plan development, evaluation of isodose distributions, treatment delivery, and comparison of posttreatment imaging studies with pretreatment images for response assessment) require significant manual effort and are therefore subject to substantial inter-physician heterogeneity.

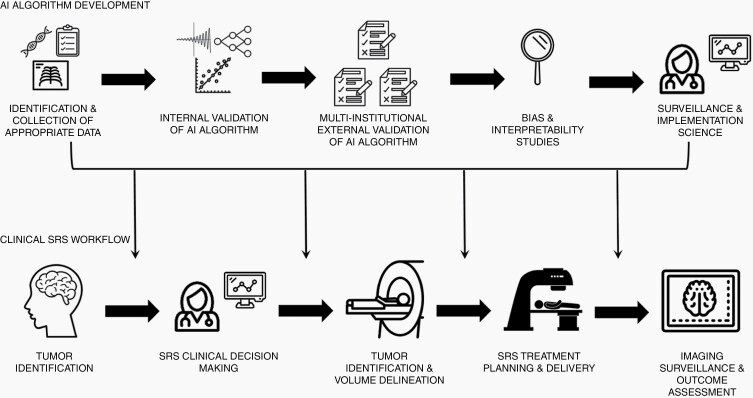

Several prior studies have demonstrated significant variability in delineating SRS targets, from national quality assurance programs1 to multi-institutional studies performed at dedicated SRS centers.2 Beyond target volume delineation, however, there are several other areas in which AI can improve the clinical practice of SRS (Figure 1). In treatment planning, AI algorithms such as convolutional neural networks have already been shown to improve the efficiency of image coregistration, a necessary step before accurate target delineation.3 There are also ongoing efforts to improve radiation therapy treatment planning with machine learning algorithms. Lastly, clinicians may benefit from AI assistance when making complex decisions regarding SRS techniques.4 Key areas of ongoing interest include clinical prognostication (ie, identifying the patients who would benefit the most from SRS)5 and the use of machine learning techniques to evaluate equivocal lesions after prior SRS and differentiate radiation necrosis from disease progression.6

Fig. 1. Artificial intelligence algorithm development pathways and potential areas of application in the stereotactic radiosurgery treatment workflow.

In contrast to most of the existing literature in this space, in which deep learning based on convolutional neural networks has been reported to improve predefined metrics (such as lesion detection and segmentation) in retrospective comparisons to prior datasets, Lu and colleagues performed a randomized, cross-modal, multi-reader, multi-specialty, multi-case study to evaluate the impact of a state-of-the-art auto-contouring algorithm on 10 brain tumor SRS cases.7 The automated brain tumor segmentation algorithm used in this study was a deep learning-based segmentor that used multi-modal imaging and ensemble neural networks and was developed on a dataset of over 100 patients and over 2500 brain tumors. Promisingly, the workflow of image processing, neural network inference, and data post-processing was integrated with SRS treatment planning and took only 90 seconds on average. Integrating AI assistance into the clinical workflow increased lesion detection, enhanced inter-reader agreement, improved contouring accuracy (especially for less experienced physicians), and saved time. These preliminary findings could directly affect patient outcomes by helping to identify all sites of disease to treat with SRS, improving target volume delineation to enhance the therapeutic ratio of radiotherapy, and reducing the time and effort spent on the process.
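To make "contouring accuracy" and "inter-reader agreement" concrete, the following is a minimal Python sketch of one overlap measure commonly used for segmentation, the Dice similarity coefficient, together with a mean pairwise Dice across readers. The editorial does not state which metrics were used, so the choice of Dice, the function names `dice` and `mean_pairwise_dice`, and the toy reader contours are all illustrative assumptions rather than the method of Lu and colleagues.

```python
from itertools import combinations


def dice(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks.

    Masks are flat sequences of 0/1 voxel labels of equal length
    (an assumed toy representation, not a clinical data format).
    """
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    overlap = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Two empty masks are treated as perfect agreement by convention.
    return 1.0 if total == 0 else 2.0 * overlap / total


def mean_pairwise_dice(masks):
    """Average Dice over every pair of readers, as a simple agreement summary."""
    pairs = list(combinations(masks, 2))
    if not pairs:
        raise ValueError("need at least two masks to compare")
    return sum(dice(a, b) for a, b in pairs) / len(pairs)


# Hypothetical contours from three readers on a toy 8-voxel image.
reader_1 = [0, 1, 1, 1, 0, 0, 0, 0]
reader_2 = [0, 0, 1, 1, 1, 0, 0, 0]
reader_3 = [0, 1, 1, 1, 1, 0, 0, 0]

print(dice(reader_1, reader_2))
print(mean_pairwise_dice([reader_1, reader_2, reader_3]))
```

A value of 1 indicates identical contours and 0 indicates no overlap. Comparing each reader's contour against a reference contour gives an accuracy measure, while averaging across reader pairs summarizes agreement among readers.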

Despite the promise of AI to personalize cancer treatment, there is a glaring lack of standardization in the development of clinically ready AI algorithms.8 Unlike emerging pharmaceutical treatments or medical devices, AI algorithms currently have no widely accepted pathway for safe clinical implementation. Efforts should be made to ensure that proposed AI algorithms have been trained on sufficiently large and clinically heterogeneous datasets. In addition to single-institution training and validation as performed by Lu and colleagues, all AI algorithms should be externally validated in a multicenter fashion across clinicians with differing levels of experience. Given the increasing evidence of bias within AI algorithms, researchers should attempt to increase algorithmic transparency through rigorous interpretability studies.9 Lastly, as AI solutions are clinically implemented, surveillance studies must be undertaken to ensure that AI algorithms are being applied correctly by members of the clinical team. Further work is clearly needed to understand how AI can be applied in widespread clinical practice.

Acknowledgment

The text is the sole product of the authors, and no third party had input or gave support to its writing.

Conflict of interest statement. R.K.: Honoraria from Accuray Inc., Elekta AB, Viewray Inc., Novocure Inc., Elsevier Inc. Institutional research funding from Medtronic Inc., Blue Earth Diagnostics Ltd., Novocure Inc., GT Medical Technologies, AstraZeneca, Exelixis, Viewray Inc. S.A.: Research funding from American Cancer Society, American Society of Clinical Oncology, National Cancer Institute, and National Science Foundation.

Sources of support. None.

References

- 1. Growcott S, Dembrey T, Patel R, Eaton D, Cameron A. Inter-observer variability in target volume delineations of benign and metastatic brain tumours for stereotactic radiosurgery: results of a national quality assurance programme. Clin Oncol (R Coll Radiol). 2020;32(1):13–25.

- 2. Sandström H, Jokura H, Chung C, Toma-Dasu I. Multi-institutional study of the variability in target delineation for six targets commonly treated with radiosurgery. Acta Oncol. 2018;57(11):1515–1520.

- 3. de Vos BD, Berendsen FF, Viergever MA, Sokooti H, Staring M, Išgum I. A deep learning framework for unsupervised affine and deformable image registration. Med Image Anal. 2019;52:128–143.

- 4. Thompson RF, Valdes G, Fuller CD, et al. Artificial intelligence in radiation oncology: a specialty-wide disruptive transformation? Radiother Oncol. 2018;129(3):421–426.

- 5. Chang E, Joel MZ, Chang HY, et al. Comparison of radiomic feature aggregation methods for patients with multiple tumors. Sci Rep. 2021;11(1):9758.

- 6. Peng L, Parekh V, Huang P, et al. Distinguishing true progression from radionecrosis after stereotactic radiation therapy for brain metastases with machine learning and radiomics. Int J Radiat Oncol Biol Phys. 2018;102(4):1236–1243.

- 7. Lu SL, Xiao FR, Cheng JC, et al. Randomized multi-reader evaluation of automated detection and segmentation of brain tumors in stereotactic radiosurgery with deep neural networks. Neuro Oncol. 2021;23(9):1560–1568.

- 8. Haibe-Kains B, Adam GA, Hosny A, et al.; Massive Analysis Quality Control (MAQC) Society Board of Directors. Transparency and reproducibility in artificial intelligence. Nature. 2020;586(7829):E14–E16.

- 9. Zou J, Schiebinger L. AI can be sexist and racist - it’s time to make it fair. Nature. 2018;559(7714):324–326.