Abstract

Background:

Research has demonstrated that active learning, spaced education, and retrieval-based practice can improve knowledge acquisition, knowledge retention, and clinical practice. Furthermore, learners prefer active learning modalities that use the testing effect and spaced education over passive, lecture-based education. However, most research has been performed with students and residents rather than practicing physicians. To date, most continuing medical education (CME) opportunities use passive learning models, such as face-to-face meetings with lecture-style didactic sessions. The aim of this study was to compare learner engagement, as measured by the number of CME credits earned, between two different learning modalities.

Methods:

Diplomates of the American Board of Anesthesiology or candidates for certification through the board (referred to colloquially and for the remainder of this article as board certified or board eligible) were provided an opportunity to enroll in the study. Participants were recruited via email. Once enrolled, they were randomized into 1 of 2 groups: web-app–based CME (Webapp CME) or an online interface that replicated conventional journal-based online CME (Online CME). The intervention period lasted 6 weeks, during which participants received educational content through their assigned approach. As an incentive for participation, CME credits could be earned (without cost) during the intervention period and for completion of the postintervention quiz. The same number of CME credits was available to each group.

Results:

Fifty-four participants enrolled and completed the study. The mean number of CME credits earned was greater in the Webapp group compared to the Online group (12.3 ± 1.4 h versus 4.5 ± 2.3 h, P < .001). Concerning knowledge acquisition, the difference in postintervention quiz scores was not statistically significant (Webapp 70% ± 7% versus Online 60% ± 11%, P = .11). However, only 29% of the Online group completed the postintervention quiz, versus 77% of the Webapp group (P < .001), possibly indicating greater learner engagement in the Webapp group.

Conclusion:

In this prospective, randomized controlled pilot study, we demonstrated that daily spaced education delivered to learners through a smartphone web app resulted in greater learner engagement than an online modality. Further research with larger trials is needed to confirm our findings.

Keywords: Retrieval-based learning, spaced education, continuing medical education, web app, active learning

Introduction

The optimal method for engaging busy physicians in continuing medical education (CME) remains unknown.1–8 Research concerning established educational frameworks, such as Bloom’s taxonomy and Moore’s seven levels of outcomes in CME, has clearly demonstrated that passive learning is insufficient to produce higher levels of learning that lead to practice change.9 Additionally, educational research has demonstrated that active learning with spaced education and retrieval-based practice can improve knowledge acquisition, knowledge retention, and, in some cases, clinical practice.10–12 However, most CME offerings today continue to rely on passive learning models through lecture-style presentations in face-to-face meetings.

Spaced education and retrieval-based practice with feedback are pedagogical approaches that have been shown to be effective and are grounded in the neurobiology of learning.13–15 Spaced education distributes the learning event over multiple, shorter encounters, allowing for effective repetition that ultimately leads to deeper learning in a more efficient manner.16 Retrieval-based practice actively engages learners by querying their knowledge base and then giving feedback on their responses.8 Taken together, spaced education through retrieval-based practice has been shown to be a more effective and efficient means of learning for trainees and practicing physicians.17

The aim of this study was to compare the level of learner engagement between two educational modalities, as measured by the number of CME credits earned. A secondary aim was to compare knowledge acquisition between groups. The two methods of delivering educational content were a web application (web app) that distributes multiple-choice questions (MCQs) with immediate feedback via SMS (Short Message Service) text or email and an online learning management system where learners are required to answer MCQs after reading a journal article on a topic. Thus, the research questions this study sought to answer were (1) Do end users engage with one type of learning modality more than another, as measured by the amount of CME consumed? and (2) Is knowledge acquisition greater when the questions are disseminated via a smartphone web application than via an online learning management system that replicates common online modules for CME (eg, as is common for journal-based CME related to review articles)? The hypothesis of the study was that the use of the web-app system would result in a greater number of CME credits earned.

Materials and Methods

This study was approved by the Vanderbilt Institutional Review Board (study no. 181415; Nashville, TN).

Recruitment emails were sent to board-certified and board-eligible anesthesiologists in the United States who were members of component state societies of the American Society of Anesthesiologists. Leaders of state societies of anesthesiologists (eg, the president of the Tennessee Society of Anesthesiologists) were contacted and asked to disseminate a recruitment email to their members. Accordingly, the exact number of anesthesiologists contacted as potential participants for this study is unknown. A web link to online enrollment materials was included in the email recruitment information (see Appendix 1). Respondents were enrolled through REDCap.18 The duration of the enrollment period was 1 week, which commenced when the first recruitment materials were sent. Only board-eligible or board-certified anesthesiologists in the United States were included in the study. After the enrollment period concluded, participants were randomized into two groups: web-app–based CME (Webapp CME) and an online interface that replicated CME offerings through many peer-reviewed journal publishers (Online CME).*

As an incentive for participation, CME credits could be earned, without cost, for participation during the intervention period and for completion of the postintervention quiz. The number of credits earned was determined by the level of engagement with the learning programs. Based on time needed for completion, 1 hour of CME credit was earned for each block of 5 items that were completed by the participants during the intervention period. For the Online CME group, participants were instructed to read the article and then answer the question items. Participants in this group received 1 hour of CME credit for every 5 questions that they answered. For the Webapp CME group, completion of an item required that the participant read the question, select an answer, review the rationale (which included a significant portion of text from the associated journal article), and confirm that the rationale was reviewed. The participants in this group were not asked to read an article before answering the question. However, the article was made available through links in the rationale after each question.
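The credit rule described above can be expressed as a small function. This is a minimal sketch, not the study's actual credit-tracking code: it assumes that only complete blocks of 5 items earn credit (a partial block earns nothing) and adds the 3 h offered for the postintervention quiz (described under Postintervention knowledge quiz). The function name `cme_credits` is hypothetical.

```python
def cme_credits(items_completed: int, quiz_completed: bool) -> int:
    """Hypothetical sketch of the credit rule: 1 h per full block of 5 items, plus 3 h for the quiz."""
    if not 0 <= items_completed <= 60:
        raise ValueError("items_completed must be between 0 and 60")
    block_credits = items_completed // 5  # only complete blocks of 5 items count
    quiz_credits = 3 if quiz_completed else 0  # postintervention quiz credit
    return block_credits + quiz_credits


print(cme_credits(60, True))   # maximum available: 12 + 3 = 15
print(cme_credits(56, True))   # 11 + 3 = 14
print(cme_credits(4, False))   # an incomplete block earns nothing: 0
```

Under these assumptions, completing all 60 items and the quiz yields the 15 h maximum offered to participants.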

Study Procedures

Content Development

Question items were developed in a structured fashion (Appendix 2 illustrates the structure and content of a question item). First, a template for creating question items was given to all content creators and followed during the development of each item (Appendix 3). Second, the faculty (N = 6) who contributed question items received training in psychometrics and question writing in one of two forms: from the American Board of Anesthesiology or National Board of Medical Examiners to provide questions for MOCA Minute or national board exams, or from an internal training based on courses in writing exam questions. Those who received the latter underwent a 3-hour training session through the Vanderbilt School of Medicine Educator Development Program as well as a departmental training of approximately 2 hours, both of which included question-writing practice.

Third, the questions were submitted to two authors (M.D.M. and G.M.F.) for review. Minor edits (eg, verb tense) were performed without further review. If major edits were required (changes to clinical stem, suggestions for different distractors), these were returned to the original authors for review. As a final round of review, the completed question set was sent to all question writers (N = 6) for approval. While about half of the questions required major edits, no questions were discarded. Fourth, all items were mapped to specific content in the published articles, with a standard of 10 question items per article. The goal of following a standardized process for item creation and review was to increase the content validity of the items themselves.19 Using MCQs as the format for assessing learner engagement and knowledge acquisition should ensure adequate validity from a response-process perspective, as this is an accepted and frequently used format for physician assessment.19

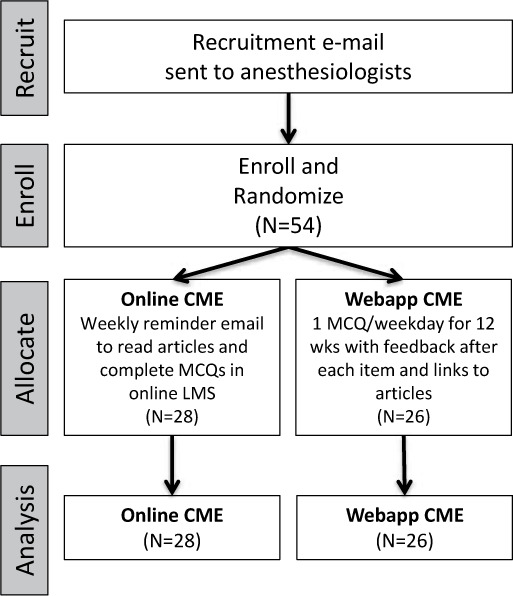

Intervention. The study intervention consisted of a 6-week period during which educational content was delivered based on assigned study groups using two different modalities, as specified later (Figure 1). The educational content was based on six articles published in the past 5 years in Anesthesia & Analgesia, with 10 MCQs per article, as already noted.20–25 Additionally, the educational content and questions in both learning modalities were identical.

Figure 1.

CONSORT diagram.

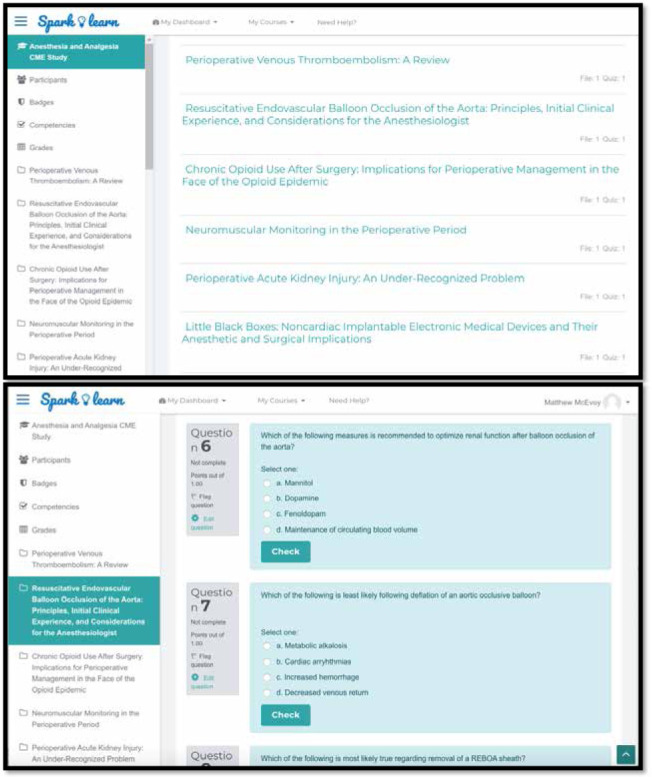

Online CME. Access to the CME articles and questions required logging in to the Vanderbilt learning management system (https://spark.app.vumc.org). Participants were provided unlimited access to a published pdf of each CME article, with permission granted by the publisher for the study period. As a counterbalancing measure to the reminders for the Webapp group (described later), participants in this group received an email at the beginning of each week to remind them of the availability of articles and questions. As noted in the Introduction, the Vanderbilt learning management system site was configured to match the components of online CME offerings through many peer-reviewed journals. These components include access to the CME article, with instructions to read it before completing the questions; MCQs related to the article, with feedback about whether each answer was correct or incorrect; and CME credit for completion of the question items (see Figure 2 for screenshots).

Figure 2.

Vanderbilt University online learning management system.

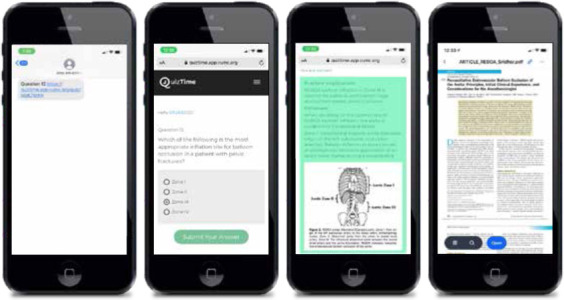

Webapp CME. Participants in the Webapp CME group received an email informing them that they would receive one question each weekday (Monday–Friday) for 12 weeks, for a total of 60 questions. Questions were delivered at 10 am each day via SMS text or email, with a hyperlink to a web app that presented the question item.† The web app allows for one discrete delivery time per cohort of participants in a specific module. The content delivered in the questions was identical to that made available to the Online CME group. This group also had unlimited online access to the published pdf of the article through the Vanderbilt learning management system and through hyperlinks in the answers to the MCQs. Figure 3 illustrates the process of receiving a text message and being presented a question item.
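The weekday-only delivery pattern described above can be sketched as follows. This is an illustrative assumption about how such a scheduler might work, not QuizTime's implementation, which is not described in this article; the function name `weekday_schedule` and the start date are hypothetical. It assigns one item per weekday at a single fixed delivery time (10 am), skipping Saturdays and Sundays.

```python
from datetime import date, datetime, time, timedelta


def weekday_schedule(start: date, n_items: int, send_at: time = time(10, 0)) -> list[datetime]:
    """Hypothetical sketch: one delivery per weekday at a fixed time, skipping weekends."""
    deliveries: list[datetime] = []
    day = start
    while len(deliveries) < n_items:
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            deliveries.append(datetime.combine(day, send_at))
        day += timedelta(days=1)
    return deliveries


# Hypothetical start on a Monday; 60 items at one per weekday spans 12 weeks.
schedule = weekday_schedule(date(2019, 1, 7), 60)
print(len(schedule), schedule[0], schedule[-1])
```

A single fixed send time mirrors the constraint that the web app allows one discrete delivery time per cohort in a module.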

Figure 3.

Web interface for question distribution, presentation, and answer review.

Postintervention knowledge quiz. One week after the completion of the study period, participants in both groups were asked to complete a 24-MCQ quiz to assess their knowledge acquisition. The quiz contained 4 MCQs related to each CME article used in the study (see Appendix 4), a subset of the 60 questions used during the intervention period. Participants were offered an additional 3 hours of CME credit for completing this quiz.

Statistical Analysis

The primary outcome measure was the difference between cohorts in the amount of CME consumed. Analyses were conducted on an intention-to-treat basis according to group randomization, regardless of user engagement. Unpaired 2-tailed t tests were used to evaluate the statistical significance of differences between cohorts for continuous variables, the Fisher exact test for categorical variables, and the Mann-Whitney U test for comparison of medians. All data are presented as mean ± 95% confidence interval unless otherwise noted. A P value threshold of .05 was used for determining significance.
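For readers unfamiliar with the Fisher exact test, the sketch below computes a two-sided P value for a 2 × 2 table from the hypergeometric distribution, using only the Python standard library. It is an illustration, not the analysis code used in the study (the statistical software is not reported). It uses a common two-sided convention: sum the probabilities of all tables with the same margins that are no more likely than the observed table. The example counts (20 of 26 Webapp and 8 of 28 Online participants completing the postintervention quiz) are back-calculated from the reported percentages (77% and 29%) and group sizes, and may not match the raw data exactly.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2 x 2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so ties with the observed table are counted despite rounding.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))


# Rows: Webapp, Online; columns: completed quiz, did not complete (back-calculated counts).
p = fisher_exact_two_sided(20, 6, 8, 20)
print(f"P = {p:.5f}")
```

With these approximate counts the P value falls well below .001, in line with the reported result.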

We are unaware of previous studies that have investigated the differential consumption of educational material based on pedagogical approach. Based on prior studies reporting learners' preference for this form of education, we assumed that participants in the Online CME group would on average consume two-thirds of the CME offered (10 of 15 h), with a standard deviation of 2 h; under this assumption, 32 participants (16 per group) would be needed to show a 20% difference in consumption between groups.3,7
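The sample size statement above can be reproduced with the standard normal-approximation formula for comparing two means, n per group = 2 × ((z(1 − α/2) + z(1 − β)) × σ / Δ)². The article does not state the assumed power or exactly how the 20% difference was defined, so this sketch assumes a two-sided α of .05, 80% power, and Δ = 2 h (20% of the assumed 10-h mean), with σ = 2 h. Under those assumptions the formula gives 16 per group, matching the reported 32 participants. The function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal-approximation sample size per group for a two-sided, two-sample comparison of means."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    z_beta = z.inv_cdf(power)  # about 0.84 for 80% power
    return ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Assumed inputs: 20% of a 10-h mean = 2-h difference, SD = 2 h.
per_group = n_per_group(delta=2.0, sd=2.0)
print(per_group, 2 * per_group)  # 16 32
```

Because it uses the normal rather than the t distribution, this approximation slightly underestimates the requirement; a t-test-based calculation at these settings typically gives about 17 per group.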

Results

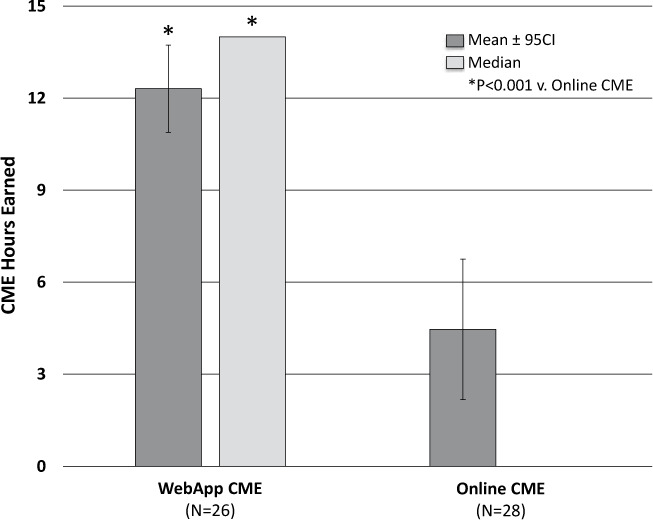

A total of 54 participants enrolled in the study (Online, N = 28; Webapp, N = 26). Participant demographic characteristics are shown in Table 1. The mean number of CME credits awarded was significantly greater in the Webapp group than in the Online CME group (12.3 ± 1.4 h versus 4.5 ± 2.3 h, P < .001; see Figure 4). Webapp participants also answered more of the 60 available MCQs during the 6-week intervention period than participants in the Online CME group (mean, 49.5 ± 1.4 versus 18.6 ± 2.3, P < .001; median, 56 versus 0, P < .001). Specifically, 54% of learners in the Online CME group did not answer any MCQs, whereas 25% answered more than 50. This distribution explains the median of 0 CME credits awarded (out of 15 h available) in the Online CME group, compared to 14 h in the Webapp group (see Figure 4).

Table 1.

Study Participant Demographics (N = 54)

| Survey Question | Webapp, N | Online, N | Category Total, N (%) |
|---|---|---|---|
| Gender | | | |
| Female | 12 | 11 | 23 (43) |
| Male | 14 | 17 | 31 (57) |
| Location Setting | | | |
| Urban | 22 | 23 | 45 (83) |
| Suburban | 4 | 5 | 9 (17) |
| Time Zone | | | |
| Central | 9 | 11 | 20 (37) |
| Eastern | 17 | 17 | 34 (63) |
| Practice Setting | | | |
| Academic | 13 | 17 | 30 (56) |
| Private | 13 | 11 | 24 (44) |
| Years in Practice | | | |
| 0–5 | 6 | 6 | 12 (22) |
| 6–10 | 7 | 8 | 15 (28) |
| 11–20 | 7 | 8 | 15 (28) |
| 21 or more | 6 | 6 | 12 (22) |
| Practice Size | | | |
| Small (<10) | 2 | 1 | 3 (5) |
| Medium (10–30) | 4 | 4 | 8 (15) |
| Large (31–100) | 11 | 12 | 23 (43) |
| Very large (>100) | 9 | 11 | 20 (37) |

Figure 4.

Learner engagement (CME credit consumption) by educational intervention.

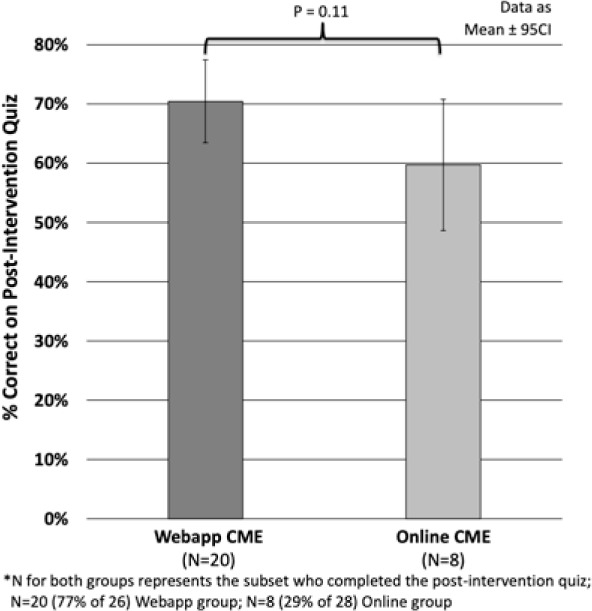

Concerning analysis of knowledge acquisition, 77% of the Webapp group versus 29% of the Online group completed the postintervention quiz (P < .001). The difference in postintervention quiz scores was not statistically significant (Webapp 70% ± 7% versus Online 60% ± 11%, P = .11; see Figure 5). Of note, those who completed the postintervention quiz had a very high completion rate of the 60 MCQs in both groups. Specifically, participants who completed the postintervention quiz answered 51.8 ± 13.9 MCQs, whereas those who did not take the quiz answered only 13.7 ± 20.1.

Figure 5.

Knowledge acquisition by educational intervention.

Discussion

The optimal method by which to engage busy clinicians in CME activities is unknown.16 In light of this problem, the present pilot study reports two principal findings. First, learner engagement was significantly greater with automated, system-activated education that was driven to learners on a daily basis through a web app than it was for the online education modality. Second, we found a trend toward greater knowledge acquisition with daily web-app–based spaced education as compared to a system that replicates online CME offerings. Each of these findings will be discussed in light of the current literature.

Our findings demonstrate that board-certified or board-eligible anesthesiologists receiving daily spaced education through a web app earned almost 3 times as many CME credits as those using an online CME system. As already noted, apart from the approximately 25% of highly engaged learners in the Online CME group, this group had very little participation, as reflected by a median of 0 CME credits earned, compared to 14 h in the Webapp group. These findings correspond with those of prior studies showing a high rate of engagement among learners when retrieval-based spaced education is driven to them by providing MCQs via text or email either daily or multiple times each week.1,11,12 These findings are also consistent with the popularity of one component of the Maintenance of Certification in Anesthesiology (MOCA) program from the American Board of Anesthesiology, namely, MOCA Minute.26,27 The biggest difference between the web-app system used in this study and the MOCA Minute program is that our system drives a prompt to participate to the learner on a daily basis. In comparison, MOCA Minute requires learners to remember to pick up their smartphone and choose to participate. Whether one form of engagement results in greater participation and knowledge acquisition over time than the other will require additional, larger studies.

Some learners may be engaged in earning CME credits no matter how learning is offered to them. This is suggested by the observation that completion of the postintervention quiz for 3 CME credits was associated with a very high completion rate of question items in both the Webapp and Online CME groups. Specifically, in the Webapp group, those who completed the postintervention quiz also completed 90% of the MCQs, versus 58% for those who did not. The respective rates of MCQ completion in the Online group were 77% and 13%.

Concerning knowledge acquisition as demonstrated by performance on the postintervention quiz, we found no significant difference between the groups. However, this result should be interpreted cautiously given the small size of this pilot study. Participation in the postintervention quiz also differed markedly between groups and was quite low in the Online group (29%). Additionally, there was a trend toward higher scores in the Webapp group than in the Online group (10 percentage points absolute, 18% relative). This might represent a substantial effect size and should be investigated in larger studies in our specialty, as prior research in other disciplines has shown improved knowledge acquisition from spaced education through retrieval-based practice.11,12,28–31 Of note, ongoing education through retrieval-based practice using MCQs is increasingly being used by anesthesiology trainees and may show benefit with regard to knowledge acquisition as demonstrated by improved scores on standardized exams.32 Whether this translates into the continuing professional development of practicing anesthesiologists remains to be seen.

Our study has several important limitations. First, the sample size is small, and the sample consisted of a specific group of learners; the study should be regarded as a pilot that requires additional larger trials to confirm or refute its findings. Second, there may be significant selection bias regarding those who chose to participate. Even though participants were randomized to different interventions, the learners included in this study may not be representative of anesthesiologists as a whole. Additionally, because of how we contacted practicing anesthesiologists, we do not know the actual number of potential participants for this study and therefore cannot assess the extent of any additional selection bias. Care should therefore be taken when generalizing our findings. It is also important to note that we were unable to gather baseline or historical CME consumption data because of the method by which participants were contacted.

Third, because we included participants from numerous institutions and practice settings, we were not able to assess the impact of the learning interventions on clinical practice (eg, through evaluation of any practice change noted in an electronic health record). Fourth, the 24 question items in the postintervention quiz were taken directly from the complete set of 60 used during the intervention period, and we did not account for whether learners had previously completed any given question. This could introduce recall bias favoring those who completed more CME. Future research in anesthesiology and perioperative medicine should involve larger trials to assess the effects of various approaches on knowledge acquisition as well as the impact on care delivery.

Conclusions

In a pilot prospective, randomized controlled trial, we demonstrated that delivery of daily spaced education driven to learners through a smartphone web app resulted in greater learner engagement than an online modality, as defined by the number of CME credits earned per group. Further research needs to investigate whether these findings are confirmed in larger groups of anesthesiologists and whether this pedagogical approach results in a demonstrable improvement in knowledge acquisition and change in care delivery.

Acknowledgments

We dedicate this article to our coauthor Dr Geoffrey Fleming, who passed away in December 2020. Geoffrey is an exemplar of excellence as a physician, educator, and leader, and most importantly as a husband, father, and friend.

Appendices

Appendix 1. Recruitment Email

Dear Anesthesiologist Colleague:

You are being asked to participate in a research study because you are an anesthesiologist in the United States. The purpose of the study is to compare two modalities for providing CME learning opportunities. Upon completion of this study, you will be eligible to claim up to 15 AMA PRA Category 1 Credits related to content from 6 CME articles published in Anesthesia & Analgesia in 2017. These credits will count toward your MOCA Part 2 CME requirements. Your study participation is completely voluntary and anonymous, and collected information will not be shared with your employer or the American Board of Anesthesiology. You will not be paid to take part in this study.

Your contact information (name, phone number, and email address) is necessary to randomize you for this study and will be stored in REDCap, which is a secure research database. All identifiers will be removed prior to analysis. Study information will be stored in REDCap until destruction of the database. Only the study team will have access to the information you provide.

Your participation in the study would last approximately 2 months. Participants in this study will complete a brief survey and set up a CME profile to receive their credits. After enrollment is closed, participants will receive an email assigning them to one of two learning groups for a 6-week period.

The Journal-Based CME Group will receive weekly emails concerning online availability of articles and related questions in the Vanderbilt Learning Management System. This system mimics what you would see online for journal-based CME for Anesthesia & Analgesia.

The QuizTime-Based CME Group will receive two SMS texts each weekday. Each text will contain 1 question along with online access to a PDF of the article.

About 1 week after all participants complete the study period, they will receive a 24-question quiz. Three CME credits will be earned for completing the quiz. A post-study survey will also be sent.

To take part in this study, you must be a member of the following group:

ABA Diplomate or a candidate in the ABA Examination System

Your participation is voluntary, and you may leave the study at any time by notifying the Principal Investigator. If you would like to take part in this study or would like more information, please click here to complete the Demographics Survey. The Vanderbilt IRB has approved this study. If you have questions regarding this study please contact: Matt McEvoy, MD or Leslie Fowler, Ed.D, MEd, at (615) 343-4034 or by email at AnesthesiaEducationResearch@vumc.org. You may also contact the Vanderbilt Human Subjects Protection Program (IRB) office at (866) 224-8273. Thank you again for your consideration.

Sincerely,

Matt McEvoy, MD Amy Robertson, MD Brian Gelfand, MD Leslie Fowler, Ed.D, M.Ed

You may open the survey in your web browser by clicking the link below: CME Project A & A. If the link above does not work, try copying the link below into your web browser: https://redcap.vanderbilt.edu/surveys/?s=JgIRiLMSmN

This link is unique to you and should not be forwarded to others.

Appendix 2. Questions Mapped to Article

Appendix 3. Item Structure for Content Development^a

[Question #: Topic (contributors/reviewer)]

Question 1: Likelihood of Chronic Opioid Use (ALLEN/RICE)

Stem:

[Make as concise as possible; only include essential info; only test 1 principle per question.]

A 52-year-old opioid-naive woman presents to the emergency department with a migraine headache. Evaluation and management of her condition by a physician who frequently prescribes opioids increases the likelihood of which of the following?

Answers:

[List 4 answers; cannot use “all of the above” or “none of the above”; only 1 correct answer; should be parallel in verb tense and length.]

**A. Long-term opioid use**

B. Future hospitalization

C. Respiratory failure

D. Cardiac arrest

Practice Implication:

[This is the key take-home point that you would like for learners to know.]

The approach to treatment of acute pain varies widely amongst healthcare professionals. In opioid-naïve patients, treatment by a clinician who prescribes opioids more frequently than their peers is strongly correlated with subsequent long-term opioid use in patients. Additionally, risk of long-term opioid use increases rapidly with commonly prescribed doses and durations of therapy. Strategies to mitigate risk should be employed with every patient encounter in which opioids are prescribed.

Rationale:

[This is a longer explanation that will contain the Practice Implications.]

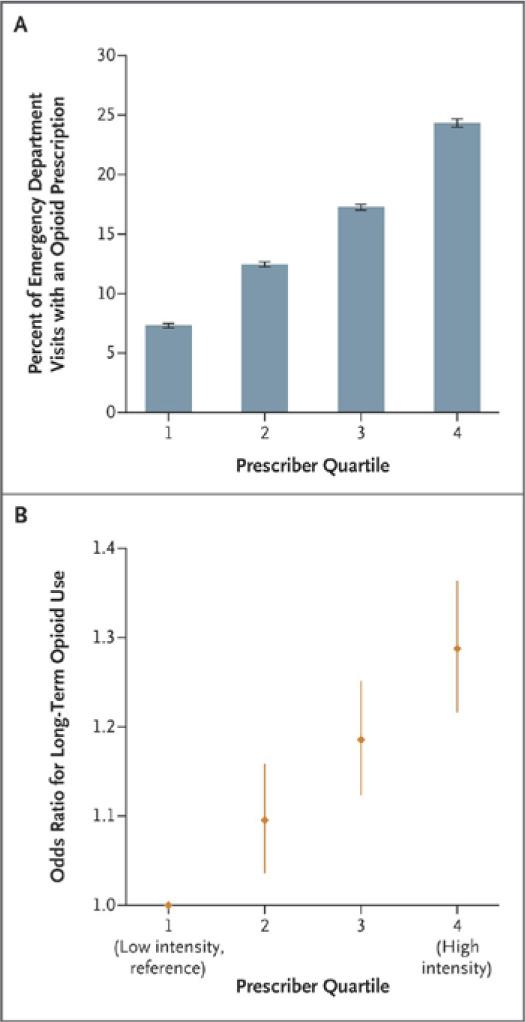

Increasing overuse of opioids in the United States may be driven in part by prescribing habits of healthcare professionals.

In a recent study of almost 400,000 patients (Barnett ML et al, NEJM 2017), ED physicians were categorized as being high-intensity or low-intensity opioid prescribers according to relative quartiles of prescribing rates within their hospital. Long-term opioid use was defined as ≥180 days of opioids supplied in the 12 months after the index ED visit, excluding prescriptions within 30 days after the index visit. Rates of long-term opioid use were compared in patients treated by high- or low-intensity prescribers. Overall, patient characteristics and diagnoses treated were similar across all prescribers. There was a >3-fold increase in opioid prescription rates by the high-intensity prescribers as compared to low-intensity prescribers (24.1% vs. 7.3% of ED visits, see figure below). Patients treated by high-intensity opioid prescribers had a 30% increased risk of long-term opioid use.

A similar study (Deyo RA et al, JGIM 2017) evaluating 500,000 opioid-naïve patients demonstrated that both the number of prescriptions in the 1st month of opioid consumption and total morphine milligram equivalents (MMEs) prescribed were highly correlated with risk of long-term use, defined as ≥6 opioid prescriptions in the subsequent 12 months. After excluding patients with cancer pain and non-cancer chronic pain conditions, compared to the group that only filled 1 prescription in the first month, those who filled ≥2 were 4 to 10 times as likely to become long-term users. Additionally, those who were dispensed >120 morphine milligram equivalents total (MME; e.g. 120 MME = oxycodone 5mg PO q6h PRN x 4 days) were 2–16 times as likely to be long-term opioid users, with increasing MME dispensed associated with increased risk.
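The MME example in the rationale can be checked with simple arithmetic. This sketch assumes the CDC conversion factor of 1.5 for oral oxycodone and that the as-needed (PRN) prescription is dispensed for every scheduled dose (4 doses per day); the function name `total_mme` is hypothetical.

```python
def total_mme(dose_mg: float, interval_h: float, days: float, conversion_factor: float) -> float:
    """Total morphine milligram equivalents dispensed, assuming every scheduled dose is supplied."""
    doses_per_day = 24 / interval_h  # q6h -> 4 doses per day
    return dose_mg * doses_per_day * days * conversion_factor


# Oxycodone 5 mg PO q6h PRN x 4 days, with an assumed conversion factor of 1.5.
print(total_mme(dose_mg=5, interval_h=6, days=4, conversion_factor=1.5))  # 120.0
```

Extending the same prescription to 7 days would raise the total to 210 MME, above the >120 MME threshold associated with increased risk in the study described above.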

If opioids are prescribed for acute noncancer pain, the shortest possible duration and the lowest possible number of MMEs (by total dose and pill count) should be prescribed. Per the latest CDC guidelines, 3 days of opioid therapy is often sufficient and >7 days is rarely needed for acute pain (Appendix 3 Figure 1).

[Any type of media can be used in QuizTime: images or video.]

References:

[Include 1–3 references with PubMed link to online abstract.]

[Format: first author, et al. Journal Name, Year;Volume:page numbers. pubmed hyperlink]

Barnett ML, et al. NEJM 2017;376:663-673. https://www.ncbi.nlm.nih.gov/pubmed/28199807

a Boldface indicates correct answer.

Appendix 3 Figure 1:

Item structure for content development.

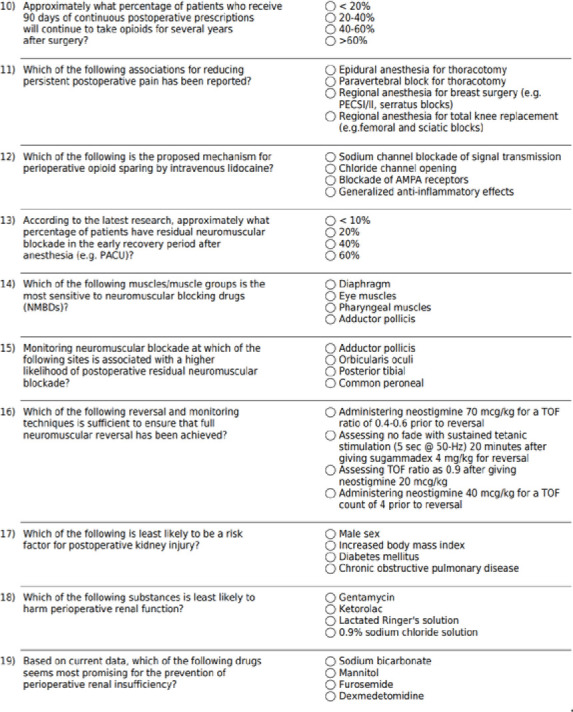

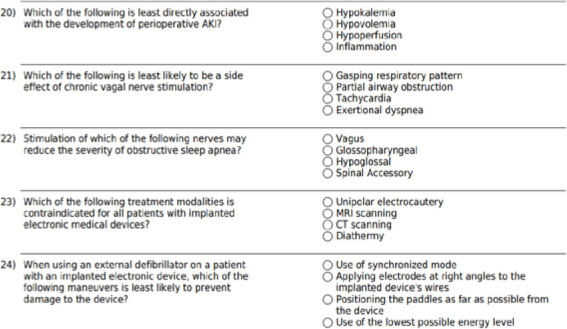

Appendix 4. CME Knowledge Quiz

Footnotes

* Details of the Vanderbilt system are provided later. To compare to a live online CME system, see https://www.asahq.org/shop-asa/e020j00w00 (accessed December 3, 2020).

† The web app used for this study is named QuizTime and was developed by the Vanderbilt University School of Medicine (https://quiztime.app.vumc.org/). It is currently not available for commercial purchase and use but likely will be in the future.

Funding: This study was performed with intramural funding from the Vanderbilt University Department of Anesthesiology and School of Medicine.

References

- 1.Kerfoot BP, Baker H. An online spaced-education game for global continuing medical education: a randomized trial. Ann Surg. 2012;256(1):33–8. doi: 10.1097/SLA.0b013e31825b3912. [DOI] [PubMed] [Google Scholar]

- 2.Al-Azri H, Ratnapalan S. Problem-based learning in continuing medical education: review of randomized controlled trials. Can Fam Physician. 2014;60(2):157–65. [PMC free article] [PubMed] [Google Scholar]

- 3.Shaw T, Long A, Chopra S, Kerfoot BP. Impact on clinical behavior of face-to-face continuing medical education blended with online spaced education: a randomized controlled trial. J Contin Educ Health Prof. 2011;31(2):103–8. doi: 10.1002/chp.20113. [DOI] [PubMed] [Google Scholar]

- 4.Curran VR, Fleet LJ, Kirby F. A comparative evaluation of the effect of Internet-based CME delivery format on satisfaction, knowledge and confidence. BMC Med Educ. 2010;10:10. doi: 10.1186/1472-6920-10-10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Mazzoleni MC, Rognoni C, Finozzi E, et al. Usage and effectiveness of e-learning courses for continuous medical education. Stud Health Technol Inform. 2009;150:921–5. [PubMed] [Google Scholar]

- 6.Casebeer L, Engler S, Bennett N, et al. A controlled trial of the effectiveness of internet continuing medical education. BMC Med. 2008;6:37. doi: 10.1186/1741-7015-6-37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Sargeant J, Curran V, Jarvis-Selinger S, et al. Interactive on-line continuing medical education: physicians’ perceptions and experiences. J Contin Educ Health Prof. 2004;24:227–36. doi: 10.1002/chp.1340240406. [DOI] [PubMed] [Google Scholar]

- 8.Roediger HL, 3rd, Butler AC. The critical role of retrieval practice in long-term retention. Trends Cogn Sci. 2011;15(1):20–7. doi: 10.1016/j.tics.2010.09.003. [DOI] [PubMed] [Google Scholar]

- 9.Moore DE, Jr, Green JS, Gallis HA. Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29:1–15. doi: 10.1002/chp.20001. [DOI] [PubMed] [Google Scholar]

10. Cervero RM, Gaines JK. The impact of CME on physician performance and patient health outcomes: an updated synthesis of systematic reviews. J Contin Educ Health Prof. 2015;35(2):131–8. doi: 10.1002/chp.21290.

11. Magarik M, Fowler LC, Robertson A, et al. There’s an app for that: a case study on the impact of spaced education on ordering CT examinations. J Am Coll Radiol. 2019;16(3):360–4. doi: 10.1016/j.jacr.2018.10.024.

12. Kerfoot BP, Lawler EV, Sokolovskaya G, Gagnon D, Conlin PR. Durable improvements in prostate cancer screening from online spaced education: a randomized controlled trial. Am J Prev Med. 2010;39(5):472–8. doi: 10.1016/j.amepre.2010.07.016.

13. Tabibian B, Upadhyay U, De A, et al. Enhancing human learning via spaced repetition optimization. Proc Natl Acad Sci U S A. 2019;116(10):3988–93. doi: 10.1073/pnas.1815156116.

14. Weidman J, Baker K. The cognitive science of learning: concepts and strategies for the educator and learner. Anesth Analg. 2015;121(6):1586–99. doi: 10.1213/ANE.0000000000000890.

15. Martinelli SM, Isaak RS, Schell RM, et al. Learners and Luddites in the twenty-first century: bringing evidence-based education to anesthesiology. Anesthesiology. 2019;131(4):908–28. doi: 10.1097/ALN.0000000000002827.

16. Phillips JL, Heneka N, Bhattarai P, Fraser C, Shaw T. Effectiveness of the spaced education pedagogy for clinicians’ continuing professional development: a systematic review. Med Educ. 2019;53(9):886–902. doi: 10.1111/medu.13895.

17. Larsen DP, Butler AC, Roediger HL, 3rd. Repeated testing improves long-term retention relative to repeated study: a randomised controlled trial. Med Educ. 2009;43(12):1174–81. doi: 10.1111/j.1365-2923.2009.03518.x.

18. Deasy C, Bray JE, Smith K, et al. Resuscitation of out-of-hospital cardiac arrests in residential aged care facilities in Melbourne, Australia. Resuscitation. 2012;83(1):58–62. doi: 10.1016/j.resuscitation.2011.06.030.

19. Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003;37(9):830–7. doi: 10.1046/j.1365-2923.2003.01594.x.

20. Gordon RJ, Lombard FW. Perioperative venous thromboembolism: a review. Anesth Analg. 2017;125(2):403–12. doi: 10.1213/ANE.0000000000002183.

21. Hah JM, Bateman BT, Ratliff J, Curtin C, Sun E. Chronic opioid use after surgery: implications for perioperative management in the face of the opioid epidemic. Anesth Analg. 2017;125(5):1733–40. doi: 10.1213/ANE.0000000000002458.

22. Meersch M, Schmidt C, Zarbock A. Perioperative acute kidney injury: an under-recognized problem. Anesth Analg. 2017;125(4):1223–32. doi: 10.1213/ANE.0000000000002369.

23. Srejic U, Larson P, Bickler PE. Little black boxes: noncardiac implantable electronic medical devices and their anesthetic and surgical implications. Anesth Analg. 2017;125:124–38. doi: 10.1213/ANE.0000000000001983.

24. Sridhar S, Gumbert SD, Stephens C, Moore LJ, Pivalizza EG. Resuscitative endovascular balloon occlusion of the aorta: principles, initial clinical experience, and considerations for the anesthesiologist. Anesth Analg. 2017;125:884–90. doi: 10.1213/ANE.0000000000002150.

25. Murphy GS. Neuromuscular monitoring in the perioperative period. Anesth Analg. 2018;126(2):464–8. doi: 10.1213/ANE.0000000000002387.

26. Bjork RA. Commentary on the potential of the MOCA Minute program. Anesthesiology. 2016;125:844–5. doi: 10.1097/ALN.0000000000001302.

27. Sun H, Zhou Y, Culley DJ, et al. Association between participation in an intensive longitudinal assessment program and performance on a cognitive examination in the Maintenance of Certification in Anesthesiology program. Anesthesiology. 2016;125(5):1046–55. doi: 10.1097/ALN.0000000000001301.

28. Kerfoot BP. Interactive spaced education versus web based modules for teaching urology to medical students: a randomized controlled trial. J Urol. 2008;179(6):2351–6; discussion 2356–7. doi: 10.1016/j.juro.2008.01.126.

29. Kerfoot BP. Learning benefits of on-line spaced education persist for 2 years. J Urol. 2009;181(6):2671–3. doi: 10.1016/j.juro.2009.02.024.

30. Kerfoot BP. Adaptive spaced education improves learning efficiency: a randomized controlled trial. J Urol. 2010;183(2):678–81. doi: 10.1016/j.juro.2009.10.005.

31. Kerfoot BP, Kearney MC, Connelly D, Ritchey ML. Interactive spaced education to assess and improve knowledge of clinical practice guidelines: a randomized controlled trial. Ann Surg. 2009;249(5):744–9. doi: 10.1097/SLA.0b013e31819f6db8.

32. Manuel SP, Grewal GK, Lee JS. Millennial resident study habits and factors that influence American Board of Anesthesiology in-training examination performance: a multi-institutional study. J Educ Perioper Med. 2018;20(2):E623.