Abstract

Humans are remarkably adept in listening to a desired speaker in a crowded environment, while filtering out nontarget speakers in the background. Attention is key to solving this difficult cocktail-party task, yet a detailed characterization of attentional effects on speech representations is lacking. It remains unclear across what levels of speech features and how much attentional modulation occurs in each brain area during the cocktail-party task. To address these questions, we recorded whole-brain blood-oxygen-level-dependent (BOLD) responses while subjects either passively listened to single-speaker stories, or selectively attended to a male or a female speaker in temporally overlaid stories in separate experiments. Spectral, articulatory, and semantic models of the natural stories were constructed. Intrinsic selectivity profiles were identified via voxelwise models fit to passive listening responses. Attentional modulations were then quantified based on model predictions for attended and unattended stories in the cocktail-party task. We find that attention causes broad modulations at multiple levels of speech representations while growing stronger toward later stages of processing, and that unattended speech is represented up to the semantic level in parabelt auditory cortex. These results provide insights on attentional mechanisms that underlie the ability to selectively listen to a desired speaker in noisy multispeaker environments.

Keywords: cocktail-party, dorsal and ventral stream, encoding model, fMRI, natural speech

Introduction

Humans are highly adept at perceiving a target speaker in crowded multispeaker environments (Shinn-Cunningham and Best 2008; Kidd and Colburn 2017; Li et al. 2018). Auditory attention is key to behavioral performance in this difficult “cocktail-party problem” (Cherry 1953; Fritz et al. 2007; McDermott 2009; Bronkhorst 2015; Shinn-Cunningham et al. 2017). Literature consistently reports that attention selectively enhances cortical responses to the target stream in auditory cortex and beyond, while filtering out nontarget background streams (Hink and Hillyard 1976; Teder et al. 1993; Alho et al. 1999, 2003, 2014; Jäncke et al. 2001, 2003; Lipschutz et al. 2002; Rinne et al. 2008; Rinne 2010; Elhilali et al. 2009; Gutschalk and Dykstra 2014). However, the precise link between the response modulations and underlying speech representations is less clear. Speech representations are hierarchically organized across multiple stages of processing in cortex, with each stage selective for diverse information ranging from low-level acoustic to high-level semantic features (Davis and Johnsrude 2003; Griffiths and Warren 2004; Hickok and Poeppel 2004, 2007; Rauschecker and Scott 2009; Okada et al. 2010; Friederici 2011; Di Liberto et al. 2015; de Heer et al. 2017; Brodbeck et al. 2018a). Thus, a principal question is to what extent attention modulates these multilevel speech representations in the human brain during a cocktail-party task (Miller 2016; Simon 2017).

Recent electrophysiology studies on the cocktail-party problem have investigated attentional response modulations for natural speech stimuli (Kerlin et al. 2010; Ding and Simon 2012a, 2012b; Mesgarani and Chang 2012; Power et al. 2012; Zion Golumbic et al. 2013; Puvvada and Simon 2017; Brodbeck et al. 2018b; O’Sullivan et al. 2019; Puschmann et al. 2019). Ding and Simon (2012a, 2012b) fit spectrotemporal encoding models to predict cortical responses from the speech spectrogram. Attentional modulation in the peak amplitude of spectrotemporal response functions was reported in planum temporale in favor of the attended speech. Mesgarani and Chang (2012) built decoding models to estimate the speech spectrogram from responses measured during passive listening and examined the similarity of the decoded spectrogram during a cocktail-party task to the isolated spectrograms of attended versus unattended speech. They found higher similarity to attended speech in nonprimary auditory cortex. Zion Golumbic et al. (2013) reported amplitude modulations in speech-envelope response functions toward attended speech across auditory, inferior temporal, frontal, and parietal cortices. Other studies using decoding models have similarly reported higher decoding performance for the speech envelope of the attended stream in auditory, prefrontal, motor, and somatosensory cortices (Puvvada and Simon 2017; Puschmann et al. 2019). Brodbeck et al. (2018b) further identified peak amplitude response modulations for sublexical features including word onset and cohort entropy in temporal cortex. Note that because these electrophysiology studies fit models for acoustic or sublexical features, the reported attentional modulations primarily comprised relatively low-level speech representations.

Several neuroimaging studies have also examined whole-brain cortical responses to natural speech in a cocktail-party setting (Nakai et al. 2005; Alho et al. 2006; Hill and Miller 2010; Ikeda et al. 2010; Wild et al. 2012; Regev et al. 2019; Wikman et al. 2021). In the study of Hill and Miller (2010), subjects were given an attention cue (attend to pitch, attend to location or rest) and later exposed to multiple speech stimuli where they performed the cued task. Partly overlapping frontal and parietal activations were reported, during both the cue and the stimulus exposure periods, as an effect of attention to pitch or location in contrast to rest. Furthermore, pitch-based attention was found to elicit higher responses in bilateral posterior and right middle superior temporal sulcus, whereas location-based attention elicited higher responses in left intraparietal sulcus. In alignment with electrophysiology studies, these results suggest that attention modulates relatively low-level speech representations comprising paralinguistic features. In a more recent study, Regev et al. (2019) measured responses under 2 distinct conditions: while subjects were presented bimodal speech-text stories and asked to attend to either the auditory or visual stimulus, and while subjects were presented unimodal speech or text stories. Intersubject response correlations were measured between unimodal and bimodal conditions. Broad attentional modulations in response correlation were reported from primary auditory cortex to temporal, parietal, and frontal regions in favor of the attended modality. Although this finding raises the possibility that attention might also affect representations in higher-order regions, a systematic characterization of individual speech features that drive attentional modulations across cortex is lacking.

An equally important question regarding the cocktail-party problem is whether unattended speech streams are represented in cortex despite the reported modulations in favor of the target stream (Bronkhorst 2015; Miller 2016). Electrophysiology studies on this issue identified representations of low-level spectrogram and speech envelope features of unattended speech in early auditory areas (Mesgarani and Chang 2012; Ding and Simon 2012a, 2012b; Zion Golumbic et al. 2013; Puvvada and Simon 2017; Brodbeck et al. 2018b; Puschmann et al. 2019), but no representations of linguistic features (Brodbeck et al. 2018b). Meanwhile, a group of neuroimaging studies found broader cortical responses to unattended speech in superior temporal cortex (Scott et al. 2004, 2009a; Wild et al. 2012; Scott and McGettigan 2013; Evans et al. 2016; Regev et al. 2019). Specifically, Wild et al. (2012) and Evans et al. (2016) reported enhanced activity associated with the intelligibility of unattended stream in parts of superior temporal cortex extending to superior temporal sulcus. Although this implies that responses in relatively higher auditory areas carry some information regarding unattended speech stimuli, the specific features of unattended speech that are represented across the cortical hierarchy of speech is lacking.

Here we investigated whether and how attention affects representations of attended and unattended natural speech across cortex. To address these questions, we systematically examined multilevel speech representations during a diotic cocktail-party task using naturalistic stimuli. Whole-brain BOLD responses were recorded in 2 separate experiments (Fig. 1) while subjects were presented engaging spoken narratives from “The Moth Radio Hour.” In the passive-listening experiment, subjects listened to single-speaker stories for over 2 h. Separate voxelwise models were fit that measured selectivity for spectral, articulatory, and semantic features of natural speech during passive listening (de Heer et al. 2017). In the cocktail-party experiment, subjects listened to temporally overlaid speech streams from 2 speakers while attending to a target category (male or female speaker). To assess attentional modulation in functional selectivity, voxelwise models fit during passive listening were used to predict responses for the cocktail-party experiment. Model performances were calculated separately for attended and unattended stories. Attentional modulation was taken as the difference between these 2 performance measurements. Comprehensive analyses were conducted to examine the intrinsic complexity and attentional modulation of multilevel speech representations and to investigate up to what level of speech features unattended speech is represented across cortex.

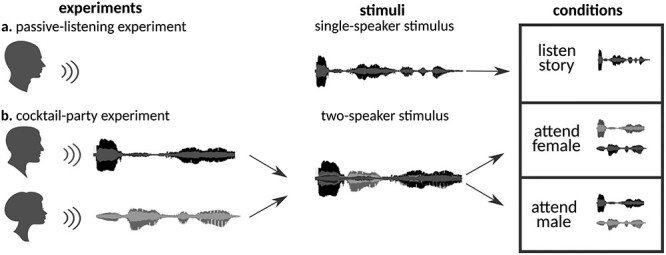

Figure 1 .

Experimental design. (a) “Passive-listening experiment.” 10 stories from Moth Radio Hour were used to compile a single-speaker stimulus set. Subjects were instructed to listen to the stimulus vigilantly without any explicit task in the passive-listening experiment. (b) “Cocktail-party experiment.” A pair of stories told by individuals of different genders were selected from the single-speaker stimulus set and overlaid temporally to generate a 2-speaker stimulus set. Subjects were instructed to attend either to the male or female speaker in the cocktail-party experiment. The same 2-speaker story was presented twice in separate runs while the target speaker was varied. Attention condition was fixed within runs and it alternated across runs.

Materials and Methods

Participants

Functional data were collected from 5 healthy adult native subjects (4 males and one female; aged between 26 and 31) who had no reported hearing problems and were native English speakers. The experimental procedures were approved by the Committee for the Protection of Human Subjects at University of California, Berkeley. Written informed consent was obtained from all subjects.

Stimuli

Figure 1 illustrates the 2 main types of stimuli used in the experiments: single-speaker stories and 2-speaker stories. Ten single-speaker stories were taken from The Moth Radio Program: “Alternate Ithaca Tom” by Tom Weiser; “How to Draw a Nekkid Man” by Tricia Rose Burt; “Life Flight” by Kimberly Reed; “My Avatar and Me” by Laura Albert; “My First Day at the Yankees” by Matthew McGough; “My Unhurried Legacy” by Kyp Malone; “Naked” by Catherine Burns; “Ode to Stepfather” by Ethan Hawke; “Targeted” by Jen Lee; and “Under the Influence” by Jeffery Rudell. All stories were told before a live audience by a male or female speaker, and they were about 10–15 min long. Each 2-speaker story was generated by temporally overlaying a pair of stories told by different genders and selected from the single-speaker story set. When the durations of the 2 single-speaker stories differed, the longer story was clipped from the end to match durations. Three 2-speaker stories were prepared: from “Targeted” and “Ode to Stepfather” (cocktail1); from “How to Draw a Nekkid Man” and “My First Day at the Yankees” (cocktail2); and from “Life Flight” and “Under the Influence” (cocktail3). In the end, the stimuli consisted of 10 single-speaker and three 2-speaker stories.

Experimental Procedures

Figure 1 outlines the 2 main experiments conducted in separate sessions: passive-listening and cocktail-party experiments. In the passive-listening experiment, subjects were instructed to listen to single-speaker stories vigilantly without an explicit attentional target. To facilitate sustained vigilance, we picked engaging spoken narratives from the Moth Radio Hour (see Stimuli). Each of the 10 single-speaker stories was presented once in a separate run of the experiment. Two 2-hour sessions were conducted, resulting in 10 runs of passive-listening data for each subject. In the cocktail-party experiment, subjects were instructed to listen to 2-speaker stories while attending to a target speaker (either the male or the female speaker). Our experimental design focuses on attentional modulations of speech representations when a stimulus is attended versus unattended. Each of the 3 cocktail-stories was presented twice in separate runs. This allowed us to present the same stimulus set in attended and unattended conditions to prevent potential biases due to across condition stimulation differences. To minimize adaptation effects, different 2-speaker stories were presented in consecutive runs while maximizing the time window between repeated presentations of a 2-speaker story. Attention condition alternated across consecutive runs. An exemplary sequence of runs was: cocktail1-M (attend to male speaker in cocktail1), cocktail2-F (attend to female speaker in cocktail2), cocktail3-M, cocktail1-F, cocktail2-M, and cocktail3-F. The first attention condition assigned to each 2-speaker story (M or F) was counterbalanced across subjects. This resulted in a balanced assignment of “attended” versus “unattended” conditions during the second exposure to each 2-speaker story. Furthermore, for each subject, the second exposure to half of the single-speaker stories (3 out of 6 included within the 2-speaker stories) coincided with the “attended” condition, whereas the second exposure to the other half coincided with the “unattended” condition. Hence, second exposure to each story was balanced across “attended” and “unattended” conditions both within and across subjects. A 2-hour session was conducted, resulting in 6 runs of cocktail-data. Note that the 2-speaker stories used in the cocktail-party experiment were constructed from the single-speaker story set used in passive-listening experiment. Hence, for each subject, the cocktail-party experiment was conducted several months (~5.5 months) after the completion of the passive-listening experiment to minimize potential repetition effects. The dataset collected from the passive-listening experiment was previously analyzed (Huth et al. 2016; de Heer et al. 2017); however, the dataset collected from the cocktail-party experiment was specifically collected for this study.

In both experiments, the length of each run was tailored to the length of the story stimulus with additional 10 s of silence both before and after the stimulus. All stimuli were played at 44.1 kHz and delivered binaurally to both ears using Sensimetrics S14 in-ear piezo-electric headphones. The Sensimetrics S14 is a magnetic resonance imaging (MRI)-compatible auditory stimulation system with foam canal tips to reduce scanner noise (above 29 dB as stated in specifications). The frequency response of the headphones was flattened using a Behringer Ultra-Curve Pro Parametric Equalizer. Furthermore, the level of sound was adjusted for each subject to ensure clear and comfortable hearing of the stories.

MRI Data Collection and Preprocessing

MRI data were collected on a 3T Siemens TIM Trio scanner at the Brain Imaging Center, UC Berkeley, using a 32-channel volume coil. For functional scans, a gradient echo EPI sequence was used with TR = 2.0045 s, TE = 31 ms, flip angle = 70°, voxel size = 2.24 × 2.24 × 4.1 mm3, matrix size = 100 × 100, field of view = 224 × 224 mm2 and 32 axial slices covering the entire cortex. For anatomical data, a T1-weighted multiecho MP-RAGE sequence was used with voxel size = 1 × 1 × 1 mm3 and field of view = 256 × 212 × 256 mm3.

Each functional run was motion corrected using FMRIB’s Linear Image Registration Tool (FLIRT) (Jenkinson and Smith 2001). A cascaded motion-correction procedure was performed, where separate transformation matrices were estimated within single runs, within single sessions and across sessions sequentially. To do this, volumes in each run were realigned to the mean volume of the run. For each session, the mean volume of each run was then realigned to the mean volume of the first run in the session (see Supplementary Table 1 for within-session motion statistics during the cocktail-party experiment). Lastly, the mean volume of the first run of each session was realigned to the mean volume of the first run of the first session of the passive-listening experiment. The estimated transformation matrices were concatenated and applied in a single step. Motion-corrected data were manually checked to ensure that no major realignment errors remained. The moment-to-moment variations in head position were also estimated and used as nuisance regressors during model estimation to regress out motion-related nuisance effects from BOLD responses. The Brain Extraction Tool in FSL 5.0 (Smith 2002) was used to remove nonbrain tissues. This resulted in 68 016–84 852 brain voxels in individual subjects. All model fits and analyses were performed on these brain voxels in volumetric space.

Visualization on Cortical Flatmaps

Cortical flatmaps were used for visualization purposes, where results in volumetric space were projected onto the cortical surfaces using PyCortex (Gao et al. 2015). Cortical surfaces were reconstructed from anatomical data using Freesurfer (Dale et al. 1999). Five relaxation cuts were made into the surface of each hemisphere, and the surface crossing the corpus callosum was removed. Functional data were aligned to the individual anatomical data with affine transformations using FLIRT (Jenkinson and Smith 2001). Cortical flatmaps were constructed for visualization of significant model prediction scores, functional selectivity and attentional modulation profiles, and representational complexity and modulation gradients.

ROI Definitions and Abbreviations

We defined region of interests for each subject based on an atlas-based parcellation of the cortex (Destrieux et al. 2010). To do this, functional data were coregistered to the individual-subject anatomical scans with affine transformations using FLIRT (Jenkinson and Smith 2001). Individual-subject anatomical data were then registered to the Freesurfer standard anatomical space via the boundary-based registration tool in FSL (Greve and Fischl 2009). This procedure resulted in subject-specific transformations mapping between the standard anatomical space and the functional space of individual subjects. Anatomical regions of interest from the Destrieux atlas were outlined in the Freesurfer standard anatomical space; and they were back-projected onto individual-subject functional spaces via the subject-specific transformations using PyCortex (Gao et al. 2015). The anatomical regions were labeled according to the atlas. To explore potential selectivity gradients across the lateral aspects of Superior Temporal Gyrus and Superior Temporal Sulcus, these ROIs were further split into 3 equidistant subregions in posterior-to-anterior direction. Heschl’s Gyrus and Heschl’s Sulcus were considered as a single ROI as prior reports suggest that primary auditory cortex is not constrained by Heschl’s Gyrus and extends to Heschl’s Sulcus as well (Woods et al. 2009, 2010; da Costa et al. 2011). We only considered regions with at least 10 speech-selective voxels in each individual subject for subsequent analyses.

Supplementary Table 2 lists the defined ROIs and the number of spectrally, articulatorily, and semantically selective voxels within each ROI, with number of speech-selective voxels. ROI abbreviations and corresponding Destrieux indices are Heschl’s Gyrus and Heschl’s Sulcus (HG/HS: 33 and 74), Planum Temporale (PT: 36), posterior segment of Slyvian Fissure (pSF: 41), lateral aspect of Superior Temporal Gyrus (STG: 34), Superior Temporal Sulcus (STS, 73), Middle Temporal Gyrus (MTG: 38), Angular Gyrus (AG: 25), Supramarginal Gyrus (SMG: 26), Intraparietal Sulcus (IPS: 56), opercular part of Inferior Frontal Gyrus/Pars Opercularis (POP: 12), triangular part of Inferior Frontal Gyrus/Pars Triangularis (PTR: 14), Precentral Gyrus (PreG: 29), medial Occipito-Temporal Sulcus (mOTS:60), Inferior Frontal Sulcus (IFS: 52), Middle Frontal Gyrus (MFG:15), Middle Frontal Sulcus (MFS: 53), Superior Frontal Sulcus (SFS: 54), Superior Frontal Gyrus (SFG: 16), Precuneus (PreC: 30), Subparietal Sulcus (SPS: 71), and Posterior Cingulate Cortex (PCC: 9 and 10). The subregions of STG are aSTG (anterior one-third of STG), mSTG (middle one-third of STG), and pSTG (posterior one-third of STG). The subregions of STS are aSTS (anterior one-third of STS), mSTS (middle one-third of STS) and pSTS (posterior one-third of STS). MTG was not split into subregions since these subregions did not have a sufficient number of speech-selective voxels in each individual subject.

Model Construction

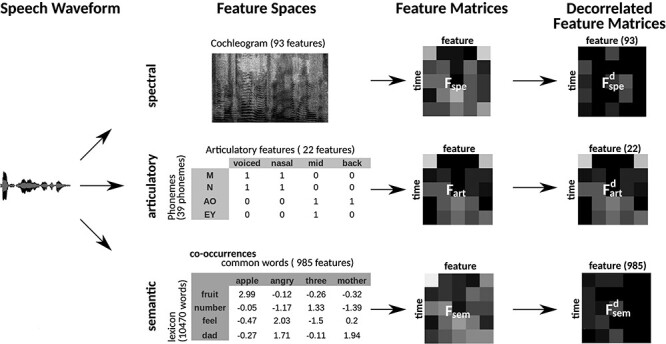

To comprehensively assess speech representations, we constructed spectral, articulatory, and semantic models of the speech stimuli (Fig. 2; de Heer et al. 2017).

Figure 2 .

Multilevel speech features. Three distinct feature spaces were constructed to represent natural speech at multiple levels: spectral, articulatory, and semantic spaces. Speech waveforms were projected separately on these spaces to form stimulus matrices. The spectral feature matrix captured the cochleogram features of the stimulus in 93 channels having center frequencies between 115 and 9920 Hz. The articulatory feature matrix captured the mapping of each phoneme in the stimulus to 22 binary articulation features. The semantic feature matrix captured the statistical co-occurrences of each word in the stimulus with 985 common words in English. Each feature matrix was Lanczos-filtered at a cutoff frequency of 0.25 Hz and downsampled to 0.5 Hz to match the sampling rate of fMRI. Natural speech might contain intrinsic stimulus correlations among spectral, articulatory, and semantic features. To prevent potential biases due to stimulus correlations, we decorrelated the 3 feature matrices examined here via Gram–Schmidt orthogonalization (see Materials and Methods). The decorrelated feature matrices were used for modeling BOLD responses.

Spectral Model

For the spectral model, cochleogram features of speech were estimated based on Lyon’s Passive Ear model. Lyon’s human cochlear model involves logarithmic filtering, compression and adaptive gain control operations applied to input sound (Lyon 1982; Slaney 1998; Gill et al. 2006). Depending on the sampling rate of the input signal, the cochlear model generates 118 waveforms with center frequencies between ~ 84 Hz and ~ 21 kHz. Considering the frequency response of the headphones used in the experiment, 93 waveforms with center frequencies between 115 Hz and 9920 Hz were selected as the features of the spectral model. The spectral features were Lanczos-filtered at a cutoff frequency of 0.25 Hz and downsampled to 0.5 Hz to match the sampling rate of functional MRI. The 93 spectral features were then temporally z-scored to zero mean and unit variance.

Articulatory Model

For the articulatory model, each phoneme in the stories was mapped onto a unique set of 22 articulation features; for example, phoneme /3/ is postalveolar, fricative, and voiced (Levelt 1993; de Heer et al. 2017). This mapping resulted in 22-dimensional binary vectors for each phoneme. To obtain the timestamp of each phoneme and word in the stimuli, the speech in the stories was aligned with the story transcriptions using the Penn Phonetics Lab Forced Aligner (Yuan and Liberman 2008). Alignments were manually verified and corrected using Praat (www.praat.org). The articulatory features were Lanczos-filtered at a cutoff frequency of 0.25 Hz and downsampled to 0.5 Hz. Finally, the 22 articulatory features were z-scored to zero mean and unit variance.

Semantic Model

For the semantic model, co-occurrence statistics of words were measured via a large corpus of text (Mitchell et al. 2008; Huth et al. 2016; de Heer et al. 2017). The text corpus was compiled from 2 405 569 Wikipedia pages, 36 333 459 user comments scraped from reddit.com, 604 popular books, and the transcripts of 13 Moth stories (including the stories used as stimuli). We then built a 10 470-word lexicon from the union set of the 10 000 most common words in the compiled corpus and all words appearing in the 10 Moth stories used in the experiment. Basis words were then selected as a set of 985 unique words from Wikipedia’s List of 1000 Basic Words. Co-occurrence statistics of the lexicon words with 985 basis words within a 15-word window were characterized as a co-occurrence matrix of size 985 × 10 470. Elements of the resulting co-occurrence matrix were log-transformed, z-scored across columns to correct for differences in basis-word frequency, and z-scored across rows to correct for differences in lexicon-word frequency. Each word in the stimuli was then represented with a 985-dimensional co-occurrence vector based on the speech-transcription alignments. The semantic features were Lanczos-filtered at a cutoff frequency of 0.25 Hz and downsampled to 0.5 Hz. The 985 semantic features were finally z-scored to zero mean and unit variance.

Decorrelation of Feature Spaces

In natural stories, there might be potential correlations among certain spectral, articulatory, or semantic features. If significant, such correlations can partly confound assessments of model performance. To assess the unique contribution of each feature space to the explained variance in BOLD responses, a decorrelation procedure was first performed (Fig. 2). To decorrelate a feature matrix F of size  from a second feature matrix K of size

from a second feature matrix K of size  , we first found an orthonormal basis for the column space of K (

, we first found an orthonormal basis for the column space of K ( ) using economy-size singular value decomposition:

) using economy-size singular value decomposition:

|

where U contains left singular vectors as columns, V contains right singular vectors, and S contains the singular values. Left singular vectors were taken as the orthonormal basis for col{K}, and each column of F was decorrelated from it according to the following formula:

|

where  ,

,  are the column vectors of F and U respectively, and

are the column vectors of F and U respectively, and  is the column vectors of the decorrelated feature matrix,

is the column vectors of the decorrelated feature matrix,  . To decorrelate feature matrices for the models considered here, we took the original articulatory feature matrix as a reference, and decorrelated the spectral feature matrix from the articulatory feature matrix, and decorrelated the semantic feature matrix from both articulatory and spectral feature matrices. This decorrelation sequence was selected because spectral and articulatory features capture lower-level speech representations, and the articulatory feature matrix had the fewest number of features among all models. In the end, we obtained 3 decorrelated feature matrices whose columns had zero correlation with the columns of the other 2 matrices.

. To decorrelate feature matrices for the models considered here, we took the original articulatory feature matrix as a reference, and decorrelated the spectral feature matrix from the articulatory feature matrix, and decorrelated the semantic feature matrix from both articulatory and spectral feature matrices. This decorrelation sequence was selected because spectral and articulatory features capture lower-level speech representations, and the articulatory feature matrix had the fewest number of features among all models. In the end, we obtained 3 decorrelated feature matrices whose columns had zero correlation with the columns of the other 2 matrices.

Analyses

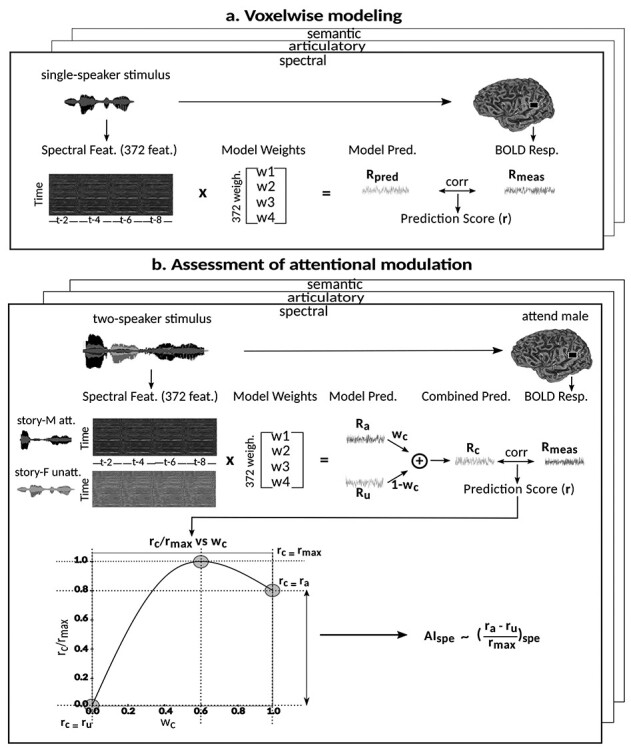

The main motivation of this study is to understand whether and how strongly various levels of speech representations are modulated across cortex during a cocktail-party task. To answer this question, we followed a 2-stage approach as illustrated in Figure 3. In the first stage, we identified voxels selective for speech features using data from the passive-listening experiment. To do this, we measured voxelwise selectivity separately for spectral, articulatory, and semantic features of the single-speaker stories. In the second stage, we used the models fit using passive-listening data to predict BOLD responses measured in the cocktail-party experiment. Prediction scores for attended versus unattended stories were compared to quantify the degree of attentional modulations, separately for each model and globally across all models.

Figure 3 .

Modeling procedures. (a) “Voxelwise modeling.” Voxelwise models were fit in individual subjects using passive-listening data. To account for hemodynamic response, a linearized 4-tap FIR filter spanning delayed effects at 2–8 s was used. Models were fit via L2-regularized linear regression. BOLD responses were predicted based on fit voxelwise models on held-out passive-listening data. Prediction scores were taken as the Pearson’s correlation between predicted and measured BOLD responses. For a given subject, speech-selective voxels were taken as the union of voxels significantly predicted by spectral, articulatory, or semantic models (q(FDR) < 10−5, t-test). (b) “Assessment of attentional modulation.” Passive-listening models for single voxels were tested on cocktail-party data to quantify attentional modulations in selectivity. In a given run, one of the speakers in a 2-speaker story was attended while the other speaker was ignored. Separate response predictions were obtained using the isolated story stimuli for the attended speaker and for the unattended speaker. Since a voxel can represent information from both attended and unattended stimuli, a linear combination of these predicted responses was considered with varying combination weights (wc in [0 1]). BOLD responses were predicted based on each combination weight separately. Three separate prediction scores were calculated based on only the attended stimulus (wc = 1), based on only the unattended stimulus (wc = 0), and based on the optimal combination of the 2 stimuli. A model-specific attention index, ( ) was then computed as the ratio of the difference in prediction scores for attended versus unattended stories to the prediction score for their optimal combination (see Materials and Methods).

) was then computed as the ratio of the difference in prediction scores for attended versus unattended stories to the prediction score for their optimal combination (see Materials and Methods).

Note that a subset of the 10 single-speaker stories was used to generate three 2-speaker stories used in the experiments. To prevent potential bias, a 3-fold cross-validation procedure was performed for testing models fit using passive-listening data on cocktail-party data. In each fold, models were fit using 8-run passive-listening data; and separately tested on 2-run passive-listening data and 2-run cocktail-party data. The same set of test stories were used both in the passive-listening and cocktail-party experiments to minimize risk of poor model generalization between the passive-listening and cocktail-party experiments due to uncontrolled stimulus differences. There was no overlap between the stories in the training and testing runs. Model predictions were aggregated across 3-fold, and prediction scores were then computed.

Voxelwise Modeling

In the first stage, we fit voxelwise models in individual subjects using passive-listening data. To account for hemodynamic delays, we used a linearized 4-tap finite impulse response (FIR) filter to allow different HRF shapes for separate brain regions (Goutte et al. 2000). Each model feature was represented as 4 features in the stimulus matrix to account for their delayed effects in BOLD responses at 2, 4, 6, and 8 s. Model weights,  , were then found using L2-regularized linear regression:

, were then found using L2-regularized linear regression:

|

Here,  is the regularization parameter,

is the regularization parameter,  is the decorrelated feature matrix for a given model and

is the decorrelated feature matrix for a given model and  is the aggregate BOLD response matrix for cortical voxels. A cross-validation procedure with 50 iterations was performed to find the best regularization parameter for each voxel among 30 equispaced values in log-space of

is the aggregate BOLD response matrix for cortical voxels. A cross-validation procedure with 50 iterations was performed to find the best regularization parameter for each voxel among 30 equispaced values in log-space of  . The training passive-listening data was split into 50 equisized chunks, where 1 chunk was reserved for validation and 49 chunks were reserved for model fitting at each iteration. Prediction scores were taken as Pearson’s correlation between predicted and measured BOLD responses. The optimal

. The training passive-listening data was split into 50 equisized chunks, where 1 chunk was reserved for validation and 49 chunks were reserved for model fitting at each iteration. Prediction scores were taken as Pearson’s correlation between predicted and measured BOLD responses. The optimal  value for each voxel was selected by maximizing the average prediction score across cross-validation folds. The final model weights were obtained using the entire set of training passive-listening data and the optimal

value for each voxel was selected by maximizing the average prediction score across cross-validation folds. The final model weights were obtained using the entire set of training passive-listening data and the optimal  . Next, we measured the prediction scores of the fit models on testing data from the passive-listening experiment. Spectrally, articulatorily, and semantically selective voxels were separately identified in each ROI based on the set of significantly predicted voxels by each model. A given ROI was considered selective for a model, only if it contained 10 or more significant voxels for that model

. Next, we measured the prediction scores of the fit models on testing data from the passive-listening experiment. Spectrally, articulatorily, and semantically selective voxels were separately identified in each ROI based on the set of significantly predicted voxels by each model. A given ROI was considered selective for a model, only if it contained 10 or more significant voxels for that model  . Speech-selective voxels within the ROI were then taken as the union of these spectrally, articulatorily, and semantically selective voxels. Subsequent analyses were performed on speech-selective voxels.

. Speech-selective voxels within the ROI were then taken as the union of these spectrally, articulatorily, and semantically selective voxels. Subsequent analyses were performed on speech-selective voxels.

- Model-specific selectivity index. Single-voxel prediction scores on passive-listening data were used to quantify the degree of selectivity of each ROI to the underlying model features under passive-listening. To do this, a model-specific selectivity index, (

), was defined as follows:

), was defined as follows:

where  is the average prediction score across speech-selective voxels within the ROI during passive-listening.

is the average prediction score across speech-selective voxels within the ROI during passive-listening.  is in the range of [0, 1], where higher values indicate stronger selectivity for the underlying model.

is in the range of [0, 1], where higher values indicate stronger selectivity for the underlying model.

- Complexity index. The complexity of speech representations was characterized via a complexity index, (

), which reflected the relative tuning of an ROI for low- versus high-level speech features. The following intrinsic complexity levels were assumed for the 3 speech models considered here: (

), which reflected the relative tuning of an ROI for low- versus high-level speech features. The following intrinsic complexity levels were assumed for the 3 speech models considered here: ( ,

,  ,

,  ) = (

) = ( ,

,  ,

,  ). Afterward,

). Afterward,  was taken as the average of the complexity levels weighted by the selectivity indices:

was taken as the average of the complexity levels weighted by the selectivity indices:

is in the range of [0, 1], where higher values indicate stronger tuning for semantic features and lower values indicate stronger tuning for spectral features.

is in the range of [0, 1], where higher values indicate stronger tuning for semantic features and lower values indicate stronger tuning for spectral features.

Assessment of Attentional Modulations

In the second stage, we tested the passive-listening models on cocktail-party data to quantify ROI-wise attentional modulation in selectivity for corresponding model features and to find the extent of the representation of unattended speech. These analyses were repeated separately for the 3 speech models.

Model-specific attention index. To quantify the attentional modulation in selectivity for speech features, we compared prediction scores for attended versus unattended stories in the cocktail-party experiment. Models fit using passive-listening data were used to predict BOLD responses elicited by 2-speaker stories. In each run, only one of the speakers in a 2-speaker story was attended, whereas the other speaker was ignored. Separate response predictions were obtained using the isolated story stimuli for the attended and unattended speakers. Since a voxel can represent information on both attended and unattended stimuli, a weighted linear combination of these predicted responses was considered:

|

Where  and

and  are the predicted responses for the attended and unattended stories in a given run;

are the predicted responses for the attended and unattended stories in a given run;  is the combined response and

is the combined response and  is the combination weight. We computed

is the combination weight. We computed  for each separate

for each separate  value in [0:0.1:1]. Note that

value in [0:0.1:1]. Note that  when

when  ; and

; and  when

when  . We then calculated single-voxel prediction scores for each

. We then calculated single-voxel prediction scores for each  value. An illustrative plot of

value. An illustrative plot of  versus

versus  is given in Figure 3b, where

is given in Figure 3b, where  denotes the prediction scores and

denotes the prediction scores and  denotes the maximum

denotes the maximum  value (the optimal combination).

value (the optimal combination).  and

and  are the prediction scores for attended and unattended stories respectively. To quantify the degree of attentional modulation, a model-specific attention index (

are the prediction scores for attended and unattended stories respectively. To quantify the degree of attentional modulation, a model-specific attention index ( ) was taken as:

) was taken as:

|

where  denotes an ideal upper limit for model performance, and

denotes an ideal upper limit for model performance, and  reflects the relative model performance under the cocktail-party task. Note that

reflects the relative model performance under the cocktail-party task. Note that  considers selectivity to the underlying model features when calculating the degree of attentional modulation.

considers selectivity to the underlying model features when calculating the degree of attentional modulation.

- Global attention index (

). We then computed

). We then computed  as follows:

as follows:

Both  and

and  are in the range [−1,1]. A positive index indicates attentional modulation of selectivity in favor of the attended stimuli and a negative index indicates attentional modulation in favor of the unattended stimuli. A value of zero indicates no modulation.

are in the range [−1,1]. A positive index indicates attentional modulation of selectivity in favor of the attended stimuli and a negative index indicates attentional modulation in favor of the unattended stimuli. A value of zero indicates no modulation.

Colormap in Selectivity and Modulation Profile Flatmaps

The cortical flatmaps of selectivity and modulation profiles use a colormap that shows the relative contributions of all 3 models to the selectivity and attention profiles. For selectivity profiles, a continuous colormap was created by assigning significantly positive articulatory, semantic and spectral selectivity to the red, green and blue (R, G, B) color channels, respectively. During assignment, selectivity values were normalized to sum of one, and then normalized to linearly map the interval [0.15 0.85] to [0 1]. Distinct colors were assigned to 6 landmark selectivity values: red for (1, 0, 0), green for (0, 1, 0), blue for (0, 0, 1), yellow for (0.5, 0.5, 0), magenta for (0.5, 0, 0.5), and turquoise for (0, 0.5, 0.5). The same procedures were also applied for creating a colormap for modulation profiles.

Statistical Tests

Significance Assessments within Subjects

For each voxel-wise model, the significance of prediction scores was assessed via a t-test; and resulting P values were false-discovery-rate corrected for multiple comparisons (FDR; Benjamini and Hochberg 1995).

A bootstrap test was used in assessments of  ,

,  ,

,  , and

, and  within single subjects. In ROI analyses, speech-selective voxels within a given ROI were resampled with replacement 10 000 times. For each bootstrap sample, mean prediction score of a given model was computed across resampled voxels. Significance level was taken as the fraction of bootstrap samples in which the test metric computed from these prediction scores is less than 0 (for right-sided tests) or greater than 0 (for left-sided tests). The same procedure was also used for comparing pairs of ROIs, where ROI voxels were resampled independently.

within single subjects. In ROI analyses, speech-selective voxels within a given ROI were resampled with replacement 10 000 times. For each bootstrap sample, mean prediction score of a given model was computed across resampled voxels. Significance level was taken as the fraction of bootstrap samples in which the test metric computed from these prediction scores is less than 0 (for right-sided tests) or greater than 0 (for left-sided tests). The same procedure was also used for comparing pairs of ROIs, where ROI voxels were resampled independently.

Significance Assessments Across Subjects

A bootstrap test was used in assessments of  ,

,  ,

,  , and

, and  across subjects. In ROI analyses, ROI-wise metrics were resampled across subjects with replacement 10 000 times. Significance level was taken as the fraction of bootstrap samples where the test metric averaged across resampled subjects is less than 0 (for right-sided tests) or greater than 0 (for left-sided tests). The same procedure was also used for comparisons among pairs of ROIs. Here, we used a more stringent significance definition for across-subjects tests that focuses on effects consistently observed in each individual subject. Therefore, an effect was taken significant only if the same metric was found significant in each individual subject.

across subjects. In ROI analyses, ROI-wise metrics were resampled across subjects with replacement 10 000 times. Significance level was taken as the fraction of bootstrap samples where the test metric averaged across resampled subjects is less than 0 (for right-sided tests) or greater than 0 (for left-sided tests). The same procedure was also used for comparisons among pairs of ROIs. Here, we used a more stringent significance definition for across-subjects tests that focuses on effects consistently observed in each individual subject. Therefore, an effect was taken significant only if the same metric was found significant in each individual subject.

Results

Attentional Modulation of Multilevel Speech Representations

To examine the cortical distribution and strength of attention modulations in speech representations, we first obtained a baseline measure of intrinsic selectivity for speech features. For this purpose, we fit voxelwise models using BOLD responses recorded during passive listening. We built 3 separate models containing low-level spectral, intermediate-level articulatory, and high-level semantic features of natural stories (de Heer et al. 2017). Supplementary Fig. 1 displays the cortical distribution of prediction scores for each model in a representative subject, and Supplementary Table 2 lists the number of significantly predicted voxels by each model in anatomical ROIs. We find “spectrally selective voxels” mainly in early auditory regions (bilateral HG/HS and PT; and left pSF) and bilateral SMG, and “articulatorily selective voxels” mainly in early auditory regions (bilateral HG/HS and PT; and left pSF), bilateral STG, STS, SMG, and MFS as well as left POP and PreG. In contrast, “semantically selective voxels” are found broadly across cortex except early auditory regions (bilateral HG/HS and right PT).

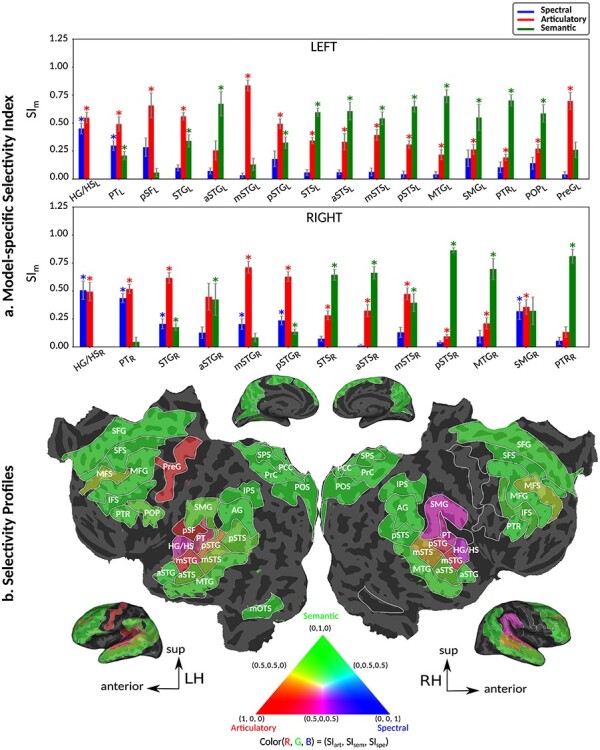

To quantitatively examine cortical overlap among spectral, articulatory, and semantic representations, we separately measured the degree of functional selectivity for each feature level via a model-specific selectivity index ( ; see Materials and Methods). Bar plots of selectivity indices are displayed in Figure 4a for perisylvian cortex and in Supplementary Fig. 2 for nonperisylvian cortex (see Supplementary Fig. 3a–e for single-subject results). Distinct selectivity profiles are observed from distributed selectivity for spectral, articulatory, and semantic features (e.g., left PT and right pSTG) to strong tuning to a single level of features (e.g., left IPS and PCC). The selectivity profiles of the ROIs are also visualized on the cortical flatmap projecting articulatory, semantic, and spectral selectivity indices of each ROI to the red, green, and blue channels of the RGB colormap as seen in Figure 4b (see Supplementary Fig. 4 for selectivity profile flatmaps in individual subjects; see Materials and Methods for colormap details). A progression from low–intermediate to high-level speech representations is apparent across bilateral temporal cortex in superior–inferior direction (HG/HS → mSTG → mSTS → MTG) consistently in all subjects. Furthermore, many higher-order regions in parietal (bilateral AG, IPS, SPS, PrC, PCC, and POS) and frontal cortices (bilateral PTR, IFS, MFG, SFS, and SFG; and left POP) manifest dominant semantic selectivity consistently in all subjects (P < 0.05; see Supplementary Fig. 3a–e for single-subject results). To examine the hierarchical organization of the speech representations in a finer scale, we also defined a complexity index,

; see Materials and Methods). Bar plots of selectivity indices are displayed in Figure 4a for perisylvian cortex and in Supplementary Fig. 2 for nonperisylvian cortex (see Supplementary Fig. 3a–e for single-subject results). Distinct selectivity profiles are observed from distributed selectivity for spectral, articulatory, and semantic features (e.g., left PT and right pSTG) to strong tuning to a single level of features (e.g., left IPS and PCC). The selectivity profiles of the ROIs are also visualized on the cortical flatmap projecting articulatory, semantic, and spectral selectivity indices of each ROI to the red, green, and blue channels of the RGB colormap as seen in Figure 4b (see Supplementary Fig. 4 for selectivity profile flatmaps in individual subjects; see Materials and Methods for colormap details). A progression from low–intermediate to high-level speech representations is apparent across bilateral temporal cortex in superior–inferior direction (HG/HS → mSTG → mSTS → MTG) consistently in all subjects. Furthermore, many higher-order regions in parietal (bilateral AG, IPS, SPS, PrC, PCC, and POS) and frontal cortices (bilateral PTR, IFS, MFG, SFS, and SFG; and left POP) manifest dominant semantic selectivity consistently in all subjects (P < 0.05; see Supplementary Fig. 3a–e for single-subject results). To examine the hierarchical organization of the speech representations in a finer scale, we also defined a complexity index,  , that reflects whether an ROI is relatively tuned for low-level spectral or high-level semantic features. A detailed investigation of the gradients in

, that reflects whether an ROI is relatively tuned for low-level spectral or high-level semantic features. A detailed investigation of the gradients in  across 2 main auditory streams (dorsal and ventral stream) was conducted (see Supplementary Results). These results corroborate the view that speech representations are hierarchically organized across cortex with partial overlap mostly in early and intermediate stages of speech processing.

across 2 main auditory streams (dorsal and ventral stream) was conducted (see Supplementary Results). These results corroborate the view that speech representations are hierarchically organized across cortex with partial overlap mostly in early and intermediate stages of speech processing.

Figure 4 .

Selectivity for multilevel speech features. (a) “Model-specific selectivity indices.” Single-voxel prediction scores on passive-listening data were used to quantify the selectivity of each ROI to underlying model features. Model-specific prediction scores were averaged across speech-selective voxels within each ROI and normalized such that the cumulative score from all models was 1. The resultant measure was taken as a model-specific selectivity index, ( ).

).  is in the range of [0, 1], where higher values indicate stronger selectivity for the underlying model. Bar plots display

is in the range of [0, 1], where higher values indicate stronger selectivity for the underlying model. Bar plots display  for spectral, articulatory, and semantic models (mean ± standard error of mean (SEM) across subjects). Significant indices are marked with * (P < 0.05; see Supplementary Fig. 3a–e for selectivity indices of individual subjects). ROIs in perisylvian cortex are displayed (see Supplementary Fig. 2 for nonperisylvian ROIs; see Materials and Methods for ROI abbreviations). ROIs in LH and RH are shown in the top and bottom panels, respectively. POPR and PreGR that did not have consistent speech selectivity in individual subjects were excluded (see Materials and Methods). (b) “Intrinsic selectivity profiles.” Selectivity profiles of cortical ROIs averaged across subjects are shown on the cortical flatmap of a representative subject (S4). Significant articulatory, semantic, and spectral selectivity indices of each ROI are projected to the red, green, and blue channels of the RGB colormap (see Materials and Methods). This analysis only included ROIs with consistent selectivity for speech features in each individual subject. Medial and lateral views of the inflated hemispheres are also shown. A progression from low–intermediate to high-level speech representations are apparent across bilateral temporal cortex in the superior–inferior direction; consistently in all subjects (see Supplementary Fig. 4 for selectivity profiles of individual subjects). Meanwhile, semantic selectivity is dominant in many higher-order regions within the parietal and frontal cortices (bilateral AG, IPS, SPS, PrC, PCC, POS, PTR, IFS, SFS, SFG, MFG, and left POP) (P < 0.05; see Supplementary Fig. 3a–e). These results support the view that speech representations are hierarchically organized across cortex with partial overlap between spectral, articulatory, and semantic representations in early to intermediate stages of auditory processing.

for spectral, articulatory, and semantic models (mean ± standard error of mean (SEM) across subjects). Significant indices are marked with * (P < 0.05; see Supplementary Fig. 3a–e for selectivity indices of individual subjects). ROIs in perisylvian cortex are displayed (see Supplementary Fig. 2 for nonperisylvian ROIs; see Materials and Methods for ROI abbreviations). ROIs in LH and RH are shown in the top and bottom panels, respectively. POPR and PreGR that did not have consistent speech selectivity in individual subjects were excluded (see Materials and Methods). (b) “Intrinsic selectivity profiles.” Selectivity profiles of cortical ROIs averaged across subjects are shown on the cortical flatmap of a representative subject (S4). Significant articulatory, semantic, and spectral selectivity indices of each ROI are projected to the red, green, and blue channels of the RGB colormap (see Materials and Methods). This analysis only included ROIs with consistent selectivity for speech features in each individual subject. Medial and lateral views of the inflated hemispheres are also shown. A progression from low–intermediate to high-level speech representations are apparent across bilateral temporal cortex in the superior–inferior direction; consistently in all subjects (see Supplementary Fig. 4 for selectivity profiles of individual subjects). Meanwhile, semantic selectivity is dominant in many higher-order regions within the parietal and frontal cortices (bilateral AG, IPS, SPS, PrC, PCC, POS, PTR, IFS, SFS, SFG, MFG, and left POP) (P < 0.05; see Supplementary Fig. 3a–e). These results support the view that speech representations are hierarchically organized across cortex with partial overlap between spectral, articulatory, and semantic representations in early to intermediate stages of auditory processing.

Next, we systematically examined attentional modulations at each level of speech representation during a diotic cocktail-party task. To do this, we recorded whole-brain BOLD responses while participants listened to temporally overlaid spoken narratives from 2 different speakers and attended to either a male or female speaker in these 2-speaker stories. We used the spectral, articulatory, and semantic models fit using passive-listening data to predict responses during the cocktail-party task. Since a voxel can represent information on both attended and unattended stimuli, response predictions were expressed as a convex combination of individual predictions for the attended and unattended story within each 2-speaker story. Prediction scores were computed based on estimated responses as the combination weights were varied in [0 1] (see Materials and Methods). Scores for the optimal combination model were compared against the scores from the individual models for attended and unattended stories. If the optimal combination model significantly outperforms the individual models, it indicates that the voxel represents information from both attended and unattended stimuli.

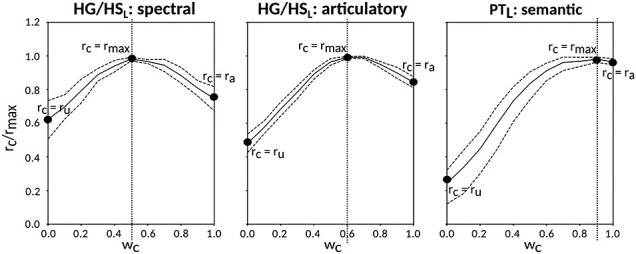

Figure 5 displays prediction scores of the spectral, articulatory, and semantic models as a function of the combination weight in representative ROIs, including HG, HS, and PT. Scores based on only attended story ( ), based on only the unattended story (

), based on only the unattended story ( ), and based on the optimal combination of the two (

), and based on the optimal combination of the two ( ) are marked. A diverse set of attentional effects are observed for each type of model. For the “spectral model” in left HG/HS, the optimal combination assigns matched weights to attended and unattended stories, and

) are marked. A diverse set of attentional effects are observed for each type of model. For the “spectral model” in left HG/HS, the optimal combination assigns matched weights to attended and unattended stories, and  is larger than

is larger than  (P < 10−4). This finding implies that spectral representations of the unattended story are mostly maintained; and there is no apparent bias toward the attended story at spectral level in left HG/HS. For the “articulatory model” in left HG/HS,

(P < 10−4). This finding implies that spectral representations of the unattended story are mostly maintained; and there is no apparent bias toward the attended story at spectral level in left HG/HS. For the “articulatory model” in left HG/HS,  is larger than

is larger than  (P < 10−4), whereas

(P < 10−4), whereas  is greater than

is greater than  (P < 10−2). Besides, the optimal combination gives slightly higher weight to the attended versus unattended story. This result suggests that attention moderately shifts articulatory representations in left HG/HS in favor of the attended stream such that articulatory representations of the unattended story are preserved to a degree. For the “semantic model” in left PT,

(P < 10−2). Besides, the optimal combination gives slightly higher weight to the attended versus unattended story. This result suggests that attention moderately shifts articulatory representations in left HG/HS in favor of the attended stream such that articulatory representations of the unattended story are preserved to a degree. For the “semantic model” in left PT,  is much higher than

is much higher than  (P < 10−4). Besides, the optimal combination assigns substantially higher weight to the attended story in this case. This finding indicates that attention strongly shifts semantic representations in left PT toward the attended stimulus. A simple inspection of these results suggests that attention may have distinct effects at various levels of speech representation across cortex. Hence, a detailed quantitative analysis is warranted to measure the effect of attention at each level.

(P < 10−4). Besides, the optimal combination assigns substantially higher weight to the attended story in this case. This finding indicates that attention strongly shifts semantic representations in left PT toward the attended stimulus. A simple inspection of these results suggests that attention may have distinct effects at various levels of speech representation across cortex. Hence, a detailed quantitative analysis is warranted to measure the effect of attention at each level.

Figure 5 .

Predicting cocktail-party responses. Passive-listening models were tested during the cocktail-party task by predicting BOLD responses in the cocktail-party data. Since a voxel might represent information from both attended and unattended stimuli, response predictions were expressed as a convex combination of individual predictions for the attended and unattended story within each 2-speaker story. Prediction scores were computed as the combination weights ( ) were varied in [0 1] (see Materials and Methods). Prediction scores for a given model were averaged across speech-selective voxels within each ROI (

) were varied in [0 1] (see Materials and Methods). Prediction scores for a given model were averaged across speech-selective voxels within each ROI ( ). The normalized scores of spectral, articulatory, and semantic models are displayed in several representative ROIs (HG/HS, HG/HS, and PT). Solid and dashed lines indicate mean and 95% confidence intervals across subjects. Scores based on only the attended story (

). The normalized scores of spectral, articulatory, and semantic models are displayed in several representative ROIs (HG/HS, HG/HS, and PT). Solid and dashed lines indicate mean and 95% confidence intervals across subjects. Scores based on only the attended story ( ), based on only the unattended story (

), based on only the unattended story ( ), and based on the optimal combination of the two (

), and based on the optimal combination of the two ( ) are marked with circles. For the “spectral model” in left HG/HS,

) are marked with circles. For the “spectral model” in left HG/HS,  is larger than

is larger than  (P < 10−4); and the optimal combination equally weighs attended and unattended stories. For the “articulatory model” in left HG/HS,

(P < 10−4); and the optimal combination equally weighs attended and unattended stories. For the “articulatory model” in left HG/HS,  is larger than

is larger than  (P < 10−4), whereas

(P < 10−4), whereas  is greater than

is greater than  (P < 10−2). Besides, the optimal combination puts slightly higher weight to attended story than unattended story. For the “semantic model” in left PT,

(P < 10−2). Besides, the optimal combination puts slightly higher weight to attended story than unattended story. For the “semantic model” in left PT,  is much higher than

is much higher than  (P < 10−4), and the optimal combination puts much greater weight to attended story than unattended one. These representative results imply that attention may have divergent effects at various levels of speech representations across cortex.

(P < 10−4), and the optimal combination puts much greater weight to attended story than unattended one. These representative results imply that attention may have divergent effects at various levels of speech representations across cortex.

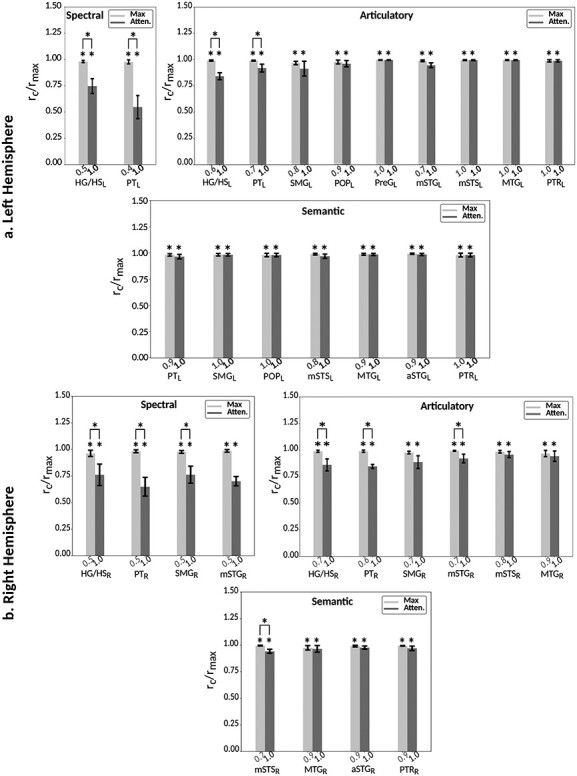

Level-Specific Attentional Modulations

To quantitatively assess the strength and direction of attentional modulations, we separately investigated the modulatory effects on spectral, articulatory, and semantic features across cortex. To measure modulatory effects at each feature level, a model-specific attention index ( ) was computed, reflecting the difference in model prediction scores when the stories were attended versus unattended (see Materials and Methods).

) was computed, reflecting the difference in model prediction scores when the stories were attended versus unattended (see Materials and Methods).  is in the range of [−1, 1]; a positive index indicates selectivity modulation in favor of the attended stimulus, whereas a negative index indicates selectivity modulation in favor of the unattended stimulus. A value of zero indicates no modulation.

is in the range of [−1, 1]; a positive index indicates selectivity modulation in favor of the attended stimulus, whereas a negative index indicates selectivity modulation in favor of the unattended stimulus. A value of zero indicates no modulation.

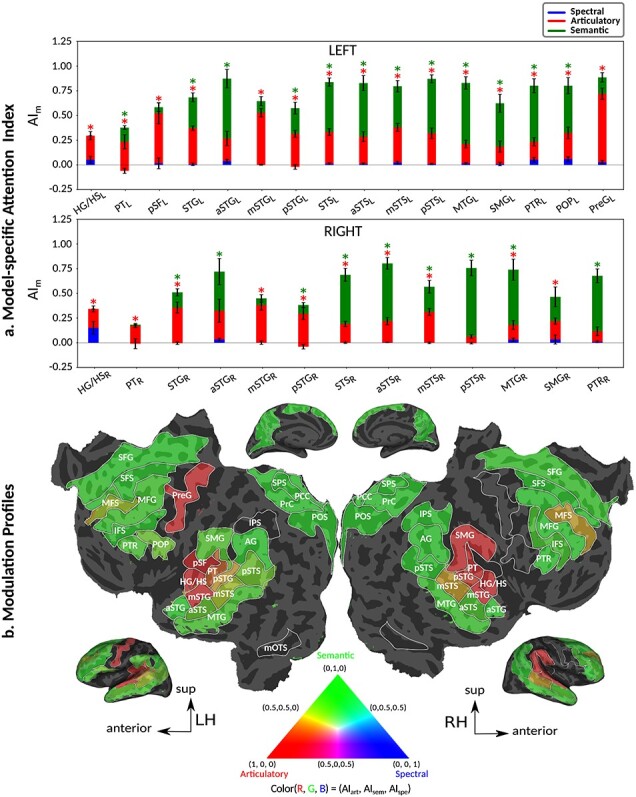

Figure 6a and Supplementary Figure 7 display the attention index for spectral, articulatory, and semantic models across perisylvian and nonperislyvian ROIs, respectively (see Supplementary Fig. 8a–e for single-subject results). The modulation profiles of the ROIs are also visualized on the cortical flatmap, projecting articulatory, semantic, and spectral attention indices to the red, green, and blue channels of the RGB colormap as seen in Figure 6b (see Supplementary Fig. 9 for modulation profile flatmaps in individual subjects). Here we discuss the attention index for each model individually. “Spectral modulation” is not consistently significant in each subject across perisylvian ROIs (P > 0.05). On the other hand, moderate spectral modulation is found in right SFG consistently in all subjects (P < 10−3). “Articulatory modulation” starts as early as HG/HS bilaterally (P < 10−3). In the dorsal stream, it extends to PreG and POP in the left hemisphere (LH) and to SMG in the right hemisphere (RH; P < 10−2); and it becomes dominant only in left PreG consistently in all subjects (P < 0.05). In the ventral stream, it extends to left PTR and bilateral MTG (P < 10−2). Articulatory modulation is also found—albeit generally less strongly—in frontal regions (bilateral MFS; left MFG; and right IFS and SFG) consistently in all subjects (P < 0.05). In the dorsal stream, “semantic modulation” starts in PT and extends to POP in LH (P < 10−2), whereas it is not apparent in the right dorsal stream (P > 0.05). In the ventral stream, semantic modulation starts in aSTG and mSTS bilaterally (P < 0.05). It extends to MTG and PTR, and becomes dominant in both ends of the bilateral ventral stream (P < 0.05). Lastly, semantic modulation is observed widespread across higher-order regions within frontal and parietal cortices consistently in all subjects (P < 0.05), with the exception of left IPS (P > 0.05). Taken together, these results suggest that attending to a target speaker alters articulatory and semantic representations broadly across cortex.

Figure 6 .

Attentional modulation of multilevel speech representations. (a) “Model-specific attention indices.” A model-specific attention index ( ) was computed based on the difference in model prediction scores when the stories were attended versus unattended (see Materials and Methods).

) was computed based on the difference in model prediction scores when the stories were attended versus unattended (see Materials and Methods).  is in the range of [−1,1], where a positive index indicates modulation in favor of the attended stimulus and a negative index indicates modulation in favor of the unattended stimulus. For each ROI in perisylvian cortex, spectral, articulatory, and semantic attention indices are given (mean ± SEM across subjects), and their sum yields the overall modulation (see Supplementary Fig. 7 for nonperisylvian ROIs). Significantly positive indices are marked with * (P < 0.05, bootstrap test; see Supplementary Fig. 8a–e for attention indices of individual subjects). ROIs in the LH and RH are shown in top and bottom panels, respectively. These results show that selectivity modulations distribute broadly across cortex at the linguistic level (articulatory and semantic). (b) “Attentional modulation profiles.” Modulation profiles averaged across subjects are displayed on the flattened cortical surface of a representative subject (S4). Significantly positive articulatory, semantic, and spectral attention indices are projected onto the red, green and blue channels of the colormap (see Materials and Methods). A progression in the level of speech representations dominantly modulated is apparent from HG/HS to MTG across bilateral temporal cortex (see Supplementary Fig. 9 for modulation profiles of individual subjects). Articulatory modulation is dominant in one end of the dorsal stream (left PreG), whereas semantic modulation becomes dominant in both ends of the ventral stream (bilateral PTR and MTG) (P < 0.05; see Supplementary Figs. 8a–e and 9). On the other hand, semantic modulation is dominant in most of the higher-order regions in the parietal and frontal cortices consistently in all subjects (bilateral AG, SPS, PrC, PCC, POS, SFG, SFS, and PTR; left MFG; and right IPS) (P < 0.05; see Supplementary Fig. 8a–e).

is in the range of [−1,1], where a positive index indicates modulation in favor of the attended stimulus and a negative index indicates modulation in favor of the unattended stimulus. For each ROI in perisylvian cortex, spectral, articulatory, and semantic attention indices are given (mean ± SEM across subjects), and their sum yields the overall modulation (see Supplementary Fig. 7 for nonperisylvian ROIs). Significantly positive indices are marked with * (P < 0.05, bootstrap test; see Supplementary Fig. 8a–e for attention indices of individual subjects). ROIs in the LH and RH are shown in top and bottom panels, respectively. These results show that selectivity modulations distribute broadly across cortex at the linguistic level (articulatory and semantic). (b) “Attentional modulation profiles.” Modulation profiles averaged across subjects are displayed on the flattened cortical surface of a representative subject (S4). Significantly positive articulatory, semantic, and spectral attention indices are projected onto the red, green and blue channels of the colormap (see Materials and Methods). A progression in the level of speech representations dominantly modulated is apparent from HG/HS to MTG across bilateral temporal cortex (see Supplementary Fig. 9 for modulation profiles of individual subjects). Articulatory modulation is dominant in one end of the dorsal stream (left PreG), whereas semantic modulation becomes dominant in both ends of the ventral stream (bilateral PTR and MTG) (P < 0.05; see Supplementary Figs. 8a–e and 9). On the other hand, semantic modulation is dominant in most of the higher-order regions in the parietal and frontal cortices consistently in all subjects (bilateral AG, SPS, PrC, PCC, POS, SFG, SFS, and PTR; left MFG; and right IPS) (P < 0.05; see Supplementary Fig. 8a–e).

Global Attentional Modulations

It is commonly assumed that attentional effects grow stronger toward higher-order regions across the cortical hierarchy of speech (Zion Golumbic et al. 2013; O’Sullivan et al. 2019; Regev et al. 2019). Yet, a systematic examination of attentional modulation gradients across dorsal and ventral streams is lacking. To examine this issue, we measured overall attentional modulation in each region via a  (see Materials and Methods). Similar to the model-specific attention indices, a positive

(see Materials and Methods). Similar to the model-specific attention indices, a positive  indicates modulations in favor of the attended stimulus, and a negative

indicates modulations in favor of the attended stimulus, and a negative  indicates modulations in favor of the unattended stimulus.

indicates modulations in favor of the unattended stimulus.

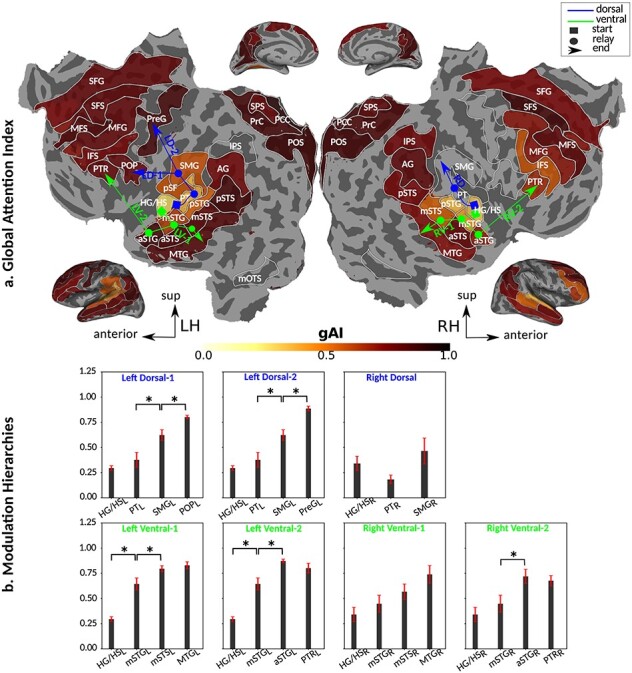

Dorsal stream. We first examined variation of

across the dorsal stream (left dorsal-1: HG/HSL → PTL → (SMGL) → POPL, left dorsal-2: HG/HSL → PTL → (SMGL) → PreGL, and right dorsal: HG/HSR → PTR → SMGR) as shown in Figure 7. We find significant increase in

across the dorsal stream (left dorsal-1: HG/HSL → PTL → (SMGL) → POPL, left dorsal-2: HG/HSL → PTL → (SMGL) → PreGL, and right dorsal: HG/HSR → PTR → SMGR) as shown in Figure 7. We find significant increase in  across the following left dorsal subtrajectories consistently in all subjects (P < 0.05; see Supplementary Figure 11 for gradients in individual subjects):

across the following left dorsal subtrajectories consistently in all subjects (P < 0.05; see Supplementary Figure 11 for gradients in individual subjects):  and

and  . In contrast, we find no consistent gradient in the right dorsal stream (P > 0.05). These results suggest that attentional modulations grow progressively stronger across the dorsal stream in LH.

. In contrast, we find no consistent gradient in the right dorsal stream (P > 0.05). These results suggest that attentional modulations grow progressively stronger across the dorsal stream in LH.Ventral stream. We then examined variation of

across the ventral stream (left ventral-1: HG/HSL → mSTGL → mSTSL → MTGL, left ventral-2: HG/HSL → mSTGL → aSTGL → PTRL, right ventral-1: HG/HSR → mSTGR → mSTSR → MTGR and right ventral-2: HG/HSR → mSTGR → aSTGR → PTRR), as shown in Figure 7. We find significant increase in

across the ventral stream (left ventral-1: HG/HSL → mSTGL → mSTSL → MTGL, left ventral-2: HG/HSL → mSTGL → aSTGL → PTRL, right ventral-1: HG/HSR → mSTGR → mSTSR → MTGR and right ventral-2: HG/HSR → mSTGR → aSTGR → PTRR), as shown in Figure 7. We find significant increase in  across the following subtrajectories consistently in all subjects (P < 0.05; see Supplementary Fig. 11 for gradients in individual subjects):

across the following subtrajectories consistently in all subjects (P < 0.05; see Supplementary Fig. 11 for gradients in individual subjects):  and

and  in the left ventral stream, and

in the left ventral stream, and  in the right ventral stream. In contrast, we find no difference between aSTG and PTR bilaterally, between mSTS and MTG in the left ventral stream, and between HG/HS, mSTG, mSTS, and MTG in the right ventral stream (P > 0.05). These results suggest that overall attentional modulations gradually increase across the ventral stream, and that the increases are more consistent in LH compared with RH.

in the right ventral stream. In contrast, we find no difference between aSTG and PTR bilaterally, between mSTS and MTG in the left ventral stream, and between HG/HS, mSTG, mSTS, and MTG in the right ventral stream (P > 0.05). These results suggest that overall attentional modulations gradually increase across the ventral stream, and that the increases are more consistent in LH compared with RH.Representational complexity versus attentional modulation. Visual inspection of Supplementary Figure 6b and Figure 7b suggests that the subtrajectories with significant increases in

and in

and in  overlap largely in left ventral stream and partly in left dorsal stream. To quantitatively examine the overlap in left ventral stream, we analyzed the correlation between CI and gAI across the left-ventral subtrajectories where significant increases in

overlap largely in left ventral stream and partly in left dorsal stream. To quantitatively examine the overlap in left ventral stream, we analyzed the correlation between CI and gAI across the left-ventral subtrajectories where significant increases in  are observed. We find significant correlations in

are observed. We find significant correlations in  and in

and in  (r > 0.98, bootstrap test, P < 10−4) consistently in all subjects. In line with a recent study arguing for stronger attentional modulation and higher representational complexity in STG compared with HG (O’Sullivan et al. 2019), our results indicate that attentional modulation increases toward higher-order regions as the representational complexity increases across the dorsal and ventral streams in LH (more apparent in ventral than dorsal stream).

(r > 0.98, bootstrap test, P < 10−4) consistently in all subjects. In line with a recent study arguing for stronger attentional modulation and higher representational complexity in STG compared with HG (O’Sullivan et al. 2019), our results indicate that attentional modulation increases toward higher-order regions as the representational complexity increases across the dorsal and ventral streams in LH (more apparent in ventral than dorsal stream).Hemispheric asymmetries in attentional modulation. To assess potential hemispheric asymmetries in attentional modulation, we compared

between the left and right hemispheric counterparts of each ROI. This analysis was restricted to ROIs with consistent selectivity for speech features in both hemispheres in each individual subject (see Materials and Methods). Supplementary Table 3 lists the results of the across-hemisphere comparison. No consistent hemispheric asymmetry is found across cortex with the exception of mSTG having a left-hemispheric bias in

between the left and right hemispheric counterparts of each ROI. This analysis was restricted to ROIs with consistent selectivity for speech features in both hemispheres in each individual subject (see Materials and Methods). Supplementary Table 3 lists the results of the across-hemisphere comparison. No consistent hemispheric asymmetry is found across cortex with the exception of mSTG having a left-hemispheric bias in  consistently in all subjects (P < 0.05). These results indicate that there is mild lateralization in attentional modulation of intermediate-level speech features.

consistently in all subjects (P < 0.05). These results indicate that there is mild lateralization in attentional modulation of intermediate-level speech features.

Figure 7 .

Global attentional modulation. (a) “Global attention index.” To quantify overall modulatory effects on selectivity across all examined feature levels, global attentional modulation ( ) was computed by summing spectral, articulatory, and semantic attention indices (see Materials and Methods).

) was computed by summing spectral, articulatory, and semantic attention indices (see Materials and Methods).  is in the range of [−1,1] and a value of zero indicates no modulation. Colors indicate significantly positive

is in the range of [−1,1] and a value of zero indicates no modulation. Colors indicate significantly positive  averaged across subjects (see legend; see Supplementary Fig. 10 for bar plots of

averaged across subjects (see legend; see Supplementary Fig. 10 for bar plots of  across cortex). Dorsal and ventral pathways are shown with blue and green lines, respectively: left dorsal-1 (LD-1), left dorsal-2 (LD-2) and right dorsal (RD), left ventral-1 (LV-1), left ventral-2 (LV-2), right ventral-1 (RV-1) and right ventral-2 (RV-2). Squares mark regions where pathways begin; arrows mark regions where pathways end; and circles mark relay regions in between. (b) “Modulation hierarchies.” Bar plots display

across cortex). Dorsal and ventral pathways are shown with blue and green lines, respectively: left dorsal-1 (LD-1), left dorsal-2 (LD-2) and right dorsal (RD), left ventral-1 (LV-1), left ventral-2 (LV-2), right ventral-1 (RV-1) and right ventral-2 (RV-2). Squares mark regions where pathways begin; arrows mark regions where pathways end; and circles mark relay regions in between. (b) “Modulation hierarchies.” Bar plots display  (mean ± SEM across subjects) along LD-1, LD-2, RD, LV-1, LV-2, RV-1 and RV-2, shown in separate panels. Significant differences in

(mean ± SEM across subjects) along LD-1, LD-2, RD, LV-1, LV-2, RV-1 and RV-2, shown in separate panels. Significant differences in  between consecutive ROIs are marked with brackets (P < 0.05, bootstrap test; see Supplementary Fig. 11 for single-subject results). Significant gradients in

between consecutive ROIs are marked with brackets (P < 0.05, bootstrap test; see Supplementary Fig. 11 for single-subject results). Significant gradients in  are

are  in LD-1,

in LD-1,  in LD-2,

in LD-2,  in LV-1,

in LV-1,  in LV-2, and

in LV-2, and  in RV-2. In the LH,

in RV-2. In the LH,  gradually increases from early auditory regions to higher-order regions across the dorsal and ventral pathways. Similar patterns are also observed in the right hemisphere, although the gradients in

gradually increases from early auditory regions to higher-order regions across the dorsal and ventral pathways. Similar patterns are also observed in the right hemisphere, although the gradients in  are less consistent across subjects.

are less consistent across subjects.

Cortical Representation of Unattended Speech