Abstract

The term ‘responsible AI’ has been coined to denote AI that is fair and non-biased, transparent and explainable, secure and safe, privacy-proof, accountable, and to the benefit of mankind. Since 2016, a great many organizations have pledged allegiance to such principles. Amongst them are 24 AI companies that did so by posting a commitment of the kind on their website and/or by joining the ‘Partnership on AI’. By means of a comprehensive web search, two questions are addressed by this study: (1) Did the signatory companies actually try to implement these principles in practice, and if so, how? (2) What are their views on the role of other societal actors in steering AI towards the stated principles (the issue of regulation)? It is concluded that some three of the largest amongst them have carried out valuable steps towards implementation, in particular by developing and open sourcing new software tools. To them, charges of mere ‘ethics washing’ do not apply. Moreover, some 10 companies from both the USA and Europe have publicly endorsed the position that apart from self-regulation, AI is in urgent need of governmental regulation. They mostly advocate focussing regulation on high-risk applications of AI, a policy which to them represents the sensible middle course between laissez-faire on the one hand and outright bans on technologies on the other. The future shaping of standards, ethical codes, and laws as a result of these regulatory efforts remains, of course, to be determined.

Keywords: Accountability, AI principles, Bias, Ethical code, Ethics washing, Explainability, Fairness, Privacy, Regulation, Responsible AI, Security, Standards

Introduction

Out of concern for the unprecedented pace of AI development and the ensuing social and moral problems from 2016 onwards, a great many organizations have issued statements of commitment to principles for AI. Companies, civil society organizations, single-issue groups, professional societies, academic organizations, and governmental institutions from mainly the Western world and Asia started to formulate statements of principle.

Scepticism sets in soon enough. Would organizations involved in AI really be making steps towards implementing these lofty principles in practice? After all, in comparison with medicine, several obstacles immediately catch the eye: the young AI community lacks common values, professional norms of good practice, tools to translate principles into practices, and mechanisms of accountability (Mittelstadt, 2019). A stronger backlash against the continuing flow of declarations of good intent by for-profit companies in particular articulated even sterner doubts: they are just trying to embellish their corporate image with superficial promises, and effective implementation of the principles in practice is bound to remain toothless. They are just in the business of ‘ethics washing’, a neologism coined by analogy with ‘green washing’ to denote the ‘self-interested adoption of appearances of ethical behaviour’ by technology companies (Bietti, 2020). The language of ethics is being instrumentalized for self-serving corporate ends. They hope that as a result, regulation proper can be weakened or kept at bay; ethics is thereby transformed into a novel form of industrial self-regulation (Wagner, 2018).1

So, we confront the following question: are the companies that have publicly committed themselves to AI principles actually trying to practice what they preach—albeit in the face of serious obstacles? Or are they effectively not trying, but just buying time from the ever-looming threat of increasing governmental regulation? Are they just engaging in a public relations offensive that signals their virtues while masking their lack of proper action? These questions of the number and value of actual efforts for implementing responsible AI and the intentions behind them are the main inspiration for this research.

The research zooms in on the firms’ attitudes to responsible AI from two angles. On the one hand, I ask myself whether the companies involved did try to implement AI principles in practice in their own companies and, if so, precisely how and to what extent. This includes efforts by these companies to act in concert with other companies and realize responsible AI amongst themselves (self-regulation). On the other hand, societal organizations of every kind—governmental ones included—are clamouring for more principled AI. Their declarations about responsible AI for the future vastly outnumber the declarations by the companies themselves about principled AI. To what extent are ‘committed’ companies willing to grant them a say in the AI issues involved? Phrased otherwise, what are their attitudes towards the issue of regulation proper? On the one hand, they may still—as is usually the case—consider regulation a catastrophic outcome to be avoided as supposedly stifling innovation and stress self-regulation as the preferred alternative. On the other hand, they may embrace regulation as a means to ward off social unrest about the new AI technologies unfolding. Which stance on AI regulation are they currently adopting?

At the outset, an important qualification has to be mentioned. Throughout, I have only selected principled companies that are substantially involved in AI practices: they have their own AI expertise, build their own algorithms and models, and as a rule have special departments or sections for AI/ML development. This enables them to advance the state of the art in AI/ML, to be innovators, not just followers. In particular, they have the capacity to pioneer fresh approaches to responsible AI. The outcomes of these AI efforts are used internally for their own products or processes, for selling AI (as software or cloud services) to clients, or for advising about AI—or, of course, for a combination of those activities. Such companies, to be referred to as ‘AI companies’, have exclusively been selected for further consideration since only those kinds of firms are able to change the character of AI and transform it into responsible AI in practice. Companies embracing AI principles that lack those AI capabilities can only be expected to promote the cause of responsible AI more modestly by focussing on such AI whenever they source AI solutions from elsewhere or by recommending responsible AI to their clients.

A caveat on method is also indicated here. In collecting these accounts of principles for and regulation of AI, I faced the problem that these often do not refer to AI in general but more narrowly to the particular AI that is embedded in a company’s business processes or product offerings. When Facebook talks about AI, it is from the perspective of the AI embedded in their platform services. When Philips or Health Catalyst talk about AI, they do so with their medical applications of AI in mind. When Google talks about AI, they have their much broader spectrum of AI applications in mind—from search engines, natural language understanding applications, self-driving cars, to drones. Vice versa, if we start from the angle of technology, views about, say, facial recognition technology are mainly expressed by companies that actually sell services of the kind (such as Amazon, Microsoft, and IBM). So, comments about AI principles or AI regulation are usually delivered from a specific ‘corner’ of AI, either narrow or broad, without being specified as such. It therefore remains imperative for readers—as well as this author—to always consider the corporate context and be on the alert for possible incompatibilities between accounts.

For a broad definition of the AI involved in these accounts, the reader may usefully be referred to a document from the ‘High-Level Expert Group on AI’ (AI HLEG) which describes the joint understanding of AI that the group uses in its work. This describes AI as a system composed of perception, reasoning/decision-making, and actuation; and as a discipline including machine learning, machine reasoning, and robotics.2

AI Companies and AI Principles

This research began with a precise identification of the AI companies that have embraced principles for AI. Several projects across the globe compile inventories of organizations in general subscribing to AI guidelines/principles or issuing statements/studies about AI governance. The most important source was the article by Jobin et al. (2019) who performed a carefully tailored web search. Other important sources that I have consulted include the following (in alphabetical order of their URLs): the web log maintained by Alan Winfield,3 a Harvard study from the Berkman Klein Center,4 the Future of Life Institute,5 the 2019 AI Index Report,6 AlgorithmWatch,7 the University of Oxford website maintained by Paula Boddington,8 and the AI Governance Database maintained by NESTA.9

However, these lists lump together all kinds of organizations that subscribe to principles for AI. So, as a first task, companies had to be disentangled from these lists. Subsequently, duplicates amongst them were removed and only proper ‘AI companies’ retained. This yielded 18 results. For the sake of completeness and in order to catch the most recent developments, I performed a supplementary web search for AI companies subscribing to AI principles (for details on search method, see Appendix). This only yielded one more result (Philips).

Further, the Partnership on AI has been taken into consideration. This early coalition (2016) of large AI companies (Amazon, Apple, Facebook, Google, IBM, and Microsoft) focusses on the development of benchmarks and best practices for AI. At present, any organization is welcome in this multi-stakeholder organization, as long as they ‘submit an expression of interest, signed by its authorized representative declaring a commitment to: [e]ndeavor to uphold the Tenets of the Partnership and support the Partnership’s purposes’ and ‘[p]romote accountability with respect to implementation of the Tenets and of the best practices which the PAI community generates […]’.10 Therefore, for the purposes of this investigation, companies that became partners of the PAI can also be considered to be committed to AI principles. In total, I counted 17 corporate PAI members that were proper AI companies; five were new names, so they were added to my list (Amazon, Apple, Facebook, Health Catalyst, Affectiva).

Finally, the AI HLEG has been inspected. This temporary group was formed by the European Commission (mid-2018) to advise on the implementation of AI across Europe. It consists of 52 experts, who are mainly appointed in their personal capacity (17 so-called type A members) or as organization representatives (29 type C members). Several of those type C members come from firms: 12 in all.11 Should their participation be considered a commitment to AI principles and their companies candidates for my list? I would argue that participation in the AI HLEG falls short of such a commitment. For one thing, the selection criteria do not require adherence to any kind of principles—it is just expertise that counts.12 For another, as far as their final report is concerned, members only ‘support the overall framework for Trustworthy AI put forward in these Guidelines, although they do not necessarily agree with every single statement in the document’.13 In view of both considerations, participation in the AI HLEG cannot be interpreted as fully binding any firm—or any other organization for that matter—to principles for AI. The Expert Group is a political arena for developing a common standard for AI principles, not a forum for commitment to it.

As a result of this exercise, I obtained 24 ‘committed’ AI companies in total; these are listed in Table 1. Note that the whole search procedure was conducted in English, leaving out any committed AI companies that exclusively (or predominantly) publish their company documents in another language. Moreover, Chinese companies such as Tencent and Baidu have been left out, since apart from the hurdle of language, the Chinese political system is hardly to be compared with that of the USA or Europe; comparing statements about the ethics and governance of AI would be a strenuous exercise. Further, many companies with clear commitment to AI principles have been left out where my second criterion was not met: they do not have substantial AI capabilities of their own (such as The New York Times, Zalando), have just started their AI efforts (Telia), or went commercial only recently (OpenAI).14

Table 1.

AI companies that committed to AI principles (ordered by revenue)1

| Type of industry Headquarters’ location |

Commitment to AI principles | Member of ‘Partnership on AI’ | |

|---|---|---|---|

| Amazon |

E-commerce, cloud computing Seattle, USA |

+ | |

| Apple |

Hardware and software, services Cupertino, USA |

+ | |

| Samsung |

Electronics, semiconductors Suwon, South Korea |

* | + |

|

Internet, cloud computing, software Mountain View, USA |

* | + | |

| Microsoft |

Hardware, software, electronics Redmond, USA |

* | + |

| Deutsche Telekom |

Telecommunications Bonn, Germany |

* | + |

| Sony |

Audio, video, photography Tokyo, Japan |

* | + |

| IBM |

Cloud computing, AI, hardware and software New York, USA |

* | + |

| Intel Corporation |

Semiconductors Santa Clara, USA |

* | + |

|

Social media Seattle, USA |

+ | ||

| Telefónica |

Telecommunications Madrid, Spain |

* | |

| Accenture |

Management consulting Dublin, Ireland |

* | + |

| SAP |

Enterprise software Walldorf, Germany |

* | |

| Philips |

Consumer electronics, healthcare Amsterdam, the Netherlands |

* | |

| Salesforce |

CRM services San Francisco, US |

* | + |

| McKinsey (Quantum-Black)2 |

Management consulting (data analytics) (No headquarters) |

* | + |

| Sage |

Enterprise software Newcastle upon Tyne, UK |

* | |

| TietoEVRY |

Enterprise software Helsinki, Finland |

* | |

| Kakao |

Social media, services Jeju City, South Korea |

* | |

| Unity Technologies |

Video games San Francisco, USA |

* | |

| Health Catalyst |

Medical data analytics Salt Lake City, USA |

+ | |

| DeepMind (Google)3 |

AI London, UK |

* | + |

| Element AI |

AI Montreal, Canada |

* | + |

| Affectiva |

Emotion AI Boston, USA |

+ |

1Note that Deutsche Telekom, Salesforce, Health Catalyst, and Element AI originally were partners of the PAI and have therefore been listed as such. At the time of finishing this manuscript (early 2021), however, they appear to have cancelled their membership. Note also that PAI member OpenAI, a general purpose AI research laboratory, has been left out since it went commercial only very recently (their GTP-3 language model was launched in June 2020).

2Quantum-Black is the data analytics arm of McKinsey.

3As a research laboratory, DeepMind enjoys considerable independence within Google. They also issued AI principles of their own (long before Google did so). Therefore, DeepMind is listed separately from Google.

After having identified these 24 ‘committed’ AI companies, I proceeded to delve deeper into their commitments. What exactly are the component parts of their declarations of principle? This exercise is not unimportant; after all, subsequently, I take these firms at their word and investigate whether they practice what they preach. I went back to the statements about AI principles for each of these companies (as well as the PAI) looking for the precise way in which the AI principles or guidelines were publicly formulated. The results are listed in Table 2.

Table 2.

Exact phrasing of AI principles by the PAI and the 19 AI companies with explicit commitment to AI principles

| PAI and AI companies committed to AI principles | Their own phrasing of ‘AI principles’ | Fairness, justice | Transparency, explainability | Security, safety | Privacy | Responsibility, accountability | Broader principles |

|---|---|---|---|---|---|---|---|

| Partnership on AI | Thematic pillars; tenets | No bias, fairness, inclusivity | Transparency, explainability, interpretability, understandability | Safety, security, reliability, robustness | Privacy | Accountability | Trustworthiness; empowering people; study the social and economic impact of AI; no AI that violates international conventions or human rights; open dialogue and engagement with stakeholders |

| Samsung | Principles for AI ethics | No unfair bias, equal access, equality and diversity | Transparency, explainability | Security | Social and ethical responsibility | To the benefit of society; for corporate citizenship | |

| AI principles | No unfair bias | Relevant explanations | Safety, security | Privacy | Accountability | Humans in control of AI; uphold scientific excellence; AI to be socially beneficial; no pursuit of AI that causes overall harm or injures people, is intended for illegitimate surveillance purposes, or contravenes international law/human rights | |

| Microsoft | Principles for responsible AI | Fairness, inclusiveness | Transparency, intelligibility | Safety, reliability | Data privacy and security | Accountability | |

| Deutsche Telekom | AI guidelines | No biased data, fairness | Transparency, no black boxes | Data security, robustness | Data privacy | Auditability, responsibility | Trust; supporting customers; cooperation between human and machine; engagement with stakeholders; sharing our know-how |

| Sony | AI ethics guidelines | Fairness, diversity | Transparency, explainability | Safety, security | Privacy | Respect for human rights; support for creative lifestyles and a better society; engagement with stakeholders; fostering AI human talent | |

| IBM | Trust and transparency principles for AI | No bias, no discrimination, fairness | Transparency, explainability | Data security, robustness | Data privacy | Accountability | Client control of data; value alignment; augment human intelligence |

| Intel* | None | No biased data, no discrimination | Explainability | Security (from cyber-attacks) | Privacy | Accountability | |

| Telefónica | AI principles | No bias, no discrimination, fairness | Transparency, explainability | Security | Privacy | Checking the veracity of data and logic used by suppliers; benefits for people; humans in control of AI; respect for human rights and sustainable development | |

| Accenture* | Responsible AI | No bias, no discrimination, equality, diversity | Transparency, explainability | Security | Data protection, Privacy | Liability | Human values |

| SAP | Guiding principles for AI | No bias, no discrimination, inclusivity | Transparency | Safety, security, reliability | Data protection, privacy | Respect for human rights; empowering people; engaging with wider society to discuss economic and social impact and normative issues | |

| Philips | AI principles, data principles | No bias, no discrimination, fairness | Transparency | Security (as data principle), robustness against misuse | Privacy (as data principle) | Human supervision of AI; people’s well-being; medical care for all; sustainable development | |

| Salesforce | AI ethics | Inclusivity | Transparency, explainability | Safety | Accountability | Customer control of data and models; empowering and benefitting society; respect for human rights | |

| McKinsey (Quantum Black)* | Responsible AI | No bias, fairness | Explainability | Privacy | |||

| Sage | Core principles for ethical and responsible AI | No bias, inclusivity | Safety, security | Accountability | Align with human values; cooperation between AI and humans | ||

| Tieto | AI ethics guidelines | No bias, fairness, inclusivity | Transparency, explainability | Safety, security | Responsibility | AI for good; respect for human rights | |

| Kakao | Algorithm ethics | No bias | Explainability | Security | Ethical data collection and management; enhance benefit and well-being of mankind; embrace our society | ||

| Unity Technologies | Guiding principles for ethical AI | No bias | Transparency | Data protection | Accountability, responsibility | No manipulation of users; respect for human rights | |

| Deep Mind (Google subsidiary)* | Responsible AI | No bias, no discrimination, fairness, inclusivity | Transparency | Privacy | Accountability | Beware of misuse and unintended consequences; consider social and economic impact | |

| Element AI | Responsible AI | Fairness | Transparency, explainability | Safety | Accountability | Individual control of personal data; humans in control of AI; respect for human rights; no offensive weapons for the military/police; sustainable development | |

| Number of firms mentioning: | n/a | 19 | 18 | 17 | 13 | 13 | 16 |

*Dispersed over various documents

Notes: Companies ordered by revenue. The 5 companies without explicit commitment to AI principles (members only of the PAI) are not listed in the table since they only conform to the principles of the PAI. The principles are displayed in the order of their empirical frequencies amongst the 19 companies (see last row). The headings for the 6 columns are mine. Accuracy (mentioned by Telefónica only) deleted since part of normal requirements. Safety and privacy partly overlap for ML; therefore, they are considered together. Adjectives from the original sources in columns 3–7 converted to nouns (transparent → transparency, etc.) for easier comparison. Some companies in addition drafted more general codes of conduct; these codes are not considered here. Links to the documents that I drew my information from are available on request.

In the first row, the tenets of the PAI are explicated. In the rows below, results are tabulated for the 19 companies with explicit declarations of AI principles (leaving out the five members-only of the PAI). Throughout, I classified the terms in use under six headings, ordered according to the empirical frequencies obtained.15 Table 2 shows clearly that almost all firms emphasize four to five of the five main principles for AI: fairness/justice, transparency/explainability, security/safety, privacy, and responsibility/accountability. Only three firms (out of 19 in all) mention just three of them. So, a clear and homogeneous sense of purpose emerges as far as responsible AI is concerned: AI is to be fair and just, transparent and explainable, secure and safe, and privacy-proof, with responsibility and accountability taken care of; broader principles such as humans in control, benefitting society, respect for human rights, and not causing harm are stressed in various combinations. Note that this conception—not coincidentally of course—matches closely the term ‘trustworthy AI’ that has been coined in EU circles, the AI HLEG in particular. Five of their seven ‘key requirements’ for trustworthy AI (which is, in addition to being lawful, both ‘ethical’ and ‘robust’) match one to one with the above; their requirements of ‘human agency and oversight’ and ‘societal and environmental well-being’ correspond to my ‘broader principles’.16

Below, I zoom in on these five ‘core’ principles, as representing the common denominator of the promise made by the 24 firms committed to AI. Notice that the first four of these principles imply requirements on the technical core of AI: the methods of ML have to change for them to be satisfied. Without this transformation, these principles cannot be satisfied—let alone any of the other more general principles associated with responsible AI (the ‘broader principles’ in Table 2).

A caveat is in order: the homogeneity may look impressive, but the terms employed leave ample room for interpretation. While, for example, security and robustness have acquired quite circumscribed meanings, transparency and explainability are more ambiguous. Do they refer to clarifying how predictions were produced? To the importance of features involved in a specific prediction? To counterfactual-like clarifications? So, all depends on how the committed companies are actually going about these challenges in practice.

Implementation of AI Principles Inside the Firm

Very little literature is available about corporate implementation of AI principles. While Darrell West is one the first authors to write about the issue,17 Ronald Sandler and John Basl, in an Accenture report, paint the following broader picture based on several additional sources.18 Upon acceptance of an AI code of ethics, an ethics advisory group (or council) is to be installed at the top of an organization in order to import outside viewpoints and expertise, and an ethical committee (or review board), led by a chief data/AI officer, is to be created which provides guidance on AI policy and evaluates AI projects in progress.19 In both groups, ethicists, social scientists, and lawyers are to be represented. Further, auditing as well as risk and liability assessments have to become standard procedures for AI product lines and products. Keeping track of audit trails is to support the auditing. Finally, training programmes for ethical AI are to be implemented, and a means for remediation provided in case AI inflicts damage or causes harm to consumers. Note that West reported that in a US public survey, many of these ‘ethical safeguards’ obtained the support of 60–70% of the respondents.20

A further source is a report produced by several governmental organizations from Singapore,21 which presents a state-of-the-art manual for implementing responsible AI within the existing governance structures of organizations in general (p. 16). Particularly interesting for my purposes are their proposals (p. 21 ff.) for the introduction of adequate governance structures (such as an AI review board), clear roles and responsibilities concerning ethical AI (e.g., for risk management and risk control of the algorithmic production process), and staff training. Further, they propose ways to put ‘operations management’ (i.e., management of the processes of handling data and producing models from them by means of ML) on a more ethical trajectory (p. 35 ff.). They suggest an exhaustive series of requirements: accountability for data practices (including attention to possible bias) (p. 36 ff.), explainability, repeatability, robustness, traceability, reproducibility, and auditability (p. 43 ff.).

An aspect that is mostly glossed over in these sources is that requirements like the absence of bias, explainability, and robustness in particular cannot adequately be met by citing measures culled from management handbooks alone—especially as new techniques of ML will have to be invented and associated software tools be coded from scratch.

With this broad canvas in mind, to what extent did the 24 committed companies implement their AI principles in corporate practice? I performed a web search to discover associated mechanisms of implementation of AI principles (see Appendix for the method, searching with KS2). Which implementations did the committed companies report of their own accord? All results are rendered in succinct form in Table 3.

Table 3.

Companies practicing and developing AI in order to apply/sell/advise about AI that explicitly have committed to principles or guidelines for AI and/or to the tenets for AI of the Partnership on AI—tabulated according to their internal governance for responsible AI; training and educational materials about responsible AI; new tools for fair/non-biased AI and explainable AI and secure/privacy-proof AI and accountability of AI; and their external collaboration and funding for research into responsible AI

| AI companies committed to AI principles1 |

Governance for responsible AI inside the firm2 |

Training and educational materials produced about responsible AI | New tools for fair/non-biased AI | New tools for explainable AI | New tools for secure/privacy-proof AI3 | New tools for accountability of AI | External collaboration and funding for responsible AI research |

|---|---|---|---|---|---|---|---|

| Amazon | SHAP values and feature importance tools (proprietary) | Co-funding of NSF project ‘Fairness in AI’ | |||||

| Advanced Technology External Advisory Council (now defunct); Ethics & Society team; responsible innovation teams review new projects for conformity to AI principles | Employee training about ethical AI, educational materials (see ‘People + AI Guidebook’) | Facets, What-If tool, Fairness Indicators (all open source) | What-If tool (open source) | CleverHans (open source); Private Aggregation of Teacher Ensembles (open source), Tensor Flow Privacy (open source); Federated Learning, RAPPOR, Cobalt (open source) | Model cards | ||

| Microsoft | AI and Ethics in Engineering Research Committee, Office of Responsible AI | Internal guidelines and checklists (e.g., ‘In Pursuit of Inclusive AI’, ‘Inclusive Design’) |

FairLearn (open source) |

InterpretML (open source) |

WhiteNoise package (open source) |

Data sheets for datasets | |

| IBM | AI Ethics Board (chaired by AI Ethics Global Leader and Chief Privacy Officer) | Guidelines for AI developers (‘Everyday Ethics for AI’) |

AI Fairness 360 Toolkit (open source) |

AI Explainability 360 Toolkit (open source) | Adversarial Robustness 360 Toolbox (open source) | Fact sheets | Joint research with Institute for Human-Centred AI (Stanford University), funding of Tech Ethics Lab (University of Notre Dame) |

| Intel | AI Ethics and Human Rights Team | ||||||

| AI Ethics Team | Fairness Flow (proprietary) | Captum (for deep neural networks) (open source) | Funding of Institute of Ethics in AI (TU Munich) | ||||

| Telefónica | AI Ethics course and AI Ethics self-assessment (for employees) | ||||||

| Accenture | Advocates ‘responsible, explainable, citizen AI’ to clients, educational materials | AI Fairness Tool, part of AI Launchpad (proprietary) | |||||

| SAP | AI Ethics Advisory Panel, AI Ethics Steering Committee; diverse and interdisciplinary teams | Course about ‘trustworthy AI’ (for employees and other stakeholders) | |||||

| Philips | |||||||

| Salesforce | Ethical Use Advisory Council, Office of Ethical and Humane Use of Technology, data science review board; inclusive teams | Teaching module about bias in AI (for employees and clients) | Einstein discovery tools (proprietary) | Einstein discovery tools (proprietary) | |||

| McKinsey (Quantum Black) | Advocates ‘responsible AI’ approach to clients, educational materials |

CausalNex (open source) |

|||||

| Sage | Team diversity | ||||||

| Tieto | In-company ethics certification, special AI ethics engineers, and trainers appointed | ||||||

| Health Catalyst | |||||||

| Deep Mind (Google subsidiary) | External ‘fellows’, Ethics Board, Ethics and Society Team | ||||||

| Element AI | Team diversity | Internal blogposts about responsible AI | Fairness tools (proprietary) | Explainability tools (proprietary) |

1Companies are ordered by revenue. Apple, Samsung, Deutsche Telekom, Sony, Kakao, Unity Technologies, and Affectiva have been omitted from the table since my searches yielded no results for them.

2 ‘Ethics Team’ denotes what is variously referred to as ethical/ethics/review committee/board (highest corporate body concerning affairs of ethical AI).

3Techniques such as privacy by design (de-identification of data) and security by design (encryption) are not mentioned in the table since they are well-known and do not refer specifically to problems of ML.

Note: The sources that I drew my information in the table from are for the most part given in footnotes in the text of the article; otherwise, the sources are available on request.

At the outset, it is to be reported that seven of my committed firms did not publish anything about these issues (or, for that matter, about their attitudes towards (self-)regulation of AI—on which I report later). So, there is simply no material to be inserted under the headings of Table 3—the entries for them have remained empty throughout my search efforts (with KS2, see Appendix). Inside some of these firms, I did trace discussions about AI being conducted, but clear results have not been published. This specifically concerns Apple, Samsung, Deutsche Telekom, Sony, Kakao, Unity Technologies, and Affectiva. Therefore, in the sequel, I no longer take them into consideration; specifically, their names do not appear in Table 3.

Internal Governance for Responsible AI

As far as governance for responsible AI is concerned, implementations varied from substantial to marginal to none at all (for this section as a whole: cf. Table 3). More than half of the committed firms had not introduced any concrete steps towards responsible AI (14 out of 24 companies).22 To be sure, they had discussions about it internally, yet nothing materialized that became public. Two others have at least taken steps to diversify the composition of their teams, as a contribution to the reduction of bias in AI (Sage, Element AI). The eight remaining companies did indeed introduce governance mechanisms at the top as suggested by West, Sandler and Basl, and the Singapore manual: an ethics advisory group (or council) for external input, and/or an ethical committee (or review board) that installs and oversees teams and/or working groups. However, the setup in full is only adopted by the smaller firms amongst them: SAP, Salesforce,23 and DeepMind.24 With the five remaining larger firms on my list (Google, Microsoft, IBM, Intel, and Facebook), input from the outside world did not materialize: an ethics advisory group has simply not been installed—only an ethical committee or review board.25 The firms in question will surely argue that they have ample contacts and consultations with ‘outsiders’. This is undoubtedly true, but the noncommittal nature of these interactions does not signal much of a willingness to grant influence to other societal actors.

Let me illustrate the workings of such internal governance with two examples. SAP’s ‘AI ethics steering committee’ is composed of senior leaders from across the organization. After having formulated SAP’s ‘guiding principles for AI’, it now ‘focuses on SAP’s internal processes and on the products that result from them, ensuring that the software is built in line with ethical principles’. At the same time, they installed an external ‘AI ethics advisory panel’, with ‘experts from academia, politics, and business whose specialisms are at the interface between ethics and technology—AI in particular’.26 Their setup is transparent— the names of all the panel members are made public.27

The ethical committee that Microsoft has installed is called AETHER (AI Ethics in Engineering and Research). Composed of ‘experts in key areas of responsible AI, engineering leadership, and representatives nominated by leaders from major divisions’,28 it gives advice and develops recommendations about AI innovation across all Microsoft divisions. In particular, it has installed working groups that focus on subthemes like ‘AI Bias and Fairness’ and ‘AI Intelligibility and Explanation’. This AETHER Committee uses the services of the Office of Responsible AI that convenes teams to ensure that products align with AI principles. Moreover, the Office takes care of public policy formulation and the review of ‘sensitive use cases’ (such as facial recognition software) related to responsible AI.

The New Practice of Responsible AI: Training, Techniques, and Tools

Let us next turn to ‘operations management’ and the measures taken to transform it along the requirements of responsible AI. Companies committed to responsible AI have to ask new questions and develop new insights. The problem that they face is that most of the principles involved—fairness, explainability, security, and privacy—strike at the very heart of ML: the ways in which to perform ML have to be reflected upon. For these complex issues, proper research into the fundamentals of ML has to be performed. But research alone is not enough. ML and AI are eminently practical exercises, looking for the best algorithmic ways to produce models that can be used in practice. Therefore, at the end of the day, fresh research insights have to be translated into fresh software tools that update current practices. These tools allow taking ethical questions into account and may help in deciding about the most ethical course of action.

Against this background, I have been looking for new materials (blog posts, guidelines, manuals, checklists, techniques, tools, and the like) that have been developed by the 24 committed companies—with a particular focus on new software techniques and software tools. The results are reported in Table 3.

Half of all the 24 companies on my list, again, did not produce materials of any kind or set up courses for their employees. The principles for responsible AI are simply not (yet) addressed at the level of their workforce. The other half did address the espoused principles, to varying degrees. Four of them introduced (mostly informal) trajectories for training for responsible AI, accompanied by appropriate materials, either inside the firm (Telefónica, SAP, and Tieto) or geared towards external clients (McKinsey).29 Another three were able to develop some software tools geared towards responsible AI and incorporate them into their existing products (Amazon, Facebook,30 and Element AI31). Consider Amazon, for example, one of the largest providers of software as a service. Their clients can build ML models with Amazon SageMaker; it now incorporates some (proprietary) explainability tools (delivering feature importance and SHAP values—more about these indicators below).

The remaining five firms have actively been developing both new materials to be used in training sessions (for their personnel and/or clients) and new software tools. The two smaller firms amongst them, Accenture and Salesforce, have developed some first steps. Let me delve deeper into the case of Salesforce, a company that sells CRM (customer relationship management) software. Their course is a module about bias in AI that they have put up on their online platform called Trailhead (freely accessible to everyone).32 Apart from mentioning the virtues of diversity, respect for human rights and the GDPR, the ‘crash course’ focusses on bias and fairness in their various forms. This leads to ethical questions about AI which the various employee ranks have to ask themselves. Ultimately, AI may just amplify those biases—so how to remove them from one’s datasets? The module suggests conducting ‘pre-mortems’ addressing issues of bias, performing a thorough technical analysis of one’s datasets, and remaining critical of one’s model both while it is being deployed and afterwards.

In addition to this module, they developed a few software tools for responsible AI. Clients can bring their own datasets to the Salesforce platform and develop ML models from them (‘Einstein Discovery Services’). These services contain new tools that act as ‘ethical guardrails’.33 Top predictive factors for a global model are produced upon request (→ explainability). Moreover, customers may define ‘protected fields’ (such as race, religion); that is, they are to receive equal treatment. The system then issues a warning whenever a proxy for them is detected in the dataset submitted for training (→ bias).

Training materials and software tools from Salesforce, though, are just tiny steps forward if we compare them with the offerings of the remaining three companies on my list: Google, Microsoft, and IBM. These easily outpace all the efforts mentioned so far in both quantity and quality. Their employee training has attained large proportions, and the materials for them stack up to an impressive list of notes, guides, manuals, and the like that probe deeply into the aspects of bias, fairness, explainability, security, privacy, and accountability.

Additionally, many of the new techniques involved have been encoded into programs in order to become effective. These are usually published on GitHub, as open source: other developers may download the source code and use or modify it. This move is not mere altruism, of course. The code involved may get better because of modifications contributed in return; the code may become a de facto standard; and it may help the company to attract clients to their commercial products. Notably, these new techniques and tools are largely the result of big research efforts by Google, Microsoft, and IBM into the features of bias, explainability, and security/privacy of ML. Since about 2016 onwards, their publication output in computer science journals, with a focus on these areas, has risen considerably.

Since the instruction materials from these three firms (perusable on their respective websites) are comparable to the Salesforce example above, below I focus on their software contributions. These are discussed in the order in which the features of responsible AI have become the subject of research: the issue of bias has been researched for a longer time, while the issues of explainability and security/privacy (insofar as arising from adversarial attacks) have come to the fore just recently.

Google, Microsoft, IBM: Fairness Tools

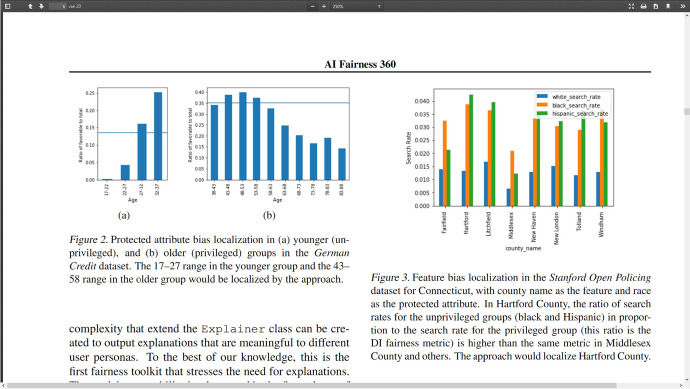

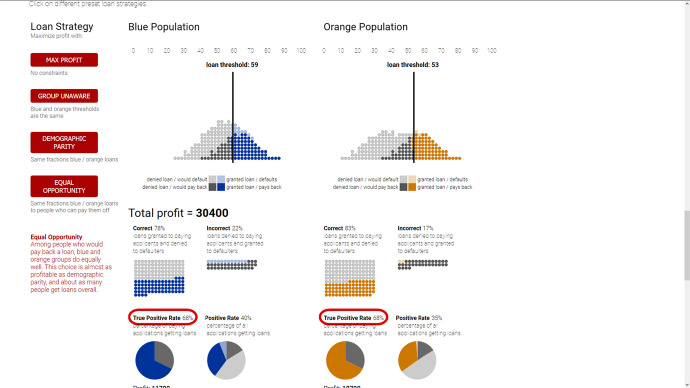

The fairness issue derives from the observation that bias against one group or another may easily creep into the ML process: bias in datasets (cf. Fig. 1) translates into ML producing a biased model as output. As a consequence, the generated predictions discriminate against specific groups. In order to address this fairness issue, Google, Microsoft, and IBM have developed several techniques and tools (cf. Table 3). Let me go into Google’s offerings first. Facets Overview enables visualizing datasets intended to be used (e.g., allowing to detect groups that are not well represented, potentially leading to biased results).34 With the What-If tool, one may investigate the performance of learned models (classifiers, multi-class models, regression models) using appropriate test sets.35 It may, in particular, address fairness concerns by analysing that performance across protected groups. With the push of a button, several definitions of fairness (‘fairness indicators’) can be implemented for any such group: the threshold levels are shifted accordingly (cf. Fig. 2).36 Microsoft, on its part, offers similar analyses and tools bundled together in its Python package called FairLearn.37 It also delves into fairness metrics for the various relevant groups and measures to mitigate corresponding fairness concerns in the ML process.

Fig. 1.

Localization of bias in datasets about creditworthiness (left) and police search rate (right) across privileged and unprivileged groups (IBM).

Fig. 2.

Various fairness measures and their thresholds: screenshot for equal opportunity (synthetic data from Google).

Source: https://research.google.com/bigpicture/attacking-discrimination-in-ml/

In comparison, though, both ‘fairness packages’ are less developed than the one from IBM.38 Big Blue offers a larger menu of fairness measures which users may choose from (depending on the particular use case). For protected variables, metrics such as equal parity, demographic parity, and equal opportunity may be chosen. In order to mitigate biases, a range of techniques are presented—as invented by researchers from academia and industry, often working together. In general, training data may be processed before training starts (e.g., reweighing training data), the modelling itself can be adjusted (e.g., taking prejudices into account while processing the data), or biases in the algorithmic outcomes may be mitigated (e.g., by changing the model’s predictions in order to achieve more fairness). For most of the techniques involved (about 10 in all), IBM has developed software implementations.

Google, Microsoft, IBM: Explainability Tools

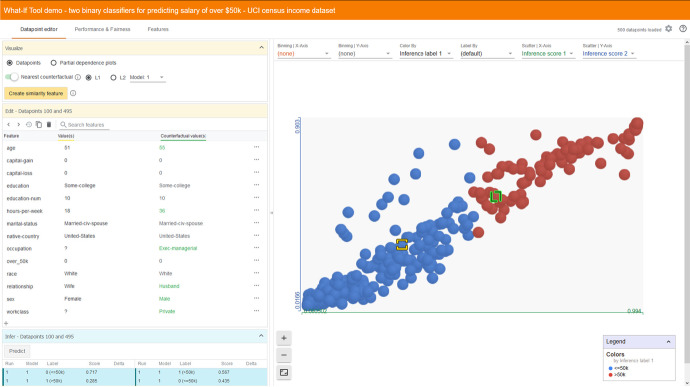

While ML took off in the 1990s with algorithms producing models that often could easily be explained (such as regression and single decision trees), soon enough the modelling became more complex (such as boosting and bagging, neural networks, deep learning); accordingly, interpreting models was no longer possible. How to interpret black boxes and the predictions they generate, both in general and for individual datapoints? Concerning this issue of explainability,39 the three companies have put in great efforts as well (listed in Table 3). In their documentation materials, Google, Microsoft, and IBM all stress the point—accepted wisdom by now—that explanations must be tailored to the specific public involved: whether data scientists, business decision-makers, bank clients, judges, physicians, hospital patients, or regulators. Each of these groups has their own specific preferences for what an explanation should entail.40 With this in mind, Google has further developed their What-If tool (already mentioned above).41 It enables ML practitioners to focus on any particular datapoint and change some of its features manually in order to see how the outcome predicted by their specific model changes. In particular, one may find the most similar datapoint with a different prediction (‘nearest counterfactual’) (cf. Fig. 3). Moreover, one can explore how changes in a feature of a datapoint may affect the prediction of the model (partial dependence plots).

Fig. 3.

Finding the nearest counterfactual with the What-If tool (Google) (UCI census income dataset).

Source: https://pair-code.github.io/what-if-tool/demos/uci.html

In comparison, the options developed by Microsoft and IBM are more extended. Their packages each have their own flavour. Let me first discuss some IBM tools, bundled in their AI Explainability 360 Toolkit.42 As directly interpretable models, they offer the BRCG (Boolean Rule Column Generation) which learns simple (or/and) classification rules, and the GLRM (Generalized Linear Rule Model), which learns weighted combinations of rules (optionally in combination with linear terms). A more experimental tool for obtaining an interpretable model is TED (‘teaching AI to explain its decisions’), which allows you to build explanations into the learning process from the start. Post-hoc interpretation tools are also made available. For one, several varieties of ‘contrastive explanations’: identification of feature values that minimally need to be present for a positive outcome, in combination with the relative importance of those features (‘pertinent negatives’). Similarly, their ‘Protodash’ method allows to put a specific datapoint under scrutiny and obtain a few other datapoints with similar profiles in the training set (prototypes), thus suggesting reasons for the prediction produced by the model. As can be seen, IBM offers tools that are similar to the What-If tool from Google, but they unfold a much broader spectrum of approaches.

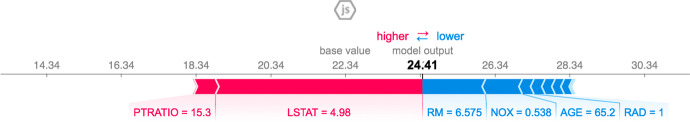

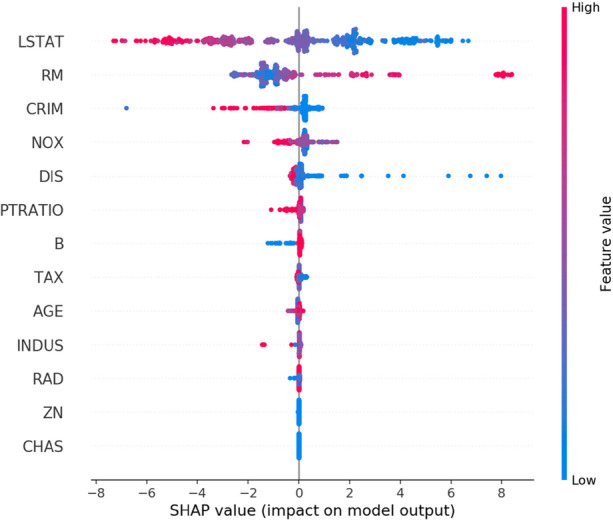

Microsoft, finally, has also developed a variety of interpretability tools (under the label InterpretML), which, again, are based on advances in ML as recently published in computer science journals.43 As an interpretable model, they propose the novel Explainable Boosting Machine (yielding both accuracy and intelligibility). For black box ML models, several explainer tools are provided. First and foremost, a family of tools is offered based on SHAP values—the method, pioneered by Lloyd Shapley, originates in game theory. The approach is model-specific, so for each type of ML model, a separate explainer has to be encoded. A SHAP-based explainer may then contribute to a global explanation by showing the top important features and dependence plots (the relative contribution of feature values to feature importance) (see Fig. 4). The explainer can also produce local explanations by calculating the importance of features to individual predictions (see Fig. 5) and by offering so-called what-if techniques that allow to see how various changes in a particular datapoint change the outcome.

Fig. 4.

Feature values and their impact on model output (SHAP value); high feature values in red, low feature values in blue (global explanation).

Fig. 5.

SHAP force plot, showing the contribution of features pushing model output higher (red) or lower (blue) than the base value (local explanation).

Secondly, besides SHAP explainers, a new interpretable model may be trained on the predictions of a black box model. Either train an interpretable model (say linear regression or a decision tree) on the output data of the black box model under scrutiny (global surrogate model; the method is called ‘mimic explainer’) or use the LIME (Local Interpretable Model-Agnostic Explanation) algorithm to train a local surrogate model that ‘explains’ an individual prediction.44 Finally, for classification and regression models, the ‘permutation feature explainer’ is provided. This global explanation method revolves around the idea of randomly shuffling datapoint features over the entire dataset involved.

Google, Microsoft, IBM: Security and Privacy Tools

Before elaborating on the tools developed by Google, Microsoft, and IBM for enhancing security and privacy in AI, let me first briefly explain what these concepts imply for AI specifically. Whenever sensitive data are collected and processed by organizations, privacy is a vital issue of concern. The usual tools to handle such concerns are encryption, anonymization, and the like. Organizations have been confronted with this issue for quite some time now; the same goes for security. If sensitive data are involved in ML applications in particular, additional issues impacting on privacy and security come to the fore since adversaries may mount ‘adversarial attacks’ on the system. Such attacks aim to get hold of system elements (the underlying data, the algorithm, or the model) or to disrupt the functioning of the system as a whole. These issues are of more recent date, and efforts to deal with them are still in their infancy.

Several adversarial attacks may usefully be distinguished.45 In the first category (targeting system elements), attackers may retrieve at least some additional feature values of personal records used for training (‘model inversion’). Similarly, outsiders may infer from a person’s record whether he/she was part of the training set (‘membership inference attack’). After a client has used data to train an algorithm, these may be recovered by a malicious provider of ML services if he/she has installed a backdoor in that algorithm (‘recovering training data’). Attackers may even emulate a trained model as a whole, by repeatedly querying the target (‘model stealing’).

The second category concerns the integrity of an AI system as a whole. Attackers may produce an ingenuous query and submit it to a model in deployment in order to disturb its classification performance (‘perturbation attack’, ‘evasion attack’). Specific data to be used for training may be compromised (or even datasets as a whole that are widely in use poisoned), affecting the trained model’s performance (‘data poisoning’). Finally, training may be outsourced to a provider that tampers with the training data and installs a backdoor in the produced model, resulting in degraded classification performance for specific triggers (‘backdoor ML’).

All this is very new terrain, with lots of active R&D, but as yet only a small repertoire of solutions and remedies. Most prominent is the approach of generating adversarial examples and retraining one’s algorithm to become immune to them (‘adversarial ML’). Another approach is ‘differential privacy’, which adds noise to ML: either locally to datasets so that individual datapoints can no longer be identified by users of the datasets or, in a more sophisticated vein, to the actual process of ML itself in order to render the final model privacy-proof—the algorithm becomes ‘differentially private’. The model no longer ‘leaks’ training data as belonging to specific individuals.

Finally, ‘federated learning’ is on the march.46 Suppose an ML model is to be trained dynamically from local data on mobile phones. These are no longer uploaded to a central location but stay where they are. After receiving the current model, each phone locally performs an update which is uploaded and used for ‘transfer learning’. Apart from enhancing security, this obviously reduces the risk of violation of privacy.

Which tools of the kind are offered by Google, Microsoft, and IBM (see Table 3)? IBM appears to be the minor player here. It only offers the Adversarial Robustness 360 Toolbox, an open sourced toolbox with tools to defend deep neural networks against adversarial attacks.47 Microsoft, on their part, have open sourced the WhiteNoise toolkit for implementing differential privacy schemes, focussing on datasets.48

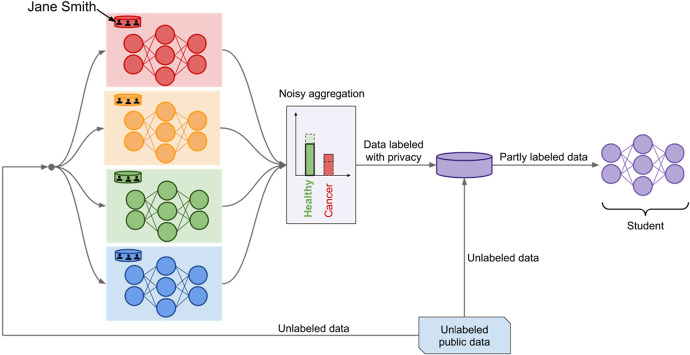

Google, finally, seems to have the edge at the moment. To begin with, they offer a library of adversarial attacks (CleverHans).49 Furthermore, they have developed two differential privacy schemes focussing on the very process of ML. The first scheme, TensorFlowPrivacy, provides a method that introduces noise into the gradient descent method used in neural networking.50 Private Aggregation of Teacher Ensembles (PATE), the second scheme, proposes ML in two steps.51 Teach an ensemble of models first, add noise to their collective ‘voting’, and learn a student model from fresh data (which have obtained their label from the ensemble voting). Only the latter student model is to become public, all others are discarded (see Fig. 6). Their Cobalt pipeline, finally, combines various security measures: federated learning, local differential privacy, and anonymization and shuffling of data.52

Fig. 6.

Teacher-Student Adversarial Training (Google).

Source: http://www.cleverhans.io/privacy/2018/04/29/privacy-and-machine-learning.html

Accountability Tools

The final aspect that has frequently been stressed as an element of responsible AI is accountability (cf. Table 2): providers of AI have to be able to produce a proper account of all the steps in the process of production of their solutions. While most companies on my list of 24 just pay lip service to this requirement, only three firms have actually developed accountability tools tailored to AI. In a kind of silent competition, Google, Microsoft, and IBM have each submitted proposals of the kind (Table 3). As we will see, these complement each other. Let me first discuss the proposal from (mainly) Microsoft employees about datasets to be used for training (Gebru et al. 2018–2020). The authors emphasize that quality of datasets is paramount for ML. Datasets may contain unwanted biases, and the deployment context may deviate substantially from the training context. As a consequence, the trained model performs badly. In order for dataset consumers to be prepared, they suggest that dataset creators draft ‘datasheets for datasets’. These are to give full details about how the dataset has been ‘produced’: its creation (e.g., by whom), composition (e.g., labels, missing data, sensitive data, data splits), collection (e.g., sampling procedure, consent), pre-processing (e.g., cleaning, labelling), uses (e.g., in other instances), distribution (e.g., to whom, licensing conditions), and maintenance (e.g., support, updates).

Further, ‘model cards’ are proposed by Google employees (Mitchell et al. 2018–2019). These cards provide details about the performance of a specific trained model. Subsections are to specify details of the model; intended uses; factors to be considered for model performance; appropriate metrics for the actual performance of the model; specifics about test data and training data; and ethical considerations to be taken into account. As the authors note, such cards are especially important whenever models are developed, say by Google, and offered in the cloud (‘Google Cloud’) and subsequently deployed in contexts such as healthcare, employment, education, and law enforcement (Mitchell et al. 2018–2019: 220). From the point of view of responsible AI, this accounting procedure is interesting, since any of its aspects can be incorporated in a model card as an issue to be reported on. Take fairness (Mitchell et al. 2018–2019: 224): whenever different groups (say age, gender, or race) are involved, the actual performance of the model across them can be specified (say by means of a confusion matrix). This allows to inspect whether equal opportunity has been satisfied.

Finally, ‘Factsheets for AI-services’ are proposed—by IBM employees this time (Arnold et al. 2018–2019). As a rule, AI services do not rely on single datasets or single pretrained models alone but on an amalgam of many models trained on many datasets. Typically, consumers send in their data and just receive answers in black box fashion. In order to inspire confidence in such services, the proposed factsheets can first of all enumerate details of the outputs, training data and test data involved, training models employed, and test procedures adhered to. Furthermore, several issues related to responsible AI may be accounted for: fairness, explainability, and security/safety (such as protection against adversarial attacks and concept drift).

External Collaboration and Funding concerning Responsible AI Research

Many companies on my list of 24 perform some degree of R&D internally. Moreover, some of them nurture close ties with outside non-profits or universities concerning research areas they deem important. Regular publications in computer journals are common. Take DeepMind. Acquired by Google in 2014, it is now Google’s research department in the UK that cooperates with many outside organizations and has maintained a consistent research output over the years. Or take IBM. Big Blue has always had a strong research department, outside collaborations, and a high research output as a result. At the moment, it does joint R&D with dozens of academic institutions. One recent example, initiated in 2019 (see Table 3), is their joint research with the Institute for Human-Centred AI at Stanford, focussing on responsible AI—as well as natural language processing and neuro-symbolic computation.53

As far as responsible AI is concerned, a new pattern has emerged on top of these regular R&D efforts: erecting completely new institutes (or programmes) with an exclusive focus on responsible AI and furnishing the money for them. Three such initiatives are current (Table 3). Facebook has created the Institute of Ethics in AI, located at the TU Munich (2019). With a budget of 7.5 million dollars over five years, it will perform research about several aspects of responsible AI.54 Amazon co-finances an NSF project called ‘Fairness in AI’ with 10 million dollars over the next three years (2019). The project distributes grants to promising scholars located at universities all over the USA. Its title is slightly misleading, though, since they actually intend to cover many aspects of responsible AI, not just fairness.55 IBM tops them all by funding a separate Tech Ethics Lab at the University of Notre Dame (2020). With 20 million dollars at their disposal for the next 10 years, the lab will study ethical concerns raised by advanced technologies, including AI, ML, and quantum computing.56

For a proper perspective, these initiatives should be placed in context. For IBM, it clearly represents an extension of already considerable research into ethical aspects of AI/ML. For Facebook and Amazon, the context differs: their programmes mark an effort to catch up. Their spending on research into aspects of responsible AI (and corresponding publication output) has been negligible until recently—especially in comparison with the main companies pushing for responsible AI: Google, Microsoft, and IBM.57

Implementation of AI-principles: Overview and Discussion

Let me at this point summarize the steps which the 24 companies that committed to principles for responsible AI have actually taken to move from those principles to their implementation in practice. The summary will be used for an attempt at answering the question whether we can accuse the companies involved of mere ‘ethics washing’ or not.

Overview

As appropriate governance structure for responsible AI, it is usually suggested to introduce a two-fold setup at the top of an organization: an ethics advisory group (or council) for input from society and an ethical committee (or review board) for guiding and steering internally towards responsible AI. It turns out that a large majority on my list of 24 did not care about such new governance: only eight companies did introduce such measures. Five firms installed an ethical committee alone, while three firms installed the full governance setup of ethical committee and advisory group. Remarkably, none of the largest companies usually very vocal about their mission to realize responsible AI (Google, Microsoft, and IBM) cared to install such an advisory council on top of their ethical committee.

The statistics are slightly better as far as developing new educational materials for training purposes (blog entries, guidelines, checklists, courses, and the like), or coding new software tools is concerned. While half of all 24 committed firms did not contribute anything of the kind, four companies introduced relevant training options, and three companies incorporated some smaller tools (for fairness or explainability) into their current software offerings. Only five companies realized that fully responsible AI can only come to fruition if documentation and software tools about all aspects of responsible AI are created and made available to employees/clients.

Amongst these, Google, Microsoft, and IBM stand out. Their educational materials and software tools are in a class of their own that no other large company—such as Amazon or Facebook—has been able to match. Based on thorough research, usually performed together with other researchers at universities and non-profits, the whole package represents the forefront of current ideas about responsible implementation of AI. Let me just recapitulate some highlights.

Concerning fairness, a whole menu of metrics has been developed. Building on this, several techniques for mitigating bias have been developed, each implemented in relevant source code. As far as explainability is concerned, post-hoc interpretable models can be learned on the predictions from a black box model. Methods have been developed to find the most similar datapoints with the same prediction or the nearest datapoints with the opposite prediction. Approaches based on SHAP values report the most important features for either a model as a whole or a particular prediction. For countering adversarial attacks, prominent tools are libraries of adversarial attacks and the addition of noise to datasets or to the neural networking itself. Finally, serious steps towards realizing accountability are schemes that enable to account for datasets, models, or AI-as-a-service.

Discussion

So, first steps towards responsible AI have been taken, in particular, by the largest tech companies involved. How are we to evaluate these steps? Do they amount to mere ‘ethics washing’? The charge of mere ethics washing is to mean that all the developments reported above are just ‘ethical theatre’ (yielding nothing of value) intended to keep regulation at bay (a goal that may or may not be reached).58 Such activities may substitute for stricter regulation. In order to inspect this charge, I propose a nuanced approach which breaks it down into its component parts and discusses them in an analytic fashion. Building upon arguments developed by Elettra Bietti, Brent Mittelstadt, Julia Powles, and others, three perspectives are explored, focussing, respectively, on (a) the impact on regulatory alternatives, (b) the constraints on governance initiatives within a firm, and (c) charges of a narrow focus on ‘technological fixes’.

Impact on Regulation

Concerning regulation, the actual impact of new governance structures and new educational materials and software tools (combined with massive funds for collaboration between industry and academia) (Table 3) on regulatory alternatives is to be explored, as well as the companies’ intentions behind these actions. First, did these initiatives for responsible AI effectively freeze regulatory alternatives? Has valuable time been won by the companies involved and regulatory pressures staved off (cf. Bietti, 2020: 217)? I would argue that this potential effect has not materialized. In retrospect, after some early sporadic calls for responsible AI (from 2016 onwards), the flood of declarations of AI principles issued by companies began in earnest at the beginning of 2018. This soon enough led a dozen of them to initiate experiments with new governance structures and/or creating manuals and software tools for responsible AI (as presented in Table 3). In addition, important industry-academia collaborations were staged (Table 3). Concurrently though—with small beginnings even before 2018—governmental and civil society actors, the professions, and academia alike started to press home their views on responsible AI. In this cacophony of voicesm important corporations such as Microsoft, IBM, and Google (in that order) began to realize that governmental regulation of AI was unavoidable. From mid-2018 onwards, they publicly uttered their willingness to cooperate with efforts towards regulation of the kind (to be discussed more fully below, Sect. 5.3.2). So, any softening or delaying of AI regulation does not seem to have occurred.

As to their intentions, second, some companies committed to AI principles (from Table 3) initially may well have harboured hopes for state regulation to be delayed or weakened as a result of their AI initiatives. After all, such hopes are usually supposed to be the intention behind pleas for self-regulation by firms (cf. also Sect. 5.1 below)—and the campaign for responsible AI as just described is just another form of self-regulation, this time within the individual firm.59 Anyway, whatever hopes may have been entertained by any firm; these were effectively squashed by the incessant pressures from society for an AI responsive to its needs.

Corporate Constraints

Next, one has to consider the corporate context within which these initiatives for responsible AI unfold, potentially reducing the scope of possible reform (cf. Bietti, 2020: 216–217). Several questions impose themselves. What discussions are considered legitimate? What is considered out of bounds? How do decision powers influence outcomes? How does the new governance for responsible AI influence actual project decisions? Were the AI products developed demonstrably more ‘responsible’? Were any projects deflected in their course? Were any projects (say about autonomous AI) halted out of ethical considerations? Are AI governance practices made transparent? May outside experts speak freely about their experiences or not?

Answering these questions about the gains of corporate new governance of AI as tabulated in Table 3 is a thorny issue. Concerning ethics councils, review boards, and team diversity, I have only assembled materials as published by the firms themselves. This produces an overview of rules and procedures that have been introduced—not of the results obtained (if any). Companies do not report detailed evaluations of the various procedures involved. So, I simply cannot answer the above questions.

Occasionally, incidents leak out that remind us that company preferences and constraints are in force. In spite of their lofty AI principles, Google had initiated cooperation with the Pentagon for the Maven project; their task was to improve the analysis of footage of surveillance drones by means of ML. When details of this contract came into the open, massive employee protest erupted. As a result, the company decided to cancel its cooperation (mid-2018).60 More recently (December 2020), AI researcher Timnit Gebru was fired by Google.61 The direct cause was an argument about the future publication of a research paper she had co-authored. It alerted to the dangers of large natural language modelling (like BERT and GPT-3), especially the large environmental footprint it requires.62 In the background, though, there was also resentment on the part of Google management about her fight for inclusiveness inside the company. With this alarming incident also, several thousands of people (including many Googlers) immediately protested. Doubts about Google’s stance towards inclusivity and principled AI were expressed openly. Another prominent member of the AI ethics group, Margaret Mitchell, who openly supported Gebru, was fired two months later (February 2021).63 Steps the company might take in response to the protests and actual repercussions for the company’s efforts towards responsible AI as a whole are yet to be determined. Have responsible AI and Google’s corporate environment become incompatible after all?

Obtaining more thorough insights about the achievements of said new governance would require in-depth scholarship that obtains independent access to the firms, their employees, and their committee members. So, the only conclusion that can be drawn for the moment is that, indeed, corporate energies have been channelled into bending AI practices towards more ‘responsibility’—with research participants apparently trying to achieve tangible outcomes. Whether this is actually the case remains to be determined.

A Narrow Focus on ‘Technological Fixes’?

The final fruits of responsible AI efforts are the courses, materials, guidelines, instructions, and software packages as tabulated in Table 3. Several authors have been dismissive of these steps on the road to responsible AI practices, the new software tools in particular. These are variously debunked as ‘technological solutionism’, as based on the mistaken conception of ethical challenges as ‘design flaws’ (Mittelstadt, 2019: 10); as ‘mathematization of ethics’ (Benthall, 2018) which only serves to provide ‘a false sense of assurance’ (Whittaker et al. 2018: ch. 2, p. 27); or, as Julia Powles puts it, talking about the efforts to overcome bias in AI systems: ‘the preoccupation with narrow computational puzzles distracts us from the far more important issue of the colossal asymmetry between societal cost and private gain in the rollout of automated systems; (…) the endgame is always to “fix” A.I. systems, never to use a different system or no system at all’ (Powles & Nissenbaum 2018).64

The charge is that the AI community of experts is tempted to reduce the ethical challenges involved to a technocratic task: the appropriate conceptions are to be made computable and implementable, and all AI will be beneficial. As a result, the inherent tensions behind these ‘essentially contested concepts’, emanating from the clash between the interests of the various stakeholders involved, are ignored and remain unaddressed.

Note the parallel: while the earlier critique of new instruments in general for responsible AI argued that these may obscure regulatory initiatives and thereby soften or keep them at bay, this additional critique of technical solutions in particular argues that these tend to obscure the more fundamental problems underlying application of AI and therefore keep consideration of them at bay. Both may be considered to be forms of the ‘ethics washing’ argument—but they point to different phenomena being obscured, both deemed a nuisance by companies.

How serious is this technocratic critique to be taken? It is no coincidence, of course, that in their declarations, the 24 committed companies have precisely zoomed in on those principles that require new technical methods (for fairness, explainability, security and privacy). To them, as computer scientists, it is appealing to solve the puzzles involved. However, the charge appears to suggest that, therefore, close to nothing has been gained. I dare to challenge this assessment.

Take explainability: of course, only a broad societal debate can determine what a proper explanation is to mean, for each relevant public and for each relevant context. But let us not overlook the fact that that debate is already underway. Academia and industry have been discussing the need to distinguish between different publics that require explanations, and the sort of explanations they require. Thereupon, much energy has been put into translating these requirements into concrete software tools (most of which have been open sourced). As a result, the ethical discussion has not necessarily been foreclosed, but has acquired the tools needed for the debate to continue and possibly reach some kind of consensus. Instead of decrying the ‘mathematization of ethics’, one could try to see it in reverse: the mathematical tools created may readily invigorate the ethical debate.

The same argument can be made for fair AI. The concept of fairness is essentially contested, indeed. But for now, a debate spanning academia and industry circles (and beyond) has made clear that a multitude of fairness conceptions are to be distinguished. As the next step, this has been translated into more precise fairness metrics, which subsequently have been implemented in software tools (open sourced again) that allow updating learned models to conform to one’s fairness metric of preference. Again, I interpret this development as a welcome tool for a fruitful ethical discussion, not as a technocratic solution that necessarily diverts attention away from the underlying societal tensions and stakeholders involved.

Rounding off the whole discussion about ‘ethics washing’ in this Sect. (4.2), I conclude that overall, some progress towards responsible AI has been made. Although an assessment of the new governance for responsible AI remains elusive, some positive first steps have been taken, especially by the companies on my list that have produced guidelines, brochures, checklists, and, last but not least, concrete software implementations for the newly invented techniques (Table 3).65 For those companies at least, the accusation of being involved in mere ‘ethics washing’ seems to be misplaced. As for the committed companies on my list that apparently—at least publicly—have not set any first steps on that road, the jury is still out; though belatedly, they still might catch up.

A Future with Responsible AI?

So, may we conclude that a future with responsible AI is near and all the promises will turn into reality? That is, presumably the following scenario unfolds. The responsible AI tools from Google, Microsoft, and IBM will (continue to) trickle down and increasingly be used by other producers and consumers of AI solutions. As a result, biases will be reduced to a minimum, and recipients at the end of the AI chain (such as physicians, patients, or bank clients) and overseers (such as regulators) will receive the explanations they desire—all of this (almost) completely shielded from the fall-out of adversarial attacks. I am afraid, however, that it is too soon for jubilation; many obstacles remain to be overcome.

Let us consider, by way of example, the companies in my research that did introduce training materials and/or software tools connected to explainability. For one thing, it is difficult to ascertain whether their training sessions for ML practitioners to develop the correct ‘mindset’ for handling the issue of explainable AI are actually effective. Moreover, whenever companies do use the software tools involved, overwhelmingly they appear to be used by ML practitioners only for the purpose of sanity checks on the models they produced (Bhatt et al. 2019–2020).66 The relevant end users simply do not (yet) receive explanations from them.

Studies suggest that many hurdles have to be overcome before ML practitioners feel confident to do so (Bhatt et al., 2020). There are some technical issues (such as how to find counterfactuals and construct measures of confidence). More importantly, though, explanations have to be put into the particular context, stakeholders’ needs have to be considered, and the process of explanation should preferably allow interacting with the model. Finally, most difficult of all, interpreting important factors of an explanation as causal remains a fragile undertaking. The article by Bhatt and others (2020) provides a fascinating array of situations in which unanswered urgent questions emerge—bringing home the point that technical prowess concerning ML is one thing but putting those instruments to work in actual practice in responsible fashion is quite another. That implementation phase is a formidable hurdle that is underexplored at present.

Currently, only a fraction of companies (let alone organizations in general) are recognizing the risks associated with the explainability of AI and taking steps to mitigate them. A McKinsey study from 2019 amongst firms using AI found that the percentage was 19% (amongst ‘AI high performers’ it rose to 42%).67 If a much wider audience of companies (and other organizations) becomes convinced that the call for explainable AI must be answered, they will have to adopt the relevant training materials and software tools. That spread, though, is likely to face additional obstacles. Kaur et al. (2020) did research about the potential use of the interpretable GAM (General Additive Model) and SHAP explainers by data scientists. After having been introduced to the new tools, the majority of their respondents did not appear to properly understand the tools and their visualizations. As a result, instead of (correctly) using them for critical assessment of their models, these practitioners either uncritically accepted the tools (‘overuse’), blinded by their public availability and apparent transparency, or they came to distrust those tools and showed reluctance to use them at all (‘underuse’). If these obstacles are not cleared, expectations about proper explanations being provided to end-users are even more utopian.

So, the road to AI with stakeholders being satisfied in their demand for explanations seems to be full of obstacles. The same goes, I presume, for the road to fully de-biased, fair, and secure AI—each with obstacles of their own. And as concerns the accountability tools mentioned, these may evolve into standards, but as long as they remain voluntary, their wide and—especially—faithful and complete adoption is far from guaranteed.

After these sobering conclusions about the future of (responsible) AI, it is time to move on to the issue of AI regulation.

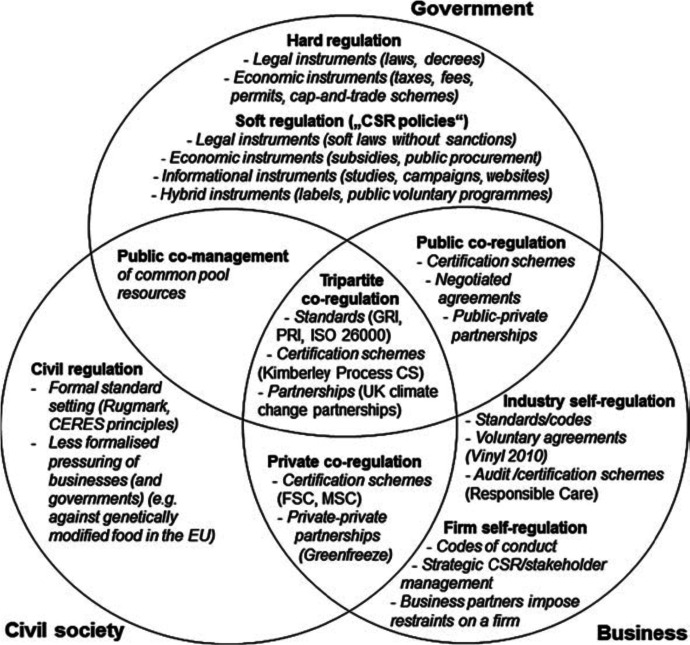

Regulation of AI