Abstract

Many studies on brain–computer interfaces (BCIs) have sought to understand the emotional state of the user in order to provide a reliable link between humans and machines. Advanced neuroimaging methods such as electroencephalography (EEG) have made it possible to recognize and understand a wide range of human emotions more precisely. This physiological, EEG-based approach stands in stark contrast to traditional methods based on non-physiological signals and has been shown to perform better. EEG closely measures the electrical activity of the brain (a nonlinear system), and hence entropy proves to be an efficient feature for extracting meaningful information from raw brain waves. This review gives a brief summary of the various entropy-based methods used for emotion classification, thereby providing insights into EEG-based emotion recognition. It also reviews current and future trends and discusses how using entropy as a feature extraction measure can enhance emotion identification from EEG signals.

Keywords: EEG, Emotion recognition, Entropy measure, Feature extraction, Signal processing

Introduction

Emotions are biological states related to the nervous system and usually reflect changes in neuro-physiological condition. Emotions are an indispensable part of human life; if machines could anticipate them precisely, it would accelerate progress in artificial intelligence and the brain–computer interface field [1]. Presently there is no scientific agreement on a definition of emotion. One definition, given by William James, claims that "the bodily changes follow directly the perception of the exciting fact, and that our feelings of the changes as they occur are emotion" [2]. As an evolving field of research with vital importance and wide application, emotion classification has drawn interest from disciplines such as neuroscience, neural engineering, psychology, computer science, mathematics, biology, and physics, and has always remained in the spotlight [3, 4]. Various experiments are being conducted to achieve more instinctive human–computer interaction, and the ultimate goal is to devise advanced devices that can distinguish various human emotions in real time [5]. Without the capability to quantify emotions, computers and robots cannot connect naturally with humans. Therefore, human emotion recognition is a key technology for human–machine interaction [6, 7].

Why EEG for emotion recognition?

In current practice, emotion recognition is done in two main ways: by using non-physiological signals or by using physiological signals. Non-physiological methods use text, speech, facial expressions, or gestures, and most previous studies are based on them [8–10]. However, these methods cannot be considered reliable, as facial expressions or tone of voice can be voluntarily masked. The second approach, which employs physiological signals, is more efficient and reliable because these signals cannot be willfully suppressed [3]. Among the available physiological signals, EEG has become the most popular non-invasive option for emotion detection, as it efficiently records the electrical activity of the brain [11]. With the advancement of sensor networks [12, 13], intelligent sensing systems [14–16], and energy-efficient biomedical systems [17, 18], EEG-based methods have become feasible. Additionally, several studies have demonstrated the reliability of EEG for applications in BCI [19], electronic gadgets [20, 21], and medicine, where it is used to closely inspect various brain disorders [22–24]. Some EEG-based research indicates that EEG yields better databases than other available emotion databases, such as non-physiological datasets. Thus, because EEG is closely tied to brain activity [25–27], and because it is immediate and comparatively more reliable than electrodermal activity (EDA; sometimes known as galvanic skin response, or GSR), electrocardiogram (ECG), photoplethysmography (PPG), electromyography (EMG), etc., it is highly recommended over other physiological signals [28, 29].

How does entropy contribute in emotion recognition?

Entropy is a nonlinear thermodynamic quantity that specifies a system’s degree of randomness. Entropy measures are usually employed to assess the irregularity, complexity and unpredictability of biomedical data sets, and this principle has also been extended to study the complexity of EEG signals. A number of studies show that entropy measures have a significant capability to extract information about regularity from EEG data sets [18]. Therefore, entropy, being a nonlinear feature that measures the level of randomness of a system, is effective in distinguishing various emotions based on their level of irregularity in the EEG signal.

Major contributions of present study

In short, contributions of this article are:

The analysis finds that recurrence quantification analysis entropy combined with ANN-based classifiers outperforms the other approaches.

This analysis will assist researchers to determine the combination of entropy characteristics and classification methods that is more appropriate for further enhancement of the current emotion detection methods.

This will assist learners in better comprehension of various existing EEG datasets of emotions.

The study also proposes that, in addition to emotion recognition, entropy-based algorithms can be effective for other information-retrieval tasks such as the detection of emotion-related mental disorders.

Finally, through the present review and analysis, certain findings and suggestions have been listed for further studies in this field.

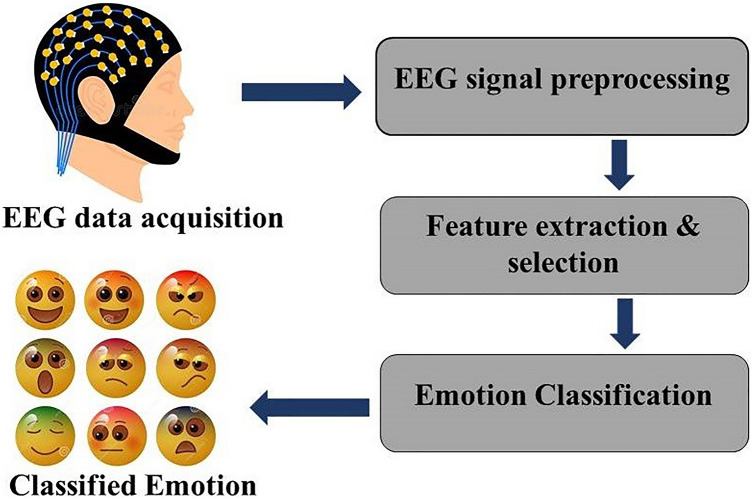

A generalized block diagram of the methodology proposed in the various papers discussed in this review is given in Fig. 1. The methodology includes four main tasks. The first is data acquisition, i.e., an EEG device records a high-quality signal from the brain. These raw signals are often contaminated with unwanted noise and artifacts. The second task is preprocessing of the raw signal with different filters to remove or minimize the noise and artifacts, after which the signal is down-sampled to a chosen sampling frequency. After the signal is preprocessed, feature extraction is carried out. Here various entropy methods are used to extract significant information from the EEG data. The last task is classification: after selecting the features relevant to the psychological state, classifiers are trained so that different emotional states can be categorized using the extracted features.

Fig. 1. Generalized block diagram of the proposed methodology

There is increasing evidence that entropy-based methods can provide higher accuracy for emotion recognition [29–33]. The objective of this paper is to review various entropy measures for EEG-based emotion classification. The article is structured as follows: Sect. 2 presents the theories of emotion and EEG as a psycho-physiological measure for emotion evaluation. Section 3 discusses the implementation of EEG measures for emotion recognition. Section 4 provides an analysis of previous research on EEG-based emotion recognition using different entropy measures. Section 5 concludes with the findings of this review and provides some suggestions for future research in this field.

Theory and measures of emotion

Theories of emotion

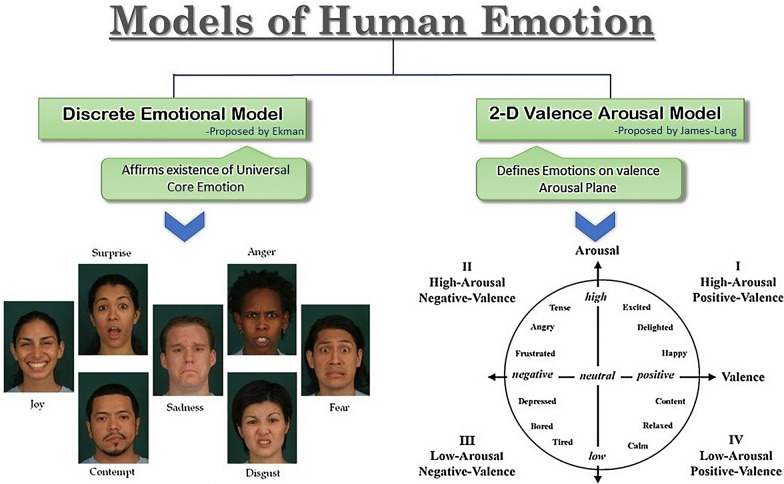

Emotions show up in our day-to-day lives and significantly influence human awareness [34]. Principally, an emotion is a psychological state that arises unconsciously rather than through conscious effort, and it appears as physiological changes in the body [35]. Psychologists and neuroscientists have proposed different theories regarding why and how a living body experiences emotion [36]. However, the two models most widely used in emotion recognition are the discrete emotional model and the two-dimensional valence-arousal model, proposed by Ekman [37] and Lang [38], respectively. The discrete emotional model affirms the existence of a few primitive or core emotions that are universal. Different psychologists have proposed various classes of emotions, but there is substantial agreement on six emotions: happiness, sadness, surprise, anger, disgust, and fear [39]. The dimensional model attempts to conceptualize human emotions by defining where they lie in two or three dimensions [36] and classifies emotions according to their position on the valence-arousal scale. Valence indicates pleasantness and ranges from negative to positive. Arousal represents the activation level and ranges from low to high [40]. Figure 2 summarizes the models of emotion mentioned above.

Fig. 2. Models of human emotion widely used in emotion recognition

EEG as a psycho-physiological emotion assessment measure

Psycho-physiology refers to the part of brain science that deals with the physiological bases of psychological processes. Regardless of whether an individual expresses the emotion through speech and gesture, a change in cognitive mode is unavoidable and measurable [41] as receptive nerves of the autonomic nervous system are stimulated once an individual is positively or negatively excited. This stimulation elevates fluctuation in heart rate, increases respiration rate, raises blood pressure, and decreases heart rate variability [42].

Monitoring brain activity through EEG is among the most widely accepted psycho-physiological measures used in BCI research. EEG reflects the functioning of the central nervous system (CNS) by monitoring and recording the electrical activity of the brain.

Methods and materials

Emotion elicitation stimuli

Emotion elicitation in the subject is required to obtain a high-quality database for building an emotion classification model [6, 43]. To obtain a good emotion dataset, it is important to elicit the emotion naturally, as EEG reflects the activity of the nervous system (ANS/CNS). Many different protocols for emotion elicitation have been proposed using various types of stimuli. The most common stimulus is audiovisual. Images have also been used to elicit emotions; the international affective picture system (IAPS) [44, 45] is a well-known project to develop an international framework for emotion elicitation based on static pictures. The link between music and emotions has also inspired the use of music and audio stimuli [40]. Memory recall [43] and multimodal approaches [6] are other strategies in use.

The purpose of an emotion elicitation technique is to stimulate the desired emotion in the subject while eliminating the possibility of stimulating multiple emotions. A study by Gross and Levenson reveals that psychologically characterized movies attain better outcomes owing to their dynamic profile [46].

Data used

This review examines results from various entropy approaches. Different researchers used different emotion databases according to their needs and the suitability of the data.

Two benchmark databases are widely used: the dataset for emotion analysis using physiological signals (DEAP) and the SJTU Emotion EEG Dataset (SEED). The other datasets were recorded in a similar way but are not publicly available.

DEAP dataset

The dataset for emotion analysis using physiological signals (DEAP) is a multimodal dataset designed by Queen Mary University of London to study human affective states. The database was obtained using a 32-channel BioSemi acquisition system, which recorded the electroencephalogram (EEG) and peripheral physiological signals of 32 participants as each subject viewed 40 one-minute music video excerpts. The subjects rated the videos in terms of arousal, valence, like/dislike, dominance, and familiarity. Frontal face video was also recorded for 22 of the 32 subjects. A unique stimulus selection approach was used, combining affective tag retrieval from the last.fm website, video highlight identification, and an online assessment tool. The dataset is freely accessible, which allows other researchers to use it to test their methods of estimating the affective state. The dataset was first presented in a paper by Koelstra et al. [46]. Further details on the DEAP database are available online at https://www.eecs.qmul.ac.uk/mmv/datasets/deap/index.html.

SEED dataset

The SEED dataset includes 62-channel EEG recordings from 15 subjects (7 males and 8 females, 23.27 ± 2.37 years), with electrodes placed according to the international standard 10–20 system. The emotions of the subjects are triggered through 15 video clips, each about 4 min long. The dataset covers three types of emotion (positive, neutral, negative), and each category is associated with five video clips. Each participant took part in three sessions, with an interval of 1 week or longer between sessions [47, 48]. Further details on the SEED database are available online at http://bcmi.sjtu.edu.cn/home/seed/seed.html.

Entropy feature extraction

In building an emotion recognition system, features must be extracted that best describe the behavior (either static or dynamic) of brain electrical activity during different emotional states. This article assesses how different kinds of emotions are distinguished using different entropy features.

Entropy is a dynamic feature that describes the chaotic nature of a system and evaluates the amount of information it carries, which can be used to separate the necessary information from interfering data [49]. The greater the value of entropy, the greater the irregularity of the system. This section provides a concise description of the various entropies used as features to classify different emotions.

Sample entropy

Sample entropy (SampEn) quantifies a physiological signal’s complexity irrespective of the signal length and therefore has a trouble-free implementation. Conceptually, sample entropy is based on the conditional probability that two sequences of length ‘n + 1’ randomly selected from a signal will match, given that they match for the first ‘n’ elements of the sequences. Here ‘match’ means that the distance between the sequences is less than some criterion ‘k’ which is usually 20% of the standard deviation of the data sequence taken into account. Distance is measured in a vector sense. Defining ‘k’ as a fraction of the standard deviation eliminates the dependence of SampEn on signal amplitude. The conditional probability is estimated as the ratio of the unconditional probabilities of the sequences matching for lengths ‘n + 1’ and ‘n’, and SampEn is calculated as the negative logarithm of this conditional probability [49]. Thus, SampEn is defined as:

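$$\mathrm{SampEn}(n, k, N) = -\ln\left[\frac{A^{n}(k)}{B^{n}(k)}\right] \tag{1}$$

where $N$ is the length of the signal, $B^{n}(k)$ is the estimated probability that two sequences match for $n$ points, and $A^{n}(k)$ is the estimated probability that the sequences match for $n + 1$ points. $A^{n}(k)$ and $B^{n}(k)$ are evaluated from data using a relative frequency approach.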

Sample entropy is comparatively reliable and reduces the bias of approximate entropy [31]. A greater value of estimated sample entropy suggests that the signal is highly unpredictable, and a lower value suggests that the signal is predictable. The value of ‘n’ is chosen by the researcher and differs from work to work.

SampEn’s advantage is that it can distinguish a number of systems from one another. For random numbers, it agrees with theory much better than approximate entropy does. Self-matches are not included in this entropy, so the bias decreases. The entropy values are relatively stable across various sample lengths. However, lack of consistency for sparse data is the key downside of SampEn.
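The definition above can be illustrated with a minimal sketch in plain Python. The function name `sample_entropy`, its parameter names, and the use of the maximum absolute difference as the distance between sequences are assumptions made for this example; the tolerance defaults to 20% of the standard deviation, as described in the text.

```python
import math
import random


def sample_entropy(signal, n=2, k_fraction=0.2):
    """Sample entropy with template length n and tolerance k = k_fraction * std."""
    N = len(signal)
    mean = sum(signal) / N
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / N)
    k = k_fraction * std

    def count_matches(length):
        # The same N - n starting points are used for both lengths so that the
        # two counts are comparable; self-matches (i == j) are never counted.
        count = 0
        for i in range(N - n):
            for j in range(i + 1, N - n):
                if all(abs(signal[i + t] - signal[j + t]) < k for t in range(length)):
                    count += 1
        return count

    b = count_matches(n)        # pairs that match for n points
    a = count_matches(n + 1)    # pairs that still match for n + 1 points
    if a == 0 or b == 0:
        return float("inf")     # too few matches: the estimate is undefined
    return -math.log(a / b)


# Synthetic illustration only: a regular sine wave versus Gaussian noise.
rng = random.Random(0)
regular = [math.sin(2 * math.pi * i / 25) for i in range(300)]
noisy = [rng.gauss(0, 1) for _ in range(300)]
print(sample_entropy(regular), sample_entropy(noisy))
```

The regular signal should yield the lower value, in line with the interpretation that lower SampEn indicates a more predictable signal.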

Dynamic sample entropy

As the name suggests, dynamic sample entropy (DySampEn) is the dynamic extension of sample entropy and is applied to evaluate the EEG signal. DySampEn is often seen as a dynamic feature of EEG signals that can track the temporal dynamics of the emotional state. It is determined by calculating SampEn from the EEG signal over sliding time windows (a set of consecutive time windows) [29]. By employing sliding time windows with window length $t_w$ and moving window length ∆t, DySampEn can be expressed as:

$$\mathrm{DySampEn}_{k} = \mathrm{SampEn}_{k}, \quad k = 1, 2, \ldots, M, \qquad M = \left\lfloor \frac{T - t_w}{\Delta t} \right\rfloor + 1 \tag{2}$$

where the subscript $k$ denotes the $k$-th sliding time window (k = 1, 2, 3, …), $M$ is the number of sliding time windows, $T$ is the total length of the EEG signal, and $\lfloor \cdot \rfloor$ is the floor function, which rounds to the largest integer not exceeding $(T - t_w)/\Delta t$.

The time window length $t_w$ and moving window length ∆t have to be chosen as per research need. As EEG has a temporal profile, the benefit of this entropy is that it can provide more accurate emotionally relevant signal patterns for emotion classification than sample entropy [18, 29].
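The sliding-window idea can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in [29]: window length and step are expressed in samples, the names `sliding_windows` and `dynamic_feature` are hypothetical, and a simple variance function stands in for sample entropy to keep the example short.

```python
def sliding_windows(signal, window_len, step):
    """Return consecutive windows of `window_len` samples, advanced by `step` samples."""
    total = len(signal)
    if window_len > total:
        return []
    # Number of windows: floor((T - window_len) / step) + 1
    num_windows = (total - window_len) // step + 1
    return [signal[k * step: k * step + window_len] for k in range(num_windows)]


def dynamic_feature(signal, window_len, step, feature):
    """Apply `feature` (for DySampEn, a sample-entropy function) to every window."""
    return [feature(window) for window in sliding_windows(signal, window_len, step)]


def variance(window):
    # Stand-in feature for this sketch only; DySampEn would use sample entropy here.
    mean = sum(window) / len(window)
    return sum((x - mean) ** 2 for x in window) / len(window)


# Ten samples, windows of four samples moved two samples at a time.
print(dynamic_feature(list(range(10)), window_len=4, step=2, feature=variance))
```

Passing the feature as a function keeps the windowing logic independent of the entropy measure, so the same routine could produce a time course for any of the entropies discussed in this section.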

Differential entropy

Differential entropy (DE) is the entropy of a continuous random variable and measures its uncertainty. It is also related to minimum description length. One may describe the mathematical formulation as

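$$h(X) = -\int_{X} f(x)\,\log f(x)\,dx \tag{3}$$

where $X$ is a random variable and $f(x)$ is its probability density. So for any time series $X$ obeying the Gaussian distribution $N(\mu, \sigma^{2})$, its differential entropy can be expressed as [50]:

$$h(X) = -\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^{2}}}\, e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}} \log\left(\frac{1}{\sqrt{2\pi\sigma^{2}}}\, e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}}\right) dx = \frac{1}{2}\log\left(2\pi e \sigma^{2}\right) \tag{4}$$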

The disadvantage is that estimating this entropy is quite difficult in practice, as it requires estimating the density of X, which is recognized to be both theoretically difficult and computationally demanding [3, 51].
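Under the Gaussian assumption, the density estimate is avoided because only the variance is needed. The sketch below illustrates this special case; the function name is hypothetical, natural logarithms are used (so the result is in nats), and the data are synthetic.

```python
import math
import random


def gaussian_differential_entropy(samples):
    """Differential entropy (nats) of samples that are assumed to be Gaussian."""
    n = len(samples)
    mean = sum(samples) / n
    variance = sum((x - mean) ** 2 for x in samples) / n
    return 0.5 * math.log(2 * math.pi * math.e * variance)


# Synthetic illustration: unit-variance Gaussian samples.
rng = random.Random(0)
samples = [rng.gauss(0, 1) for _ in range(20000)]
print(gaussian_differential_entropy(samples))
```

For unit variance the closed form gives 0.5 ln(2πe), roughly 1.42 nats, and doubling the amplitude of the signal adds ln 2 to the result.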

Power spectral entropy

Power spectral entropy (PSE) is a normalized form of Shannon’s entropy. It uses the amplitude components of the power spectrum of the time series to evaluate entropy from data [3, 50], i.e., it measures the spectral complexity of an EEG signal and is therefore also regarded as frequency-domain information entropy [51].

Mathematically it is given by

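$$PSE = -\sum_{i} P_{i} \ln P_{i} \tag{5}$$

where $P_{i}$ is the power spectral density of the $i$-th frequency component, normalized so that $\sum_{i} P_{i} = 1$.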
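As a rough illustration, the spectral entropy of a short epoch can be computed from a normalized periodogram. The sketch below makes several assumptions that are not taken from the cited works: the spectrum is obtained with a direct discrete Fourier transform, the DC component is discarded, and the function names are hypothetical.

```python
import cmath
import math
import random


def power_spectrum(signal):
    """One-sided power spectrum via a direct DFT (O(N^2), adequate for short epochs)."""
    n = len(signal)
    spectrum = []
    for f in range(1, n // 2 + 1):          # the DC component (f = 0) is skipped
        coeff = sum(x * cmath.exp(-2j * math.pi * f * t / n)
                    for t, x in enumerate(signal))
        spectrum.append(abs(coeff) ** 2)
    return spectrum


def power_spectral_entropy(signal):
    """Shannon entropy (nats) of the power spectrum normalized to sum to one."""
    power = power_spectrum(signal)
    total = sum(power)
    if total == 0:
        return 0.0
    probs = [p / total for p in power]
    return -sum(p * math.log(p) for p in probs if p > 0)


# Synthetic illustration: a single-frequency sine versus Gaussian noise.
rng = random.Random(0)
sine = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
noise = [rng.gauss(0, 1) for _ in range(64)]
print(power_spectral_entropy(sine), power_spectral_entropy(noise))
```

A signal whose power sits in one frequency bin gives an entropy close to zero, while broadband noise spreads power over many bins and gives a much larger value.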

Shannon’s entropy (ShEn) is a measure that changes linearly with the logarithm of the number of possibilities. It is also a measure of data spread, which is widely applicable in determining the dynamic order of a system.

Shannon’s entropy is based on the additivity law of composite systems, i.e., if a system is divided into two statistically independent subsystems A and B, then as per the additivity law

$$S(A, B) = S(A) + S(B)$$

where S(A, B) is the total entropy of the system and S(A) and S(B) are the entropies of subsystems A and B, respectively. So, Shannon’s entropy successfully addresses extensive (additive) systems involving short-ranged effective microscopic interactions. Physically, "dividing the total system into subsystems" implies that the subsystems are spatially separated in such a way that there is no residual interaction or correlation. If the system is governed by a long-range interaction, statistical independence can never be realized by any spatial separation, since the influence of the interaction persists at all distances and therefore correlation always exists for such systems. This explains the disadvantage of ShEn: it fails for non-extensive (non-additive) systems that are governed by long-range interactions. It overestimates the entropy level when a larger number of domains are considered, and it does not clarify the temporal connection between different values extracted from a time series signal [18].
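The additivity law, and its failure when the subsystems are correlated, can be checked numerically. The probability values below are arbitrary numbers chosen for illustration and do not come from any dataset.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy (nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Independent subsystems: the joint distribution is the product p(a) * p(b).
p_a = [0.5, 0.3, 0.2]
p_b = [0.6, 0.4]
joint_independent = [pa * pb for pa in p_a for pb in p_b]

# Fully correlated subsystems with uniform binary marginals:
# only the outcomes (0, 0) and (1, 1) ever occur.
marginal = [0.5, 0.5]
joint_correlated = [0.5, 0.0, 0.0, 0.5]

print(shannon_entropy(joint_independent), shannon_entropy(p_a) + shannon_entropy(p_b))
print(shannon_entropy(joint_correlated), 2 * shannon_entropy(marginal))
```

In the independent case the two printed values agree, whereas in the correlated case the joint entropy is smaller than the sum of the marginal entropies, so simply adding subsystem entropies overestimates the total.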

Wavelet entropy

One of the quantitative measures in the study of brain dynamics is wavelet entropy (WE). It quantifies the degrees of disorder related to any multi-frequency signal response [52].

It is obtained as

$$WE = -\sum_{i} p_{i} \ln p_{i} \tag{6}$$

where $p_{i}$ defines the probability distribution of the time series signal and $i$ indexes the different resolution levels [18].

It is used to recognize episodic behavior in the EEG signal and provides better results for time-varying EEG [53]. The benefit of wavelet entropy is that it efficiently detects subtle variations in a dynamic signal. It requires less computation time, noise can be eliminated easily, and its performance is independent of any parameters [18].
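A minimal sketch of wavelet entropy is given below. It assumes that the probability at each resolution level is the relative energy of the detail coefficients at that level and that a Haar wavelet is used; the source does not prescribe a wavelet family, and the function names are hypothetical.

```python
import math


def haar_detail_energies(signal):
    """Energy of the Haar detail coefficients at each decomposition level."""
    approx = list(signal)
    energies = []
    while len(approx) >= 2:
        # Pair up neighbouring samples; a trailing odd sample is dropped.
        pairs = [(approx[i], approx[i + 1]) for i in range(0, len(approx) - 1, 2)]
        detail = [(a - b) / math.sqrt(2) for a, b in pairs]
        approx = [(a + b) / math.sqrt(2) for a, b in pairs]
        energies.append(sum(d * d for d in detail))
    return energies


def wavelet_entropy(signal):
    """Shannon entropy (nats) of the relative wavelet energy across levels."""
    energies = haar_detail_energies(signal)
    total = sum(energies)
    if total == 0:
        return 0.0
    probs = [e / total for e in energies]
    return -sum(p * math.log(p) for p in probs if p > 0)


# Energy confined to one level gives zero entropy; energy split evenly
# between two levels gives ln 2.
print(wavelet_entropy([1, -1] * 8))
print(wavelet_entropy([2, 0, 0, -2] * 4))
```

The two synthetic signals show the extremes: the more evenly the energy is spread over resolution levels, the higher the wavelet entropy.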

EMD approximate entropy

Approximate entropy (ApEn) is a ‘regularity statistic’ that measures the randomness of the fluctuations in a given data set. Generally, one may assume that the existence of repeated fluctuation patterns makes a data set less complex than one without many repetitive patterns. ApEn reflects the likelihood that similar patterns of observations are not followed by further similar observations. A data set with many recurring motifs/patterns has a notably lower ApEn, whereas a more complex, i.e., less predictable, data set has a higher ApEn [54]. The ApEn estimation algorithm is described in many papers [33, 54–57]. Mathematically it can be calculated as

$$\mathrm{ApEn}(n, k, N) = \Phi^{n}(k) - \Phi^{n+1}(k) \tag{7}$$

where $\Phi^{n}(k)$ and $\Phi^{n+1}(k)$ are the pattern means of length $n$ and $n + 1$, respectively. ApEn is robust to noise and requires a relatively small amount of data. It detects changes in a series and compares the similarity of samples by pattern length ‘n’ and similarity coefficient ‘k’. The appropriate selection of the parameters ‘n’ (subseries length), ‘k’ (similarity tolerance/coefficient) and N (data length) is critical, and they are chosen as per research needs. Traditionally, for some clinical datasets, ‘n’ is set to 2 or 3, ‘k’ is set between 0.1 and 0.25 times the standard deviation of the time series (to eliminate the dependence of entropy on the signal’s amplitude), and N is equal to or greater than 1000. However, these values do not always produce optimal results for all types of data. The study in [33] presents a method that employs empirical mode decomposition (EMD) to decompose the EEG data and then calculates the ApEn of the decomposed data; it is therefore called E-ApEn. EMD is a time-frequency analysis method that decomposes nonlinear signals into oscillations at various frequencies.

The advantages of EMD-ApEn are that it can be computed for shorter datasets with high interference and that it effectively distinguishes various systems based on their level of periodicity and chaos [49, 54]. Its disadvantages are that it depends strongly on the length of the input signal [58] and that meaningful interpretation of the entropy is compromised by significant noise. Because it depends on length, it is a biased statistic [18, 59].
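The ApEn part of the method can be sketched in plain Python as follows. The EMD step is deliberately omitted, so this is an illustration of approximate entropy only and not of E-ApEn; the function name, the default tolerance of 0.2 times the standard deviation, and the use of the maximum absolute difference as the distance are assumptions of this example.

```python
import math
import random


def approximate_entropy(signal, n=2, k_fraction=0.2):
    """Approximate entropy with pattern length n and tolerance k = k_fraction * std."""
    N = len(signal)
    mean = sum(signal) / N
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / N)
    k = k_fraction * std

    def phi(length):
        num_templates = N - length + 1
        log_sum = 0.0
        for i in range(num_templates):
            # Self-matches (j == i) are counted, which keeps the logarithm
            # defined but introduces the bias mentioned in the text.
            matches = sum(
                1 for j in range(num_templates)
                if all(abs(signal[i + t] - signal[j + t]) <= k for t in range(length))
            )
            log_sum += math.log(matches / num_templates)
        return log_sum / num_templates

    return phi(n) - phi(n + 1)


# Synthetic illustration: a regular sine wave versus Gaussian noise.
rng = random.Random(0)
regular = [math.sin(2 * math.pi * i / 25) for i in range(300)]
noisy = [rng.gauss(0, 1) for _ in range(300)]
print(approximate_entropy(regular), approximate_entropy(noisy))
```

In an E-ApEn style pipeline, the same function would be applied to each component produced by the decomposition rather than to the raw signal.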

Kolmogorov Sinai entropy

The volatility of a data signal over time is assessed using the entropy defined by Kolmogorov and Sinai, known in short as KS entropy (KSE). It is determined by identifying points on the trajectory in phase space that are similar to each other and not correlated in time. The divergence rate of these point pairs yields the value of KSE [60], calculated as

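$$KSE = \lim_{r \to 0}\, \lim_{m \to \infty} \frac{1}{\tau} \ln \frac{C_{m}(r)}{C_{m+1}(r)} \tag{8}$$

where $C_{m}(r)$ is the correlation function, which gives the probability that two points in the $m$-dimensional phase space are closer to each other than $r$, and $\tau$ is the time delay between successive samples. A higher KSE value signifies higher unpredictability. However, KSE does not give accurate results for signals with even slight noise.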

Its advantage is that it differentiates effectively between periodic and chaotic systems [61, 62]. Its main limitation is that it decays towards zero as the signal length increases [63].

Permutation entropy

It is also possible to measure the complexity of brain activity using symbolic dynamics theory, in which a data set is mapped to a symbolic sequence from which the permutation entropy (PE) is computed. The highest value of PE is 1, signifying that the data series is completely unpredictable, whereas the lowest value of PE is 0, signifying that the data series is entirely predictable [4, 76]. At higher frequencies, permutation entropy increases with the irregularity of the data series, while permutations related to the reported oscillations are rare at lower frequencies.

Mathematically PE is described as

$$PE = -\frac{1}{\ln(n!)}\sum_{j=1}^{n!} p_{j} \ln p_{j} \tag{9}$$

where $p_{j}$ represents the relative frequency of each possible sequence pattern and $n$ is the permutation order, with $n \geq 2$ [63–65].

Permutation entropy is a complexity measure for chaotic and non-stationary time series in the presence of dynamical noise. The algorithm is reliable and effective and yields results quickly regardless of the noise level in the data [64, 65]. Thus, it can be used to process huge data sets without preprocessing the data or fine-tuning complexity parameters [13]. The advantages of this entropy are that it is simple, robust and computationally inexpensive. It is applicable to real and noisy data [66], does not require any model assumption and is suitable for the analysis of nonlinear processes [67]. Its main limitation is its inability to include all ordinal patterns or permutations of order ‘n’ when ‘n’ is assigned a larger value for a finite input time series [18, 68].
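The mapping from a time series to ordinal patterns can be sketched as below. The entropy is normalized by ln(n!) so that it lies between 0 and 1, matching the range stated above; the function name and the `delay` parameter are assumptions of this example.

```python
import math
import random
from collections import Counter


def permutation_entropy(signal, order=3, delay=1):
    """Normalized permutation entropy in [0, 1] for the given permutation order."""
    patterns = Counter()
    span = (order - 1) * delay
    for i in range(len(signal) - span):
        window = [signal[i + j * delay] for j in range(order)]
        # Ordinal pattern: the index order that sorts the window.
        pattern = tuple(sorted(range(order), key=window.__getitem__))
        patterns[pattern] += 1
    total = sum(patterns.values())
    entropy = -sum((c / total) * math.log(c / total) for c in patterns.values())
    return abs(entropy) / math.log(math.factorial(order))


# A monotonic ramp contains a single ordinal pattern (entropy 0), whereas
# uniform random samples use all patterns almost equally (entropy near 1).
rng = random.Random(1)
print(permutation_entropy(list(range(100))))
print(permutation_entropy([rng.random() for _ in range(2000)]))
```

Because only the rank order within each window matters, the result is unchanged by any monotonic rescaling of the amplitude, which is one reason the measure tolerates noisy recordings.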

Singular spectrum entropy

Entropy calculated from the components of singular spectrum analysis (SSA) is known as singular spectrum entropy. SSA is an important signal decomposition method based on principal component analysis, which can decompose the original time series into the sum of a small number of interpretable components. SSA usually involves two complementary stages: decomposition and reconstruction. The decomposition stage consists of two steps: embedding and singular value decomposition (SVD). The reconstruction stage also consists of two steps: grouping and diagonal averaging [69]. The singular spectrum entropy function represents the instability of the energy distribution and is a predictor of event-related desynchronization (ERD) and event-related synchronization (ERS) [70].
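One common way to write this entropy, given here as an assumed formulation since the section does not state a formula, normalizes the singular values $\sigma_{1}, \ldots, \sigma_{M}$ obtained from the SVD of the trajectory (embedding) matrix and applies Shannon's formula to them:

```latex
p_{i} = \frac{\sigma_{i}}{\sum_{j=1}^{M} \sigma_{j}}, \qquad
H_{\mathrm{SSE}} = -\sum_{i=1}^{M} p_{i} \ln p_{i}
```

Here $M$ (the number of singular values) and the symbol $H_{\mathrm{SSE}}$ are notation introduced for this illustration. Under this form, the entropy is low when a few components carry most of the energy and high when the energy is spread evenly across components.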

Multiscale fuzzy entropy

Measures of fuzziness are known as fuzzy information measures, and the measure of the quantity of fuzzy information gained from a fuzzy set or fuzzy system is known as fuzzy entropy. Unlike the classical entropies, fuzzy entropy does not need a probabilistic concept for its definition. This is because fuzzy entropy captures uncertainty due to vagueness and ambiguity, while Shannon entropy captures uncertainty due to randomness (probabilistic uncertainty).

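Multiscale fuzzy entropy extracts multiple scales of the original time series with a coarse-graining method and then calculates the entropy of each scale separately. Assuming an EEG signal with $N$ samples is reconstructed to obtain a set of $m$-dimensional vectors, with $r$, $n$ and $D_{ij}^{m}$ taken as the width, the gradient and the similarity degree of two vectors (fuzzy membership matrix), respectively, the final expression for fuzzy entropy is

$$\mathrm{FuzzyEn}(m, n, r) = \lim_{N \to \infty}\left[\ln \varphi^{m}(n, r) - \ln \varphi^{m+1}(n, r)\right] \tag{10}$$

For EEG signals, where the number of time series samples $N$ is limited, it can also be defined as $\mathrm{FuzzyEn}(m, n, r, N) = \ln \varphi^{m}(n, r) - \ln \varphi^{m+1}(n, r)$, where $\varphi^{m+1}$ is obtained in the same way from the set of $(m + 1)$-dimensional vectors and $\varphi^{m}$ is taken as:

$$\varphi^{m}(n, r) = \frac{1}{N-m}\sum_{i=1}^{N-m}\left[\frac{1}{N-m-1}\sum_{j=1,\, j \neq i}^{N-m} D_{ij}^{m}\right] \tag{11}$$

with fuzzy membership matrix $D_{ij}^{m} = \exp\left(-\left(d_{ij}^{m}\right)^{n}/r\right)$, where $d_{ij}^{m}$ is the distance between the $i$-th and $j$-th vectors.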

For the detailed mathematical formulation, one can refer to [71, 72]. The advantage of this entropy is that it is insensitive to noise and highly sensitive to changes in information content [32, 68, 71].
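The two steps, coarse-graining followed by fuzzy entropy at each scale, can be sketched as below. This is a simplified illustration rather than the exact procedure of [71, 72]: the width is taken as a fraction of the standard deviation of the signal, the baseline of each vector is removed before comparison, the distance is the maximum absolute difference, and all function and parameter names are hypothetical.

```python
import math
import random


def coarse_grain(signal, scale):
    """Average non-overlapping blocks of `scale` samples."""
    return [sum(signal[i:i + scale]) / scale
            for i in range(0, len(signal) - scale + 1, scale)]


def fuzzy_entropy(signal, m=2, r_fraction=0.2, n=2):
    """Fuzzy entropy with dimension m, gradient n and width r_fraction * std."""
    N = len(signal)
    mean = sum(signal) / N
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / N)
    width = r_fraction * std

    def phi(dim):
        # N - m vectors of length `dim`, each with its own mean removed.
        vectors = []
        for i in range(N - m):
            segment = signal[i:i + dim]
            base = sum(segment) / dim
            vectors.append([x - base for x in segment])
        count = len(vectors)
        total = 0.0
        for i in range(count):
            for j in range(count):
                if i != j:
                    d = max(abs(a - b) for a, b in zip(vectors[i], vectors[j]))
                    total += math.exp(-(d ** n) / width)   # fuzzy membership
        return total / (count * (count - 1))

    return math.log(phi(m)) - math.log(phi(m + 1))


def multiscale_fuzzy_entropy(signal, scales=(1, 2, 3), m=2, r_fraction=0.2, n=2):
    """Fuzzy entropy of the coarse-grained signal at each scale."""
    return [fuzzy_entropy(coarse_grain(signal, s), m, r_fraction, n) for s in scales]


# Synthetic illustration: Gaussian noise evaluated at three scales.
rng = random.Random(0)
noise = [rng.gauss(0, 1) for _ in range(240)]
print(multiscale_fuzzy_entropy(noise))
```

The exponential membership function replaces the hard threshold used in sample and approximate entropy, so small changes in the tolerance lead to gradual rather than abrupt changes in the estimate.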

Recurrence quantification analysis entropy

This is a measure of the average information contained in the distribution of line segments (line structures) in a recurrence plot. A recurrence plot is a visualization, or graph, of a square matrix built from the input time series. It is one of the state-space trajectory-based approaches of recurrence quantification analysis (RQA), which helps to compute the number and duration of the recurrences of a chaotic system [73]. RQA is computed to characterize a time-varying input signal in terms of its complexity and randomness. Recurrence entropy helps detect chaos–chaos transitions, unstable periodic orbits, and time delays, and extracts relevant information from short and nonlinear input signals [18, 30].
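A minimal sketch of this measure is shown below. It makes simplifying assumptions that are not taken from the cited works: the recurrence plot is built directly from the scalar samples without phase-space embedding, two samples recur when they differ by at most a threshold `eps`, and only diagonal lines of at least `min_len` points above the main diagonal are counted. All names are hypothetical.

```python
import math
from collections import Counter


def recurrence_matrix(signal, eps):
    """R[i][j] is True when samples i and j lie within eps of each other."""
    n = len(signal)
    return [[abs(signal[i] - signal[j]) <= eps for j in range(n)] for i in range(n)]


def diagonal_line_lengths(matrix, min_len=2):
    """Lengths of diagonal lines above the main diagonal (the plot is symmetric)."""
    n = len(matrix)
    lengths = []
    for offset in range(1, n):
        run = 0
        for i in range(n - offset):
            if matrix[i][i + offset]:
                run += 1
            else:
                if run >= min_len:
                    lengths.append(run)
                run = 0
        if run >= min_len:          # a line that reaches the border of the plot
            lengths.append(run)
    return lengths


def rqa_entropy(signal, eps, min_len=2):
    """Shannon entropy (nats) of the distribution of diagonal line lengths."""
    lengths = diagonal_line_lengths(recurrence_matrix(signal, eps), min_len)
    if not lengths:
        return 0.0
    counts = Counter(lengths)
    total = len(lengths)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


# A short alternating series has one diagonal line of length 4 and one of
# length 2, so the length distribution is uniform over two values.
print(rqa_entropy([0, 1, 0, 1, 0, 1], eps=0.1))
```

For real EEG data, the series would normally be embedded in a reconstructed phase space before the recurrence matrix is computed, with the threshold chosen relative to the spread of the data.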

Classification

After features that appear relevant to the emotional responses have been extracted, they are used to build a classification model that recognizes specific emotions from the proposed attributes. Different classifiers such as K-Nearest Neighbor (KNN) [74], Support Vector Machines (SVM) [3, 29, 32, 51, 52], integration of deep belief network and SVM (DBN-SVM) [75], channel frequency convolutional neural network (CFCNN) [76], multilayer perceptron, time-delay neural network, probabilistic neural network (PNN) [77], least-square SVM, etc., are used by various researchers for emotion recognition. It is difficult to compare the different classification algorithms, as different research works employ different datasets that differ significantly in the manner in which emotions are evoked. In general, the recognition rate is significantly greater when various physiological signals such as EEG, GSR, PPG, etc., are employed together than when a single physiological signal is used for emotion recognition [78].
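To make the classification step concrete, the following sketch applies a K-Nearest Neighbor vote to entropy feature vectors. The feature values and labels are made-up toy numbers used purely for illustration, not results from any dataset, and the function name is hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Label a query feature vector by majority vote among its k nearest neighbours."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy two-dimensional feature vectors (e.g. two entropy values per trial).
train_features = [[0.20, 0.30], [0.25, 0.35], [0.30, 0.20],
                  [1.10, 1.20], [1.00, 1.30], [1.20, 1.10]]
train_labels = ["negative", "negative", "negative",
                "positive", "positive", "positive"]

print(knn_predict(train_features, train_labels, [0.28, 0.30]))
print(knn_predict(train_features, train_labels, [1.05, 1.15]))
```

In practice the feature vectors would hold entropy values computed per channel or frequency band, and performance would be estimated with cross-validation rather than on a handful of points.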

Discussion

In the past, numerous approaches were explored to measure human emotion, and several studies on emotion classification using the EEG signal have been carried out in the last couple of years. Research on human emotion recognition began with a subject-dependent approach and has now moved towards a subject-independent approach.

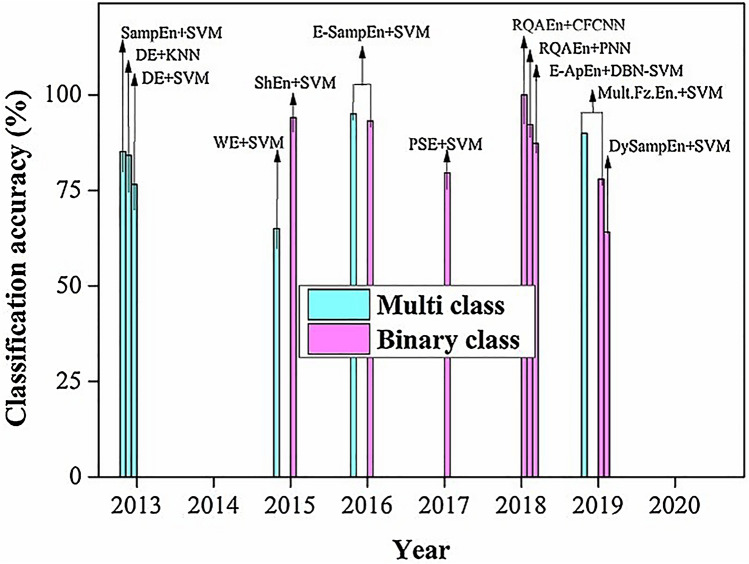

Vijayan et al. have formulated an emotion recognition strategy that takes Shannon’s entropy as an attribute. Elucidated algorithm with a multiscale SVM classifier gives 94.097% accuracy in classifying four emotions namely excitement, happiness, sadness, and hatred [3]. Duan et al. provided a feasible study on novel EEG feature differential entropy to describe the characteristic of the thoughts and emotions. In his study, he compared DE with the traditional energy spectrum feature of frequency domain. The result shows an accuracy of 84.22% with SVM classifier over 76.56% accuracy of ES [72–74]. Authors as in [33] have extracted features using E-ApEn and employed DBN-SVM classifier for four type emotion recognition named happy, calm, sad, fear. The study shows an accuracy of 87.32% with the DBN-SVM classifier. Lu et al. published an extensive study on a newer model of pattern learning positioned on dynamic entropy to empower user-independent emotion detection from the EEG signal. The result from the study reveals that the best average accuracy of 85.11% is attained for classifying negative and positive emotions [29]. Li et al. used 18 kinds of linear and nonlinear features, in which entropies namely approximate entropy, K.S entropy, permutation entropy, singular entropy, Shannon's entropy, and spectral entropy have been used in cross-subject emotion recognition. The result shows automatic feature selection gives the highest mean recognition accuracies of 59.06% on the DEAP dataset and 83.33% on the SEED dataset [4]. Zhang et al. developed a human emotion recognition algorithm by combining EMD and sample entropy with the SVM classifier. The experiment has been done to classify four categories of emotional states such as high arousal high valence (HAHV), low arousal high valence (LAHV), low arousal low valence (LALV), high arousal low valence (HALV) on DEAP Database. 
The result shows the average accuracy of the proposed method is 94.98% for a binary-class task and the best accuracy achieves 93.20% for multiclass tasks, respectively, [74]. Candra et al. employed the wavelet entropy feature to build an automated EEG classifier means for human emotion recognition. Study shows that accuracy can be improved further using this method for shorter time segments and the highest accuracy of 65% for both arousal and valence emotions were achieved [51]. Lotfalinezhad separated two and three levels of emotion in arousal and valence space using a multiscale fuzzy entropy feature. Work used the DEAP database and SVM classifier and achieved a classification accuracy of 90.81% and 90.53% in two-level emotion classification and 79.83% and 77.80% in three-level classifications in arousal and valence space, respectively, for subject-dependent systems [71]. Tong et al. computed power spectral entropy and correlation dimension feature along with SVM classifier to differentiate three categories of emotion namely positive neutral and negative. Result shows with the proposed method three kinds of emotions can be classified with relatively high accuracy of 79.58% [50]. Yong et al. presented a study on emotion recognition using recurrence quantification analysis entropy with channel frequency convolutional neural network (CFCNN) as a classifier. Result gives the best accuracy of 92.24 ± 2.11% for three types of emotion recognition and also shows its remarkable efficiency over traditional methods like PSD + SVM and DE + SVM [74]. Xiang et al. in his research applied the sample entropy feature along with the SVM classifier to distinguish positive and negative emotions from EEG and achieved an accuracy of 80.43% [77]. Goshvarpour et al. 
in their study of emotion recognition from EEG applied several measures of recurrence quantification analysis (RQA), including recurrence rate, determinism, average length of diagonal lines, entropy, laminarity, and trapping time, along with neural networks and probabilistic neural networks (PNN). The results suggest that RQA gives the best accuracy of 99.96% for three-level emotion classification with the PNN classifier, and so it can be used as an appropriate tool for emotion recognition [76]. Figure 3 shows the classification accuracy of the approaches mentioned above against their publication year. Table 1 summarizes the investigations carried out on EEG signals and entropy feature extraction in human emotion recognition.
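To make two of the entropy features named in this section concrete, the following is a minimal sketch of Shannon's entropy (computed on an amplitude histogram) and sample entropy for a one-dimensional signal. It is not the implementation used in any of the cited studies. The function names, the number of histogram bins, the embedding dimension `m = 2`, and the tolerance `r = 0.2 × standard deviation` are illustrative assumptions, and the test signals are synthetic rather than EEG recordings.

```python
import math
import random
from collections import Counter


def shannon_entropy(signal, bins=16):
    """Shannon entropy (bits) of the amplitude histogram of a 1-D signal."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return 0.0  # a constant signal carries no amplitude uncertainty
    width = (hi - lo) / bins
    # Map each sample to a bin index; the maximum value goes into the last bin.
    idx = [min(int((x - lo) / width), bins - 1) for x in signal]
    n = len(signal)
    return -sum((c / n) * math.log2(c / n) for c in Counter(idx).values())


def sample_entropy(signal, m=2, r_factor=0.2):
    """Sample entropy SampEn(m, r) with r = r_factor * standard deviation."""
    n = len(signal)
    mean = sum(signal) / n
    r = r_factor * math.sqrt(sum((x - mean) ** 2 for x in signal) / n)

    def count_pairs(length):
        # The same n - m starting points are used for both template lengths.
        templates = [signal[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):  # self-matches excluded
                # Chebyshev distance between the two templates.
                if max(abs(u - v) for u, v in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b = count_pairs(m)      # template matches of length m
    a = count_pairs(m + 1)  # template matches of length m + 1
    if a == 0 or b == 0:
        return float("inf")  # undefined when no matches are found
    return -math.log(a / b)


if __name__ == "__main__":
    random.seed(0)
    # Synthetic stand-ins for a regular and an irregular signal (not EEG data).
    sine = [math.sin(2 * math.pi * 5 * t / 300) for t in range(300)]
    noise = [random.gauss(0.0, 1.0) for _ in range(300)]
    print("SampEn, sine :", sample_entropy(sine))
    print("SampEn, noise:", sample_entropy(noise))
```

A regular signal such as the sine wave yields a lower sample entropy than white noise, which is the property that makes these measures candidates for describing signal complexity. In a classification pipeline of the kind reviewed above, such values would be computed per channel and passed to a classifier such as an SVM.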

Fig. 3. Classification accuracy of different methods with their publication year

Table 1. Summary of studies conducted on EEG-based emotion recognition using entropy as a feature

| Reference | No. of subjects | Emotions | Features | Database | Classifier | Accuracy |
|---|---|---|---|---|---|---|
| [30] | 3 men, 3 women | Positive, negative | ES, DE, DASM, RASM | Private | SVM, KNN | 76.56%, 84.22% |
| [77] | – | Positive, negative | Sample entropy | – | SVM weight classifier | 85.11% |
| [51] | 16 men, 16 women | Arousal, valence | Wavelet entropy | DEAP | SVM | 65% |
| [29] | 7 men, 8 women | Positive, neutral, negative | Dynamic sample entropy | SEED | SVM | 64.15% |
| [50] | 6 men, 7 women | Positive, neutral, negative | Power spectral entropy, correlation dimension | Private | SVM | 79.58%, 82.58% |
| [76] | 5 men | Happy, neutral, disgust | RQA; Shannon’s entropy and 5 others | eNTERFACE06_EMOBRAIN | Multilayer perceptron | 36% |
| | | | | | Time-delay neural network | 36% |
| | | | | | Probabilistic neural network | 99.96% |
| [75] | 5 | Happy, sadness, fear | RQA; entropy and 5 others | Private | CFCNN | 92.24% |
| [3] | 16 men, 16 women | Excitement, happiness, sadness, hatred | Shannon’s entropy and 3 others | DEAP | Multiclass SVM | 94.097% |
| [33] | 5 men, 5 women | Happy, calm, sad, fear | EMD approximate entropy | Private | Integration of deep belief network and SVM (DBN-SVM) | 87.32% |
| [4] | 16 men, 16 women | Excitement, happy, sadness, hatred | Approximate entropy, K-S entropy, permutation entropy, singular entropy, Shannon’s entropy | DEAP | SVM | 59.06% |
| | 7 men, 8 women | Positive, neutral, negative | Spectral entropy and 12 other nonlinear entropy methods | SEED | SVM | 83.33% |
| [71] | 16 men, 16 women | 2 and 3 levels of labeling in arousal and valence space | Multiscale fuzzy entropy | DEAP | SVM | 2-class: 90.81% (A), 90.53% (V); 3-class: 79.83% (A), 77.80% (V) |
| [74] | 16 men, 16 women | HAHV, HALV, LAHV, LALV | EMD, sample entropy | DEAP | SVM | 94.98% (binary class), 93.20% (multiclass) |

Conclusions

Emotions reflect one’s mental state and can be analyzed through physiological data of the brain such as the EEG. EEG signals are dynamic and show high inter- and intra-observer variability, and they have proved useful in automated human emotion recognition. Features like entropy can be used to describe the chaotic nature of EEG signals, as entropy measures the degree of variability and complexity of a system. In this paper, we have presented a comprehensive review of the use of different entropy features for recognizing human emotions from EEG signals. It should be acknowledged that real-time emotion identification is still at an early stage of development. Because emotions are unique to each person, providing a standardized method for categorizing the various primary emotions remains a challenge. The majority of the frameworks created to date are subject dependent, and subject-independent methods need more precision. Emotion-related changes in the EEG signal can be observed only for a short period of about 3–15 s. Therefore, extracting the data from the subject at the moment of emotional elicitation will produce better results, which requires a window-based framework in EEG processing for emotion recognition. Future work can extend to optimizing the algorithms reviewed above and to developing a unified algorithm. Larger and well-balanced data sets are needed to avoid bias and over-fitting in the classification task. Human emotions are found to be predominantly associated with the frontal and temporal lobes, and the gamma band is well suited for identifying emotions. Evidently, not all electrode locations are useful for identifying emotion. Further studies are needed on automatically selecting suitable and optimal EEG electrode placements to enhance efficiency and reduce confusion with muscle signals. 
In addition, issues such as choosing more efficient EEG emotional features and reducing interference from the external environment need to be studied. More robust and advanced feature extraction techniques, such as those based on machine learning, should be considered. Emotional experience can differ between males and females, so developing a cross-gender EEG emotion detection framework is an important problem to investigate if emotion identification is to generalize. Some research shows that correlation analysis is not adequate to predict the purpose of certain functionalities or to determine the regions of relevance. Future research is expected to investigate the problem from multiple points of view using different methods. Using the smallest possible number of EEG channels can improve user comfort and cut the related computation cost. Another potential line of research is to develop a framework based on these features for identifying emotion-related mental disorders such as depression.
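The window-based framework mentioned above can be sketched as follows: a multichannel recording is cut into short overlapping windows, and one entropy value per channel is computed in each window. The sketch uses differential entropy under a Gaussian assumption, h = ½ ln(2πeσ²), as the example feature. The sampling rate of 128 Hz, the 4 s window, the 2 s step, and all function names are illustrative assumptions, not parameters taken from the reviewed studies.

```python
import math
import random


def sliding_windows(eeg, fs, win_sec, step_sec):
    """Yield (start_sample, segment) pairs from a list of per-channel sample lists."""
    win = int(round(win_sec * fs))
    step = int(round(step_sec * fs))
    n_samples = len(eeg[0])
    for start in range(0, n_samples - win + 1, step):
        yield start, [channel[start:start + win] for channel in eeg]


def differential_entropy(samples):
    """Differential entropy (nats) of one channel, assuming Gaussian samples."""
    mean = sum(samples) / len(samples)
    var = sum((x - mean) ** 2 for x in samples) / len(samples)
    return 0.5 * math.log(2 * math.pi * math.e * var)


def windowed_de_features(eeg, fs=128, win_sec=4.0, step_sec=2.0):
    """Return one feature vector (one DE value per channel) for each window."""
    return [[differential_entropy(ch) for ch in segment]
            for _, segment in sliding_windows(eeg, fs, win_sec, step_sec)]


if __name__ == "__main__":
    random.seed(1)
    # Synthetic 3-channel, 10 s recording standing in for real EEG data.
    eeg = [[random.gauss(0.0, 1.0) for _ in range(1280)] for _ in range(3)]
    features = windowed_de_features(eeg)
    print(len(features), "windows x", len(features[0]), "channels")
```

Each row of the result can serve as one training sample for a classifier. Shorter windows give more samples and finer time resolution at the cost of noisier entropy estimates, so the window length would need to be tuned for a given data set.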

Further studies can also examine the influence of entropy features not yet used for emotion recognition. There is also still a need to reduce the computational cost of the entropies in use. EEG has a very high temporal resolution but a relatively low spatial resolution, so more precise classification may be gained by integrating EEG with signals of higher spatial resolution such as NIRS and fMRI.

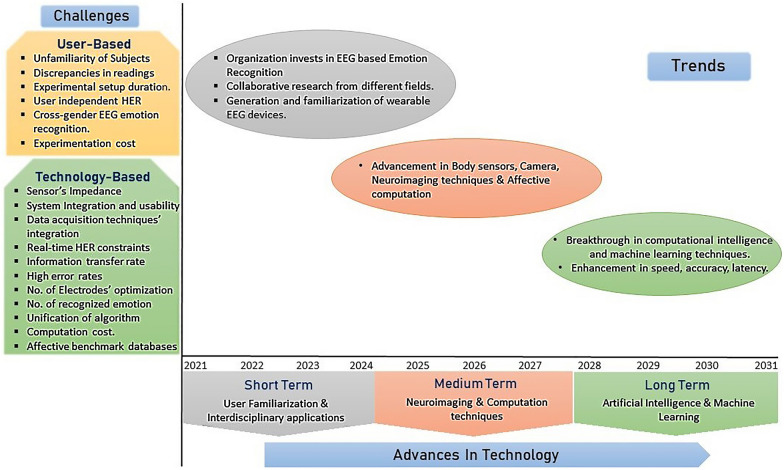

There are in total eight valence levels of emotion. Most research to date performs only coarse emotion classification, i.e., it classifies at most three or four types of emotion. Future research should focus on classifying more detailed levels, or all eight valence levels. Figure 4 summarizes the paper, presenting directions for future study, open issues, and developments in EEG-based human emotion recognition from our viewpoint. It illustrates several issues associated with emotion recognition in the BCI framework, which are broadly divided into two domains: technology based and user based. It also shows how advances in technology and trends would affect research on EEG-based human emotion recognition. Emotion identification in the field of BCI continues to be challenging and needs more research and experimentation. Further research on designing a reliable emotion classification system is still needed to achieve seamless communication between humans and machines.

Fig. 4. Problems identified and possible advancement in the EEG-based emotion recognition research

Acknowledgements

The authors acknowledge Pondicherry University for financial support through University Fellowship.

Abbreviations

- EEG: Electroencephalography
- BCI: Brain–computer interface
- IAPS: International affective picture system
- DEAP: Dataset for emotion analysis using physiological signals
- SEED: SJTU Emotion EEG Dataset
- SampEn: Sample entropy
- DySampEn: Dynamic sample entropy
- DE: Differential entropy
- PSE: Power spectral entropy
- WE: Wavelet entropy
- EMD ApEn: Empirical Mode Decomposition Approximate Entropy
- KSE: Kolmogorov–Sinai entropy
- PE: Permutation entropy
- RQAEn: Recurrence quantification analysis entropy
- KNN: K-Nearest Neighbor
- SVM: Support Vector Machines
- DBN-SVM: Deep Belief Network-Support Vector Machines
- CFCNN: Channel frequency convolutional neural network
- PNN: Probabilistic neural network
- HAHV: High arousal high valence
- HALV: High arousal low valence
- LAHV: Low arousal high valence
- LALV: Low arousal low valence
- fMRI: Functional Magnetic Resonance Imaging
- NIRS: Near infrared spectroscopy

Authors’ contributions

PP: conceptualization, writing—original draft. RR: writing—reviewing and editing. RNA: writing—reviewing, editing and supervision. All authors read and approved the final manuscript.

Funding

Not applicable.

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent for publication

We consent for the publication of this article.

Competing interests

The authors declare that they have no known competing financial interests or personal relationship that could have appeared to influence the work reported in this paper.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Pragati Patel, Email: Pragati96.res@pondiuni.edu.in.

Raghunandan R, Email: Raghunandan.officialuse@gmail.com.

Ramesh Naidu Annavarapu, Email: rameshnaidu.phy@pondiuni.edu.in.

References

- 1.Wortham J (2013) If our gadgets could measure our emotions. New York Times

- 2.Scherer KR. What are emotions? And how can they be measured? Soc Sci Inf. 2005;44:695–729. doi: 10.1177/0539018405058216.

- 3.Vijayan AE, Sen D, Sudheer AP (2015) EEG-based emotion recognition using statistical measures and auto-regressive modeling. In: 2015 IEEE international conference on computational intelligence & communication technology. pp 587–591

- 4.Li X, Song D, Zhang P, et al. Exploring EEG features in cross-subject emotion recognition. Front Neurosci. 2018;12:162. doi: 10.3389/fnins.2018.00162.

- 5.Bos DO, et al. EEG-based emotion recognition. Influ Vis Audit Stimul. 2006;56:1–17.

- 6.Picard RW, Vyzas E, Healey J. Toward machine emotional intelligence: analysis of affective physiological state. IEEE Trans Pattern Anal Mach Intell. 2001;23:1175–1191. doi: 10.1109/34.954607.

- 7.Jerritta S, Murugappan M, Nagarajan R, Wan K (2011) Physiological signals based human emotion recognition: a review. In: 2011 IEEE 7th international colloquium on signal processing and its applications. pp 410–415

- 8.Liu Y, Sourina O, Nguyen MK (2010) Real-time EEG-based human emotion recognition and visualization. In: 2010 international conference on cyberworlds. pp 262–269

- 9.Anderson K, McOwan PW. A real-time automated system for the recognition of human facial expressions. IEEE Trans Syst Man Cybern B. 2006;36:96–105. doi: 10.1109/TSMCB.2005.854502.

- 10.Ang J, Dhillon R, Krupski A et al (2002) Prosody-based automatic detection of annoyance and frustration in human–computer dialog. In: Seventh international conference on spoken language processing. pp 2037–2040

- 11.Herwig U, Satrapi P, Schönfeldt-Lecuona C. Using the international 10–20 EEG system for positioning of transcranial magnetic stimulation. Brain Topogr. 2003;16:95–99. doi: 10.1023/B:BRAT.0000006333.93597.9d.

- 12.Pirbhulal S, Zhang H, Wu W, et al. Heartbeats based biometric random binary sequences generation to secure wireless body sensor networks. IEEE Trans Biomed Eng. 2018;65:2751–2759. doi: 10.1109/TBME.2018.2815155.

- 13.Pirbhulal S, Zhang H, Mukhopadhyay SC, et al. An efficient biometric-based algorithm using heart rate variability for securing body sensor networks. Sensors. 2015;15:15067–15089. doi: 10.3390/s150715067.

- 14.Li M, Lu B-L (2009) Emotion classification based on gamma-band EEG. In: 2009 annual international conference of the IEEE engineering in medicine and biology society. pp 1223–1226

- 15.Wu W, Pirbhulal S, Sangaiah AK, et al. Optimization of signal quality over comfortability of textile electrodes for ECG monitoring in fog computing based medical applications. Future Gener Comput Syst. 2018;86:515–526. doi: 10.1016/j.future.2018.04.024.

- 16.Pirbhulal S, Zhang H, E Alahi ME, et al. A novel secure IoT-based smart home automation system using a wireless sensor network. Sensors. 2017;17:69. doi: 10.3390/s17010069.

- 17.Sodhro AH, Pirbhulal S, Qaraqe M, et al. Power control algorithms for media transmission in remote healthcare systems. IEEE Access. 2018;6:42384–42393. doi: 10.1109/ACCESS.2018.2859205.

- 18.Acharya UR, Sree SV, Swapna G, et al. Automated EEG analysis of epilepsy: a review. Knowl Based Syst. 2013;45:147–165. doi: 10.1016/j.knosys.2013.02.014.

- 19.Hosseini SA, Naghibi-Sistani MB. Emotion recognition method using entropy analysis of EEG signals. Int J Image Graph Signal Process. 2011;3:30. doi: 10.5815/ijigsp.2011.05.05.

- 20.Oberman LM, McCleery JP, Ramachandran VS, Pineda JA. EEG evidence for mirror neuron activity during the observation of human and robot actions: toward an analysis of the human qualities of interactive robots. Neurocomputing. 2007;70:2194–2203. doi: 10.1016/j.neucom.2006.02.024.

- 21.Wang Q, Sourina O, Nguyen MK (2010) EEG-based "serious" games design for medical applications. In: 2010 international conference on cyberworlds. pp 270–276

- 22.Tzallas AT, Tsipouras MG, Fotiadis DI. Epileptic seizure detection in EEGs using time–frequency analysis. IEEE Trans Inf Technol Biomed. 2009;13:703–710. doi: 10.1109/TITB.2009.2017939.

- 23.Thatcher RW, Budzynski T, Budzynski H et al (2009) EEG evaluation of traumatic brain injury and EEG biofeedback treatment. In: Introduction to quantitative EEG and neurofeedback: advanced theory and applications. pp 269–294

- 24.Pandiyan PM, Yaacob S, et al. Mental stress level classification using eigenvector features and principal component analysis. Commun Inf Sci Manag Eng. 2013;3:254.

- 25.Aftanas LI, Reva NV, Varlamov AA, et al. Analysis of evoked EEG synchronization and desynchronization in conditions of emotional activation in humans: temporal and topographic characteristics. Neurosci Behav Physiol. 2004;34:859–867. doi: 10.1023/B:NEAB.0000038139.39812.eb.

- 26.Hadjidimitriou SK, Hadjileontiadis LJ. Toward an EEG-based recognition of music liking using time–frequency analysis. IEEE Trans Biomed Eng. 2012;59:3498–3510. doi: 10.1109/TBME.2012.2217495.

- 27.Jenke R, Peer A, Buss M. Feature extraction and selection for emotion recognition from EEG. IEEE Trans Affect Comput. 2014;5:327–339. doi: 10.1109/TAFFC.2014.2339834.

- 28.Lay-Ekuakille A, Vergallo P, Griffo G, et al. Entropy index in quantitative EEG measurement for diagnosis accuracy. IEEE Trans Instrum Meas. 2013;63:1440–1450. doi: 10.1109/TIM.2013.2287803.

- 29.Lu Y, Wang M, Wu W, et al. Dynamic entropy-based pattern learning to identify emotions from EEG signals across individuals. Measurement. 2020;150:107003. doi: 10.1016/j.measurement.2019.107003.

- 30.Duan R-N, Zhu J-Y, Lu B-L (2013) Differential entropy feature for EEG-based emotion classification. In: 2013 6th international IEEE/EMBS conference on neural engineering (NER). pp 81–84

- 31.Ni L, Cao J, Wang R. Analyzing EEG of quasi-brain-death based on dynamic sample entropy measures. Comput Math Methods Med. 2013. doi: 10.1155/2013/618743.

- 32.Xiang J, Li C, Li H, et al. The detection of epileptic seizure signals based on fuzzy entropy. J Neurosci Methods. 2015;243:18–25. doi: 10.1016/j.jneumeth.2015.01.015.

- 33.Chen T, Ju S, Yuan X, et al. Emotion recognition using empirical mode decomposition and approximation entropy. Comput Electr Eng. 2018;72:383–392. doi: 10.1016/j.compeleceng.2018.09.022.

- 34.Plutchik R, Kellerman H. Theories of emotion. Cambridge: Academic Press; 2013.

- 35.Strongman KT. The psychology of emotion: theories of emotion in perspective. New York: Wiley; 1996.

- 36.Emotion wikipedia page. Wikipedia

- 37.Ekman P, Friesen WV, O’sullivan M, et al. Universals and cultural differences in the judgments of facial expressions of emotion. J Pers Soc Psychol. 1987;53:712. doi: 10.1037/0022-3514.53.4.712.

- 38.Lang PJ. The emotion probe: studies of motivation and attention. Am Psychol. 1995;50:372. doi: 10.1037/0003-066X.50.5.372.

- 39.Peter C, Herbon A. Emotion representation and physiology assignments in digital systems. Interact Comput. 2006;18:139–170. doi: 10.1016/j.intcom.2005.10.006.

- 40.Kim J, André E. Emotion recognition based on physiological changes in music listening. IEEE Trans Pattern Anal Mach Intell. 2008;30:2067–2083. doi: 10.1109/TPAMI.2008.26.

- 41.Rani P, Liu C, Sarkar N, Vanman E. An empirical study of machine learning techniques for affect recognition in human–robot interaction. Pattern Anal Appl. 2006;9:58–69. doi: 10.1007/s10044-006-0025-y.

- 42.Kim KH, Bang SW, Kim SR. Emotion recognition system using short-term monitoring of physiological signals. Med Biol Eng Comput. 2004;42:419–427. doi: 10.1007/BF02344719.

- 43.Rigas G, Katsis CD, Ganiatsas G, Fotiadis DI. A user independent, biosignal based, emotion recognition method. In: Conati C, McCoy K, Paliouras G, editors. User modeling 2007. Berlin: Springer; 2007. pp. 314–318.

- 44.Haag A, Goronzy S, Schaich P, Williams J. Emotion recognition using bio-sensors: first steps towards an automatic system. In: André E, Dybkjær L, Minker W, Heisterkamp P, editors. Affective dialogue systems. Berlin: Springer; 2004. pp. 36–48.

- 45.Gross JJ, Levenson RW. Emotion elicitation using films. Cogn Emot. 1995;9:87–108. doi: 10.1080/02699939508408966.

- 46.Koelstra S, Muhl C, Soleymani M, et al. Deap: a database for emotion analysis; using physiological signals. IEEE Trans Affect Comput. 2011;3:18–31. doi: 10.1109/T-AFFC.2011.15.

- 47.Zheng W-L, Liu W, Lu Y, et al. Emotionmeter: a multimodal framework for recognizing human emotions. IEEE Trans Cybern. 2018;49:1110–1122. doi: 10.1109/TCYB.2018.2797176.

- 48.Lemons DS. A student’s guide to entropy. Cambridge: Cambridge University Press; 2013.

- 49.Richman JS, Moorman JR. Physiological time-series analysis using approximate entropy and sample entropy. Am J Physiol Circ Physiol. 2000;278:H2039–H2049. doi: 10.1152/ajpheart.2000.278.6.H2039.

- 50.Tong J, Liu S, Ke Y et al (2017) EEG-based emotion recognition using nonlinear feature. In: 2017 IEEE 8th international conference on awareness science and technology (iCAST). pp 55–59

- 51.Candra H, Yuwono M, Chai R et al (2015) Investigation of window size in classification of EEG-emotion signal with wavelet entropy and support vector machine. In: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC). pp 7250–7253

- 52.Rosso OA, Blanco S, Yordanova J, et al. Wavelet entropy: a new tool for analysis of short duration brain electrical signals. J Neurosci Methods. 2001;105:65–75. doi: 10.1016/S0165-0270(00)00356-3.

- 53.Ho KKL, Moody GB, Peng C-K, et al. Predicting survival in heart failure case and control subjects by use of fully automated methods for deriving nonlinear and conventional indices of heart rate dynamics. Circulation. 1997;96:842–848. doi: 10.1161/01.CIR.96.3.842.

- 54.Pincus SM. Approximate entropy as a measure of system complexity. Proc Natl Acad Sci. 1991;88:2297–2301. doi: 10.1073/pnas.88.6.2297.

- 55.Voss A, Baier V, Schulz S, Bar KJ. Linear and nonlinear methods for analyses of cardiovascular variability in bipolar disorders. Bipolar Disord. 2006;8:441–452. doi: 10.1111/j.1399-5618.2006.00364.x.

- 56.Ryan SM, Goldberger AL, Pincus SM, et al. Gender-and age-related differences in heart rate dynamics: are women more complex than men? J Am Coll Cardiol. 1994;24:1700–1707. doi: 10.1016/0735-1097(94)90177-5.

- 57.Seely AJE, Macklem PT. Complex systems and the technology of variability analysis. Crit Care. 2004;8:R367. doi: 10.1186/cc2948.

- 58.Pincus S. Approximate entropy (ApEn) as a complexity measure. Chaos Interdiscip J Nonlinear Sci. 1995;5:110–117. doi: 10.1063/1.166092.

- 59.Farmer JD. Information dimension and the probabilistic structure of chaos. Zeitschrift für Naturforsch A. 1982;37:1304–1326. doi: 10.1515/zna-1982-1117.

- 60.Falniowski F. On the connections of generalized entropies with Shannon and Kolmogorov-Sinai entropies. Entropy. 2014;16:3732–3753. doi: 10.3390/e16073732.

- 61.Misiurewicz M. A short proof of the variational principle for a ℤ+ N action on a compact space. Asterisque. 1976;40:147–157.

- 62.Cover TM, Thomas JA. Elements of information theory. New York: Wiley; 1991. pp. 69–73.

- 63.Li X, Ouyang G, Richards DA. Predictability analysis of absence seizures with permutation entropy. Epilepsy Res. 2007;77:70–74. doi: 10.1016/j.eplepsyres.2007.08.002.

- 64.Bandt C, Pompe B. Permutation entropy: a natural complexity measure for time series. Phys Rev Lett. 2002;88:174102. doi: 10.1103/PhysRevLett.88.174102.

- 65.Riedl M, Müller A, Wessel N. Practical considerations of permutation entropy. Eur Phys J Spec Top. 2013;222:249–262. doi: 10.1140/epjst/e2013-01862-7.

- 66.Zanin M, Zunino L, Rosso OA, Papo D. Permutation entropy and its main biomedical and econophysics applications: a review. Entropy. 2012;14:1553–1577. doi: 10.3390/e14081553.

- 67.Fadlallah B, Chen B, Keil A, Principe J. Weighted-permutation entropy: a complexity measure for time series incorporating amplitude information. Phys Rev E. 2013;87:22911. doi: 10.1103/PhysRevE.87.022911.

- 68.Kosko B. Fuzzy entropy and conditioning. Inf Sci. 1986;40:165–174. doi: 10.1016/0020-0255(86)90006-X.

- 69.Lu Y, Wang M, Wu W, et al. Entropy-based pattern learning based on singular spectrum analysis components for assessment of physiological signals. Complexity. 2020. doi: 10.1155/2020/4625218.

- 70.Zhang XP, Fan YL, Yang Y. On the classification of consciousness tasks based on the EEG singular spectrum entropy. Comput Eng Sci. 2009;31:117–120.

- 71.Lotfalinezhad H, Maleki A. Application of multiscale fuzzy entropy features for multilevel subject-dependent emotion recognition. Turkish J Electr Eng Comput Sci. 2019;27:4070–4081. doi: 10.3906/elk-1805-126.

- 72.Borowska M. Entropy-based algorithms in the analysis of biomedical signals. Stud Logic Gramm Rhetor. 2015;43:21–32. doi: 10.1515/slgr-2015-0039.

- 73.Marwan N, Wessel N, Meyerfeldt U, et al. Recurrence-plot-based measures of complexity and their application to heart-rate-variability data. Phys Rev E. 2002;66:26702. doi: 10.1103/PhysRevE.66.026702.

- 74.Zhang Y, Ji X, Zhang S. An approach to EEG-based emotion recognition using combined feature extraction method. Neurosci Lett. 2016;633:152–157. doi: 10.1016/j.neulet.2016.09.037.

- 75.Yang Y-X, Gao Z-K, Wang X-M, et al. A recurrence quantification analysis-based channel-frequency convolutional neural network for emotion recognition from EEG. Chaos Interdiscip J Nonlinear Sci. 2018;28:85724. doi: 10.1063/1.5023857.

- 76.Goshvarpour A, Abbasi A, Goshvarpour A. Recurrence quantification analysis and neural networks for emotional EEG classification. Appl Med Inform. 2016;38:13–24.

- 77.Jie X, Cao R, Li L. Emotion recognition based on the sample entropy of EEG. Biomed Mater Eng. 2014;24:1185–1192. doi: 10.3233/BME-130919.

- 78.Raheel A, Majid M, Alnowami M, Anwar SM. Physiological sensors based emotion recognition while experiencing tactile enhanced multimedia. Sensors. 2020;20:1–19. doi: 10.3390/s20144037.
