Abstract

Background

Actionable information about the readiness of health facilities is needed to inform quality improvement efforts in maternity care, but there is no consensus on the best approach to measure readiness. Many countries use the WHO’s Service Availability and Readiness Assessment (SARA) or the Demographic and Health Survey (DHS) Programme’s Service Provision Assessment to measure facility readiness. This study compares measures of childbirth service readiness based on SARA and DHS guidance to an index based on WHO’s quality of maternal and newborn care standards.

Methods

We used cross-sectional data from Performance Monitoring for Action Ethiopia’s 2019 survey of 406 health facilities providing childbirth services. We calculated childbirth service readiness scores using items based on SARA, DHS and WHO standards. For each, we used three aggregation methods for generating indices: simple addition, domain-weighted addition and principal components analysis. We compared central tendency, spread and item variation between the readiness indices; concordance between health facility scores and rankings; and correlations between readiness scores and delivery volume.

Results

Indices showed moderate agreement with one another, and all had a small but significant positive correlation with monthly delivery volume. Ties were more frequent for indices with fewer items. More than two-thirds of items in the relatively shorter SARA and DHS indices were widely (>90%) available in hospitals, and half of the SARA items were widely (>90%) available in health centres/clinics. Items based on the WHO standards showed greater variation and captured unique aspects of readiness (eg, quality improvement processes, actionable information systems) not included in either the SARA or DHS indices.

Conclusion

SARA and DHS indices rely on a small set of widely available items to assess facility readiness to provide childbirth care. Expanded selection of items based on the WHO standards can better differentiate between levels of service readiness.

Keywords: health services research, health systems, obstetrics, maternal health, child health

Key questions

What is already known?

Many health facilities in low-income and middle-income countries operate under significant constraints, such as inadequate staffing, medicine stock-outs, equipment shortages and poorly functioning information and referral systems, which limit their capacity to provide safe and effective childbirth care.

Information about the readiness of health facilities to provide childbirth care is needed to guide quality improvement efforts, but there is no consensus on the best approach to measure readiness.

What are the new findings?

This study compares three facility survey assessment tools and statistical methods for constructing indices to measure facility childbirth service readiness in Ethiopia and finds that indices show moderate agreement with one another.

More than two-thirds of items in the relatively shorter tools were widely (>90%) available in hospitals in Ethiopia, limiting the ability of the tools to discriminate between readiness levels.

Items based on the WHO quality of care standards showed greater variation and captured unique aspects of readiness not included in other indices.

What do the new findings imply?

It is feasible to create a service readiness index without the use of complex statistical methods; additive methods produce indices that are easy to generate, interpret and deconstruct to identify bottlenecks to health system performance.

Item selection should favour inclusion of items with a strong theoretical basis and the ability to discriminate between levels of service readiness.

Introduction

Building on momentum to end preventable maternal and newborn deaths, country and global stakeholders have committed to meet the Sustainable Development Goals (SDGs) of reducing the global maternal mortality ratio to less than 70 deaths per 100 000 live births and neonatal mortality rates to 12 or fewer deaths per 1000 live births in all countries by 2030.1 Achievement of these targets will depend on improving coverage of life-saving interventions during the intrapartum period and the first 24 hours following birth, when an estimated 46% of maternal deaths and 40% of neonatal deaths and stillbirths occur.2

Improving skilled birth attendance, primarily through increasing the proportion of births at health facilities, is a key intervention for achieving the SDG-3 goals. A recent analysis of household survey and routine health information system data shows an increase in the global proportion of deliveries that occur in a health facility from 65% in 2006–2012 to 76% in 2014–2019, with the largest increases observed in sub-Saharan Africa and South Asia.3 However, increased use of facility childbirth services has not consistently translated into the expected gains in maternal and neonatal survival. Research offers mixed evidence of the relationship between use of facility childbirth services and maternal and newborn health outcomes in low-income and middle-income countries (LMICs).4–12 For maternal health, these inconsistent findings may, in part, be explained by differences in the risk profile of patients accessing services,4 5 9 10 with high-risk patients being more likely to seek care at a health facility, thereby biasing the results towards the appearance of limited or no effectiveness. The mixed evidence also points to significant variations in the quality of care provided across facilities and contexts. Secondary analysis of two large population-based cluster-randomised controlled trials in Ghana found no evidence of an association between facility birth and mortality outcomes, but the overall result masked differences in quality of care across facilities; proximity to facilities offering high-quality care was associated with lower risk of intrapartum stillbirth and composite mortality outcomes.7 Indeed, the quality of childbirth care is now receiving heightened attention globally.13

Ensuring facility readiness is an essential first step towards improving the quality of care in LMICs. Readiness, as conceptualised by WHO, is the capacity of a facility to provide services to a defined minimum standard, including the presence of trained staff, commodities and equipment; appropriate systems to support quality and safety; and provider knowledge.14 Kanyangarara et al’s analysis of survey data from health facilities in 17 LMICs found wide variation in the availability of such essential resources. For example, the availability of magnesium sulphate—a drug used to prevent or treat seizures for patients with (pre-)eclampsia—ranged from 10% to 97% across countries.15 Moreover, inadequate provider knowledge and poor adherence to clinical practice standards exacerbate deficiencies in the provision of quality care.16–20 As a result, large gaps exist between ‘service contact’ (ie, individuals who use childbirth services) and ‘effective coverage’ (ie, individuals who experience a positive health gain from using childbirth services) in maternal and newborn health in LMICs.21–23

For childbirth services, several indices have been proposed to measure service readiness. The WHO’s health facility assessment tool, Service Availability and Readiness Assessment (SARA), proposes indices to measure basic and comprehensive obstetric care readiness.24 25 The Demographic and Health Surveys (DHS), the major source of data on population, health and nutrition in LMICs, have also collected facility level data in selected countries using the Service Provision Assessment (SPA) tool since 1999. The SPA surveys cover facility readiness in terms of infrastructure, resources and management systems for antenatal care, delivery services, newborn care and emergency obstetric care. Wang et al offer an alternative obstetric and newborn care readiness index in an analytical study using the DHS data.26 27 Others have measured readiness to perform obstetric signal functions based on the framework for monitoring emergency obstetric care developed by the WHO, United Nations Population Fund (UNFPA), UNICEF and the Mailman School of Public Health Averting Maternal Death and Disability programme,28–33 expanded by some to include signal functions for routine childbirth and newborn care as well as emergency referrals.34–40 More recently, researchers and practitioners have proposed using indicators from the WHO’s Standards for improving quality of maternal and newborn care in health facilities to assess a broader range of quality domains.41–45

These measurement approaches share many commonalities. However, there are important differences in item selection and aggregation methods and, to date, there is no consensus on the best approach for measuring facility readiness for childbirth services in LMICs. Conventional indices tend to focus on the availability of commodities with limited consideration of the systems necessary to support quality and safety. These conventional indices may not fully capture the readiness elements predictive of quality care. A previous study by Leslie et al found that service readiness, based on an index constructed from SARA tracer items, was weakly associated with observed clinical quality of care in Kenya and Malawi.46 The need to refocus health facility assessments to measure quality of care—including key readiness, process and outcomes measures—has been a key consideration in the ongoing process to revise the DHS SPA as well as the process led by the WHO, in collaboration with the Health Data Collaborative, to develop a standardised health facility assessment.

Health authorities require actionable information about the readiness of health facilities to guide quality improvement efforts, but there is no agreement on how best to measure readiness. The objective of this study is to compare childbirth service readiness indices to ascertain their relative utility for programming and decision making.

Methods

Study setting

The study is based on data from health facilities in Ethiopia. The public sector health service in Ethiopia is designed as a three-tiered system. In rural areas, the primary level consists of an interconnected network of health posts, health centres and primary hospitals, with linkages to general and specialised hospitals.47 In urban areas, health centres are linked directly to general hospitals and specialised hospitals. The public sector provides labour and delivery services at health centres and hospitals. Government health centres provide routine delivery services and basic emergency obstetric and neonatal care (BEmONC); government hospitals provide comprehensive emergency obstetric and neonatal care (CEmONC),48 which includes caesarean sections and blood transfusions.47 48 However, in practice, gaps exist in the capacity of health facilities to provide the full range of obstetric and neonatal care services. A 2016 survey found that only 5% of government health centres were able to provide all BEmONC signal functions, and only 52% of government hospitals had the capacity to offer all CEmONC components.48

The private health sector in Ethiopia encompasses a heterogeneous mix of private-for-profit, non-profit and faith-based hospitals and clinics. However, the 2014 SPA-Plus survey estimated that less than one-third of private-for-profit facilities offer labour and delivery services.49 These services are generally limited to routine delivery services; few private facilities have the capacity to provide emergency care.48 49 Among women who delivered in a health facility, the Ethiopia Mini DHS 2019 estimated that 95% of women delivered in a public facility and only 5% delivered in a private facility.50

Study design and procedures

The study uses cross-sectional data collected between September and December 2019 from a sample of service delivery points (SDPs) across all regions and two city administrations in Ethiopia. SDPs were identified following selection of the study’s enumeration areas (EAs) as described in the study protocol available elsewhere.51 All government health posts, health centres, and primary level and general hospitals whose catchment area covers a sampled EA were eligible for the survey. In addition, private sector SDPs located within the EA’s kebele—the lowest level administrative division in Ethiopia—were invited to participate in the survey, up to a maximum of three private SDPs per EA. Private health facilities are relatively rare in rural Ethiopia, and few women in Ethiopia deliver in private facilities.50 Our sample reflects this reality, where most kebeles in the Performance Monitoring for Action Ethiopia (PMA-ET) sample did not have even one private SDP.

After obtaining consent from the head of the facility or designated authority, data were collected using a standardised questionnaire, publicly available at http://www.doi.org/10.34976/kvvr-t814.52 A total of 534 hospitals, health centres and health clinics completed the survey, a response rate of 98.9%; among these, 406 facilities provide childbirth services. The survey was administered as part of PMA-ET, a project implemented by the Addis Ababa University School of Public Health and the Johns Hopkins Bloomberg School of Public Health, and funded by the Bill & Melinda Gates Foundation (INV 009466).

Measurement

Selection of items for the childbirth service readiness indices followed existing guidance or theoretical frameworks (online supplemental table S1). The first approach to item selection relies on tracer items for basic and comprehensive obstetric care listed in the WHO SARA Reference Manual24; these items were selected by WHO in consultations with service delivery experts.25 A second approach uses items included in the index developed by Wang et al for a DHS analytical study26 27; item selection for this index was also guided by the WHO SARA Reference Manual,24 as well as the recommendations by the Newborn Indicator Technical Working Group and a review conducted by Gabrysch et al.40


In the third approach, PMA-ET items were mapped to the WHO Standards for improving quality of maternal and newborn care in health facilities41 53 to identify a pool of 67 candidate items for health centres/clinics and 79 candidate items for hospitals. Analyses were performed to identify a smaller, parsimonious set of items that would capture the three ‘provision of care’ standards (evidence-based practices, information systems, referral systems) and two ‘cross-cutting’ standards (human resources, physical resources) in the WHO framework.41 53 To assess the value of candidate items, we first calculated the percentages of hospitals and the percentages of health centres/clinics that had each item available at the time of the 2019 PMA survey. We excluded items that were nearly universally (>97%) available since these items had limited ability to differentiate between facilities, and we excluded items flagged for concerns about response bias or with unclear interpretations (online supplemental table S2). After this initial round of exclusions, we examined the correlation structure between items overall and by readiness domain: (1) equipment, supplies and amenities; (2) medicines and health commodities; (3) staffing and systems for quality and safety; and (4) performance of signal functions. For each domain, a two-parameter logistic item response model was fitted to characterise item discrimination (ie, the ability of the item to differentiate between facilities of different readiness levels) and item difficulty (ie, whether the item is widely or rarely available in facilities irrespective of readiness level). The final set of 44 items for health centres and 52 items for hospitals was determined based on statistical properties and conceptual alignment with the WHO framework (online supplemental tables S1, S2). 
Retained items showed variation across facilities and good discrimination, and together, the selection ensured representation across the four readiness domains and five WHO standards.
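For reference, the two-parameter logistic model mentioned above is commonly written in the form below. The notation is ours and is an assumption for illustration; the exact parameterisation used in the analysis is not reported here.

```latex
% X_{ij} = 1 if item i is available at facility j, 0 otherwise
% \theta_j = latent readiness of facility j
% a_i = discrimination of item i; b_i = difficulty of item i
P\left(X_{ij} = 1 \mid \theta_j\right)
  = \frac{\exp\{a_i(\theta_j - b_i)\}}{1 + \exp\{a_i(\theta_j - b_i)\}}
```

Under this form, an item with a larger a_i separates facilities just below and just above b_i more sharply, while an item with a very low b_i is available almost everywhere and carries little information.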

A scoping review of published and grey literature identified three common approaches for aggregating items to generate a single composite readiness score for childbirth care: simple addition of items, domain-weighted addition of items and the data dimensionality reduction method of principal components analysis (PCA). We paired each of the three item selection methods with the three aggregation methods to generate nine indices (table 1). Prior to aggregation, all items were coded as 0 ‘no’ or 1 ‘yes’ to indicate whether the item was observed on the day of the assessment, whether the function was reported as performed, or whether the system was reported as being in place. The few instances (<1%) where a response was missing or where interviewees responded ‘don’t know’ were coded to 0. Additionally, five items were only asked for a subset of health facilities (eg, government facilities) and marked ‘not applicable’ for the remainder (3%–8%). For those facilities, the ‘not applicable’ items were excluded and the denominator adjusted accordingly to calculate scores using simple or weighted addition; the ‘not applicable’ responses were coded to 0 prior to aggregation by PCA.
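The coding rules and the two additive aggregation methods can be sketched as follows. This is a minimal illustration in Python, not the study's Stata code; the item names, the domain mapping and the choice to skip a domain with no applicable items are all assumptions made for the example.

```python
from typing import Dict, List, Optional

# Hypothetical item-to-domain mapping; the actual item lists are in the supplement.
DOMAINS: Dict[str, List[str]] = {
    "equipment": ["delivery_bed", "sterile_gloves"],
    "medicines": ["oxytocin", "magnesium_sulphate"],
    "staffing": ["skilled_provider_24h"],
}

def code_item(response: Optional[str]) -> Optional[int]:
    """Code a raw response: 1 = yes, 0 = no / missing / don't know, None = not applicable."""
    if response == "not applicable":
        return None
    return 1 if response == "yes" else 0

def simple_score(items: Dict[str, Optional[int]]) -> float:
    """Simple addition: share of applicable items available (equal item weights)."""
    applicable = [v for v in items.values() if v is not None]
    return sum(applicable) / len(applicable)

def weighted_score(items: Dict[str, Optional[int]],
                   domains: Dict[str, List[str]] = DOMAINS) -> float:
    """Domain-weighted addition: mean of per-domain shares (equal domain weights)."""
    domain_scores = []
    for names in domains.values():
        vals = [items[n] for n in names if items[n] is not None]
        if vals:  # assumption: a domain with no applicable items is skipped
            domain_scores.append(sum(vals) / len(vals))
    return sum(domain_scores) / len(domain_scores)

# One made-up facility: one item not applicable, one "don't know".
raw = {
    "delivery_bed": "yes",
    "sterile_gloves": "not applicable",
    "oxytocin": "yes",
    "magnesium_sulphate": "don't know",
    "skilled_provider_24h": "yes",
}
coded = {name: code_item(resp) for name, resp in raw.items()}
simple = simple_score(coded)      # 3 of 4 applicable items
weighted = weighted_score(coded)  # mean of 1.0, 0.5 and 1.0
```

In this example the two methods disagree (0.75 versus roughly 0.83) because the single missing medicine counts for a quarter of the items but only a sixth of the domain-weighted score.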

Table 1.

Methods to construct service readiness indices

| Method | Description |
|---|---|
| Item selection | |
| SARA tracer items for obstetric care* | 15 items for health centres plus seven additional items for hospitals based on the WHO SARA basic and comprehensive obstetric care tracer items.23 These correspond to three readiness domains: (A) staff and training; (B) equipment and (C) medicines and commodities. |
| DHS analytical study’s obstetric and newborn care readiness indicators* | 30 items for health centres plus three additional items for hospitals based on obstetric and newborn readiness indicators described in the DHS analytical studies No. 65.24 25 This includes items across five readiness domains: (A) performance of signal functions for emergency obstetric care; (B) performance of newborn care functions; (C) general requirements; (D) equipment and (E) medicines and commodities. The DHS programme proposes an additional domain for ‘guidelines, staff training and supervision’; however, this domain is excluded from the domain-weighted addition given limited availability of these items in the PMA-ET survey. |
| WHO standards for improving quality of maternal and newborn care readiness items* | 44 items for health centres plus eight additional items for hospitals available in the PMA-ET survey instrument mapped to the WHO Standards for improving quality of maternal and newborn care in health facilities.39 These include three ‘provision of care’ standards and two ‘cross-cutting’ standards: (1) evidence-based practices for routine care and management of complications; (2) actionable information systems; (3) functional referral systems; (4) competent, motivated human resources and (5) essential physical resources. These items are also grouped in four readiness domains: (A) equipment, supplies and amenities; (B) medicines and health commodities; (C) staffing and systems for quality and safety; and (D) performance of signal functions. |
| Aggregation method | |
| Simple addition of items | The number of items available on the day of the assessment is counted and divided by the total number of possible items to compute a score ranging from 0 to 1. Each item is given equal weight. |
| Weighted addition of items by readiness domain | Within each readiness domain, the number of items available on the day of the assessment is counted and divided by the number of possible items in that domain to compute a domain score. The sum of the domain scores is divided by the number of domains to compute a score ranging from 0 to 1. Each domain is given equal weight. |
| Principal components analysis (PCA) | PCA is a data reduction technique that converts a set of correlated items into orthogonal components. Each component explains some proportion of the variation across the items, with the first component explaining the largest proportion. The first component is extracted and rescaled to a score ranging between 0 and 1. |
| Composite indices | |
| Combination of item selections with aggregation methods | Each of the item selections (1=SARA, 2=DHS, 3=WHO standards) is aggregated using three different methods (1=simple addition, 2=weighted addition, 3=PCA) to generate nine childbirth service readiness indices. |

*Please refer to online supplemental tables S1 and S6 for the complete list of items selected for each of the readiness indices and for information on any items excluded due to lack of available data.

DHS, Demographic and Health Survey; PMA-ET, Performance Monitoring for Action Ethiopia; SARA, Service Availability and Readiness Assessment.
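The PCA aggregation described in table 1 can be illustrated with a small standard-library sketch. It makes several assumptions that the text does not specify: the covariance (rather than correlation) matrix is decomposed, power iteration is used to find the first component, the component is oriented so that higher scores mean more items available, and rescaling is min-max. The data are made up.

```python
import math
from typing import List

def first_component_scores(data: List[List[float]], iters: int = 500) -> List[float]:
    """Project facilities onto the first principal component and rescale to 0-1."""
    n, p = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(p)]
    centred = [[row[j] - means[j] for j in range(p)] for row in data]
    # Sample covariance matrix of the items (p x p); an assumption, see lead-in.
    cov = [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(p)]
           for a in range(p)]
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    vec = [1.0] * p
    for _ in range(iters):
        nxt = [sum(cov[a][b] * vec[b] for b in range(p)) for a in range(p)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    # Orient the component so that higher scores mean more items available.
    if sum(vec) < 0:
        vec = [-x for x in vec]
    raw = [sum(r[j] * vec[j] for j in range(p)) for r in centred]
    lo, hi = min(raw), max(raw)
    # Min-max rescaling so the best facility scores 1 and the worst scores 0.
    return [(x - lo) / (hi - lo) for x in raw]

# Five made-up facilities (rows) by four binary items (columns).
data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
pca_scores = first_component_scores(data)
```

Because the rescaling is relative to the best and worst facility in the sample, a PCA score of 1 does not mean that every item is available, which is one reason such scores are harder to interpret than additive ones.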

Readiness scores were calculated separately for hospitals and for health centres/clinics to reflect the difference in services provided at different levels of Ethiopia’s tiered health system. In addition to routine childbirth services, health centres offer BEmONC whereas hospitals offer CEmONC that includes caesarean sections and blood transfusions.47 Thus, readiness scores for hospitals were computed using an expanded list of items relevant for CEmONC. Similarly, PCA scores were generated separately for hospitals and for health centres/clinics. As a result, scores are comparable within each tier, but not directly comparable across tiers.

Statistical analysis

We calculated readiness scores for each health facility in the sample using all nine indices. We then compared measures of central tendency, spread, skewness and kurtosis across approaches. We also examined eigenvalues and loadings for indices generated using PCA in order to assess the variance explained by the first component, subsequently used to calculate the readiness score.

To compare the variability and distribution of scores across indices, we adopted an approach similar to that used by Sheffel et al to develop quality of antenatal care indices.54 Ideally, an index can accurately differentiate between facilities with differing levels of readiness, including those at the high and low ends. To assess this characteristic, we calculated the coefficient of variation and the proportion of facilities scoring either 0 (floor) or 1 (ceiling). Another desirable characteristic is that the individual items that comprise an index demonstrate a range of variability. We assessed this by calculating the proportion of items that are rare (<40%) or widely available (>90%).
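These index diagnostics are simple to compute. The sketch below is illustrative only: the function names and data are hypothetical, and the use of the sample (n-1) SD for the coefficient of variation is an assumption.

```python
from statistics import mean, stdev
from typing import Dict, List

def coefficient_of_variation(scores: List[float]) -> float:
    """SD divided by mean; sample SD is an assumption, not stated in the text."""
    return stdev(scores) / mean(scores)

def floor_ceiling_shares(scores: List[float]) -> Dict[str, float]:
    """Share of facilities scoring exactly 0 (floor) or exactly 1 (ceiling)."""
    n = len(scores)
    return {
        "floor": sum(s == 0 for s in scores) / n,
        "ceiling": sum(s == 1 for s in scores) / n,
    }

def item_variation(items: List[List[int]]) -> Dict[str, float]:
    """Share of items that are rare (<40% of facilities) or widely available (>90%)."""
    n_fac, n_items = len(items), len(items[0])
    availability = [sum(row[j] for row in items) / n_fac for j in range(n_items)]
    return {
        "rare": sum(a < 0.40 for a in availability) / n_items,
        "wide": sum(a > 0.90 for a in availability) / n_items,
    }

# Made-up readiness scores for five facilities.
scores = [1.0, 1.0, 0.9, 0.8, 0.5]
# Made-up item matrix: rows are facilities, columns are items.
items = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 1, 0],
    [1, 0, 0],
    [1, 0, 0],
]
cv = coefficient_of_variation(scores)
effects = floor_ceiling_shares(scores)
variation = item_variation(items)
```

Here two of five facilities sit at the ceiling, and one of three items is universally available and so cannot help to separate facilities.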

We calculated differences between readiness scores and between rankings within health facilities measured using different indices and compared these differences using graphical displays. We expected facilities to consistently score high or low regardless of the methods used to assess their readiness. If an index score deviates substantially relative to other indices, this likely indicates that it is measuring a different construct or that particular item(s) are unduly influencing the score. Next, to understand differences in the data structure and composition of the indices, we deconstructed the composite scores into domain-specific scores, and then we examined interdomain correlations, interitem correlations, and the internal consistency of items overall and within each domain.
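The within-facility comparison of scores follows the usual Bland-Altman construction: for each facility, the difference between two index scores is set against their mean. A minimal sketch with made-up scores follows; the function name is hypothetical, and the 2 SD band mirrors the band drawn in figure 2.

```python
from statistics import mean, stdev
from typing import Dict, List

def bland_altman(a: List[float], b: List[float]) -> Dict[str, object]:
    """Summarise agreement between two sets of scores for the same facilities."""
    diffs = [x - y for x, y in zip(a, b)]
    means = [(x + y) / 2 for x, y in zip(a, b)]
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample SD of the differences
    return {
        "mean_diff": mean_diff,            # systematic difference between indices
        "sd_diff": sd_diff,                # spread of the differences
        "lower": mean_diff - 2 * sd_diff,  # lower 2 SD limit
        "upper": mean_diff + 2 * sd_diff,  # upper 2 SD limit
        "points": list(zip(means, diffs)),  # (x, y) pairs for plotting
    }

# Made-up scores for four facilities under two indices.
index_a = [0.9, 0.8, 1.0, 0.7]
index_b = [0.8, 0.8, 0.9, 0.5]
agreement = bland_altman(index_a, index_b)
```

A mean difference close to zero indicates no systematic offset between two indices, while a large SD of differences indicates that individual facilities can be scored quite differently even when the averages agree.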

Prior research suggests an association between childbirth service readiness and delivery volume.26 We evaluated this association using Spearman’s rank correlation coefficient. All statistical analyses were performed using Stata IC, V.15.1.55
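Spearman’s coefficient is the Pearson correlation computed on ranks, with tied values sharing their average rank. The following standard-library sketch shows the calculation on made-up data; it is not the Stata routine used in the analysis and it does not compute a P value.

```python
import math
from typing import List

def average_ranks(values: List[float]) -> List[float]:
    """Rank values from 1 (smallest); tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman(x: List[float], y: List[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Made-up readiness scores and monthly delivery volumes for five facilities.
readiness = [0.95, 0.80, 0.80, 0.60, 0.90]
deliveries = [120, 40, 60, 30, 45]
rho = spearman(readiness, deliveries)
```

Working on ranks makes the measure robust to the strong skew in both readiness scores and delivery volumes, and the average-rank rule matters here because ties in readiness scores are frequent for the shorter indices.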

Patient and public involvement

Patients were not involved in the research. A project advisory board, chaired by the Deputy Minister of Health and composed of representatives from the Federal Ministry of Health, professional associations, multilateral organisations, non-governmental organisations and donors, provided input during survey design and development. The project advisory board advises PMA-Ethiopia on data analysis, utilisation and dissemination.

Results

Of the 406 facilities that provide childbirth services, the vast majority are public facilities: 96.3% of hospitals and 93.9% of health centres and clinics (table 2). Facilities are distributed across all regions of the country, with a higher proportion located in the more populous regions of Oromiya, Amhara and the Southern Nations, Nationalities and Peoples Region (SNNP).

Table 2.

Sample characteristics

| | Hospitals (n=160), n | Hospitals (n=160), % | Health centres/clinics (n=246), n | Health centres/clinics (n=246), % |
|---|---|---|---|---|
| Managing authority | | | | |
| Government | 154 | 96.3 | 231 | 93.9 |
| Private | 6 | 3.8 | 15 | 6.1 |
| Teaching status | | | | |
| Teaching facility | 23 | 14.4 | n/a | n/a |
| Region | | | | |
| Addis | 5 | 3.1 | 24 | 9.8 |
| Afar | 6 | 3.8 | 10 | 4.1 |
| Amhara | 33 | 20.6 | 51 | 20.7 |
| Benishangul-Gumuz | 3 | 1.9 | 9 | 3.7 |
| Dire Dawa | 3 | 1.9 | 12 | 4.9 |
| Gambella | 4 | 2.5 | 7 | 2.8 |
| Harari | 3 | 1.9 | 5 | 2.0 |
| Oromiya | 38 | 23.8 | 51 | 20.7 |
| SNNP* | 38 | 23.8 | 43 | 17.5 |
| Somali | 5 | 3.1 | 6 | 2.4 |
| Tigray | 22 | 13.8 | 28 | 11.4 |

*Includes facilities located in the newly formed Sidama region. The survey was administered in 2019 prior to the ratification of regional statehood for Sidama; data reflects the regional distribution at the time of data collection.

n/a, not applicable; SNNP, Southern Nations, Nationalities, and Peoples Region.

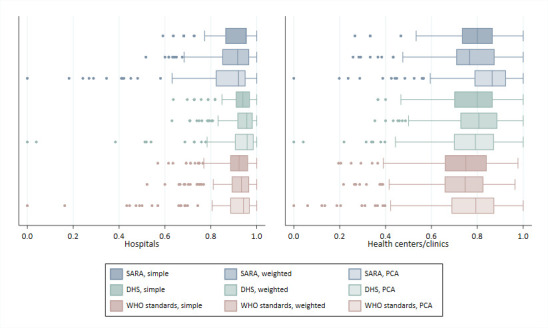

Figure 1 shows the distribution of scores by index (see also online supplemental table S3). Median index scores range from 0.92 to 0.96 for hospitals and from 0.75 to 0.86 for health centres/clinics. WHO standards-based indices generate slightly lower median scores relative to other indices. Across indices, scores show substantial skewness and kurtosis, with observations clustered around the highest scores (online supplemental figure S1). Scores generated using PCA show the greatest skewness and kurtosis.

Figure 1.

Comparison of childbirth service readiness index scores. DHS, Demographic and Health Survey; PCA, principal components analysis; SARA, Service Availability and Readiness Assessment.

Scores generated using SARA tracer items show limited item variation and more ceiling effects (table 3); using the SARA simple addition method, 34 (21.2%) hospitals receive a perfect score and 50 (31.2%) tie for the next highest score. Among health centres, 16 (6.5%) receive a perfect score and 45 (18.3%) tie for the next highest rank (online supplemental table S4). The inclusion of more items in the WHO standards-based indices reduces ceiling effects and limits ties in rankings. Use of PCA produces a higher coefficient of variation relative to the simple or domain-weighted addition methods for SARA, DHS and WHO standards-based indices. PCA-derived scores are calculated using the first component, and the eigenvalues for the first component range from 2.5 to 6.6 across indices, explaining 12%–17% of the total variance among items (online supplemental table S5).

Table 3.

Key characteristics of childbirth service readiness indices

| Index | No of items (n) | Items <40% (%) | Items ≥90% (%) | Coefficient of variation | Floor effects, score=0 (%) | Ceiling effects, score=1 (%) | Correlation with delivery volume (r*) | P value |
|---|---|---|---|---|---|---|---|---|
| Hospitals (n=160) | | | | | | | | |
| SARA tracer, simple | 22 | 0 | 73 | 0.10 | 0 | 21 | 0.33 | <0.001 |
| SARA tracer, weighted | 22 | 0 | 73 | 0.11 | 0 | 21 | 0.32 | <0.001 |
| SARA tracer, PCA | 22 | 0 | 73 | 0.20 | 1 | <1 | 0.33 | <0.001 |
| DHS, simple | 33 | 0 | 85 | 0.07 | 0 | 14 | 0.25 | 0.002 |
| DHS, weighted† | 33 | 0 | 85 | 0.07 | 0 | 20 | 0.20 | 0.013 |
| DHS, PCA | 33 | 0 | 85 | 0.15 | 1 | <1 | 0.31 | <0.001 |
| WHO standards, simple | 52 | 0 | 67 | 0.09 | 0 | 6 | 0.23 | 0.003 |
| WHO standards, weighted | 52 | 0 | 67 | 0.09 | 0 | 6 | 0.26 | <0.001 |
| WHO standards, PCA | 52 | 0 | 67 | 0.16 | 1 | <1 | 0.29 | <0.001 |
| Health centres/clinics (n=246) | | | | | | | | |
| SARA tracer, simple | 15 | 7 | 53 | 0.17 | 0 | 7 | 0.19 | 0.004 |
| SARA tracer, weighted | 15 | 7 | 53 | 0.19 | 0 | 7 | 0.18 | 0.004 |
| SARA tracer, PCA | 15 | 7 | 53 | 0.18 | 1 | 7 | 0.20 | 0.002 |
| DHS, simple | 30 | 3 | 33 | 0.16 | 0 | 1 | 0.35 | <0.001 |
| DHS, weighted† | 30 | 3 | 33 | 0.16 | 0 | 2 | 0.39 | <0.001 |
| DHS, PCA | 30 | 3 | 33 | 0.20 | 1 | 1 | 0.36 | <0.001 |
| WHO standards, simple | 44 | 2 | 23 | 0.20 | 0 | 0 | 0.31 | <0.001 |
| WHO standards, weighted | 44 | 2 | 23 | 0.20 | 0 | 0 | 0.35 | <0.001 |
| WHO standards, PCA | 44 | 2 | 23 | 0.24 | 1 | <1 | 0.31 | <0.001 |

*Spearman’s correlation coefficients.

†Weighted addition of DHS items excluded the domain for ‘guidelines, staff training and supervision’ given limited information on these items for this sample.

DHS, Demographic and Health Survey; PCA, principal components analysis; SARA, Service Availability and Readiness Assessment.

Individual items contribute different levels of information to the index. Items that are almost universally available—such as fetal scopes, sharps containers, sterile gloves, delivery beds and toilets—provide little information to differentiate between health facilities (online supplemental table S6). Over 70% of items that comprise the SARA and DHS indices are widely available (>90%) in hospitals, and half of items that comprise the SARA index are widely available (>90%) in health centres/clinics (table 3). A slight but significant positive correlation is observed between service readiness scores and monthly delivery volume (table 3).

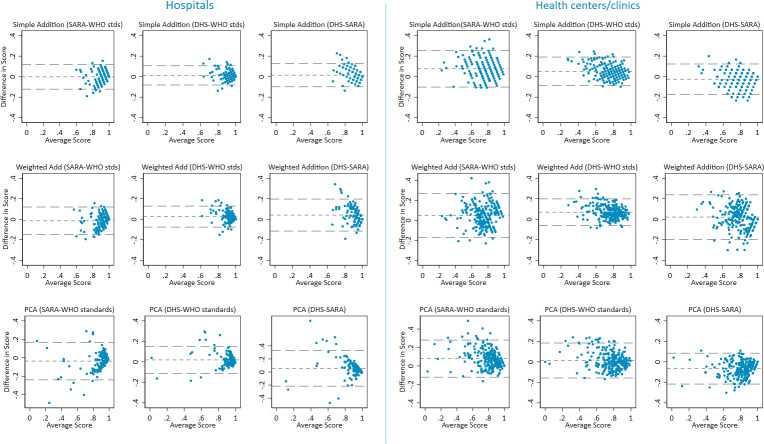

Figure 2 presents Bland-Altman plots showing agreement between service readiness scores generated using different indices (see also online supplemental table S7 and figure S2). There are minimal systematic differences in readiness scores, although WHO standards-based indices produce slightly lower scores than SARA-based or DHS-based indices. The SDs of the differences range from 0.05 to 0.14 among hospitals and from 0.07 to 0.11 among health centres/clinics. DHS and WHO standards-based indices show the greatest consistency in scores, with smaller SDs of differences. Among aggregation methods, simple addition produces smaller SDs and fewer outliers than PCA and domain-weighted addition.

Figure 2.

Difference against mean childbirth service readiness scores. Note: short dashed line indicates mean difference in readiness scores and long dashed line indicates 2 SD of the mean difference. DHS, Demographic and Health Survey; PCA, principal components analysis; SARA, Service Availability and Readiness Assessment.

By and large, there are minimal systematic differences in facility rankings across indices; facilities ranked in the top and bottom tiers by one index are generally ranked similarly by other indices (online supplemental tables S4 and S7). However, some variations do exist, with SARA and WHO standards-based indices displaying the greatest differences in facility rankings (online supplemental figures S2 and S3). Additionally, ties are frequent for indices with fewer items, such as the SARA-based indices and, to a lesser extent, the DHS-based indices.

Indices can be deconstructed to measure readiness by their component domains. The SARA and DHS-based indices rely on relatively few items to calculate each domain score and interitem correlation is low; as a result, the internal consistency among items that comprise each domain is weak (online supplemental table S8). Internal consistency improves with the addition of items in the WHO standards-based indices. Across indices, domain-specific rankings generally show slight to moderate correlation with one another (online supplemental table S9). As expected, correlations in domain-specific rankings are highest for domains composed of similar items (eg, the SARA equipment and supplies domain is highly correlated with the WHO standards’ equipment and supplies domain). Meanwhile, the WHO standards’ domain of staffing and systems to support quality, a highly unique domain, exhibits significant but slight correlation with most other domains (r: 0.07–0.30 for hospitals; 0.15–0.43 for health centres). Of note, the DHS newborn signal functions domain appears misaligned with other domains, displaying either no significant correlation or only a small correlation with them.

Discussion

Our study compares childbirth service readiness scores generated using three different item selection approaches (SARA tracer items, DHS items and WHO standards items) and three aggregation methods for each. To our knowledge, it is the first study to compare existing methods for assessing facility readiness using the SARA and DHS guidance and a new method based on the WHO quality of care standards. We find moderate agreement between indices generated using different combinations of items and aggregation methods. Different indices usually produce similar readiness scores (the majority of within-facility scores differ by less than 0.1 on a 0–1 scale), but exceptions occur where scores for the same facility differ by more than 0.4. Importantly, indices also differ in their ability to discriminate between facilities with similar readiness. The SARA-based and DHS-based indices generate more frequent ties and are more prone to ceiling effects, particularly among hospitals with higher levels of readiness. As expected, indices generated using the larger set of WHO standards items produce fewer ties and slightly lower median index scores, a result of selecting items with greater variation across facilities. Among aggregation methods, PCA tends to produce scores with the greatest skewness and kurtosis, and its results are the most difficult to interpret. Other studies have likewise found challenges in the use and interpretation of PCA-derived quality of care indices.54 56
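The two additive aggregation methods can be contrasted with a minimal sketch. It assumes that simple addition is the unweighted share of all items available and that domain-weighted addition is the mean of the domain means; the domain names and item values are hypothetical, and PCA is omitted.

```python
from statistics import mean


def simple_addition(items_by_domain):
    """Unweighted share of items available: every item counts equally."""
    values = [v for domain in items_by_domain.values() for v in domain]
    return sum(values) / len(values)


def domain_weighted_addition(items_by_domain):
    """Mean of domain means: every domain counts equally, whatever its item count."""
    return mean(sum(d) / len(d) for d in items_by_domain.values())


# Hypothetical facility: one large, fully stocked domain and two small, patchy ones.
facility = {
    "equipment": [1, 1, 1, 1, 1, 1],
    "medicines": [1, 0],
    "staff": [0, 1],
}
score_simple = simple_addition(facility)
score_weighted = domain_weighted_addition(facility)
```

Under these assumptions the same facility scores 0.8 by simple addition but about 0.67 by domain-weighted addition, because the large equipment domain no longer dominates the total.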

Differences across the indices arise mainly from differences in item selection and, to a lesser extent, aggregation methods. The DHS-based and WHO standards-based indices show the greatest agreement. Unlike SARA, these indices include items to measure the past performance of signal functions. We expect service readiness to be closely tied to the ability to perform signal functions when required. A 2016 national assessment of emergency obstetric and newborn care in Ethiopia found that lack of medicines, supplies, equipment and staff were common reasons given by facility staff for not performing a signal function, but other reasons, such as the lack of a supportive policy environment and training, were also important.48 Past performance of a signal function can be a proxy indicator for these unmeasured elements of readiness and may better predict readiness than the availability of inputs alone, since past performance requires that staff have a minimum level of capacity to recognise and manage obstetric or neonatal emergencies.

Another important difference in the composition of indices is whether they include systems to support quality and patient safety. These include functional referral systems, actionable information systems and processes for continuous quality improvement as conceptualised in the WHO framework for the provision of quality maternal and newborn care.53 With the exception of one item related to emergency transport, these systems are not captured in SARA-based or DHS-based indices. Their inclusion in the WHO standards-based indices provides unique information not otherwise captured.

Other differences between indices relate to which medicines are included and how their availability is determined. There are few medicines in the SARA tracer items and these are widely available (eg, oxytocin, magnesium sulfate), whereas WHO standards items include a broader set of medicines for the mother and newborn (eg, BCG vaccine, chlorhexidine gel, dexamethasone/betamethasone, benzathine benzylpenicillin). Of note, SARA and WHO standards-based indices require that medicines be observed in the facility on the day of the assessment, while DHS-based indices require that the medicine be observed in the delivery room.

A key consideration when weighing the merits of a facility readiness index is its usefulness to decision-makers. A good index should provide a clear and accurate overview of readiness, which can be easily deconstructed into its components to assist decision-makers in pinpointing areas of weakness. The SARA-based and DHS-based indices generate domain-specific scores using relatively few items with weak internal consistency; this raises concerns about the robustness of domain-specific scores. Conversely, the greater number of items for all readiness domains in the WHO standards-based indices improves internal consistency and generates confidence that domain-specific scores are not excessively sensitive to differences in a single item.

Our study has some limitations. First, health facility assessments are not standardised, and survey items vary across the SARA, SPA and PMA-ET instruments. The PMA-ET survey did not collect data on a few items collected by SARA and SPA (online supplemental table S1). As a result, we are unable to construct the SARA-based and DHS-based indices according to the full list of items referenced in their guidance. Likewise, as recognised in reviews by Brizuela et al and Sheffel et al,42 43 conventional health facility assessments do not generate data to fully measure all standards in the WHO framework; this finding also applies to the PMA-ET survey. As a result, we are unable to consider all potential items that could be relevant for constructing a WHO standards-based index. In particular, measures to assess provider knowledge and competency (standard 7 in the WHO framework) are missing from most conventional health facility assessments. While some assessments ask about the receipt of training or supervision, these are not direct measures of provider knowledge or competency. Provider knowledge and competency are, therefore, missing from all facility readiness indices we compared. Second, limited information is available to validate the individual items that comprise the indices. While the majority of items are based on the enumerator’s observation of at least one valid dose or one functional item on the day of assessment per recommended practice,57 other items are based on self-reported information prone to recall and other response biases. Third, this study analyses data from a sample of health facilities in one country; results may not be generalisable across other LMIC settings. Finally, traditional epidemiological methods for validating measures are not appropriate for this study: no gold standard exists and the lack of information on individual risk factors complicates assessment against patient outcomes. Instead of validating the index against a traditional gold standard, we considered the face validity and construct validity of indices. Indicative of face validity, items selected for the indices are closely aligned with existing guidelines and the WHO framework for the provision of quality maternal and newborn care, the latter having been developed through an extensive literature review and expert consultations.41 53 Indicative of construct validity, service readiness scores are positively correlated with delivery volume.
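A construct validity check of this kind can be sketched as a rank correlation between readiness scores and delivery volume. The choice of Spearman's coefficient is an assumption (this section does not state which coefficient was used), and the data below are invented.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average rank for the tied run i..j
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical readiness scores and monthly delivery volumes for five facilities.
readiness = [0.50, 0.60, 0.60, 0.80, 0.90]
deliveries = [12, 30, 25, 40, 110]
rho = spearman(readiness, deliveries)
```

A positive coefficient is consistent with, but does not by itself establish, the expectation that better-equipped facilities attend more deliveries.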

Our findings have implications for the measurement of service readiness. First, it is feasible to create a service readiness index without the use of complex statistical methods. Simple addition and domain-weighted addition performed better than PCA. These methods produce indices that are easy to generate, interpret and deconstruct to identify bottlenecks to health system performance. Second, indices generated using relatively few items are prone to frequent ties and ceiling effects, a deficiency that is more pronounced when a large proportion of items are almost universally available. The addition of items improves index performance, but should be balanced against the additional data collection burden. Item selection should favour inclusion of high-value items with a strong theoretical basis and the ability to discriminate between levels of service readiness. Moreover, we recognise that the availability of medicines, equipment, staff and systems is necessary but not sufficient for the provision of quality care. Incorporating measures of provider knowledge and competency into standard health facility assessment tools (potentially through clinical vignettes, as done with the World Bank’s Service Delivery Indicator surveys,58 or through the observation of real or simulated cases) could better assist decision-makers in identifying and addressing readiness gaps. Understanding the relationship between service readiness, processes of care and outcomes is critical for improving quality and addressing gaps to effective coverage of care during childbirth. Future research by PMA-ET aims to explore these relationships by linking data on facility readiness to data collected from peripartum women residing in facilities’ catchment areas.
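Screening candidate items for their ability to discriminate can be sketched as follows. The >90% threshold for "widely available" follows the definition used in this article; the function names, the item list and the data are hypothetical.

```python
def item_availability(item_matrix, item_names):
    """Share of facilities in which each item is available (rows are facilities)."""
    n = len(item_matrix)
    return {
        name: sum(row[i] for row in item_matrix) / n
        for i, name in enumerate(item_names)
    }


def widely_available(availability, threshold=0.90):
    """Items available in more than `threshold` of facilities; these discriminate little."""
    return sorted(name for name, share in availability.items() if share > threshold)


def ceiling_share(scores, maximum=1.0):
    """Fraction of facilities that reach the maximum possible score."""
    return sum(1 for s in scores if s >= maximum) / len(scores)


# Hypothetical data for ten facilities and three items.
names = ["oxytocin", "partograph", "chlorhexidine gel"]
rows = [[1, 1 if i < 9 else 0, 1 if i < 4 else 0] for i in range(10)]
availability = item_availability(rows, names)
near_universal = widely_available(availability)
at_ceiling = ceiling_share([1.0, 0.8, 1.0, 0.6])
```

An index dominated by items flagged in this way will push many facilities to the maximum score, which is the ceiling effect described above.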

Acknowledgments

The PMA Ethiopia project relies on the work of many individuals. Special thanks are due to Ayanaw Amogne, Selam Desta, Solomon Emyu Ferede, Bedilu Ejigu, Celia Karp, Ann Rogers, Sally Safi, Assefa Seme, Aisha Siewe, Shannon Wood, Mahari Yihdego, and Addisalem Zebene. Finally, thanks to the Ethiopia country team and resident enumerators who are ultimately responsible for the success of PMA Ethiopia.

Footnotes

Handling editor: Sanni Yaya

Twitter: @LinneaZPhD

Contributors: EKS developed research aims, performed data analysis and drafted the initial manuscript; AAC supervised this work and SA, SS and LZ provided critical input and guidance. SS and LZ were responsible for project administration and supervision of data collection for PMA Ethiopia, the parent study. All authors reviewed successive versions of the draft manuscript and approved the final manuscript.

Funding: This work was supported, in whole, by the Bill & Melinda Gates Foundation (INV 009466). Under the grant conditions of the Foundation, a Creative Commons Attribution 4.0 Generic Licence has already been assigned to the author accepted manuscript version that might arise from this submission.

Disclaimer: The sponsors of the study had no role in the study design, data collection, data analysis, data interpretation, or writing of this article. The corresponding author had full access to all of the data in the study and had final responsibility for the decision to submit for publication.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data are publicly available at https://www.pmadata.org/data/request-access-datasets.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

Ethics approval was obtained from the Institutional Review Boards of Addis Ababa University (075/13/SPH) and Johns Hopkins Bloomberg School of Public Health (00009391).

References

- 1. United Nations. Transforming our world: the 2030 agenda for sustainable development. United Nations, 2015.

- 2. Lawn JE, Blencowe H, Oza S, et al. Every newborn: progress, priorities, and potential beyond survival. Lancet 2014;384:189–205. doi:10.1016/S0140-6736(14)60496-7

- 3. Data: delivery care [Internet]. UNICEF, 2020. Available: https://data.unicef.org/topic/maternal-health/delivery-care/ [Accessed 6 Oct 2020].

- 4. Altman R, Sidney K, De Costa A, et al. Is institutional delivery protective against neonatal mortality among poor or tribal women? A cohort study from Gujarat, India. Matern Child Health J 2017;21:1065–72. doi:10.1007/s10995-016-2202-y

- 5. Chinkhumba J, De Allegri M, Muula AS, et al. Maternal and perinatal mortality by place of delivery in sub-Saharan Africa: a meta-analysis of population-based cohort studies. BMC Public Health 2014;14:1014. doi:10.1186/1471-2458-14-1014

- 6. Fink G, Ross R, Hill K. Institutional deliveries weakly associated with improved neonatal survival in developing countries: evidence from 192 demographic and health surveys. Int J Epidemiol 2015;44:1879–88. doi:10.1093/ije/dyv115

- 7. Gabrysch S, Nesbitt RC, Schoeps A, et al. Does facility birth reduce maternal and perinatal mortality in Brong Ahafo, Ghana? A secondary analysis using data on 119 244 pregnancies from two cluster-randomised controlled trials. Lancet Glob Health 2019;7:e1074–87. doi:10.1016/S2214-109X(19)30165-2

- 8. Hounton S, Menten J, Ouédraogo M, et al. Effects of a skilled care initiative on pregnancy-related mortality in rural Burkina Faso. Trop Med Int Health 2008;13:53–60. doi:10.1111/j.1365-3156.2008.02087.x

- 9. Khanam R, Baqui AH, Syed MIM, et al. Can facility delivery reduce the risk of intrapartum complications-related perinatal mortality? Findings from a cohort study. J Glob Health 2018;8:010408. doi:10.7189/jogh.08.010408

- 10. Montgomery AL, Fadel S, Kumar R, et al. The effect of health-facility admission and skilled birth attendant coverage on maternal survival in India: a case-control analysis. PLoS One 2014;9:e95696. doi:10.1371/journal.pone.0095696

- 11. Randive B, Diwan V, De Costa A. India's conditional cash transfer programme (the JSY) to promote institutional birth: is there an association between institutional birth proportion and maternal mortality? PLoS One 2013;8:e67452. doi:10.1371/journal.pone.0067452

- 12. Tura G, Fantahun M, Worku A. The effect of health facility delivery on neonatal mortality: systematic review and meta-analysis. BMC Pregnancy Childbirth 2013;13:18. doi:10.1186/1471-2393-13-18

- 13. Kruk ME, Gage AD, Arsenault C, et al. High-quality health systems in the sustainable development goals era: time for a revolution. Lancet Glob Health 2018;6:e1196–252. doi:10.1016/S2214-109X(18)30386-3

- 14. World Health Organization. Core indicators and measurement methods: a standardized health facility survey module. Geneva, Switzerland: World Health Organization, 2019.

- 15. Kanyangarara M, Chou VB, Creanga AA, et al. Linking household and health facility surveys to assess obstetric service availability, readiness and coverage: evidence from 17 low- and middle-income countries. J Glob Health 2018;8:010603. doi:10.7189/jogh.08.010603

- 16. Jayanna K, Mony P, B M R, et al. Assessment of facility readiness and provider preparedness for dealing with postpartum haemorrhage and pre-eclampsia/eclampsia in public and private health facilities of northern Karnataka, India: a cross-sectional study. BMC Pregnancy Childbirth 2014;14:304. doi:10.1186/1471-2393-14-304

- 17. Iyengar K, Jain M, Thomas S, et al. Adherence to evidence based care practices for childbirth before and after a quality improvement intervention in health facilities of Rajasthan, India. BMC Pregnancy Childbirth 2014;14:270. doi:10.1186/1471-2393-14-270

- 18. Chaturvedi S, Upadhyay S, De Costa A. Competence of birth attendants at providing emergency obstetric care under India's JSY conditional cash transfer program for institutional delivery: an assessment using case vignettes in Madhya Pradesh Province. BMC Pregnancy Childbirth 2014;14:174. doi:10.1186/1471-2393-14-174

- 19. Stanton CK, Deepak NN, Mallapur AA, et al. Direct observation of uterotonic drug use at public health facility-based deliveries in four districts in India. Int J Gynaecol Obstet 2014;127:25–30. doi:10.1016/j.ijgo.2014.04.014

- 20. Brhlikova P, Jeffery P, Bhatia G. Intrapartum oxytocin (mis)use in South Asia. J Health Stud 2009;2:33–50.

- 21. Marsh AD, Muzigaba M, Diaz T, et al. Effective coverage measurement in maternal, newborn, child, and adolescent health and nutrition: progress, future prospects, and implications for quality health systems. Lancet Glob Health 2020;8:e730–6. doi:10.1016/S2214-109X(20)30104-2

- 22. Amouzou A, Leslie HH, Ram M, et al. Advances in the measurement of coverage for RMNCH and nutrition: from contact to effective coverage. BMJ Glob Health 2019;4:e001297. doi:10.1136/bmjgh-2018-001297

- 23. Tanahashi T. Health service coverage and its evaluation. Bull World Health Organ 1978;56:295–303.

- 24. World Health Organization. Service availability and readiness assessment (SARA): an annual monitoring system for service delivery reference manual. Geneva, Switzerland: World Health Organization, 2013.

- 25. O'Neill K, Takane M, Sheffel A, et al. Monitoring service delivery for universal health coverage: the service availability and readiness assessment. Bull World Health Organ 2013;91:923–31. doi:10.2471/BLT.12.116798

- 26. Wang W, Mallick L, Allen C, et al. Effective coverage of facility delivery in Bangladesh, Haiti, Malawi, Nepal, Senegal, and Tanzania. PLoS One 2019;14:e0217853. doi:10.1371/journal.pone.0217853

- 27. Wang W, Mallick L, Allen C. Effective coverage of facility delivery in Bangladesh, Haiti, Malawi, Nepal, Senegal, and Tanzania. DHS analytical studies No. 65. Rockville, MD, USA, 2018.

- 28. Kruk ME, Leslie HH, Verguet S, et al. Quality of basic maternal care functions in health facilities of five African countries: an analysis of national health system surveys. Lancet Glob Health 2016;4:e845–55. doi:10.1016/S2214-109X(16)30180-2

- 29. Gage AD, Carnes F, Blossom J, et al. In low- and middle-income countries, is delivery in high-quality obstetric facilities geographically feasible? Health Aff 2019;38:1576–84. doi:10.1377/hlthaff.2018.05397

- 30. Lama TP, Munos MK, Katz J, et al. Assessment of facility and health worker readiness to provide quality antenatal, intrapartum and postpartum care in rural southern Nepal. BMC Health Serv Res 2020;20:16. doi:10.1186/s12913-019-4871-x

- 31. World Health Organization, UNFPA, UNICEF, Mailman School of Public Health. Averting maternal death and disability (AMDD). Monitoring emergency obstetric care: a handbook. Geneva, Switzerland: World Health Organization, 2009.

- 32. UNICEF, WHO, UNFPA. Guidelines for monitoring the availability and use of obstetric services. New York, NY, USA: UNICEF, 1997.

- 33. Averting Maternal Death and Disability (AMDD). Needs assessment of emergency obstetric and newborn care toolkit. AMDD, 2015. Available: https://www.publichealth.columbia.edu/research/averting-maternal-death-and-disability-amdd/toolkit [Accessed 15 Jun 2021].

- 34. Manu A, Arifeen S, Williams J, et al. Assessment of facility readiness for implementing the WHO/UNICEF standards for improving quality of maternal and newborn care in health facilities - experiences from UNICEF's implementation in three countries of South Asia and sub-Saharan Africa. BMC Health Serv Res 2018;18:531. doi:10.1186/s12913-018-3334-0

- 35. Willey B, Waiswa P, Kajjo D, et al. Linking data sources for measurement of effective coverage in maternal and newborn health: what do we learn from individual- vs ecological-linking methods? J Glob Health 2018;8:010601. doi:10.7189/jogh.08.010601

- 36. Otolorin E, Gomez P, Currie S, et al. Essential basic and emergency obstetric and newborn care: from education and training to service delivery and quality of care. Int J Gynaecol Obstet 2015;130:S46–53. doi:10.1016/j.ijgo.2015.03.007

- 37. Nesbitt RC, Lohela TJ, Manu A, et al. Quality along the continuum: a health facility assessment of intrapartum and postnatal care in Ghana. PLoS One 2013;8:e81089. doi:10.1371/journal.pone.0081089

- 38. Diamond-Smith N, Sudhinaraset M, Montagu D. Clinical and perceived quality of care for maternal, neonatal and antenatal care in Kenya and Namibia: the service provision assessment. Reprod Health 2016;13:92. doi:10.1186/s12978-016-0208-y

- 39. Cavallaro FL, Benova L, Dioukhane EH, et al. What the percentage of births in facilities does not measure: readiness for emergency obstetric care and referral in Senegal. BMJ Glob Health 2020;5:e001915. doi:10.1136/bmjgh-2019-001915

- 40. Gabrysch S, Civitelli G, Edmond KM, et al. New signal functions to measure the ability of health facilities to provide routine and emergency newborn care. PLoS Med 2012;9:e1001340. doi:10.1371/journal.pmed.1001340

- 41. World Health Organization. Standards for improving quality of maternal and newborn care in health facilities. Geneva, Switzerland: World Health Organization, 2016. Available: https://www.who.int/publications/i/item/9789241511216 [Accessed 15 Jun 2021].

- 42. Sheffel A, Karp C, Creanga AA. Use of service provision assessments and service availability and readiness assessments for monitoring quality of maternal and newborn health services in low-income and middle-income countries. BMJ Glob Health 2018;3:e001011. doi:10.1136/bmjgh-2018-001011

- 43. Brizuela V, Leslie HH, Sharma J, et al. Measuring quality of care for all women and newborns: how do we know if we are doing it right? A review of facility assessment tools. Lancet Glob Health 2019;7:e624–32. doi:10.1016/S2214-109X(19)30033-6

- 44. Wilhelm D, Lohmann J, De Allegri M, et al. Quality of maternal obstetric and neonatal care in low-income countries: development of a composite index. BMC Med Res Methodol 2019;19:154. doi:10.1186/s12874-019-0790-0

- 45. Quality, equity, dignity (QED), a network for improving quality of care for maternal, newborn and child health. Quality of care for maternal and newborn health: a monitoring framework for network countries. Geneva, Switzerland, 2019. Available: https://www.who.int/publications/m/item/quality-of-care-for-maternal-and-newborn-a-monitoring-framework-for-network-countries [Accessed 15 Jun 2021].

- 46. Leslie HH, Sun Z, Kruk ME. Association between infrastructure and observed quality of care in 4 healthcare services: a cross-sectional study of 4,300 facilities in 8 countries. PLoS Med 2017;14:e1002464. doi:10.1371/journal.pmed.1002464

- 47. Federal Democratic Republic of Ethiopia Ministry of Health. Health Sector Transformation Plan 2015/16 - 2019/20. Addis Ababa, Ethiopia, 2015.

- 48. Ethiopian Public Health Institute, Federal Ministry of Health, Averting Maternal Death and Disability (AMDD). Ethiopian emergency obstetric and newborn care (EmONC) assessment 2016 final report. Addis Ababa, Ethiopia: Federal Ministry of Health, 2017.

- 49. Ethiopian Public Health Institute, Federal Ministry of Health, ICF International Ethiopia. Service provision assessment plus survey 2014. Addis Ababa, Ethiopia: Ethiopian Public Health Institute, 2014.

- 50. Ethiopian Public Health Institute (EPHI) and ICF. Ethiopia mini demographic and health survey 2019: final report. Rockville, Maryland, USA: EPHI and ICF, 2021.

- 51. Zimmerman L, Desta S, Yihdego M, et al. Protocol for PMA-Ethiopia: a new data source for cross-sectional and longitudinal data of reproductive, maternal, and newborn health. Gates Open Res 2020;4:126. doi:10.12688/gatesopenres.13161.1

- 52. Addis Ababa University School of Public Health, The Bill & Melinda Gates Institute for Population and Reproductive Health at The Johns Hopkins Bloomberg School of Public Health. Performance monitoring for action Ethiopia (PMA-ET) service delivery point cross-sectional survey 2019, PMAET-SQ-2019. Ethiopia and Baltimore, Maryland, USA, 2019.

- 53. Tunçalp Ö, Were WM, MacLennan C, et al. Quality of care for pregnant women and newborns-the WHO vision. BJOG 2015;122:1045–9. doi:10.1111/1471-0528.13451

- 54. Sheffel A, Zeger S, Heidkamp R, et al. Development of summary indices of antenatal care service quality in Haiti, Malawi and Tanzania. BMJ Open 2019;9:e032558. doi:10.1136/bmjopen-2019-032558

- 55. StataCorp. Stata statistical software: release 15. College Station, TX, USA: StataCorp LLC, 2017.

- 56. Mallick L, Temsah G, Wang W. Comparing summary measures of quality of care for family planning in Haiti, Malawi, and Tanzania. PLoS One 2019;14:e0217547. doi:10.1371/journal.pone.0217547

- 57. Choi Y, Ametepi P. Comparison of medicine availability measurements at health facilities: evidence from service provision assessment surveys in five sub-Saharan African countries. BMC Health Serv Res 2013;13:266. doi:10.1186/1472-6963-13-266

- 58. World Bank. Service delivery indicators. World Bank, 2017. Available: https://www.sdindicators.org/ [Accessed 28 Jul 2021].
