Abstract

Background

Methods to visualise patient safety data can support effective monitoring of safety events and discovery of trends. While quality dashboards are common, the use and impact of dashboards to visualise patient safety event data remain poorly understood.

Objectives

To understand development, use and direct or indirect impacts of patient safety dashboards.

Methods

We conducted a systematic review in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines. We searched PubMed, EMBASE and CINAHL for publications between 1 January 1950 and 30 August 2018 involving use of dashboards to display data related to safety targets defined by the Agency for Healthcare Research and Quality’s Patient Safety Net. Two reviewers independently reviewed search results for inclusion in analysis and resolved disagreements by consensus. We collected data on development, use and impact via standardised data collection forms and analysed data using descriptive statistics.

Results

The literature search identified 4624 results, which were narrowed to 33 publications after applying inclusion and exclusion criteria and reaching consensus across reviewers. Publications included only time series and case study designs and focused on inpatient and emergency department settings. Information on direct impact of dashboards was limited, and only four studies included informatics or human factors principles in development or postimplementation evaluation.

Discussion

Use of patient safety dashboards has grown over the past 15 years, but impact remains poorly understood. Dashboard design processes rarely use informatics or human factors principles to ensure that the available content and navigation assist task completion, communication or decision making.

Conclusion

Design and usability evaluation of patient safety dashboards should incorporate informatics and human factors principles. Future assessments should also rigorously explore their potential to support patient safety monitoring including direct or indirect impact on patient safety.

Keywords: informatics, data visualization, safety management

Introduction

Since the 2000 release of the Institute of Medicine’s landmark report, To Err is Human: Building a Safer Health System,1 healthcare organisations have increasingly gathered, analysed and used data to improve the safety of healthcare delivery. Despite increased research and quality improvement efforts, how data on patient safety events are communicated to the people who will act on them is not well understood. For instance, national quality reporting programmes, such as the Centers for Medicare & Medicaid Services’ Quality Payment Program,2 adjust healthcare organisations’ reimbursement rates based on whether certain quality measures are met; as a result, dashboards have been used extensively to visualise and disseminate process-based quality measures, such as how well haemoglobin A1c is controlled across all of a clinic’s patients. However, how commonly dashboards are used for patient safety-specific measures, and how effective they are at advancing patient safety efforts and safety culture, remain unknown.

Dashboards have been used extensively within and outside healthcare and serve as a form of visual information display that allows for efficient data dissemination.3 4 Dashboards aggregate data to provide overviews of key performance indicators to facilitate decision making, and when used correctly, enable efforts to improve an organisation’s structure, process and outcomes.4 5 For dashboards to play a strategic role in communicating patient safety data, it is essential they are designed to relay key information about performance effectively.6 Thus, the dashboard design must consider informatics and human factors principles to ensure information is efficiently communicated. Informatics and human factors approaches have been successful in the design and evaluation of user interfaces in healthcare, and have variably been applied to dashboard development.7 One common approach is user-centred design, which is an iterative design process that aims to optimise usability of a display by focusing on users and their needs through requirement analysis, translation of requirements into design elements, application of design principles and evaluation.8 For dashboards, usability can be defined as the extent to which a dashboard can be used by clinicians to understand and achieve specified goals with effectiveness, efficiency and satisfaction in clinical settings.9

Three main goals that guided this study were: (1) To understand the frequency and settings of use of patient safety dashboards in healthcare, (2) To determine the effectiveness of dashboards on directly or indirectly impacting patient safety at healthcare organisations and (3) To determine whether informatics and human factors principles are commonly used during dashboard development and evaluation. Our study focused on dashboards that displayed the frequency or rate of events, that is, those that facilitated retrospective review of past safety events to reduce these types of events in the future or dashboards that identified safety events of individual patients in real-time in order to mitigate further harm. We excluded dashboards that only displayed risk of an event.

Methods

Design

We conducted a systematic literature review in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines.

Search strategy and inclusion criteria

We searched all available published and unpublished works in English using three literature databases (MEDLINE via PubMed, EMBASE and CINAHL). Publications were eligible for inclusion if they included discussion about a dashboard for displaying patient safety event data in the healthcare setting. Patient safety event data were based on the list of ‘Safety Targets’ (table 1) on the Agency for Healthcare Research and Quality’s (AHRQ) Patient Safety Network (PSNet),10 and excluded process measures. Because of the variety of topics within patient safety, we ultimately used only the word ‘dashboard’ in our keyword and title search of all three databases, since this maximised the number of known publications identified without excluding relevant publications. Thus, our inclusion parameters, in PICOS format, were:

Table 1.

Agency for Healthcare Research and Quality Safety Targets

| No | Safety topic | Examples |
| --- | --- | --- |
| 1 | Alert fatigue | Failure to recognise ventilator alarm |
| 2 | Device-related complication | Device malfunction |
| 3 | Diagnostic errors | Delayed stroke diagnosis, test misinterpretation |
| 4 | Discontinuities, gaps and hand-off problems | Missed critical lab result |
| 5 | Drug shortages | Antibiotics shortage |
| 6 | Failure to rescue | Death from postpartum haemorrhage |
| 7 | Fatigue and sleep deprivation | Resident errors due to sleep deprivation |
| 8 | Identification errors | Wrong-patient procedures |
| 9 | Inpatient suicide | Death of hospitalised patient |
| 10 | Interruptions and distractions | Incorrect surgical counts due to distractions |
| 11 | Medical complications | Falls, pressure ulcers, nosocomial infections, thromboembolism |
| 12 | Medication safety | Dispensing errors, medication-related hypoglycaemia or renal failure |
| 13 | MRI safety | Harm related to unsafe MRI practice |
| 14 | Nonsurgical procedural complications | Bedside procedure complications |
| 15 | Overtreatment | Complications after inappropriate antibiotic use |
| 16 | Psychological and social complications | Privacy violations |
| 17 | Second victims | Clinician emotional harm after adverse event |
| 18 | Surgical complications | Unexpected return to surgery, surgical site infection |
| 19 | Transfusion complications | Transfusion of incompatible blood types |

MRI, magnetic resonance imaging.

Population: Organisations providing medical care.

Interventions: Dashboards used to disseminate patient safety data (defined as measures related to any topic defined as a ‘Safety Target’ (table 1) by the AHRQ).10

Comparators: Settings with and without the use of patient safety dashboards.

Outcomes: (1) Settings where patient safety dashboards were used and (2) Impact of use of patient safety dashboards on reducing patient safety events.

Time frame: Studies published in English from 1 January 1950 to 30 August 2018.

Setting: Ambulatory care, inpatient and emergency department settings.

Screening process

After manually removing duplicates and non-journal publications (eg, magazine articles and book chapters), two authors (DRM and TS) with expertise in clinical care, informatics and human factors reviewed titles and abstracts of each remaining article or abstract. Works were only included if they described display of patient safety event data (based on AHRQ’s PSNet list of Patient Safety Targets) on a dashboard. Publications that discussed only non-safety event-related aspects of quality (eg, haemoglobin A1c control or rates of mammography screening) were excluded. Similarly, literature on dashboards displaying risk factors to prevent patient safety events rather than events themselves (eg, intensive care screens that display a particular patient’s heart rate and oxygen saturation or calculate a real-time risk level) was beyond the scope of this study and was excluded. We reviewed all publications potentially meeting study criteria in full. Reviewers discussed each inclusion, and disagreements regarding whether an article or abstract met criteria were resolved by consensus.

Publication evaluation

Three authors (DRM, TS and AS) independently extracted data from each identified publication using a structured review form. Reviewers specifically identified (1) the setting the dashboard was used in, (2) the patient safety topic displayed on the dashboard, (3) the type of informatics or human factors principles used in dashboard design or usability evaluation performed on the final dashboard and (4) the impact of the dashboard, both related to reducing patient safety events in the setting where it was used and other impacts identified by each publication’s authors. To assess the level of evidence in improving patient safety, reviewers also assessed the study type and whether a control or other comparison group was used. Findings are aggregated and reported using descriptive statistics.

Results

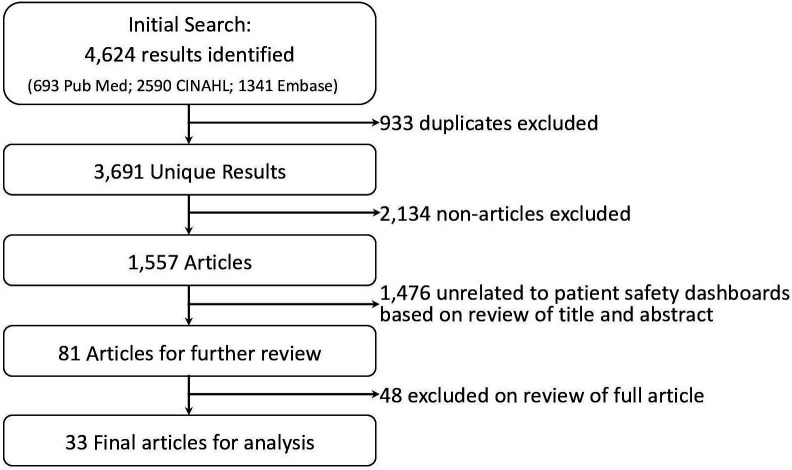

Our literature search identified a total of 4624 results (PubMed: 693, CINAHL: 2590, Embase: 1341). After 933 duplicates were removed, 3691 result entries remained. One reviewer (TS) subsequently removed 2134 entries comprising magazine articles, newspaper articles, thesis papers, conference papers and reports that were unrelated to the topic of patient safety, as well as publications not in English. Titles and abstracts of the remaining 1557 articles and conference abstracts were independently reviewed by two reviewers (TS and DRM). Reviewers manually reviewed titles and abstracts and excluded (A) publications that did not include discussion of a dashboard as a primary or secondary focus, and (B) publications where dashboards were mentioned, but the dashboard did not include measures related to any of the AHRQ ‘Safety Targets’ (table 1). After exclusions, reviewers identified a combined total of 81 publications that warranted further review of the entire publication. Reviewers discussed each publication, and after consensus, identified 33 final publications that warranted inclusion in the analysis. Reference sections of each publication were reviewed for additional sources, but none were identified. Figure 1 displays a flow chart of the search strategy.

Figure 1.

Flow chart of literature search results and the selection process of accepted/excluded publications.
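The counts reported for each screening stage can be cross-checked with simple arithmetic. The following is a minimal sketch in Python that uses only the numbers stated in the text; the variable names are illustrative and are not taken from the study.

```python
# Cross-check of the screening counts reported in the text (figure 1).
# All numbers come from the Results section; variable names are illustrative.
by_database = {"PubMed": 693, "CINAHL": 2590, "Embase": 1341}

identified = sum(by_database.values())      # total search results
after_deduplication = identified - 933      # duplicates removed
title_abstract_screened = after_deduplication - 2134  # non-journal, off-topic, non-English removed
full_text_reviewed = 81                     # as reported after title/abstract screening
included = 33                               # as reported after consensus

stages = [
    ("Identified", identified),
    ("After duplicates removed", after_deduplication),
    ("Titles and abstracts screened", title_abstract_screened),
    ("Full publications reviewed", full_text_reviewed),
    ("Included in analysis", included),
]

for name, count in stages:
    print(f"{name}: {count}")

# Exclusions implied by the reported counts at the last two stages.
excluded_at_title_abstract = title_abstract_screened - full_text_reviewed
excluded_at_full_text = full_text_reviewed - included
print(f"Excluded at title/abstract screening: {excluded_at_title_abstract}")
print(f"Excluded at full review: {excluded_at_full_text}")
```

The derived totals agree with the figures given in the text (4624 identified, 3691 after duplicate removal and 1557 screened by title and abstract).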

Search results

The final set included 33 publications, including 5 conference abstracts and 28 full articles (table 2). The earliest publications describe use of patient safety measures on a dashboard in 2004, 2005 and 2006,11–13 followed by a paucity of additional publications until 2010.

Table 2.

Final studies using patient safety dashboards identified during literature search

| Citation | Type | Setting | Safety topic | Study type |
| --- | --- | --- | --- | --- |
| Anand (2015)14 | Article | Paediatric cardiac ICU | Pressure ulcers, unplanned extubation, hospital infections (CAUTI, CLABSI, VAP) | Case report |
| Bakos (2012)24 | Article | Trauma centre | Hospital infections (CLABSI) | Case report |
| Chandraharan (2010)17 | Article | Maternity ward | Postpartum haemorrhage | Case report |
| Coleman (2013)18 | Article | Hospital wards | Medication-related events | Time series |
| Collier (2015)22 | Article | Inpatient maternity and paediatrics wards | Pressure ulcers | Case report |
| Conway (2012)25 | Article | Trauma centre | Surgical site infections | Case report |
| Dharamshi (2011)27 | Article | Surgery | Return to surgery | Case report |
| Donaldson (2005)12 | Article | Surgery, critical care floors | Pressure ulcers, falls | Case report |
| Fong (2017)23 | Article | Pharmacy | Medication-related events | Case report |
| Frazier (2012)29 | Article | Whole hospital | Falls, hospital infections (MRSA, C. Diff, VAP, CLABSI, CAUTI), pressure ulcers | Case report |
| Gardner (2015)36 | Article | Whole hospital | Falls | Case report |
| Hebert (2018)15 | Article | Cardiac surgery unit and ICU | Hospital infections (VAP) | Time series |
| Hendrickson (2013)30 | Abstract | Whole hospital | Hospital infections (VAP, CLABSI, CAUTI, MRSA, VRE, C. Diff) | Case report |
| Hyman (2017)37 | Article | Whole hospital | Hospital infections (CLABSI, CAUTI, CAP), falls, VTE | Case report |
| Johnson (2006)13 | Article | Whole hospital | Medication-related events | Case report |
| Lau (2012)19 | Abstract | Hospital oncology and GI departments | Delays in biopsy follow-up | Case report |
| Lo (2014)33 | Article | Whole hospital | Hospital infections (CAUTI) | Case report |
| Mackie (2014)35 | Article | Whole hospital | Pressure ulcers | Time series |
| Madison (2013)31 | Abstract | Whole hospital | Hospital infections (CLABSI) | Case report |
| Mane (2018)26 | Article | Emergency department | Delays in CVA diagnosis | Case report |
| Mayfield (2013)16 | Abstract | ICU, oncology ward | Hospital infections (CLABSI, VAP) | Case report |
| Mazzella-Ebstein (2004)11 | Article | Hospital wards | Pressure ulcers, falls, DVTs | Case report |
| Milligan (2015)41 | Article | Whole hospital | Hypoglycaemia | Time series |
| Mlaver (2017)20 | Article | Hospital floor | Pressure ulcers, hypoglycaemia | Case report |
| Nagelkerk (2014)50 | Article | Paediatrics ward | Hospital deaths | Case report |
| Pemberton (2014)51 | Article | Dental hospital | Wrong-site surgery, falls, medication errors | Case report |
| Rao (2011)32 | Abstract | Whole hospital | Hospital infections (VAP) | Case report |
| Ratwani (2015)38 | Article | Whole hospital | Falls | Case report |
| Riley (2010)34 | Article | Whole hospital | Hospital infections (MRSA, C. Diff), falls, pressure ulcers, medication errors | Case report |
| Rioux (2007)28 | Article | Surgery | Surgical site infections | Time series |
| Skledar (2013)39 | Article | Whole hospital | Medication-related events | Case report |
| Stone (2018)40 | Article | Whole hospital | Medication-related events | Case report |
| Waitman (2011)21 | Article | Hospital wards, pharmacy | Renal failure | Case report |

CAUTI, catheter-associated urinary tract infection; C. Diff, Clostridium difficile; CLABSI, central line-associated blood stream infection; CVA, cerebrovascular accident; DVT, deep vein thrombosis; GI, gastrointestinal; ICU, intensive care unit; MRSA, methicillin-resistant Staphylococcus aureus; VAP, ventilator-associated pneumonia; VRE, vancomycin-resistant enterococcus infection; VTE, venous thromboembolism.
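The descriptive counts quoted in the text (publication type and study type) can be reproduced directly from table 2. The sketch below, written in Python, transcribes the first author, year, publication type and study type of each row; the tuple layout and the helper name `tally` are illustrative choices rather than part of the review's methods.

```python
from collections import Counter

# (first author, year, publication type, study type), transcribed from table 2.
STUDIES = [
    ("Anand", 2015, "Article", "Case report"),
    ("Bakos", 2012, "Article", "Case report"),
    ("Chandraharan", 2010, "Article", "Case report"),
    ("Coleman", 2013, "Article", "Time series"),
    ("Collier", 2015, "Article", "Case report"),
    ("Conway", 2012, "Article", "Case report"),
    ("Dharamshi", 2011, "Article", "Case report"),
    ("Donaldson", 2005, "Article", "Case report"),
    ("Fong", 2017, "Article", "Case report"),
    ("Frazier", 2012, "Article", "Case report"),
    ("Gardner", 2015, "Article", "Case report"),
    ("Hebert", 2018, "Article", "Time series"),
    ("Hendrickson", 2013, "Abstract", "Case report"),
    ("Hyman", 2017, "Article", "Case report"),
    ("Johnson", 2006, "Article", "Case report"),
    ("Lau", 2012, "Abstract", "Case report"),
    ("Lo", 2014, "Article", "Case report"),
    ("Mackie", 2014, "Article", "Time series"),
    ("Madison", 2013, "Abstract", "Case report"),
    ("Mane", 2018, "Article", "Case report"),
    ("Mayfield", 2013, "Abstract", "Case report"),
    ("Mazzella-Ebstein", 2004, "Article", "Case report"),
    ("Milligan", 2015, "Article", "Time series"),
    ("Mlaver", 2017, "Article", "Case report"),
    ("Nagelkerk", 2014, "Article", "Case report"),
    ("Pemberton", 2014, "Article", "Case report"),
    ("Rao", 2011, "Abstract", "Case report"),
    ("Ratwani", 2015, "Article", "Case report"),
    ("Riley", 2010, "Article", "Case report"),
    ("Rioux", 2007, "Article", "Time series"),
    ("Skledar", 2013, "Article", "Case report"),
    ("Stone", 2018, "Article", "Case report"),
    ("Waitman", 2011, "Article", "Case report"),
]


def tally(rows, column):
    """Count how often each value appears in the given tuple position."""
    return Counter(row[column] for row in rows)


by_publication_type = tally(STUDIES, 2)
by_study_type = tally(STUDIES, 3)

for label, count in by_study_type.most_common():
    share = 100 * count / len(STUDIES)
    print(f"{label}: {count} of {len(STUDIES)} ({share:.0f}%)")
```

Tallying by publication year in the same way (column 1) gives a quick view of how the literature is distributed over time.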

Clinical settings

All patient safety dashboards were used in the hospital setting, often at the level of the entire hospital or hospital system. Several patient safety dashboards were used in ICUs,12 14–16 hospital wards,11 12 17–22 pharmacies,21 23 emergency departments and trauma centres,24–26 and surgical settings.12 27 28 No use of patient safety dashboards was identified in the ambulatory care setting.

Patient safety topics

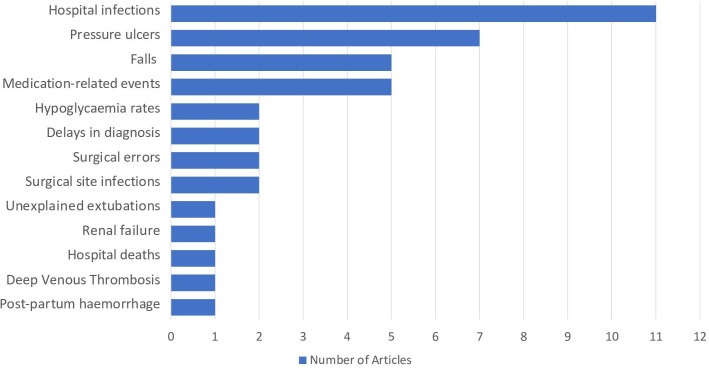

The most common use of patient safety dashboards (11 of 33) was tracking hospital infections (figure 2). Types of infection tracked included central line-associated blood stream infections,14 16 29–31 ventilator-associated pneumonia,14 16 29 30 32 catheter-associated urinary tract infections,14 29 30 33 methicillin-resistant Staphylococcus aureus infections,29 30 34 vancomycin-resistant Enterococcus infections30 and Clostridium difficile infections.29 30 Dashboards additionally displayed rates of pressure ulcers,11 12 14 20 22 34 35 patient falls11 29 36–38 and medication-related errors,13 18 23 39 40 followed less commonly by other patient safety topics (see table 2 for all safety topics and figure 2 for a chart of topic frequencies).

Figure 2.

Number of publications identified by dashboard patient safety topic.

Impact of dashboard use and level of evidence

Of all studies identified, 5 used a time series design,15 18 28 35 41 while the remaining 28 used case report designs describing specific implementations of patient safety dashboards without statistical analyses. Of the five time series studies, Coleman et al18 identified a 0.41% decrease in missed doses of medications other than antibiotics (p=0.007); however, the dashboard was one of four concurrent interventions to reduce missed and delayed medication doses, and thus, its specific impact was unclear. Similarly, Milligan et al41 reported a reduction in hypoglycaemia rates, Rioux et al28 reported a decrease in surgical site infections over a 6-year period after dashboard implementation, and Mackie et al35 reported a reduction in hospital-acquired pressure ulcers; however, in each case, the dashboard was one aspect of a broader campaign to reduce the respective patient safety events. Other studies, including Bakos, Chandraharan, Collier, Conway, Hebert, Hendrickson and Hyman,15 17 22 24 25 30 37 reported a subjective reduction in patient safety events, but did not describe a statistical analysis. The remaining publications did not include discussion of the direct or indirect impact of the dashboard on patient safety events.

Most publications that evaluated the dashboard focused instead on the sensitivity and specificity of dashboard measures, employee satisfaction with the dashboards and reduction in the time required to gather data for the dashboard compared with previous manual data collection. Another described impact was dissemination of patient event data in real time, or closer to real time than previously possible, owing to algorithms that monitor electronic patient safety data and automatically update dashboards. Direct impacts on culture and on staffing levels of patient safety personnel were not described in any of the studies. However, as described above, several studies implemented dashboards as a package with other patient safety-focused efforts, suggesting that changes in culture, infrastructure and staffing likely occurred, but concomitantly with the dashboard implementation rather than in response to it.

Usability

Only two studies used a human factors approach for design and evaluation of dashboards. Ratwani and Fong38 described a development process employing commonly accepted human factors design principles,42 followed by focus groups with users and a 2-week pilot phase to collect usability data and make improvements to the dashboard. Mlaver et al20 used a participatory design approach that employed collaboration with users during iterative refinements. Two additional studies discussed more limited efforts to obtain feedback. Dharamshi et al27 performed a limited usability analysis with an anonymous survey of dashboard users 6 months after implementation to understand factors that limited the usability of the dashboard. Stone et al40 iteratively obtained feedback from physician users between dashboard revisions. However, the majority of studies did not describe the use of an informatics or human factors approach that considered usability design principles, user-centred design processes or usability evaluation methods. Thus, there was little evidence about which design elements were most useful or usable across scenarios or settings.

Discussion

Our systematic review identified 33 publications discussing the use of dashboards to communicate and visualise patient safety data. All were published in or after 2004, suggesting increased measurement of patient safety after the 1999 publication of To Err is Human. All publications involved display of patient safety events in the inpatient setting, the most common of which were hospital-acquired infections. There may, thus, exist opportunities for similar efforts in the ambulatory setting (eg, falls, lost referrals, abnormal test results lost to follow-up or medication prescribing errors).

Overall, the level of evidence that dashboards directly or indirectly impact patient safety was limited. Only five of the publications used time series designs, with the remainder comprising case reports of dashboard implementations either alone or as part of broader patient safety interventions. No interventional studies were identified. Most studies reported on accuracy of the measures displayed or survey-based user satisfaction with the dashboard, rather than the dashboards’ impact on patient safety events. Studies that provided data on reductions in patient safety events either did not report statistical analyses to support the reduction, or more commonly, were part of a broad process improvement effort containing multiple interventions, making it difficult to tease out which intervention truly impacted safety. While it can be argued that the intent of a patient safety dashboard is to communicate data about the extent of safety issues at an organisation and support other improvement efforts, the act of showing data via a dashboard may alone motivate quality and safety efforts. Dashboards likely have impacts on safety culture and indirectly lead to allocation of resources to reducing patient safety events. The studies identified did not describe these impacts in response to dashboard implementation, and thus, this topic warrants future exploration.

Most publications described dashboard development as a quality improvement approach to addressing a specific organisational problem or to meeting institutional or national standards. Several studies reported high user satisfaction with the dashboard, though these were often limited assessments and did not capture whether users fully understood the content of the dashboard. With four exceptions, studies lacked informatics or human factors design approaches during development, application of standardised design principles and use of usability evaluations. Without informatics, human factors or user-centred design approaches, information requirements from users may not be well understood. Thus, there is limited evidence about dashboard acceptance, frequency of use or whether dashboards satisfactorily met the needs of intended users. For example, studies commonly mentioned colour coding that followed a traffic light scheme (red=poor status, yellow=warning, green=good status), without a formal evaluation of its usability for the 8% of men and 0.5% of women in the population with red-green colour blindness.43
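One way to make the colour-coding concern concrete is redundant encoding, in which each status is conveyed by a text label and a symbol in addition to colour. The Python sketch below is illustrative only and is not drawn from any of the reviewed dashboards: the thresholds, symbols and the names `StatusStyle` and `classify` are assumptions, and it assumes a measure for which lower rates are better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StatusStyle:
    """How one status is shown: colour plus two non-colour cues."""
    label: str   # text shown next to the value
    colour: str  # traffic light colour, as described in the text
    symbol: str  # shape cue that does not depend on colour perception


# Hypothetical styles; only the red/yellow/green meaning comes from the text.
STYLES = {
    "good": StatusStyle(label="Good", colour="green", symbol="●"),
    "warning": StatusStyle(label="Warning", colour="yellow", symbol="▲"),
    "poor": StatusStyle(label="Poor", colour="red", symbol="■"),
}


def classify(rate: float, warning_at: float, poor_at: float) -> StatusStyle:
    """Map an event rate to a status, assuming lower rates are better.

    The thresholds are supplied by the caller; none are defined in the source.
    """
    if warning_at >= poor_at:
        raise ValueError("warning_at must be lower than poor_at")
    if rate >= poor_at:
        return STYLES["poor"]
    if rate >= warning_at:
        return STYLES["warning"]
    return STYLES["good"]


# Example with made-up rates and thresholds, purely for illustration.
for example_rate in (0.5, 1.0, 2.5):
    style = classify(example_rate, warning_at=1.0, poor_at=2.0)
    print(f"{style.symbol} {style.label} ({style.colour}): {example_rate}")
```

Whether such cues actually help the intended users would still need to be established through the kind of formal usability evaluation discussed above.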

Some dashboards were implemented within a bundle of other interventions. The lack of dashboard usability testing before and after implementation made it difficult to identify the impact or effect of the dashboard. As with many clinical informatics interventions, numerous social and/or technical factors beyond the dashboard may have influenced the reported outcomes. Rigorous informatics and human factors design approaches44–47 are needed to improve the use and impact of patient safety dashboards. Because intervention development is often time constrained, rapid qualitative assessment approaches or human factors methods involving rapid prototyping,48 49 for example, can be adapted to meet the shorter timelines needed for rapid cycle quality improvement. This will help ensure dashboards are useful and usable and generate much-needed evidence about efficiency, effectiveness and satisfaction in various care settings.

Our study has several limitations. First, it is subject to a potential reporting bias. While we analysed publications based on the content reported, it is possible that additional statistical analyses and usability assessments were performed that were not reported. Furthermore, there is likely to be greater use of patient safety dashboards developed as part of routine quality improvement efforts within healthcare organisations, but these may not be published. Nevertheless, this is an area that is ripe for additional research. Second, there was significant variability in how dashboards were described, ranging from basic text descriptions to full-colour screenshots. This variability made performing standardised usability assessments impossible. Finally, our search was limited to the publications present in the databases we searched. While we used three different databases to mitigate this limitation, publications that did not appear in any of them would have been missed.

In conclusion, we identified a growing use of patient safety dashboards, largely focused on displaying inpatient safety events. Due to limited use of informatics and human factors-based approaches during development or postimplementation evaluation, the usability of such dashboards was difficult to assess. Furthermore, because of limited evaluation of the impact of dashboards and because dashboards were often implemented as part of a variety of process improvement efforts, the literature is not clear on direct impact of dashboard implementation on patient safety events. Because well-designed dashboards have potential to support patient safety monitoring, our study should encourage integration of informatics and human factors principles into design and usability evaluation of dashboards as well as assessment of their direct or indirect impact on patient safety.

Footnotes

Twitter: @DeanSittig, @HardeepSinghMD

Contributors: DRM, DS and HS developed the idea for this systematic review. DRM and TS performed the literature search. DRM, TS and AS critically reviewed and extracted data from the publications identified. All authors contributed to writing the initial manuscript and revising subsequent versions. All authors had control over the decision to publish. DRM had access to the full data set and accepts full responsibility for the finished article.

Funding: This project was funded by an Agency for Healthcare Research and Quality Mentored Career Development Award (K08-HS022901) and partially funded by the Houston VA HSR&D Center for Innovations in Quality, Effectiveness and Safety (CIN 13-413). HS is additionally supported by the VA Health Services Research and Development Service (IIR17-127; Presidential Early Career Award for Scientists and Engineers USA 14-274), the VA National Center for Patient Safety, the Agency for Health Care Research and Quality (R01HS27363), and the Gordon and Betty Moore Foundation (GBMF 5498 and GBMF 8838). AS is additionally supported by the VA HSR&D Center for Health Information and Communication (CIN 13-416), National Institutes of Health, National Center for Advancing Translational Sciences, and Clinical and Translational Sciences Award (KL2TR002530 and UL1TR002529). There are no conflicts of interest for any authors.

Disclaimer: These funding sources had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript.

Competing interests: None declared.

Provenance and peer review: Commissioned; externally peer reviewed.

Data availability statement

Data sharing is not applicable as no datasets were generated and/or analysed for this study.

Ethics statements

Patient consent for publication

Not applicable.

References

- 1.Kohn LT, Corrigan JM, Donaldson MS, Committee on Quality of Health Care in America, Institute of Medicine . To err is human: building a safer health system. The National Academies Press, 2000. [PubMed] [Google Scholar]

- 2.Quality payment program. United States Centers for Medicare & Medicaid Services. Available: https://qpp.cms.gov/ [Accessed 6/6/2021]. [Google Scholar]

- 3.Hugo JV, S SG. Human factors principles in information Dashboard design. Idaho Falls, ID (United States: Idaho National Lab. (INL), 2016. [Google Scholar]

- 4.Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform 2015;84:87–100. 10.1016/j.ijmedinf.2014.10.001 [DOI] [PubMed] [Google Scholar]

- 5.Sarikaya A, Correll M, Bartram L, et al. What do we talk about when we talk about Dashboards? IEEE Trans Vis Comput Graph 2019;25:682–92. 10.1109/TVCG.2018.2864903 [DOI] [PubMed] [Google Scholar]

- 6.Dowding D, Merrill JA. The development of Heuristics for evaluation of Dashboard Visualizations. Appl Clin Inform 2018;9:511–8. 10.1055/s-0038-1666842 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Carayon P, Hoonakker P. Human factors and usability for health information technology: old and new challenges. Yearb Med Inform 2019;28:071–7. 10.1055/s-0039-1677907 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Norman DA, Draper SW. User centered system design: new perspectives on Human-Computer interaction. 1st ed. CRC Press, 1986. [Google Scholar]

- 9.20282-2 usability of consumer products and products for public use, 2013International Organization of Standards. Available: https://www.iso.org/obp/ui/#iso:std:iso:ts:20282:-2:ed-2:v1:en [Google Scholar]

- 10.PSNet topics, 2021. Available: https://psnet.ahrq.gov/topics-0 [Accessed 6/6/2021].

- 11.Mazzella-Ebstein AM, Saddul R. Web-Based nurse executive dashboard. J Nurs Care Qual 2004;19:307–15. 10.1097/00001786-200410000-00004

- 12.Donaldson N, Brown DS, Aydin CE, et al. Leveraging nurse-related dashboard benchmarks to expedite performance improvement and document excellence. J Nurs Adm 2005;35:163–72. 10.1097/00005110-200504000-00005

- 13.Johnson K, Hallsey D, Meredith RL, et al. A nurse-driven system for improving patient quality outcomes. J Nurs Care Qual 2006;21:168–75. 10.1097/00001786-200604000-00013

- 14.Anand V, Cave D, McCrady H, et al. The development of a congenital heart programme quality dashboard to promote transparent reporting of outcomes. Cardiol Young 2015;25:1579–83. 10.1017/S1047951115002085

- 15.Hebert C, Flaherty J, Smyer J, et al. Development and validation of an automated ventilator-associated event electronic surveillance system: a report of a successful implementation. Am J Infect Control 2018;46:316–21. 10.1016/j.ajic.2017.09.006

- 16.Mayfield J, Wood H, Russo AJ, et al. Facility level dashboard utilized to decrease infection preventionists time disseminating data. Am J Infect Control 2013;41:S54. 10.1016/j.ajic.2013.03.112

- 17.Chandraharan E. Clinical dashboards: do they actually work in practice? Three-year experience with the maternity Dashboard. Clin Risk 2010;16:176–82. 10.1258/cr.2010.010022

- 18.Coleman JJ, Hodson J, Brooks HL, et al. Missed medication doses in hospitalised patients: a descriptive account of quality improvement measures and time series analysis. Int J Qual Health Care 2013;25:564–72. 10.1093/intqhc/mzt044

- 19.Lau S, Wehbi M, Varma V. Electronic tool for tracking patient care after positive colon biopsy. Am J Clin Pathol 2012;138:A122. 10.1093/ajcp/138.suppl2.191

- 20.Mlaver E, Schnipper JL, Boxer RB, et al. User-Centered collaborative design and development of an inpatient safety dashboard. Jt Comm J Qual Patient Saf 2017;43:676–85. 10.1016/j.jcjq.2017.05.010

- 21.Waitman LR, Phillips IE, McCoy AB, et al. Adopting real-time surveillance dashboards as a component of an enterprisewide medication safety strategy. Jt Comm J Qual Patient Saf 2011;37:326–AP4. 10.1016/S1553-7250(11)37041-9

- 22.Collier M. Pressure Ulcer Incidence: The Development and Benefits of 10 Year’s-experience with an Electronic Monitoring Tool (PUNT) in a UK Hospital Trust. EWMA J 2015;15:15–20.

- 23.Fong A, Harriott N, Walters DM, et al. Integrating natural language processing expertise with patient safety event review committees to improve the analysis of medication events. Int J Med Inform 2017;104:120–5. 10.1016/j.ijmedinf.2017.05.005

- 24.Bakos KK, Zimmermann D, Moriconi D. Implementing the clinical Dashboard at VCUHS. NI 2012 2012;2012:11.

- 25.Conway WA, Hawkins S, Jordan J, et al. The Henry Ford health system no harm campaign: a comprehensive model to reduce harm and save lives. Jt Comm J Qual Patient Saf 2012;38:318–AP1. 10.1016/S1553-7250(12)38042-2

- 26.Mane KK, Rubenstein KB, Nassery N, et al. Diagnostic performance dashboards: tracking diagnostic errors using big data. BMJ Qual Saf 2018;27:567–70. 10.1136/bmjqs-2018-007945

- 27.Dharamshi R, Hillman T, Shaw R. Increasing engagement with clinical outcome data. Br J Healthc Manag 2011;17:585–9. 10.12968/bjhc.2011.17.12.585

- 28.Rioux C, Grandbastien B, Astagneau P. Impact of a six-year control programme on surgical site infections in France: results of the INCISO surveillance. J Hosp Infect 2007;66:217–23. 10.1016/j.jhin.2007.04.005

- 29.Frazier JA, Williams B. Successful implementation and evolution of Unit-Based nursing Dashboards. Nurse Leader 2012;10:44–6. 10.1016/j.mnl.2012.01.003

- 30.Hendrickson C, Guelcher A, Guspiel AM, et al. Results of increasing the frequency of healthcare associated infections (HAI) data reported to managers with an embedded quality improvement process. Am J Infect Control 2013;41:S114. 10.1016/j.ajic.2013.03.230

- 31.Madison AS, Johnson D, Shepard J. Leveraging internal knowledge to create a central line associated bloodstream infection surveillance and reporting tool. Am J Infect Control 2013;41:S56. 10.1016/j.ajic.2013.03.11623622750

- 32.Rao R. Application of six sigma process to implement the infection control process and its impact on infection rates in a tertiary health care centre. BMC Proc 2011;5. 10.1186/1753-6561-5-S6-P228

- 33.Lo Y-S, Lee W-S, Chen G-B, et al. Improving the work efficiency of healthcare-associated infection surveillance using electronic medical records. Comput Methods Programs Biomed 2014;117:351–9. 10.1016/j.cmpb.2014.07.006

- 34.Riley S, Cheema K. Quality Observatories: using information to create a culture of measurement for improvement. Clin Risk 2010;16:93–7. 10.1258/cr.2010.010002

- 35.Mackie S, Baldie D, McKenna E, et al. Using quality improvement science to reduce the risk of pressure ulcer occurrence – a case study in NHS Tayside. Clin Risk 2014;20:134–43. 10.1177/1356262214562916

- 36.Gardner LA, Bray PJ, Finley E, et al. Standardizing falls reporting: using data from adverse event reporting to drive quality improvement. J Patient Saf 2019;15:135–42. 10.1097/PTS.0000000000000204

- 37.Hyman D, Neiman J, Rannie M, et al. Innovative use of the electronic health record to support harm reduction efforts. Pediatrics 2017;139:e20153410–e10. 10.1542/peds.2015-3410

- 38.Ratwani RM, Fong A. 'Connecting the dots': Leveraging visual analytics to make sense of patient safety event reports. J Am Med Inform Assoc 2015;22:312–7. 10.1136/amiajnl-2014-002963

- 39.Skledar SJ, Niccolai CS, Schilling D, et al. Quality-Improvement analytics for intravenous infusion pumps. Am J Health Syst Pharm 2013;70:680–6. 10.2146/ajhp120104

- 40.Stone AB, Jones MR, Rao N, et al. A Dashboard for monitoring Opioid-Related adverse drug events following surgery using a national administrative database. Am J Med Qual 2019;34:45–52. 10.1177/1062860618782646

- 41.Milligan PE, Bocox MC, Pratt E, et al. Multifaceted approach to reducing occurrence of severe hypoglycemia in a large healthcare system. Am J Health Syst Pharm 2015;72:1631–41. 10.2146/ajhp150077

- 42.Shneiderman B. The eyes have it: a task by data type taxonomy for information Visualizations. Proceedings 1996 IEEE Symposium on Visual Languages 1996:336–43. 10.1109/VL.1996.545307

- 43.Roskoski R. Guidelines for preparing color figures for everyone including the colorblind. Pharmacol Res 2017;119:240–1. 10.1016/j.phrs.2017.02.005

- 44.Holden RJ, Carayon P. SEIPS 101 and seven simple SEIPS tools. BMJ Qual Saf 2021. 10.1136/bmjqs-2020-012538. [Epub ahead of print: 26 May 2021].

- 45.Carayon P, Hoonakker P, Hundt AS, et al. Application of human factors to improve usability of clinical decision support for diagnostic decision-making: a scenario-based simulation study. BMJ Qual Saf 2020;29:329–40. 10.1136/bmjqs-2019-009857

- 46.Savoy A, Militello LG, Patel H, et al. A cognitive systems engineering design approach to improve the usability of electronic order forms for medical consultation. J Biomed Inform 2018;85:138–48. 10.1016/j.jbi.2018.07.021

- 47.Harte R, Glynn L, Rodríguez-Molinero A, et al. A Human-Centered design methodology to enhance the usability, human factors, and user experience of connected health systems: a three-phase methodology. JMIR Hum Factors 2017;4:e8. 10.2196/humanfactors.5443

- 48.Wilson J, Rosenberg D. Chapter 39 - Rapid Prototyping for User Interface Design. In: Helander M, ed. Handbook of Human-Computer Interaction. North-Holland, 1988: 859–75.

- 49.McMullen CK, Ash JS, Sittig DF, et al. Rapid assessment of clinical information systems in the healthcare setting: an efficient method for time-pressed evaluation. Methods Inf Med 2011;50:299–307. 10.3414/ME10-01-0042

- 50.Nagelkerk J, Peterson T, Pawl BL, et al. Patient safety culture transformation in a children's Hospital: an interprofessional approach. J Interprof Care 2014;28:358–64. 10.3109/13561820.2014.885935

- 51.Pemberton MN, Ashley MP, Shaw A, et al. Measuring patient safety in a UK dental Hospital: development of a dental clinical effectiveness dashboard. Br Dent J 2014;217:375–8. 10.1038/sj.bdj.2014.859

Data Availability Statement

Data sharing is not applicable as no datasets were generated and/or analysed for this study.