Abstract

Background

It is generally accepted that evidence-informed decision making contributes to better health system performance and health outcomes, yet we are lacking benchmarks to monitor the impact of national health information systems (HIS) in policy and practice. Hence in this study, we have aimed to identify criteria for monitoring Knowledge Translation (KT) capacity within countries.

Methods

We conducted a web-based Delphi with over 120 public health professionals from 45 countries to reach agreement on criteria to monitor KT at the level of national HIS. Public health professionals participated in three survey rounds, in which they ranked 85 preselected criteria and could suggest additional criteria.

Results

Experts working in national (public) health agencies and statistical offices, as well as in health policy and care agreed on 29 criteria which constitute the Health Information (HI)-Impact Index. The criteria cover four essential domains of evaluation: the production of high-quality evidence, broad access and dissemination, stakeholder engagement and knowledge integration across sectors and in civil society. The HI-Impact Index was pretested by officials working in ministries of health and public health agencies in eight countries; they found the tool acceptable and user-friendly.

Conclusions

The HI-Impact Index provides benchmarks to monitor KT so that countries can assess whether high-quality evidence can be easily accessed and used by the relevant stakeholders in health policy and practice, by civil society and across sectors. Next steps include further refining the procedure for conducting the assessment routinely, and sharing experiences from HIS evaluations using the HI-Impact Index.

Introduction

In the beginning of 2020, health systems across the globe were hit by SARS-CoV-2. Globally, there have been over 187 million confirmed cases of COVID-19, of which 57 million were in Europe. Faced with a death toll of over 4 million globally, data on case fatality rates and health service use indicators have been crucial in determining containment measures and strategies across countries. These data are generated by national health information systems (HIS), which consist of population-based health and administrative data sources (e.g. patient registries, hospital discharge, vital statistics). The pandemic illustrated how health information (HI), meaning data on population health status, the determinants of health and service needs, can inform decision making in the health system.1–3 Currently, however, there is no consensus on how to audit knowledge translation at population level,4–6 which is defined as the appropriate exchange, synthesis and ethically sound application of knowledge to strengthen the healthcare system and improve health.7 The focus in HIS evaluation thus far has largely been on assessing the production of data, and less on the use and impact of the evidence in practice.8

Therefore, our aim in this study was to address an important health systems research gap. We developed a tool, the Health Information (HI)-Impact Index, to monitor knowledge translation capacity of national HIS. We used a participatory approach in collaboration with professionals working in public health monitoring and surveillance, research, health policy and care. This was a timely endeavor in the context of the current EU-Joint Action on Health Information (InfAct https://www.inf-act.eu/), gathering 40 partners: public health institutes, ministries of health and research, and health agencies from 28 countries striving to build an integrated EU infrastructure to advance the use of HI for analysis and decision making.

Methods

Conceptual framework

In 2018, the HI-Impact framework was developed to monitor the impact of national HIS.8 Whereas the four domains of HIS evaluation in this conceptual framework are described below, the evaluation criteria necessary to carry out this assessment in practice were not specified.

Health Information Evidence Quality: this domain aims to monitor the quality, reliability, and relevance of the evidence generated by HIS.

HI System (HIS) Responsiveness: this domain aims to monitor the access and availability of the evidence—including dissemination efforts targeting a wide range of stakeholders.

Stakeholder Engagement: this domain aims to monitor the use of evidence in the health care system for capacity-building, decision making and intervention.

Knowledge Integration: this domain aims to monitor the use of evidence by civil society, and across sectors to have more insight on the societal impact.

Preselection of criteria to include in the HI-Impact Index

This conceptual framework was developed to monitor knowledge translation within countries. Therefore, in line with this objective, we searched for criteria that reflect good capacity for routine data production, dissemination and use of HI. We reviewed criteria in the literature8,9, and existing tools used to evaluate the performance of national HIS.10,11 We examined the World Health Organization (WHO) Support Tool for Strengthening HIS12; the United States Agency for International Development (USAID) tools to evaluate knowledge exchange and capacity-building in development programs13,14 and Canada’s data source quality assessment tool.15 We also expanded our scope by examining criteria in other health (policy) performance indexes: the OECD Better Life Index, the Migrant Integration Policy Index, The European Population Health Index, and the Evidence Informed Policy Making indicators developed by Tudisca et al.16

As set out in our Terms of Reference for the study, our aim was to include 30 criteria in the HI-Impact Index (this number was chosen to allow for a comprehensive and swift evaluation process); therefore, we aimed for around three times as many criteria to start the Delphi. We identified 85 criteria that capture how evidence is produced and applied strategically for organizational and quality improvements in the health care system (Supplementary table 1). On average, we noted that 53% of criteria in existing HIS evaluation tools pertained to ‘HI Evidence Quality’, 28% to ‘Stakeholder Engagement’, 18% to ‘HIS Responsiveness’ and 2% to ‘Knowledge Integration’ (Supplementary figure 1). To maintain the integrity of the conceptual framework used in this study, we captured a greater number of criteria from the literature in the domains which were least represented in existing tools.

Implementing the web-based DELPHI

Our aim was to find agreement with HI experts on criteria that could be used to monitor knowledge translation capacity. A Delphi is a well-known consensus technique17 which involves engaging a panel of experts in ranking and prioritizing items over several rounds in order to reach agreement. The process, which is anonymous and iterative, can be implemented online, and it is also one of the preferred methodologies in the development of an indicator set.16,18 Whereas rankings are done individually, aggregated results are shared among participants between survey rounds. M.D. developed the Delphi surveys using LimeSurvey V2, and these were pretested by the InfAct coordination team (L.A.A., H.V.O.) and other scientists (R.C., E.B.) at Sciensano, the Belgian Institute for Health.

We invited nearly 700 European public health professionals and health policy makers to participate in the Delphi. We aimed for a multidisciplinary representation of data providers and end-users in policy and practice. Therefore, we reached out to members of the European Public Health Association, Euro-Peristat, a European perinatal health surveillance network (www.europeristat.com), the HealthPros initiative, which gathers healthcare performance intelligence professionals (https://www.healthpros-h2020.eu/), and to the national representatives in the InfAct consortium. We used a snowball sampling approach by which participants could further extend the Delphi invitation to their colleagues.

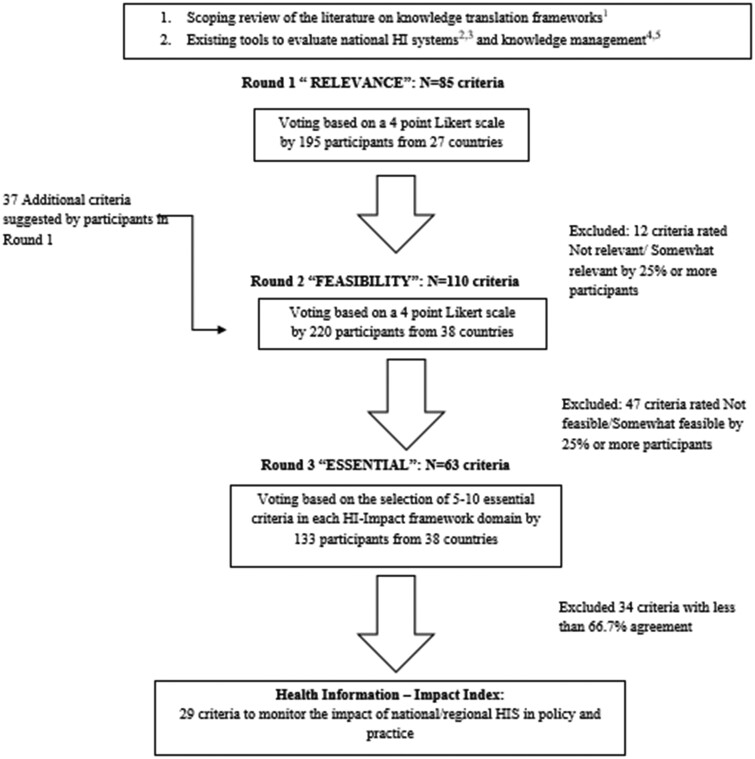

As shown in figure 1, the Delphi consisted of three consecutive surveys. We adapted the decision rules developed by Tudisca et al. to enhance evidence-informed policy making.16 The Delphi panelists were invited to rank the 85 preselected criteria; there were 15–25 criteria in each HI-Impact framework domain to assess. In Round 1, they could also suggest additional criteria for Round 2, per standard Delphi practice.16 They ranked criteria based on their ‘relevance’ in Round 1, and ‘feasibility’ in Round 2. Relevance was defined as the extent to which a criterion reflected or facilitated knowledge translation. Feasibility was defined as the extent to which a criterion was applicable in national or regional HIS evaluation processes. During the first two rounds, the participants ranked criteria using a 4-point Likert scale (i.e. 3: very relevant/feasible, 2: relevant/feasible, 1: somewhat relevant/feasible, 0: not relevant/feasible). The algorithm developed to accept or reject criteria was based on summary statistics of the assessment attributes (i.e. relevance/feasibility). If 25% or more of the panelists rated a criterion poorly (score of 0 or 1), it was excluded from proceeding to the next round. Panelists were encouraged to justify their ratings.
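The exclusion rule described above can be sketched in a few lines of Python. This is an illustration only, not the authors' implementation: the function names `poor_share` and `is_excluded` and the example ratings are hypothetical, and only the 4-point scale and the 25% cutoff are taken from the text.

```python
from typing import Sequence

POOR_SCORES = {0, 1}     # "not" or "somewhat" relevant/feasible
EXCLUSION_SHARE = 0.25   # cutoff stated in the text


def poor_share(ratings: Sequence[int]) -> float:
    """Fraction of panelists who gave a score of 0 or 1."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return sum(r in POOR_SCORES for r in ratings) / len(ratings)


def is_excluded(ratings: Sequence[int]) -> bool:
    """True when 25% or more of the ratings are poor."""
    return poor_share(ratings) >= EXCLUSION_SHARE


# Hypothetical ratings from eight panelists for two criteria.
criterion_a = [3, 3, 2, 2, 3, 1, 2, 3]  # 1 of 8 poor (12.5%)
criterion_b = [3, 1, 0, 2, 3, 1, 2, 3]  # 3 of 8 poor (37.5%)

print(is_excluded(criterion_a))  # False
print(is_excluded(criterion_b))  # True
```

Note that a criterion sitting exactly at 25% poor ratings is excluded under this reading, since the text says "25% or more".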

Figure 1.

Delphi flow chart for developing the Health Information-Impact Index. Notes: 1. Delnord M, Tille F, Abboud LA, Ivankovic D, Van Oyen H, How can we monitor the impact of national health information systems? Results from a scoping review, European Journal of Public Health, Volume 30, Issue 4, August 2020, Pages 648–59; 2. Health Metrics Network (2008) Assessing the National Health Information System An Assessment Tool VERSION 4.00, World Health Organization, Geneva; 3. https://www.cihi.ca/sites/default/files/document/cihi-data-source-assessmenttool- en.pdf; 4. https://www.globalhealthknowledge.org/sites/ghkc/files/km-monitoring-and-eval-guide.pdf; 5. https://www.k4health.org/sites/default/files/guide-to-monitoring-and-evaluating-health-information.pdf

Finally, there was a third survey round to prioritize the criteria to include in the HI-Impact Index. By domain, the panelists were invited to flag between 5 and 8 criteria recognized as ‘essential’ to support knowledge translation. We retained those flagged by at least two-thirds (67%) of the participants, using the highest level of agreement by domain as reference, as we did not expect to reach consensus (i.e. 100% agreement on criteria across the four domains of evaluation in the HI-Impact framework). To explore potential biases, we also conducted sensitivity analyses: we compared the final list of criteria with the lists that would have resulted from a more stringent agreement threshold (75% instead of 67%) and from selecting the 30 highest ranked criteria overall instead of by domain. Finally, we pretested the HI-Impact Index within the InfAct consortium, during a face-to-face workshop with the Steering Committee in Brussels, Belgium in September 2019.
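One way to read the Round 3 retention rule is sketched below: within a domain, each criterion's flag count is divided by the count of the most-flagged criterion (the reference), and criteria at or above two-thirds of that reference are kept. The function name `retained` and the flag counts are hypothetical, and this reading of "using the highest level of agreement by domain as reference" is an assumption based on the text and the notes to Table 2.

```python
from fractions import Fraction

THRESHOLD = Fraction(2, 3)  # "at least two-thirds" of the reference count


def retained(flag_counts: dict[str, int],
             threshold: Fraction = THRESHOLD) -> dict[str, Fraction]:
    """Return criteria whose flag count, relative to the most-flagged
    criterion in the same domain, meets the threshold."""
    reference = max(flag_counts.values())
    if reference == 0:
        return {}
    adjusted = {name: Fraction(count, reference)
                for name, count in flag_counts.items()}
    return {name: share for name, share in adjusted.items()
            if share >= threshold}


# Hypothetical flag counts for the criteria of a single domain.
counts = {"C1": 60, "C2": 51, "C3": 40, "C4": 39, "C5": 22}

kept = retained(counts)
for name, share in kept.items():
    print(f"{name}: {float(share):.1%}")

# Sensitivity check with the stricter 75% threshold.
print(list(retained(counts, Fraction(3, 4))))
```

Exact fractions are used so that a criterion sitting exactly at two-thirds (here C3, 40 of 60) is not lost to floating-point rounding.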

Results

Description of the Delphi surveys

In table 1, we present the characteristics of the Delphi panel. Out of 700 contacted experts, our response rate ranged between 19% and 33% across surveys (table 1). Participants came from 45 countries with representation from all EU-MS as well as from Albania, Belarus, Bosnia Herzegovina, Bulgaria, Iceland, Kosovo, Montenegro, Norway, Romania, Switzerland and Serbia (cf. Supplementary figure 2). There were also some working outside Europe: in Bangladesh, Ethiopia, Israel, Mexico, Pakistan, Saudi Arabia, Tunisia and Turkey. On average, 30% of the participants categorized their work as ‘doing research or teaching’, 19% as working in ‘health statistics and quality assurance’, and less than 10% in ‘public health policy’, ‘funding grants, health programs and research projects’, or ‘developing health information technology’. In Round 1, we also asked participants about their main interests and areas of work. They cited public health surveillance and statistical methods (27%), lifestyle and chronic diseases (25%), maternal and child health (15%), and health economics (13%) (see Supplementary figure 3).

Table 1.

Health Information (HI)-Impact Index Delphi survey characteristics

| | Survey 1 | Survey 2 | Survey 3 |
|---|---|---|---|
| Number of responses: total (of which fully completed) | 195 (93) | 228 (86) | 133 (82) |
| Number of criteria in each survey | 85 | 110 | 63 |
| Response rate in %, N = 700 | 27.9 | 32.6 | 19 |
| Country of occupation: % EU/Non-EU | 100/0 | 95.6/4.4 | 92.5/7.5 |
| Top 10 contributing countries (% contribution, N = 45 countriesᵃ) | | | |
| 1. Italy | 8.4% | | |
| 2. Portugal | 8.4% | | |
| 3. Belgium | 6.6% | | |
| 4. Switzerland | 6.6% | | |
| 5. Germany | 6.3% | | |
| 6. Spain | 6.1% | | |
| 7. UK | 5.6% | | |
| 8. France | 4.7% | | |
| 9. Austria | 4.5% | | |
| 10. Netherlands | 2.8% | | |
| Self-reported professional activities of survey participantsᵇ | | | |
| % Doing research, teaching | 29 | 31 | 29 |
| % Health statistics and quality assurance | 19 | 17 | 20 |
| % Writing about health issues | 14 | 13 | 14 |
| % Advocating for change, raising awareness | 8 | 8 | 7 |
| % Developing health information technology and data services | 8 | 7 | 7 |
| % Funding grants, health programs and research projects | 7 | 4 | 6 |
| % Making policies, laws | 6 | 7 | 5 |
| % Other | 6 | 7 | 4 |
| % Providing health care | 4 | 6 | 7 |
| % Financing health care (i.e. insurance) | 1 | 1 | 1 |

ᵃ Delphi panel participants came from 45 countries including all EU-MS as well as other European countries: Albania, Belarus, Bosnia Herzegovina, Bulgaria, Kosovo, Iceland, Montenegro, Norway, Romania, Switzerland and Serbia; and countries outside Europe: Bangladesh, Ethiopia, Israel, Mexico, Pakistan, Saudi Arabia, Tunisia and Turkey. See also map in Supplementary material.

ᵇ Participants could select more than one category.
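The response rates in Table 1 follow directly from the response counts and the 700 contacted experts. The short Python check below recomputes them; the function name `response_rate` is hypothetical, and the denominator of 700 is taken at face value even though snowball sampling means the true number of people reached may have been larger.

```python
INVITED = 700  # experts contacted, as stated in the text

# Total responses per survey, from Table 1.
responses = {"Survey 1": 195, "Survey 2": 228, "Survey 3": 133}


def response_rate(n_responses: int, n_invited: int = INVITED) -> float:
    """Response rate in percent, rounded to one decimal place."""
    return round(100 * n_responses / n_invited, 1)


rates = {survey: response_rate(n) for survey, n in responses.items()}
print(rates)  # {'Survey 1': 27.9, 'Survey 2': 32.6, 'Survey 3': 19.0}
```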

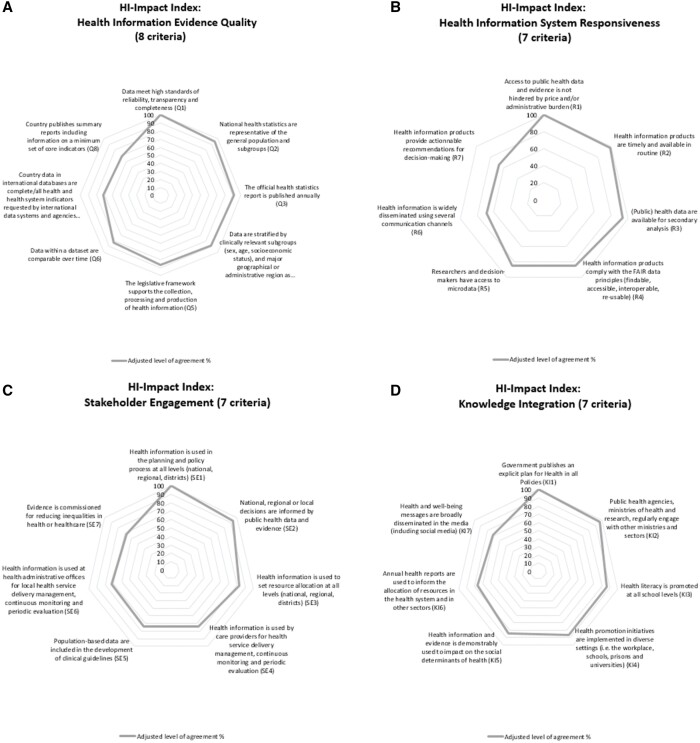

In Round 1, relevance ratings were high, indicating that the Delphi panel was satisfied with the preselection of criteria from the literature and existing tools. Overall, 24 out of 25 criteria in ‘HI Evidence Quality’, 20/24 in ‘HIS Responsiveness’, 13/20 in ‘Stakeholder Engagement’ and 14/16 in ‘Knowledge Integration’ advanced to Round 2. Participants also suggested 37 additional criteria (see Supplementary table 1). In Round 2, most criteria were rated as ‘Feasible’ or ‘Somewhat feasible’. The domain of ‘HI Evidence Quality’ had the highest feasibility ratings, while the domains of ‘Stakeholder Engagement’ and ‘Knowledge Integration’ had the lowest ratings. Finally, in Round 3, there were 23 criteria to choose from in ‘HI Evidence Quality’, 15 in ‘HIS Responsiveness’, 15 in ‘Stakeholder Engagement’ and 10 in ‘Knowledge Integration’. Based on our decision rule for Round 3 (at least 67% agreement), we accepted 29 criteria to include in the HI-Impact Index. The Delphi selection process is displayed in Supplementary figure 4, which shows the evolution in the number of criteria from Round 1 to Round 3, and the criteria are listed in Supplementary table 1. Figure 2 displays the criteria that were retained in the HI-Impact Index by level of agreement in the Delphi panel.

Figure 2.

HI-Impact Index criteria displayed by domain and level of agreement in the Delphi panel. Notes: The Health Information (HI)-Impact Index consists of 29 criteria to monitor knowledge translation capacity of national health information systems. The % level of agreement was adjusted using the highest rated criterion in each domain as reference.

The results of the sensitivity analyses are provided in Supplementary table 2. We found that a 75% cutoff was too stringent, as we would have limited the Index to 20 criteria only. Moreover, this mainly excluded criteria in the domain of ‘Stakeholder Engagement’ which defeats the purpose of this study. Similarly, looking at the 30 highest ratings overall, we would have included even more criteria in ‘HI Evidence Quality’ instead of monitoring ‘Stakeholder Engagement’ which is currently the main gap in the field.
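The effect of the stricter cutoff can be checked against the adjusted agreement percentages published in Table 2. The sketch below is an illustration under stated assumptions: the helper `count_retained` is hypothetical, and the input is limited to the 29 retained criteria. That limitation does not affect the 75% count, because any criterion reaching 75% also clears the two-thirds rule and therefore appears in Table 2.

```python
# Adjusted % agreement per retained criterion, copied from Table 2.
agreement = {
    "HI Evidence Quality": [100.0, 95.0, 91.7, 88.3, 86.7, 83.3, 71.7, 68.3],
    "HIS Responsiveness": [100.0, 98.3, 93.3, 85.0, 85.0, 68.3, 66.7],
    "Stakeholder Engagement": [100.0, 93.5, 82.3, 74.2, 74.2, 72.6, 67.7],
    "Knowledge Integration": [100.0, 97.0, 86.6, 86.6, 85.1, 76.1, 71.6],
}


def count_retained(cutoff: float) -> dict[str, int]:
    """Number of criteria per domain at or above the cutoff (in %)."""
    return {domain: sum(value >= cutoff for value in values)
            for domain, values in agreement.items()}


base = count_retained(100 * 2 / 3)  # two-thirds rule used in the study
strict = count_retained(75.0)       # more stringent sensitivity cutoff

print(sum(base.values()))    # 29
print(sum(strict.values()))  # 20

# Criteria lost per domain when moving from two-thirds to 75%.
dropped = {domain: base[domain] - strict[domain] for domain in agreement}
print(dropped)
```

Under the 75% cutoff, four of the nine dropped criteria come from ‘Stakeholder Engagement’, which is consistent with the observation above that this domain is the most affected.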

Synthesis of the HI-Impact Index: 29 accepted criteria

The criteria in the HI-Impact Index and the data sources from which they originated (i.e. from the literature, existing HIS tools or suggestion made by the Delphi panel) are listed in table 2. There are eight criteria to monitor the quality of HI data production, seven to monitor the level of access to evidence, seven to capture how HI is used for decision making in the health system, and seven on the use of evidence by civil society and across sectors. We have also added one last item to allow users to comment freely on knowledge translation capacity in their country.

Table 2.

The Health Information-Impact Index: 29 criteria to monitor the knowledge translation capacity of national health information systems

| HI-Impact framework domain | Number | Evaluation criteria (adapted from) | % agreement |
|---|---|---|---|
| HI Evidence Quality | Q1 | Data meet high standards of reliability, transparency and completeness9,22 | 100% (Ref: selected by 60/98 participants) |
| | Q2 | National health statistics are representative of the general population and subgroups9 | 95.0% |
| | Q3 | The official health statistics report is published annually12 | 91.7% |
| | Q4 | Data are stratified by clinically relevant subgroups (sex, age, socioeconomic status), and major geographical or administrative region as appropriate9 | 88.3% |
| | Q5 | The legislative framework supports the collection, processing and production of health informationᵃ | 86.7% |
| | Q6 | Data within a dataset are comparable over time9 | 83.3% |
| | Q7 | Country data in international databases are complete/all health and health system indicators requested by international data systems and agencies are provided (OECD, Eurostat, WHO)9 | 71.7% |
| | Q8 | Country publishes summary reports including information on a minimum set of core indicators12 | 68.3% |
| HIS Responsiveness | R1 | Access to public health data and evidence is not hindered by price and/or administrative burden9 | 100% (Ref: 60/86 participants) |
| | R2 | Health information products are timely and available in routine9,15 | 98.3% |
| | R3 | (Public) health data are available for secondary analysisᵃ | 93.3% |
| | R4 | Health information products comply with the FAIR data principles (findable, accessible, interoperable, re-usable)9 | 85.0% |
| | R5 | Researchers and decision-makers have access to microdataᵃ | 85.0% |
| | R6 | Health information is widely disseminated using several communication channels9 | 68.3% |
| | R7 | Health information products provide actionable recommendations for decision makingᵃ | 66.7% |
| Stakeholder Engagement | SE1 | Health information is used in the planning and policy process at all levels (national, regional, districts)12 | 100% (Ref: 62/83 participants) |
| | SE2 | National, regional or local decisions are informed by public health data and evidenceᵃ,16 | 93.5% |
| | SE3 | Health information is used to set resource allocation at all levels (national, regional, districts)12 | 82.3% |
| | SE4 | Health information is used by care providers for health service delivery management, continuous monitoring and periodic evaluation12 | 74.2% |
| | SE5 | Population-based data are included in the development of clinical guidelines9 | 74.2% |
| | SE6 | Health information is used at health administrative offices for local health service delivery management, continuous monitoring and periodic evaluation12 | 72.6% |
| | SE7 | Evidence is commissioned for reducing inequalities in health or healthcare9 | 67.7% |
| Knowledge Integration | KI1 | Government publishes an explicit plan for Health in all Policies (HiaP)ᵇ | 100% (Ref: 67/82 participants) |
| | KI2 | Public health agencies, ministries of health and research, regularly engage with other ministries and sectors9 | 97.0% |
| | KI3 | Health literacy is promotedᵃ | 86.6% |
| | KI4 | Health promotion initiatives are implemented in diverse settings (i.e. the workplace, schools, prisons and universities)ᵃ | 86.6% |
| | KI5 | Health information and evidence is demonstrably used to impact on the social determinants of healthᵃ | 85.1% |
| | KI6 | Annual health reports are used to inform the allocation of resources in the health system and in other sectorsᵃ | 76.1% |
| | KI7 | Health and well-being messages are broadly disseminated in the media (including social media)9 | 71.6% |
| Additional comments | ADD1 | Please provide any additional comments you might have on the uptake of evidence in policy development and practice | |

Note: Health information products include official publications with data on population health status, the determinants of health, and service use. In this table, the % level of agreement was adjusted using the highest rated criterion in each domain as reference.

ᵃ Criteria suggested by the HI-Impact Index Delphi panel in Round 1.

ᵇ From the Migrant Integration Policy Index http://www.mipex.eu/methodology.

The criteria in ‘HI Evidence Quality’ essentially address whether HI is fit for use by decision-makers. The panel agreed that this relies on assessing data quality (Q1,2,6) and production (Q3,5,7,8), and on whether the legislative framework supports prioritization in data collection activities (Q5). Other criteria in this domain address whether or not HIS generate complete information for population-based intervention (Q2,4).

In the domain of ‘HIS Responsiveness’, the panel agreed on criteria to examine the availability of HI to a broad range of stakeholders including researchers (R1,3,4), policy makers (R1,5–7), clinicians (R2), health advocates and patients (R6). As a guiding principle, the panelists agreed HI should be timely (R2), widely available and FAIR (findable, accessible, interoperable and reusable) (R4). These standards for data collection and reporting were developed in 2016 by Wilkinson et al.19

As reflected by criteria in the third domain of ‘Stakeholder Engagement’, the Delphi panel agreed on monitoring the nature of the interaction between HIS and key actors in the health system: clinicians (SE4,5), health policy planners (SE1–3,7) and community health managers (SE6), at the national, regional and local level. This is because knowledge translation relies on facilitated interactions between data providers and end-users.

Finally, the criteria in the fourth domain of ‘Knowledge Integration’ explore the use of evidence in sectors outside the health system (KI1,2,6), and the potential for embedding evidence within the broader political and social context (KI2).9 Criteria in this domain give an indication of how HIS contribute to targets in overarching frameworks such as Health in all Policies (KI1), or the Sustainable Development Goals (KI5).20,21 The criteria also address the extent to which HIS influence healthy choices by examining the promotion of health literacy (KI3), the role of the media (KI7), and the implementation of health promotion initiatives in diverse settings (KI4).

Piloting the HI-Impact Index

Next, we further piloted the HI-Impact Index within the InfAct Joint Action. The aim was to gain better insight into how the Index might be used by European (public) health agencies. We report here only on the process of carrying out the evaluation of knowledge translation capacity and not on the content of the individual country assessments, which will be reported at a later stage. Eight InfAct representatives from Croatia, France, Germany, Italy, Lithuania, Malta, Portugal and Slovenia, working in senior public health and governmental positions, participated in this exercise held during an InfAct Steering Committee meeting (List of Steering Committee members available here: https://www.inf-act.eu/project-oversight). The country representatives provided feedback on how ‘user-friendly’ they found the scoring sheet. Experts based their scores on their professional experience and personal opinions. Participants noted that the HI-Impact Index promotes transparency in the implementation of evidence-based approaches in public health policy and practice. They found that the HIS evaluation tool was easy to use, and that the time needed to complete the evaluation was acceptable. However, they asked for more specific evaluation instructions as to who should be responsible for conducting the evaluation (i.e. multistakeholder evaluation vs. single governing body), and which type of population health data sources should be covered by the evaluation. This pilot is a crucial step before deploying the evaluation more broadly across countries and providing international comparisons of knowledge translation capacity; this is an area for future work in collaboration with InfAct.

Discussion

Knowledge translation supports evidence-informed decision making and better health system performance. This is why, in 2016, the WHO presented an action plan to encourage the use of evidence for policy-making in the European Region.22–24 The distinctive contribution of this paper is that it seeks to monitor the uptake of evidence generated by national HIS based on a list of 29 criteria. Traditionally, national HIS are evaluated on the accuracy of the data provided and less on the use of HIS outputs (e.g. public health reports, dashboards). This study sheds light on an important new area of assessment by considering the return on investment of HI activities, and the societal impact of evidence. The web-based Delphi allowed us to develop an instrument that national and regional health agencies could use to set benchmarks for HI data production, dissemination and outreach. This objective is also in line with the 2019 OECD recommendations on data governance to maximize the social and economic value of evidence.25

Monitoring knowledge translation is an original endeavor within the scope of HIS evaluations.8,22,26,27 This study builds on a scoping review of knowledge translation frameworks8 as well as existing HIS evaluation tools.13–20 Furthermore, the criteria in the HI-Impact Index primarily target stakeholders knowledgeable about population health information and data within countries. In this context, it is of interest that these professionals made up the majority of the Delphi panel. Over 120 experts across European countries critically evaluated 85 criteria, and our response rate was over 25% in the first two rounds and 19% in the third (table 1). We further piloted the HI-Impact Index with national HI experts from the InfAct consortium, who gave positive feedback.

Yet, there were also several limitations. Inherent to the Delphi methodology, the expert panel drives the results of the consensus process. Had we used a different outreach strategy to constitute the panel, this may have led to another version of the HI-Impact Index. For example, several items relevant for HIS evaluation, on the costs of data collection and the impact of data privacy, were not retained by this Delphi panel. The fact that far fewer HI end-users (i.e. health policy makers and care providers) than data providers (i.e. public health information specialists) participated is also likely to have influenced the outcome of this study. Nonetheless, we have provided our list of preselected criteria at the start of the Delphi, which would allow other researchers to replicate this study and test the robustness of our results (see Supplementary table 1). We have also conducted sensitivity analyses (see Supplementary table 2).

The HI-Impact Index formalizes the need to monitor the use of evidence for better accessibility and availability in the health system. Our study confirms that now more than ever ‘just’ producing data is not enough; national health agencies need to envision ways to bring high-quality information center-stage into policy and practice.27 Scientific evidence and HI should also be available at the appropriate level of granularity (i.e. Q2/3), to facilitate targeted interventions in vulnerable subgroups (such as children or the elderly) and geographic areas; otherwise, the probability of implementing any intervention with confidence is reduced.8,28 This is especially true when there are large inequalities between regions, which lower the potential for national indicators to be relevant for decisions at the local level. Many countries have regional HIS, and cohesion funds used to implement policies and interventions are allocated to the NUTS II level.28

In the Delphi, experts also agreed that monitoring knowledge translation consists of examining what efforts have been made to disseminate evidence and engage stakeholders with it. HIS have a large role to play in ensuring that the best available evidence is accessible for decision making. We saw that during the COVID-19 pandemic, scientific evidence on potential risk factors and treatments was accompanied by an unprecedented infodemic, defined as an excessive amount of information about a problem that makes it difficult to identify solutions and retrieve reliable evidence, and that impedes the public health response. At the level of national HIS, the challenge is to develop customized communication and reporting strategies that meet the expectations of different types of stakeholders, as well as their health literacy level. Engaging a broad pool of key players in the health system with HI and actionable recommendations contributes to better knowledge translation, and might promote better equity in health as well (i.e. SE7).

Nonetheless, the process itself of conducting a HIS evaluation is complex. During the Delphi, participants recognized monitoring knowledge translation as highly relevant in Round 1, but ratings on the feasibility of the evaluation were lower in Round 2. This could be due to the fact that the range of data sources which contribute to a country's HIS is quite broad: electronic health records, routine data from health care facilities, records from professional documentation obligations. Each of these data sources has its own rules of procedure and governance frameworks operating at international (e.g. the EU General Data Protection Regulation, or Decision No 922/2009/EC on interoperability solutions in public administrations), national (e.g. quality assurance laws) or subnational level (e.g. collection of public vs. private healthcare data). Furthermore, the HI-Impact Index includes some criteria that are more subjective than others. In the domain of ‘HIS Responsiveness’ for instance, R7 relates to the extent to which data providers provide actionable recommendations for decision making. This criterion addresses whether technical experts can also champion health interventions per se, and views on this could differ based on the organizational structures and culture of the HIS in each country.24

The HI-Impact Index emphasizes the societal value of evidence, and could provide national HIS with a deeper understanding of how their outputs are used. That said, scientific evidence is not the only type of evidence that is relevant in health policy and practice. Based on our pilot within InfAct, the HI-Impact Index could be implemented in a modular approach to complement existing HIS evaluation tools, and to provide an overview of KT capacity for institutionalized knowledge brokers, such as the European Observatory on Health Systems and Policies29,30 or the Healthcare Knowledge Centre (KCE) in Belgium, which acts as an independent institution responsible for facilitating decision making and policy development.

In conclusion, the HI-Impact Index is a new tool that could provide a more balanced assessment of national HIS, one that goes beyond assessing the quality of data production and also looks into the uptake of evidence. It does so by proposing a reference framework and criteria to monitor, in a more systematic way, the communication strategy of HIS and stakeholder engagement. To explore the full potential of the HI-Impact Index, next steps consist of specifying the auditing rules and institutional agreements for conducting the evaluation within countries. Sharing results from implementing the HI-Impact Index could also contribute to the identification of best practices for HIS. Ultimately, it will be crucial to maintain a cyclic evaluation system that fosters a shared understanding between data providers and end-users in policy and practice.

Supplementary data

Supplementary data are available at EURPUB online.

Acknowledgements

We thank Rana Charafeddine and Elise Braekman from Sciensano for pretesting the Delphi surveys. We also acknowledge the InfAct consortium for participating in the pilot of the HI-Impact Index.

Funding

This work was supported by the Marie Skłodowska-Curie Actions [Individual fellowship GA No 795051]; the European Union’s Health Program 2014-2020 [GA No 801553]; the Foundation for Science and Technology, FCT [SFRH/BD/132218/2017]; UIDB/04084/2020.

Conflicts of interest

None declared.

Key points

We developed a tool, the Health Information (HI)-Impact Index, that European (public) health agencies could use to evaluate the routine production, dissemination and use of health information in policy and practice based on 29 criteria.

We conducted a Delphi with over 120 European public health experts to reach agreement on criteria to include in the HI-Impact Index. The HI-Impact Index was further pretested by InfAct, the EU Joint Action on Health Information, which includes 28 participating countries.

An important aspect of this study is that it provides a reference framework for monitoring knowledge translation capacity, which is a new area for Health Information System evaluation.

References

- (1). Hanney SR, Gonzalez-Block MA. ‘Knowledge for better health’ revisited - the increasing significance of health research systems: a review by departing Editors-in-Chief. Health Res Policy Syst 2017;15:81.

- (2). Verschuuren M, van BA, Rosenkotter N, et al. Towards an overarching European health information system. Eur J Public Health 2017;27:44–8.

- (3). Braithwaite J, Marks D, Taylor N. Harnessing implementation science to improve care quality and patient safety: a systematic review of targeted literature. Int J Qual Health Care 2014;26:321–9.

- (4). Eslami AA, Scheepers H, Rajendran D, Sohal A. Health information systems evaluation frameworks: a systematic review. Int J Med Inform 2017;97:195–209.

- (5). Straus SE, Tetroe J, Graham I. Defining knowledge translation. CMAJ 2009;181:165–8.

- (6). Levesque JF, Sutherland K. What role does performance information play in securing improvement in healthcare? a conceptual framework for levers of change. BMJ Open 2017;7:e014825.

- (7). AbouZahr C, Boerma T, Hogan D. Global estimates of country health indicators: useful, unnecessary, inevitable? Glob Health Action 2017;10:1290370.

- (8). Delnord M, Tille F, Abboud LA, et al. How can we monitor the impact of national health information systems? Results from a scoping review. Eur J Public Health 2020;30:648–59.

- (9). Peirson L, Catallo C, Chera S. The Registry of Knowledge Translation Methods and Tools: a resource to support evidence-informed public health. Int J Public Health 2013;58:493–500.

- (10). Cheetham M, Wiseman A, Khazaeli B, et al. Embedded research: a promising way to create evidence-informed impact in public health? J Public Health (Oxf) 2018;40:i64–i70.

- (11). Yost J, Dobbins M, Traynor R, et al. Tools to support evidence-informed public health decision making. BMC Public Health 2014;14:728.

- (12). World Health Organisation. Support tool to assess health information systems and develop and strengthen health information strategies. 2015. Available at: https://www.euro.who.int/en/publications/abstracts/support-tool-to-assess-health-information-systems-and-develop-and-strengthen-health-information-strategies (27 January 2021, date last accessed).

- (13). Sullivan TM, Strachan M, Timmons BK. Guide to Monitoring and Evaluating Health Information Products and Services. Baltimore, Maryland: Center for Communication Programs, Johns Hopkins Bloomberg School of Public Health, 2007.

- (14). Ohkubo S, Sullivan TM, Harlan SV, et al. Guide to Monitoring and Evaluating Knowledge Management in Global Health Programs. Baltimore, Maryland: Johns Hopkins Bloomberg School of Public Health, 2013.

- (15). Canadian Institute for Health Information. Canadian Data Source Assessment Tool. 2019. Available at: https://www.cihi.ca/sites/default/files/document/cihi-data-source-assessment-tool-en.pdf (27 January 2021, date last accessed).

- (16). Tudisca V, Valente A, Castellani T, et al.; REPOPA Consortium. Development of measurable indicators to enhance public health evidence-informed policy-making. Health Res Policy Syst 2018;16:47.

- (17). Elwyn G, O'Connor A, Stacey D, et al.; International Patient Decision Aids Standards (IPDAS) Collaboration. Developing a quality criteria framework for patient decision aids: online international Delphi consensus process. BMJ 2006;333:417.

- (18). Zeitlin J, Wildman K, Breart G, et al. PERISTAT: indicators for monitoring and evaluating perinatal health in Europe. Eur J Public Health 2003;13(3 Suppl):29–37.

- (19). Wilkinson MD, Dumontier M, Aalbersberg IJ, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 2016;3:160018.

- (20). Al-Mandhari A, El-Adawy M, Khan W, Ghaffar A. Health for all by all-pursuing multi-sectoral action on health for SDGs in the WHO Eastern Mediterranean Region. Global Health 2019;15:64.

- (21). Lawless A, Baum F, Delany-Crowe T, et al. Developing a framework for a program theory-based approach to evaluating policy processes and outcomes: health in all policies in South Australia. Int J Health Policy Manag 2017;7:510–21.

- (22). Blessing V, Davé A, Varnai P. Evidence on mechanisms and tools for use of health information for decision-making. 2017. Health Evidence Network synthesis report 54. Available at: http://www.euro.who.int/__data/assets/pdf_file/0011/351947/HEN-synthesis-report-54.pdf?ua=1 (27 January 2021, date last accessed).

- (23). WHO Regional Office for Europe. Action plan to strengthen the use of evidence, information and research for policy-making in the WHO European Region. 2016. Available at: https://www.euro.who.int/en/about-us/governance/regional-committee-for-europe/past-sessions/66th-session/documentation/working-documents/eurrc6612-action-plan-to-strengthen-the-use-of-evidence,-information-and-research-for-policy-making-in-the-who-european-region (27 January 2021, date last accessed).

- (24). van de Goor I, Hamalainen RM, Syed A, et al.; REPOPA Consortium. Determinants of evidence use in public health policy making: results from a study across six EU countries. Health Policy 2017;121:273–81.

- (25). Organisation for Economic Cooperation and Development. Enhancing Access to and Sharing of Data: Reconciling Risks and Benefits for Data Re-Use across Societies. Paris: OECD Publishing, 2019.

- (26). Aqil A, Lippeveld T, Hozumi D. PRISM framework: a paradigm shift for designing, strengthening and evaluating routine health information systems. Health Policy Plan 2009;24:217–28.

- (27). Martin K, Mullan Z, Horton R. Overcoming the research to policy gap. Lancet Glob Health 2019;7 Suppl 1:S1–S2.

- (28). Costa C, Freitas A, Stefanik I, et al. Evaluation of data availability on population health indicators at the regional level across the European Union. Popul Health Metr 2019;17:11.

- (29). Lessof S, Figuera J, McKee M, et al. Observatory 20th anniversary Special Issue: 20 years of evidence into practice. Eurohealth 2018;24:4–7.

- (30). Ward V, House A, Hamer S. Developing a framework for transferring knowledge into action: a thematic analysis of the literature. J Health Serv Res Policy 2009;14:156–64.
