Abstract

Background

Colonoscopy remains the gold-standard screening tool for colorectal cancer. However, significant polyp miss rates have been reported, particularly when multiple small adenomas are present. This presents an opportunity to leverage computer-aided systems to support clinicians and reduce the number of polyps missed.

Method

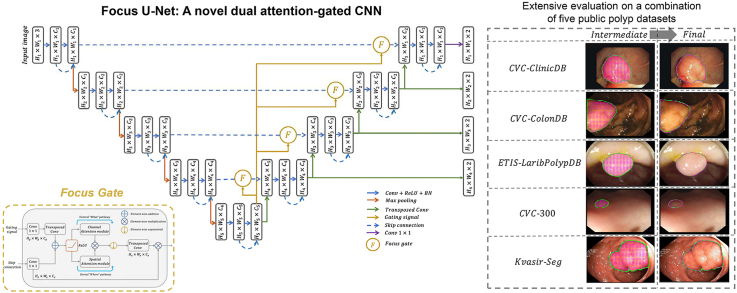

In this work we introduce the Focus U-Net, a novel dual attention-gated deep neural network, which combines efficient spatial and channel-based attention into a single Focus Gate module to encourage selective learning of polyp features. The Focus U-Net incorporates several further architectural modifications, including the addition of short-range skip connections and deep supervision. Furthermore, we introduce the Hybrid Focal loss, a new compound loss function based on the Focal loss and Focal Tversky loss, designed to handle class-imbalanced image segmentation. For our experiments, we selected five public datasets containing images of polyps obtained during optical colonoscopy: CVC-ClinicDB, Kvasir-SEG, CVC-ColonDB, ETIS-Larib PolypDB and EndoScene test set. We first perform a series of ablation studies and then evaluate the Focus U-Net on the CVC-ClinicDB and Kvasir-SEG datasets separately, and on a combined dataset of all five public datasets. To evaluate model performance, we use the Dice similarity coefficient (DSC) and Intersection over Union (IoU) metrics.

Results

Our model achieves state-of-the-art results for both CVC-ClinicDB and Kvasir-SEG, with a mean DSC of 0.941 and 0.910, respectively. When evaluated on a combination of five public polyp datasets, our model similarly achieves state-of-the-art results with a mean DSC of 0.878 and mean IoU of 0.809, relative improvements of 14% and 15% over the previous state-of-the-art results of 0.768 and 0.702, respectively.

Conclusions

This study shows the potential for deep learning to provide fast and accurate polyp segmentation results for use during colonoscopy. The Focus U-Net may be adapted for future use in newer non-invasive colorectal cancer screening and more broadly to other biomedical image segmentation tasks similarly involving class imbalance and requiring efficiency.

Keywords: Polyp segmentation, Colorectal cancer, Colonoscopy, Computer-aided diagnosis, Focus U-Net, Attention mechanisms, Loss function

Graphical abstract

Highlights

- Automatic polyp segmentation can support clinicians to reduce polyp miss rates.
- Focus U-Net combines efficient spatial and channel attention into a Focus Gate.
- Focus Gate uses a tunable focal parameter to control the degree of background suppression.
- Deep supervision and a new Hybrid Focal loss function further improve performance.
- Focus U-Net outperforms state-of-the-art results across five public polyp datasets.

1. Introduction

Globally, colorectal cancer (CRC) ranks third in terms of incidence, and second only to lung cancer as a leading cause of cancer death [1]. The absence of specific symptoms in the early stages of disease often results in delays in diagnosis and treatment, with the stage of disease at diagnosis strongly linked to prognosis. In the United States, the 5-year relative survival rate for Stage I colon cancer is 92%, decreasing to 12% in those with Stage IV [2].

In 1988, Vogelstein proposed the adenoma-carcinoma sequence model for CRC carcinogenesis, describing the transition from benign adenoma to adenocarcinoma with associated well-defined histology at each stage [3]. Importantly, there is a prolonged, identifiable and treatable preclinical phase lasting years prior to malignant transformation [4,5]. As a result, CRC is highly suitable for population level screening, which has been shown to be effective at reducing overall mortality [6,7].

Non-invasive CRC screening tests include stool-based tests, such as the faecal occult blood test, and more recent blood-based tests, such as Epi proColon® (Epigenomics AG, Berlin, Germany). Capsule colon endoscopy and CT colonography are newer, non-invasive radiological investigations useful for screening high-risk individuals unsuitable for colonoscopy. Invasive options include flexible sigmoidoscopy and colonoscopy, which offer direct visualisation and the ability to obtain biopsy specimens for histological analysis. Sigmoidoscopy is limited to examining the rectum, sigmoid and descending colon, whereas colonoscopy remains the gold-standard screening tool for CRC, with the highest sensitivity and specificity [8]. However, colonoscopy is associated with significant polyp miss rates, attributable to both patient- and polyp-related factors [[9], [10], [11]]. The risk of missing polyps increases significantly in patients with two or more polyps, and miss rates are higher for flat or sessile polyps than for pedunculated or sub-pedunculated polyps. Miss rates also depend strongly on polyp size, ranging from 2% for adenomas ≥ 10 mm to 26% for adenomas < 5 mm.

The difficulty in detecting polyps during colonoscopy presents an opportunity to incorporate computer-aided systems to reduce polyp miss rates [12]. Polyps may remain outside the field of view, a problem for which a real-time Artificial Intelligence (AI) model has been developed to assess the quality of colonoscopy [13]. Alternatively, polyps may enter the field of view but remain undetected by the operator. In this case, polyp segmentation approaches aim not only to detect polyps but also to accurately delineate the polyp border from the surrounding mucosa. Early automated methods to segment polyps relied on hand-crafted feature extraction, using either shape-based [[14], [15], [16]] or texture- and colour-based analysis [17,18]. While considerable advances were made, the accuracy of polyp segmentation remained low, as hand-crafted features were unable to capture the full extent of polyp heterogeneity [19].

This paper is structured as follows. Section 2 outlines the state-of-the-art of polyp segmentation on colonoscopy images. Section 3 describes the architecture of the proposed Focus U-Net. Section 4 describes the analysed datasets and the evaluation metrics used in this study. Section 5 presents the experimental results. Finally, Section 6 provides a discussion and concluding remarks.

2. Related work

In recent years, significant improvements have been achieved by adopting automatic methods based on deep learning. The introduction of Fully Convolutional Networks (FCNs) enabled Convolutional Neural Network (CNN) architectures to tackle semantic image segmentation tasks [20], and the application of FCNs to polyp segmentation has yielded impressive results [21,22]. Currently, state-of-the-art approaches are largely based on the U-Net, a modified FCN architecture developed for biomedical image segmentation [23]. The U-Net consists of an encoding network that captures the image context, followed by a symmetrical decoding network that enables localisation of salient regions. UNet++ extends the U-Net with a series of nested skip connections, reducing the semantic gap between the feature maps of the encoder and decoder networks prior to fusion [[24], [25], [26]]. ResUNet++ combines residual units with the spatial attention-based Atrous Spatial Pyramid Pooling (ASPP) module and the channel attention-based squeeze-and-excitation block [25,26]. Similarly, both attention components are incorporated into the DoubleU-Net, which further leverages transfer learning, with the first U-Net generating features that are passed as input to the second network [27]. Despite the excellent segmentation results of these models, their large memory footprint and associated long inference times limit their use in clinical practice, where real-time polyp segmentation is required. Recently, several efficient models with significantly faster inference times, as well as greater accuracy, have been proposed. Prioritising efficiency over performance, PolypSegNet introduces the depth dilated inception module, enabling efficient feature extraction across a range of receptive field sizes [28]. Similarly, ColonSegNet is a lightweight network that includes residual connections and channel attention to achieve real-time polyp segmentation [29].
PraNet uses a two-step process involving initial localisation of the polyp area followed by progressive refinement of the polyp boundary, resembling the way humans identify polyps [30]. HarDNet-MSEG uses a low memory traffic HarDNet68 backbone [31], together with a Cascaded Partial Decoder [32], for fast and accurate polyp segmentation. The progressively normalised self-attention network introduces a self-attention module that incorporates channel-split, query-dependent and normalisation rules to improve computational efficiency [33]. The feedback attention network (FANet) uses a form of hard attention based on iterative refinement using Otsu thresholding [34].

In this paper, we introduce a novel attention-gated U-Net architecture, named the Focus U-Net, which uses a new attention module known as the Focus Gate (FG), incorporating both spatial and channel-based attention with a focal parameter to control the degree of background suppression. Using this architecture, we achieve state-of-the-art results across five public polyp segmentation datasets. With an efficient and accurate polyp segmentation algorithm, we provide the latest advancement towards using AI in colonoscopy practice, with the aim of assisting clinicians by increasing polyp detection rates.

3. The proposed focus U-Net architecture

In this section, we introduce the techniques used in the Focus U-Net, beginning with the FG and associated channel and spatial attention modules, followed by explanations of deep supervision and loss function optimisation.

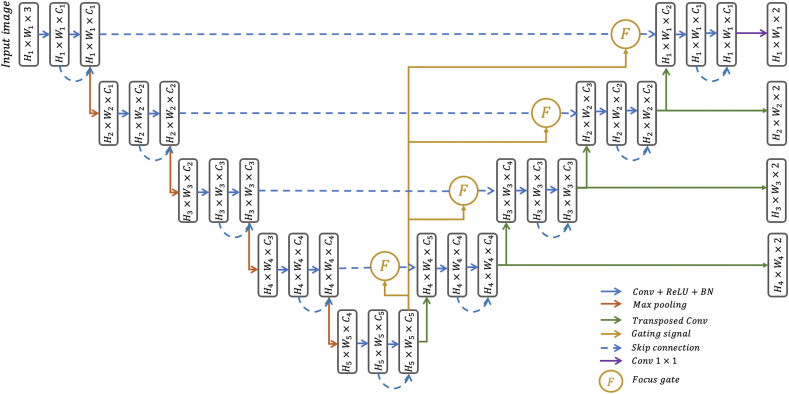

3.1. Overview of the focus U-Net

The architecture of the Focus U-Net is shown in Fig. 1. Similar to the U-Net, the Focus U-Net begins with an encoding network, capturing features relevant to polyps such as edges, texture and colour. The deepest layer of the network contains the richest information relating to image features, at the cost of spatial resolution, and forms the gating signal used as input into the FG. The FG uses the gating signal to refine incoming signals from the encoding network in the form of long-range skip connections, by highlighting specific image features and regions that are integrated into the decoding network. Successive upsampling in the decoding network enables polyp localisation at progressively higher resolution, with the final output producing the segmentation map defining, if present, the precise shape and location of the polyp. Short-range skip connections and deep supervision create additional pathways for information transfer, diversifying the features extracted and providing shortcuts for the loss to propagate backwards to the deeper layers when updating parameters.

Fig. 1.

Architecture of the proposed Focus U-Net. The gating signal originates from the deepest layer in the network and refines incoming skip connection input at each depth. Deep supervision is represented by the dark green arrows generating outputs at each depth.

3.2. Attention Gates and the Focus Gate

The concept of attention mechanisms in neural networks is inspired by cognitive attention, where relevant stimuli in the visual field are identified and selectively processed. In the context of neural networks, distinctions are made between hard and soft attention, as well as global and local attention [35,36]. Hard attention calculates attention scores for each region of the image to select the regions to attend. This requires a stochastic sampling process, which is non-differentiable and relies on reinforcement learning to update parameters [37]. In contrast, soft attention is deterministic and assigns higher weights to regions of interest (ROIs), with the benefit that this process is differentiable and therefore trainable by standard backpropagation [38,39]. The distinction between global and local attention refers to whether the whole input or only a subset of the input is attended [40]. For training of neural networks, a combination of soft and local attention is often favoured [41].

Attention Gates (AGs) provide neural networks with the capacity to selectively attend to inputs. AGs first originated in the context of machine translation as part of Natural Language Processing (NLP) [40,[42], [43], [44]], but have more recently also shown success in Computer Vision, with particular interest in the Attention U-Net for medical image segmentation [41,45].

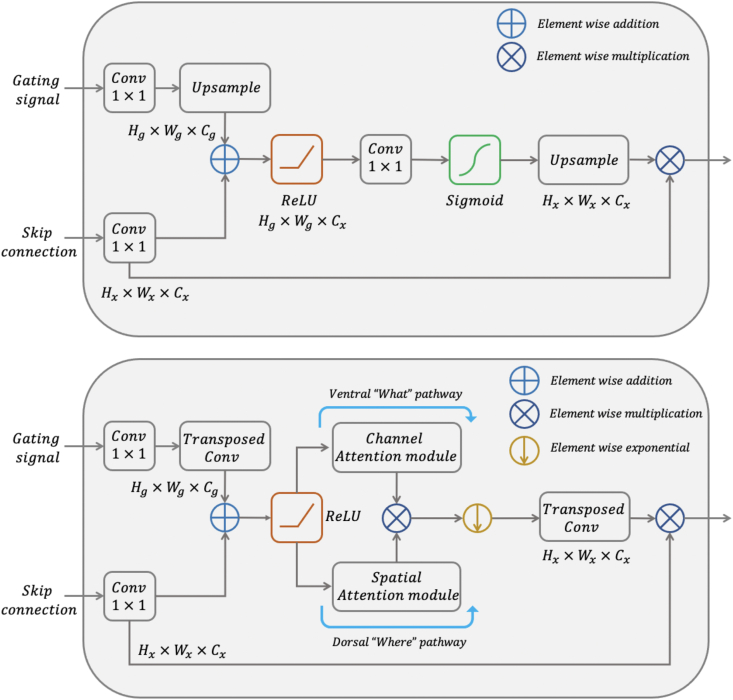

The structure of the additive AG is illustrated in Fig. 2 [41]. This AG receives two inputs: the gating signal and the skip connection generated at that level. The gating signal originates from the deepest layer of the neural network, where feature representation is richest at the cost of significant downsampling. In contrast, skip connections arise in more superficial layers, where feature representation is coarser but image resolution is relatively preserved. The AG uses contextual information from the gating signal to prune the skip connection, highlighting ROIs and thereby reducing false positive predictions. To accomplish this, the gating signal is first upsampled and the skip connection downsampled to equivalent dimensions, enabling element-wise addition. Although computationally more expensive, additive attention has been shown to achieve higher accuracy than multiplicative attention [40].

Fig. 2.

Top: schematic of the additive AG. The gating signal and skip connection are first resized and then combined to form attention coefficients. Multiplication of the original skip connection with the attention coefficients provides spatial context highlighting ROIs. Bottom: schematic of the Focus Gate. The gating signal and skip connection are first resized and then combined prior to spatial and object-related feature extraction. The attention coefficients pass through an additional focal filter controlling the degree of background suppression. Finally, multiplying the original skip connection with the attention coefficients provides both spatial and feature context highlighting regions and features of interest.

The resulting matrix is passed through a ReLU activation, followed by global average pooling along the channel axis and a final sigmoid activation, generating a matrix of attention weights, also known as the attention coefficients αᵢ ∈ [0, 1].

The final step is an element-wise multiplication of the upsampled attention coefficients with the original skip connection input, providing spatial context to the skip connection prior to fusion with outputs from the decoder network.
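The additive AG described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the gating signal is assumed to be already resampled to half the skip-connection resolution, plain matrix products stand in for learned 1 × 1 convolutions, strided sampling stands in for the strided convolution, and, following the description in the text, the channel axis is averaged before the sigmoid. The function name `additive_attention_gate` and all shapes are hypothetical.

```python
import numpy as np


def additive_attention_gate(x, g, w_x, w_g):
    """Hypothetical additive attention gate.

    x   : skip connection, shape (H, W, Cx), with H and W even
    g   : gating signal already resampled to (H // 2, W // 2, Cg)
    w_x : (Cx, Ci) weights standing in for a 1x1 convolution on x
    w_g : (Cg, Ci) weights standing in for a 1x1 convolution on g
    """
    # Downsample the skip connection by 2 and project both inputs to Ci channels.
    x_proj = x[::2, ::2, :] @ w_x                  # (H/2, W/2, Ci)
    g_proj = g @ w_g                               # (H/2, W/2, Ci)
    # Element-wise addition followed by ReLU.
    f = np.maximum(x_proj + g_proj, 0.0)
    # Average along the channel axis, then sigmoid: one coefficient per location.
    alpha = 1.0 / (1.0 + np.exp(-f.mean(axis=-1)))         # (H/2, W/2)
    # Nearest-neighbour upsampling back to the skip-connection resolution.
    alpha_up = alpha.repeat(2, axis=0).repeat(2, axis=1)   # (H, W)
    # Re-weight every channel of the original skip connection.
    return x * alpha_up[..., None], alpha_up


rng = np.random.default_rng(0)
skip = rng.standard_normal((8, 8, 4))
gate = rng.standard_normal((4, 4, 6))
gated, coeffs = additive_attention_gate(
    skip, gate, rng.standard_normal((4, 3)), rng.standard_normal((6, 3))
)
print(gated.shape, coeffs.shape)
```

Because the ReLU output is non-negative, averaging it and applying a sigmoid keeps every coefficient in [0.5, 1) in this literal sketch; the original Attention U-Net formulation instead applies a learned 1 × 1 convolution before the sigmoid, which allows coefficients close to 0.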

Before describing the FG, illustrated in the bottom of Fig. 2, we first describe two of its main components, namely the channel attention module and the spatial attention module.

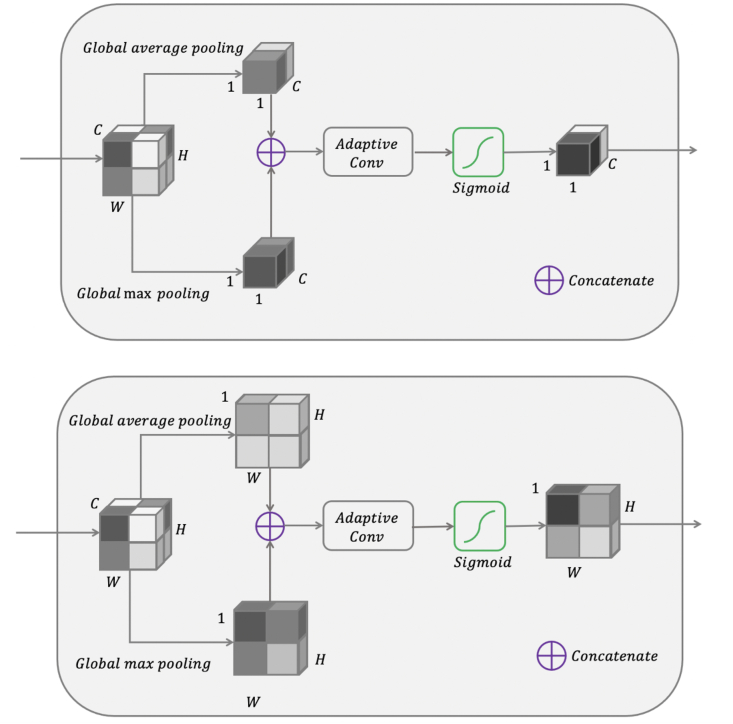

3.3. Channel attention module

The global average pooling operation in the additive AG extracts the spatial context used to localise ROIs. However, by pooling across the channel axis, the information conveyed by the channels about object features, such as edges and colour, is lost. Conversely, by assigning weights along the channel axis, channel interdependencies may be explicitly modelled, enabling networks to better recalibrate the features used for segmentation [[46], [47], [48], [49]]. Squeeze-and-excitation (SE) blocks achieve this by first aggregating features using global average pooling over the spatial axes, known as the ‘squeeze’ operation, followed by two fully connected layers with ReLU and sigmoid activations that form the ‘excitation’ operation [46,50]. The two fully connected layers involve dimensionality reduction to control model complexity, which trades off computation against performance. Efficient Channel Attention (ECA) [51] avoids dimensionality reduction by modelling cross-channel interaction with a 1-D convolution of adaptive kernel size k, defined by:

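$$k = \psi(C) = \left| \frac{\log_2(C)}{\gamma} + \frac{b}{\gamma} \right|_{odd} \tag{1}$$

where |t|_odd denotes the odd number nearest to t, C is the channel dimension, and b and γ are set to 2 and 1, respectively.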
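Eq. (1) can be evaluated directly. The helper below is a sketch that implements |t|_odd the way it is commonly coded for ECA (truncate t, then round an even result up to the next odd integer); the name `eca_kernel_size` is hypothetical, and b and γ are passed explicitly.

```python
import math


def eca_kernel_size(channels: int, gamma: float, b: float) -> int:
    """Adaptive 1-D kernel size k = |log2(C)/gamma + b/gamma|_odd (Eq. 1).

    |t|_odd is implemented by truncating t to an integer and
    bumping even values up to the next odd number.
    """
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


# Values stated in the text (b = 2, gamma = 1):
print([eca_kernel_size(c, gamma=1, b=2) for c in (32, 64, 128, 256, 512)])
# [7, 9, 9, 11, 11]
```

Using γ = 2 and b = 1, the defaults reported in the ECA paper, gives smaller kernels instead, e.g. k = 3 for C = 64 and k = 5 for C = 256.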

A separate insight incorporated into the Convolutional Block Attention Module (CBAM) for channel attention involves using a global max pooling operation in addition to global average pooling, providing two complementary spatial contexts prior to feature recalibration [48].

The channel module used in the FG is illustrated in Fig. 3. We extend the ideas provided by ECA and CBAM by using initial global average and global max pooling to generate two separate spatial contexts, followed by feature recalibration using an adaptive convolutional kernel size avoiding dimensionality reduction. Finally, a sigmoid activation redistributes the values between [0, 1], generating attention coefficients along the channel axis.

Fig. 3.

Top: schematic of the channel attention module used in the Focus Gate. Global max pooling and global average pooling generate two complementary spatial contexts prior to channel weighting. Bottom: schematic of the spatial attention module used in the Focus Gate. Global max pooling and global average pooling generate two complementary channel contexts prior to spatial context generation.
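A minimal NumPy sketch of the channel attention module described above follows. It assumes, as in CBAM, that one shared set of 1-D convolution weights is applied to both the average- and max-pooled channel descriptors and that the two results are summed before the sigmoid; the kernel length would normally be chosen with Eq. (1). Function and variable names are illustrative.

```python
import numpy as np


def channel_attention(x, kernel):
    """Hypothetical channel attention in the spirit of ECA + CBAM.

    x      : feature map of shape (H, W, C)
    kernel : 1-D weights of odd length k, shared by both pooled contexts
    Returns one attention coefficient per channel, shape (C,).
    """
    avg_ctx = x.mean(axis=(0, 1))   # global average pooling over H and W
    max_ctx = x.max(axis=(0, 1))    # global max pooling over H and W

    def conv1d(v):
        # Same-padded 1-D cross-correlation across neighbouring channels;
        # no fully connected layers, so no dimensionality reduction.
        return np.convolve(v, kernel[::-1], mode="same")

    logits = conv1d(avg_ctx) + conv1d(max_ctx)
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid: values in (0, 1)


rng = np.random.default_rng(0)
feat = rng.standard_normal((6, 8, 16))
weights = channel_attention(feat, kernel=np.array([0.2, 0.5, 0.3]))
recalibrated = feat * weights  # broadcasts the (C,) weights over H and W
print(weights.shape, recalibrated.shape)
```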

3.4. Spatial attention module

Complementary to the channel attention module, spatial attention modules aggregate features along the channel axis [47,48,52]. While dimensionality reduction is not an issue for spatial attention modules, replacing fully connected layers with a convolutional layer introduces an additional kernel size parameter. Larger kernel sizes provide a larger receptive field and better performance, but at the cost of computational efficiency [52]. The spatial attention module used in the FG is illustrated in Fig. 3. Again, we extend the ideas provided by ECA and CBAM by using initial global average and global max pooling along the channel axis, generating two separate channel contexts, followed by spatial recalibration with an adaptive convolutional kernel size. Unlike the setting of ECA, the spatial dimension here is inversely proportional to the channel dimension, and therefore we modify the original equation and determine the kernel size k for the spatial attention module by:

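$$k = \psi(C) = \left| \frac{\log_2\left( C_{max} \cdot C_0 / C \right)}{\gamma} + \frac{b}{\gamma} \right|_{odd} \tag{2}$$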

where Cmax is the maximum channel dimension of the network, C0 is the channel dimension of the first layer, and C is the channel dimension of the current layer. The parameters b and γ are set to 2 and 1, respectively [51]. This provides an efficient compromise by scaling the kernel size in proportion to the input dimension, with larger kernel sizes reserved for larger inputs.
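The sketch below computes spatial kernel sizes and a spatial attention map. It assumes that Eq. (2) substitutes Cmax · C0 / C for C in Eq. (1), so that the largest kernels are used at the shallowest, highest-resolution layers, and, as in CBAM, that the average- and max-pooled maps are treated as the two input channels of one k × k convolution. The channel widths (C0 = 64, Cmax = 1024) and all function names are hypothetical.

```python
import math

import numpy as np


def spatial_kernel_size(c, c0, c_max, gamma, b):
    """Kernel size, assuming Eq. (2) substitutes C_max * C0 / C for C in Eq. (1)."""
    c_eff = c_max * c0 / c
    t = int(abs((math.log2(c_eff) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def conv2d_same(img, kernel):
    """Zero-padded 2-D cross-correlation with an odd square kernel."""
    p = kernel.shape[0] // 2
    padded = np.pad(img, p)
    out = np.zeros(img.shape, dtype=float)
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def spatial_attention(x, kernels):
    """x: (H, W, C); kernels: (2, k, k) weights for the average and max maps."""
    avg_map = x.mean(axis=-1)   # pool along the channel axis -> (H, W)
    max_map = x.max(axis=-1)
    logits = conv2d_same(avg_map, kernels[0]) + conv2d_same(max_map, kernels[1])
    return 1.0 / (1.0 + np.exp(-logits))  # one coefficient per pixel


print([spatial_kernel_size(c, 64, 1024, gamma=1, b=2) for c in (64, 128, 256, 512, 1024)])
# [13, 11, 11, 9, 9]
```

With these assumptions the kernel shrinks from 13 × 13 at the first layer to 9 × 9 at the deepest layer, matching the intent of reserving larger kernels for larger inputs.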

3.5. Focus gate

Having introduced both spatial and channel attention modules, in this section we describe the structure of the FG (Fig. 2).

Similar to the AG, the gating signal is generated from the deepest layer of the network, and the skip connection and gating signal are resampled to matching dimensions; the difference is that upsampling is performed by a transposed convolution with learnable kernel weights. Following element-wise addition and non-linear activation, spatial and channel attention coefficients are computed in parallel, analogous to the dorsal “where” and ventral “what” pathways, respectively, of the two-streams hypothesis of visual processing [53]. The spatial and channel attention coefficients are combined by element-wise multiplication and passed through a tunable filter, an element-wise power parameterised by the focal parameter, prior to resampling.

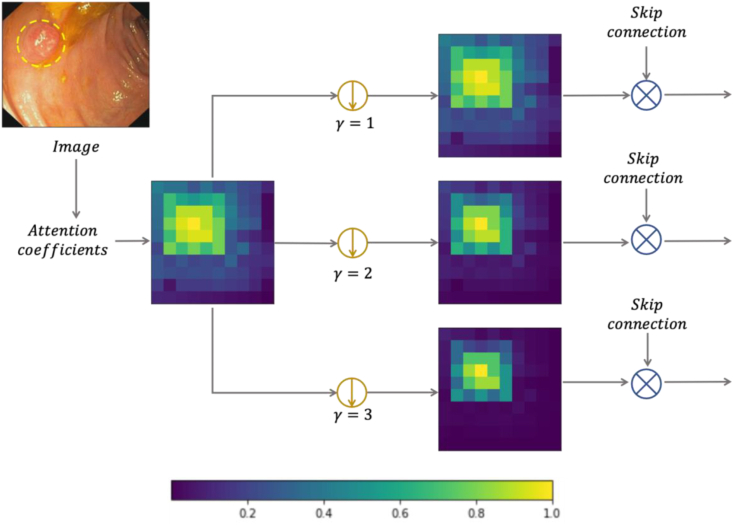

The concept of a focal parameter originates from work on loss function optimisation, where the contributions of easy examples are down-weighted to enable the learning of harder examples [54,55]. Here, we apply the focal parameter to the matrix of attention coefficients, enhancing the contrast between foreground and background objects by controlling the degree of background suppression. Following sigmoid activation, all attention coefficients lie in the range αᵢ ∈ [0, 1]. Higher values of the focal parameter therefore strongly reduce the weights of irrelevant regions and features, while salient regions and important features, whose coefficients are close to 1, are relatively spared. The effect of altering the focal parameter is illustrated in Fig. 4.

Fig. 4.

The effect of modifying the focal parameter in the Focus Gate. The brighter colours in the heatmaps are associated with higher activations, here corresponding to the polyp location. Higher focal parameter values lead to increased background suppression.

Careful tuning of the focal parameter is required to suppress background regions while preserving attention at the borders between foreground and background, where attention coefficients take intermediate values.
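To make the effect of the focal parameter concrete, the sketch below combines a spatial map and a channel vector by broadcast element-wise multiplication and raises the result to the power γ. Resampling is omitted, the function names are hypothetical, and the toy coefficients (0.9 for a polyp pixel, 0.3 for a background pixel) are made up for illustration.

```python
import numpy as np


def focal_filter(spatial_att, channel_att, focal_gamma):
    """Combine (H, W) spatial and (C,) channel coefficients, then apply the focal power."""
    combined = spatial_att[..., None] * channel_att[None, None, :]  # (H, W, C), in [0, 1]
    return combined ** focal_gamma


def focus_gate_reweight(skip, spatial_att, channel_att, focal_gamma):
    """Multiply the skip connection (H, W, C) by the filtered coefficients."""
    return skip * focal_filter(spatial_att, channel_att, focal_gamma)


spatial = np.array([[0.9, 0.3]])   # toy 1 x 2 map: polyp pixel, background pixel
channel = np.array([1.0])          # single channel, left unchanged
for g in (1.0, 2.0, 3.0):
    att = focal_filter(spatial, channel, g)[0, :, 0]
    print(g, att, att[0] / att[1])
```

Since (0.9 / 0.3)^γ = 3^γ, the foreground-to-background ratio grows from 3 to 9 to 27 as γ goes from 1 to 3, while a border pixel with coefficient 0.5 would fall from 0.5 to 0.125, which is why γ needs careful tuning.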

3.6. Deep supervision

The vanishing and exploding gradient problems are well-recognised issues when training deep CNNs [56,57]. The Focus U-Net incorporates two separate, complementary mechanisms to overcome them. Firstly, short-range skip connections, in addition to the long-range skip connections characteristic of the U-Net, allow the error signal to propagate to earlier layers more directly. However, this comes at the cost of computational efficiency, and therefore in the Focus U-Net we leverage the filter factorisation introduced by the Inception network and incorporated into the MultiResUNet, providing an efficient implementation while maintaining performance gains [58,59].
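The saving from filter factorisation can be checked with simple arithmetic: stacking three stride-1 3 × 3 convolutions, in the spirit of the MultiResUNet's MultiRes block, covers the same 7 × 7 receptive field as a single 7 × 7 convolution but with fewer weights. The sketch assumes equal input and output channels (64, chosen arbitrarily) and ignores biases; the helper names are hypothetical.

```python
def stacked_receptive_field(n_layers: int, k: int = 3) -> int:
    """Receptive field of n stacked stride-1 k x k convolutions."""
    return 1 + n_layers * (k - 1)


def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights in one k x k convolution layer, biases ignored."""
    return k * k * c_in * c_out


C = 64  # arbitrary example channel count
print(stacked_receptive_field(3))                          # 7
print(conv_weights(7, C, C), 3 * conv_weights(3, C, C))    # 200704 110592
```

The factorised stack therefore needs about 45% fewer weights (27C² versus 49C²) for the same receptive field.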

Secondly, deep supervision encourages semantic discrimination of intermediate feature maps at each level by assigning a loss to the outputs of multiple layers [60,61]. Weighting all outputs equally produces sub-optimal results, because training converges to solutions that favour the performance of the deeper layers at the expense of the final layer. To overcome this, more complex solutions have been developed, such as multi-scale training [55] or fine-tuning using a fully connected layer [41]. To preserve efficiency, we instead assign weights w to the different output layers according to Eq. (3):

$$w = \frac{1}{\text{stride length} \times \text{stride width}} \tag{3}$$

where the stride length and width refer to the final transposed convolution stride dimensions required to resample the feature map to the original image dimension.

Intuitively, higher weights are therefore assigned to the layers requiring a smaller degree of upsampling, with the greatest weight assigned to the final output, followed by an exponential decrease in weighting with increasing depth of the network.
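Eq. (3) is assumed here to be w = 1 / (stride length × stride width). Under the further assumption that the output at depth d is resampled with a 2^d × 2^d stride, the weights fall by a factor of 4 per level, as the sketch below shows. The helper names are hypothetical.

```python
def deep_supervision_weights(n_outputs: int):
    """Assumed Eq. (3): w = 1 / (stride length * stride width), with a 2**d x 2**d
    stride needed to upsample the output at depth d (d = 0 is the final output)."""
    return [1.0 / (2 ** d * 2 ** d) for d in range(n_outputs)]


def deep_supervision_loss(per_output_losses):
    """Weighted sum of the losses computed at each supervised output."""
    weights = deep_supervision_weights(len(per_output_losses))
    return sum(w * loss for w, loss in zip(weights, per_output_losses))


print(deep_supervision_weights(5))  # [1.0, 0.25, 0.0625, 0.015625, 0.00390625]
```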

3.7. Hybrid Focal loss

The training of neural networks is based on solving the optimisation problem defined by the loss function. For semantic segmentation tasks, a popular choice of loss function is the sum of the Dice similarity coefficient (DSC) loss and cross entropy (CE) loss:

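$$\mathcal{L}_{DSC\text{-}CE} = \mathcal{L}_{DSC} + \mathcal{L}_{CE} \tag{4}$$

with:

$$\mathcal{L}_{DSC} = 1 - DSC, \qquad DSC = \frac{2TP}{2TP + FP + FN} \tag{5}$$

$$\mathcal{L}_{CE} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(\hat{y}_i) + (1 - y_i) \log(1 - \hat{y}_i) \right] \tag{6}$$

where TP, FP and FN refer to true positives, false positives and false negatives, respectively, ŷᵢ is the predicted value and yᵢ the ground truth label for pixel i, and N is the number of pixels.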
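A plain-Python sketch of the DSC + CE loss for a single binary mask follows. It uses the usual soft formulation, in which TP is replaced by Σ yᵢŷᵢ and 2TP + FP + FN by Σ yᵢ + Σ ŷᵢ, and adds a small ε (an assumption) to avoid division by zero and log(0). The paper's models use a two-class softmax output, so this single-channel version and its function names are a simplification.

```python
import math

EPS = 1e-7  # assumed smoothing constant


def dice_loss(y_true, y_pred):
    """Soft Dice loss, 1 - DSC, with probabilities in place of hard counts."""
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    denominator = sum(y_true) + sum(y_pred)
    return 1.0 - (2.0 * intersection + EPS) / (denominator + EPS)


def binary_cross_entropy(y_true, y_pred):
    """Mean binary cross entropy over N pixels, Eq. (6)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1.0 - EPS)  # avoid log(0)
        total += t * math.log(p) + (1 - t) * math.log(1 - p)
    return -total / len(y_true)


def dsc_ce_loss(y_true, y_pred):
    """Eq. (4): sum of the Dice and cross-entropy losses."""
    return dice_loss(y_true, y_pred) + binary_cross_entropy(y_true, y_pred)


# A maximally uncertain prediction on a half-foreground mask:
print(dsc_ce_loss([1, 1, 0, 0], [0.5, 0.5, 0.5, 0.5]))  # about 0.5 + ln 2
```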

However, for class-imbalanced tasks such as polyp segmentation, training with the Dice loss often produces segmentations with high precision but low recall [62]. By weighting false negative predictions more heavily, the Tversky loss improves the balance between recall and precision:

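$$\mathcal{L}_{T} = 1 - TI \tag{7}$$

where the Tversky index (TI) is defined as:

$$TI = \frac{\sum_{i=1}^{N} p_{0i}\, g_{0i}}{\sum_{i=1}^{N} p_{0i}\, g_{0i} + \alpha \sum_{i=1}^{N} p_{0i}\, g_{1i} + \beta \sum_{i=1}^{N} p_{1i}\, g_{0i}} \tag{8}$$

where p0i is the probability of pixel i belonging to the foreground class and p1i is the probability of pixel i belonging to the background class; g0i is 1 for foreground and 0 for background, and conversely g1i is 1 for background and 0 for foreground. The hyperparameters α and β weight false positives and false negatives, respectively.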

Complementary to weighting the positive and negative examples, applying focal parameters to both the Tversky and cross entropy loss enables the downweighting of background objects in favour of foreground object segmentation, and produces the Focal Tversky loss and Focal loss, respectively [54,55]:

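$$\mathcal{L}_{FTL} = (1 - TI)^{\gamma} \tag{9}$$

$$\mathcal{L}_{F} = -\alpha (1 - p_t)^{\gamma} \log(p_t) \tag{10}$$

where pₜ is the predicted probability of the ground truth class, α controls the class weights, and γ is the focal parameter.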

Finally, we define the Hybrid Focal loss (HFL) as the sum of the Focal Tversky loss and Focal loss:

$$\mathcal{L}_{HFL} = \mathcal{L}_{FTL} + \mathcal{L}_{F} \tag{11}$$

To mitigate suppression of the loss near convergence, we supervise the last layer without the focal parameters [55].
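The loss terms of Eqs. (8) to (11) can be sketched for one binary mask using the hyperparameters listed in Section 4.2 (α = 0.3 and β = 0.7 for the Tversky index, γ = 3/4 for the Focal Tversky loss, α = 0.25 and γ = 2 for the Focal loss). This is an illustration, not the authors' code: it assumes the usual Focal loss convention of weighting the foreground by α and the background by 1 − α, and interprets "without the focal parameters" as setting the Focal Tversky exponent to 1 and the Focal loss exponent to 0. All function names are hypothetical.

```python
import math

EPS = 1e-7  # assumed smoothing constant


def tversky_index(y_true, y_pred, alpha=0.3, beta=0.7):
    """Eq. (8) for a binary mask: p0 = y_pred, p1 = 1 - y_pred, g0 = y_true, g1 = 1 - y_true."""
    tp = sum(p * g for p, g in zip(y_pred, y_true))
    fp = sum(p * (1 - g) for p, g in zip(y_pred, y_true))   # weighted by alpha
    fn = sum((1 - p) * g for p, g in zip(y_pred, y_true))   # weighted by beta
    return (tp + EPS) / (tp + alpha * fp + beta * fn + EPS)


def focal_tversky_loss(y_true, y_pred, gamma=0.75):
    """Eq. (9); gamma = 1 recovers the plain Tversky loss of Eq. (7)."""
    return (1.0 - tversky_index(y_true, y_pred)) ** gamma


def focal_loss(y_true, y_pred, alpha=0.25, gamma=2.0):
    """Eq. (10) averaged over pixels; gamma = 0 gives alpha-weighted cross entropy."""
    total = 0.0
    for g, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1.0 - EPS)
        p_t = p if g == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if g == 1 else 1.0 - alpha  # assumed class-weight convention
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(y_true)


def hybrid_focal_loss(y_true, y_pred, focal=True):
    """Eq. (11); focal=False mimics supervising the last layer without focal parameters."""
    if focal:
        return focal_tversky_loss(y_true, y_pred) + focal_loss(y_true, y_pred)
    return focal_tversky_loss(y_true, y_pred, gamma=1.0) + focal_loss(y_true, y_pred, gamma=0.0)


print(hybrid_focal_loss([1, 1, 0, 0], [0.8, 0.4, 0.3, 0.1]))
```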

4. Materials and evaluation methods

4.1. Dataset descriptions

We assess the ability of the Focus U-Net to accurately segment polyps using five public datasets containing images of polyps taken during optical colonoscopy: CVC-ClinicDB [63], Kvasir-SEG [64], CVC-ColonDB [65], ETIS-Larib PolypDB [66] and EndoScene test set (CVC-T) [67]. CVC-ClinicDB, CVC-ColonDB, ETIS-Larib PolypDB and CVC-T were created by the Hospital Clinic, Universidad de Barcelona, Spain, while the Kvasir-SEG database was produced by the Vestre Viken Health Trust, Norway.

The CVC-ClinicDB database consists of 612 frames containing polyps with image resolution 288 × 384 pixels, generated from 23 video sequences from 13 different patients using standard optical colonoscopy interventions. The Kvasir-SEG database consists of 1000 polyp images collected and verified by experienced gastroenterologists, with image sizes varying from 332 × 487 to 1920 × 1072 pixels. CVC-ColonDB consists of 300 images of resolution 500 × 574 pixels obtained from 15 video sequences, with a random sample of 20 frames per sequence. ETIS-Larib PolypDB similarly consists of 300 images, with image resolution 1225 × 966 pixels. Lastly, CVC-T consists of 182 frames containing polyps from 8 patients, derived from the CVC-ClinicDB and CVC-ColonDB datasets, with image resolutions of 288 × 384 or 500 × 574 pixels.

4.2. Experimental setup and implementation details

For our experiments, we use the Medical Imaging Segmentation with Convolutional Neural Networks (MIScnn) open-source Python library [68]. For all datasets, images and associated ground truth masks are provided in PNG format. Following the pre-processing used in previous models [30,31], we resize Kvasir-SEG images to 512 × 512 pixels, and resize the images of all other datasets to 288 × 384 pixels. Pixel values are normalised to [0, 1] using the z-score. We perform full-image analysis with a batch size of 16, except for the Kvasir-SEG dataset, where the larger image size required the batch size to be reduced to 8. We use the Focus U-Net architecture as described previously, with a final softmax activation layer.

For the ablation studies, we use the CVC-ClinicDB dataset with five-fold cross validation using random assignment. We evaluate the baseline performance of the U-Net [23] and Attention U-Net [41], and then assess performance as the Focus Gate, short-range skip connections, Hybrid Focal loss and deep supervision are added in turn. As in the hyperparameter selection for Focal loss functions [54,55,69], we perform a grid search over the focal parameter γ ∈ [1,3]. Model parameters are initialised with Xavier initialisation, and each model is trained for 100 epochs using Stochastic Gradient Descent with Nesterov momentum (μ = 0.99). We set the initial learning rate to 0.01 and follow a polynomial learning rate decay schedule [70]:

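$$\eta = \eta_0 \left( 1 - \frac{\text{epoch}}{\text{epoch}_{max}} \right)^{0.9} \tag{12}$$

where η₀ = 0.01 is the initial learning rate and epoch_max is the total number of training epochs.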
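The polynomial schedule of Eq. (12) amounts to a one-line function. The exponent 0.9 is an assumption (a value commonly used with polynomial decay), as is the function name `poly_learning_rate`.

```python
def poly_learning_rate(epoch, max_epochs, initial_lr=0.01, power=0.9):
    """Polynomial learning rate decay; power=0.9 is an assumed exponent."""
    return initial_lr * (1.0 - epoch / max_epochs) ** power


# Learning rate at a few points of a 100-epoch ablation run:
print([round(poly_learning_rate(e, 100), 5) for e in (0, 25, 50, 75, 99)])
```

In Keras, such a function could be wrapped (e.g. `lambda epoch, lr: poly_learning_rate(epoch, 100)`) and passed to `tf.keras.callbacks.LearningRateScheduler`, which calls it at the start of each epoch.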

For fairer comparison, we do not apply any data augmentation techniques at this stage.

In contrast, when aiming for state-of-the-art results on the CVC-ClinicDB dataset, we train our final model using five-fold cross validation for 500 epochs with the following data augmentation techniques: scaling, rotation, elastic deformation, mirroring and gamma transformations. For evaluation on the Kvasir-SEG dataset and on the combination of all five public datasets, we follow the single train-test split used in Refs. [30,31] and train each model for 1000 epochs with the same data augmentation settings, to enable reproducibility and comparison with previous results.

For the Hybrid Focal loss, we follow the optimal hyperparameters reported in the original studies. We set α = 0.3 and β = 0.7 for the Tversky index, α = 0.25, γ = 2 for the Focal loss and α = 0.3, β = 0.7 and γ = 3/4 for the Focal Tversky loss [54,55,62].

In all cases, the validation loss is evaluated at the end of each epoch, and the model with the lowest validation loss is selected as the final model. All experiments are implemented using Keras with a TensorFlow backend and trained on NVIDIA P100 GPUs, with CUDA version 10.2 and cuDNN version 7.6.5. Source code is available at: https://github.com/mlyg/Focus-U-Net.

4.3. Evaluation metrics

To assess segmentation accuracy, we follow recommendations from Ref. [64] and use DSC and intersection over union (IoU) as the two main metrics. DSC is defined in Eq. (5), and IoU is defined as:

$$IoU = \frac{TP}{TP + FP + FN} \tag{13}$$

The IoU metric penalises single instances of poor pixel classification more heavily than DSC, providing similar but complementary perspectives on assessing segmentation accuracy. We further assess recall and precision:

$$Recall = \frac{TP}{TP + FN} \tag{14}$$

$$Precision = \frac{TP}{TP + FP} \tag{15}$$

In the context of polyp segmentation, recall, also known as sensitivity, measures the proportion of pixels belonging to the polyp that are correctly identified. In contrast, precision, also known as the positive predictive value, measures the proportion of pixels labelled as polyp that truly belong to the polyp. While both are accounted for in the DSC metric, reporting recall and precision separately provides additional information about false negative and false positive predictions, respectively.

Finally, we evaluate model efficiency by calculating the frames per second (FPS) using the mean inference time:

$$FPS = \frac{1}{\bar{t}} \tag{16}$$

where t̄ is the mean inference time per image, in seconds.
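The metrics of Eqs. (5) and (13) to (16) can be computed from confusion counts, as in the plain-Python sketch below. Masks are assumed to be flattened binary lists, empty masks (which would cause division by zero) are not handled, and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """TP, FP, FN for flattened binary masks (1 = polyp, 0 = background)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn


def segmentation_metrics(tp, fp, fn):
    """DSC, IoU, recall and precision from Eqs. (5) and (13) to (15)."""
    return {
        "dsc": 2 * tp / (2 * tp + fp + fn),
        "iou": tp / (tp + fp + fn),
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
    }


def frames_per_second(inference_times_s):
    """Eq. (16): reciprocal of the mean per-image inference time in seconds."""
    return len(inference_times_s) / sum(inference_times_s)


print(segmentation_metrics(*confusion_counts([1, 1, 1, 0, 0], [1, 1, 0, 1, 0])))
```

For a single image IoU = DSC / (2 − DSC), which is why IoU never exceeds DSC; averaged scores such as mDSC and mIoU are not related by exactly the same formula.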

5. Experimental results

We first perform a series of ablation studies to evaluate individual components of the Focus U-Net, followed by separate evaluations on the CVC-ClinicDB and Kvasir-SEG datasets, and finally evaluate against a test set combining five public polyp datasets.

The results from the ablation study are shown in Table 1.

Table 1.

Results from training on the CVC-ClinicDB dataset for the U-Net, Attention U-Net and Focus U-Net. The best result is obtained with the addition of the Focus Gate, short skip connections, deep supervision and the Hybrid Focal loss.

| Model | Loss function | Focal parameter γ | Short skip connections | Deep supervision | mDSC | mIoU | Recall | Precision | FPS |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | DSC + CE | – | ✗ | ✗ | 0.828 ± 0.021 | 0.747 ± 0.022 | 0.817 ± 0.024 | 0.877 ± 0.021 | 27 |
| Attention U-Net | DSC + CE | – | ✗ | ✗ | 0.801 ± 0.019 | 0.705 ± 0.023 | 0.799 ± 0.012 | 0.844 ± 0.030 | 25 |
| Focus U-Net | DSC + CE | 1 | ✗ | ✗ | 0.838 ± 0.018 | 0.755 ± 0.018 | 0.833 ± 0.016 | 0.876 ± 0.028 | 25 |
| Focus U-Net | DSC + CE | 1.25 | ✗ | ✗ | 0.844 ± 0.011 | 0.762 ± 0.011 | 0.844 ± 0.018 | 0.876 ± 0.020 | 25 |
| Focus U-Net | DSC + CE | 1.5 | ✗ | ✗ | 0.842 ± 0.025 | 0.755 ± 0.027 | 0.845 ± 0.038 | 0.866 ± 0.016 | 25 |
| Focus U-Net | DSC + CE | 2 | ✗ | ✗ | 0.817 ± 0.025 | 0.728 ± 0.030 | 0.817 ± 0.023 | 0.859 ± 0.026 | 25 |
| Focus U-Net | DSC + CE | 3 | ✗ | ✗ | 0.825 ± 0.022 | 0.736 ± 0.022 | 0.820 ± 0.035 | 0.863 ± 0.022 | 25 |
| Focus U-Net | DSC + CE | 1.25 | ✓ | ✗ | 0.867 ± 0.018 | 0.800 ± 0.011 | 0.852 ± 0.023 | 0.908 ± 0.017 | 25 |
| Focus U-Net | HFL | 1.25 | ✓ | ✗ | 0.869 ± 0.013 | 0.797 ± 0.014 | 0.870 ± 0.017 | 0.892 ± 0.010 | 25 |
| Focus U-Net | HFL | 1.25 | ✓ | ✓ | 0.875 ± 0.016 | 0.801 ± 0.017 | 0.878 ± 0.013 | 0.889 ± 0.018 | 25 |

Performance gains are observed with the successive addition of each component, and with all components present the mDSC improves to 0.875 ± 0.016, compared with 0.828 ± 0.021 for the U-Net and 0.801 ± 0.019 for the Attention U-Net. The Focus U-Net runs at the same speed as the Attention U-Net (25 FPS) and only slightly slower than the U-Net (27 FPS), so the gain in accuracy comes at minimal cost in efficiency.

The results for the CVC-ClinicDB dataset are shown in Table 2.

Table 2.

Results for the CVC-ClinicDB dataset. Boldface numbers denote the highest value for each metric.

| Model | mDSC | mIoU | Recall | Precision |
|---|---|---|---|---|
| FCN-8S [21] | 0.810 | – | 0.748 | 0.883 |
| Multi-scale patch-based CNN [71] | 0.813 | – | 0.786 | 0.809 |
| FCN [72] | 0.830 | – | 0.773 | 0.900 |
| MultiResUNet [59] | – | 0.821 | – | – |
| cGAN [73] | 0.872 | 0.795 | – | – |
| U-Net [23] | 0.878 | 0.788 | 0.787 | 0.933 |
| Multiple encoder-decoder network [74] | 0.889 | **0.894** | – | – |
| PraNet [30] | 0.898 | 0.840 | – | – |
| PolypSegNet [28] | 0.915 | 0.862 | 0.911 | **0.962** |
| ResUNet++ with CRF [26] | 0.920 | 0.890 | 0.939 | 0.846 |
| Double U-Net [27] | 0.924 | 0.861 | 0.846 | 0.959 |
| FANet [34] | 0.934 | **0.894** | 0.934 | 0.940 |
| Focus U-Net | **0.941** | 0.893 | **0.956** | 0.930 |

The Focus U-Net achieves state-of-the-art results with an mDSC of 0.941, outperforming the next-best models, FANet (0.934), Double U-Net (0.924) and ResUNet++ with Conditional Random Field (CRF) (0.920). Its mIoU of 0.893 is second only to FANet and the multiple encoder-decoder network (0.894). The Focus U-Net also achieves the highest recall (0.956) while maintaining a precision of 0.930, whereas PolypSegNet achieves the highest precision (0.962) at the cost of recall (0.911) and, conversely, ResUNet++ with CRF achieves high recall (0.939) at the cost of precision (0.846).
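The tables report segmentation metrics averaged over the test images (the "m" prefix). The sketch below shows the standard definitions of DSC, IoU, recall and precision on flattened binary masks. Two details are assumptions, since this section does not state them: metrics are computed per image and then averaged, and a small smoothing constant `eps` is added so that an empty prediction on an empty mask scores 1 rather than dividing by zero. Per image, the two overlap metrics are linked by IoU = DSC / (2 − DSC).

```python
from typing import Dict, List, Sequence, Tuple


def confusion_counts(pred: Sequence[int], truth: Sequence[int]) -> Tuple[int, int, int]:
    """Count true positives, false positives and false negatives for binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, fn


def image_metrics(pred: Sequence[int], truth: Sequence[int],
                  eps: float = 1e-7) -> Dict[str, float]:
    """DSC, IoU, recall and precision for a single image."""
    tp, fp, fn = confusion_counts(pred, truth)
    return {
        "dsc": (2 * tp + eps) / (2 * tp + fp + fn + eps),
        "iou": (tp + eps) / (tp + fp + fn + eps),
        "recall": (tp + eps) / (tp + fn + eps),
        "precision": (tp + eps) / (tp + fp + eps),
    }


def mean_metrics(pairs: List[Tuple[Sequence[int], Sequence[int]]]) -> Dict[str, float]:
    """Average each per-image metric over (prediction, ground truth) pairs."""
    per_image = [image_metrics(p, t) for p, t in pairs]
    return {k: sum(m[k] for m in per_image) / len(per_image) for k in per_image[0]}


if __name__ == "__main__":
    # One correct pixel, one false positive, one false negative.
    print(image_metrics([1, 1, 0, 0], [1, 0, 1, 0]))
```

Because recall penalises only false negatives and precision only false positives, a model can trade one for the other, which is the pattern visible between PolypSegNet and ResUNet++ with CRF in Table 2.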

Next, we evaluate our model on the Kvasir-SEG dataset; the results are shown in Table 3. The Focus U-Net achieves state-of-the-art results with an mDSC of 0.910 and an mIoU of 0.845; only HarDNet-MSEG [31] achieves a higher mIoU (0.848).

Table 3.

Results for the Kvasir-SEG dataset. Boldface numbers denote the highest value for each metric.

| Model | mDSC | mIoU | Recall | Precision |
|---|---|---|---|---|
| U-Net [23] | 0.715 | 0.433 | 0.631 | **0.922** |
| Double U-Net [27] | 0.813 | 0.733 | 0.840 | 0.861 |
| FCN8 (VGG16 backbone) [20] | 0.831 | 0.737 | 0.835 | 0.882 |
| PSPNet (ResNet50 backbone) [75] | 0.841 | 0.744 | 0.836 | 0.890 |
| HRNet [76] | 0.845 | 0.759 | 0.859 | 0.878 |
| ResUNet++ with CRF [26] | 0.851 | 0.833 | 0.876 | 0.823 |
| DeepLabv3+ (ResNet101 backbone) [77] | 0.864 | 0.786 | 0.859 | 0.906 |
| U-Net (ResNet34 backbone) [23] | 0.876 | 0.810 | **0.944** | 0.862 |
| FANet [34] | 0.880 | 0.81 | 0.906 | 0.901 |
| PolypSegNet [28] | 0.887 | 0.826 | 0.925 | 0.917 |
| HarDNet-MSEG [31] | 0.904 | **0.848** | 0.923 | 0.907 |
| Focus U-Net | **0.910** | 0.845 | 0.916 | 0.917 |

Finally, Table 4 shows the results of the evaluation on five public polyp datasets. For a fair comparison, evaluation metrics are shown only for approaches that prioritised segmentation performance over computational efficiency.

Table 4.

Results of the evaluation on five public polyp datasets. The combined score takes into account the number of images in each dataset, enabling cross-dataset comparisons.

| Model | CVC-ClinicDB (n = 61) mDSC | CVC-ClinicDB (n = 61) mIoU | CVC-ColonDB (n = 380) mDSC | CVC-ColonDB (n = 380) mIoU | ETIS-LaribPolypDB (n = 196) mDSC | ETIS-LaribPolypDB (n = 196) mIoU | CVC-T (n = 60) mDSC | CVC-T (n = 60) mIoU | Kvasir-SEG (n = 100) mDSC | Kvasir-SEG (n = 100) mIoU | Combined mDSC | Combined mIoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SFA [78] | 0.700 | 0.607 | 0.469 | 0.347 | 0.297 | 0.217 | 0.467 | 0.329 | 0.723 | 0.611 | 0.476 | 0.367 |
| U-Net++ [24] | 0.794 | 0.729 | 0.483 | 0.410 | 0.401 | 0.344 | 0.707 | 0.624 | 0.821 | 0.743 | 0.546 | 0.476 |
| ResUNet++ [25] | 0.796 | 0.796 | – | – | – | – | – | – | 0.813 | 0.793 | – | – |
| U-Net [23] | 0.823 | 0.755 | 0.512 | 0.444 | 0.398 | 0.335 | 0.710 | 0.627 | 0.818 | 0.746 | 0.561 | 0.493 |
| PraNet [30] | 0.899 | 0.849 | 0.709 | 0.640 | 0.628 | 0.567 | 0.871 | 0.797 | 0.898 | 0.840 | 0.740 | 0.675 |
| HarDNet-MSEG [31] | 0.932 | 0.882 | 0.731 | 0.660 | 0.677 | 0.613 | 0.887 | 0.821 | 0.912 | 0.857 | 0.768 | 0.702 |
| Focus U-Net | 0.938 | 0.889 | 0.878 | 0.804 | 0.832 | 0.757 | 0.920 | 0.860 | 0.910 | 0.853 | 0.878 | 0.809 |

The Focus U-Net achieves the highest scores on four of the five datasets: an mDSC of 0.938 and mIoU of 0.889 on CVC-ClinicDB, an mDSC of 0.878 and mIoU of 0.804 on CVC-ColonDB, an mDSC of 0.832 and mIoU of 0.757 on ETIS-LaribPolypDB, and an mDSC of 0.920 and mIoU of 0.860 on CVC-T. On Kvasir-SEG it achieves an mDSC of 0.910 and mIoU of 0.853, marginally below HarDNet-MSEG (0.912 and 0.857). Importantly, the combined score takes into account the relative number of images in each dataset; here the Focus U-Net achieves an mDSC of 0.878 and mIoU of 0.809, a 14% increase in mDSC over the previous state-of-the-art (HarDNet-MSEG, mDSC = 0.768) and a 15% increase in mIoU (HarDNet-MSEG, mIoU = 0.702).

The greatest improvements are observed on the most challenging datasets, namely CVC-ColonDB and ETIS-LaribPolypDB. For CVC-ColonDB, mDSC increases by 20% over the previous state-of-the-art (HarDNet-MSEG, mDSC = 0.731) and by 87% over the Selective Feature Aggregation (SFA) model [78] (mDSC = 0.469). For ETIS-LaribPolypDB the gains are larger still: a 23% increase in mDSC over HarDNet-MSEG (mDSC = 0.677) and a 180% increase over SFA (mDSC = 0.297).
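The combined score and the percentage gains can be checked with a few lines of arithmetic. The sketch below assumes that the combined score is the image-count-weighted mean of the per-dataset scores, which equals a mean over all 797 test images when each per-dataset score is itself a per-image mean; the dictionary names are illustrative. Under this assumption, the Table 4 values reproduce the reported combined mDSC of 0.878 (Focus U-Net) and 0.768 (HarDNet-MSEG).

```python
from typing import Dict

# Per-dataset test-image counts and mDSC values as reported in Table 4.
IMAGE_COUNTS = {"CVC-ClinicDB": 61, "CVC-ColonDB": 380, "ETIS-LaribPolypDB": 196,
                "CVC-T": 60, "Kvasir-SEG": 100}
FOCUS_UNET_MDSC = {"CVC-ClinicDB": 0.938, "CVC-ColonDB": 0.878, "ETIS-LaribPolypDB": 0.832,
                   "CVC-T": 0.920, "Kvasir-SEG": 0.910}
HARDNET_MSEG_MDSC = {"CVC-ClinicDB": 0.932, "CVC-ColonDB": 0.731, "ETIS-LaribPolypDB": 0.677,
                     "CVC-T": 0.887, "Kvasir-SEG": 0.912}


def combined_score(per_dataset: Dict[str, float], counts: Dict[str, int]) -> float:
    """Image-count-weighted mean of per-dataset scores (assumed definition)."""
    total_images = sum(counts.values())
    return sum(per_dataset[name] * n for name, n in counts.items()) / total_images


def relative_improvement(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return 100.0 * (new - old) / old


if __name__ == "__main__":
    focus = combined_score(FOCUS_UNET_MDSC, IMAGE_COUNTS)
    hardnet = combined_score(HARDNET_MSEG_MDSC, IMAGE_COUNTS)
    print(f"Combined mDSC: Focus U-Net {focus:.3f}, HarDNet-MSEG {hardnet:.3f}")
    print(f"Relative improvement: {relative_improvement(focus, hardnet):.0f}%")
```

Weighting by image count explains why CVC-ColonDB (380 images) and ETIS-LaribPolypDB (196 images) dominate the combined score, and hence why gains on these two datasets drive the overall improvement.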

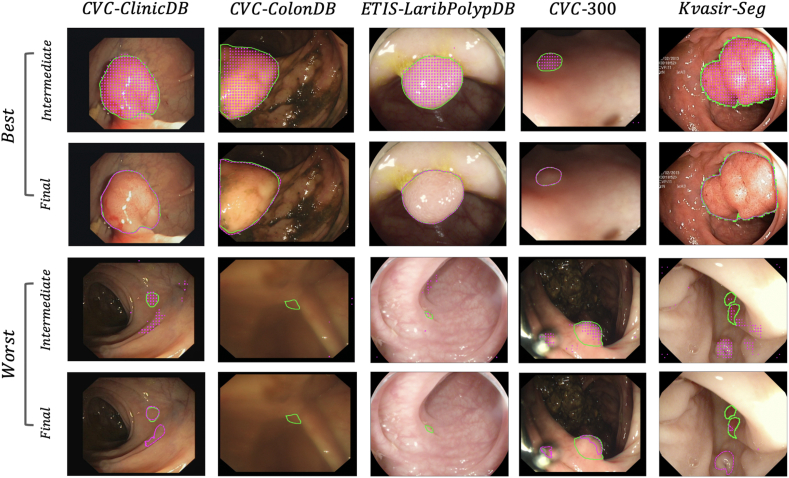

Examples of polyp segmentations for the five datasets are shown in Fig. 5.

Fig. 5.

Examples of the best and worst cases in terms of DSC from each of the five public datasets. The solid green line represents the ground truth mask. The dashed magenta line corresponds to the predictions yielded by the Focus U-Net. The intermediate prediction is derived from the deepest layer of the network, while the final prediction is used for evaluation.

The accuracy of the segmentations obtained from the intermediate layer highlights the ability of the deepest layers to localise the polyp effectively. The Focus U-Net generalises well, with consistently accurate segmentations across all datasets. The images with the poorest segmentation quality either contain polyps that are objectively challenging to identify or, in many cases, are themselves of poor quality, as in the CVC-ColonDB example.
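The intermediate prediction in Fig. 5 comes from the deepest layer, which is trained through deep supervision. The sketch below illustrates the general idea: a loss is attached to each decoder output and the ground truth is downsampled to match each resolution. It assumes equal weights across outputs, nearest-neighbour downsampling, square maps with an integer scale factor, and a soft Dice loss as the per-output loss; none of these choices are stated in this section, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence

Grid = List[List[float]]


def downsample_nearest(mask: Grid, factor: int) -> Grid:
    """Nearest-neighbour downsampling: keep every `factor`-th row and column."""
    return [row[::factor] for row in mask[::factor]]


def soft_dice_loss(pred: Grid, truth: Grid, eps: float = 1e-7) -> float:
    """1 - soft Dice coefficient between a probability map and a binary mask."""
    p = [v for row in pred for v in row]
    t = [v for row in truth for v in row]
    intersection = sum(a * b for a, b in zip(p, t))
    return 1.0 - (2.0 * intersection + eps) / (sum(p) + sum(t) + eps)


def deep_supervision_loss(outputs: Sequence[Grid], truth: Grid,
                          weights: Optional[Sequence[float]] = None) -> float:
    """Weighted sum of losses over decoder outputs at different resolutions.

    `outputs` holds probability maps ordered from full resolution to the deepest
    (coarsest) level; the ground truth is downsampled to match each one.
    """
    if weights is None:
        weights = [1.0 / len(outputs)] * len(outputs)  # equal weighting (assumption)
    total = 0.0
    for out, w in zip(outputs, weights):
        factor = len(truth) // len(out)                # assumes square maps, integer scale
        total += w * soft_dice_loss(out, downsample_nearest(truth, factor))
    return total


if __name__ == "__main__":
    truth = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    full = [[float(v) for v in row] for row in truth]
    coarse = [[1.0, 0.0], [0.0, 0.0]]   # deepest output, at half resolution
    print(deep_supervision_loss([full, coarse], truth))
```

Because the coarse output receives its own gradient signal, the deepest layers are pushed to localise the polyp directly, which is consistent with the usable intermediate predictions shown in Fig. 5.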

6. Discussion and conclusion

In this paper, we introduce a novel dual attention-gated U-Net architecture, named the Focus U-Net, which uses a Focus Gate to encourage learning of salient regions, combined with a focal parameter that controls the suppression of irrelevant background regions. With the addition of short-range skip connections and deep supervision, and optimisation using the Hybrid Focal loss, the Focus U-Net outperforms the previous state-of-the-art on four of the five public polyp datasets and on the combined score. Importantly, the proposed architecture performs consistently well across all datasets, demonstrating an ability to generalise to unseen data from different datasets. Visualising the resulting polyp segmentations confirms their quality, with poorer segmentations associated with poorer image quality, objectively more challenging polyps, or both.
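The Focus Gate itself is defined earlier in the paper and is not reproduced in this section. Purely to illustrate how a focal parameter can control background suppression, the sketch below assumes that γ acts as an exponent on sigmoid spatial attention coefficients; the function `focal_spatial_gate` is hypothetical, and the channel-attention branch of the gate is omitted.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def focal_spatial_gate(features: Sequence[float], logits: Sequence[float],
                       gamma: float = 1.25) -> Tuple[List[float], List[float]]:
    """Hypothetical focal spatial gate: weight each pixel by sigmoid(logit) ** gamma.

    With gamma = 1 this reduces to ordinary sigmoid attention gating; gamma > 1
    shrinks low (background) coefficients proportionally more than high ones.
    """
    coeffs = [sigmoid(z) ** gamma for z in logits]
    gated = [f * a for f, a in zip(features, coeffs)]
    return gated, coeffs


if __name__ == "__main__":
    logits = [2.0, -1.0]  # one likely-polyp pixel, one likely-background pixel
    for gamma in (1.0, 1.25, 2.0):
        _, (fg, bg) = focal_spatial_gate([1.0, 1.0], logits, gamma)
        print(f"gamma={gamma}: background/foreground ratio = {bg / fg:.3f}")
```

Under this assumption the background-to-foreground ratio equals the γ = 1 ratio raised to the power γ, so larger γ suppresses background more aggressively; overly large values may also suppress genuine polyp features, which would be consistent with the drop in mDSC at γ = 2 and γ = 3 in Table 1.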

The proposed Focus U-Net is the latest addition to lightweight yet accurate polyp segmentation models, achieving state-of-the-art results with an mDSC of 0.878 and an mIoU of 0.809 when evaluated on the combination of five public datasets. These are relative improvements of 14% and 15%, respectively, over the previous state-of-the-art results from HarDNet-MSEG (mDSC = 0.768, mIoU = 0.702).

While these results are promising, it is important to determine whether such a model can be applied in clinical practice. Because colonoscopy produces live video, a model with a fast inference time is required to process frames in real time. Accordingly, the Focus U-Net is designed for efficiency, combining efficient channel and spatial attention mechanisms with a lightweight U-Net backbone, and achieves FPS performance comparable to the standard U-Net [79]. With polyp miss rates as high as 26% reported for small adenomas [9], the primary advantage of AI-assisted colonoscopy is to help clinicians reduce the polyp miss rate. A secondary advantage of segmentation-based computer-aided detection is that it provides an accurate and operator-independent estimate of polyp size, an important factor in guiding biopsy decisions that may be required during colonoscopy.
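Real-time suitability is usually judged by throughput. The sketch below shows a common way to measure frames per second: run a few untimed warm-up calls, then divide the number of frames by the wall-clock time. The `infer` callable is a hypothetical stand-in for a model's forward pass, and the exact timing protocol behind Table 1 (hardware, batch size, warm-up) is not stated in this section. At the 25 FPS reported for the Focus U-Net in Table 1, each frame must be processed within 40 ms.

```python
import time
from typing import Callable, Sequence, TypeVar

Frame = TypeVar("Frame")


def measure_fps(infer: Callable[[Frame], object], frames: Sequence[Frame],
                warmup: int = 5) -> float:
    """Frames per second of `infer` over `frames`, excluding warm-up calls."""
    for frame in frames[:warmup]:
        infer(frame)                                   # warm-up (caches, lazy init)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = max(time.perf_counter() - start, 1e-9)   # guard against a zero interval
    return len(frames) / elapsed


def per_frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, to sustain `fps`."""
    return 1000.0 / fps


if __name__ == "__main__":
    dummy_frames = [[0.0] * 1024 for _ in range(200)]  # stand-in for video frames
    fps = measure_fps(lambda f: sum(f), dummy_frames)
    print(f"{fps:.0f} FPS; budget at 25 FPS = {per_frame_budget_ms(25):.0f} ms/frame")
```

Excluding warm-up calls avoids counting one-off costs such as memory allocation, which would otherwise understate steady-state throughput during a long procedure.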

There are several limitations to our current study. Firstly, the datasets used to train our model consist entirely of images containing polyps, whereas in practice the majority of live video frames would not contain a polyp. However, it has been observed that training with images in which polyps are absent results in poorer generalisation [80]. On polyp-free frames, we would therefore expect a higher false positive rate. This is less undesirable than the converse, a high false negative rate, because the purpose of the computer-aided system is to direct the operator's attention to highlighted regions that may contain missed polyps [81].

While colonoscopy remains the gold standard for investigating suspected CRC, CT colonography (virtual colonoscopy) is a relatively new method for bowel cancer screening that offers non-invasive visualisation of the colon [82]. Our model is not restricted to polyps imaged in visible light and could in principle be applied to polyp detection using CT colonography. However, these newer modalities present additional challenges, such as fluid submersion obscuring polyps [83]. More broadly, the Focus U-Net architecture is not limited to colorectal polyps and may be applied to any image segmentation problem that involves class imbalance and requires efficiency.

Declaration of competing interest

The authors declare no conflict of interest.

Acknowledgements

This work was partially supported by The Mark Foundation for Cancer Research and Cancer Research UK Cambridge Centre [C9685/A25177], the CRUK National Cancer Imaging Translational Accelerator (NCITA) [C42780/A27066] and the Wellcome Trust Innovator Award, United Kingdom [215733/Z/19/Z]. Additional support was also provided by the National Institute for Health Research (NIHR) Cambridge Biomedical Research Centre [BRC-1215-20014] and the Cambridge Mathematics of Information in Healthcare (CMIH) [funded by the EPSRC grant EP/T017961/1]. The views expressed are those of the authors and not necessarily those of the NHS, the NIHR, or the Department of Health and Social Care.

CBS in addition acknowledges support from the Leverhulme Trust project on ‘Breaking the non-convexity barrier’, the Philip Leverhulme Prize, the Royal Society Wolfson Fellowship, the EPSRC grants EP/S026045/1, EP/N014588/1, European Union Horizon 2020 research and innovation programmes under the Marie Skłodowska-Curie grant agreement No. 777826 NoMADS and No. 691070 CHiPS, the Cantab Capital Institute for the Mathematics of Information and the Alan Turing Institute.

This work was performed using resources provided by the Cambridge Service for Data Driven Discovery (CSD3) operated by the University of Cambridge Research Computing Service (www.csd3.cam.ac.uk), provided by Dell EMC and Intel using Tier-2 funding from the Engineering and Physical Sciences Research Council (capital grant EP/P020259/1), and DiRAC funding from the Science and Technology Facilities Council (www.dirac.ac.uk).

Contributor Information

Michael Yeung, Email: mjyy2@cam.ac.uk.

Evis Sala, Email: es220@medschl.cam.ac.uk.

Carola-Bibiane Schönlieb, Email: cbs31@cam.ac.uk.

Leonardo Rundo, Email: lr495@cam.ac.uk.

References

- 1. Bray F., Ferlay J., Soerjomataram I., Siegel R.L., Torre L.A., Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2018;68(6):394–424. doi: 10.3322/caac.21492.

- 2. Rawla P., Sunkara T., Barsouk A. Epidemiology of colorectal cancer: incidence, mortality, survival, and risk factors. Przeglad Gastroenterol. 2019;14(2):89. doi: 10.5114/pg.2018.81072.

- 3. Vogelstein B., Fearon E.R., Hamilton S.R., Kern S.E., Preisinger A.C., Leppert M., Smits A.M., Bos J.L. Genetic alterations during colorectal-tumor development. N. Engl. J. Med. 1988;319(9):525–532. doi: 10.1056/NEJM198809013190901.

- 4. Kuntz K.M., Lansdorp-Vogelaar I., Rutter C.M., Knudsen A.B., Van Ballegooijen M., Savarino J.E., Feuer E.J., Zauber A.G. A systematic comparison of microsimulation models of colorectal cancer: the role of assumptions about adenoma progression. Med. Decis. Making. 2011;31(4):530–539. doi: 10.1177/0272989X11408730.

- 5. Brenner H., Hoffmeister M., Stegmaier C., Brenner G., Altenhofen L., Haug U. Risk of progression of advanced adenomas to colorectal cancer by age and sex: estimates based on 840 149 screening colonoscopies. Gut. 2007;56(11):1585–1589. doi: 10.1136/gut.2007.122739.

- 6. Schreuders E.H., Ruco A., Rabeneck L., Schoen R.E., Sung J.J., Young G.P., Kuipers E.J. Colorectal cancer screening: a global overview of existing programmes. Gut. 2015;64(10):1637–1649. doi: 10.1136/gutjnl-2014-309086.

- 7. Arnold M., Sierra M.S., Laversanne M., Soerjomataram I., Jemal A., Bray F. Global patterns and trends in colorectal cancer incidence and mortality. Gut. 2017;66(4):683–691. doi: 10.1136/gutjnl-2015-310912.

- 8. Issa I.A., Noureddine M. Colorectal cancer screening: an updated review of the available options. World J. Gastroenterol. 2017;23(28):5086. doi: 10.3748/wjg.v23.i28.5086.

- 9. Kim N.H., Jung Y.S., Jeong W.S., Yang H.-J., Park S.-K., Choi K., Park D.I. Miss rate of colorectal neoplastic polyps and risk factors for missed polyps in consecutive colonoscopies. Intest. Res. 2017;15(3):411. doi: 10.5217/ir.2017.15.3.411.

- 10. Van Rijn J.C., Reitsma J.B., Stoker J., Bossuyt P.M., Van Deventer S.J., Dekker E. Polyp miss rate determined by tandem colonoscopy: a systematic review. Am. J. Gastroenterol. 2006;101(2):343–350. doi: 10.1111/j.1572-0241.2006.00390.x.

- 11. Leufkens A., Van Oijen M., Vleggaar F., Siersema P. Factors influencing the miss rate of polyps in a back-to-back colonoscopy study. Endoscopy. 2012;44(5):470–475. doi: 10.1055/s-0031-1291666.

- 12. Le Berre C., Sandborn W.J., Aridhi S., Devignes M.-D., Fournier L., Smaïl-Tabbone M., Danese S., Peyrin-Biroulet L. Application of artificial intelligence to gastroenterology and hepatology. Gastroenterology. 2020;158(1):76–94. doi: 10.1053/j.gastro.2019.08.058.

- 13. Thakkar S., Carleton N.M., Rao B., Syed A. Use of artificial intelligence-based analytics from live colonoscopies to optimize the quality of the colonoscopy examination in real time: proof of concept. Gastroenterology. 2020;158(5):1219–1221. doi: 10.1053/j.gastro.2019.12.035.

- 14. Krishnan S., Yang X., Chan K., Kumar S., Goh P. Intestinal abnormality detection from endoscopic images. Proc. 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), vol. 2, IEEE; 1998. pp. 895–898.

- 15. Hwang S., Oh J., Tavanapong W., Wong J., De Groen P.C. Polyp detection in colonoscopy video using elliptical shape feature. Proc. IEEE International Conference on Image Processing (ICIP), vol. 2, IEEE; 2007. pp. II–465.

- 16. Bernal J., Sánchez J., Vilarino F. Towards automatic polyp detection with a polyp appearance model. Pattern Recogn. 2012;45(9):3166–3182.

- 17. Karkanis S.A., Iakovidis D.K., Maroulis D.E., Karras D.A., Tzivras M. Computer-aided tumor detection in endoscopic video using color wavelet features. IEEE Trans. Inf. Technol. Biomed. 2003;7(3):141–152. doi: 10.1109/titb.2003.813794.

- 18. Coimbra M.T., Cunha J.S. MPEG-7 visual descriptors—contributions for automated feature extraction in capsule endoscopy. IEEE Trans. Circ. Syst. Video Technol. 2006;16(5):628–637.

- 19. Yu L., Chen H., Dou Q., Qin J., Heng P.A. Integrating online and offline three-dimensional deep learning for automated polyp detection in colonoscopy videos. IEEE J. Biomed. Health Inform. 2016;21(1):65–75. doi: 10.1109/JBHI.2016.2637004.

- 20. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2015. pp. 3431–3440.

- 21. Akbari M., Mohrekesh M., Nasr-Esfahani E., Soroushmehr S.R., Karimi N., Samavi S., Najarian K. Polyp segmentation in colonoscopy images using fully convolutional network. Proc. 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE; 2018. pp. 69–72.

- 22. Brandao P., Mazomenos E., Ciuti G., Caliò R., Bianchi F., Menciassi A., Dario P., Koulaouzidis A., Arezzo A., Stoyanov D. Fully convolutional neural networks for polyp segmentation in colonoscopy. Proc. Medical Imaging 2017: Computer-Aided Diagnosis, vol. 10134, International Society for Optics and Photonics; 2017. p. 101340F.

- 23. Ronneberger O., Fischer P., Brox T. U-Net: convolutional networks for biomedical image segmentation. Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Springer; 2015. pp. 234–241.

- 24. Zhou Z., Siddiquee M.M.R., Tajbakhsh N., Liang J. UNet++: redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imag. 2019;39(6):1856–1867. doi: 10.1109/TMI.2019.2959609.

- 25. Jha D., Smedsrud P.H., Riegler M.A., Johansen D., De Lange T., Halvorsen P., Johansen H.D. ResUNet++: an advanced architecture for medical image segmentation. Proc. IEEE International Symposium on Multimedia (ISM), IEEE; 2019. pp. 225–2255.

- 26. Jha D., Smedsrud P.H., Johansen D., de Lange T., Johansen H.D., Halvorsen P., Riegler M.A. A comprehensive study on colorectal polyp segmentation with ResUNet++, conditional random field and test-time augmentation. IEEE J. Biomed. Health Inform. 2021;25(6):2029–2040. doi: 10.1109/JBHI.2021.3049304.

- 27. Jha D., Riegler M.A., Johansen D., Halvorsen P., Johansen H.D. DoubleU-Net: a deep convolutional neural network for medical image segmentation. Proc. IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), IEEE; 2020. pp. 558–564.

- 28. Mahmud T., Paul B., Fattah S.A. PolypSegNet: a modified encoder-decoder architecture for automated polyp segmentation from colonoscopy images. Comput. Biol. Med. 2021;128:104119. doi: 10.1016/j.compbiomed.2020.104119.

- 29. Jha D., Ali S., Tomar N.K., Johansen H.D., Johansen D., Rittscher J., Riegler M.A., Halvorsen P. Real-time polyp detection, localization and segmentation in colonoscopy using deep learning. IEEE Access. 2021;9:40496–40510. doi: 10.1109/ACCESS.2021.3063716.

- 30. Fan D.-P., Ji G.-P., Zhou T., Chen G., Fu H., Shen J., Shao L. PraNet: parallel reverse attention network for polyp segmentation. Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Springer; 2020. pp. 263–273.

- 31. Huang C.-H., Wu H.-Y., Lin Y.-L. HarDNet-MSEG: a simple encoder-decoder polyp segmentation neural network that achieves over 0.9 mean Dice and 86 FPS. arXiv preprint arXiv:2101.07172.

- 32. Chao P., Kao C.-Y., Ruan Y.-S., Huang C.-H., Lin Y.-L. HarDNet: a low memory traffic network. Proc. IEEE International Conference on Computer Vision (ICCV); 2019. pp. 3552–3561.

- 33. Ji G.-P., Chou Y.-C., Fan D.-P., Chen G., Fu H., Jha D., Shao L. Progressively normalized self-attention network for video polyp segmentation. arXiv preprint arXiv:2105.08468.

- 34. Tomar N.K., Jha D., Riegler M.A., Johansen H.D., Johansen D., Rittscher J., Halvorsen P., Ali S. FANet: a feedback attention network for improved biomedical image segmentation. arXiv preprint arXiv:2103.17235.

- 35. Han C., Rundo L., Murao K., Noguchi T., Shimahara Y., Milacski Z.Á., Koshino S., Sala E., Nakayama H., Satoh S. MADGAN: unsupervised medical anomaly detection GAN using multiple adjacent brain MRI slice reconstruction. BMC Bioinf. 2021;22:31. doi: 10.1186/s12859-020-03936-1.

- 36. Chen S., Zou Y., Liu P.X. IBA-U-Net: attentive BConvLSTM U-Net with redesigned inception for medical image segmentation. Comput. Biol. Med. 2021:104551. doi: 10.1016/j.compbiomed.2021.104551.

- 37. Tagliarini G., Page E. A neural-network solution to the concentrator assignment problem. Proc. Neural Information Processing Systems (NIPS); 1987. pp. 775–782.

- 38. Jetley S., Lord N.A., Lee N., Torr P.H. Learn to pay attention. arXiv preprint arXiv:1804.02391.

- 39. Wang F., Jiang M., Qian C., Yang S., Li C., Zhang H., Wang X., Tang X. Residual attention network for image classification. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2017. pp. 3156–3164.

- 40. Luong M.-T., Pham H., Manning C.D. Effective approaches to attention-based neural machine translation. arXiv preprint arXiv:1508.04025.

- 41. Schlemper J., Oktay O., Schaap M., Heinrich M., Kainz B., Glocker B., Rueckert D. Attention gated networks: learning to leverage salient regions in medical images. Med. Image Anal. 2019;53:197–207. doi: 10.1016/j.media.2019.01.012.

- 42. Bahdanau D., Cho K., Bengio Y. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473.

- 43. Shen T., Zhou T., Long G., Jiang J., Pan S., Zhang C. DiSAN: directional self-attention network for RNN/CNN-free language understanding. Proc. AAAI Conference on Artificial Intelligence, vol. 32; 2018. pp. 5446–5455.

- 44. Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A.N., Kaiser L., Polosukhin I. Attention is all you need. arXiv preprint arXiv:1706.03762.

- 45. Oktay O., Schlemper J., Folgoc L.L., Lee M., Heinrich M., Misawa K., Mori K., McDonagh S., Hammerla N.Y., Kainz B., et al. Attention U-Net: learning where to look for the pancreas. arXiv preprint arXiv:1804.03999.

- 46. Hu J., Shen L., Sun G. Squeeze-and-excitation networks. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2018. pp. 7132–7141.

- 47. Fu J., Liu J., Tian H., Li Y., Bao Y., Fang Z., Lu H. Dual attention network for scene segmentation. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019. pp. 3146–3154.

- 48. Woo S., Park J., Lee J.-Y., Kweon I.S. CBAM: convolutional block attention module. Proc. European Conference on Computer Vision (ECCV); 2018. pp. 3–19.

- 49. Gao Z., Xie J., Wang Q., Li P. Global second-order pooling convolutional networks. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019. pp. 3024–3033.

- 50. Rundo L., Han C., Nagano Y., Zhang J., Hataya R., Militello C., Tangherloni A., Nobile M.S., Ferretti C., Besozzi D. USE-Net: incorporating squeeze-and-excitation blocks into U-Net for prostate zonal segmentation of multi-institutional MRI datasets. Neurocomputing. 2019;365:31–43.

- 51. Wang Q., Wu B., Zhu P., Li P., Zuo W., Hu Q. ECA-Net: efficient channel attention for deep convolutional neural networks. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE; 2020. pp. 11531–11539.

- 52. Roy A.G., Navab N., Wachinger C. Concurrent spatial and channel ‘squeeze & excitation’ in fully convolutional networks. Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Springer; 2018. pp. 421–429.

- 53. Goodale M.A., Milner A.D. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- 54. Lin T.-Y., Goyal P., Girshick R., He K., Dollár P. Focal loss for dense object detection. Proc. IEEE International Conference on Computer Vision (ICCV); 2017. pp. 2980–2988.

- 55. Abraham N., Khan N.M. A novel focal Tversky loss function with improved attention U-Net for lesion segmentation. Proc. IEEE 16th International Symposium on Biomedical Imaging (ISBI), IEEE; 2019. pp. 683–687.

- 56. Glorot X., Bengio Y. Understanding the difficulty of training deep feedforward neural networks. Proc. 13th International Conference on Artificial Intelligence and Statistics (AISTATS), JMLR Workshop and Conference Proceedings; 2010. pp. 249–256.

- 57. Pascanu R., Mikolov T., Bengio Y. On the difficulty of training recurrent neural networks. Proc. International Conference on Machine Learning (ICML), PMLR; 2013. pp. 1310–1318.

- 58. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision. Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016. pp. 2818–2826.

- 59. Ibtehaz N., Rahman M.S. MultiResUNet: rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020;121:74–87. doi: 10.1016/j.neunet.2019.08.025.

- 60. Lee C.-Y., Xie S., Gallagher P., Zhang Z., Tu Z. Deeply-supervised nets. Proc. 18th International Conference on Artificial Intelligence and Statistics (AISTATS), PMLR; 2015. pp. 562–570.

- 61. Dou Q., Yu L., Chen H., Jin Y., Yang X., Qin J., Heng P.-A. 3D deeply supervised network for automated segmentation of volumetric medical images. Med. Image Anal. 2017;41:40–54. doi: 10.1016/j.media.2017.05.001.

- 62. Salehi S.S.M., Erdogmus D., Gholipour A. Tversky loss function for image segmentation using 3D fully convolutional deep networks. Proc. International Workshop on Machine Learning in Medical Imaging (MLMI), Springer; 2017. pp. 379–387.

- 63. Bernal J., Sánchez F.J., Fernández-Esparrach G., Gil D., Rodríguez C., Vilariño F. WM-DOVA maps for accurate polyp highlighting in colonoscopy: validation vs. saliency maps from physicians. Comput. Med. Imag. Graph. 2015;43:99–111. doi: 10.1016/j.compmedimag.2015.02.007.

- 64. Jha D., Smedsrud P.H., Riegler M.A., Halvorsen P., de Lange T., Johansen D., Johansen H.D. Kvasir-SEG: a segmented polyp dataset. Proc. International Conference on Multimedia Modeling (MMM), Springer; 2020. pp. 451–462.

- 65. Tajbakhsh N., Gurudu S.R., Liang J. Automated polyp detection in colonoscopy videos using shape and context information. IEEE Trans. Med. Imag. 2015;35(2):630–644. doi: 10.1109/TMI.2015.2487997.

- 66. Silva J., Histace A., Romain O., Dray X., Granado B. Toward embedded detection of polyps in WCE images for early diagnosis of colorectal cancer. Int. J. Comput. Assist. Radiol. Surg. 2014;9(2):283–293. doi: 10.1007/s11548-013-0926-3.

- 67. Vázquez D., Bernal J., Sánchez F.J., Fernández-Esparrach G., López A.M., Romero A., Drozdzal M., Courville A. A benchmark for endoluminal scene segmentation of colonoscopy images. J. Healthc. Eng. 2017. doi: 10.1155/2017/4037190.

- 68. Müller D., Kramer F. MIScnn: a framework for medical image segmentation with convolutional neural networks and deep learning. BMC Med. Imag. 2021;21(1):1–11. doi: 10.1186/s12880-020-00543-7.

- 69. Yeung M., Sala E., Schönlieb C.-B., Rundo L. Unified focal loss: generalising Dice and cross entropy-based losses to handle class imbalanced medical image segmentation. arXiv preprint arXiv:2102.04525.

- 70. Isensee F., Jaeger P.F., Kohl S.A., Petersen J., Maier-Hein K.H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods. 2021;18(2):203–211. doi: 10.1038/s41592-020-01008-z.

- 71. Banik D., Bhattacharjee D., Nasipuri M. A multi-scale patch-based deep learning system for polyp segmentation. Advanced Computing and Systems for Security, Springer; 2020. pp. 109–119.

- 72. Li Q., Yang G., Chen Z., Huang B., Chen L., Xu D., Zhou X., Zhong S., Zhang H., Wang T. Colorectal polyp segmentation using a fully convolutional neural network. Proc. 10th International Congress on Image and Signal Processing, Biomedical Engineering and Informatics (CISP-BMEI), IEEE; 2017. pp. 1–5.

- 73. Poorneshwaran J., Kumar S.S., Ram K., Joseph J., Sivaprakasam M. Polyp segmentation using generative adversarial network. Proc. 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE; 2019. pp. 7201–7204.

- 74. Nguyen Q., Lee S.-W. Colorectal segmentation using multiple encoder-decoder network in colonoscopy images. Proc. IEEE First International Conference on Artificial Intelligence and Knowledge Engineering (AIKE), IEEE; 2018. pp. 208–211.

- 75. Zhao H., Shi J., Qi X., Wang X., Jia J. Pyramid scene parsing network. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2017. pp. 2881–2890.

- 76. Wang J., Sun K., Cheng T., Jiang B., Deng C., Zhao Y., Liu D., Mu Y., Tan M., Wang X., et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell.

- 77. Chen L.-C., Zhu Y., Papandreou G., Schroff F., Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. Proc. European Conference on Computer Vision (ECCV); 2018. pp. 801–818.

- 78. Fang Y., Chen C., Yuan Y., Tong K.-y. Selective feature aggregation network with area-boundary constraints for polyp segmentation. Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Springer; 2019. pp. 302–310.

- 79. Wang Z., Zou Y., Liu P.X. Hybrid dilation and attention residual U-Net for medical image segmentation. Comput. Biol. Med. 2021;134:104449. doi: 10.1016/j.compbiomed.2021.104449.

- 80. Brandao P., Zisimopoulos O., Mazomenos E., Ciuti G., Bernal J., Visentini-Scarzanella M., Menciassi A., Dario P., Koulaouzidis A., Arezzo A. Towards a computed-aided diagnosis system in colonoscopy: automatic polyp segmentation using convolution neural networks. J. Med. Robot. Res. 2018;3(2):1840002.

- 81. Mori Y., Kudo S.-e., Misawa M., Takeda K., Kudo T., Itoh H., Oda M., Mori K. Artificial intelligence for colorectal polyp detection and characterization. Curr. Treat. Options Gastroenterol. 2020;18(2):200–211. doi: 10.1007/s11938-020-00287-x.

- 82. Summers R.M., Yao J., Pickhardt P.J., Franaszek M., Bitter I., Brickman D., Krishna V., Choi J.R. Computed tomographic virtual colonoscopy computer-aided polyp detection in a screening population. Gastroenterology. 2005;129(6):1832–1844. doi: 10.1053/j.gastro.2005.08.054.

- 83. Bräuer C., Lefere P., Gryspeerdt S., Ringl H., Al-Mukhtar A., Apfaltrer P., Berzaczy D., Füger B., Furtner J., Müller-Mang C. CT colonography: size reduction of submerged colorectal polyps due to electronic cleansing and CT-window settings. Eur. Radiol. 2018;28(11):4766–4774. doi: 10.1007/s00330-018-5416-0.