Abstract

Objective

To determine user and electronic health records (EHR) integration requirements for a scalable remote symptom monitoring intervention for asthma patients and their providers.

Methods

Guided by the Non-Adoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework, we conducted a user-centered design process involving English- and Spanish-speaking patients and providers affiliated with an academic medical center. We conducted a secondary analysis of interview transcripts from our prior study, new design sessions with patients and primary care providers (PCPs), and a survey of PCPs. We determined EHR integration requirements as part of the asthma app design and development process.

Results

Analysis of 26 transcripts (21 patients, 5 providers) from the prior study, 21 new design sessions (15 patients, 6 providers), and survey responses from 55 PCPs (71% of 78) identified requirements. Patient-facing requirements included: 1- or 5-item symptom questionnaires each week, depending on asthma control; option to request a callback; ability to enter notes, triggers, and peak flows; and tips pushed via the app prior to a clinic visit. PCP-facing requirements included a clinician-facing dashboard accessible from the EHR and an EHR inbox message preceding the visit. PCP preferences diverged regarding graphical presentations of patient-reported outcomes (PROs). Nurse-facing requirements included callback requests sent as an EHR inbox message. Requirements were consistent for English- and Spanish-speaking patients. EHR integration required use of custom application programming interfaces (APIs).

Conclusion

Using the NASSS framework to guide our user-centered design process, we identified patient and provider requirements for scaling an EHR-integrated remote symptom monitoring intervention in primary care. These requirements met the needs of patients and providers. Additional standards for PRO displays and EHR inbox APIs are needed to facilitate spread.

Keywords: remote symptom monitoring, electronic health record integration, user-centered design, intervention design, application programming interfaces

INTRODUCTION

Roughly 60% of people in the United States have 1 or more chronic diseases. Healthcare for these individuals accounts for 90% of all healthcare expenditures.1,2 While chronic diseases typically require continuous management, delivery of healthcare is episodic: patients are left to independently manage symptoms between time-limited visits with their providers.3 Digital health tools that are integrated with the electronic health record (EHR)4 offer promising and scalable solutions.5,6 Smartphones, in particular, can serve as a platform for digital health interventions: smartphone adoption is above 81% in the US and higher in other countries.7 The increasing number of patients with multiple chronic comorbidities8 underscores the urgency to scale and spread digital remote monitoring interventions. The COVID-19 pandemic has heightened this need, as care is increasingly delivered remotely,9 particularly for chronically ill, elderly, and other vulnerable patients who are disproportionately impacted.10–13 Such interventions may prevent emergency room and urgent care visits by facilitating compliance with condition-specific guidelines.

Self-management tools that do not connect patients to their providers face challenges with attracting and retaining users.14 Integration into clinical workflow via the EHR represents a critical step to enable more robust adoption and is supported by the US Federal Health IT Strategic Plan, which calls for the use of “digital engagement technologies beyond portals to connect patients more easily with their providers.”15 To date, there are few studies of integrated remote symptom monitoring interventions: most have targeted oncology and surgical patients on a standardized care pathway or clinical protocol.16 Attention deficit hyperactivity disorder17 and use of PROMIS measures in ambulatory pediatric settings18 have also been studied. Furthermore, to be effective and scalable for patients with chronic conditions, clinically integrated interventions require more than summative evaluations: attention to rigorous design based on the real-world needs of patients, caregivers, and providers is paramount.19 Determining requirements for effective digital health interventions remains a major challenge.20 The lack of attention to the design and development of these interventions may explain the poor track record of broad adoption of digital health innovations into routine care.21

We designed a clinically integrated remote symptom monitoring intervention with the intent of broad scale and spread. We selected asthma as a model because of its high prevalence (8% of the US population), disease burden, and healthcare costs, all of which are largely preventable with appropriate management and treatment.22–25 Additionally, in asthma, clinical guidelines based on clinical trial evidence are well-established and recommend symptom monitoring between visits through patient-reported outcomes (PROs).

As part of our prior feasibility study,26,27 we developed and tested an initial prototype of this intervention for asthma patients in pulmonary subspecialty clinics to understand clinical workflows and key technology features required for patients with poorly controlled asthma. Our original intervention consisted of a smartphone app that prompted patients to report asthma symptoms every week, an electronic provider view showing the reported PRO data, and application workflows. If patients reported worsening asthma symptoms, the app prompted them to request a phone call from a nurse in their clinic. While the app prototype was not integrated into the EHR, PRO data were manually inserted into physician notes to test the utility of EHR-integrated PRO reporting during the clinic visit. We demonstrated high patient adherence and retention, low provider burden, as well as other benefits.26

For our current study, we adapted this intervention to the primary care setting, where most asthma patients receive routine care.28–30 In contrast to sub-specialty care, the care of asthma patients affiliated with primary care clinics requires attention to a breadth of health issues, different clinical workflows, variability in asthma knowledge among the care team, and variability in level of disease severity. Within PCP panels, asthma patients are relatively fewer in number, and many have less severe asthma but can still experience exacerbations and benefit from monitoring. Furthermore, by adapting our original intervention for primary care, we envisioned developing a model of a clinically integrated, between-visit remote monitoring intervention that addresses user needs and has potential for broad scale and spread. If successful for asthma, this intervention could be replicated for other diseases (eg, rheumatoid arthritis, depression) and for patients with multiple chronic conditions.31–34

OBJECTIVE

Our objective was to determine software requirements, defined as “software capabilit[ies] needed by a user to solve a problem or achieve an objective,”35 for users (patient, primary care provider, nurse) and for integration of third-party applications with the EHR—both of which are necessary to create a remote symptom monitoring intervention that has potential for scale and spread across the primary care setting for asthma patients. For this study, user requirements included functional requirements for the technology (patient app and provider dashboard), workflow needs of nurses and PCPs, and high-level user interface design needs for the purpose of collecting PRO questionnaire data and monitoring asthma symptoms between visits according to clinical guidelines.22,23 We also determined EHR integration requirements, a type of technical “interface requirement” that describes how a third-party application that satisfies user requirements would need to interact with the EHR.36

MATERIALS AND METHODS

Overview and framework

To elicit user requirements for the intervention, we conducted a secondary analysis of interview transcripts from our prior feasibility study. We then employed user-centered design (UCD) methods: design sessions, surveys, stakeholder meetings, and iterative prototyping.37,38 To ensure the user requirements could be supported by the technology, we sought to determine EHR integration requirements as part of the design process. We developed the patient-facing mobile app and clinician dashboard while planning to integrate the technology with our institution’s EHR (Epic Systems, Inc.).

To ensure scalability, we used the Non-Adoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework to formulate pertinent research questions.21 NASSS is an evidence-based framework specifically designed to go beyond adoption to include scale, spread, and sustainability of health technology innovations. Empirical application of NASSS shows that innovations with more complexity are less likely to be mainstreamed.39 We therefore used NASSS to anticipate potential complexities and to identify the simplest, and thus most scalable, requirements along each dimension and at each stage of our UCD process (Table 1) and analysis (Table 3). We designed the intervention with the NASSS framework in mind from the beginning and used it throughout.

Table 1.

The Non-Adoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework as applied to an asthma symptom monitoring intervention in primary care

| Domain and characteristics of scalable interventions | Pertinent research questions for identifying requirements | Methods used to identify requirements for asthma symptom monitoring intervention in primary care |
|---|---|---|
| 1. CONDITION: Well-characterized, well-understood, and predictable; few sociocultural factors or comorbidities significantly affect care | | |
| 2. TECHNOLOGY: Direct measurements of medical condition; minimal knowledge or support required to use the technology | | |
| 3. VALUE PROPOSITION: Clear business case for developer with return on investment (supply-side value); technology is desirable for patients, safe, effective, and affordable | | |
| 4. ADOPTER SYSTEM: Minimal changes required in terms of staff roles or expectations of patients/caregivers | | |
| 5. ORGANIZATION: Minimal changes required in team interactions or care pathways; minimal work needed to establish shared vision, monitor impact | | |
| 6. WIDER CONTEXT: Financial and regulatory requirements already in place; supportive professional organizations | | |
| 7. ADAPTATION OVER TIME: Strong scope for adapting and embedding the technology as local need or context changes | | |

Abbreviations: COPD, chronic obstructive pulmonary disease; EHR, electronic health record; PRO, patient-reported outcome; UCD, user-centered design.

Table 3.

User requirements for a scalable remote monitoring intervention in primary care

| User requirement | NASSS framework domain applied to clinically integrated intervention |
|---|---|
| Patient-facing requirements | |
| Either a weekly 5- or 1-item questionnaire, depending on whether symptoms were present in the preceding week, with data viewable as a graph | Domain 1. Condition: Adjusting number of items based on symptoms allows for patients with mild intermittent, mild persistent, moderate persistent, or severe persistent asthma to be included, simplifying recruitment in the primary care setting |
| | Domain 2. Technology: PRO questionnaire directly measures asthma symptom control and requires minimal training |
| | Domain 4. Adopter System (Patient): 5- or 1-item weekly PRO questionnaire is acceptable to patients with poorly controlled (5-item) and well-controlled (1-item) asthma, and could also be completed by the patient’s caregiver |
| | Domain 6. Wider Context: PRO-based symptom monitoring is consistent with established clinical guidelines |
| Patient-requested callback from nurse | Domain 4. Adopter System (Patient): An option for patient-requested callback on demand desired by patients to facilitate clinical contact. Automatic callbacks based on PROs alone likely unhelpful to patients and burdensome to providers. |
| Educational videos and text within app, with reminders after weekly questionnaires | Domain 4. Adopter System (Patient): Reinforces education received during clinic visit. |
| | Domain 4. Adopter System (PCP): Saves PCPs clinic visit time explaining to patients. |
| | Domain 6. Wider Context: Content of education promotes established clinical recommendations. |
| Peak flow values, weekly notes, and triggers entered into app and viewable in the app and EHR | Domain 3. Value Proposition: Strongly desired by some patients with severe asthma. Engages patients by consolidating asthma-related data in a single location. |
| | Domain 4. Adopter System (Patient): Few patients may use these features, so there should be no expectation for using them. |
| Previsit tips sent to patients as push notification, including a reminder to take their phone to their PCP appointment and discuss their condition | Domain 3. Value Proposition: Tips highly valued by patients, enhancing engagement by prompting discussion with clinicians about patient responses to PRO questionnaires. |
| | Domain 4. Adopter System (Patient): Minimal additional task for patients, similar to routine reminders of upcoming scheduled clinic appointments. |
| PCP-facing requirements | |
| PRO dashboard accessible in 1 click within a patient’s chart, including a link to updated guidelines | Domain 4. Adopter System (PCP): Dashboard accessible from chart requires minimal additional work to view the asthma PRO data. |
| | Domain 6. Wider Context: Reinforces guidelines by facilitating access to them within EHR in context of asthma PRO data |
| Previsit EHR inbox reminder sent to inform PCPs that patient is using app | Domain 4. Adopter System (PCP): EHR inbox the most preferred method for reminders of PRO data, compatible with existing workflows |
| Nurse-facing requirements | |
| Callback request initiates message to EHR inbox monitored by triage nurses | Domain 2. Technology: Minimal training required |
| | Domain 4. Adopter System (nurse): Aligned with current nurse roles and workflows (ie, similar to responding to a patient phone call) |
| Nurse-driven triage protocol available when patient requests callback | Domain 5. Organization: Aligned with existing care pathways, enabling nurses to handle additional clinical situations with minimal additional burden |
| | Domain 6. Wider Context: Reinforces guidelines by incorporating them into nursing workflow and responsibilities |

Abbreviations: EHR, electronic health record; NASSS, nonadoption, abandonment, scale-up, spread, and sustainability; PRO, patient reported outcome.

Setting and participants

Our study was approved by the Institutional Review Boards of Mass General Brigham (MGB) and the RAND Corporation and was performed between January 2019 and November 2020. The study was conducted at 5 primary care clinics affiliated with Brigham Health, an academic health system in Boston, Massachusetts. All clinics were members of the Primary Care Practice-Based Research Network and used a single EHR (Epic Systems, Inc.). Clinics ranged in number of physicians (8 to 37 per clinic), physician clinical effort (part-time vs full-time), patient populations (majority Spanish vs English-speaking), and clinic type (teaching vs non-teaching).

Data collection and analysis

As part of our UCD process, we elicited patient and provider requirements sequentially using the 3 methods described below. Because the requirements for each user type and for EHR integration were interdependent, we considered all of them during each stage of data collection and analysis.

Secondary analysis of exit interviews from prior feasibility study

At the conclusion of the prior feasibility study, we conducted interviews with participating patients and providers about their experience with the intervention.26,27 For the current study, we re-reviewed transcripts of these interviews to identify new technical requirements and user interface enhancements that would improve upon the original intervention and would be appropriate for primary care. Two research team members independently reviewed transcript excerpts to identify new and relevant features. From these data, we assembled a preliminary list of user requirements. We then assessed each requirement based on the interview data and NASSS domains for inclusion in subsequent design sessions.

Design sessions and stakeholder meetings

We conducted a series of in-person design sessions with patients and providers. We aimed to recruit a diverse range of patients and providers in terms of age, gender, race, and ethnicity. One or 2 research team members conducted each design session, lasting approximately 60 minutes for patients and 30–60 minutes for providers. All design sessions were audio-recorded. Participants were shown wireframes developed using an app prototyping tool (Fluid UI) for user interface design40 and were encouraged to “think aloud” to describe their reactions to questions about each app feature.41 We alternated design sessions with patients and providers to ensure we reconciled both perspectives. For Spanish-speaking patient participants, 1 or 2 native Spanish speakers conducted the design sessions. We aimed to keep the reading level for the patient participants at the 6th grade level and assessed patients’ comprehension during design sessions. We gathered additional input through informal meetings with clinic medical directors, nurses, and 2 nurses in system leadership positions. Provider design sessions and meetings with clinic medical directors included questions about workflow and EHR integration. We met weekly with a user-experience expert to advise on interface considerations. We continued recruiting until we no longer identified any new requirements for features those users considered to be critical (saturation).

Audio recordings were independently reviewed by 2 research team members. We used conventional content analysis methods to identify user reactions which fell into the following categories: specific functionality, usability issues, and suggestions for changes to app wording, functionality, or workflow.42 After the team members reviewed the recordings, they recorded a bulleted list of findings within each of these categories in Microsoft Word documents, discussed and resolved any differences considering relevant NASSS domains, and applied changes to the wireframes for future design sessions.

PCP survey

To quantify the scope and prevalence of the broad range of preferences identified in PCP design sessions, we developed a survey for PCPs who treat asthma patients from the 5 study clinics. We drafted survey items based on findings from the design sessions and refined the items through cognitive testing with 3 practicing PCPs. Survey question topics included preferences for reminders that PROs were available in the EHR, graphical presentation of PROs, and anticipated benefits of the intervention. We distributed the survey using REDCap.40 PCPs were offered a $25 gift card upon completion. We calculated aggregate results of discrete items. For open-ended questions, 2 research team members independently reviewed and coded all responses into 2 categories: provider notification of asthma data within workflows and visual display of asthma data. The research team members then discussed each response individually and selected representative quotes that explained quantitative survey findings.

Software development, enterprise data service provisioning, and EHR app user interface integration

We used an agile software development approach to implement the user interface and EHR integration requirements that emerged from our UCD process and to ensure that our application would be delivered within the constraints of our study period and MGB’s emerging governance process for digital health.43 We met regularly with our app developer (First Line Software, Inc.) and MGB’s EHR governance teams to determine the most feasible strategies for implementing our technical and functional requirements. We identified key EHR application programming interfaces (APIs) that were required to pull certain data elements into the application; initiated the enterprise data service provisioning process for requesting APIs; identified graphical user-interface constraints for provider access to third-party applications via the EHR; and finally, iteratively refined the user interface, developed using React Native, an open-source mobile application framework.44 To ensure security of the protected health information (PHI) collected by the app, the application was hosted inside the health system’s firewall on a secure server and underwent a rigorous security review as required by our institution’s Research Information Security Office.

RESULTS

We analyzed 26 exit interview transcripts (21 patients, 5 providers) from our prior feasibility study, conducted 21 new design sessions (15 patients, 6 providers), and analyzed 55 survey responses from 78 PCPs (71% response rate), all of whom were physicians. The demographic characteristics of these participants are summarized in Table 2. Demographic data from the prior feasibility study were reported previously.26

Table 2.

Characteristics of study participants

| Design session participants | |
|---|---|
| Patients | N = 15 |
| Age (years) | |
| < 30 | 2 (13) |
| 31-45 | 6 (40) |
| > 45 | 7 (47) |
| Ethnicity | |
| Hispanic or Latinx | 5 (33) |
| Not Hispanic or Latinx | 10 (67) |
| Race | |
| White | 10 (67) |
| Non-White Native American, Black or African American | 5 (33) |
| Gender | |
| Male | 4 (27) |
| Female | 11 (73) |
| Language | |
| Spanish | 6 (40) |
| English | 9 (60) |
| Level of Education | |
| Did not finish high school | 1 (7) |
| High school diploma or GED | 1 (7) |
| Some college but no degree | 3 (20) |
| 2-year college degree | 3 (20) |
| 4-year college degree or more | 7 (47) |
| PCPs | N = 6 |
| Gender | |
| Male | 3 (50) |
| Female | 3 (50) |
| Number of Years since Residency (years) | |
| < 6 | 1 (17) |
| 6-15 | 3 (50) |
| > 15 | 2 (33) |
| PCP survey participants | N = 55 |
| Gender | |
| Male | 25 (45) |
| Female | 30 (55) |
| Number of Years since Residency (years)* | |
| < 6 | 8 (15) |
| 6-15 | 17 (31) |
| > 15 | 27 (49) |
| Number of half day primary care sessions per week | |
| <3 | 28 (51) |
| >4 | 27 (49) |

∗Incomplete participant values recorded.

Patient-facing, PCP-facing, and nurse-facing requirements informed by the corresponding NASSS domain(s) are described in Table 3. Given the interdependencies and interactions among the 3 types of users, requirements based on each user’s experience were reconciled with the perspectives of other users. For example, requirements for patient-facing components were identified based on input not only from patients but also from PCPs and nurses. We note divergences in requirements within and among the 3 user groups (eg, different preferences among PCPs regarding PRO displays). Specific EHR integration requirements (eg, EHR inbox messaging) were necessary to support certain critical user requirements (eg, callback requests routed to a triage nurse). Many of the requirements were determined by a combination of results from the prior study, design sessions, and survey results.

Patient-facing requirements

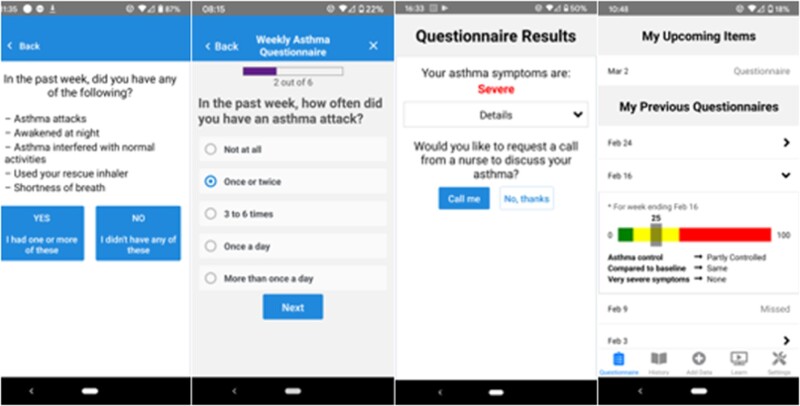

Results from the UCD process and application of NASSS translated into requirements for the patient-facing app, which were largely consistent for English- and Spanish-speaking patients. Feasibility test data and design sessions suggested strong user support for administering the questionnaires on a weekly basis with 48-hour deadlines (eg, “I think it’s great that you give that amount of time. I just did it, because once it pops up, I just do it just to get it off my… to do list.” [Test Patient exit interview]). However, for patients with well-controlled asthma over several weeks or months, feasibility test and design session participants suggested that completing all 5 questions was too burdensome. Consequently, we designed a modification to allow patients without symptoms during the preceding week to complete a single question, which asked whether they had any relevant symptoms, instead of the entire 5-item PRO questionnaire. An affirmative response prompted the 5-item PRO questionnaire (Figure 1). Patients participating in design sessions viewed this feature favorably: “I like the option of putting ‘no’ and then you’re not going to answer all these other questions.” (Design Patient)

Figure 1.

Example screenshots of patient-facing app. The app prompts patients through push notifications to answer 1 or 5 questions depending on their previous week’s responses. Patients with poor or worsening asthma control are offered the option to request a callback from a nurse in their clinic.
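The branching between the 1-item and 5-item questionnaires can be illustrated with a minimal sketch. This is not the study's implementation: the item identifiers, the `WeeklyResponse` type, and the callback-style `ask_screener` and `ask_item` parameters are all hypothetical names introduced here for illustration, and the sketch assumes the screening question is shown first each week.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical item identifiers; the study's questionnaire wording is not reproduced here.
FULL_ITEMS = ("item_1", "item_2", "item_3", "item_4", "item_5")


@dataclass
class WeeklyResponse:
    had_symptoms: bool
    # None when the patient answered only the single screening question.
    item_scores: Optional[Dict[str, int]]


def administer_weekly_questionnaire(
    ask_screener: Callable[[], bool],
    ask_item: Callable[[str], int],
) -> WeeklyResponse:
    """Ask the single screening question; expand to all 5 items only on 'yes'."""
    if not ask_screener():
        # No symptoms in the preceding week: the 1-item path ends here.
        return WeeklyResponse(had_symptoms=False, item_scores=None)
    # An affirmative answer prompts the full 5-item questionnaire.
    scores = {item: ask_item(item) for item in FULL_ITEMS}
    return WeeklyResponse(had_symptoms=True, item_scores=scores)
```

Passing the prompts in as functions keeps the branching logic independent of any particular user interface, which is one way such logic could be exercised without a device.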

Secondary analysis of our prior feasibility study data demonstrated support for an app feature allowing patients to request callbacks for deteriorating PROs: “I would always answer ‘yes’ [when the app prompted me to request a call] … after a couple of hours, someone from the staff would call me to see how I was doing and if I needed to go in to see the doctor” (Test Patient exit interview). However, the “mandatory callback” feature, in which patients with poor asthma control are automatically called by a nurse, was perceived as too burdensome for nurses and low-value by patients: “it was just annoying, because I’m dealing with asthma… I’m going to call my doctor. I don’t want you [the app] to call my doctor for me” (Test Patient exit interview). Specifically, patients and the nurse participant from our prior feasibility study suggested removing the mandatory callback requirement and only including the optional feature, which allowed patients to decline to request a callback. Therefore, we removed the mandatory callback feature and did not assess it further in design sessions.

Other patient-facing requirements included: ability to record notes (prior feasibility study test patients, design sessions); triggers and peak flow measurements to engage some patients with poor asthma control (prior feasibility study test patients, design sessions); previsit reminders to discuss asthma data with providers at upcoming clinic visits (design sessions); and educational information—videos, brief tips about asthma, and general medical care (design sessions). The latter requirement was also reflected in feedback during design sessions from PCPs who supported empowering patients through education, which could then be reinforced during clinic visits. PCPs provided guidance on high-priority education topics for patients to view on the app, including how to use an inhaler and how to distinguish rescue from controller inhalers. Two Spanish-speaking patients in our design sessions requested that the app offer faster response times for requested calls. One suggested allowing the app user to enter a level of urgency for the call. However, this request could not be reconciled with existing clinic workflows for responding to patient queries.

PCP-facing requirements

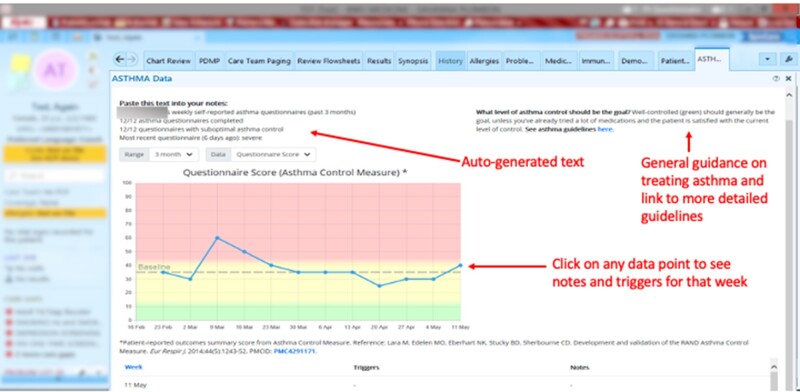

We identified 2 PCP-facing requirements from design sessions and the PCP survey (Tables 3 and 4). The first requirement was a dashboard containing patients’ asthma data that would be accessible with minimal effort from the patient’s chart in the EHR. An interactive graphical display of PRO data (Figure 2) was iteratively developed with input from PCPs. For this display, survey responses were almost evenly divided regarding whether higher numbers on the graph should mean better asthma control (47%, eg, “I'm trained to think about asthma in terms of ACT scores, so to me a higher number is better”), or worse asthma control (40%, eg, “I usually think about asthma control in terms of how frequent and severe are symptoms–so the higher [the score] = red = bad–fits my general mindset”).
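One way to accommodate both graph orientations is to store each score once and flip it only at display time. The sketch below is illustrative and not the dashboard's actual logic: the function name and parameters are hypothetical, and it assumes a summed score on a bounded scale. The 5 to 25 range in the usage lines is the Asthma Control Test range, used only as an example because a survey respondent mentioned ACT scores.

```python
def to_display_score(
    score: int,
    min_score: int,
    max_score: int,
    viewer_prefers_higher_is_better: bool,
    stored_higher_is_better: bool = True,
) -> int:
    """Return the score in the orientation the viewer prefers.

    Reflecting a score within its range (min + max - score) reverses the
    direction of the scale while preserving distances between points.
    """
    if not min_score <= score <= max_score:
        raise ValueError(f"score {score} outside [{min_score}, {max_score}]")
    if viewer_prefers_higher_is_better == stored_higher_is_better:
        return score
    return min_score + max_score - score


# Example on an assumed 5-25 scale where stored scores mean "higher is better".
well_controlled = to_display_score(20, 5, 25, viewer_prefers_higher_is_better=True)
flipped = to_display_score(20, 5, 25, viewer_prefers_higher_is_better=False)
```

Because the reflection is its own inverse, applying it twice returns the stored value, so no information is lost by rendering the alternative orientation.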

Table 4.

Results of PCP survey about intervention requirements

| | No. (%) |
|---|---|
| Survey Respondents (PCPs) | 55 |
| Preferred method of being reminded of PRO data* | |
| In-basket** message (in Staff Message folder) | 35 (64) |
| Email to your Partners email account | 2 (4) |
| Interruptive (pop up) alert in Epic (also called BPA) | 17 (31) |
| Have a medical assistant tell me | 23 (42) |
| None | |
| Preferred category of patients about which to be reminded of PRO data (select 1) | |
| All patients with the app (even those with no completed questionnaires, eg, to see non-adherence) | 6 (11) |
| Only patients with some PRO data | 16 (29) |
| Only patients with reported worse asthma control since last visit | 27 (49) |
| Other | 4 (7) |
| Never (I don’t want to be reminded) | 2 (4) |
| Preferred timing of reminder PRO data (check all that apply) | |
| Day before visit | 23 (42) |
| Day of visit | 19 (35) |
| When I open the patient's chart | 33 (60) |
| Other times | 8 (15) |
| Never (I don't want to receive a reminder) | 1 (2) |
| Preferred graph (select 1) | |
| Graph 1 (higher means better asthma control) | 26 (47) |
| Graph 2 (higher means worse asthma control) | 22 (40) |
| I don’t have a preference | 7 (13) |
| Usefulness of generated text (select 1) | |
| Yes, useful often | 25 (45) |
| Yes, useful sometimes | 22 (40) |
| No, not useful | 5 (9) |
| Not sure | 3 (5) |
| Anticipated benefits of intervention (check all that apply) | |
| Improve asthma control | 36 (65) |
| Reduce asthma emergencies (eg, ED visits) | 25 (45) |
| Give patients faster access to care | 21 (38) |
| Make patients feel more connected to their care team | 38 (69) |
| Make patients more aware of their asthma | 44 (80) |
| Give me better data for treatment decisions | 42 (76) |
| No major benefit | 2 (4) |
| Change in interest in participating in digital remote monitoring interventions in the era of COVID-19 | |
| I am much more interested/I am more interested | 32 (58) |
| I have the same level of interest | 21 (38) |
| I am less interested/I am much less interested | 1 (2) |

∗Counts are provided for response of “Would be Nice” or “Want This.” Other response options were “I don’t want this” and “No Preference.”

∗∗In-basket refers to the EHR inbox.

Figure 2.

Asthma dashboard in Electronic Health Record. All PRO data, notes, and triggers entered by the patient are available with 1 click from the patient’s chart. An auto-generated text summary of the patient’s PRO data and link to current guidelines (developed by an asthma expert based on guidelines and asthma research) is shown. EHR is from © 2021 Epic Systems Corporation, used with permission. Dashboard was developed by the research team.

Design sessions with providers yielded several enhancements to the PRO dashboard: an auto-generated text summary of the patient’s recent asthma data that could be incorporated into notes, and point-of-care access to evidence-based guidelines for asthma management (a statement summarizing asthma control goals and a link to more detailed guidance—the statement was developed by a pulmonologist with expertise in asthma from our institution and incorporated guidelines from the National Heart, Lung, and Blood Institute, the Global Initiative for Asthma, and recent asthma literature). In free-text comments and in the design session, PCPs suggested additional types of EHR data that could be incorporated into the display to make it more useful (eg, current asthma medications and refill data, recent ED visits or hospitalizations, name of asthma specialist treating patient). However, other respondents preferred to access these data through current EHR locations (eg, “I don't need other data. I have a routine for following/checking other data that works for me.”)

The second requirement was previsit reminders: all 6 PCPs in design sessions endorsed the idea of having the app remind patients to bring their phones and medications to their visits. Of the 55 providers who responded to the survey, 53 (96%) wanted to be reminded to check the PROs. Of other options offered, 35 (64%) PCPs preferred to receive an EHR inbox message as a previsit reminder; 23 (42%) preferred in-person reminders from a nurse or medical assistant; and 17 (31%) wanted to receive a clinical decision support alert (ie, “Best Practice Alert”) during the encounter. In written survey comments, 3 PCPs suggested adding the reminder in the “huddle note” (ie, a short note within the daily clinic schedule view in the EHR).
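As a quick arithmetic check, the percentages above can be reproduced from the reported counts, using the 55 survey respondents as the denominator. This is only a sketch: the labels are paraphrased, and the rounding rule (half up to a whole percent) is an assumption that happens to match the reported figures.

```python
# Counts as reported in the text; the denominator is the 55 survey respondents.
RESPONDENTS = 55
counts = {
    "wants a reminder to check PROs": 53,
    "EHR inbox message": 35,
    "in-person reminder from nurse or medical assistant": 23,
    "clinical decision support alert": 17,
}


def percent(count: int, total: int = RESPONDENTS) -> int:
    """Return count/total as a whole-number percentage (round half up, an assumption)."""
    return int(count * 100 / total + 0.5)


percentages = {label: percent(n) for label, n in counts.items()}
for label, pct in percentages.items():
    print(f"{label}: {counts[label]}/{RESPONDENTS} = {pct}%")
```

The three reminder options sum to 75 responses from 55 respondents, which suggests that respondents could select more than one option.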

Nurse-facing requirements

We identified 2 requirements for nurses to be able to feasibly respond to callback requests in their existing workflows. First, nurses in all 5 clinics required all patient callback requests to be sent in the form of EHR inbox messages to the individual nurse or nurse pool assigned to the requesting patient and affiliated with the clinic. Because nurses’ workflows were centered around responding to EHR inbox messages, nurse managers suggested that other methods for delivering the requests (eg, email) would not be reliable. Nurses requested that these messages include the patient’s responses to the recent questionnaire, directions for how to access the asthma data within Epic, and other key information to assist with prioritizing the callback request (eg, if they had already called the clinic about their asthma).

Second, nurse leaders suggested that a prespecified nurse-driven clinical protocol designed specifically for asthma would be most effective for triaging callback requests. Otherwise, nurses would default to referring patient-reported issues they identify to the PCP rather than addressing these issues themselves, creating an inefficient workflow.
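The first nurse-facing requirement can be illustrated with a minimal sketch of how a callback request might be turned into an inbox message. All names here (`CallbackRequest`, `build_inbox_message`, the field names, and the message wording) are hypothetical and are not taken from the study's implementation; the sketch only shows the routing rule (individual nurse if assigned, otherwise the clinic's nurse pool) and the message contents the nurses asked for.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CallbackRequest:
    """Hypothetical shape of a patient's callback request."""
    patient_mrn: str
    clinic_pool: str                # nurse pool for the patient's clinic
    assigned_nurse: Optional[str]   # individual nurse, if one is assigned
    responses: Dict[str, str]       # questionnaire item -> patient's answer
    already_called_clinic: bool     # triage hint requested by nurses


def build_inbox_message(req: CallbackRequest) -> dict:
    """Assemble recipient, subject, and body for an EHR inbox message."""
    # Route to the individual nurse when known, otherwise to the clinic pool.
    recipient = req.assigned_nurse or req.clinic_pool
    lines = [
        "Patient requested a nurse callback about asthma symptoms.",
        "",
        "Most recent questionnaire responses:",
    ]
    lines += [f"- {item}: {answer}" for item, answer in req.responses.items()]
    called = "yes" if req.already_called_clinic else "no"
    lines += [
        "",
        f"Already called the clinic about asthma: {called}",
        "To view all asthma data, open the asthma dashboard from the patient's chart.",
    ]
    return {
        "recipient": recipient,
        "subject": f"Asthma callback request (MRN {req.patient_mrn})",
        "body": "\n".join(lines),
    }
```

Keeping the triage hints in the message body, rather than behind a link, reflects the nurses' request to prioritize callbacks from within their existing inbox workflow.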

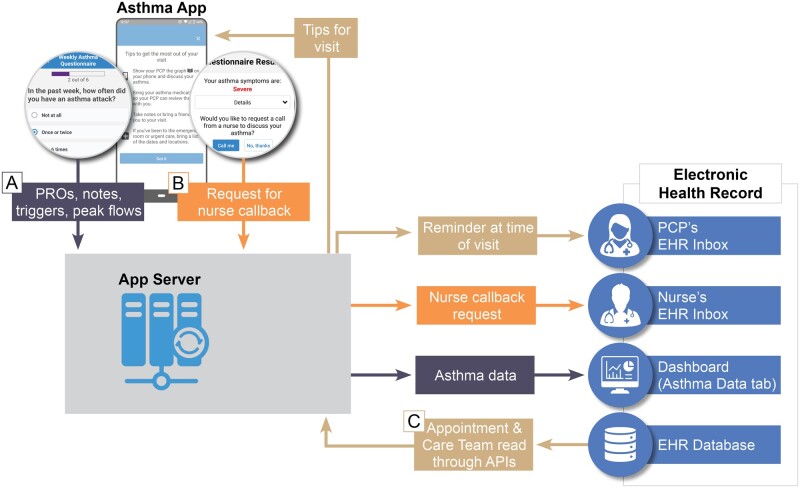

EHR integration requirements

We identified 3 EHR integration requirements (Figure 3). First, PCPs indicated that the asthma PRO dashboard must be easily accessible from patients’ charts before or during a visit. To address this, we were required to register the third-party application as an “extension.” The PRO dashboard could then be added to the main navigation bar for all PCP users, and individual PCP users also had the option of customizing their patient chart view to include the PRO dashboard as a default tab (Figure 2). When selected, the PRO dashboard application reads the identifiers of the current PCP user (eg, username) and the selected patient (eg, MRN) from the EHR and renders that patient’s dashboard for that user. The PRO dashboard automatically authenticates the current PCP user using our institution’s Single Sign-On (SSO) process, thereby eliminating a second login step.
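The launch step described above can be sketched as follows. This is an illustration under stated assumptions, not the study's code: the parameter names `user` and `mrn`, the URL path, and the check that the EHR-supplied username matches the SSO-authenticated user are all hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping
from urllib.parse import quote


@dataclass(frozen=True)
class LaunchContext:
    """User and patient identifiers passed from the EHR at launch (hypothetical)."""
    username: str
    mrn: str


def parse_launch_context(params: Mapping[str, str]) -> LaunchContext:
    """Extract the current user and selected patient from launch parameters."""
    username = params.get("user", "").strip()
    mrn = params.get("mrn", "").strip()
    if not username or not mrn:
        raise ValueError("launch context must include both a user and a patient MRN")
    return LaunchContext(username=username, mrn=mrn)


def dashboard_path(ctx: LaunchContext, sso_username: str) -> str:
    """Return the dashboard path for the selected patient.

    Assumption: the app refuses to render if the EHR-supplied username does not
    match the user authenticated through SSO.
    """
    if ctx.username.lower() != sso_username.lower():
        raise PermissionError("EHR user does not match the SSO-authenticated user")
    return f"/dashboard/patients/{quote(ctx.mrn, safe='')}"
```

For example, `dashboard_path(parse_launch_context({"user": "jdoe", "mrn": "000000"}), "jdoe")` yields the path for that patient without any further login prompt.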

Figure 3.

Asthma App Technical Architecture and EHR Integration. Three information flows (A, B, and C) are shown with dark blue, orange, and tan, respectively. (A) PROs and other data entered into the app by the patient are made available to the provider in a dashboard in the EHR along with auto-generated text that can be pasted into a note. PRO data are stored in the app server but currently not written back to the EHR database. The dashboard is accessible to the provider using Single Sign On and does not require a separate login. (B) If symptoms meet certain criteria, the patient will have the option to request a nurse callback. This request is sent through the app server to the appropriate nurse or nurse pool’s EHR inbox. (C) Upcoming visit information is read from the EHR database on a daily basis. This information is fed through the app server, which sends information to both patients and providers before a visit. Patients receive tips for their visit, reminding them, for instance, to bring their phone and discuss their asthma with their providers. Providers receive EHR inbox messages reminding them to check the dashboard for PROs and other information gathered by the app regarding their patients.

Second, a data services API was required to send EHR inbox notifications to nurses and PCPs for patient callback requests and previsit reminders, respectively. Since a standards-based API that supports EHR inbox messaging did not exist, we used a custom API built by our institution that allows a third-party app to send an EHR inbox message to any specified EHR user or pool of users. Third, to send previsit tips to patients and reminders to PCPs, the application required access to visit schedules and the identity of the PCP. While visit schedules and PCP identity could be accessed via Fast Healthcare Interoperability Resources (FHIR) APIs (“Appointment” and “CareTeam” resources, respectively), we initially decided to use our institution’s custom APIs (given our software vendor’s experience) while exploring the feasibility of transitioning to FHIR APIs supported by US Core profiles as part of our scale and spread efforts.
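To make the FHIR alternative concrete, the sketch below builds the kind of search requests that a transition from custom APIs might involve. It is an assumption-laden illustration, not the implementation used in this study (which relied on institutional custom APIs): the base URL is a placeholder, and whether a given server permits these searches, particularly an Appointment search without a patient parameter, depends on the server's configuration. The search parameters used (`date` and `status` on Appointment; `patient` and `status` on CareTeam) are defined in FHIR R4.

```python
from datetime import date, timedelta
from urllib.parse import urlencode

FHIR_BASE = "https://ehr.example.org/fhir"  # placeholder, not a real endpoint


def appointment_search_url(day: date, base: str = FHIR_BASE) -> str:
    """Search URL for booked appointments that start on the given day."""
    params = [
        ("date", f"ge{day.isoformat()}"),                       # on or after the day
        ("date", f"lt{(day + timedelta(days=1)).isoformat()}"),  # before the next day
        ("status", "booked"),
    ]
    return f"{base}/Appointment?{urlencode(params)}"


def care_team_search_url(patient_id: str, base: str = FHIR_BASE) -> str:
    """Search URL for a patient's active care teams, used to identify the PCP."""
    params = [("patient", patient_id), ("status", "active")]
    return f"{base}/CareTeam?{urlencode(params)}"
```

A daily job could call `appointment_search_url` for the upcoming clinic day and then look up each patient's care team to decide which PCP should receive the previsit reminder. Sending the reminder itself would still require an inbox messaging API, for which no standard existed at the time of the study.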

DISCUSSION

Using the NASSS framework to guide our UCD process, we identified user and EHR integration requirements for the design of a scalable, remote symptom monitoring intervention for primary care. Patient-facing requirements included allowing patients with no active symptoms to answer only 1 item per week instead of 5; allowing patients to view educational videos and enter notes, triggers, and peak flows; and sending relevant visit-related tips to encourage the patient to discuss their asthma with their PCPs. PCP-facing requirements included allowing the dashboard to be viewed in the EHR with 1 click from the patient’s chart and sending the PCP an EHR inbox message preceding patient visits. Nurse-facing requirements included receiving requests for callbacks via EHR inbox message, and a clinical triage protocol for handling such calls. While we were able to integrate the application’s PRO dashboard into our institution’s EHR for clinician access, requirements for sending EHR inbox messages could not be addressed with current open standards, requiring us to use our institution’s previously developed custom APIs.

By applying research methods in each NASSS domain, we were able to identify requirements that would be pertinent to the potential broad use of our original intervention for asthma in a primary care setting once adapted. We were able to reconcile requirements elicited from English- and Spanish-speaking patients, PCPs, and nurses. Inconsistencies in PCP preferences may reflect variations in PCP workflows identified in prior studies.45,46 Several preference variations could be addressed through configuration options. For example, most PCPs preferred receiving an EHR inbox message reminder regarding available asthma data, while others preferred an alert or to be told by clinic staff. “Alert fatigue” may be the reason most prefer not to receive an alert.47–50 PCPs differed regarding the dashboard’s purpose: whether it should solely display asthma PROs or serve as a comprehensive dashboard for all asthma-related data in the EHR. While clinicians may distrust data sourced from third-party apps, this may become less of a problem as these users become accustomed to integrated apps. The most striking variation we found was divergent preferences among PCPs for the direction of better or worse asthma control on the dashboard, suggesting the need for more standardized presentations to enable consistent communication among patients and providers.51–54

Our study addresses a notable gap in prior literature by identifying requirements for a scalable digital remote monitoring intervention for adult asthma patients that may have relevance to other clinical domains. As care is increasingly delivered remotely, a trend accelerated by the COVID-19 pandemic, such requirements are likely to become more important.9 By applying NASSS framework domains (especially technology, value proposition, and adopter systems for patients and providers), we were able to prioritize scalability throughout our requirements elicitation process. Our effort is distinct from other reported efforts at developing clinically integrated remote monitoring interventions, which lack prioritization of requirements,55 require additional clinical staff, such as care managers, to monitor data,56 or require a device (eg, for measuring inhaler usage).57 These alternative approaches may encounter scalability issues, such as extra complexity due to prioritizing less important requirements, or cost challenges from using devices where they are not necessary. Furthermore, we provide new knowledge regarding how a third-party application can be integrated into an EHR with patient- and provider-facing components to enable the use of PROs for between-visit monitoring, complementing our previous work in the hospital setting.6,58–61 Even with recent advances, FHIR standards could not address all of our requirements for this application, suggesting a need for additional standards, specifically for sending messages to EHR inboxes.

Limitations

Our study has several limitations. First, our remote monitoring intervention is restricted to a single condition. Additional UCD is required to understand requirements for other medical conditions (eg, mental health, rheumatological diseases). Second, clinical users were affiliated with 1 academic health center that used a single EHR (Epic Systems Corporation). Requirements may vary for other health systems and EHR vendors. As described in the NASSS framework, organizations with lower capacity to innovate may have more complex requirements. Also, organizations without a clearly defined governance process for integrating third-party apps into the EHR will likely encounter challenges. Third, our app is dependent on smartphone ownership and use by patients. Although a growing majority of patients have smartphones, some may have difficulty using them (though many may have a caregiver who uses a smartphone).7,62–64 Fourth, the PRO data were stored in the app server and not written back to the EHR database. However, institutions are currently considering how to integrate and utilize high-value patient-generated data in the EHR.65 Finally, EHR functionality and clinician use of EHRs are evolving. As PRO functionality within native EHRs is increasingly adopted, user requirements for clinicians’ engagement with these data may change, and future validation and replication of our findings are necessary.

CONCLUSION

We determined user and EHR integration requirements for a clinically integrated remote symptom monitoring intervention for asthma using PROs. The requirements reflect the NASSS framework and are designed to scale across primary care. This study demonstrates how third-party apps can be used for PRO-based between-visit monitoring in a real-world clinical setting with the goal of maximizing use, usability, and scalability in parallel with native EHR functionality and patient portal offerings.6,58–61,66–68 We identified gaps in current technical and graphical standards that would facilitate spread of this intervention. Although we focused exclusively on asthma, these findings may generalize to other chronic conditions that benefit from routine symptom monitoring using standardized PROs, such as rheumatologic disease, mental health illness, and irritable bowel disease.69 Similar requirements elicitation approaches also have the potential to develop scalable interventions for monitoring overall health of patients with multiple chronic conditions, such as captured by global health PROs which measure general physical, mental, and social health.70–73 With further testing, iterative development, and continued attention to scalability, the rapidly evolving efforts of digital remote monitoring between visits may be achievable at the population level for patients with chronic conditions.

FUNDING

This project was supported by grant number R18HS026432 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

AUTHOR CONTRIBUTIONS

Concept and design: RSR, AKD; acquisition of data: RSR, SP1, SP2; analysis and interpretation of data: RSR, SP1, JS, AKD; drafting of the manuscript: RSR, SP1; critical revision of the manuscript for important intellectual content: all authors; obtaining funding: RSR, AKD; administrative, technical, or logistic support: SP1, SP2, JS; and supervision: RSR, AKD.

DATA AVAILABILITY STATEMENT

The data underlying this article cannot be shared publicly due to the privacy of individuals that participated in the study. The data will be shared on reasonable request to the corresponding author.

ACKNOWLEDGMENTS

The authors would like to thank Pamela Garabedian for advising on usability and Christopher H. Fanta, William W. Crawford, and Alec Peterson for providing input on clinical and implementation aspects of the intervention.

CONFLICT OF INTEREST STATEMENT

Dr Bates reports grants and personal fees from EarlySense, personal fees from CDI Negev, equity from ValeraHealth, equity from Clew, equity from MDClone, personal fees and equity from AESOP, and grants from IBM Watson Health outside the submitted work. Dr Foer reports research funding from IBM Watson Health and CRICO for work unrelated to this submission. Dr Dalal reports personal fees from MayaMD, and an equity interest in the I-Pass institute for work unrelated to this submission. No other authors have competing interests to declare.

REFERENCES

- 1. Buttorff C, Ruder T, Bauman M. A Multiple Chronic Conditions in the United States; 2008. www.rand.org/giving/contribute Accessed February 3, 2021.

- 2. Martin AB, Hartman M, Lassman D, Catlin A, National Health Expenditure Accounts Team. National Health Care spending in 2019: steady growth for the fourth consecutive year. Health Aff (Millwood) 2021; 40 (1): 14–24.

- 3. The Duration of Office Visits in the United States, 1993 to 2010 | AJMC. 2014. https://www.ajmc.com/view/the-duration-of-office-visits-in-the-united-states-1993-to-2010 Accessed February 12, 2021.

- 4. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008-2015. 2016. https://dashboard.healthit.gov/evaluations/data-briefs/non-federal-acute-care-hospital-ehr-adoption-2008-2015.php Accessed February 12, 2021.

- 5. Dalal AK, Piniella N, Fuller TE, et al. Evaluation of electronic health record-integrated digital health tools to engage hospitalized patients in discharge preparation. J Am Med Inform Assoc 2021. doi:10.1093/jamia/ocaa321.

- 6. Fuller TE, Pong DD, Piniella N, et al. Interactive digital health tools to engage patients and caregivers in discharge preparation: implementation study. J Med Internet Res 2020; 22 (4): e15573.

- 7. Pew Research Center. Mobile Fact sheet. Demographics of Mobile Device Ownership and Adoption in the United States. 2018. http://www.pewinternet.org/fact-sheet/mobile/ Accessed April 6, 2020.

- 8. Boersma P, Black LI, Ward BW. Prevalence of multiple chronic conditions among US adults, 2018. Prev Chronic Dis 2020; 17: 200130.

- 9. Oudshoorn N. How places matter: telecare technologies and the changing spatial dimensions of healthcare. Soc Stud Sci 2012; 42 (1): 121–42.

- 10. Wang L, Foer D, Bates DW, Boyce JA, Zhou L. Risk factors for hospitalization, intensive care and mortality among patients with asthma and COVID-19. J Allergy Clin Immunol 2020. doi:10.1016/j.jaci.2020.07.018.

- 11. Palacio A, Tamariz L. Social determinants of health mediate COVID-19 disparities in South Florida. J Gen Intern Med 2021; 36 (2): 472–7.

- 12. Wu C, Chen X, Cai Y, et al. Risk factors associated with acute respiratory distress syndrome and death in patients with coronavirus disease 2019 pneumonia in Wuhan, China. JAMA Intern Med 2020; 180 (7): 934–43.

- 13. Gordon WJ, Henderson D, Desharone A, et al. Remote patient monitoring program for hospital discharged COVID-19 patients. Appl Clin Inform 2020; 11 (5): 792–801.

- 14. Chan YFY, Wang P, Rogers L, et al. The Asthma Mobile Health Study, a large-scale clinical observational study using ResearchKit. Nat Biotechnol 2017; 35 (4): 354–62.

- 15. Office of the National Coordinator for Health Information Technology (ONC). 2020–2025 Federal Health IT Strategic Plan. https://www.healthit.gov/topic/2020-2025-federal-health-it-strategic-plan Accessed August 9, 2021.

- 16. Gandrup J, Ali SM, McBeth J, van der Veer SN, Dixon WG. Remote symptom monitoring integrated into electronic health records: a systematic review. J Am Med Inform Assoc 2020; 27 (11): 1752–63.

- 17. Michel JJ, Mayne S, Grundmeier RW, et al. Sharing of ADHD information between parents and teachers using an EHR-linked application. Appl Clin Inform 2018; 9 (4): 892–904.

- 18. Cox ED, Dobrozsi SK, Forrest CB, et al. Considerations to support use of patient-reported outcomes measurement information system pediatric measures in ambulatory clinics. J Pediatr 2021; 230: 198–206.e2.

- 19. Rudin RS, Bates DW, MacRae C. Accelerating innovation in health IT. N Engl J Med 2016; 375 (9): 815–7.

- 20. Brooks F. No silver bullet: essence and accidents of software engineering. Computer (Long Beach Calif) 1987; 20 (4): 10–9.

- 21. Greenhalgh T, Wherton J, Papoutsi C, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res 2017; 19 (11): e367.

- 22. National Quality Forum. Asthma assessment. https://www.qualityforum.org/QPS/MeasureDetails.aspx?standardID=366&print=0&entityTypeID=1 Accessed February 12, 2021.

- 23. Lang DM. New asthma guidelines emphasize control, regular monitoring. Cleve Clin J Med 2008; 75 (9).

- 24. Wisnivesky JP, Lorenzo J, Lyn-Cook R, et al. Barriers to adherence to asthma management guidelines among inner-city primary care providers. Ann Allergy Asthma Immunol 2008; 101 (3): 264–70.

- 25. Asthma Prevalence. https://www.cdc.gov/asthma/data-visualizations/prevalence.htm Accessed February 12, 2021.

- 26. Rudin RS, Fanta CH, Qureshi N, et al. A clinically integrated mHealth app and practice model for collecting patient-reported outcomes between visits for asthma patients: implementation and feasibility. Appl Clin Inform 2019; 10 (5): 783–93.

- 27. Rudin RS, Fanta CH, Predmore Z, et al. Core components for a clinically integrated mHealth app for asthma symptom monitoring. Appl Clin Inform 2017; 8 (4): 1031–43.

- 28. Fletcher MJ, Tsiligianni I, Kocks JWH, et al. Improving primary care management of asthma: do we know what really works? NPJ Prim Care Respir Med 2020; 30 (1): 1–11.

- 29. Trevor JL, Chipps BE. Severe asthma in primary care: identification and management. Am J Med 2018; 131 (5): 484–91.

- 30. National Center for Health Statistics. National Ambulatory Medical Care Survey: 2015 State and National Summary Tables. 2015. https://www.cdc.gov/nchs/data/ahcd/2015_NAMCS_PRF_Sample_Card.pdf Accessed February 23, 2021.

- 31. Solomon DH, Rudin RS. Digital health technologies: opportunities and challenges in rheumatology. Nat Rev Rheumatol 2020; 16 (9): 525–11.

- 32. Lee YC, Lu F, Colls J, et al. Outcomes of a mobile app to monitor patient reported outcomes in rheumatoid arthritis: a randomized controlled trial. Arthritis Rheumatol 2021; article: 41686. doi:10.1002/art.41686.

- 33. Beckman AL, Mechanic RE, Shah TB, Figueroa JF. Accountable Care Organizations during Covid-19: routine care for older adults with multiple chronic conditions. Healthc (Amst) 2021; 9 (1): 100511.

- 34. Williams K, Markwardt S, Kearney SM, et al. Addressing implementation challenges to digital care delivery for adults with multiple chronic conditions: stakeholder feedback in a randomized controlled trial. JMIR mHealth uHealth 2021; 9 (2): e23498.

- 35. 24765-2017 - ISO/IEC/IEEE International Standard - Systems and software engineering–Vocabulary | IEEE Standard | IEEE Xplore. https://ieeexplore.ieee.org/document/8016712 Accessed May 14, 2021.

- 36. Software and Systems Engineering Vocabulary. https://pascal.computer.org/sev_display/index.action Accessed February 12, 2021.

- 37. Kinzie MB, Cohn WF, Julian MF, Knaus WA. A user-centered model for Web site design: Needs assessment, user interface design, and rapid prototyping. J Am Med Inform Assoc 2002; 9 (4): 320–30.

- 38. Martin B, Hanington B. Universal Methods of Design: 100 Ways to Research Complex Problems, Develop Innovative Ideas, and Design Effective Solutions. Beverly, MA: Rockport Publishers; 2012.

- 39. Greenhalgh T, Wherton J, Papoutsi C, et al. Analysing the role of complexity in explaining the fortunes of technology programmes: empirical application of the NASSS framework. BMC Med 2018; 16 (1): 66.

- 40. FluidUI.com - Create Web and Mobile Prototypes in Minutes. https://www.fluidui.com/ Accessed January 5, 2021.

- 41. IBM Research | Technical Paper Search | Using the “Thinking-aloud” Method in Cognitive Interface Design (Search Reports). https://dominoweb.draco.res.ibm.com/2513e349e05372cc852574ec0051eea4.html Accessed February 11, 2021.

- 42. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res 2005; 15 (9): 1277–88.

- 43. Shore J, Warden S. The Art of Agile Development: Pragmatic Guide to Agile Software Development. Sebastopol, CA: O’Reilly Media; 2007.

- 44. React Native. A framework for building native apps using React. https://reactnative.dev/ Accessed February 11, 2021.

- 45. Militello LG, Arbuckle NB, Saleem JJ, et al. Sources of variation in primary care clinical workflow: implications for the design of cognitive support. Health Inform J 2014; 20 (1): 35–49.

- 46. Holman GT, Beasley JW, Karsh BT, Stone JA, Smith PD, Wetterneck TB. The myth of standardized workflow in primary care. J Am Med Inform Assoc 2016; 23 (1): 29–37.

- 47. Kuperman GJ, Bobb A, Payne TH, et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc 2007; 14 (1): 29–40.

- 48. Van Der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006; 13 (2): 138–47.

- 49. Eslami S, Abu-Hanna A, de Keizer NF. Evaluation of outpatient computerized physician medication order entry systems: a systematic review. J Am Med Inform Assoc 2007; 14 (4): 400–6.

- 50. Kesselheim AS, Cresswell K, Phansalkar S, Bates DW, Sheikh A. Analysis & commentary: Clinical decision support systems could be modified to reduce “alert fatigue” while still minimizing the risk of litigation. Health Aff (Millwood) 2011; 30 (12): 2310–7.

- 51. Snyder CF, Smith KC, Bantug ET, et al.; PRO Data Presentation Stakeholder Advisory Board. What do these scores mean? Presenting patient-reported outcomes data to patients and clinicians to improve interpretability. Cancer 2017; 123 (10): 1848–59.

- 52. Brundage MD, Smith KC, Little EA, Bantug ET, Snyder CF, The PRO Data Presentation Stakeholder Advisory Board. Communicating patient-reported outcome scores using graphic formats: results from a mixed-methods evaluation. Qual Life Res 2015; 24 (10): 2457–72.

- 53. Nystrom DT, Singh H, Baldwin J, Sittig DF, Giardina TD. Methods for patient-centered interface design of test result display in online portals. EGEMS (Wash DC) 2018; 6 (1): 15.

- 54. Arcia A, Suero-Tejeda N, Spiegel-Gotsch N, Luchsinger JA, Mittelman M, Bakken S. Helping Hispanic family caregivers of persons with dementia “get the Picture” about health status through tailored infographics. Gerontologist 2019; 59 (5): e479–89.

- 55. Hui CY, Walton R, McKinstry B, Jackson T, Parker R, Pinnock H. The use of mobile applications to support self-management for people with asthma: a systematic review of controlled studies to identify features associated with clinical effectiveness and adherence. J Am Med Inform Assoc 2017; 24 (3): 619–32.

- 56. Kew KM, Cates CJ. Home telemonitoring and remote feedback between clinic visits for asthma. Cochrane Database Syst Rev 2016; (8): 2016.

- 57. Mosnaim GS, Stempel DA, Gonzalez C, et al. The impact of patient self-monitoring via electronic medication monitor and mobile app plus remote clinician feedback on adherence to inhaled corticosteroids: a randomized controlled trial. J Allergy Clin Immunol Pract 2021; 9 (4): 1586–94. doi:10.1016/j.jaip.2020.10.064.

- 58. Dalal AK, Piniella N, Fuller TE, et al. Evaluation of electronic health record-integrated digital health tools to engage hospitalized patients in discharge preparation. J Am Med Inform Assoc 2021; 28 (4): 704–12.

- 59. Bersani K, Fuller TE, Garabedian P, et al. Use, perceived usability, and barriers to implementation of a patient safety dashboard integrated within a vendor EHR. Appl Clin Inform 2020; 11 (1): 34–45.

- 60. Dalal AK, Dykes P, Samal L, et al. Potential of an electronic health record-integrated patient portal for improving care plan concordance during acute care. Appl Clin Inform 2019; 10 (03): 358–66.

- 61. Dalal AK, Dykes PC, Collins S, et al. A web-based, patient-centered toolkit to engage patients and caregivers in the acute care setting: a preliminary evaluation. J Am Med Inform Assoc 2016; 23 (1): 80–7.

- 62. Hoogland AI, Mansfield J, Lafranchise EA, Bulls HW, Johnstone PA, Jim HSL. eHealth literacy in older adults with cancer. J Geriatr Oncol 2020; 11 (6): 1020–2.

- 63. Rodriguez JA, Saadi A, Schwamm LH, Bates DW, Samal L. Disparities in telehealth use among California patients with limited English proficiency. Health Aff 2021; 40 (3): 487–95.

- 64. Rodriguez JA, Clark CR, Bates DW. Digital health equity as a necessity in the 21st century cures act era. J Am Med Assoc 2020; 323 (23): 2381–2.

- 65. Bakken S. Patients and consumers (and the data they generate): an underutilized resource. J Am Med Inform Assoc 2021; 28 (4): 675–6.

- 66. Bloomfield RA, Polo-Wood F, Mandel JC, Mandl KD. Opening the Duke electronic health record to apps: implementing SMART on FHIR. Int J Med Inform 2017; 99: 1–10.

- 67. Neinstein A, Thao C, Savage M, Adler-Milstein J. Deploying patient-facing application programming interfaces: thematic analysis of health system experiences. J Med Internet Res 2020; 22 (4): e16813.

- 68. Odisho AY, Lui H, Yerramsetty R, et al. Design and development of referrals automation, a SMART on FHIR solution to improve patient access to specialty care. JAMIA Open 2020; 3 (3): 405–12.

- 69. Rudin RS, Friedberg MW, Solomon DH. In the COVID-19 Era, and Beyond, Symptom Monitoring Should be a Universal Health Care Function | Health Affairs. Health Affairs Blog; 2020. https://www.healthaffairs.org/do/10.1377/hblog20200616.846648/full/ Accessed June 18, 2020.

- 70. Rose AJ, Bayliss E, Huang W, et al. Evaluating the PROMIS-29 v2.0 for use among older adults with multiple chronic conditions. Qual Life Res 2018; 27 (11): 2935–44.

- 71. Rose AJ, Bayliss E, Baseman L, Butcher E, Huang W, Edelen MO. Feasibility of distinguishing performance among provider groups using patient-reported outcome measures in older adults with multiple chronic conditions. Med Care 2019; 57 (3): 180–6.

- 72. Lloyd H, Wheat H, Horrell J, et al. Patient-reported measures for person-centered coordinated care: a comparative domain map and web-based compendium for supporting policy development and implementation. J Med Internet Res 2018; 20 (2): e54.

- 73. Hays RD, Bjorner JB, Revicki DA, Spritzer KL, Cella D. Development of physical and mental health summary scores from the patient-reported outcomes measurement information system (PROMIS) global items. Qual Life Res 2009; 18 (7): 873–80.
