Abstract

The research presented in this manuscript proposes a novel Harris Hawks optimization algorithm with practical application for evolving convolutional neural network architecture to classify various grades of brain tumor using magnetic resonance imaging. The proposed improved Harris Hawks optimization method, which belongs to the group of swarm intelligence metaheuristics, further improves the exploration and exploitation abilities of the basic algorithm by incorporating a chaotic population initialization and local search, along with a replacement strategy based on the quasi-reflection-based learning procedure. The proposed method was first evaluated on 10 recent CEC2019 benchmarks, and the achieved results were compared with those generated by the basic algorithm, as well as with the results of other state-of-the-art approaches that were tested under the same experimental conditions. In subsequent empirical research, the proposed method was adapted and applied to the practical challenge of convolutional neural network design. The evolved network structures were validated against two datasets that contain images of healthy brains and brains with tumors. The first dataset comprises images from the well-known IXI and Cancer Imaging Archive collections, while the second dataset consists of axial T1-weighted brain tumor images, as proposed in a recently published study in a Q1 journal. After performing data augmentation, the first dataset encompasses 8,000 healthy and 8,000 brain tumor images with grades I, II, III, and IV, and the second dataset includes 4,908 images with glioma, meningioma, and pituitary tumors, with 1,636 images belonging to each tumor class. The swarm intelligence-driven convolutional neural network approach was evaluated and compared to other, similar methods and achieved superior performance. The obtained accuracy was over 95% in all conducted experiments. Based on the established results, it is reasonable to conclude that the proposed approach could be used to develop networks that can assist doctors in diagnostics and help in the early detection of brain tumors.

Keywords: swarm intelligence, Harris Hawks optimization, exploitation–exploration trade-off, chaotic, quasi-reflection-based learning, convolutional neural networks, classification

1. Introduction

As technology advances every year, people are finding new means of solving problems with greater quality, precision, and efficiency. One of the technological domains that has uncovered broad possibilities, and continues to do so, is artificial intelligence (AI). AI is not a recent invention; it has existed for decades, but has only recently gained popularity among researchers and companies. The reason for this is the breakthrough in processing power and storage capabilities, which increased the potential for more advanced AI applications. Today, almost every field, such as medicine, economics, and marketing, uses some kind of AI. Moreover, most people are unaware that AI is influencing their lives in multiple ways.

As AI is applied to a wide variety of fields, different methodologies and algorithms exist within this domain. Therefore, various AI taxonomies can be found in the modern literature; however, from the authors’ perspective, one of the most important taxonomies splits AI methods into two categories: metaheuristics and machine learning. Metaheuristics are problem-independent, high-level algorithms that provide a set of strategies to develop a heuristic for solving a particular problem and, as such, they cannot guarantee an optimum problem solution. However, they can obtain satisfying (in most cases, near-optimum) solutions in a rational amount of time [1].

Further, based on the type of phenomena that they emulate, metaheuristics can be divided into those that are inspired and those which are not inspired by nature [2]. Examples of non-nature inspired metaheuristics include tabu search (TS) [3] and differential evolution (DE) [4]. The two most prominent groups of nature-inspired metaheuristics include evolutionary algorithms (EA), which simulate the process of natural evolution, and swarm intelligence, which mimics a group of organisms from nature.

Swarm intelligence algorithms are known as efficient solvers of many NP-hard challenges [5]. Although many swarm algorithms have been devised in the recent decade, there is always room for new ones, as well as for improvements in the existing ones, because a universal algorithm that can successfully tackle all problems cannot be created.

The main focus of the research proposed in this manuscript is the development of an enhanced Harris Hawks optimization (HHO) algorithm that addresses the observed flaws of its basic version. The HHO is a recently proposed, yet well-known swarm intelligence metaheuristic [6] that has shown great potential in tackling many real-world challenges [7,8]. In this study, the authors further investigate and expand the HHO’s potential by incorporating a chaotic mechanism and a novel replacement strategy that enhance both the exploitation and exploration of the basic algorithm, with only a small additional overhead in terms of computational complexity and new control parameters.

Established practice from the modern computer science literature states that when a new optimization method is devised, or an existing one is improved, it should first be validated on a more extensive set of so-called benchmarks and then applied to a practical problem. According to the no free lunch theorem (NFL), a method that performs equally well for all types of problems does not exist, and for a method to be tagged as well-performing, it should obtain a good average performance for most benchmark instances. Following this strategy, the proposed, enhanced HHO was first validated against a set of 10 novel CEC2019 bound-constrained benchmarks and, in these experiments, the near-optimum control parameter values that obtain the best average results were determined.

To further validate the proposed HHO, the method was adapted to tackle the practical issue of evolving convolutional neural network (CNN) hyper-parameters. One of the greatest challenges and obstacles in devising high-performing CNNs is the fact that a universal network, which will obtain a good performance for all problems, does not exist. Therefore, untrainable CNN parameters, which are known in the literature as hyper-parameters, should be fine-tuned to better fit the problem at hand. Some of these parameters determine the overall CNN architecture (design); more precisely, they define the number and types of layers, along with their size in terms of the kernel, number of neurons and connections that are used. Other parameters determine the type of optimizer and activation function that is used, the learning rate value, etc. Noting this, there can be an infinite number of combinations in which one CNN can be structured, which itself represents one instance of NP-hard optimization problems.

The proposed HHO is utilized to find a proper CNN structure for the classification of magnetic resonance imaging (MRI) brain tumor grades. This specific dataset is chosen because the MRI brain tumor grades’ classification represents a very important task in the domain of medicine, which can potentially save lives. Therefore, the basic motivation behind this practical application is engaging efforts to further enhance the CNN’s classification performance for this dataset and devise an "early warning" tool that will help doctors to diagnose brain tumors in early stages of development. Moreover, according to the literature survey, the potential of swarm intelligence for developing CNN structures for these types of data has not been investigated enough. Examples of previous research from this domain are the efforts shown in [9,10]. Thus, by evolving CNN structures for MRI tumor datasets, we aim to accomplish the following objectives: first, to evaluate the proposed method for a specific practical problem and, second, to try to improve the classification accuracy for this very important dataset.

In practice, it would be impossible to examine all possible hyper-parameters’ values, and for each hyper-parameter, lower and upper bounds are defined by the domain expert, as shown in [9]. In this way, by adjusting the search space boundaries, the evolved CNN’s structures target specific MRI brain tumor grade classification datasets, not any generic dataset.

It is noted that the practical application of the research shown in this manuscript represents the continuation of the investigation shown in [10]. Additionally, in the proposed study, other well-known swarm algorithms are also implemented for CNN design, and a broader comparative analysis is established. Most of these metaheuristics have not been evaluated for this challenge before.

Based on the above, the research proposed in this manuscript is guided by two basic investigation questions:

Is it possible to further improve the basic HHO algorithm by addressing both search processes—exploitation and exploration?

Is it possible to evolve a CNN that will establish better classification metrics for MRI brain tumor datasets than other state-of-the-art methods proposed in the modern literature?

The contribution of the proposed study is three-fold:

An enhanced version of the HHO metaheuristics has been developed that specifically targets the observed and known limitations and drawbacks of the basic HHO implementation;

It was shown that the proposed method can generate high-performing CNN structures for MRI brain tumor classification that establish better performance metrics than other outstanding methods proposed in the literature;

Other well-known swarm intelligence algorithms were tested for CNN design and a broader comparative analysis was performed.

The remainder of this paper is structured as follows: Section 2 provides a short overview of the theoretical background relevant to the proposed research, along with a literature review. Section 3 first provides the basics of the HHO, then summarizes its drawbacks, and finally proposes the enhanced version of this promising algorithm. Section 4 and Section 5 are practical: the results of the proposed method, along with a comparative analysis with other state-of-the-art methods, are first reported for standard CEC2019 benchmarks and then for CNN design for MRI brain tumor grade classification. The final Section 6 provides the closing remarks and suggests possible future work in this field, along with the limitations of the performed study.

2. Preliminaries and Related Works

The goal of this section is to introduce readers to some basic concepts that serve as a background for this paper. Moreover, this section provides a literature review of the relevant topics.

2.1. Swarm Intelligence

Swarm intelligence algorithms come from a group of nature-inspired optimizers. These are population-based, iterative approaches that incrementally try to provide a solution for the problem at hand. Each individual from the population represents one potential solution, while communication between solutions is established by using some kind of indirect communication mechanism without a central component. The search process is guided by exploitation (intensification) and exploration (diversification) mechanisms, where the first one performs a search in the neighborhood of existing solutions and the latter explores previously undiscovered parts of the search space.

In most cases, it is possible to spot the natural phenomenon that an algorithm simulates from its name, e.g., particle swarm optimization (PSO) [11], firefly algorithm (FA) [12], artificial bee colony (ABC) [13], seagull optimization algorithm (SOA) [14], bat algorithm (BA) [15], etc. [16,17].

Thanks to the advanced strategies by which the best swarm algorithms operate, they are successfully applied even outside research circles. Consequently, they make a huge contribution to real-world problems such as cloud computing and task scheduling [18,19], wireless sensor network localization and routing [20,21], numerous fields of medicine [22,23,24], prediction of COVID-19 cases [25], anomaly detection [26], etc. [27,28].

As the popularity of swarm intelligence metaheuristics grows, numerous different solutions are reported that enhance vast realms of AI methods and techniques. One of the most recent and prominent research fields is hybrid methods of swarm intelligence and various machine learning models adapted for a large number of real-world problems. Some practical machine learning challenges that are successfully tackled with swarm intelligence include feature selection [29,30,31], training artificial neural networks (ANNs) [32,33], text clustering [34] and many others [35,36].

2.2. Convolutional Neural Networks

The CNNs are well-known and widely utilized methods from the deep learning area, and they are capable of providing outstanding results in different application domains. The CNNs have achieved outstanding results in the areas of computer vision, speech recognition, and natural language processing (NLP) [37,38,39,40].

The CNN model is based on the visual cortex of the brain, which consists of several layers. This structure starts with the input layer, and every subsequent layer receives the outputs of the previous layer as its inputs, processes the information, and sends it to the next layer. After each of the layers in the network, data become more filtered. In this way, each layer outputs more refined data, which the following layer can process more easily, without losing important features. Layers in the CNN structure are separated into three groups: convolutional, pooling, and fully connected (or dense).

Convolutional layers are responsible for filtering the data by utilizing the convolutional operation and extracting the features in a smaller size than the input. Typical sizes include 3 × 3, 5 × 5, and 7 × 7. Convolutional function over the input vector can be mathematically represented by Equation (1):

$$z_{i,j,k}^{[l]} = w_{k}^{[l]} x_{i,j}^{[l]} + b_{k}^{[l]} \tag{1}$$

where $z_{i,j,k}^{[l]}$ represents the value of the output feature of the k-th feature map at position $(i,j)$ and layer l. Representation of the input at location $(i,j)$ is given as x; w represents the filters, while the bias is denoted as b.

After convolution, the activation is performed, by using the following expression:

$$g_{i,j,k}^{[l]} = g\left(z_{i,j,k}^{[l]}\right) \tag{2}$$

where $g(\cdot)$ denotes the non-linear function applied to the output of the convolution.

Pooling layers have the task of reducing the resolution. Two commonly used variants of pooling layer implementations are max and average pooling. The function that defines the pooling is given with Equation (3).

$$y_{i,j,k}^{[l]} = pooling\left(g_{i,j,k}^{[l]}\right) \tag{3}$$

where y denotes the output of the pooling operation.
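The three operations described by Equations (1)-(3) can be illustrated with a minimal, pure-Python sketch. It is only an illustration under stated assumptions: a single 2D input channel, a single filter, no padding, stride 1, ReLU as the non-linear function, and max pooling over non-overlapping regions. The input values and the 2 × 2 filter are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, w: Matrix, b: float) -> Matrix:
    """Equation (1): slide filter w over input x (no padding, stride 1), add bias b.

    As in most deep learning libraries, the filter is not flipped
    (i.e., this is technically a cross-correlation).
    """
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(w[m][n] * x[i + m][j + n] for m in range(kh) for n in range(kw)) + b
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(z: Matrix) -> Matrix:
    """Equation (2), with ReLU assumed as the non-linear function g."""
    return [[max(0.0, v) for v in row] for row in z]


def max_pool(g: Matrix, size: int = 2) -> Matrix:
    """Equation (3), with max pooling over non-overlapping size x size regions."""
    return [
        [
            max(g[i + m][j + n] for m in range(size) for n in range(size))
            for j in range(0, len(g[0]) - size + 1, size)
        ]
        for i in range(0, len(g) - size + 1, size)
    ]


# Hypothetical 5 x 5 input and 2 x 2 filter, for illustration only.
image = [
    [1.0, 2.0, 0.0, 1.0, 3.0],
    [0.0, 1.0, 2.0, 3.0, 1.0],
    [1.0, 0.0, 1.0, 2.0, 2.0],
    [2.0, 1.0, 0.0, 1.0, 0.0],
    [0.0, 3.0, 1.0, 0.0, 1.0],
]
kernel = [[1.0, 0.0], [0.0, -1.0]]
feature_map = max_pool(relu(conv2d_valid(image, kernel, b=0.0)))
print(feature_map)  # [[0.0, 1.0], [0.0, 2.0]]
```

The 5 × 5 input is reduced to a 4 × 4 map by the convolution and to a 2 × 2 map by the pooling, which illustrates how each layer produces a smaller, more filtered representation.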

Finally, fully connected layers have the task of carrying out the classification process. Classification is performed by the softmax layer in the case of multi-class datasets, or by the logistic layer (sigmoidal) in the case of binary classification.

The CNN training is usually executed by utilizing gradient-descent-based methods [41]. The goal of these optimizers is to minimize the loss function over a series of steps called epochs. In each epoch, network weights and biases are adjusted, so the loss function is minimized. Again, in the literature, as well as in practical applications, many loss functions exist and some of the most commonly employed are binary and categorical cross-entropy H for binary and multi-class classification tasks, respectively. The function is specified by two distributions p and q across discrete variable x, as shown in Equation (4).

$$H(p, q) = -\sum_{x} p(x) \ln\left(q(x)\right) \tag{4}$$
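As a small worked example of the cross-entropy between a target distribution p and a predicted distribution q, the following sketch computes the loss for a one-hot label and two hypothetical softmax outputs. The `eps` guard against `log(0)` and the example values are assumptions added for illustration.

```python
import math
from typing import Sequence


def cross_entropy(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """H(p, q) = -sum_x p(x) * ln q(x); eps guards against log(0)."""
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q))


target = [0.0, 1.0, 0.0]        # one-hot label (class 2 of 3)
confident = [0.05, 0.90, 0.05]  # hypothetical softmax output, mostly correct
unsure = [0.40, 0.30, 0.30]     # hypothetical softmax output, mostly wrong

loss_confident = cross_entropy(target, confident)
loss_unsure = cross_entropy(target, unsure)
print(loss_confident < loss_unsure)  # True
```

With a one-hot target, the loss reduces to the negative logarithm of the probability assigned to the correct class, so a confident correct prediction yields a smaller loss.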

One of the problems that CNNs face is creating a model that will perform well on both the training set and the new data. In many practical uses, the model performs well on the training data; however, it fails to establish a satisfying classification accuracy on the testing data. This problem is known in the literature as over-fitting. Many methods have been proposed to address this issue; however, one of the most commonly used is the dropout technique [42], which falls in the domain of regularization. Dropout is not considered computationally expensive, but is a very efficient approach that prevents the over-fitting problem by the random removal of some neurons in the fully connected layer during the training process.
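The random removal of neurons can be sketched as follows. This is a minimal illustration that assumes the commonly used "inverted" dropout variant, in which the surviving activations are rescaled during training so that no scaling is needed at inference time; the function name and the example values are hypothetical.

```python
import random
from typing import List


def dropout(activations: List[float], rate: float, rng: random.Random,
            training: bool = True) -> List[float]:
    """Zero each activation with probability `rate` during training.

    Surviving activations are divided by the keep probability (inverted
    dropout, an assumption of this sketch) to preserve the expected value.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
dense_outputs = [1.0, 2.0, 3.0, 4.0]
print(dropout(dense_outputs, rate=0.5, rng=rng))                   # training mode
print(dropout(dense_outputs, rate=0.5, rng=rng, training=False))   # unchanged
```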

The standard, traditional way to determine the appropriate CNN design, as well as a classic ANN architecture, for a given problem is to evaluate candidate designs by first performing training on the training set and then executing testing on previously unseen data. As already noted in Section 1, this represents a major issue in this domain, which is known as the tuning (optimization) of hyper-parameters. Unfortunately, this procedure is lengthy and time-consuming; moreover, it is based on "trial and error" and requires human intervention. Recently, many automated frameworks based on metaheuristics that generate CNN structures for a given task have been devised [43]. Due to the NP-hard nature of the hyper-parameters’ optimization challenge, metaheuristics, especially swarm intelligence, have proved to be very efficient methods for devising such frameworks [44,45,46].

It should also be emphasized that the HHO algorithm has already been implemented to optimize ANN and CNN hyper-parameters in previous research [7,47]. However, since every problem is specific, and by taking the NFL into account, the HHO’s potential to evolve a CNN’s structure to classify MRI brain tumor images has not been established.

2.3. MRI Brain Tumor Grades Classification

Glioma tumors are the most frequent type of brain tumor in the adult population [48]. They are classified into four grades, corresponding to severity levels ranging from I to IV [49]. Gliomas classified as grade I are considered benign, while grade IV gliomas represent malignant tumors that spread fast. One of the key factors in the successful treatment of patients with glioma is fast diagnosis and early detection [50]. However, in practice, this procedure is complicated and includes magnetic resonance imaging (MRI), followed by invasive biopsy if a tumor is suspected. It can take weeks, sometimes months, for the procedure to finish and to obtain a decisive answer. To speed up the process, the usage of computer-aided tools is necessary to help doctors interpret the MRI images and classify the grade of the tumors [51,52].

For tumor diagnosis, MRI is the only non-invasive methodology that provides valuable data in the shape of 2D and 3D images. The CNNs are known and proven classifiers that can be utilized to help in the identification of objects in MRI images and perform segmentation. However, since the MRI dataset is specific and different from other, especially generic, datasets, the CNN with a specific design should be evolved, and that is why some most recent research from this domain utilizes MRI paired with CNNs and swarm intelligence metaheuristics [10,53].

3. Proposed Method

In the beginning, this section introduces the basic HHO. Then, it points out the noticed deficiencies of original HHO implementation and, finally, the modifications that target the observed flaws of the basic HHO are proposed at the end of this section.

3.1. Original HHO

The HHO algorithm, as its name states, is inspired by different Harris Hawks’ strategies during their attacks on prey in nature. These attacking phases consist of three steps: exploration, the transition from exploration to exploitation, and, finally, the exploitation phase. The algorithm was initially proposed by Heidari et al. in 2019 [6].

In the exploration phase, the HHO algorithm strives to find the closest solution to the global optimum. During this phase, an algorithm is randomly initialized on multiple locations and, step-by-step, moves closer to its prey, mimicking how hawks attack in their natural surroundings. To achieve this efficiently, the HHO utilizes two strategies with equal probabilities, determined with parameter q as follows [6]:

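$$
X(t+1)=\begin{cases}
X_{rand}(t)-r_1\left|X_{rand}(t)-2r_2X(t)\right|, & q\ge 0.5\\
\left(X_{best}(t)-X_m(t)\right)-r_3\left(LB+r_4(UB-LB)\right), & q<0.5
\end{cases}\tag{5}
$$

where $q$, together with $r_1$, $r_2$, $r_3$, and $r_4$, represent random numbers from the range $(0,1)$, which are updated in each iteration, $X(t+1)$ is the solutions’ position vector for the next iteration, $X_{rand}(t)$ is a randomly selected solution from the current population, while $X_{best}(t)$, $X(t)$, and $X_m(t)$ denote the best, current and average solutions’ positions in the ongoing iteration t. Finally, $LB$ and $UB$ are lower and upper bounds of variables that define the scope of solutions in the search space. Furthermore, to obtain an average position of the solutions $X_m(t)$, the simplest averaging approach is employed: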

$$X_m(t)=\frac{1}{N}\sum_{i=1}^{N}X_i(t)\tag{6}$$

where N presents the total number of solutions and $X_i(t)$ is the location (position) of individual $X_i$ at iteration t.
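The exploration step, as defined for the basic HHO in [6], can be sketched as follows. The sketch follows the two equiprobable strategies and the averaging rule; the function names are hypothetical, and clamping the new position to the search bounds is an assumption of this sketch rather than part of the update rule itself.

```python
import random
from typing import List, Sequence

Vector = List[float]


def mean_position(population: Sequence[Vector]) -> Vector:
    """Average position of all solutions, computed per dimension."""
    n = len(population)
    return [sum(hawk[d] for hawk in population) / n for d in range(len(population[0]))]


def explore(x: Vector, best: Vector, population: Sequence[Vector],
            lb: Vector, ub: Vector, rng: random.Random) -> Vector:
    """One exploration update for solution x."""
    q, r1, r2, r3, r4 = (rng.random() for _ in range(5))
    if q >= 0.5:
        # Perch relative to a randomly selected member of the population.
        x_rand = rng.choice(population)
        new = [xr - r1 * abs(xr - 2.0 * r2 * xi) for xr, xi in zip(x_rand, x)]
    else:
        # Perch relative to the best solution and the population mean.
        x_m = mean_position(population)
        new = [(b - m) - r3 * (lo + r4 * (hi - lo))
               for b, m, lo, hi in zip(best, x_m, lb, ub)]
    # Assumption: positions leaving the search space are clamped to the bounds.
    return [min(max(v, lo), hi) for v, lo, hi in zip(new, lb, ub)]


rng = random.Random(42)
hawks = [[0.0, 0.0], [2.0, 4.0], [1.0, -1.0]]
print(explore(hawks[0], hawks[1], hawks, [-5.0, -5.0], [5.0, 5.0], rng))
```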

In the transition phase, the HHO switches from exploration to exploitation, and between different exploitative behaviors, depending on the strength of the solution (prey energy). The strength of the solution is updated in each iteration as follows:

$$E = 2E_0\left(1-\frac{t}{T}\right)\tag{7}$$

where T expresses the maximal number of rounds (iterations) in a run and $E_0$ is the initial strength of prey energy, which changes randomly inside the interval $(-1, 1)$.
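The energy schedule and the way it selects a search strategy can be sketched as follows. The thresholds follow the basic HHO in [6], where exploration is used while the absolute energy is at least 1 and a random number r in (0, 1) models the prey's chance of escaping; the function names and returned labels are hypothetical.

```python
import random


def escaping_energy(t: int, max_iter: int, rng: random.Random) -> float:
    """Prey energy at iteration t: E = 2 * E0 * (1 - t / T), with E0 drawn from (-1, 1)."""
    e0 = rng.uniform(-1.0, 1.0)
    return 2.0 * e0 * (1.0 - t / max_iter)


def select_phase(energy: float, r: float) -> str:
    """Map the prey energy E and the escape chance r to a strategy of the basic HHO."""
    if abs(energy) >= 1.0:
        return "exploration"
    if r >= 0.5:
        return "soft besiege" if abs(energy) >= 0.5 else "hard besiege"
    if abs(energy) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"


rng = random.Random(1)
for t in (0, 250, 500):
    e = escaping_energy(t, 500, rng)
    print(t, round(e, 3), select_phase(e, rng.random()))
```

Because the envelope of the energy shrinks linearly to zero, exploration can only be selected in the first half of a run, while the hard strategies become more likely towards its end.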

During the exploitation phase, the hawk attacks its prey. However, the prey tries to escape, so the hawk needs to change between different strategies in order to exhaust and consequently catch the prey. In a real situation, hawks will try to come closer and closer to the prey, to make it easier for them to catch it. To incorporate this into the optimization algorithm, they change their attacking pattern from softer to harder. When $|E| \ge 0.5$, a soft besiege is applied, while when $|E| < 0.5$, hard besiege occurs.

When $r \ge 0.5$ and $|E| \ge 0.5$, where $r$ is a random number in $(0,1)$ that models the prey's chance of escaping, the prey still has energy, so hawks will encircle softly to make the prey more exhausted. This behavior is modeled with the following expressions [6]:

$$X(t+1)=\Delta X(t)-E\left|J X_{best}(t)-X(t)\right|\tag{8}$$

$$\Delta X(t)=X_{best}(t)-X(t)\tag{9}$$

where $\Delta X(t)$ is a vector difference between the best solution (prey) and solution position in iteration t. Attribute J is randomly changed in each round and simulates the target escaping strategy:

$$J=2(1-r_5)\tag{10}$$

where $r_5$ is a randomly generated number in the interval $(0,1)$. In a case when $r \ge 0.5$ and $|E| < 0.5$, the prey is exhausted and hawks can perform a hard attack. In this case, the current positions are updated as:

$$X(t+1)=X_{best}(t)-E\left|\Delta X(t)\right|\tag{11}$$
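The soft and hard besiege updates of the basic HHO [6] can be sketched per dimension as follows. The function names are hypothetical, and the example vectors are chosen only so that the arithmetic is easy to follow.

```python
import random
from typing import List

Vector = List[float]


def soft_besiege(x: Vector, best: Vector, energy: float, rng: random.Random) -> Vector:
    """Soft besiege: X(t+1) = (best - x) - E * |J * best - x|, with J = 2 * (1 - r5)."""
    jump = 2.0 * (1.0 - rng.random())  # J, the random jump strength of the prey
    return [(b - xi) - energy * abs(jump * b - xi) for b, xi in zip(best, x)]


def hard_besiege(x: Vector, best: Vector, energy: float) -> Vector:
    """Hard besiege: X(t+1) = best - E * |best - x|."""
    return [b - energy * abs(b - xi) for b, xi in zip(best, x)]


rng = random.Random(5)
hawk, prey = [1.0, 3.0], [2.0, 2.0]
print(soft_besiege(hawk, prey, energy=0.8, rng=rng))
print(hard_besiege(hawk, prey, energy=0.25))  # [1.75, 1.75]
```

In the hard besiege, a small absolute energy places the new position close to the best solution, which corresponds to intensified exploitation.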

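Furthermore, in the situation when the prey still has some energy available ($|E| \ge 0.5$ and $r < 0.5$), a soft push is performed before the hawks’ attack can occur. This form of attack utilizes zig-zag movements known as leapfrog movements, which are common in nature. To perform this, the hawk can evaluate the following rule:

$$Y=X_{best}(t)-E\left|J X_{best}(t)-X(t)\right|\tag{12}$$

and then dive in a leapfrog pattern as follows:

$$Z=Y+S\times LF(D)\tag{13}$$

where D is the dimension of a problem and S represents a random vector of size $1\times D$, while $LF$ is the Lévy flight function, calculated as:

$$
LF(x)=0.01\times\frac{u\times\sigma}{|v|^{1/\beta}},\qquad
\sigma=\left(\frac{\Gamma(1+\beta)\times\sin\left(\frac{\pi\beta}{2}\right)}{\Gamma\left(\frac{1+\beta}{2}\right)\times\beta\times 2^{\frac{\beta-1}{2}}}\right)^{1/\beta}\tag{14}
$$

where $u$ and $v$ are random values in $(0,1)$ and $\beta$ is a constant set to 1.5 [6]. Hence, the strategy for updating individuals’ positions is calculated as follows:

$$
X(t+1)=\begin{cases}
Y, & \text{if } F(Y)<F(X(t))\\
Z, & \text{if } F(Z)<F(X(t))
\end{cases}\tag{15}
$$

where Y and Z are calculated by utilizing Equations (12) and (13).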

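Finally, in the $|E| < 0.5$ and $r < 0.5$ case (hard besiege with progressive rapid dives), the prey does not have energy, and a hard attack is utilized before hawks hunt down the prey. Therefore, hawks decrease their average distance from the prey using the following strategy:

$$
X(t+1)=\begin{cases}
Y, & \text{if } F(Y)<F(X(t))\\
Z, & \text{if } F(Z)<F(X(t))
\end{cases}\tag{16}
$$

where, contrary to Equation (15), Y and Z are obtained using the following rules:

$$Y=X_{best}(t)-E\left|J X_{best}(t)-X_m(t)\right|\tag{17}$$

$$Z=Y+S\times LF(D)\tag{18}$$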
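Both variants of the progressive rapid dives of the basic HHO [6] share the same structure and differ only in the reference point used to compute Y (the current position for the soft variant, the population mean for the hard variant). The sketch below assumes a minimization problem, draws u and v uniformly from (0, 1) as stated in [6], and keeps the current position when neither Y nor Z is an improvement; the function names are hypothetical.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
BETA = 1.5  # Levy exponent, the default constant reported in [6]


def levy_flight(dim: int, rng: random.Random) -> Vector:
    """Levy flight steps, one per dimension: 0.01 * u * sigma / |v|^(1/beta)."""
    sigma = (
        math.gamma(1 + BETA) * math.sin(math.pi * BETA / 2)
        / (math.gamma((1 + BETA) / 2) * BETA * 2 ** ((BETA - 1) / 2))
    ) ** (1 / BETA)
    steps = []
    for _ in range(dim):
        u, v = rng.random(), rng.random()
        steps.append(0.01 * u * sigma / max(abs(v), 1e-12) ** (1 / BETA))
    return steps


def rapid_dive(x: Vector, best: Vector, reference: Vector, energy: float,
               fitness: Callable[[Vector], float], rng: random.Random) -> Vector:
    """Progressive rapid dive with greedy acceptance of Y, then Z.

    `reference` is the current position x for the soft variant and the
    population mean for the hard variant.
    """
    jump = 2.0 * (1.0 - rng.random())
    y = [b - energy * abs(jump * b - ref) for b, ref in zip(best, reference)]
    s = [rng.random() for _ in x]  # random vector S of size 1 x D
    z = [yi + si * li for yi, si, li in zip(y, s, levy_flight(len(x), rng))]
    current = fitness(x)
    if fitness(y) < current:
        return y
    if fitness(z) < current:
        return z
    return list(x)  # assumption: keep the old position if no candidate improves it


def sphere(v: Vector) -> float:
    return sum(c * c for c in v)


rng = random.Random(3)
print(rapid_dive([3.0, -2.0], [0.5, 0.1], [3.0, -2.0], 0.6, sphere, rng))
```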

In the modern literature from the domain of swarm intelligence, the two most commonly used methods for calculating algorithms’ complexity are established: the first one only accounts for the number of fitness function evaluations (FFEs) because this operation is the most expensive in terms of computational resources [54] and the second one, besides FFEs, also includes the cost of updating solutions’ positions [6,17,55]. The cost of generating the initial population is excluded because it is relatively inexpensive compared to the cost of the solutions’ update process. Both methods calculate complexity in terms of T; however, if the total number of FFEs is taken as the termination condition when comparing the performance of different algorithms, a comparison in terms of complexity is not needed.

Following the second method, as was suggested in [6], the complexity of the original HHO is described as follows:

Number of FFEs in the initialization phase: $O(N)$

Number of FFEs in the solutions’ updating phase: $O(T \times N)$

Cost of solutions’ updating phase: $O(T \times N \times D)$

Taking all the above into account, the computational complexity of the HHO is derived as follows:

$$O\left(N \times (T + TD + 1)\right) \tag{19}$$

More details about the HHO can be found in [6].

3.2. Motivation for Improvements and Proposed Enhanced HHO Method

Notwithstanding the outstanding performance of basic HHO [6], by conducting simulations with standard CEC bound-constrained benchmarks, it was noted that the original version can be further improved by addressing both processes—exploitation and exploration.

For some testing instances, especially those with a higher number of dimensions, the algorithm gets stuck in sub-optimal domains of the search space in early iterations. After analyzing convergence and solution diversity in such scenarios, it was concluded that if the “early” best solutions miss the right part of the search space, then most of the solutions also converge there and it is hard for the HHO to “escape” from this region. In other words, solution diversity for some problems is not satisfactory in early execution cycles, which is extremely dangerous if most of the solutions are generated far from the optimum domain. After some iterations, promising domains are discovered by performing HHO exploration. However, this usually happens in the final stages of execution, when it is too late for the search process to perform fine-tuning in this region and, consequently, lower-quality solutions are generated at the end of a run.

The above-mentioned limitation of the basic HHO can also be viewed from the perspective of exploration–exploitation balance. Namely, in early iterations, this trade-off is biased towards exploitation, while in later iterations, when it should move towards exploitation, the intensification–diversification trade-off is in an equilibrium.

To address the above-mentioned issues in the original HHO, this study proposes three modifications.

The first modification is chaos-based population initialization. The idea of embedding chaotic maps in metaheuristics algorithms was first introduced by Caponetto et al. in [56]. The stochastic nature of most metaheuristics approaches is based on random number generators; however, many recent studies have shown that the search process can be more efficient if it is based on chaotic sequences [57,58].

Chaos is defined as non-linear movements of dynamic systems that exhibit ergodicity and stochasticity, and are sensitive to initial conditions. Generation of the population by chaotic sequences has previously been used in multiple metaheuristics approaches in various domains. Some examples include a chaotic multi-swarm whale optimizer for boosting the support vector machine (SVM) that assists doctors in medical diagnostics [57], chaos-enhanced firefly metaheuristics applied to the mechanical design optimization problem [59], a K-means clustering algorithm enhanced with chaotic ABC [60], and many others [58,61,62].

Many chaotic maps are available, such as the circle map, Chebyshev map, intermittency map, iterative map, logistic map, sine map, sinusoidal map, tent map, and singer map. After performing experiments with all the above-mentioned chaotic maps, the best results were achieved with a logistic map, and this was chosen for implementation in HHO.

The chaotic search is implemented in the proposed HHO by generating the chaotic sequence in accordance with the constraints of the observed problem, and then the generated sequence is used by individuals for exploration of the search space. The proposed method utilizes the chaotic sequence $\beta$, that starts from the initial random number $\beta_0$, generated by the logistic mapping, according to Equation (20):

$$\beta_{i+1} = \mu \beta_i (1 - \beta_i), \quad i = 0, 1, \ldots, N-1 \tag{20}$$

where $\mu$ and N are the chaotic control parameter and size of population, respectively. The $\mu$ usually has the value 4 [62], as was also set in this study, to ensure chaotic movements of individuals, while $0 < \beta_0 < 1$ and $\beta_0 \notin \{0.25, 0.5, 0.75\}$.
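A logistic map with the control parameter set to 4 can be generated with a few lines of code. The sketch below uses the standard form of the map, in which each value equals the control parameter times the previous value times one minus the previous value; the function name and the rejected seed values (which lead to fixed points or degenerate sequences) are assumptions of this illustration.

```python
from typing import List


def logistic_sequence(beta0: float, length: int, mu: float = 4.0) -> List[float]:
    """Generate `length` values of the logistic map starting from beta0."""
    if not 0.0 < beta0 < 1.0 or beta0 in (0.25, 0.5, 0.75):
        raise ValueError("beta0 must lie in (0, 1) and differ from 0.25, 0.5 and 0.75")
    sequence = [beta0]
    for _ in range(length - 1):
        previous = sequence[-1]
        sequence.append(mu * previous * (1.0 - previous))
    return sequence


print([round(b, 4) for b in logistic_sequence(0.1, 5)])
# [0.1, 0.36, 0.9216, 0.289, 0.8219]
```

Although the sequence is fully deterministic, nearby seeds diverge quickly, which is the property that makes chaotic sequences attractive as a replacement for pseudo-random numbers during initialization.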

The process of mapping solutions to generated chaotic sequences is accomplished with the following expression for each parameter j of individual i:

| (21) |

where is new position of individual i after chaotic perturbations.

Taking all these into account, the details of chaotic-based population initialization are provided in Algorithm 1.

| Algorithm 1 Pseudo-code for chaotic-based population initialization |

|

In this way, the quality of solutions is improved at the beginning of a run and the search agents may utilize more iterations for exploitation. However, despite the efficient chaotic-based initialization, when tackling challenges with many local optima, metaheuristics may still suffer from premature convergence. As noted above, the exploration of basic HHO, which is crucial for avoiding premature convergence, should be improved, which motivated the introduction of the second modification.

One of the most efficient available strategies for improving both intensification and exploration, as well as their balance, is the quasi-reflection-based learning (QRL) procedure [63]. By using the QRL, quasi-reflexive-opposite solutions are generated and if, for example, the original individual’s position is far away from the optimum, there is a good chance that its opposite solution may be in the domain in which an optimum resides.

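By applying the QRL procedure, the quasi-reflexive-opposite individual $X^{qr}$ of the solution X is generated in the following way:

$$X_j^{qr} = rnd\left(\frac{LB_j + UB_j}{2},\, X_j\right) \tag{22}$$

where $rnd\left(\frac{LB_j+UB_j}{2}, X_j\right)$ generates a random number from the uniform distribution in the range $\left[\frac{LB_j+UB_j}{2}, X_j\right]$. This procedure is executed for each parameter $j$ of solution X in D dimensions.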
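The QRL procedure can be sketched as follows, assuming the usual definition of the quasi-reflected point: each component is drawn uniformly between the centre of the search interval and the corresponding component of the original solution. The function name and the example bounds are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def quasi_reflect(x: Vector, lb: Vector, ub: Vector, rng: random.Random) -> Vector:
    """Quasi-reflexive-opposite solution of x, generated dimension by dimension."""
    reflected = []
    for xj, lo, hi in zip(x, lb, ub):
        centre = (lo + hi) / 2.0
        # Uniform sample between the interval centre and the original component.
        reflected.append(rng.uniform(min(centre, xj), max(centre, xj)))
    return reflected


rng = random.Random(7)
solution = [4.0, -3.0]
print(quasi_reflect(solution, [-5.0, -5.0], [5.0, 5.0], rng))
```

In this example the first component always falls in [0, 4] and the second in [-3, 0], so the generated solution is pulled from the original position towards the centre of the search space.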

The proposed improved HHO adopts a simple replacement strategy of the worst and best individuals from the population based on the QRL. Applying the QRL mechanism yields the best performance in early iterations by significantly improving exploration if the current worst solution is replaced with the quasi-reflexive-opposite of the best individual. However, based on empirical research, in later iterations, when exploitation should be intensified, it is better if the current best solution is replaced with its own quasi-reflexive-opposite. In both cases, a greedy selection is applied and the solution with the better fitness value is kept in the population for the next iteration.

Finally, the third modification that was incorporated into the basic HHO is the chaotic search (CS) strategy [64] around the current best solution. During practical experiments, it was noted that, for very challenging benchmarks, e.g., multi-modal shifted and rotated functions [65], in late iterations, the current best solution may become “trapped” in one of the local optima and, in such scenarios, the QRL mechanism is not able to generate a quasi-reflexive-opposite solution with better fitness than the original best solution. The consequence of such scenarios is lower-quality solutions at the end of a run and, consequently, worse mean values.

The abovementioned case is mitigated by employing the CS strategy in the following way: in later iterations, if the current best solution cannot be improved within a predefined number of fitness function evaluations (FFEs), referred to as the best threshold, then, instead of generating its quasi-reflexive-opposite, the CS is performed around the current best solution. The CS strategy for generating the chaotic current best solution is described with the following equations:

| (23) |

| (24) |

Here, a new chaotic sequence for the current best solution is determined by Equation (20), and the dynamic shrinkage parameter depends on the current number of FFEs and on the maximum number of fitness function evaluations in the run:

| (25) |

The dynamic shrinkage parameter enables a better exploitation–exploration trade-off by establishing a wider search radius around the current best solution in earlier iterations and a narrower one in later iterations. The current and maximum numbers of FFEs can be replaced with t and T when the total number of iterations in a run is considered the termination condition.
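As a rough illustration of such a shrinking chaotic search, the sketch below assumes a commonly used form of chaotic local search: a candidate is a convex combination of the current best solution and a chaotic point inside the search bounds, weighted by a shrinkage factor that decreases linearly with the number of consumed FFEs. This form, the function name, and all parameter names are assumptions of the sketch, and the exact expressions used by the proposed method may differ.

```python
from typing import List, Tuple

Vector = List[float]


def chaotic_candidate(best: Vector, lb: Vector, ub: Vector, ffes: int,
                      max_ffes: int, beta: float) -> Tuple[Vector, float]:
    """Generate a chaotic candidate around `best`.

    Returns the candidate and the last chaotic value, so that the logistic
    sequence can be continued in the next call. Expects 1 <= ffes <= max_ffes.
    """
    shrink = (max_ffes - ffes + 1) / max_ffes  # assumed linear shrinkage, from 1 towards 0
    candidate = []
    for bj, lo, hi in zip(best, lb, ub):
        beta = 4.0 * beta * (1.0 - beta)       # next value of the logistic map
        chaotic_point = lo + beta * (hi - lo)  # chaotic value mapped into the bounds
        candidate.append((1.0 - shrink) * bj + shrink * chaotic_point)
    return candidate, beta


best = [1.0, -2.0]
lower, upper = [-5.0, -5.0], [5.0, 5.0]
early, _ = chaotic_candidate(best, lower, upper, ffes=1, max_ffes=100, beta=0.37)
late, _ = chaotic_candidate(best, lower, upper, ffes=100, max_ffes=100, beta=0.37)
print(early, late)
```

With the same chaotic values, the candidate generated late in the run lies much closer to the current best solution than the one generated early, which mirrors the wider-to-narrower search radius described above.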

Finally, to control which of the two proposed QRL strategies or the CS strategy will be triggered, another control parameter, called adaptive behavior, is introduced. This procedure is mathematically described with the following three expressions:

| (26) |

| (27) |

| (28) |

where k is incremented every time the current best solution cannot be improved by QRL, and the best threshold stands for the predefined improvement threshold. FFEs simply represents the current number of fitness function evaluations and can be replaced with t if the number of iterations is taken as the termination condition. The replacement in the above expressions is executed if, and only if, the newly generated solution is better than the current solution, according to the greedy selection mechanism.

Since metaheuristics are stochastic methods in which randomness plays an important part, empirical simulations are the most reliable way of determining how the control parameters’ values affect the search process. Additionally, in the case of the proposed enhanced HHO, the values of the dynamic behavior and best threshold control parameters that, on average, obtain the best performance for a wider set of benchmarks were determined empirically, as shown in Section 4.

Although the enhanced HHO metaheuristic adopts chaotic population initialization, QRL replacement, and CS strategies, for the sake of a simple naming convention, the proposed method is titled enhanced HHO (eHHO); its working details, in the form of pseudo-code, are provided in Algorithm 2.

| Algorithm 2 Proposed eHHO pseudo-code |

|

As shown in Section 4 and Section 5, the proposed eHHO managed to achieve a better performance than the basic HHO. However, according to the NFL theorem, there is always a trade-off. The proposed eHHO employs two additional control parameters (dynamic behavior and best threshold) and performs N more FFEs during initialization and one more FFE in each iteration. Moreover, the eHHO updates one more solution in each iteration.

Following the same approach as that used to calculate computational complexity, as well as the costs (Equation (19)), in terms of T, the eHHO complexity can be expressed as:

| (29) |

4. CEC2019 Benchmark Functions Experiments

In accordance with good experimental practice, the proposed approach was first verified on a standard unconstrained benchmark function set before being applied to a concrete practical task. The introduced eHHO method was evaluated on the set of 10 recent benchmark functions introduced at the Congress on Evolutionary Computation 2019 (CEC2019) [66], and the obtained experimental results were compared to other modern approaches and then subjected to standard statistical tests.

The CEC2019 bound-constrained benchmark function details are provided in Table 1.

Table 1.

CEC 2019 benchmark characteristics.

| No. | Functions | $F_i^* = F_i(x^*)$ | D | Search Range |
|---|---|---|---|---|
| CEC01 | Storn’s Chebyshev Polynomial Fitting Problem | 1 | 9 | [−8192, 8192] |
| CEC02 | Inverse Hilbert Matrix Problem | 1 | 16 | [−16,384, 16,384] |
| CEC03 | Lennard-Jones Minimum Energy Cluster | 1 | 18 | [−4, 4] |
| CEC04 | Rastrigin’s Function | 1 | 10 | [−100, 100] |
| CEC05 | Griewangk’s Function | 1 | 10 | [−100, 100] |
| CEC06 | Weierstrass Function | 1 | 10 | [−100, 100] |
| CEC07 | Modified Schwefel’s Function | 1 | 10 | [−100, 100] |
| CEC08 | Expanded Schaffer’s F6 Function | 1 | 10 | [−100, 100] |
| CEC09 | Happy Cat Function | 1 | 10 | [−100, 100] |
| CEC10 | Ackley Function | 1 | 10 | [−100, 100] |

4.1. Experimental Setup and Control Parameters’ Adjustments

The performance of the suggested eHHO metaheuristics was evaluated by comparative analysis with the basic HHO, and nine other modern metaheuristics approaches, namely elephant herding optimization (EHO) [67], EHO improved (EHOI) [68], salp swarm algorithm (SSA) [69], sine cosine algorithm (SCA) [70], grasshopper optimization algorithm (GOA) [55], whale optimization algorithm (WOA) [17], biogeography-based optimization (BBO) [71], moth–flame optimization (MFO) [72] and particle swarm optimization (PSO) [11].

The simulation results obtained with the above-mentioned algorithms for the same benchmarks were reported in [68]. However, in the study proposed in this manuscript, the experiments are recreated to validate the results of [68] and establish a more objective comparative analysis. The methods in [68] were tested with N = 50 and T = 500, and this setup may lead to a biased comparative analysis because not all algorithms utilize the same number of FFEs in one iteration. Therefore, in this study, the termination condition for all approaches was set according to the total utilized FFEs and, to establish conditions similar to those in [68], the maximum number of FFEs was set to 25,000 (N × T = 50 × 500).

The results are reported for 50 separate runs, with the average (mean) and standard deviation (std) taken as performance metrics. The value of the dynamic behavior parameter was determined empirically and set to one quarter of the maximum number of FFEs; in this case, 6250 (25,000/4). Similarly, by performing extensive simulations, a mathematical expression for calculating the best threshold value was derived: round((maximum number of FFEs − dynamic behavior)/(10 × N)), where round represents a standard function that rounds its input to the closest integer. In this case, the best threshold was set to 38 ((25,000 − 6250)/(10 × 50) = 37.5). Other parameters are adjusted as suggested in [6]. All other HHO and eHHO control parameters are dynamic, and their values are adjusted throughout the run according to the current and maximum numbers of FFEs. For more details, please refer to Section 3.1.

Control parameters for other metaheuristics included in the analysis were adjusted as suggested in the original manuscripts.
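The two settings can be reproduced with a few lines of arithmetic. The following is a minimal sketch: the function and argument names are illustrative, and the divisor 10 × N is inferred from the worked example in the text rather than taken from the original implementation.

```python
import math


def ehho_thresholds(max_ffes, pop_size, divisor=10):
    """Return (dynamic_behavior, best_threshold) for a given FFE budget.

    dynamic_behavior is one quarter of the budget; best_threshold is the
    remaining budget divided by (divisor * pop_size), rounded to the
    closest integer (halves are rounded up in this sketch).
    """
    dynamic_behavior = max_ffes // 4
    raw = (max_ffes - dynamic_behavior) / (divisor * pop_size)
    best_threshold = math.floor(raw + 0.5)
    return dynamic_behavior, best_threshold


if __name__ == "__main__":
    # CEC2019 setup from the text: 25,000 FFEs, population of 50.
    print(ehho_thresholds(25_000, 50))
```

Rounding is implemented with `floor(x + 0.5)` so that 37.5 maps to 38, as in the text, independently of how the built-in `round` treats halves.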

A brief summary of eHHO control parameters used throughout the conducted experiments is given in Table 2.

Table 2.

The eHHO control parameters overview.

| Parameter Interpretation | Value |
|---|---|
| Population size (N) | 50 |
| Maximum number of FFEs | 25,000 |
| Dynamic behavior | (maximum number of FFEs)/4 = 6250 |
| Best threshold | 38 |

4.2. Results and Discussion

The results of the ten conducted CEC2019 simulations are presented in Table 3. The results are reported in scientific notation, and the best mean value for each benchmark instance is shown in bold for easier reading. Performance metrics similar to those reported in [68] were obtained.

Table 3.

Evaluation of results achieved by different well-known metaheuristics on CEC2019 benchmark function set.

| Function | Stats | EHOI | EHO | SCA | SSA | GOA | WOA | BBO | MFO | PSO | HHO | eHHO |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CEC01 | mean | |||||||||||

| std | ||||||||||||

| CEC02 | mean | |||||||||||

| std | ||||||||||||

| CEC03 | mean | * | ||||||||||

| std | ||||||||||||

| CEC04 | mean | |||||||||||

| std | ||||||||||||

| CEC05 | mean | |||||||||||

| std | ||||||||||||

| CEC06 | mean | |||||||||||

| std | ||||||||||||

| CEC07 | mean | |||||||||||

| std | ||||||||||||

| CEC08 | mean | |||||||||||

| std | ||||||||||||

| CEC09 | mean | |||||||||||

| std | ||||||||||||

| CEC10 | mean | |||||||||||

| std |

* All methods obtained the same result.

A comparative analysis of the results provided in Table 3 indicates that the overall performance of the suggested eHHO approach is better than that of the other compared algorithms. For the majority of CEC2019 functions (more precisely, eight out of ten), eHHO achieved the best mean values. The exceptions are the CEC03 benchmark, where all algorithms obtained the same mean value, and the CEC07 test instance, where the BBO, on average, achieved the best quality of solutions. Additionally, it can be noted that the eHHO algorithm drastically improves the performance of the basic HHO metaheuristic, which obtained mediocre results compared to other approaches, justifying the goal of devising an enhanced HHO algorithm.

In modern computer science theory, when comparing different algorithms, it is typically not enough to declare that one algorithm is better than others only in terms of the obtained results. It is also necessary to determine whether the generated improvements are statistically significant. Therefore, a Friedman test [73,74], i.e., a two-way analysis of variance by ranks, was employed to determine if there is a significant difference between the results of the proposed eHHO and the other methods encompassed by the comparison. The Friedman test results for the 11 compared algorithms over the 10 CEC2019 functions are presented in Table 4.

Table 4.

Friedman test ranks for the compared algorithms over 10 CEC2019 functions.

| Function | EHOI | EHO | SCA | SSA | GOA | WOA | BBO | MFO | PSO | HHO | eHHO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CEC01 | 2 | 4 | 7 | 5 | 9 | 8 | 10 | 6 | 11 | 3 | 1 |
| CEC02 | 2 | 3 | 8 | 4.5 | 6.5 | 4.5 | 10 | 6.5 | 11 | 9 | 1 |
| CEC03 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 6 |
| CEC04 | 2 | 3 | 11 | 4 | 9 | 10 | 7 | 8 | 6 | 5 | 1 |
| CEC05 | 2 | 3 | 11 | 8 | 7 | 10 | 6 | 4 | 9 | 5 | 1 |
| CEC06 | 6 | 8 | 11 | 2 | 5 | 7 | 4 | 3 | 10 | 9 | 1 |
| CEC07 | 3 | 4 | 11 | 6 | 5 | 9 | 1 | 7 | 10 | 8 | 2 |
| CEC08 | 2 | 3 | 11 | 6 | 9 | 10 | 5 | 8 | 7 | 4 | 1 |
| CEC09 | 2 | 4 | 11 | 3 | 5 | 10 | 8 | 6 | 7 | 9 | 1 |
| CEC10 | 2 | 11 | 10 | 5 | 3 | 7 | 9 | 4 | 8 | 6 | 1 |
| Average | 2.9 | 4.9 | 9.7 | 4.95 | 6.45 | 8.15 | 6.6 | 5.85 | 8.5 | 6.4 | 1.6 |
| Rank | 2 | 3 | 11 | 4 | 7 | 9 | 8 | 5 | 10 | 6 | 1 |

The results presented in Table 4 statistically indicate that the proposed eHHO algorithm has a superior performance when compared to the other ten algorithms, with an average rank value of 1.6. The basic HHO obtained an average ranking of 6.4. Additionally, the Friedman statistic is larger than the critical value with 10 degrees of freedom at the chosen significance level. As a result, the null hypothesis can be rejected, and it can be stated that the suggested eHHO achieved significantly different results from the other ten algorithms.

Furthermore, the research published in [75] reports that the Iman and Davenport’s test [76] could provide more precise results than the . Therefore, Iman and Davenport’s test was also conducted. After calculations, the result of the Iman and Davenport’s test is , which is significantly larger than the critical value of the F-distribution (). Consequently, Iman and Davenport’s test also rejects . The is smaller than the significance level for both the executed statistical tests.

Results for Friedman and Iman and Davenport’s test with are summarized as follows: , , , and of and .
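The Friedman statistic and the Iman and Davenport statistic can be recomputed from the average ranks listed in Table 4. The sketch below uses the textbook formulas without a correction for tied ranks, so its output may differ slightly from the values computed by the authors; the function names are illustrative.

```python
# Average ranks from Table 4 (k = 11 algorithms, n = 10 functions).
AVERAGE_RANKS = {
    "EHOI": 2.9, "EHO": 4.9, "SCA": 9.7, "SSA": 4.95, "GOA": 6.45,
    "WOA": 8.15, "BBO": 6.6, "MFO": 5.85, "PSO": 8.5, "HHO": 6.4,
    "eHHO": 1.6,
}

# Standard table value of the chi-square distribution
# for 10 degrees of freedom at the 0.05 significance level.
CHI2_CRITICAL_10DOF_005 = 18.307


def friedman_statistic(avg_ranks, n):
    """Friedman chi-square statistic from average ranks (no tie correction)."""
    k = len(avg_ranks)
    sum_sq = sum(r * r for r in avg_ranks)
    return 12.0 * n / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4.0)


def iman_davenport_statistic(chi2, n, k):
    """Iman and Davenport F statistic derived from the Friedman statistic."""
    return (n - 1) * chi2 / (n * (k - 1) - chi2)


if __name__ == "__main__":
    n = 10
    ranks = list(AVERAGE_RANKS.values())
    chi2 = friedman_statistic(ranks, n)
    f_id = iman_davenport_statistic(chi2, n, len(ranks))
    print(f"Friedman chi-square: {chi2:.3f}")
    print(f"Iman-Davenport F:    {f_id:.3f}")
    print("Reject H0 at 0.05:", chi2 > CHI2_CRITICAL_10DOF_005)
```

The Iman and Davenport statistic follows an F-distribution with k − 1 and (k − 1)(n − 1) degrees of freedom, i.e., 10 and 90 in this setting.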

Since the null hypothesis was rejected by both statistical tests, a non-parametric post-hoc procedure, Holm’s step-down procedure, was also conducted; its results are presented in Table 5. In this procedure, all methods are sorted according to their p-value and compared with α/(k − i), where k represents the degrees of freedom (in this work, k = 10) and i the algorithm number after sorting by p-value in ascending order (which corresponds to rank). In this study, α is set to 0.05 and 0.1. The p-values are provided in scientific notation.

Table 5.

Results of the Holm’s step-down procedure.

| Comparison | p-Value | Rank | 0.05/(k − i) | 0.1/(k − i) |
|---|---|---|---|---|
| eHHO vs. SCA | | 0 | 0.005000 | 0.01000 |
| eHHO vs. PSO | | 1 | 0.005556 | 0.01111 |
| eHHO vs. WOA | | 2 | 0.006250 | 0.01250 |
| eHHO vs. BBO | | 3 | 0.007143 | 0.01429 |
| eHHO vs. GOA | | 4 | 0.008333 | 0.01667 |
| eHHO vs. HHO | | 5 | 0.010000 | 0.02000 |
| eHHO vs. MFO | | 6 | 0.012500 | 0.02500 |
| eHHO vs. SSA | | 7 | 0.016667 | 0.03333 |
| eHHO vs. EHO | | 8 | 0.025000 | 0.05000 |
| eHHO vs. EHOI | | 9 | 0.050000 | 0.10000 |

The results given in Table 5 suggest that the proposed algorithm significantly outperformed all opponent algorithms, except EHOI, at both significance levels, α = 0.05 and α = 0.1.
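The threshold columns of Table 5 follow directly from α/(k − i) with k = 10. A minimal sketch of the threshold computation and of the step-down decision rule is given below; the function names are illustrative, and the p-values in the usage example are hypothetical placeholders, not the values obtained in this study.

```python
def holm_thresholds(alpha, k):
    """Return the Holm thresholds alpha / (k - i) for i = 0 .. k - 1."""
    return [alpha / (k - i) for i in range(k)]


def holm_step_down(sorted_p_values, alpha):
    """Apply Holm's step-down rule to p-values sorted in ascending order.

    A hypothesis is rejected while its p-value stays below the matching
    threshold; after the first failure, all remaining ones are retained.
    """
    k = len(sorted_p_values)
    decisions = []
    rejecting = True
    for i, p in enumerate(sorted_p_values):
        rejecting = rejecting and p < alpha / (k - i)
        decisions.append(rejecting)
    return decisions


if __name__ == "__main__":
    for alpha in (0.05, 0.1):
        print(alpha, [round(t, 6) for t in holm_thresholds(alpha, 10)])

    # Hypothetical p-values, for illustration only.
    print(holm_step_down([0.001, 0.030, 0.040], alpha=0.05))
```

Because the procedure stops rejecting at the first p-value that exceeds its threshold, a single non-significant comparison also retains every comparison ranked after it.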

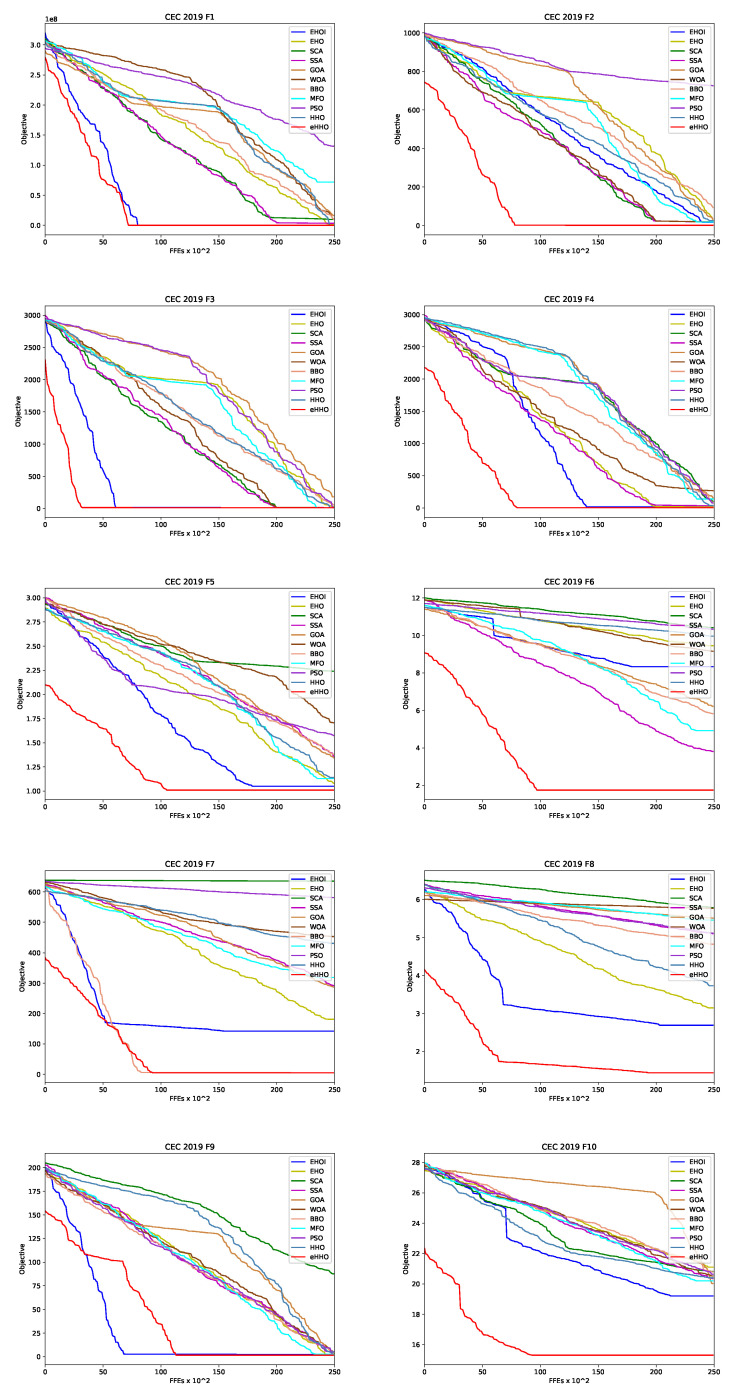

An average convergence speed comparison between the proposed eHHO and the 10 other metaheuristics included in the analysis is given in Figure 1. From the presented graphs, the superiority of the devised approach compared to other methods can be confirmed, and a few interesting things can be noticed. First, the effect of chaotic-based population initialization on search space exploration is evident: this mechanism enables eHHO to better identify the boundaries of the optimum search domain at the beginning of a run. Second, in most challenges, eHHO managed to converge to near-optimum levels within the first 6250 FFEs, after which fine-tuned exploitation further improved the quality of the results. However, for problem CEC09, 6250 FFEs were not enough to find a proper part of the search space, which can be clearly seen in the graph. In this case, somewhere between 5000 and 6500 FFEs, the search process was “stuck”; however, once the CS is triggered, it converges relatively smoothly towards an optimum.

Figure 1.

Graphs for convergence speed comparison for 10 CEC2019 benchmark functions—eHHO vs. other approaches.

Finally, to demonstrate the effects of the dynamic behavior control parameter on the search process, additional experiments with varying values were conducted in increments of 6250 FFEs. Therefore, besides 6250, the most promising value for the CEC2019 benchmarks, the parameter was set to 0, 12,500, 18,750, and 25,000 in four additional simulations. In the first case, when the parameter is 0, the greedy selection between the current worst solution and the quasi-reflexive-opposite of the best individual is performed in each iteration, and the exploration process is amplified during the whole run. However, in the last case, when the parameter is 25,000, the greedy selection between the current best solution and either its quasi-reflexive-opposite or its chaotic counterpart is executed, favoring intensification around the current best solution. Mean values, calculated over 50 independent runs with varying dynamic behavior values for the CEC2019 simulations, are summarized in Table 6, where the best results are marked in bold.

Table 6.

Results of the proposed eHHO for the CEC2019 benchmark suite with varying dynamic behavior values.

| Function | Statistics | |||||

|---|---|---|---|---|---|---|

| CEC01 | mean | |||||

| CEC02 | mean | |||||

| CEC03 | mean | * | ||||

| CEC04 | mean | |||||

| CEC05 | mean | |||||

| CEC06 | mean | |||||

| CEC07 | mean | |||||

| CEC08 | mean | |||||

| CEC09 | mean | |||||

| CEC10 | mean | |||||

| Total best | 0 | 7 | 2 | 0 | 0 |

* All methods obtained the same result.

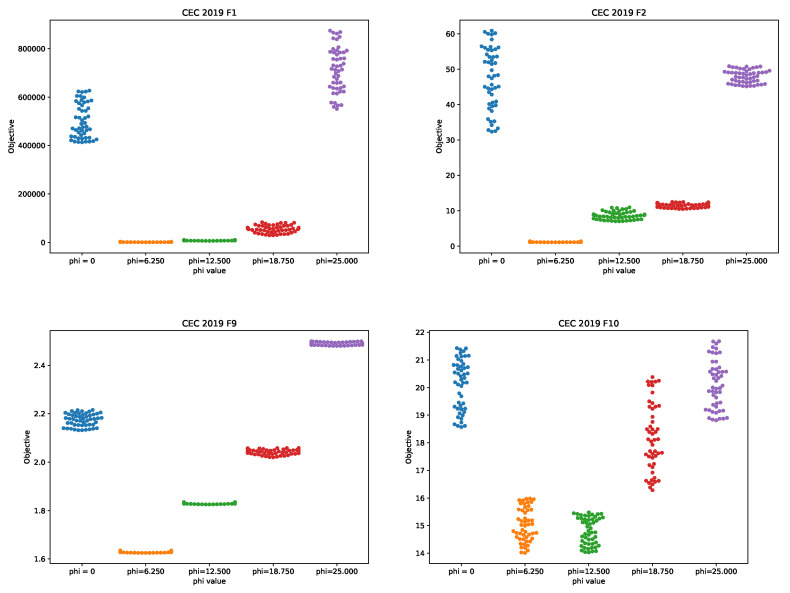

From the presented table with varying dynamic behavior values, a few interesting things can be observed. First, according to the NFL theorem, there are no universal control parameter values that obtain the best results for all benchmark instances, which is why the results generated with the control parameter values that establish the best average quality of solutions are reported. In this case, for seven out of the ten benchmarks, the proposed eHHO obtains the best performance when the dynamic behavior parameter is set to 6250, while, for two benchmark instances, the best performance is achieved with a different setting. For the CEC03 test instance, the same result is achieved for all settings. These statistics are provided in the last row of Table 6.

Further, it can be observed that the worst results are generated when the dynamic behavior parameter is set to 25,000 (the only exception is the CEC05 test instance). With this setting, the eHHO performs exploitation around the current best solution in each iteration by using either the QRL or the CS strategy. Since the basic HHO suffers from poor exploration in early cycles, conducting exploitation around the current best solution throughout the whole run produces worse mean results. This is because the algorithm often misses promising regions of the search space in early iterations.

It can also be noted that the eHHO outscores BBO in the CEC07 benchmark with a different dynamic behavior setting, while, with the parameter set to 6250, the BBO outperforms the method proposed in this study, as shown in Table 3. However, an objective comparative analysis can only be performed when the same control parameter values are used for all benchmark instances, so the above-mentioned remark cannot be taken into account when comparing the quality of the results of the proposed eHHO with other state-of-the-art approaches.

Finally, to better visualize eHHO performance with varying dynamic behavior values, swarm plot diagrams for some functions over 50 runs are shown in Figure 2.

Figure 2.

The eHHO swarm plots for the CEC01, CEC02, CEC09 and CEC10 benchmarks with varying dynamic behavior values.

5. CNN Design for MRI Brain Tumor Grades Simulations

After validating the proposed eHHO on the CEC2019 bound-constrained benchmarks, following good practice from the literature, simulations for the practical CNN design challenge were performed. The generated CNN structures were evaluated for classification tasks on two MRI brain tumor grades datasets.

The CNN hyperparameters’ optimization study adopts a similar approach to that in [9]. Instead of training and evaluating millions of possible CNN architectures, e.g., by using a grid search, the appropriate architectures were evolved by evaluating only a few hundred potential candidates using the guided eHHO approach, which leads to a drastic decrease in the overall computational costs. As in the referenced paper [9], this research utilizes the dropout technique [42] as the regularization option. This method is not considered computationally expensive, yet it is a very efficient approach that prevents the over-fitting problem by randomly removing some neurons in the fully connected layer during the training process.

All implementations are conducted in Python with the TensorFlow and Keras libraries, along with scikit-learn, NumPy and pandas, while the matplotlib and seaborn libraries are used for visualization. Simulations are conducted on an Intel® i7 platform with six NVIDIA GeForce RTX 3080 GPUs.

This section first provides information about datasets and pre-processing, followed by the basic simulation setup (employed hyper-parameters, algorithm adaptations, and flow-chart) and the obtained results, along with a comparative analysis.

5.1. Datasets Details and Pre-Processing

As in [9,10], two datasets are used in the experiments. The first dataset (dataset1) comprises 600 normal brain images retrieved from the IXI database [77] and 591 glioma brain tumor images with four grades (130 retrieved from the REMBRANDT repository [78], 262 captured from the TCGA-GBM dataset [79] and 199 downloaded from the TCGA-LGG dataset [80]). The second dataset (dataset2) consists of 3064 T1-weighted images with Glioma, Meningioma, and Pituitary brain tumor types, collected from 233 patients by Cheng et al. [81]. The second dataset can be retrieved from: https://figshare.com/articles/dataset/brain_tumor_dataset/1512427 (accessed on 28 June 2021).

Considering normal MRIs, an average of six middle MR slices were taken in the same intervals for every patient. The slices were utilized to differentiate healthy brains from brains with tumors. The identification of tumors was performed with the help of contrast infusion. Since the tumors can vary in size and their respective position in the brain, a different number of slices was used in various cases.

After the network has been trained, the classification of healthy and ill slices can be performed. The classifier network is able to recognize and distinguish abnormal images from healthy brains. This process has a two-fold benefit. First, it assists in identifying the presence of the tumor, and second, it pinpoints the approximate position of the tumorous tissue in the observed brain. Finally, it is possible to also determine the approximate size and the tumor grade.

The same image processing as in [9,10] is employed: pixel values of each image are normalized to scale and, with the goal of increasing the size of the training dataset, the data augmentation technique is used.

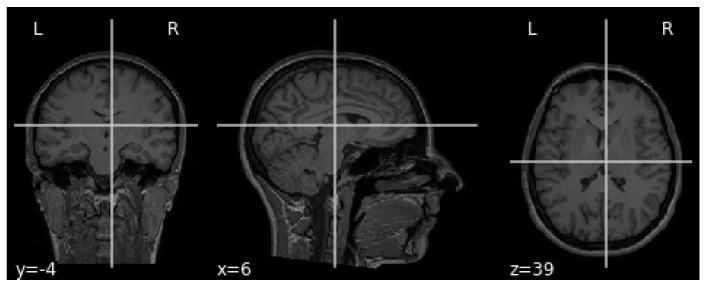

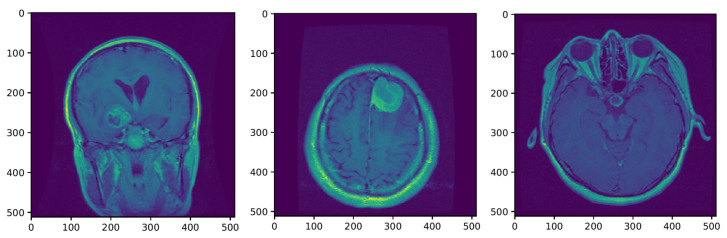

The MRI requires the setup of specific parameters, including radio-frequency pulse and gradient. T1 and T2 sequences are usually used in practice, with both providing specific information about the observed tissue. This research uses the T1 sequence. To reduce the number of images required for the healthy brain, six sections were chosen from the MRI images. A healthy brain sample is shown in Figure 3.

Figure 3.

Axial MRI sample of a normal person with healthy brain.

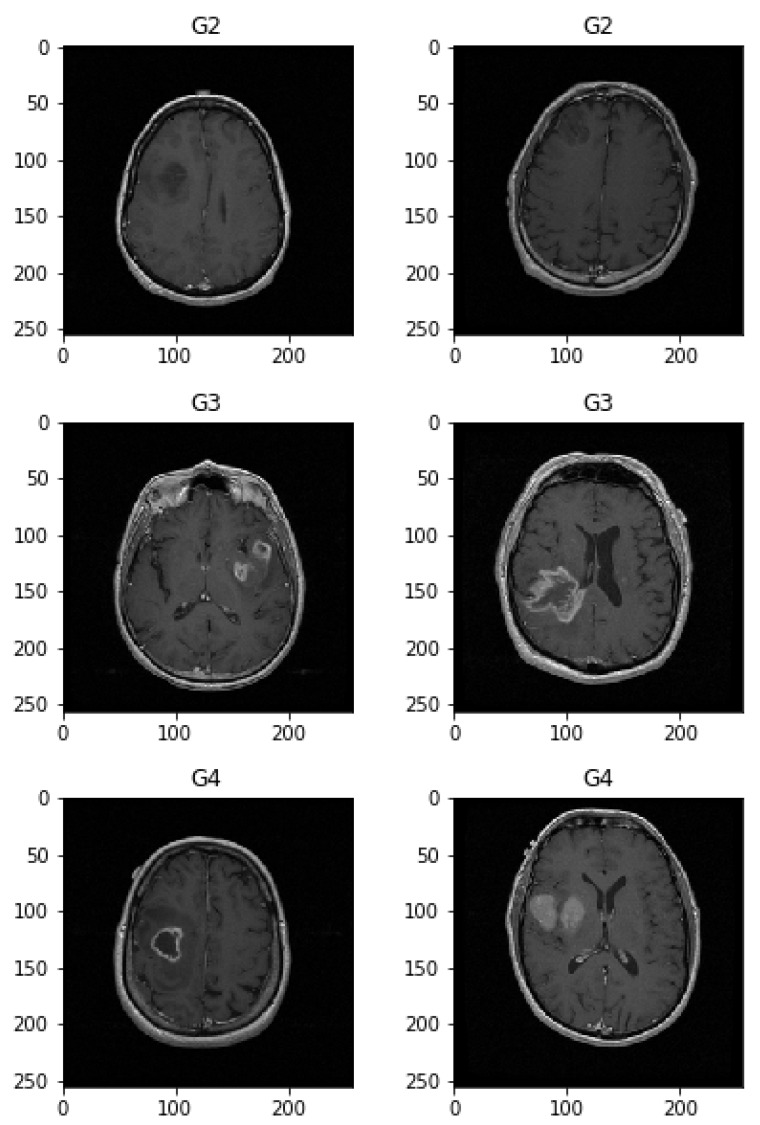

To help distinguish the tumor tissue and more precisely determine the tumor borders, the patients are typically injected with a contrast solution (Gadolinium). These images can be utilized in the classification of the tumor grade. Figure 4 shows axial MRIs with Gadolinium infusion of three grades of glioma brain tumors. Finally, Figure 5 shows three brain tumor cases from the second dataset utilized in this research [81]. As mentioned, all employed images were normalized to the interval, and their dimension was set to pixels.

Figure 4.

Axial MRI samples of different glioma grades: (top) glioma grade 2; (middle) glioma grade 3; (bottom) glioma grade 4.

Figure 5.

Axial brain samples from the public dataset given by Cheng in [81]: (left) glioma; (center) meningioma; (right) pituitary tumors.

Training a network on a bigger dataset is considered a successful approach for improving generalization and reducing the over-fitting problem. Data augmentation refers to the process of creating synthetic data and adding these data to the dataset. In this study, some images were manipulated by applying random modifications to the original ones, including rotation by 10, 20, or 30 degrees in a random direction, translation by 15 pixels, re-scaling the image to 3/4 of the original dimensions, mirroring, and combinations of these changes. The resulting manipulated images were added to the original datasets.

Consequently, the first dataset size is increased to 16,000 and consists of 8000 healthy and 8000 glioma brain tumor images with grades I-IV. Out of the 16,000 images, 2000, comprising 500 images from each category (normal, grade II, III and IV), were used for testing, while the remaining images were used for training. The second dataset initially consisted of a total of 3064 axial images split into three categories: 708 meningioma tumors, 1426 glioma tumors, and 930 pituitary tumors. After performing the same data augmentation process, each of the three categories consists of 1521 images used for training and 115 used for testing, for a grand total of 4908 images. More details regarding the pre-processing phase and the dataset splits can be found in [9,10].

5.2. Basic Experimental Setup

The experimental setup (in terms of data pre-processing, split, validation criteria, and other simulation parameters) utilized in the proposed research is the same as in the referenced paper [9]. Moreover, as noted in Section 1, this research represents the continuation of the study shown in [10].

Hyper-parameters that have been put through the process of optimization included the number of convolutional layers and filters per layer, along with their respective size, employed activation function, pooling layer, number of fully connected layers and the hidden units in each, the number of dropout layers with dropout ratio, type of optimizer, and finally, the learning rate. Since the search space is enormous, it was limited by defining hyper-parameters’ possible values within the lower and upper bounds, which were suggested by the domain expert, as shown in [9]. A full list of hyper-parameters, together with their corresponding boundaries, is given in Table 7.

Table 7.

List of CNN hyper-parameters along with search space boundaries.

| Hyperparameter Description | Boundaries |
|---|---|
| Number of convolutional layers | [2, 6] |
| Number of max-pooling layers | [2, 6] |
| Number of dense layers | [1, 3] |
| Number of dropout layers | [1, 3] |
| Filter size | [2, 7] |
| Number of filters | [16, 128] |
| Number of hidden units | [128, 512] |
| Dropout rate | [0.1, 0.5] |
| Activation function | [1, 4] |
| Optimizer | [1, 6] |
| Learning rate | [0.0001, 0.01] |

The number of convolutional and pooling layers ( and ) possible values are defined by the set . The kernel size of the pooling layer has been fixed to . The filter size () has been limited from 2 to 7. The number of filters () was defined with the set of possible values . The fully connected () and dropout () layers’ number was taken from the interval . Concerning the number of hidden units (), this can take values from the set .

The choice of the activation function was limited to four types, encoded with numeric values as follows: ReLU = 1, ELU = 2, SELU = 3, and LReLU = 4. The dropout rate and the learning rate can take any continuous value from the intervals [0.1, 0.5] and [0.0001, 0.01], respectively. This research, like those proposed in [9,10], uses cross-entropy as the loss function, with six possible optimizer options encoded with integers from 1 to 6 (similar to the activation functions). After defining all the hyper-parameters subjected to the CNN optimization process, the search space can be formulated as:

| (30) |

where every element from the set refers to the corresponding hyper-parameter and its possible range of values. For more details, the interested reader can refer to the papers [9,10].

Therefore, according to (30), each solution from the population, which represents one possible CNN structure, is encoded as an array of size 11, and the whole population is represented as a matrix of size N × 11. Moreover, some solution parameters are continuous, while others are discrete. Since the ranges of the integer parameters are relatively narrow, the search process is conducted by simply rounding the generated continuous value to the nearest discrete value. Based on previous studies, this is the most efficient way, with no additional computational burden [18,19].
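The encoding and rounding step can be sketched as follows. The bounds are taken from Table 7 and the activation codes from the text; the parameter names, the clipping to the bounds, and the treatment of the number of filters and hidden units as plain integers (the paper restricts them to specific sets of values) are simplifying assumptions made only for this illustration.

```python
import math

# (name, lower bound, upper bound, is_integer), in the order of Table 7.
BOUNDS = [
    ("conv_layers", 2, 6, True),
    ("pool_layers", 2, 6, True),
    ("dense_layers", 1, 3, True),
    ("dropout_layers", 1, 3, True),
    ("filter_size", 2, 7, True),
    ("num_filters", 16, 128, True),
    ("hidden_units", 128, 512, True),
    ("dropout_rate", 0.1, 0.5, False),
    ("activation", 1, 4, True),
    ("optimizer", 1, 6, True),          # kept as an integer code
    ("learning_rate", 0.0001, 0.01, False),
]

ACTIVATIONS = {1: "ReLU", 2: "ELU", 3: "SELU", 4: "LReLU"}


def decode_solution(vector):
    """Map an 11-element continuous vector to CNN hyper-parameters."""
    if len(vector) != len(BOUNDS):
        raise ValueError(f"expected {len(BOUNDS)} values, got {len(vector)}")
    params = {}
    for value, (name, low, high, is_integer) in zip(vector, BOUNDS):
        clipped = min(max(value, low), high)   # keep value inside bounds
        if is_integer:
            # Round to the nearest integer (halves are rounded up).
            params[name] = int(math.floor(clipped + 0.5))
        else:
            params[name] = clipped
    params["activation"] = ACTIVATIONS[params["activation"]]
    return params


if __name__ == "__main__":
    # Hypothetical candidate produced by the metaheuristic.
    candidate = [3.4, 2.6, 1.2, 2.9, 7.8, 63.7, 300.2, 0.25, 3.5, 0.2, 0.005]
    print(decode_solution(candidate))
```

A population of N candidates is then simply a list of N such vectors, which corresponds to the N × 11 matrix mentioned in the text.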

The initial population is generated with random CNN architectures, followed by a calculation of the fitness for each CNN (candidate solution). The studies in [9,10] evolve 50 possible network structures (N = 50) in 15 iterations (T = 15), and this setup, in the case of most metaheuristics approaches, yields a total of 800 FFEs (N + N × T = 50 + 50 × 15). However, as pointed out in Section 4, not all metaheuristics use the same number of FFEs in each iteration and, for that reason, in the proposed study, the maximum number of FFEs is used as the termination condition instead of the number of iterations. With this setup, conditions similar to those in [9,10] were established. The eHHO dynamic behavior parameter was set to 200 (800/4), according to the expression shown in Section 4.1. However, since the CNN design experiment involves a much lower number of FFEs than the CEC2019 simulations, the value of the best threshold was determined empirically and set to 12, which corresponds to (800 − 200)/50.

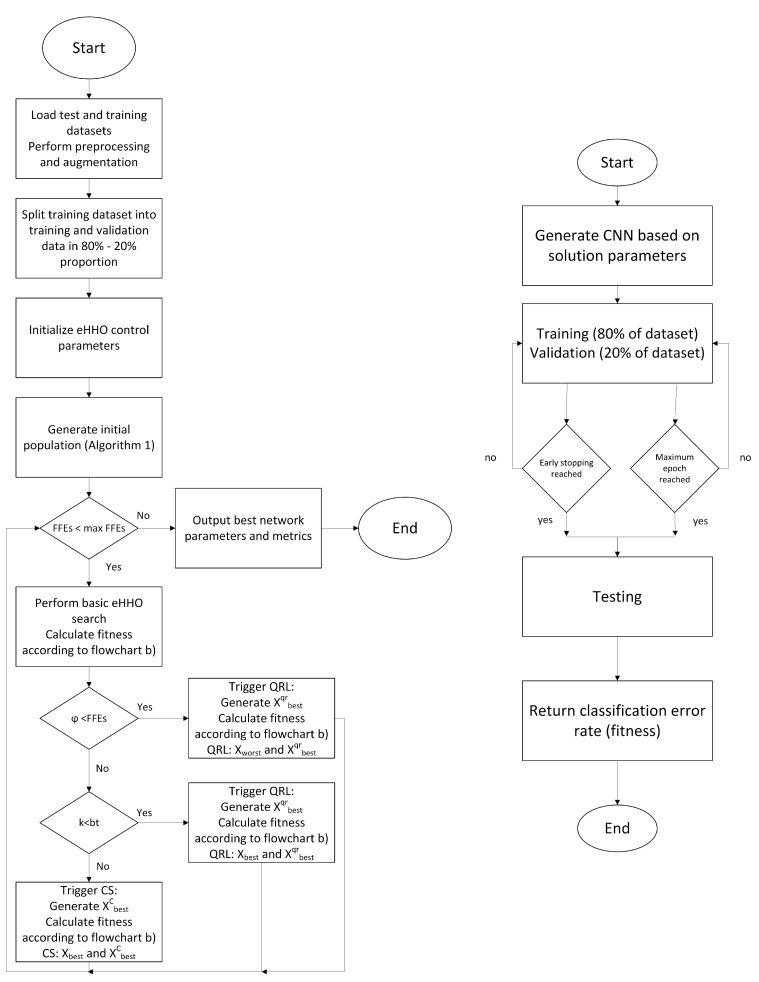

The classification error rate on the test set represents the fitness function and the optimization goal is to find a CNN architecture with maximum accuracy (lowest error rate). The training set is split into the training and validation data using the split rule. Each possible generated CNN structure (candidate solution) is trained on the training set and validated on the validation set in 100 epochs. With the goal of reducing computational costs, an early stopping condition is implemented, and if there is no improvement in validation accuracy in three subsequent epochs, the training will stop. When the training is finished, the fitness of the CNN structure (candidate individual) is calculated.
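The early stopping rule and the error-based fitness can be sketched in a framework-independent way. In the sketch below, the per-epoch validation accuracies are passed in as a plain list instead of being produced by actual Keras training; the function names and the sample accuracy values are hypothetical.

```python
def run_with_early_stopping(val_accuracies, max_epochs=100, patience=3):
    """Simulate training that stops after `patience` epochs without
    improvement in validation accuracy.

    Returns the best validation accuracy seen and the number of epochs run.
    """
    best_accuracy = float("-inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for accuracy in val_accuracies[:max_epochs]:
        epochs_run += 1
        if accuracy > best_accuracy:
            best_accuracy = accuracy
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_accuracy, epochs_run


def fitness_from_accuracy(accuracy):
    """Fitness is the classification error rate (lower is better)."""
    return 1.0 - accuracy


if __name__ == "__main__":
    # Hypothetical validation accuracies for one candidate CNN.
    history = [0.70, 0.80, 0.85, 0.84, 0.85, 0.83, 0.90]
    best, epochs = run_with_early_stopping(history)
    print(best, epochs, fitness_from_accuracy(best))
```

With the sample history, training stops after the sixth epoch because the fourth, fifth, and sixth epochs bring no improvement, so the later value of 0.90 is never reached. This illustrates the computational saving as well as the risk of stopping too early with a short patience.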

Taking everything into account, the flowchart of the proposed eHHO for CNN design (CNN + eHHO) is given in Figure 6.

Figure 6.

CNN + eHHO main flowchart (left); CNN + eHHO flowchart for calculating fitness (right).

5.3. Results, Comparative Analysis and Discussion

In this study, the performance of the proposed eHHO was compared with other state-of-the-art metaheuristics along with some classical machine learning approaches. All methods covered in the comparative analysis were implemented and tested for the purpose of this research under the same conditions to maintain an unbiased and fair comparison. Details of the experimental setup are explained in Section 5.2.

All metaheuristics were executed according to the flow diagram shown in Figure 6 in 10 independent runs, and the best results are reported. The adaptations of metaheuristics for CNN design are labeled with the prefix “CNN”, e.g., CNN + eHHO.

Metaheuristics were used to find a proper CNN structure within the boundaries of a search space, and this guided exploration is much more efficient than the exhaustive one. The search space is enormous and there are more than 1 million possible hyperparameter combinations. With this hyperparameter setup, a grid search cannot execute in a reasonable amount of computational time; however, by using metaheuristics, a satisfying CNN is generated by evaluating only 800 possible CNN architectures.

Due to the abovementioned argument, the grid search, as one of the most commonly used tools for searching near-optimal hyperparameter values for a specific classification/regression problem, is not included in the comparative analysis. A grid search that consumes approximately the same processing time as the approach proposed in this study can only evaluate a few possible CNN structures and, as such, would be useless in practical implementation.

Besides the original HHO, the following metaheuristics were also included in analysis: genetic algorithm (GA) implementation proposed in [9], basic FA [82], modified firefly algorithm (mFA) [10], bat algorithm (BA) [15], EHO [67], WOA [17], SCA [70] and PSO [11]. All methods were tested with the control parameters’ values, suggested in the original papers, which are summarized in Table 8. For additional details, please refer to original manuscripts, where the abovementioned methods are proposed for the first time.

Table 8.

Control parameters’ setup for metaheuristics included in analysis.

Moreover, to further facilitate comparative analysis and better evaluate the robustness of the proposed CNN + eHHO, some standard network structures (not evolved by metaheuristics) were also encompassed in comparative analysis: support vector machine (SVM) + recursive feature elimination (RFE) [83], Vanilla preprocessing + shallow CNN [84], CNN LeNet-5 [85], VGG19 [86] and DenseNet (Keras DenseNet201 instance) [87]. All these methods were instantiated with default parameters and trained for these particular datasets under the same conditions as metaheuristics.

Comparative analysis results in terms of classification accuracy between the proposed eHHO and the original HHO, along with other state-of-the-art methods, are provided in Table 9. The results obtained for the GA and mFA differ from the ones reported in [9,10], respectively, because, in this study, the maximum number of FFEs is taken as the termination condition.

Table 9.

MRI tumor grades classification comparative analysis.

| Approach | Accuracy |
|---|---|
| Case Study I (dataset1) – Glioma Grade II/Grade III/Grade IV | |
| SVM + RFE [83] | 62.5% |
| Vanilla pre-processing + shallow CNN [84] | 82.8% |
| CNN LeNet-5 [85] | 63.6% |
| VGG19 [86] | 87.3% |
| DenseNet [87] | 88.1% |
| CNN + GA [9] | 91.3% |
| CNN + FA [82] | 92.5% |
| CNN + mFA [10] | 93.0% |
| CNN + BA [15] | 91.6% |
| CNN + EHO [67] | 90.7% |
| CNN + WOA [17] | 92.1% |
| CNN + SCA [70] | 92.8% |
| CNN + HHO | 92.8% |
| CNN + eHHO | 95.6% |
| Case Study II (dataset2) – Glioma/Meningioma/Pituitary | |
| SVM + RFE [83] | 71.2% |
| Vanilla pre-processing + shallow CNN [84] | 91.4% |
| CNN LeNet-5 [85] | 74.9% |
| VGG19 [86] | 92.6% |
| DenseNet [87] | 92.7% |
| CNN + GA [9] | 94.6% |
| CNN + FA [82] | 95.4% |
| CNN + mFA [10] | 96.2% |
| CNN + BA [15] | 94.7% |
| CNN + EHO [67] | 93.9% |
| CNN + WOA [17] | 94.5% |
| CNN + SCA [70] | 95.7% |
| CNN + HHO | 95.9% |
| CNN + eHHO | 97.7% |

Based on the results shown in Table 9, even the basic CNN + HHO obtained promising results compared to other methods. The CNN + HHO performed similarly to CNN + SCA and better than all other metaheuristics, except for the proposed CNN + eHHO and CNN + mFA. In both experiments (dataset1 and dataset2), CNN + EHO managed to generate a CNN that obtains 90.7% and 93.9 % accuracy for dataset1 and dataset2, respectively, which are the worst results among all the metaheuristics-based approaches. The other methods showed a similar performance for both datasets.

The proposed CNN + eHHO proved to be a more robust and precise method than the CNN + HHO and the other presented approaches for this specific task. For both datasets, the proposed CNN + eHHO achieved the highest classification accuracy among all competing algorithms. The second-best approach is CNN + mFA [10]; however, CNN + eHHO outscores it in terms of accuracy by 2.6 percentage points in the dataset1 simulations and by 1.5 percentage points in the dataset2 simulations. These improvements may seem small; however, given the sensitivity of medical diagnostics, even gains of a fraction of a percent can save lives by confirming glioma tumor cases in the early phases of progression.

Furthermore, some well-known CNN structures shown in the comparative analysis are deeper than the best solutions evolved by metaheuristics, yet the networks designed by metaheuristics achieved better classification accuracy. The metaheuristics provide an automated means of evolving possible CNN structures in an iterative, guided manner by trying to minimize the classification error, whereas when a network is crafted manually, only some of the possible hyperparameter combinations can be examined.

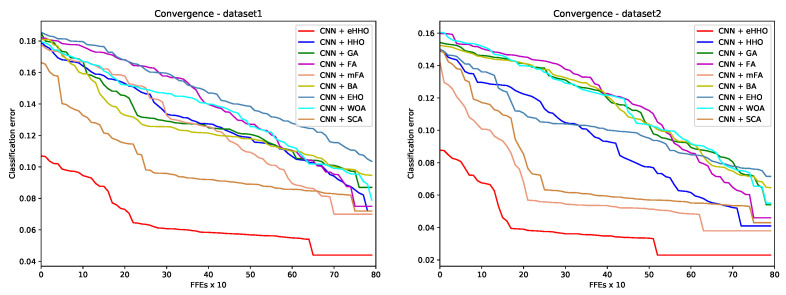

Convergence speed graphs for one of the best runs for the classification error metrics of compared metaheuristics-based methods are provided in Figure 7. From the presented graphs, the superiority of CNN + eHHO in terms of solution quality (accuracy) and convergence speed can be observed. Moreover, it can be observed that the chaos-based initialization offers a significant advantage to CNN + eHHO upon generation of the initial population.

Figure 7.

Classification error convergence speed graphs for dataset1 and dataset2 as a direct comparison between proposed eHHO and other metaheuristics.

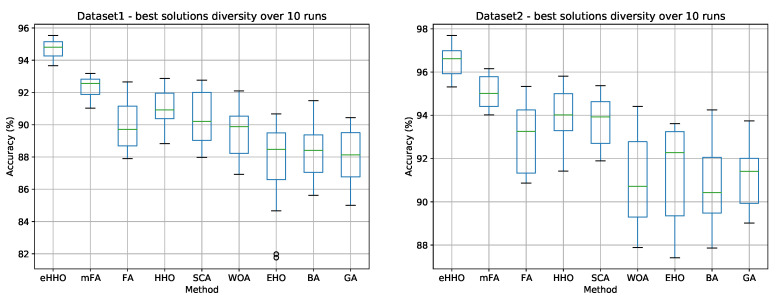

Besides the convergence speed, another very important indicator of an algorithm’s performance is diversity. By observing the best solutions’ diversity, generated at the end of the runs, the stability of the approach can be investigated. The diversity of the best solution (generated CNN structure) for all methods included in an analysis over 10 runs is shown in the box and whiskers diagrams presented in Figure 8.

Figure 8.

Diversity analysis for dataset1 and dataset2 as a direct comparison between proposed eHHO and other metaheuristics.

From the presented graphs, it can be seen that the proposed CNN + eHHO and CNN + mFA exhibit stable behavior for both datasets, with a relatively low standard deviation. Other methods, except for CNN + EHO, also perform well in terms of stability. However, the CNN + EHO approach exhibits a higher standard deviation, with a pronounced discrepancy between the best and worst solutions and, in the case of dataset1, even some outliers.
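The quantities that a box and whiskers diagram summarizes can be sketched as follows. This is a minimal illustration, not the procedure used in the study: the 1.5 × IQR outlier rule is the common Tukey convention and is assumed here, the function name `box_plot_summary` is hypothetical, and the accuracy values in `HYPOTHETICAL_RUNS` are made up for demonstration and are not results from the experiments.

```python
import statistics
from typing import Dict, List, Union

# Made-up final accuracies of 10 independent runs (NOT data from the study).
HYPOTHETICAL_RUNS = [0.90, 0.91, 0.91, 0.92, 0.92, 0.92, 0.93, 0.93, 0.94, 0.80]


def box_plot_summary(final_accuracies: List[float]) -> Dict[str, Union[float, List[float]]]:
    """Summarize the spread of best-solution accuracies over several runs."""
    # Quartiles of the observed runs (inclusive method treats data as the full population).
    q1, median, q3 = statistics.quantiles(final_accuracies, n=4, method="inclusive")
    iqr = q3 - q1
    # Assumed Tukey fences: points beyond 1.5 * IQR from the box are outliers.
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    outliers = [a for a in final_accuracies if a < lower_fence or a > upper_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "stdev": statistics.stdev(final_accuracies),  # sample standard deviation
        "outliers": outliers,
    }


summary = box_plot_summary(HYPOTHETICAL_RUNS)
print(summary)
```

A method with a narrow box, a small standard deviation, and an empty outlier list would be read as stable in the sense used above.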

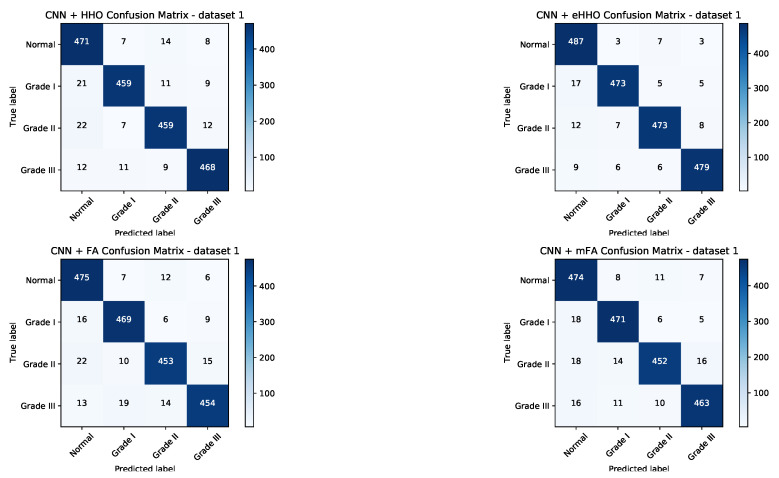

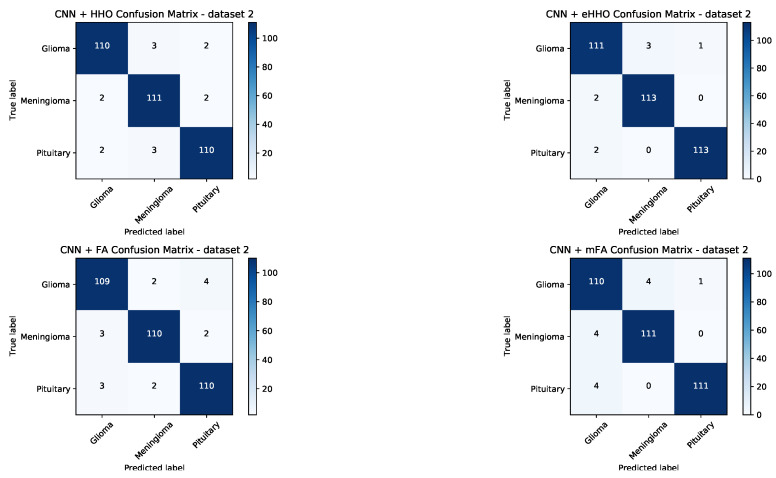

To provide better insight into the achieved results, the confusion matrices for both datasets were generated for the CNN + HHO, CNN + eHHO, CNN + FA and CNN + mFA approaches and are shown in Figure 9 and Figure 10.

Figure 9.

Confusion matrix for dataset1 with a direct comparison between proposed eHHO, HHO, FA and mFA approaches.

Figure 10.

Confusion matrix for dataset2 with a direct comparison between proposed CNN + eHHO, CNN + HHO, CNN + FA and CNN + mFA approaches.

In general, among all algorithms included in the comparative analysis, the two approaches that generated the best-performing CNNs for both datasets are the proposed CNN + eHHO and CNN + mFA. Both methods managed to classify each tumor category with high accuracy for dataset1 and dataset2. Nonetheless, from the presented matrices, it can be concluded that the CNN + eHHO performs better.

To further investigate the robustness of the two best-performing CNN classifiers, other performance metrics are presented for CNN + eHHO and CNN + mFA. These indicators are shown in Table 10 and Table 11. Both approaches were tested with the number of FFEs as the termination condition. Since the fitness function evaluation is the most expensive operation, both algorithms take approximately the same computational time, and the difference in complexity can be neglected. However, if the total number of iterations were taken as the termination condition, the mFA would be much more expensive than eHHO because, in each iteration, FA executes two loops and performs significantly more FFEs [54].
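The effect of choosing FFEs rather than iterations as the termination condition can be sketched with a small counting wrapper. This is a simplified illustration under stated assumptions, not the implementation used in the study: all names are hypothetical, the population size (20) and budget (400) are arbitrary demonstration values, the single-loop step assumes one evaluation per individual per iteration, and the pairwise step assumes the worst case of a double-loop scheme in which every pair triggers one evaluation. No real search moves are performed; only evaluations are counted.

```python
from typing import Callable, List, Sequence

Solution = Sequence[float]


class BudgetExhausted(Exception):
    """Raised when the fitness evaluation budget is used up."""


class CountingFitness:
    """Wraps a fitness function and enforces a maximum number of FFEs."""

    def __init__(self, fitness: Callable[[Solution], float], max_ffes: int) -> None:
        self._fitness = fitness
        self.max_ffes = max_ffes
        self.ffes = 0

    def __call__(self, solution: Solution) -> float:
        if self.ffes >= self.max_ffes:
            raise BudgetExhausted
        self.ffes += 1
        return self._fitness(solution)


def single_loop_step(population: List[Solution], fitness: Callable[[Solution], float]) -> None:
    # Assumed: one evaluation per individual per iteration.
    for candidate in population:
        fitness(candidate)


def pairwise_step(population: List[Solution], fitness: Callable[[Solution], float]) -> None:
    # Assumed worst case of a double loop: one evaluation per pair (i, j).
    n = len(population)
    for i in range(n):
        for _ in range(i + 1, n):
            fitness(population[i])


def iterations_within_budget(step, population, raw_fitness, max_ffes: int) -> int:
    """Count how many full iterations fit into a fixed FFE budget."""
    counted = CountingFitness(raw_fitness, max_ffes)
    completed = 0
    try:
        while True:
            step(population, counted)
            completed += 1
    except BudgetExhausted:
        return completed


def sphere(x: Solution) -> float:
    # Toy fitness standing in for the expensive CNN training and validation.
    return sum(v * v for v in x)


POPULATION = [[float(i)] * 3 for i in range(20)]
print(iterations_within_budget(single_loop_step, POPULATION, sphere, 400))
print(iterations_within_budget(pairwise_step, POPULATION, sphere, 400))
```

Under these assumptions, the single-loop scheme completes 20 full iterations within the budget and the pairwise scheme completes 2, while both perform the same number of evaluations. This is why a comparison under an FFE budget keeps the computational cost of the two methods approximately equal.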

Table 10.

MRI brain tumor classification performance metrics for proposed CNN + eHHO approach.

| Class | TP | FP | TN | FN | TPR | TNR | PPV | NPV | FPR | FNR | FDR | ACC | F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DATASET 1 | | | | | | | | | | | | | |
| Normal | 487 | 38 | 1462 | 13 | 0.974 | 0.974 | 0.928 | 0.991 | 0.025 | 0.026 | 0.072 | 0.974 | 0.950 |
| Grade II | 473 | 16 | 1484 | 27 | 0.946 | 0.989 | 0.967 | 0.982 | 0.011 | 0.054 | 0.033 | 0.979 | 0.957 |
| Grade III | 473 | 18 | 1482 | 27 | 0.946 | 0.988 | 0.963 | 0.982 | 0.012 | 0.054 | 0.037 | 0.978 | 0.955 |
| Grade IV | 479 | 16 | 1484 | 21 | 0.958 | 0.989 | 0.967 | 0.986 | 0.011 | 0.042 | 0.032 | 0.982 | 0.963 |
| DATASET 2 | | | | | | | | | | | | | |
| Glioma | 111 | 4 | 226 | 4 | 0.965 | 0.983 | 0.965 | 0.983 | 0.017 | 0.035 | 0.035 | 0.977 | 0.965 |
| Meningioma | 113 | 3 | 227 | 2 | 0.983 | 0.987 | 0.974 | 0.991 | 0.013 | 0.017 | 0.026 | 0.986 | 0.978 |
| Pituitary | 113 | 1 | 229 | 2 | 0.983 | 0.996 | 0.991 | 0.991 | 0.004 | 0.017 | 0.009 | 0.991 | 0.987 |

Table 11.

MRI brain tumor classification performance metrics for CNN + mFA approach [10].

| Class | TP | FP | TN | FN | TPR | TNR | PPV | NPV | FPR | FNR | FDR | ACC | F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DATASET 1 | | | | | | | | | | | | | |
| Normal | 474 | 52 | 1448 | 26 | 0.948 | 0.965 | 0.901 | 0.982 | 0.035 | 0.052 | 0.099 | 0.961 | 0.924 |
| Grade II | 471 | 33 | 1467 | 29 | 0.942 | 0.978 | 0.935 | 0.981 | 0.022 | 0.058 | 0.065 | 0.969 | 0.938 |
| Grade III | 452 | 27 | 1473 | 48 | 0.904 | 0.982 | 0.944 | 0.968 | 0.018 | 0.096 | 0.056 | 0.963 | 0.924 |
| Grade IV | 463 | 28 | 1472 | 37 | 0.926 | 0.981 | 0.943 | 0.975 | 0.019 | 0.074 | 0.057 | 0.967 | 0.934 |
| DATASET 2 | | | | | | | | | | | | | |
| Glioma | 110 | 8 | 222 | 5 | 0.957 | 0.965 | 0.932 | 0.978 | 0.035 | 0.043 | 0.068 | 0.962 | 0.944 |
| Meningioma | 111 | 4 | 226 | 4 | 0.965 | 0.983 | 0.965 | 0.983 | 0.017 | 0.035 | 0.035 | 0.977 | 0.965 |
| Pituitary | 111 | 1 | 229 | 4 | 0.965 | 0.996 | 0.991 | 0.983 | 0.004 | 0.035 | 0.009 | 0.986 | 0.978 |

Explanations for each abbreviation used in Table 10 and Table 11 are provided below:

TP represents the true positives (correct predictions of positive class).

TN represents the true negatives (correct predictions of negative class).

FP denotes the false positives (incorrect predictions of positive class).

FN denotes the false negatives (incorrect predictions of negative class).

TPR stands for true positive rate, calculated as TP/(TP + FN). This value is also known as sensitivity, hit rate, or recall.

TNR stands for true negative rate, calculated as TN/(TN + FP). This value is also referred to as specificity.

PPV denotes positive predictive value, calculated as TP/(TP + FP). This value is also referred to as precision.

NPV denotes negative predictive value, calculated as TN/(TN + FN).

FPR stands for false positive rate, calculated as FP/(FP + TN).

FNR stands for false negative rate, calculated as FN/(TP + FN).

FDR represents false discovery rate, calculated as FP/(TP + FP).

ACC represents the overall accuracy, calculated as (TP + TN)/(P + N) = (TP + TN)/(TP + FP + FN + TN).

F1 denotes the F1 score, the harmonic mean of PPV and TPR, calculated as 2TP/(2TP + FP + FN).
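The formulas above can be checked against the tabulated counts with a short sketch. The counts in `EHHO_DATASET1` are copied from the dataset1 rows of Table 10. The class and function names are hypothetical, the F1 expression is the standard definition 2TP/(2TP + FP + FN), and the multiclass accuracy helper assumes that every sample belongs to exactly one class, so the sum of per-class TP over the total sample count gives the overall accuracy.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Counts:
    """One-vs-rest confusion counts for a single class."""
    tp: int
    fp: int
    tn: int
    fn: int


def one_vs_rest_metrics(c: Counts) -> Dict[str, float]:
    """Compute the per-class indicators listed above from raw counts."""
    return {
        "TPR": c.tp / (c.tp + c.fn),
        "TNR": c.tn / (c.tn + c.fp),
        "PPV": c.tp / (c.tp + c.fp),
        "NPV": c.tn / (c.tn + c.fn),
        "FPR": c.fp / (c.fp + c.tn),
        "FNR": c.fn / (c.tp + c.fn),
        "FDR": c.fp / (c.tp + c.fp),
        "ACC": (c.tp + c.tn) / (c.tp + c.fp + c.fn + c.tn),
        "F1": 2 * c.tp / (2 * c.tp + c.fp + c.fn),  # standard F1 definition
    }


def multiclass_accuracy(per_class: Dict[str, Counts]) -> float:
    """Fraction of all samples assigned to their correct class."""
    # Assumption: single-label data, so any class's four counts sum to the total.
    first = next(iter(per_class.values()))
    total = first.tp + first.fp + first.tn + first.fn
    return sum(c.tp for c in per_class.values()) / total


# Counts taken from Table 10 (CNN + eHHO, dataset1).
EHHO_DATASET1 = {
    "Normal": Counts(tp=487, fp=38, tn=1462, fn=13),
    "Grade II": Counts(tp=473, fp=16, tn=1484, fn=27),
    "Grade III": Counts(tp=473, fp=18, tn=1482, fn=27),
    "Grade IV": Counts(tp=479, fp=16, tn=1484, fn=21),
}

for name, counts in EHHO_DATASET1.items():
    print(name, {k: round(v, 4) for k, v in one_vs_rest_metrics(counts).items()})
print("overall accuracy:", multiclass_accuracy(EHHO_DATASET1))
```

Note that the per-class ACC is a one-vs-rest quantity and is therefore higher than the multiclass accuracy: summing the TP column for dataset1 gives 1912 correct predictions out of 2000 samples, which corresponds to the 95.6% reported for CNN + eHHO in Table 9.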

The results given in Table 10 and Table 11 show that the proposed CNN + eHHO approach is capable of achieving notably better results. When dataset1 is considered, the proposed CNN + eHHO achieves an accuracy of 0.974 for healthy brains (compared to the 0.961 achieved by CNN + mFA), 0.979 for grade II glioma (mFA achieved 0.969), 0.978 for grade III glioma (mFA scored 0.963), and 0.982 for grade IV glioma (compared to 0.967 achieved by CNN + mFA). When dataset2 is observed, the proposed eHHO achieved an accuracy of 0.977 for glioma tumors (compared to 0.962 achieved by mFA), 0.986 for meningioma tumors (CNN + mFA scored 0.977), and finally, 0.991 for pituitary tumors (while mFA achieved 0.986).

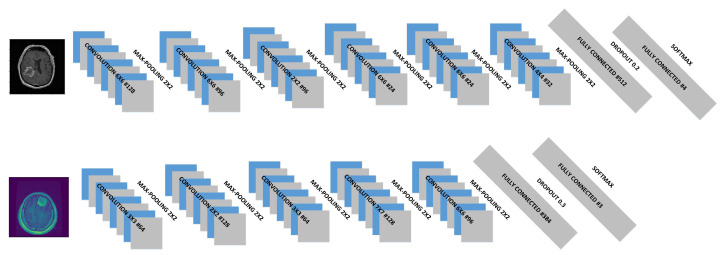

Some of the best-performing CNN structures generated by CNN + eHHO for both datasets are shown in Figure 11. Most of the best-performing CNN structures generated for dataset1 consist of six convolutional and max pooling layers with filters, optimizers or , and activation function. However, the best-performing CNN architectures for dataset2 include five convolutional and max pooling layers with filters, optimizers or , and or activation functions.

Figure 11.

Examples of best performing network structures: dataset1 (top); dataset2 (bottom).

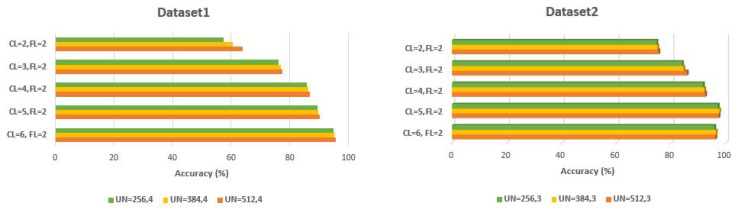

During simulations, various generated CNN structures evolved by CNN + eHHO were captured. It was determined that the highest impact on CNN performance for both datasets is the number of convolutional layers () and the number of hidden units in the dense layers (), while most of the evolved network structures have two fully connected layers (). The impact of and on the classification performance of some generated networks for dataset1 and dataset2 is shown in Figure 12.

Figure 12.

Impact of and in the dense layers on classification accuracy.

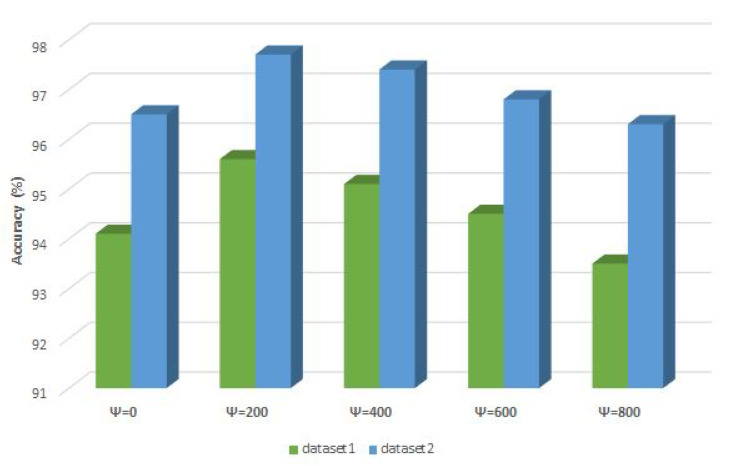

Finally, as in the experiments with CEC2019 benchmarks (Section 4), to better determine the influence of control parameters and on the CNN + eHHO performance, additional experiments with varying values are conducted. The was adjusted in increments of 200 FFEs ( and 800), and the best solution’s accuracy (evolved CNN structure) is reported from a set of 10 independent runs. The value depends on and was calculated according to the expression shown in Section 5.2. The results of this experiment for both datasets are summarized in Figure 13.

Figure 13.

Effect of control parameter on the CNN + eHHO performance.

From the presented chart, it can be firmly concluded that with set to 200 FFEs, the proposed CNN + eHHO obtains the best performance. The CNNs that obtain the lowest accuracy are generated with (magnified exploration) and (amplified exploitation). Moreover, it is interesting to note that in all additional experiments, the CNN + eHHO managed to find a CNN structure that outscores all other metaheuristics included in comparative analysis (Table 9).

6. Conclusions

The research presented in this manuscript proposes an enhanced version of the HHO metaheuristic. The introduced eHHO algorithm was developed specifically to address the observed drawbacks of the original method by incorporating a chaotic mechanism and a novel replacement strategy based on quasi-reflection-based learning. These changes enhance both exploitation and exploration, with only a small additional overhead in terms of computational complexity and new control parameters.

In the presented study, two types of experiments were performed. The proposed eHHO was first validated on a standard bound-constrained CEC2019 benchmark functions set, according to the firmly established practice in the recent computer science literature. The method was further validated on the practical problem of CNN design (hyperparameter optimization) and tested for classification of brain tumor MRI images.

The proposed method was compared to other state-of-the-art algorithms and obtained a superior performance in both conducted experiments. In simulations with CEC2019 instances, eHHO proved to be a robust approach and outscored all opponents in 8 out of 10 test functions. In the second experiment, CNN + eHHO managed to generate CNNs that are able to classify different grades of glioma tumors and two other common brain tumor variants with high precision by taking the raw MRI as input. Additionally, in the proposed study, other well-known swarm algorithms were also implemented for CNN design, and a broader comparative analysis was established. The CNN + eHHO substantially outscored all other approaches. In this way, the approach introduced in this study can be utilized as an additional option to help doctors in the early detection of gliomas and reduce the need for invasive procedures such as biopsy. It can also reduce the time for diagnostics, as the time needed to perform the classification is far shorter than the time required to analyze the biopsy.

Consequently, the contributions of this manuscript can be summarized as follows: the basic HHO was improved and showed a superior performance compared to the other state-of-the-art methods, both for standard bound-constrained benchmark problems and for the practical CNN design challenge. Furthermore, other swarm intelligence approaches that had not been tested before for this problem were implemented, and a performance comparison of different metaheuristics for CNN hyperparameter optimization is provided.

It should be stated that the conducted research has some limitations. First, the hyperparameter values were restricted to the ranges recommended by a domain expert, as stated in [9] (otherwise, the search space would be infinite), so not all possible structures were tested. Second, the eHHO algorithm that generates the possible CNN structures was not tested on generic datasets, such as CIFAR-10, USPS, Semeion, and MNIST; this will be addressed in a future study. Finally, since there are numerous new networks and approaches, not all of them could be included in the comparative analysis, as testing them would require a long period of time.