Abstract

We present a general mathematical framework for trajectory stratification for simulating rare events. Trajectory stratification involves decomposing trajectories of the underlying process into fragments limited to restricted regions of state space (strata), computing averages over the distributions of the trajectory fragments within the strata with minimal communication between them, and combining those averages with appropriate weights to yield averages with respect to the original underlying process. Our framework reveals the full generality and flexibility of trajectory stratification, and it illuminates a common mathematical structure shared by existing algorithms for sampling rare events. We demonstrate the power of the framework by defining strata in terms of both points in time and path-dependent variables for efficiently estimating averages that were not previously tractable.

I. INTRODUCTION

Computer simulation is a powerful tool for the study of physical processes. Specifically, stochastic simulation methods have broad applicability in modeling physical systems in a variety of fields including chemistry, physics, climate science, engineering, and economics [1, 2]. In many practical applications, the statistical properties of the process of interest are approximated by averages over many independent realizations of trajectories of the process, or, in the case of ergodic properties, by averages taken over a single very long trajectory of the process. However, for many systems, the most interesting events occur infrequently and are therefore very difficult to observe by direct numerical integration of the equations governing the dynamics. For example, in chemistry, the conformational changes responsible for the function of many molecules and, in climate science, extreme events like severe droughts and violent hurricanes, occur on timescales orders of magnitude longer than the timestep for numerical integration. This basic observation has motivated the development of numerous techniques aimed at enhancing the sampling of rare events of interest without sacrificing statistical fidelity (see [3] for an account within the context of molecular simulation).

In this article, we depart from standard enhanced sampling approaches and develop a general mathematical and computational framework for the estimation of statistical averages involving rare trajectories of stochastic processes. Our approach can be viewed as a form of stratified sampling, long a cornerstone of experimental design in statistics (e.g., [4]). In stratified sampling, a population is divided into subgroups (strata), averages within those strata are computed separately, and then averages over the entire state space are assembled as weighted sums of the strata averages. Stratification also has a long history in computer simulations of condensed-phase systems as umbrella sampling (US) [3, 5–8]. The key idea behind any stratified sampling strategy is that, when the strata are chosen appropriately, their statistics can be obtained accurately with relatively low effort and combined to estimate the average of interest with (much) less overall effort than directly sampling the stochastic process to the same statistical precision. Here we show that the trajectories of an arbitrary discrete-time Markov process (including many dynamics with memory, so long as they can be written as a suitable mapping) can also be stratified: they can be decomposed into fragments restricted to regions of trajectory space (strata), averages over the distributions of trajectory fragments within the strata can be computed with limited communication between them, and those averages can be combined in a weighted fashion to yield a very broad range of statistics that characterize the dynamics.

These basic features are at the core of the existing nonequilibrium umbrella sampling (NEUS) method [9–11], which forms the starting point for our development. NEUS was originally introduced to estimate stationary averages with respect to a given, possibly irreversible, stochastic process [9]. Starting in [10, 11] it was observed that the general NEUS approach was applicable to certain dynamic averages as well. The basic NEUS approach has been been applied and further developed in subsequent articles [12–15] and in the Exact Milestoning scheme [16], which was derived from the Milestoning method [17] but is very similar in structure to NEUS. At its most basic level, NEUS relies on duplication of states in rarely visited regions of space and subsequent forward evolution of the duplicated states. In this way it is similar to a long list of so-called “trajectory splitting” techniques [18–26] that are also able to compute averages of dynamic quantities. Like NEUS, splitting techniques also often involve a decomposition of state space into regions. Unlike NEUS however, in most splitting techniques bias is removed through the use of a separate weight factor for each individual sample (rather than for an entire region), and the computational effort expended in each region is not controlled directly. What makes the NEUS method unique among splitting techniques is that it is also a trajectory stratification strategy.

Our goal in this article is to provide a clear and general mathematical framework for trajectory stratification that builds upon the NEUS method. In the process we clearly delineate the range of statistics that can be estimated by NEUS, including more general quantities than previously computed. Our analysis of the underlying mathematical structure of US [27, 28] has already facilitated the derivation of a central limit theorem for US and a detailed understanding of its error properties. Here, our framework reveals unanticipated connections between the equilibrium and nonequilibrium US methods and places the nonequilibrium algorithm within the well-studied family of stochastic approximation methods [29]. The analysis leads to a practical scheme that departs dramatically from currently available alternatives. We demonstrate the use of trajectory stratification to compute a hitting time distribution as well as to compute the expectation of a path-dependent functional that gives the relative normalization constants for two arbitrary, user-specified un-normalized probability densities.

II. A UNIFIED FRAMEWORK

In this section we present a framework that reveals the unified structure underlying umbrella sampling in both the equilibrium and nonequilibrium case. In Section II A, we review the equilibrium approach [27, 28] to introduce terminology and the central eigenproblem in a context where the analogies to traditional umbrella sampling descriptions [3, 5–8] are readily apparent. In Section II B, we present the nonequilibrium version of the algorithm and show how this interpretation results in a flexible scheme for computing dynamic averages. As for its equilibrium counterpart, an eigenproblem lies at the core of the nonequilibrium method. This eigenproblem however, involves a matrix that depends on the desired eigenvector, introducing the need for a self-consistent iteration. In Section III, we give a precise description of the fixed-point problem solved by this iteration and show that the algorithm is an example of a stochastic approximation strategy [29]. In Section IV we specialize our development to the context of steady-state averages that motivated the original development of NEUS [9].

A. Averages with Respect to a Specified Density

Our presentation in this section follows [27]. We view umbrella sampling as a method to compute averages of the form

| (1) |

where π is a known probability distribution and d is the dimension of the underlying system (e.g., the total number of position coordinates for all atoms in a molecular system). For example, π might be the canonical distribution, π(dx) ∝ e−βV (x)dx where V is a potential energy function, β is an inverse temperature, and f might be 1 on some set A and 0 elsewhere. In this case, −β−1 log ∫ f(x)π(dx) can be regarded as the free energy of the set A.

Note that in our notation π is a probability measure on and dx is an infinitesimal volume element in . If the distribution π has a density function p(x) then π(A) = ∫x∈A p(x)dx and, in particular, π(dx) = p(x)dx. This more general notation is useful when we move to our description of the nonequilibrium umbrella sampling scheme. As an aid to the reader, we choose to introduce it in the simpler setting of this section.

Consistent with traditional implementations of US [3, 6], we divide the computation of the average in (1) into a series of averages over local subsets of space. More precisely, instead of directly computing averages with respect to π, we compute averages with respect to n probability distributions, πj, each of which concentrates probability in a restricted region of space (relative to π itself) with the goal of eliminating or reducing barriers to efficient sampling associated with π. So that general averages with respect to π can be assembled, the πj satisfy for a set of weights zj to be defined in a moment.

To obtain the restricted distributions πj we can set

| (2) |

where the ψj are non-negative user defined functions satisfying for all x (this last requirement is relaxed in [27]). For example, one might choose , where the Aj are a collection of sets covering the space to be sampled, and, for any set Aj, the function is 1 if x ∈ Aj and 0 otherwise.

Note that is satisfied with

| (3) |

and that the average (1) with respect to π can be reconstructed using the equation

| (4) |

with

| (5) |

Here zj is the statistical weight associated with each distribution πj and 〈f〉j are the averages of the observable f against πj. From (4) we see that if we can sample from the πj and compute the zj then we can compute averages with respect to π. Since πj is known explicitly in this case, it can be sampled by standard means (e.g., Langevin dynamics or Metropolis Monte Carlo [3]).

Our key observation underpinning the equilibrium umbrella sampling method is that the zj themselves are functions of averages with respect to the local distributions πj:

| (6) |

where

| (7) |

The matrix F is stochastic (i.e., has non-negative entries with rows that sum to 1) and (6), which is written in matrix-vector form as

| (8) |

is an eigenproblem that can be solved easily for the vector z.

We now have a stratification scheme for computing the target average in (1) by sampling from the distributions πj. Operationally, the main steps are as follows.

Assemble F defined in (7) (or the alternative in Appendix A below) and 〈f〉j defined in (5) by sampling from πj defined in (2).

Solve the eigenvector equation (8) for z defined in (3).

Compute the desired expectation via (4).

The efficiency of this equilibrium US scheme has been analyzed in detail elsewhere [27, 28]. Roughly, the benefit of US is due to the facts that averages with respect to the πj are often sufficient to solve for all desired quantities, and one can choose ψj so that averages with respect to the πj converge much more quickly than averages with respect to π itself. It is this basic philosophy that we extend in Section II B to the computation of dynamic averages.

B. Averages with Respect to a Given Markov Process

The mathematical description of the nonequilibrium umbrella sampling scheme that follows reveals how the stratification strategy developed for the equilibrium case in Section II A can be extended to compute nearly arbitrary dynamic statistics. Our interest in this section is computing averages over trajectories of some specified Markov process, X(t). This process can be time-inhomogenous, i.e., given the value of X(t), the distribution of X(t+1) can depend on the value of t. We compute averages of trajectories evolved up to a first exit time of the process (t,X(t)) from a user specified set of times and positions, D—i.e., trajectories terminate when they first leave the set D. We consider averages over trajectories of X(t) run until time

| (9) |

for a set . In the first numerical example in Section V, D is a set of times and positions for which we would like to compute an escape probability. In the second numerical example, D restricts only the times over which we simulate. The averages are of the form

| (10) |

We note that the average in (10) is not completely general, in order to streamline the developments below. Without any modification, we can compute averages similar to (10) but with the argument (t,X(t)) in the definitions of τ and f replaced by (t,X(t−1),X(t)). On the other hand, expectations with (t,X(t)) replaced by (t,X(t−m),…,X(t−1),X(t)) for m ≥ 2 cannot be obtained immediately. These and many more general expectations can, however, be accommodated by applying the algorithm to an enlarged process (e.g., (t,X(t−m) ,… ,X(t−1),X(t))) at the cost of storing copies of the enlarged process. For many expectations, this cost is quite manageable. Finally, we require that E[τ] < ∞. The limit τ → ∞ is considered in Section IV.

Below we show that expectations of time-dependent functions can be decomposed as a weighted sum of expectations computed over restricted subsets of the full space and, in turn, how the statistical weights can be computed as expectations over these subsets, mirroring the basic structure of the equilibrium scheme described in Section II A. However, as we discuss in Section III, the algorithm for computing these local expectations departs significantly from the equilibrium case because their form is not known a priori in the nonequilibrium setting.

1. The Index Process

The US scheme in Section II A used the basis functions ψj to stratify the sampling of the distribution π by decomposing averages with respect to π into averages with respect to the more easily sampled πj. To arrive at an analogous partitioning of state space for the nonequilibrium case, we introduce an index process J(t) that takes values in {1,2, … , n} and (roughly) labels the point (t,X(t)) in time and space, . Our objective is to generate fragments of trajectories of X(t) consistent with specific values of J(t) thereby breaking the coupled process (X(t), J(t)) into separate regions corresponding to a given value of J(t) (see panel A of Figure 1).

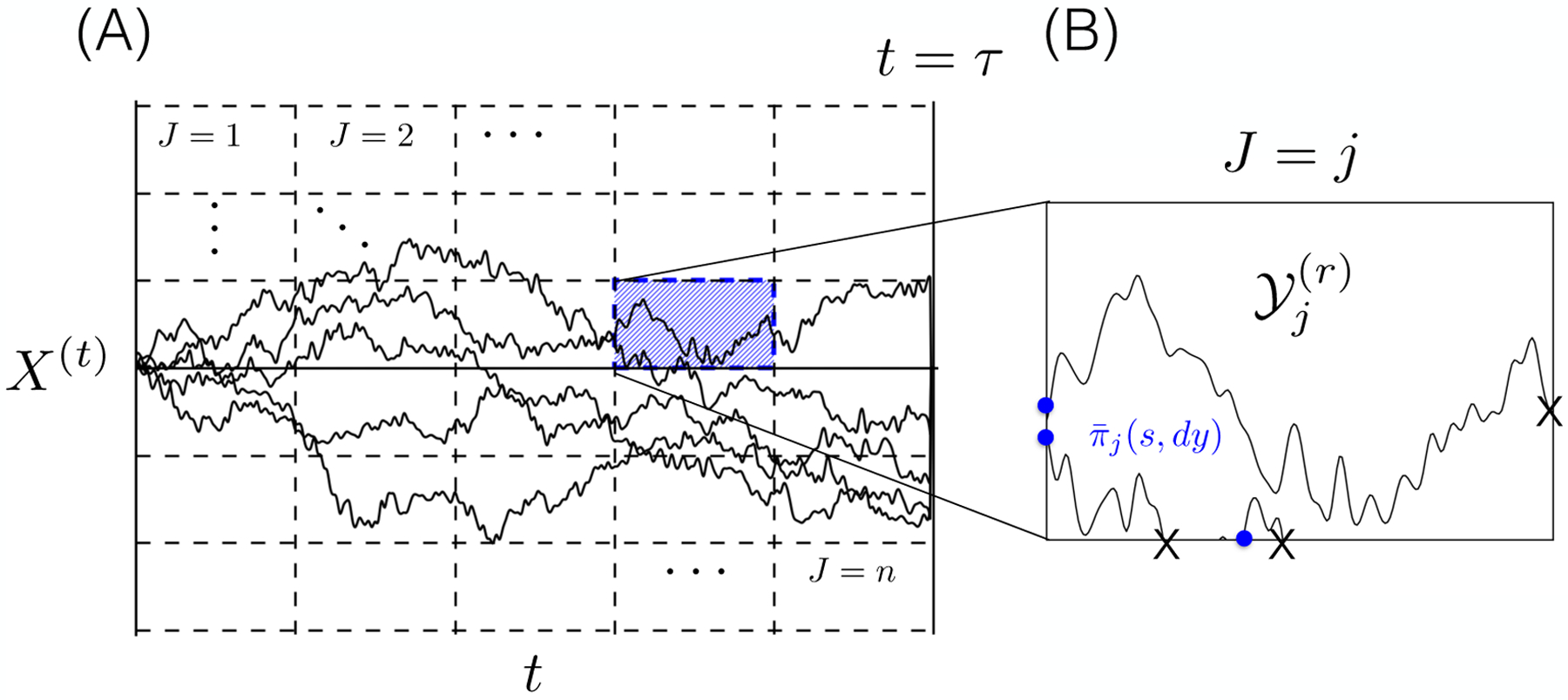

FIG. 1.

Illustration of the stratification of a process (X(t), J(t)) (solid black lines, panel A) via the scheme outlined in Section II B. (A) The restricted distributions corresponding to each value of the index process J(t) are outlined as discrete regions of the (t, X(t)) space (panel A, black dashed lines). In this depiction, the value of J(t) corresponds to the current cell containing (t, X(t)) within a rectangular grid of times and positions. (B) Each of the restricted distributions πj(t, dx) are sampled by integrating a locally restricted dynamics (panel B, black lines). The process is generated by integrating an excursion of the unbiased process (X(t), J(t)) corresponding to a particular fixed value of J = j (panel A). As each excursion transitions from J = i to J = j with j ≠ i, the dynamics are stopped and a new excursion is started at a time and point (s, y) (panel B, blue dots) drawn from the flux distribution .

The idea of discretizing a process X(t) according to the value of some user-specified index process is not new in computational statistical mechanics. For example, in our notation, given a partition of state space A1,A2,…,An, the Milestoning procedure [17] and some Markov State Modeling procedures [30] correspond to an index process that marks the pairs of sets (Ai,Aj) for i ≠ j between which X(t) last transitioned. In the Milestoning method, the pairs of sets are considered unordered, so that a transition from Aj to Ai immediately following a transition from Ai to Aj does not correspond to a change in J(t), and J(t) can assume distinct values. The original presentation of NEUS on the other hand corresponds to a process J(t) which marks the index of the set Aj containing X(t). For accurate results, the Milestoning procedure requires that the index process J(t) itself be Markovian. Even under the best circumstances, that assumption is only expected to hold approximately. It is not required by the NEUS algorithm. Our presentation below reveals the full flexibility in the choice of J(t) within NEUS. That flexibility is essential in the generalized setting of this article.

In the developments below we require that J(t) is chosen so that the joint process (X(t), J(t)) is Markovian. This assumption allows that trajectories can be continued beyond a single transition event (before τ) without additional information about the history of X(t) or J(t). We do not assume that J(t) alone is Markovian and in general it is not. Our assumption implies no practical restriction on the underlying Markov process X(t). When X(t) is non-Markovian, additional variables can often be appended to X(t) to yield a new Markov process to which the developments below can be applied. A version of this idea is applied in Section V C where we append a variable representing a nonequilibrium work to an underlying Markov process.

2. The Eigenproblem

Given a specific choice of index process J(t), the nonequilibrium umbrella sampling algorithm stratifies trajectories of X(t) according to their corresponding values of J(t). That is, for each possible value of the index process, NEUS generates segments of trajectories of X(t) between the times that J(t) transitions to and from J = j. To make this idea more precise, we need to carefully describe the distribution sampled by these trajectory fragments:

| (11) |

where

| (12) |

For each j, πj is the distribution of time and position pairs (t,X(t)) conditioned on J(t) = j and t < τ. We call the πj restricted distributions. We have reused the notations πj and zj from our account of the equilibrium umbrella sampling scheme to emphasize the analogous roles played by those objects in both sections. Note that here we are treating time as an additional random variable. Also note that in these definitions as well as in the formulas below, P and E represent probabilities and expectations with respect to the original, unbiased X(t) and J(t). We assume that zj > 0 for all j since we can remove the index j from consideration if zj = 0. The zj are all finite because , which we assume is finite.

Observe that

| (13) |

where

| (14) |

Thus we have a decomposition of (10) analogous to the decomposition of (1) in (4). Also as in the equilibrium case, the zj can be computed from averages with respect to the πj. To see this, observe that for any t we can write

| (15) |

Summing this expression over t we obtain

| (16) |

These expressions are all bounded by E[τ] and are therefore finite. Expression (16) can be rewritten as an affine eigenequation

| (17) |

where z is defined in (12),

| (18) |

and

| (19) |

Equation (17) is the analog of (8) in Section II A. Here, the matrix element Gij stores the expected number of transitions from J = i to J = j, normalized by the expected number of time steps with J = i. Note that the matrix G is substochastic; that is, it has non-negative entries and rows that sum to a number less than or equal to one.

To complete the analogy with the umbrella sampling scheme described in Section II A, we need to show that the elements of the matrix G are expressible as expectations over the πj. Indeed,

| (20) |

where Pt,x,i is used to denote probabilities with respect to X initialized at time and position (t,x) and conditioned on J(t) = i and t < τ. Note that in the first line we have appealed to the Markovian assumption on (X(t), J(t)). Had we instead assumed that J(t) alone was Markovian, we could have ignored the x dependence in (20).

Just as for the umbrella sampling algorithm described in Section II A, we arrive at a procedure for computing (10) via stratification:

Assemble Gij defined in (18) and 〈f〉j defined in (14) by sampling from the πj defined in (11).

Solve the affine eigenvector equation (17) for z defined in (12).

- Compute

via (13).(21)

Relative to the scheme in Section II A, sampling the restricted distributions πj requires a more complicated procedure. This is the subject of Section III. In Section III, instead of G, we choose to work with the matrix

| (22) |

where

| (23) |

is the time of the ℓth change in the value of J(t) for a given realization of the coupled process (X(t), J(t)). Likewise, instead of z, we choose to work with the weights

| (24) |

We show in Appendix B that is related to G by the identity

| (25) |

and that is related to z by

| (26) |

Therefore, knowledge of G implies knowledge of and , and the algorithm detailed in the next section could also be expressed in terms of G and z at the cost of additional factors of 1–Gjj in several formulas. Moreover, identities (17), (25), and (26) imply

| (27) |

that is, and solve the same affine eigenproblem as z and G. We emphasize and over G and z only to simplify the presentation and interpretation of the algorithm in Section III.

To give an appealing intuitive interpretation of , we note that for i ≠ j,

| (28) |

We refer to this quantity as the net probability flux from J = i to J = j; it is the expected number of transitions of the process J(t) from J = i to J = j before time τ. The matrix stores the relative probabilities of transitions to different values of J before time τ and is the expected number of transitions into J = j before time τ.

Finally, we remark that rapid convergence of the scheme in practice rests upon the choice of J(t). Roughly, one should choose the index process so that the variations in estimates of the required averages with respect to the πj (e.g., estimates of the Gij) are small. In practice, this requires that transitions between values of J(t) are frequent, which is the analog of selecting the biases in equilibrium US to limit the range of the free energy over each subset of state space (see [27, 28]). In Section V we describe this and other important implementation details in the context of particular applications.

III. A GENERAL NEUS FIXED-POINT ITERATION

In this section we present a detailed algorithm for computing (10) by the stratification approach outlined in Section II B. To accomplish this one must be able to generate samples from the restricted distributions πj(t, dx). In NEUS, the restricted distributions are sampled by introducing a set of Markov processes

| (29) |

called excursions whose values are triples of a time , a position , and a value of the index process . To avoid confusion, we consistently use the variable r for the time associated with an excursion and the variable t for the time associated with the process (t,X(t), J(t)).

Roughly speaking, each excursion is a finite segment of a trajectory of the process (t, X(t), J(t)) with J = j. These segments are stopped either on reaching time τ or at the first time when J ≠ j. To be precise, excursions are generated as follows:

Draw an initial time and position pair from the distribution specified below or from an estimate of that distribution. Set .

Set , and generate from the distribution of conditioned on and .

Stop on reaching time τ or when J ≠ j. That is, stop when r reaches

| (30) |

The excursions are illustrated in Figure 1 for a particular choice of index process.

For the excursions to sample the restricted distribution πj(t, dx), we must take the initial distribution to be the distribution of times s and positions y at which the process (t,X(t),J(t)) transitions from a state J(s−1) = i with i ≠ j to state J(s) = j (see Section III A and Appendix C). We call these distributions the flux distributions.

In general, the flux distributions are not known a priori and must be computed approximately. In the NEUS algorithm, we begin with estimates of the flux distributions and the matrix . We then compute excursions initialized from these estimates of the flux distributions. From the excursions and the current estimate of , we compute statistics which are used to improve the estimates of both the flux distributions and . Thus, NEUS is an iteration designed to produce successively better estimates of the flux distributions and simultaneously.

In Section III B, we derive a fixed-point equation solved by and the flux distributions, and we motivate NEUS as a self-consistent iteration for solving this equation. In Section III C, we describe the complete NEUS algorithm in detail and interpret it as a stochastic approximation algorithm [29] for solving the fixed-point equation derived in Section III B. In the Supplementary Material, we analyze a simple four-site Markov model to clearly illustrate the structure of this self-consistent iteration and the terminology of the framework.

A. The Flux Distributions

Before deriving the fixed-point problem and the corresponding stochastic approximation algorithm, we define the flux distributions precisely. We let

| (31) |

be the distribution of time and position pairs conditioned on . With this definition of , an excursion samples the restricted distribution πj(t,dx) in the sense that

| (32) |

where

| (33) |

and ρj is defined in (30). We prove (32) in Appendix C.

Given (32), we may express any average over πj as an average over . For example,

| (34) |

Moreover, from (13), we can express general averages as

| (35) |

where

| (36) |

We use these facts in our interpretation of the NEUS algorithm in Section III B.

Instead of working directly with the flux distributions, we find it convenient to express both the fixed-point problem and the algorithm in terms of the probability distribution of time and position pairs (t, X(t)) conditioned on observing a transition from J = i to J = j at time t, i.e., in terms of

| (37) |

which is defined only for s > 0. To simplify notation, we let γ denote the set of all conditional distributions γij. Recall from (28) that is the net probability flux from J = i to J = j. The following simple but key identity relates γ to the flux distributions :

| (38) |

The s > 0 term is the contribution from transitions into state J = j from the neighboring state J = i, and the s = 0 term accounts for the initial t = 0 contribution of the underlying process when J = j. We emphasize that both the fixed-point problem and the iteration that we define below could be expressed in terms of the flux distributions instead of γ. We choose to express them in terms of γ because the resulting formalism more naturally captures the implementation of the method used to generate our numerical results in Section V.

B. The Fixed-Point Problem

We now derive the fixed-point problem. Our goal is to find an expression of the form

| (39) |

that characterizes the desired matrix and collection of probability measures γ as the fixed-point of a pair of maps and that take as arguments approximations of and of γ and return, respectively, a new substochastic matrix and a new collection of probability measures.

To this end, we define a function mapping and to an approximation of the flux distribution . We denote this function by the corresponding capital letter . Based on (27) and (38), we define

| (40) |

where solves the equation . The matrices that we consider are strictly substochastic. We assume that is also irreducible, in which case the solution exists and is unique. To motivate the definition above, we observe that for the exact values and γ, by (38). Moreover, given and samples from , one can generate samples from ; see Section III C. This is crucial in developing a practical algorithm to solve the fixed-point problem.

At this point we are ready to define the functions and Γ appearing in (39) above. For a substochastic matrix and a collection of probability distributions , define the substochastic matrix

| (41) |

and the collection of probability distributions

| (42) |

Because , expressions (34) and (37) imply that and , establishing our fixed-point relation (39).

Having fully specified the fixed-point problem, we can now consider iterative methods for its solution. One approach would be to fix some ε ∈ (0,1] and compute the deterministic fixed-point iteration

| (43) |

given initial guesses and for G and γ, respectively. One would typically choose ε = 1 in this deterministic iteration; we consider arbitrary ε ∈ (0,1] to motivate the stochastic approximation algorithm developed in Section III C.

In practice, computing and Γ in the right hand side of (43) requires computing averages over trajectories of (X(t), J(t)) initiated from . While we cannot hope to compute these integrals exactly, we can construct a stochastic algorithm approximating the iteration in (43) using a finite number of sampled trajectories. The resulting scheme, which we detail in Section III C, fits within the basic stochastic approximation framework.

C. A Stochastic Approximation

In this section, we present the full NEUS algorithm and we interpret it as a stochastic approximation algorithm analogous to the deterministic fixed-point iteration (43). In NEUS, as in the fixed-point iteration, we generate a sequence of approximations and , converging to and γ, respectively. During the mth iteration of the NEUS algorithm, we update the current approximations and based on statistics gathered from K independent excursions defined according to the rules governing enumerated above with drown from , the current (at the mth iteration of the scheme) estimate of the flux distribution .

We now state the NEUS algorithm. To simplify the expressions below, we sometimes omit the iteration number m. The algorithm proceeds as follows:

Choose initial approximations and of and γ, respectively. Fix the number K of independent excursions to compute for each restricted distribution πj(t,dx). Choose the maximum number of new points L included in the update to the empirical approximations of the distributions .

-

For each j = 1,2, … , n generate K independent excursions

(44) Let

be the length of the excursion as in (30).(45) - Let

be the number of i to j transitions of the index process observed while generating the excursions . Let and be the times and positions for which and .(46) - Compute

(47)

and(48)

where L ∧ Mij(m) = min{L,Mij(m)}. In Equation (48), δx represents the Dirac delta function centered at position x.(49) - Replace the deterministic iteration (43) by the approximation

and(50)

where(51)

and εm > 0 satisfies(52) (53) - Update the expectations

(54) - Once the desired level of convergence has been reached, compute

where the vector solves .(55)

We now interpret NEUS as a stochastic approximation algorithm analogous to the deterministic fixed-point iteration (43). First, we observe that approximates in the following sense. Suppose we were to compute a sequence , as in NEUS, except holding the values of and fixed. We would then have that , and that each of the were independent (conditionally on and ). A Law of Large Numbers would therefore apply and we could conclude that

| (56) |

The distribution γij(m) approximates in a similar sense. Therefore, the NEUS iteration (50) is a version of the deterministic fixed-point iteration (43) but with a shrinking sequence εm instead of a fixed ε and with random approximations instead of the exact values of and Γ. The conditions (53) on the sequence εm are common to most stochastic approximation algorithms [29]; they ensure convergence of the iteration when and Γ can only be approximated up to random errors.

We remark that in practice the empirical measures are stored as lists of time and position pairs. The update in (50) allows the number of pairs stored in these lists to grow with each iteration. This can lead to impractical memory requirements for the method. We therefore limit the size of each list to a fixed maximum value by implementing a selection step in which the points that have been stored for the most iterations are removed to make room for the points in the updates of when this maximum is exceeded. Also, in our numerical experiments in Section V, we use εm = 1/(m+1) in which case,

| (57) |

and

| (58) |

This and other details of our implementation are explained in Section V.

The implementation detailed above borrows ideas from several earlier modifications of the basic NEUS algorithm. The use of a linear system solve for the weights z was introduced in [11]. In the scheme presented above, the number of samples, K, of the process is fixed at the beginning of each iteration of the scheme. In this aspect, the implementation above is similar to the Exact Milestoning approach presented in [16]. With the number of samples of fixed, the total amount of computational effort, as measured in number of time steps of the process X(t), becomes a random variable (with expectation KE[σ(S(ℓ))]). In practical applications, it may be advantageous to fix the total computational effort expended per iteration in each J = j. An alternative version of the NEUS scheme is therefore to fix the total computational effort expended (or similarly the number of numerical integration steps) and allow the number of samples, K, to be a random number. In our tests (not shown here), neither implementation showed a clear advantage provided that a sufficient number of samples, K, was generated to compute the necessary transition statistics.

It is also important to note that if the number of points used in the representation of is restricted (as it typically has to be in practice), any of the implementations of NEUS that we have described has a systematic error that decreases as the number of points increases or as the work per iteration increases. Earlier implementations of NEUS [9–12, 14] computed transition statistics that were normalized with respect to the simulation time spent associated with each J = j rather than the number of samples of generated. This implementation choice leads to a scheme with a systematic error that vanishes only as the number of points allowed in the representation of grows, regardless of the work performed per iteration.

IV. ERGODIC AVERAGES

In this section we consider the calculation of ergodic averages with respect to a general (not necessarily time-homogenous) Markov process. We also describe the simplifications that occur when the target Markov process is time-homogenous as in the original NEUS algorithm.

In order to ensure that the definitions in this section are sensible, we require that

| (59) |

exists as a probability distribution on and let

| (60) |

This general ergodicity requirement allows processes X(t) with periodicities or time dependent forcing.

Our goal is to compute ergodic averages of the form

| (61) |

To that end, we fix a deterministic time horizon τ > 0 in (12) and (18); the condition t < τ can thus be written as an upper bound of τ − 1 on the summation index. If we divide both sides of (17) by τ and take the limit τ → ∞, we obtain the equation

| (62) |

where now

| (63) |

and

| (64) |

Note that the matrix G is now stochastic and that . We can rewrite the ergodic average of f as

| (65) |

where

| (66) |

and we represent the large τ limit of the position marginal distribution of πj defined in (11) as

| (67) |

These formulas indicate that the only modification of the algorithm in Section III that is required to compute a long-time average is to set τ = ∞ in the definition of the processes , to set a = 0 in (40), and let solve with . In other words, the algorithm seamlessly transitions from solving the initial value problem to solving the infinite time problem as τ becomes large.

When the joint process (X(t), J(t)) is time-homogenous and stationary and our goal is to compute the average of a position dependent observable f(x) with respect to the stationary distribution π of X(t), the above relations can be further simplified. In this case,

| (68) |

where zj defined in (63) becomes

| (69) |

The matrix G in (64) can now be written

| (70) |

and the vector 〈f〉j defined in (66) becomes

| (71) |

These simplifications lead to a version of the original NEUS method [9] that employs a direct method for solving for the weights similar to the scheme in [11].

In [11] and [10] the basic NEUS approach was extended to the estimation of transition rates between sets for a stationary Markov process. Implicit in this extension was the observation that any algorithm that can efficiently compute averages with respect to the stationary distribution of a time-homogenous Markov process can be applied to computing dynamic averages more generally by an enlargement of the state space, i.e., by applying the scheme to computing stationary averages for a higher dimensional time-homogenous Markov process. This idea is also central to Exact Milestoning [16], which extends the original Milestoning procedure [17] to compute steady-state averages with respect to a time-homogenous Markov process and is very similar in structure to steady-state versions of NEUS.

V. NUMERICAL EXAMPLES

Here we illustrate the flexibility of the generalized algorithm with respect to both the means of restricting the trajectories (the choice of the J(t) process) and the averages that can be calculated. Specifically, in Section V A we discuss our choice of the J(t) process. In Section V B we show how finite-time hitting probabilities can be calculated by discretizing the state space according to both time and space. In Section V C we show how free energies can be obtained by discretizing the state space according to time and the irreversible work.

A. One Choice of the J(t) Process

Rapid convergence of the scheme outlined in Section III rests on the choice of J(t). Perhaps the most intuitive choice is

| (72) |

where the subsets A1, A2, … , An partition . Indeed, earlier steady-state NEUS implementations [9–12, 14] employed an analogous rule using a partition of the space variable (the time variable was not stored or partitioned). However, even with an optimal choice of the subsets A1, A2, … , An, (72) has an important disadvantage: in many situations, X(t) frequently recrosses the boundary between neighboring subsets Ai and Aj, which slows convergence. Fortunately, there are many alternative choices of J(t) that approximate the choice in (72) while mitigating this issue. We give one simple and intuitive alternative which we use in the numerical examples that follow.

Let ψj be a set of non-negative functions on for which . The ψj are generalizations of the functions in that they serve to restrict trajectories to regions of state space. In practice, given a partition of space A1, A2, … , An, the ψj can be chosen to be smoothed approximations of the functions Given a trajectory of X(t), the rule defining J(t) is as follows. Initially, choose J(0) ∈ {1, 2, … , n} with probabilities proportional to {ψ1(0, X(0)), ψ2(0, X(0)), … , ψn(0, X(0))}. At later times J(t) evolves according to the rule

If then J(t) = J(t−1).

Otherwise sample J(t) independently from {1, 2, … , n} according to probabilities {ψ1(t, X(t)), ψ2(t, X(t)), …, ψn(t, X(t))}.

While transitions out of J(t) = i occur when X(t) leaves the support of ψi, transitions back into J(t) = i can only occur outside of the support of ψj. Thus, this transition rule allows one to separate in space the values of X(t) at which J(t) transitions away from i from those where J(t) transitions into i, mitigating the recrossing issues mentioned above.

In our examples, we discretize time and only one additional “collective variable” (a dihedral angle in Section V B and the nonequilibrium work in Section V C). Here we denote the collective variable by ϕ, and we discretize it within some interval of values [a,b] (though it may take values outside this interval). In both examples [a, b] is evenly discretized into a set of points for some integer mϕ. Letting ϕj be any of the points in that discretization, we set

| (73) |

where Δϕ is some fixed value controlling the width of the support of ψj, and the indicator 1[a,b] restricts the terminal functions. Recall that the ψj are required to sum to 1. We choose and to equally divide the interval [0,τ), where, in our examples, τ is a fixed time horizon. The function ψj is largest when and ϕ(x) = ϕj. The supports of the various ψj correspond to products of overlapping intervals in the ϕ variable, but non-overlapping intervals in time. The fact that ψj depends on time is essential in our examples.

B. Finite-Time Hitting Probability

In this section we compute the probability, PBA(τmax), of hitting a set B before a separate set A and before a fixed time τmax > 0 given that the system is at a point X(0) ∉ A ∪ B at time t = 0. In the case where X(0) and B are separated by a large free energy barrier while X(0) and A are not, computing PBA(τmax) can be challenging since trajectories that contribute to PBA(τmax) are rare in direct simulations. To compute PBA(τmax) via the scheme in Section III C, we let the stopping time τ be the minimum of τmax and the first time, t, at which X(t−1) is in either A or B, i.e., τ – 1 = min{τA, τB, τmax − 1} where τA and τB are the first times that enters the sets A and B respectively. Strictly speaking, to write τ in the form in (9), we need to replace (t,X(t)) in that equation by (t,X(t−1),X(t)). The set D corresponding to our choice of τ is then D = {(t, x, y): t < τmax, x ∉ (A ∪ B)}. As we have already mentioned, this can be done without further modification of the scheme. Then f(t, X(t)) in (10) is

| (74) |

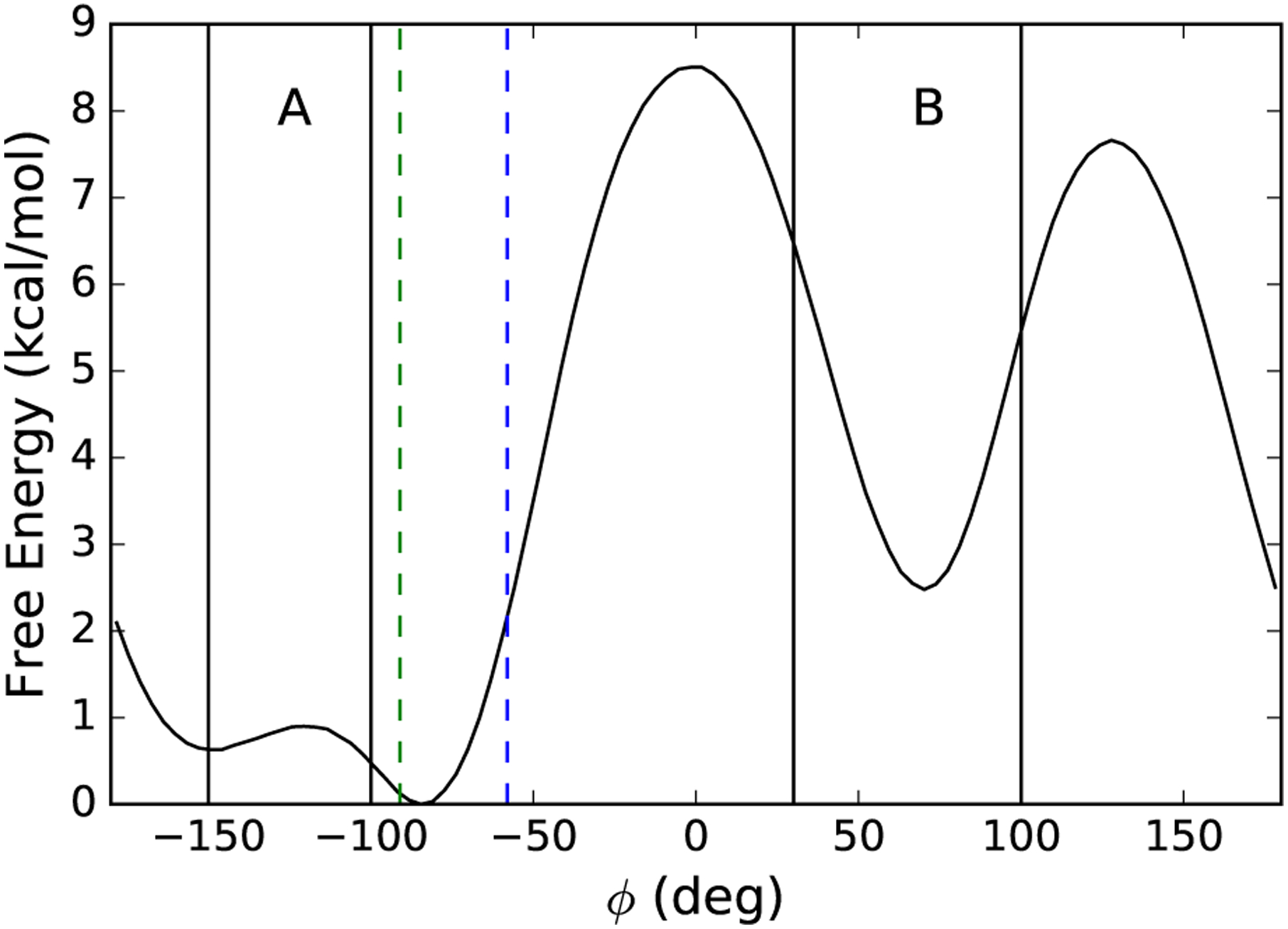

The system that we simulate is the alanine dipeptide (CH3-CONH-CαH(CβH3)-CONH-CH3) in vacuum modeled by the CHARMM 22 force field [31]. We use the default Langevin integrator [32] implemented in LAMMPS [33], with a temperature of 310 K, a timestep of 1 fs and a damping coefficient of 30ps−1. The SHAKE algorithm is used to constrain all bonds to hydrogens [34]. We consider the system to be in set A if −150° < ϕ < −100° and in set B if 30° < ϕ < 100° (Figure 2). We discretize time into intervals of tend−tstart = 103 time steps with a terminal time of τmax = 104 time steps. We use the rule outlined in Section V A for the evolution of J(t) with the ψj of the form in (73). The ϕj in (73) are chosen from the set {−100°,−74°,−48°,−22°,4°,30°} with [a, b] = [100°, 30°] and Δϕ = 20°.

FIG. 2.

Free energy (black curve) of the alanine dipeptide projected onto the ϕ dihedral angle, with sets A and B indicated. The initial positions of X(0) at ϕ = −58.0° (blue) and ϕ = −91.0° (green) are shown as vertical dashed lines. The free energy is computed from the method presented in Section II A as implemented in [27].

We generate the initial point X(0) by running an unbiased simulation at 310 K and choosing a single point X(0) between the sets A and B. The vector a defined in (19) is

| (75) |

Note that the initial condition at J(0) can be drawn from an ensemble of configurations with minimal changes to the algorithm, but we restrict our attention to the initial condition consisting of a single point. To evaluate the performance of the algorithm in Section III C, we choose two points from our direct simulation, one at ϕ = −58.0° and one at ϕ = −91.0°. The former is chosen to allow the NEUS results to be compared with results from unbiased direct simulations, while the latter provides a more challenging test because PBA becomes small when X(0) is close to A.

We set K = 100 and L = 1 and perform a total of 104 iterations (about 7.2 μs of dynamics) of the scheme in Section III C for each starting point. Each step of the process corresponds to 10 time steps of the physical model. The are represented as lists of time and position pairs with associated weights. We cap the maximum size of those lists at 25 entries. If by the following. With probability aj/zj, set S = 0 and select Y from P[X(0) ∈ dy|J(0) = j], or with the remaining probability select an index I proportional to the flux and then select (S,Y) from the list of weighted samples comprising . For each j we compute where MjB is the total number of transition events of into B observed after m iterations (mK is the total number of excursions in state j after m iterations). The estimate of PBA(τmax) after m iterations is then computed as .

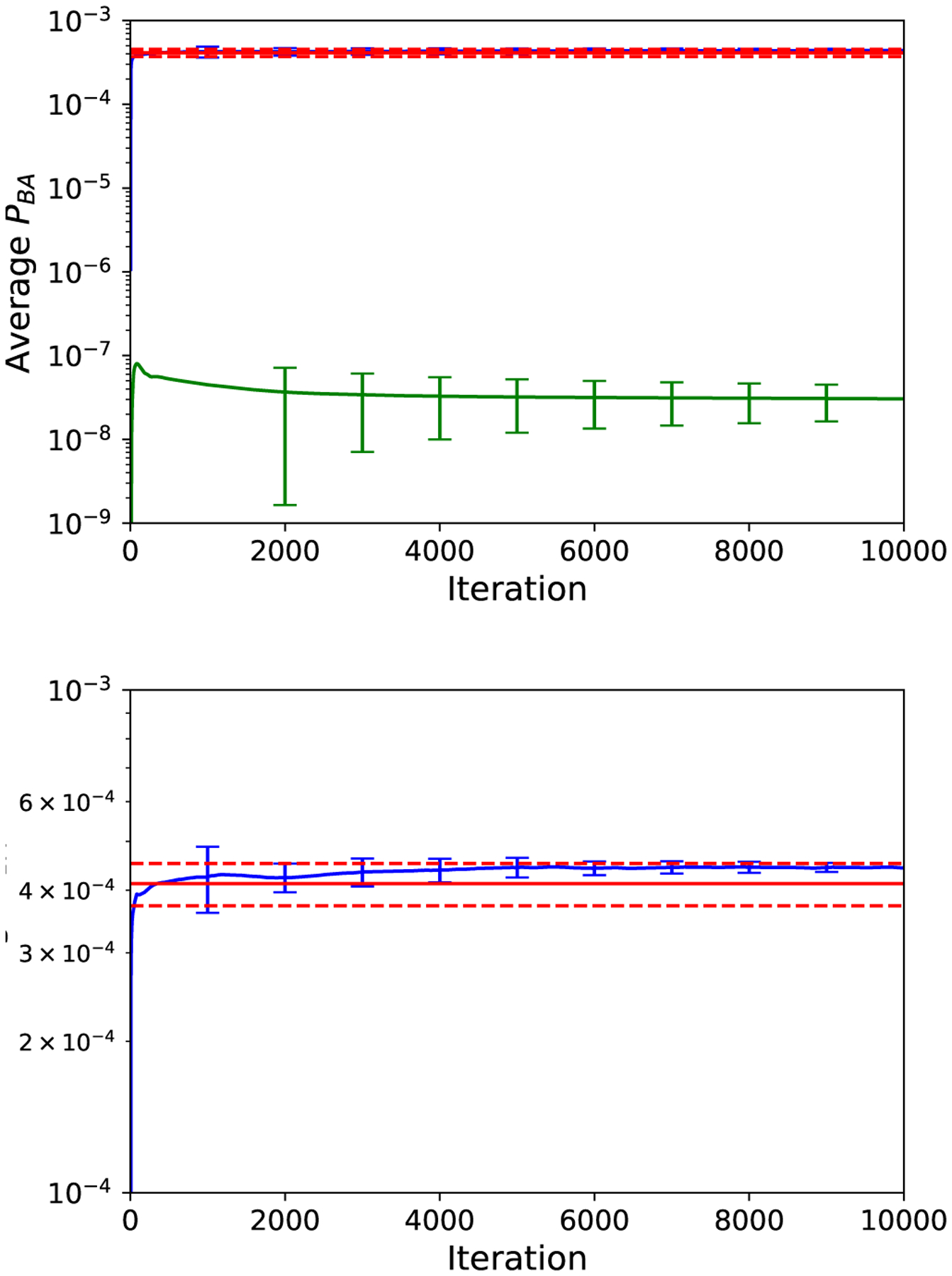

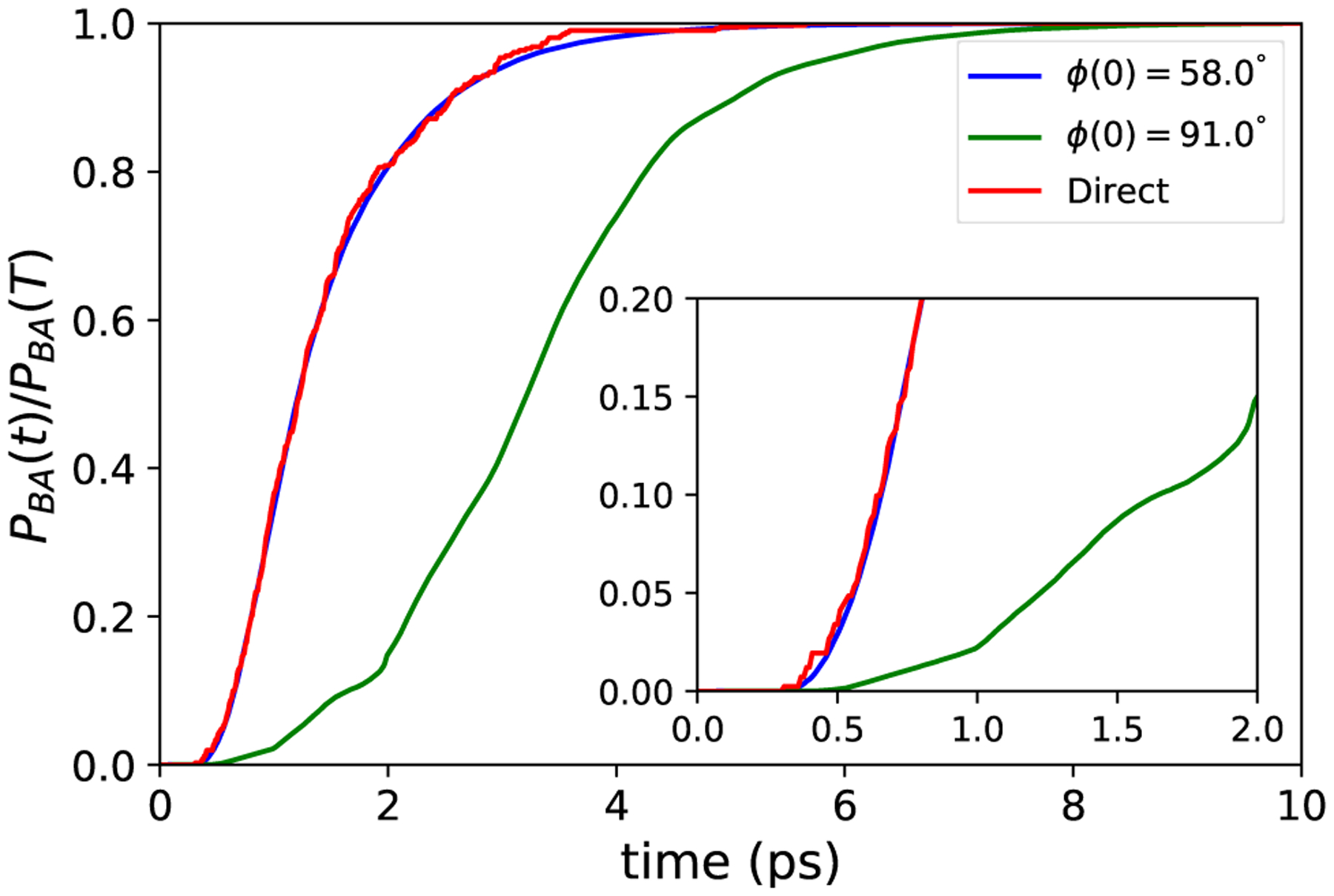

To assess the efficiency of the trajectory stratification, we also estimate PBA(τmax) by integrating an ensemble of n = 106 unbiased dynamics trajectories for τmax time steps from the initial point X(0). In this case, PBA(τmax) ≈ NB/N, where NB is the number of trajectories that hit set B before set A. To assess the accuracy of the NEUS result, we perform 10 independent NEUS calculations. In each NEUS simulation, we estimate the value of PBA as the average over the final 1000 iterations of each simulation and compute the mean of this estimate over 10 independent NEUS simulations. We obtain PBA(τmax) ≈ 4.43 × 10−4 from NEUS and PBA(τmax) ≈ 4.12 × 10−4 from direct simulation for the starting point at ϕ = −58.0° (Figure 3). In this case, the NEUS result is within the 95% confidence interval [3.72 × 10−4, 4.52 × 10−4] (estimated as , where p is the estimate of PBA from the direct simulation) for the direct simulation estimate given the number of samples. We obtain PBA(τmax) ≈ 2.78 × 10−8 from NEUS for the starting point at ϕ = −91.0°, consistent with the fact that none of the unbiased trajectories reached B before A in this case. From the same data (for either NEUS or direct simulation), one can easily assemble estimates of PBA(t) for any t ≤ τmax by counting only those transitions into B that occur before t time steps. Up to a normalization, PBA(t) is the cumulative distribution function for the time that it takes X(t) to enter B conditioned on not entering A. Estimates of this cumulative distribution function compiled from the NEUS and direct simulation data are plotted in Figure 4. The NEUS results show excellent agreement with the results from the direct simulation.

FIG. 3.

Running estimate of PBA from NEUS for dynamics starting at ϕ = −58.0° (blue, upper curve; error bars are computed every 1000 iterations and indicate where s is the standard error estimated from n = 10 independent NEUS simulations) compared to the final result from direct simulation (red solid line; dashed lines indicate , where n = 106 is the number of physically weighted trajectories generated and p is the estimate of PBA from the direct simulation). Also shown is the estimate from NEUS for dynamics starting from ϕ = −91.0° (green, lower curve; error bars computed similarly as the blue curve). The estimate at each iteration is computed as the average of the previous 1000 iterations. Lower panel is a magnification of the upper panel.

FIG. 4.

Estimate of the cumulative distribution function of the time to enter set B conditioned on not entering A from NEUS for the dynamics starting at ϕ = −58.0° (blue) and ϕ = −91.0° (green) compared to the result from the direct simulation (red). (Inset) The early time portion is shown. The estimate from each NEUS simulation at each time is computed as an average over the last 1000 iterations of the calculation and then averaged over 10 independent NEUS simulations.

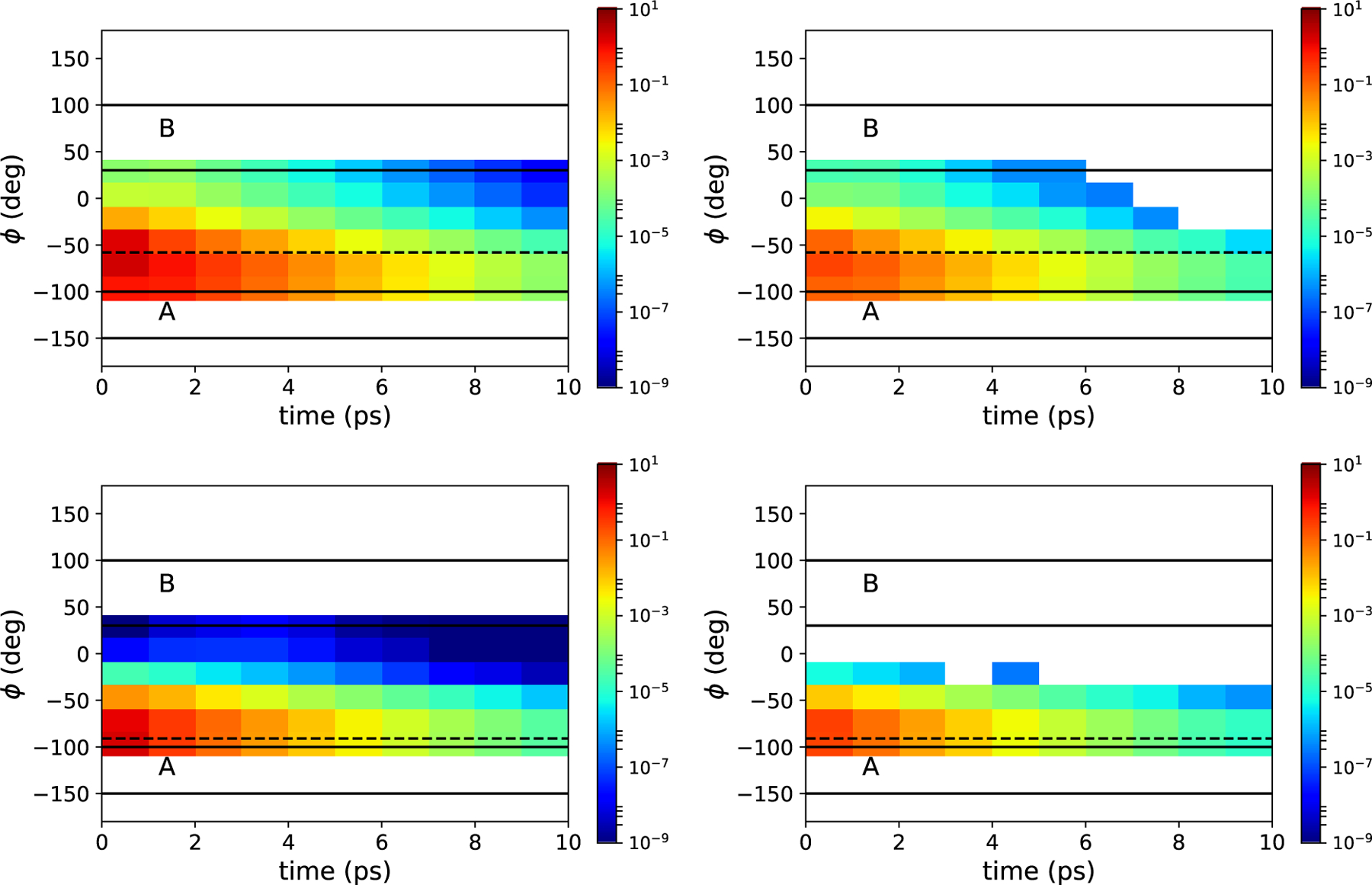

Spatiotemporal plots of the weights computed from the converged NEUS calculations and the direct simulations are shown in Figure 5. For both starting points, the stratification scheme is able to efficiently sample events with weights spanning 12 orders of magnitude. When X(0) is close to the boundary of set A, accurate estimation of the very small probability PBA(τmax) depends sensitively on the ability to realize a set of very rare trajectories, ruling out the use of direct simulation.

FIG. 5.

Estimates of the subset weights from NEUS (left) and direct simulations (right). Upper panels show the dynamics starting from ϕ = −58.0° (dashed line) and lower panels show the dynamics starting from ϕ = −91.0° (dashed line). White space represents subsets which were not sampled.

C. Free Energy Differences via the Jarzynski Equation

In this section, we show how a specific choice of the J(t) process enables us to stratify a path-dependent variable, specifically, the accumulated work appearing in the Jarzynski equation [8, 35]. For a statistical model defined by a density proportional to exp[−V (x)] (e.g., V (x) is a potential function or a log-likelihood), the normalization constant is Q = ∫ e−V (x)dx. In fields ranging from statistics to chemistry, a ratio of normalization constants is often used to compare models [36, 37]. Subject to certain conditions [35, 38], the Jarzynski equation relates the ratio of normalization constants to an average over paths of a time-dependent process, X(t):

| (76) |

where

| (77) |

and we refer to ΔF = −log(Qt/Q0) as the free energy difference. For example, for a small time discretization parameter, dt, a suitable choice of dynamics is

| (78) |

where ξt is a standard Gaussian random variable and X(0) is drawn from p0 ∝ exp[−V (0,x)].

Formula (76) suggests a numerical procedure for estimating free energy differences in which one simulates many trajectories of X(t), evaluates the work W(t) for each, and then uses this sample to compute the expectation on the right hand side of (76) approximately. This approach has been particularly useful in the context of single-molecule laboratory experiments [39, 40]. A well-known weakness of this strategy in the fast-switching (small t) regime is large statistical errors result from the fact that low-work trajectories contribute significantly to the expectation but are infrequently sampled [39, 41–44].

The quantity that we seek to compute is the free energy difference between a particle in a double-well potential that is additionally harmonically restrained with spring constant k = 20 near x = −1 and a particle in the same potential restrained near x = 1. The model is adapted from the one presented in [36]. Setting τ = 501, for t < τ we define

| (79) |

where dt = 0.001. We show V(0, x), V(τ – 1, x) and V (x;k = 0) in Figure 6. The process X(t) evolves according to (78).

FIG. 6.

V (0, x) (blue) and V (τ−1, x) (green) for the switching process used to compute Jarzynski’s equality. For reference, the potential with k = 0 (black) is also shown.

The reader may be concerned that the expectation in (76) is not immediately of the general form in (10) suitable for an application of NEUS. We apply NEUS as described in Section II B to the augmented process Z(t) = (X(t), W(t)). To compute the expectation of the left hand side of (76) via NEUS, we compute the expectation in (10) with

| (80) |

The index process J(t) marks transitions between regions of the time t and accumulated work W(t) variables. We discretize the work space in overlapping subsets using the pyramid form in (73). We use 100 subsets with centers evenly spaced on the interval [−35.0,35.0] with a width of Δϕ = 0.6. We discretize time into 5 discrete nonover-laping subsets every 100 time steps for a total of 500 subsets. We cap the maximum size of the list representation of at 50 entries using the same scheme as in Section V B.

To assess the accuracy of the NEUS result, we perform 10 independent NEUS simulations. For both NEUS and direct simulations, we prepare an ensemble of 1000 starting states X(0) by performing an unbiased simulation with fixed potential V (0, x) for 106 steps, saving every 1000 steps. The direct fast-switching simulations start from each of these points and comprise 500 steps of integration forward in time; each trajectory contributes equally to the left hand side of (76). For the NEUS simulations, the vector a is constructed as in (75), and trajectories are initialized at J(0) by drawing uniformly from this ensemble. We set K = 100 and L = 1, and we perform 500 iterations. Each step in K corresponds to a single step of (78). As in Section V B, we sample only in the restricted distributions where there is at least one point stored in from which to restart the dynamics.

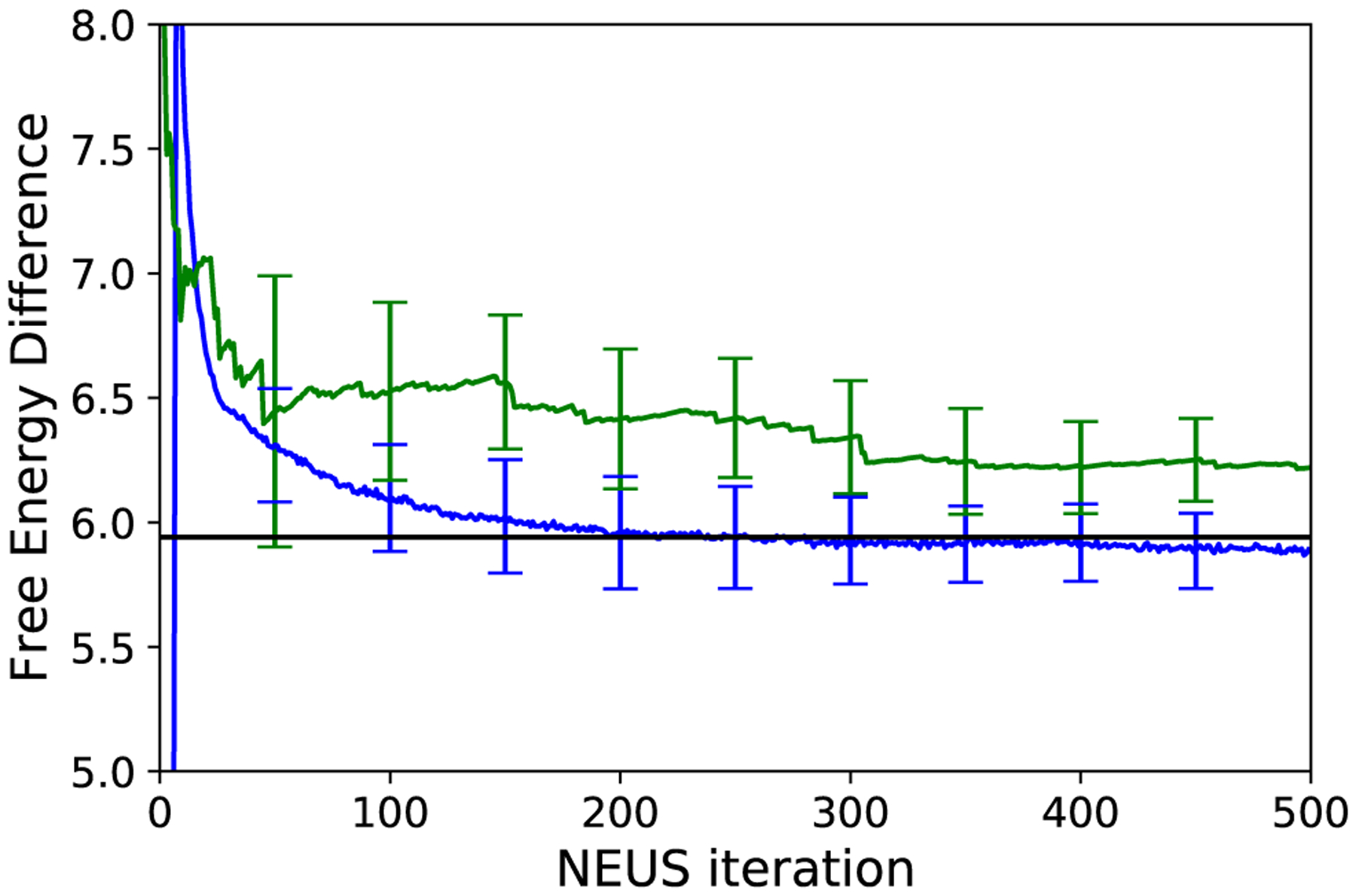

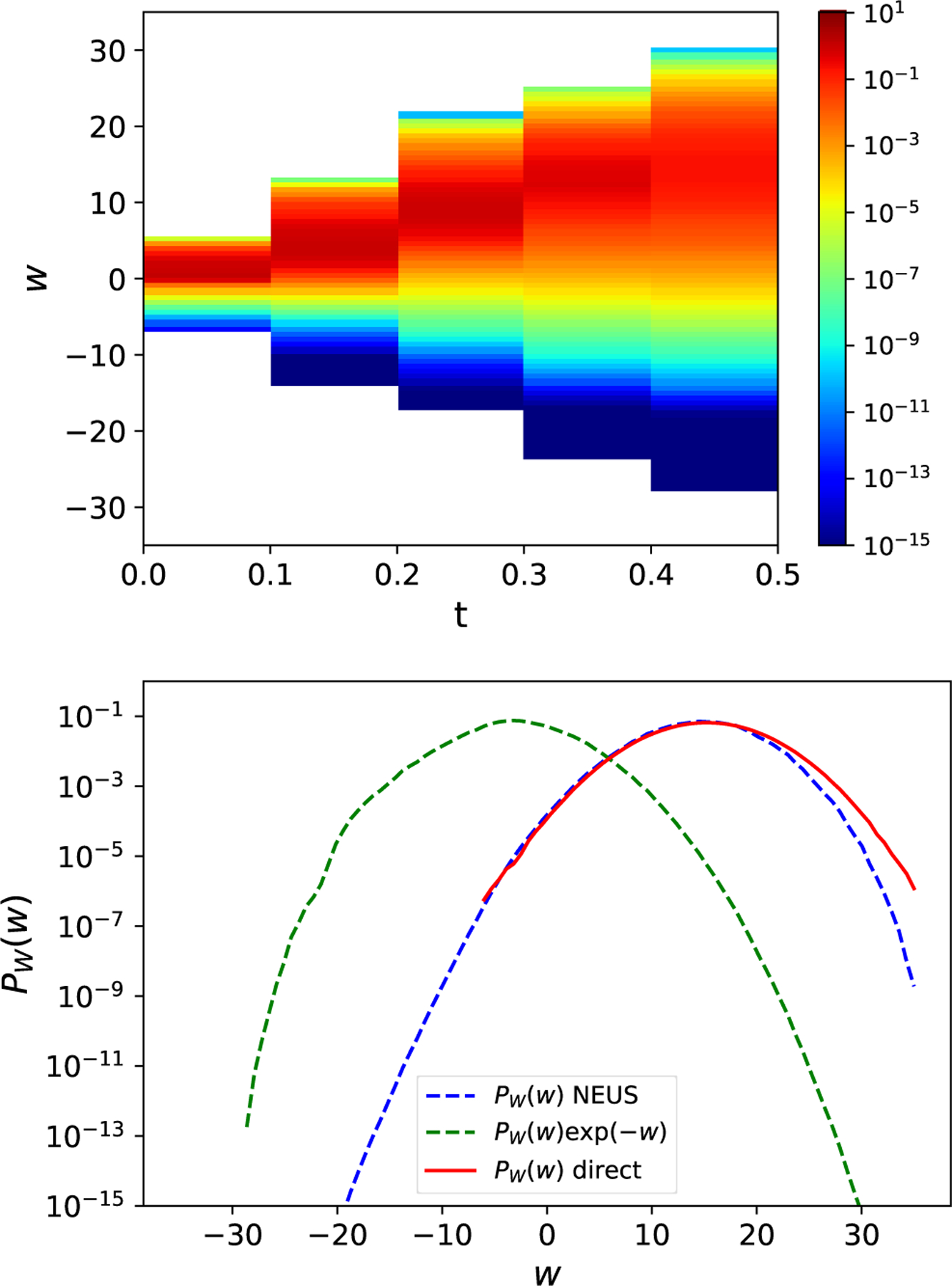

The estimated ΔF produced from data generated in the last 50 iterations of NEUS is 5.89 (the units are chosen to absorb temperature factors above), which is in excellent agreement with the reference value of 5.94, in contrast to the estimate from direct simulation (Figure 7). The left panel of Figure 8 shows the weights along the time and work axes. In the right panel of Figure 8 we plot histogram approximations of the density PW(w) of W(τ−1) along with the weighted density proportional to PW(w)exp(−w). The separation of the peaks of this distribution highlight how NEUS is able to effectively sample the low work tails that contribute significantly to the expectation in the Jarzynski relation in (76) but are rarely accessed by the switching procedure in the unbiased simulations.

FIG. 7.

Estimate of the free energy computed from NEUS (blue; error bars are computed every 50 iterations and indicate where s is the standard error estimated from n = 10 independent NEUS simulations) and from conventional fast-switching simulations (green; error bars are computed every 50 iterations and indicate where s is the standard error estimated from n = 10 independent direct simulations). The value computed from numerically integrating the potentials is shown as a black line. For the direct fast-switching simulations, we scale the number of repetitions to the number of NEUS iterations that are equivalent in computational effort.

FIG. 8.

Sampling the work with NEUS. (top) The estimate of the dynamic weights, , from the final iteration of the NEUS calculation. White space represents subsets that are not visited in the NEUS calculation. (bottom) The probability density PW(w) of the accumulated work W(τ−1) estimated from NEUS (blue dashed line), from direct integration (red solid line) and the exponentially scaled probability density proportional to PW(w)exp(−w) estimated from the NEUS calculations (green dashed line). The estimates of PW(w) and PW(w)exp(−w) from NEUS (blue dashed line and green dashed line respectively) at each value of W(τ−1) are computed as an average over the last 10 iterations and then averaged over 10 independent NEUS simulations. The estimate of PW(w) from direct integration (red solid line) is computed as an average over 10 independent direct simulations that are equivalent in computational effort to the 10 independent NEUS simulations.

VI. CONCLUSIONS

We describe a trajectory stratification framework for the estimation of expectations with respect to arbitrary Markov processes. The basis for this framework is the nonequilibrium umbrella sampling method (NEUS) originally introduced to compute steady state averages. Our development highlights the structural similarities between the nonequilibrium and equilibrium US algorithms and places the NEUS method within the general context of stochastic approximation. These connections have practical implications for further optimizing the procedure and point the way to a more in depth convergence analysis that will be the subject of future work.

Our development reveals that the basic trajectory stratification approach can be useful well beyond the estimation of stationary averages for time-homogenous Markov processes. This flexibility is demonstrated in two examples, both involving an expectation over trajectories of finite duration. In the first example, we show that the probability of first hitting a set within a finite time can be efficiently computed via stratification even when the dynamics start close to a competing absorbing state. In our second example, we use NEUS to stratify a process according to a path-dependent variable, the accumulated work in a nonequilibrium process appearing in the Jarzynski equation. The result is a novel and effective scheme for estimating free energy differences by enhancing sampling of the tails of the accumulated work distribution.

Our general framework also suggests new and exciting applications of trajectory stratification. For example, with little modification, these methods can be applied to sequential data assimilation applications where the goal is to approximate averages with respect to the conditional distribution of a hidden signal X(t) given sequentially arriving observations (i.e., with respect to the posterior distribution). In high-dimensional settings (e.g., weather forecasting) the only practical alternatives are limited to providing information about only the mode of the posterior distribution (i.e., variational methods) or involve uncontrolled and often unjustified approximations (i.e., Kalman-type schemes). The approach that we present here opens the door to efficient data assimilation, machine learning, and, more generally, new forms of analysis of complex dynamics.

Supplementary Material

ACKNOWLEDGMENTS

The authors would like to thank David Aristoff, James Dama, Jianfeng Lu, Charles Matthews, Erik Thiede, Omiros Papaspiliopoulos, and Eric Vanden-Eijnden for helpful discussions. This research is supported by the National Institutes of Health (NIH) Grant Number 5 R01 GM109455-02. Computational resources were provided by the University of Chicago Research Computing Center (RCC).

Appendix A: An Alternative F

Here we present an alternative construction of the stochastic matrix F (Section II A) that more closely aligns with the nonequilibrium version of the algorithm presented in Section II B. Suppose that one has available a transition distribution p(dy | x) for a Markov chain that preserves (or nearly preserves) the target density, π, in the sense that

| (A1) |

For example, p(dy | x) might be the transition density for a number of steps of a Langevin dynamics integrator. We can again express the zi as the solution to an eigenproblem (8) where now

| (A2) |

Note that when ψi(x) = 1Ai for some partition of space {Ai}, and p(dy | x) is reversible with respect to π, the entry Fij can be estimated by evolving samples according to p(dy | x), rejecting any proposed samples that lie outside of Ai (so that πi is preserved), and then counting the number of times the chain attempts transitions from set Ai to set Aj. For a closely related approach to approximating certain nonequilibrium quantities see [45].

Appendix B: Expressions for and

In this appendix we establish the identities

| (B1) |

appearing in (22) and (24). First, note that the equality for i ≠ j (which follows immediately from the definitions of , , z, and G) together with implies the expression for in terms of G. It remains then only to establish the expression for in terms of z and G. To that end, notice that

| (B2) |

so that

| (B3) |

Appendix C: Excursions sample the restricted distributions

Here, we establish (32). We have

| (C1) |

References

- [1].Asmussen S and Glynn PW, Stochastic Simulation: Algorithms and Analysis (Springer, 2007). [Google Scholar]

- [2].Gardiner CW, Stochastic Methods: A Handbook for the Natural and Social Sciences (Springer, 2009). [Google Scholar]

- [3].Frenkel D and Smit B, Understanding Molecular Simulation (Academic Press, 2002). [Google Scholar]

- [4].Neyman J, Journal of the Royal Statistical Society 97, 558 (1934). [Google Scholar]

- [5].Torrie GM and Valleau JP, Journal of Computational Physics 23, 187 (1977). [Google Scholar]

- [6].Pangali C, Rao M, and Berne BJ, J. Chem. Phys 71, 2975 (1979). [Google Scholar]

- [7].Chandler D, Introduction to Modern Statistical Mechanics (Oxford University Press, 1987). [Google Scholar]

- [8].Leliévre T, Rousset M, and Stoltz G, Free Energy Computations: A Mathematical Perspective (Imperial College Press, 2010). [Google Scholar]

- [9].Warmflash A, Bhimalapuram P, and Dinner AR, J. Chem. Phys 127, 154112 (2007). [DOI] [PubMed] [Google Scholar]

- [10].Dickson A, Warmflash A, and Dinner AR, J. Chem. Phys 131, 154104 (2009). [DOI] [PubMed] [Google Scholar]

- [11].Vanden-Eijnden E and Venturoli M, J. Chem. Phys 131, 044120 (2009). [DOI] [PubMed] [Google Scholar]

- [12].Dickson A, Warmflash A, and Dinner AR, J. Chem. Phys 130, 074104 (2009). [DOI] [PubMed] [Google Scholar]

- [13].Dickson A and Dinner AR, Annual review of physical chemistry 61, 441 (2010). [DOI] [PubMed] [Google Scholar]

- [14].Dickson A, Maienschein-Cline M, Tovo-Dwyer A, Hammond JR, and Dinner AR, J. Chem. Theory Comput 7, 2710 (2011). [DOI] [PubMed] [Google Scholar]

- [15].Xu X, Rice SA, and Dinner AR, Proceedings of the National Academy of Sciences 110, 3771 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Bello-Rivas JM and Elber R, J. Chem. Phys 142, 094102 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].Faradjian AK and Elber R, J. Chem. Phys 120, 10880 (2004). [DOI] [PubMed] [Google Scholar]

- [18].Glasserman P, Heidelberger P, Shahabuddin P, and Zajic T, “A look at multilevel splitting,” in Monte Carlo and Quasi-Monte Carlo Methods 1996: Proceedings of a conference at the University of Salzburg, Austria, July 9–12, 1996, edited by Niederreiter H, Hellekalek P, Larcher G, and Zinterhof P (Springer; New York, New York, NY, 1998) pp. 98–108. [Google Scholar]

- [19].Huber GA and Kim S, Biophys. J 70, 97 (1996). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Haraszti Z and Townsend JK, ACM Trans. Model. Comput. Simul 9, 105 (1999). [Google Scholar]

- [21].van Erp TS, Moroni D, and Bolhuis PG, J. Chem. Phys 118, 7762 (2003). [DOI] [PubMed] [Google Scholar]

- [22].Allen RJ, Warren PB, and ten Wolde PR, Phys. Rev. Lett 94, 018104 (2005). [DOI] [PubMed] [Google Scholar]

- [23].Johansen A, Del Moral P, and Doucet A, in Proceedings of the 6th International Workshop on Rare Event Simulation (Bramberg, 2006). [Google Scholar]

- [24].Cérou F and Guyader A, Stochastic Analysis and Applications 25, 417 (2007). [Google Scholar]

- [25].Guttenberg N, Dinner AR, and Weare J, J. Chem. Phys 136, 234103 (2012). [DOI] [PubMed] [Google Scholar]

- [26].Hairer M and Weare J, Commun. Pure Appl. Math 67, 1995 (2014). [Google Scholar]

- [27].Thiede E, Van Koten B, Weare J, and Dinner AR, J. Chem. Phys 145, 084115 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Dinner AR, Thiede E, Van Koten B, and Weare J, arxiv 1705.08445 (2017). [Google Scholar]

- [29].Kushner HJ and Yin GG, Stochastic Approximations and Recursive Algorithms and Applications, second edition ed. (Springer, 2003). [Google Scholar]

- [30].Schütte C, Noé F, Lu J, Sarich M, and Vanden-Eijnden E, J. Chem. Phys 134, 204105 (2011). [DOI] [PubMed] [Google Scholar]

- [31].MacKerell AD Jr., Bashford D, Bellott M, Dunbrack JRL, Evanseck JD, Field MJ, Fischer S, Gao J, Guo H, Ha S, Joseph-McCarthy D, Kuchnir L, Kuczera K, Lau FTK, Mattos C, Michnick S, Ngo T, Nguyen DT, Prodhom B, Reiher WE, Roux B, Schlenkrich M, Smith JC, Stote R, Straub J, Watanabe M, Wio J´Yin rkiewiczKuczera, D., and Karplus M, J. Phys. Chem. B 102, 3586 (1998). [DOI] [PubMed] [Google Scholar]

- [32].Schneider T and Stoll E, Phys. Rev. B 17, 1302 (1978). [Google Scholar]

- [33].Plimpton S, J. Comp. Phys 117, 1 (1995). [Google Scholar]

- [34].Ryckaert J, Ciccotti G, and Berendsen JC, J. Comp. Phys 23, 327 (1977). [Google Scholar]

- [35].Jarzynski C, Phys. Rev. Lett 78, 2690 (1997). [Google Scholar]

- [36].Chipot C and Pohorille A, Free Energy Simulations (Springer, 2007). [Google Scholar]

- [37].Kass RE and Raftery AE, Journal of the American Statistical Association 90, 773 (1995). [Google Scholar]

- [38].Neal RM, Stat. Comput 11, 125 (2001). [Google Scholar]

- [39].Hummer G, J. Chem. Phys 114, 7330 (2001). [Google Scholar]

- [40].Hummer G and Szabo A, Biophys. J 85, 5 (2003). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [41].Ytreberg FM and Zuckerman DM, J. Chem. Phys 120, 10876 (2004). [DOI] [PubMed] [Google Scholar]

- [42].Oberhofer H, Dellago C, and Geissler PL, J. Phys. Chem. B 109, 6902 (2005). [DOI] [PubMed] [Google Scholar]

- [43].Jarzynski C, Phys. Rev. E 73, 046105 (2006). [DOI] [PubMed] [Google Scholar]

- [44].Vaikuntanathan S and Jarzynski C, J. Chem. Phys 134, 054107 (2011). [DOI] [PubMed] [Google Scholar]

- [45].Vanden-Eijnden E and Venturoli M, The Journal of Chemical Physics 130, 194101 (2009). [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.