The Theory of Active and Latent Failures was proposed by James Reason in his book, Human Error. [1] According to Reason, accidents within most complex systems, such as healthcare, are caused by a breakdown or absence of safety barriers across four levels within a sociotechnical system. These levels can best be described as Unsafe Acts, Preconditions for Unsafe Acts, Supervisory Factors, and Organizational Influences. [2] Reason used the term “active failures” to describe factors at the Unsafe Acts level, whereas the term “latent failures” was used to describe unsafe conditions located higher up in the system. [1]

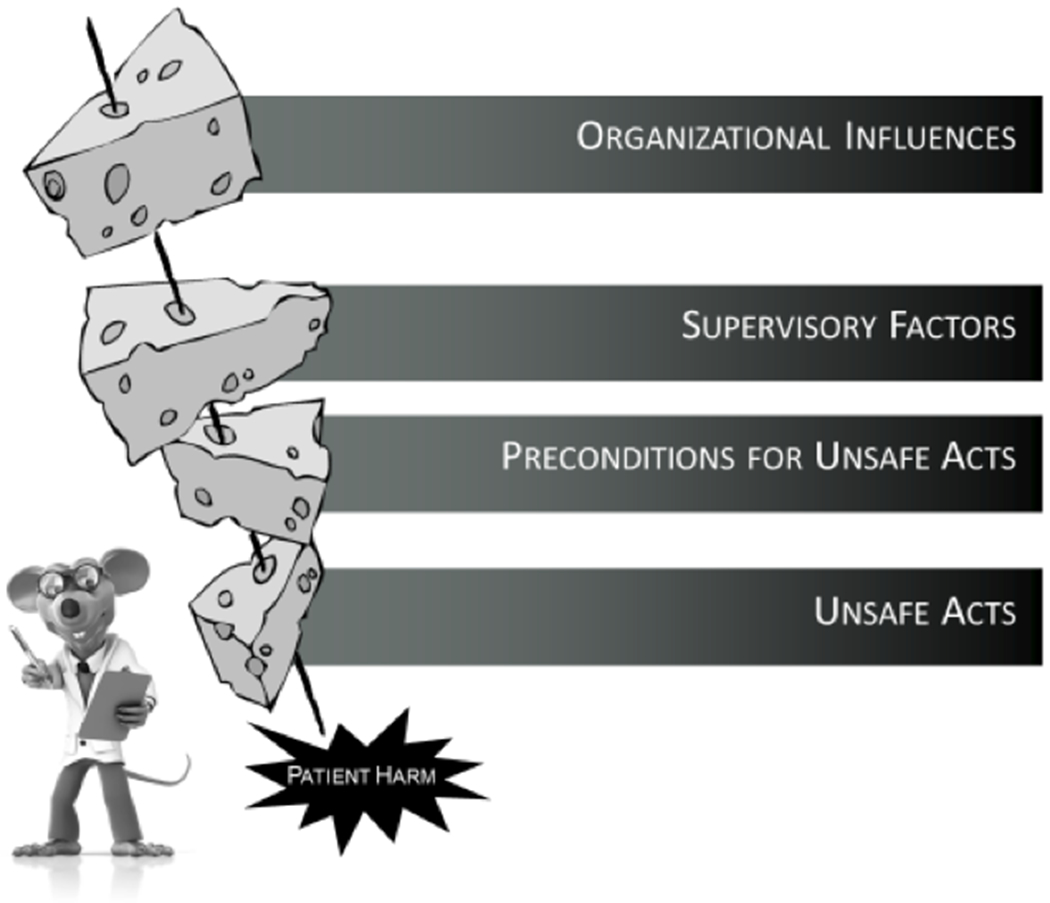

Today, most people refer to Reason’s theory as the “Swiss Cheese Model” because of the way it is typically depicted (See Figure 1). For example, each level within the model is often shown as an individual layer or slice of cheese. [3] Absent or failed barriers at each level are represented as holes in the cheese (hence the cheese is “Swiss”). When holes across each level of the system line up, they provide a window of opportunity for an accident or patient harm event to occur.

Figure 1. Swiss Cheese Model

The Swiss Cheese Model is commonly used to guide root cause analyses (RCAs) and safety efforts across a variety of industries, including healthcare. [4–12] Various safety and RCA frameworks that define the holes in the cheese and their relationships have also been developed, such as the Human Factors Analysis and Classification System (HFACS; see Table 1). [2, 4–8] The Swiss Cheese Model and its associated tools are intended to help safety professionals identify holes in each layer of cheese, or level of the system, that could (or did) lead to an adverse event, so they can be addressed and mitigated before causing harm in the future. [2]

Table 1. The Human Factors Analysis and Classification System for Healthcare

| Level | Group | Category | Definition |
| --- | --- | --- | --- |
| Organizational Influences | | Organizational Culture | Shared values, beliefs, and priorities regarding safety that govern organizational decision-making, as well as the willingness of an organization to openly communicate and learn from adverse events. |
| Organizational Influences | | Operational Process | How an organization plans to accomplish its mission, as reflected by its strategic planning, policies/procedures, and corporate oversight. |
| Organizational Influences | | Resource Management | Support provided by senior leadership to accomplish the objectives of the organization, including the allocation of human, equipment/facility, and monetary resources. |
| Supervisory Factors | | Inadequate Supervision | Oversight and management of personnel and resources, including training, professional guidance, and engagement. |
| Supervisory Factors | | Planned Inappropriate Operations | Management and assignment of work, including aspects of risk management, staff assignment, work tempo, scheduling, etc. |
| Supervisory Factors | | Failed to Correct Known Problem | Instances in which deficiencies among individuals or teams, problems with equipment, or hazards in the environment are known to the supervisor yet are allowed to continue unabated. |
| Supervisory Factors | | Supervisory Violations | The willful disregard for existing rules, regulations, instructions, or standard operating procedures by managers or supervisors during the course of their duties. |
| Preconditions for Unsafe Acts | Environmental Factors | Tools and Technology | This category encompasses a variety of issues, including the design of equipment and controls, display/interface characteristics, checklist layouts, task factors, and automation. |
| Preconditions for Unsafe Acts | Environmental Factors | Physical Environment | This category includes the setting in which individuals perform their work and consists of such things as lighting, layout, noise, clutter, and workplace design. |
| Preconditions for Unsafe Acts | Environmental Factors | Task | Refers to the nature of the activities performed by individuals and teams, such as the complexity, criticality, and consistency of assigned work. |
| Preconditions for Unsafe Acts | Individual Factors | Mental State | Cognitive/emotional conditions that negatively affect performance, such as mental workload, confusion, distraction, memory lapses, pernicious attitudes, misplaced motivation, stress, and frustration. |
| Preconditions for Unsafe Acts | Individual Factors | Physiological State | Medical and/or physiological conditions that preclude safe performance, such as circadian dysrhythmia, physical fatigue, illness, intoxication, dehydration, etc. |
| Preconditions for Unsafe Acts | Individual Factors | Fitness for Duty | Off-duty activities that negatively impact performance on the job, such as the failure to adhere to sleep/rest requirements, alcohol restrictions, and other off-duty mandates. |
| Preconditions for Unsafe Acts | Team Factors | Communication | The sharing of information among team members, including providing/requesting information and the use of two-way (positive confirmation) communication. |
| Preconditions for Unsafe Acts | Team Factors | Coordination | This category refers to the interrelationship among team members, including such things as planning, monitoring, and providing back-up where necessary. |
| Preconditions for Unsafe Acts | Team Factors | Leadership | The team leader’s performance of his or her responsibilities, such as the failure to adopt a leadership role or model/reinforce principles of teamwork. |
| Unsafe Acts | Errors | Decision Errors | Goal-directed behavior that proceeds as intended, yet the plan proves inadequate or inappropriate for the situation. These errors typically result from a lack of information, knowledge, or experience. |
| Unsafe Acts | Errors | Skill-based Errors | These “doing” errors occur frequently during highly practiced activities and appear as attention failures, memory failures, or errors associated with the technique with which one performs a task. |
| Unsafe Acts | Errors | Perceptual Errors | Errors that occur during tasks that rely heavily on sensory information which is obscured, ambiguous, or degraded due to impoverished environmental conditions or a diminished sensory system. |
| Unsafe Acts | Violations | Routine Violations | Often referred to as “bending the rules,” this type of violation tends to be habitual by nature, engaged in by others, and tolerated by supervisors and management. |
| Unsafe Acts | Violations | Exceptional Violations | Isolated departures from authority, neither typical of the individual nor condoned by management. |

Although the Swiss Cheese Model has become well-known in most safety circles, there are several aspects of its underlying theory that are often misunderstood. [13] Without a basic understanding of its theoretical assumptions, the Swiss Cheese Model can easily be viewed as a rudimentary diagram of a character and slices of cheese. Indeed, some critics have argued that the Swiss Cheese Model is an oversimplification of how accidents occur and have attempted to modify the model to make it better equipped to deal with the complexity of human error in healthcare. [14, 15] Others have called for the extreme measure of discarding the model in its entirety. [16–19] Perhaps the rest of us, who have seen numerous illustrations and superficial references to the model in countless conference presentations, simply consider it passé.

Such reactions are legitimate, particularly when the actual theory underlying the model gets ignored or misinterpreted. Nevertheless, there is much to the Swiss Cheese Model’s underlying assumptions and theory that, when understood, make it a powerful approach to accident investigation and prevention. Therefore, the purpose of the present paper is to discuss several key aspects of the Theory of Active and Latent Failures upon which the Swiss Cheese Model is based. We will begin our conversation by discussing the “holes in the cheese” and reviewing what they represent. As we dive deeper into the model, we will further explore the theoretical nature of these “holes,” including their unique characteristics and dynamic interactions. Our hope is that this knowledge of the Swiss Cheese Model’s underlying theory will help overcome many of the criticisms that have been levied against it. A greater appreciation of the Theory of Active and Latent Failures will also help patient safety professionals fully leverage the Swiss Cheese Model and associated tools to support their RCA and other patient safety activities.

THE THEORY BEHIND THE CHEESE

Let’s start by reminding ourselves why Reason’s theory is represented using Swiss cheese. As stated previously, the holes in the cheese depict the failure or absence of safety barriers within a system. Some examples could be a nurse mis-programming an infusion pump or an anesthesia resident not providing an adequate briefing when handing off a patient to the ICU. Such occurrences represent failures that threaten the overall safety integrity of the system. If such failures never occurred within a system (i.e., if the system were perfectly safe), then there wouldn’t be any holes in the cheese. The cheese wouldn’t be Swiss. Rather, the system would be represented by solid slices of cheese, such as cheddar or provolone.

Second, not every hole that exists in a system will lead to an accident. Sometimes holes may be inconsequential. Other times, holes in the cheese may be detected and corrected before something bad happens. [20–25] The nurse who inadvertently mis-programs an infusion pump, for example, may notice that the value on the pump’s display is not right. As a result, the nurse corrects the error and enters the correct value for the patient. This process of detecting and correcting errors occurs all the time, both at work and in our daily lives. [20] None of us would be alive today if every error we made proved fatal!

This point leads to another aspect of the theory underlying the Swiss Cheese Model: the holes in the cheese are dynamic, not static. [1–2] They open and close throughout the day, allowing the system to function appropriately without catastrophe. This is what Human Factors Engineers call “resilience.” [26] A resilient system is one that is capable of adapting and adjusting to changes or disturbances in the system in order to keep functioning safely. [26]

Moreover, according to the Theory of Active and Latent Failures, holes in the cheese open and close at different rates. In addition, the rate at which holes pop up or disappear is determined by the type of failure the hole represents. Holes that occur at the Unsafe Acts level, and even some at the Preconditions level, represent active failures. [1–2] They are called “active” for several reasons. First, they occur most often during the process of actively performing work, such as treating patients, performing surgery, dispensing medication, and so on. Such failures also occur in close proximity, in terms of time and location, to the patient harm event. They are seen as being actively involved in or directly linked to the bad outcome. Another reason why they are called active failures is that they actively change during the process of performing work or providing care. They open and close constantly throughout the day as people make errors, catch their errors, and correct them.

In contrast to active failures, latent failures have different characteristics. [1–2] Latent failures occur higher up in the system, above the Unsafe Acts level. These include the Organizational, Supervisory, and Preconditions levels. These failures are referred to as “latent” because when they occur or open, they often go undetected. They can lie “dormant” or “latent” in the system for a long period of time before they are recognized. Furthermore, unlike active failures, latent failures do not close or disappear quickly. [1, 20] They may not even be detected until an adverse event occurs. For example, a hospital may not realize that the pressures it imposes on surgical personnel to increase throughput of surgical patients result in staff cutting corners when cleaning and prepping operating rooms. Only after there is a spike in the number of patients with surgical site infections does this problem become known.
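The contrast between fast-cycling active failures and slow-closing latent failures can be illustrated with a toy simulation. The sketch below is not part of Reason's theory. It assumes each hole is a simple two-state process that opens and closes with a fixed probability per time step, and the function names and numerical rates are hypothetical values chosen only for illustration.

```python
import random


def simulate_hole(p_open, p_close, steps, rng):
    """Return the length of every completed open episode for one hole.

    p_open  -- chance per time step that a closed hole opens (assumed value)
    p_close -- chance per time step that an open hole closes (assumed value)
    """
    episodes = []
    is_open = False
    current = 0
    for _ in range(steps):
        if is_open:
            current += 1
            if rng.random() < p_close:
                # The hole was detected and corrected; record how long it stayed open.
                episodes.append(current)
                is_open = False
                current = 0
        elif rng.random() < p_open:
            is_open = True
    return episodes


def mean(values):
    """Average of a list, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


rng = random.Random(0)  # fixed seed so the run is repeatable
STEPS = 100_000

# Hypothetical rates: an active failure opens often and is caught quickly,
# whereas a latent failure opens rarely and persists once open.
active = simulate_hole(p_open=0.05, p_close=0.5, steps=STEPS, rng=rng)
latent = simulate_hole(p_open=0.001, p_close=0.005, steps=STEPS, rng=rng)

print("active: episodes =", len(active), "mean duration =", round(mean(active), 1))
print("latent: episodes =", len(latent), "mean duration =", round(mean(latent), 1))
```

Under these assumed rates, the active hole opens far more often but each episode is short, while the latent hole opens rarely and stays open much longer, which mirrors the qualitative distinction drawn above.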

It is also important to realize that most patient harm events are associated with multiple active and latent failures. [1–2] Unlike the typical Swiss Cheese diagram (Figure 1), which shows an arrow flying through one hole at each level of the system, there can be a variety of failures at each level which interact to produce an adverse event. In other words, there can be several failures at the Organizational, Supervisory, Preconditions, and Unsafe Acts levels that all lead to patient harm. Although not explicitly stated in the Theory of Active and Latent Failures, research indicates that the holes in the cheese associated with accidents are more numerous at the Unsafe Acts and Preconditions levels, but become fewer as one progresses upward through the Supervisory and Organizational levels. [2, 4–8]

Given the frequency and dynamic nature of activities associated with providing patient care, there are more opportunities for holes to open up at the Unsafe Acts and Preconditions levels on a daily basis. Consequently, there are often more holes identified at these levels during an RCA investigation. Furthermore, if an organization were to have numerous failures at the Supervisory and Organizational levels, it would not be a viable competitor within its industry. It would have too many accidents to survive. A clear example from aviation is the former airline ValuJet, which had a series of fatal accidents in a short period of time, all due to organizational (i.e., latent) failures. [27]

How the holes in the cheese interact across levels is also important to understand. Given that we tend to find fewer holes as we move up the system, a one-to-one mapping of these failures across levels is not expected. In layman’s terms, this means that a single hole, for example, at the Supervisory level, can actually result in multiple failures at the Preconditions level, or a single hole at the Preconditions level may result in several holes at the Unsafe Acts level. [2, 28] This phenomenon is often referred to as a “one-to-many” mapping of causal factors. However, the converse can also be true. For example, multiple causal factors at the Preconditions level might interact to produce a single hole at the Unsafe Acts level. This is referred to as a “many-to-one” mapping of causal factors.

This divergence and convergence of factors across levels is one reason, in addition to others, [29, 30] that the traditional RCA method of asking “5 Whys” isn’t a very effective approach. Given that failures across levels can interact in many different ways, there can be multiple causal factor pathways associated with any given event. Thus, unlike the factors that can often cause problems with physical systems (e.g., mechanical failures), causal pathways that lead to human errors and patient harm cannot be reliably identified by simply asking a set number of “why” questions in a linear fashion.
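One way to make the one-to-many and many-to-one mappings concrete is to record causal links as a directed graph and enumerate every pathway upward from a single unsafe act. The sketch below is illustrative only: the factor names, the level prefixes (ORG, SUP, PRE, ACT), and the links between them are hypothetical and do not come from an actual RCA.

```python
# Each key is a factor; its list holds the lower-level factors it contributes to.
# All factor names and links are hypothetical examples.
CONTRIBUTES_TO = {
    "ORG: production pressure": ["SUP: inadequate staffing plan"],
    # One-to-many: a single supervisory hole feeds two preconditions.
    "SUP: inadequate staffing plan": ["PRE: fatigue", "PRE: rushed handoff"],
    # Many-to-one: three preconditions converge on one unsafe act.
    "PRE: fatigue": ["ACT: mis-programmed pump"],
    "PRE: rushed handoff": ["ACT: mis-programmed pump"],
    "PRE: cluttered pump display": ["ACT: mis-programmed pump"],
}


def invert(graph):
    """Build a map from each factor to the higher-level factors that feed it."""
    causes = {}
    for cause, effects in graph.items():
        for effect in effects:
            causes.setdefault(effect, []).append(cause)
    return causes


def causal_pathways(factor, causes):
    """List every pathway from `factor` upward until no further cause is recorded.

    Assumes the graph has no cycles, which holds when links only run
    from higher levels of the system to lower ones.
    """
    parents = causes.get(factor, [])
    if not parents:
        return [[factor]]
    paths = []
    for parent in parents:
        for tail in causal_pathways(parent, causes):
            paths.append([factor] + tail)
    return paths


CAUSES = invert(CONTRIBUTES_TO)
PATHWAYS = causal_pathways("ACT: mis-programmed pump", CAUSES)

for path in PATHWAYS:
    print(" <- ".join(path))
```

In this hypothetical graph the single unsafe act has three distinct pathways, two of which reach the organizational level and one of which stops at a precondition. A single linear chain of “why” questions would trace only one of them.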

Just because there can be several causal pathways for a single event, however, doesn’t mean that every pathway will ultimately lead to organizational roots. There may be some pathways that terminate at the Preconditions or Supervisory levels. Nonetheless, the assumption underlying the Theory of Active and Latent Failures is that all pathways can be linked to latent failures. Therefore, the job of RCA investigators is to always explore the potential contributions of factors across all levels of the system. Whether or not latent failures exist becomes an empirical, not theoretical, question after an accident occurs.

IMPLICATIONS

There are several reasons why it is important to identify holes at each level of a system, or at least look for them during every safety investigation. The more holes we identify, the more opportunities we have to improve upon system safety. Another important reason is that the type of hole we identify, be it active or latent, will have a significantly different effect on system safety if we are able to fill it in.

To illustrate this point, let’s consider the classic childhood story about a Dutch boy and a leaking dam, written by Dodge in 1865. [31] The story is about a young boy, named Hans Brinker, who observes a leak in a dam near his village as he is walking home from school. As he approaches the village, he notices that everyone in the village is panicking. They are terrified the water leaking through the dam is going to flood the entire village. As a result of their fear, they are immobilized and unable to take action. Nevertheless, the young Dutch boy stays calm. He walks over to the dam and plugs the hole with his finger. The tiny village is saved! They all celebrate and dub Hans a hero.

While the moral of this story is to stay calm in a time of crisis, there is another moral that we want to emphasize here. From our perspective, the hole in the dam is analogous to an active failure, such as a surgeon leaving a surgical instrument inside a patient. The Dutch boy’s act of putting his finger in the hole to stop the leak is analogous to a local fix or corrective action for preventing the surgical instrument from being retained inside another patient. For instance, the corrective action might be to add the retained instrument to the list of materials that are to be counted prior to closing the surgical incision. Such a fix to the problem is very precise. It focuses on a specific active failure – a retained surgical instrument used in a specific surgical procedure. It can also be implemented relatively quickly. In other words, it is an immediate, localized intervention to address a known hazard, just like Hans plugging the hole in the dam with his finger.

While such corrective actions are needed, and should be implemented, they often do not address the underlying causes of the problem (i.e., the reasons for the leak in the dam). It is just a matter of time before the next hole opens up. But what happens when little Hans ultimately runs out of fingers and toes to plug up the new holes that will eventually occur? Clearly, there is a need for the development of other solutions that are implemented upstream from the village and dam. Perhaps the water upstream can be rerouted, or other dams put in place. Whatever intervention we come up with, it needs to reduce the pressure on the dam so that other holes don’t emerge.

Let’s return to our example of the retained surgical instrument. At this point in our discussion, we don’t really know why the instrument was left in the patient. Perhaps the scrub nurse, surgical technician, and circulating nurse all knew the instrument had been left in the patient. However, they were afraid to speak up because they were worried about how the surgeon would react if they said something. Maybe they were all in a hurry because they felt pressure to turn over the operating room and get it ready for the next surgical patient in the queue. Perhaps there was a shift turnover in the middle of the surgical procedure and the oncoming surgical staff were unaware that the instrument had been used earlier in the case. There could have been new surgical staff present who were undergoing on-the-job training, which resulted in confusion about who had performed the count.

The point here is that unless we address the underlying latent failures that occurred during the case, our solution to add the retained instrument to the counting protocol will not prevent other “yet-to-be-retained” objects from being retained in the future. Moreover, as other objects do get retained over time, our solution of adding them to the list of items to be counted becomes highly impractical. Even worse, given that the solution of expanding our count list does not actually mitigate the underlying latent failures, other seemingly different active failures are bound to emerge. These may include such active failures as wrong site surgeries, delayed antibiotic administration, or inappropriate blood transfusion. As a result, we will need to develop interventions to address each of these active failures as well. Like the little Dutch boy, we will ultimately run out of fingers and toes trying to plug up every hole!

This is not to say that directly plugging up an active failure is unimportant. On the contrary, when an active failure is identified, action should be taken to directly address the hazard. However, sometimes such fixes are seen as “system fixes” particularly when they can be easily applied and spread. Returning again to our retained instrument example, the hospital’s leadership could decide to require every surgical team to count the instrument (and all others similar to it), regardless of the specific surgical procedure or type of patient. Such a process is considered a system fix because it was “spread throughout the system.” In essence, however, what we’ve actually done is require the entire village to stand watch at the dam to ensure that all future leaks are caught and plugged before the village floods. We have not fixed the system.

To truly improve system safety, interventions need to also address latent failures. These types of interventions will serve to reduce a variety of harmful events throughout the system (i.e., across units, divisions, departments and the organization). System improvements that address latent failures such as conflicting policies and procedures, poorly maintained and out-of-date technology, counterproductive incentive systems, lack of leadership engagement, workload and competing priorities, lax oversight and accountability, poor teamwork and communication, and excessive interruptions are ultimately needed to reduce future leaks and subsequent demand for more “fingers and toes.” Indeed, such system fixes release the villagers from their makeshift role as civil engineers plugging holes in the dam, freeing them to passionately pursue their lives’ true calling to the best of their ability.

CONCLUSION

Numerous articles have been published on the Swiss Cheese Model. However, there are several aspects of its underlying theory that are often misunderstood. [13] Without a basic understanding of its theoretical assumptions, the Swiss Cheese Model can easily be criticized for being an oversimplification of how accidents actually occur. [16, 17] Indeed, many of the criticisms and proposed modifications to the model reflect a lack of appreciation for the theory upon which the model is based. Nonetheless, the goal of this paper was not to go point-counterpoint with these critics in an attempt to win a debate. Rather, the purpose of the present paper was to simply discuss specific aspects of the Swiss Cheese Model and its underlying Theory of Active and Latent Failures. This theory explains, in system terms, why accidents happen and how they may be prevented from happening again. When understood, the Swiss Cheese Model has proven to be an effective foundation for building robust methods to identify and analyze active and latent failures associated with accidents across a variety of complex industries. [2–8] A firm grasp of the Swiss Cheese Model and its underlying theory will prove invaluable when using the model to support RCA investigations and other patient safety efforts.

ACKNOWLEDGEMENTS

The project described was supported by the Clinical and Translational Science Award (CTSA) program, through the NIH National Center for Advancing Translational Sciences (NCATS), grant UL1TR002373, as well as the UW School of Medicine and Public Health’s Wisconsin Partnership Program (WPP). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or WPP.

Contributor Information

Douglas A. Wiegmann, Department of Industrial and Systems Engineering, University of Wisconsin-Madison, 1513 University Ave, Madison, WI 53706 USA

Laura J. Wood, Department of Industrial and Systems Engineering, University of Wisconsin-Madison, 1513 University Ave, Madison, WI 53706 USA

Tara N. Cohen, Department of Surgery, Cedars-Sinai, 825 N. San Vicente Blvd, Los Angeles, CA 90069

Scott A. Shappell, Department of Human Factors and Behavioral Neurobiology, Embry-Riddle Aeronautical University, Daytona Beach, FL 32115

REFERENCES

- 1. Reason JT (1990). Human error. Cambridge, England: Cambridge University Press.

- 2. Shappell SA & Wiegmann DA (2003). A human error approach to aviation accident analysis: The human factors analysis and classification system. Burlington, VT: Ashgate Press.

- 3. Stein JE & Heiss K (2015). The swiss cheese model of adverse event occurrence--Closing the holes. Seminars in Pediatric Surgery, 24(6), 278–82. doi: 10.1053/j.sempedsurg.2015.08.003

- 4. Reinach S & Viale A (2006). Application of a human error framework to conduct train accident/incident investigations. Accident Analysis and Prevention, 38(2), 396–406.

- 5. Li WC & Harris D (2006). Pilot error and its relationship with higher organizational levels: HFACS analysis of 523 accidents. Aviation, Space, and Environmental Medicine, 77(10), 1056–1061.

- 6. Patterson JM & Shappell SA (2010). Operator error and system deficiencies: analysis of 508 mining incidents and accidents from Queensland, Australia using HFACS. Accident Analysis & Prevention, 42(4), 1379–1385. doi: 10.1016/j.aap.2010.02.018

- 7. Diller T, Helmrich G, Dunning S, Cox S, Buchanan A, & Shappell S (2013). The human factors analysis classification system (HFACS) applied to health care. American Journal of Medical Quality, 29(3), 181–190. doi: 10.1177/1062860613491623

- 8. Spiess BD, Rotruck J, McCarthy H, Suarez-Wincosci O, Kasirajan V, Wahr J, & Shappell S (2015). Human factors analysis of a near-miss event: oxygen supply failure during cardiopulmonary bypass. Journal of Cardiothoracic and Vascular Anesthesia, 29(1), 204–209. doi: 10.1053/j.jvca.2014.08.011

- 9. Collins SJ, Newhouse R, Porter J, & Talsma A (2014). Effectiveness of the surgical safety checklist in correcting errors: A literature review applying Reason’s swiss cheese model. AORN Journal, 100(1), 65–79. doi: 10.1016/j.aorn.2013.07.024

- 10. Thonon H, Espeel F, Frederic F, & Thys F (2019). Overlooked guide wire: A multicomplicated swiss cheese model example. Analysis of a case and review of the literature. Acta Clinica Belgica, 1–7. doi: 10.1080/17843286.2019.1592738

- 11. Neuhaus C, Huck M, Hofmann G, Pierre M St., Weigand MA, & Lichtenstern C (2018). Applying the human factors analysis and classification system to critical incident reports in anaesthesiology. Acta Anaesthesiologica Scandinavica, 62(10), 1403–1411. doi: 10.1111/aas.13213

- 12. Durstenfeld MS, Statman S, Dikman A, Fallahi A, Fang C, Volpicelli FM, & Hochman KA (2019). The swiss cheese conference: Integrating and aligning quality improvement education with hospital patient safety initiatives. American Journal of Medical Quality, 1–6. Advance online publication. doi: 10.1177/1062860618817638

- 13. Perneger TV (2005). The Swiss cheese model of safety incidents: Are there holes in the metaphor? BMC Health Services Research, 5(71). doi: 10.1186/1472-6963-5-71

- 14. Li Y & Thimbleby H (2014). Hot cheese: a processed swiss cheese model. The Journal of the Royal College of Physicians of Edinburgh, 44(2), 116–121. doi: 10.4997/JRCPE.2014.205

- 15. Seshia SS, Bryan Young G, Makhinson M, Smith PA, Stobart K, & Croskerry P (2018). Gating the holes in the swiss cheese (part I): Expanding professor Reason’s model for patient safety. Journal of Evaluation in Clinical Practice, 24(1), 187–197. doi: 10.1111/jep.12847

- 16. Hollnagel E & Woods DD (1983). Cognitive systems engineering: New wine in new bottles. International Journal of Man-Machine Studies, 18(6), 583–600.

- 17. Leveson N (2004). A new accident model for engineering safer systems. Safety Science, 42(4), 237–270.

- 18. Dekker SW (2002). Reconstructing human contributions to accidents: The new view on error and performance. Journal of Safety Research, 33(3), 371–385. doi: 10.1016/S0022-4375(02)00032-4

- 19. Buist M & Middleton S (2016). Aetiology of hospital setting adverse events 1: Limitations of the ‘swiss cheese’ model. British Journal of Hospital Medicine, 77(11), C170–C174. doi: 10.12968/hmed.2016.77.11.C170

- 20. Reason J (2000). Human error: Models and management. The BMJ, 320(7237), 768–770. doi: 10.1136/bmj.320.7237.768

- 21. Kontogiannis T (1999). User strategies in recovering from errors in man-machine systems. Safety Science, 32, 49–68.

- 22. de Leval MR, Carthey J, Wright DJ, Farewell VT, & Reason JT (2000). Human factors and cardiac surgery: a multicenter study. The Journal of Thoracic and Cardiovascular Surgery, 119(4), 661–672. doi: 10.1016/S0022-5223(00)70006-7

- 23. King A, Holder MG Jr., & Ahmed RA (2013). Errors as allies: Error management training in health professions education. BMJ Quality and Safety, 22(6), 516–519. doi: 10.1136/bmjqs-2012-000945

- 24. Law KE, Ray RD, D’Angelo AL, Cohen ER, DiMarco SM, Linsmeier E, Wiegmann DA, & Pugh CM (2016). Exploring senior residents’ intraoperative error management strategies: A potential measure of performance improvement. Journal of Surgical Education, 73(6), 64–70.

- 25. Hales BM & Pronovost PJ (2006). The checklist—a tool for error management and performance improvement. Journal of Critical Care, 21(3), 231–235.

- 26. Hollnagel E, Woods DD, & Leveson NC (Eds.) (2006). Resilience engineering: Concepts and precepts. Aldershot, UK: Ashgate Publishing, Ltd.

- 27. National Transportation Safety Board. In-flight fire and impact with terrain ValuJet Airlines flight 592, DC-9-32, N904VJ, everglades, near Miami, Florida (Report No. AAR-97-06). 1997. Retrieved January 4, 2021 from https://www.ntsb.gov/investigations/AccidentReports/Reports/AAR9706.pdf.

- 28. ElBardissi AW, Wiegmann DA, Dearani JA, Daly RC, & Sundt TM III (2007). Application of the human factors analysis and classification system methodology to the cardiovascular surgery operating room. The Annals of Thoracic Surgery, 83(4), 1412–1419. doi: 10.1016/j.athoracsur.2006.11.002

- 29. Card AJ (2017). The problem with ‘5 whys’. BMJ Quality & Safety, 26(8), 671–677.

- 30. Peerally MF, Carr S, Waring J, & Dixon-Woods M (2017). The problem with root cause analysis. BMJ Quality & Safety, 26(5), 417–422.

- 31. Dodge MM (1865). Hans Brinker; or, the silver skates. New York, NY: James O’Kane.