Abstract

Angle sensors have long been used to measure wavefronts; the best-known example is the Shack-Hartmann sensor. Compared with other wavefront-sensing methods, the angle-based approach is more widely used in industrial applications and scientific research, owing to its fully integrated setup, robustness, and speed. However, it has long suffered from low spatial resolution, which is limited by the size of the angle sensor. Here we report an angle-based wavefront sensor that overcomes this challenge. It uses ultra-compact angle sensors built from flat optics and integrated directly on a focal plane array. This wavefront sensor inherits all the benefits of the angle-based method while improving the spatial sampling density by over two orders of magnitude. The drastically improved resolution allows angle-based sensors to be used for quantitative phase imaging, enabling capabilities such as video-frame recording of high-resolution surface topography.

Subject terms: Optical sensors, Optical metrology

Generally, wavefronts are measured using angle-based sensors like the Shack-Hartmann sensor. Here, the authors present an angle-sensitive device that uses flat optics integrated on a focal plane array for compact wavefront sensing with improved resolution.

Introduction

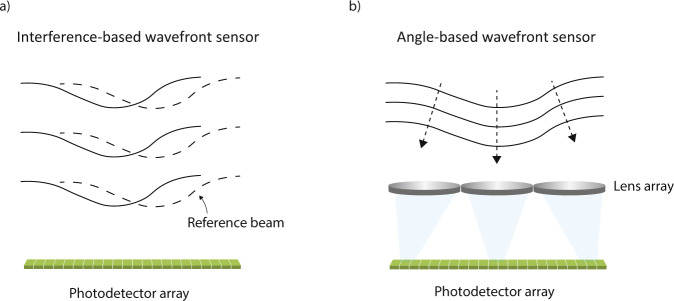

Measuring the wavefront of light has broad applications in optical characterization. A wavefront cannot be measured directly, because it is determined by phase. There are two types of indirect methods to measure it. The first is based on interference, as shown in Fig. 1a. A secondary reference wave, often a replica of the primary incident wave, is brought in to interfere with the primary wave. The resulting interference patterns are used to measure the phase1 or the gradient of the phase2. This method offers high spatial resolution for quantitative phase imaging1–3. However, it relies on delicate interference setups and complex signal acquisition schemes, which make it sensitive to temporal perturbation and spatial misalignment. Recent work has shown exciting progress in overcoming these shortcomings4. Despite rapid progress in the interference method, a second method based on angle measurement is much more dominant in commercial applications because of its robustness. Figure 1b illustrates the working principle of angle-based wavefront sensors. Arrays of micro-lenses sample the incident angles on a set of grid points. Together, these angles determine the wavefront5–7. Shack–Hartmann wavefront sensors8,9, one type of angle-based sensor, have been widely used in adaptive optics for astronomy10,11 and biomedical imaging12–14, as well as in metrology15–18. Although angle-based methods are fast and robust, they have a long-standing difficulty: their spatial resolution is low, orders of magnitude lower than that of interference methods. As such, they are mostly used for slowly varying wavefronts and are not suitable for demanding applications such as quantitative phase imaging. In addition to these two main methods, there are other innovative approaches such as spatial wavefront sampling19.

Fig. 1. Two different types of wavefront sensing.

a Interference between the incident wave and a reference wave produces intensity modulation that can be recorded by photodetector arrays. The reference wave can often be generated from the same incident wave. Multiple interference patterns are usually needed in order to retrieve the wavefront without ambiguity. b Arrays of micro-lenses are used to measure the spatial distribution of incident angles on the wavefront. The size of lenses is usually much larger than the optical wavelength in order to have a good focus.

In this work, we demonstrate a high-resolution wavefront sensor based on integrated angle sensors. It has a high spatial resolution and a high dynamic range. It can measure high-resolution surface morphology better than most state-of-the-art commercial tools, including white light interferometry (WLI). Its speed and robustness also make it possible to record the temporal dynamics of surface morphology in real time, which used to be extremely difficult with traditional metrology tools.

Results

Working principle of angle-based wavefront sensing

The low spatial resolution of angle-based wavefront sensors is caused by the large size of the lenses. One cannot decrease the size of a lens indefinitely, because increased diffraction in small lenses reduces their capability to focus. Recently, we developed an ultra-small angle sensor by exploiting the near-field coupling effect between nanoscale resonators20. Although it can, in principle, enable high-resolution wavefront sensing, the approach cannot leverage today’s advanced image sensor technology and is thus difficult to scale to large arrays. Here we use an angle sensor based on flat optics. Flat optics21,22 has been used for lenses, holography, and on-chip optical processing23–26. Recently, the integration of flat optics directly with sensor arrays has enabled new functionalities, such as ultra-compact spectrometers27, interference-based wavefront sensors2, and metasurface sensors28,29. Unlike most flat optics, which rely on far-field effects, we explore the near-field effect of flat optics. We use the energy distribution of the optical field immediately after a flat-optics component to measure the incident angle.

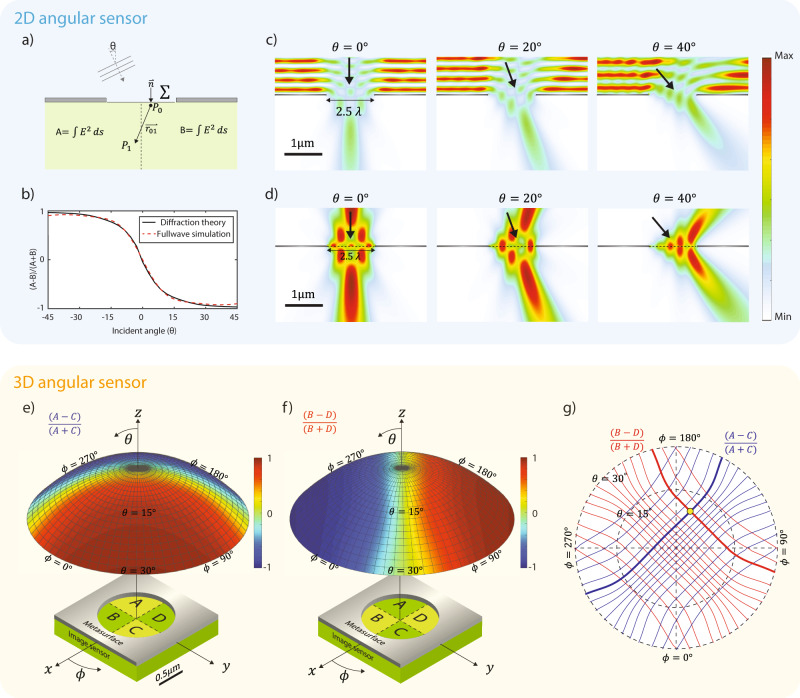

Figure 2 shows the working principle of the angle sensor. We first illustrate the idea in two-dimensional space. We consider an aperture illuminated by a plane wave (Fig. 2a). Light passing through the aperture deviates substantially from a plane wave, in particular when the aperture size is comparable to the wavelength. The two edges of the aperture produce scattered waves with a phase difference determined by the incident angle θ. Consequently, the scattered waves lead to a near-field distribution that is a strong function of the incident angle. To quantify this dependence, we draw a line along the center of the aperture and compare the optical energy on both sides. Rayleigh–Sommerfeld diffraction theory is used to calculate the field after the aperture (see Supplementary Section 1). At normal incidence, there is an equal amount of energy on both sides. When the incident angle tilts toward one side, energy is more concentrated on the other side. A monotonic relationship between the energy ratio and the incident angle holds up to 45° (Fig. 2b). The local energy distribution can be measured by placing two photodetectors immediately after the aperture. As the photocurrent is determined by σ|E|², where σ is the effective conductivity of the material, the ratio of photocurrents directly measures the energy ratio. The monotonic relationship also holds well for apertures with finite thickness. It has weak wavelength dependence, allowing broadband operation (see Supplementary Section 2).
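The two-dimensional picture above can be explored numerically. The following is a minimal sketch, not the calculation used in the paper: it replaces the full Rayleigh–Sommerfeld integral with a scalar Huygens-type sum of cylindrical waves (far-zone kernel with an obliquity factor), treats the screen as infinitely thin, and uses assumed values for the slit width, detector distance, and detector span. The function name `energy_ratio` and all of its parameters are hypothetical.

```python
import numpy as np

def energy_ratio(theta_deg, width=2.5, z=1.0, half_span=2.5, n=400):
    """Right/left energy ratio behind a slit. All lengths are in wavelengths.

    Assumptions (not from the paper): scalar field, thin screen,
    approximate cylindrical-wave kernel, detector plane at distance z.
    """
    k = 2.0 * np.pi                       # wavenumber for wavelength = 1
    theta = np.deg2rad(theta_deg)
    # Midpoint samples across the slit, symmetric about x = 0
    xs = ((np.arange(n) + 0.5) / n - 0.5) * width
    # Tilted plane wave evaluated in the aperture plane
    source = np.exp(1j * k * np.sin(theta) * xs)
    # Observation points on the detector plane, symmetric about x = 0
    xo = ((np.arange(n) + 0.5) / n - 0.5) * 2.0 * half_span
    r = np.hypot(xo[:, None] - xs[None, :], z)
    # Cylindrical wave exp(ikr)/sqrt(r) weighted by the obliquity factor z/r
    kernel = np.exp(1j * k * r) / np.sqrt(r) * (z / r)
    intensity = np.abs(kernel @ source) ** 2
    # Compare the energy collected on the two sides of the center line
    return intensity[xo > 0].sum() / intensity[xo < 0].sum()

if __name__ == "__main__":
    for angle in (0, 10, 20, 30, 40):
        print(angle, energy_ratio(angle))
```

In this toy model the ratio equals one at normal incidence and is mirrored (inverted) when the sign of the angle is flipped, which is the symmetry the two-detector readout relies on. Whether the curve stays monotonic up to 45° depends on the assumed geometry and is not guaranteed by this simplified kernel.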

Fig. 2. Schematic of angle sensing in 2D and 3D space.

a Schematic of a single-slit aperture for calculating field energy using Rayleigh–Sommerfeld diffraction theory. b Plot of the total energy ratio between two equally divided regions (A and B) for different incident angles. Calculations based on full-wave simulation and Rayleigh–Sommerfeld diffraction theory are in good agreement. c The electric field intensity profile of a plane wave incident on a PEC slab with an open aperture of 2.5 width. The intensity profile is a strong function of the incident angle θ. d The field scattered by the open aperture. It is calculated by subtracting the incident field from the total field. The incident field is defined as the field without the open aperture but with a perfect PEC reflector. e Schematic of the angle sensor with an aperture on four photodetectors labeled A, B, C, and D. The map of the first energy ratio for different incident angles is shown on the hemisphere. Each point on the hemisphere represents one combination of polar angle θ and azimuthal angle ϕ. f The map of the second energy ratio. g Contour lines extracted from the hemispheres in e and f. A unique incident angle can be determined when the two ratios are measured by the four photodetectors.

We can understand the field by using the total-field scattered-field method (Fig. 2c, d). Without the aperture, a perfect metal mask simply reflects the incident wave: interference builds up in front of the metal and there is no field behind it. If we create an opening in the perfect metal, the opening scatters a field that is superimposed on the background field. The non-uniform field distribution seen in Fig. 2d is created by this scattered field. The scattered field is directional and depends on the incident angle, which gives the system its angular dependence. The angular response of the actual three-dimensional (3D) structure is calculated using a hardware-accelerated full-wave solver, Tidy3D (https://simulation.cloud; see Supplementary Section 2).

Angles in 3D space can be measured similarly. We divide the volume below an aperture into four equal parts as shown in Fig. 2e, each of which is measured by a photodetector. For any incident wave, we measure two energy ratios computed from A, B, C, and D, which represent the energy in each region. Figure 2e, f show the two energy ratios for different incident angles. We plot the contours of the two ratios in a polar diagram in Fig. 2g. The combination of the two ratios uniquely determines one incident angle, as shown by the single crossing point in Fig. 2g.

Fabrication and characterization of the device

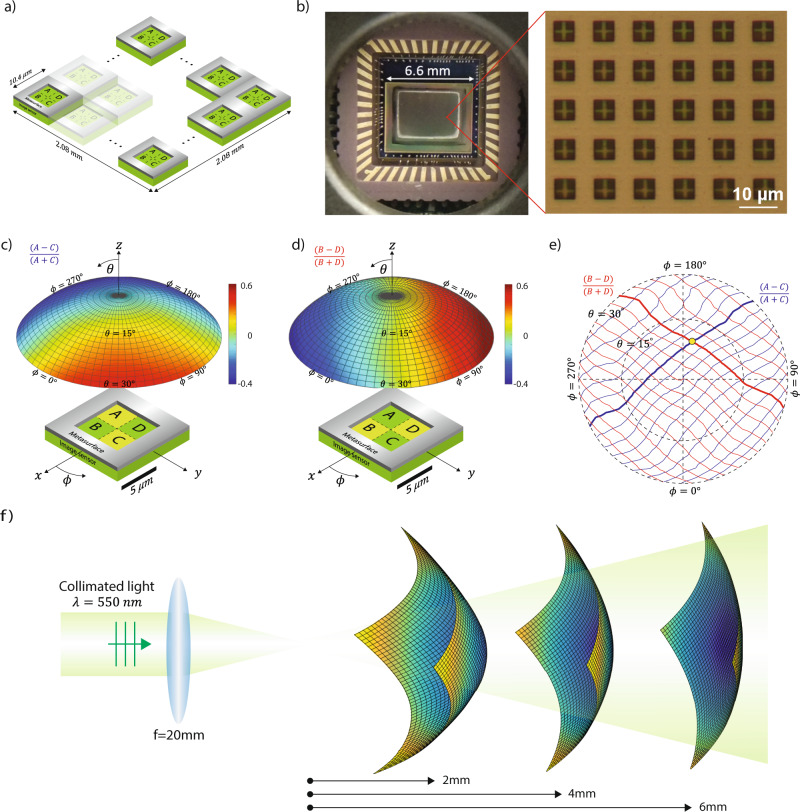

Next, we discuss the fabrication of the device. We use a monochrome complementary metal-oxide-semiconductor (CMOS) image sensor, Aptina MT9M001C12STM, with a pixel size of 5.2 μm. First, we removed the micro-lens array layer to expose the silicon photosensitive material, which was covered by a thin passivation dielectric. Next, the CMOS Si chip was removed from the chip holder for microfabrication. We used photolithography to define arrays of square patterns on a negative-tone photoresist layer. Each square is centered at the touching corner of four neighboring pixels, as in Fig. 3a. Each square is 5.2 μm wide, and the array has a periodicity of 10.4 μm. Next, 150 nm-thick aluminum was evaporated directly onto the image sensor, followed by a lift-off process, leaving an aluminum film with square apertures on top of the image sensor. Figure 3b shows a microscope image of a fabricated CMOS Si chip. The chip was then mounted back onto the original chip holder through wire bonding. Each square aperture together with the four pixels underneath constitutes one angle sensor.
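Because each angle sensor is one aperture over a 2 × 2 block of pixels, a raw frame can be regrouped into four sub-images, one per pixel position in the unit cell. The sketch below shows one way to do this with NumPy. It assumes that the unit cells are aligned with the frame origin and that the labels A, B, C, and D map to top-left, top-right, bottom-left, and bottom-right; neither assumption comes from the paper, and the function name `split_unit_cells` is hypothetical.

```python
import numpy as np

def split_unit_cells(frame):
    """Split a raw frame into the four pixel planes of each 2 x 2 unit cell.

    Assumed labeling (hypothetical): A top-left, B top-right,
    C bottom-left, D bottom-right, with cells starting at pixel (0, 0).
    """
    h, w = frame.shape
    if h % 2 or w % 2:
        raise ValueError("frame height and width must both be even")
    # Axes after reshape: (cell row, row in cell, cell column, column in cell)
    cells = frame.reshape(h // 2, 2, w // 2, 2)
    a = cells[:, 0, :, 0]
    b = cells[:, 0, :, 1]
    c = cells[:, 1, :, 0]
    d = cells[:, 1, :, 1]
    return a, b, c, d

if __name__ == "__main__":
    demo = np.arange(16).reshape(4, 4)
    for name, plane in zip("ABCD", split_unit_cells(demo)):
        print(name, plane.tolist())
```

Each returned plane has half the frame size in both directions, so every element corresponds to one angle sensor on the 10.4 μm grid.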

Fig. 3. Wavefront sensor fabrication and characterization.

a Schematic of a wavefront sensor that consists of arrays of unit cells. Each unit cell consists of 2 × 2 pixels. b Image of a fabricated wavefront sensor and a microscope image of a local region where 2 × 2 pixels are partially exposed under each aperture. c Measured first pixel intensity ratio of one unit cell as a function of incident angle, shown on a hemisphere. Each point on the hemisphere represents one combination of polar angle θ and azimuthal angle ϕ. d Measured second pixel intensity ratio of one unit cell as a function of incident angle, shown on a hemisphere. e Contour lines extracted from the hemispheres in c and d. A unique incident angle can be determined when the two ratios are measured by the four photodetectors. f Measurement of a diverging wavefront at three different locations that are 2, 4, and 6 mm away from the focal point. Each grid point represents one measured data point. The wavefront is characterized at a high spatial resolution.

The quantum efficiency is reduced by a factor of four compared to the original CMOS image sensor because light is blocked by the metal. The quantum efficiency can be significantly improved by increasing the light throughput. For example, one design is to use a phase aperture instead of the binary aperture, which in principle can raise the light throughput to over 90%.
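The factor of four is consistent with the mask geometry given in the fabrication description. As a quick check, assuming the loss is purely geometric (open area divided by unit-cell area, ignoring diffraction and reflection details), the numbers work out as follows. The variable names are illustrative only.

```python
# Geometric open-area estimate for the aluminum mask (assumption: the
# transmitted light scales with the open area of each unit cell).
aperture_um = 5.2    # side length of each square aperture
period_um = 10.4     # periodicity of the aperture array

open_fraction = (aperture_um / period_um) ** 2
reduction_factor = 1.0 / open_fraction

print(f"open fraction: {open_fraction:.2f}")
print(f"reduction factor: {reduction_factor:.1f}")
```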

Large arrays of angle sensors form a wavefront sensor with a very high spatial resolution. In contrast, a Shack–Hartmann wavefront sensor uses several hundred pixels in each angle sensor and thus has a much lower spatial resolution. Supplementary Section 10 compares conventional Hartmann sensors with our wavefront sensors. They operate at different length scales and thus have different performance.

The sensor needs to be calibrated because of randomness in the fabrication process and in the response of the photodetectors. The calibration is done using a collimated light-emitting diode (LED) light source. Two rotation stages and one linear stage were connected to move the sensor in the polar θ and azimuthal ϕ directions with respect to the collimated beam (details are available in Supplementary Section 3). The two measured pixel intensity ratios of one unit cell obtained from the calibration are shown on the hemisphere in Fig. 3c, d. The incident angle can be uniquely determined, as shown by the single crossing point in Fig. 3e.
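Once the two ratios have been recorded over a grid of known angles, an unknown angle can be recovered by searching the calibration table for the closest pair of ratios. The sketch below illustrates that lookup. It does not use measured data: `toy_ratios` is a hypothetical, smooth stand-in for the calibrated response of one unit cell, and the nearest-neighbor search in log-ratio space is an assumed inversion strategy rather than the procedure described in the Supplementary Information.

```python
import numpy as np

def toy_ratios(theta_deg, phi_deg, gain=1.5):
    """Hypothetical response model standing in for measured calibration data."""
    t = np.tan(np.deg2rad(theta_deg))
    p = np.deg2rad(phi_deg)
    return np.exp(gain * t * np.cos(p)), np.exp(gain * t * np.sin(p))

def build_table(theta_grid, phi_grid):
    """Tabulate the log of both ratios on a (theta, phi) calibration grid."""
    th, ph = np.meshgrid(theta_grid, phi_grid, indexing="ij")
    r1, r2 = toy_ratios(th, ph)
    return th.ravel(), ph.ravel(), np.log(r1).ravel(), np.log(r2).ravel()

def lookup_angle(r1, r2, table):
    """Return the calibrated (theta, phi) whose ratio pair is closest."""
    th, ph, l1, l2 = table
    dist2 = (l1 - np.log(r1)) ** 2 + (l2 - np.log(r2)) ** 2
    i = int(np.argmin(dist2))
    return float(th[i]), float(ph[i])

# Calibration grid: polar angle 1..30 degrees, azimuth in 5 degree steps
table = build_table(np.arange(1, 31), np.arange(0, 360, 5))

if __name__ == "__main__":
    r1, r2 = toy_ratios(20.3, 46.0)
    print(lookup_angle(r1, r2, table))
```

In practice each unit cell would carry its own table, since the calibration exists precisely because the response varies from cell to cell. Interpolating between grid points would refine the estimate beyond the grid spacing.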

After calibration, we first characterize a simple wavefront formed by a collimated beam passing through a biconvex lens, as in Fig. 3f. We measured the wavefront at three different distances from the focal point. The curvature of the measured wavefront agrees very well with an analytical calculation based on a spherical wavefront originating from the focal point (see details in Supplementary Section 4).
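For reference, the angles expected from an ideal spherical wave follow from simple geometry: at a lateral position (x, y) on a sensor plane a distance z from the focal point, the ray from the focal point makes a polar angle arctan(r/z) with the sensor normal, where r is the distance from the optical axis. The sketch below evaluates this. It assumes a point source on the sensor normal through the origin; the function name and the sample positions are illustrative and not taken from the paper.

```python
import numpy as np

def spherical_wave_angles(x_mm, y_mm, z_mm):
    """Polar and azimuthal angles (degrees) of a ray from an on-axis
    point source at distance z_mm, arriving at sensor position (x, y)."""
    r = np.hypot(x_mm, y_mm)
    theta = np.degrees(np.arctan2(r, z_mm))
    phi = np.degrees(np.arctan2(y_mm, x_mm)) % 360.0
    return theta, phi

if __name__ == "__main__":
    # Polar angle 1 mm off axis at the three distances used in Fig. 3f
    for z in (2.0, 4.0, 6.0):
        theta, _ = spherical_wave_angles(1.0, 0.0, z)
        print(f"z = {z} mm: theta = {theta:.2f} deg")
```

The polar angle at a fixed off-axis position falls as the sensor moves away from the focal point, which is the flattening of curvature visible across the three measurements.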

The most significant advantage of this wavefront sensor is its high resolution. The density of spatial sampling is two orders of magnitude higher than that of most traditional angle-based sensors. For example, here the sampling density is 9246 points/mm², whereas it is typically 44 points/mm² for a Shack–Hartmann sensor (e.g., Thorlabs WFS20-5C). A high sampling density makes it possible to resolve fine features in a wavefront. The capability of resolving fine features can be quantified by the maximum phase gradient that a wavefront sensor can measure, which is determined by the spatial sampling density and the angular dynamic range. Commercial Shack–Hartmann sensors such as the Thorlabs WFS20-5C typically have a spatial resolution of 100 μm and an angular dynamic range of < 1°. As a result, their maximum phase gradient at the entrance of the sensor is limited to 0.1 mrad/μm. The sensor shown here has a spatial resolution of 10 μm and an angular dynamic range of 30°. Its maximum phase gradient can reach 50 mrad/μm, which is 500 times larger than that of the Shack–Hartmann sensor. Such enhanced capability allows angle-based sensors to perform quantitative phase measurement, which is traditionally dominated by interference-based methods.
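The figures quoted above can be checked with a few lines of arithmetic. The sketch below assumes a square sampling grid, so that the density is the inverse square of the pitch, and assumes that the maximum phase gradient is estimated as the angular dynamic range divided by the spatial resolution. That second formula is an inference from the quoted numbers, not a definition given in the text, and the function names are hypothetical.

```python
import math

def sampling_density_per_mm2(pitch_um):
    """Sampling points per square millimeter for a square grid."""
    return (1000.0 / pitch_um) ** 2

def max_phase_gradient_mrad_per_um(angular_range_deg, resolution_um):
    """Assumed estimate: angular dynamic range over spatial resolution."""
    return math.radians(angular_range_deg) * 1e3 / resolution_um

if __name__ == "__main__":
    density = sampling_density_per_mm2(10.4)
    print(f"sampling density: {density:.0f} points/mm^2")
    print(f"ratio to 44 points/mm^2: {density / 44:.0f}")
    print(f"this sensor: {max_phase_gradient_mrad_per_um(30, 10):.1f} mrad/um")
    print(f"1 deg over 100 um: {max_phase_gradient_mrad_per_um(1, 100):.2f} mrad/um")
```

With a 10.4 μm pitch the density rounds to 9246 points/mm², and 30° over 10 μm gives about 52 mrad/μm, in line with the quoted 50 mrad/μm. Under the same assumption, 1° over 100 μm gives about 0.17 mrad/μm, so the quoted 0.1 mrad/μm corresponds to an angular range of roughly 0.6°, consistent with the stated range of < 1°.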

Microscopic surface topography

Next, we use this wavefront sensor to measure microscopic surface topography. Surface topography is widely used in materials research and manufacturing, as well as in life science research. Most existing methods are based on interference. Here we show that angle sensors can reach the same level of accuracy and resolution while retaining their intrinsic advantages of speed and robustness.

The sample to be characterized was a droplet of polymethyl methacrylate (PMMA) polymer on a microscope slide. It was heated on a hot plate to a temperature of 180 °C. As the temperature rose, the polymer changed from liquid to solid, creating a wrinkled surface. The goal is to measure its height profile. We chose this sample because its surface has abundant microscopic features. The sharply curved morphology, with features at diverse scales, can be used to test the performance limits of various surface profilers. For this purpose, we chose a challenging region on the sample with sharp slopes.

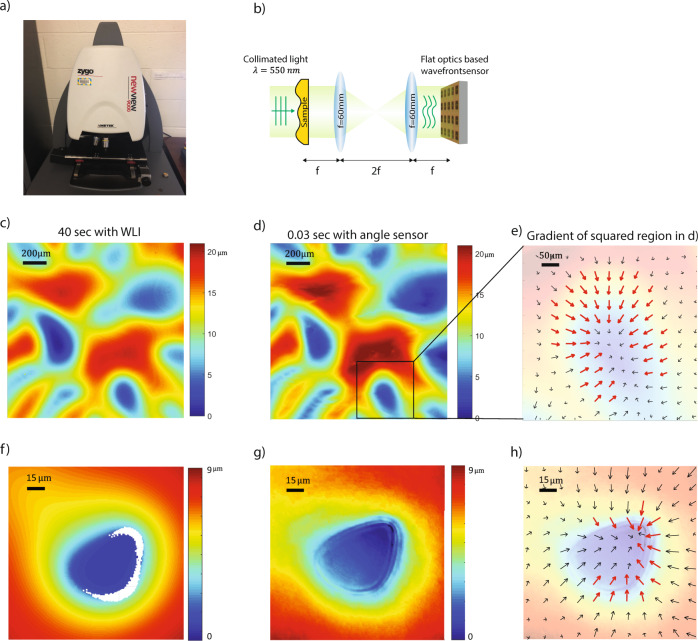

One standard metrology tool for this task is WLI30. It scans along the direction perpendicular to the sample plane to measure the height. Here we use a Zygo NewView 9000, shown in Fig. 4a. The results are shown in Fig. 4c. The surface varies by 20 μm in height over the measured region. It took 40 s to acquire the image in Fig. 4c.

Fig. 4. Surface profile measurement comparison with white light interferometer.

a Photo of a commercial white light interferometer (Zygo NewView 9000) for surface profile measurement. b Optical setup for surface profile measurement using the proposed wavefront sensor, where f is the focal length of the lens. c Surface profile of a PMMA polymer on a microscope slide measured with a WLI. d Same sample as in c measured with the proposed wavefront sensor. e Close-up view of the cropped region in d, where the slope of the surface is represented with arrows indicating projected vector components. f PMMA polymer surface measured with a WLI equipped with a 10× objective lens. g Same sample as in f measured with our wavefront sensor equipped with a 10× objective lens. h Red arrows indicate the region with steep slope where WLI fails to report the height.

Next, we measured the same region using our wavefront sensor setup, as shown in Fig. 4b. It uses collimated illumination from an LED in a simple 4f system. The two biconvex lenses each have a 60 mm focal length. The wavefront sensor directly measures the distribution of angles on the sensor plane, which is then converted to a wavefront using the zonal estimation described in ref. 7 (see Supplementary Section 5). The surface profile measured by our sensor agrees very well with that from WLI, as shown in Fig. 4d. We also show the angular information in Fig. 4e. The direction of each arrow represents the azimuthal direction of light propagation. The length of each arrow represents the magnitude of the polar angle, defined as the angle between the vertical z-axis and the direction of light propagation. The polar angle, i.e., the length of the arrow, reflects how fast the surface height varies. We use red arrows to indicate steep slopes where the surface height varies quickly. These regions can be challenging for WLI to measure. For example, we show another microscopic region (Fig. 4f) that has steeper slopes. The WLI reports white areas for these challenging regions (see Supplementary Section 8 for more discussion). In contrast, our angle sensor, with its high dynamic range of measurable angles, can easily resolve these steep slopes. Figure 4g shows the results with 10× magnification, obtained by replacing the first lens in the 4f system with a 10× objective lens and the second lens with the associated tube lens. The topography obtained from WLI (Fig. 4f) agrees well with that from our sensor in slowly varying regions. The large angles can be observed in Fig. 4h, consistent with the white regions in Fig. 4f where WLI fails.
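The conversion from measured angles to a wavefront can be sketched as a least-squares integration of slopes. The example below follows the spirit of the zonal geometry of ref. 7, in which the difference between neighboring wavefront values is matched to the average of the two neighboring slopes, but it is a simplified dense-matrix illustration and not the implementation used for the results in Fig. 4. The mapping from (θ, ϕ) to slopes, tan θ cos ϕ and tan θ sin ϕ, and both function names are assumptions.

```python
import numpy as np

def angles_to_slopes(theta_deg, phi_deg):
    """Assumed mapping from polar/azimuthal angles to x and y slopes."""
    t = np.tan(np.deg2rad(theta_deg))
    p = np.deg2rad(phi_deg)
    return t * np.cos(p), t * np.sin(p)

def zonal_reconstruct(sx, sy, pitch):
    """Least-squares wavefront from slope maps on a square grid.

    sx, sy: slopes along x (columns) and y (rows); pitch: grid spacing.
    Returns the wavefront with its mean (piston) removed.
    """
    ny, nx = sx.shape
    idx = np.arange(ny * nx).reshape(ny, nx)
    rows, rhs = [], []
    for i in range(ny):                  # differences between x neighbors
        for j in range(nx - 1):
            row = np.zeros(ny * nx)
            row[idx[i, j + 1]], row[idx[i, j]] = 1.0, -1.0
            rows.append(row)
            rhs.append(0.5 * pitch * (sx[i, j] + sx[i, j + 1]))
    for i in range(ny - 1):              # differences between y neighbors
        for j in range(nx):
            row = np.zeros(ny * nx)
            row[idx[i + 1, j]], row[idx[i, j]] = 1.0, -1.0
            rows.append(row)
            rhs.append(0.5 * pitch * (sy[i, j] + sy[i + 1, j]))
    w, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    w = w.reshape(ny, nx)
    return w - w.mean()

if __name__ == "__main__":
    # Synthetic quadratic surface sampled on a 10.4 um grid
    y, x = np.mgrid[0:8, 0:10] * 10.4
    w_true = 1e-3 * (x**2 + 0.5 * x * y - y**2)
    sx = 1e-3 * (2 * x + 0.5 * y)
    sy = 1e-3 * (0.5 * x - 2 * y)
    w = zonal_reconstruct(sx, sy, 10.4)
    print(np.abs(w - (w_true - w_true.mean())).max())
```

The dense matrix keeps the example short but scales poorly. For a full sensor frame a sparse solver or an iterative scheme would be the practical choice.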

A differential interference contrast microscope is known to provide a qualitative image of surface roughness. A comparison between such a qualitative image and a quantitative image taken by our wavefront sensor is discussed in Supplementary Section 9.

WLI can realize diffraction-limited resolution in the horizontal plane and sub-nanometer resolution in the vertical direction. Although our sensor can also realize diffraction-limited resolution in the horizontal plane, sub-nanometer vertical resolution is challenging for it. The important advantage of the angle-based sensor over WLI is its speed. The topography shown in Fig. 4d took 30 ms to acquire. This time is limited only by the frame acquisition time of the camera, so it can easily be improved by using a high-speed camera to reach frame rates above 1000 Hz. In contrast, the image in Fig. 4c took the WLI 40 s because of vertical scanning and stitching.

The fast speed allows us to demonstrate a useful capability: capturing real-time topography at a video-frame rate. This capability can be used for observing temporal evolution of living biological samples or for studying dynamics of materials going through fast changes.

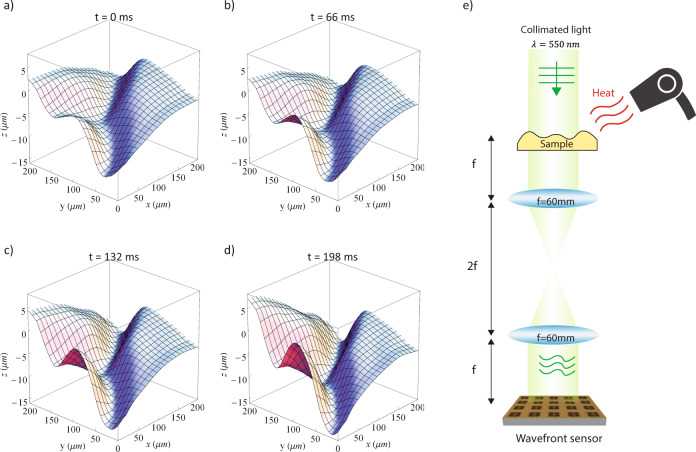

Here we measure the temporal dynamics of a surface during a fast coagulation process. A droplet of PMMA polymer on a transparent microscope slide is heated by a heat gun. Under the air flow and high temperature, its surface changes rapidly. The surface topography is recorded at 30 frames per second. Figure 5e shows the setup. Figure 5a–d show the time lapse of the surface profile during the phase transition. The video is available in the Supplementary Material. Before the heat is applied, the PMMA remains still and the surface is mostly flat. As the temperature of the PMMA increases, the surface starts to wrinkle as it begins to coagulate from the side where the heat is applied. The surface morphology changes rapidly, as shown in Fig. 5a–d. In future development, it should be possible to observe microscopic surface morphology with microsecond temporal resolution by using a high-speed camera31.

Fig. 5. Surface topography at video-frame rate.

a–d Selected time lapse of a surface profile captured at a video-frame rate. The sample is a PMMA polymer on a transparent microscope slide that goes through a fast coagulation process as its temperature increases. e Measurement setup for a video-frame-rate measurement. The optical setup is the same as that in Fig. 4b. A heat gun is used to increase the temperature of the PMMA polymer on the microscope slide.

The wavefront sensor can also operate in reflection mode by adding a beam splitter to the existing setup. Reflection mode is particularly useful for measuring highly reflective samples. The reflection-mode setup and measurement results are discussed in Supplementary Section 6. The sensitivity of the wavefront measurement relies on accurate measurement of the incident angle, which is a strong function of the signal-to-noise ratio of the photodetector. We provide a brief analysis in Supplementary Section 7.

Discussion

In conclusion, robust and fast wavefront measurement has great potential in a number of areas, including material characterization, manufacturing, and life science research. Angle-based wavefront sensors have been successful in characterizing slowly varying wavefronts. Here we greatly improve their sampling density and angular dynamic range, allowing them to be used for demanding applications such as quantitative phase imaging. They can complement the interference-based methods that usually dominate this application area. The sensors involve only one deposition and etching step, and thus can be fully integrated into the existing CMOS sensor fabrication process. They could be mass produced at low cost32. Video-frame topography could be useful for material and life scientists studying the temporal dynamics of microscopic samples.

Acknowledgements

The work was supported by NSF Career Award (1749050). We acknowledge helpful discussion with Professor Na Ji at UC Berkeley.

Author contributions

S.Y., L.Y., and Z.Y. conceived the project. S.Y. fabricated the device. S.Y. and J.X. designed the experimental setup and performed the measurements. S.Y., M.Z., and Z.W. performed the simulations. All authors discussed the results and contributed to the writing of the manuscript.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-021-26169-z.

References

- 1. Bon P, Maucort G, Wattellier B, Monneret S. Quadriwave lateral shearing interferometry for quantitative phase microscopy of living cells. Opt. Express. 2009;17:13080–13094. doi: 10.1364/OE.17.013080.

- 2. Kwon H, Arbabi E, Kamali SM, Faraji-Dana M, Faraon A. Single-shot quantitative phase gradient microscopy using a system of multifunctional metasurfaces. Nat. Photonics. 2020;14:109–114. doi: 10.1038/s41566-019-0536-x.

- 3. Popescu G, Ikeda T, Dasari RR, Feld MS. Diffraction phase microscopy for quantifying cell structure and dynamics. Opt. Lett. 2006;31:775–777. doi: 10.1364/OL.31.000775.

- 4. Park Y, Depeursinge C, Popescu G. Quantitative phase imaging in biomedicine. Nat. Photonics. 2018;12:578–589. doi: 10.1038/s41566-018-0253-x.

- 5. Fried DL. Least-square fitting a wave-front distortion estimate to an array of phase-difference measurements. JOSA. 1977;67:370–375. doi: 10.1364/JOSA.67.000370.

- 6. Cubalchini R. Modal wave-front estimation from phase derivative measurements. JOSA. 1979;69:972–977. doi: 10.1364/JOSA.69.000972.

- 7. Southwell WH. Wave-front estimation from wave-front slope measurements. JOSA. 1980;70:998–1006. doi: 10.1364/JOSA.70.000998.

- 8. Platt BC, Shack R. History and principles of Shack-Hartmann wavefront sensing. J. Refract. Surg. 2001;17:S573–S577. doi: 10.3928/1081-597X-20010901-13.

- 9. Primot J. Theoretical description of Shack–Hartmann wave-front sensor. Opt. Commun. 2003;222:81–92. doi: 10.1016/S0030-4018(03)01565-7.

- 10. Wizinowich PL, et al. The W. M. Keck Observatory Laser Guide Star Adaptive Optics System: overview. Publ. Astron. Soc. Pac. 2006;118:297–309. doi: 10.1086/499290.

- 11. Davies R, Kasper M. Adaptive optics for astronomy. Annu. Rev. Astron. Astrophys. 2012;50:305–351. doi: 10.1146/annurev-astro-081811-125447.

- 12. Liang J, Williams DR, Miller DT. Supernormal vision and high-resolution retinal imaging through adaptive optics. JOSA A. 1997;14:2884–2892. doi: 10.1364/JOSAA.14.002884.

- 13. Wang K, et al. Direct wavefront sensing for high-resolution in vivo imaging in scattering tissue. Nat. Commun. 2015;6:7276. doi: 10.1038/ncomms8276.

- 14. Ji N. Adaptive optical fluorescence microscopy. Nat. Methods. 2017;14:374–380. doi: 10.1038/nmeth.4218.

- 15. Forest CR, Canizares CR, Neal DR, McGuirk M, Schattenburg ML. Metrology of thin transparent optics using Shack-Hartmann wavefront sensing. Opt. Eng. 2004;43:742–753. doi: 10.1117/1.1645256.

- 16. Li C, et al. Three-dimensional surface profiling and optical characterization of liquid microlens using a Shack-Hartmann wave front sensor. Appl. Phys. Lett. 2011;98:171104. doi: 10.1063/1.3583379.

- 17. Li C, et al. Three-dimensional surface profile measurement of microlenses using the Shack–Hartmann wavefront sensor. J. Microelectromech. Syst. 2012;21:530–540. doi: 10.1109/JMEMS.2012.2185821.

- 18. Gong H, et al. Optical path difference microscopy with a Shack–Hartmann wavefront sensor. Opt. Lett. 2017;42:2122–2125. doi: 10.1364/OL.42.002122.

- 19. Soldevila F, Durán V, Clemente P, Lancis J, Tajahuerce E. Phase imaging by spatial wavefront sampling. Optica. 2018;5:164–174. doi: 10.1364/OPTICA.5.000164.

- 20. Yi S, et al. Subwavelength angle-sensing photodetectors inspired by directional hearing in small animals. Nat. Nanotechnol. 2018;13:1143–1147. doi: 10.1038/s41565-018-0278-9.

- 21. Kildishev AV, Boltasseva A, Shalaev VM. Planar photonics with metasurfaces. Science. 2013;339:1232009.

- 22. Yu N, Capasso F. Flat optics with designer metasurfaces. Nat. Mater. 2014;13:139–150. doi: 10.1038/nmat3839.

- 23. Khorasaninejad M, et al. Metalenses at visible wavelengths: diffraction-limited focusing and subwavelength resolution imaging. Science. 2016;352:1190–1194. doi: 10.1126/science.aaf6644.

- 24. Wang S, et al. A broadband achromatic metalens in the visible. Nat. Nanotechnol. 2018;13:227–232. doi: 10.1038/s41565-017-0052-4.

- 25. Li X, et al. Multicolor 3D meta-holography by broadband plasmonic modulation. Sci. Adv. 2016;2:e1601102. doi: 10.1126/sciadv.1601102.

- 26. Wu PC, et al. Visible metasurfaces for on-chip polarimetry. ACS Photonics. 2018;5:2568–2573. doi: 10.1021/acsphotonics.7b01527.

- 27. Wang Z, et al. Single-shot on-chip spectral sensors based on photonic crystal slabs. Nat. Commun. 2019;10:1020. doi: 10.1038/s41467-019-08994-5.

- 28. Singh R, et al. Ultrasensitive terahertz sensing with high-Q Fano resonances in metasurfaces. Appl. Phys. Lett. 2014;105:171101. doi: 10.1063/1.4895595.

- 29. Yang Y, Kravchenko II, Briggs DP, Valentine J. All-dielectric metasurface analogue of electromagnetically induced transparency. Nat. Commun. 2014;5:5753. doi: 10.1038/ncomms6753.

- 30. Wyant JC. White light interferometry. Proc. SPIE. 2002;4737:98–107. doi: 10.1117/12.474947.

- 31. nac Image Technology. Memrecam-ACS-1-M60-40-Data-Sheet.pdf. https://www.nacinc.com/pdf.php?pdf=/datasheets/Memrecam-ACS-1-M60-40-Data-Sheet.pdf.

- 32. The Growing World of the Image Sensors Market. FierceElectronics. https://www.fierceelectronics.com/embedded/growing-world-image-sensors-market (2012).
