Abstract

Functional localizers are invaluable: they help define regions of interest, enable cross-study comparisons and, most importantly, allow data to be aggregated and meta-analyzed across studies and laboratories. To achieve these goals within the non-human primate (NHP) imaging community, there is a pressing need for standardized and validated localizers that can be readily implemented across different groups. The goal of this paper is to provide an overview of the value of localizer protocols to imaging research; we describe a number of commonly used or novel localizers in NHPs, as well as keys to implementing them across studies. As has been shown with the aggregation of resting-state imaging data in the original PRIME-DE submissions, we believe that the field is ready to apply the same initiative to task-based functional localizers in NHP imaging. By coming together to collect large datasets across research groups, implementing the same functional localizers, and sharing the localizers and data via PRIME-DE, it is now possible to fully test their robustness, selectivity and specificity. To this end, we reviewed a number of common localizers and created a repository of well-established localizers that are easily accessible and implemented through the PRIME-RE platform.

Keywords: fMRI, Non-human primate, Brain, Localizers, Retinotopy, Face, Metadata

1. Importance of functional localizers in the study of primate brain functions

Localizers are extensively used in fMRI studies, in both human and non-human primates, to identify cortical regions of interest in a systematic and repeatable manner. For example, in a face localizer paradigm (Kanwisher et al., 1997), stimuli from different visual categories, including face and non-face stimuli, are presented, and face-selective areas are identified by contrasting the hemodynamic responses to face and non-face stimuli. These activations can subsequently serve as anatomo-functional anchor points that can be compared across studies and used to guide invasive follow-up studies. For example, face areas can be easily identified and compared across multiple subjects or across species using a face localizer (Tsao et al., 2008a). These activations can also serve as a starting point for studies investigating the functional properties of the localized areas in independent tasks; for example, one can study how face patches differentially respond to positive and negative facial expressions (Hadj-Bouziane et al., 2008; Zhu et al., 2013). Moreover, these studies can serve to build probabilistic functional brain atlases (Huang et al., 2019; Janssens et al., 2014). Last but not least, such localizers are becoming increasingly critical for guiding invasive electrophysiological and perturbation studies, which are required to unravel the precise neuronal properties of these fMRI-defined patches (Alizadeh et al., 2018; Caprara et al., 2018; Conway et al., 2007; Dubois et al., 2015; Freiwald et al., 2009; Gerits et al., 2012; Miyamoto et al., 2017; Park et al., 2017; Taubert et al., 2015; Tsao et al., 2006; Van Dromme et al., 2015).

1.1. Characteristics of efficient functional localizers

A functional localizer ideally needs to meet specific conditions, which are briefly reviewed here (Saxe et al., 2006). A valid localizer needs to be robust and specific. Its robustness depends on whether using the same localizer, the same task, and the same stimuli yields brain activations that are highly reproducible across subjects, studies, and research centers. Additionally, the localizer needs to be specific enough to selectively activate regions of interest involved in the targeted functional processes, to the exclusion of others (Berman et al., 2010). For example, a face localizer can be defined by the contrast between face stimuli and inanimate object stimuli. A more specific face localizer can be defined by the contrast between face stimuli and a mixture of inanimate object stimuli and animate non-face stimuli such as animals, headless bodies and so on.

Lastly, a localizer should also be unbiased. Specificity is necessary for a good functional localizer (for example, using expanding rings in a retinotopic localizer favors responses related to eccentricity coding), but this specificity must be considered when interpreting results. For example, although a given localizer (e.g., standardized neutral front-facing faces) might specifically target a certain functional property in an unbiased manner (i.e., responses to neutral, front-facing faces), the same localizer might miss areas relevant to another process of interest (e.g., gaze following; Marciniak et al., 2014). Therefore, it is important for researchers to fully understand the specificity associated with a given localizer and the potential descriptive biases it inherently introduces. Accounting and correcting for these features can help foster the development of novel localizers. Additionally, novel analytical approaches (such as dimensionality reduction or machine learning algorithms) can help uncover and correct for biases introduced by a given localizer, or by a combination of localizers, by looking for common and defining features.

The activations obtained with such localizers can be directly compared across subjects and experimental setups. Importantly, the resulting functional observations can be shared and pooled, enhancing the statistical power of the described effects and strengthening the inferences that can be drawn from such data (Rossion et al., 2012). In addition, localizers often capture functional properties more consistently and precisely than atlas-based stereotaxic or anatomical localization, particularly when the function does not perfectly correspond to structural landmarks. The subject-specific regions of interest (ROIs) identified by such localizers are defined with higher sensitivity and enhanced functional resolution (Nieto-Castañón and Fedorenko, 2012). They can subsequently be used to probe local functional responses in more complex fMRI designs (Berman et al., 2010). Because hypotheses can be tested on these ROIs rather than on the whole brain, statistical power is enhanced, and this benefit is further boosted in multivariate analyses within these regions, such as multi-voxel pattern analyses (MVPA; Abassi and Papeo, 2020; Dubois et al., 2015; Rezk et al., 2020; Shashidhara et al., 2020; Valdés-Sosa et al., 2020). Care should be taken to avoid double-dipping and circular statistical reasoning by ensuring that the subsequent functional interrogation of localizer-identified ROIs is performed on a dataset independent of the localizer data (Button, 2019; Kriegeskorte et al., 2009). In the context of non-human primate research, these functional ROIs or fiducials can also be targeted by single-cell recordings or focal perturbation tools to identify the neuronal categories that subserve their functional activation, as well as the underlying computations (Dubois et al., 2015; Freiwald et al., 2009; Park et al., 2017; Popivanov et al., 2014; Taubert et al., 2015; Klink et al., 2021, this issue). Overall, an efficient localizer thus fosters solid knowledge accumulation.
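To make the circularity issue concrete, the following Python sketch (assuming NumPy, and a hypothetical per-run array of face-minus-object contrast estimates with one row per run and one column per voxel) defines an ROI on even-indexed runs and measures its response on the held-out odd-indexed runs. On pure noise, the "circular" estimate computed on the selection runs is inflated, whereas the estimate on independent runs stays near zero. The odd/even split and the t threshold of 2.0 are illustrative choices, not recommendations.

```python
import numpy as np


def split_half_roi(diff, threshold=2.0):
    """Define an ROI on even-indexed runs and measure it on odd-indexed runs.

    diff: array of shape (n_runs, n_voxels) holding, for each run, a
    face-minus-object contrast estimate per voxel (hypothetical input).
    Returns the boolean ROI mask, the circular estimate (same runs used to
    select and measure) and the independent estimate (held-out runs).
    """
    diff = np.asarray(diff, dtype=float)
    loc = diff[0::2]   # runs used to define the ROI
    test = diff[1::2]  # held-out runs used to measure the ROI
    # Voxel-wise one-sample t statistic across the localizer runs
    se = loc.std(axis=0, ddof=1) / np.sqrt(loc.shape[0])
    t = loc.mean(axis=0) / se
    roi = t > threshold
    if not roi.any():
        return roi, float("nan"), float("nan")
    circular = loc[:, roi].mean()      # biased upward by the selection step
    independent = test[:, roi].mean()  # unbiased: data not used for selection
    return roi, float(circular), float(independent)


# Pure noise: no voxel is truly face-selective
rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, size=(8, 2000))
roi, circular, independent = split_half_roi(noise)
print(roi.sum(), round(circular, 2), round(independent, 2))
```

The same logic extends to any independent split (e.g., separate sessions); what matters is that the runs used to measure the ROI played no part in selecting it.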
Several localizer sharing initiatives can be found in the human fMRI community (e.g., the Stanford Vision and Perception Neuroscience localizers, or the Social Cognitive Neuroscience lab localizers). Starting such a localizer sharing initiative is at the core of the present paper.

1.2. Benefits of localizer studies to NHP neuroscience research

In the context of NHP imaging, functional localizers have distinctive added value compared to human imaging. The first and most straightforward advantage is the increase in the number of subjects when data are pooled across study sites, while at the same time allowing each study to comply with the reduction principle of the 3Rs (refinement, reduction, replacement) (Prescott and Lidster, 2017; Prescott and Poirier, 2021; Tannenbaum and Bennett, 2015). This results in higher power in group analyses and, at the same time, a better description and understanding of inter-individual differences. Second, as indicated above, localizer-identified ROIs are extremely valuable for precisely guiding electrophysiological recordings or reversible manipulations at well-identified functional sites in the same animal (Alizadeh et al., 2018; Caprara et al., 2018; Conway et al., 2007; Dubois et al., 2015; Freiwald et al., 2009; Gerits et al., 2012; Miyamoto et al., 2017; Park et al., 2017; Taubert et al., 2015; Tsao et al., 2006; Van Dromme et al., 2015). In addition, understanding inter-individual variability in these localizer-identified ROIs can help optimize electrophysiological recordings or focal perturbations in monkeys that cannot be scanned because of non-MRI-compatible head-mounted hardware, lack of easy access to an MRI facility, or time constraints. When time constraints are at stake, localizers that require minimal animal training, or probabilistic localizers, can be useful (Huang et al., 2019; Janssens et al., 2014). Last but not least, using the same functional localizer in different primate species (e.g., humans, macaques, and marmosets) is crucial to understand functional homologies and differences in the functional and anatomo-functional organization of the brain (Hung et al., 2015; Mantini et al., 2012b; Orban and Jastorff, 2014; Peeters et al., 2009; Van Essen and Glasser, 2018).
In the following, we illustrate the prospective utility of sharing NHP localizer data with two specific topics: cortical lateralization in primates and anatomo-functional organization constraints across primate species.

Hemispheric specialization is thought to be an important feature of sensory and cognitive processing, and is especially pronounced in humans (Karolis et al., 2019). Although anatomical, psychophysical and lesion studies in monkeys have provided some evidence for hemispheric lateralization (Denenberg, 1981; Falk, 1987; Halpern et al., 2005; Hamilton, 1983; Vogels et al., 1994), surprisingly few physiological data on lateralized processing in non-human primates exist outside the auditory system (Heffner and Heffner, 1984; Joly et al., 2012b; Poremba et al., 2004). The main reason is that electrophysiology, which constitutes the majority of functional studies in NHPs, is ill-suited to providing conclusive evidence about lateralized processing: asymmetrical electrophysiological responses in the two hemispheres may be explained by mismatched homotopic targets, leading to systematic sampling biases. Whole-brain imaging is better suited to addressing the lateralization question, as it is expected to be free of spatial sensitivity biases across the two hemispheres, provided that care is taken to balance the profile and sensitivity of the surface coils. Although several neuroimaging laboratories have obtained indications of lateralized processing in NHPs (Gil-da-Costa et al., 2006; Joly et al., 2012b; Petkov et al., 2008; Poremba et al., 2004), these data cannot be considered irrefutable due to the low number of animals participating in such experiments. Concerted efforts using similar paradigms, as promoted in the present manuscript, will yield enough power to finally make conclusive inferences about this potentially fundamental organizational principle of primate brains. This type of data sharing can be further enhanced by systematically documenting monkey manual lateralization using, for example, the bimanual coordination tube task (Hopkins, 1995).
This would be important as, for macaques and marmosets, the two main primate species used in MRI neuroimaging, the existence of a population-level handedness remains unclear for bimanual coordinated tasks, although at least in macaques, strong individual hand preferences have been reported (Meguerditchian et al., 2013).
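A common way to quantify lateralization in pooled data, borrowed from human fMRI practice, is the lateralization index LI = (L - R) / (L + R), computed here from suprathreshold voxel counts in homologous left and right ROIs. The plain-Python sketch below (function names are hypothetical) computes per-animal indices and a simple one-sample t statistic across animals, illustrating why group-level inference depends on the number of animals contributed. It assumes hemisphere-balanced coil sensitivity, as discussed above; note also that voxel-count LIs depend on the statistical threshold, and some studies use signal magnitude instead.

```python
import statistics


def lateralization_index(left, right):
    """LI = (L - R) / (L + R); +1 is fully left-lateralized, -1 fully right."""
    total = left + right
    if total == 0:
        raise ValueError("no suprathreshold voxels in either hemisphere")
    return (left - right) / total


def group_lateralization(counts):
    """counts: list of (left, right) voxel counts, one tuple per animal.

    Returns the mean LI and a one-sample t statistic against LI = 0.
    Requires at least two animals with non-identical LIs.
    """
    lis = [lateralization_index(left, right) for left, right in counts]
    mean_li = statistics.mean(lis)
    sem = statistics.stdev(lis) / len(lis) ** 0.5
    return mean_li, mean_li / sem


# Hypothetical counts from three animals
print(group_lateralization([(30, 10), (25, 15), (35, 5)]))
```

With only a handful of animals, the t statistic has very few degrees of freedom, which is precisely the limitation that pooling across centers would address.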

What determines the location of functional areas with respect to anatomical landmarks such as sulci or fundi, and whether this could be at the root of inter-species and inter-individual differences, is an important question in neuroscience. Indeed, anatomical landmarks such as sulci or gyri are thought to reflect underlying cortico-cortical and subcortical connectivity (Passingham et al., 2002; Van Essen, 1997); cortical folding patterns have been associated with psychiatric and neurological disorders (Cachia et al., 2008; Im et al., 2008; Penttilä et al., 2009) and described as being under genetic control (Atkinson et al., 2015; Belmonte et al., 2015). Accordingly, the morphology of the human ventromedial prefrontal or midcingulate cortex influences its functional organization (Amiez et al., 2018; 2013; Lopez-Persem et al., 2019). In parallel, recent studies have compared sulcal organization across primates (humans, chimpanzees, baboons and macaques) and showed a progressive increase in the complexity of sulcal patterns: some patterns are preserved across species, others occur with increasing probability from macaques to humans, and others still are present only in chimpanzees or humans (Amiez et al., 2019; Van Essen et al., 2019). This contributes to addressing the question of the evolution of brain functions across primate species (Van Essen et al., 2016; Van Essen and Glasser, 2018). In the discussion of inter-species and inter-individual functional variability, functional-anatomical correspondence is a crucial question, as some anatomical landmarks are preserved across species and individuals while others are not (Amiez et al., 2019; Baumann et al., 2013; Orban et al., 2004; Sereno and Tootell, 2005; Tootell et al., 2003; Vanduffel et al., 2014). This question has been widely explored in humans owing to the availability of data from large cohorts of subjects. So far, it remains an underexploited research avenue in monkeys, the major limiting factor being cohort size.

There is thus a strong scientific need for collecting robust and replicable imaging data from NHPs. However, the primate neuroimaging community has collected localizer data using non-uniform, laboratory-specific protocols. As a result of this lack of coherence across localizer studies, little of the existing data can currently be shared or pooled. Platforms such as PRIME-DE and PRIME-RE aim to achieve scalable NHP imaging (Milham et al., 2020, 2018; Messinger et al., 2021, this issue) and to collect standardized localizer data across centers worldwide. Most of the localizers discussed next have also been used in humans, thus opening the way to in-depth, large-scale investigation of cross-species functional and anatomo-functional homologies. Obtaining such large-scale "homogeneous" datasets using the same paradigms is precisely one of the ideas put forward in the current manuscript.

1.3. Proper understanding of localizer data

While the localizer approach has proven extremely useful in human and monkey cognitive neuroscience research using fMRI, a critique of this approach has also been voiced (Friston et al., 2006). The main idea behind these considerations is that one always needs to keep in mind which specific process each localizer captures and which processes it might miss. For example, the target sensory or cognitive process might be state- and/or context-dependent, so that a single localizer might be too restrictive to identify all relevant functional ROIs. This might be more critical for ROIs higher up in the processing hierarchy, where a diversity of operations may be implemented, than for lower-level ROIs. Also, by using localizer-based ROIs to seed more complex fMRI task analyses, one assumes response homogeneity within these ROIs. If one believes there may be significant heterogeneity of responses within a region, voxel-based analyses, such as searchlight MVPA (Haxby et al., 2001), might be more appropriate to probe the target functional networks.

Furthermore, while the aim of this review is to provide a set of unifying localizers that can be implemented across research groups, it is also important to develop new localizers to identify more specific or even novel targets. Such new localizers are expected to refine current knowledge and to yield new knowledge about functional brain organization. In this respect, it is useful to pair them with more standard localizers, so as to provide precise grounds for description and comparison relative to prior knowledge. For example, the gaze-following face patch cannot be identified using the classical face patch localizers and requires a specific type of localizer (Marciniak et al., 2014).

2. Challenges in the face of efficient cross-center non-human primate fMRI localizer data sharing

While the scientific value of collecting and sharing NHP localizer fMRI data is clear, several constraints need to be anticipated and solutions formalized in order to make such data collection and sharing as efficient and useful as possible. In the following section, we identify two independent types of challenges. The first pertains to sources of possible cross-center discrepancies in data collection, which complicate subsequent cross-center data analysis (see Box 1). The second relates to the need to minimize the time and effort required of each center for data collection, so that as many centers as possible invest in this effort.

Box 1. Sources of variance in standard localizers.

The goal of any good localizer is to provide a reliable estimate of the region(s) that respond best to a particular category of stimuli. The estimates need to be reliable both within a given subject and importantly, across the population. It is therefore critical for researchers to assess sources of variance for each type of localizer in order to accurately compare responses across subjects and different localizers. Below, we describe some of the main sources of variance that can influence the reliability and generalizability of localizers:

Stimuli: The choice of specific stimuli can have a major impact on the reliability and reproducibility of a given localizer paradigm, both within and across subjects. This is particularly true for early perceptual localizers such as visual retinotopic and auditory tonotopic mapping, whereas higher-level localizers allow more leeway in the exact stimulus choice. As an example, in retinotopic mapping, expanding rings and rotating wedges provide different retinotopic maps, based on eccentricity or polar angle preference, respectively. In contrast, face localizers are only marginally influenced by the use of human or monkey faces, or by the use of objects or scrambled images as the control condition. The choice of a given stimulus should be made carefully with respect to the exact nature of the localizer, and one should try to match the existing literature, as this will increase the ability to compare across studies and populations.

Paradigm: Generally speaking, three main paradigms are used in localizers: block designs, event-related designs, and phase-encoding approaches. Block designs tend to yield the most reliable responses, as they take advantage of the cumulative effect of the slow hemodynamic response, whereas event-related designs are excellent for differentiating individual stimuli and components of a given task. Phase-encoding approaches do not seek to identify specific regions of interest. Rather, their aim is to identify the preferred value of each cortical voxel along a stimulus dimension (e.g., eccentricity or orientation) throughout the cortical extent of interest (e.g., striate, extrastriate, temporal, parietal cortex, etc.).
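For block designs, the usual analysis convolves each condition's boxcar with a hemodynamic response function (HRF) and fits a general linear model. The sketch below (assuming NumPy; all function names and timing values are hypothetical) does this for a face versus object block localizer and returns the t statistic of the face > object contrast. The double-gamma HRF shape is a human-style placeholder: macaque BOLD responses differ in timing, and with MION contrast agents the response sign is inverted, so a lab-specific response function should be substituted.

```python
import math

import numpy as np


def double_gamma_hrf(tr, duration=32.0):
    """Human-style double-gamma HRF sampled every `tr` seconds (placeholder shape)."""
    t = np.arange(0.0, duration, tr)
    peak = t**5 * np.exp(-t) / math.gamma(6)          # positive lobe peaking near 5 s
    undershoot = t**15 * np.exp(-t) / math.gamma(16)  # late undershoot near 15 s
    h = peak - undershoot / 6.0
    return h / h.sum()


def block_regressor(onsets_s, block_s, n_vols, tr):
    """Boxcar for one condition convolved with the HRF, one value per volume."""
    box = np.zeros(n_vols)
    for onset in onsets_s:
        box[int(round(onset / tr)):int(round((onset + block_s) / tr))] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]


def contrast_t(y, X, c):
    """Ordinary least-squares fit of y on X and t statistic for contrast c."""
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float(c @ beta / np.sqrt(var_c))


# Hypothetical run: TR = 2 s, 16 s blocks cycling face, rest, object, rest
tr, n_vols = 2.0, 160
face = block_regressor(range(0, 320, 64), 16.0, n_vols, tr)
obj = block_regressor(range(32, 320, 64), 16.0, n_vols, tr)
X = np.column_stack([face, obj, np.ones(n_vols)])
rng = np.random.default_rng(1)
y = 2.0 * face + 1.0 * obj + rng.normal(0.0, 0.3, n_vols)  # simulated voxel
print(contrast_t(y, X, np.array([1.0, -1.0, 0.0])))  # face > object
```

In practice, motion and eye-movement regressors of no interest would be added as extra columns of X, and temporal autocorrelation in the noise would be modeled, which this sketch omits.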

Behavior: A critical component of almost any localizer is the animal's behavior, and how performance is measured. It cannot be overstated how reliant fMRI data are on the subject's compliance with the task parameters. Like stimulus choice, behavior can have a particularly strong influence on low-level perceptual localizers: retinotopic maps require tight fixation throughout the whole paradigm. Behavior also has a strong influence on higher-order cognitive localizers involving attention or decision-making. Indeed, several of the cortical regions involved in these processes also respond to saccadic eye movements. More generally, any source of signal of no interest (saccades, retinal slip, changes in blink rate or heart rate, etc.) will impact the reliability with which the signals of interest can be described (Poirier et al., this issue).

2.1. Sources of possible variability across center & minimal harmonization requirements

Signal-to-noise ratio can vary across non-human primate MRI centers due to multiple non-neuronal sources of variability (Milham et al., 2018). These include the specific scanner, receive coils, transmit coils, and sequences employed. Other sources relate to the use of BOLD vs. contrast agents (type, dosage, half-life, etc.), the head fixation system, monkey training procedures for minimizing head motion artefacts and, perhaps most importantly, actual monkey behavior. While some of these cross-center differences are inescapable, others can be minimized through prior coordination (e.g., BOLD vs. contrast agents).

Additional sources of cross-center differences arise from the specifics of the experimental design and hardware used, in terms of stimulus duration, frequency, luminance, contrast with the background, and categories, and from the quality of projectors, MR-compatible headphones and other stimulus-delivery hardware. Subtle variations in these components may impact effect size at equal sample sizes (number of blocks or runs). In phase-encoded retinotopic mapping, differences in timing parameters (e.g., stimulus timing) or spatial stimulation parameters (e.g., visual field coverage) may have a drastic impact on the analyses. This will affect (or even preclude) group analyses and bias analyses of inter-individual variability. Collectively agreeing beforehand on a standardized localizer, i.e., a set of stimuli and a specific stimulation design, ideally by sharing the stimuli and the design on a preselected set of experimental control platforms, would help solve several of these issues and provide a clear guide for critical experimental parameters (see Box 2).
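Why timing matters so much for phase-encoded designs can be seen from how they are typically analyzed: each voxel's time series is Fourier-transformed, and the response phase at the stimulus frequency is read out as the preferred stimulus position within the cycle. The NumPy sketch below (function name hypothetical) computes that phase together with a commonly used coherence measure. Any offset between the stimulus timing assumed in the analysis and the actual timing adds a phase shift of 2*pi*n_cycles*k/n_vols radians for an offset of k volumes, which is directly mis-mapped as a change in preferred eccentricity or polar angle. The hemodynamic delay introduces the same kind of shift; a common practice is to combine runs with opposite stimulus directions (e.g., expanding and contracting rings) so that it cancels.

```python
import numpy as np


def phase_encoded_map(ts, n_cycles):
    """Response phase and coherence at the stimulus frequency.

    ts: array (..., n_vols) of voxel time series from one phase-encoded run.
    n_cycles: number of full stimulus cycles (e.g., ring expansions) in the run.
    Phase is returned in radians in [0, 2*pi): 0 means the response peaks at
    the start of each cycle. Coherence is the stimulus-frequency amplitude
    relative to the total (non-DC) spectral amplitude.
    """
    ts = np.asarray(ts, dtype=float)
    ts = ts - ts.mean(axis=-1, keepdims=True)
    spectrum = np.fft.rfft(ts, axis=-1)
    amp = np.abs(spectrum)
    phase = np.mod(-np.angle(spectrum[..., n_cycles]), 2 * np.pi)
    coherence = amp[..., n_cycles] / np.sqrt((amp[..., 1:] ** 2).sum(axis=-1))
    return phase, coherence


# Simulated voxel: 8 cycles in 120 volumes, response lagging by 3 volumes
n_vols, n_cycles, lag = 120, 8, 3
t = np.arange(n_vols)
voxel = np.cos(2 * np.pi * n_cycles * (t - lag) / n_vols)
phase, coherence = phase_encoded_map(voxel, n_cycles)
# A lag of k volumes shifts the phase by 2*pi*n_cycles*k/n_vols radians
print(phase, 2 * np.pi * n_cycles * lag / n_vols, coherence)
```

Converting phase to a stimulus position (phase / (2*pi) times the cycle extent) assumes a linear stimulus sweep; log-scaled ring expansions would need the corresponding nonlinear mapping.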

Box 2. Requirements for sharing localizer data and links to localizer stimuli samples.

Equalizing stimulation conditions:

One should aim to equalize, as much as possible, the sensory stimulation across laboratories, which are usually equipped with different stimulation and acquisition hardware. For visual stimulation, for example, one should try to present the stimuli at the same size, with the same spatial and temporal resolution, and with the same mean luminance. To accurately time all events, it can be useful to present a small white square, synchronized with the onset and offset of the stimuli, in one of the corners of the screen but hidden from the monkeys' view. These light transitions can be captured with a photocell. The same can be done for natural movie sequences: the photocell can be triggered at the beginning of each TR simply by inserting this small white square in a corner of the movie, again invisible to the monkeys. Timestamps of these photo events can be used in subsequent analyses and are exceedingly useful for obtaining accurate post-hoc logs of all event types, including the synchronized position of the eyes and hands, pupil size, juice rewards, etc. These events can further be used as regressors of interest or of no interest. The photo events on their own are an excellent means to check post hoc whether the planned stimuli were actually presented during the experiment, which is particularly relevant in event-related designs.
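Extracting photo events from a recorded photocell trace and checking them against the planned stimulus log can be done with a simple threshold crossing. The NumPy sketch below assumes an analog photocell trace sampled at a known rate; the function names, the midpoint threshold, the 50 ms debounce interval and the 50 ms matching tolerance are all illustrative assumptions to be adapted to each setup's hardware.

```python
import numpy as np


def photocell_onsets(signal, fs, threshold=None, min_gap_s=0.05):
    """Times (s) of rising edges in a photocell trace sampled at fs Hz.

    The threshold defaults to halfway between the trace minimum and maximum.
    Crossings closer than min_gap_s to the previously kept onset are dropped,
    which can suppress flicker from the display refresh. A trace that starts
    above threshold has no rising edge at sample 0.
    """
    signal = np.asarray(signal, dtype=float)
    if threshold is None:
        threshold = 0.5 * (signal.min() + signal.max())
    above = signal > threshold
    rising = np.flatnonzero(~above[:-1] & above[1:]) + 1
    kept = []
    for idx in rising:
        if not kept or (idx - kept[-1]) / fs >= min_gap_s:
            kept.append(idx)
    return np.array(kept, dtype=float) / fs


def missing_events(planned_s, measured_s, tol_s=0.05):
    """Planned onsets with no measured photocell onset within tol_s seconds."""
    measured_s = np.asarray(measured_s, dtype=float)
    if measured_s.size == 0:
        return list(planned_s)
    return [t for t in planned_s if np.min(np.abs(measured_s - t)) > tol_s]


# Simulated 1 kHz trace with three white-square flashes
fs = 1000
trace = np.zeros(3000)
trace[500:700] = 1.0
trace[1500:1700] = 1.0
trace[2500:2600] = 1.0
onsets = photocell_onsets(trace, fs)
print(onsets, missing_events([0.5, 1.0, 1.5, 2.5], onsets))
```

Offsets can be obtained the same way from falling edges (above[:-1] & ~above[1:]), and the resulting timestamps can then serve as event onsets in the design matrix.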

Metadata to collect in addition to imaging data:

(See Poirier et al., this issue, for a broader discussion of this topic.) For most localizers, it is important to collect eye information concomitantly with the fMRI data, either using MRI-compatible video-tracking systems (at 60 Hz or more) or by extracting eye information from the fMRI images (at TR resolution). For some localizer data, heart rate can be important metadata to track. Automated video-based heart rate tracking methods are now available and could be used across centers (Froesel et al., 2020). As larger cohorts of animals start to participate in experiments across multiple sites, it would be highly beneficial to acquire blood samples for genetic analysis. Other, more trivial experimental details such as weight, sex, date and time of data acquisition, and housing conditions should be accurately logged, as they may provide additional insights into the results. Although not feasible at many sites, additional physiological measures such as respiration, or body motion, could add useful information to the analyses and to the interpretation of the results.
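Eye traces recorded at 60 Hz or more must be summarized at TR resolution before they can enter the fMRI model as regressors of no interest. The NumPy sketch below is one hypothetical way to do so: for each volume, it computes the fraction of samples outside a fixation window and the number of saccade onsets detected with a simple velocity threshold. It assumes calibrated gaze in degrees of visual angle, a fixation point at (0, 0), and a trace aligned to the first volume trigger; the 2 deg window and 30 deg/s threshold are illustrative values, and dedicated saccade detection algorithms are more robust to noise than a single threshold.

```python
import numpy as np


def eye_regressors(x_deg, y_deg, fs, tr, n_vols, fix_radius_deg=2.0,
                   saccade_speed_deg_s=30.0):
    """Per-volume eye regressors from an eye trace aligned to the first trigger.

    Returns, for each volume, the fraction of samples outside a fixation
    window centered on (0, 0) and the number of saccade onsets, detected
    with a simple velocity threshold.
    """
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    # Sample-to-sample eye speed in deg/s (first sample assigned zero speed)
    speed = np.concatenate([[0.0], np.hypot(np.diff(x), np.diff(y)) * fs])
    off_fix = np.hypot(x, y) > fix_radius_deg
    fast = speed > saccade_speed_deg_s
    onset = fast & ~np.concatenate([[False], fast[:-1]])  # first fast sample
    vol = np.floor(np.arange(x.size) / (fs * tr)).astype(int)
    keep = vol < n_vols  # ignore samples recorded after the last volume
    n_samples = np.bincount(vol[keep], minlength=n_vols)
    frac_off = np.bincount(vol[keep], weights=off_fix[keep].astype(float),
                           minlength=n_vols) / np.maximum(n_samples, 1)
    n_saccades = np.bincount(vol[keep], weights=onset[keep].astype(float),
                             minlength=n_vols)
    return frac_off, n_saccades


# Hypothetical 60 Hz trace, TR = 2 s, 3 volumes: one excursion in volume 1
fs, tr, n_vols = 60, 2.0, 3
x = np.zeros(360)
y = np.zeros(360)
x[150:180] = 5.0  # 0.5 s excursion to 5 degrees and back
frac_off, n_saccades = eye_regressors(x, y, fs, tr, n_vols)
print(frac_off, n_saccades)
```

Both outputs would typically be convolved with the same HRF as the task regressors before being added to the design matrix.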

Pick and collect your localizer:

Below are links to downloadable localizer stimuli from the PRIME-RE website (https://prime-re.github.io/resources). Each localizer comes with a description file that specifies the minimal data collection requirements to maximize data sharing feasibility (in terms of implementation and data pooling), quality and optimal exploitation (in terms of metadata). See the supplementary material for localizer descriptions. Beyond the localizers made available at this time, this list is meant to be dynamically extended as new localizers are validated and older localizers refined. When sharing your data, please indicate the exact version of the localizer that you used. For any specific question, contact Brian Russ, brian.russ@nki.rfmh.org. Individual localizer DOIs should be properly cited upon publication.

Retinotopic localizer, http://doi.org/10.5281/zenodo.4043025, Wim.vanduffel@kuleuven.be

Monkey Face/Body Localizer, http://doi.org/10.5281/zenodo.4041128, Wim.vanduffel@kuleuven.be

Monkey Voice Localizer, http://doi.org/10.17605/OSF.IO/ARQP8, chris.petkov@newcastle.ac.uk

Tonotopic Localizer, http://doi.org/10.17605/OSF.IO/ARQP8, chris.petkov@newcastle.ac.uk

Movie localizer 1, https://doi.org/10.5281/zenodo.4044578, Brian.Russ@nki.rfmh.org

Movie localizer 2, upon request due to commercial copyrights, sk5899@nyu.edu

Movie localizer 3, upon request due to commercial copyrights, Wim.vanduffel@kuleuven.be

2.2. Minimal data collection burden

Localizers are usually run in preparation for, or in association with, the main scientific goals of an experiment. Thus, localizers may vary from one laboratory to another and from one experiment to another. Getting multiple centers and laboratories to contribute to the collection of the same set of predefined standardized localizers therefore requires minimizing the experimental burden. Animal training requirements should include head fixation and aim for minimal head movement during MRI scanning. Some localizers will additionally require monkeys to be trained on a variety of tasks, such as gaze fixation or lever presses. Gaze fixation allows control of the retinal location of visual stimulation, and provides a direct measure for eye-movement regressors of no interest when an eye calibration is run before data acquisition. Most of these methods rely on video eye tracking and cannot be used in naive, untrained animals. Notably, eye position information can also be inferred directly from the fMRI time series of the eye voxels, albeit only at TR resolution. This approach has been developed in humans (Son et al., 2020) and is currently being tested in NHP experiments. The time cost of localizers to the rest of the scanning session should also be minimal. A target time cost below 10 min seems reasonable, though this might be unachievable for some localizers. Alternatively, localizer data can be collected independently from the main experiment in one or two sessions. Ideally, the behavioral requirements for the monkeys would be such that the localizer runs could be performed at the end of the experimental session rather than at the beginning, so as not to jeopardize data collection for the main experiment.
A clear effect size analysis should allow researchers to specify beforehand the length of individual runs, the required number of runs per scanning session, and the overall recommended number of runs for reliable single-subject data analysis (see Box 1). Last, a clear common format for data and metadata logging should be defined in advance so as to facilitate data sharing and its expected benefits (see Box 2).
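As a rough illustration of such an effect size analysis, the plain-Python sketch below estimates a standardized per-run effect size from pilot runs and converts it into an approximate number of runs for a one-sided test across runs, using the normal approximation n = ((z_(1-alpha) + z_power) / d)^2. The function names and pilot values are hypothetical, the approximation assumes runs are independent samples of the same effect, and it slightly underestimates n when few runs are needed; a t-based power calculation is more accurate in that regime.

```python
import math
import statistics
from statistics import NormalDist


def per_run_effect(contrasts):
    """Standardized effect size d: mean / SD of per-run contrast estimates."""
    return statistics.mean(contrasts) / statistics.stdev(contrasts)


def runs_needed(d_per_run, alpha=0.001, power=0.8):
    """Approximate number of runs for a one-sided test across runs.

    Uses the normal approximation n = ((z_(1-alpha) + z_power) / d) ** 2.
    """
    z = NormalDist()
    return math.ceil(((z.inv_cdf(1 - alpha) + z.inv_cdf(power)) / d_per_run) ** 2)


# Hypothetical pilot: one face > object contrast estimate per run in one ROI
pilot = [0.5, -0.2, 0.9, 0.1, 0.6, -0.1]
d = per_run_effect(pilot)
print(round(d, 2), runs_needed(d, alpha=0.05, power=0.8))
```

The choice of alpha should reflect how the localizer will be thresholded (e.g., after correction for multiple comparisons), which can change the required number of runs considerably.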

3. Existent localizers

A large variety of localizers have been developed and used in NHP fMRI research. In the following sections, we discuss both categorical (discrete) localizers, which identify ROIs selectively activated by one stimulus category (e.g., face localizers), and continuous localizers, which map the topographical organization of a specific cortical function (e.g., phase-encoded retinotopic mapping localizers). We first review low-level sensory localizers and subsequently address higher-level sensory and cognitive localizers. Whenever possible, we discuss whether and how the PRIME-DE/PRIME-RE consortia could contribute to enhancing data sharing for each type of localizer.

3.1. Low-level features

Perceptual features are a mainstay of functional localizers across all species, and have been used extensively with fMRI localizer paradigms. These ‘localizers’ are typically based on discoveries of the functional organization of sensory systems.

3.2. Somatosensory maps

Somatosensory regions of the primate cortex have been characterized extensively at the neurophysiological level. The representations of different body parts are organized in a map that spans primary somatosensory cortex (S1) and is mirrored in the corresponding regions of primary motor cortex (M1). Due to this well-organized mapping of bodily regions, a number of studies have used somatosensory mapping as a means of validating their methods. Of particular relevance to the current discussion, early resting-state studies used the interhemispheric correlation between left and right somatosensory cortex to show that resting-state fluctuations can be used to probe functionally connected regions of the brain (Biswal et al., 1995). While this research was conducted in humans, similar work has been conducted in NHPs (Xu et al., 2018).

The actual mapping of somatosensory cortex is fairly straightforward, though some of the required equipment is somewhat specialized. The basic design used by most studies is a pneumatically driven device that delivers precisely timed pressurized air puffs, via rigid tubing, to tactors placed on the subject. These air puffs produce a light tap on the targeted tissue, both in humans (Huang and Sereno, 2007) and monkeys (Avillac et al., 2007; Xu et al., 2018). Most often, this stimulation is used in a block design, where two or more target regions are stimulated in alternating blocks, usually interspersed with rest (unstimulated) blocks. Importantly, somatosensory mapping can be performed in the anesthetized state, making it easier to implement than many other localizers, which can require significant behavioral training. The resolution of the resulting somatosensory maps will depend on both the spatial resolution of the MRI protocol and the placement of the tactors. In the awake monkey, air puffs are often thought to be aversive. However, if training is performed with air puffs of progressively increasing intensity, up to an individual subject's threshold, habituation can easily be achieved. Targeting isolated body parts such as the face or the upper arms is easy and requires minimal training. In contrast, targeting the arms, hands, trunk or legs requires extensive training of monkeys not to move during the scanning sessions.
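A block design of this kind reduces to a list of timed puff events that can drive the valve controller and later serve as the event log for the GLM. The plain-Python sketch below generates such a schedule, alternating stimulation sites with a rest period after each block. The site labels, block and rest durations, and puff rate are hypothetical placeholders rather than parameters of any published protocol; in practice, the block order might also be counterbalanced across runs.

```python
def airpuff_schedule(sites, n_cycles, block_s, rest_s, puff_hz):
    """Air-puff event list for a block design alternating stimulation sites.

    Each cycle stimulates every site in turn for block_s seconds at puff_hz
    puffs per second, each block being followed by rest_s seconds without
    puffs. Returns a list of (time_s, site) events and the run duration (s).
    """
    events = []
    t = 0.0
    n_puffs = int(round(block_s * puff_hz))
    for _ in range(n_cycles):
        for site in sites:
            events.extend((t + k / puff_hz, site) for k in range(n_puffs))
            t += block_s + rest_s
    return events, t


# Hypothetical design: face center vs. shoulder, 10 s blocks, 2 puffs per second
events, duration = airpuff_schedule(["face_center", "shoulder"], n_cycles=2,
                                    block_s=10.0, rest_s=10.0, puff_hz=2.0)
print(len(events), duration, events[20])
```

Logging the commanded times alongside a sensor readout (e.g., a pressure transducer on the tubing) would allow the same post-hoc verification as the photocell approach used for visual stimuli.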

Surprisingly, to date, very few studies have provided systematic whole-brain somatosensory fMRI mapping in the macaque monkey. Fine-grained functional connectivity within the somatosensory cortex has, for example, been mapped with ultra-high-field (9.4T) rs-fMRI in the anesthetized squirrel monkey (Chen et al., 2007; Yang et al., 2018). In the awake macaque, Wardak et al. (2016) describe face-centered somatosensory maps, comparing the cortical activations obtained for stimulation of the center or the periphery of the face, or of the shoulders. Activations encompass primary and secondary somatosensory areas, prefrontal and premotor areas, and parietal, temporal, and cingulate areas, as well as low-level visual cortex. While most of these cortical regions show a topographical organization of somatosensory responses, a parieto-frontal network appears to be selective for face stimulation and coincides with the network activated by visual stimuli in the peripersonal head-centered space (Cléry et al., 2018b) or approaching this space (Cléry et al., 2017). Somatomotor maps have also been investigated using fMRI in newborn monkeys, showing a refinement of the topographic somatosensory representation over development (Arcaro et al., 2019). Last, somatosensory maps have also been investigated using fMRI in the awake marmoset (Cléry et al., 2020).

One straightforward contribution of the Prime-DE consortium would be for multiple centers to join forces to provide, in anesthetized monkeys, whole-body or body-part-centered high-resolution maps of somatosensory networks in multiple animals. This would entail sharing a common anesthesia protocol, acquisition sequence and coil positioning, agreeing on the use of a contrast agent, and precisely defining the body location and strength of the stimulations. This latter point could be achieved by sharing the print files for a 3D-printable prosthesis embedding stimulators and sensors, along with the associated hardware.

3.3. Low-level auditory features

The functional organization of the macaque auditory cortex has been extensively studied over decades with lesion, tracer and neurophysiological studies. It is thought to consist of a set of interconnected, tonotopically organized 'core' regions receiving direct input from the medial geniculate nucleus, connected in turn to adjacent 'belt' and 'para-belt' regions along two bilateral dorsal and ventral streams of processing (Rauschecker, 1998; Rauschecker and Tian, 2000). In the auditory cortex, as in the visual cortex, there is an orderly progression of topography that captures the stimulation pattern across the sensory epithelium (the basilar membrane in the cochlea). There are also mirror reversals between adjacent fields that can be used to define borders between fields (Formisano et al., 2003). In the auditory domain, such maps can be obtained with tones or other sounds of a given center frequency: varying the center frequency excites different parts of the auditory cortex, where neurons prefer certain sound frequencies over others. Typically, a gradient analysis is conducted to evaluate the change in retinotopic or tonotopic selectivity, and borders are easiest to define between fields where gradients reverse. In the auditory system, this works well for delineating the borders between the primary (core) auditory fields, which are sensitive to pure tones and show mirror-reversed gradients.
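
The gradient-reversal logic can be illustrated with a deliberately simplified one-dimensional sketch: given the best frequency of voxels ordered along a cortical path, it reports where the direction of the frequency gradient flips. Real analyses compute gradients on a 2D cortical surface; the 1D path, the function name and the `min_step_octaves` tolerance are assumptions made here for illustration.

```python
import math

def gradient_reversals(best_freq_hz, min_step_octaves=0.0):
    """Return voxel indices where the tonotopic gradient reverses direction.

    best_freq_hz: best frequency of each voxel, ordered along a 1D cortical path.
    Steps no larger than min_step_octaves are treated as flat and ignored.
    """
    log_f = [math.log2(f) for f in best_freq_hz]  # tonotopy is roughly logarithmic
    steps = [b - a for a, b in zip(log_f, log_f[1:])]
    reversals = []
    last_sign = 0
    for i, step in enumerate(steps):
        if abs(step) <= min_step_octaves:
            continue  # flat segment: carries no direction information
        sign = 1 if step > 0 else -1
        if last_sign != 0 and sign != last_sign:
            reversals.append(i)  # voxel i is where the new gradient direction begins
        last_sign = sign
    return reversals


# Hypothetical low-high-low-high progression along a posterior-to-anterior path
path = [500, 1000, 2000, 4000, 2000, 1000, 500, 1000, 2000]
print(gradient_reversals(path))  # prints [3, 6]
```

The reported indices fall on the high-frequency peak (4000 Hz) and the low-frequency trough (500 Hz), which is where a field border would be drawn under a mirror-reversal criterion.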

More recently, fMRI studies inspired by human neuroimaging have been used to functionally localize cortical fields using tonotopic mapping. Petkov et al. (2006) used sparse-sampling fMRI during auditory stimulation with tones and noises of different frequencies to derive gradients of frequency preference across voxels and identify region boundaries based on gradient reversals. This protocol could easily be adapted to a shorter ‘tonotopic localizer’ that could be used to identify primary cortical fields in a standardized manner across studies and sites.
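
To make "frequency preference across voxels" concrete, the sketch below summarizes one voxel's responses to a set of tone conditions in two common ways: a winner-take-all best frequency and a response-weighted centroid on a log-frequency axis. This is an assumed, generic summary step, not necessarily the exact procedure of Petkov et al. (2006); the frequencies and response values are hypothetical.

```python
import math

def best_frequency(freqs_hz, responses):
    """Winner-take-all estimate: the tested frequency with the largest response."""
    best = max(range(len(responses)), key=lambda k: responses[k])
    return freqs_hz[best]

def weighted_best_frequency(freqs_hz, responses):
    """Response-weighted centroid on a log2 frequency axis (positive responses only)."""
    pos = [(math.log2(f), r) for f, r in zip(freqs_hz, responses) if r > 0]
    if not pos:
        return None  # voxel not driven by any tone condition
    total = sum(r for _, r in pos)
    return 2 ** (sum(lf * r for lf, r in pos) / total)


freqs = [500, 1000, 2000, 4000, 8000]
voxel_betas = [0.1, 0.4, 1.2, 0.4, 0.1]  # hypothetical response estimates per condition
print(best_frequency(freqs, voxel_betas), round(weighted_best_frequency(freqs, voxel_betas)))
```

The centroid is less quantized than the winner-take-all estimate, which makes the resulting gradients smoother when only a few frequencies are tested.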

However, non-primary fields surrounding the core (i.e., belt non-primary auditory cortex) respond more strongly to complex sounds like band-passed noise than to simple tones (Rauschecker, 1998). Although these fields have gradients that are co-linear with those of the adjacent core fields, it is difficult to objectively define a border between core and belt fields, since it must be based on the relative strength of tone and noise responses rather than on a mirror reversal. Although not all borders between fields can currently be defined with the same accuracy, retinotopic or tonotopic maps allow more meaningful inferences about where fMRI effects fall, based either on individually identified maps or on probability maps derived from several animals.

An alternative could consist of using auditory stimulation with complex natural sounds to derive tonotopic gradients and region boundaries based on modeling of each voxel’s frequency response. Using such an approach in the human brain, Moerel et al. (2014) found that tonotopic gradients were observable far beyond the primary areas known to respond to synthetic tones or noises. An added benefit of using complex natural stimuli for mapping is that features other than frequency can be mapped at the same time, such as pitch, spectral and temporal modulation rate, etc. A comparative macaque-human study presented similar natural sounds and used a multivariate model-based decoding approach in an attempt to compare the topographic organization of different auditory areas in the two species (Erb et al., 2019). This study revealed largely similar topographic maps of acoustic feature preferences for frequency, spectral and temporal modulations in the auditory belt and para-belt regions in both species. Tonotopic maps exhibited a mirror-symmetric low-high-low-high frequency gradient from posterior to anterior sectors in the lateral fissure.
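
The idea of modeling each voxel's frequency response from natural sounds can be sketched with a simplified, pRF-style stand-in: assume Gaussian tuning on a log-frequency axis, predict the voxel's response to each sound as the tuning-weighted sum of the sound's spectral energy, and grid-search the tuning center and width that best correlate with the measured responses. This is an illustrative assumption, not the specific encoding or decoding models used by Moerel et al. (2014) or Erb et al. (2019), and all data below are simulated.

```python
import math
import random

def gaussian_tuning(log_freqs, center, width):
    """Gaussian frequency-tuning weights on a log2 frequency axis."""
    return [math.exp(-0.5 * ((lf - center) / width) ** 2) for lf in log_freqs]

def predict(spectra, weights):
    """Predicted response to each sound: tuning-weighted sum of its spectral energy."""
    return [sum(w * e for w, e in zip(weights, spec)) for spec in spectra]

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0

def fit_frequency_tuning(log_freqs, spectra, responses, centers, widths):
    """Grid search for the (center, width) whose prediction best correlates with the voxel."""
    best = (-1.0, None, None)
    for c in centers:
        for w in widths:
            r = pearson(predict(spectra, gaussian_tuning(log_freqs, c, w)), responses)
            if r > best[0]:
                best = (r, c, w)
    return best


# Simulated data: 8 log-spaced channels (256 Hz to ~32 kHz), 20 "natural" sounds
rng = random.Random(1)
log_freqs = [8 + k for k in range(8)]
spectra = [[rng.random() for _ in log_freqs] for _ in range(20)]
voxel = predict(spectra, gaussian_tuning(log_freqs, 11.0, 1.0))  # true preference ~2 kHz
r, center, width = fit_frequency_tuning(
    log_freqs, spectra, voxel,
    centers=[8 + 0.5 * i for i in range(15)], widths=[0.5, 1.0, 1.5, 2.0])
print(round(r, 3), 2 ** center, width)
```

Because the model is fit to whatever spectral content the sounds happen to contain, the same framework could in principle be extended with additional feature channels (e.g., modulation rates), which is the advantage of natural stimuli noted above.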

Auditory localizers can be run on animals with head-fixation implants and minimal fixation training. Multiple centers from the Prime-DE/Prime-RE consortium could thus join forces to provide auditory localizer data from multiple monkeys. As for the somatosensory localizer, this would entail sharing a common acquisition sequence and coil positioning, agreeing on the use of a contrast agent, and sharing the stimulation design as well as the auditory stimuli themselves (see Box 2).

3.4. Low-level visual features

As with human neuroimaging, the implementation of visual feature localizers has been practiced extensively in NHPs. Early visual areas are characterized by sensitivity to where light stimulates the retina topographically.

Retinotopic Organization.

One of the most widely implemented sets of localizers are those that help define retinotopic maps across early visual cortex. These localizers stimulate the visual field systematically, which leads to an orderly activation of the portions of visual cortex representing the stimulated regions. Retinotopic organization has been hypothesized to be a defining principle of the organization of the primate visual cortices (Arcaro and Livingstone, 2017; Conway, 2018; Srihasam et al., 2014). A hierarchical, topographic organization is already present at birth in NHPs and has been suggested to constitute a proto-organization for the entire primate visual system (Arcaro and Livingstone, 2017; Srihasam et al., 2014). As a functional localizer, retinotopic mapping can help delineate the borders of early visual areas and define the functional organization of early visual regions from V1 up to posterior inferotemporal cortex (Janssens et al., 2014; Kolster et al., 2014). Moreover, although receptive fields become larger in higher-order visual areas (Boussaoud et al., 1991), numerous regions throughout the ventral and dorsal pathways respond to particular hemifields and show distinct foveal and peripheral representations (Ben Hamed et al., 2001; Kolster et al., 2014). Because retinotopic organization is widespread throughout the visual brain, including significant portions of occipital, temporal, parietal and frontal cortex, retinotopic localizers are among the most useful for differentiating particular regions.

From a behavioral perspective, it is critically important that the subject's eye position be tightly controlled. This has been accomplished in the past in two different ways. First, it is possible to map the retinotopic organization of the cortex while the subject is anesthetized, by opening the eyelids and projecting the stimuli onto the retina using a fiber-optic system and a fundus camera (Brewer et al., 2002). Second, with well-trained animals and a properly implemented eye-tracking system, it is possible to collect retinotopic maps from awake animals. The quality of the functional data during such awake retinotopy scans can be substantially improved when the position of the hands is also controlled. Both hand and eye position can be incorporated into the operant conditioning of the subjects. The use of awake animals is particularly attractive because it opens the door to comparing retinotopy with activations driven by more complex stimuli and tasks, and to reliably evoking visually driven activity in higher-order cortex, which is often silenced during anesthesia.
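
One simple way to quantify eye-position control in awake scans is the fraction of gaze samples falling inside a fixation window, with runs below a criterion excluded. The sketch below assumes a circular 2° window, an 85% inclusion criterion, and that samples lost by the tracker (e.g., during blinks) are skipped; all three are hypothetical choices, not values prescribed by the cited studies. Hand-position signals could be screened in the same way.

```python
import math

def fixation_fraction(gaze_deg, center=(0.0, 0.0), radius_deg=2.0):
    """Fraction of valid gaze samples that fall inside a circular fixation window.

    gaze_deg: sequence of (x, y) positions in degrees of visual angle;
    samples containing None (e.g., tracker lost the pupil) are skipped.
    """
    valid = inside = 0
    for x, y in gaze_deg:
        if x is None or y is None:
            continue
        valid += 1
        if math.hypot(x - center[0], y - center[1]) <= radius_deg:
            inside += 1
    return inside / valid if valid else 0.0

def keep_run(gaze_deg, min_fraction=0.85, **window):
    """Hypothetical inclusion rule: keep a run only if fixation met the criterion."""
    return fixation_fraction(gaze_deg, **window) >= min_fraction


samples = [(0.1, -0.2), (1.0, 1.0), (3.0, 0.0), (None, None)]
print(fixation_fraction(samples))  # 2 of 3 valid samples lie inside the 2 deg window
print(keep_run(samples))           # below the assumed 85% criterion
```

Skipping lost samples rather than counting them as fixation breaks is a design choice; a stricter pipeline could treat them as outside the window.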

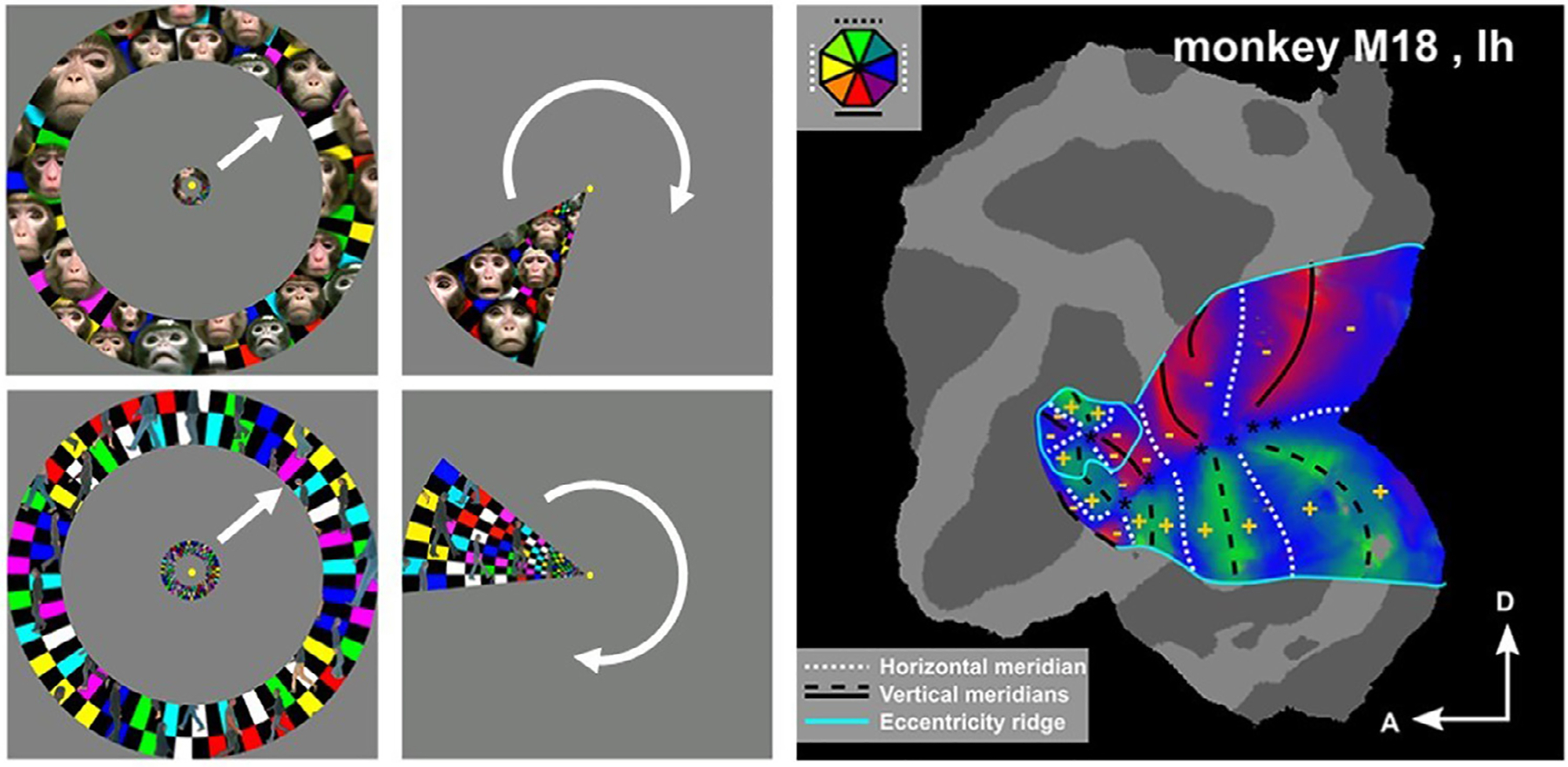

A number of retinotopic mapping techniques exist, depending on the exact research question being asked. We discuss some of the more common paradigms. Historically, one started with the static presentation of flickering checkerboard patterns restricted to either the horizontal and vertical meridians, or to annuli at different eccentricities scaled to the cortical magnification factor (Fize et al., 2003; Vanduffel et al., 2002). This approach, however, only reveals the representations of discrete portions of the visual field, which is just sufficient to roughly estimate which eccentricities are represented in early visual cortex and to approximate the borders of retinotopically organized visual areas, such as the vertical meridian representation between V1 and V2 or the horizontal meridian representation between V2 and V3. Moreover, most studies are restricted to 10–15° of eccentricity, given the hardware limitations on presenting large visual stimuli in a scanner. To obtain polar angle and eccentricity information from all voxels within these eccentricity ranges, phase-encoded retinotopic mapping was first developed for humans (Sereno et al., 1995) and subsequently adopted by the macaque imaging community (Fig. 1; Brewer et al., 2002; Janssens et al., 2014; Kolster et al., 2009, 2014). In these paradigms, macaques are presented with alternating runs of expanding/contracting rings and clockwise/counterclockwise rotating wedges composed of flickering checkerboard patterns, while they maintain fixation at the center of the screen. The exact timing of the paradigm depends largely on the scanner protocol, particularly the spatial resolution and repetition time (TR). In general, however, the ring expansion and wedge rotation should advance in step with the protocol's TR.
In more recent approaches, more complex stimuli are embedded within the rotating wedges or expanding/contracting annuli, with the aim of driving cells with more complex receptive field properties in higher-order areas, i.e., cells that are driven by complex stimulus features rather than by simple checkerboards. These stimuli can be mixtures of flickering checkerboards and natural moving objects such as dynamic faces and walking subjects (Fig. 1). Together with stringent eye-movement and hand-movement controls, these stimuli revealed highly robust retinotopic maps throughout substantial portions of visually driven cortex (Janssens et al., 2014; Kolster et al., 2014; Zhu and Vanduffel, 2019; see supplemental information and Box 2 for the stimuli).
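
The core of a phase-encoded analysis can be sketched as follows: take each voxel's Fourier coefficient at the stimulus frequency (the number of wedge cycles per run), read off its phase and amplitude, and combine clockwise and counterclockwise runs so that the hemodynamic delay cancels. The sketch assumes that the wedge starts at polar angle 0 at the first volume, completes an integer number of cycles per run, and that the hemodynamic delay is shorter than half a cycle; these are assumptions for illustration, and the function names are hypothetical. With 12 volumes per cycle, for instance, the wedge advances 360/12 = 30° per TR.

```python
import cmath
import math

def _dft_coeff(x, k):
    """Discrete Fourier coefficient of sequence x at k cycles per run."""
    n = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))

def response_phase(ts, n_cycles):
    """Phase (radians, 0..2*pi) and amplitude of the response at the stimulus frequency.

    A response peaking at phase phi behaves like cos(2*pi*n_cycles*t/N - phi).
    """
    n = len(ts)
    mean = sum(ts) / n
    c = _dft_coeff([v - mean for v in ts], n_cycles)
    return (-cmath.phase(c)) % (2 * math.pi), 2 * abs(c) / n

def coherence(ts, n_cycles):
    """Amplitude at the stimulus frequency relative to all non-DC frequencies."""
    n = len(ts)
    mean = sum(ts) / n
    x = [v - mean for v in ts]
    amps = [abs(_dft_coeff(x, k)) for k in range(1, n // 2 + 1)]
    return amps[n_cycles - 1] / math.sqrt(sum(a * a for a in amps))

def combine_cw_ccw(phase_cw, phase_ccw):
    """Cancel the hemodynamic delay d using runs with opposite rotation.

    Clockwise gives theta + d and counterclockwise gives -theta + d (mod 2*pi);
    assuming d is under half a cycle resolves the remaining pi ambiguity.
    """
    two_pi = 2 * math.pi
    theta = ((phase_cw - phase_ccw) % two_pi) / 2
    delay = (phase_cw - theta) % two_pi
    if delay > math.pi:
        theta += math.pi
        delay = (phase_cw - theta) % two_pi
    return theta % two_pi, delay


# Synthetic voxel: preferred polar angle 4.0 rad, hemodynamic delay 0.5 rad,
# 8 wedge cycles over 96 volumes (12 volumes per cycle, i.e. 30 degrees per TR)
N, K = 96, 8
cw = [math.cos(2 * math.pi * K * t / N - (4.0 + 0.5)) for t in range(N)]
ccw = [math.cos(2 * math.pi * K * t / N - (-4.0 + 0.5)) for t in range(N)]
phi_cw, amp = response_phase(cw, K)
phi_ccw, _ = response_phase(ccw, K)
angle, delay = combine_cw_ccw(phi_cw, phi_ccw)
print(round(math.degrees(angle), 1), round(delay, 3), round(coherence(cw, K), 3))
```

The same phase logic applies to expanding/contracting rings, where the recovered phase maps onto eccentricity instead of polar angle.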

Fig. 1.

Phase-encoded visual retinotopic mapping relies on expanding/contracting annuli (left panels) and clockwise/counterclockwise rotating wedges (middle panels). Stimuli (from Zhu and Vanduffel, 2019) can be flickering checkerboards or mixtures of flickering checkerboards and natural moving objects such as dynamic faces and walking subjects (left and middle panels). Such mapping yields polar angle and eccentricity information for all voxels and a precise description of the retinotopic organization of the visual cortex (right panel; adapted from Vanduffel et al., 2014).

Motion Sensitivity.

As visual information proceeds along the visual pathway, the information processed within each region becomes more complex. One such form of information is visual motion. As with retinotopic mapping, a variety of paradigms have been used to map motion-sensitive regions in the cortex of both NHPs and humans. The simplest motion localizer contrasts moving versus static dots in a standard block design. This contrast reveals a robust set of activations that includes areas MT, MST, and VIP (Vanduffel et al., 2001). To localize more specialized motion-processing regions within the superior temporal sulcus and parietal cortex, one can use more complex motion stimuli such as optic flow patterns and structure-from-motion stimuli (Nelissen et al., 2006; Sereno et al., 2002; Vanduffel et al., 2002). Jastorff et al. (2012) used a 2 × 2 stimulus matrix (kinematics: biological vs. translational; configuration: full vs. scrambled), which allowed them to localize not just motion-sensitive regions but also the types of motion to which these regions responded. They found that distinct portions of the motion-sensitive regions in the posterior STS responded to different forms of motion, and that, rostral to MT, the kinematic and configural components of the stimuli were processed in distinct regions. These results highlight the value of more specific stimulus conditions for teasing apart complex stimulus information.
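
For the moving-versus-static block design, the contrast can be sketched in its simplest form: average the signal within each block and compare the two conditions with a Welch t statistic. This is a deliberately simplified stand-in that ignores hemodynamic lag and temporal autocorrelation; standard analyses fit a GLM with HRF-convolved regressors. The run layout and signal levels below are hypothetical.

```python
import math

def block_means(ts, blocks):
    """Average signal within each block; blocks are (first_volume, n_volumes, label)."""
    means = {}
    for start, length, label in blocks:
        segment = ts[start:start + length]
        means.setdefault(label, []).append(sum(segment) / len(segment))
    return means

def welch_t(a, b):
    """Welch t statistic for mean(a) - mean(b)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    va = sum((x - ma) ** 2 for x in a) / (len(a) - 1)
    vb = sum((x - mb) ** 2 for x in b) / (len(b) - 1)
    return (ma - mb) / math.sqrt(va / len(a) + vb / len(b))


# Hypothetical run: 8 alternating 5-volume blocks, static dots first
levels = [1.0, 2.0, 1.1, 2.1, 0.9, 1.9, 1.0, 2.0]
labels = ["static", "moving"] * 4
ts = [lvl for lvl in levels for _ in range(5)]
blocks = [(5 * i, 5, lab) for i, lab in enumerate(labels)]
m = block_means(ts, blocks)
print(round(welch_t(m["moving"], m["static"]), 2))
```

Swapping the arguments gives the inverse contrast (static > moving) with the opposite sign, which is how the same localizer run can serve both directions of comparison.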

Depth coding.

An important visual feature of our daily environment is how objects are organized in 3D space. This covers at least four distinct aspects. The first is how individual objects are organized in 3D, i.e., their three-dimensional shape. Specific stereoscopic or 3D structure-from-motion stimuli are used to identify distinct superior temporal and intraparietal regions activated by 3D object information (Durand et al., 2007; Sereno et al., 2002; Tsao et al., 2003b; Vanduffel et al., 2002). The second aspect is the spatial relation of objects to each other. A monkey fMRI study comparing near and far depths defined by binocular disparity, relative motion, and their combination showed that area MT computes the fusion of disparity and motion depth signals, mirroring findings for human area V3B/KO (Armendariz et al., 2019). Such comparative studies are a powerful means to link electrophysiological studies with human imaging; in this case, the study reconciled previously reported discrepancies between depth processing in the dorsal stream of humans and monkeys. The third aspect is where stimuli are located relative to the subject. Cléry et al. (2018) used ecological stimuli presented either far from the subject or within 30 cm of the monkey's head, with either the same real size or the same retinal size. Near stimuli provided a very specific and reliable functional identification of a core peripersonal space network composed of ventral intraparietal area VIP and premotor zone PMz (Cléry and Ben Hamed, 2018). The fourth aspect is the processing of motion relative to the subject, contrasting optic flow stimuli that mimic ego-motion with 2D translational large-field motion (Guipponi et al., 2013, 2015) or with multifocal optic flow stimuli (Cottereau et al., 2017). These studies identified specific cortical regions involved in coding ego-motion.
Finally, imaging-based localization of intraparietal patches coding for depth structure can be targeted by electrophysiology, microstimulation or inactivations during fMRI. Such studies reveal effective connectivity patterns within the parietal cortex (Premereur et al., 2015) and between parietal and inferotemporal cortex (Van Dromme et al., 2016).

Overall, while one may think that low-level visual localizers have exhausted what one can learn about the organization of the primate visual system, recent studies, either mapping a larger extent of the visual field (Rima et al., 2020) or using submillimeter fMRI (Zhu and Vanduffel, 2019), describe new retinotopic clusters in the macaque brain. The Prime-DE/Prime-RE consortia are expected to foster such novel research avenues across research centers. Through the collaboration of multiple centers, we expect to gain new knowledge on the organization of early visual cortex and, importantly, on its individual variation in relation to both behavioral and genetic variability.

3.5. Low-level multisensory mapping

Our perception of the environment is most often based on the combination of information from multiple senses. Accordingly, converging evidence indicates that the brain is massively multisensory (Guipponi et al., 2015; Schroeder and Foxe, 2005). However, the network bases of multisensory perception are still poorly understood, and systematic multisensory mapping localizers would be extremely helpful in understanding these processes. For example, Guipponi et al. (2015) used visual and somatosensory fMRI mapping in the awake monkey to characterize the cortical regions that respond to both large-field visual stimuli and face or shoulder somatosensory stimulation. They report two main findings. First, visuo-tactile convergence spans a large portion of the early visual striate and extrastriate areas, mostly those regions coding for the periphery of the visual field. This finding has been independently confirmed in another, unpublished study (Armendariz et al., 2018). While V1 single-unit responses had previously been reported to be modulated by auditory stimuli (Wang et al., 2008), these large somatosensory activations in early visual cortex were the first demonstration of massive heteromodal modulatory influences in primary visual cortex. Second, the spatial organization of this visuo-tactile convergence throughout the brain is stimulus dependent: areas responding to tactile face stimulation and static whole-field visual stimuli do not fully coincide with regions responding to tactile face stimulation and whole-field optic flow stimuli, nor with regions responding to tactile shoulder stimulation and static whole-field visual stimuli. Guipponi et al. (2013) further probed the multisensory convergence of visual, auditory and tactile information within the intraparietal sulcus (Fig. 2A).
This study identified the ventral intraparietal area VIP based on its responsiveness to large-field dynamic stimuli. Only part of this larger VIP responded to tactile face stimulation, and only part of this visuo-tactile VIP also responded to auditory stimuli. This study clearly highlights the complexity of multisensory cortical organization. Importantly, it also highlights inter-individual differences: one monkey had a single visuo-tactile convergence ROI in each intraparietal sulcus, while the other had two such ROIs (an observation reproduced in other monkeys; Ben Hamed et al., personal communication).
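
Nested convergence regions of this kind are typically obtained with a conjunction analysis. A minimal sketch, assuming the minimum-statistic approach (a voxel belongs to the conjunction only if it exceeds the threshold in every single-modality contrast), is shown below; the t-values and the 3.1 threshold are hypothetical, and the cited studies may have used different criteria.

```python
def conjunction(t_maps, threshold):
    """Minimum-statistic conjunction: True where every map exceeds the threshold."""
    return [min(values) > threshold for values in zip(*t_maps)]


# Hypothetical t-values for 4 voxels in three single-modality contrasts
visual = [5.2, 4.8, 6.0, 1.0]
tactile = [4.1, 3.9, 0.5, 4.4]
auditory = [3.5, 0.2, 0.1, 0.3]
vt = conjunction([visual, tactile], threshold=3.1)              # visuo-tactile
vta = conjunction([visual, tactile, auditory], threshold=3.1)   # visuo-tactile-auditory
print(vt, vta)
```

By construction, the three-way conjunction is always a subset of the two-way conjunction, matching the nested organization described for VIP.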

Fig. 2.

The conjunction of multiple localizers makes it possible to identify inter-individual variations in cortical functional organization. (A) Projection of the visual–tactile and visual–tactile–auditory conjunctions onto the left and right flattened intraparietal cortex of two monkeys, M1 and M2. Limits of intraparietal areas LOP, LIPv, LIPd, VIPm, VIPl and AIP are defined based on the F6 Caret atlas. Adapted from Guipponi et al. (2013). (B) Anterior and medial cingulate face fields defined by local maxima for the reward, blink, saccade, tactile-face and tactile-shoulder localizers. Adapted from Cléry et al. (2018).

The complexity of multisensory convergence patterns, as well as inter-individual differences in these patterns, is already apparent with low-level sensory localizers. Even greater complexity is expected for higher-level sensory localizers. Fig. 2B shows the functional identification of cingulate face fields based on multiple localizers (reward, spontaneous blinks, saccades, tactile stimulation of the face and tactile stimulation of the shoulders; Cléry et al., 2018a). While the medial and anterior cingulate face fields can be identified bilaterally in both monkeys, not all localizers activated all face fields, suggesting some degree of functional inter-individual differences.

Gaining a proper understanding of how multisensory convergence is organized across stimulus ranges and levels of complexity, while important to understand perception, cannot be carried out by a single lab. The Prime-DE/Prime-RE initiative is expected to be instrumental in providing simple multisensory localizers that can be run and parametrically varied in several labs, thus increasing both stimuli ranges and subject sample size.

3.6. High-Level features

The localization of high-level perceptual features is of great interest within the NHP neuroimaging community. In the following section, we will discuss the advantages and disadvantages of a few well-known functional localizers for high-level perceptual and cognitive features. The goal is not to cover all possible localizers but to highlight localizers that are fairly commonly used and how we may improve upon the current state of these localizers.

3.7. Face processing system

One of the most common localizers used in both human and NHP neuroimaging is a face processing localizer. Kanwisher et al. (1997) started the explosion of face localizer tasks with their discovery of a region on the ventral aspect of the human temporal lobe, in the fusiform gyrus, that responded most strongly to faces compared with other stimuli. This discovery led both to a large body of literature investigating face processing in the human cortex and to a fair bit of controversy (Gauthier et al., 2000; Haxby et al., 2001). Not long after the discovery of the fusiform face area, Tsao et al. (2003a) used a similar face localizer paradigm in the macaque monkey. Rather than a single face region, they found what has come to be known as the face patch system, consisting of five to seven regions along the temporal lobe.

As with the low-level localizers discussed earlier, face localizer tasks generally follow either a block design or an event-related design, with the majority using block designs. The issue that arises when localizing high-level features is which comparison best isolates a given feature. There has been considerable debate in the field about which set of stimuli best localizes face-selective regions (Bell et al., 2011; Ku et al., 2011; Pinsk et al., 2005a; Premereur et al., 2016; Russ and Leopold, 2015; Tsao et al., 2008a). The main categories used are conspecific and/or human faces versus objects, scenes, and phase-scrambled versions of faces. Each category provides a distinct form of comparison and control. The field generally agrees that contrasting faces with any of the other categories provides a fairly accurate map of face-responsive regions in the cortex, and in the temporal lobe in particular. However, face-selective regions are often defined as a subset of that territory, which is best localized by contrasting faces with objects (see Popivanov et al., 2012, for a comparison across these conditions).

Similar to faces, a number of other stimulus categories have been shown to activate particular regions of the NHP cortex (Bao and Tsao, 2018; Lafer-Sousa and Conway, 2013; Pinsk et al., 2009; Popivanov et al., 2014, 2012). In particular, researchers have shown that, in conjunction with the network of face patches, there are corresponding, usually connected regions that respond more to body parts than to objects (Pinsk et al., 2005a, 2009; Popivanov et al., 2012, 2014). These regions often overlap face-selective regions but are significantly larger. As with the face localizers, they are mapped by contrasting body parts, either full animal images or isolated limbs, with inanimate objects. A well-controlled localizer for both faces and bodies, in which low-level image characteristics (aspect ratio, mean luminance, contrast, surface area) were equated as much as possible across object classes, was used by Popivanov et al. (2012). This localizer consists of 10 categories (monkey bodies, human bodies, mammals, birds, monkey faces, human faces, body-like sculptures, fruits/vegetables, and two sets of control objects), and a single scan session is typically sufficient to reliably identify all the face and body patches consistent with previous localizers (Bell et al., 2011; Ku et al., 2011; Pinsk et al., 2005a; Premereur et al., 2016; Russ and Leopold, 2015; Tsao et al., 2008a), as well as object-processing regions in monkey cortex.

The use of multiple localizers can add validity and robustness to the discovery of a particular feature. For example, numerous paradigms have localized face, body, and scene selectivity across labs (Tsao et al., 2003; Moeller et al., 2008; Moeller et al., 2009; Freiwald et al., 2009; Russ and Leopold 2015; Popivanov et al. 2014; Janssens et al. 2014; Koyano et al., 2021). By and large, the major category-selective areas/patches are surprisingly reproducible across sites and tasks (e.g., discussed in Vanduffel et al., 2014 and shown in Janssens et al., 2014). This is also illustrated by the data in Fig. 3B, where we combined face-selective responses from 5 animals, each of which participated in one of three tasks: faces vs. scrambled images (block design), faces vs. objects (block design), or free viewing of naturalistic movies analyzed with a face regressor. These individual animals were all registered to the common NMT template (Seidlitz et al., 2017) and plotted on the NMT surface maps along with the probabilistic face responses generated by Janssens and colleagues (2014). In general, the individual subjects and the probabilistic maps overlap substantially along the temporal lobe. However, there are also substantial individual differences in the exact location of the face-responsive regions. These differences likely reflect a combination of true individual differences, measurement error, and differences in behavioral performance (e.g., fixation performance). Convergent paradigms across sites and within individuals can help determine how much of this variability truly reflects individual differences in the functional organization of the brain.
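
Once individual maps are registered to a common template, their agreement can be summarized with two simple quantities: a probabilistic map (the fraction of subjects in which each voxel is significant) and a pairwise Dice overlap. The sketch below assumes the binary masks are already thresholded and in the same template space; the toy masks are hypothetical and are not the data shown in Fig. 3B.

```python
def probability_map(masks):
    """Fraction of subjects whose binary mask includes each voxel (common template space)."""
    n = len(masks)
    return [sum(vals) / n for vals in zip(*masks)]

def dice(a, b):
    """Dice overlap between two binary masks: 2 * |A and B| / (|A| + |B|)."""
    inter = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(1 for x in a if x) + sum(1 for y in b if y)
    return 2 * inter / total if total else 1.0


subjects = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
]
print(probability_map(subjects))
print(dice(subjects[0], subjects[1]))
```

A probabilistic map describes where a region tends to lie in the population, while pairwise Dice values quantify how much individuals depart from one another.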

Fig. 3.

A) Number of blocks needed to estimate the location of the face patches. A subject participated in multiple sessions, with multiple runs per session, of a block-design experiment (intact faces versus phase-scrambled faces; data from Russ and Leopold, 2015). T-values were calculated for an increasing number of blocks within predefined face-selective regions (14) and visually responsive regions (10). The mean and standard deviation of the t-value for each set of blocks are displayed; representative t-maps for 4 sets of blocks are shown above. B) Mapping of face selectivity: comparison across research centers and tasks. Data from NHPs 1–5 were collected on the 4.7T Bruker vertical-bore scanner at the National Institutes of Health. Three face-processing localizers were used: Faces/Objects (NHPs 1 and 2), Faces/Scrambled images (NHPs 3 and 4), and a face regressor applied to a movie (NHP 5) (data from Russ and Leopold, 2015; Koyano et al., 2021; McMahon et al., 2015). In addition, the probabilistic face-selectivity maps from Janssens and colleagues (2014) are shown to illustrate the overlap between single subjects and maps generated from separate subjects scanned at 3T.

Based on the extensive literature available, we believe that the best way forward for standardizing the localization of face-responsive regions is a block design incorporating 6 stimulus categories: conspecific faces and bodies, objects, and heterospecific mammals, birds, and plants (see Box 2 for a link to the stimuli). Pseudo-randomizing these blocks across 10 min of imaging should provide a good estimate of face-responsive and face-selective regions in the population. Fig. 3A shows, on empirical data, t-values as a function of the number of collected blocks in predefined face patches: the more blocks are collected, the more face patches are identified.
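
One way to pseudo-randomize the six recommended categories is sketched below: each cycle presents every category once in shuffled order, and the next cycle is reshuffled if it would start with the category that ended the previous one, so no category is ever shown in two consecutive blocks. The 20 s block length (giving 30 blocks in 10 min) and the category labels are assumptions for illustration; Box 2 remains the reference for the actual stimuli.

```python
import random

# Six categories from the recommended design; the 20 s block length is an assumption
CATEGORIES = ["conspecific_faces", "conspecific_bodies", "objects",
              "heterospecific_mammals", "birds", "plants"]
BLOCK_S = 20

def block_order(categories, n_cycles, seed=None):
    """Pseudo-random block order: each cycle shows every category once, in shuffled
    order, and no category is repeated across a cycle boundary."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_cycles):
        cycle = list(categories)
        rng.shuffle(cycle)
        while order and cycle[0] == order[-1]:
            rng.shuffle(cycle)  # reshuffle to avoid back-to-back repeats
        order.extend(cycle)
    return order


run = block_order(CATEGORIES, n_cycles=5, seed=7)
print(len(run) * BLOCK_S / 60, "min")  # 30 blocks x 20 s = 10 min
```

Balancing categories within each cycle, rather than shuffling all 30 blocks at once, keeps every category evenly spread across the run, which limits confounds with slow signal drift.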

3.8. Object processing

On the flip side of both body and face localizers, it is possible to compute the inverse contrast, highlighting regions that respond more strongly to objects than to faces and/or body parts (Bao and Tsao, 2018; Bell et al., 2009, 2011). Previous research has shown that regions outside the face and body areas respond more strongly to objects than to biological stimuli (Bell et al., 2011; Pinsk et al., 2005a, 2009; Popivanov et al., 2012, 2014). These responses can be further broken down into more distinct regions depending on the particular set of stimuli and contrast used.

Of particular note, modulating the amount of color in the stimulus set has revealed a set of color-responsive patches that mirror, but are ventral to, the face patch system (Lafer-Sousa and Conway, 2013). The presence of these color patches alongside the face patches has led to the hypothesis that the ventral visual system is organized into sets of interconnected streams that specialize in particular stimuli (Bao et al., 2020; Conway, 2018; Premereur et al., 2016).

3.9. Voice localizers

One often-used localizer in human neuroimaging is the ‘Voice Localizer’ contrasting vocal (speech and non-speech) vs non-vocal sounds to localize the temporal (TVAs) and frontal (FVA) voice areas (Pernet et al., 2015). Because it does not focus on (uniquely-human) speech, but on voice (conspecific vocalizations), this localizer can be transposed to macaque fMRI (Joly et al., 2012a). Petkov et al. (2008) contrasted macaque vocalizations to nonvocal sounds and identified at least one anterior ‘voice patch’, later shown to contain between 25 and 55% of voice-selective neurons. Experience with the human voice localizer suggests that the voice/non-voice contrast is so strong that it only requires a small amount of stimulation and can be achieved in less than 10 min as an fMRI localizer. Such a contrast of vocalizations versus other sounds could potentially also be used in macaques to localize primary auditory regions within a single localizer task (cf. section on low-level localizers).

The contrast of voice versus non-voice conditions identifies several areas in the temporal, and even frontal, cortex that are sensitive to the voice stimuli. This is analogous to the face versus non-face visual localizer (Freiwald et al., 2009; Tsao et al., 2008b). Beyond this contrast, one could use a set of different vocalizations from the same and from different individuals, presenting either different vocalizations from the same individual or the same vocalization from different individuals (Belin and Zatorre, 2003). Many auditory areas respond to the acoustic differences between different vocalizations from the same individual, but some areas preferentially respond to vocalizations from different individuals, i.e., to who vocalized rather than what was vocalized (Petkov et al., 2006). Identity-specific stimulation seems to involve more anterior temporal lobe regions (Perrodin et al., 2015). A link to a voice localizer can be found in Box 2.

3.10. Movies (a.k.a. naturalistic materials)

One potential avenue for obtaining multiple functional localizers from a single task is the use of naturalistic movies. Naturalistic movies by definition contain a large number of superposed features that, if taken into account, can provide functional localizers both for low-level perceptual features, including retinotopic maps (Nishimoto et al., 2011), and for high-level semantic/cognitive features (Huth et al., 2012; Mantini et al., 2013, 2012a, 2012b; Russ and Leopold, 2015; Sliwa and Freiwald, 2017). In fact, the use of naturalistic movies has increased significantly in the last decade, as both human and animal research have recognized the potential of these less constrained but more ethologically relevant stimuli (Gao et al., 2020). Within NHPs alone, naturalistic movies have been used to localize low-level visual features such as contrast, luminance, and motion responses (Russ and Leopold, 2015), face-specific responses (Russ and Leopold, 2015; Sliwa and Freiwald, 2017), eye-movement-related signals (Russ et al., 2016), cross-species functional homologies (Mantini et al., 2013, 2012a, 2012b), and social processing (Sliwa and Freiwald, 2017). Using a naturalistic movie set to localize features such as the face processing system can be particularly useful because it requires no experimental training (Russ and Leopold, 2015), whereas standard visual localizers usually require the subjects to maintain fixation within a small fixation window (Pinsk et al., 2005b). Removing this requirement can drastically reduce the behavioral training needed and provide quick functional localization of a variety of features.

Challenges and analytical approaches for using movies as localizers:

These studies have highlighted naturalistic movies as an effective means of localizing all manner of functional domains with a variety of analytical methods. The two main methods employed for localizing function with naturalistic movies are: 1) regression models based on the coding of particular features; and 2) inter-subject correlation methods, in which the stimulus is used as a common driver of neural activity. Given the complexity of movies as localizers, several more recently developed methods also warrant mention.

Regression models

Regression models for naturalistic movies have provided relatively good localizers for a variety of features. Although the exact nature of the regression model varies between studies, the core procedure remains constant: an algorithmically or user-defined feature vector is extracted from the movie and then compared to the fMRI activity. Some studies have used short movies to target particular variables. For example, Sliwa and Freiwald (2017) used a set of short movies depicting particular social interactions, in a design very similar to a standard block design, to localize regions of the macaque cortex that are preferentially associated with social processing. Like the more standard functional localizers discussed above, these designs have the benefit of controlling for variables of no interest and isolating the desired feature. However, because they control for all other features, they cannot localize features that the design was not specifically built to test.
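To make the regression approach concrete, the following Python sketch convolves a hypothetical per-volume feature annotation (here called `face_present`, marking 20-s segments in which a face is on screen) with a double-gamma hemodynamic response function and fits it voxel-wise by ordinary least squares. The SPM-like HRF shape, the repetition time, and the simulated voxels are assumptions for illustration, not the procedure of any study cited above.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds (SPM-like shape, assumed)."""
    t = np.arange(0.0, duration, tr)
    peak = gamma.pdf(t, 6)         # main response, peaking around 5 s
    undershoot = gamma.pdf(t, 16)  # later, smaller undershoot
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()


def feature_regressor(feature, tr):
    """Convolve a per-volume feature time course with the HRF, trimmed to run length."""
    return np.convolve(feature, canonical_hrf(tr))[: len(feature)]


def fit_glm(X, Y, contrast):
    """OLS fit of Y (time x voxels) on X (time x regressors); returns betas and t-values."""
    beta, _, _, _ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof
    var_c = contrast @ np.linalg.pinv(X.T @ X) @ contrast
    t_vals = (contrast @ beta) / np.sqrt(sigma2 * var_c)
    return beta, t_vals


rng = np.random.default_rng(0)
tr, n_vols = 2.0, 300
# Hypothetical annotation: 1 while a face is on screen, in 20-s (10-volume) segments.
face_present = np.repeat(rng.random(30) > 0.5, 10).astype(float)
X = np.column_stack([np.ones(n_vols), feature_regressor(face_present, tr)])
true_beta = np.array([[0.0, 0.0],
                      [2.0, 0.0]])  # voxel 0 responds to faces, voxel 1 does not
Y = X @ true_beta + rng.normal(size=(n_vols, 2))
beta, t_vals = fit_glm(X, Y, contrast=np.array([0.0, 1.0]))
print(np.round(t_vals, 1))
```

The same design matrix can hold many feature regressors at once; the block-design case described above corresponds to a feature vector that is simply on or off during each short movie.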

A complementary approach is to use longer movies in which a variety of features are present at any given moment. Mantini et al. (2012b) used three 30-minute video fragments, and Russ and Leopold (2015) used a set of fifteen 5-minute movies containing multiple superposed visual features. A downside of these less constrained movies, regardless of their length, is that any particular feature may be correlated with other features in the regression model (collinearity), potentially violating the assumption of independence between variables. Fortunately, there are numerous methods to mitigate these correlations, such as ridge regression, stepwise regression, principal component analysis (PCA), and partial correlation, to name a few (Draper and Smith, 1998). Each method entails a particular trade-off when attempting to reduce or remove correlations between variables. For example, partial correlations and stepwise regressions both implement an ordered regression in which variance is attributed to one variable before other variables are considered. This removes shared variance, but can attribute variance to the wrong variable depending on the order in which variables enter the model. PCA, on the other hand, addresses the independence problem by first combining the original variables into a new set of mutually orthogonal variables. However, these new variables may not map onto any particular feature of interest.
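The order dependence described above can be illustrated with simulated data. In the Python sketch below (all variables hypothetical), a voxel is driven only by a `motion` feature, while a `contrast` feature is correlated with motion. Entering contrast first credits it with the shared variance; entering it second leaves it almost none, although the total explained variance is identical. A PCA rotation of the same two features yields exactly orthogonal components, each of which mixes both features.

```python
import numpy as np


def r_squared(columns, y):
    """R^2 of an OLS model with an intercept plus the given feature columns."""
    X = np.column_stack([np.ones(len(y))] + list(columns))
    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - resid.var() / y.var()


def sequential_gains(features, y):
    """Increase in R^2 as each feature enters in the given order.
    Shared variance is credited to whichever feature enters first."""
    gains, previous = [], 0.0
    for k in range(1, len(features) + 1):
        current = r_squared(features[:k], y)
        gains.append(current - previous)
        previous = current
    return gains


rng = np.random.default_rng(1)
n = 500
motion = rng.normal(size=n)
contrast = 0.8 * motion + 0.6 * rng.normal(size=n)  # correlated with motion (r ~ 0.8)
y = motion + 0.5 * rng.normal(size=n)                # only motion drives this voxel

gains_contrast_first = sequential_gains([contrast, motion], y)
gains_motion_first = sequential_gains([motion, contrast], y)
print(np.round(gains_contrast_first, 2), np.round(gains_motion_first, 2))

# PCA alternative: rotate the standardized features onto orthogonal axes.
F = np.column_stack([contrast, motion])
F = (F - F.mean(axis=0)) / F.std(axis=0)
_, _, Vt = np.linalg.svd(F, full_matrices=False)
components = F @ Vt.T  # mutually orthogonal columns, each a mix of both features
```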

As mentioned, each of these methods makes a trade-off to minimize the effects of collinearity between variables. Russ and Leopold (2015) used a stepwise regression approach into which up to 21 different visual feature models were entered. Based on these stimulus models, they could reliably localize a variety of visual features, such as contrast-sensitive early visual regions; motion-sensitive regions, such as MT and MST; and the face patch system (Bell et al., 2011; Freiwald et al., 2009; Pinsk et al., 2005a; Premereur et al., 2016; Tsao et al., 2008a, 2003a). As discussed above, the face patch system has been localized by a number of labs and represents a reliable localizer across groups and animals. Russ and Leopold (2015) found that the face feature model extracted from their naturalistic movie set produced a nearly identical set of patches to that obtained with an independently run face localizer. Moreover, they were able to localize the face patches with only 15 min of naturalistic viewing.
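A minimal forward-only stepwise selection can be written as follows. This is a generic textbook variant, assumed for illustration, that uses a partial F-test with a hypothetical entry threshold `p_enter`; it is not the specific implementation or criteria of Russ and Leopold (2015), and the feature names are placeholders for HRF-convolved feature models.

```python
import numpy as np
from scipy import stats


def rss(X, y):
    """Residual sum of squares of an OLS fit."""
    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def forward_stepwise(features, y, names, p_enter=0.001):
    """Greedy forward selection: at each step, add the candidate with the smallest
    partial-F p-value; stop when no candidate reaches p_enter."""
    n = len(y)
    X = np.ones((n, 1))                 # start from an intercept-only model
    remaining = list(range(features.shape[1]))
    selected = []
    while remaining:
        base = rss(X, y)
        best_j, best_p = None, 1.0
        for j in remaining:
            X_j = np.column_stack([X, features[:, j]])
            dof = n - X_j.shape[1]
            new = rss(X_j, y)
            f_stat = (base - new) / (new / dof)
            p = stats.f.sf(f_stat, 1, dof)
            if p < best_p:
                best_j, best_p = j, p
        if best_j is None or best_p >= p_enter:
            break
        selected.append(names[best_j])
        X = np.column_stack([X, features[:, best_j]])
        remaining.remove(best_j)
    return selected


rng = np.random.default_rng(2)
names = ["contrast", "luminance", "motion", "faces", "bodies", "scene_cuts"]  # hypothetical
features = rng.normal(size=(400, len(names)))
y = 1.5 * features[:, 3] + 1.0 * features[:, 2] + rng.normal(size=400)
print(forward_stepwise(features, y, names))
```

Because each candidate is tested against the model already built, the order of entry determines how shared variance is attributed, which is exactly the trade-off discussed in the preceding paragraph.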

Recent advances in deep learning approaches:

Beyond the aforementioned methods, recent advances in convolutional neural networks (CNNs) and deep learning also provide important insights into how best to leverage the visual complexity embedded in naturalistic viewing paradigms. Bao et al. (2020) used a deep network (AlexNet) trained on object classification to identify an object space network in the monkey inferotemporal cortex. Deep learning approaches had previously been used to explain the variance of cortical activity in humans watching natural videos. For instance, Wen et al. (2018) used a deep CNN, trained with supervised learning for image recognition, to model the feed-forward neural computation of the visual cortex. Han et al. (2019) developed a variational auto-encoder that could predict and decode cortical activity recorded with fMRI during a viewing experiment in humans. However, while these CNNs can predict cortical representations across most levels of visual processing, applying them to model the higher cognitive processes inherent in movies (such as storyline, social saliency, and plot) remains a challenge.
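Many CNN-based studies rely on a voxel-wise encoding model: the activations of a network layer are regressed onto each voxel's response, and the model is evaluated by how well it predicts held-out data. The Python sketch below shows only this regression step, with random numbers standing in for (hypothetical) layer activations already resampled to the fMRI acquisition rate and convolved with an HRF. Extracting features from an actual network such as AlexNet is outside its scope, and the fixed ridge penalty `alpha=10.0` is an assumption that would normally be chosen by cross-validation.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression: W = (X'X + alpha*I)^-1 X'Y, one column per voxel."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def columnwise_corr(A, B):
    """Pearson r between matching columns of A and B (one value per voxel)."""
    A = (A - A.mean(axis=0)) / A.std(axis=0)
    B = (B - B.mean(axis=0)) / B.std(axis=0)
    return (A * B).mean(axis=0)


rng = np.random.default_rng(3)
n_train, n_test, n_features, n_voxels = 600, 200, 100, 50
# Random stand-in for CNN layer activations per fMRI volume (hypothetical).
layer_acts = rng.normal(size=(n_train + n_test, n_features))
true_w = rng.normal(size=(n_features, n_voxels)) / np.sqrt(n_features)
bold = layer_acts @ true_w + rng.normal(size=(n_train + n_test, n_voxels))

# Contiguous split: first part of the movie for fitting, last part for testing.
W = ridge_fit(layer_acts[:n_train], bold[:n_train], alpha=10.0)
r_test = columnwise_corr(layer_acts[n_train:] @ W, bold[n_train:])
print(f"median held-out r = {np.median(r_test):.2f}")
```

With real movie data, training and test sets should be contiguous segments or separate runs rather than randomly interleaved volumes, because the temporal autocorrelation of the BOLD signal would otherwise inflate the apparent prediction accuracy.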

Functional Alignment through Hyperalignment:

An important assumption in neuroimaging studies is that different subjects' brain responses to the same information are similar. However, individual neuroanatomy varies widely. A critical step is therefore to normalize each subject's responses into a common 'functional' space, which improves cross-subject comparisons and thus the exploitation of a common movie localizer. To address this issue, a class of algorithms collectively termed hyperalignment (or functional alignment) has been developed. For example, response-based hyperalignment uses an iterative Procrustes transform to scale, rotate, and reflect voxel time series so that they lie in the same functional space across participants (Guntupalli et al., 2016; Haxby et al., 2011; Taschereau-Dumouchel et al., 2018). Guntupalli et al. (2016), for instance, showed that their hyperalignment model accounted for individual variability in coarse-scale topographies, such as retinotopy and category selectivity, and could produce better classification of movie segments than models based on standard anatomically aligned features across occipital, temporal, parietal, and prefrontal cortices. A second approach, the Shared Response Model, learns a joint singular value decomposition (joint-SVD) and projects subjects into a lower-dimensional common space (Chen et al., 2014, 2015).
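The core of response-based hyperalignment is the orthogonal Procrustes problem: finding the rotation/reflection R that best maps one subject's (time × voxel) response matrix onto a reference. The Python sketch below solves it with an SVD and wraps it in a simplified template-averaging loop. It is an illustration under stated assumptions (equal voxel counts per subject, no scaling term, a fixed number of iterations, simulated data), not the published hyperalignment procedure; `scipy.linalg.orthogonal_procrustes` offers an equivalent solver for the single-pair step.

```python
import numpy as np


def procrustes_rotation(source, target):
    """Orthogonal R minimizing ||source @ R - target||_F (rotation/reflection only)."""
    U, _, Vt = np.linalg.svd(source.T @ target)
    return U @ Vt


def hyperalign(subjects, n_iter=3):
    """Simplified template-based alignment: map every subject onto a common template,
    re-estimate the template as the mean of the aligned data, and repeat."""
    template = subjects[0].copy()       # start from an arbitrary reference subject
    for _ in range(n_iter):
        transforms = [procrustes_rotation(s, template) for s in subjects]
        template = np.mean([s @ R for s, R in zip(subjects, transforms)], axis=0)
    return transforms, template


def mean_voxel_corr(a, b):
    """Average over voxels of the Pearson r between matching columns."""
    a = (a - a.mean(axis=0)) / a.std(axis=0)
    b = (b - b.mean(axis=0)) / b.std(axis=0)
    return float((a * b).mean(axis=0).mean())


rng = np.random.default_rng(4)
n_time, n_vox = 200, 30
shared = rng.normal(size=(n_time, n_vox))  # simulated movie-driven response
subjects = []
for _ in range(4):
    q, _ = np.linalg.qr(rng.normal(size=(n_vox, n_vox)))  # random subject-specific "topography"
    subjects.append(shared @ q + 0.3 * rng.normal(size=(n_time, n_vox)))

transforms, template = hyperalign(subjects)
before = mean_voxel_corr(subjects[1], subjects[2])
after = mean_voxel_corr(subjects[1] @ transforms[1], subjects[2] @ transforms[2])
print(f"before: {before:.2f}, after: {after:.2f}")
```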

Inter-subject activity correlation:

The inter-subject correlation (ISC) method has been used extensively in human research to investigate topics ranging from temporal processing to memory and face processing (Chen et al., 2015, 2017; Hasson et al., 2012, 2008, 2004; Nguyen et al., 2019; Regev et al., 2013). Simony and Chang (2020) recently reviewed inter-subject correlation studies, highlighting the method's many uses. However, this approach has not been used on a large scale in NHP imaging, mostly because it requires more subjects than are included in a typical awake NHP neuroimaging study. Mantini et al. (2012b) nevertheless demonstrated its potential in NHPs. In this study, humans and NHPs watched the same Hollywood movie (The Good, the Bad, and the Ugly), and the authors used inter-species activity correlation (ISAC) to investigate functional homologies across species. They found that while many primary sensory cortical regions are preserved across species, a number of higher-order regions appear to be displaced between the two species, in a manner not simply explained by cortical expansion. Extending these methods to large groups of NHP subjects viewing more ethologically relevant stimuli could expand our understanding of inter-subject cognition, similar to current results from human research. Moreover, it would provide a unique opportunity to investigate functional homologies across primate species in a completely data-driven manner.
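The basic leave-one-out ISC computation is short. In the Python sketch below (simulated data; the subject count and voxel layout are hypothetical), each subject's voxel time course is correlated with the average time course of all other subjects who watched the same movie. The same correlation logic, applied between groups of different species, underlies the ISAC comparison described above, although the published ISAC pipeline involves additional steps not shown here.

```python
import numpy as np


def zscore(x):
    """Z-score each column (voxel) over time."""
    return (x - x.mean(axis=0)) / x.std(axis=0)


def leave_one_out_isc(data):
    """data: (subjects, time, voxels). For each subject, correlate each voxel's time
    course with the mean time course of all other subjects. Returns (subjects, voxels)."""
    n_subjects = data.shape[0]
    isc = np.empty((n_subjects, data.shape[2]))
    for s in range(n_subjects):
        others = np.delete(data, s, axis=0).mean(axis=0)
        isc[s] = (zscore(data[s]) * zscore(others)).mean(axis=0)
    return isc


rng = np.random.default_rng(5)
n_subjects, n_time = 6, 300
movie_driven = rng.normal(size=n_time)            # response shared by everyone watching
data = rng.normal(size=(n_subjects, n_time, 2))   # idiosyncratic noise, 2 voxels
data[:, :, 0] += movie_driven                     # voxel 0 follows the movie; voxel 1 does not
isc = leave_one_out_isc(data)
print(np.round(isc.mean(axis=0), 2))
```

Averaging the other subjects before correlating raises the SNR of the reference time course, which is one reason the method benefits from the larger groups mentioned above.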

Inter-subject beta correlations:

The inter-subject correlation method has recently been complemented by a data-driven inter-subject beta-correlation approach, in which beta coefficients can be compared across subjects of the same species or even across species (termed inter-species beta correlation, ISBC) (Caspari et al., 2018). For an ISBC analysis, one first performs a regular GLM analysis on the two independent fMRI data sets acquired in the two species. This analysis yields a beta value for each voxel and each condition of a given paradigm, i.e., a fingerprint of beta values for each voxel; the more conditions, the more informative the fingerprint. One can then perform a voxel-to-voxel correlation analysis of these beta fingerprints across species. Alternatively, one can average the beta fingerprints of all voxels within an independently defined ROI in one species and correlate this average fingerprint with those of all voxels in the other species. Unlike ISAC, this approach does not require the timing of trials and sub-trial components to be synchronized across subjects. For example, when the pace of trials is determined by the animal's task performance, ISAC cannot be used. Caspari et al. (2018) used the ISBC approach to compare shifts of selective spatial attention between humans and monkeys, experiments in which the timing of trials and sub-trial components can be completely desynchronized across subjects, so that ISAC is not applicable. The ISBC analysis, correlating the beta coefficients obtained in one species with those in the other, showed that specific regions within the superior parietal lobe are engaged during shifts of spatial attention. The richer the set of beta values (i.e., the more conditions in an experimental paradigm), the more powerful the ISBC approach, provided that each individual condition has a sufficient contrast-to-noise ratio, i.e., a sufficient signal-to-noise ratio (SNR) of the modulated signal. The same approach can be used for within- and across-species comparisons, not only with natural movie designs but also with regular, well-controlled block or event-related designs.
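The ROI-based variant of ISBC reduces to a Pearson correlation between two beta fingerprints. The Python sketch below assumes that GLM betas have already been estimated in both species for the same conditions in the same order (all arrays are simulated and the voxel and condition counts are hypothetical); it correlates the average fingerprint of an ROI in species A with the fingerprint of every voxel in species B.

```python
import numpy as np


def isbc_map(roi_betas_a, betas_b):
    """roi_betas_a: (voxels in ROI, conditions) betas from species A.
    betas_b: (voxels, conditions) betas from species B, same condition order.
    Returns the Pearson r between the ROI-average fingerprint and each B voxel."""
    fingerprint = roi_betas_a.mean(axis=0)
    f = (fingerprint - fingerprint.mean()) / fingerprint.std()
    b = (betas_b - betas_b.mean(axis=1, keepdims=True)) / betas_b.std(axis=1, keepdims=True)
    return b @ f / f.size  # mean product of z-scores = Pearson r


rng = np.random.default_rng(6)
n_conditions = 12
profile = rng.normal(size=n_conditions)                # simulated condition profile of an area
roi_a = profile + rng.normal(size=(20, n_conditions))  # 20 ROI voxels in species A
betas_b = rng.normal(size=(100, n_conditions))         # 100 voxels in species B
betas_b[:10] = profile + 0.5 * rng.normal(size=(10, n_conditions))  # 10 "homologous" voxels
r = isbc_map(roi_a, betas_b)
print(np.round(r[:10].mean(), 2), np.round(r[10:].mean(), 2))
```

With only 12 conditions, each voxel's correlation is estimated from 12 points, which is why, as noted above, richer condition sets make the approach more powerful.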

Inter-subject representational similarity analysis:

One limitation of ISC, and likewise of ISAC, is that it operates at the level of subject pairs, whereas behavioral measures (e.g., responses to movies) are obtained for individual subjects. It has recently been proposed to treat a subjects-by-subjects ISC matrix as a “brain similarity” matrix, construct a corresponding “behavioral similarity” matrix, and use representational similarity analysis (RSA) to identify brain regions where subjects who are more similar in their behavior are also more similar in their neural responses; this approach is termed inter-subject RSA (IS-RSA) (Finn et al., 2020). Each viewer is associated with behavioral scores (if any) and a pattern of brain activity (e.g., time series from a region of interest during movie viewing). Weighted graphs obtained from the similarity matrices can then be computed, compared, and tested statistically at the level of subject pairs using IS-RSA. This method is not specific to movie paradigms; it is well suited to any paradigm that involves behavioral data and can be applied to detect shared structure between brain and behavioral data (Bacha-Trams et al., 2018; Chen et al., 2020; Gruskin et al., 2020; Jääskeläinen et al., 2016; Mantini et al., 2011; Nguyen et al., 2019; Nummenmaa et al., 2012; Saalasti et al., 2019; Tei et al., 2019; van Baar et al., 2019). As with ISAC and ISBC, IS-RSA can also be extended to compare activity patterns across species. For example, inter-species representational similarity analysis has been used to provide data-driven evidence linking the different face patches in humans and monkeys (Zhu et al., 2015).
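A minimal IS-RSA in the spirit of Finn et al. (2020) can be sketched as follows: build a subject-by-subject ISC matrix from ROI time courses, build a behavioral similarity matrix (here the "nearest-neighbor" model, in which subjects with closer scores are more similar), correlate the two upper triangles with a Spearman correlation, and assess significance by permuting subject labels. The behavioral scores and time courses are simulated, and the similarity model and permutation count are assumptions rather than prescribed choices.

```python
import numpy as np
from scipy.stats import rankdata


def spearman(a, b):
    """Spearman rho as the Pearson correlation of ranks."""
    return float(np.corrcoef(rankdata(a), rankdata(b))[0, 1])


def is_rsa(roi_ts, scores, n_perm=1000, seed=0):
    """roi_ts: (subjects, time) ROI time courses; scores: (subjects,) behavior.
    Returns Spearman rho between brain and behavioral similarity, and a permutation p."""
    brain_sim = np.corrcoef(roi_ts)                         # subject-by-subject ISC
    behav_sim = -np.abs(scores[:, None] - scores[None, :])  # nearest-neighbor model
    iu = np.triu_indices(len(scores), k=1)                  # unique subject pairs
    observed = spearman(brain_sim[iu], behav_sim[iu])
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        p = rng.permutation(len(scores))                    # shuffle subject labels
        null[i] = spearman(brain_sim[iu], behav_sim[np.ix_(p, p)][iu])
    p_value = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, p_value


rng = np.random.default_rng(7)
n_subjects, n_time = 20, 200
scores = rng.random(n_subjects)      # hypothetical behavioral score per subject
shared = rng.normal(size=n_time)     # movie-driven component common to all subjects
modulated = rng.normal(size=n_time)  # component whose weight depends on the score
roi_ts = (shared + 3.0 * (scores[:, None] - 0.5) * modulated
          + rng.normal(size=(n_subjects, n_time)))
rho, p_value = is_rsa(roi_ts, scores)
print(f"rho = {rho:.2f}, permutation p = {p_value:.3f}")
```

Subject labels, rather than individual matrix cells, are permuted because the pairwise similarities are not independent: every subject contributes to many pairs.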

Automated annotations & automated feature extraction: