Abstract

Acquisition of natural language has been shown to fundamentally impact both one’s ability to use the first language, and the ability to learn subsequent languages later in life. Sign languages offer a unique perspective on this issue, because Deaf signers receive access to signed input at varying ages. The majority acquires sign language in (early) childhood, but some learn sign language later - a situation that is drastically different from that of spoken language acquisition. To investigate the effect of age of sign language acquisition and its potential interplay with age in signers, we examined grammatical acceptability ratings and reaction time measures in a group of Deaf signers (age range: 28–58 years) with early (0–3 years) or later (4–7 years) acquisition of sign language in childhood. Behavioral responses to grammatical word order variations (subject-object-verb vs. object-subject-verb) were examined in sentences that included: 1) simple sentences, 2) topicalized sentences, and 3) sentences involving manual classifier constructions, uniquely characteristic of sign languages. Overall, older participants responded more slowly. Age of acquisition had subtle effects on acceptability ratings, whereby the direction of the effect depended on the specific linguistic structure.

Keywords: age of acquisition, age, sign language, Austrian Sign Language

Introduction

Two factors that are known to affect language ability are age and the point in life at which a language was acquired (i.e. from birth, in childhood or as an adult) (Calabria et al., 2015; Capilouto et al., 2016; Malaia & Wilbur, 2010). Exposure to a natural language in early infancy, which triggers language acquisition, is a crucial factor determining not only the eventual linguistic proficiency, but also the cognitive and socio-emotional development (Cheng & Mayberry, 2018). The majority of Deaf children do not have access to sign language from birth.1 This is because a) most Deaf children have hearing parents and b) the educational system for Deaf children does not provide access to sign language by early intervention from birth. Hence, many Deaf children acquire sign language in Kindergarten or in Deaf school primarily from Deaf peers.2 Some Deaf children get access to sign language even later in life, i.e. in adolescence or as young adults (Mayberry, 2007; Mayberry & Kluender, 2018). Only ~5% of Deaf children are born to Deaf parents and acquire sign language from birth (Mitchell & Karchmer, 2004, for American Sign Language, ASL). Because the majority of Deaf children do not have full access to sign language in early life and for many of them the acquisition of spoken language through hearing aids or cochlear implants is problematic, many Deaf children suffer from language deprivation, which may have tremendous negative effects on child development (Humphries et al., 2014; Hall et al., 2019).

The present study focused on the relationship between the effects of age of language acquisition (AoA) and age on three distinct levels of linguistic processing (pragmatic, semantic and syntactic) in Deaf signers of Austrian Sign Language (ÖGS or “Österreichische Gebärdensprache”). In the following sections, we briefly summarize separate bodies of literature that investigated effects of AoA and age on different levels of linguistic processing, and outline linguistic features of sign languages that were critical for the research design.

Relationship between Aging and Linguistic Processing

Studies examining the effect of aging on language proficiency suggest that healthy aging (i.e. aging without any neurological disorders) impacts spoken language abilities in different ways: while some aspects of linguistic processing remain stable across the lifespan, others either decline or improve over time. Specifically, semantic processing and lexical retrieval are relatively unaffected by age (Beese et al., 2018).

On the other hand, both the processing of more complex syntactic constructions, and the retrieval of phonological and orthographic information about a word decline with age (Wingfield et al., 2003; Thornton & Light, 2006). Language production has been shown to be more vulnerable to negative effects of aging, as compared to language comprehension (e.g. James & MacKay, 2007). For example, older speakers produce more off-topic utterances (Arbuckle et al., 2000) and experience more tip-of-the-tongue (TOT) states3 (Abrams, 2008; Evrard, 2002). While older speakers’ language comprehension abilities remain intact at the single-word level (e.g. Burke & MacKay, 1997), comprehension and retention of sentences with more complex grammatical structures, or ambiguous sentences, become more difficult with age (e.g. Johnson, 2003; Burke & Shafto, 2008).

At the same time, older speakers have an increased vocabulary (e.g. Verhaeghen, 2003; Park et al., 2002). Older adults are also better storytellers (James et al., 1998; Kemper et al., 1989), and can be more accurate in lexical decision tasks (e.g. James & MacKay, 2007). Recent research shows that effects of age on language processing are observed as early as 40 years of age (Fernandez et al., 2019). The present study focused on sign language processing of Deaf participants between 28 and 58 years of age.

Studies examining the effect of aging on language processing have almost exclusively focused on spoken language (in auditory or written form), as opposed to sign language. As a result, less is known about the sign language ability trajectory over the lifespan, or about age effects on proficiency in linguistic subdomains of sign language – lexical, syntactic, or pragmatic. There exists some research in atypical sign language processing in aging populations, such as in older signers suffering from dementia (Atkinson et al., 2015), or Parkinson’s disease (Brentari et al., 1995). The trajectory of sign language processing during the lifespan in neurologically healthy signers has not been studied. Knowledge about the effects of aging on sign language processing can provide a more comprehensive answer to the question of how aging influences language processing, e.g. by comparing the effects of age across modalities in which language is expressed. Only the investigation of both spoken and sign languages can provide differentiation between the effect of aging due to the decline of perceptual abilities (i.e. auditory and visual perception abilities) and the effect of aging on linguistic processing.

Relationship between Age of Acquisition and Linguistic Processing

In spoken language research, age of acquisition (AoA) is mainly considered in the context of L2 learning, as the exposure to L1 in the hearing community is universally early. The effects of AoA on L1 spoken language acquisition could only be assessed in exceptional cases of isolated children who did not receive any language input in early childhood (e.g. Fromkin et al., 1974). Studies examining the effects of AoA on language processing show that the acquisition of a natural language early in life is a crucial factor that impacts L1 proficiency, and the ability to learn additional languages later in life. An L1 acquired in infancy facilitates L2 learning regardless of the language modality (spoken or sign) of the early L1 or the later acquired L2 (cf. Mayberry et al., 2002).

Sign languages offer a unique perspective on the effects of AoA, because the majority of signers acquire their primary language (i.e. sign language) later in life. The vast majority of Deaf children are born to hearing parents and have no access to sign language from birth. Later learners of L1 can provide unique data for studying the effects of AoA on different components of language ability (e.g. potential differential effects of AoA timing on syntax, pragmatics, semantics).

At the abstract (modality-independent) level of linguistic analysis, sign languages (including ÖGS) are similar to spoken language in that they are organized hierarchically: lexical items, constructed from phonetic (distinctive, sub-lexical) features, are combined into sentences using inflectional4 and derivational5 morphemes (Wilbur, 2015, 2018), while sentence structure is governed by syntactic rules (Padden, 1983, 1988; Schalber, 2015). Sign languages are produced by manual and non-manual means. Each sign consists of a handshape (and its orientation), a place of articulation and (most of the time) a specific movement pattern. Non-manual markings include linguistically significant expressions of the face (eyebrow raises/furrows, blinks, mouth expressions), head (nods, tilts), and posture (shoulder stance changes). Non-manual markings are relevant on all levels of sign language grammar, although the inventories and linguistic relevance of non-manual markings differ across sign languages (Wilbur, 2000).

Prior research has suggested that late AoA detrimentally affects signers’ proficiency in both morphology and syntax (Malaia et al., 2020; Emmorey et al., 1995; Boudreault & Mayberry, 2006). Production studies examining ultimate attainment at the morphosyntactic level indicated that Deaf non-native signers are less accurate in shadowing ASL narratives6 or recalling ASL sentences (Mayberry & Fischer, 1989). With regard to comprehension, late learners of sign language L1 perform worse than Deaf native signers: they are less sensitive to verb agreement violations (Emmorey et al., 1995), and less accurate in grammaticality judgment for ASL sentences (Boudreault & Mayberry, 2006). More recent research investigating school-aged native and non-native Deaf signers confirms that later exposure to sign language negatively impacts signers’ grammaticality judgments (e.g. Novogrodsky et al., 2017). While AoA has an impact on late learners’ linguistic knowledge, their cognitive function is unimpaired (as assessed by performance on non-verbal cognitive tasks; cf. Mayberry & Fischer, 1989; Mayberry & Eichen, 1991).

Phonological7 and lexical processing in sign language are also strongly influenced by AoA; the two have been often studied in concert, as phonological features strongly affect lexical processing in primarily monosyllabic sign languages. Different error patterns have been reported for native and non-native signers in narrative shadowing and recall: while native signers’ errors are more often related to the semantics of the stimulus (i.e. they use a different semantically related sign instead of the target sign), non-native signers make errors which are related only to the phonological form of the sign (i.e. replacing the target sign by a phonologically related sign that shares two of three formational parameters with the stimulus sign: handshape, location, or movement) resulting in a non-meaningful response sentence (Mayberry & Fischer, 1989). These phonological-lexical errors in language production in late learners are negatively correlated with both signers’ comprehension accuracy, and with AoA (Mayberry & Fischer, 1989; Mayberry & Eichen, 1991). Eye-tracking has indicated that native Deaf signers are sensitive to the phonological structure of signs during lexical recognition, while non-native Deaf signers are not. This finding suggests different organization of late signers’ mental lexicon, as compared to early learners’ (e.g. Lieberman et al., 2015). Native and non-native Deaf signers also differ in comprehension of phonological features (Hildebrandt & Corina, 2002; Orfanidou et al., 2010), and in performance on lexical decision tasks (Carreiras et al., 2008; Dye & Shih, 2006).

Remarkably, the length of language experience in signers who acquired sign language later in life does not correlate with proficiency (Newport, 1990; Mayberry & Eichen, 1991). For example, an investigation of signers’ performance on tasks requiring knowledge of complex ASL verb morphology8 (e.g. verb agreement; see below for a more detailed description of verb agreement in sign languages) indicated that linguistic performance declined as a linear function of AoA, even though all participants had at least 30 years of experience with ASL (Newport, 1990). Thus, the delay in acquisition of sign language irretrievably affects language processing at the levels of phonology, semantics, and syntax.

The nature of the experimental task is an important factor in performance assessments for AoA studies. The existing literature indicates that similar linguistic structures may be differently affected by AoA depending on the nature of task requirements in the experiment. For example, while Newport (1990) reported no effect of AoA on basic word order processing in late signers, another study (Boudreault & Mayberry, 2006) indicated a significant effect. While Newport’s (1990) study used a sentence-to-picture matching task asking about the implicit comprehension of the meaning (semantics), Boudreault and Mayberry (2006) asked signers to judge signed sentences with respect to grammaticality (i.e. relying on signers’ metacognition and explicit knowledge of syntax), which might have yielded the differences in processing.

Relationship between age and AoA in sign language: study design

Previous research reported effects of AoA on sign language processing, as well as effects of aging on spoken language abilities. It is an open question, however, whether and how the factors of AoA and Age affect different levels of linguistic processing in signers. Besides effects of word order, we studied three distinct levels of linguistic structures in ÖGS (semantic, syntactic, pragmatic). Relevant linguistic features of word order, topicalization, and classifiers in sign languages are summarized below.

Verb agreement and word order in sign languages (syntactic level).

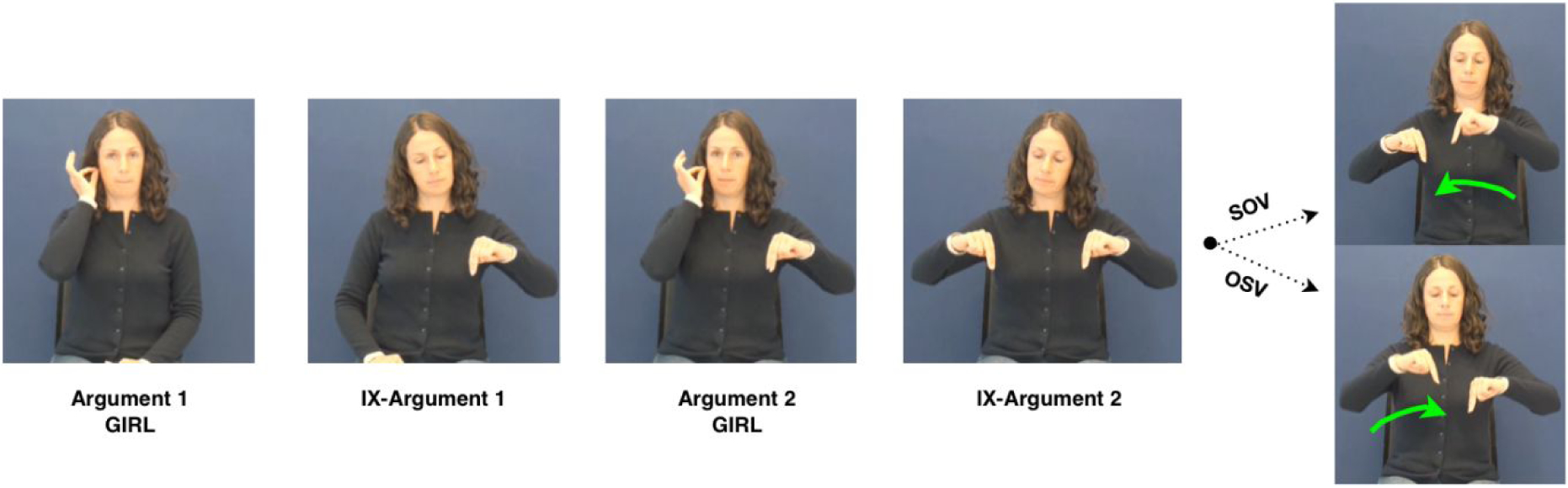

In sign languages, discourse referents can be referenced in the three-dimensional signing space in front of the signer by manual index signs (pointing signs). Verb agreement can be expressed by spatial modification of the beginning and ending location of the movement of the verb sign. Typically, the verb that has a directional motion component (often called “agreeing verb”) moves from the location associated with the subject argument towards the location associated with the object argument (e.g. Fischer, 1975; Padden, 1983; see Figure 1). Some verbs indicate verb agreement by hand orientation (sometimes in addition to path movement), whereby the palm and/or the fingertips face towards the location associated with the object argument (so-called “facing”) (Brentari, 1989). The basic and preferred word order of ÖGS is subject-object-verb (SOV) (Wilbur, 2002; Krebs et al., 2018; Krebs et al., 2019a, b). However, object-subject-verb (OSV) orders are possible (and grammatical) in sentences with agreeing verbs or classifier signs (Krebs, 2017; Krebs et al., 2018). In these sentences, the referent at which an agreeing verb begins is considered the subject (regardless of whether it is the first or the second noun in the sentence), and the referent at which an agreeing verb terminates is the object.

Figure 1.

A static word-by-word representation of an ÖGS sentence in SOV vs. OSV (simple word order) condition. In both constructions (SOV and OSV) the argument NPs (in this case GIRL) were signed in the same order and were referenced at the same points in space; i.e. the first argument was always referenced at the left side of the signer (IX = index/pointing sign). The path movement of the sentence-final critical verb sign (agreeing verb HELP) unambiguously marks the argument structure by movement from subject to object location. The signs indicating argument structure are marked by arrows. The sentence shown means: “The girl helps another girl (either the one referenced on the left side helps the one referenced on the right side or vice versa).” The figure demonstrates differences in the conditions: the sentences are signed in full for each specific stimulus.

Topic marking in sign languages (pragmatic level).

Topic, as a marker of information structure (pragmatics), has the same function in sign languages as in spoken languages: the topicalized argument is in focus, i.e. emphasized (Wilbur, 2012). Topic marking changes the interpretation of the sentence at the level of information structure/pragmatics. In ÖGS, as well as in other sign languages, topic marking is expressed by non-manual markings, and the topic is in sentence-initial position (often followed by a pause) (Hausch, 2008; Ni, 2014; Aarons, 1996). Topicalized stimuli in the present study used the same sentences as the simple condition; however, the first argument in topicalized sentences carried non-manual topic marking (see Figure 2).

Figure 2.

A representation of non-manual topic marking in ÖGS. In the stimulus material used in the present study topic marking accompanies the sentence-initial argument and the index sign referencing this argument. Topic marking is expressed by raised eyebrows, wide eyes, chin directed towards the chest and an enhanced mouthing.

Classifiers in sign languages (semantic level).

Classifiers in sign languages are specific handshapes that are bound to verbs to express handling, motion, and/or location of referents (Frishberg, 1975); classifiers express specific meanings, and are part of the lexicon in sign languages (Brentari & Padden, 2001)9. The classifier handshapes used in the present study denote physical properties of the two entities/human beings (see Figure 3). It is important to note that classifiers are linguistically complex constructions, and not mere gestures, as indicated by the protracted and error-prone course of L1 and L2 acquisition of sign language classifiers (Marshall & Morgan, 2015; Newport, 1990) (see Appendices A and B for a more detailed description of the stimuli).

Figure 3.

A static word-by-word representation of ÖGS sentence in SOV vs. OSV conditions with classifier predicates. GIRL CL-LOCATED3a GIRL CL-LOCATED3b 3aCL-JUMP3b, “Two girls stand opposite each other and the girl on the left jumps towards the girl on the right”, and GIRL CL-LOCATED3a GIRL CL-LOCATED3b 3bCL-JUMP3a, “Two girls stand opposite each other and the girl on the right jumps towards the girl on the left.” The sentence-final classifier predicate indicates the spatial relation between the arguments by movement from subject to object location. Both arguments were referenced in space by a classifier handshape (in this case the two referents are placed in space in a way indicating that they are standing opposite each other with more distance between them). Then, either the hand representing the first referent (signer’s left hand in SOV orders) or the second referent (signer’s right hand in OSV orders) started to move; i.e. indicating the active referent. The sentence shown means: “Two girls stand opposite each other and one of them (either the one on the left or the one on the right side) jumps towards the other.” The signs indicating argument structure are marked by arrows. The figure demonstrates differences in the conditions: the sentences are signed in full for each specific stimulus.

We investigated the effects of AoA and Age on the processing of the three distinct levels of linguistic structures in ÖGS (semantic, syntactic, pragmatic) described above using a linguistic acceptability task. Using this task allows us to compare our data with previous research on AoA effects on sign language sentence processing that used similar tasks (e.g. Emmorey et al., 1995; Boudreault & Mayberry, 2006; Henner et al., 2016; Novogrodsky et al., 2017).

All stimulus sentences in our study included two argument noun phrases (subject and object) and a sentence-final verb (which is the normal position in ÖGS). The stimuli varied in word order (subject-object-verb, SO, vs. object-subject-verb, OS), both of which are accepted as grammatical by native signers (Krebs, 2017). Additionally, one third of the stimulus sentences contained topic constructions10 (pragmatic marker), and another third contained verbal classifier constructions. The stimuli used in the study are fully described in the Appendix (Table 1) and illustrated in Figures 1–3.

The hypotheses tested in the present study were framed based on results of previous studies examining the effects of AoA on various linguistic structures. For example, previous research on ASL indicated that the processing of basic word order is unaffected or minimally affected by AoA (Newport, 1990; Boudreault & Mayberry, 2006). Newport (1990) reported that AoA does not affect comprehension and production of basic sign order in ASL. In addition, Boudreault and Mayberry (2006) observed that although late AoA does have a detrimental effect on comprehension of both simple and complex structures in signed sentences, comprehension of basic sign order is relatively intact in contrast to more complex constructions (as indicated by the accuracy of participants’ responses; response latency was not affected by AoA).

The effects of AoA on the processing and comprehension of pragmatic features (such as topic marking) have not been previously examined in sign languages. However, late learners of sign languages have been reported to not produce non-manual topic markings; this might indicate that late learners experience difficulties at this level of linguistic structure (Cheng & Mayberry, 2018). Finally, comprehension of specific semantic classes of words, such as classifier constructions, has been reportedly affected by AoA (Boudreault & Mayberry, 2006). However, signers showed relatively high sensitivity with respect to the grammatical structure of classifier constructions. The same study indicated an interaction between AoA and grammaticality of the stimulus: late signers made more errors in the task when it came to ungrammatical sentences. However, ungrammatical sentences with classifiers were less affected by AoA, in contrast to other morpho-syntactically complex constructions. Yet, despite relatively intact comprehension of classifiers, late learners have been reported to produce fewer classifiers in comparison to native signers (Newport, 1990). This finding suggests a difficulty late learners might have in integrating semantics of classifiers with the syntactic structure of the sentence in production.

To assess signers’ comprehension of various linguistic structures, we designed a linguistic acceptability task, which yielded acceptability rating and response time data. Participants were shown videos of three sentence types: simple sentences, sentences with a non-manual topic marking, and sentences in which a classifier verb was used. Sentences in each of the conditions were manipulated such that they varied in word order - SOV vs. OSV. Participants’ ages and ages of sign language acquisition (AoA) varied considerably, providing a testing ground for assessing relative effects of age and AoA on syntactic, semantic, and pragmatic processing in ÖGS. Based on the inferences from previous research, this design aimed to test the following hypotheses:

Age is expected to affect reaction times, leading to overall slower reaction times in older participants. At the same time, older participants have more experience with language, and are thus likely to rate a variety of linguistic structures as more acceptable.

Earlier age of sign language acquisition (AoA) is expected to facilitate syntactic processing. Early learners (in comparison with later learners) are thus expected to give higher acceptability ratings to sentences with more complex syntactic structures, such as sentences with OSV word order.

Later age of sign language acquisition is hypothesized to be associated with a greater reliance on semantic/iconic processing (classifiers) than on syntactic or pragmatic processing.

Methods

Materials and Procedure

Participants were presented with video clips of full grammatical sentences in ÖGS of three types: 1) simple word order sentences, 2) topic constructions, and 3) classifier constructions; each sentence conformed either to SOV or to OSV word order. Forty stimulus sentences were presented for each of the six conditions. All sentences were grammatical and acceptable to native signers (for examples of the experimental conditions see Table 1, Appendix B). Participants were asked to watch the videos of signed sentences and give an acceptability judgement for each sentence on a 7-point Likert scale (1 stood for ‘that is not ÖGS at all’; 7 for ‘that is good ÖGS’). After each video (i.e. after each sentence), a green question mark appeared for 3000 ms. The participants were instructed to give their ratings by a button press on the keyboard when the question mark appeared. Missing responses or responses made after the question mark disappeared (3000 ms after the video) were not included in the analysis. The sentences were presented in pseudo-randomized order (see Appendices A, B and C for further details on materials and procedure).
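
The exclusion rule described above can be illustrated with a minimal sketch. It is not the authors' analysis code: the `Trial` record, its field names, and the condition labels are hypothetical, and the sketch assumes that latency is measured from the onset of the question mark.

```python
from dataclasses import dataclass
from typing import List, Optional

RESPONSE_WINDOW_MS = 3000  # the question mark stays on screen for 3000 ms (from the text)


@dataclass
class Trial:
    participant: str          # hypothetical participant label
    condition: str            # hypothetical condition label, e.g. "simple_SOV"
    rating: Optional[int]     # 1-7 Likert rating; None if no button was pressed
    rt_ms: Optional[float]    # assumed: latency from question-mark onset; None if missing


def is_valid(trial: Trial) -> bool:
    """True if a 1-7 rating was given while the question mark was visible."""
    if trial.rating is None or trial.rt_ms is None:
        return False
    return 1 <= trial.rating <= 7 and 0 <= trial.rt_ms <= RESPONSE_WINDOW_MS


def filter_trials(trials: List[Trial]) -> List[Trial]:
    """Drop missing and late responses before analysis."""
    return [t for t in trials if is_valid(t)]


# Made-up example trials, not data from the study.
trials = [
    Trial("p01", "simple_SOV", 7, 812.0),
    Trial("p01", "topic_OSV", None, None),       # no response
    Trial("p01", "classifier_OSV", 5, 3250.0),   # pressed after the window closed
]
kept = filter_trials(trials)
print([t.condition for t in kept])
```

Only the first made-up trial survives the filter; the other two correspond to the two exclusion cases named in the procedure (missing and late responses).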

Participants

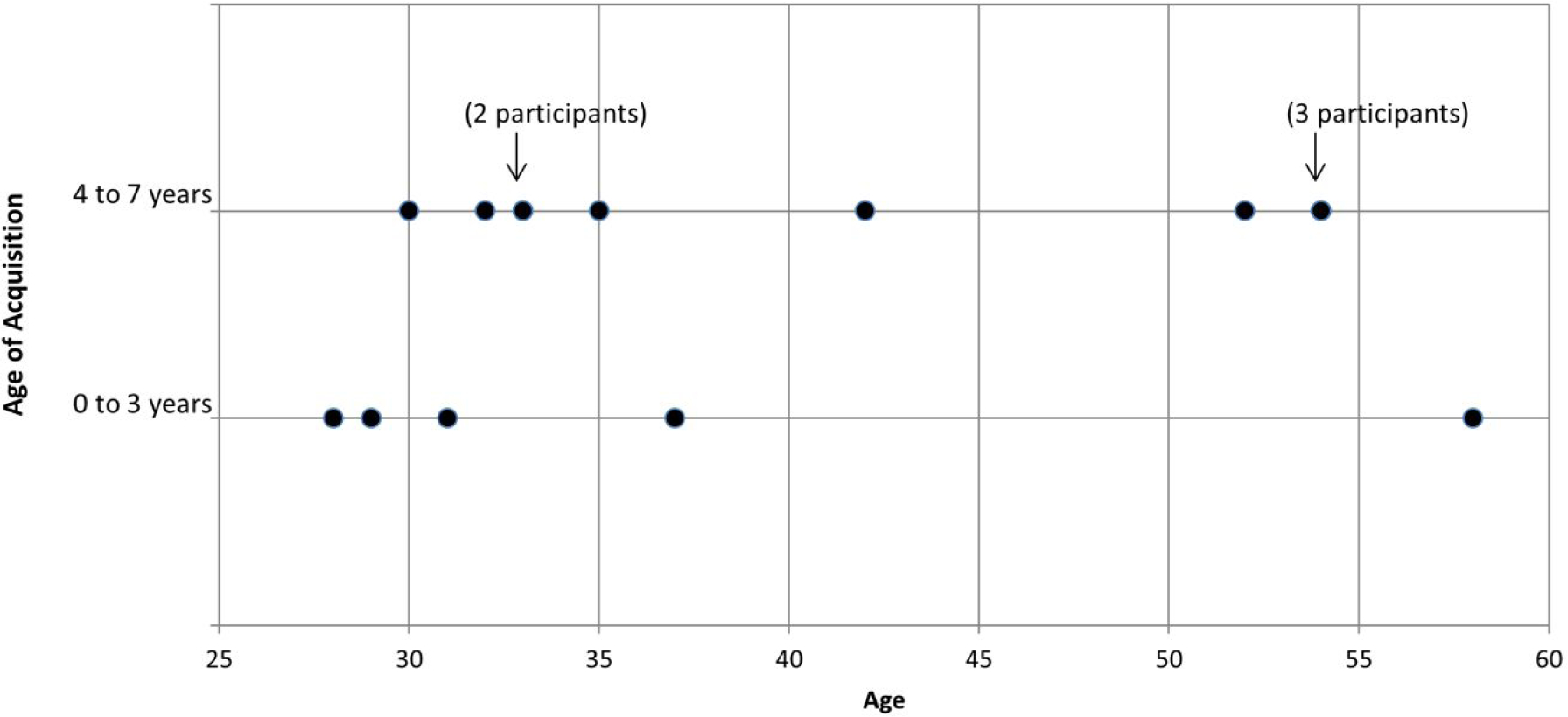

15 participants (F N=8, M N=7, age M=40.13, SD=11.03; age range 28 to 58 years) took part in the study. All were born Deaf, or lost their hearing early in life. Participants came from different areas of Austria and acquired ÖGS at varying ages. Due to privacy concerns, age of sign language acquisition was coded within approximate ranges: 0–3 years of age (N=5) and 4–7 years of age (N=10); an age/AoA distribution plot is presented in Figure 4. All of the participants used ÖGS as their primary language in daily life, and were members of the Deaf community in Austria. Participants’ proficiency was independently evaluated as part of the consent procedure by an ÖGS interpreter; only data from proficient participants who understood and carried out the acceptability rating task correctly was used in the analysis. Each participant received 30€ as reimbursement.

Figure 4.

The two groups of AoA (A: AoA between 0–3; B: AoA between 4–7) plotted against Age ranging from 28–58 years of age. Three participants were 54 years old and two participants were 33 years old (marked by the number in parentheses above the data point). Slight collinearity between AoA and Age (R²=.05) is observed.

Data analysis

The design of the experiment included the between-subjects variable AoA (with the two groups: 0–3 years of age and 4–7 years of age), and the within subjects variables (word) Order (SO, OS) and (linguistic) Structure (simple sentence, topic orders, classifiers) (see Table 1 in Appendix B). Age (centered) was included as a continuous covariate. The statistical analyses for the two dependent variables acceptability rating and reaction time were conducted using mixed-effects models (see Appendix D, model specification).
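
As an illustration of how the predictors in this design might be prepared, the sketch below centers Age and applies sum-to-zero (deviation) coding to the categorical factors. The coding scheme is an assumption made for illustration; the actual model specification is given in Appendix D. All function and variable names are hypothetical, and the ages are made up.

```python
from statistics import mean

# Assumed sum-to-zero (deviation) codes; the study's actual contrasts are
# specified in Appendix D and may differ from this illustration.
STRUCTURE_CODES = {"classifier": (1, 0), "topic": (0, 1), "simple": (-1, -1)}
ORDER_CODES = {"OS": 1, "SO": -1}
AOA_CODES = {"0-3": 1, "4-7": -1}


def center(values):
    """Subtract the sample mean so that 0 corresponds to the average age."""
    m = mean(values)
    return [v - m for v in values]


def design_row(age_centered, aoa, order, structure):
    """Fixed-effect predictors for one trial under the assumed coding."""
    s_classifier, s_topic = STRUCTURE_CODES[structure]
    return {
        "age_c": age_centered,
        "aoa": AOA_CODES[aoa],
        "order": ORDER_CODES[order],
        "structure_classifier": s_classifier,
        "structure_topic": s_topic,
    }


ages = [30, 40, 50]        # made-up ages, not the participants' ages
ages_c = center(ages)      # centered around the mean of the made-up ages
row = design_row(ages_c[0], "0-3", "OS", "classifier")
print(row)
```

Under a coding of this kind, each coefficient for a factor level is read as a deviation from the grand mean rather than from a reference level, and centering Age makes the remaining effects interpretable at the average age of the sample.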

Mean acceptability ratings and mean response times were calculated based on AoA (ÖGS acquisition between 0–3 years of age, N=5; between 4–7 years of age, N=10). We also calculated mean acceptability ratings and response times for participants before and after age 40 (younger than 40 years of age, N=9; older than 40 years of age11, N=6).
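
A descriptive summary of this kind can be sketched as follows. The record layout and the `summarize` helper are hypothetical, the ratings are made up, and the use of the sample standard deviation is an assumption, since the text does not state which SD formula was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(records, group_key, value_key):
    """Return {group: (mean, sample SD, n)} of value_key, grouped by group_key."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[group_key]].append(record[value_key])
    return {g: (mean(v), stdev(v), len(v)) for g, v in grouped.items()}


# Made-up ratings for illustration only, not data from the study.
records = [
    {"aoa": "0-3", "rating": 6},
    {"aoa": "0-3", "rating": 5},
    {"aoa": "0-3", "rating": 7},
    {"aoa": "4-7", "rating": 4},
    {"aoa": "4-7", "rating": 6},
]
summary = summarize(records, "aoa", "rating")
for group, (m, sd, n) in summary.items():
    print(f"AoA {group}: M={m:.2f}, SD={sd:.2f}, N={n}")
```

The same helper could be applied to response times, or to an age-group label instead of the AoA group, by changing the two key arguments.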

Results

Acceptability ratings

Mean acceptability ratings for the reversed-video filler condition confirmed behavioral compliance of the participants with the task (Mean ratings for the filler items: 0–3 AoA group: M=1.96, SD=1.36; 4–7 AoA group: M=1.43, SD=1.16).12 Overall, the mean acceptability ratings ranged from 4.19 to 6.96 (M=5.75, SD=1.55).

The mixed-effects model for acceptability ratings revealed significant main effects of linguistic structure (Table 1, significant results; also see Appendix E for all results).

Table 1.

Acceptability ratings: summary of significant results

| Predictors | Estimates | Standard error | z-value | p |
|---|---|---|---|---|
| Structure [Classifier sentences] | −0.37 | 0.15 | −2.51 | < 0.05 |
| Structure [Topic sentences] | 0.22 | 0.09 | 2.42 | < 0.05 |
| Structure [Classifier sentences] : AoA [0–3 group] | 0.40 | 0.06 | 6.31 | < 0.001 |
| Structure [Topic sentences] : AoA [0–3 group] | −0.20 | 0.06 | −3.20 | < 0.01 |
| Age : Word order [OS] | −0.008 | 0.004 | −1.86 | 0.06 |
| Age : Word order [OS] : AoA [0–3 group] | −0.009 | 0.004 | −2.08 | < 0.05 |

Classifier sentences were rated lower than average (i.e. lower than the grand mean over all conditions). Topic sentences were rated higher than average. In the analysis of acceptability ratings, Age and AoA effects were specific to linguistic structures as revealed by two- and three-way interactions discussed further.
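
For readers who wish to relate the z-values in Table 1 to the reported significance levels, the following sketch computes two-sided p-values under a standard normal reference distribution. That the reported p-values were obtained this way is an assumption; the function name and the dictionary labels are illustrative only.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic under a standard normal reference."""
    return math.erfc(abs(z) / math.sqrt(2))


# z-values as printed in Table 1 (rounded to two decimals).
table_z = {
    "Structure [Classifier]": -2.51,
    "Structure [Topic]": 2.42,
    "Structure [Classifier] : AoA [0-3]": 6.31,
    "Structure [Topic] : AoA [0-3]": -3.20,
    "Age : Word order [OS]": -1.86,
    "Age : Word order [OS] : AoA [0-3]": -2.08,
}
for name, z in table_z.items():
    print(f"{name}: z = {z:+.2f}, p = {two_sided_p(z):.4f}")
```

Under this assumption, each computed value falls on the same side of the threshold as reported in the table; for example, z = −1.86 corresponds to p ≈ 0.06, just above the conventional 0.05 level.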

Two-way interactions that reached significance included interactions of Age of acquisition and sentence type, indicating that classifier sentences were rated higher within the 0–3 AoA group compared to the 4–7 AoA group (sentences with classifiers: 0–3 AoA group: M=5.66, SD=1.40; 4–7 AoA group: M=5.28, SD=1.92), and topic sentences were rated lower within the 0–3 AoA group compared to the 4–7 AoA group (topic sentences: 0–3 AoA group: M=5.74, SD=1.37; 4–7 AoA group: M=6.08, SD=1.30).

The three-way interaction between Age and Word Order with AoA indicated that older participants from the 0–3 AoA group gave lower acceptability ratings to OS orders (Figure 6). Figure 6 further illustrates the trend that acceptability ratings for both OS and SO word orders increased as signers were aging.

Figure 6.

Older participants from the 0–3 AoA group gave lower acceptability ratings to OS orders. Centered age (AGEC) is presented on the x-axis (note that the x-axis is scaled so that centered ages appear equidistant, even though they are not); estimated marginal means are presented on the y-axis.

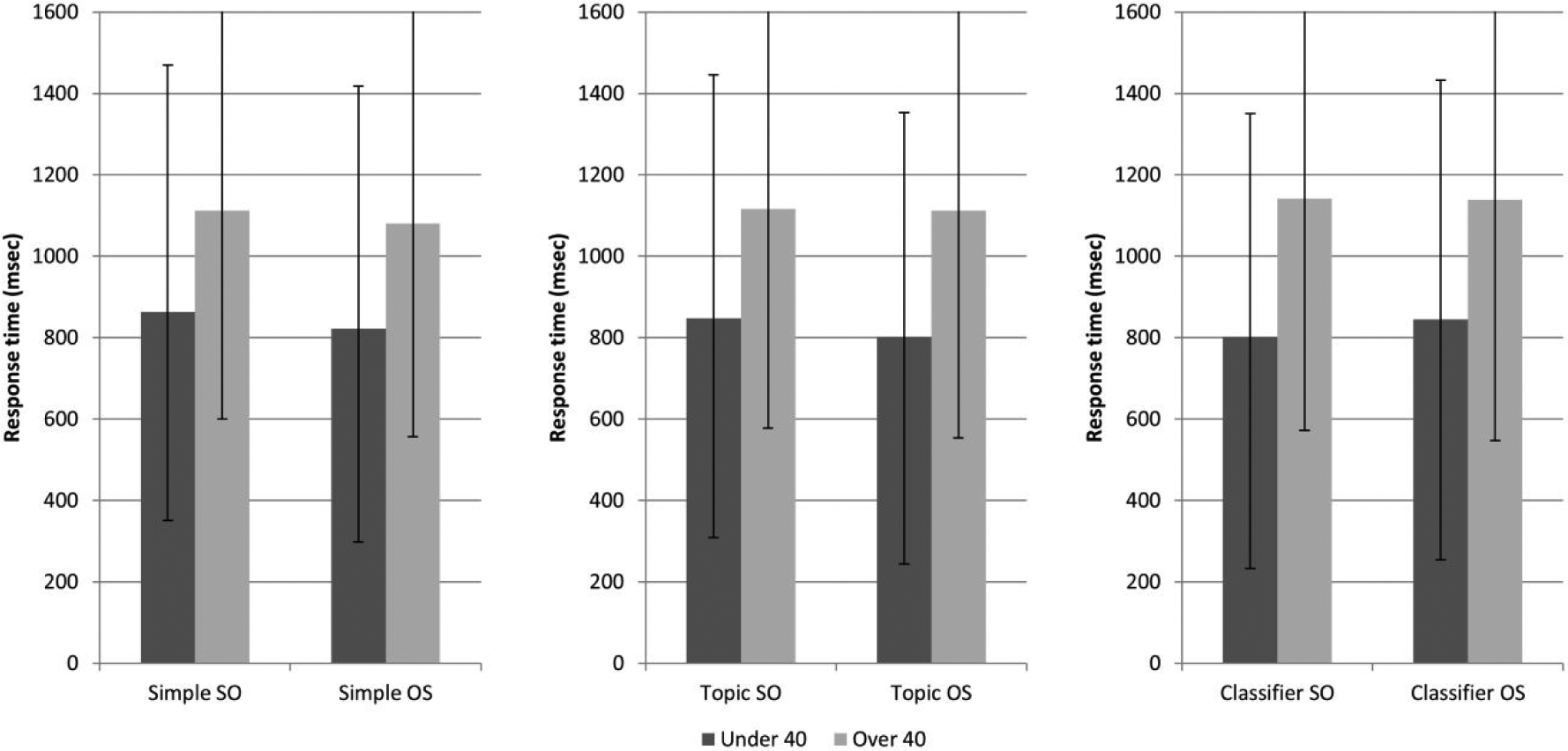

Reaction times

Overall, the mean reaction times ranged from 408 ms to 1785 ms (M=945, SD=586).

The linear mixed model analysis revealed a significant main effect of Age (Estimate: 0.02; CI: 0.0009 – 0.047; p < 0.05). Mean reaction times for younger (than 40) vs. older (than 40) signers are presented in Figure 7. Older signers showed overall higher reaction times compared to younger signers (older signers, reaction time M=1117, SD=549; younger signers, M=830, SD=581). No additional significant effects were revealed.13

Figure 7.

Reaction times by younger (N=9) vs. older (N=6) signers across experimental conditions, with slower reaction times in older signers across conditions. Whiskers indicate SD.

Discussion

Our study assessed the effects of participants’ age and age of sign language acquisition (AoA) on acceptability ratings and response times to linguistic phenomena at the levels of semantics (sentences with classifiers), syntax (word order variation), and pragmatics (topicalization).

Age effects.

With respect to reaction time data, the analysis indicated that aging affects language processing in Deaf signers in a way similar to that reported for older hearing speakers of spoken languages. The linear mixed model revealed a significant main effect of Age: older age was associated with longer overall reaction times. The longer reaction times observed for older language users in both speech and sign language suggest that this aging effect is independent of language modality and is instead associated with the effect of aging on processing speed.

Overall, the findings with respect to age influences on sign language processing are in agreement with current literature on age effects in speech processing. One caveat to be considered, however, is the specific age cutoff used in different studies: a number of the studies on healthy aging in spoken language users report data from a group of older speakers spanning three or four decades, which makes it difficult to determine when language decline begins (Abrams et al., 2010). Thus, the question of when language processing/abilities/use starts to change during healthy aging remains open with respect to either spoken or signed languages.

Analysis of acceptability ratings revealed a significant interaction between Age, Word Order, and AoA indicating that older participants from the 0–3 AoA group gave lower ratings to OS orders. Whether lower ratings of OS word order by older early signers result from better knowledge of probabilistic conventions of language use due to increasing length of experience with a variety of linguistic structures, or stem from greater leniency in acceptability, or are due to individual variation, cannot be determined on the basis of available data.

Effects of age of acquisition (AoA).

Mixed effects modeling of acceptability ratings revealed differences between the two AoA groups. The group that acquired sign language between the ages of 4 and 7 years rated classifiers lower and topic sentences higher than the 0–3 AoA group. However, the difference in ratings between the two AoA groups is relatively small and should not be overstated. The effects of AoA revealed by the present analysis are relatively subtle, suggesting that the experimental factors examined in this study are affected by AoA only to a minor extent, at least when contrasting the 0–3 and 4–7 AoA groups. Perhaps AoA effects are more visible when investigating the language ability of signers who acquire ÖGS at a later age?

We additionally collected data from Deaf ÖGS learners who acquired ÖGS past puberty (i.e. after 13 years of age, N=5, mean age: 40.4; range 30–56 years). All of these late learners had contact with other languages before learning ÖGS (either a spoken language, or another sign language). Because these late learners acquired ÖGS at various ages and therefore cannot be grouped into a common AoA group, they were not included in the data analysis in the present study.

A descriptive analysis of the data of the late learners revealed higher ratings for SO orders compared to OS orders for all linguistic structures, although for classifiers, OS order seemed to be more acceptable for this group (i.e. the difference in acceptability of SO vs. OS word order seemed to be attenuated for the classifiers).

Lower ratings for OS word order were also revealed by the mixed model analysis in the 0–3 AoA group (at least for older signers). However, when comparing overall mean ratings, the 0–3 and the 4–7 AoA groups did not show a notable word order preference, i.e. neither group found one word order more acceptable than the other. Interestingly, the late learners rated all sentences with SO word order, and all sentences with classifiers (SO and OS orders), higher than the 0–3 and the 4–7 AoA groups (see Appendix F). This latter result might seem contradictory when compared to the results of the mixed model analysis revealing that classifier sentences were rated lower by the 4–7 AoA group in comparison to the 0–3 AoA group (i.e. one might rather expect that the late learners would pattern more like the 4–7 AoA group than like the 0–3 AoA group). Although the difference between the 0–3 and 4–7 AoA groups is systematic (i.e. statistically significant) and might reflect differences in processing, this difference in ratings is relatively subtle. However, comparison of mean acceptability ratings across linguistic structures indicates that the late learners differ substantially from both the 0–3 and 4–7 AoA groups in giving higher ratings to sentences with classifiers (Appendix F).

Classifiers in sign languages tend to be highly iconic, i.e. showing a close relationship between meaning and form.14 Although earlier studies suggest that iconicity has relatively little effect on L1 sign language acquisition by children (Meier et al., 2008; Newport & Meier, 1985; Orlansky & Bonvillian, 1984), more recent studies report that the first signs children acquire are iconic (Thompson et al., 2012; Caselli & Pyers, 2017). Iconicity has also been reported to have a facilitating effect on L2 sign language acquisition, i.e. helping sign language learners to learn, memorize and translate signs (Baus et al., 2013) as well as support comprehension of signs (Ortega et al., 2019). Thus, resilience of processing for sentences with classifiers in late learners might be due to overt iconicity of classifier constructions, which can engage a non-language-specific semantic system in late signers (Ferjan Ramirez et al., 2014; Malaia & Wilbur, 2019).

Multiple lines of research indicate that the effect of iconicity on language acquisition is not restricted to the visual-(non)manual modality. Previous research suggests that iconicity impacts the processing and development of both sign and spoken languages (Perniss et al., 2010). Iconicity has been shown to support L1 acquisition of spoken languages (Imai & Kita, 2014; Kantartzis et al., 2011; Laing, 2014), as well as L2 spoken language learning (Deconinck et al., 2017). The present data lends further support to the hypothesis that iconic words/signs are easier to learn because these are more grounded in perceptual and motoric experience (Imai & Kita, 2014; Perniss & Vigliocco, 2014; Ortega et al., 2016).

In general, late (post-puberty) learners of ÖGS appeared to respond relatively fast to the stimuli: they responded almost as fast as the 0–3 AoA group and faster than the 4–7 AoA group. The faster response times observed for late learners might have resulted from faster shallow (semantic, but not syntactic) processing of the stimuli by late signers (cf. Morford and Carlson, 2011). This does not mean that the processing was somehow incorrect, only that the processing strategy might differ based on the age of ÖGS acquisition. Note, however, that the small number of late signers and of participants in the 0–3 AoA group might have skewed the observed response time differences between the groups (i.e. there were more participants in the 4–7 AoA group than in either the 0–3 AoA group or the group of late ÖGS learners).

The present findings, overall, support and extend the understanding of age of acquisition as a critical parameter for achieving proficiency in syntactic processing. The findings regarding the late (post-puberty) ÖGS learners suggest that the later age of acquisition might result in increased reliance on the surface, shallow levels of linguistic hierarchy (perceptual/sensory and semantic/iconic processing), which were observed for processing of sentences with classifiers in the present study. Our findings are in agreement with existing literature on the influence of AoA on processing at the interface of phonology and lexicon – e.g. the finding that Deaf late L1 learners are more sensitive to the visual properties of signs, as compared to native Deaf signers and hearing L2 signers (Best et al., 2010; Morford et al., 2008).

As sign language input is quantitatively different from non-linguistic biological motion that humans can be exposed to (Borneman et al., 2018; Malaia et al., 2016; Blumenthal-Dramé & Malaia, 2018), the reliance on semantics for communication in later learners might suggest that there are limits to neuroplasticity as the brain matures. The results of the study highlight the importance of comprehensive analysis of language proficiency at the interfaces between multiple linguistic domains to better understand the processes that underlie language acquisition.

Study limitations: effects of individual variability

Individual differences observed in the present study included some younger signers with relatively long reaction times, as well as older signers with relatively short reaction times. It is, however, difficult to judge whether any of these individuals are atypical for the population. Future research might consider adopting a longitudinal approach while increasing the number of participants to allow for time-varying mixed effects modeling. Other moderating and mediating variables of language proficiency that might help improve the external validity of findings in future studies include working memory, non-verbal IQ, and estimates of environmental influences, such as the quantity and quality of linguistic input.

Furthermore, the use of a non-linguistic processing speed task would be recommended for future research, to decorrelate individual (non-linguistic) processing speed from the effects of aging and AoA on language processing.

A number of previous studies indicated that individual differences do affect the language acquisition trajectory, language use, and processing speed and strategy, at least for spoken language (cf. Kidd et al., 2018). Currently, little is known about individual differences among users of sign language, but it would be reasonable to assume that signers are similar to speakers in terms of individual differences in language processing. In future work with signing communities, use of language proficiency assessment tools (yet unavailable for ÖGS), non-linguistic measures of reaction times, as well as measures of temporal resolution in visual perception would allow for in-depth investigation of individual, linguistic, and cognitive variables on sign language task performance.

Conclusion

The present study focuses on the influences of age and age of sign language acquisition on the processing of a range of linguistic structures in Austrian Sign Language, and highlights the fact that both the language acquisition timeline and aging have measurable effects on linguistic processing. Reaction times for older signers were, in general, longer. While aging results in slower processing speed overall, it does not selectively affect specific linguistic levels.

Age of acquisition, on the other hand, selectively affected specific linguistic structures: The 4–7 AoA group rated classifiers lower and topic sentences higher than the 0–3 AoA group. However, these differences were relatively subtle. The data from later (post-puberty) learners shows that the processing of classifier constructions was resilient to detrimental effects of late acquisition. At the same time, syntactic processing was affected in late sign language learners, increasing their preference for basic SO word order. This suggests that syntactic processing that allows flexibility in syntactic re-analysis appears to be established early in the course of language acquisition.

Figure 5.

Acceptability ratings by the two AoA groups (0–3 AoA group (N=5) vs. 4–7 AoA group (N=10)) across experimental conditions, with higher ratings for simple and topic sentences but lower ratings for classifiers for the 4–7 AoA group compared to the 0–3 AoA group. Whiskers indicate SD. Note that the limit on the rating was 7; 8 on the graph is for the purposes of illustrating standard deviations.

Funding and Acknowledgements

Preparation of this manuscript was partially funded by NSF grants #1734938, #1932547 and NIH 1R01DC014498. We thank all Deaf informants taking part in the present study, with special thanks to Waltraud Unterasinger for creating the stimulus material.

Appendix A: List of nouns/verbs/classifiers used in the study

Nouns:

MAN

WOMAN

BOY

GIRL

Verbs:

HIT

CONTROL

LOOK-FOR

OPPRESS

SUPPORT

THREATEN

KILL

ATTACK

PRAISE

CRITICIZE

EXAMINE

CONGRATULATE

TEACH

CARE-FOR

INFORM

RESPECT

TRUST

GREET

LOOK-OVER/EYEBALL

ADORE

KISS

WAKE-UP

HUG

CONSOLE

WATCH

THANK

CONVEY-INFORMATION-TO

INFLUENCE

HELP

PROTECT

ANNOY

SCARE

HATE

ANSWER

INFECT

CATCH

LOOK-AT

ASK

SCOLD

VISIT

Classifier verbs:

CL-WALK-TOWARDS

CL-JUMP-TOWARDS (small successive jumps)

CL-JUMP-TOWARDS (one big jump)

CL-WALK-AWAY

CL-JUMP-AWAY (small successive jumps)

CL-JUMP-AWAY (one big jump)

In the classifier constructions, both arguments were referenced by the same whole-entity classifier within one sentence, i.e. either with the classifier for a standing or for a sitting person. To create a set of 40 sentences for each condition while using only two classifier handshapes (for a sitting or a standing person), we varied the spatial distance between the arguments (little vs. more distance between the referents) as well as their orientation with respect to each other (sitting/standing opposite to each other vs. next to each other). After the arguments were referenced in space by whole-entity classifiers, the classifier predicate indicated the movement of one referent, who either walked, jumped with small successive jumps or jumped with one big jump towards or away from the other referent. After the movement of the active referent ended, the active referent either stood beside/opposite the other (passive) referent or sat beside/opposite/in front of or behind the other referent.

Appendix B: Materials

Table 1 summarizes linguistic features of word order, topicalization, and classifiers in the stimuli.

Table 1.

Examples of the six different experimental conditions. Simple orders, sentences involving topic marking, and classifier constructions were presented in SOV and OSV orders.

| Linguistic Structure | SOV word order | OSV word order |
|---|---|---|
| Simple sentence | GIRL IX3a GIRL IX3b 3aVISIT3b ‘The girl (left) visits the girl (right).’ | GIRL IX3a GIRL IX3b 3bVISIT3a ‘The girl (right) visits the girl (left).’ |
| Sentence with topic marking | ________t GIRL IX3a, GIRL IX3b 3aVISIT3b ‘The girl (left), visits the girl (right).’ | ________t GIRL IX3a, GIRL IX3b 3bVISIT3a ‘The girl (left), the girl (right) visits.’ |
| Sentence with a classifier | GIRL CL-LOCATED3a GIRL CL-LOCATED3b 3aCL-JUMP3b ‘Two girls stand opposite each other and the girl on the left jumps towards the girl on the right.’ | GIRL CL-LOCATED3a GIRL CL-LOCATED3b 3bCL-JUMP3a ‘Two girls stand opposite each other and the girl on the right jumps towards the girl on the left.’ |

Signs are glossed with capital letters; IX = index/pointing sign; subscripts indicate reference points in signing space. Non-manual markings and the scope of non-manual markings are indicated by a line above the glosses; t at the end of the line stands for topic marking; the comma after the topic indicates the prosodic break, i.e. the pause after the topic. CL-LOCATED stands for the classifier representing the referents and their spatial position (and the spatial relationship between the referents). CL-JUMP stands for the classifier verb representing the movement of the active referent (in this example a jumping movement).

All stimulus materials were signed by a Deaf woman who acquired ÖGS early in life, uses ÖGS in her daily life, and is a member of the Deaf community in Austria. The sentence contexts involved non-compound, relatively frequent signs (e.g. MAN, WOMAN). To avoid any semantic biases, we used the same arguments within one sentence (e.g. The man asks the man). The nouns and their distribution were the same across all stimulus types. The referencing of the arguments within the sentences was kept constant within conditions in that the sentence-initial argument was always referenced at the left side of the signer. The same agreeing verbs were used in the simple sentence condition and topic condition; thus, for simple and topic conditions there were 40 sentences (items) in 4 conditions. Classifier constructions use classifier predicates (instead of agreeing verbs). This results in a total of 80 items. The duration of stimuli with classifiers was slightly longer than that of the sentences with topic marking or simple sentences. See Appendix A for a list of the nouns, verbs and classifiers used in the present experiment, and see below for a summary of stimulus length by condition.1

In all sentences, sentence-final verb signs disambiguated argument roles by means of directionality: movement from subject to object position identified the agent of the action (the subject of the sentence) and the patient (the object). In SOV sentences, the argument that was referenced first in space (the subject) moved towards the argument referenced second (the object). In OSV sentences, the argument referenced second (the subject) moved towards the argument referenced first (the object). Thus, in SOV sentences, the first argument was the subject, while in OSV sentences, the second argument was the subject. All 6 conditions are summarized in Table 1, with glossing and English translations provided; below, we elaborate on each of the three types of linguistic structures under manipulation.
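The disambiguation rule described above can be written as a small decision function. The following Python sketch is illustrative only and is not part of the study materials; the function name `word_order` and the locus labels are assumptions chosen to mirror the glosses in Table 1 (e.g. 3aVISIT3b).

```python
def word_order(first_locus: str, second_locus: str,
               verb_start: str, verb_end: str) -> str:
    """Classify a verb-final sentence from the direction of the verb.

    The verb moves from the subject locus to the object locus, so the
    locus where the movement starts identifies the subject.
    """
    if (verb_start, verb_end) == (first_locus, second_locus):
        return "SOV"  # first-referenced argument is the subject
    if (verb_start, verb_end) == (second_locus, first_locus):
        return "OSV"  # second-referenced argument is the subject
    raise ValueError("verb must move between the two referenced loci")


# Glosses from Table 1: GIRL IX3a GIRL IX3b 3aVISIT3b / 3bVISIT3a
sov_example = word_order("3a", "3b", verb_start="3a", verb_end="3b")
osv_example = word_order("3a", "3b", verb_start="3b", verb_end="3a")
```

Because both noun phrases are identical in every stimulus, the verb direction is the only cue the function (and the viewer) can use.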

Syntactic condition.

After both arguments were referenced in space by an index sign, the agreeing verb unambiguously marked the argument structure by path movement and/or handshape orientation (facing). In the SOV word order condition, the verb moved from the argument that was introduced first in the sentence towards the argument that was referenced second (and/or showed facing towards the second argument); in the OSV condition, the verb moved from the second argument to the argument referenced first (and/or showed facing towards the first argument; Figure 1 in the paper). 40 sentences were presented in each simple word order condition (40 with SOV word order; 40 with OSV word order).

Pragmatic condition.

In the Pragmatic/Topic condition, the same argument NPs and agreeing verbs were used as in the simple order condition. The sentence-initial argument in this condition was marked as topic by a combination of non-manuals such as raised eyebrows, wide eyes, chin directed towards the chest and enhanced mouthing. Topic-marking non-manuals co-occurred with the sentence-initial topic argument and the index sign referencing the topic argument (Figure 2 in the paper). The index sign referencing the topic (sentence-initial) argument was also followed by a pause, during which the index sign was held in space. 40 sentences were presented in each pragmatic condition (40 with SOV word order and topic marking; 40 with OSV word order and topic marking).

Semantic/Classifier condition.

After the arguments were referenced in space, a classifier predicate indicated the spatial relation between them (e.g. a girl moving towards another girl). The sentence-initial argument was always referenced on the left side of the signer by a whole entity classifier2. In the SOV word order condition, the classifier referencing the first argument moved in relation to the argument referenced second; in the OSV condition, the classifier referencing the second argument moved in relation to the argument referenced first (Figure 3 in the paper). 40 sentences were presented in each classifier condition (40 with SOV word order and classifier; 40 with OSV word order and classifier). In order to construct locally ambiguous classifier constructions, both arguments had to be referenced by the same whole-entity classifier within one sentence (e.g. with the classifier for a standing person). The use of identical classifier handshapes in one sentence ensured that both arguments were equally likely to represent the active referent within the sentence, creating true ambiguity with no irrelevant biases.

Analysis of video length

The mean length of the videos (in seconds) as well as the standard deviation and range of video length per condition are presented in the table below.

| Condition | Mean | SD | Range |
|---|---|---|---|
| Subject-Object, simple sentence | 5.7 | 0.56 | 5–7 |
| Object-Subject, simple sentence | 5.7 | 0.69 | 5–7 |
| Subject-Object, topicalized sentence | 5.63 | 0.63 | 5–7 |
| Object-Subject, topicalized sentence | 5.7 | 0.69 | 5–8 |
| Subject-Object, sentences with a classifier | 8.9 | 0.81 | 7–10 |
| Object-Subject, sentences with a classifier | 8.88 | 0.82 | 7–10 |

Appendix C: Procedure

The videos of signed sentences were presented to participants on a computer screen (35.3 × 20 cm) in 20-sentence blocks (14 blocks total). Sentence presentation order was pseudo-randomized among the 6 conditions (simple SOV, simple OSV, topic-marked SOV, topic-marked OSV, SOV with classifier, OSV with classifier) using 2 lists, in which no condition occurred more than twice in a row, for a total of 240 critical sentences, interspersed with 40 fillers. Each of the two lists included all stimuli; thus each participant saw all stimuli. The fillers were time-reversed videos (videos of sign language sentences played backwards) included to ensure participants’ attentional engagement and task compliance (i.e. showing whether the participants understood the task) and to distract from strategic processing.
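The text does not say how the two pseudo-randomized lists were generated. As a minimal sketch of one way to satisfy the stated constraint (no condition more than twice in a row), the Python code below draws trials at random and restarts when it reaches a dead end. The function names, the condition labels, and the decision to treat fillers as a seventh label under the same constraint are assumptions made for illustration.

```python
import random
from collections import Counter

# Item counts from the procedure: 6 conditions x 40 sentences + 40 fillers.
# Treating fillers as a seventh label under the same run constraint is an assumption.
COUNTS = {
    "simple_SOV": 40, "simple_OSV": 40,
    "topic_SOV": 40, "topic_OSV": 40,
    "classifier_SOV": 40, "classifier_OSV": 40,
    "filler": 40,
}


def pseudo_randomize(counts, max_run=2, seed=None, max_attempts=1000):
    """Return a trial order in which no label occurs more than max_run times in a row."""
    rng = random.Random(seed)
    total = sum(counts.values())
    for _ in range(max_attempts):
        remaining = Counter(counts)
        order = []
        while len(order) < total:
            # A label is blocked if the last `max_run` trials all carry it.
            tail = order[-max_run:]
            blocked = tail[0] if len(tail) == max_run and len(set(tail)) == 1 else None
            candidates = [c for c, n in remaining.items() if n > 0 and c != blocked]
            if not candidates:
                break  # dead end (only the blocked label is left): start over
            weights = [remaining[c] for c in candidates]
            choice = rng.choices(candidates, weights=weights, k=1)[0]
            order.append(choice)
            remaining[choice] -= 1
        else:
            return order  # the while loop finished without hitting a dead end
    raise RuntimeError("no valid order found; increase max_attempts")


def longest_run(order):
    """Length of the longest stretch of identical consecutive labels."""
    best = current = 0
    previous = object()
    for label in order:
        current = current + 1 if label == previous else 1
        best = max(best, current)
        previous = label
    return best


trial_order = pseudo_randomize(COUNTS, seed=1)
# Split the 280 trials into 14 blocks of 20, as in the procedure.
blocks = [trial_order[i:i + 20] for i in range(0, len(trial_order), 20)]
```

Weighting each draw by the number of items still available keeps the conditions roughly balanced across the list, which makes dead ends near the end of the list less likely.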

An acceptability judgement task with a 7-point Likert scale, typical for experimental linguistic research, was used; the 7-point scale gave participants a maximal range within which to judge stimulus quality (1 stood for ‘that is not ÖGS at all’; 7 for ‘that is good ÖGS’). After each video (i.e. after each sentence), a green question mark appeared for 3000 ms. The participants were instructed to give their ratings when the question mark appeared. Participants provided their ratings by a button press on a keyboard. Missing responses and responses made after the question mark disappeared (3000 ms after the video) were not included in the analysis. The percentages of data included per age and AoA group (excluding the ratings for the filler material) were: younger signers, 99.44%; older signers, 98.89%; 0–3 AoA group, 99.92%; 4–7 AoA group, 98.88%.
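The inclusion rule for responses can be sketched as follows. This is a hedged illustration, not the authors' processing script: the function names are hypothetical, latency is assumed to be measured from question-mark onset, and counting a press at exactly 3000 ms as valid is also an assumption. The example latencies are invented for demonstration and are not data from the study.

```python
RESPONSE_WINDOW_MS = 3000  # the question mark stayed on screen for 3000 ms


def is_included(latency_ms):
    """True if a rating was given while the question mark was visible.

    `latency_ms` is the time from question-mark onset to the button press,
    or None when no button was pressed.
    """
    return latency_ms is not None and 0 <= latency_ms <= RESPONSE_WINDOW_MS


def percent_included(latencies):
    """Share of trials (in percent) that pass the inclusion rule."""
    kept = sum(1 for latency in latencies if is_included(latency))
    return 100.0 * kept / len(latencies)


# Hypothetical latencies for five trials (not data from the study).
example_latencies = [1200, None, 3100, 2999, 3000]
example_percent = percent_included(example_latencies)
```

In the study, filler trials were left out before such percentages were computed, so a real script would filter those trials first.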

Instructions were given in an ÖGS video signed by one of the study authors. Prior to starting the experiment, a training block was presented to familiarize participants with task requirements, and allow them time to ask questions if anything was unclear. The experiment lasted approximately an hour. The duration of breaks after each block was determined by the participants.

Appendix D: Model specification

For Likert-scale acceptability ratings, ordinal mixed-effects logistic regression was performed using the ordinal package (Christensen, 2019) in R (R Core Team, 2014). The ordinal mixed-effects logistic regression included the continuous variable Age (centered), the factor AoA (0–3 years of age, 4–7 years of age), the factor (word) Order (SO, OS) and the factor (linguistic) Structure (simple sentence, topic orders, classifiers) as fixed effects, with participant, item, and item order (order of item occurrence in the stimuli list) as random effects, coded in R as clmm(Rating ~ AGE*AoA*STRUCTURE*ORDER + (1|Subject) + (1|Item) + (1|ItemNumber)). Two stimuli lists were used, with pseudo-randomized order of stimuli by conditions; half of the participants were presented with stimuli in the order of list 1, the other half in the order of list 2. Sum coding was used for main effects testing. To analyze reaction times, a linear mixed-effects model (LME) analysis was performed using the lme4 package (Bates et al., 2015) in R; the LME model for reaction times included the same factors as the ordinal mixed-effects logistic regression for acceptability ratings, coded in R as lmer(log(Reaction time+1) ~ AGE*AoA*STRUCTURE*ORDER + (1|Subject) + (1|Item) + (1|ItemNumber)). Response time outliers were not removed from the data; reaction time data were log-transformed. A t-value of 2 and above was interpreted as indicating a significant effect (Baayen et al., 2008); p-values were assessed using the lmerTest package and were obtained by using maximum likelihood estimators.
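The models themselves were fitted in R with the ordinal and lme4 packages. The Python sketch below does not fit any model; it only illustrates the preprocessing steps named in the specification (centering Age, sum coding of factors, the log(RT + 1) transform, and the t-value criterion). All function names are hypothetical, the ages are made up, and the choice of which factor level receives the −1 code is an assumption. Applying the criterion to the absolute t-value is likewise an assumption.

```python
import math


def center(values):
    """Subtract the mean so that 0 corresponds to the average value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def sum_contrasts(levels):
    """Sum (deviation) coding: k levels -> k-1 columns, last level coded -1."""
    k = len(levels)
    coding = {}
    for i, level in enumerate(levels):
        if i < k - 1:
            coding[level] = [1 if j == i else 0 for j in range(k - 1)]
        else:
            coding[level] = [-1] * (k - 1)
    return coding


def log_rt(reaction_time):
    """log(RT + 1), the transform named in the lmer formula."""
    return math.log(reaction_time + 1)


def is_significant(t_value, threshold=2.0):
    """Criterion from the text, applied here to the absolute t-value."""
    return abs(t_value) >= threshold


# Hypothetical ages; the level order (which level is coded -1) is an assumption.
centered_age = center([30, 40, 50])
structure_coding = sum_contrasts(["classifier", "topic", "simple"])
```

With sum coding, each coefficient expresses the deviation of a level from the grand mean rather than from a baseline level, which is why main effects can be read directly from the model output.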

Appendix E: Results

Table 2.

Acceptability ratings: summary of results

| Predictors | Estimates | Standard error | z-value | p |
|---|---|---|---|---|
| Age | 0.07 | 0.04 | 1.71 | 0.09 |
| Structure [Classifier sentences] | −0.37 | 0.15 | −2.51 | < 0.05 |
| Structure [Topic sentences] | 0.22 | 0.09 | 2.42 | < 0.05 |
| Word order [OS] | 0.04 | 0.05 | 0.98 | 0.33 |
| AoA [0–3 group] | −0.11 | 0.44 | −0.24 | 0.81 |
| Age : Structure [Classifier sentences] | 0.006 | 0.006 | 0.99 | 0.32 |
| Age : Structure [Topic sentences] | −0.0002 | 0.006 | −0.03 | 0.98 |
| Age : Word order [OS] | −0.008 | 0.004 | −1.86 | 0.06 |
| Structure [Classifier sentences] : Word order [OS] | −0.03 | 0.07 | −0.42 | 0.67 |
| Structure [Topic sentences] : Word order [OS] | 0.005 | 0.06 | 0.08 | 0.94 |
| Age : AoA [0–3 group] | 0.01 | 0.04 | 0.26 | 0.80 |
| Structure [Classifier sentences] : AoA [0–3 group] | 0.40 | 0.06 | 6.31 | < 0.001 |
| Structure [Topic sentences] : AoA [0–3 group] | −0.20 | 0.06 | −3.20 | < 0.01 |
| Word order [OS] : AoA [0–3 group] | 0.04 | 0.04 | 0.81 | 0.42 |
| Age : Structure [Classifier sentences] : Word order [OS] | 0.005 | 0.006 | 0.82 | 0.41 |
| Age : Structure [Topic sentences] : Word order [OS] | −0.002 | 0.006 | −0.40 | 0.69 |
| Age : Structure [Classifier sentences] : AoA [0–3 group] | −0.005 | 0.006 | −0.72 | 0.47 |
| Age : Structure [Topic sentences] : AoA [0–3 group] | 0.0006 | 0.006 | 0.10 | 0.92 |
| Age : Word order [OS] : AoA [0–3 group] | −0.009 | 0.004 | −2.08 | < 0.05 |
| Structure [Classifier sentences] : Word order [OS] : AoA [0–3 group] | −0.007 | 0.06 | −0.12 | 0.91 |
| Structure [Topic sentences] : Word order [OS] : AoA [0–3 group] | 0.01 | 0.06 | 0.22 | 0.82 |
| Age : Structure [Classifier sentences] : Word order [OS] : AoA [0–3 group] | 0.004 | 0.006 | 0.63 | 0.53 |
| Age : Structure [Topic sentences] : Word order [OS] : AoA [0–3 group] | −0.001 | 0.006 | −0.21 | 0.84 |

Appendix F: Acceptability ratings for each stimulus type from three AoA groups (including late learners)

Table 3.

Mean ratings and standard deviations per condition and per AoA group

| Condition | 0–3 AoA group | 4–7 AoA group | Late learners |
|---|---|---|---|
| Subject-Object, simple sentence | 5.57 (1.44) | 6.00 (1.40) | 6.81 (0.50) |
| Object-Subject, simple sentence | 5.80 (1.41) | 5.99 (1.48) | 5.77 (1.68) |
| Subject-Object, topicalized sentence | 5.65 (1.32) | 6.09 (1.28) | 6.83 (0.49) |
| Object-Subject, topicalized sentence | 5.83 (1.42) | 6.08 (1.32) | 5.80 (1.81) |
| Subject-Object, sentences with a classifier | 5.63 (1.36) | 5.29 (1.92) | 6.81 (0.47) |
| Object-Subject, sentences with a classifier | 5.70 (1.44) | 5.26 (1.92) | 6.44 (1.02) |

Standard deviation is presented in parentheses.
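To make the descriptive word order comparison easier to follow, the difference between SO and OS mean ratings can be computed for each group directly from the means in Table 3. The Python sketch below does only this arithmetic; the variable and function names are hypothetical, and the result is purely descriptive and carries no inferential weight.

```python
# Mean ratings copied from Table 3 as (SO, OS) pairs per structure.
MEAN_RATINGS = {
    "0-3 AoA": {"simple": (5.57, 5.80), "topic": (5.65, 5.83), "classifier": (5.63, 5.70)},
    "4-7 AoA": {"simple": (6.00, 5.99), "topic": (6.09, 6.08), "classifier": (5.29, 5.26)},
    "late": {"simple": (6.81, 5.77), "topic": (6.83, 5.80), "classifier": (6.81, 6.44)},
}


def so_minus_os(ratings):
    """SO mean minus OS mean per group and structure, rounded to 2 decimals."""
    return {
        group: {
            structure: round(so - os_mean, 2)
            for structure, (so, os_mean) in by_structure.items()
        }
        for group, by_structure in ratings.items()
    }


so_advantage = so_minus_os(MEAN_RATINGS)
```

Under this calculation, the late learners' SO advantage is about 1.0 rating point for simple and topicalized sentences and 0.37 for classifier sentences, whereas the differences in the other two groups are at most 0.23 in absolute value.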

References

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412.

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-Effects models using lme4. Journal of Statistical Software, 67, 1–48.

Christensen R. H. B. (2019). “ordinal—Regression Models for Ordinal Data.” R package version 2019.12–10. https://CRAN.R-project.org/package=ordinal.

R Core Team (2014). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. Retrieved from http://www.R-project.org/

Wilbur, R. B., Bernstein, M. E., & Kantor, R. (1985). The semantic domain of classifiers in American Sign Language. Sign Language Studies, 46, 1–38. doi:10.1353/sls.1985.0009

1 The background color and light conditions in the video material were kept constant across conditions.

2 A whole entity classifier is a sign language handshape classifier that is representative of an object as a whole reflecting particular grammatically-relevant semantic or physical features of noun referent classes (e.g. persons, animals, vehicles; Wilbur et al., 1985).

Footnotes

1 Per convention, Deaf with an upper-case D refers to deaf or hard-of-hearing humans who define themselves as members of the sign language community. In contrast, deaf refers to the audiological status of an individual.

2 In Austrian Deaf Schools, Austrian Sign Language, i.e. Österreichische Gebärdensprache (abbreviated ÖGS), is not the language of teaching and is not taught as a separate subject. The language of teaching is mostly spoken German, a foreign language for Deaf children that is not completely accessible for the majority of them. There are very few Deaf teachers and many of the hearing teachers have no (or insufficient) ÖGS competence (Dotter et al., 2019).

3 A tip-of-the-tongue (TOT) state is a temporary inability to produce a word, although the speaker knows its meaning.

4 Pertaining to change in the form of a word/sign to express a grammatical function.

5 Pertaining to change in the form of a word/sign to form a new word.

6 Shadowing refers to the process of simultaneously watching and reproducing signed input.

7 Related to minimal contrastive components of either auditory or visual signal in a particular language. For the visual signal in sign language, a change in place of articulation (position of the hand in relation to the body), handshape, or motion dynamics can constitute a phonological change and/or error (depending on how far the production of the sign departs from the vocabulary form of the sign).

8 In sign language linguistics, morphology of a verb describes the structure of the verb sign, and alterable components of it that can be used to express meaning or grammatical function (such as directionality or reduplication in a sign).

9 Classifiers in sign languages do have similarities to classifiers in spoken languages in terms of their linguistic function. For spoken languages, classifiers are words, or affixes, which classify nouns based on the type of the referent (cf. “three pieces of candy”). In sign languages, the classifier handshape is combined with a hand motion (in the function of a verb showing how the classified object moves, or how it is related to other objects), resulting in a classifier construction that can also serve as a grammatical verb, but refers to, and categorizes, noun referents (Wilbur et al., 1985).

10 The term “construction” in sign language literature refers to sentence and phrase structures; it does not refer to construction grammar.

11 Based on recent research showing that effects of age on language acquisition are observed as early as 40 years of age (Fernandez et al., 2019), we chose to use a linguistically motivated cutoff of 40 years of age for the present data set. A statistically motivated analysis using a median split (above vs. below 36 years old) yielded similar results, since only one participant, aged 37, moved to the ‘older’ group.

12 For group comparison, descriptive mean values are reported.

13 Since no further predictor or interaction was significant, the model output is not included in the Appendix.

14 Note that the iconicity parameter is gradient rather than categorical; i.e. some signs are more transparent than others, but without a distinctive dichotomy (Klima & Bellugi, 1979; Ortega et al., 2016). Ortega et al. (2016) report that the type of iconicity has an effect on sign learning. Classifier handshapes used in the present study (whole entity classifiers representing person(s) sitting/standing/walking/jumping etc.) show a close relationship between form and meaning.

References

- Aarons D (1996). Topics and topicalization in American Sign Language. Stellenbosch Papers in Linguistics, 30, 65–106. [Google Scholar]

- Abrams L (2008). Tip-of-the-tongue states yield language insights. American Scientist, 96, 234–239. [Google Scholar]

- Abrams L, & Meagan T Farrell MT (2010). Language Processing in Normal Aging. In: Guendouzi J, Loncke F, & Williams MJ (Eds.), The Handbook of Psycholinguistic and Cognitive Processes. Perspectives in Communication Disorders Routledge Handbooks Online; (https://www.routledgehandbooks.com/doi/10.4324/9780203848005.ch3), 49–73. [Google Scholar]

- Arbuckle TY, Nohara-LeClair M, & Pushkar D (2000). Effect of off-target verbosity on communication efficiency in a referential communication task. Psychology and Aging, 15, 65–77. [DOI] [PubMed] [Google Scholar]

- Atkinson J, Denmark T, Marshall J, Mummery C, & Woll B (2015). Detecting cognitive impairment and dementia in deaf people: the British Sign Language cognitive screening test. Archives of Clinical Neuropsychology, 30, 694–711. [DOI] [PubMed] [Google Scholar]

- Baus C, Carreiras M, & Emmorey K (2013). When does iconicity in sign language matter? Language and Cognitive Processes, 28, 261–271. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beese C, Werkle-Bergner M, Lindenberger U, Friederici AD, & Meyer L (2018). Adult age differences in the benefit of syntactic and semantic constraints for sentence processing. Psychology and Aging. 10.1037/pag0000300 [DOI] [PubMed] [Google Scholar]

- Best CT, Mathur G, Miranda KA, & Lillo-Martin D (2010). Effects of sign language experience on categorical perception of dynamic ASL pseudosigns. Attention, Perception, & Psychophysics, 72, 747–762. [DOI] [PubMed] [Google Scholar]

- Blumenthal-Dramé A, & Malaia E (2018). Shared neural and cognitive mechanisms in action and language: The multiscale information transfer framework. Wiley Interdisciplinary Reviews: Cognitive Science, e1484.

- Borneman JD, Malaia E, & Wilbur RB (2018). Motion characterization using optical flow and fractal complexity. Journal of Electronic Imaging, 27, 051229.

- Boudreault P, & Mayberry RI (2006). Grammatical processing in American Sign Language: Age of first-language acquisition effects in relation to syntactic structure. Language and Cognitive Processes, 21, 608–635.

- Brentari D (1989). Backwards verbs in ASL: Agreement re-opened. In: MacLeod L (Ed.), Parasession on agreement in grammatical theory (CLS 24, Vol. 2). Chicago: Chicago Linguistic Society, 16–27.

- Brentari D, Poizner H, & Kegl J (1995). Aphasic and Parkinsonian signing: differences in phonological disruption. Brain and Language, 48, 69–105.

- Brentari D, & Padden C (2001). Native and foreign vocabulary in American Sign Language: A lexicon with multiple origins. In: Brentari D (Ed.), Foreign vocabulary in sign languages: A cross-linguistic investigation of word formation. New York: Academic Press, 87–119.

- Burke DM, & MacKay DG (1997). Memory, language and ageing. Philosophical Transactions of the Royal Society: Biological Sciences, 352, 1845–1856.

- Burke DM, & Shafto MA (2008). Language and aging. In: Craik FIM & Salthouse TA (Eds.), The handbook of aging and cognition. New York: Psychology Press, 373–443.

- Calabria M, Branzi FM, Marne P, Hernández M, & Costa A (2015). Age-related effects over bilingual language control and executive control. Bilingualism: Language and Cognition, 18, 65–78.

- Capilouto GJ, Wright HH, & Maddy KM (2016). Microlinguistic processes that contribute to the ability to relay main events: influence of age. Aging, Neuropsychology, and Cognition, 23, 445–463.

- Carreiras M, Gutiérrez-Sigut E, Baquero S, & Corina D (2008). Lexical processing in Spanish sign language (LSE). Journal of Memory and Language, 58, 100–122.

- Caselli NK, & Pyers JE (2017). The road to language learning is not entirely iconic: Iconicity, neighborhood density, and frequency facilitate acquisition of sign language. Psychological Science, 28(7), 979–987.

- Cheng Q, & Mayberry RI (2018). Acquiring a first language in adolescence: the case of basic word order in American Sign Language. Journal of Child Language, 1–27.

- Deconinck J, Boers F, & Eyckmans J (2017). “Does the form of this word fit its meaning?” The effect of learner-generated mapping elaborations on L2 word recall. Language Teaching Research, 21, 31–53.

- Dotter F, Krausneker V, Jarmer H, & Huber L (2019). Austrian Sign Language: Recognition achieved but discrimination continues. In: De Meulder M, Murray JJ, & McKee R (Eds.), The Legal Recognition of Sign Languages: Advocacy and Outcomes Around the World. Bristol: Multilingual Matters, 209–223.

- Dye MW, & Shih S (2006). Phonological priming in British Sign Language. Laboratory Phonology, 8, 241–263.

- Emmorey K, Bellugi U, Friederici AD, & Horn P (1995). Effects of age of acquisition on grammatical sensitivity: Evidence from on-line and off-line tasks. Applied Psycholinguistics, 16, 1–23.

- Evrard M (2002). Ageing and lexical access to common and proper names in picture naming. Brain and Language, 81, 174–179.

- Ferjan Ramirez N, Leonard MK, Davenport TS, Torres C, Halgren E, & Mayberry RI (2014). Neural language processing in adolescent first-language learners: Longitudinal case studies in American Sign Language. Cerebral Cortex, 26, 1015–1026.

- Fernandez L, Engelhard P, Patarroyo A, & Allen S (2019). The role of speech rate on anticipatory eye movements in L2 speakers of English and older and younger L1 English speakers. Poster presented at the PIPP conference, Iceland.

- Fischer S (1975). Influences on word order change in American Sign Language. In: Li CN (Ed.), Word Order and Word Order Change. Austin, TX: University of Texas Press.

- Frishberg N (1975). Arbitrariness and iconicity: Historical change in American Sign Language. Language, 51, 696–719.

- Fromkin V, Krashen S, Curtiss S, Rigler D, & Rigler M (1974). The development of language in Genie: a case of language acquisition beyond the ‘critical period’. Brain and Language, 1, 81–107.

- Hall ML, Hall WC, & Caselli NK (2019). Deaf children need language, not (just) speech. First Language, 0142723719834102.

- Hausch C (2008). Topickonstruktionen und Satzstrukturen in der ÖGS. Veröffentlichungen des Zentrums für Gebärdensprache und Hörbehindertenkommunikation der Universität Klagenfurt (Vol. 13), 85–94.

- Henner J, Caldwell-Harris CL, Novogrodsky R, & Hoffmeister R (2016). American Sign Language syntax and analogical reasoning skills are influenced by early acquisition and age of entry to signing schools for the deaf. Frontiers in Psychology, 7, 1982.

- Hildebrandt U, & Corina D (2002). Phonological similarity in American Sign Language. Language and Cognitive Processes, 17, 593–612.

- Humphries T, Kushalnagar P, Mathur G, Napoli DJ, Padden C, & Rathmann C (2014). Ensuring language acquisition for deaf children: What linguists can do. Language, 90(2), e31–e52.

- Imai M, & Kita S (2014). The sound symbolism bootstrapping hypothesis for language acquisition and language evolution. Philosophical Transactions of the Royal Society of London, Series B. Biological Sciences, 369, 20130298.

- James LE, Burke DM, Austin A, & Hulme E (1998). Production and perception of “verbosity” in younger and older adults. Psychology and Aging, 13, 355–367.

- James L, & MacKay DG (2007). New age-linked asymmetries: Aging and the processing of familiar versus novel language on the input versus output side. Psychology and Aging, 22, 94–103.

- Johnson RE (2003). Aging and the remembering of text. Developmental Review, 23, 261–346.

- Kantartzis K, Imai M, & Kita S (2011). Japanese sound-symbolism facilitates word learning in English-speaking children. Cognitive Science, 35, 575–586.

- Kemper S, Kynette D, Rash S, Sprott R, & O’Brien K (1989). Life-span changes to adults’ language: Effects of memory and genre. Applied Psycholinguistics, 10, 49–66.

- Kidd E, Donnelly S, & Christiansen MH (2018). Individual differences in language acquisition and processing. Trends in Cognitive Sciences, 22, 154–169.

- Klima ES, & Bellugi U (1979). The signs of language. Cambridge, MA: Harvard University Press.

- Krebs J (2017). The syntax and the processing of argument relations in Austrian Sign Language (ÖGS). (Doctoral dissertation) University of Salzburg, Salzburg, Austria.

- Krebs J, Malaia E, Wilbur RB, & Roehm D (2018). Subject preference emerges as cross-modal strategy for linguistic processing. Brain Research, 1691, 105–117.

- Krebs J, Wilbur RB, Alday PM, & Roehm D (2019a). The impact of transitional movements and non-manual markings on the disambiguation of locally ambiguous argument structures in Austrian Sign Language (ÖGS). Language and Speech, 62(4), 652–680. doi: 10.1177/0023830918801399

- Krebs J, Malaia E, Wilbur RB, & Roehm D (2019b). Interaction between topic marking and subject preference strategy in sign language processing. Language, Cognition and Neuroscience, 1–19.

- Laing CE (2014). A phonological analysis of onomatopoeia in early word production. First Language, 34, 387–405.

- Lieberman AM, Borovsky A, Hatrak M, & Mayberry RI (2015). Real-time processing of ASL signs: Delayed first language acquisition affects organization of the mental lexicon. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41, 1130.

- Malaia E, & Wilbur RB (2010). Early acquisition of sign language: What neuroimaging data tell us. Sign Language and Linguistics, 13, 183–199.

- Malaia E, Borneman JD, & Wilbur RB (2016). Assessment of information content in visual signal: analysis of optical flow fractal complexity. Visual Cognition, 24, 246–251.

- Malaia E, & Wilbur RB (2019). Visual and linguistic components of short-term memory: Generalized Neural Model (GNM) for spoken and sign languages. Cortex, 112, 69–79. doi: 10.1016/j.cortex.2018.05.020

- Malaia E, Krebs J, Wilbur RB, & Roehm D (2020). Age of acquisition effects differ across linguistic domains in sign language: EEG evidence. Brain and Language, 200. doi: 10.1016/j.bandl.2019.104708

- Marshall CR, & Morgan G (2015). From gesture to sign language: Conventionalization of classifier constructions by adult hearing learners of British Sign Language. Topics in Cognitive Science, 7, 61–80.

- Mayberry RI (2007). When timing is everything: Age of first-language acquisition effects on second-language learning. Applied Psycholinguistics, 28, 537–549.

- Mayberry RI, & Fischer SD (1989). Looking through phonological shape to lexical meaning: The bottleneck of non-native sign language processing. Memory & Cognition, 17, 740–754.

- Mayberry RI, & Eichen EB (1991). The long-lasting advantage of learning sign language in childhood: Another look at the critical period for language acquisition. Journal of Memory and Language, 30, 486.

- Mayberry RI, Lock E, & Kazmi H (2002). Linguistic ability and early language exposure. Nature, 417, 38.

- Mayberry RI, & Kluender R (2018). Rethinking the critical period for language: New insights into an old question from American Sign Language. Bilingualism: Language and Cognition, 21, 886–905.

- Meier R, Mauk CE, Cheek A, & Moreland CJ (2008). The form of children’s early signs: Iconic or motoric determinants? Language Learning and Development, 4, 63–98.

- Mitchell RE, & Karchmer M (2004). Chasing the mythical ten percent: Parental hearing status of deaf and hard of hearing students in the United States. Sign Language Studies, 4, 138–163.

- Morford JP, Grieve-Smith AB, MacFarlane J, Staley J, & Waters G (2008). Effects of language experience on the perception of American Sign Language. Cognition, 109, 41–53.

- Morford JP, & Carlson ML (2011). Sign perception and recognition in non-native signers of ASL. Language Learning and Development, 7(2), 149–168.

- Newport EL (1990). Maturational constraints on language learning. Cognitive Science, 14, 11–28.

- Newport EL, & Meier RP (1985). The acquisition of American Sign Language. In: Slobin D (Ed.), The cross-linguistic study of language acquisition. Mahwah, NJ: Erlbaum, 881–938.

- Ni D (2014). Topikkonstruktionen in der Österreichischen Gebärdensprache (master’s thesis). University of Hamburg.

- Novogrodsky R, Henner J, Caldwell-Harris C, & Hoffmeister R (2017). The development of sensitivity to grammatical violations in American Sign Language: native versus nonnative signers. Language Learning, 67, 791–818.

- Orfanidou E, Adam R, Morgan G, & McQueen JM (2010). Recognition of signed and spoken language: Different sensory inputs, the same segmentation procedure. Journal of Memory and Language, 62, 272–283.

- Orlansky MD, & Bonvillian JD (1984). The role of iconicity in early sign language acquisition. Journal of Speech & Hearing Disorders, 49, 287–292.

- Ortega G, Sümer B, & Özyürek A (2016). Type of iconicity matters in the vocabulary development of signing children. Developmental Psychology, 53, 89.

- Ortega G, Özyürek A, & Peeters D (2019). Iconic gestures serve as manual cognates in hearing second language learners of a sign language: An ERP study. Journal of Experimental Psychology: Learning, Memory, and Cognition.

- Padden C (1983). Interaction of morphology and syntax in American Sign Language. PhD thesis, University of California, San Diego [published 1988, New York: Garland Press].

- Park DC, Lautenschlager G, Hedden T, Davidson NS, Smith AD, & Smith PK (2002). Models of visuospatial and verbal memory across the adult life span. Psychology and Aging, 17, 299.

- Perniss P, Thompson RL, & Vigliocco G (2010). Iconicity as a general property of language: Evidence from spoken and signed languages. Frontiers in Psychology, 1, 227.