Key Points

Question

Is the redaction of identifiers that may trigger implicit bias (eg, name, sex or gender, race and ethnicity) associated with differences in scores on ophthalmology residency application screening for applicants underrepresented in medicine?

Findings

This quality improvement study found that redaction of identifiers was not associated with differences in application scores. The distribution of application scores was similar for redacted vs unredacted applications, with no difference based on an applicant’s sex, underrepresentation in medicine status (traditionally comprising American Indian or Alaskan Native, Black, and Hispanic individuals), or international medical graduate status.

Meaning

Although study size may have precluded identifying differences, these findings suggest that redacting applicant characteristics is not associated with application scores among disadvantaged candidates.

Abstract

Importance

Diversity in the ophthalmology profession is important when providing care for an increasingly diverse patient population. However, implicit bias may inadvertently disadvantage underrepresented applicants during resident recruitment and selection.

Objective

To evaluate the association of the redaction of applicant identifiers with the review scores on ophthalmology residency applications as an intervention to address implicit bias.

Design, Setting, and Participants

In this quality improvement study, 46 faculty members reviewed randomized sets of 462 redacted and unredacted applications from a single academic institution during the 2019-2020 ophthalmology residency application cycle.

Interventions

Applications electronically redacted for applicant identifiers, including name, sex or gender, race and ethnicity, and related terms.

Main Outcomes and Measures

The main outcome was the distribution of scores on redacted and unredacted applications, stratified by applicant’s sex, underrepresentation in medicine (URiM; traditionally comprising American Indian or Alaskan Native, Black, and Hispanic individuals) status, and international medical graduate (IMG) status; the application score β coefficients for redaction and the applicant and reviewer characteristics were calculated. Applications were scored on a scale of 1 to 9, where 1 was the best score and 9 was the worst score. Scores were evaluated for a significant difference based on redaction among female, URiM, and IMG applicants. Linear regression was used to evaluate the adjusted association of redaction, self-reported applicant characteristics, and reviewer characteristics with scores on ophthalmology residency applications.

Results

In this study, 277 applicants (60.0%) were male and 71 (15.4%) had URiM status; 32 faculty reviewers (69.6%) were male and 2 (4.3%) had URiM status. The distribution of scores was similar for redacted vs unredacted applications, with no difference based on sex, URiM status, or IMG status. Applicant’s sex, URiM status, and IMG status had no association with scores in multivariable analysis (sex, β = –0.08; 95% CI, –0.32 to 0.15; P = .26; URiM status, β = –0.03; 95% CI, –0.36 to 0.30; P = .94; and IMG status, β = 0.39; 95% CI, –0.24 to 1.02; P = .35). In adjusted regression, redaction was not associated with differences in scores (β = –0.06 points on a 1-9 scale; 95% CI, –0.22 to 0.10 points; P = .48). Factors most associated with better scores were attending a top 20 medical school (β = –1.06; 95% CI, –1.37 to –0.76; P < .001), holding an additional advanced degree (β = –0.86; 95% CI, –1.22 to –0.50; P < .001), and having a higher United States Medical Licensing Examination Step 1 score (β = –0.35 per 10-point increase; 95% CI, –0.45 to –0.26; P < .001).

Conclusions and Relevance

This quality improvement study did not detect an association between the redaction of applicant characteristics on ophthalmology residency applications and the application review scores among underrepresented candidates at this institution. Although the study may not have been powered adequately to find a difference, these findings suggest that the association of redaction with application review scores may be preempted by additional approaches to enhance diversity, including pipeline programs, implicit bias training, diversity-centered culture and priorities, and targeted applicant outreach. Programs may adapt this study design to probe their own application screening biases and track over time before-and-after bias-related interventions.


Introduction

Diversity brings value to the ophthalmology profession. Female physicians engage in more active patient-centered communication, have higher empathy scores, and perform better on care quality metrics.1,2,3 Physicians from racial and ethnic populations underrepresented in medicine (URiM; traditionally comprising American Indian or Alaskan Native, Black, and Hispanic individuals) are more likely to serve areas with racial and ethnic minority populations (which disproportionately experience physician shortages), care for race-concordant Black and Hispanic patients, and care for Medicaid and uninsured patients.4,5 Patients treated by race-concordant physicians who are URiM report greater satisfaction and may better adhere to medical advice.6,7,8,9

However, the level of diversity of the ophthalmology workforce remains limited to date, with little change during the past decade. Based on physician workforce and US Census data, women represented only 22.7% of practicing ophthalmologists and 44.3% of ophthalmology residents in 2015 compared with 50.8% of the US population at large.10 Even more concerning, physicians who were URiM represented only 6% of practicing ophthalmologists and 7.7% of ophthalmology residents vs 30.7% of the US population at large. Between 2005 and 2015, female representation increased modestly (by 3.3% among practicing ophthalmologists and 8.7% among residents), but the representation of physicians who are URiM remained unchanged among practicing ophthalmologists and decreased by 1% among residents during the same decade. Representation in academic ophthalmology leadership and national organizations has also been found suboptimal and slow to change.11,12,13

Better recruitment and retention strategies are indicated. Implicit bias, meaning unconscious heuristics or stereotypes attributed to a particular group (eg, negative associations based on race and ethnicity, sex, or gender), is a potential target of investigation. These implicit biases may contribute to the lack of diversity in residency applicant selection.14,15,16,17,18,19 Studies outside of medicine have shown that male and White candidates’ curriculum vitae are rated more positively than female and racial and ethnic minority candidates’ identical curriculum vitae.20,21,22 There is evidence that individuals perceived as female or URiM are rated less likeable, less competent, less skilled, and less suitable for high-status jobs.21,22,23,24,25,26,27

Given the deeply rooted nature of implicit bias, data on self-identified sex or gender or race and ethnicity and gender- or ethnicity-evocative names or activities may inadvertently disadvantage female and URiM applicants, even when there is a goal to increase diversity. However, the potential value of redacting these data has not been evaluated in the ophthalmology residency application and selection process, to our knowledge. In this study, we compared redacted vs unredacted application reviews to (1) assess the association of redaction with application review scores, (2) evaluate whether redaction is associated with application review scores specifically for female and/or URiM applicants, and (3) assess the association of other applicant and faculty reviewer characteristics with review scores.

Methods

Study Design

We evaluated data from a single academic institution (Byers Eye Institute, Stanford University School of Medicine, Stanford, California) in the 2019-2020 ophthalmology residency application cycle. This study was reviewed and deemed exempt by the Stanford University institutional review board, based on Office for Human Research Protections regulations for the protection of human participants in research 45 CFR §46, section 46.104 (d) (2), and clinical investigation 21 CFR §56.

Application Redaction, Review, and Randomization

Department staff manually screened each application, using Acrobat DC, release 19.012.20036 (Adobe Inc) to redact applicant identifiers that might trigger implicit bias, including name, sex or gender (including pronouns signifying gender), race and ethnicity, and race- or gender-associated groups or activities (eg, Latino Medical Student Association). Each application was reviewed in redacted form by one reviewer and unredacted form by a second reviewer, each of whom received implicit bias training. Each faculty member reviewed a mix of redacted and unredacted applications. Forty-six faculty members reviewed 462 applications (19-23 each). Applications were randomly assigned to reviewers to minimize confounding from within-reviewer effects. Reviewers assigned a score from 1 (best) to 9 (worst), relative to the usual spectrum of applications. Reviewers were instructed that most scores should fall midrange, using a score of 5 to represent the average applicant (strong overall), and that it would be unusual to see more than 1 or 2 applications rated 1 in each set. Faculty members were given examples to aid review (eMethods in the Supplement).
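The assignment scheme described above (each application scored once in redacted form and once in unredacted form, by 2 different reviewers, with roughly equal workloads) can be illustrated with a minimal sketch. This is not the procedure the department used; the function name `assign_reviews`, the greedy load balancing, and the seed are assumptions made only for illustration.

```python
import random


def assign_reviews(n_applications, n_reviewers, seed=0):
    """Return a list of (application, reviewer, redacted) triples.

    Hypothetical sketch: each application gets two distinct reviewers,
    one who sees the redacted copy and one who sees the unredacted copy.
    """
    rng = random.Random(seed)
    load = [0] * n_reviewers  # number of reviews assigned so far, per reviewer
    applications = list(range(n_applications))
    rng.shuffle(applications)

    assignments = []
    for app in applications:
        # Pick the two least-loaded reviewers, breaking ties at random.
        order = sorted(range(n_reviewers), key=lambda r: (load[r], rng.random()))
        first, second = order[0], order[1]
        # Randomly decide which of the two receives the redacted copy.
        if rng.random() < 0.5:
            first, second = second, first
        assignments.append((app, first, True))    # redacted review
        assignments.append((app, second, False))  # unredacted review
        load[first] += 1
        load[second] += 1
    return assignments


# 462 applications and 46 reviewers give 924 reviews, about 20 per reviewer.
reviews = assign_reviews(462, 46, seed=1)
```

With these inputs the greedy balancing keeps reviewer workloads within 1 review of each other, which is tighter than the 19 to 23 range reported; a purely random draw would spread workloads more widely.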

Variables

Our outcome was application score. Our variable of interest was redaction (redacted vs unredacted applications). We evaluated applicants based on sex, self-identified URiM status (SFMatch allows applicants to self-identify as an underrepresented minority individual, comprising American Indian or Alaskan Native, Black or African American, or Hispanic or Latino groups), and international medical graduate (IMG) status (yes or no).28,29 We also considered other applicant and reviewer characteristics. Applicant characteristics included United States Medical Licensing Examination (USMLE) Step 1 and Step 2 scores, doctor of medicine (MD) vs doctor of osteopathic medicine (DO) degree, presence of second advanced degree (eg, PhD, MS, or MPH), Alpha Omega Alpha medical honor society selection status (yes, no, not yet determined, or not available), and medical school ranking. Reviewer characteristics included reviewer sex, URiM status, faculty line, faculty rank (none or clinical instructor, assistant, associate, or full professor), and primary practice location (Stanford University vs adjunct or affiliate site) (additional details are in the eMethods and eFigure 3 in the Supplement).

Statistical Analysis

Applicant and reviewer characteristics were summarized using frequencies and percentages. We evaluated the distribution of application scores based on applicants’ sex, URiM status, and IMG status, stratified by application redaction. We used t tests to evaluate for statistically significant differences between redacted and unredacted scores in each subgroup.
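As an illustration of the subgroup comparison, the sketch below computes a two-sample t statistic for redacted vs unredacted scores. The text does not state which t test variant was used; Welch's unequal-variance form is assumed here, the P value uses a normal approximation that is only reasonable for large samples, and the scores are hypothetical rather than study data.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def approx_two_sided_p(t):
    """Two-sided P value from the standard normal (large-sample approximation)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Hypothetical scores on the 1 (best) to 9 (worst) scale; not study data.
redacted = [4, 5, 3, 6, 5, 4, 7, 5]
unredacted = [5, 5, 4, 6, 4, 5, 6, 5]

t, df = welch_t(redacted, unredacted)
p = approx_two_sided_p(t)
```

For samples as small as this toy example, the exact t distribution with `df` degrees of freedom should replace the normal approximation.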

We computed total variance in application scores attributed to between-application vs between-reviewer differences, and we used mixed-effects multivariable linear regression modeling to evaluate the adjusted association of redaction, applicant characteristics, and reviewer characteristics with review scores. Because application scores were nested at both the reviewer and applicant level, we treated applicant and reviewer identification as crossed random effects, and all other covariates as fixed effects (eMethods in the Supplement).
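One way to write the crossed random-effects model described above is given below. The notation is ours and is an assumption, not taken from the eMethods: $y_{ij}$ is the score given to application $i$ by reviewer $j$, $R_{ij}$ indicates that the reviewer saw the redacted copy, $\mathbf{x}_i$ and $\mathbf{z}_j$ collect the applicant and reviewer covariates, and $a_i$ and $r_j$ are the applicant and reviewer random intercepts.

$$
y_{ij} = \beta_0 + \beta_R R_{ij} + \mathbf{x}_i^{\top}\boldsymbol{\gamma} + \mathbf{z}_j^{\top}\boldsymbol{\delta} + a_i + r_j + \varepsilon_{ij},
\qquad a_i \sim N(0, \sigma_a^2),\quad r_j \sim N(0, \sigma_r^2),\quad \varepsilon_{ij} \sim N(0, \sigma_\varepsilon^2)
$$

Under this formulation, the share of total variance attributed to between-application and between-reviewer differences would be

$$
\frac{\sigma_a^2}{\sigma_a^2 + \sigma_r^2 + \sigma_\varepsilon^2}
\quad\text{and}\quad
\frac{\sigma_r^2}{\sigma_a^2 + \sigma_r^2 + \sigma_\varepsilon^2},
$$

respectively. Whether the variance components were estimated with or without the fixed effects in the model is not stated here, so this decomposition should be read as a sketch.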

All P values in multivariable adjusted regression were 2-sided and were not adjusted for multiple analyses (eg, by Bonferroni correction). To compute P values, we used an asymptotic χ2 test comparing (1) the complete multivariable model with interactions between each variable and redaction status (or no interactions, for redaction-status P values) vs (2) the same model omitting the specified variable. P < .05 was used to determine statistical significance for the primary outcome. We used these P values to develop a parsimonious model including all variables demonstrating a statistically significant association (eTable 1 in the Supplement). This model fit just as well as the full multivariable model did (including interactions) based on the χ2 test (P = .27). Analyses were performed using R, version 3.6.3 (R Group for Statistical Computing) with the lme4, version 1.1-23 package for crossed random effects.30,31
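The nested-model comparison can be sketched as a likelihood ratio test, which is the usual form of an asymptotic χ2 comparison between a full and a reduced model; that reading is an assumption. Under it, the statistic is twice the difference in maximized log-likelihoods, and the degrees of freedom equal the number of parameters dropped (for example, 1 for redaction status alone, or 2 for a binary characteristic plus its interaction with redaction). The log-likelihood values below are hypothetical, and the helper handles only the 2 closed-form cases.

```python
from math import erfc, exp, sqrt


def lrt_statistic(loglik_full, loglik_reduced):
    """Likelihood ratio statistic for two nested models."""
    return 2.0 * (loglik_full - loglik_reduced)


def chi2_sf(x, df):
    """Upper-tail chi-square probability, closed forms for df = 1 or 2 only."""
    if x < 0:
        raise ValueError("chi-square statistic must be non-negative")
    if df == 1:
        return erfc(sqrt(x / 2.0))  # P(|Z| > sqrt(x)) for standard normal Z
    if df == 2:
        return exp(-x / 2.0)
    raise NotImplementedError("only df = 1 or 2 are handled in this sketch")


# Hypothetical maximized log-likelihoods; not values from the study.
stat = lrt_statistic(loglik_full=-1850.2, loglik_reduced=-1850.9)
p_value = chi2_sf(stat, df=2)
```

In R, the same comparison is typically obtained by fitting both models with maximum likelihood and passing them to `anova`.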

Results

Applicant and Reviewer Characteristics

Of 462 applicants, 185 (40.0%) were female, 277 (60.0%) were male, 71 (15.4%) self-identified as URiM, and 47 (10.2%) were IMG applicants (Table 1). Among IMG applicants, 19 (40.4%) were female, and 16 (34.0%) were applicants who were URiM.

Table 1. Residency Applicant and Reviewer Characteristics, 2019-2020.

| Characteristic | No. (%) |
|---|---|
| Applicants | 462 (100) |
| Sex | |
| Female | 185 (40.0) |
| Male | 277 (60.0) |
| Self-reported URiM race and ethnicity | 71 (15.4) |
| International medical graduate | 47 (10.2) |
| Top 10 medical school (per US News & World Report)<sup>a</sup> | 56 (12.1) |
| Top 20 medical school (per US News & World Report)<sup>a</sup> | 97 (21.0) |
| Top 10 ophthalmology hospital (per US News & World Report)<sup>a</sup> | 59 (12.8) |
| Top 10 ophthalmology department (per Ophthalmology Times)<sup>a</sup> | 53 (11.5) |
| Graduation year<sup>b</sup> | |
| Same as application year | 391 (84.6) |
| Medical degree | |
| MD | 451 (97.6) |
| DO | 11 (2.4) |
| Second degree (eg, PhD, MS, or MPH) | 59 (12.8) |
| Alpha Omega Alpha membership<sup>c</sup> | 34 (7.4) |
| USMLE Step 1 score, mean (SD)<sup>d</sup> | 244.3 (13.4) |
| USMLE Step 2 score, mean (SD)<sup>e</sup> | 248.4 (12.4) |
| Reviewers | 46 (100) |
| Female sex | 14 (30.4) |
| Self-reported URiM race and ethnicity | 2 (4.3) |
| Faculty line<sup>f</sup> | |
| Clinician educator | 12 (26.1) |
| Medical center line | 21 (45.7) |
| Affiliated or adjunct | 13 (28.3) |
| Faculty rank | |
| Clinical instructor | 5 (10.9) |
| Assistant professor | 17 (37.0) |
| Associate professor | 12 (26.1) |
| Full professor | 12 (26.1) |
| Primary location | |
| Stanford University | 31 (67.4) |
| Affiliated clinical site (eg, VA or county hospital) | 15 (32.6) |

Abbreviations: DO, doctor of osteopathic medicine; MD, doctor of medicine; URiM, underrepresented in medicine; USMLE, United States Medical Licensing Examination; VA, Veterans Affairs.

<sup>a</sup> See eTable 1 and eTable 3 in the Supplement.

<sup>b</sup> Indicates applicants who completed medical school in the same year that they applied for residency (2020) vs an earlier year.

<sup>c</sup> Incomplete data because not all schools have Alpha Omega Alpha and many students submitted applications before Alpha Omega Alpha elections at their medical schools.

<sup>d</sup> Missing data on 3 applicants (osteopathic medical students). Mean Step 1 score, 242.7 among female applicants, 245.3 among male applicants, 237.0 among URiM applicants, and 245.6 among non-URiM applicants.

<sup>e</sup> Missing data on 284 applicants. Mean Step 2 score, 248.5 among female applicants, 247.9 among male applicants, 242.2 among URiM applicants, and 250.2 among non-URiM applicants.

<sup>f</sup> Clinician educator faculty are clinical care and education focused; medical center line faculty are generally hybrid clinical care and education and research; affiliate or adjunct faculty are predominantly clinical at an affiliated site (eg, VA or county hospital).

Students from US News & World Report top-ranked medical schools had slightly higher proportions of both female and URiM applicants vs the applicant pool at large (top 10 schools: female applicants, 23 of 56 [41.1%]; and URiM applicants, 9 of 56 [16.1%]; top 20 schools: female applicants, 44 of 97 [45.4%]; and URiM applicants, 16 of 97 [16.5%]). However, students from schools affiliated with top ophthalmology programs had slightly lower rates of female and URiM applicants compared with the applicant pool at large (schools affiliated with US News & World Report top ophthalmology programs: female applicants, 22 of 59 [37.3%]; and URiM applicants, 7 of 59 [11.9%]; schools affiliated with Ophthalmology Times top programs: female applicants, 21 of 53 [39.6%]; and URiM applicants, 6 of 53 [11.3%]).

Of 46 reviewers, 14 (30.4%) were female, 32 (69.6%) were male, and 2 (4.3%) were URiM (Table 1). The most common academic rank was assistant professor (17 [37.0%]), followed by associate and full professor (each 12 [26.1%]) and clinical instructor (5 [10.9%]). Most reviewers’ primary practice location was Stanford University (31 [67.4%]) vs affiliated sites, such as a Veterans Affairs or county hospital (15 [32.6%]).

Application Review Scores

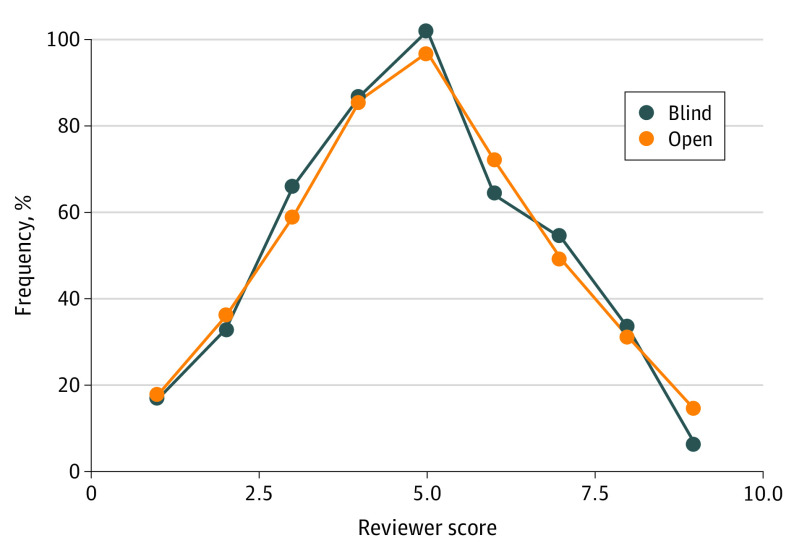

The mean (SD) application review score was 4.83 (1.88), near the intended midpoint score of 5. Scores followed a normal distribution (Figure 1), with a mean (SD) difference between reviewer scores of 0.06 (2.06) and mean (SD) of the absolute value of the difference between reviewer scores of 1.61 (1.30). Only 73 applications (15.8%) received the same score from both reviewers; however, scores differed by 3 or less for 387 applications (83.8%).

Figure 1. Distribution of Preliminary Residency Application Rankings, 2019-2020.
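The inter-reviewer agreement summaries reported above (mean difference, mean absolute difference, and the proportions of identical and near-identical scores) can be computed from paired scores as in the following sketch. The function name, the direction of the difference (unredacted minus redacted, as in Figure 3), and the example pairs are assumptions for illustration, not study data.

```python
from statistics import mean, stdev


def agreement_summary(pairs, tolerance=3):
    """Summarize agreement between two scores given to the same application.

    `pairs` holds (unredacted_score, redacted_score) tuples.
    """
    diffs = [unredacted - redacted for unredacted, redacted in pairs]
    abs_diffs = [abs(d) for d in diffs]
    n = len(pairs)
    return {
        "mean_diff": mean(diffs),
        "sd_diff": stdev(diffs),
        "mean_abs_diff": mean(abs_diffs),
        "sd_abs_diff": stdev(abs_diffs),
        "share_identical": sum(d == 0 for d in abs_diffs) / n,
        "share_within_tolerance": sum(d <= tolerance for d in abs_diffs) / n,
    }


# Hypothetical score pairs on the 1 (best) to 9 (worst) scale.
example_pairs = [(5, 5), (4, 6), (7, 3), (2, 3), (5, 4)]
summary = agreement_summary(example_pairs)
```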

Association of Redaction With Application Review Scores

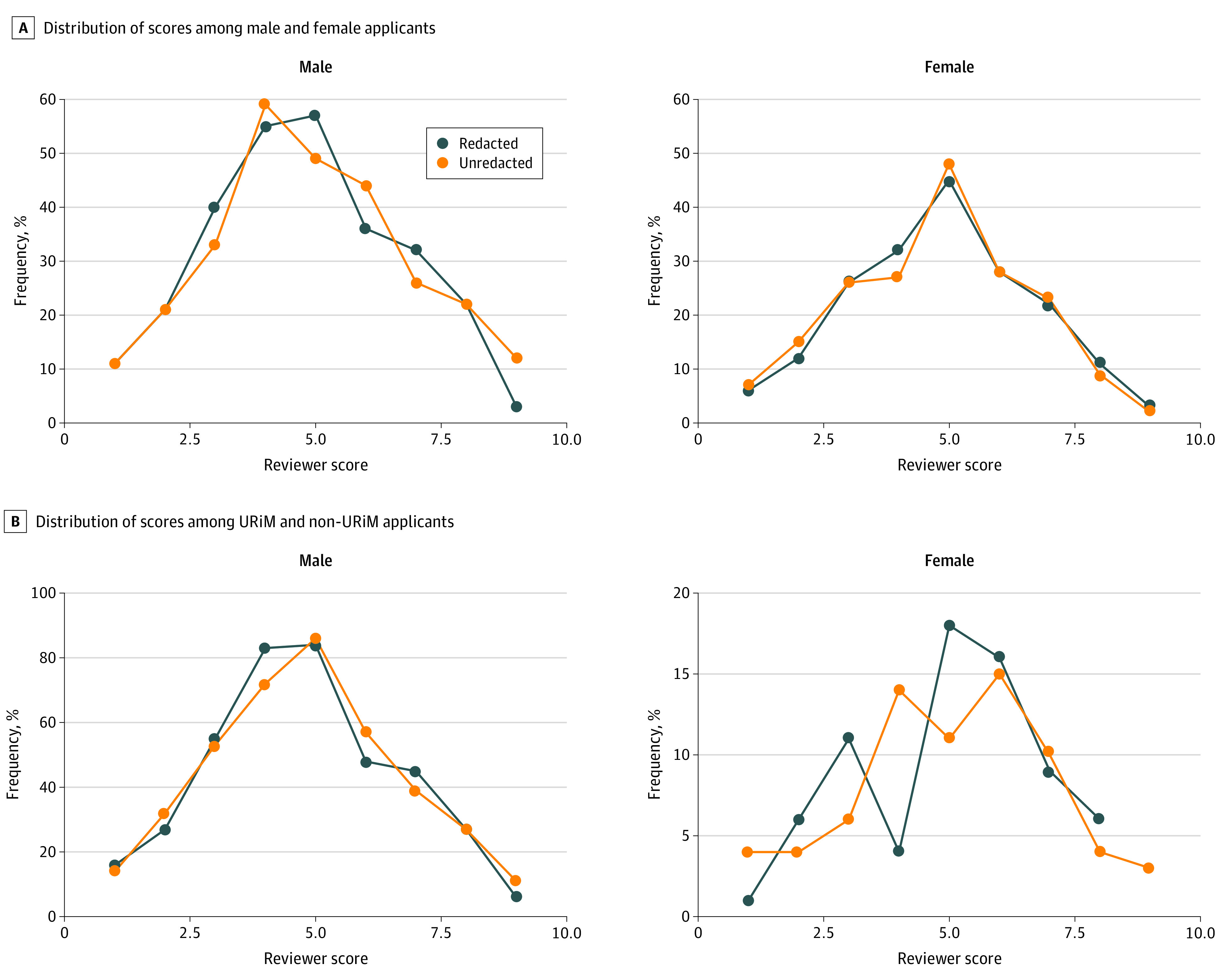

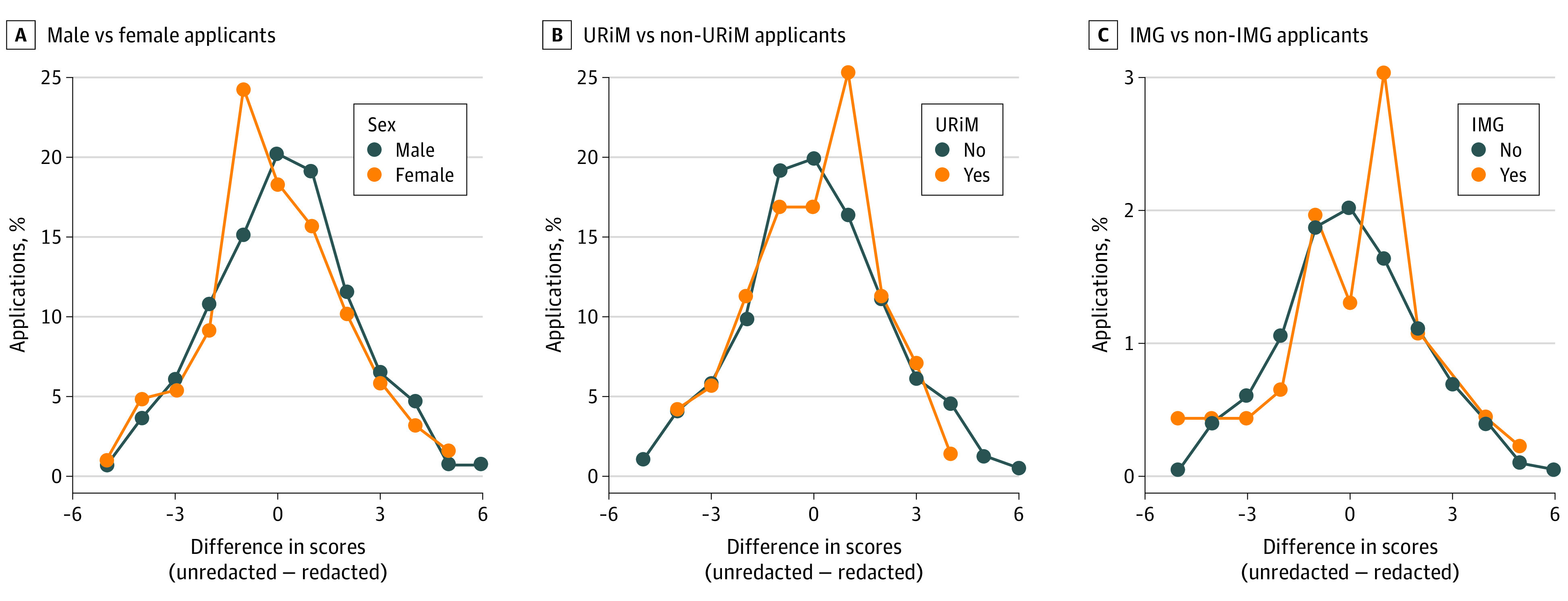

The distribution of redacted scores and the distribution of unredacted scores were similar, both in aggregate (Figure 1; eFigure 1 in the Supplement) and stratified by applicant sex, URiM status, and IMG status (Figure 2; eFigure 2 in the Supplement). In adjusted regression, redaction was not associated with a statistically significant score difference (β = –0.06 points on 1-9 scale; 95% CI, –0.22 to 0.10; P = .48) (Table 2). When we compared differences in scores between reviewers who received redacted applications and those who received unredacted applications, we did not find a significant difference favoring any group (Figure 3). Total variance in reviewer scores was associated more with between-application differences (26% total variance) than with between-reviewer differences (8% total variance).

Figure 2. Distribution of Preliminary Residency Application Rankings by Applicant Subgroup, 2019-2020.

A, Distribution of redacted and unredacted reviewer scores among male and female applicants. P = .23 (male applicants) and P = .57 (female applicants) for a statistically significant difference between redacted and unredacted scores. B, Distribution of redacted and unredacted reviewer scores among underrepresented in medicine (URiM) and non-URiM applicants. P = .95 (URiM applicants) and P = .56 (non-URiM applicants) for a statistically significant difference between redacted and unredacted scores.

Table 2. Multivariable Linear Regression Model of Redaction, Applicant Characteristics, and Reviewer Characteristics on Preliminary Application Scores, Using 2019-2020 Residency Application Data<sup>a</sup>.

| Characteristic | β Coefficient (95% CI) | P value<sup>b</sup> |
|---|---|---|
| Redacted application vs unredacted | –0.06 (–0.22 to 0.10) | .48 |
| Applicant characteristics | | |
| Female sex | –0.08 (–0.32 to 0.15) | .26 |
| Self-reported URiM race and ethnicity | –0.03 (–0.36 to 0.30) | .94 |
| International medical graduate | 0.39 (–0.24 to 1.02) | .35 |
| Top 20 medical school (per US News & World Report)<sup>c</sup> | –1.06 (–1.37 to –0.76) | <.001 |
| Graduation year<sup>d</sup> | | |
| Same as application year | –0.38 (–0.87 to 0.11) | .27 |
| Second degree (eg, PhD, MS, or MPH) | –0.86 (–1.22 to –0.50) | <.001 |
| Alpha Omega Alpha membership (reference = not yet determined)<sup>e</sup> | | .005 |
| Yes | –0.35 (–0.75 to 0.05) | .08 |
| No | 0.46 (0.12 to 0.80) | .01 |
| Not available at medical school | –0.28 (–0.66 to 0.11) | .16 |
| USMLE Step 1 score (10-point increments) | –0.35 (–0.45 to –0.26) | <.001 |
| Reviewer characteristics | | |
| Female sex | –0.15 (–0.62 to 0.31) | .79 |
| Self-reported URiM race and ethnicity | NA | NA |
| Faculty rank as full professor | 0.57 (0.08 to 1.06) | .08 |

Abbreviations: NA, not applicable; URiM, underrepresented in medicine; USMLE, United States Medical Licensing Examination.

<sup>a</sup> The model was performed with crossed random effects for applicants and reviewers and excluded 3 applicants with missing USMLE Step 1 scores (osteopathic medical students). Reviewer URiM status and allopathic vs osteopathic medical degree variable were omitted from the model owing to small sample size.

<sup>b</sup> Calculated based on χ2 test comparison of (1) complete multivariable model with interactions between each variable and redaction status vs (2) the same model except completely omitting the specified characteristic. For redacted vs unredacted, however, the P value is from χ2 test comparing (1) complete multivariable model without any interactions vs (2) the same model except omitting this characteristic. For individual levels of Alpha Omega Alpha status, we computed empirical P values based on 10 000 sets of simulated scores.

<sup>c</sup> Used top 20 medical school (per US News & World Report) criterion for medical school ranking (eTable 3 in the Supplement). This ranking had stronger effect size than any of the other rankings, which were consequently dropped owing to collinearity.

<sup>d</sup> Indicates applicants who completed medical school in the same year that they applied for residency (2020) vs an earlier year.

<sup>e</sup> Incomplete data because not all schools have Alpha Omega Alpha and many students submitted applications before Alpha Omega Alpha elections at their medical schools.

Figure 3. Differences in Redacted and Unredacted Preliminary Application Rankings, by Applicant Subgroup, 2019-2020.

A, Distribution of differences in reviewer scores (unredacted minus redacted) among male and female applicants. A positive difference means that the redacted score is better. P = .39 for a statistically significant difference between male and female applicants. B, Distribution of differences in reviewer scores among underrepresented in medicine (URiM) and non-URiM applicants. A positive difference means that the redacted score is better. P = .99 for a statistically significant difference between URiM and non-URiM applicants. C, Distribution of differences in reviewer scores among international medical graduate (IMG) and non-IMG applicants. A positive difference means that the redacted score is better. P = .98 for a statistically significant difference between IMG and non-IMG applicants.

Association of Applicant and Reviewer Characteristics With Applicant Review Scores

In adjusted multivariable regression, applicant sex, URiM status, and IMG status were not significantly associated with application review scores (sex, β = –0.08; 95% CI, –0.32 to 0.15; P = .26; URiM status, β = –0.03; 95% CI, –0.36 to 0.30; P = .94; and IMG status, β = 0.39; 95% CI, –0.24 to 1.02; P = .35) (Table 2). The factors significantly associated with scores were attending a top 20 medical school (β = –1.06; 95% CI, –1.37 to –0.76; P < .001), holding a second advanced degree (β = –0.86; 95% CI, –1.22 to –0.50; P < .001), and having a higher USMLE Step 1 score (β = –0.35 with every 10 point increase in Step 1 score; 95% CI, –0.45 to –0.26; P < .001). (Because lower scores were better, a negative β coefficient indicates a better score.) Not being elected to Alpha Omega Alpha was associated with worse scores (β = 0.46; 95% CI, 0.12-0.80; P = .01). After restricting to applications with USMLE Step 2 scores, we found a strong association between Step 1 and Step 2 scores (β = 0.63). Although Step 1 scores were more strongly associated with application scores than Step 2 scores, after adjusting for Step 1 scores, higher Step 2 scores were still associated with better application scores (β = –0.30 with every 10-point increase in Step 2 score; 95% CI, –0.50 to –0.09; eTable 2 in the Supplement).
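To make the scale of these coefficients concrete, the following worked arithmetic uses only the point estimates reported above. It assumes the effects are additive (no interaction between the characteristics) and ignores the uncertainty in each estimate, so it is an illustration rather than a prediction.

$$
\Delta_{\text{Step 1, +20 points}} = 2 \times (-0.35) = -0.70
$$

$$
\Delta_{\text{top 20 school + second degree}} = (-1.06) + (-0.86) = -1.92
$$

Under these assumptions, an applicant with a Step 1 score 20 points higher would be expected to score about 0.7 points better on the 1-9 scale, and an applicant from a top 20 medical school who also holds a second advanced degree would be expected to score about 1.9 points better than an otherwise similar applicant with neither characteristic.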

Full professor reviewers were slightly more likely to give worse scores. In complete multivariable models, full professor rank had P = .02 without interactions (β = 0.59; 95% CI, 0.05-1.13) and P = .08 with interactions (β = 0.57; 95% CI, 0.08-1.06). It was also statistically significant in our parsimonious best-fit model (β = 0.60; 95% CI, 0.12-1.08; P = .02; eTable 1 in the Supplement). Reviewer sex was not significantly associated with the distribution of scores. There was no statistically significant interaction association of reviewer sex, rank, or applicant USMLE Step 1 score with applicant sex or URiM status.

Discussion

Insufficient representation of women and URiM individuals among ophthalmology residents, faculty, and practicing ophthalmologists remains a reality; addressing this inequity will require selecting a pipeline of more diverse resident trainees.10 However, implicit bias may have an unintentional negative influence on perception of applicants, potentially hindering recruitment of diverse trainees.15,16,17 To test the outcome of an intervention addressing potential triggers of implicit bias, we assigned faculty to review random sets of residency applications with or without redacted identifiers. Compared with complete, unredacted applications and adjusting for other applicant and reviewer factors, including USMLE scores, Alpha Omega Alpha status, degrees, graduation year, reviewer sex, and faculty rank, we found that redaction was not significantly associated with application review scores overall or specifically for female or URiM applicants. This finding is important because residency programs increasingly recognize the importance of diversity and examine recruitment processes.

Evidence for implicit bias is robust. For example, medical school admissions committee members have been reported to be more likely to associate negative words with images of Black and homosexual people and to associate men as career professionals and women as homemakers.17,32 Although data from experimental studies in psychology and leadership science imply a potential benefit from blinding reviewers to applicant sex or gender or race and ethnicity,20,21,22,23,24,25,26,27 doing so did not improve scores for female or URiM candidates in our study, suggesting that alternative strategies need to be examined. These strategies may include interventions such as (1) increasing awareness (eg, Implicit Association Tests); (2) increasing trainee, female, and URiM representation on admissions committees17; (3) selection committee training (eg, role-playing and case-based moderated workshops)14,32; (4) alternative assessment tools (eg, standardized situational judgment tests in which applicants review hypothetical scenarios and identify responses relevant to role-specific competencies, which may better measure professionalism and improve URiM recruitment compared with traditional screening approaches)33,34,35; and (5) institutional leadership.14,32,36,37 Residency programs can also intentionally emphasize diversity in recruitment materials and policies.16

Prior implementation of strategies such as these may have affected the observed lack of association between redaction and application review scores in our analysis. During the past 5 years, our department prioritized diversity using several approaches. Diversity and inclusion are promoted by the medical school dean’s office, hospital, and department chair, as well as divisional and program leadership, including annual diversity, equity, and inclusion–themed ophthalmology grand rounds. Appointment of an ophthalmology faculty diversity liaison and senior associate dean for faculty development and diversity are complemented by programs including the Graduate Medical Education Diversity Committee, Women in Medicine Leadership Council, “first-generation” mentorship program, Center of Excellence in Diversity in Medical Education, Leadership Education in Advancing Diversity Program, and annual Diversity and Inclusion Forum. Newer initiatives include the School of Medicine Diversity Center of Representation and Empowerment and ophthalmology department Committee on Diversity, Equity, and Inclusion. These programs reflect prioritization at all levels. Evidence indicates that female and URiM applicants place greater importance on seeing diversity among current program faculty members and residents; thus, progress in improving diversity may yield benefits carrying forward.38,39 We matriculated our first URiM resident in the 2017-2018 academic year. By the 2019-2020 academic year, our program included 54% female, 46% URiM, 8% IMG, and 8% LGBTQ (lesbian, gay, bisexual, transgender, and queer) residents. This shift preceded our redaction experiment and—we hypothesize—reflects culture and consistent messaging.

Although our study focused on testing application redaction, we also evaluated the relative association of other objective applicant and reviewer characteristics with application review scores. Alpha Omega Alpha election did not demonstrate a consistent association with scores, likely reflecting that many schools no longer offer Alpha Omega Alpha or hold elections well after early-match ophthalmology application submission. Full professors appeared to give slightly worse scores, perhaps reflecting more exposure to strong applicants over time. The factors associated most strongly with better application review scores were, in order of effect size, attendance at a top 20 medical school, holding additional graduate degree(s), and higher USMLE Step 1 and 2 scores. However, none of these individual factors were associated with changes in review scores by more than approximately 1 point on a scale of 1 to 9.

Limitations

This study had several important limitations, including data from a single institution and application cycle, which restricted the sample size for reviewer subgroups and, recognizing differences in attention to diversity and unconscious bias training, may have affected the generalizability of findings. The absence of a significant association between redaction and application review scores in our analysis may reflect existing institutional and departmental prioritization of diversity, and the study could have had insufficient power to detect a true difference from redaction. Evaluating redaction across multiple study sites (especially those without existing diversity initiatives) and over multiple years is needed to improve statistical power and the generalizability of findings and to enable an assessment of the association of reviewer sex and ethnicity with review scores (eg, URiM applicants may be scored better by a URiM reviewer of a similar background). We also relied on self-reported applicant data, which may, for example, have underreported URiM status. We were unable to quantify other important qualitative factors associated with resident selection, such as personal narrative, leadership, service, research efforts, publications, and letters of recommendation, which may be important in the application process. Furthermore, although seemingly objective, metrics such as USMLE scores may be subject to confounding and bias. 
Although we did not detect a significant interaction between USMLE scores and female sex or URiM status, the mean Step 1 scores were lower among URiM applicants in our sample. Step 1 scores have previously been shown to disadvantage female and URiM applicants; although they may be associated with performance on the American Board of Ophthalmology written board examination, they were not associated with clinical outcomes, professionalism, or residency core competencies.33,40,41,42,43,44,45,46 Residency programs are increasingly recognizing the importance of a holistic review in the residency application process, and USMLE Step 1 scores are moving to pass-fail reporting in 2022.33,47

Medical school rankings are also subject to confounding and bias. Applicants’ prior selection into academically elite colleges and graduate programs may affect the perceived strength of their residency application; however, admissions bias in these and earlier educational programs may have a cumulative negative association with representation. Despite these potential limitations to the pipeline, in our pool of ophthalmology applicants we observed a higher proportion of female and URiM students among applicants from top-ranked vs lower-ranked medical schools. This finding may reflect multiple factors. It is possible that top-ranked schools are more successful at recruiting URiM students or are better able to recruit academically competitive URiM students. It is also possible that lower-ranked schools have more URiM students but directly or indirectly steer them away from competitive specialties such as ophthalmology, something we cannot determine without broader data. Students may also have access to different opportunities at different schools, which may affect their competitiveness or consideration of ophthalmology as a career. For example, students at top-ranked schools may have more access to research and funding, local and international outreach programs, mentorship, and innovation and leadership opportunities. Considering medical school identity in the context of available opportunities and of students’ “distance traveled” to finish college and/or medical school (the challenges unique to an applicant’s personal history in reaching the point of applying for ophthalmology residency) may allow a more holistic evaluation of potentially disadvantaged applicants.

Conclusions

These considerations suggest the need for pipeline programs and targeted outreach to support women, URiM applicants, first-generation college graduate applicants, and LGBTQ+ applicants. Research on the outcomes of such programs will also be important. For example, the American Academy of Ophthalmology/Association of University Professors in Ophthalmology Minority Ophthalmology Mentoring program provides an early introduction, resources, and dedicated mentorship to URiM premedical and medical students.48 Some institutions offer funded away rotations with dedicated mentorship and community, waived application fees or tuition, housing, and/or stipends for URiM and other disadvantaged students. Examples include the Byers Eye Institute/Stanford Clinical Opportunity for Residency Experience (SCORE) Program and the New York Eye and Ear Infirmary/Mt Sinai Visiting Electives Program for Students Underrepresented in Medicine fellowship,49,50,51 as well as programs at Vanderbilt University, Wilmer Eye Institute/Johns Hopkins University (for first-year as well as clinical students), and Bascom Palmer Eye Institute/University of Miami (for medical students at any level), and a summer program for first-year medical students at Kellogg Eye Center/University of Michigan. These initiatives are intended to facilitate recruiting diverse trainees to ophthalmology programs and warrant further analyses of their effectiveness.

Overall, we believe that our study results are encouraging. Our findings suggest that applicants from disadvantaged backgrounds may be judged based on other criteria of merit and are no less qualified than peers from less disadvantaged backgrounds, and/or that being from a disadvantaged background may actually be associated with an application review advantage at institutions where diversity is a stated goal. Both of these possibilities are positive, the latter also consistent with goals to increase equity by adjusting for prior disadvantages.52,53

Beyond the long-term benefits to the profession and patients, diversity and inclusion may have direct benefits for the health and success of residency programs and departments by increasing excellence, innovation, and cultural competence.54 Efforts to recruit a diverse group of students and sustain diversity among ophthalmology trainees are critical. In this analysis, we found that a diversity-blind application review was not associated with the application review scores of female or URiM candidates, in the context of programs, culture, and messaging that promote diversity. Other initiatives to improve diversity may include pipeline programs, implicit bias workshops, institutional- and department-level policies and culture, and targeted outreach to underrepresented applicants. These initiatives should be implemented if shown to be effective or, pending further studies, on the assumption of effectiveness. Programs may also reproduce or modify this study design to probe their own levels of detectable bias in application screening and to track such bias over time (eg, before and after interventions addressing bias).

eMethods.

eReferences.

eTable 1. Parsimonious Best-Fit Multivariable Linear Regression Model of Applicant and Reviewer Characteristics on Preliminary Application Scores, Using 2019-2020 Residency Application Data

eTable 2. Parsimonious Best-Fit Multivariable Linear Regression Model of Applicant and Reviewer Characteristics on Preliminary Application Scores, Using 2019-2020 Residency Application Data Among Applicants With Step 2 Scores

eTable 3. Medical School Rankings

eFigure 1. 2D Histogram of Redacted and Unredacted Preliminary Residency Application Rankings, 2019-2020

eFigure 2. Distribution of Preliminary Residency Application Rankings Based on International Medical Graduate Status, 2019-2020

eFigure 3. Residency Application Ranking Best-Fit Model Residuals for Osteopathic Applicants

References

- 1. Abelson JS, Symer MM, Yeo HL, et al. Surgical time out: our counts are still short on racial diversity in academic surgery. Am J Surg. 2018;215(4):542-548. doi: 10.1016/j.amjsurg.2017.06.028

- 2. Chaitoff A, Sun B, Windover A, et al. Associations between physician empathy, physician characteristics, and standardized measures of patient experience. Acad Med. 2017;92(10):1464-1471. doi: 10.1097/ACM.0000000000001671

- 3. Dahrouge S, Seale E, Hogg W, et al. A comprehensive assessment of family physician gender and quality of care: a cross-sectional analysis in Ontario, Canada. Med Care. 2016;54(3):277-286. doi: 10.1097/MLR.0000000000000480

- 4. Komaromy M, Grumbach K, Drake M, et al. The role of Black and Hispanic physicians in providing health care for underserved populations. N Engl J Med. 1996;334(20):1305-1310. doi: 10.1056/NEJM199605163342006

- 5. Moy E, Bartman BA. Physician race and care of minority and medically indigent patients. JAMA. 1995;273:1515-1520. doi: 10.1001/jama.1995.03520430051038

- 6. Laveist TA, Nuru-Jeter A. Is doctor-patient race concordance associated with greater satisfaction with care? J Health Soc Behav. 2002;43(3):296-306. doi: 10.2307/3090205

- 7. Traylor AH, Schmittdiel JA, Uratsu CS, Mangione CM, Subramanian U. Adherence to cardiovascular disease medications: does patient-provider race/ethnicity and language concordance matter? J Gen Intern Med. 2010;25(11):1172-1177. doi: 10.1007/s11606-010-1424-8

- 8. Cooper LA, Roter DL, Johnson RL, Ford DE, Steinwachs DM, Powe NR. Patient-centered communication, ratings of care, and concordance of patient and physician race. Ann Intern Med. 2003;139(11):907-915. doi: 10.7326/0003-4819-139-11-200312020-00009

- 9. Powe NR, Cooper LA. Disparities in patient experiences, health care processes, and outcomes: the role of patient-provider racial, ethnic, and language concordance. Commonwealth Fund. 2004. Accessed August 16, 2020. https://www.commonwealthfund.org/publications/fund-reports/2004/jul/disparities-patient-experiences-health-care-processes-and

- 10. Xierali IM, Nivet MA, Wilson MR. Current and future status of diversity in ophthalmologist workforce. JAMA Ophthalmol. 2016;134(9):1016-1023. doi: 10.1001/jamaophthalmol.2016.2257

- 11. Azad AD, Rosenblatt TR, Chandramohan A, Fountain TR, Kossler AL. Progress towards parity: female representation in the American Society of Ophthalmic Plastic and Reconstructive Surgery. Ophthalmic Plast Reconstr Surg. 2021;37(3):236-240. doi: 10.1097/IOP.0000000000001764

- 12. Camacci ML, Lu A, Lehman EB, Scott IU, Bowie E, Pantanelli SM. Association between sex composition and publication productivity of journal editorial and professional society board members in ophthalmology. JAMA Ophthalmol. 2020;138(5):451-458. doi: 10.1001/jamaophthalmol.2020.0164

- 13. Tuli SS. Status of women in academic ophthalmology. J Acad Ophthalmol. 2019;11:e59-e64. doi: 10.1055/s-0039-3401849

- 14. Lewis D, Paulsen E, eds. Proceedings of the Diversity and Inclusion Innovation Forum: unconscious bias in academic medicine: how the prejudices we don’t know we have affect medical education, medical careers, and patient health. Association of American Medical Colleges and The Kirwan Institute for the Study of Race and Ethnicity at The Ohio State University; 2017. Accessed August 16, 2020. https://store.aamc.org/downloadable/download/sample/sample_id/168/

- 15. Corrice A. Unconscious bias in faculty and leadership recruitment: a literature review. AAMC Analysis in Brief. 2009;9(2). Accessed August 16, 2020. https://www.aamc.org/system/files/reports/1/aibvol9no2.pdf

- 16. Nwora C, Allred DB, Verduzco-Gutierrez M. Mitigating bias in virtual interviews for applicants who are underrepresented in medicine. J Natl Med Assoc. 2021;113(1):74-76. doi: 10.1016/j.jnma.2020.07.011

- 17. Capers Q IV, Clinchot D, McDougle L, Greenwald AG. Implicit racial bias in medical school admissions. Acad Med. 2017;92(3):365-369. doi: 10.1097/ACM.0000000000001388

- 18. Sabin J, Nosek BA, Greenwald A, Rivara FP. Physicians’ implicit and explicit attitudes about race by MD race, ethnicity, and gender. J Health Care Poor Underserved. 2009;20(3):896-913. doi: 10.1353/hpu.0.0185

- 19. FitzGerald C, Hurst S. Implicit bias in healthcare professionals: a systematic review. BMC Med Ethics. 2017;18(1):19. doi: 10.1186/s12910-017-0179-8

- 20. Steinpreis RE, Anders KA, Ritzke D. The impact of gender on the review of the curricula vitae of job applicants and tenure candidates: a national empirical study. Sex Roles. 1999;41:509-528. doi: 10.1023/A:1018839203698

- 21. King EB, Madera JM, Hebl MR, et al. What’s in a name? a multiracial investigation of the role of occupational stereotypes in selection decisions. J Appl Soc Psychol. 2006;36:1145-1159. doi: 10.1111/j.0021-9029.2006.00035.x

- 22. Bertrand M, Mullainathan S. Are Emily and Greg more employable than Lakisha and Jamal? a field experiment on labor market discrimination. May 27, 2003. MIT Department of Economics Working Paper No. 03-22. Accessed August 16, 2020. http://ssrn.com/abstract=422902

- 23. Heilman ME, Okimoto TG. Why are women penalized for success at male tasks?: the implied communality deficit. J Appl Psychol. 2007;92(1):81-92. doi: 10.1037/0021-9010.92.1.81

- 24. Trix F, Psenka C. Exploring the color of glass: letters of recommendation for female and male medical faculty. Discourse Society. 2003;14:191-220. doi: 10.1177/0957926503014002277

- 25. Wennerås C, Wold A. Nepotism and sexism in peer-review. Nature. 1997;387(6631):341-343. doi: 10.1038/387341a0

- 26. Goldin C, Rouse C. Orchestrating impartiality: the impact of “blind” auditions on female musicians. Am Econ Rev. 2000;90(4):715-741. doi: 10.1257/aer.90.4.715

- 27. Biernat M, Manis M. Shifting standards and stereotype-based judgments. J Pers Soc Psychol. 1994;66(1):5-20. doi: 10.1037/0022-3514.66.1.5

- 28. SFMatch Residency and Fellowship Match Services. Ophthalmology. Accessed September 17, 2021. https://www.sfmatch.org/specialty/97baf738-9b5b-4b50-b715-444111a28b6d/87ecb563-c86a-45b8-990c-e7fea102e529

- 29. Lett LA, Murdock HM, Orji WU, Aysola J, Sebro R. Trends in racial/ethnic representation among US medical students. JAMA Netw Open. 2019;2(9):e1910490. doi: 10.1001/jamanetworkopen.2019.10490

- 30. R Core Team. R: a language and environment for statistical computing. R Foundation for Statistical Computing. Accessed August 16, 2020. https://www.R-project.org/

- 31. Bates D, Maechler M, Bolker B, Walker S. Fitting linear mixed-effects models using lme4. J Stat Software. 2015;67(1):1-48. doi: 10.18637/jss.v067.i01

- 32. Capers Q IV. How clinicians and educators can mitigate implicit bias in patient care and candidate selection in medical education. ATS Sch. 2020;1(3):211-217. doi: 10.34197/ats-scholar.2020-0024PS

- 33. Pershing S, Co JPT, Katznelson L. The new USMLE Step 1 paradigm: an opportunity to cultivate diversity of excellence. Acad Med. 2020;95(9):1325-1328. doi: 10.1097/ACM.0000000000003512

- 34. Gardner AK, Cavanaugh KJ, Willis RE, Dunkin BJ. Can better selection tools help us achieve our diversity goals in postgraduate medical education? comparing use of USMLE Step 1 scores and situational judgment tests at 7 surgical residencies. Acad Med. 2020;95(5):751-757. doi: 10.1097/ACM.0000000000003092

- 35. Lyons J, Bingmer K, Ammori J, Marks J. Utilization of a novel program-specific evaluation tool results in a decidedly different interview pool than traditional application review. J Surg Educ. 2019;76(6):e110-e117. doi: 10.1016/j.jsurg.2019.10.007

- 36. Butler PD, Aarons CB, Ahn J, et al. Leading from the front: an approach to increasing racial and ethnic diversity in surgical training programs. Ann Surg. 2019;269(6):1012-1015. doi: 10.1097/SLA.0000000000003197

- 37. Handelsman J, Sakraney N. Implicit bias. White House Office of Science and Technology Policy. 2015. Accessed August 16, 2020. https://obamawhitehouse.archives.gov/sites/default/files/microsites/ostp/bias_9-14-15_final.pdf

- 38. Yousuf SJ, Kwagyan J, Jones LS. Applicants’ choice of an ophthalmology residency program. Ophthalmology. 2013;120(2):423-427. doi: 10.1016/j.ophtha.2012.07.084

- 39. Agawu A, Fahl C, Alexis D, et al. The influence of gender and underrepresented minority status on medical student ranking of residency programs. J Natl Med Assoc. 2019;111(6):665-673. doi: 10.1016/j.jnma.2019.09.002

- 40. Edmond MB, Deschenes JL, Eckler M, Wenzel RP. Racial bias in using USMLE Step 1 scores to grant internal medicine residency interviews. Acad Med. 2001;76(12):1253-1256. doi: 10.1097/00001888-200112000-00021

- 41. Rubright JD, Jodoin M, Barone MA. Examining demographics, prior academic performance, and United States Medical Licensing Examination scores. Acad Med. 2019;94(3):364-370. doi: 10.1097/ACM.0000000000002366

- 42. Raman T, Alrabaa RG, Sood A, Maloof P, Benevenia J, Berberian W. Does residency selection criteria predict performance in orthopaedic surgery residency? Clin Orthop Relat Res. 2016;474(4):908-914. doi: 10.1007/s11999-015-4317-7

- 43. Kenny S, McInnes M, Singh V. Associations between residency selection strategies and doctor performance: a meta-analysis. Med Educ. 2013;47(8):790-800. doi: 10.1111/medu.12234

- 44. McCaskill QE, Kirk JJ, Barata DM, Wludyka PS, Zenni EA, Chiu TT. USMLE Step 1 scores as a significant predictor of future board passage in pediatrics. Ambul Pediatr. 2007;7(2):192-195. doi: 10.1016/j.ambp.2007.01.002

- 45. Lee AG, Oetting TA, Blomquist PH, et al. A multicenter analysis of the ophthalmic knowledge assessment program and American Board of Ophthalmology written qualifying examination performance. Ophthalmology. 2012;119(10):1949-1953. doi: 10.1016/j.ophtha.2012.06.010

- 46. Lee M, Vermillion M. Comparative values of medical school assessments in the prediction of internship performance. Med Teach. 2018;40(12):1287-1292. doi: 10.1080/0142159X.2018.1430353

- 47. Katsufrakis PJ, Uhler TA, Jones LD. The residency application process: pursuing improved outcomes through better understanding of the issues. Acad Med. 2016;91(11):1483-1487. doi: 10.1097/ACM.0000000000001411

- 48. Olivier MMG, Forster S, Carter KD, Cruz OA, Lee PP. Lighting a pathway: the Minority Ophthalmology Mentoring program. Ophthalmology. 2020;127(7):848-851. doi: 10.1016/j.ophtha.2020.02.021

- 49. Stanford Medicine. Stanford Clinical Opportunity for Residency Experience program. Accessed August 16, 2020. https://med.stanford.edu/clerkships/score-program.html

- 50. Icahn School of Medicine at Mount Sinai. Visiting Electives Program for Students Underrepresented in Medicine (VEPSUM) fellowship. Accessed August 16, 2020. https://icahn.mssm.edu/about/diversity/cmca/electives

- 51. University of Utah School of Medicine. Clinical preceptorship in ophthalmology. Accessed August 16, 2020. https://medicine.utah.edu/ophthalmology/education/courses/7600.php

- 52. RISE. RISE module: equality vs. equity. Accessed August 22, 2021. https://risetowin.org/what-we-do/educate/resource-module/equality-vs-equity/index.html

- 53. Henderson RI, Walker I, Myhre D, Ward R, Crowshoe LL. An equity-oriented admissions model for Indigenous student recruitment in an undergraduate medical education program. Can Med Educ J. 2021;12(2):e94-e99.

- 54. Nivet MA. Commentary: diversity 3.0: a necessary systems upgrade. Acad Med. 2011;86(12):1487-1489. doi: 10.1097/ACM.0b013e3182351f79
